Abstract

Digital images represent the primary tool for diagnostics and for documenting the state of preservation of artifacts. Today, the interpretive filters used to characterize this information and communicate it are highly subjective. Our research goal is to study a quantitative analysis methodology that facilitates and semi-automates the recognition and polygonization of the areas corresponding to the characteristics of interest. To this end, several algorithms have been tested that separate these characteristics and create binary masks that can be statistically analyzed and polygonized. Our methodology aims to offer conservator-restorers a model for obtaining, in a short time, graphic documentation that is usable for design and statistical purposes. For this reason, the whole process has been implemented in a single Geographic Information System (GIS) application.

1. Introduction

Artworks undergo profound changes over time due to several factors: the natural aging of materials, degradation pathologies, and incorrect restorations or remakings that introduce new materials and chemical interactions. For this reason, any technique for detecting and reporting what is not directly visible or perceptible is an essential means of diagnostic investigation. This need is currently largely met by techniques inspired by remote sensing, such as multispectral and hyperspectral imaging, because they can provide information on the composition of materials without taking samples. The technical information on the nature and condition of the artwork is then transcribed into the graphic documentation.

Documentation refers to the systematic collection of information derived from the diagnostic investigation, restoration, monitoring, and maintenance performed on cultural heritage. Specifically, the graphic documentation, also called the thematic map, is the primary tool for communicating and synthesizing the information collected on the nature and condition of the artwork. This information is transcribed into geometrically correct drawings and translated into conventional graphic symbols [1,2]. Thematic maps are generally used by different types of professionals working at different times and in different ways. They are the formal and unequivocal means of communication, comparison, and guidance for subsequent conservation and preservation operations. The graphic documentation should therefore always be well archived, accessible, and usable, so that the information can be retrieved when and where it is needed.

In the documentation process, these graphic drawings are intended for the critical analysis of data. They differ from the artifact’s photographic reproduction, which records and reproduces all its complexity in an undifferentiated way, without a critical or interpretative filter. The vector drawing allows us to synthesize, discretize, and characterize the data, making the results immediately readable and statistically analyzable. Software for documenting three-dimensional models is still developing slowly and remains at a pioneering stage. Graphic documentation in the form of thematic maps, however, is always required during a conservation or preservation intervention.

For artifacts with both pronounced and limited three-dimensionality, the vector graphic drawing is always based on a two-dimensional photographic reproduction of the artifact, which is often not geometrically correct. The artifact is therefore photographed on all sides (360°), and a thematic map is created for each side or elevation. The graphic documentation is then carried out throughout the entire intervention. In current practice, restorers perform this process by hand drawing, so it is strongly influenced by their skills.

In these subjective analysis processes, drawing the areas and interpreting the characteristics are carried out as a single, joint step. Indeed, whoever performs the mapping outlines the areas of interest directly, following boundaries dictated by their experience and visual perception.

To date, the automatic extraction of drawings from raster images has only been attempted in archaeology, using a specific technique called stippling. This technique was developed to produce illustrations in raster format from photographs of archaeological objects [3]. Unfortunately, these techniques do not meet the requirements of graphic documentation in restoration.

Our research aims to study a quantitative analysis methodology that facilitates and semi-automates the recognition and vectorization of the areas corresponding to the characteristics under consideration. To this aim, several segmentation algorithms have been tested to separate the characteristics and to facilitate the identification and vectorization of the corresponding areas. We chose to go beyond analysis performed directly on the pixel areas and to vectorize them for a specific reason. The documentation of the restoration of any artifact requires a series of vector drawings: non-illustrative and non-raster, without color gradations, and made of closed polygons that are topologically consistent with each other. The research topic we are developing is therefore not a single algorithm. It is a formal methodology, consolidated over the years, that applies a series of algorithms in cascade. The novelty of our work lies in the methodology itself rather than in the algorithms used to apply it in practice. This contribution also describes the supporting algorithms and the software tools that implement them, so that the methodology can be fully reproduced in practice.

This methodology has been developed over three years. During this time, it has been tested on several paintings on canvas, mosaics, frescoes and wall paintings, and paper and parchment artifacts, involving diagnosticians, art historians, conservator-restorers, and Geographic Information Systems (GIS) professionals. The model presented in this document is the one that gave the best results across all the tested case studies.

The rest of the paper is organized as follows. In Section 2, the main problems currently affecting the documentation process are analyzed, and research projects aimed at solving them are mentioned. We also review graphic documentation software, highlighting the tools that can support the semi-automatic implementation of our methodology, such as Geographic Information Systems (GIS).

2. State of the Art

Although the importance of documentation has been widely recognized and considerable experience has been gained in applying innovative documentation systems [4], there are still many unsolved problems in analyzing and digitizing artifacts.

2.1. Creation of Thematic Maps

Standardized techniques of architectural and archaeological survey (especially those related to detecting the constituent materials of the external surfaces of buildings) have guided the development of current thematic maps for conservation planning. This applies not only to buildings and monuments but also to all other categories of cultural heritage. For this reason, only for historical architectural monuments and archaeological sites can restorers rely on professionals, e.g., architects, and on ad hoc standards for generating thematic maps. For interior decorations of buildings and for small movable objects such as painted canvases and wood panels, sculptures, and utensils, the conservator-restorer tries to conform to the same architectural survey criteria, often without following a standard methodology.

The lack of standardization concerns four fundamental aspects: the procedures for photographic acquisition, the procedures for post-production and study of diagnostic investigations, the textual and graphic vocabulary of thematic maps, and the software used to create them. In this section, we focus on the software used to create thematic maps and on the study and post-production of diagnostic investigations. The other two aspects involve complex issues that often depend on the type of artifact and on the regulations in force in each country.

However, we think it is useful to clarify these issues briefly. Photographic acquisition procedures change according to the type of artifact, and the techniques used to preserve the geometric correctness of the artifact represent a very broad field of research. In Italy, the only official document has been drawn up by the Central Institute for Catalogue and Documentation (ICCD) in Rome [5]. Concerning the textual and graphic vocabulary, very few standards are currently in use for digital architectural design [6]. The UNI Beni Culturali NorMal (Norme Materiale Lapideo) standards [1] mainly refer to undecorated stone material, mortars, and ceramics.

2.2. Software in Use

Currently, the most widely used software and platforms supporting graphic documentation are Computer-Aided Design (CAD) [7,8] and Geographic Information Systems (GIS) [9]. The geographic domain offers an interesting approach, since the statistical analysis of satellite images and the creation of maps pose problems similar to those of cultural heritage documentation. GIS technologies offer flexible image analysis and data management toolboxes by integrating various functionalities, data types, and formats. In the field of restoration, GIS implementations were developed in Italy in the 1990s through a project called “Carta del rischio” [10]. GIS and CAD functionalities are also merged into hybrid platforms, such as SICAR® [11], a web-based geographic information system officially adopted in 2012 by the Italian Ministry of Cultural Heritage and Activities and Tourism.

All these tools suffer from some significant practical limitations. CAD is optimal for the mathematical processing of geometric data. However, it is particularly difficult to use when the graphic survey to be produced involves irregular and highly jagged edges and shapes. With CAD drawing tools, operators tend to approximate the perimeters of the areas, which makes the edges inaccurate. Furthermore, CAD does not allow users to organize their files in a structured database.

Neither CAD nor SICAR allows raster editing, so the operator can neither query pixels nor optimize the image. Yet color data are crucial for characterizing some types of artifacts, especially those with a decorated or painted surface. For these artifacts, for example, the conservation problems specific to each color should be analyzed.

CAD and SICAR also do not allow any interaction between raster and vector graphics, so each graphic survey is executed by manual drawing. The result is highly subjective, and each thematic map differs from any other, even when the same operator carries it out on the same photographic base. Finally, restorers rarely use a single system to compile their thematic maps. When dealing with canvases, painted panels, ceramics, fresco paintings, and mosaics, restorers often use various commercial vector graphics and image processing programs empirically, without adopting a standard methodology.

2.3. Geographic Information System

In more recent years, the development of low-cost and easy-to-use Geographic Information System software, including spatial attributes and mapping elements, has made it possible to use this technology for non-geographic projects. The use of GIS has extended from relatively large areas to movable artifacts. In Italy, Spain, and Portugal in particular, experimentation on the statistical analysis of the degradation of painted canvases and panels has started [12,13,14,15]. Two GIS systems were used for these experiments: QGIS®, which is free and open-source, and ArcGIS®, proprietary software by Esri®. In particular, QGIS® has proven to be widely used for scientific research in territorial, archaeological, and art-historical studies. Significant plugins have been developed for this software. Among them is the Semi-automatic Classification Plugin (Version 7.8.0), which combines multispectral images and raster analysis. It allows operator-controlled semi-automatic classification of environmental remote sensing images and provides tools that speed up land cover classification [16].

In the archaeological field, a plugin called pyArchInit (Version 2.4.6) has been developed for QGIS®. It provides access to a shared database server, such as PostgreSQL, that can be queried and modified, which promotes the homogeneity of the adopted solutions. It also exports projects to interactive systems that can be used on the web, the so-called web-GIS [17]. This plugin satisfies the archaeological community’s growing need to computerize excavation documentation, but it can also manage documentation in the architectural and art-historical fields.

2.4. Diagnostic Investigations

As far as diagnostic investigations are concerned, white balance and color correction, post-production, and interpretation modes are the main issues.

Digital diagnostic images require colorimetric correction during both acquisition and post-production. In general, all adjustment and balancing operations greatly influence the interpretation of multispectral images, because they modify the color tones expressed in the visible spectrum, which correspond to the wavelengths reflected by the material surface. Some induced luminescence images produce a response in the visible range, such as ultraviolet-induced luminescence (UVL) and visible-induced visible luminescence (VIVL) images. In these images, artistic materials show different grades and tones of fluorescence according to their composition and aging. Therefore, white balance during shooting and color correction can substantially affect the fidelity and reproducibility of images. This can make interpretation inaccurate and make comparisons between images taken at different times and with different settings inconsistent. For IR and UV reflected images and visible-induced infrared luminescence (VIL) images, white balance mostly affects the post-production of false-color reflected images, such as ultraviolet-reflected false color (UVRFC) and infrared-reflected false color (IRRFC).

To obtain consistent and comparable data, it is necessary to follow standards, currently represented by the results of the Charisma project of the British Museum [18]. However, these standards are not easy to implement. In practice, more readily available commercial colorimetric references are used, although they do not offer optimal results. Furthermore, commercially available cameras are designed to produce aesthetically pleasing images rather than for the scientific analysis of artifacts. As a result, they may introduce undesired changes into captured multispectral images. The automatic adjustments built into these cameras include white balance, contrast, brightness, sharpness, Automatic Gain Control (AGC), and dynamic range control in low-light situations. To address these problems, in addition to the Charisma project, several manuals and scientific articles have been published on the correct use of colorimetric references for multispectral imaging [19,20].
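The following sketch shows how a neutral reference patch can drive color correction in post-production. It computes per-channel gains from the mean RGB values measured on a gray patch, a simple diagonal, von Kries-style correction, and applies them to the image. It is not the Charisma procedure. The function names, the assumption of linear RGB values in [0, 1], and the 0.5 target level are all illustrative.

```python
def gray_patch_gains(patch_rgb, target=0.5):
    """Per-channel gains mapping the mean of a neutral patch to a neutral target.

    patch_rgb: (r, g, b) means measured on the gray patch, linear values in [0, 1].
    target: hypothetical neutral level the patch should have after correction.
    """
    if any(c <= 0 for c in patch_rgb):
        raise ValueError("patch values must be positive")
    return tuple(target / c for c in patch_rgb)


def apply_gains(image, gains):
    """Multiply each channel by its gain and clip the result to [0, 1].

    image: list of rows, each row a list of (r, g, b) tuples.
    """
    return [
        [tuple(min(1.0, max(0.0, c * g)) for c, g in zip(pixel, gains)) for pixel in row]
        for row in image
    ]


# Usage: the gray patch reads (0.50, 0.40, 0.60), i.e. it shows a color cast.
gains = gray_patch_gains((0.50, 0.40, 0.60))
corrected = apply_gains([[(0.50, 0.40, 0.60), (0.20, 0.16, 0.24)]], gains)
# Both pixels become neutral (equal r, g, b values), up to floating-point rounding.
```

A diagonal correction of this kind only removes a global color cast. A full colorimetric characterization with a multi-patch target requires fitting a color transform, as described in the manuals cited in this section.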

The interpretation of multispectral images changes depending on the technique used, the artifact under investigation, and its history and dating. For grayscale monochrome images, such as infrared-reflected (IRR) and ultraviolet-reflected (UVR) images, reading and interpretation difficulties depend exclusively on the state of preservation of the artifact under examination and on the information sought. The contrast and opacity of the objects in the scene are therefore the only cues the restorer and the diagnostician can use to recognize the characteristics.

Identifying the characteristics sought is more complicated for the reflectance phenomena of materials shown in color diagnostic images, such as induced luminescence images and false-color reflected images. The characteristics appear as more or less homogeneous color areas, with variations in tone and opacity that always differ. These variations depend on the artifact’s execution techniques, the surface materials used, and their aging. In addition, materials such as pigments and binders are mixed and layered with each other, and application techniques vary greatly depending on the historical period. As a result, materials respond in multiple spectral bands simultaneously, with different levels of intensity. Recognizing characteristics by reading the fluorescence phenomenon is therefore an interpretative process that depends on the examiner’s experience and on the reference literature for each specific case.

Over the years, many studies have been conducted to support the recognition of fluorescent materials. The main ones include the following: (a) a mathematical model based on Kubelka-Munk theory that studies the pigment-binder interaction [21,22]; (b) a false-color imaging technique called ChromaDI, which enhances in the visible image the differences between the optical behavior of the various pigments, taking into account the changes that occur in the transition from shorter to longer wavelengths [23]; and (c) a methodology for classifying different pigments through Hyperspectral Imaging (HSI) in the Short-Wave Infrared (SWIR) region [24].

Regarding the statistical analysis of multispectral images, the same techniques we use here have proved very efficient for improving the readability of ancient, degraded manuscripts and palimpsests [25]. In archaeology, these techniques have also been used to reveal details on the surface of painted walls that are otherwise invisible or difficult to discern [26]. However, multispectral techniques are rarely combined with statistical image processing and have never been used to assist the restorer in creating thematic maps for documentation. Finally, regardless of the technique and of the artifact investigated, archaeologists and conservator-restorers are the only ones who know the artifact’s material characteristics. They must therefore always be involved in the reading and interpretation of diagnostic investigations.

3. A New Methodological Approach

In common documentation practice, the available diagnostic images are analyzed separately, and their analysis is based on visual observation of the reflectance phenomena of materials, even though these images can be distorted and difficult to interpret. Our strategy instead considers the set of available images as a whole. The aim is to subdivide the painted area into regions through Blind Source Separation (BSS) strategies [27], which have long been used in other areas, such as document image processing [28]. We then propose a rigorous, semi-automated analysis procedure for easy, fast, and repeatable polygonization of the extracted regions using standard software tools [29]. The restorer’s fundamental choice therefore remains the only subjective element, but it is based on a precise and repeatable set of objective and scientifically valid measurements. The restorer’s role in directing the investigations and interpreting the images is central and cannot be delegated.

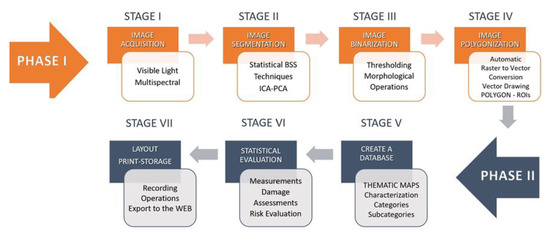

As illustrated in Figure 1, our methodology includes a first phase during which the diagnostic images are acquired and manipulated for analysis purposes. It includes four stages: image acquisition, image segmentation, threshold-based extraction of the regions of interest (ROI), and mapping from raster to vector representation. In the second phase, the methodology applies classification and analysis methods to determine the state of conservation of the artwork. The resulting output is then archived according to the specified requirements.

Figure 1. Methodology lifecycle.

3.1. Stage 1: Acquisition

The methodology’s efficacy is strongly affected by the quantity and quality of the digital images provided, which are the input to the processing and analysis algorithms of the following stages. As a general rule, photographic reproduction for documentation has the following main requirements: precision and consistency with respect to the original dimensions of the artwork, readability of all its parts, high accuracy in color reproduction, uniform illumination over the whole image, and absence of reflections that could impair the analysis. For the geometric accuracy of the digital reproduction of an artifact, the most widely used software tools in cultural heritage are Agisoft PhotoScan© and Archis-Siscan©. Archis in particular is highly useful for rectifying complex objects or architecture and allows a two-dimensional graphic restitution of the artifact. The requirements specific to the proposed methodology are described below.

One problem with a quantitative approach to the study of artifacts through digital images is that the results depend strongly on the scale of analysis. As spatial resolution is reduced, the information related to the morphology of the material features on the surface is lost. A complete characterization therefore requires images with an appropriate spatial resolution, proportionate to the artifact’s size and to the magnification level at which the analysis will be carried out. Spatial resolution indicates the amount of detail visible in an image. For our methodology, the main advantage lies precisely in the large number of available details, as each detail represents further information. The methodology has been tested on images with different spatial resolutions. We recommend a spatial resolution of 300 pixels per inch (or at least 150 pixels per inch) and 16 bits per channel.
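The recommended sampling densities translate directly into image sizes. The sketch below assumes a hypothetical 100 cm by 70 cm canvas and uncompressed 16-bit RGB data. It computes the minimum pixel dimensions and raw storage at 300 and 150 pixels per inch; the function names are illustrative.

```python
import math

CM_PER_INCH = 2.54


def required_pixels(width_cm, height_cm, ppi=300):
    """Minimum image size in pixels to sample an artifact at `ppi` pixels per inch."""
    return (math.ceil(width_cm / CM_PER_INCH * ppi),
            math.ceil(height_cm / CM_PER_INCH * ppi))


def uncompressed_size_bytes(width_px, height_px, channels=3, bits_per_channel=16):
    """Raw storage for one image, ignoring file-format overhead and compression."""
    return width_px * height_px * channels * bits_per_channel // 8


# Hypothetical 100 cm x 70 cm canvas at the recommended and the minimum density.
for ppi in (300, 150):
    w, h = required_pixels(100, 70, ppi)
    mb = uncompressed_size_bytes(w, h) / 1e6
    print(f"{ppi} ppi: {w} x {h} px, about {mb:.0f} MB per 16-bit RGB image")
```

Halving the density divides the pixel count, and therefore the storage, by about four. This trade-off should be weighed against the magnification at which the analysis will be carried out.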

Because the image processing techniques work on multiple images simultaneously, the coherence of the image data is one of the fundamental requirements of the methodology. Before the second stage, in which the segmentation algorithms are applied, all the images acquired on the same artifact in the same phase of the conservation/restoration intervention must be correctly registered with each other. Image registration is used to align images of the same subject taken with different acquisition techniques. Alignment involves eliminating slight rotations and tilts and resampling the images to the same scale.
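Registration can be estimated from a few control points, such as details recognizable in every image. The sketch below uses one simple approach, not the methods of [30,31]. It fits a least-squares similarity transform (rotation, uniform scale, and translation) by treating coordinates as complex numbers. The point coordinates are hypothetical, and correcting tilts would require an affine or projective model instead. Resampling the image with the fitted transform is left to an image library or to a GIS georeferencer. The QGIS Georeferencer, for instance, offers a Helmert transformation, which is of this kind.

```python
import cmath


def fit_similarity(src_points, dst_points):
    """Least-squares similarity transform (rotation, uniform scale, translation).

    Points are (x, y) pairs of the same control features located in two images.
    Each point is treated as a complex number z = x + iy, so the model is
    dst = a * src + b, where |a| is the scale, arg(a) the rotation and b the shift.
    """
    if len(src_points) != len(dst_points) or len(src_points) < 2:
        raise ValueError("need at least two matching point pairs")
    src = [complex(x, y) for x, y in src_points]
    dst = [complex(x, y) for x, y in dst_points]
    n = len(src)
    src_mean, dst_mean = sum(src) / n, sum(dst) / n
    # Closed-form least-squares solution on centered coordinates.
    num = sum((d - dst_mean) * (s - src_mean).conjugate() for s, d in zip(src, dst))
    den = sum(abs(s - src_mean) ** 2 for s in src)
    if den == 0:
        raise ValueError("control points must not all coincide")
    a = num / den
    b = dst_mean - a * src_mean
    return a, b


def apply_similarity(a, b, point):
    """Map an (x, y) point with the fitted transform."""
    z = a * complex(*point) + b
    return z.real, z.imag


# Usage: control points in a UV image vs. the same points in the visible reference,
# simulated with a 2-degree rotation, a 5 % scale change and a small shift.
true_a = 1.05 * cmath.exp(1j * cmath.pi / 90)
true_b = complex(12.0, -7.5)
visible = [(0, 0), (1000, 0), (1000, 800), (0, 800), (420, 310)]
uv = [apply_similarity(true_a, true_b, p) for p in visible]
a, b = fit_similarity(uv, visible)  # maps UV coordinates onto the visible image
scale, angle_deg = abs(a), cmath.phase(a) * 180 / cmath.pi
```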

Full alignment of all acquired data is not always possible, because artifacts may change their morphology during the restoration intervention. In these cases, it is essential to ensure consistency among the sets of data acquired during the same phase of the intervention. Reference [30], from 2003, reviews classical and then-recent image registration methods. More recently, [31] proposed a new image registration framework based on a multivariate mixture model (MvMM) and neural network estimation. The reproducibility of the shooting session must be guaranteed by filling out an activity report and a technical diagnostic report. These reports are kept both to verify the correctness of the methodology and for later comparisons over time. Reproducibility should also be ensured by saving, cataloguing, and archiving all the original files and the associated metadata for all work phases.

The choice of the spectral range for image acquisition affects the analysis process, since each range is associated with different quantitative and qualitative information. Conservator-restorers or researchers must choose the diagnostic acquisition technique to be applied. The choice must aim to answer specific, previously stated problems and questions concerning the conservation and restoration of the artifact in its cultural and technological framework. In the absence of multispectral images, the methodology can be applied to images taken only in the visible spectrum. Reference [32] describes an experimental approach that uses “color” uniformity alone as a criterion for dividing the image into disjoint regions of interest corresponding to distinguishable features on the surface of the artifact. Specifically, this approach was used to highlight overwritten text in a palimpsest. It showed that a color decorrelation method can produce satisfactory results even when starting from visible-light images, and these results can often be as discriminative as those provided by diagnostic and multispectral images. In this case, the choice of color space is central, as different spaces represent color information in different ways; see, e.g., the RGB, HSV, and L*a*b* channels [33,34]. In our tests, the HSV color model has proven very useful for color segmentation in complex contexts such as images of artistic artifacts. The main reason lies in the V component, i.e., the values corresponding to brightness gradations. This component makes it possible to detect even the slightest variations in light intensity, and therefore the smallest discontinuities between areas of interest. Color properties described in this way have an immediate perceptual interpretation for conservator-restorers, who are accustomed to distinguishing colors by visual perception, on which this model is based.
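The sketch below illustrates the point about the V component. It splits an RGB image into H, S, and V planes with Python’s standard `colorsys` module. The two pixels are hypothetical: the second is the first darkened by 5 %, so hue and saturation coincide and only V records the difference. Real images would normally be processed as arrays with an image library. A plain list of rows is used here only to keep the example self-contained.

```python
import colorsys


def hsv_channels(image):
    """Split an RGB image into separate H, S and V planes.

    image: list of rows of (r, g, b) tuples with values in [0, 1].
    Returns three lists of rows (hue, saturation, value), each in [0, 1].
    """
    planes = ([], [], [])
    for row in image:
        hsv_row = [colorsys.rgb_to_hsv(*pixel) for pixel in row]
        for k in range(3):
            planes[k].append([hsv[k] for hsv in hsv_row])
    return planes


# Usage: two neighboring pixels of the same ochre hue, the second 5 % darker.
# H and S coincide, so the discontinuity shows up only in the V plane.
h, s, v = hsv_channels([[(0.80, 0.40, 0.20), (0.76, 0.38, 0.19)]])
```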

3.2. Stage 2: Segmentation

Segmentation is the process of grouping spatial data into multiple homogeneous areas with similar properties. In our case, the properties (or features) we consider are the spectral responses of the different materials, that is, the local reflectance values measured in all the available channels. The regions of interest (ROIs) we want to distinguish, segment, and extract are the sets of pixels with homogeneous features (such as location, size, and color), which correspond to parts of the artifact made of similar materials. Unfortunately, regions showing different features typically overlap with one another in all the channels, because the materials are usually mixed and stratified. This often makes segmentation and ROI extraction difficult. Visual inspection by diagnosticians or conservator-restorers can be very complicated, time-consuming, or even impossible, particularly when slightly different spectral characteristics must be distinguished across many channels. Automated image analysis techniques can therefore perform this task more efficiently and objectively. In particular, manipulating the input channels to produce a number of maps, each showing a single ROI or a few ROIs, can significantly facilitate segmentation. Mathematically, this would be easy if the spectral emissions of the different materials were known, but this is seldom the case. To extract the different regions from multispectral data without knowing their spectra, some assumptions must be made about the regions themselves and about the mixing mechanism that produces their spectral appearance. For the mechanism, we assume an instantaneous linear model with $N$ channels and $M$ distinct features:

$$x_i(t) = \sum_{j=1}^{M} A_{ij}\, s_j(t), \qquad i = 1, \ldots, N, \tag{1}$$

where $x_i(t)$ is the value of the data at channel $i$ and pixel $t$, $A_{ij}$ is the spectral emission of the $j$-th feature in the $i$-th channel, and $s_j(t)$ is the value of the $j$-th feature at pixel $t$. Note that this model also assumes that the spectra are uniform over the whole image. The model is called instantaneous because the data values at each pixel depend only on the feature values at that pixel and not on any neighborhood of it. If we can extract the map $s_j$ from the data $x_1, \ldots, x_N$, it is easy to extract the ROIs related to the $j$-th feature by locating the regions where $s_j$ takes significant values. Extracting the $s_j$ from the $x_i$ without knowing the $A_{ij}$ is a blind source separation (BSS) problem, which can only be solved under further assumptions on the $s_j$. In particular, statistical BSS techniques such as principal component analysis (PCA) [27] and independent component analysis (ICA) [35] reasonably assume that the different features have some degree of statistical independence. The patterns formed by different materials in the painted surface are likely to be independent of one another. Their mutual central statistics are therefore also likely to nearly vanish over the images, that is, assuming zero-mean feature maps:

$$\langle s_j^{\alpha}(t)\, s_k^{\beta}(t) \rangle - \langle s_j^{\alpha}(t) \rangle \langle s_k^{\beta}(t) \rangle \approx 0, \qquad j \neq k, \tag{2}$$

where α and β are arbitrary positive integers, and the angle brackets denote statistical expectation.

Particular cases of (2) are zero correlation (α = β = 1), leading to PCA and other second-order approaches, and statistical independence, i.e., (2) holding for all α and β, leading to ICA. Under these assumptions, the output maps $y_j(t) = \sum_{i=1}^{N} W_{ji}\, x_i(t)$ are obtained by minimizing the following summation with respect to all the $W_{ji}$:

$$\sum_{j \neq k} \left( \langle y_j^{\alpha}(t)\, y_k^{\beta}(t) \rangle - \langle y_j^{\alpha}(t) \rangle \langle y_k^{\beta}(t) \rangle \right)^2. \tag{3}$$

If the preliminary assumptions are satisfied, this produces a new set of images $y_j$, each depicting one and only one of the desired feature maps, that is, something approximately proportional to $s_j$. Both PCA and ICA estimate the matrix $\{W_{ji}\}$ and thereby recover the features. They linearly combine the generally correlated data channels $x_i$ to produce a different set of images that are uncorrelated (PCA) or statistically independent (ICA).

Each output map takes significant values only where a single feature is present. The ROIs characterized by that feature can therefore be extracted simply by distinguishing between foreground and background. In the best case, two main gray levels dominate each output channel, and only one specific ROI is highlighted. All pixels in the ROI have similar gray values, and the rest of the image merges into the background.
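The following is a minimal sketch of the second-order (PCA) case, assuming co-registered channels stored as a NumPy array. The data are centered and the channel covariance is diagonalized. Projecting onto its eigenvectors then gives output maps whose cross-correlations (the case α = β = 1 of (2)) vanish. The synthetic feature maps and the mixing matrix `A` are invented for the test and are unknown to the algorithm. PCA alone does not enforce the higher-order terms of (2). For ICA, an existing implementation such as scikit-learn’s `FastICA` could be used instead.

```python
import numpy as np


def pca_components(channels):
    """Decorrelate co-registered channels with PCA.

    channels: array of shape (N, H, W), one registered image per spectral channel.
    Returns an array of shape (N, H, W) holding the principal components ordered
    by decreasing variance, i.e. y = W x with W the transposed eigenvector matrix.
    """
    n, h, w = channels.shape
    x = channels.reshape(n, -1).astype(float)   # astype makes a copy
    x -= x.mean(axis=1, keepdims=True)          # zero-mean maps, as assumed in the text
    cov = x @ x.T / x.shape[1]                  # N x N channel covariance
    eigvals, eigvecs = np.linalg.eigh(cov)      # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]           # reorder by decreasing variance
    y = eigvecs[:, order].T @ x
    return y.reshape(n, h, w)


def cross_statistic(yj, yk, alpha=1, beta=1):
    """<yj^a yk^b> - <yj^a><yk^b>, which is zero for every (a, b) if yj, yk are independent."""
    a, b = yj.ravel() ** alpha, yk.ravel() ** beta
    return float(np.mean(a * b) - np.mean(a) * np.mean(b))


# Synthetic test: two binary "material" maps mixed into three channels by an
# assumed spectral matrix A, following the instantaneous linear model.
rng = np.random.default_rng(0)
s = np.stack([rng.random((64, 64)) > 0.7, rng.random((64, 64)) > 0.5]).astype(float)
A = np.array([[0.9, 0.4], [0.5, 0.8], [0.3, 0.6]])
x = np.einsum("ij,jhw->ihw", A, s)
y = pca_components(x)
```

With three channels and only two features, the third component has near-zero variance. In practice, the restorer inspects each component and keeps those that isolate a single ROI.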

3.3. Stage 3: Binarization

The purpose of the methodology is to obtain a precise vector polygon for each classified ROI. The third stage therefore eliminates the excess information carried by the intermediate gray levels, which produce the typical ramp edges between the area of interest and the background. These gradients can be treated as noise that slows down and complicates the identification of ROIs. To separate the ROIs further from the background, we use a simple recursive thresholding algorithm [36].

For the output channels of the second stage, which are grayscale images, a gray level called the intensity threshold $T$ is fixed. In the binary output image, pixels on the object side of $T$ (for example, with gray values above it, depending on the polarity of the channel) are labeled 1 and called object points. The remaining pixels are set to 0 and called background points. The segmentation outcome is strongly influenced by the choice of $T$, which can either be constant throughout the image (global thresholding) or vary from pixel to pixel (local thresholding).

Global thresholding fails when the image content is not evenly illuminated. Consistent lighting during the shooting stage is therefore essential to avoid additional optimization pre-processing. $T$ can be chosen conventionally as the mean gray level. In many cases, however, the restorer chooses it by trial and error, testing different values until the output is satisfactory [37]. This can be done on a single channel and the value adapted to the others, or a separate value can be chosen for each channel. In any case, each selected value must be included in the report accompanying the thematic map to guarantee the reproducibility of the results.
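The text does not detail the recursive algorithm of [36]. As an assumed stand-in, the sketch below implements the well-known iterative intermeans (isodata, Ridler-Calvard) scheme. It starts from the mean gray level and repeatedly moves the threshold to the midpoint between the two class means until it stabilizes. The toy gray values and the tolerance are hypothetical. The returned threshold T is the value to record in the report accompanying the thematic map.

```python
def iterative_threshold(values, tol=0.5, max_iter=100):
    """Isodata / Ridler-Calvard style global threshold.

    Starts from the mean gray level and repeatedly moves T to the midpoint
    between the means of the two classes it separates, until T stabilizes.
    """
    t = sum(values) / len(values)
    for _ in range(max_iter):
        above = [v for v in values if v > t]
        below = [v for v in values if v <= t]
        if not above or not below:
            break  # only one class left: keep the current threshold
        new_t = (sum(above) / len(above) + sum(below) / len(below)) / 2
        converged = abs(new_t - t) < tol
        t = new_t
        if converged:
            break
    return t


def binarize(image, t):
    """1 = object point (gray value above T), 0 = background point."""
    return [[1 if v > t else 0 for v in row] for row in image]


# Usage on one output channel (8-bit gray levels, toy values).
channel = [[12, 15, 200, 210], [10, 190, 205, 14], [11, 13, 198, 16]]
t = iterative_threshold([v for row in channel for v in row])
mask = binarize(channel, t)
print(f"T = {t:.1f}")  # value to record in the report
```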

It is worth observing that the choice of the output image to work with is up to the restorer. This choice is needed to filter only the ROIs for the specific thematic map to be generated; in fact, a fully automatic execution would lead to the identification and selection of undesired regions. The final result is a set of binary masks that identify the ROIs by step edges that will facilitate the subsequent stages. In rare cases, the extracted masks may still have noise pixels inside or outside the ROIs. These pixels need to be removed in order not to interfere with the subsequent polygonization processes. For this purpose, basic morphological operations such as region filling, thinning, and thickening can be used to clean noise and modify regions [38].
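Of the morphological operations listed above, region filling is the simplest to sketch. Background pixels that cannot be reached from the image border through other background pixels are holes inside a ROI, and they are set to 1. The pure-Python version below uses 4-connectivity for the background and assumes masks stored as lists of 0/1 rows. With SciPy available, `scipy.ndimage.binary_fill_holes` performs the same operation on arrays.

```python
from collections import deque


def fill_holes(mask):
    """Region filling: set to 1 every background pixel not connected to the border.

    mask: list of rows of 0/1 values; background connectivity is 4-neighborhood.
    """
    h, w = len(mask), len(mask[0])
    outside = [[False] * w for _ in range(h)]
    queue = deque()
    # Seed the flood fill with every background pixel on the image border.
    for r in range(h):
        for c in range(w):
            if (r in (0, h - 1) or c in (0, w - 1)) and mask[r][c] == 0:
                outside[r][c] = True
                queue.append((r, c))
    # Propagate through background pixels reachable from the border.
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not outside[nr][nc] and mask[nr][nc] == 0:
                outside[nr][nc] = True
                queue.append((nr, nc))
    # Anything that is neither object nor reachable background is a hole.
    return [[0 if outside[r][c] else 1 for c in range(w)] for r in range(h)]


ring = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 0, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
filled = fill_holes(ring)  # the enclosed 0 at the center becomes 1
```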

Alternative approaches to the generation of binary masks use neural networks, such as the Kohonen self-organizing map (SOM) [39,40], which is based on competitive training algorithms [41]. The advantage of the SOM is that it preserves the topology of the input samples [42]. We tested this type of neural network on different types of artifacts, but it proved very useful only in some cases, namely when the ROIs are already visible in all diagnostic images, that is, in cases of easy segmentation. Moreover, extracting binary masks with this method proved too time-consuming and not easy to use for non-experts. For this reason, we decided not to include it in the final methodology proposed here.
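To illustrate what competitive training means here, the sketch below trains a one-dimensional Kohonen map with NumPy on pixel feature vectors (for example, the values of one pixel across the diagnostic channels) and derives a binary mask from the node that wins a chosen group of pixels. The lattice size, initialization, decay schedules, and synthetic two-cluster data are assumptions for illustration; this is not the configuration tested by the authors, who excluded the SOM from the final methodology.

```python
import numpy as np

def train_som(samples, n_nodes=2, n_iter=3000, lr0=0.5, sigma_end=0.1, seed=0):
    """Train a 1-D Kohonen self-organizing map (illustrative sketch)."""
    x = np.asarray(samples, dtype=float)
    rng = np.random.default_rng(seed)
    # nodes start evenly spread between the per-feature minimum and maximum
    lo, hi = x.min(axis=0), x.max(axis=0)
    weights = lo + np.linspace(0.0, 1.0, n_nodes)[:, None] * (hi - lo)
    grid = np.arange(n_nodes)                 # node positions on the lattice
    sigma0 = max(n_nodes / 2.0, 1.0)
    for t in range(n_iter):
        frac = t / n_iter
        lr = lr0 * (1.0 - frac)                        # decaying learning rate
        sigma = sigma0 + (sigma_end - sigma0) * frac   # shrinking neighborhood
        v = x[rng.integers(len(x))]
        bmu = np.argmin(((weights - v) ** 2).sum(axis=1))      # competition: winner
        h = np.exp(-((grid - bmu) ** 2) / (2.0 * sigma ** 2))  # lattice neighbors
        weights += lr * h[:, None] * (v - weights)             # cooperative update
    return weights

def som_labels(samples, weights):
    """Assign each sample to its best-matching node."""
    d = ((np.asarray(samples, dtype=float)[:, None, :] - weights[None]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

rng = np.random.default_rng(1)
dark = rng.normal(0.2, 0.03, size=(200, 3))    # synthetic background pixels
bright = rng.normal(0.8, 0.03, size=(200, 3))  # synthetic ROI pixels
pixels = np.vstack([dark, bright])
labels = som_labels(pixels, train_som(pixels))
roi_mask = (labels == labels[-1]).astype(np.uint8)  # pixels won by the "ROI" node
```

Because neighbors of the winner are also pulled toward each sample, nearby lattice nodes end up representing similar pixel values, which is the topology-preserving property mentioned above.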

3.4. Stage 4: Polygonization

Raster-to-vector data conversion is a central function in GIS image processing and remote sensing (RS) for data integration between RS and GIS [43]. In general, there are two types of algorithms, namely, vectorization of lines and vectorization of polygons; only the latter is used in our methodology. This function creates vector polygons for all connected regions sharing a common pixel value [29]. The precision of the mapping depends on several factors, including the spatial complexity of the images. As image resolution increases, so does the data volume; for this reason, it is important to perform the pre-processing of stage 3 to classify information, clean the shapes and edges of the ROIs, and ensure their topological coherence.
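The principle of polygon vectorization, turning each connected region of equal pixel value into closed rings, can be sketched in plain Python under simplifying assumptions: a single binary mask, pixel corners as vertices (x = column, y = row), and boundary edges traced so that the region always lies on the same side. This is a hypothetical illustration, neither the algorithm of [29] nor the GDAL routine behind the QGIS polygonize tool, and it does not group hole rings with their outer ring.

```python
from collections import defaultdict
import numpy as np

def mask_to_rings(mask):
    """Trace the boundary of the 1-pixels of a binary mask into closed rings."""
    m = np.asarray(mask, dtype=bool)
    h, w = m.shape

    def fg(r, c):
        return 0 <= r < h and 0 <= c < w and bool(m[r, c])

    out = defaultdict(list)  # directed boundary edges: start vertex -> end vertices
    for r, c in zip(*np.nonzero(m)):
        r, c = int(r), int(c)
        # keep only pixel sides facing the background, walked top, right, bottom, left
        if not fg(r - 1, c):
            out[(c, r)].append((c + 1, r))
        if not fg(r, c + 1):
            out[(c + 1, r)].append((c + 1, r + 1))
        if not fg(r + 1, c):
            out[(c + 1, r + 1)].append((c, r + 1))
        if not fg(r, c - 1):
            out[(c, r + 1)].append((c, r))

    rings = []
    while out:
        start = v = next(iter(out))
        ring = [start]
        while True:  # follow unused edges until the ring closes
            nxt = out[v].pop()
            if not out[v]:
                del out[v]
            if nxt == start:
                break
            ring.append(nxt)
            v = nxt
        rings.append(_drop_collinear(ring))
    return rings

def _drop_collinear(ring):
    """Keep only corner vertices, where the boundary changes direction."""
    n = len(ring)
    keep = []
    for i in range(n):
        (x0, y0), (x1, y1), (x2, y2) = ring[i - 1], ring[i], ring[(i + 1) % n]
        if (x1 - x0) * (y2 - y1) - (y1 - y0) * (x2 - x1) != 0:
            keep.append(ring[i])
    return keep

def signed_area(ring):
    """Shoelace formula: positive for outer rings, negative for holes here."""
    n = len(ring)
    return 0.5 * sum(ring[i][0] * ring[(i + 1) % n][1] - ring[(i + 1) % n][0] * ring[i][1]
                     for i in range(n))

mask = np.zeros((5, 5), dtype=np.uint8)
mask[1:4, 1:4] = 1
mask[2, 2] = 0  # a hole in the middle of the region
rings = mask_to_rings(mask)
print(sorted(signed_area(r) for r in rings))  # [-1.0, 9.0]
```

Since every traced edge keeps the region on the same side, outer boundaries come out with positive area and holes with negative area, so the signed areas of all rings add up to the pixel count of the mask (here 9 - 1 = 8).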

3.5. Methodology in QGIS

In the first phase of the methodology, the raster images are inserted into QGIS using a reference system, such as WGS84 (EPSG:4326), and associating a worldfile with each image. A worldfile is a plain-text sidecar file of six lines used by geographic information systems to georeference raster map images. The file specification was introduced by Esri® and consists of the six coefficients of an affine transformation that describes the position, scale, and rotation of a raster on a map. This procedure makes the starting data mutually consistent, geometrically correct, and divided into different layers according to the acquisition mode.
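The six values of a worldfile define the affine transformation X = A*col + B*row + C, Y = D*col + E*row + F, written in the file in the order A, D, B, E, C, F. The sketch below parses a worldfile and maps pixel indices to map coordinates; the function names and the sample values are hypothetical, and QGIS reads worldfiles natively, so the code only shows what the numbers mean.

```python
def read_worldfile(text):
    """Parse the six numbers of a world file (illustrative helper).

    Esri line order: A, D, B, E, C, F
      A: pixel size along x         D: rotation term (y per column)
      B: rotation term (x per row)  E: pixel size along y, usually negative
      C, F: map x and y of the centre of the upper-left pixel
    Returns the coefficients reordered as (A, B, C, D, E, F).
    """
    values = [float(token) for token in text.split()]
    if len(values) != 6:
        raise ValueError("a world file must contain exactly six numbers")
    a, d, b, e, c, f = values
    return a, b, c, d, e, f

def pixel_to_map(col, row, coeffs):
    """Affine transform: X = A*col + B*row + C, Y = D*col + E*row + F."""
    a, b, c, d, e, f = coeffs
    return a * col + b * row + c, d * col + e * row + f

# Hypothetical world file: 2 map units per pixel, no rotation
wld = "2.0\n0.0\n0.0\n-2.0\n1.0\n99.0\n"
print(pixel_to_map(10, 5, read_worldfile(wld)))  # (21.0, 89.0)
```

The negative E reflects that row indices grow downward while map y grows upward.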

The second stage involves the analysis of all diagnostic images simultaneously. The PCA algorithm available in QGIS (GRASS tool i.pca, among the Processing tools for raster analysis) is adequate for our purposes, while ICA would require new modules to be implemented in Python. Alternatively, both algorithms can be implemented entirely in MATLAB (Version 9.7 R2019b, MathWorks, Natick, MA, USA) [44].
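To make the principle of stage 2 concrete, the sketch below computes principal components of co-registered bands with NumPy: each pixel is treated as a vector of band values, the band covariance matrix is diagonalized, and the pixels are projected onto its eigenvectors, giving uncorrelated component images ordered by decreasing variance. It is an illustration under our assumptions (at least two bands, no output rescaling), not a reimplementation of GRASS i.pca or of the MATLAB code.

```python
import numpy as np

def pca_bands(bands):
    """Principal components of co-registered image bands (illustrative sketch).

    bands -- array of shape (n_bands, height, width), n_bands >= 2
    Returns component images of the same shape, ordered by decreasing
    variance, and the variance of each component.
    """
    stack = np.asarray(bands, dtype=float)
    n, h, w = stack.shape
    X = stack.reshape(n, -1).T            # one row per pixel, one column per band
    X = X - X.mean(axis=0)                # centre each band
    cov = np.cov(X, rowvar=False)         # n x n band covariance matrix
    eigval, eigvec = np.linalg.eigh(cov)  # ascending order for symmetric matrices
    order = np.argsort(eigval)[::-1]
    eigval, eigvec = eigval[order], eigvec[:, order]
    comps = X @ eigvec                    # project pixels onto the eigenvectors
    return comps.T.reshape(n, h, w), eigval

rng = np.random.default_rng(0)
base = rng.random((20, 20))
bands = np.stack([base,
                  2.0 * base + 0.01 * rng.random((20, 20)),  # strongly correlated band
                  rng.random((20, 20))])                      # unrelated band
components, variances = pca_bands(bands)
```

With two nearly redundant bands, most of their shared variance collects in the first component, which is why the leading PCA outputs tend to summarize the data set and the later ones isolate weaker features.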

The third stage, binarization, is performed individually on each output image. The conservator-restorer chooses among the outputs those that best meet their information needs. The thresholding algorithm and the morphological functions are available in QGIS among the raster analysis processing tools; thresholding, for instance, can be carried out with Reclassify by layer or Reclassify by table. QGIS then performs the raster-to-vector conversion of each binary mask extracted in the third stage; the tool is found in the main menu under Raster > Conversion > Polygonize (Raster to Vector). This conversion is very fast even with complex files and produces vector polygons that faithfully follow the edges of the areas of interest recognized and highlighted by the diagnostic investigations.

All the stages of the second phase, concerning classification, analysis, and storage, are performed with the computing tools of QGIS to create a database that supports measurements and statistical analysis. The output is thus a detailed and customized description of each polygon created, possibly in different file formats, according to the documentation’s needs. Each extracted polygon is classified into categories and subcategories through an attribute table, which allows different types of analysis, such as damage assessment and risk evaluation, to be conducted quantitatively and objectively.

4. Case Study

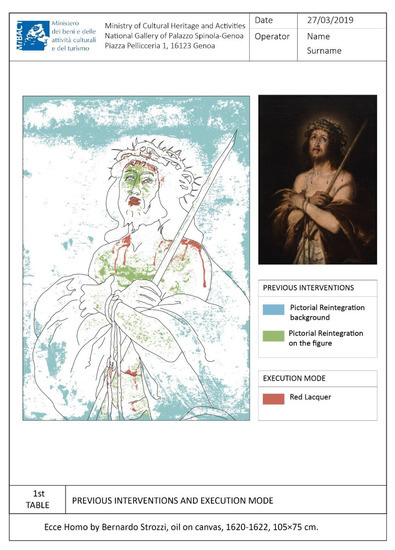

The methodology’s effectiveness is demonstrated on a canvas painting by showing that the thematic map that is typically drawn manually can be derived through the stages described above. The chosen artwork is Ecce Homo by Bernardo Strozzi, an oil painting on canvas, 1620–1622, measuring 105 × 75 cm, which is in a good state of conservation. It was recently restored: the surface paint layer was cleaned and earlier restoration interventions were removed, after which pictorial retouching was carried out. For all phases of our methodology, apart from stage 2, which requires MATLAB for the segmentation algorithms, we used the open-source software QGIS (version 3.10.2 ‘A Coruña’, with GRASS 7.8.2).

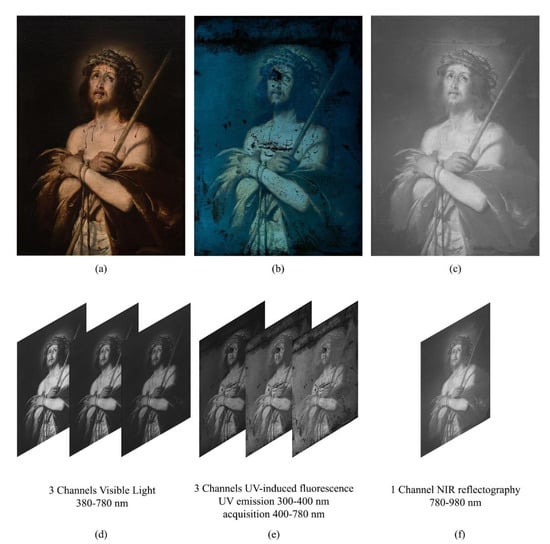

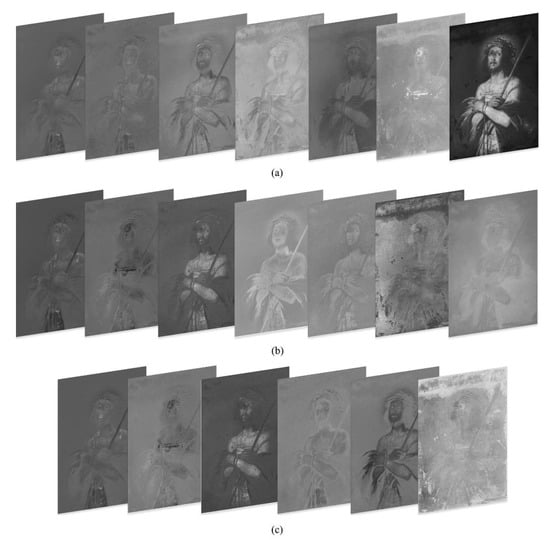

Following stage 1 of the proposed method, the painting was captured in three modalities: visible light illumination (Figure 2a), UV-induced fluorescence (Figure 2b), and near-infrared reflectography at 780–980 nm (Figure 2c). The visible stray light was subtracted from the fluorescence image to highlight the regions that actually fluoresce under ultraviolet illumination (see [19,45] for details). In the second stage, the three images were processed by PCA and ICA; the output images are shown in Figure 3a,b. ICA was also applied to a subset of six channels obtained by excluding the infrared, resulting in the outputs of Figure 3c. It should be stressed that each image produced by the statistical processing no longer corresponds to a specific wavelength range but is a recombination of the input intensities, highlighting one or more of the ROIs sought, which appear in different gray levels. The 20 output images in Figure 3a–c form the new data set that the restorer can inspect for study and feature recognition.
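ICA outputs such as those in Figure 3b,c are obtained by unmixing the channels into statistically independent components. As a hedged illustration, the NumPy sketch below whitens the data and then applies the one-unit FastICA fixed-point iteration with a tanh nonlinearity and deflation, in the spirit of the algorithm in [44]; it is not the MATLAB code used for the figures, and the iteration limits, the synthetic sources, and the mixing matrix are assumptions.

```python
import numpy as np

def fast_ica(X, n_components=None, max_iter=200, tol=1e-6, seed=0):
    """Deflationary FastICA sketch. X: (n_samples, n_channels) -> estimated sources."""
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)
    # whitening: rotate and scale so that the channel covariance becomes identity
    d, E = np.linalg.eigh(np.cov(Xc, rowvar=False))
    order = np.argsort(d)[::-1]
    d, E = d[order], E[:, order]
    k = n_components or X.shape[1]
    Z = Xc @ (E[:, :k] / np.sqrt(d[:k]))
    rng = np.random.default_rng(seed)
    W = np.zeros((k, k))
    for p in range(k):
        w = rng.standard_normal(k)
        w /= np.linalg.norm(w)
        for _ in range(max_iter):
            g = np.tanh(Z @ w)
            # fixed-point update: E{z g(w'z)} - E{g'(w'z)} w
            w_new = (Z * g[:, None]).mean(axis=0) - (1.0 - g ** 2).mean() * w
            w_new -= W[:p].T @ (W[:p] @ w_new)  # deflation: stay orthogonal to found rows
            w_new /= np.linalg.norm(w_new)
            converged = abs(abs(w_new @ w) - 1.0) < tol
            w = w_new
            if converged:
                break
        W[p] = w
    return Z @ W.T

rng = np.random.default_rng(0)
n = 5000
S = np.column_stack([rng.uniform(-1.0, 1.0, n),                # synthetic source 1
                     np.sign(np.sin(np.linspace(0.0, 60.0, n)))])  # synthetic source 2
X = S @ np.array([[1.0, 0.6], [0.5, 1.0]]).T                   # two mixed "channels"
Y = fast_ica(X)
corr = np.corrcoef(np.column_stack([Y, S]), rowvar=False)[:2, 2:]
```

ICA recovers the sources only up to order, sign, and scale, which is one reason the output images must be inspected and selected by the restorer rather than read as fixed wavelength channels.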

Figure 2.

Phase I, stage 1: Ecce Homo by Bernardo Strozzi, oil on canvas, 1620–1622, 105 × 75 cm: (a) Standard RGB; (b) UV-induced fluorescence; (c) NIR reflectography; (d–f) Respective spectral channels of the acquired images. Images captured by Paolo Triolo, under permission of the Ministry of Cultural Heritage and Activities and Tourism, National Gallery of Palazzo Spinola, Genova, Italy.

Figure 3.

Phase I, stage 2: (a) Output channels obtained by principal component analysis (PCA) from the entire data set in Figure 2; (b) Output channels obtained by independent component analysis (ICA) from the entire data set in Figure 2; (c) Output channels obtained by ICA from the multispectral cube with no IR data in Figure 2a,b,d,e.

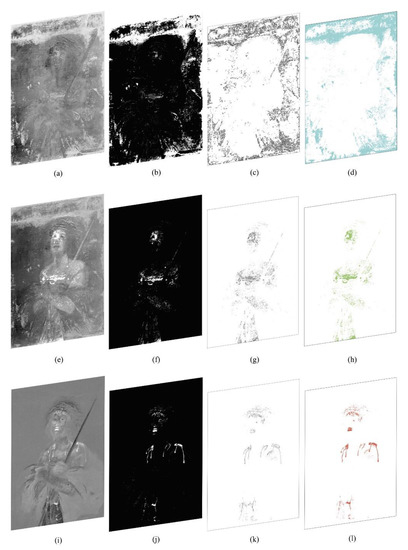

After inspecting the output images, the restorer chose those in which the significant regions are most noticeable. These images, reported in Figure 4a,e,i, show some features related to the materials used: Figure 4a highlights the pictorial retouches on the background and Figure 4e those on the body of Christ. In the original data these two features appeared superimposed in the same spectral channel, whereas in this phase they are separated into different outputs, even though the retouches were carried out at the same time. We therefore attribute the separation to the different pigments used for retouching: the figure of Christ was likely restored using titanium white, and the background using varnish colors. Even without (destructive) chemical analysis or any other more specialized technique, image processing has allowed us to distinguish between regions that look similar in the acquired channels. Analogously, in Figure 4i–l, the red lake used for the blood of Christ is highlighted.

Figure 4.

Phase I stages 3–4: (a,e,i) Images processed from the previous stage (in Figure 3) and chosen to identify regions of interest (ROIs); (b,f,j) Corresponding binarized versions; (c,g,k) Image polygonization, raster to vector conversion. Phase II stage 5: (d,h,l) Characterization of the extracted polygons.

The third stage of the methodology, the creation of binary masks through the threshold algorithm, was carried out only on the three outputs chosen by the restorer. In our case, on the 0–255 gray scale, we chose a threshold T = 180; the results are shown in Figure 4b,f,j. For stage 3, we used the QGIS Value Tool plugin to choose the threshold value, and the raster analysis processing tools for reclassification (by layer or by table) to create the binary masks.
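Stage 2 outputs are real-valued, so a threshold such as T = 180 presupposes that each chosen output has first been mapped to the 0–255 range. The sketch below assumes a linear min-max stretch, which the text does not specify; the stretch actually applied in QGIS or MATLAB may differ, and with a different stretch the same T would select a different region. The helper names are hypothetical.

```python
import numpy as np

def to_uint8(img):
    """Linearly stretch a real-valued image to the 0-255 gray scale (min-max)."""
    img = np.asarray(img, dtype=float)
    lo, hi = img.min(), img.max()
    if hi == lo:  # flat image: nothing to separate
        return np.zeros(img.shape, dtype=np.uint8)
    return np.round(255.0 * (img - lo) / (hi - lo)).astype(np.uint8)

def binary_mask(img, t=180):
    """1 where the stretched gray level exceeds t, else 0."""
    return (to_uint8(img) > t).astype(np.uint8)

component = np.array([[-2.0, -1.0], [0.0, 3.0]])  # toy stage-2 output
print(to_uint8(component).tolist())     # [[0, 51], [102, 255]]
print(binary_mask(component).tolist())  # [[0, 0], [0, 1]]
```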

The fourth stage consists of the automatic extraction of the drawing that forms the thematic map. The binary masks were converted from raster to vector drawings (Figure 4c,g,k), automatically yielding closed vector polygons that comply with the topological rules of adjacency and overlap. For stage 4, we used the QGIS Polygonize (Raster to Vector) tool.

In the stages of the second phase, each extracted polygon is then assigned to a corresponding ROI layer and characterized by a distinct color and texture, which make up the legend of the thematic map (see Figure 4d,h,l and Figure 5). In particular, for stage 5, we used the layer styling tools of QGIS. The area of the polygons in each ROI layer was computed as a percentage of the total area of interest and stored, layer by layer, in the attribute table. Because the database and the drawing are linked, the data can be queried directly by selecting elements of the drawing, and the legend and the results of the statistical analysis can be exported automatically at the print layout stage. QGIS offers a useful and versatile layout window for composing complex sheets, which can be saved as templates for future reuse. The metadata have been included in the layout, which contains the institution’s logo, the author’s name, the dimensional characteristics of the object, the table number, the date, the documentation operator, and a legend (or glossary) linked to the drawing. This type of metric result is useful for monitoring, conservation, and restoration (see Figure 5). For this case study, we chose to show only the front of the artifact, as the back and side profile of the canvas did not show any features of interest.
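The percentages stored in the attribute table amount to simple arithmetic on polygon areas. The sketch below, with hypothetical layer names and pixel counts, converts pixel areas to cm² from the pixel size (for a 300 dpi reproduction at 1:1 scale, one pixel side is 2.54/300 cm, about 0.0085 cm) and expresses each layer as a percentage of the total area of interest, here assumed to be the whole 105 × 75 cm canvas. In QGIS the same figures would come from the field calculator (the `$area` expression) rather than from this code.

```python
def area_summary(layers_px, pixel_size_cm, total_area_cm2):
    """Area and share of each ROI layer (hypothetical helper).

    layers_px      -- dict: layer name -> list of polygon areas in pixels
    pixel_size_cm  -- side of one pixel in cm on the real object
    total_area_cm2 -- area of the whole region of interest in cm^2
    """
    px_area = pixel_size_cm ** 2
    table = {}
    for name, areas in layers_px.items():
        area_cm2 = sum(areas) * px_area
        table[name] = {
            "polygons": len(areas),
            "area_cm2": area_cm2,
            "percent": 100.0 * area_cm2 / total_area_cm2,
        }
    return table

# Hypothetical pixel counts for two retouch layers on a 105 x 75 cm canvas
# reproduced at 1:1 scale and 300 dpi (one pixel side = 2.54 / 300 cm)
summary = area_summary(
    {"retouch_background": [120000, 45000], "retouch_body": [30000]},
    pixel_size_cm=2.54 / 300,
    total_area_cm2=105 * 75,
)
for name, row in summary.items():
    print(f"{name}: {row['polygons']} polygons, "
          f"{row['area_cm2']:.2f} cm2, {row['percent']:.3f} %")
```

The conversion is only as reliable as the pixel size, which is why the reproduction must respect the real dimensions of the artwork.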

Figure 5.

Thematic map: specific colors are assigned to the ROIs (statistical data are omitted).

5. Conclusions

We examined some of the problems concerning graphic documentation in cultural heritage, i.e., the difficulty of analyzing diagnostic images, the excessive subjectivity and approximation in transcribing the relevant information, and the complexity and long time required by manual transcription procedures. These problems lead restorers to document only the essential information, which makes the graphic documentation incomplete and far from reproducible. There is currently no official or de facto methodological standard that considers all the possibilities offered by image processing, scientific visualization, and commercially available software tools. We therefore propose a semi-automated methodology that facilitates and improves the diagnostic investigation while drastically reducing manual intervention. Its application yields objective, formal, and accurate graphic documentation for planning restoration, monitoring, and conservation interventions.

Moreover, as a novelty in this field, image segmentation algorithms have demonstrated their potential to reduce subjectivity and accelerate the entire process. The time needed to apply the entire methodology depends on two factors. The first is the performance of the device in use: all the algorithms used require short computation times, ranging from about 4–5 s to 1–5 min. These times were measured at a spatial resolution of 300 dpi on a low- to medium-power device. The power of the device’s graphics card and a large number of images to be examined are the only two factors that can increase the computation time of the BSS algorithms. The second factor is the operator’s ability to use the software, together with the number of thematic maps to be produced. Applying the entire methodology requires a medium to advanced knowledge of the software mentioned. Furthermore, the time needed to create the thematic maps depends on the user’s own reasoning time and on the features to be extracted and documented.

Being based on image analysis, the methodology can be applied to any surface, regardless of the spatial complexity of the object or its extent. However, to obtain real measurements, the photographic reproduction of the artifact must respect its real dimensions with the minimum margin of error.

To date, QGIS has proved to be the most appropriate tool to support each step of the methodology, as it provides the operator with all the necessary image analysis tools, combining high potential and ease of use. In an earlier, empirical, and preliminary study preceding the formal methodology [46], we tested BSS algorithms coupled with neural networks. As future work, we plan to investigate additional algorithms and, to ensure a more general applicability of the results, to replicate the experiment on a larger number of case studies and to implement well-known blind methods to assess the reliability and stability of the results achieved. Another future development could be to integrate our method with recent research that seeks to overcome some of the limitations of documentation by offering web-based platforms able to perform the survey (mapping) of conservation, restoration, and preservation operations in a single environment, also by exploiting three-dimensional models [47,48,49,50].

Author Contributions

Conceptualization, A.A. (Annamaria Amura), A.A. (Alessandro Aldini) and A.T.; methodology, A.A. (Annamaria Amura), S.P., E.S., A.T. and P.T.; software, A.A. (Annamaria Amura), and A.T.; validation, A.A. (Annamaria Amura), A.T. and P.T.; formal analysis, E.S. and A.T.; data curation, P.T. and A.A. (Annamaria Amura); writing—original draft preparation, A.A. (Annamaria Amura); writing—review and editing, A.A. (Annamaria Amura), A.A. (Alessandro Aldini) and E.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to produce the results shown here have been acquired by one of the authors (P.T.) under permission of the Ministry of Cultural Heritage and Activities and Tourism, National Gallery of Palazzo Spinola, Genova, Italy, which maintains all the property rights.

Acknowledgments

The authors are grateful to the QGIS Italia community for their technical support in adapting the software to the needs of the methodology presented.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Normal 17/84: Elementi Metrologici e Caratteristiche Dimensionali: Determinazione Grafica., in Raccomandazioni Normal. Alterazioni dei Materiali Lapidei e Trattamenti Conservativi: Proposte per L’unificazione dei Metodi Sperimentali di Studio e di Controllo; Consiglio Nazionale delle Ricerche (CNR), Istituto Centrale per il Restauro (ICR): Roma, Italy, 1984.

- Sacco, F. Sistematica Della Documentazione e Progetto di Restauro; Boll. ICR. Nuova Ser. N.4; ICR: Roma, Italy, 2002; pp. 28–50.

- Bezzi, L.; Bezzi, A. Proposta per un metodo informatizzato di disegno archeologico Il disegno archeologico. In Proceedings of the ArcheoFOSS. Open Source, Free Software e Open Format nei Processi di Ricerca Archeologici, Foggia, Italy, 5–6 May 2010; De Felice, G., Sibilano, M.G., Eds.; Edipuglia: Puglia, Italy, 2011; pp. 113–123.

- Agosto, E.; Ardissone, P.; Bornaz, L.; Dago, F. 3D Documentation of Cultural Heritage: Design and Exploitation of 3D Metric Surveys. In Applying Innovative Technologies in Heritage Science; George Pavlidis (Athena Research and Innovation Center in Information, Communication and Knowledge Technologies, Greece); IGI Global: Hershey, PA, USA, 2020; pp. 1–15.

- ICCD-Istituto Centrale per il Catalogo e la Documentazione. La Documentazione Fotografica Delle Schede di Catalogo: Metodologie e Tecniche di Ripresa; Galasso, R., Giffi, E., Eds.; ICCD: Roma, Italy, 1998; ISBN IEI0127676.

- International Organization for Standardization (ISO). ISO 13567-1:1998. Technical Product Documentation; Organization and Naming of Layers for CAD. Part 1: Overview and Principles; ISO: Geneva, Switzerland, 1998.

- Eiteljorg, H., II. Documentation with CAD. In Boll. Dell’istituto Cent. per Restauro; ICR: Roma, Italy, 2002; Volume 5, pp. 45–50.

- ISO 13567-1 Technical Product Documentation. Organization and Naming of Layers for CAD. Part 1: Overview and Principles. 1998. Available online: https://www.iso.org/obp/ui/#iso:std:iso:13567:-1:ed-1:en (accessed on 10 March 2021).

- Rinaudo, F.; Eros, A.; Ardissone, P. Gis and Web-Gis, Commercial and Open Source Platforms: General Rules for Cultural Heritage Documentation. In Proceedings of the XXI International CIPA Symposium, Athens, Greece, 1–6 October 2007.

- Rajcic, V. Risks and resilience of cultural heritage assets. In Proceedings of the SBE 16 Malta Europe and the Mediterranean Towards a Sustainable Built Environment; Borg, R.P., Gauci, P., Staines, C.S., Eds.; SBE Malta: Msida, Malta, 2016; pp. 325–334.

- Fabiani, F.; Grilli, R.; Musetti, V. Verso nuove modalità di gestione e presentazione della documentazione di restauro: SICaR web la piattaforma in rete del Ministero dei Beni e delle Attività Culturali e del Turismo. Boll. Ing. Coll. Degli Ing. Della Toscana 2016, 3, 3–13.

- Baratin, L.; Bertozzi, S.; Moretti, E.; Saccuman, R. GIS applications for a new approach to the analysis of panel paintings. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); LNCS; Springer: Berlin/Heidelberg, Germany, 2016; Volume 10058, pp. 711–723.

- Fuentes-Porto, A. La Tecnología Sig Al Servicio De La Cuantificación Numérica Del Deterioro En Superficies Pictóricas. Un Paso Más Hacia La Objetivización De Los Diagnósticos Patológicos. In Proceedings of the V Congreso Grupo Español del IIC. Patrimonio Cultural, Criterios de Calidad en Intervenciones, Madrid, Spain, 18–20 April 2012; GE publicaciones: Madrid, Spain, 2012; pp. 363–369.

- Henriques, F.; Gonçalves, A.; Bailão, A. Tear feature extraction with spatial analysis: A thangka case study. Estud. Conserv. Restauro 2013, 1, 10–23.

- Henriques, F.; Gonçalves, A. Analysis of lacunae and retouching areas in panel paintings using landscape metrics. In Lect. Notes Comput. Sci. (including Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinformatics); LNCS; Springer: Berlin/Heidelberg, Germany, 2010; Volume 6436, pp. 99–109.

- Congedo, L.; Macchi, S. Semi-Automatic Plugin Classificazione per QGIS; ACC Dar: Roma, Italy, 2013; Available online: http://www.planning4adaptation.eu/ (accessed on 10 March 2021).

- Gugnali, S.; Mandolesi, L.; Drudi, V.; Miulli, A.; Maioli, M.G.; Frelat, M.A.; Gruppioni, G. Design and implementation of an open source G.I.S. platform for management of anthropological data. J. Biol. Res. 2012, 85, 350–353.

- Dyer, J.; Verri, G.; Cupitt, J. (Eds.) Multispectral Imaging in Reflectance and Photo-Induced Luminescence Modes: A User Manual, 1st ed.; Online: European CHARISMA Project.: Web publication/Site; British Museum: London, UK, 2013.

- Triolo, P.A.M. Manuale Pratico di Documentazione e Diagnostica per Immagine per i BB.CC; Il Prato: Padova, Italy, 2019.

- Verri, G.; Clementi, C.; Comelli, D.; Cather, S.; Piqué, F. Correction of ultraviolet-induced fluorescence spectra for the examination of polychromy. Appl. Spectrosc. 2008, 62, 1295–1302.

- Verri, G.; Comelli, D.; Cather, S.; Saunders, D.; Piqué, F. Post-capture data analysis as an aid to the interpretation of ultraviolet-induced fluorescence images. In SPIE 6810 Computer Image Analysis in the Study of Art; Stork, D.G., Coddington, J., Eds.; SPIE: Bellingham, WA, USA, 2008; Volume 6810, pp. 1–12.

- Zhao, Y. Image Segmentation and Pigment Mapping of Cultural Heritage Based on Spectral Imaging; Rochester Institute of Technology: New York, NY, USA, 2008.

- Legnaioli, S.; Lorenzetti, G.; Cavalcanti, G.H.; Grifoni, E.; Marras, L.; Tonazzini, A.; Salerno, E.; Pallecchi, P.; Giachi, G.; Palleschi, V. Recovery of archaeological wall paintings using novel multispectral imaging approaches. Herit. Sci. 2013, 1, 33.

- Capobianco, G.; Prestileo, F.; Serranti, S.; Bonifazi, G. Application of hyperspectral imaging for the study of pigments in paintings. In Proceedings of the 6th International Congress “Science and Technology for the Safeguard of Cultural Heritage in the Mediterranean Basin”, Athens, Greece, 22–25 October 2013; Available online: https://www.researchgate.net/profile/Giuseppe-Capobianco-2/publication/261223438_APPLICATION_OF_HYPERSPECTRAL_IMAGING_FOR_THE_STUDY_OF_PIGMENTS_IN_PAINTINGS/links/0a85e533a74e02f2fb000000/APPLICATION-OF-HYPERSPECTRAL-IMAGING-FOR-THE-STUDY-OF-PIGMENTS-IN-PAINTINGS.pdf (accessed on 10 March 2021).

- Salerno, E.; Tonazzini, A.; Bedini, L. Digital image analysis to enhance underwritten text in the Archimedes palimpsest. Int. J. Doc. Anal. Recognit. 2007, 9, 79–87.

- Salerno, E.; Tonazzini, A.; Grifoni, E.; Lorenzetti, G.; Legnaioli, S.; Lezzerini, M.; Marras, L.; Pagnotta, S.; Palleschi, V. Analysis of Multispectral Images in Cultural Heritage and Archaeology. J. Appl. Spectrosc. 2014, 1, 22–27. Available online: http://www.alslab.net/wp-content/uploads/2018/03/JALS-1-art.4.pdf (accessed on 10 March 2021).

- Cichocki, A.; Amari, S. Adaptive Blind Signal and Image Processing: Learning Algorithms and Applications; Wiley: New York, NY, USA, 2002; ISBN 978-0-471-60791-5.

- Tonazzini, A.; Bedini, L.; Salerno, E. Independent component analysis for document restoration. J. Doc. Anal. Recognit. 2004, 7, 17–27.

- Junhua, T.; Fahui, W.; Yu, L. An Efficient Algorithm for Raster-to-Vector Data Conversion. Geogr. Inf. Sci. 2008, 14, 54–62.

- Zitová, B.; Flusser, J. Image registration methods: A survey. Image Vis. Comput. 2003, 21, 977–1000.

- Luo, X.; Zhuang, X. MvMM-RegNet: A New Image Registration Framework Based on Multivariate Mixture Model and Neural Network Estimation. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); LNCS; Springer Science and Business Media Deutschland GmbH: Berlin, Germany, 2020; Volume 12263, pp. 149–159. ISBN 9783030597153.

- Salerno, E.; Tonazzini, A. Extracting erased text from palimpsests by using visible light. In Proceedings of the 4th Int. Congress Science and Technology for the Safeguard of Cultural Heritage in the Mediterranean Basin, Cairo, Egypt, 6–8 December 2009; Ferrari, A., Ed.; Associazione Investire in Cultura–Fondazione Roma Mediterraneo: Roma, Italy, 2010; Volume II, pp. 532–535.

- Jurio, A.; Pagola, M.; Galar, M.; Lopez-Molina, C.; Paternain, D. A comparison study of different color spaces in clustering based image segmentation. In International Conference on Information Processing and Management of Uncertainty in Knowledge-Based Systems; Springer: Berlin/Heidelberg, Germany, 2010; Volume 81, pp. 532–541.

- Stigell, P.; Miyata, K.; Hauta-Kasari, M. Wiener estimation method in estimating of spectral reflectance from RGB images. Pattern Recognit. Image Anal. 2007, 17, 233–242.

- Hyvärinen, A.; Karhunen, J.; Oja, E. Independent Component Analysis; John Wiley & Sons, Inc.: New York, NY, USA, 2001; ISBN 047140540X.

- Wu, B.; Chen, Y.; Chiu, C. Recursive Algorithms for Image Segmentation Based on a Discriminant Criterion. World Acad. Sci. Eng. Technol. Int. J. Comput. Inf. Eng. 2007, 1, 833–838.

- Singh, S.; Talwar, R. Performance analysis of different threshold determination techniques for change vector analysis. J. Geol. Soc. India 2015, 86, 52–58.

- Shih, F.Y. Image Processing and Mathematical Morphology: Fundamentals and Applications; CRC Press, Inc.: Boca Raton, FL, USA, 2009; ISBN 978-1-4200-8943.

- Kohonen, T. The Self-Organizing Map. Proc. IEEE 1990, 78, 1464–1480.

- Koh, J.; Suk, M.; Bhandarkar, S.M. A multilayer self-organizing feature map for range image segmentation. Neural Netw. 1995, 8, 67–86.

- Yeo, N.C.; Lee, K.H.; Venkatesh, Y.V.; Ong, S.H. Colour image segmentation using the self-organizing map and adaptive resonance theory. Image Vis. Comput. 2005, 23, 1060–1079.

- Azorin-Lopez, J.; Saval-Calvo, M.; Fuster-Guillo, A.; Mora-Mora, H.; Villena-Martinez, V. Topology preserving self-organizing map of features in image space for trajectory classification. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2015; Volume 9108, pp. 271–280.

- Zhou, G.; Pan, Q.; Yue, T.; Wang, Q.; Sha, H.; Huang, S.; Liu, X. Vector and Raster Data Storage Based on Morton Code. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-3, 2523–2526.

- Hyvärinen, A. Fast and Robust Fixed-Point Algorithms for Independent Component Analysis. IEEE Trans. Neural Netw. 1999, 10, 626–634.

- Triolo, P.A.M. Quattro opere a confronto. Interpretazioni e confronti delle analisi di diagnostica per immagine sulle tele di Bernardo Strozzi. In Bernardo Strozzi. Allegoria della Pittura; Zanelli, G., Ed.; Sagep: Genova, Italy, 2018.

- Amura, A.; Tonazzini, A.; Salerno, E.; Pagnotta, S.; Palleschi, V. Color segmentation and neural networks for automatic graphic relief of the state of conservation of artworks. Color Cult. Sci. J. 2020, 12, 7–15.

- Grilli, E.; Remondino, F. Classification of 3D digital heritage. Remote Sens. 2019, 11, 847.

- Apollonio, F.I.; Basilissi, V.; Callieri, M.; Dellepiane, M.; Gaiani, M.; Ponchio, F.; Rizzo, F.; Rubino, A.R.; Scopigno, R. A 3D-centered Information System for the documentation of a complex restoration intervention. J. Cult. Herit. 2018, 29, 89–99.

- Apollonio, F.I.; Gaiani, M.; Bertacchi, S. Managing cultural heritage with integrated services platform. In ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences; Copernicus GmbH: Göttingen, Germany, 2019; Volume 42, pp. 91–98.

- Soler, F.; Melero, F.J.; Luzón, M.V. A complete 3D information system for cultural heritage documentation. J. Cult. Herit. 2017, 23, 49–57.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).