Fast Blob and Air Line Defects Detection for High Speed Glass Tube Production Lines

Abstract

1. Introduction

2. Related Works

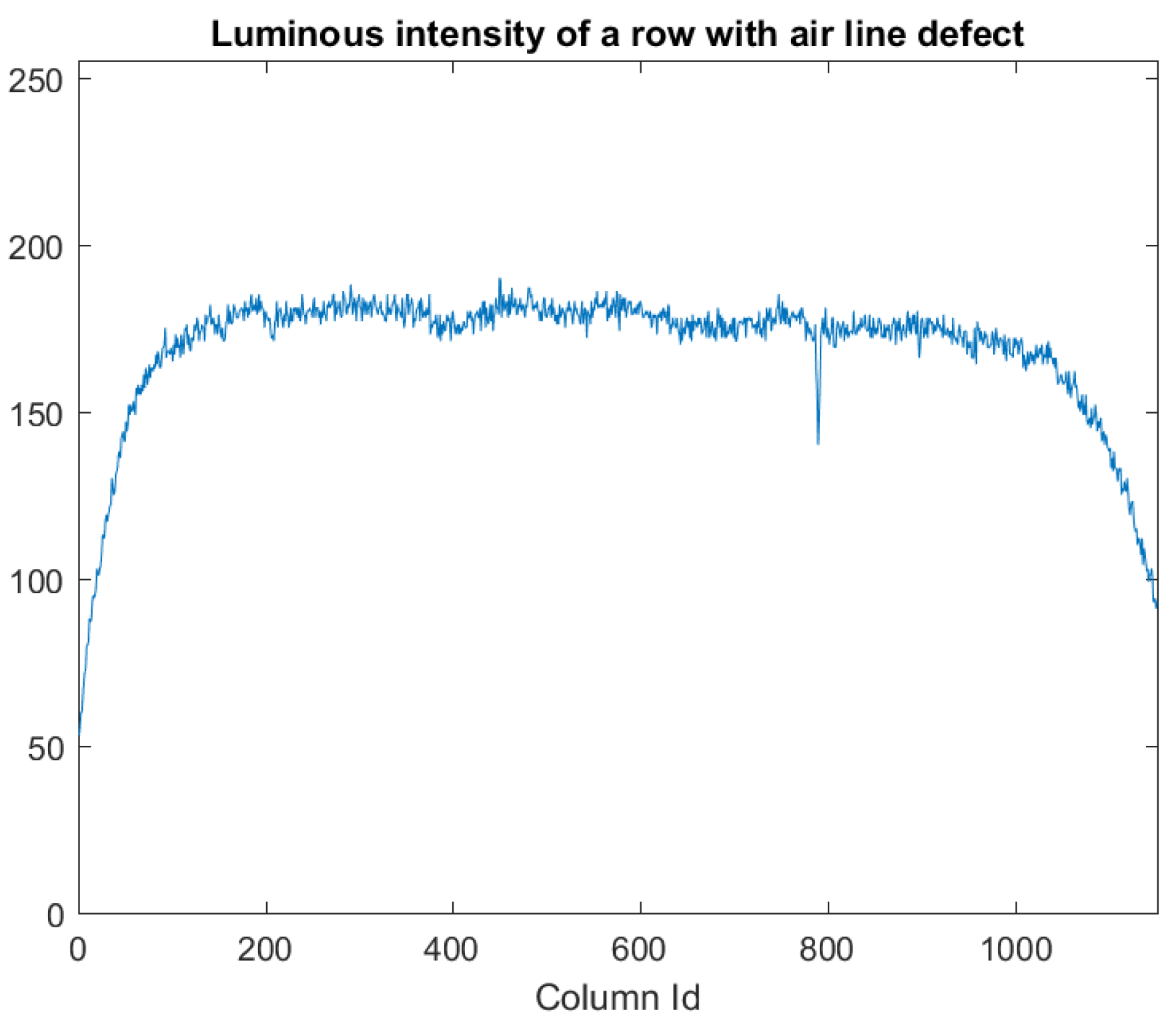

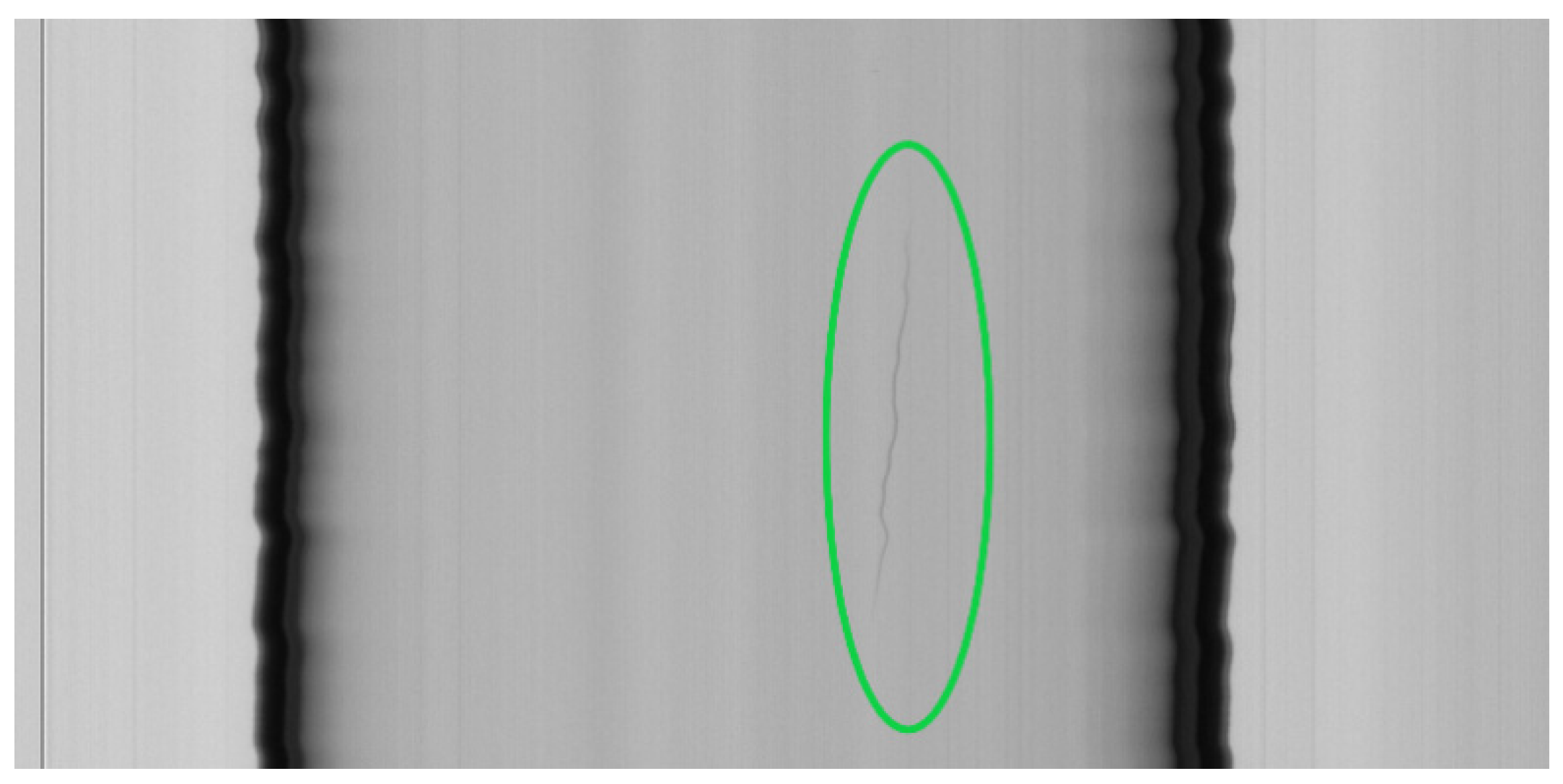

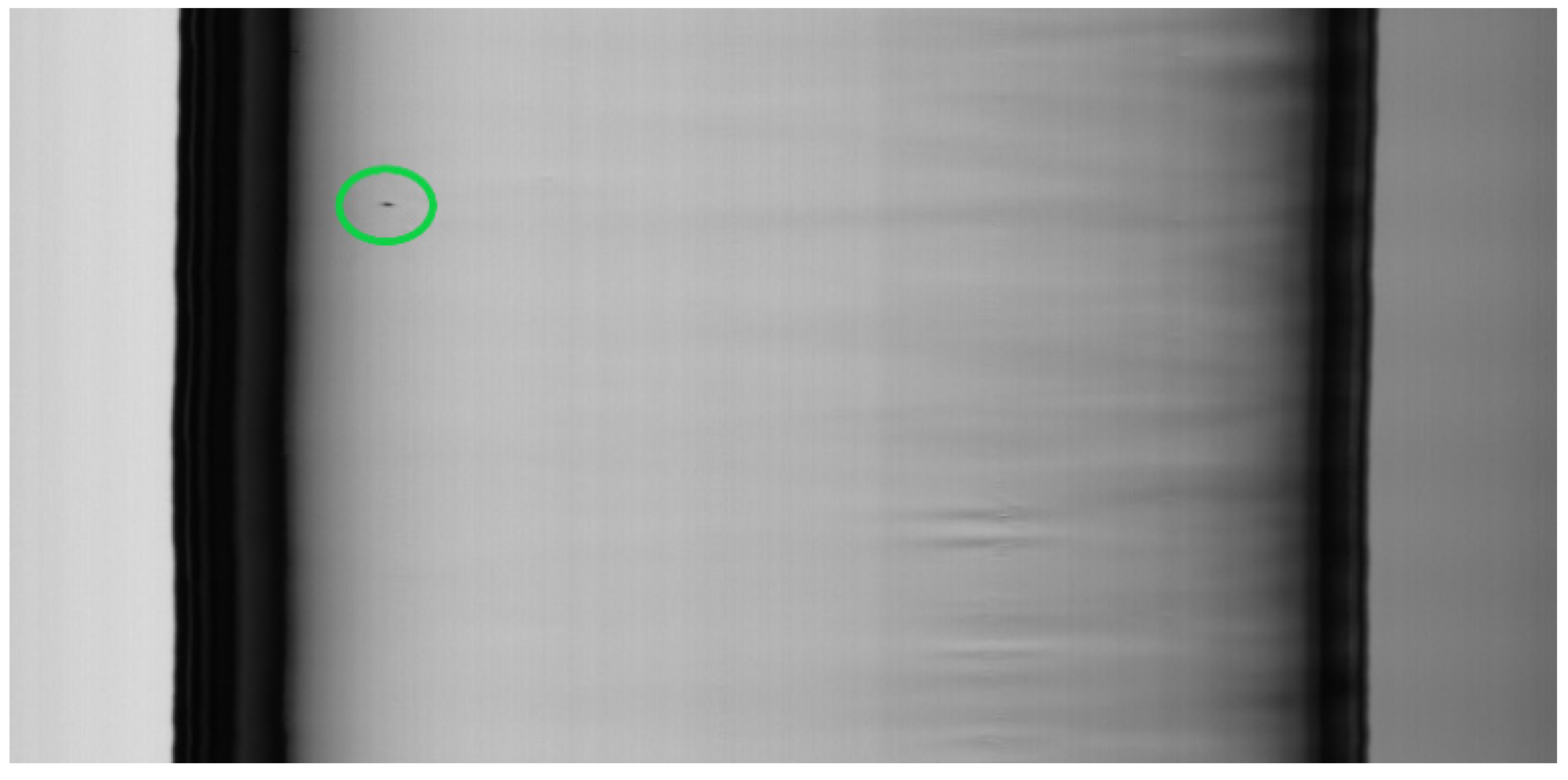

2.1. Related Inspection Systems

2.2. Algorithms

- Pre-processing stage;

- Defects detection stage;

- Defects classification stage.

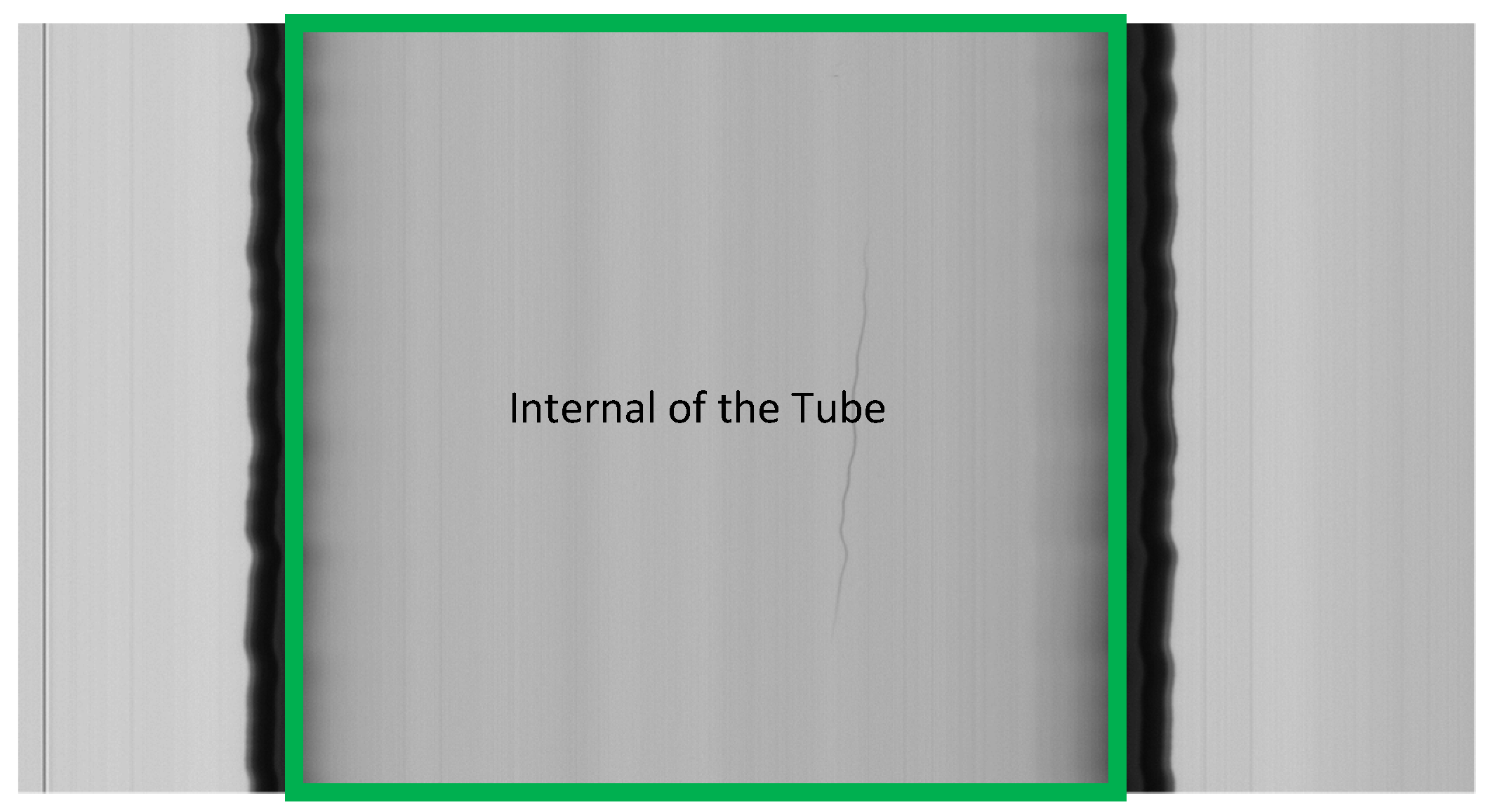

3. Image Capture and Processing

3.1. Image Acquisition Settings and Requirements on Performance and Quality

3.2. Rationale of the Proposal

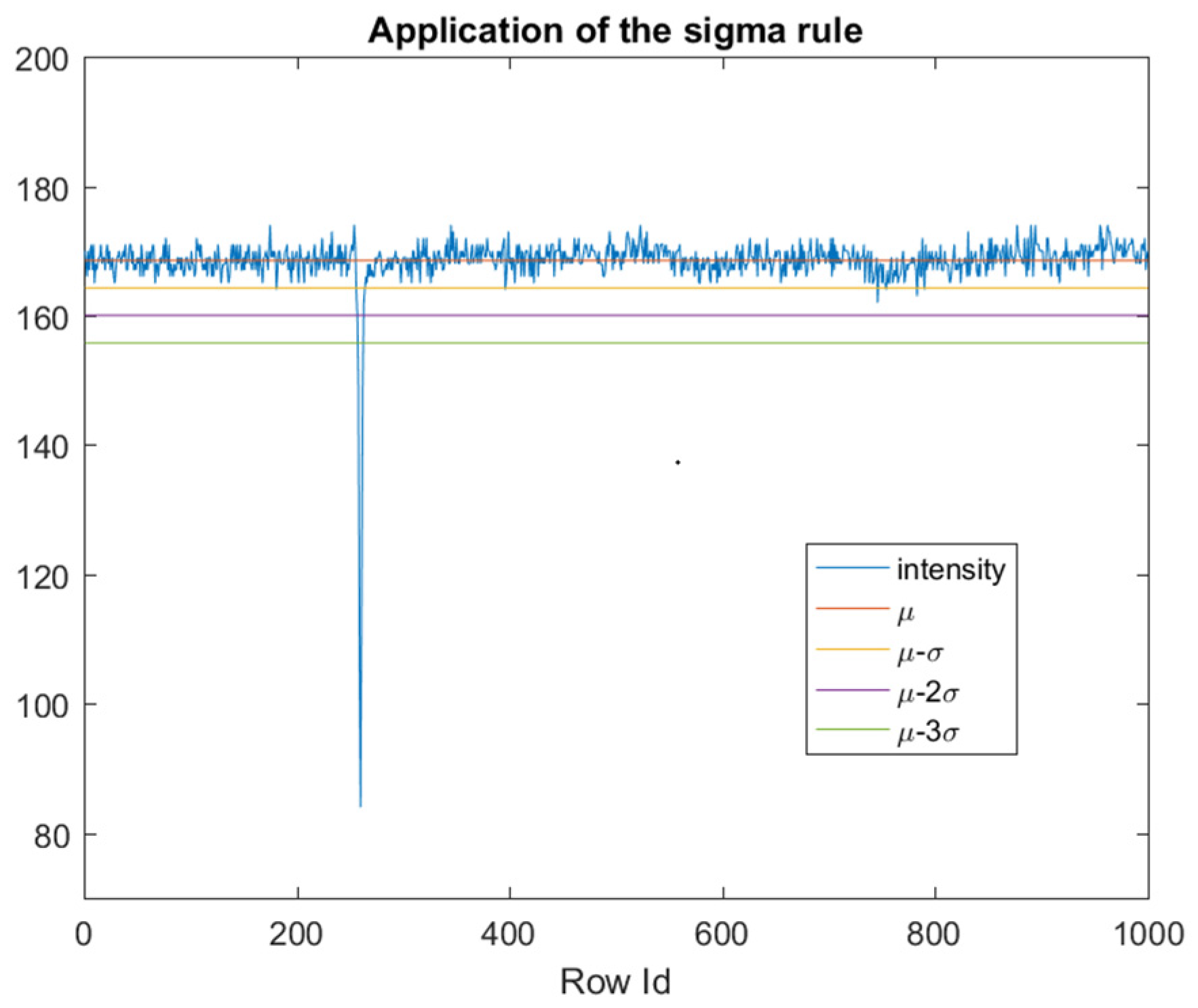

4. The Sigma Algorithm

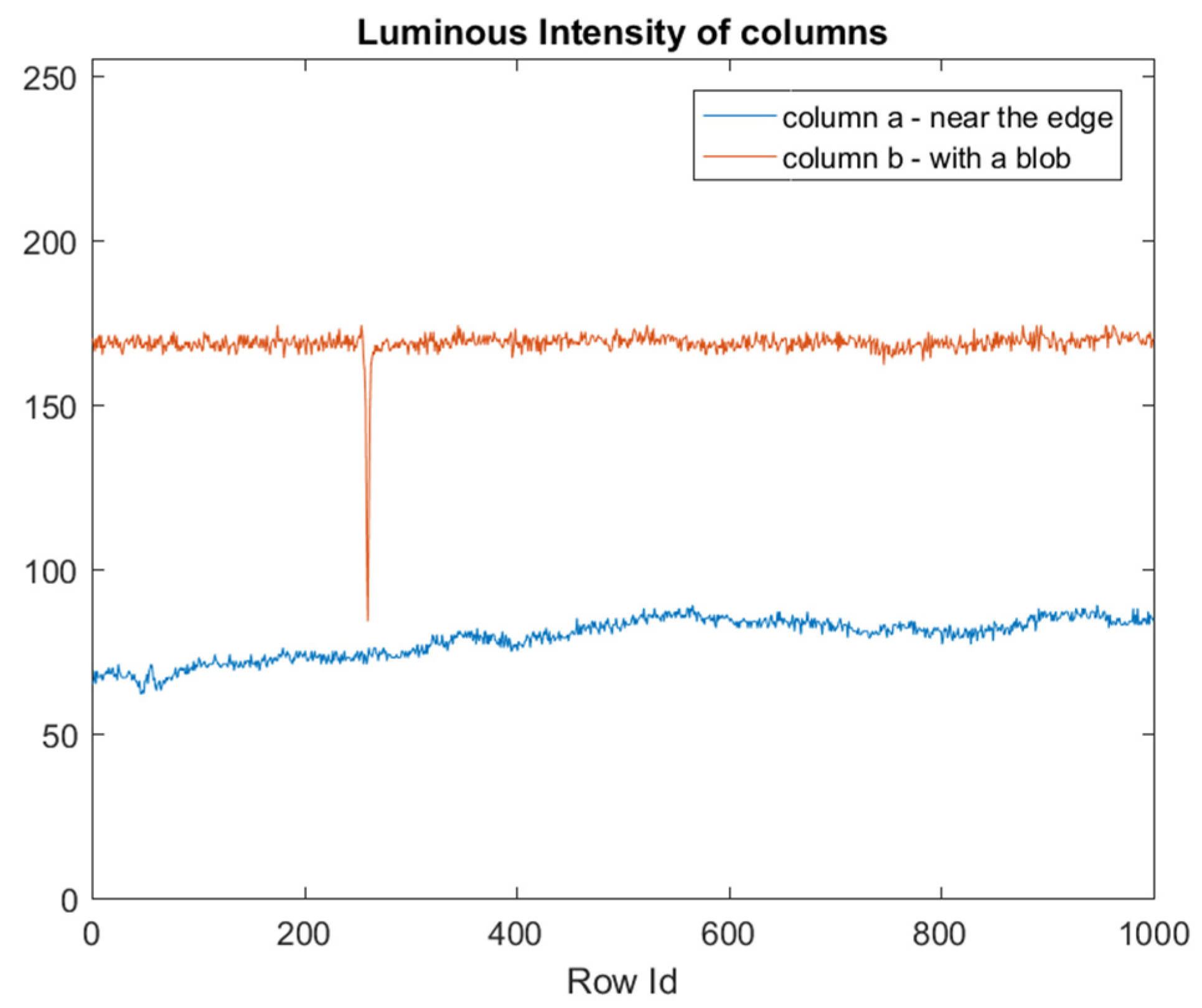

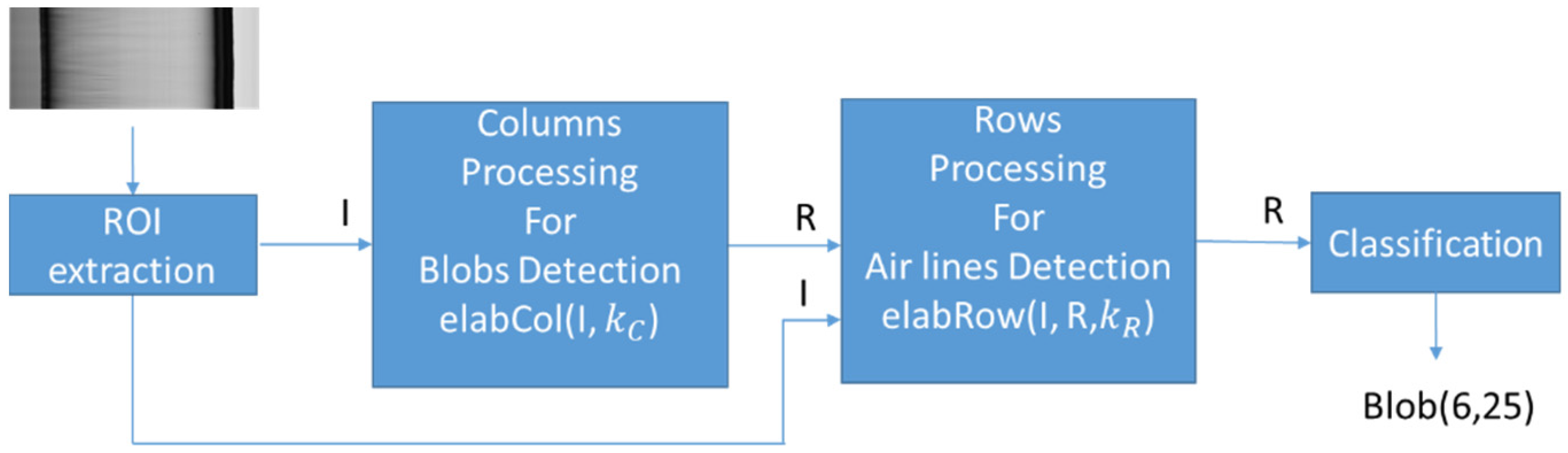

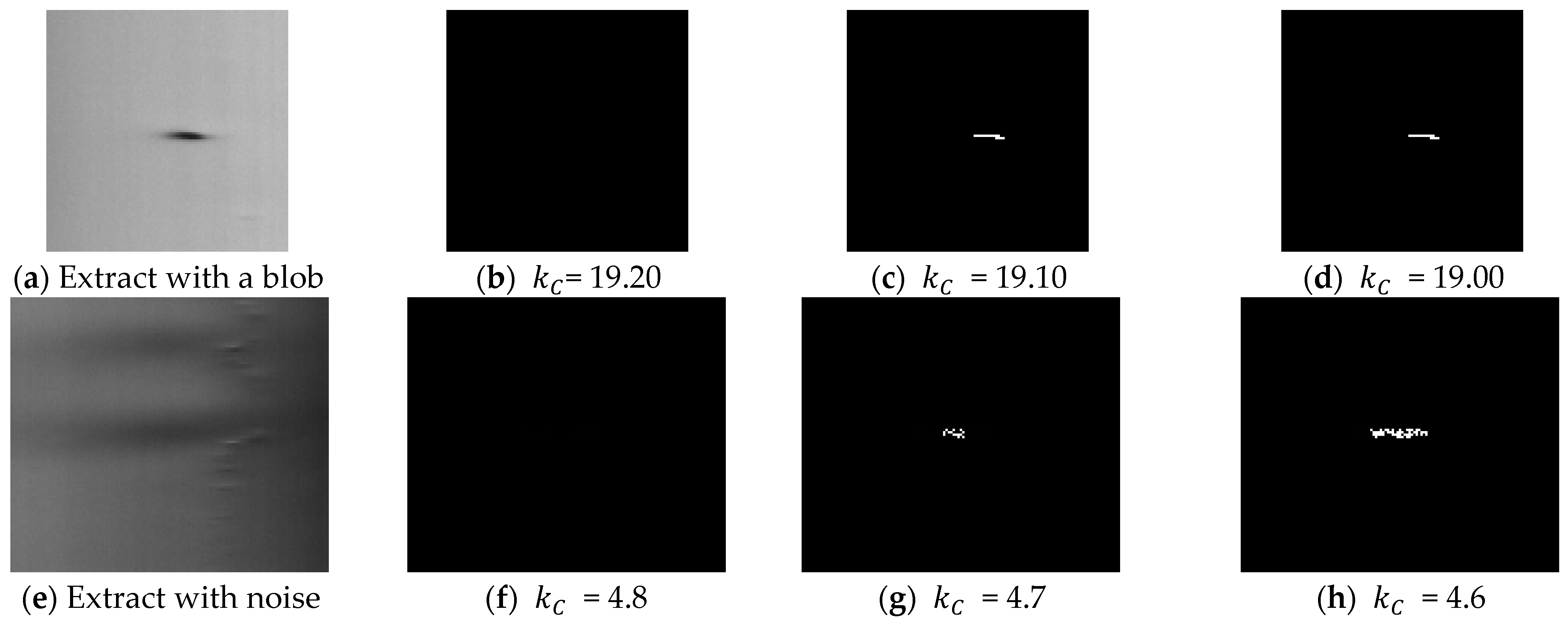

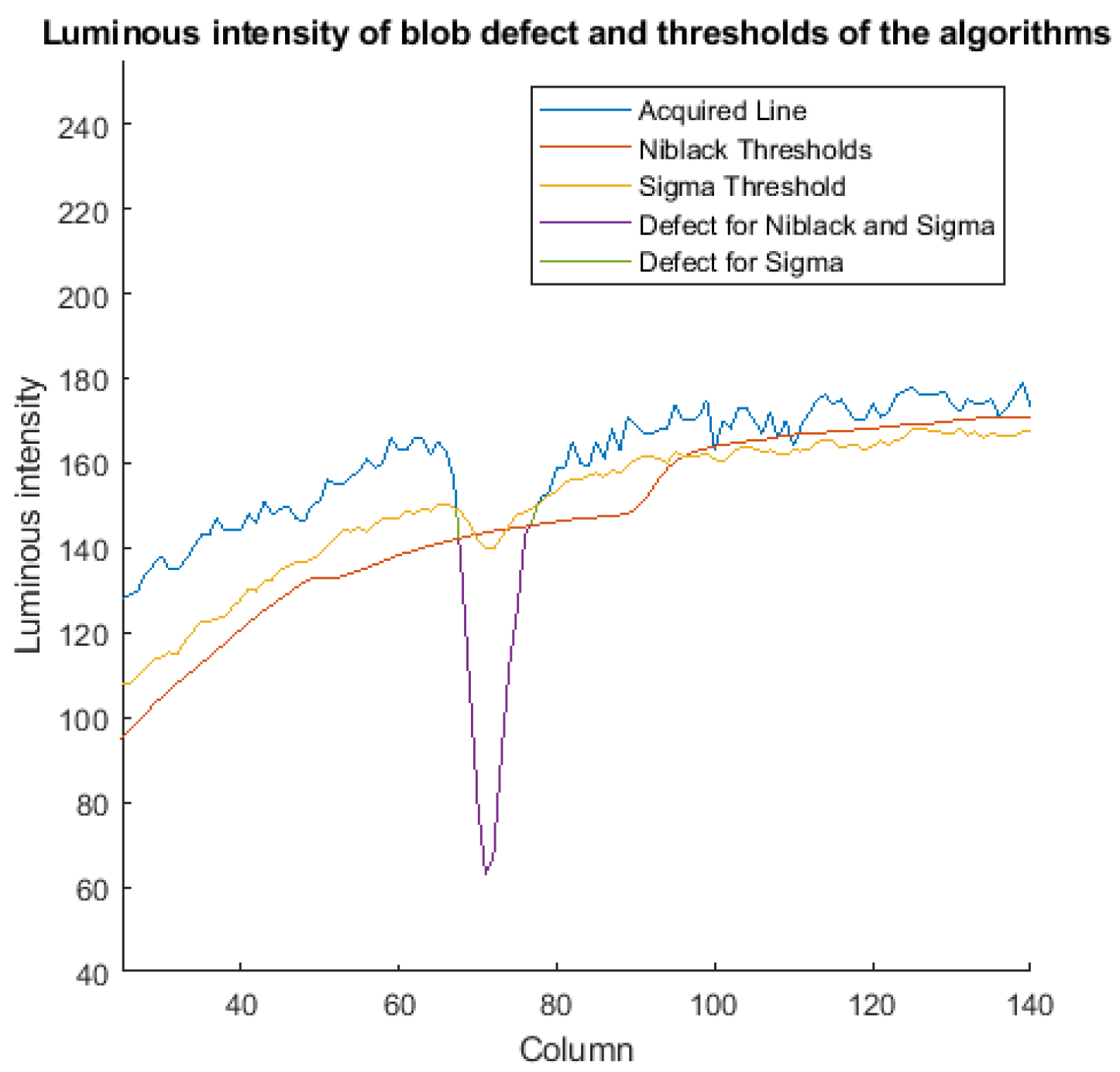

4.1. Processing of Columns for Blobs Detection

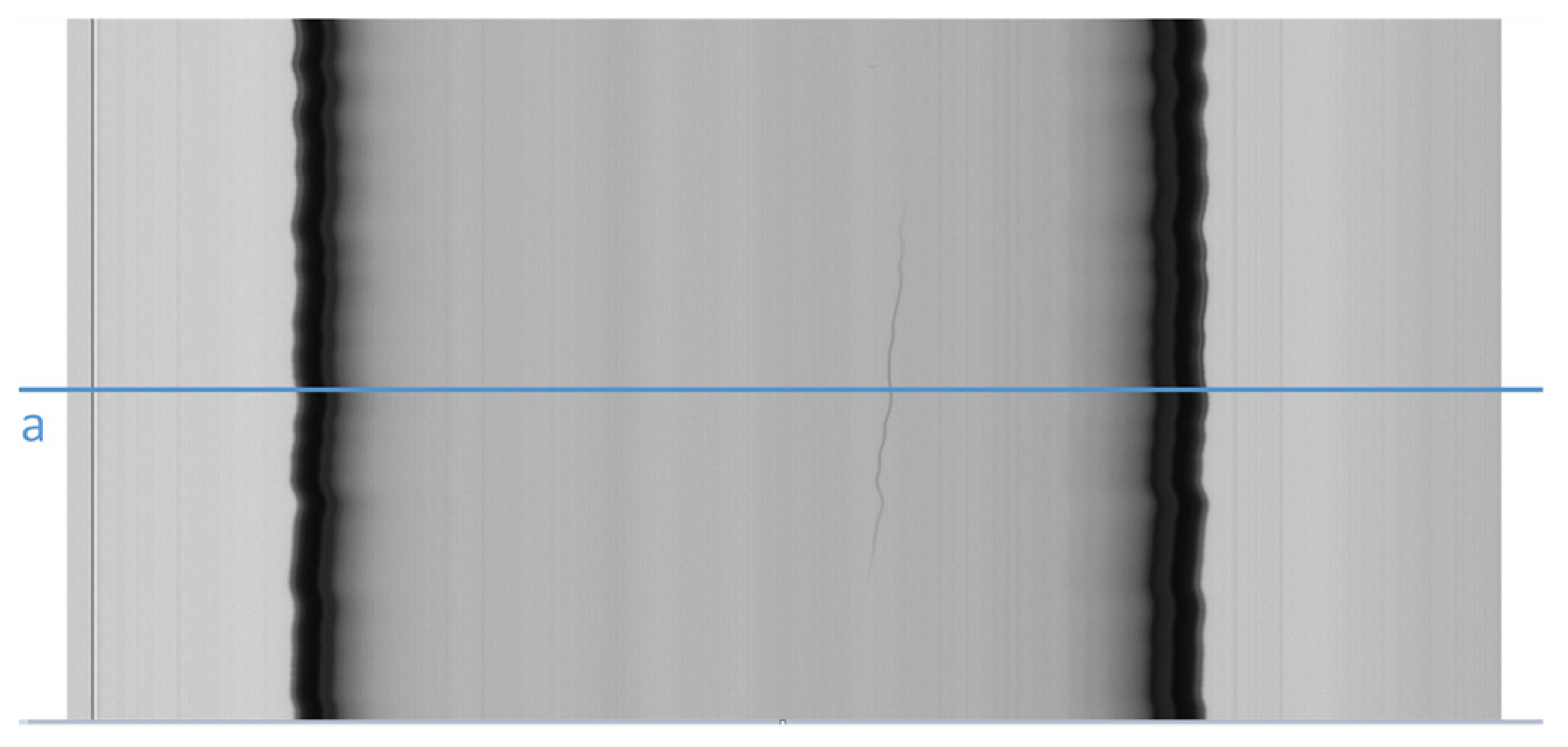

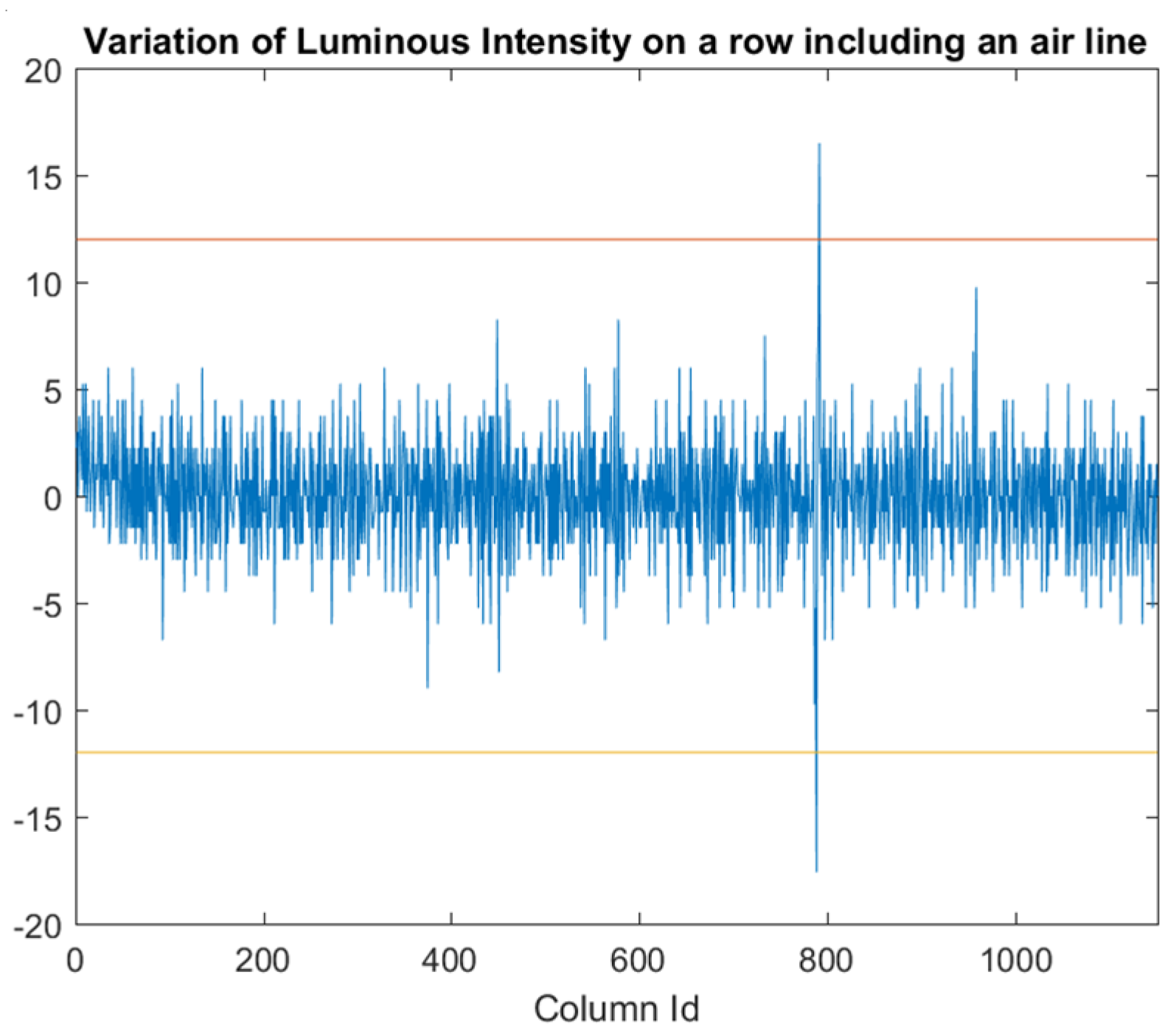
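The column pass of Algorithm 1 (elabCol, listed in Section 4.3) can be written as a single per-pixel test. This is a sketch read off the pseudocode: we assume m_j and s_j are the population mean and standard deviation of column j over the N rows of the ROI (the pseudocode only says mean_column and std_column), and we write the column parameter kc as k_c.

$$
m_j = \frac{1}{N}\sum_{i=1}^{N} I(i,j), \qquad
s_j = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\bigl(I(i,j) - m_j\bigr)^2}
$$

$$
R(i,j) =
\begin{cases}
255 & \text{if } I(i,j) < m_j - k_c\, s_j \\
0 & \text{otherwise}
\end{cases}
\qquad i = 1,\dots,N,\; j = 1,\dots,M
$$

Because the threshold is built from one mean and one standard deviation per column, it adapts to the brightness profile across the tube while staying a single global test along each column.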

4.2. Processing of Rows for Air Lines Detection
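The row pass of Algorithm 1 (elabRow) marks a pixel when the grey-level jump from its left neighbour exceeds the row parameter kr (written k_r below), and otherwise leaves the column-pass result unchanged. A sketch read off the pseudocode:

$$
R(i,j) \leftarrow 255 \quad \text{if } \bigl\lvert I(i,j) - I(i,j-1) \bigr\rvert > k_r,
\qquad i = 1,\dots,N,\; j = 2,\dots,M
$$

Since k_r is compared with an absolute grey-level difference, it is expressed in grey levels (kr = 12 in the experiments), whereas k_c multiplies a standard deviation and is dimensionless (kc = 4.91).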

4.3. Algorithm

| Algorithm 1 Proposed algorithm (Sigma). |
|---|
| 1. function elabCol (I, kc) |
| 2. N = Number Of Rows (I); |
| 3. M = Number Of Columns (I); |
| 4. m = mean_column (I); |
| 5. s = std_column (I); |
| 6. for (i = 1; i ≤ N; i++) |
| 7. for (j = 1; j ≤ M; j++) |
| 8. if (I(i,j) < m(j) − kc * s(j)) |
| 9. then R(i,j) = 255; |
| 10. else R(i,j) = 0; |
| 11. end if |
| 12. end for |
| 13. end for |
| 14. return R; |
| 15. end function |
| 16. function elabRow (I, kr, R) |
| 17. N = Number Of Rows (I); |
| 18. M = Number Of Columns (I); |
| 19. for (i = 1; i ≤ N; i++) |
| 20. for (j = 2; j ≤ M; j++) |
| 21. if (abs(I(i,j) − I(i,j − 1)) > kr) |
| 22. then R(i,j) = 255; |
| 23. end if |
| 24. end for |
| 25. end for |
| 26. return R; |
| 27. end function |
| 28. I = ROI (acquired_image); |
| 29. R = elabCol (I, kc); |
| 30. R = elabRow (I, kr, R); |
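The listing above translates almost line for line into Python. This is a sketch under stated assumptions, not the authors' code: the image is a plain list of rows of grey levels, the standard deviation is taken as the population one, the pseudocode's 1-based indices become 0-based, ROI extraction (line 28) is not shown, and `sigma_detect` is a hypothetical name for lines 29 and 30. The thresholds kc and kr are the Sigma parameters reported in the experiment table.

```python
from statistics import fmean, pstdev
from typing import List

Image = List[List[int]]  # grey levels, indexed img[row][col]


def elab_col(img: Image, kc: float) -> Image:
    """Column pass (blobs): mark pixels darker than their column's mean - kc * std."""
    n_rows, n_cols = len(img), len(img[0])
    cols = [[img[i][j] for i in range(n_rows)] for j in range(n_cols)]
    m = [fmean(c) for c in cols]
    # Population std is an assumption; Algorithm 1 only says std_column.
    s = [pstdev(c) for c in cols]
    return [[255 if img[i][j] < m[j] - kc * s[j] else 0 for j in range(n_cols)]
            for i in range(n_rows)]


def elab_row(img: Image, kr: float, r: Image) -> Image:
    """Row pass (air lines): also mark pixels whose jump from the left neighbour exceeds kr."""
    for i, row in enumerate(img):
        for j in range(1, len(row)):  # j = 2..M in the 1-based pseudocode
            if abs(row[j] - row[j - 1]) > kr:
                r[i][j] = 255  # column-pass marks are kept, never cleared
    return r


def sigma_detect(roi: Image, kc: float, kr: float) -> Image:
    """Hypothetical wrapper for lines 29-30: column pass, then row pass on the same mask."""
    return elab_row(roi, kr, elab_col(roi, kc))
```

For example, on a 5 × 3 image of grey level 200 with a single dark pixel (50) at row 4, column 0, `sigma_detect(img, 1.0, 100)` marks that pixel in the column pass (50 is below 170 − 1 × 60) and its right-hand neighbour in the row pass (the 150-level jump exceeds kr = 100). Each pass touches every pixel once, so the cost is linear in the ROI size.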

4.4. Classification

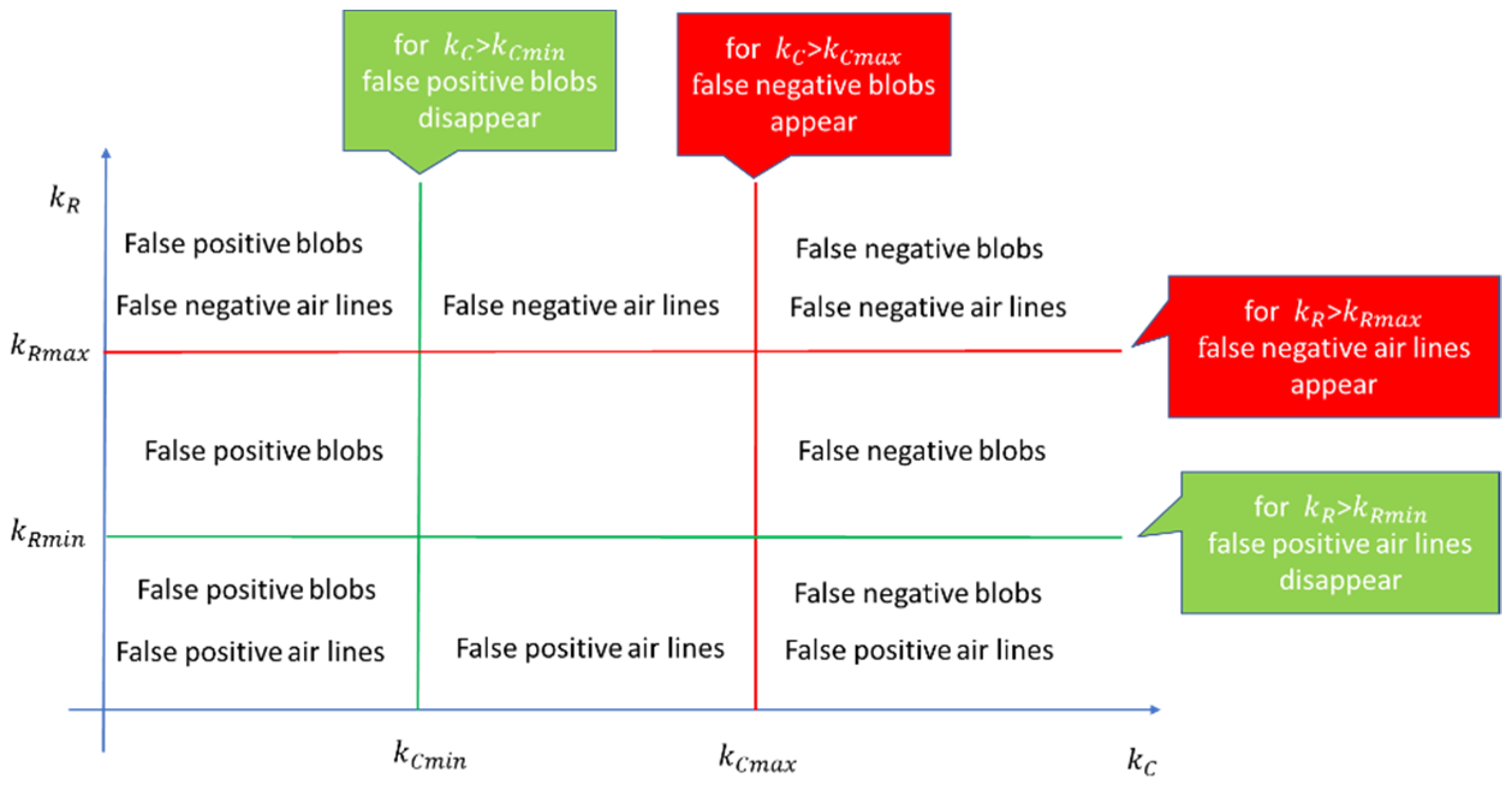

4.5. Setting of kc and kr Values: Tuning

| Algorithm 2 Tuning algorithm for the parameter kc. |

| Algorithm 3 Tuning algorithm for the parameter kr. |

5. Results

5.1. Comparison with Other Solutions

5.2. Performance and Quality Assessment in a Real World Implementation

6. Discussion and Implementation Issues

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Berry, H. Pharmaceutical aspects of glass and rubber. J. Pharm. Pharmacol. 2011, 5, 1008–1023.
2. Sacha, G.A.; Saffell-Clemmer, W.; Abram, K.; Akers, M.J. Practical fundamentals of glass, rubber, and plastic sterile packaging systems. Pharm. Dev. Technol. 2010, 15, 6–34.
3. Schaut, R.A.; Weeks, W.P. Historical review of glasses used for parenteral packaging. PDA J. Pharm. Sci. Technol. 2017, 71, 279–296.
4. Reynolds, G.; Paskiet, D. Glass delamination and breakage, new answers for a growing problem. BioProcess Int. 2011, 9, 52–57.
5. Ennis, R.; Pritchard, R.; Nakamura, C.; Coulon, M.; Yang, T.; Visor, G.C.; Lee, W.A. Glass vials for small volume parenterals: Influence of drug and manufacturing processes on glass delamination. Pharm. Dev. Technol. 2001, 6, 393–405.
6. Iacocca, R.G.; Allgeier, M. Corrosive attack of glass by a pharmaceutical compound. J. Mater. Sci. 2007, 42, 801–811.
7. Guadagnino, E.; Zuccato, D. Delamination propensity of pharmaceutical glass containers by accelerated testing with different extraction media. PDA J. Pharm. Sci. Technol. 2011, 66, 116–125.
8. Iacocca, R.G.; Toltl, N.; Allgeier, M.; Bustard, B.; Dong, X.; Foubert, M.; Hofer, J.; Peoples, S.; Shelbourn, T. Factors Affecting the Chemical Durability of Glass Used in the Pharmaceutical Industry. AAPS Pharm. Sci. Tech. 2010, 11, 1340–1349.
9. FDA. Recalls, Market Withdrawals, & Safety Alerts Search. Available online: https://www.fda.gov/Safety/Recalls/ (accessed on 11 February 2021).
10. Bee, J.S.; Randolph, T.W.; Carpenter, J.F.; Bishop, S.M.; Dimitrova, M.N. Effects of surfaces and leachables on the stability of bio-pharmaceuticals. J. Pharm. Sci. 2011, 100, 4158–4170.
11. DeGrazio, F.; Paskiet, D. The glass quandary; Contract Pharma, 23 January 2012. Available online: https://www.contractpharma.com/issues/2012-01/view_features/the-glass-quandary/ (accessed on 17 October 2021).
12. Breakage and Particle Problems in Glass Vials and Syringes Spurring Industry Interest in Plastics; IPQ In the News, IPQ Publications LLC. Available online: https://www.ipqpubs.com/2011/08/07/breakage-and-particle-problems-in-glass-vials-and-syringes-spurring-industry-interest-in-plastics/ (accessed on 7 August 2011).
13. Peng, X.; Chen, Y.; Yu, W.; Zhou, Z.; Sun, G. An online defects inspection method for float glass fabrication based on machine vision. Int. J. Adv. Manuf. Technol. 2008, 39, 1180–1189.
14. Foglia, P.; Prete, C.A.; Zanda, M. An inspection system for pharmaceutical glass tubes. WSEAS Trans. Syst. 2015, 14, 123–136.
15. Bradski, G.; Kaehler, A. Learning OpenCV: Computer Vision with the OpenCV Library; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2008.
16. Kumar, A. Computer-vision-based fabric defect detection: A survey. IEEE Trans. Ind. Electron. 2008, 55, 348–363.
17. Gabriele, A.; Foglia, P.; Prete, C.A. Row-level algorithm to improve real-time performance of glass tube defect detection in the production phase. IET Image Process. 2020, 14, 2911–2921.
18. Park, Y.; Kweon, I.S. Ambiguous Surface Defect Image Classification of AMOLED Displays in Smartphones. IEEE Trans. Ind. Inform. 2016, 12, 597–607.
19. Canny, J.F. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.
20. Martínez, S.S.; Ortega, J.G.; García, J.G.; García, A.S.; Estévez, E.E. An industrial vision system for surface quality inspection of transparent parts. Int. J. Adv. Manuf. Technol. 2013, 68, 1123–1136.
21. Adamo, F.; Attivissimo, F.; Di Nisio, A.; Savino, M. A low-cost inspection system for online defects assessment in satin glass. Measurement 2009, 42, 1304–1311.
22. Shankar, N.G.; Zhong, Z.W. Defect detection on semiconductor wafer surfaces. Microelectron. Eng. 2005, 77, 337–346.
23. Huang, X.; Zhang, F.; Li, H.; Liu, X. An Online Technology for Measuring Icing Shape on Conductor Based on Vision and Force Sensors. IEEE Trans. Instrum. Meas. 2017, 66, 3180–3189.
24. De Beer, M.M.; Keurentjes, J.T.F.; Schouten, J.C.; Van der Schaaf, J. Bubble formation in co-fed gas–liquid flows in a rotor-stator spinning disc reactor. Int. J. Multiph. Flow 2016, 83, 142–152.
25. Liu, H.G.; Chen, Y.P.; Peng, X.Q.; Xie, J.M. A classification method of glass defect based on multiresolution and information fusion. Int. J. Adv. Man. Technol. 2011, 56, 1079–1090.
26. Aminzadeh, M.; Kurfess, T. Automatic thresholding for defect detection by background histogram mode extents. J. Man. Sys. 2015, 37, 83–92.
27. Kim, C.M.; Kim, S.R.; Ahn, J.H. Development of Auto-Seeding System Using Image Processing Technology in the Sapphire Crystal Growth Process via the Kyropoulos Method. Appl. Sci. 2017, 7, 371.
28. Eshkevari, M.; Rezaee, M.J.; Zarinbal, M.; Izadbakhsh, H. Automatic dimensional defect detection for glass vials based on machine vision: A heuristic segmentation method. J. Manuf. Process. 2021, 68, 973–989.
29. Yang, Z.; Bai, J. Vial bottle mouth defect detection based on machine vision. In Proceedings of the 2015 IEEE International Conference on Information and Automation, Lijiang, China, 8–10 August 2015; pp. 2638–2642.
30. Liu, X.; Zhu, Q.; Wang, Y.; Zhou, X.; Li, K.; Liu, X. Machine vision based defect detection system for oral liquid vial. In Proceedings of the 2018 13th World Congress on Intelligent Control and Automation (WCICA), Changsha, China, 4–8 July 2018; pp. 945–950.
31. Huang, B.; Ma, S.; Wang, P.; Wang, H.; Yang, J.; Guo, X.; Zhang, W.; Wang, H. Research and implementation of machine vision technologies for empty bottle inspection systems. Eng. Sci. Technol. Int. J. 2018, 21, 159–169.
32. Saxena, L.P. Niblack’s binarization method and its modifications to real-time applications: A review. Artif. Intell. Rev. 2017, 51, 1–33.
33. Kumar, M.; Rohini, S. Algorithm and technique on various edge detection: A survey. Sig. Image Process. 2013, 4, 65.
34. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man. Cybern. 1979, 9, 62–66.
35. Pukelsheim, F. The three-sigma rule. Am. Stat. 1994, 48, 88–91.
36. De Vitis, G.A.; Foglia, P.; Prete, C.A. Algorithms for the Detection of Blob Defects in High Speed Glass Tube Production Lines. In Proceedings of the 2019 IEEE 8th International Workshop on Advances in Sensors and Interfaces (IWASI), Otranto, Italy, 13–14 June 2019; pp. 97–102.
37. Ming, W.; Shen, F.; Li, X.; Zhang, Z.; Du, J.; Chen, Z.; Cao, Y. A comprehensive review of defect detection in 3C glass components. Measurement 2020, 158, 107722.
38. JLI Vision Glass Inspection. Available online: https://jlivision.com/vision-systems/glass-inspection (accessed on 1 July 2021).
39. FiberVision Inspection System for Glass Tubes. Available online: https://www.fibervision.de/en/applications/glass-tube-inspection-system.htm (accessed on 1 July 2021).
40. Schott perfeXion® System. Available online: https://microsites.schott.com/perfexion/english/index.html (accessed on 6 July 2021).
41. Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Prentice-Hall, Inc.: Hoboken, NJ, USA, 2007.
42. Koerber Pharma Semiautomatic Inspection. Available online: https://www.koerber-pharma.com/en/solutions/inspection/semi-automatic-inspection-v90 (accessed on 21 July 2021).
43. Malamas, E.N.; Petrakis, E.G.; Zervakis, M.; Petit, L.; Legat, J.D. A survey on industrial vision systems, applications and tools. Image Vis. Comput. 2003, 21, 171–188.
44. Li, D.; Liang, L.Q.; Zhang, W.J. Defect inspection and extraction of the mobile phone cover glass based on the principal components analysis. Int. J. Adv. Man. Tech. 2014, 73, 1605–1614.
45. Matić, T.; Aleksi, I.; Hocenski, Ž.; Kraus, D. Real-time biscuit tile image segmentation method based on edge detection. ISA Trans. 2018, 76, 246–254.
46. Karimi, M.H.; Asemani, D. Surface defect detection in tiling Industries using digital image processing methods: Analysis and evaluation. ISA Trans. 2014, 53, 834–844.
47. Wakaf, Z.; Jalab, H.A. Defect detection based on extreme edge of defective region histogram. J. King Saud Univ. Comput. Inf. Sci. 2018, 30, 33–40.
48. Kong, H.; Yang, J.; Chen, Z. Accurate and Efficient Inspection of Speckle and Scratch Defects on Surfaces of Planar Products. IEEE Trans. Ind. Inform. 2017, 13, 1855–1865.
49. Farid, S.; Ahmed, F. Application of Niblack’s method on image. In Proceedings of the 2009 IEEE International Conference on Emerging Technologies, Palma de Mallorca, Spain, 22–25 September 2009.
50. Campanelli, S.; Foglia, P.; Prete, C.A. An architecture to integrate IEC 61131-3 systems in an IEC 61499 distributed solution. Comput. Ind. 2015, 72, 47–67.
51. Foglia, P.; Zanda, M. Towards relating physiological signals to usability metrics: A case study with a web avatar. WSEAS Trans. Comput. 2018, 13, 624–634.
52. Foglia, P.; Solinas, M. Exploiting replication to improve performances of NUCA-based CMP systems. ACM Trans. Embed. Comput. Syst. (TECS) 2014, 13, 1–23.
53. Foglia, P.; Prete, C.A.; Solinas, M.; Monni, G. Re-NUCA: Boosting CMP performance through block replication. In Proceedings of the 13th Euromicro Conference on Digital System Design: Architectures, Methods and Tools, Lille, France, 1–3 September 2010; pp. 199–206.
54. OpenCV Documentation. Available online: https://opencv.org/ (accessed on 17 October 2021).
55. PC Cards cifX User Manual. Available online: https://www.hilscher.com/products/product-groups/pc-cards/pci/cifx-50-reeis/ (accessed on 17 October 2021).
56. Basler Racer Camera. Available online: https://www.baslerweb.com/en/products/cameras/line-scan-cameras/racer/ral2048-48gm/ (accessed on 17 October 2021).
57. COBRA™ Slim Line Scan Illuminator Datasheet. Available online: https://www.prophotonix.com/led-and-laser-products/led-products/led-line-lights/cobra-slim-led-line-light/ (accessed on 17 October 2021).
58. Matrox Solios Doc. Available online: https://www.matrox.com/imaging/en/products/frame_grabbers/solios/solios_ecl_xcl_b/ (accessed on 17 October 2021).
59. Abella, J.; Padilla, M.; Castillo, J.D.; Cazorla, F.J. Measurement-Based Worst-Case Execution Time Estimation Using the Coefficient of Variation. ACM Trans. Des. Autom. Electron. Syst. 2017, 4, 29.
60. De Vitis, G.A.; Foglia, P.; Prete, C.A. A pre-processing technique to decrease inspection time in glass tube production lines. IET Image Process. 2021, 15, 1–13.
61. Olson, D.L.; Delen, D. Advanced Data Mining Techniques, 1st ed.; Springer: Berlin/Heidelberg, Germany, 2008; p. 138. ISBN 3-540-76916-1.
62. Jiang, J.; Cao, P.; Lu, Z.; Lou, W.; Yang, Y. Surface Defect Detection for Mobile Phone Back Glass Based on Symmetric Convolutional Neural Network Deep Learning. Appl. Sci. 2020, 10, 3621.

| System Component | Adopted Hardware |
|---|---|
| Linear Camera | Basler Racer [56] |
| Illuminator | Red light COBRA Slim LED Line [57] |
| Frame Grabber | Matrox Solios eCL/XCL-B [58] (2K) |

| Experiment Name | Pre-Processing | Defect Detection | Parameters | Post-Processing |
|---|---|---|---|---|
| Canny | ROI identification [14] | Canny Algorithm [19] | Hysteresis thresholds 35, 80 | Class. of containers (Section 4.4) |
| Sigma | ROI identification [14] | Local and Global Threshold (Section 5) | kc = 4.91, kr = 12 | Class. of containers (Section 4.4) |
| Niblack | ROI identification [14] | Niblack Algorithm [32] | N = 20 × 20, K = −1.7 | Class. of containers (Section 4.4) |
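Where Sigma computes one mean and one standard deviation per column, Niblack's method [32] computes a threshold for every pixel from its own N × N neighbourhood, T(x, y) = m(x, y) + K · s(x, y). The sketch below uses the table's N = 20 × 20 and K = −1.7 as defaults. It is an illustration of the textbook method, not the implementation used in the experiments: the border handling (window clipped at the image edge) and the polarity (pixels darker than T are marked, as in Sigma's column test) are assumptions.

```python
from statistics import fmean, pstdev
from typing import List

Image = List[List[int]]  # grey levels, indexed img[row][col]


def niblack(img: Image, w: int = 20, k: float = -1.7) -> Image:
    """Naive Niblack binarisation: T = local mean + k * local std over a w x w window."""
    n_rows, n_cols = len(img), len(img[0])
    half = w // 2
    out = [[0] * n_cols for _ in range(n_rows)]
    for i in range(n_rows):
        # Window rows i-half .. i-half+w-1, clipped at the image border (assumption).
        r0, r1 = max(0, i - half), min(n_rows, i - half + w)
        for j in range(n_cols):
            c0, c1 = max(0, j - half), min(n_cols, j - half + w)
            window = [img[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            t = fmean(window) + k * pstdev(window)
            # Dark-defect polarity, as in Sigma's column test (assumption).
            out[i][j] = 255 if img[i][j] < t else 0
    return out
```

Written this way, every pixel re-scans a window of up to N × N values, which is one plausible reason a local-window method is far costlier than Sigma's per-column statistics; optimised implementations usually compute the local sums with integral images instead.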

| Algorithm | Metric | Blobs | Air lines | Defective Frames |
|---|---|---|---|---|
| Expected value | TP | 10 | 6 | 13 |
| Canny | TP/FP (FN) | 10/5 (0) | 6/0 (0) | 13/2 (0) |
| Sigma | TP/FP (FN) | 10/3 (0) | 6/0 (0) | 13/1 (0) |
| Niblack | TP/FP (FN) | 10/11 (0) | 6/0 (0) | 13/4 (0) |

| | Expected Value | Canny Algorithm | Sigma | Niblack |
|---|---|---|---|---|
| Cumulative Sum | 1796 | 3293 | 2016 | 664 |
| Cumulative Percentage (%) | 100 | 183.35 | 111.38 | 36.69 |
| Avg Abs Error (%) | 0 | 167.27 | 22.86 | 44.38 |

| | Expected Value | Canny Algorithm | Sigma | Niblack |
|---|---|---|---|---|
| Cumulative Sum | 3025 | 2552 | 2691 | 2586 |
| Cumulative Percentage (%) | 100 | 84.36 | 88.96 | 85.49 |
| Avg Abs Error (%) | 0 | 15.42 | 12.58 | 15.60 |

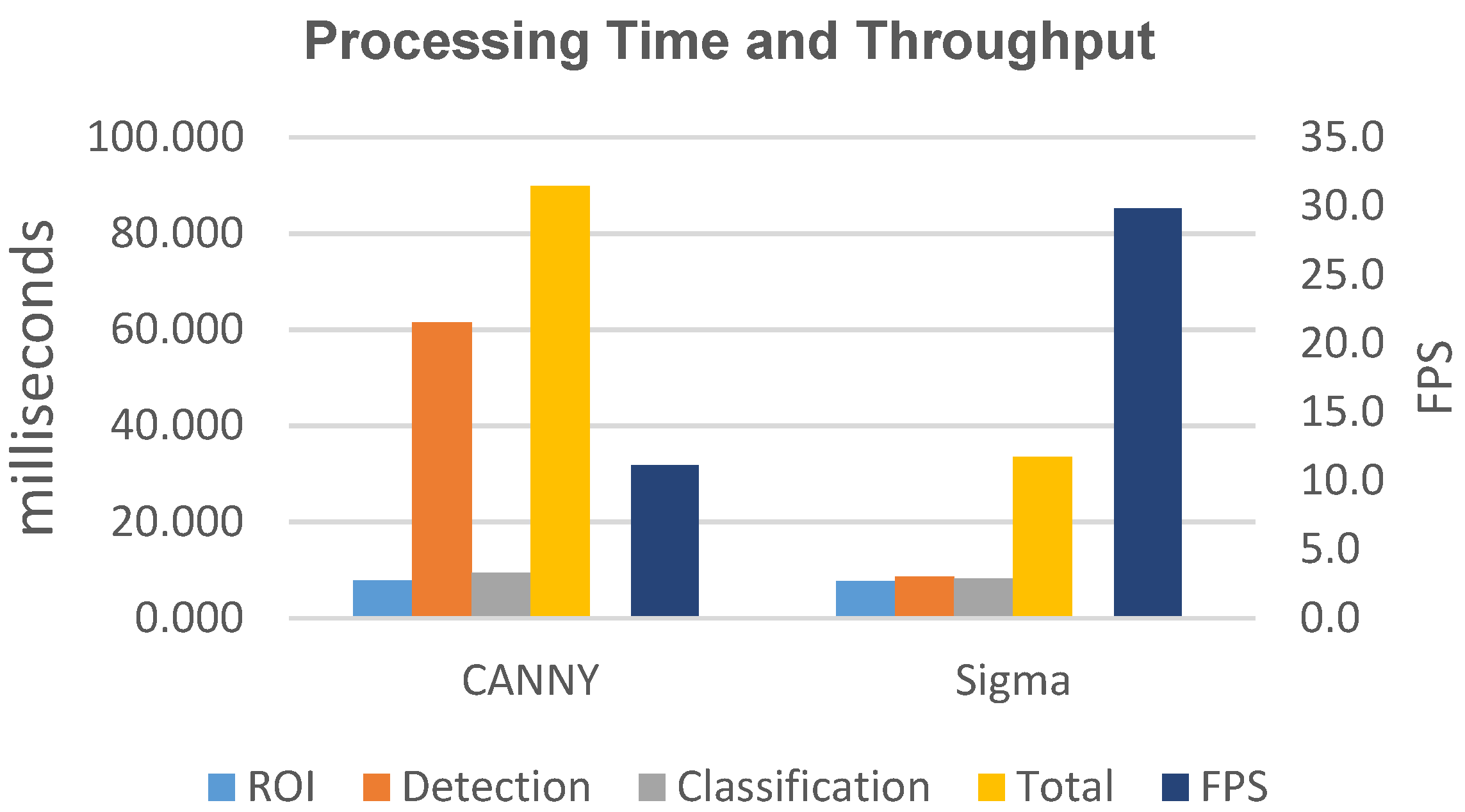

| Algorithm | ROI (ms) | Detection (ms) | Classification (ms) | Total (ms) | Throughput (FPS) |
|---|---|---|---|---|---|
| Canny | 7.845 | 61.538 | 9.395 | 89.824 | 11.1 |
| Sigma | 7.698 | 8.585 | 8.192 | 33.514 | 29.8 |
| Niblack | 7.934 | 2323.891 | 50.606 | 2642.169 | 0.4 |
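The throughput column is consistent with processing one frame at a time, so that FPS = 1000 / Total when times are in milliseconds (the unit is inferred from this relation rather than stated). A small check against the reported totals, with `throughput_fps` as a hypothetical helper name:

```python
def throughput_fps(total_ms: float) -> float:
    """Frames per second when each frame is processed sequentially in total_ms milliseconds."""
    return 1000.0 / total_ms


# Total per-frame processing times copied from the table (assumed to be ms).
totals_ms = {"Canny": 89.824, "Sigma": 33.514, "Niblack": 2642.169}
for name, total in totals_ms.items():
    print(f"{name}: {throughput_fps(total):.1f} FPS")
# Canny: 11.1 FPS
# Sigma: 29.8 FPS
# Niblack: 0.4 FPS
```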

| Algorithm | Configuration | ROI (ms) | Detection (ms) | Classification (ms) | Total (ms) | Throughput (FPS) |
|---|---|---|---|---|---|---|
| Canny | Without DSDRR | 7.845 | 61.538 | 9.395 | 89.824 | 11.1 |
| Canny | With DSDRR | 10.331 | 10.437 | 2.554 | 29.745 | 33.6 |
| Sigma | Without DSDRR | 7.698 | 8.585 | 8.192 | 33.514 | 29.8 |
| Sigma | With DSDRR | 10.321 | 1.485 | 2.469 | 17.133 | 58.3 |
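Dividing the totals in the table gives the end-to-end speedup that DSDRR brings to each detector; the arithmetic uses only the reported values:

$$
\frac{89.824}{29.745} \approx 3.02 \;\; (\text{Canny}), \qquad
\frac{33.514}{17.133} \approx 1.96 \;\; (\text{Sigma})
$$

Detection time falls the most (Canny: 61.538 to 10.437; Sigma: 8.585 to 1.485), while ROI time rises for both algorithms (from about 7.7 to about 10.3), so the relative gain is smaller for Sigma, whose detection stage was already cheap.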

| Caliber (mm) | # Tubes | Tubes Accepted | Tubes Discarded | Tubes Validated | Tubes Invalidated | Tube FP | Tube FN | Precision (P) | Recall (R) |
|---|---|---|---|---|---|---|---|---|---|
| 8.65/.9 | 300 | 238 | 62 | 240 | 60 | 3 | 2 | 0.950 | 0.967 |
| 11.6/.9 | 300 | 257 | 43 | 257 | 43 | 2 | 2 | 0.953 | 0.953 |
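Precision and recall follow the usual definitions, P = TP / (TP + FP) and R = TP / (TP + FN). The sketch below assumes that a defective tube is the positive class and that every discarded tube is either a true rejection (TP) or a false one (FP); under that reading it reproduces the 11.6/.9 row. The helper name `precision_recall` is hypothetical.

```python
from typing import Tuple


def precision_recall(discarded: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision and recall with 'defective tube' as the positive class.

    Assumes every discarded tube is either a true rejection (TP) or a false one (FP).
    """
    tp = discarded - fp
    return tp / (tp + fp), tp / (tp + fn)


# 11.6/.9 caliber row: 43 discarded, 2 false positives, 2 false negatives.
p, r = precision_recall(discarded=43, fp=2, fn=2)
print(f"P = {p:.3f}, R = {r:.3f}")  # P = 0.953, R = 0.953
```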

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


De Vitis, G.A.; Di Tecco, A.; Foglia, P.; Prete, C.A. Fast Blob and Air Line Defects Detection for High Speed Glass Tube Production Lines. J. Imaging 2021, 7, 223. https://doi.org/10.3390/jimaging7110223
