Abstract

This paper investigates the usefulness of multi-fractal analysis and local binary patterns (LBP) as texture descriptors for classifying mammogram images into different breast density categories. Multi-fractal analysis is also used in the pre-processing step to segment the region of interest (ROI). We use four multi-fractal measures and the LBP method to extract texture features, and compare their classification performance in experiments. In addition, a feature descriptor combining multi-fractal features and multi-resolution LBP (MLBP) features is proposed and evaluated in this study to improve classification accuracy. An autoencoder network and principal component analysis (PCA) are used for reducing feature redundancy in the classification model. A full field digital mammogram (FFDM) dataset, INbreast, which contains 409 mammogram images, is used in our experiment. BI-RADS density labels given by radiologists are used as the ground truth to evaluate the classification results using the proposed methods. Experimental results show that the proposed feature descriptor based on multi-fractal features and LBP results in higher classification accuracy than using individual texture feature sets.

1. Introduction

Breast density is a critical biomarker that indicates a woman's likelihood of developing breast cancer in the future. High breast density is caused by a high percentage of fibro-glandular tissue and reduces the effectiveness of mammography screening [1]. Related research shows that women with extremely dense breasts could face a four- to six-fold higher risk of developing breast cancer than women with low breast density [2]. Dense tissue areas in mammograms also cause the ‘masking’ effect, leading to reduced sensitivity when radiologists visually assess breast lesions or early signs of cancer, such as lumps and calcification clusters [3]. Breast density is gaining significant attention because it is closely associated with higher cancer risk, increased incidence of interval cancer and reduced mammographic sensitivity.

Breast density measurements help identify and target women who could benefit from tailored screening options such as increased (or decreased) screening interval or supplemental screening via alternative modalities [4]. Breast density categories currently in use are six-class-categories (SCC) [2], Wolfe’s four categories [5], and the Breast Imaging-Reporting and Data System (BI-RADS) [6]. BI-RADS criterion proposed by the American College of Radiology (ACR) has been widely used in clinical applications and includes four density categories: fatty, scattered density, heterogeneously dense, and extremely dense. A simplified two-category model (“fatty and sparsely dense”, “heterogeneously and extremely dense”) is also sometimes used [7]. It may be noted here that the robustness of clinical workflows that heavily depend on radiologists’ subjective visual assessments may be affected by inter- and intra-observer disagreement [8].

The development of computerised mammogram interpretation methods is still an active research field. Advanced machine learning and image analysis algorithms are currently being developed to extract diagnostically relevant features and derive quantitative measurements for improving radiologists’ workflow [9]. For breast density classification in mammograms, extracting effective features plays an important role in obtaining accurate classification results. A number of feature extraction methods have been proposed and studied in literature, with the aim of capturing higher order statistical information and improving the classification accuracy.

Different image characteristics have been considered and used as the feature descriptors, including image intensity, texture patterns, morphological features, and statistical information. Mario et al. [7] extracted multiple features based on image intensity, histograms, and gray level co-occurrence matrix (GLCM) to classify mammograms. Wrappers were used for feature selection and improving the classification performance. Oliver et al. [10] combined intensity, texture, and morphological features to classify pixels in mammograms into two categories (fatty and dense) using an SVM classifier. Statistical features including mean, standard deviation, smoothness, third moment, uniformity, and entropy were used to classify mammograms into three density categories in [11]. Qu et al. [12] proposed a fuzzy-rough refined image processing method to enhance local image regions and extract GLCM based statistical features for classifying mammographic density. The method in [13] extracted 137 pixel-level features containing intensity, GLCM, and morphological features, to group pixels into fatty or dense classes. The work in [14] extracted 21 features based on intensity and fractal texture features, and SVM was used to classify the Mammographic Image Analysis Society (MIAS) mammograms into three categories. Muhimmah et al. [15] used multi-resolution histogram method to analyse texture features, and mammograms were classified to three density categories by a directed acyclic graph (DAG)-SVM classifier. Chen et al. [16] investigated five texture feature sets separately, including LBP, local grey level appearance (LGA), textons (MR8 texton and image-patch texton), and basic image features (BIF). Their experimental results showed that image-patch texton features produced a higher classification accuracy for 4 BI-RADS categories classification. A multi-scale blob detection method was proposed in [17] to recognize the fatty and dense tissue present in mammograms. 
This method was used to analyse MIAS mammograms and experimental results revealed some initial relations between the BI-RADS density category and the average relative tissue (fatty and dense) area in mammograms. Zheng et al. [18] used a lattice-based approach to extract statistical and structural features for analysing parenchymal texture in mammograms.

Recently, deep learning-based methods have been used to analyse mammograms for evaluating and classifying breast density, with promising results. Mohamed et al. [19] proposed a CNN model based on AlexNet to distinguish between two BI-RADS categories (‘scattered density’ and ‘heterogeneously dense’) which often lead to disagreements in radiology assessments [20]. A CNN architecture was designed in [21] to learn the features from a multitude of sub-images and to classify them into dense and fatty tissues. Lee et al. [22] used fully convolutional network (FCN) to segment breast regions and fibro-glandular areas with the aim of estimating percentage density. In [13] a deep convolutional neural network (DCNN) was trained to classify mammographic pixels into fatty or dense class. A probability map of breast density (PMD) was generated and used to estimate percentage density. Kallenberg et al. [1] proposed an unsupervised deep learning model to segment dense breast region from mammograms. However, applying deep learning methods to classify breast density requires a huge number of training images with accurate annotations by clinicians [23], and some datasets used in related work are not publicly available.

Texture analysis methods, particularly using descriptors such as LBP and its variants, have been shown to be useful in extracting relevant image structure information for classification tasks. Ojala et al. [24] proposed a method using local binary patterns (LBP) to describe image texture patterns. Due to its simplicity and efficiency, LBP has been studied widely and several variants were proposed for extracting texture features and classifying medical images. In [25], LBP was extended to elliptical LBP (ELBP), and mean-elliptical LBP (M-ELBP) by considering various neighbourhood topologies and different local region scales. It has been reported that M-ELBP gave a satisfactory classification result (77.38 ± 1.06) on MIAS dataset. Tan et al. [26] proposed local ternary patterns (LTP) using a three-value encoding approach compared to two-value encoding of LBP. Rampun et al. [27] extracted LTP based texture features to classify MIAS mammograms into four BI-RADS categories. Nanni et al. [28] proposed local quinary patterns (LQP) by extending LBP from a binary encoding system to a five-value encoding system, and used three different medical image classification tasks to test this new texture descriptor. Subsequently LQP was investigated and extended with multi-resolution and multi-orientation schemes in [29] to classify mammographic density. Their experimental results showed that the use of LQP based texture features improved the classification accuracy. In a recent study, Rampun et al. [30] tried local septenary patterns (LSP) method by using a seven-value encoding approach to further improve the classification performance. Their experimental results demonstrated that classification accuracy was slightly improved by LSP compared to LQP (80.5 ± 9.2 vs. 80.1 ± 10.5 on INBreast dataset).

Inspired by the success of texture analysis methods based on LBP and its variants, this paper investigates the usefulness of a richer texture descriptor by combining LBP and texture features obtained using multi-fractal analysis. Multi-fractal methods, due to their capability of enhancing image texture characteristics, have been used to process and interpret medical images [31,32,33]. Their applications in other image processing tasks also demonstrate that they can be an effective and promising tool for extracting texture features. To the best of our knowledge, multi-fractal analysis and its feature vectors have not been previously used to estimate breast density in mammograms. In this paper, we assume that a joint texture feature space consisting of LBP and multi-fractal features could capture more effective and useful texture features related to breast density characteristics, thus improving the classification accuracy over methods using only one individual feature set. An FFDM dataset, INbreast, containing 409 mammogram images with the BI-RADS density labels annotated by radiologists as the ground truth, is used in experiments to test the proposed methods. More details of our experimental approach are given in the following sections.

This paper is organized as follows. The next section describes a digital mammogram dataset used in experiments and the processing pipeline. Section 3 introduces two feature extraction methods: multi-fractal analysis and LBP. Section 4 discusses PCA and autoencoder model for feature selection, and Section 5 shows our experimental results and comparative analysis. Conclusions and some future work directions are given in Section 6.

2. Materials and Methods

2.1. Breast Density Classification

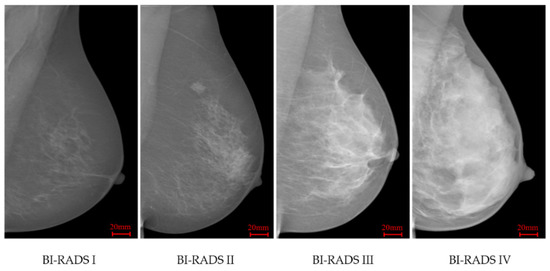

According to BI-RADS (4th edition) [6], breast density classification depends on the proportion of dense fibro-glandular tissue within the breast area in mammograms. The four categories are: (a) BI-RADS I (0–25% dense tissue, predominantly fat); (b) BI-RADS II (26–50% dense tissue, fat with some fibro-glandular tissue); (c) BI-RADS III (51–75% dense tissue, heterogeneously dense); and (d) BI-RADS IV (above 75% dense tissue, extremely dense). In many countries, radiologists estimate mammographic density using visual assessment, leading to inter- and intra-reader variability [8].

2.2. Dataset

INbreast [34] is an FFDM database which contains 115 cases and 409 images including bilateral mediolateral oblique (MLO) and craniocaudal (CC) views. This database was acquired at the Breast Centre in CHSJ, Porto, under permission of both the Hospital's Ethics Committee and the National Committee of Data Protection. The images were acquired between April 2008 and July 2010; the acquisition equipment was the MammoNovation Siemens FFDM, with a solid-state detector of amorphous selenium, pixel size of 70 µm (microns), and 14-bit contrast resolution. The image matrix was 3328 × 4084 or 2560 × 3328 pixels, depending on the compression plate used in the acquisition (based on the breast size of the patient). This dataset offers carefully associated ground truth (GT) annotations by radiologists. For breast density, BI-RADS labels are available, including 136, 146, 99 and 28 images respectively in BI-RADS I to IV classes. Figure 1 illustrates four INbreast mammograms in different density categories.

Figure 1.

INbreast mammograms in four different breast density categories.

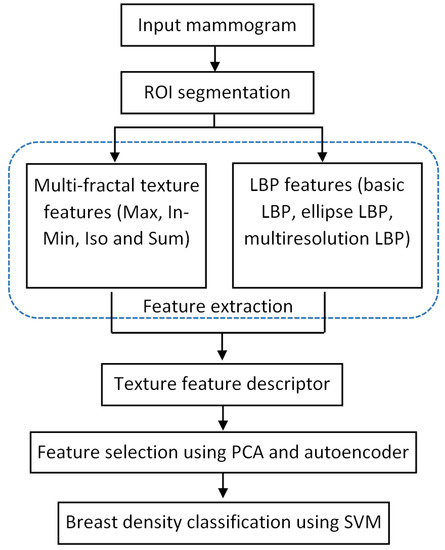

2.3. Processing Stages

Various stages of the processing pipeline are shown in Figure 2. After reading an input mammogram image, we first use a pre-processing step to segment a region of interest (ROI) which is the breast region in this work. The ROI segmentation results in a mask image which can be used in the feature extraction step, where we use texture features extracted from only the breast region areas (excluding the background area and pectoral muscle region). Multi-fractal analysis and local binary patterns are used to extract texture features from breast regions, constituting the texture descriptors. PCA and autoencoder network are used in the feature selection step to reduce the feature space. SVM is chosen as the classifier to predict the breast density label for each test mammogram image. These steps are detailed in the following sections.

Figure 2.

Processing steps for classifying breast density in mammograms.

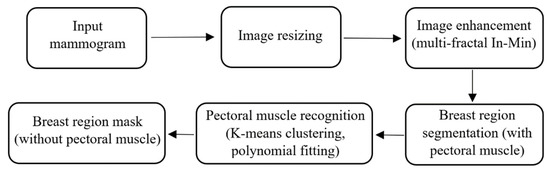

2.4. Mammogram Pre-Processing

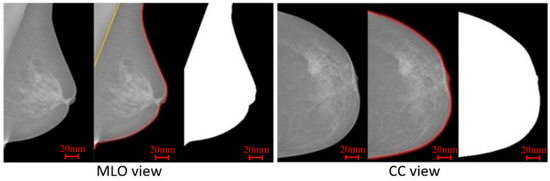

The main task performed in the pre-processing stage is the breast area segmentation. In MLO view mammograms, the pectoral muscle region is captured along with the breast region. However, the pectoral muscle represents a predominantly dense region which may easily affect breast density evaluation. Therefore, ROI segmentation is first applied to remove non-breast areas such as the background region and pectoral muscle. A pipeline for the pre-processing stage is shown in Figure 3. A multi-fractal method (introduced in Section 3.1 and Section 3.2) is used to enhance the contrast between image background and the breast tissue region. An intensity thresholding method and morphological operations [35] are used to separate the breast region from the background. A K-means algorithm and polynomial fitting approach [36] are employed to distinguish the pectoral muscle region from the breast region in MLO view mammograms. A median filter of 3 × 3 size is used to reduce noise. Finally, mask images are obtained, which are used to extract image features from only the region of interest (breast area) in the following steps. Figure 4 shows examples of segmenting the breast region from INbreast mammograms. A more detailed introduction of the methods used in this pre-processing stage can be found in the Supplementary Material (Figures S1 and S2).

Figure 3.

The pipeline of the pre-processing stage.

Figure 4.

Two examples from the INbreast dataset, showing the breast region segmentation and mask images.

3. Feature Extraction

3.1. Multi-Fractal Analysis

Multi-fractal analysis of image intensity variations, computed using a set of local measures, can be used to describe image texture features for different classification tasks [31,32,33]. Let µw(p) denote a multi-fractal measure function, where p is the central pixel in a square window of size w × w. Then, a local singularity coefficient, known as the Hölder exponent or α-value [37], can be calculated to reveal the variation of the selected µw(p) function within the neighbourhood of the pixel p:

$$\mu_w(p) = C\, w^{\alpha_p}, \qquad w = 2i + 1,\; i = 0, 1, \ldots, d - 1 \tag{1}$$

$$\log(\mu_w(p)) = \alpha_p \log(w) + \log(C) \tag{2}$$

where C is an arbitrary constant and d is the total number of windows used in the computation of αp. The value of αp can be estimated from the slope of a linear regression line in a log-log plot where log(µw(p)) is plotted against log(w). Commonly used multi-fractal measures for calculating α are outlined below:

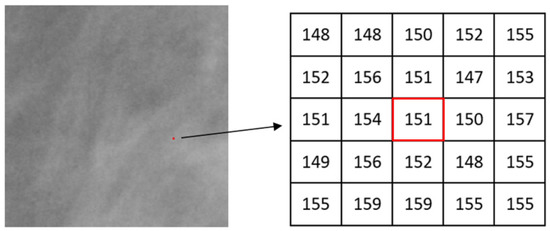

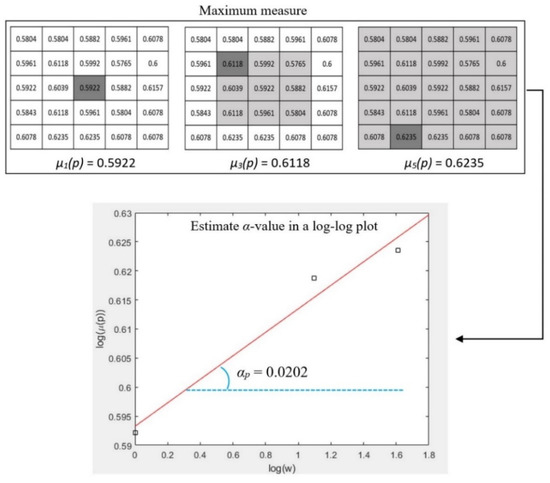
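For reference, the four measures used in this paper (maximum, inverse-minimum, summation, and Iso) are commonly written in the multi-fractal literature in the form below. This is a reconstruction that is consistent with the symbols defined in the paragraph that follows, not a verbatim copy of the original equations; in particular, the intensity tolerance ε in the Iso measure is an assumption.

$$
\begin{aligned}
\text{Maximum:}\quad & \mu_w(p) = \max_{(k,l)\in\Omega} g(k,l) \\
\text{Inverse-minimum:}\quad & \mu_w(p) = 1 - \min_{(k,l)\in\Omega} g(k,l) \\
\text{Summation:}\quad & \mu_w(p) = \sum_{(k,l)\in\Omega} g(k,l) \\
\text{Iso:}\quad & \mu_w(p) = \#\{(k,l)\in\Omega : |g(k,l) - g(p)| \le \varepsilon\}
\end{aligned}
$$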

where, g(k, l) represents the intensity value of a pixel at position (k, l); Ω denotes the set of all neighbourhood pixels of p in the window; # is the number of pixels in a set. Pixel intensity values are normalized into the range of [0, 1] when considering maximum and inverse-minimum measures. Such normalization enhances subtle image features in the domain of Hölder exponents due to the non-linear amplifying effect of the logarithmic function. A patch (a small image region) of one mammogram is shown in Figure 5 and one pixel p (marked in red colour) is chosen for illustrating the calculation of the α value. Figure 6 shows the measured values of µw(p) by using maximum measure when the square window sizes of w are 1, 3, and 5 respectively. The α value is then estimated using the slope of the linear regression line in a log-log plot (Equation (2)).
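The α estimation described above can be sketched in a few lines of Python. This is a minimal illustration for the maximum measure only, assuming intensities already normalised to [0, 1]; the function name `holder_exponent_max`, the border clipping, and the small constant `eps` (added to avoid log(0)) are assumptions made for this sketch, not details taken from the paper.

```python
import numpy as np

def holder_exponent_max(img, row, col, d=3, eps=1e-12):
    """Estimate the Hoelder exponent at (row, col) with the maximum measure.

    img : 2-D array, intensities normalised to [0, 1].
    d   : number of windows; window sizes are w = 1, 3, ..., 2d - 1.
    eps : small constant to avoid log(0) (an assumption of this sketch).
    """
    log_w, log_mu = [], []
    for i in range(d):
        # Square window of size (2i + 1) centred on the pixel, clipped at borders.
        r0, r1 = max(row - i, 0), min(row + i + 1, img.shape[0])
        c0, c1 = max(col - i, 0), min(col + i + 1, img.shape[1])
        mu = img[r0:r1, c0:c1].max()
        log_w.append(np.log(2 * i + 1))
        log_mu.append(np.log(mu + eps))
    # Slope of the regression line in the log-log plot is the alpha value.
    slope, _intercept = np.polyfit(log_w, log_mu, 1)
    return float(slope)
```

Applying such a function at every pixel yields one α value per position; a practical implementation would vectorise the window operations rather than loop over pixels.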

Figure 5.

A local region (200 × 200 pixels) in one mammogram, and the central pixel p(151) and its neighbourhood.

Figure 6.

An example of estimating α value for the point p in Figure 5 using the maximum measure.

3.2. Alpha Image and Texture Features

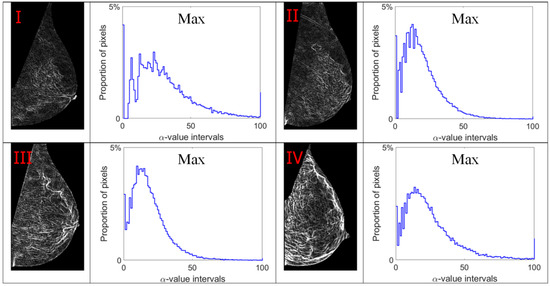

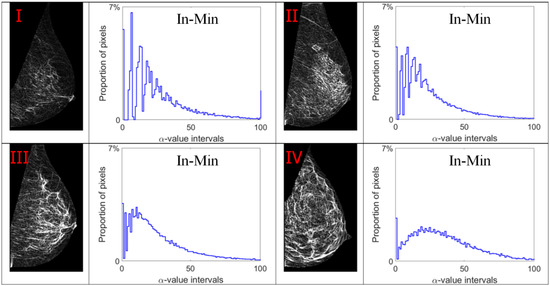

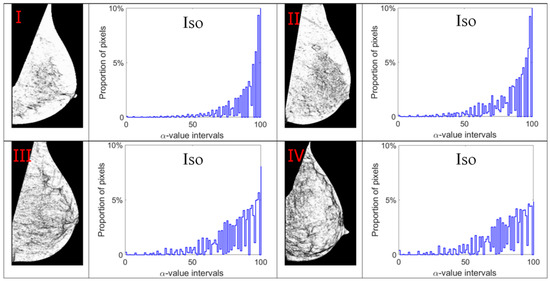

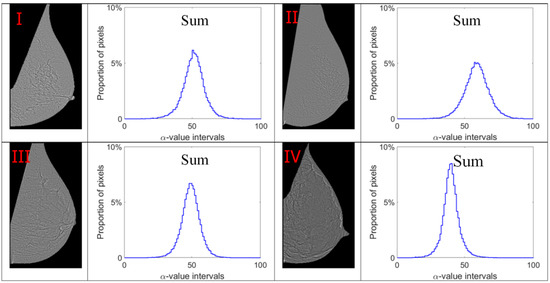

Alpha images (denoted as α-images) are obtained by replacing the intensity value at each position p by the αp value. In α-images, certain texture patterns have higher contrast compared to original images. The range of α values in an α-image is denoted by [αmin, αmax]. This range is subdivided into a set of bins, and pixels having the α values in the same bin are counted to obtain an α-histogram which can be used as one of the texture features [31,32]. In this study, the α value range in each mammogram image is divided into 100 bins which are used to obtain the corresponding α-histograms. As this work focuses on breast density classification, we extract texture features from only breast regions (pectoral muscle and background area excluded). Breast region mask images obtained in the pre-processing stage are used to ensure that the α-images and α-histograms reflect only the image information in breast regions. In addition, since the area of the breast region differs between mammograms, we use the proportion of pixels in the region of interest, instead of the pixel count, to represent the height of bins in histograms, which makes the histogram-based features uniform and comparable. Figure 7, Figure 8, Figure 9 and Figure 10 show α-images of mammograms in different breast density categories given earlier in Figure 1, and their α-histograms using four different multi-fractal measures.
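The masked, proportion-based α-histogram described above could be computed roughly as follows. This is a sketch under stated assumptions: the function name `alpha_histogram` is hypothetical, and the bin range [αmin, αmax] is taken over the masked breast region (the text does not say whether the range is taken over the region or the whole image).

```python
import numpy as np

def alpha_histogram(alpha_img, mask, bins=100):
    """Return a normalised alpha-histogram restricted to the breast region.

    alpha_img : 2-D array of alpha values (the alpha-image).
    mask      : 2-D array, non-zero inside the region of interest.
    bins      : number of histogram bins (100 in this study).
    """
    values = alpha_img[np.asarray(mask, dtype=bool)]
    # Assumption: alpha_min and alpha_max are taken over the masked region.
    counts, _edges = np.histogram(values, bins=bins,
                                  range=(values.min(), values.max()))
    # Use proportions rather than raw counts so that breasts of
    # different sizes give comparable feature vectors.
    return counts / counts.sum()
```

Because each bin holds a proportion, the resulting feature vector sums to 1 regardless of the breast area.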

Figure 7.

Alpha images and alpha histograms using the maximum measure. Top-left: BI-RADS (I) mammogram; top-right: BI-RADS (II) mammogram; bottom-left: BI-RADS (III) mammogram; bottom-right: BI-RADS (IV) mammogram.

Figure 8.

Alpha images and alpha histograms using the inverse-minimum measure. Top-left: BI-RADS (I) mammogram; top-right: BI-RADS (II) mammogram; bottom-left: BI-RADS (III) mammogram; bottom-right: BI-RADS (IV) mammogram.

Figure 9.

Alpha images and alpha histograms using the Iso measure. Top-left: BI-RADS (I) mammogram; top-right: BI-RADS (II) mammogram; bottom-left: BI-RADS (III) mammogram; bottom-right: BI-RADS (IV) mammogram.

Figure 10.

Alpha images and alpha histograms using the summation measure. Top-left: BI-RADS (I) mammogram; top-right: BI-RADS (II) mammogram; bottom-left: BI-RADS (III) mammogram; bottom-right: BI-RADS (IV) mammogram.

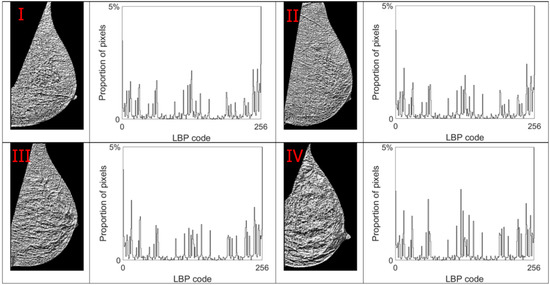

3.3. Local Binary Patterns

The local binary pattern (LBP) proposed in [24] is a powerful feature descriptor used for texture analysis and classification. Due to its simplicity and robustness, several research works and applications use it to extract image features. For mammographic density classification, LBP and its variants have been applied and tested to improve the classification accuracy in [25,26,27,28,29,30]. A binary pattern is derived by comparing the intensity at each pixel with its neighbours and encoding the information in a P-bit integer value. Concretely, for each central pixel c with a grey level value gc in a specific window size, its LBP code is calculated by comparing the gc value with its neighbourhood pixels, which are located at a distance R from c. If gc is higher than the grey level gi of the neighbouring pixel Pi, then the neighbour pixel is assigned a value 0, otherwise a value 1. Subsequently, a P-bit binary code is generated for the current pixel c. LBP_{P,R} is used to denote this binary code and its calculation can be described as follows:

$$LBP_{P,R} = \sum_{i=0}^{P-1} s(g_i - g_c)\, 2^i, \qquad s(x) = \begin{cases} 1, & x \ge 0 \\ 0, & x < 0 \end{cases}$$

When P is set to 8, the total number of different binary patterns that can be generated using LBP is 256 ($2^8$). Therefore, an LBP histogram containing 256 bins is obtained and used as a local texture descriptor. Figure 11 shows LBP images and their LBP histograms for mammograms in different density categories.

Figure 11.

LBP code images and LBP histograms for mammograms in different density categories. Top-left: BI-RADS (I) mammogram; top-right: BI-RADS (II) mammogram; bottom-left: BI-RADS (III) mammogram; bottom-right: BI-RADS (IV) mammogram.

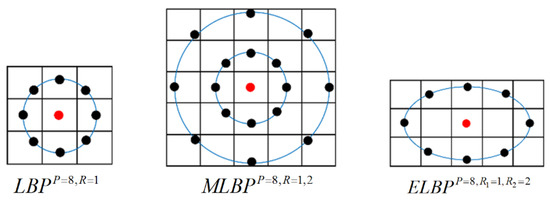

Larger neighbourhood areas represented by higher values of R provide more local image information and generate a longer texture feature vector. For example, when P = 8, setting R = 1 and R = 2 separately, two LBP histograms are obtained and concatenated, producing a 512-length feature vector. This descriptor is called a multi-resolution LBP (MLBP), and it contains more texture information and consequently has higher feature dimensionality compared to LBP.
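The LBP and MLBP histograms described above can be illustrated with the following NumPy sketch. It is a simplified version rather than the implementation used in this study: neighbours on the circle are sampled at the nearest pixel instead of by bilinear interpolation, border pixels are skipped, and the function names `lbp_histogram` and `mlbp_histogram` are hypothetical.

```python
import numpy as np

def lbp_histogram(img, mask=None, P=8, R=1):
    """Normalised 2**P-bin LBP histogram (nearest-pixel sampling, a simplification)."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    centre = img[R:h - R, R:w - R]          # pixels with a full neighbourhood
    codes = np.zeros(centre.shape, dtype=np.int64)
    for i in range(P):
        angle = 2.0 * np.pi * i / P
        dy = int(round(-R * np.sin(angle)))
        dx = int(round(R * np.cos(angle)))
        neighbour = img[R + dy:h - R + dy, R + dx:w - R + dx]
        # Bit i is 0 when the centre is strictly brighter than the neighbour, else 1.
        codes += (neighbour >= centre).astype(np.int64) << i
    if mask is not None:
        codes = codes[np.asarray(mask, dtype=bool)[R:h - R, R:w - R]]
    hist = np.bincount(codes.ravel(), minlength=2 ** P).astype(float)
    return hist / hist.sum()

def mlbp_histogram(img, mask=None, P=8, radii=(1, 2)):
    """Concatenate LBP histograms for several radii (512 values for P=8, R=1 and 2)."""
    return np.concatenate([lbp_histogram(img, mask, P, R) for R in radii])
```

With P = 8 and radii (1, 2), `mlbp_histogram` returns the 512-length vector mentioned in the text, each half being a normalised 256-bin histogram.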

In basic LBP, a circular neighbourhood with a radius of R is used for locating neighbouring pixels. As a variant of LBP, elliptical LBP (ELBP) is developed in [25], aiming to extract more local information from different directions. In ELBP, R1 and R2 denote the lengths of the semi-minor and semi-major axis of the elliptical image region, and the ELBP is calculated as follows:

Figure 12 illustrates the configuration of image pixels used in the computation of the basic LBP and its variants discussed above, and they will be used and compared in this classification work.

Figure 12.

Illustrations of the basic LBP, MLBP and ELBP.

3.4. Feature Descriptor with Concatenated Texture Features

As discussed above, multi-fractal features and LBP features are used as texture descriptors in this work to classify mammogram images. In addition to applying the two texture feature descriptors separately, we also create a novel feature descriptor by concatenating the multi-fractal measure-based feature set with the LBP-based feature set. By combining two different texture feature groups, we assume that more useful image information can be captured to reflect different tissue texture patterns related to breast density characteristics, thus improving the classification performance. In our experiments (Section 5), the four different multi-fractal measures (i.e., Max, In-Min, Iso, Sum) discussed in Section 3.2 are concatenated with LBP-based texture features separately with the aim of obtaining a higher classification accuracy.

4. Feature Selection

The concatenation of several texture feature vectors could result in high feature dimensionality and a high level of feature redundancy. In this section, we consider using PCA and the autoencoder network to select the optimum subset of texture features by removing redundant features which possibly do not relate to the breast density characteristics well.

4.1. Principal Components Analysis

Principal components analysis (PCA) is used for efficient coding of various biological signals [38]. It is a well-known optimal linear scheme for dimension reduction in data analysis, which retains maximal variance in the data set, while improving algorithm performance and saving processing time.

In the proposed method, X denotes the input feature set. X is an M × N matrix with N feature dimensions and M elements in each dimension. We use $X_i$ to refer to the entire set of elements in the ith dimension and $X_{ji}$ to refer to the jth element in this set. The covariance between the first two dimensions $X_1$ and $X_2$ is computed as below.

$$\operatorname{cov}(X_1, X_2) = \frac{\sum_{j=1}^{M} (X_{j1} - \bar{X}_1)(X_{j2} - \bar{X}_2)}{M - 1}$$

where $\bar{X}_1$ and $\bar{X}_2$ denote the means of $X_1$ and $X_2$ respectively. After computing all the possible covariance values between different dimensions, a covariance matrix CM can be obtained as follows:

$$CM = \begin{bmatrix} \operatorname{cov}(X_1, X_1) & \cdots & \operatorname{cov}(X_1, X_N) \\ \vdots & \ddots & \vdots \\ \operatorname{cov}(X_N, X_1) & \cdots & \operatorname{cov}(X_N, X_N) \end{bmatrix}$$

Eigenvalues and eigenvectors of the covariance matrix are calculated subsequently, and eigenvectors are sorted in descending order according to the eigenvalues. A matrix V can be constructed with these eigenvectors in columns. The final feature set X′ can be derived from X and the matrix V as follows:

$$X' = X V$$

In PCA, an assumption made for feature selection is that most information of the input feature set is contained in the subspace spanned by the first n principal axes, where n < N in an N-dimensional feature space. Therefore, each original feature vector can be represented by its principal component vector with the dimensionality of n.
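The PCA steps outlined in this section (covariance matrix, eigen-decomposition, sorting, projection onto the first n axes) can be sketched with NumPy as below. The function name `pca_reduce` is hypothetical, and mean-centring X before projection is an assumption of this sketch (it is standard practice but is not stated explicitly in the text).

```python
import numpy as np

def pca_reduce(X, n):
    """Project an M x N feature matrix onto its first n principal axes.

    Returns the reduced M x n feature set and all eigenvalues in descending order.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                 # centre each feature dimension (assumption)
    CM = np.cov(Xc, rowvar=False)           # N x N covariance matrix, divisor M - 1
    eigvals, eigvecs = np.linalg.eigh(CM)   # eigh: CM is symmetric; ascending order
    order = np.argsort(eigvals)[::-1]       # re-sort in descending order
    V = eigvecs[:, order[:n]]               # eigenvectors of the n largest eigenvalues
    return Xc @ V, eigvals[order]
```

For the concatenated descriptors considered later, X would have N = 612 columns (100 α-histogram bins plus 512 MLBP bins) and n would be a few tens of components.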

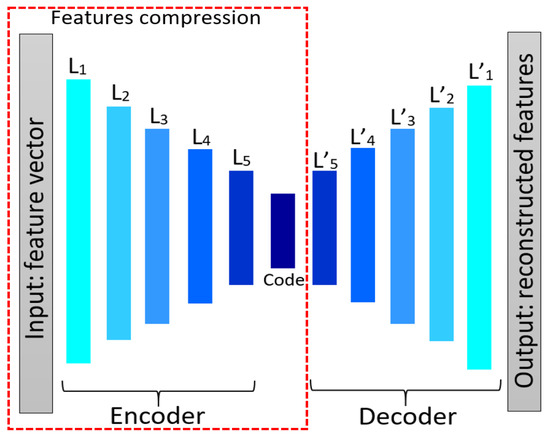

4.2. Autoencoder Network

An autoencoder network [39] is a feed-forward neural network with more than one hidden layer, which attempts to reconstruct input data at the output layer. The output layer is usually of the same size as the input layer and the network architecture resembles an hour-glass shape. As the size of each hidden layer in the encoder part is smaller than that of the input layer, the high-dimensional input data can be reduced to a narrower code space when using more hidden layers. Therefore, in addition to image reconstruction and compression [40], an autoencoder is also used to reduce feature dimensionality. Generally, an autoencoder network consists of two components, namely “encoder” and “decoder”. By reducing the hidden layer size, the encoder part is forced to learn important features of the input data, and the decoder part reconstructs the original data from the generated feature code. Once the training phase is over, the decoder part is discarded and the encoder is used to transform a data sample to a feature subspace.

We use an autoencoder to reduce the length of the feature vector. Therefore, a feature vector of size (1 × n) is input into the autoencoder network. Figure 13 shows an autoencoder model containing 11 hidden layers, in which the input layer receives and processes the initial texture features. Fully connected (FC) layers are used as hidden layers and rectified linear unit (ReLU) is used as the activation function in each hidden layer except the last layer which uses a sigmoid function. Binary cross entropy is employed as a loss function.
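The hour-glass structure described above can be illustrated by a forward pass written in plain NumPy. This is only a structural sketch with randomly initialised weights: training (back-propagation of the binary cross-entropy loss) is omitted, the layer widths other than the 612-dimensional input and the 64-unit code layer are hypothetical, and applying the sigmoid at the final reconstruction layer is one reading of the description.

```python
import numpy as np

# Input (612), 11 fully connected hidden layers with a 64-unit code layer
# in the middle, and an output of the same size as the input.
# All hidden widths except the code size are hypothetical.
SIZES = [612, 512, 384, 256, 128, 96, 64, 96, 128, 256, 384, 512, 612]
CODE_LAYER = 5  # index of the weight layer whose output is the 64-unit code

def build_layers(sizes, seed=0):
    """Create (weights, bias) pairs with He-style random initialisation."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(0.0, np.sqrt(2.0 / a), size=(a, b)), np.zeros(b))
            for a, b in zip(sizes[:-1], sizes[1:])]

def forward(x, layers, code_layer=CODE_LAYER):
    """Return (reconstruction, code) for a batch x of shape (batch, n)."""
    a, code = x, None
    for k, (W, b) in enumerate(layers):
        z = a @ W + b
        is_last = k == len(layers) - 1
        # ReLU everywhere except the final layer, which uses a sigmoid.
        a = 1.0 / (1.0 + np.exp(-z)) if is_last else np.maximum(z, 0.0)
        if k == code_layer:
            code = a
    return a, code

def binary_cross_entropy(x, x_hat, eps=1e-7):
    """Mean binary cross-entropy between inputs in [0, 1] and reconstructions."""
    x_hat = np.clip(x_hat, eps, 1.0 - eps)
    return float(-np.mean(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat)))
```

After training, only the layers up to `CODE_LAYER` would be kept, so that each 612-dimensional descriptor is mapped to a 64-dimensional code.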

Figure 13.

The autoencoder architecture for reducing feature numbers.

4.3. Classification

SVM is used in this study for training the classification model and producing classification results on test images. Since this work aims to classify mammograms into multiple density categories, a multiclass SVM which is implemented by a one against all (OAA) method is used in this model. To obtain the optimal classification results, three commonly used kernels [41], RBF, Poly, and Sigmoid, are tested in this work. A grid-searching method is used to find the best combinations of parameters (gamma, C, and degree).
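A possible way to set up this classifier with scikit-learn is sketched below. The grid values are placeholders chosen for illustration (the ranges actually used are those listed in Table 1), the function name `fit_density_svm` is hypothetical, and stratified shuffled folds are an assumption of this sketch.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.multiclass import OneVsRestClassifier
from sklearn.svm import SVC

def fit_density_svm(X, y, folds=5, seed=0):
    """Grid-search a one-against-all SVM over kernel, C, gamma and degree."""
    # Placeholder grids; 'degree' only applies to the polynomial kernel.
    param_grid = [
        {"estimator__kernel": ["rbf", "sigmoid"],
         "estimator__C": [1, 10],
         "estimator__gamma": [0.01, 0.1]},
        {"estimator__kernel": ["poly"],
         "estimator__C": [1, 10],
         "estimator__gamma": [0.01, 0.1],
         "estimator__degree": [2, 3]},
    ]
    cv = StratifiedKFold(n_splits=folds, shuffle=True, random_state=seed)
    search = GridSearchCV(OneVsRestClassifier(SVC()), param_grid,
                          cv=cv, scoring="accuracy")
    search.fit(X, y)
    return search
```

The fitted object exposes `best_params_` and `best_score_`, which give the selected kernel and parameters and the mean cross-validated accuracy.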

5. Experiments and Result Analysis

In our experiments, a five-fold cross validation is performed on INbreast dataset using the proposed texture descriptors and the SVM classifier. Table 1 lists the important parameters of the methods used and their selected values or value ranges.

Table 1.

Methods, parameters and their value ranges used in experimental analysis.

5.1. Classification Results Using Multi-Fractal Features

We first test and compare the efficacy of multi-fractal feature descriptors for classifying breast density. As discussed in Section 3.2, four different multi-fractal measures are used to obtain the α-histograms, each of which contains 100 bins. The α-histogram is used as the texture feature descriptor to represent the breast density related features. One of the four BI-RADS density labels is predicted for each test mammogram by the SVM classifier. The classification results are shown in confusion matrices in Table 2 for each of the four different multi-fractal measures. From the confusion matrices we can see that the classification results based on different feature descriptors differ considerably. The Iso based feature descriptor produces the highest classification accuracy of 73.8%, while an accuracy of 63.3% is obtained using features based on the Max measure. The other two multi-fractal measures, In-Min and Sum, yield lower accuracy results of 59.7% and 55.3% respectively.

Table 2.

Confusion matrices of classification results using multi-fractal feature descriptors.

5.2. Classification Results Using LBP Features

Three LBP based texture feature descriptors, LBP, ELBP, and MLBP, are tested under the same experimental setting. As introduced in Section 3.3, the LBP or ELBP based histogram contains 256 bins, while the MLBP calculates LBP codes from two different neighbourhoods, containing a total of 512 bins. Classification results in Table 3 show that the MLBP feature descriptor produces a slightly higher classification accuracy (73.3%) than the other two descriptors. An accuracy of 72.1% is obtained using ELBP and 70.9% using LBP. The MLBP descriptor contains many more image features extracted from larger local areas and, as expected, produces a better classification performance. Therefore, MLBP is selected to be concatenated with different multi-fractal feature sets in the next section, constituting new feature descriptors.

Table 3.

Confusion matrices of classification results using LBP based feature descriptors.

5.3. Classification Results Using Cascaded Features

In this section, we discuss the possibility of augmenting the feature space by concatenating multi-fractal texture features and the MLBP features. Each multi-fractal measure (Max, In-Min, Iso, and Sum) based feature set is concatenated with the MLBP feature set, generating a 612-bin feature descriptor (100 α-histogram bins plus 512 MLBP bins). To reduce the feature dimensionality by removing irrelevant texture features, PCA is used to optimize the feature space before using the feature descriptor to classify mammograms. The final classification results are shown in Table 4. The overall classification performance is improved by more than 10%, compared to the results obtained using individual multi-fractal feature descriptors or the LBP based features. The best classification accuracy of 84.6% is obtained using the Iso+MLBP feature descriptor. The Max+MLBP feature set also gives a high accuracy of 81.4%. By combining MLBP features, the In-Min and Sum based descriptors also show improved accuracy levels of 76.8% and 68.9% respectively. This indicates that the feature combination and the use of PCA contribute to improving the representation ability and discriminating power of texture features for distinguishing breast density related characteristics in mammograms.

Table 4.

Confusion matrices of classification results using cascaded texture feature descriptors.

5.4. Effect of Feature Selection

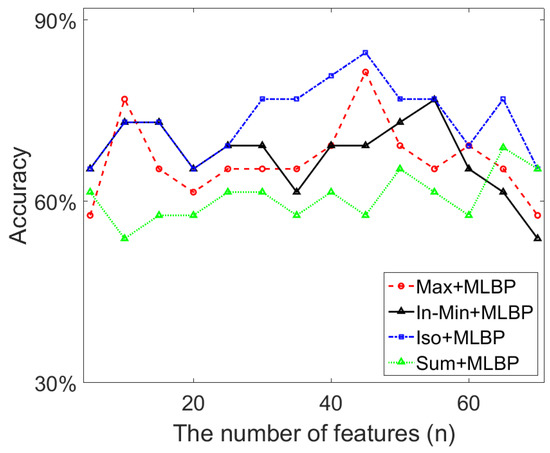

This section investigates the effect of feature selection using PCA and the autoencoder network on classification accuracy. As discussed in previous sections, the cascaded texture feature set (multi-fractal features + MLBP) occupies a large feature space which may contain redundant image features, and needs to be optimized before it is used in the classification algorithm.

PCA re-computes the initial feature set and outputs new features in decreasing order of explained variance, which can be used to select the optimum n features for the classification work. Figure 14 shows the top 70 features after applying PCA, the range in which the best classification results shown in Table 4 are obtained when using the cascaded feature descriptors. In Figure 14, we can see that the highest classification accuracy (84.6%) based on the Iso+MLBP descriptor is obtained using the top 45 (i.e., n = 45) features. The Max+MLBP descriptor also uses n = 45 features to reach its best classification performance. For the In-Min and Sum based descriptors, 55 and 65 features respectively are used to achieve their best classification results. As the initial feature set contains 612 features, over 90% of the feature space is removed by PCA, which contributes to a significant reduction in feature dimensionality and the improvement of classification accuracy.

Figure 14. The classification accuracy using the reduced texture features by PCA.
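As a quick check on the dimensionality reduction reported for the PCA step, the fraction of the 612-dimensional feature space that is discarded when keeping the top n components can be worked out directly from the values of n given above:

```latex
\[
1 - \frac{n}{612}:\qquad
n = 45 \;\Rightarrow\; 1 - \frac{45}{612} \approx 0.926,\qquad
n = 55 \;\Rightarrow\; 1 - \frac{55}{612} \approx 0.910,\qquad
n = 65 \;\Rightarrow\; 1 - \frac{65}{612} \approx 0.894 .
\]
```

The Iso+MLBP and Max+MLBP descriptors (n = 45) therefore discard about 92.6% of the feature space, while the Sum based descriptor (n = 65) discards slightly less than 90%.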

We also use the autoencoder network to select texture features for the cascaded feature descriptors. Different numbers of hidden layers in the autoencoder structure, taken from the set {5, 7, 9, 11, 13, 15}, are tested, and classification results based on the Iso+MLBP descriptor are shown in Table 5. An 11-layer architecture with a code layer size of 64 produces the highest classification accuracy (80.7%). The results indicate that the autoencoder network can optimize the feature space for the cascaded feature descriptors and obtain a desirable classification accuracy. However, its classification performance does not surpass the accuracy obtained using PCA (80.7% vs. 84.6%).

Table 5. The classification accuracy using Iso+MLBP feature descriptor and different structures of the autoencoder network.
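To make the notion of an autoencoder "structure" more concrete, the sketch below generates one possible set of hidden-layer widths for each tested depth. It is purely illustrative: the text above specifies only the number of hidden layers and the code layer size of 64, so the symmetric layout, the geometric shrinking rule, and the function name `hidden_layer_widths` are assumptions, not the widths used in our experiments.

```python
def hidden_layer_widths(input_dim, code_dim, n_hidden):
    """Hypothetical symmetric hidden-layer widths for an autoencoder.

    The middle hidden layer is the code layer. Encoder widths shrink
    geometrically from input_dim towards code_dim; the decoder mirrors them.
    """
    if n_hidden < 1 or n_hidden % 2 == 0:
        raise ValueError("n_hidden must be a positive odd number")
    half = n_hidden // 2          # hidden layers on each side of the code layer
    steps = half + 1              # transitions from the input to the code layer
    ratio = (code_dim / input_dim) ** (1.0 / steps)
    encoder = [round(input_dim * ratio ** i) for i in range(1, half + 1)]
    return encoder + [code_dim] + encoder[::-1]


# Depths tested in this section; 612 inputs and a 64-unit code layer.
for n_hidden in (5, 7, 9, 11, 13, 15):
    print(n_hidden, hidden_layer_widths(612, 64, n_hidden))
```

After training, the activations of the middle (code) layer would serve as the reduced feature vector passed to the classifier.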

5.5. Results Comparison and Discussion

In this section, we further compare and discuss the experimental results presented in Section 5.1, Section 5.2 and Section 5.3 using different classification metrics: classification accuracy (CA), AUCROC, Kappa coefficient and F1 score. In addition, a statistical hypothesis test is conducted. A t-test with a significance level of 0.05 is performed between the Iso+MLBP descriptor and every other method to calculate the p-value, which indicates whether the difference between them is statistically significant. Table 6 shows that the Iso+MLBP joint feature descriptor outperforms the other approaches, producing not only the highest AUC (95.3 ± 3.1) but also the highest Kappa (0.79) and F1 score (0.85). In the t-test, all other methods present low p-values (<0.05), which means that the difference in classification performance is statistically significant.

Table 6. The comparison of classification performance between different feature descriptors, with the best classification performance highlighted in bold.
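For reference, the accuracy, Kappa coefficient and F1 score used in this comparison can all be derived from a confusion matrix, as in the minimal sketch below. The 4×4 matrix is made-up illustrative data (rows are true BI-RADS categories, columns are predictions), not a result from our experiments, and macro-averaging of the per-class F1 scores is an assumption.

```python
def accuracy(cm):
    """Fraction of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def cohen_kappa(cm):
    """Cohen's kappa: agreement corrected for chance agreement."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    observed = sum(cm[i][i] for i in range(n)) / total
    row_sums = [sum(cm[i]) for i in range(n)]
    col_sums = [sum(cm[i][j] for i in range(n)) for j in range(n)]
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    return (observed - expected) / (1 - expected)


def macro_f1(cm):
    """Unweighted mean of the per-class F1 scores."""
    n = len(cm)
    scores = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                          # true class k, missed
        fp = sum(cm[i][k] for i in range(n)) - tp     # predicted k, wrong
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / n


# Illustrative confusion matrix only (rows: true class, columns: predicted).
example_cm = [
    [8, 2, 0, 0],
    [1, 7, 2, 0],
    [0, 2, 7, 1],
    [0, 0, 2, 8],
]
print(accuracy(example_cm), cohen_kappa(example_cm), macro_f1(example_cm))
```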

Based on the classification results shown in Table 2, Table 3, Table 4 and Table 6, we have the following findings:

- (1) For the LBP and its variants, we can see that the use of different neighbourhood topologies (i.e., elliptical vs. circular) can improve the classification performance, which is consistent with the conclusion in [25]: the extracted anisotropic texture information has potential for distinguishing objects. However, the MLBP, which collects texture information from two different neighbourhood areas, makes the improvement more evident. This indicates that for breast density classification, which is a very challenging task due to the heterogeneous texture patterns of breast tissue, capturing more (richer) texture features from different local regions can improve the classification performance.

- (2) The results in Table 2 lead to the following observations regarding multi-fractal features. The Iso measure produces a better classification result than the other measures. This can be ascribed to the fact that the Max and In-Min measures consider the information of only one pixel (the maximum or the minimum intensity value) when computing the singularity coefficient (i.e., α-value), and fail to collect image information from the other pixels within the local region.

- (3) From the results in Table 4 and Table 6, we can see that the use of the combined feature descriptor improves the classification accuracy significantly, which also indicates that the texture features extracted by the MLBP and multi-fractal methods are different. The feature sets collected by the two methods can be considered complementary to each other.

- (4) As shown in Section 5.4, the combination of different texture features produces a bigger feature space which contains redundant features that do not help distinguish the breast density related characteristics between different categories. Results in Figure 14 show that the classification accuracy can even be lower than that of an individual feature set if the concatenated features are not selected properly, which demonstrates the importance of removing redundant features and the necessity of a feature selection scheme.

- (5) Even though BI-RADS uses four density categories, breast density is sometimes discussed with binary labels of low density (fatty and sparsely dense, or BI-RADS I and II) and high density (heterogeneously and extremely dense, or BI-RADS III and IV) [7]. We conduct the binary classification using the Iso+MLBP descriptor, which produces the best four-category classification results in our experiments. Table 7 shows the binary classification results. Although the texture features extracted by the multi-fractal Iso method and the MLBP provide desirable binary classification accuracies (89.2% and 91.9%), the joint feature descriptor Iso+MLBP shows a more powerful representation capability for image features, with a higher classification accuracy of 92.9%. These observations are consistent with the results obtained in the four-category classification and demonstrate the robustness of the proposed feature descriptor.

Table 7. Confusion matrices of the binary density categories classification results using the proposed feature descriptors.
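The grouping of the four BI-RADS density categories into binary labels, as described in finding (5), amounts to a simple relabelling of the ground truth before the classifier is trained. A minimal sketch follows; the integer encoding 1 to 4 for BI-RADS I to IV and the function name `to_binary_density` are assumptions made for illustration.

```python
LOW_DENSITY = "low"    # BI-RADS I and II (fatty, sparsely dense)
HIGH_DENSITY = "high"  # BI-RADS III and IV (heterogeneously, extremely dense)


def to_binary_density(birads_category):
    """Map a BI-RADS density category (1-4) to a binary density label."""
    if birads_category in (1, 2):
        return LOW_DENSITY
    if birads_category in (3, 4):
        return HIGH_DENSITY
    raise ValueError(f"unexpected BI-RADS density category: {birads_category}")


# Hypothetical ground-truth labels for six images.
four_class_labels = [1, 2, 3, 4, 2, 3]
binary_labels = [to_binary_density(c) for c in four_class_labels]
print(binary_labels)
```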


A recent study [29] applied a local quinary pattern (LQP) method to extract texture features using different neighbourhood topologies for the same classification task. Their results indicate that the ellipse topology based LQP gives the best accuracy of 82.02% when using over 200 image features on 206 images from the INbreast dataset (MLO view images only). By contrast, our proposed method is tested on the whole INbreast dataset (409 images) and attains an accuracy of 84.6% using only 45 features. Table 8 compares the classification performance obtained using LQP, LBP and multi-fractal feature descriptors.

Table 8. The classification accuracy comparison using different feature descriptors.

6. Conclusions and Future Work

In this paper, a comprehensive study of multi-fractal analysis is conducted to extract texture features from mammogram images for classifying BI-RADS density. Four different multi-fractal measures are used to capture breast density related image features, and LBP and its variants are employed to extract additional features. Novel texture feature descriptors concatenating multi-fractal features and MLBP features are proposed to integrate rich image features obtained from different feature extraction methods. PCA and an autoencoder network are used in this work to optimize the feature space by removing redundant features. The proposed method is tested using an FFDM dataset, INbreast, with 409 mammogram images. Experimental results show that the cascaded feature descriptor based on multi-fractal analysis and LBP obtains higher classification accuracy than the individual feature sets. In future work, other image feature descriptors such as LQP will be considered together with multi-fractal features. In addition, different mammogram datasets will be used in experiments to demonstrate the robustness of the proposed method.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/jimaging7100205/s1, Figure S1: Segmentation of breast region. (a) Input mammogram; (b) clustering operation based on the alpha image; (c) rough contour of the breast region; (d) smoothing breast contour; (e) finding pectoral muscle area; (f) breast mask image without pectoral muscle region; Figure S2: Challenging cases with inaccurate mask images generated and their adjusted mask images based on manual operations. References [42,43,44] are cited in the Supplementary Materials.

Author Contributions

Conceptualization, H.L. and R.M.; formal analysis, H.L.; investigation, H.L.; methodology, H.L. and R.M.; software, H.L.; supervision, R.M. and S.B.; writing—original draft, H.L.; writing—review and editing, R.M. and S.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Kallenberg, M.; Petersen, K.; Nielsen, M.; Ng, A.Y.; Diao, P.; Igel, C.; Vachon, C.M.; Holland, K.; Winkel, R.R.; Karssemeijer, N.; et al. Unsupervised deep learning applied to breast density segmentation and mammographic risk scoring. IEEE Trans. Med. Imaging 2016, 35, 1322–1331.

2. Boyd, N.F.; Byng, J.W.; Jong, R.A.; Fishell, E.K.; Little, L.E.; Miller, A.B.; Lockwood, G.A.; Tritchler, D.L.; Yaffe, M.J. Quantitative Classification of Mammographic Densities and Breast Cancer Risk: Results from the Canadian National Breast Screening Study. J. Natl. Cancer Inst. 1995, 87, 670–675.

3. Kerlikowske, K.; Cook, A.J.; Buist, D.S.; Cummings, S.R.; Vachon, C.; Vacek, P.; Miglioretti, D.L. Breast Cancer Risk by Breast Density, Menopause, and Postmenopausal Hormone Therapy Use. J. Clin. Oncol. 2010, 28, 3830–3837.

4. McLean, K.E.; Stone, J. Role of breast density measurement in screening for breast cancer. Climacteric 2018, 21, 214–220.

5. Wolfe, J.N. Risk for breast cancer development determined by mammographic parenchymal pattern. Cancer 1976, 37, 2486–2492.

6. Sickles, E.A.; D’Orsi, C.J.; Bassett, L.W.; Appleton, C.M.; Berg, W.A.; Burnside, E.S.; Feig, S.A.; Gavenonis, S.C.; Newell, M.S.; Trinh, M.M. ACR BI-RADS® Mammography. In ACR BI-RADS® Atlas, Breast Imaging Reporting and Data System; American College of Radiology: Reston, VA, USA, 2013.

7. Muštra, M.; Grgic, M.; Delač, K. Breast Density Classification Using Multiple Feature Selection. Automatika 2012, 53, 362–372.

8. Huo, C.W.; Chew, G.L.; Britt, K.; Ingman, W.; Henderson, M.A.; Hopper, J.L.; Thompson, E.W. Mammographic density—a review on the current understanding of its association with breast cancer. Breast Cancer Res. Treat. 2014, 144, 479–502.

9. Jeffers, A.M.; Sieh, W.; Lipson, J.A.; Rothstein, J.H.; McGuire, V.; Whittemore, A.S.; Rubin, D.L. Breast Cancer Risk and Mammographic Density Assessed with Semiautomated and Fully Automated Methods and BI-RADS. Radiology 2017, 282, 348–355.

10. Oliver, A.; Tortajada, M.; Lladó, X.; Freixenet, J.; Ganau, S.; Tortajada, L.; Vilagran, M.; Sentis, M.; Martí, R. Breast Density Analysis Using an Automatic Density Segmentation Algorithm. J. Digit. Imaging 2015, 28, 604–612.

11. Subashini, T.; Ramalingam, V.; Palanivel, S. Automated assessment of breast tissue density in digital mammograms. Comput. Vis. Image Underst. 2010, 114, 33–43.

12. Qu, Y.; Fu, Q.; Shang, C.; Deng, A.; Zwiggelaar, R.; George, M.; Shen, Q. Fuzzy-rough assisted refinement of image processing procedure for mammographic risk assessment. Appl. Soft Comput. 2020, 91, 106230.

13. Li, S.; Wei, J.; Chan, H.-P.; Helvie, M.A.; Roubidoux, M.A.; Lu, Y.; Zhou, C.; Hadjiiski, L.M.; Samala, R.K. Computer-aided assessment of breast density: Comparison of supervised deep learning and feature-based statistical learning. Phys. Med. Biol. 2017, 63, 025005.

14. Tzikopoulos, S.D.; Mavroforakis, M.E.; Georgiou, H.V.; Dimitropoulos, N.; Theodoridis, S. A fully automated scheme for mammographic segmentation and classification based on breast density and asymmetry. Comput. Methods Programs Biomed. 2011, 102, 47–63.

15. Muhimmah, I.; Zwiggelaar, R. Mammographic density classification using multiresolution histogram information. In Proceedings of the International Special Topic Conference on Information Technology in Biomedicine, Ioannina, Greece, 26–28 October 2006; pp. 26–28.

16. Chen, Z.; Denton, E.; Zwiggelaar, R. Local feature based mammographic tissue pattern modelling and breast density classification. In Proceedings of the 2011 4th International Conference on Biomedical Engineering and Informatics (BMEI), Shanghai, China, 15–17 October 2011.

17. George, M.; Rampun, A.; Denton, E.; Zwiggelaar, R. Mammographic ellipse modelling towards birads density classification. In Proceedings of the 13th International Workshop on Breast Imaging IWDM, Malmö, Sweden, 19–22 June 2016; pp. 423–430.

18. Zheng, Y.; Keller, B.M.; Ray, S.; Wang, Y.; Conant, E.F.; Gee, J.C.; Kontos, D. Parenchymal texture analysis in digital mammography: A fully automated pipeline for breast cancer risk assessment. Med. Phys. 2015, 42, 4149–4160.

19. Mohamed, A.A.; Berg, W.A.; Peng, H.; Luo, Y.; Jankowitz, R.C.; Wu, S. A deep learning method for classifying mammographic breast density categories. Med. Phys. 2018, 45, 314–321.

20. Berg, W.A.; Campassi, C.; Langenberg, P.; Sexton, M.J. Breast Imaging Reporting and Data System: Inter- and intraobserver variability in feature analysis and final assessment. AJR Am. J. Roentgenol. 2000, 174, 1769–1777.

21. Ahn, C.K.; Heo, C.; Jin, H.; Kim, J.H. A novel deep learning-based approach to high accuracy breast density estimation in digital mammography. In Proceedings of the SPIE Medical Imaging 2017: Computer-Aided Diagnosis, Orlando, FL, USA, 11–16 February 2017; Volume 10134.

22. Lee, J.; Nishikawa, R.M. Automated mammographic breast density estimation using a fully convolutional network. Med. Phys. 2018, 45, 1178–1190.

23. Hamidinekoo, A.; Denton, E.; Rampun, A.; Honnor, K.; Zwiggelaar, R. Deep learning in mammography and breast histology, an overview and future trends. Med. Image Anal. 2018, 47, 45–67.

24. Ojala, T.; Pietikäinen, M.; Harwood, D. A comparative study of texture measures with classification based on featured distributions. Pattern Recognit. 1996, 29, 51–59.

25. George, M.; Zwiggelaar, R. Comparative Study on Local Binary Patterns for Mammographic Density and Risk Scoring. J. Imaging 2019, 5, 24.

26. Tan, X.; Triggs, W. Enhanced Local Texture Feature Sets for Face Recognition Under Difficult Lighting Conditions. IEEE Trans. Image Process. 2010, 19, 1635–1650.

27. Rampun, A.; Morrow, P.; Scotney, B.; Winder, J. Breast density classification using local ternary patterns in mammograms. In Proceedings of the Image Analysis and Recognition 14th International Conference, ICIAR 2017, Montreal, QC, Canada, 5–7 July 2017; pp. 463–470.

28. Nanni, L.; Lumini, A.; Brahnam, S. Local binary patterns variants as texture descriptors for medical image analysis. Artif. Intell. Med. 2010, 49, 117–125.

29. Rampun, A.; Scotney, B.W.; Morrow, P.J.; Wang, H.; Winder, J. Breast Density Classification Using Local Quinary Patterns with Various Neighbourhood Topologies. J. Imaging 2018, 4, 14.

30. Rampun, A.; Morrow, P.J.; Scotney, B.W.; Wang, H. Breast density classification in mammograms: An investigation of encoding techniques in binary-based local patterns. Comput. Biol. Med. 2020, 122, 103842.

31. Ibrahim, M.; Mukundan, R. Multifractal Techniques for Emphysema Classification in Lung Tissue Images. In Proceedings of the 3rd International Conference on Future Bioengineering (ICFB), Kuala Lumpur, Malaysia, 6–7 December 2019; pp. 115–119.

32. Reljin, I.; Reljin, B.; Pavlovic, I.; Rakocevic, I. Multifractal analysis of gray-scale images. In Proceedings of the 2000 10th Mediterranean Electrotechnical Conference. Information Technology and Electrotechnology for the Mediterranean Countries. Proceedings. MeleCon 2000, Lemesos, Cyprus, 29–31 May 2000; Volumes 1–3, pp. 490–493.

33. Xue, Y.; Bogdan, P. Reliable Multi-Fractal Characterization of Weighted Complex Networks: Algorithms and Implications. Sci. Rep. 2017, 7, 1–22.

34. Moreira, I.C.; Amaral, I.; Domingues, I.; Cardoso, A.; Cardoso, M.J.; Cardoso, J. INbreast: Toward a Full-field Digital Mammographic Database. Acad. Radiol. 2012, 19, 236–248.

35. Muštra, M.; Grgic, M.; Rangayyan, R. Review of recent advances in segmentation of the breast boundary and the pectoral muscle in mammograms. Med. Biol. Eng. Comput. 2015, 54, 1003–1024.

36. Slavkovic-Ilic, M.; Gavrovska, A.; Milivojević, M.; Reljin, I.; Reljin, B. Breast region segmentation and pectoral muscle removal in mammograms. Telfor J. 2016, 8, 50–55.

37. Falconer, K. Random Fractals. Fractal Geometry: Mathematical Foundations and Applications; Second Chichester; John Wiley & Sons: London, UK, 2005.

38. Hyvarinen, A.; Karhunen, J.; Oja, E. Independent Component Analysis; John Wiley & Sons: New York, NY, USA, 2001.

39. Kramer, M.A. Nonlinear principal component analysis using autoassociative neural networks. AIChE J. 1991, 37, 233–243.

40. Bai, J.; Dai, X.; Wu, Q.; Xie, L. Limited-view CT Reconstruction Based on Autoencoder-like Generative Adversarial Networks with Joint Loss. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018.

41. Du, S.; Liu, C.; Xi, L. A Selective Multiclass Support Vector Machine Ensemble Classifier for Engineering Surface Classification Using High Definition Metrology. J. Manuf. Sci. Eng. 2015, 137, 011003.

42. Li, H.; Mukundan, R.; Boyd, S. Robust Texture Features for Breast Density Classification in Mammograms. In Proceedings of the 16th International Conference on Control, Automation, Robotics and Vision (ICARCV), Shenzhen, China, 13–15 December 2020; pp. 454–459.

43. Sreedevi, S.; Sherly, E. A Novel Approach for Removal of Pectoral Muscles in Digital Mammogram. Procedia Comput. Sci. 2015, 46, 1724–1731.

44. Rampun, A.; López-Linares, K.; Morrow, P.J.; Scotney, B.W.; Wang, H.; Ocaña, I.G.; Maclair, G.; Zwiggelaar, R.; Ballester, M.A.G.; Macía, I. Breast pectoral muscle segmentation in mammograms using a modified holistically-nested edge detection network. Med. Image Anal. 2019, 57, 1–17.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).