Semi-Supervised Domain Adaptation for Holistic Counting under Label Gap

Abstract

1. Introduction

Contribution

- We propose a semi-supervised domain adaptation method for holistic regression tasks that jointly tackles covariate shift and label gap.

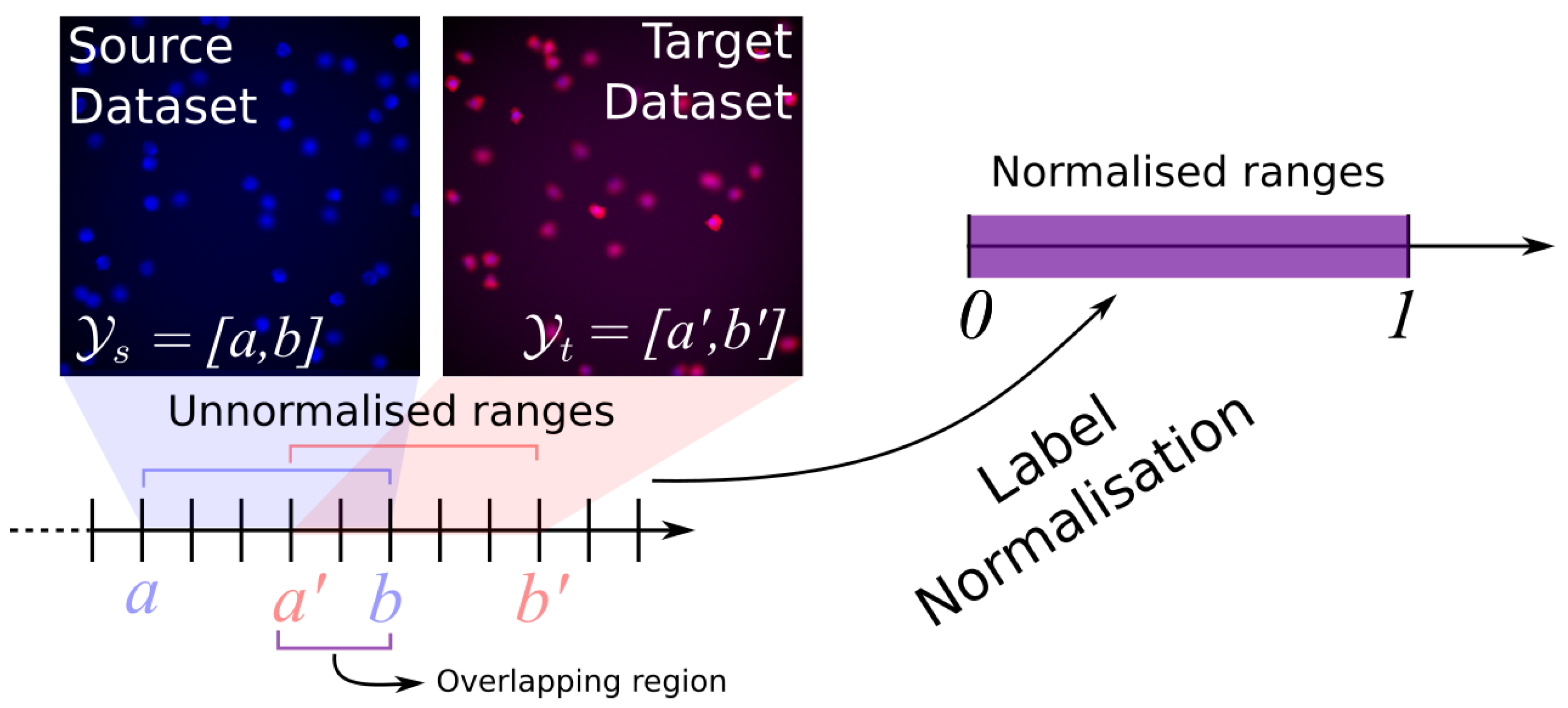

- Label gap is mitigated via label normalisation, i.e., . As a consequence, our method works under closed set, open set, and partial DA [1].

- We demonstrate that as few as 10 annotated images taken from the target dataset are enough to restore the target label range, i.e., remapping .

- We propose a stopping criterion that jointly monitors the MMD and the GAN Global Optimality Condition to prevent overfitting and, thus, to better align source and target features. We show the effectiveness of this stopping criterion with an ablation study.
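The label normalisation and target-range remapping mentioned in the contributions can be illustrated with a short Python sketch. It assumes min-max normalisation of the counts to [0, 1] and estimates the target label range from the minimum and maximum of a few annotated target counts. Both choices, the helper names `normalise` and `remap`, and all numeric values are assumptions made for illustration, not the exact formulation of the method.

```python
def normalise(counts, lo, hi):
    """Min-max normalise raw counts to [0, 1] given a label range [lo, hi]."""
    if hi <= lo:
        raise ValueError("label range must satisfy lo < hi")
    return [(c - lo) / (hi - lo) for c in counts]


def remap(normalised, lo, hi):
    """Map normalised predictions back to integer counts in [lo, hi]."""
    return [round(lo + p * (hi - lo)) for p in normalised]


# Hypothetical source counts, normalised with the (assumed) source label range.
source_counts = [1, 50, 100]
source_norm = normalise(source_counts, lo=1, hi=100)

# Hypothetical counts of 10 annotated target images; their min and max
# give a rough estimate of the target label range.
target_annotated = [110, 125, 140, 155, 160, 170, 180, 185, 190, 200]
t_lo, t_hi = min(target_annotated), max(target_annotated)

# Made-up normalised regressor outputs on target images, remapped to counts.
predictions = [0.0, 0.5, 1.0]
target_counts = remap(predictions, t_lo, t_hi)
```

Because the regressor only ever sees normalised labels, the same network output can be remapped to whichever count range the target domain exhibits.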

2. Related Works

2.1. Domain Adaptation

2.2. Domain Adaptation for Regression Tasks

2.3. Label Gap

3. Proposed Method

- Feature Extractor: We used ResNet-50 [34] as the feature extractor, which outputs a feature vector of size 2048.

- Regressor Network: It stacks three fully-connected layers (cf. Table 1) to learn the holistic regression task.

- Discriminator: The architecture of the discriminator D is also detailed in Table 1. D is trained adversarially against the feature extractor, so that after adaptation it cannot differentiate between source and target features.
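To make the layer dimensions concrete, the sketch below encodes the dense layers listed in Table 1 as (input size, output size, activation) tuples, checks that they chain from the 2048-dimensional ResNet-50 feature vector, and counts the trainable parameters. The helper names are hypothetical, and the parameter count assumes that every dense layer has a bias term, which the table does not state.

```python
# (input size, output size, activation) for each dense layer, as listed in Table 1.
REGRESSOR = [(2048, 1024, "ReLU"), (1024, 512, "ReLU"), (512, 1, "Linear")]
DISCRIMINATOR = [(2048, 1024, "LeakyReLU"), (1024, 512, "LeakyReLU"), (512, 1, "Sigmoid")]

FEATURE_SIZE = 2048  # size of the ResNet-50 feature vector


def check_chain(layers, input_size):
    """Verify that each layer's input size equals the previous output size."""
    size = input_size
    for n_in, n_out, _ in layers:
        if n_in != size:
            raise ValueError(f"expected input size {size}, got {n_in}")
        size = n_out
    return size  # size of the final output


def count_parameters(layers):
    """Count weights plus biases (assumption: every dense layer has a bias)."""
    return sum(n_in * n_out + n_out for n_in, n_out, _ in layers)


regressor_out = check_chain(REGRESSOR, FEATURE_SIZE)
discriminator_out = check_chain(DISCRIMINATOR, FEATURE_SIZE)
regressor_params = count_parameters(REGRESSOR)
discriminator_params = count_parameters(DISCRIMINATOR)
```

Under the bias assumption, both heads have the same number of parameters, since they differ only in their activation functions.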

3.1. Pretraining on the Source Dataset

3.2. Feature Alignment with Adversarial Adaptation

3.2.1. Variance-Based Regularisation Preventing Posterior Collapse

3.2.2. Stopping Criterion
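The contributions describe a stopping criterion that jointly monitors the MMD and the GAN Global Optimality Condition. As a rough illustration only, the Python sketch below computes a biased estimate of the squared MMD with a Gaussian kernel and measures how far the mean discriminator outputs are from 0.5, the value the optimal GAN discriminator takes when the two distributions coincide [22]. The kernel choice, the tolerances `mmd_tol` and `gap_tol`, and the rule in `should_stop` are assumptions of this sketch, not the criterion used in the experiments.

```python
import math


def rbf_kernel(x, y, gamma=1.0):
    """Gaussian (RBF) kernel between two feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def mmd2(source, target, gamma=1.0):
    """Biased estimate of the squared MMD between two sets of feature vectors."""
    def mean_kernel(xs, ys):
        total = sum(rbf_kernel(x, y, gamma) for x in xs for y in ys)
        return total / (len(xs) * len(ys))
    return (mean_kernel(source, source) + mean_kernel(target, target)
            - 2 * mean_kernel(source, target))


def optimality_gap(d_source, d_target):
    """Largest distance of the mean discriminator outputs from 0.5."""
    mean_s = sum(d_source) / len(d_source)
    mean_t = sum(d_target) / len(d_target)
    return max(abs(mean_s - 0.5), abs(mean_t - 0.5))


def should_stop(src_feats, tgt_feats, d_source, d_target, mmd_tol=0.05, gap_tol=0.05):
    """Hypothetical rule: stop once both quantities are below their tolerances."""
    return (mmd2(src_feats, tgt_feats) < mmd_tol
            and optimality_gap(d_source, d_target) < gap_tol)


# Toy 2-D features: one pair of well-aligned domains, one pair far apart.
aligned = should_stop([[0.0, 0.1], [0.2, 0.0]], [[0.1, 0.0], [0.0, 0.2]],
                      d_source=[0.52, 0.49], d_target=[0.50, 0.47])
shifted = should_stop([[0.0, 0.0], [0.1, 0.0]], [[3.0, 3.0], [3.1, 3.0]],
                      d_source=[0.95, 0.90], d_target=[0.05, 0.10])
```

Monitoring both quantities guards against stopping when the discriminator is merely weak: a discriminator that outputs 0.5 on poorly aligned features still yields a large MMD.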

3.3. Fine-Tuning of the Regressor R

3.4. Implementation Details

4. Experimental Results

4.1. Datasets

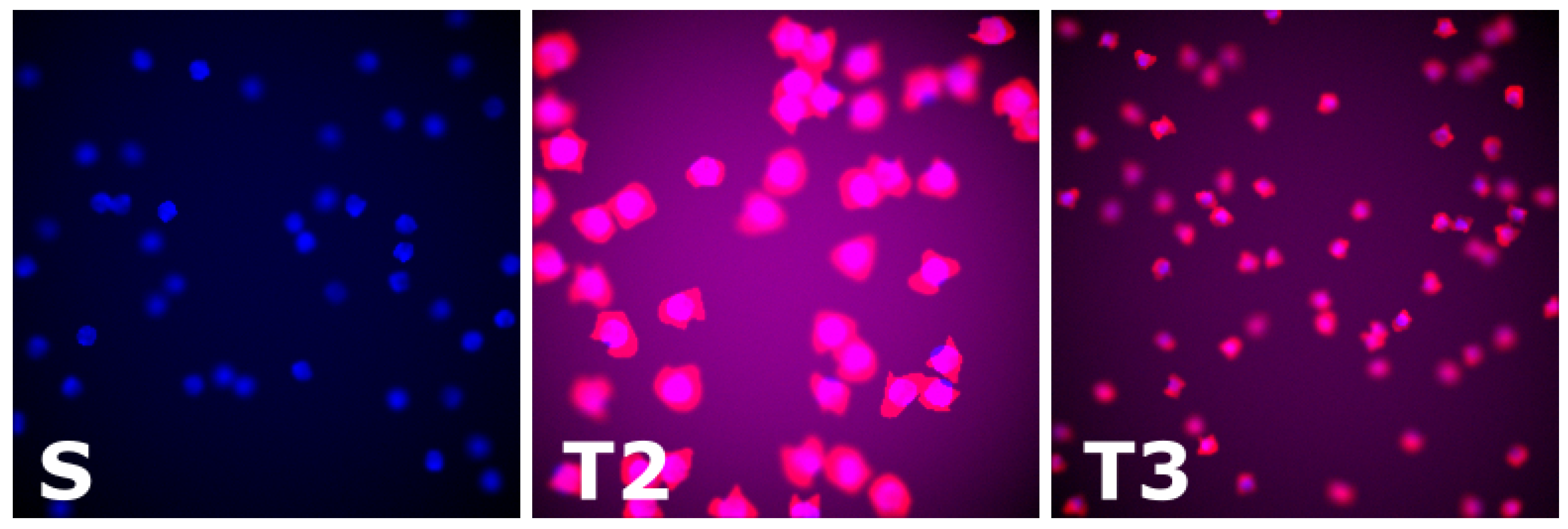

- S: it contains images of blue cells with a cell count ranging in . To generate these images, the cytoplasm option was disabled. We used this dataset as the source domain. During training, we split the dataset as follows: 55% as training set, 20% as validation set (used for early stopping during pretraining), and 25% as test set.

- T2: it contains images of red cells (cytoplasm option enabled) with a cell count ranging in as well. This dataset is used as the target domain to benchmark our approach in a scenario of covariate shift only.

- T3: similar to T2, but with a different cell count ranging in . To fit more cells in the same image, we generated smaller cells than in T2. This dataset exhibits both covariate shift and label gap.
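The 55%/20%/25% split of the source dataset S can be sketched in a few lines of Python. The random shuffle, the fixed seed, the helper name `split_dataset`, and the placeholder file names are assumptions for illustration; the text does not state how images were assigned to each subset.

```python
import random


def split_dataset(items, train_frac=0.55, val_frac=0.20, seed=0):
    """Shuffle items and split them into train/validation/test subsets.

    The test subset receives whatever remains (25% with the default fractions).
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical file names standing in for the images of dataset S.
images = [f"cell_{i:04d}.png" for i in range(200)]
train, val, test = split_dataset(images)
```

Fixing the seed keeps the three subsets reproducible across runs, so the validation set used for early stopping never leaks into the test set.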

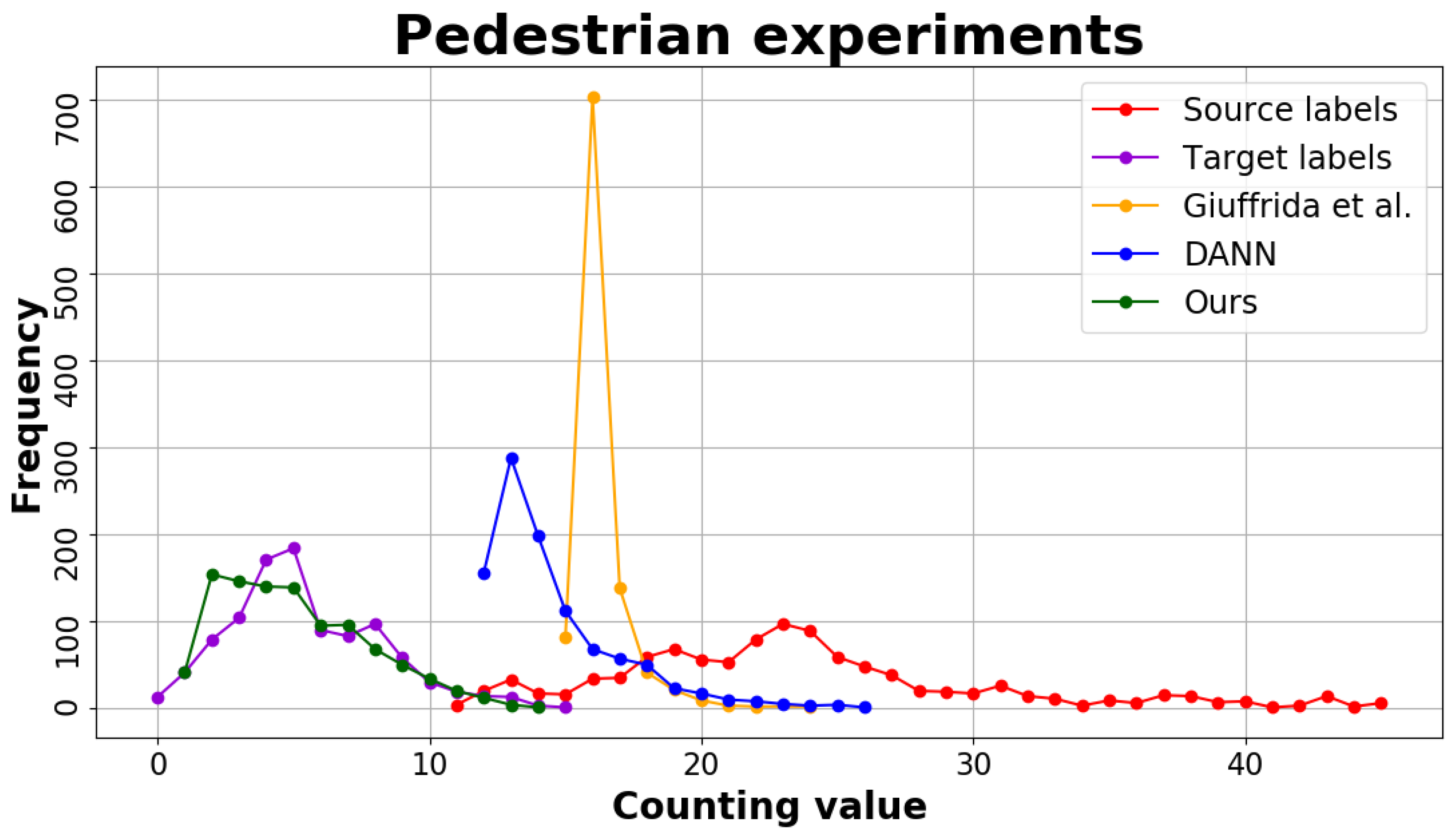

- Vidf: it contains 4000 frames with people walking towards and away from the camera, with some amount of perspective distortion. These images have a pedestrian count ranging in . We used it as the source domain for this experiment, and the training/validation/test sets are split as for the cell data.

- Vidd: it contains 4000 frames with pedestrians moving parallel to the camera plane. The number of people appearing in the scene ranges in . This dataset serves as the target domain for this experiment.

- CVPPP*: The CVPPP2017 dataset contains three subsets of Arabidopsis thaliana (named A1, A2, and A4), and tobacco (A3) images [43,44]. We used A1, A2, and A4 as source domain, i.e., excluding the tobacco plants (as in [18], we named this group of images CVPPP*). Overall, the CVPPP* dataset contains 964 images and a number of leaves ranging in . For training, we split this dataset as in [17] to perform a 4-fold cross-validation for the pretraining step.

- MM: We use the RGB Arabidopsis thaliana images of the Multi-Modal Imagery for Plant Phenotyping [45], with 576 images and a leaf count ranging in .

- Komatsuna: We use the Komatsuna dataset [46] (komatsuna is a Japanese plant), with 300 images and a leaf count ranging in .

4.2. Evaluation Metrics

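- Absolute Difference in Count [|DiC|]: $\frac{1}{N}\sum_{i=1}^{N} |y_i - \hat{y}_i|$, where $y_i$ and $\hat{y}_i$ denote the ground-truth and predicted counts of the $i$-th of $N$ test images. This metric is also known as mean absolute error;

- Difference in Count [DiC]: the mean signed difference between predicted and ground-truth counts, i.e., |DiC| computed without the absolute value;

- Mean Squared Error [MSE]: $\frac{1}{N}\sum_{i=1}^{N} (y_i - \hat{y}_i)^2$;

- Percentage Agreement [%]: $\frac{1}{N}\sum_{i=1}^{N} \mathbb{1}[y_i = \hat{y}_i]$, reported as a percentage, where $\mathbb{1}[\cdot]$ is the indicator function. This metric is similar to the accuracy used in classification.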
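The four metrics above can be computed together as in the following Python sketch. The function name `counting_metrics`, the dictionary keys, and the example counts are hypothetical. The sign convention of DiC (prediction minus ground truth) is an assumption of this sketch, and predictions are taken to be already rounded to integers.

```python
def counting_metrics(y_true, y_pred):
    """Compute |DiC|, DiC, MSE and percentage agreement for integer counts."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    # Signed differences; prediction minus ground truth is an assumed convention.
    diffs = [p - t for t, p in zip(y_true, y_pred)]
    return {
        "abs_dic": sum(abs(d) for d in diffs) / n,            # mean absolute error
        "dic": sum(diffs) / n,                                # mean signed error (bias)
        "mse": sum(d * d for d in diffs) / n,                 # mean squared error
        "agreement": 100.0 * sum(d == 0 for d in diffs) / n,  # exact matches, in %
    }


# Made-up ground-truth and predicted counts for four images.
metrics = counting_metrics(y_true=[5, 7, 9, 6], y_pred=[5, 8, 7, 6])
```

DiC complements |DiC|: a value near zero alongside a large |DiC| indicates that over- and under-counting cancel out rather than that the predictions are accurate.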

4.3. Main Results

4.3.1. Cell Counting Results

4.3.2. Pedestrian Counting Results

4.3.3. Leaf Counting Results

4.4. Ablation Study

4.5. Fine-Tuning Performance Analysis

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zhao, S.; Yue, X.; Zhang, S.; Li, B.; Zhao, H.; Wu, B.; Krishna, R.; Gonzalez, J.; Sangiovanni-Vincentelli, A.; Seshia, S.; et al. A Review of Single-Source Deep Unsupervised Visual Domain Adaptation. IEEE Trans. Neural Netw. Learn. Syst. 2020, 1–21.

2. Tachet des Combes, R.; Zhao, H.; Wang, Y.X.; Gordon, G. Domain Adaptation with Conditional Distribution Matching and Generalized Label Shift. Neural Information Processing Systems (NeurIPS). arXiv 2020, arXiv:2003.04475.

3. Long, M.; Cao, Y.; Cao, Z.; Wang, J.; Jordan, M.I. Transferable Representation Learning with Deep Adaptation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 3071–3085.

4. Di Mauro, D.; Furnari, A.; Patanè, G.; Battiato, S.; Farinella, G.M. SceneAdapt: Scene-based domain adaptation for semantic segmentation using adversarial learning. Pattern Recognit. Lett. 2020, 136, 175–182.

5. Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-Adversarial Training of Neural Networks. In Domain Adaptation in Computer Vision Applications; Springer: Cham, Switzerland, 2017; pp. 189–209.

6. Liu, A.H.; Liu, Y.C.; Yeh, Y.Y.; Wang, Y.C.F. A Unified Feature Disentangler for Multi-Domain Image Translation and Manipulation. Advances in Neural Information Processing Systems. arXiv 2018, arXiv:1809.01361.

7. Tzeng, E.; Hoffman, J.; Saenko, K.; Darrell, T. Adversarial Discriminative Domain Adaptation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; pp. 7167–7176.

8. Atapour-Abarghouei, A.; Breckon, T. Real-Time Monocular Depth Estimation Using Synthetic Data with Domain Adaptation via Image Style Transfer. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 19–21 June 2018; pp. 2800–2810.

9. Gholami, B.; Sahu, P.; Rudovic, O.; Bousmalis, K.; Pavlovic, V. Unsupervised Multi-Target Domain Adaptation: An Information Theoretic Approach. IEEE Trans. Image Process. 2020, 29, 3993–4002.

10. Shrivastava, A.; Pfister, T.; Tuzel, O.; Susskind, J.; Wang, W.; Webb, R. Learning from Simulated and Unsupervised Images through Adversarial Training. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017; pp. 2242–2251.

11. Zhuo, J.; Wang, S.; Zhang, W.; Huang, Q. Deep Unsupervised Convolutional Domain Adaptation. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 261–269.

12. Busto, P.P.; Gall, J. Open Set Domain Adaptation. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 24–27 October 2017; pp. 754–763.

13. Liu, H.; Cao, Z.; Long, M.; Wang, J.; Yang, Q. Separate to Adapt: Open Set Domain Adaptation via Progressive Separation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 2922–2931.

14. Cao, Z.; Long, M.; Wang, J.; Jordan, M.I. Partial Transfer Learning with Selective Adversarial Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 19–21 June 2018; pp. 2724–2732.

15. Cao, Z.; Ma, L.; Long, M.; Wang, J. Partial Adversarial Domain Adaptation. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 139–155.

16. You, K.; Long, M.; Cao, Z.; Wang, J.; Jordan, M.I. Universal Domain Adaptation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 2715–2724.

17. Dobrescu, A.; Giuffrida, M.V.; Tsaftaris, S.A. Leveraging Multiple Datasets for Deep Leaf Counting. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshop (ICCVW), Venice, Italy, 22–29 October 2017; pp. 2072–2079.

18. Giuffrida, M.V.; Dobrescu, A.; Doerner, P.; Tsaftaris, S.A. Leaf Counting Without Annotations Using Adversarial Unsupervised Domain Adaptation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–20 June 2019; pp. 2590–2599.

19. Puc, A.; Štruc, V.; Grm, K. Analysis of Race and Gender Bias in Deep Age Estimation Models. In Proceedings of the 2020 28th European Signal Processing Conference (EUSIPCO), Amsterdam, The Netherlands, 18–22 January 2021; pp. 830–834.

20. Kuleshov, V.; Fenner, N.; Ermon, S. Accurate uncertainties for deep learning using calibrated regression. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 2796–2804.

21. Gretton, A.; Borgwardt, K.; Rasch, M.; Schölkopf, B.; Smola, A. A Kernel Method for the Two-Sample-Problem. In Advances in Neural Information Processing Systems 19: Proceedings of the 2006 Conference, Vancouver, BC, Canada, 4–7 December 2007; MIT Press: Cambridge, MA, USA, 2007; pp. 513–520.

22. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Advances in Neural Information Processing Systems; The MIT Press: Cambridge, MA, USA, 2014; Volume 27.

23. Gopalan, R.; Ruonan, L.; Chellappa, R. Domain adaptation for object recognition: An unsupervised approach. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 999–1006.

24. Luo, Z.; Hu, J.; Deng, W.; Shen, H. Deep Unsupervised Domain Adaptation for Face Recognition. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 453–457.

25. Javanmardi, M.; Tasdizen, T. Domain adaptation for biomedical image segmentation using adversarial training. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 554–558.

26. Sun, B.; Saenko, K. Deep CORAL: Correlation Alignment for Deep Domain Adaptation. In Proceedings of the European Conference on Computer Vision (ECCV) 2016 Workshops, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 443–450.

27. Roy, S.; Siarohin, A.; Sangineto, E.; Bulo, S.R.; Sebe, N.; Ricci, E. Unsupervised Domain Adaptation Using Feature-Whitening and Consensus Loss. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

28. Kundu, J.N.; Venkat, N.; Rahul, M.V.; Babu, R.V. Universal Source-Free Domain Adaptation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 14–19 June 2020.

29. Dong, N.; Kampffmeyer, M.; Liang, X.; Wang, Z.; Dai, W.; Xing, E. Unsupervised Domain Adaptation for Automatic Estimation of Cardiothoracic Ratio. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2018, Granada, Spain, 16–20 September 2018; pp. 544–552.

30. Hossain, M.A.; Reddy, M.K.K.; Cannons, K.; Xu, Z.; Wang, Y. Domain Adaptation in Crowd Counting. In Proceedings of the 2020 17th Conference on Computer and Robot Vision (CRV), Ottawa, ON, Canada, 13–15 May 2020; pp. 150–157.

31. Sindagi, V.A.; Patel, V.M. A survey of recent advances in CNN-based single image crowd counting and density estimation. Pattern Recognit. Lett. 2018, 107, 3–16.

32. Kuhnke, F.; Ostermann, J. Deep Head Pose Estimation Using Synthetic Images and Partial Adversarial Domain Adaption for Continuous Label Spaces. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 10163–10172.

33. Takahashi, R.; Hashimoto, A.; Sonogashira, M.; Iiyama, M. Partially-Shared Variational Auto-encoders for Unsupervised Domain Adaptation with Target Shift. In Proceedings of the European Conference on Computer Vision—ECCV 2020, Virtual, 23–28 August 2020; pp. 1–17.

34. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

35. Nowozin, S.; Cseke, B.; Tomioka, R. f-GAN: Training Generative Neural Samplers using Variational Divergence Minimization. In Advances in Neural Information Processing Systems; Lee, D., Sugiyama, M., Luxburg, U., Guyon, I., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2016; Volume 29.

36. Mao, X.; Li, Q.; Xie, H.; Lau, R.Y.K.; Wang, Z.; Smolley, S.P. Least Squares Generative Adversarial Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 24–27 October 2017; pp. 2813–2821.

37. Razavi, A.; Oord, A.V.D.; Poole, B.; Vinyals, O. Preventing Posterior Collapse with delta-VAEs. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

38. Gretton, A.; Borgwardt, K.M.; Rasch, M.J.; Schölkopf, B.; Smola, A. A Kernel Two-Sample Test. J. Mach. Learn. Res. 2012, 13, 723–773.

39. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems 32; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 8024–8035.

40. Lempitsky, V.; Zisserman, A. Learning to count objects in images. Adv. Neural Inf. Process. Syst. 2010, 23, 1324–1332.

41. Lehmussola, A.; Ruusuvuori, P.; Selinummi, J.; Huttunen, H.; Yli-Harja, O. Computational Framework for Simulating Fluorescence Microscope Images With Cell Populations. IEEE Trans. Med. Imaging 2007, 26, 1010–1016.

42. Chan, A.B.; Vasconcelos, N. Counting People With Low-Level Features and Bayesian Regression. IEEE Trans. Image Process. 2012, 21, 2160–2177.

43. Bell, J.; Dee, H.M. Aberystwyth Leaf Evaluation Dataset. Zenodo. 2016. Available online: https://zenodo.org/record/168158 (accessed on 28 August 2021).

44. Minervini, M.; Fischbach, A.; Scharr, H.; Tsaftaris, S.A. Finely-grained annotated datasets for image-based plant phenotyping. Pattern Recognit. Lett. 2016, 81, 80–89.

45. Cruz, J.; Yin, X.; Liu, X.; Imran, S.; Morris, D.; Kramer, D.; Chen, J. Multi-modality Imagery Database for Plant Phenotyping. Mach. Vis. Appl. 2016, 27, 735–749.

46. Uchiyama, H.; Sakurai, S.; Mishima, M.; Arita, D.; Okayasu, T.; Shimada, A.; Taniguchi, R. An Easy-to-Setup 3D Phenotyping Platform for KOMATSUNA Dataset. In Proceedings of the 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), Venice, Italy, 24–27 October 2017; pp. 2038–2045.

47. Giuffrida, M.V.; Doerner, P.; Tsaftaris, S.A. Pheno-Deep Counter: A unified and versatile deep learning architecture for leaf counting. Plant J. 2018, 96, 880–890.

48. Scharr, H.; Minervini, M.; French, A.P.; Klukas, C.; Kramer, D.M.; Liu, X.; Luengo, I.; Pape, J.M.; Polder, G.; Vukadinovic, D.; et al. Leaf segmentation in plant phenotyping: A collation study. Mach. Vis. Appl. 2016, 27, 585–606.

49. Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein Generative Adversarial Networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 7–9 August 2017; Volume 70, pp. 214–223.

50. Gulrajani, I.; Ahmed, F.; Arjovsky, M.; Dumoulin, V.; Courville, A.C. Improved Training of Wasserstein GANs. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

Table 1. Architecture of the regressor and the discriminator.

| Layer | Input Size | Output Size | Activation |
|---|---|---|---|
| Regressor | | | |
| Dense | 2048 | 1024 | ReLU |
| Dense | 1024 | 512 | ReLU |
| Dense | 512 | 1 | Linear |
| Discriminator | | | |
| Dense | 2048 | 1024 | LeakyReLU |
| Dense | 1024 | 512 | LeakyReLU |
| Dense | 512 | 1 | Sigmoid |

| Method | Target | \|DiC\| ↓ | DiC ↓ | MSE ↓ | % ↑ |
|---|---|---|---|---|---|
| UB | T2 | | | | |
| DANN [5] | T2 | | | | |
| Giuffrida et al. [18] | T2 | | | | |
| Ours | T2 | | | | |
| LB | T2 | | | | |
| UB | T3 | | | | |
| DANN [5] | T3 | | | | |
| Giuffrida et al. [18] | T3 | | | | |
| Ours | T3 | | | | |
| LB | T3 | | | | |

| Method | \|DiC\| ↓ | DiC ↓ | MSE ↓ | % ↑ |
|---|---|---|---|---|
| UB | | | | |
| DANN [5] | | | | |
| Giuffrida et al. [18] | | | | |
| Ours | | | | |
| LB | | | | |

| Method | \|DiC\| ↓ | DiC ↓ | MSE ↓ | % ↑ |
|---|---|---|---|---|
| Intra-species: CVPPP* → MM | | | | |
| UB | | | | |
| DANN [5] | | | | |
| Giuffrida et al. [18] | | | | |
| Ours | | | | |
| LB | | | | |
| Inter-species: CVPPP* → Komatsuna | | | | |
| UB | | | | |
| DANN [5] | | | | |
| Giuffrida et al. [18] | | | | |
| Ours | | | | |
| LB | | | | |

| GGO | MMD | MSE ↓ | % ↑ | | | |
|---|---|---|---|---|---|---|
| Cell | UCSD | Cell | UCSD | | | |
| ✓ | - | ✓ | | | | |
| - | ✓ | ✓ | | | | |
| ✓ | ✓ | - | | | | |
| ✓ | ✓ | ✓ | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Litrico, M.; Battiato, S.; Tsaftaris, S.A.; Giuffrida, M.V. Semi-Supervised Domain Adaptation for Holistic Counting under Label Gap. J. Imaging 2021, 7, 198. https://doi.org/10.3390/jimaging7100198
