EXAM: A Framework of Learning Extreme and Moderate Embeddings for Person Re-ID

Abstract

1. Introduction

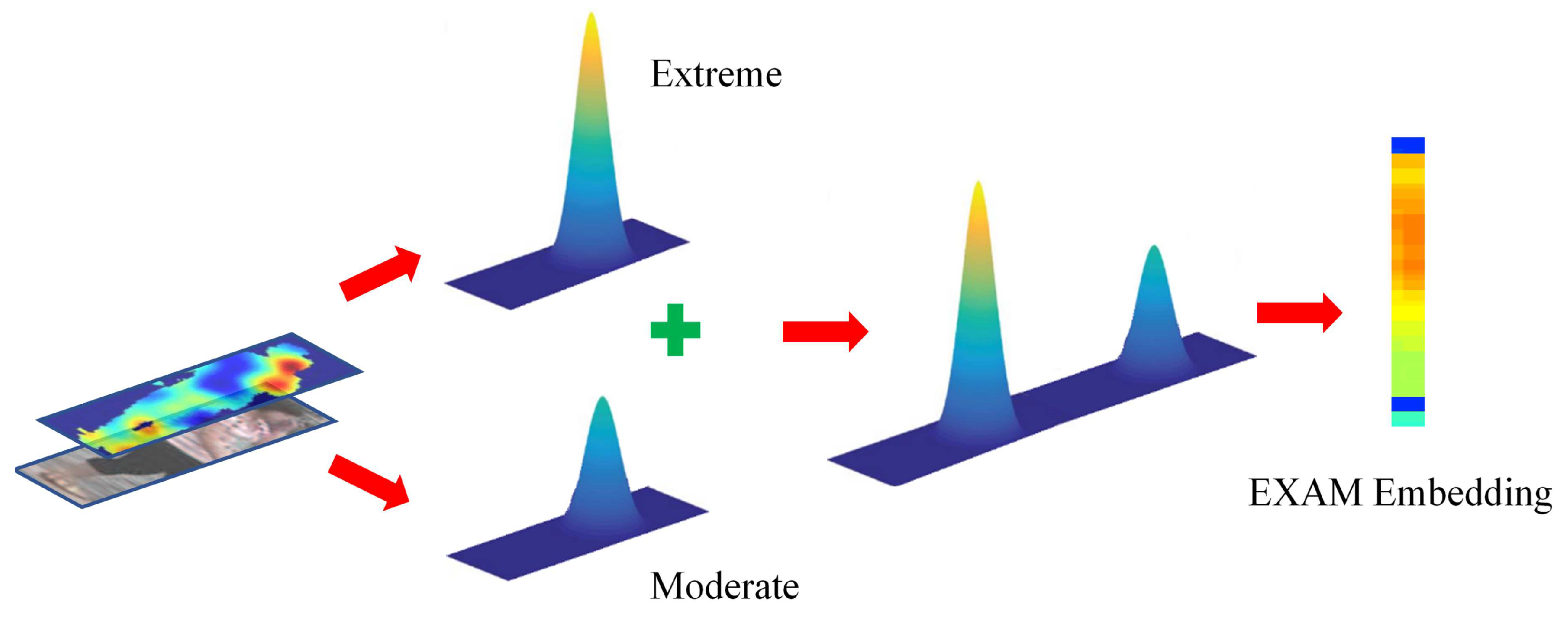

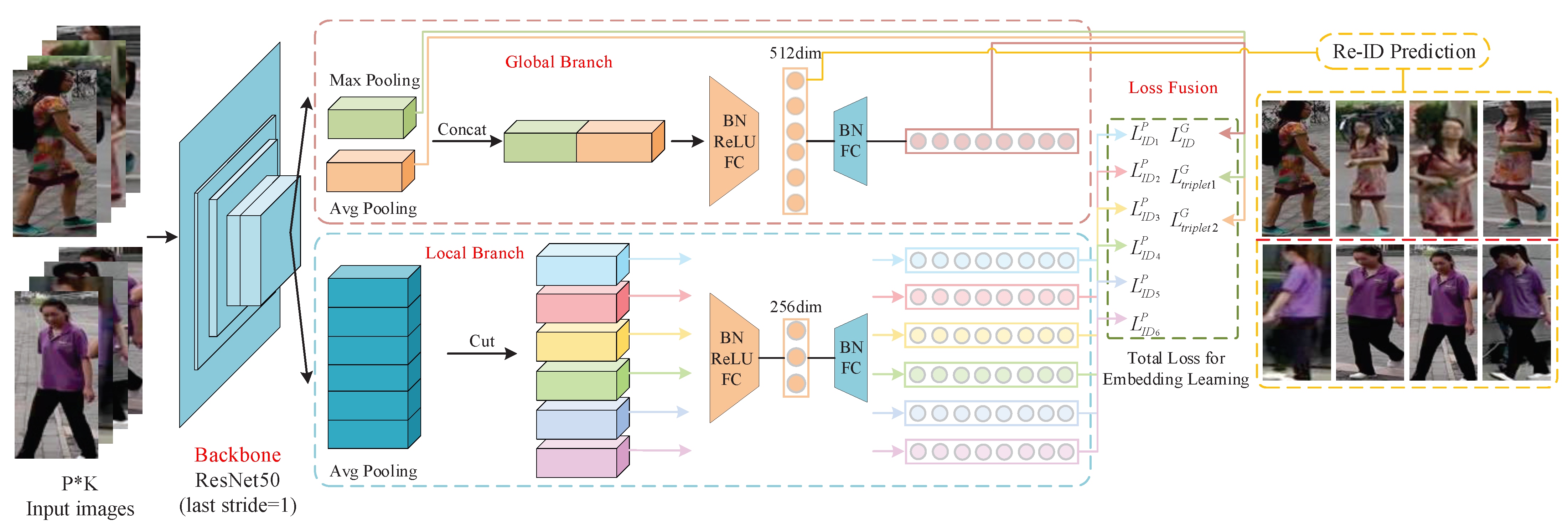

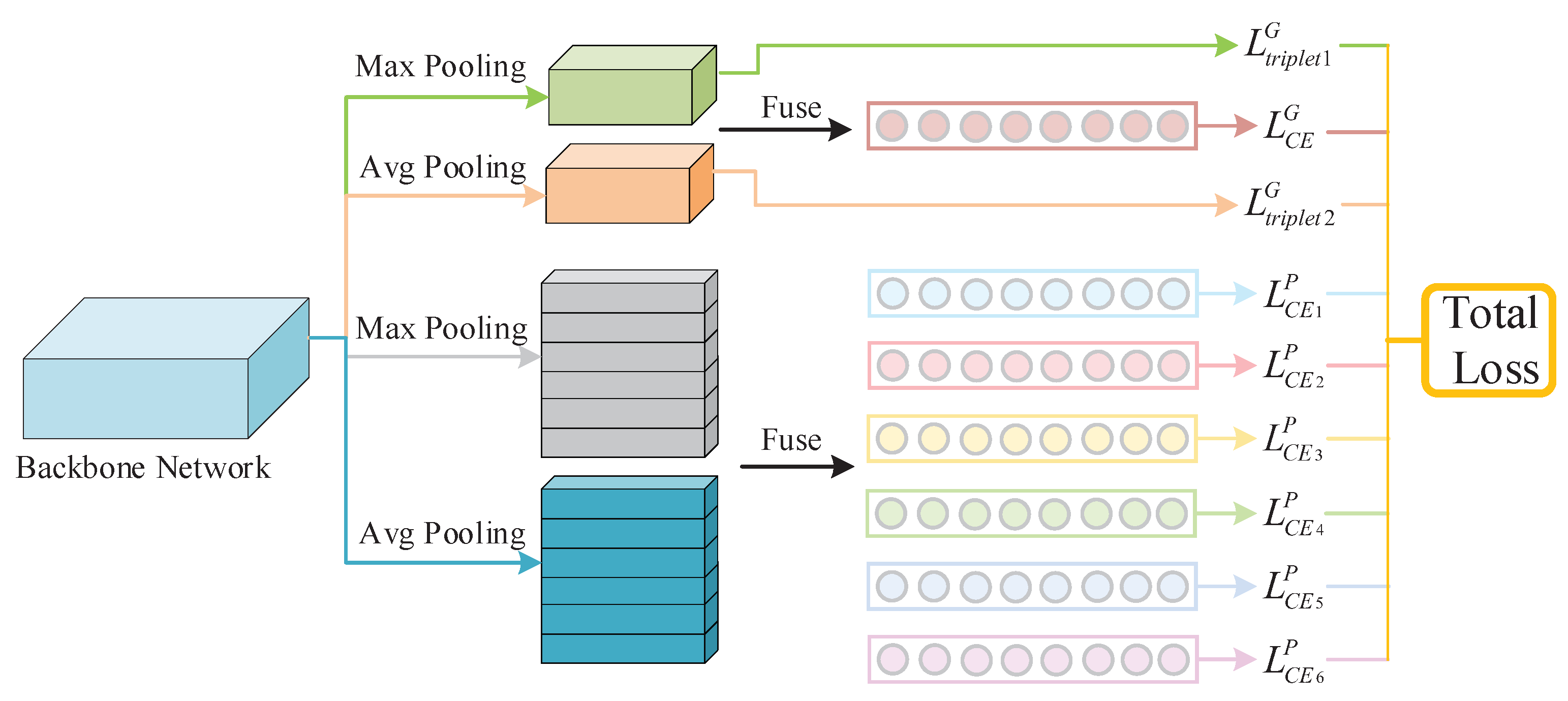

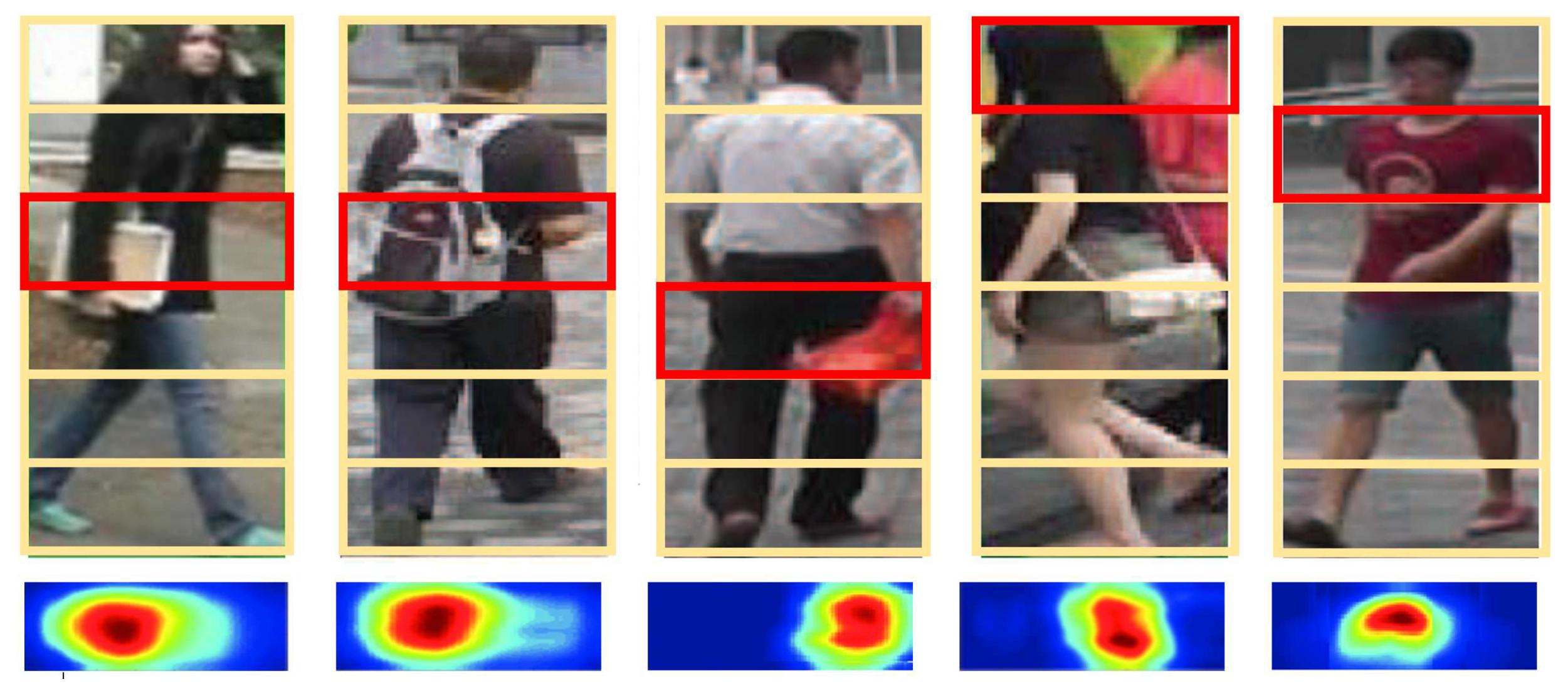

- We propose EXAM, a framework for learning extreme and moderate embeddings for person Re-ID. It is an end-to-end network that provides attention cues for constructing discriminative body representations.

- EXAM has global and local branches. The global extreme and moderate embeddings reflect the saliency and commonality of the full-body appearance, while the local moderate embeddings capture smoothness and local consistency.

- With multiple loss functions integrated, deducing attention from the EXAM embeddings provides deep supervision for discriminative feature learning. The two procedures, attention deduction and feature learning, are trained jointly and benefit from each other.
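The global and local embeddings listed above can be illustrated with a small pooling sketch. It rests on assumptions that this text does not confirm: the "extreme" embedding is taken to be a channel-wise maximum over the feature map, the "moderate" embedding a channel-wise mean, and the local branch is assumed to average over equal horizontal stripes. All function names are hypothetical.

```python
from typing import List

# A feature map indexed as [channel][row][col]; plain lists keep the sketch dependency-free.
FeatureMap = List[List[List[float]]]


def extreme_embedding(fmap: FeatureMap) -> List[float]:
    """Channel-wise maximum over all positions (assumed meaning of 'extreme')."""
    return [max(max(row) for row in channel) for channel in fmap]


def moderate_embedding(fmap: FeatureMap) -> List[float]:
    """Channel-wise mean over all positions (assumed meaning of 'moderate')."""
    out = []
    for channel in fmap:
        values = [v for row in channel for v in row]
        out.append(sum(values) / len(values))
    return out


def local_moderate_embeddings(fmap: FeatureMap, num_stripes: int) -> List[List[float]]:
    """Average each channel inside equal horizontal stripes (assumed local branch)."""
    height = len(fmap[0])
    if height % num_stripes != 0:
        raise ValueError("feature map height must be divisible by num_stripes")
    step = height // num_stripes
    stripes = []
    for s in range(num_stripes):
        # Keep only the rows of stripe s for every channel, then pool them.
        stripe = [channel[s * step:(s + 1) * step] for channel in fmap]
        stripes.append(moderate_embedding(stripe))
    return stripes


# Toy map: 2 channels, 4 rows, 2 columns.
toy = [
    [[1, 2], [3, 4], [5, 6], [7, 8]],
    [[0, 0], [0, 8], [0, 0], [0, 0]],
]
global_extreme = extreme_embedding(toy)
global_moderate = moderate_embedding(toy)
local_moderate = local_moderate_embeddings(toy, num_stripes=2)
```

In the second toy channel a single strong activation dominates the maximum but barely moves the mean, which is the kind of saliency versus commonality contrast the bullets describe.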

2. Related Work

2.1. Feature Representation Learning

2.2. Attention Cues

3. The Proposed Method

3.1. Network Architecture

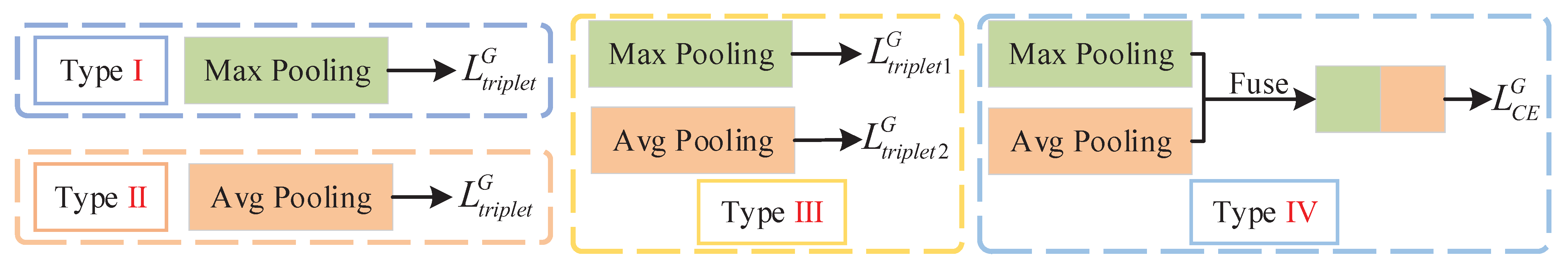

3.2. Multiple Loss Supervision

4. Experiments

4.1. Platform Settings

4.2. Datasets

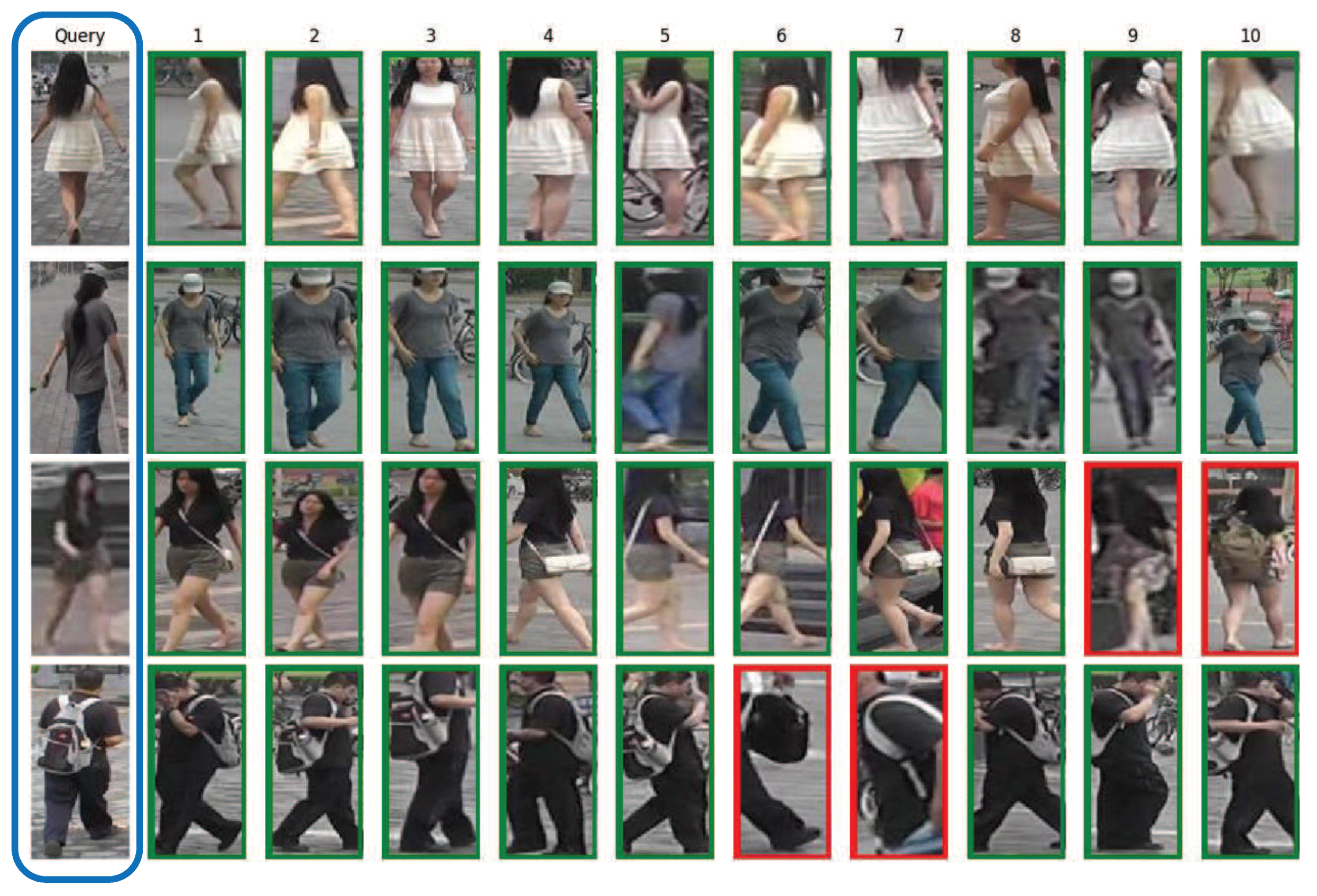

4.3. Comparison with State-of-the-Art Methods

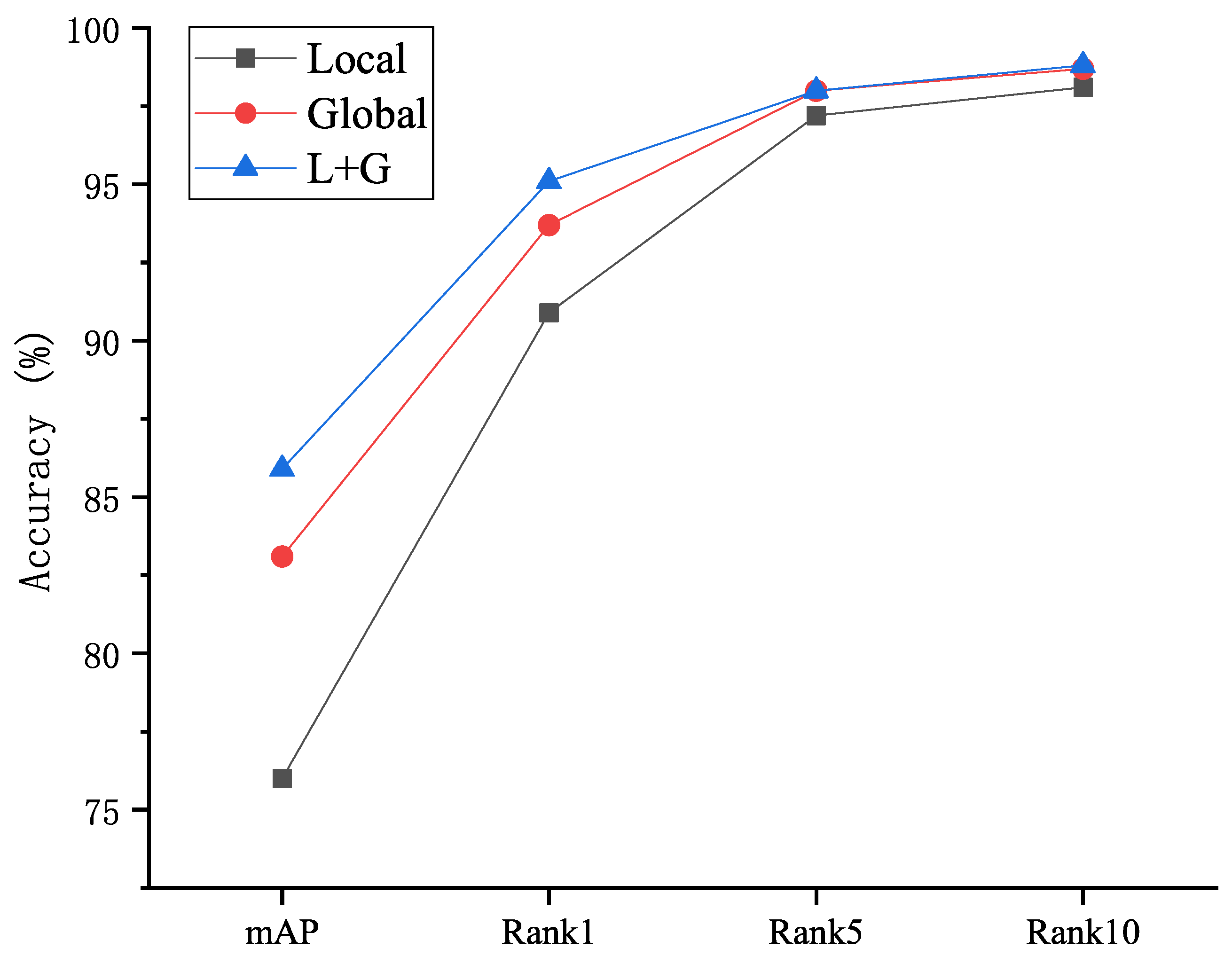

4.4. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, H.; Kuang, Z.; Yu, Z.; Luo, J. Structure alignment of attributes and visual features for cross-dataset person re-identification. Pattern Recognit. 2020, 106, 107414.

2. Zhao, D.; Wang, H.; Yin, H.; Yu, Z.; Li, H. Person re-identification by integrating metric learning and support vector machine. Signal Process. 2020, 166, 107277.

3. Li, H.; Chen, Y.; Tao, D.; Yu, Z.; Qi, G. Attribute-Aligned Domain-Invariant Feature Learning for Unsupervised Domain Adaptation Person Re-Identification. IEEE Trans. Inf. Forensics Secur. 2021, 16, 1480–1494.

4. Li, H.; Xu, J.; Zhu, J.; Tao, D.; Yu, Z. Top distance regularized projection and dictionary learning for person re-identification. Inf. Sci. 2019, 502, 472–491.

5. Li, H.; Zhou, W.; Yu, Z.; Yang, B.; Jin, H. Person re-identification with dictionary learning regularized by stretching regularization and label consistency constraint. Neurocomputing 2020, 379, 356–369.

6. Li, H.; Yan, S.; Yu, Z.; Tao, D. Attribute-Identity Embedding and Self-supervised Learning for Scalable Person Re-Identification. IEEE Trans. Circ. Syst. Video Technol. 2019, 30, 3472–3485.

7. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

8. Zhao, Y.; Lin, J.; Qi, X.; Xu, X. HPILN: A feature learning framework for cross-modality person re-identification. IET Image Process. 2019, 13, 2897–2904.

9. Zhang, X.; Luo, H.; Fan, X.; Xiang, W.; Sun, Y.; Xiao, Q.; Jiang, W.; Zhang, C.; Sun, J. AlignedReID: Surpassing Human-Level Performance in Person Re-Identification. arXiv 2017, arXiv:1711.08184.

10. Zhao, H.; Tian, M.; Sun, S.; Shao, J.; Yan, J.; Yi, S.; Wang, X.; Tang, X. Spindle Net: Person Re-Identification with Human Body Region Guided Feature Decomposition and Fusion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1077–1085.

11. Song, C.; Huang, Y.; Ouyang, W.; Wang, L. Mask-guided contrastive attention model for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1179–1188.

12. Ning, X.B.; Yuan, G.; Yizhe, Z.; Christian, P. Second-Order Non-Local Attention Networks for Person Re-Identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 3760–3769.

13. Shuang, L.; Slawomir, B.; Peter, C.; Xiaogang, W. Diversity regularized spatiotemporal attention for video-based person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 369–378.

14. Chen, T.; Ding, S.; Xie, J.; Yuan, Y.; Chen, W.; Yang, Y.; Ren, Z.; Wang, Z. Abd-net: Attentive but diverse person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8351–8361.

15. Li, W.; Zhao, R.; Xiao, T.; Wang, X. DeepReID: Deep Filter Pairing Neural Network for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 152–159.

16. Yi, D.; Lei, Z.; Liao, S.; Li, S.Z. Deep Metric Learning for Person Re-identification. In Proceedings of the International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 34–39.

17. Sun, Y.; Zheng, L.; Yang, Y.; Tian, Q.; Wang, S. Beyond Part Models: Person Retrieval with Refined Part Pooling (and A Strong Convolutional Baseline). In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 480–496.

18. Tao, D.; Guo, Y.; Yu, B.; Pang, J.; Yu, Z. Deep Multi-View Feature Learning for Person Re-Identification. IEEE Trans. Circ. Syst. Video Technol. 2018, 28, 2657–2666.

19. Zheng, Z.; Zheng, L.; Yang, Y. A Discriminatively Learned CNN Embedding for Person Reidentification. ACM Trans. Multimed. Comput. Commun. Appl. 2017, 14, 1–20.

20. Li, D.; Chen, X.; Zhang, Z.; Huang, K. Learning Deep Context-Aware Features Over Body and Latent Parts for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 384–393.

21. Saquib Sarfraz, M.; Schumann, A.; Eberle, A.; Stiefelhagen, R. A pose-sensitive embedding for person re-identification with expanded cross neighborhood re-ranking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 420–429.

22. Wei, L.; Zhang, S.; Yao, H.; Gao, W.; Tian, Q. GLAD: Global-Local-Alignment Descriptor for Scalable Person Re-Identification. IEEE Trans. Multimed. 2019, 21, 986–999.

23. Yao, H.; Zhang, S.; Hong, R.; Zhang, Y.; Xu, C.; Tian, Q. Deep Representation Learning with Part Loss for Person Re-Identification. IEEE Trans. Image Process. 2019, 28, 2860–2871.

24. Jiang, D.; Qi, G.; Hu, G.; Mazur, N.; Zhu, Z.; Wang, D. A residual neural network based method for the classification of tobacco cultivation regions using near-infrared spectroscopy sensors. Infrared Phys. Technol. 2020, 111, 103494.

25. Li, W.; Zhu, X.; Gong, S. Harmonious Attention Network for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2285–2294.

26. Wang, C.; Zhang, Q.; Huang, C.; Liu, W.; Wang, X. Mancs: A multi-task attentional network with curriculum sampling for person re-identification. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 365–381.

27. Chen, G.; Lin, C.; Ren, L.; Lu, J.; Zhou, J. Self-Critical Attention Learning for Person Re-Identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 9637–9646.

28. Binghui, C.; Weihong, D.; Jiani, H. Mixed high-order attention network for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 371–381.

29. Hou, R.; Ma, B.; Chang, H.; Gu, X.; Shan, S.; Chen, X. Interaction-And-Aggregation Network for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9317–9326.

30. Zhou, S.; Wang, F.; Huang, Z.; Wang, J. Discriminative feature learning with consistent attention regularization for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8040–8049.

31. Hu, G.; Gao, Q. A 3D gesture recognition framework based on hierarchical visual attention and perceptual organization models. In Proceedings of the IEEE 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 1411–1414.

32. Bai, X.; Yang, M.; Huang, T.; Dou, Z.; Yu, R.; Xu, Y. Deep-Person: Learning discriminative deep features for person Re-Identification. Pattern Recognit. 2020, 98, 107036.

33. Hu, G.; Dixit, C.; Luong, D.; Gao, Q.; Cheng, L. Salience Guided Pooling in Deep Convolutional Networks. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 360–364.

34. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

35. Hermans, A.; Beyer, L.; Leibe, B. In Defense of the Triplet Loss for Person Re-Identification. arXiv 2017, arXiv:1703.07737.

36. Zhong, Z.; Zheng, L.; Kang, G.; Li, S.; Yang, Y. Random Erasing Data Augmentation. arXiv 2017, arXiv:1708.04896.

37. Felzenszwalb, P.; McAllester, D.; Ramanan, D. A discriminatively trained, multiscale, deformable part model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

38. Zhou, K.; Yang, Y.; Cavallaro, A.; Xiang, T. Omni-Scale Feature Learning for Person Re-Identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 3702–3712.

39. Sun, Y.; Zheng, L.; Deng, W.; Wang, S. Svdnet for pedestrian retrieval. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3800–3808.

40. Huang, H.; Li, D.; Zhang, Z.; Chen, X.; Huang, K. Adversarially occluded samples for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5098–5107.

41. Du, Y.; Yuan, C.; Li, B.; Zhao, L.; Li, Y.; Hu, W. Interaction-aware spatio-temporal pyramid attention networks for action classification. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 373–389.

42. Yang, W.; Huang, H.; Zhang, Z.; Chen, X.; Huang, K.; Zhang, S. Towards Rich Feature Discovery with Class Activation Maps Augmentation for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1389–1398.

43. Ustinova, E.; Ganin, Y.; Lempitsky, V. Multi-region bilinear convolutional neural networks for person re-identification. In Proceedings of the IEEE International Conference on Advanced Video and Signal Based Surveillance, Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

44. Zhao, L.; Li, X.; Zhuang, Y.; Wang, J. Deeply-Learned Part-Aligned Representations for Person Re-Identification. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3219–3228.

45. Su, C.; Li, J.; Zhang, S.; Xing, J.; Gao, W.; Tian, Q. Pose-Driven Deep Convolutional Model for Person Re-Identification. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3960–3969.

46. Xu, J.; Zhao, R.; Zhu, F.; Wang, H.; Ouyang, W. Attention-Aware Compositional Network for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2119–2128.

47. Tay, C.P.; Roy, S.; Yap, K.H. AANet: Attribute Attention Network for Person Re-Identifications. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7134–7143.

48. Quan, R.; Dong, X.; Wu, Y.; Zhu, L.; Yang, Y. Auto-ReID: Searching for a Part-Aware ConvNet for Person Re-Identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 3750–3759.

49. Chang, X.; Hospedales, T.M.; Xiang, T. Multi-Level Factorisation Net for Person Re-Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2109–2118.

50. Si, J.; Zhang, H.; Li, C.G.; Kuen, J.; Kong, X.; Kot, A.C.; Wang, G. Dual attention matching network for context-aware feature sequence based person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5363–5372.

51. Xiong, F.; Xiao, Y.; Cao, Z.; Gong, K.; Fang, Z.; Zhou, J.T. Towards good practices on building effective cnn baseline model for person re-identification. arXiv 2018, arXiv:1807.11042.

52. Zheng, Z.; Zheng, L.; Yang, Y. Pedestrian Alignment Network for Large-scale Person Re-Identification. IEEE Trans. Circ. Syst. Video Technol. 2019, 29, 3037–3045.

53. Chen, Y.; Zhu, X.; Gong, S. Person re-identification by deep learning multi-scale representations. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2590–2600.

54. Wang, Y.; Wang, L.; You, Y.; Zou, X.; Chen, V.; Li, S.; Huang, G.; Hariharan, B.; Weinberger, K.Q. Resource aware person re-identification across multiple resolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8042–8051.

55. Zheng, Z.; Yang, X.; Yu, Z.; Zheng, L.; Yang, Y.; Kautz, J. Joint discriminative and generative learning for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2138–2147.

| Method | Rank1 | Rank2 | Rank3 | mAP |
|---|---|---|---|---|
| SVDNet [39] | 82.3 | 92.3 | 95.2 | 62.1 |
| MGCAM [11] | 83.7 | - | - | 74.3 |
| Triplet Loss [35] | 84.9 | 94.2 | - | 69.1 |
| AOS [40] | 86.4 | - | - | 70.4 |
| Dual [41] | 91.4 | - | - | 76.6 |
| Mancs [26] | 93.1 | - | - | 82.3 |
| CAMA [42] | 94.7 | - | - | 84.5 |
| MultiRegion [43] | 66.4 | 85.0 | 90.2 | 41.2 |
| PAR [44] | 81.0 | 92.0 | 94.7 | 63.4 |
| PDC [45] | 84.4 | 92.7 | 94.9 | 63.4 |
| AACN [46] | 85.9 | - | - | 66.9 |
| HA-CNN [25] | 91.2 | - | - | 75.7 |
| PCB [17] | 92.3 | 97.2 | 98.2 | 77.4 |
| PCB+RPP [17] | 93.8 | 97.5 | 98.5 | 81.6 |
| AANet [47] | 93.9 | - | 98.5 | 83.4 |
| Auto-ReID [48] | 94.5 | - | - | 85.1 |
| OSNet [38] | 94.8 | - | - | 84.9 |
| CAR [30] | 96.1 | - | - | 84.7 |
| EXAM | 95.1 | 98.0 | 98.8 | 85.9 |
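The comparison tables report Rank-k accuracy and mAP. As a reminder of how these retrieval metrics are commonly computed from a query-to-gallery distance matrix, here is a minimal sketch. The function name `evaluate_retrieval` is hypothetical, and the sketch omits the same-camera filtering that standard Re-ID protocols usually apply, so it illustrates the metric definitions rather than the evaluation code behind the reported numbers.

```python
from typing import Dict, List, Sequence, Tuple


def evaluate_retrieval(
    distances: List[List[float]],
    query_ids: Sequence[int],
    gallery_ids: Sequence[int],
    ks: Tuple[int, ...] = (1, 5),
) -> Tuple[Dict[int, float], float]:
    """Return ({k: Rank-k accuracy}, mAP) for a query x gallery distance matrix."""
    hits_at_k = {k: 0 for k in ks}
    ap_sum = 0.0
    valid_queries = 0
    for q, row in enumerate(distances):
        # Gallery indices ordered from most to least similar (smallest distance first).
        order = sorted(range(len(row)), key=lambda g: row[g])
        matches = [gallery_ids[g] == query_ids[q] for g in order]
        if not any(matches):
            continue  # a query without any true match is skipped
        valid_queries += 1
        first_hit = matches.index(True)
        for k in ks:
            if first_hit < k:
                hits_at_k[k] += 1
        # Average precision: mean of the precision values at each true-match position.
        found = 0
        precision_sum = 0.0
        for rank, is_match in enumerate(matches, start=1):
            if is_match:
                found += 1
                precision_sum += found / rank
        ap_sum += precision_sum / found
    if valid_queries == 0:
        raise ValueError("no query has a matching gallery identity")
    rank_k = {k: hits_at_k[k] / valid_queries for k in ks}
    return rank_k, ap_sum / valid_queries


# Toy example: two queries (identities 7 and 8) against a gallery of three images.
toy_distances = [[0.1, 0.5, 0.3], [0.2, 0.9, 0.4]]
rank_k, mean_ap = evaluate_retrieval(toy_distances, [7, 8], [7, 8, 7], ks=(1, 3))
```

In the toy example the first query retrieves both of its matches first, while the second finds its only match at position three, so Rank1 counts one of the two queries and Rank3 counts both.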

| Method | Rank1 | mAP |
|---|---|---|
| SVDNet [39] | 76.7 | 56.8 |
| AOS [40] | 79.2 | 62.1 |
| MLFN [49] | 81.0 | 62.8 |
| DuATM [50] | 81.8 | 64.6 |
| PCB+RPP [17] | 83.3 | 69.2 |
| PSE+ECN [21] | 84.5 | 75.7 |
| GP-reid [51] | 85.2 | 72.8 |
| CAMA [42] | 85.8 | 72.9 |
| CAR [30] | 86.3 | 73.1 |
| IANet [29] | 87.1 | 73.4 |
| EXAM | 87.4 | 76.0 |

| Method | Labeled Rank1 | Labeled mAP | Detected Rank1 | Detected mAP |
|---|---|---|---|---|
| PAN [52] | 36.9 | 35.0 | 36.3 | 34.0 |
| SVDNet [39] | 40.9 | 37.8 | 41.5 | 37.3 |
| DPFL [53] | 43.0 | 40.5 | 40.7 | 37.0 |
| HA-CNN [25] | 44.4 | 41.0 | 41.7 | 38.6 |
| MLFN [49] | 54.7 | 49.2 | 52.8 | 47.8 |
| DaRe+RE [54] | 66.1 | 61.6 | 63.3 | 59.0 |
| PCB+RPP [17] | - | - | 63.7 | 57.5 |
| Mancs [26] | 69.0 | 63.9 | 65.5 | 60.5 |
| DG-Net [55] | - | - | 65.6 | 61.1 |
| EXAM | 73.9 | 68.6 | 69.2 | 65.0 |

| Variant | Enabled loss terms (✓) among Extreme, Moderate, Fusion × Triplet, CE (column assignment lost) | Rank1 (%) | mAP (%) |
|---|---|---|---|
| Type1 | ✓ | 93.4 | 82.8 |
| Type2 | ✓ | 93.2 | 82.1 |
| Type3 | ✓ ✓ | 93.5 | 82.8 |
| Type4 | ✓ | 94.6 | 84.4 |
| Type5 | ✓ ✓ ✓ | 92.6 | 81.2 |
| Type6 | ✓ ✓ ✓ | 94.9 | 85.1 |
| Type7 | ✓ ✓ | 94.1 | 84.8 |
| Type8 | ✓ ✓ ✓ | 94.2 | 84.0 |
| EXAM | ✓ ✓ ✓ | 95.1 | 85.9 |
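The ablation toggles triplet and cross-entropy (CE) terms on the extreme, moderate, and fusion embeddings. The sketch below shows one way such a switchable objective could be assembled for a single anchor sample. It is an illustration under assumptions: the terms are summed with equal weight, the triplet margin of 0.3 is a placeholder, and the names `combined_loss` and `enabled` are hypothetical. None of these choices are taken from this text.

```python
import math
from typing import Dict, List, Set, Tuple


def cross_entropy(logits: List[float], label: int) -> float:
    """Softmax cross-entropy for one sample, using a stable log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


def euclidean(a: List[float], b: List[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin: float = 0.3) -> float:
    """Hinge on d(anchor, positive) - d(anchor, negative) + margin (margin is assumed)."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


def combined_loss(branches: Dict[str, dict], enabled: Set[Tuple[str, str]]) -> float:
    """Sum the enabled (branch, loss) terms with equal weight (an assumption)."""
    total = 0.0
    for name, batch in branches.items():
        if (name, "triplet") in enabled:
            total += triplet_loss(batch["anchor"], batch["positive"], batch["negative"])
        if (name, "ce") in enabled:
            total += cross_entropy(batch["logits"], batch["label"])
    return total


# Toy data for one branch; a real setup would hold one entry per embedding type.
toy_branches = {
    "extreme": {
        "anchor": [0.0, 0.0],
        "positive": [3.0, 4.0],   # distance 5 from the anchor
        "negative": [0.0, 1.0],   # distance 1 from the anchor
        "logits": [0.0, 0.0],
        "label": 0,
    }
}
loss_both = combined_loss(toy_branches, {("extreme", "triplet"), ("extreme", "ce")})
loss_ce_only = combined_loss(toy_branches, {("extreme", "ce")})
```

Representing each ablation variant as a set of `(branch, loss)` pairs keeps the switchable structure explicit, with each row of the table corresponding to a different `enabled` set.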

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qi, G.; Hu, G.; Wang, X.; Mazur, N.; Zhu, Z.; Haner, M. EXAM: A Framework of Learning Extreme and Moderate Embeddings for Person Re-ID. J. Imaging 2021, 7, 6. https://doi.org/10.3390/jimaging7010006
