A Comparative Study of Two State-of-the-Art Feature Selection Algorithms for Texture-Based Pixel-Labeling Task of Ancient Documents

Abstract

1. Introduction

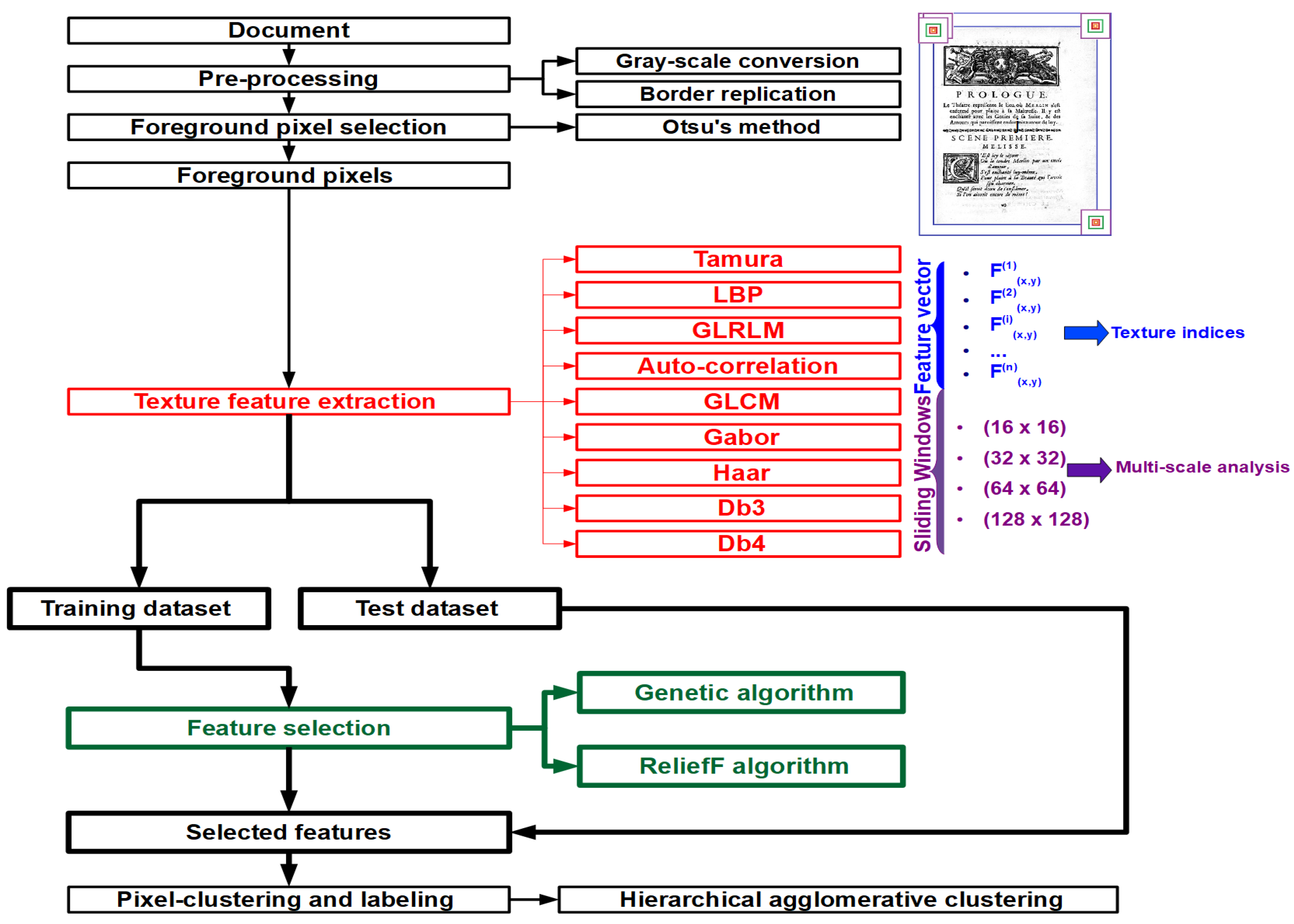

2. Texture Features

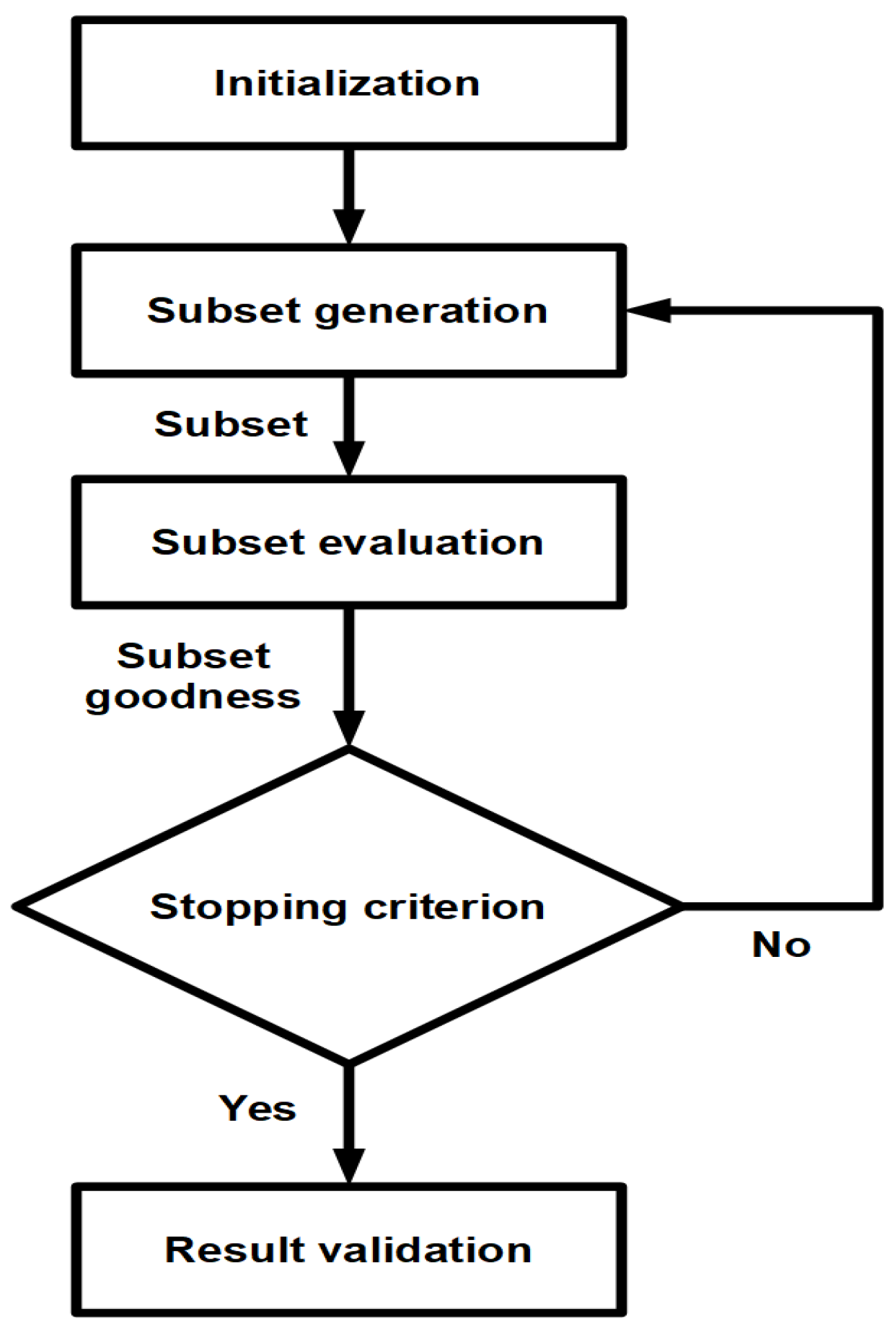

3. Feature Selection Algorithms

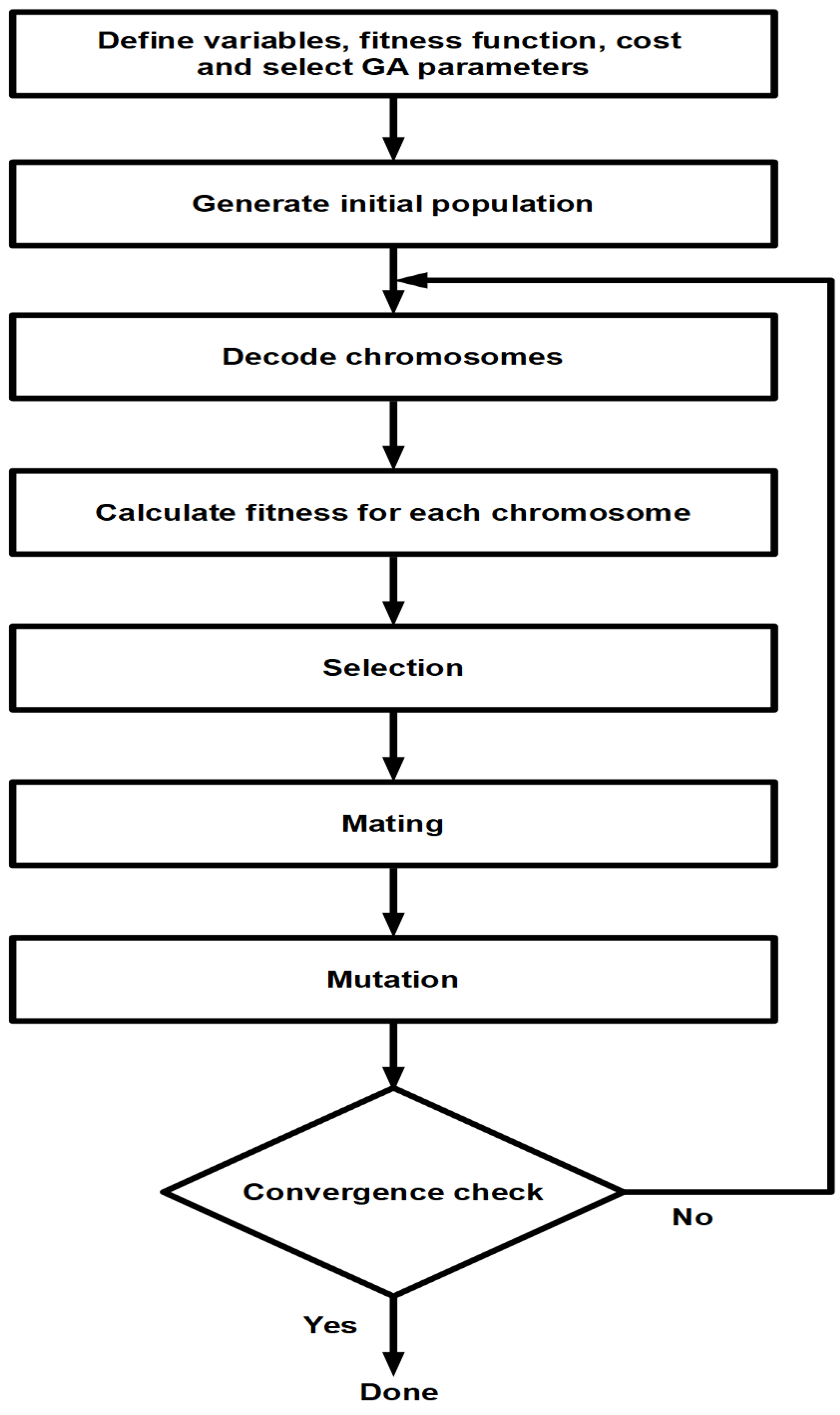

3.1. Genetic Algorithm

| Algorithm 1 Basic genetic algorithm [21] | |
|---|---|
| **Input:** | Crossover probability ($P_{co}$), mutation probability ($P_{mut}$), population size ($L$ chromosomes, i.e., classifiers, of $N$ bits), criterion function, fitness threshold ($\theta$) |
| **Output:** | Set of highest-fitness chromosomes (best classifier) |
| 1: | **repeat** |
| 2: | Determine the fitness of each chromosome: $f_i$, $i = 1, \ldots, L$ |
| 3: | Rank the chromosomes |
| 4: | **repeat** |
| 5: | Select the two chromosomes with the highest score |
| 6: | **if** $\mathrm{Rand}[0, 1) < P_{co}$ **then** |
| 7: | Crossover the pair at a randomly chosen bit |
| 8: | **else** |
| 9: | Change each bit with probability $P_{mut}$ |
| 10: | Remove the parent chromosomes |
| 11: | **until** $N$ offspring have been created |
| 12: | **until** any chromosome's score $f$ exceeds $\theta$ |
| 13: | **return** highest-fitness chromosome (best classifier) |
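As a rough illustration of how Algorithm 1 can drive feature selection, the Python sketch below assumes that each bit of a chromosome marks whether one texture feature is kept and that the criterion function returns a score to maximize. It is not the authors' implementation: drawing parent pairs from the top-ranked half of the population (truncation selection), creating $L$ rather than $N$ offspring per generation, the `max_generations` cap, and all names (`genetic_feature_selection`, `toy_fitness`) are illustrative assumptions.

```python
import random
from typing import Callable, List, Tuple

Chromosome = List[int]  # one bit per candidate feature: 1 = keep the feature


def genetic_feature_selection(
    fitness: Callable[[Chromosome], float],  # criterion function (higher is better)
    n_bits: int,                 # N: number of candidate features (>= 2)
    pop_size: int = 20,          # L: population size (>= 2)
    p_co: float = 0.8,           # P_co: crossover probability
    p_mut: float = 0.05,         # P_mut: per-bit mutation probability
    theta: float = 0.95,         # fitness threshold
    max_generations: int = 200,  # safety cap, not part of Algorithm 1
    seed: int = 0,
) -> Tuple[Chromosome, float]:
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best: Chromosome = []
    best_fit = float("-inf")

    for _ in range(max_generations):
        # Steps 2-3: evaluate every chromosome and rank them, best first.
        ranked = sorted(((fitness(c), c) for c in population),
                        key=lambda pair: pair[0], reverse=True)
        if ranked[0][0] > best_fit:
            best_fit, best = ranked[0][0], list(ranked[0][1])
        # Step 12: stop as soon as any chromosome's score exceeds theta.
        if best_fit > theta:
            break
        # Step 5 (assumed truncation selection): parents come from the top half.
        parents = [c for _, c in ranked[: max(2, pop_size // 2)]]
        offspring: List[Chromosome] = []
        while len(offspring) < pop_size:  # step 11, with L instead of N offspring
            a, b = rng.sample(parents, 2)
            if rng.random() < p_co:
                # Step 7: single-point crossover at a randomly chosen bit.
                cut = rng.randrange(1, n_bits)
                children = [a[:cut] + b[cut:], b[:cut] + a[cut:]]
            else:
                # Step 9: flip each bit independently with probability p_mut.
                children = [[bit ^ int(rng.random() < p_mut) for bit in parent]
                            for parent in (a, b)]
            offspring.extend(children)
        # Step 10: parents are discarded; the offspring form the next generation.
        population = offspring[:pop_size]
    return best, best_fit


# Hypothetical toy criterion: features 0-3 are informative, features 4-11 are noise.
def toy_fitness(mask: Chromosome) -> float:
    return sum(mask[:4]) / 4 - 0.1 * sum(mask[4:])


best_mask, best_score = genetic_feature_selection(toy_fitness, n_bits=12)
print(best_mask, best_score)
```

Taking every parent pair from the top-ranked half keeps selection pressure on the population even though all parents are replaced by their offspring; in a pixel-labeling setting, `toy_fitness` would be replaced by a criterion computed on the texture features that a mask selects.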

3.2. ReliefF Algorithm

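| Algorithm 2 ReliefF algorithm [24] |
|---|
| **Input:** for each training instance, a vector of attribute values and the class value ($C$) |
| **Output:** vector $W$ of the estimations of the qualities of attributes |
| 1: Set all weights $W[A] := 0.0$ |
| 2: **for** $i := 1$ **to** $m$ **do** |
| 3: Randomly select an instance $R_i$ |
| 4: Find $k$ nearest hits $H_j$ |
| 5: **for each** class $C \neq \mathrm{class}(R_i)$ **do** |
| 6: From class $C$ find $k$ nearest misses $M_j(C)$ |
| 7: **for** $A := 1$ **to** $a$ **do** |
| 8: $W[A] := W[A] - \sum_{j=1}^{k} \frac{\mathrm{diff}(A, R_i, H_j)}{m \cdot k} + \sum_{C \neq \mathrm{class}(R_i)} \frac{\frac{P(C)}{1 - P(\mathrm{class}(R_i))} \sum_{j=1}^{k} \mathrm{diff}(A, R_i, M_j(C))}{m \cdot k}$ |
| where $m$ is a user-defined parameter (the number of randomly sampled instances), $k$ is the number of nearest neighbors, $a$ is the number of attributes, $\mathrm{diff}(A, I_1, I_2)$ is a function that computes the difference between the values of the attribute $A$ for two instances $I_1$ and $I_2$, and $P(C)$ denotes the prior probability of class $C$. |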
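The Python sketch below transcribes the steps of Algorithm 2 for numeric attributes. It assumes that $\mathrm{diff}$ is the absolute difference scaled by the attribute's range and that neighbors are ranked by the sum of these differences (a Manhattan distance), which is the usual choice for ReliefF; the function name `relieff`, the default `k = 10`, and the toy data are illustrative assumptions rather than the authors' code.

```python
import random
from typing import Dict, List, Sequence


def relieff(X: Sequence[Sequence[float]], y: Sequence[int], m: int, k: int = 10,
            seed: int = 0) -> List[float]:
    """Estimate the attribute qualities W[A] following the steps of Algorithm 2."""
    n, a = len(X), len(X[0])
    rng = random.Random(seed)
    # Attribute ranges, used to scale diff() into [0, 1] for numeric attributes.
    lo = [min(row[A] for row in X) for A in range(a)]
    hi = [max(row[A] for row in X) for A in range(a)]

    def diff(A: int, i1: int, i2: int) -> float:
        span = hi[A] - lo[A]
        return abs(X[i1][A] - X[i2][A]) / span if span > 0 else 0.0

    def distance(i1: int, i2: int) -> float:
        # Manhattan distance built from the per-attribute diff() values.
        return sum(diff(A, i1, i2) for A in range(a))

    def nearest(r: int, c: int) -> List[int]:
        # The k instances of class c closest to instance r (r itself excluded).
        candidates = [j for j in range(n) if j != r and y[j] == c]
        return sorted(candidates, key=lambda j: distance(r, j))[:k]

    prior: Dict[int, float] = {c: list(y).count(c) / n for c in set(y)}
    W = [0.0] * a                                   # step 1
    for _ in range(m):                              # step 2
        r = rng.randrange(n)                        # step 3: R_i
        hits = nearest(r, y[r])                     # step 4: H_j
        misses = {c: nearest(r, c) for c in prior if c != y[r]}  # steps 5-6: M_j(C)
        for A in range(a):                          # step 7
            # Step 8: penalize differences to hits, reward differences to misses.
            W[A] -= sum(diff(A, r, h) for h in hits) / (m * k)
            for c, ms in misses.items():
                weight = prior[c] / (1.0 - prior[y[r]])
                W[A] += weight * sum(diff(A, r, j) for j in ms) / (m * k)
    return W


# Hypothetical toy data: attribute 0 separates the classes, attribute 1 is noise.
X = [[0, 0.3], [0, 0.9], [0, 0.1], [0, 0.5], [1, 0.2], [1, 0.8], [1, 0.6], [1, 0.4]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
W = relieff(X, y, m=8, k=3)
print(W)  # W[0] == 1.0 and W[1] < W[0]
```

The neighbor search here is a brute-force scan, which is enough to show the weight update; for per-pixel texture vectors a vectorized or indexed nearest-neighbor search would be needed.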

4. Evaluation and Results

4.1. Pixel-Labeling Scheme

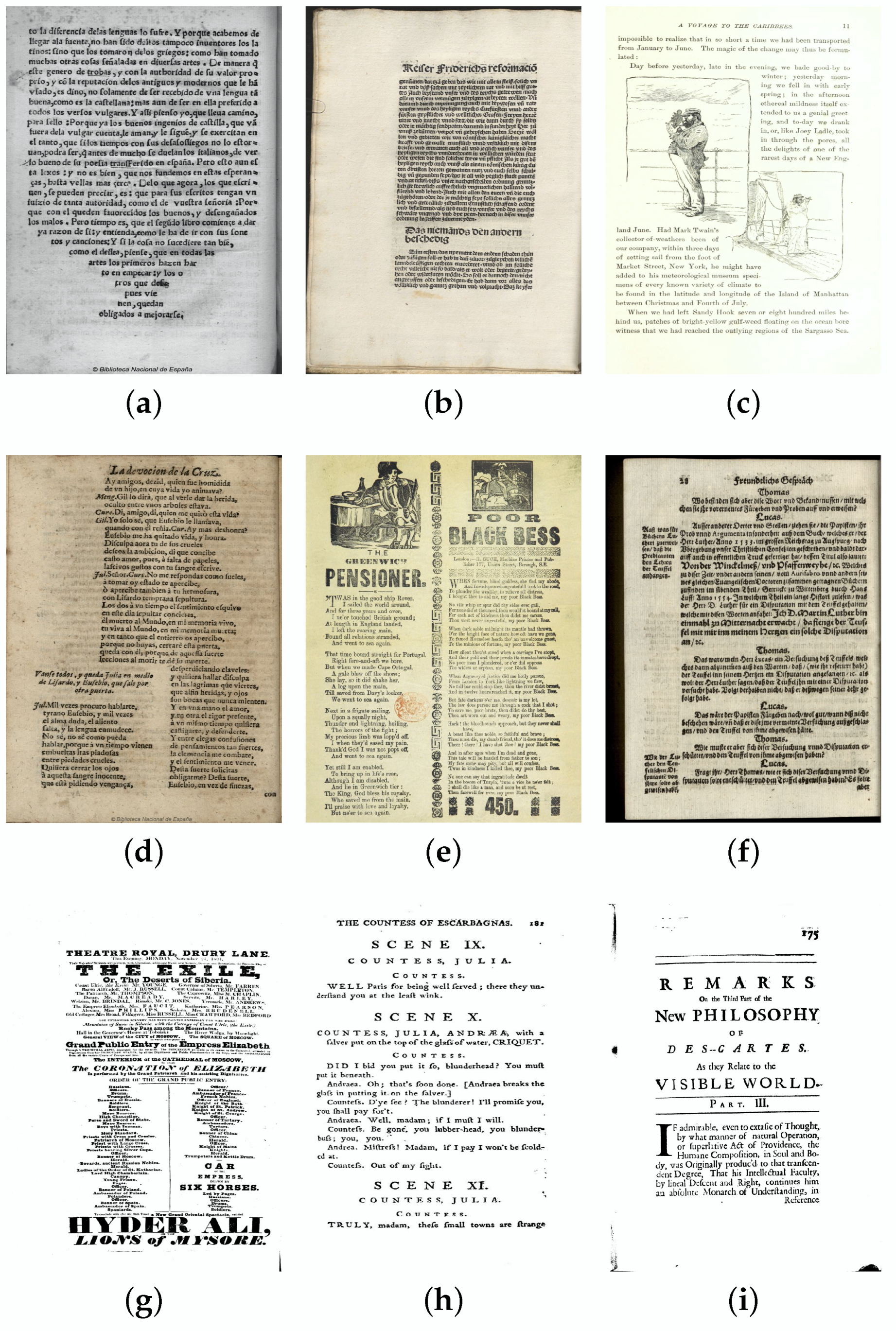

4.2. Corpus and Preparation of Ground Truth

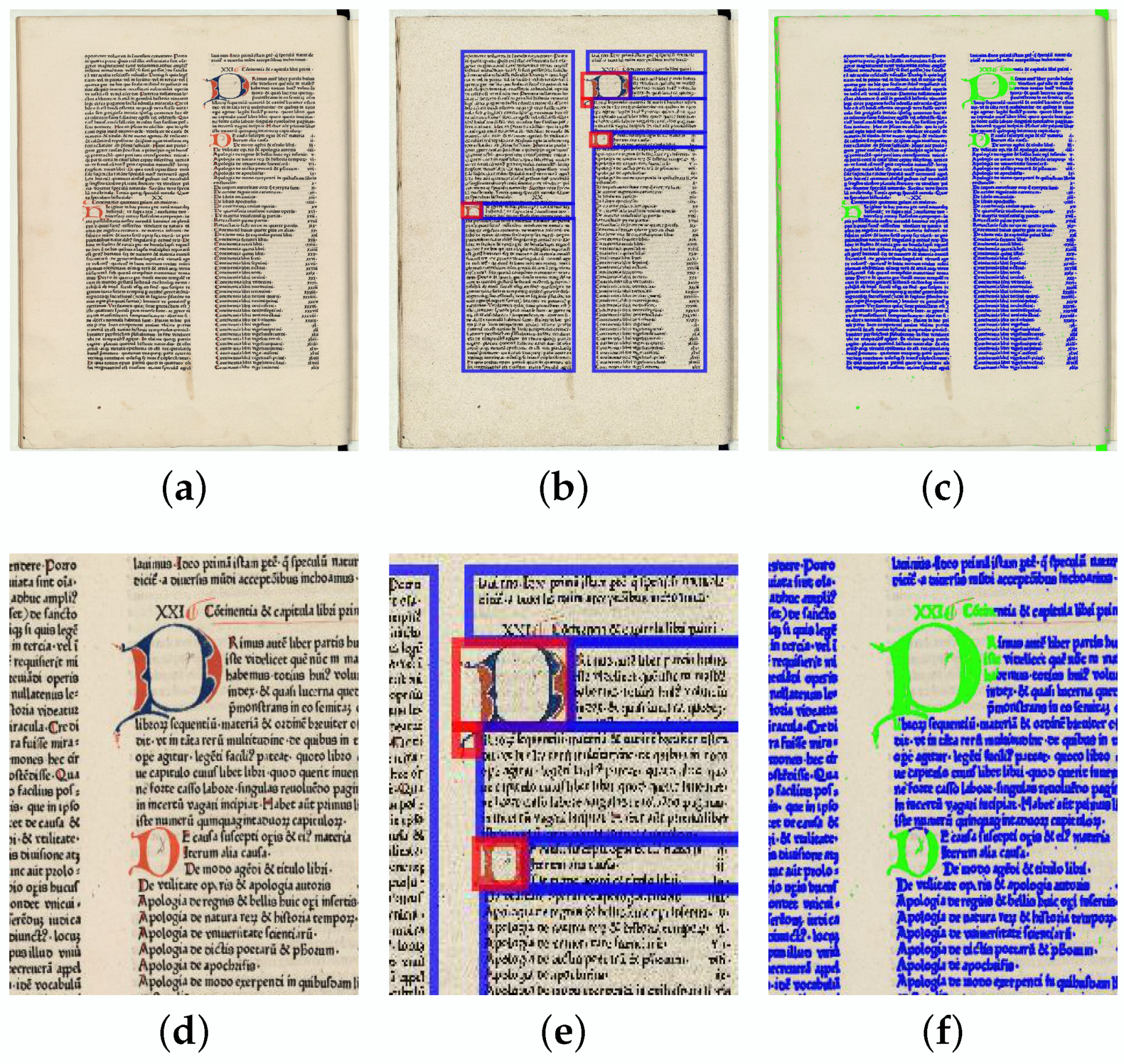

4.3. Qualitative Results

4.4. Benchmarking and Performance Evaluation

5. Conclusions and Further Work

Author Contributions

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| Db3 | Three-level wavelet transform using 3-tap Daubechies filter |
| Db4 | Three-level wavelet transform using 4-tap Daubechies filter |
| DIA | Document image analysis |
| F | F-measure |
| GA | Genetic algorithm |
| GLCM | Gray-level co-occurrence matrix |
| GLRLM | Gray-level run-length matrix |
| Haar | Three-level Haar wavelet transform |
| HAC | Hierarchical ascendant classification |
| HBR | Historical book recognition |
| LBP | Local binary patterns |
| PPB | Purity per-block |
| RA | ReliefF algorithm |
| SW | Silhouette width |

References

1. Antonacopoulos, A.; Clausner, C.; Papadopoulos, C.; Pletschacher, S. Historical document layout analysis competition. In Proceedings of the International Conference on Document Analysis and Recognition, Beijing, China, 18–21 September 2011; pp. 1516–1520.

2. Antonacopoulos, A.; Clausner, C.; Papadopoulos, C.; Pletschacher, S. ICDAR 2013 Competition on Historical Book Recognition (HBR 2013). In Proceedings of the International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013; pp. 1459–1463.

3. Wei, H.; Seuret, M.; Liwicki, M.; Ingold, R.; Fu, P. Selecting fine-tuned features for layout analysis of historical documents. In Proceedings of the International Conference on Document Analysis and Recognition, Kyoto, Japan, 9–15 November 2017; pp. 281–286.

4. Chen, K.; Seuret, M.; Hennebert, J.; Ingold, R. Convolutional neural networks for page segmentation of historical document images. In Proceedings of the International Conference on Document Analysis and Recognition, Kyoto, Japan, 9–15 November 2017; pp. 965–970.

5. Calvo-Zaragoza, J.; Castellanos, F.J.; Vigliensoni, G.; Fujinaga, I. Deep neural networks for document processing of music score images. Appl. Sci. 2018, 8, 654.

6. Okun, O.; Pietikäinen, M. A survey of texture-based methods for document layout analysis. In Texture Analysis in Machine Vision; Series in Machine Perception and Artificial Intelligence; World Scientific: Singapore, 2000; pp. 165–177.

7. Kise, K. Page segmentation techniques in document analysis. In Handbook of Document Image Processing and Recognition; Springer: London, UK, 2014; pp. 135–175.

8. Wahl, F.M.; Wong, K.Y.; Casey, R.G. Block segmentation and text extraction in mixed text/image documents. Comput. Graph. Image Process. 1982, 20, 375–390.

9. PRImA. Available online: http://www.primaresearch.org/news/HBR2013 (accessed on 30 July 2018).

10. PRImA. Available online: http://www.primaresearch.org/datasets (accessed on 30 July 2018).

11. Mehri, M.; Héroux, P.; Gomez-Krämer, P.; Mullot, R. Texture feature benchmarking and evaluation for historical document image analysis. Int. J. Doc. Anal. Recognit. 2017, 20, 1–35.

12. Beyerer, J.; León, F.P.; Frese, C.C. Texture analysis. In Machine Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 649–683.

13. Dubuf, J.; Kardan, M.; Spann, M. Texture feature performance for image segmentation. Pattern Recognit. 1990, 23, 291–309.

14. Journet, N.; Ramel, J.; Mullot, R.; Eglin, V. Document image characterization using a multiresolution analysis of the texture: Application to old documents. Int. J. Doc. Anal. Recognit. 2008, 11, 9–18.

15. Wei, H.; Seuret, M.; Chen, K.; Fischer, A.; Liwicki, M.; Ingold, R. Selecting autoencoder features for layout analysis of historical documents. In Proceedings of the International Workshop on Historical Document Imaging and Processing, Nancy, France, 22 August 2015; pp. 55–62.

16. Xue, B.; Zhang, M.; Browne, W.N.; Yao, X. A survey on evolutionary computation approaches to feature selection. IEEE Trans. Evol. Comput. 2015, 20, 606–626.

17. Zongker, D.; Jain, A. Algorithms for feature selection: An evaluation. In Proceedings of the International Conference on Pattern Recognition, Vienna, Austria, 25–29 August 1996; pp. 18–22.

18. Guyon, I.; Elisseeff, A. An introduction to variable and feature selection. J. Mach. Learn. Res. 2003, 3, 1157–1182.

19. Tao, D.; Jin, L.; Zhang, S.; Yang, Z.; Wang, Y. Sparse discriminative information preservation for Chinese character font categorization. Neurocomputing 2014, 129, 159–167.

20. Wei, H.; Chen, K.; Nicolaou, A.; Liwicki, M.; Ingold, R. Investigation of feature selection for historical document layout analysis. In Proceedings of the International Conference on Image Processing Theory, Tools and Applications, Paris, France, 14–17 October 2014; pp. 1–6.

21. Duda, R.; Hart, P.; Stork, D. Pattern Classification, 2nd ed.; Wiley-Interscience: New York, NY, USA, 2000.

22. Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information: Criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

23. Sun, Y.; Lou, X.; Bao, B. A novel Relief feature selection algorithm based on mean-variance model. J. Inf. Comput. Sci. 2011, 8, 3921–3929.

24. Robnik-Šikonja, M.; Kononenko, I. Theoretical and empirical analysis of ReliefF and RReliefF. Mach. Learn. 2003, 53, 23–69.

25. Ward, J.H. Hierarchical grouping to optimize an objective function. J. Am. Stat. Assoc. 1963, 58, 236–244.

26. Groundtruthing Environment for Document Images (GEDI). Available online: https://sourceforge.net/projects/gedigroundtruth/ (accessed on 30 July 2018).

27. Rousseeuw, P.J. Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 1987, 20, 53–65.

28. Mehri, M.; Gomez-Krämer, P.; Héroux, P.; Boucher, A.; Mullot, R. A texture-based pixel labeling approach for historical books. Pattern Anal. Appl. 2017, 20, 325–364.

29. Powers, D.W. Evaluation: From precision, recall and F-factor to ROC, informedness, markedness & correlation. J. Mach. Learn. Technol. 2011, 2, 37–63.

30. HBA Dataset. Available online: http://icdar2017hba.litislab.eu/index.php/dataset/description/ (accessed on 30 July 2018).

| Content | Number of Pages | Number of Fonts | Graphics |
|---|---|---|---|
| Only one font (cf. Figure 4a) | 3 | 1 | No |
| Only two fonts (cf. Figure 4b) | 17 | 2 | No |
| Graphics and text with two different fonts (cf. Figure 4c) | 9 | 2 | Yes |
| Only three fonts (cf. Figure 4d) | 20 | 3 | No |
| Graphics and text with three different fonts (cf. Figure 4e) | 6 | 3 | Yes |
| Only four fonts (cf. Figure 4f) | 11 | 4 | No |
| Graphics and text with four different fonts (cf. Figure 4g) | 15 | 4 | Yes |
| Only five fonts (cf. Figure 4h) | 5 | 5 | No |
| Graphics and text with five different fonts (cf. Figure 4i) | 14 | 5 | Yes |

Training Dataset

| Feature set | Tamura | LBP | GLRLM | Auto-Correlation | GLCM | Gabor | Haar | Db3 | Db4 |
|---|---|---|---|---|---|---|---|---|---|
| Full texture feature set | 0.35 | 0.21 | 0.13 | 0.07 | 0.21 | 0.26 | 0.26 | 0.31 | 0.28 |
| | 0.71 | 0.78 | 0.79 | 0.73 | 0.84 | 0.90 | 0.80 | 0.79 | 0.81 |
| | 0.38 | 0.38 | 0.35 | 0.43 | 0.42 | 0.52 | 0.45 | 0.46 | 0.46 |
| | 16 | 40 | 176 | 20 | 72 | 192 | 80 | 80 | 80 |
| Texture features selected using the GA | 0.40 | 0.04 | 0.14 | 0.12 | 0.20 | 0.29 | 0.29 | 0.28 | 0.31 |
| | 0.73 | 0.72 | 0.82 | 0.80 | 0.87 | 0.92 | 0.83 | 0.83 | 0.84 |
| | 0.39 | 0.36 | 0.35 | 0.42 | 0.42 | 0.54 | 0.45 | 0.45 | 0.46 |
| | 8 | 20 | 88 | 10 | 36 | 96 | 40 | 40 | 40 |
| Texture features selected using the RA | 0.30 | 0.07 | 0.14 | 0.11 | 0.26 | 0.26 | 0.24 | 0.29 | 0.28 |
| | 0.73 | 0.74 | 0.77 | 0.77 | 0.85 | 0.88 | 0.81 | 0.83 | 0.83 |
| | 0.40 | 0.38 | 0.34 | 0.42 | 0.42 | 0.49 | 0.43 | 0.43 | 0.44 |
| | 8 | 20 | 88 | 10 | 36 | 96 | 40 | 40 | 40 |

Testing Dataset

| Feature set | Tamura | LBP | GLRLM | Auto-Correlation | GLCM | Gabor | Haar | Db3 | Db4 |
|---|---|---|---|---|---|---|---|---|---|
| Full texture feature set | 0.38 | 0.33 | 0.35 | 0.17 | 0.30 | 0.28 | 0.30 | 0.34 | 0.30 |
| | 0.77 | 0.83 | 0.82 | 0.81 | 0.86 | 0.91 | 0.83 | 0.83 | 0.84 |
| | 0.40 | 0.39 | 0.37 | 0.43 | 0.43 | 0.52 | 0.44 | 0.45 | 0.46 |
| | 16 | 40 | 176 | 20 | 72 | 192 | 80 | 80 | 80 |
| Texture features selected using the GA | 0.42 | 0.01 | 0.42 | 0.24 | 0.35 | 0.33 | 0.29 | 0.33 | 0.36 |
| | 0.76 | 0.72 | 0.85 | 0.85 | 0.85 | 0.91 | 0.86 | 0.85 | 0.85 |
| | 0.40 | 0.37 | 0.37 | 0.43 | 0.41 | 0.51 | 0.43 | 0.42 | 0.43 |
| | 8 | 17 | 95 | 10 | 30 | 90 | 46 | 42 | 45 |
| Texture features selected using the RA | 0.35 | 0.15 | 0.39 | 0.22 | 0.36 | 0.28 | 0.31 | 0.34 | 0.37 |
| | 0.79 | 0.76 | 0.81 | 0.80 | 0.87 | 0.89 | 0.85 | 0.85 | 0.86 |
| | 0.41 | 0.38 | 0.36 | 0.42 | 0.43 | 0.49 | 0.42 | 0.42 | 0.42 |
| | 7 | 20 | 89 | 10 | 36 | 98 | 40 | 44 | 38 |

| Full Texture Feature Set | Texture Features Selected Using the GA | Texture Features Selected Using the RA | |||||||

|---|---|---|---|---|---|---|---|---|---|

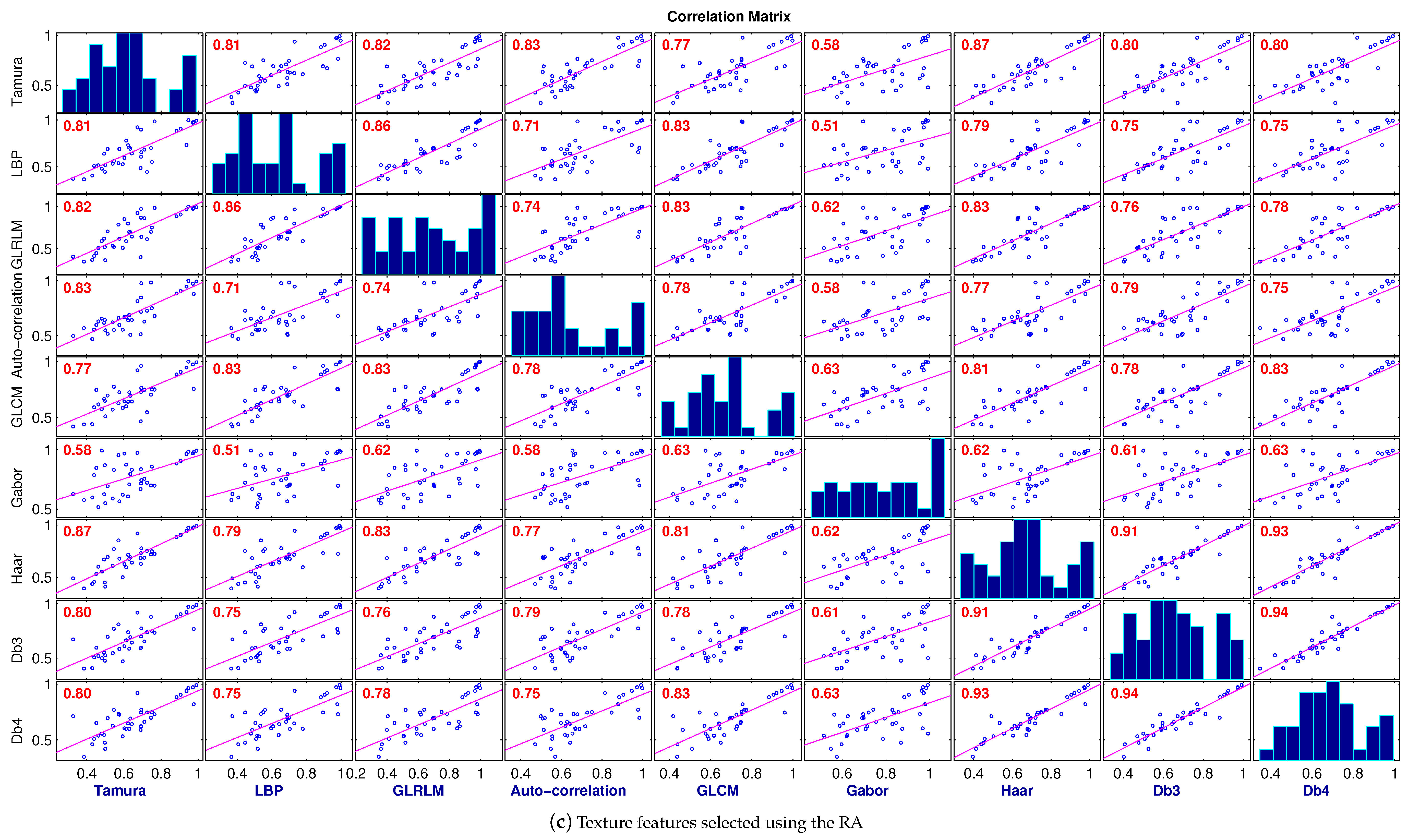

| Features | Minimum | Average | Maximum | Minimum | Average | Maximum | Minimum | Average | Maximum |

| Tamura | 0.50 (Gabor) | 0.58 | 0.63 (GLCM) | 0.47 (Gabor) | 0.75 | 0.87 (LBP) | 0.58 (Gabor) | 0.78 | 0.87 (Haar) |

| LBP | 0.61 (GLCM) | 0.67 | 0.72 (GLRLM) | 0.41 (Gabor) | 0.74 | 0.88 (GLCM) | 0.51 (Gabor) | 0.75 | 0.86 (GLRLM) |

| GLRLM | 0.61 (Tamura) | 0.73 | 0.86 (Haar) | 0.47 (Gabor) | 0.74 | 0.83 (Tamura) | 0.62 (Gabor) | 0.78 | 0.86 (LBP) |

| Auto-correlation | 0.60 (GLCM) | 0.68 | 0.76 (Haar) | 0.49 (Gabor) | 0.69 | 0.75 (Db3) | 0.58 (Gabor) | 0.74 | 0.83 (Tamura) |

| GLCM | 0.60 (Auto) | 0.7 | 0.82 (Haar) | 0.53 (Gabor) | 0.72 | 0.88 (LBP) | 0.63 (Gabor) | 0.78 | 0.83 (LBP) |

| Gabor | 0.50 (Tamura) | 0.67 | 0.77 (Db3) | 0.41 (LBP) | 0.49 | 0.56 (Db3) | 0.51 (LBP) | 0.59 | 0.63 (GLCM) |

| Haar | 0.58 (Tamura) | 0.78 | 0.93 (Db4) | 0.50 (Gabor) | 0.75 | 0.88 (Db4) | 0.62 (Gabor) | 0.81 | 0.93 (Db4) |

| Db3 | 0.59 (Tamura) | 0.78 | 0.92 (Db4) | 0.56 (Gabor) | 0.77 | 0.93 (Db4) | 0.61 (Gabor) | 0.79 | 0.94 (Db4) |

| Db4 | 0.53 (Tamura) | 0.76 | 0.93 (Haar) | 0.53 (Gabor) | 0.76 | 0.93 (Db3) | 0.63 (Gabor) | 0.8 | 0.94 (Db3) |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mehri, M.; Chaieb, R.; Kalti, K.; Héroux, P.; Mullot, R.; Essoukri Ben Amara, N. A Comparative Study of Two State-of-the-Art Feature Selection Algorithms for Texture-Based Pixel-Labeling Task of Ancient Documents. J. Imaging 2018, 4, 97. https://doi.org/10.3390/jimaging4080097