Real-Time FPGA-Based Object Tracker with Automatic Pan-Tilt Features for Smart Video Surveillance Systems

Abstract

1. Introduction


- Video must be captured from the camera, processed on the fly, and displayed in real time.


- The frame size should be PAL resolution (720 × 576), which is projected to be the most commonly used video resolution for current-generation video surveillance cameras.


- An external memory interface must be designed to store the intermediate frames needed for robust object tracking (the number required depends on the algorithm), because on-chip FPGA memory (Block RAMs) is insufficient to hold multiple standard PAL (720 × 576) frames.


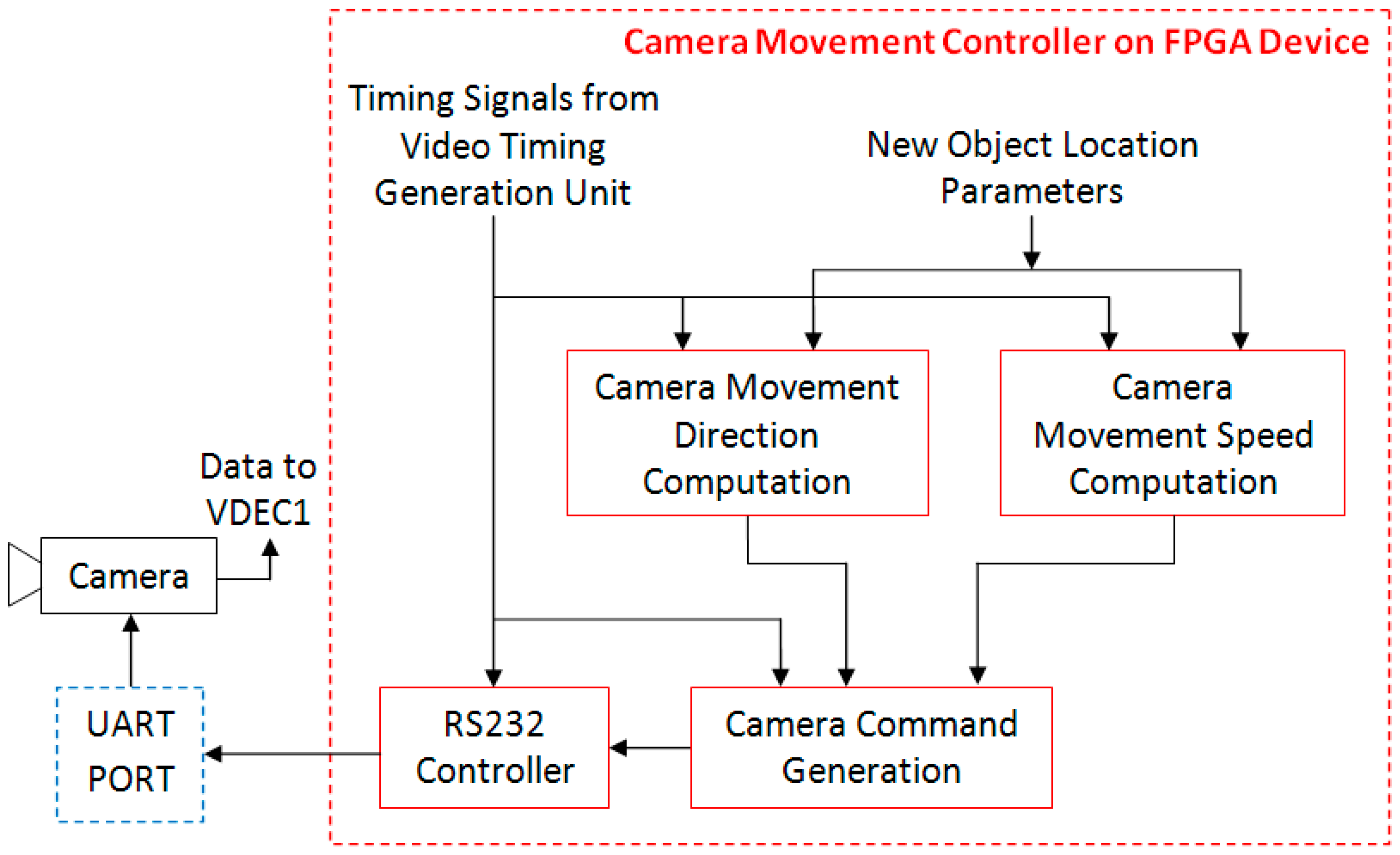

- Current-generation automated video surveillance systems require cameras with Pan, Tilt and Zoom (PTZ) capabilities to cover a wide field of view, and these functions must be controlled automatically by the system's intelligence rather than by manual intervention. The design and implementation of a real-time, automatic, purposive camera movement controller is therefore another important issue. The camera should move automatically to follow the tracked object over a larger area, keeping the object within its field of view.
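To put the external-memory requirement in numbers, the short sketch below compares the size of one PAL frame with the total Block RAM of the target device. It assumes 24-bit RGB storage (8 bits per channel) and takes the on-chip capacity (298 BRAMs of 36 Kb each) from the resource-utilization table later in the paper; the variable and function names are illustrative only.

```python
# Rough check of why multiple PAL frames need external memory (Section 1).
# Assumptions: frames stored as 24-bit RGB; on-chip capacity taken from the
# utilization table (298 BRAMs of 36 Kb each on the target Virtex-5 device).

PAL_WIDTH, PAL_HEIGHT = 720, 576
BITS_PER_PIXEL = 24                    # assumed RGB888 storage
BRAM_BLOCKS, BRAM_KBITS_EACH = 298, 36


def frame_kbits(width: int, height: int, bits_per_pixel: int) -> float:
    """Storage needed for one frame, in Kb (1 Kb = 1024 bits)."""
    return width * height * bits_per_pixel / 1024


total_bram_kbits = BRAM_BLOCKS * BRAM_KBITS_EACH                      # 10,728 Kb
pal_frame_kbits = frame_kbits(PAL_WIDTH, PAL_HEIGHT, BITS_PER_PIXEL)  # 9,720 Kb
whole_frames_on_chip = int(total_bram_kbits // pal_frame_kbits)      # 1
```

Under these assumptions a single PAL frame (9,720 Kb) almost exhausts the device's 10,728 Kb of BRAM, and a second frame cannot fit, even before the 3,024 Kb used by the tracking architecture itself is counted.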

2. Object Tracking Algorithm

- (1)

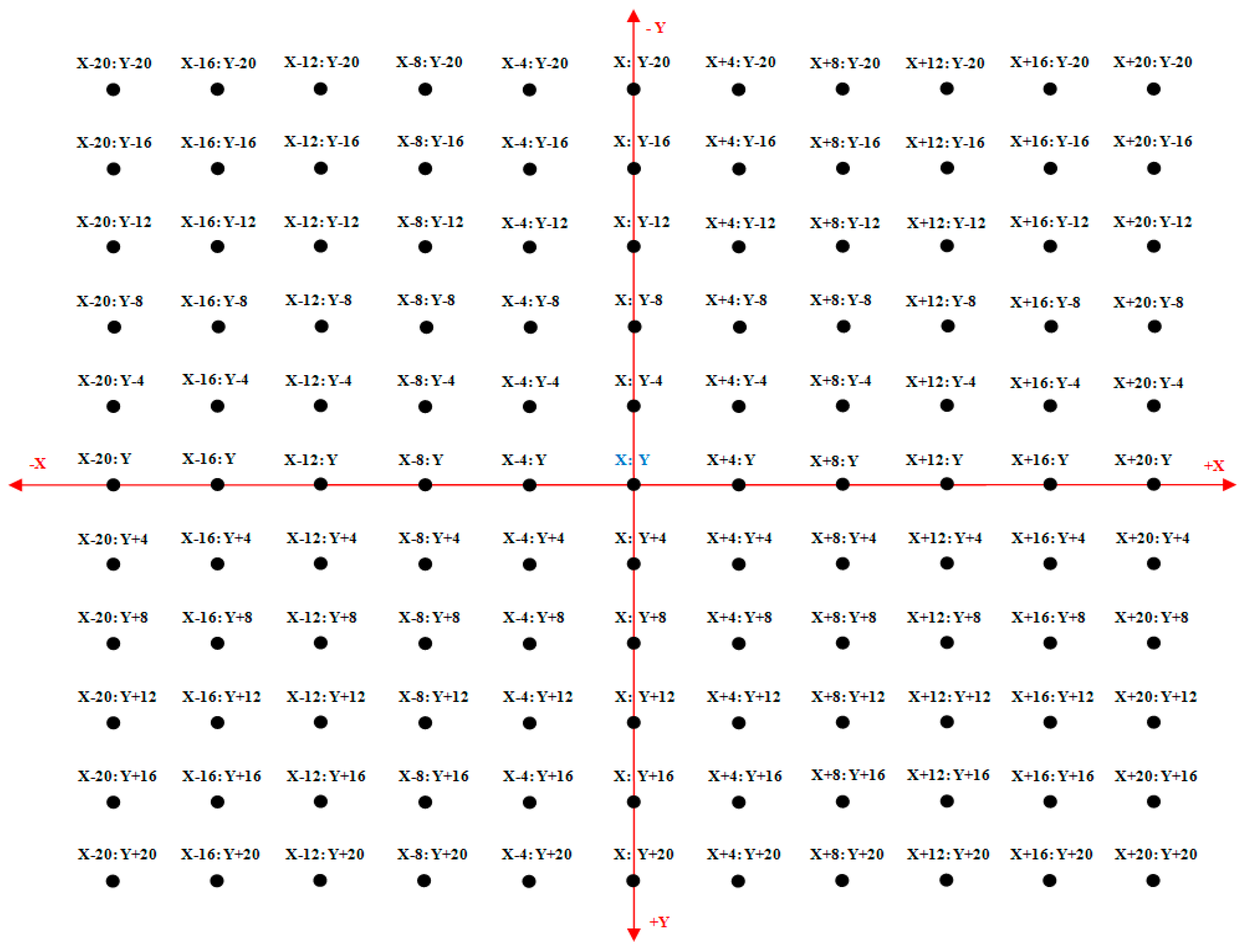

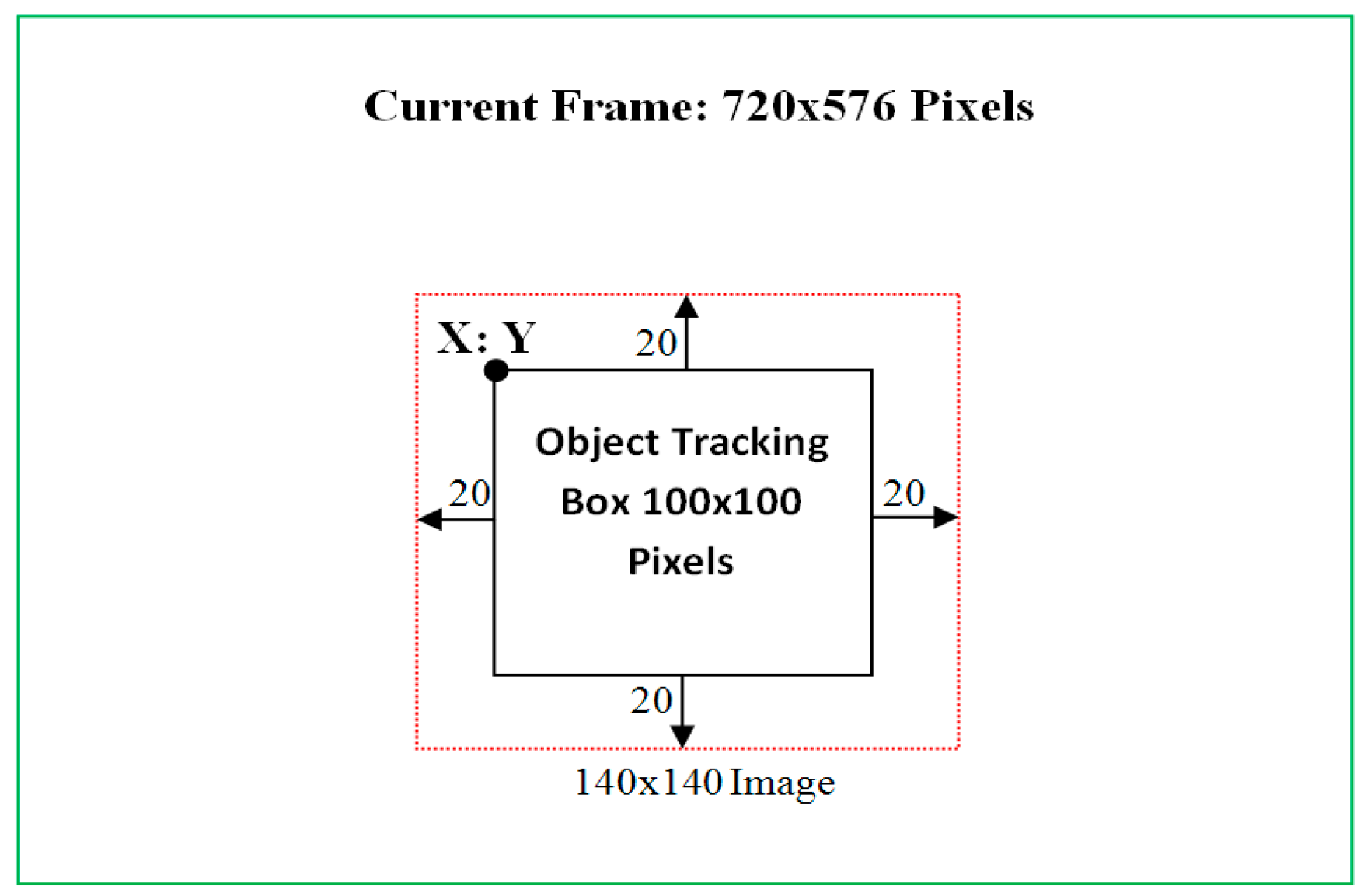

- As we have considered a maximum of 20 pixels’ movement of the tracked object in any direction from one frame to the next frame, therefore, 121 particles (each of size 100 × 100 pixels) around the location (X, Y) of the tracked object in last frame are considered to cover the complete region of 20 pixels’ movement in any direction. The location of each particle with respect to location (X, Y) of the tracked object in the previous frame is shown in Figure 1. For each particle, a 100 × 100 pixel image is extracted from the stored current frame based on the coordinate values shown in Figure 1.

- (2)

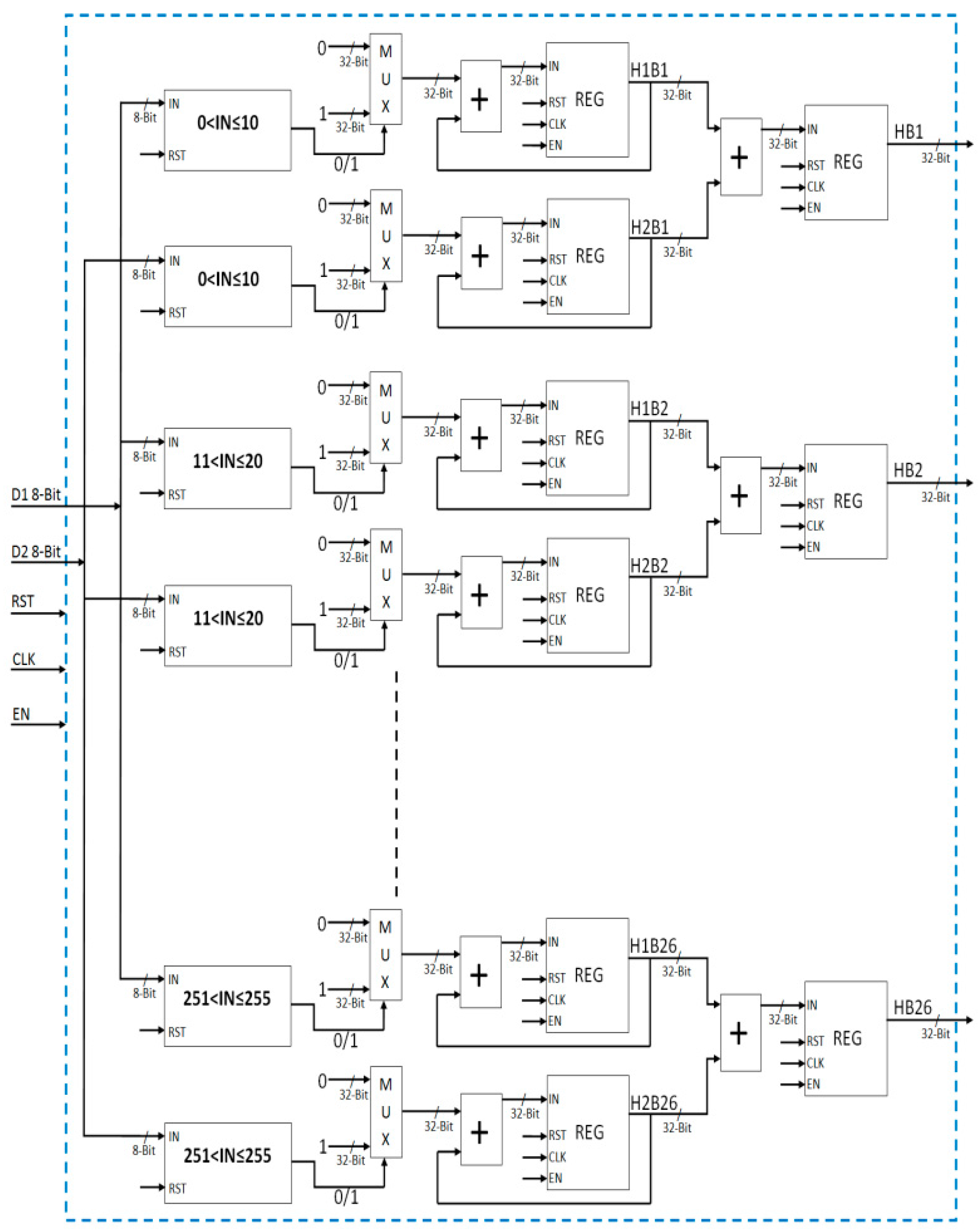

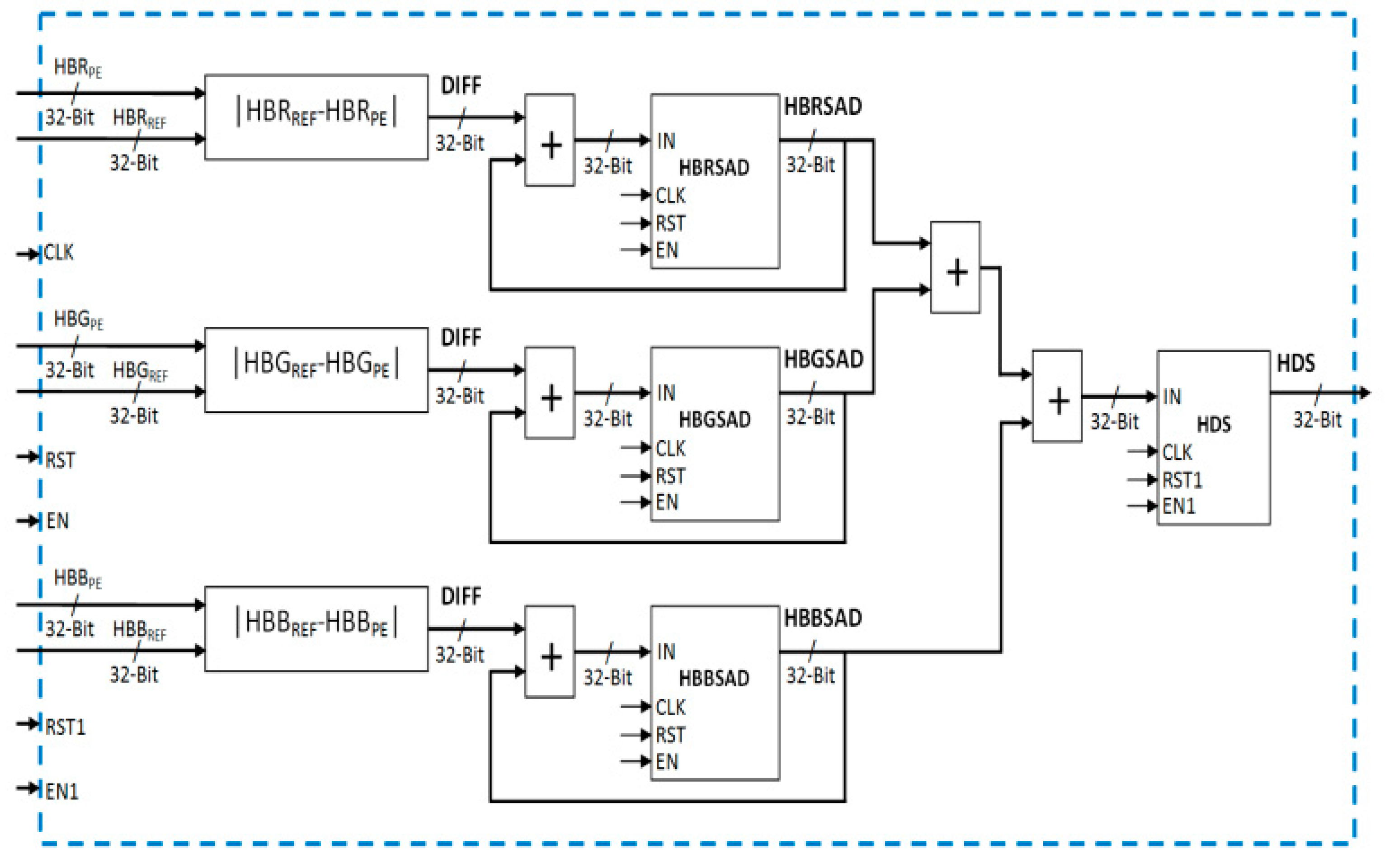

- The color histogram (for each R, G and B color channel) for each particle is computed by using the 100 × 100 pixel image of each particle. The absolute difference of each bin (a total of 26 bins for each color channel is considered as mentioned above) of each R, G and B color channel for each particle is computed with respect to their corresponding bins in the reference histogram of the tracked object (the reference image histogram is computed during the initialization phase as mentioned earlier in this section).

- (3) Next, the absolute bin differences are summed for each color channel separately, and the three per-channel sums are then added together. The result is the histogram difference value, denoted Histogram_Difference, which is computed for every particle.

- (4)

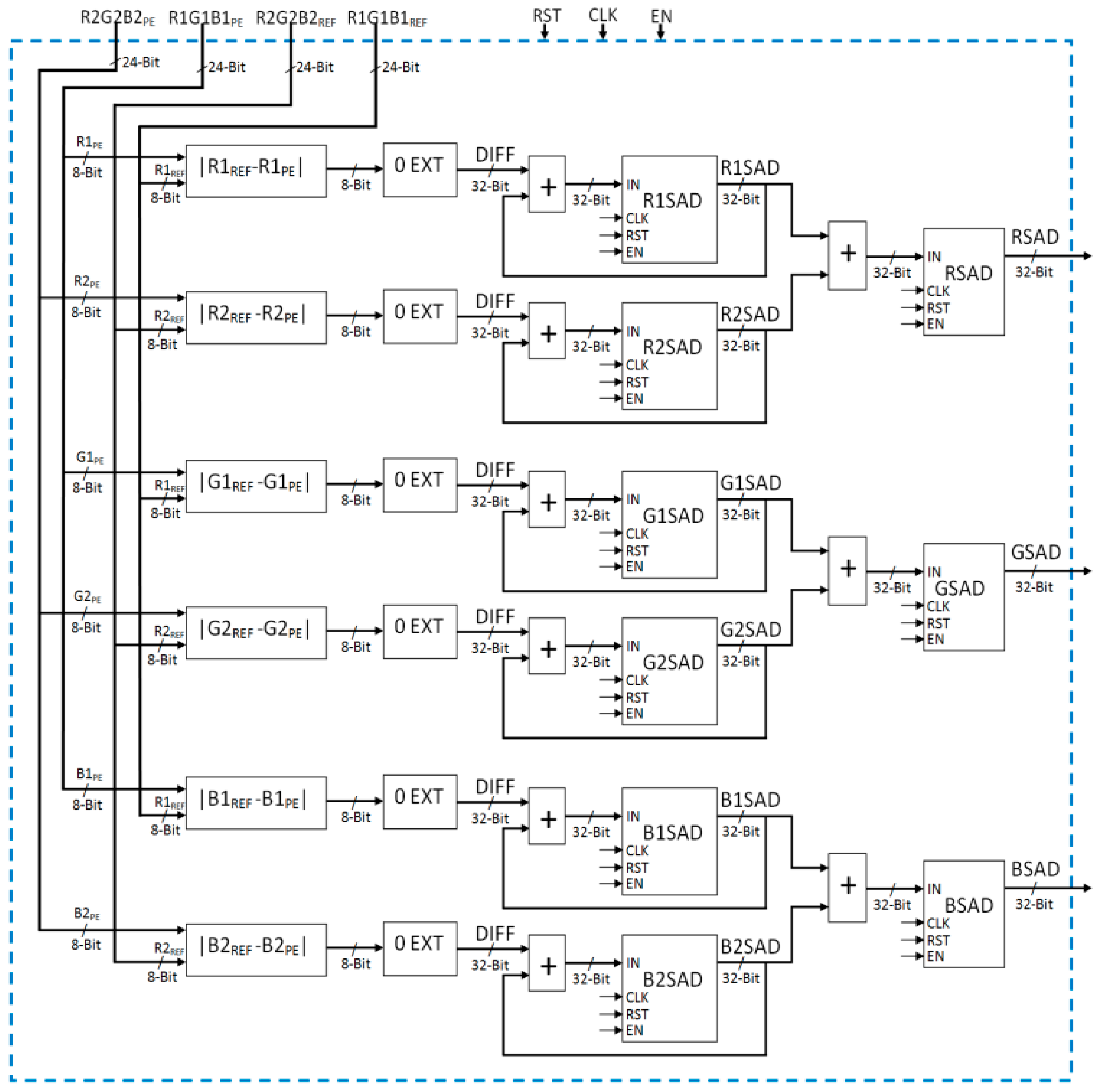

- In addition to the above, the Sum of Absolute Differences (SAD) is also computed for each particle with respect to the reference image of the tracked object.

- (5) This process yields 121 Histogram_Difference values and 121 SAD values. For each particle, its SAD value and its Histogram_Difference value are added; the result is denoted Histogram_Difference_Plus_SAD. In all, there are 121 Histogram_Difference_Plus_SAD values.

- (6) The particle with the minimum Histogram_Difference_Plus_SAD value among the 121 values is the best-matched particle, and its location is the location of the tracked object's rectangle in the current frame. The coordinates of this particle are computed, and a 100 × 100 pixel rectangle is drawn at these coordinates.

- (7) The location of this particle becomes the reference location for the next frame, around which 121 new particles are placed. Steps 1–7 are repeated for every new frame.
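Steps 1–7 can be modelled in software as follows. This is a minimal, hypothetical sketch for experimenting with the matching criterion, not the authors' hardware design. It adds assumptions that this section does not state: the 121 particles form an 11 × 11 grid with a 4-pixel step (offsets from -20 to +20 pixels), each of the 26 bins per channel is 10 intensity levels wide, the SAD is summed over all three color channels, and particles falling outside the frame are skipped. All function names are illustrative.

```python
"""Minimal software model of the tracking loop in Section 2 (Steps 1-7).

Hypothetical sketch, not the paper's hardware. Assumptions: frames are lists of
rows of 8-bit (R, G, B) tuples; particles form an 11 x 11 grid with a 4-pixel
step (offsets -20..+20); each channel uses 26 bins of width 10; SAD is summed
over all three channels; particles falling outside the frame are skipped.
"""
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Histogram = List[List[int]]  # indexed as [channel][bin]

BIN_WIDTH = 10  # assumed: value // 10 maps 0..255 onto bins 0..25
NUM_BINS = 26


def particle_offsets(max_shift: int = 20, step: int = 4) -> List[Tuple[int, int]]:
    """Step 1: displacements of each particle from (X, Y); 121 by default."""
    shifts = range(-max_shift, max_shift + 1, step)
    return [(dx, dy) for dy in shifts for dx in shifts]


def extract(frame: Image, x: int, y: int, size: int) -> Image:
    """Cut the size x size image whose top-left corner is (x, y)."""
    return [row[x:x + size] for row in frame[y:y + size]]


def color_histogram(patch: Image) -> Histogram:
    """Step 2: one NUM_BINS-bin histogram for each of R, G and B."""
    hist = [[0] * NUM_BINS for _ in range(3)]
    for row in patch:
        for pixel in row:
            for c in range(3):
                hist[c][pixel[c] // BIN_WIDTH] += 1
    return hist


def histogram_difference(hist: Histogram, ref: Histogram) -> int:
    """Steps 2-3: |bin - reference bin|, summed per channel, then over channels."""
    return sum(sum(abs(a - b) for a, b in zip(h_c, r_c))
               for h_c, r_c in zip(hist, ref))


def sad(patch: Image, ref_patch: Image) -> int:
    """Step 4: Sum of Absolute Differences against the reference image."""
    return sum(abs(p[c] - q[c])
               for p_row, q_row in zip(patch, ref_patch)
               for p, q in zip(p_row, q_row)
               for c in range(3))


def track_step(frame: Image, ref_patch: Image, ref_hist: Histogram,
               x: int, y: int, size: int = 100,
               max_shift: int = 20, step: int = 4) -> Tuple[int, int]:
    """Steps 5-7: return the top-left corner of the best-matched particle."""
    height, width = len(frame), len(frame[0])
    best: Optional[Tuple[int, int, int]] = None  # (score, x, y)
    for dx, dy in particle_offsets(max_shift, step):
        px, py = x + dx, y + dy
        if px < 0 or py < 0 or px + size > width or py + size > height:
            continue  # assumed boundary policy: ignore out-of-frame particles
        patch = extract(frame, px, py, size)
        hist_diff = histogram_difference(color_histogram(patch), ref_hist)
        score = hist_diff + sad(patch, ref_patch)  # Step 5: Histogram_Difference_Plus_SAD
        if best is None or score < best[0]:        # Step 6: keep the minimum
            best = (score, px, py)
    # Step 7: the caller feeds this location back in as (X, Y) for the next frame
    return (x, y) if best is None else (best[1], best[2])


def synthetic_frame(ox: int, oy: int, width: int = 48, height: int = 48,
                    size: int = 8) -> Image:
    """Hypothetical test scene: a reddish size x size square on black."""
    frame = [[(0, 0, 0)] * width for _ in range(height)]
    for row in range(oy, oy + size):
        for col in range(ox, ox + size):
            frame[row][col] = (200, 50, 50)
    return frame
```

With the defaults (`size=100`, `max_shift=20`, `step=4`) each call scores 121 particles. To keep the pure-Python model fast, a smaller scene can be used: with `ref = extract(synthetic_frame(20, 20), 20, 20, 8)` and the square moved to (24, 20), `track_step(synthetic_frame(24, 20), ref, color_histogram(ref), 20, 20, size=8, max_shift=8, step=4)` returns `(24, 20)`.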

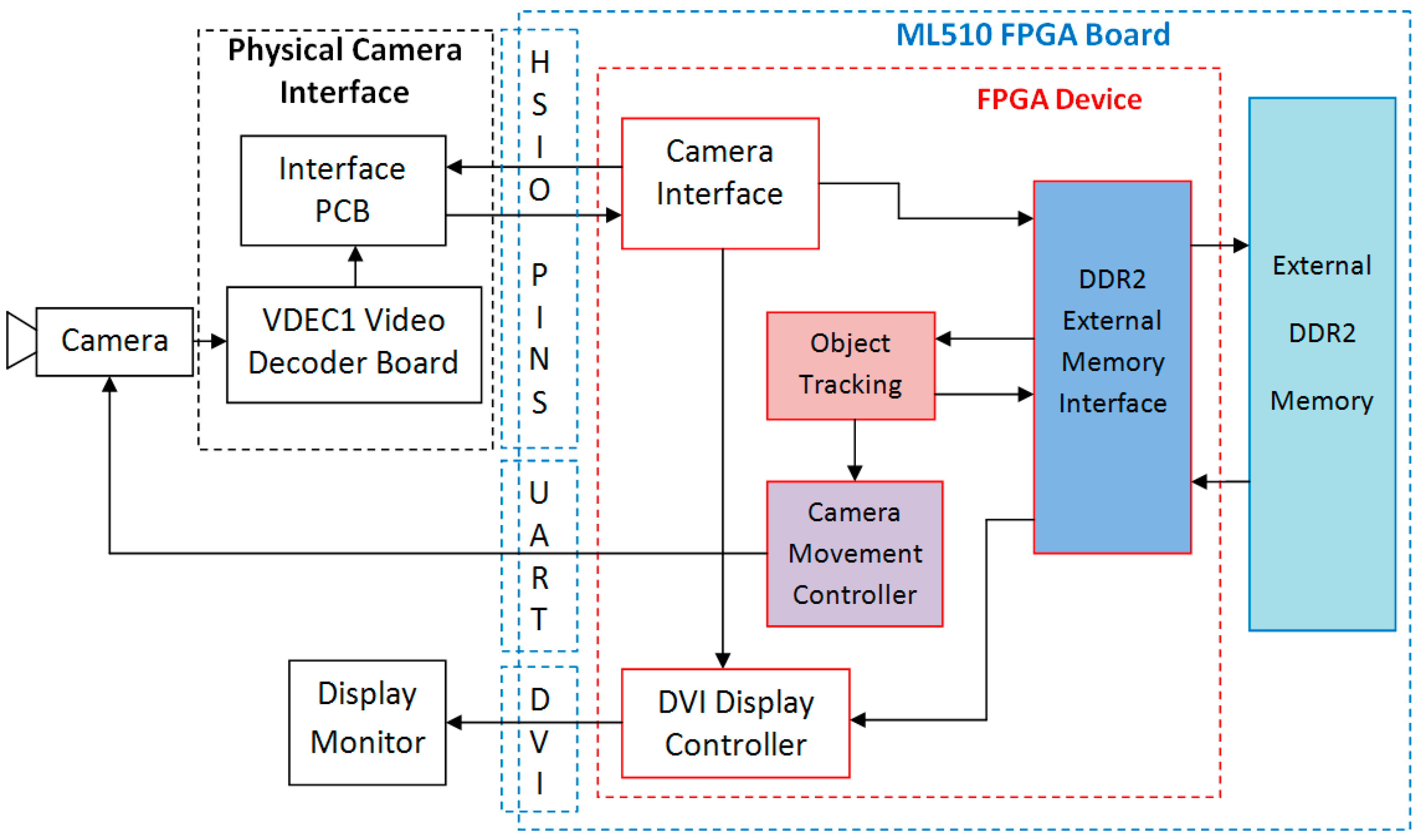

3. Implemented Object Tracking System

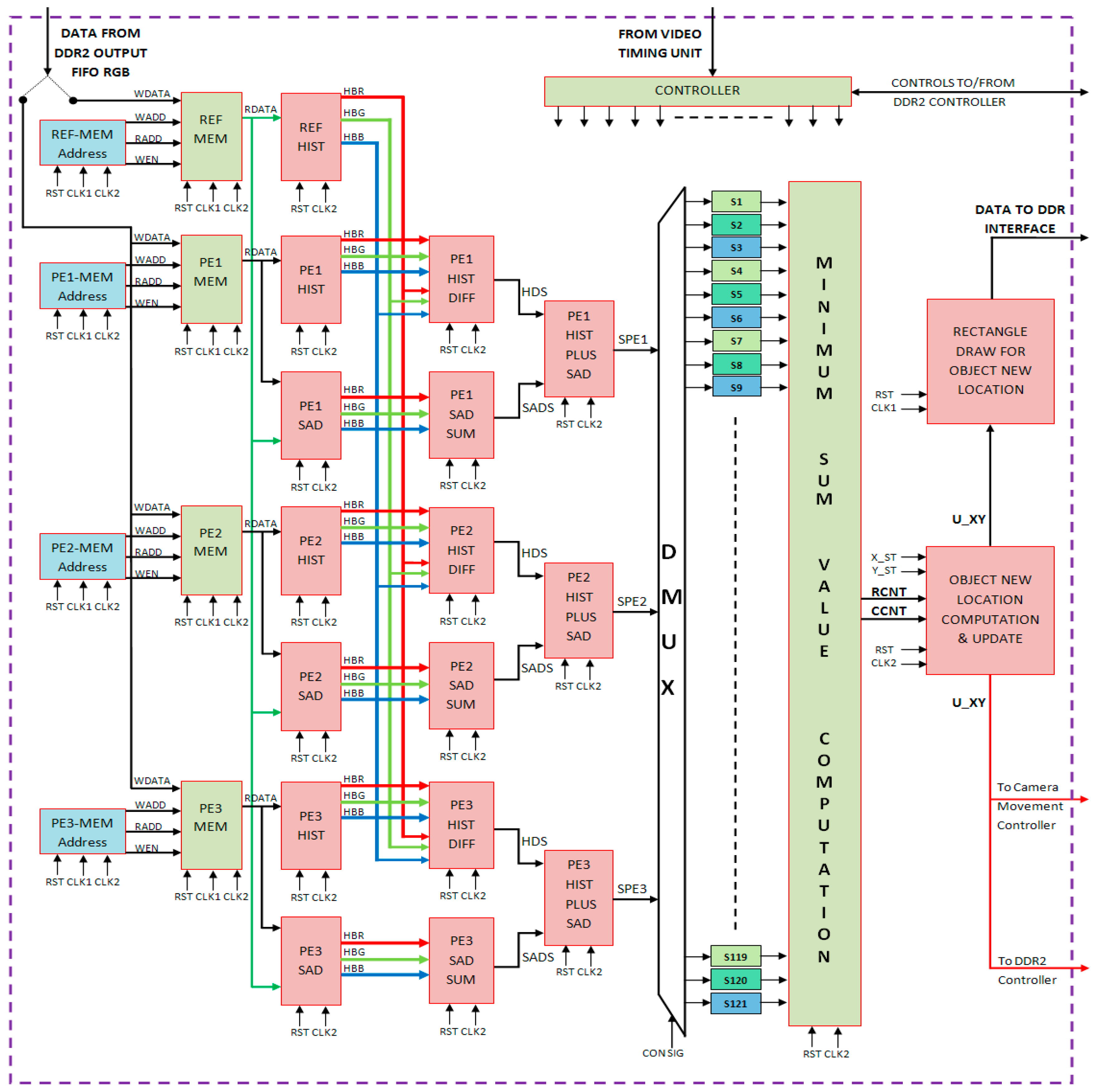

3.1. Proposed and Implemented Object Tracking Architecture

3.1.1. SAD Computation Module

3.1.2. Histogram Computation Module

3.1.3. Histogram Differences Sum Computation Module

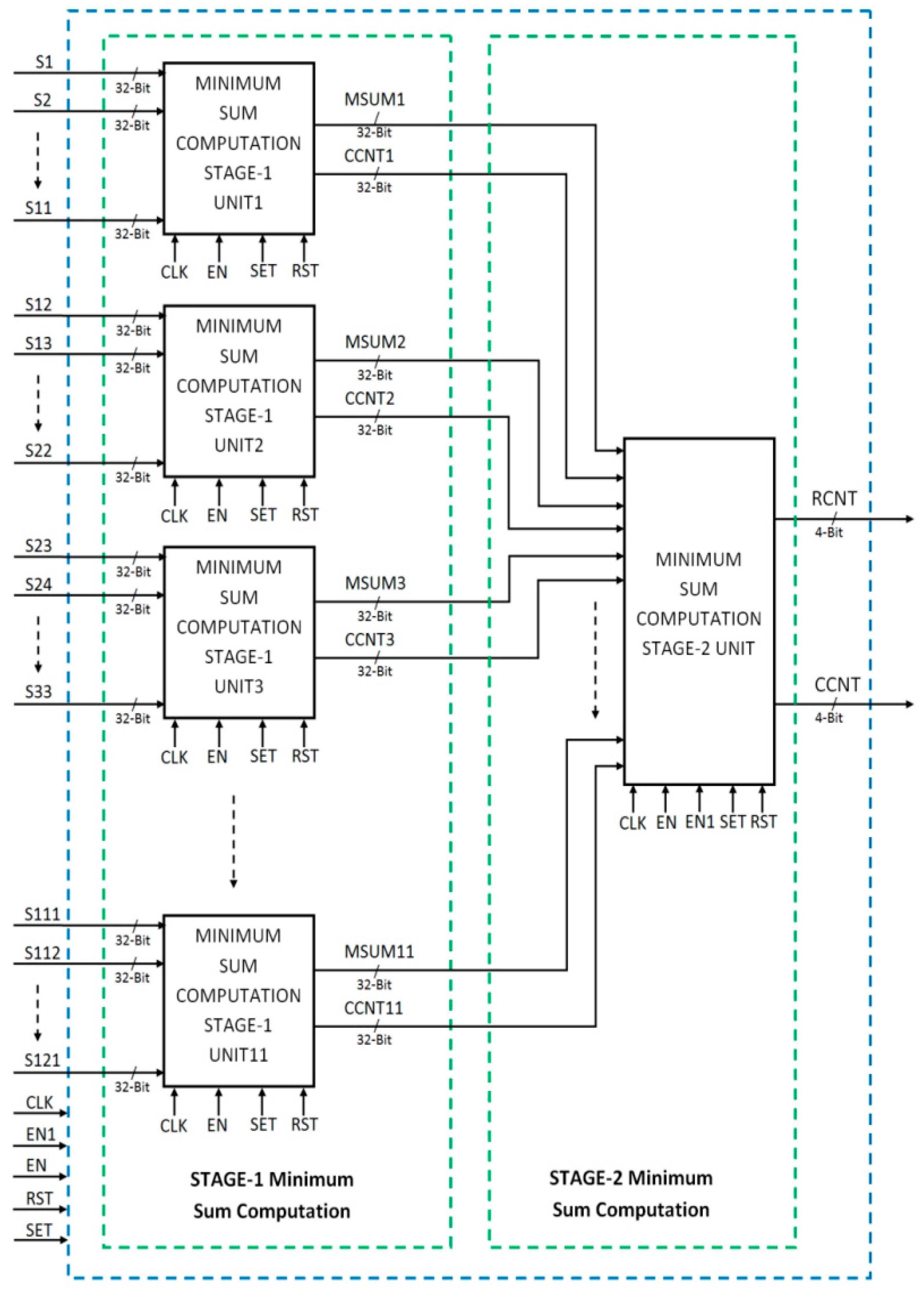

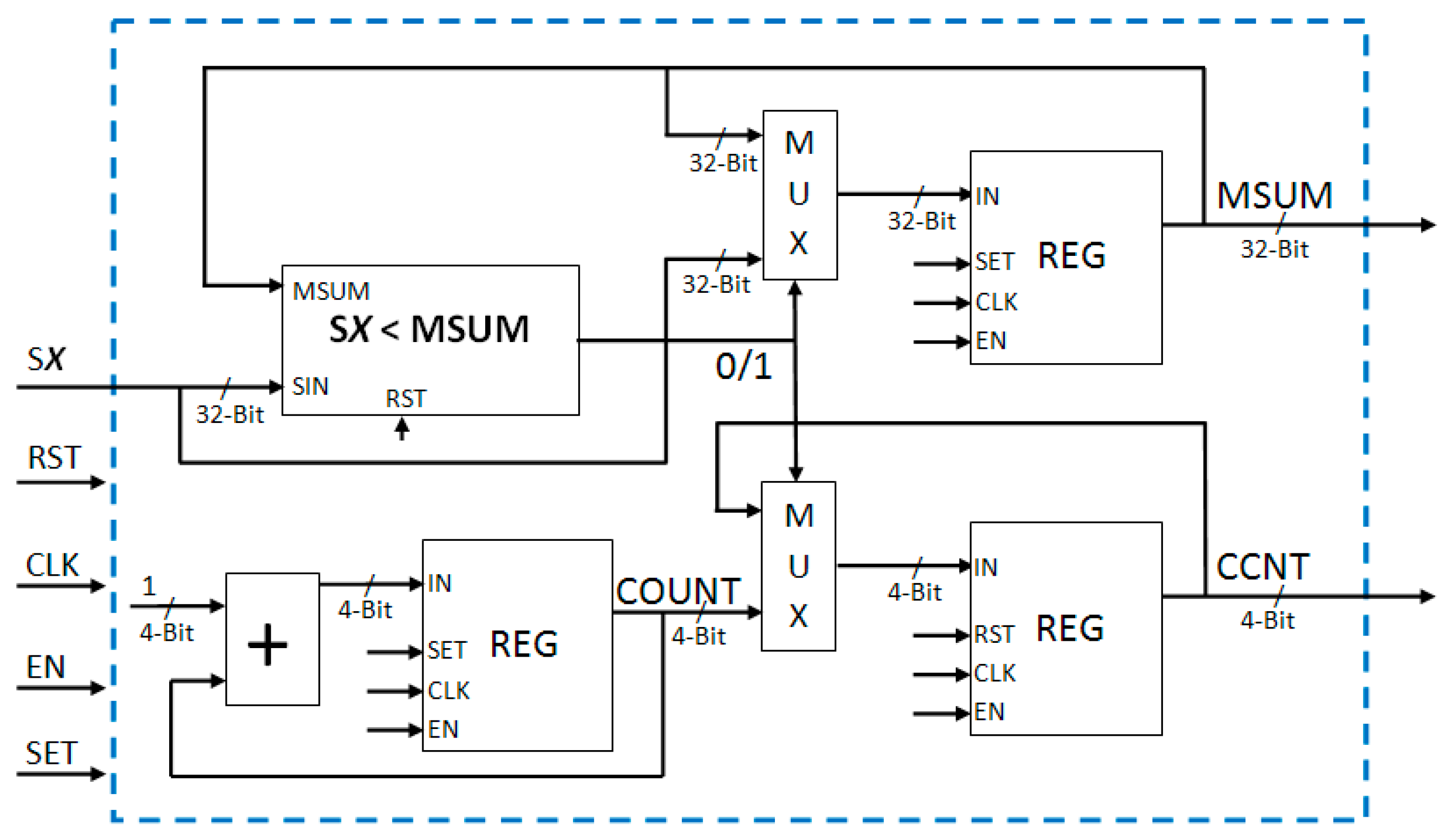

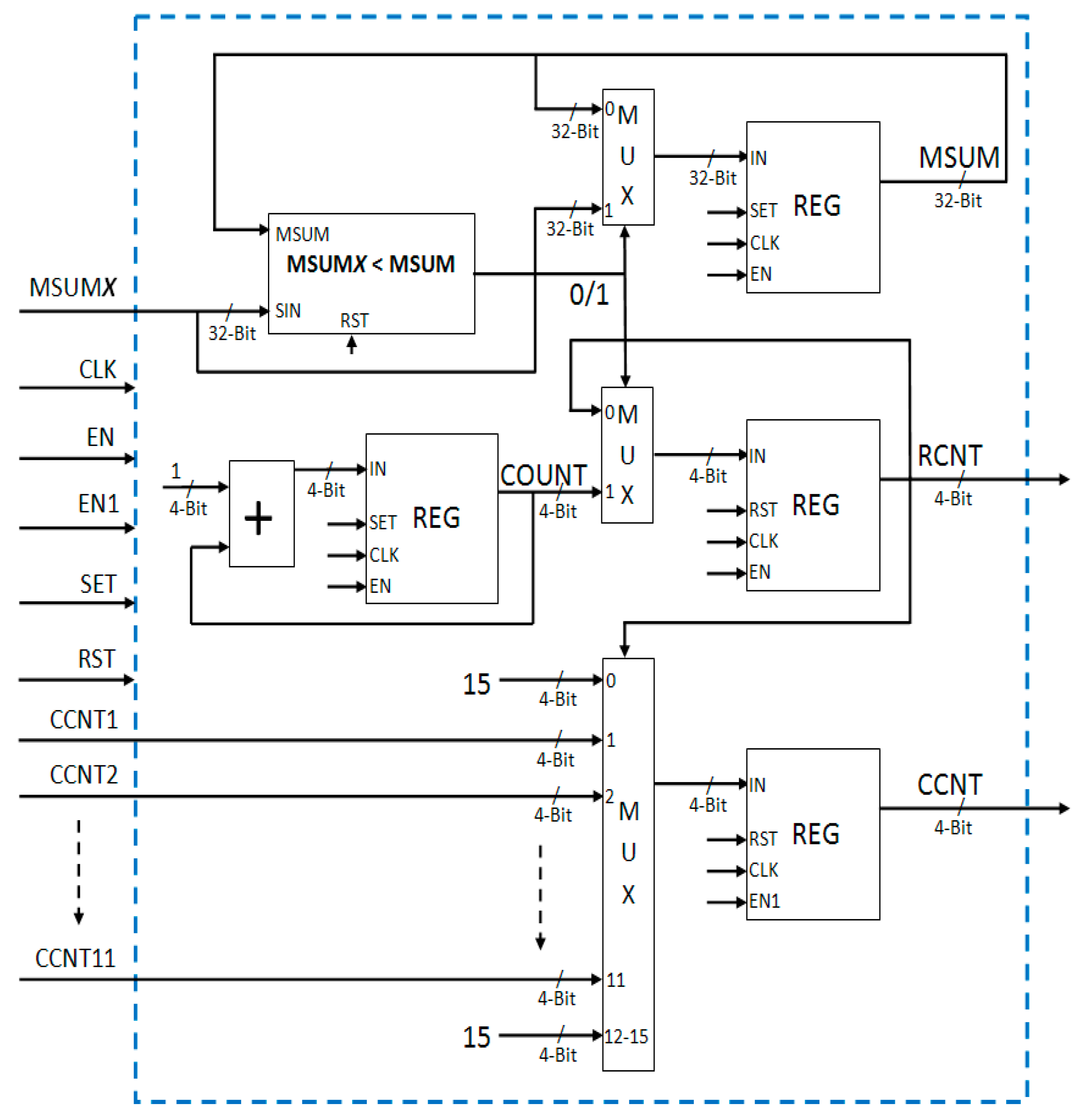

3.1.4. Minimum Sum Value Computation Module

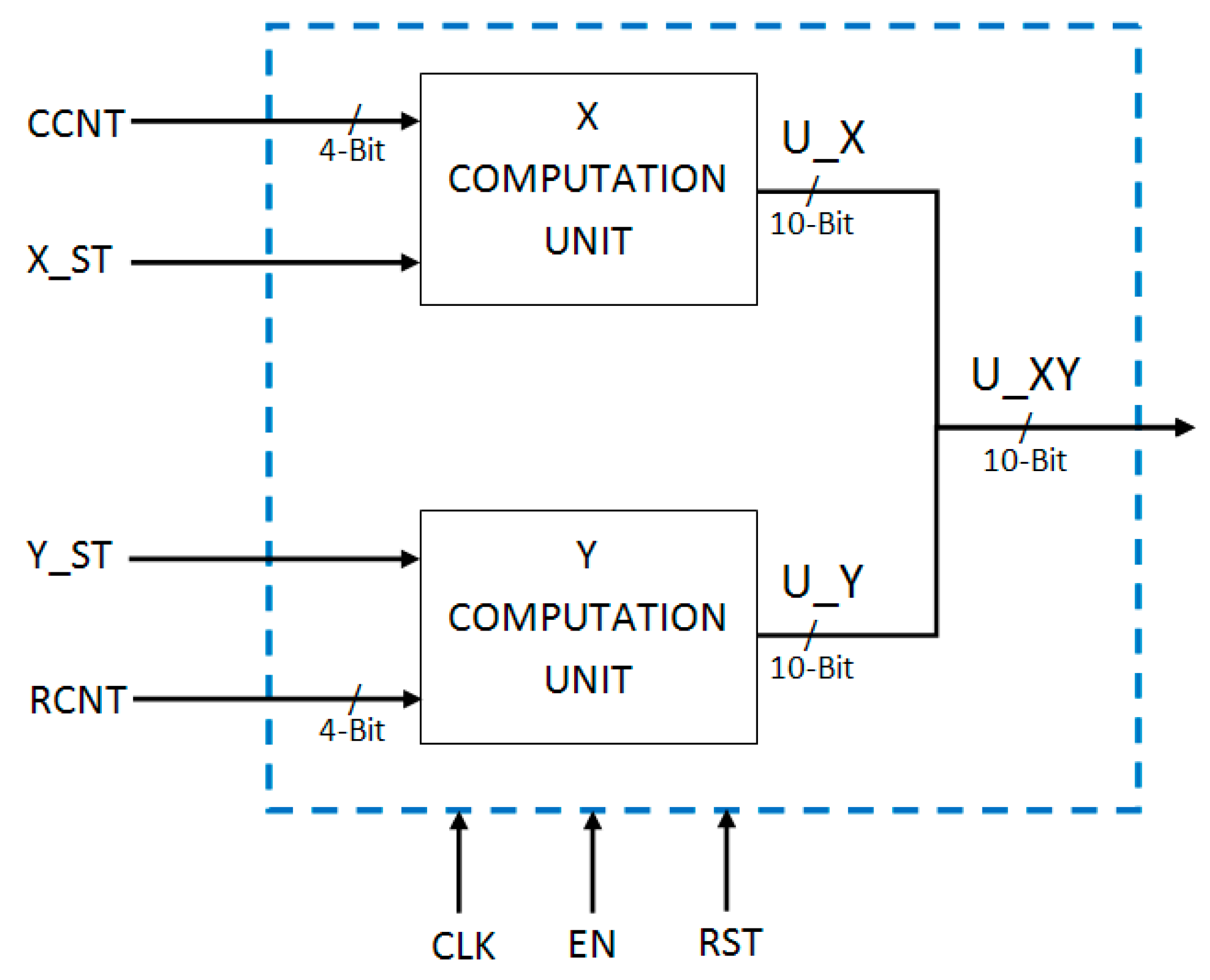

3.1.5. New Location Computation Module

4. Results and Discussions

4.1. Synthesis Results

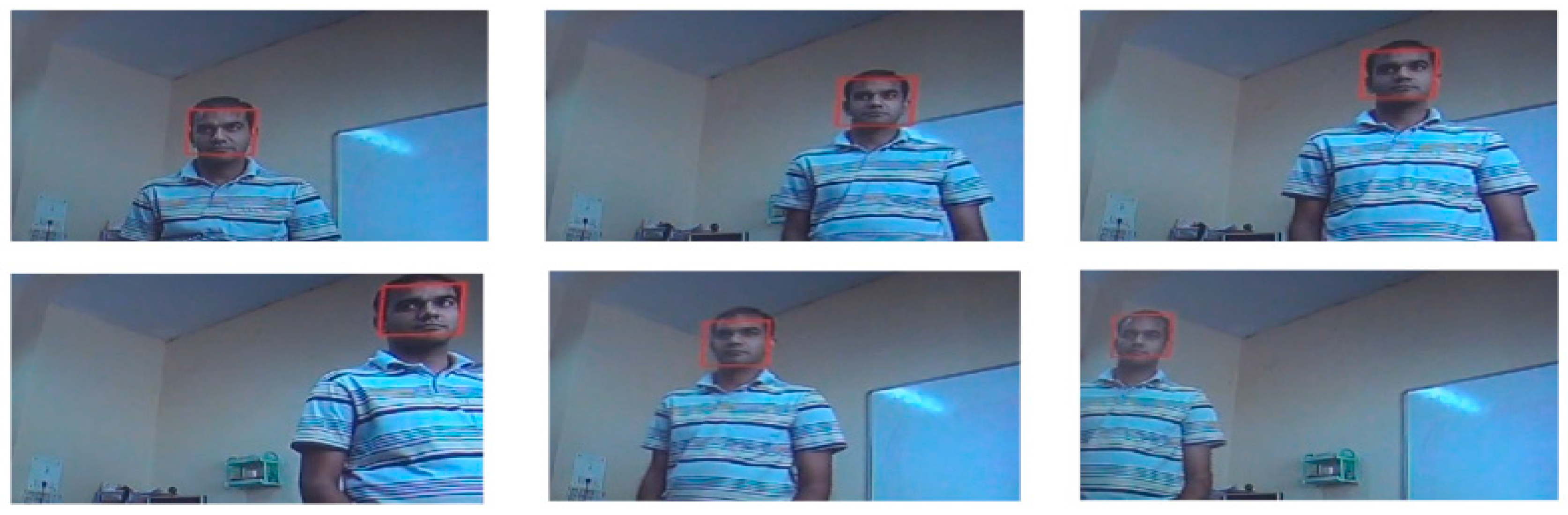

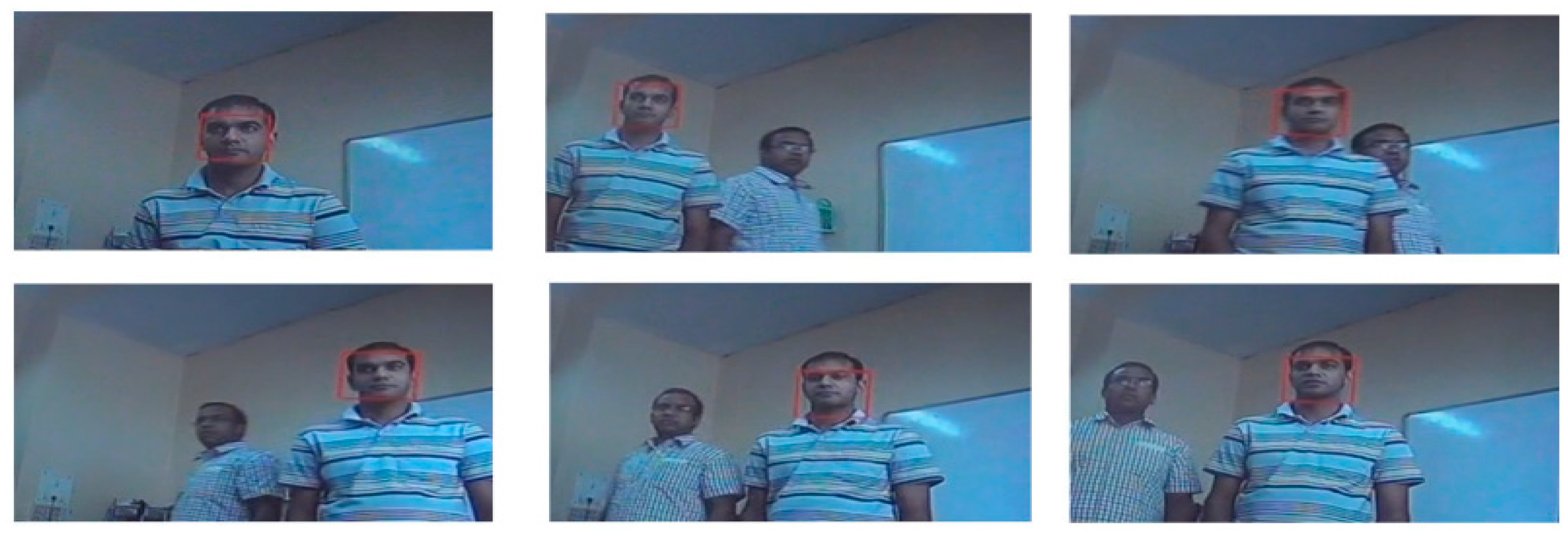

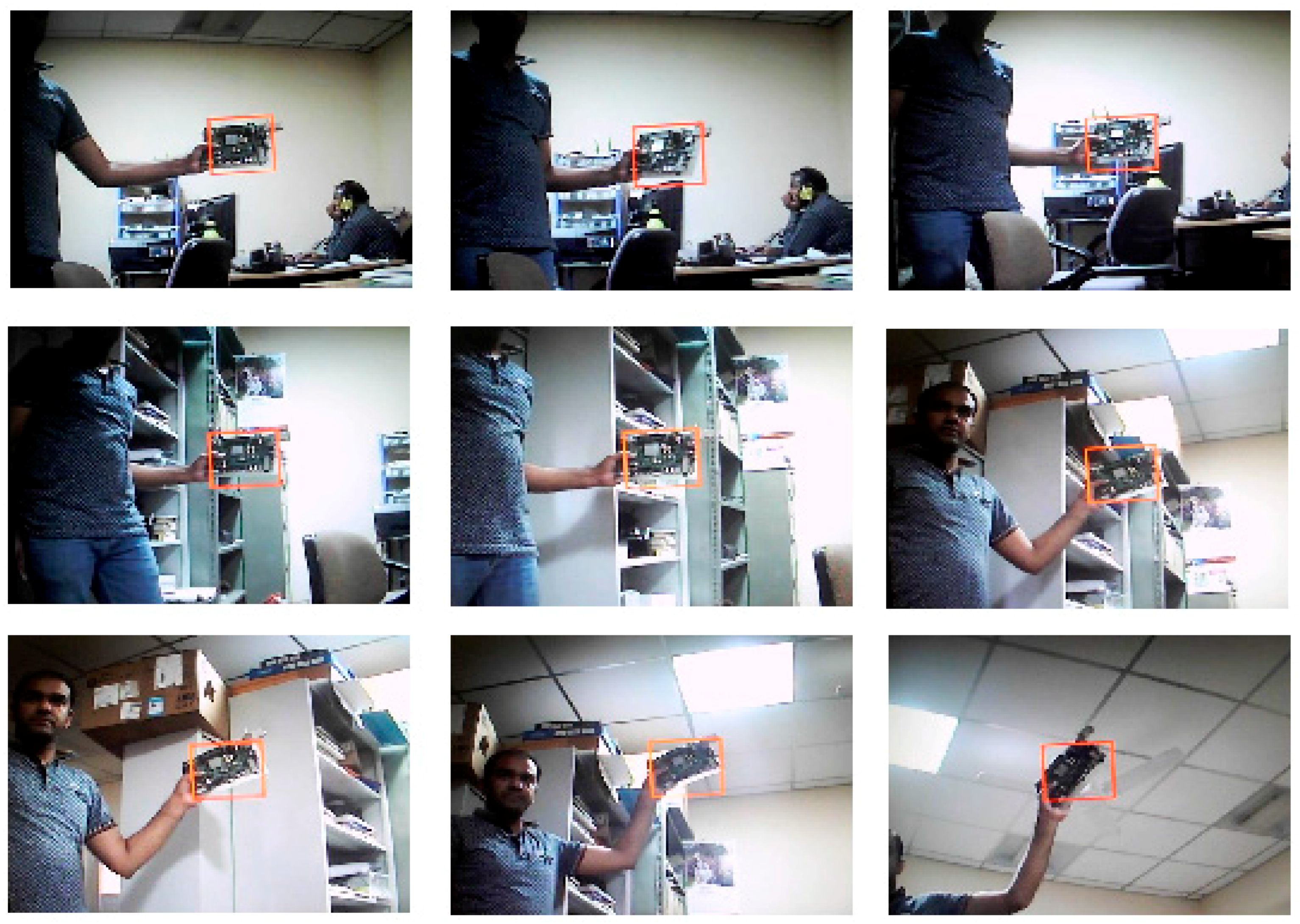

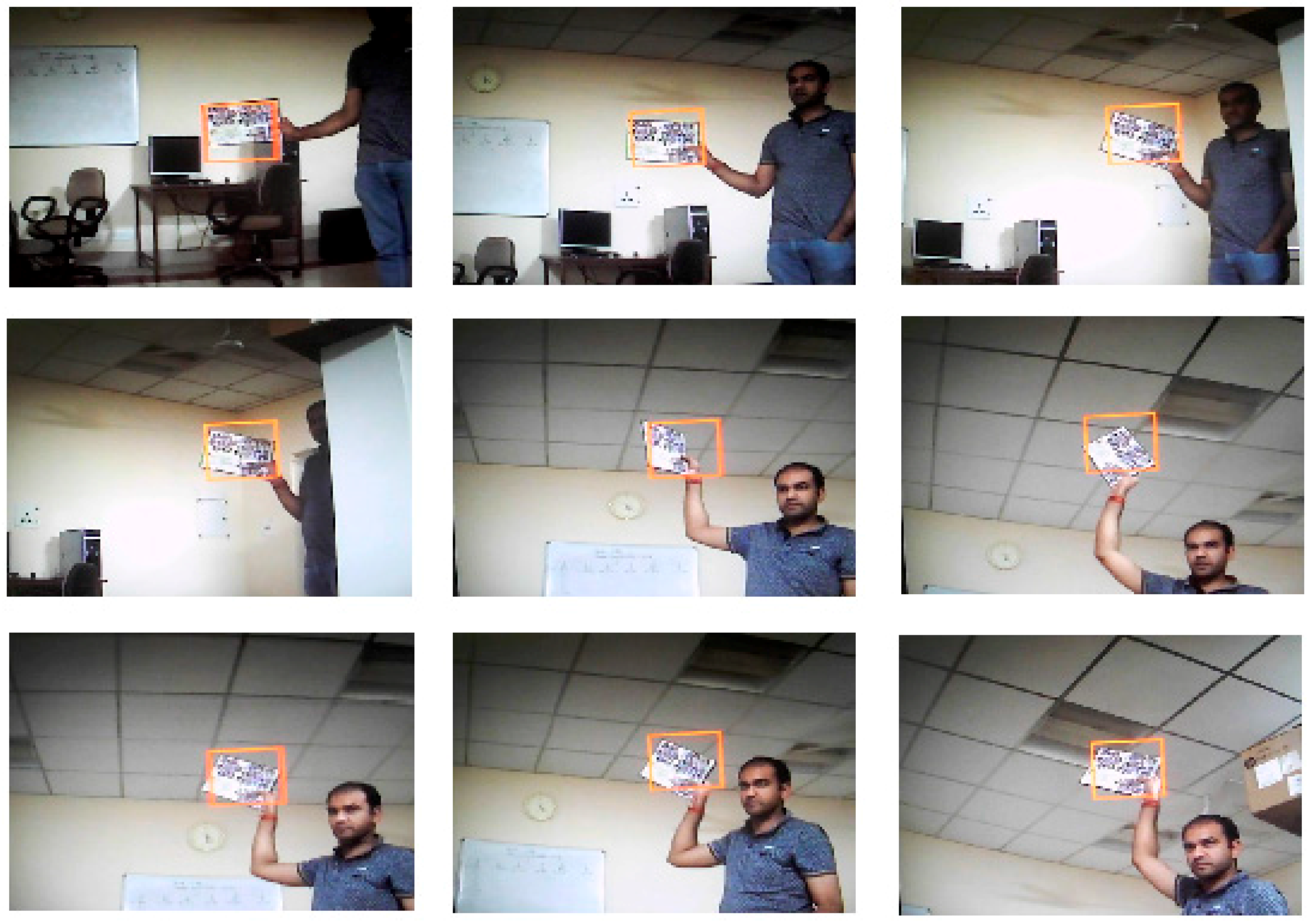

4.2. Object Tracking Results

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Yilmaz, A.; Javed, O.; Shah, M. Object Tracking: A Survey. ACM Comput. Surv. 2006, 38, 1–45.

2. Haritaoglu, I.; Harwood, D.; Davis, L.S. W4: Real-time Surveillance of People and Their Activities. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 809–830.

3. Chen, X.; Yang, J. Towards Monitoring Human Activities Using an Omni-directional Camera. In Proceedings of the Fourth IEEE International Conference on Multimodal Interfaces, Pittsburgh, PA, USA, 14–16 October 2002; pp. 423–428.

4. Wren, C.R.; Azarbayejani, A.; Darrell, T.; Pentland, A.P. Pfinder: Real-Time Tracking of the Human Body. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 780–785.

5. Intille, S.S.; Davis, J.W.; Bobick, A.E. Real-Time Closed-World Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Juan, Puerto Rico, 17–19 June 1997; pp. 697–703.

6. Coifman, B.; Beymer, D.; McLauchlan, P.; Malik, J. A Real-Time Computer Vision System for Vehicle Tracking and Traffic Surveillance. Transp. Res. Part C Emerg. Technol. 1998, 6, 271–288.

7. Tai, J.C.; Tseng, S.T.; Lin, C.P.; Song, K.T. Real-Time Image Tracking for Automatic Traffic Monitoring and Enforcement Applications. Image Vis. Comput. 2004, 22, 485–501.

8. Pavlovic, V.I.; Sharma, R.; Huang, T.S. Visual Interpretation of Hand Gestures for Human-Computer Interaction: A Review. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 677–695.

9. Paschalakis, S.; Bober, M. Real-Time Face Detection and Tracking for Mobile Videoconferencing. Real Time Imaging 2004, 10, 81–94.

10. Sikora, T. The MPEG-4 Video Standard Verification Model. IEEE Trans. Circuits Syst. Video Technol. 1997, 7, 19–31.

11. Eleftheriadis, A.; Jacquin, A. Automatic Face Location Detection and Tracking for Model-Assisted Coding of Video Teleconferencing Sequences at Low Bit-Rates. Signal Process. Image Commun. 1995, 7, 231–248.

12. Ahmed, J.; Shah, M.; Miller, A.; Harper, D.; Jafri, M.N. A Vision-Based System for a UGV to Handle a Road Intersection. In Proceedings of the 22nd National Conference on Artificial Intelligence, Vancouver, BC, Canada, 22–26 June 2007; pp. 1077–1082.

13. Li, X.; Hu, W.; Shen, C.; Zhang, Z.; Dick, A.; van den Hengel, A. A Survey of Appearance Models in Visual Object Tracking. ACM Trans. Intell. Syst. Technol. 2013, 4, 1–48.

14. Porikli, F. Achieving Real-time Object Detection and Tracking under Extreme Conditions. J. Real Time Image Process. 2006, 1, 33–40.

15. Doulamis, A.; Doulamis, N.; Ntalianis, K.; Kollias, S. An Efficient Fully Unsupervised Video Object Segmentation Scheme Using an Adaptive Neural-Network Classifier Architecture. IEEE Trans. Neural Netw. 2003, 14, 616–630.

16. Ahmed, J.; Jafri, M.N.; Ahmad, J.; Khan, M.I. Design and Implementation of a Neural Network for Real-Time Object Tracking. Int. J. Comput. Inf. Syst. Control Eng. 2007, 1, 1825–1828.

17. Ahmed, J.; Jafri, M.N.; Ahmad, J. Target Tracking in an Image Sequence Using Wavelet Features and a Neural Network. In Proceedings of the IEEE Region 10 TENCON 2005 Conference, Melbourne, Australia, 21–24 November 2005; pp. 1–6.

18. Stauffer, C.; Grimson, W. Learning Patterns of Activity using Real-time Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 747–757.

19. Kim, C.; Hwang, J.N. Fast and Automatic Video Object Segmentation and Tracking for Content-Based Applications. IEEE Trans. Circuits Syst. Video Technol. 2002, 12, 122–129.

20. Gevers, T. Robust Segmentation and Tracking of Colored Objects in Video. IEEE Trans. Circuits Syst. Video Technol. 2004, 14, 776–781.

21. Erdem, C.E. Video Object Segmentation and Tracking using Region-based Statistics. Signal Process. Image Commun. 2007, 22, 891–905.

22. Papoutsakis, K.E.; Argyros, A.A. Object Tracking and Segmentation in a Closed Loop. Adv. Vis. Comput. Lect. Notes Comput. Sci. 2010, 6453, 405–416.

23. Paddigari, V.; Kehtarnavaz, N. Real-time Predictive Zoom Tracking for Digital Still Cameras. J. Real Time Image Process. 2007, 2, 45–54.

24. Isard, M.; Blake, A. CONDENSATION—Conditional Density Propagation for Visual Tracking. Int. J. Comput. Vis. 1998, 29, 5–28.

25. Porikli, F.; Tuzel, O.; Meer, P. Covariance Tracking using Model Update Based on Lie Algebra. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; pp. 728–735.

26. Wong, S. Advanced Correlation Tracking of Objects in Cluttered Imagery. Proc. SPIE 2005, 5810, 1–12.

27. Yilmaz, A.; Li, X.; Shah, M. Contour-based Object Tracking with Occlusion Handling in Video Acquired using Mobile Cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 1531–1536.

28. Kass, M.; Witkin, A.; Terzopoulos, D. Snakes: Active Contour Models. Int. J. Comput. Vis. 1988, 1, 321–331.

29. Black, M.J.; Jepson, A.D. EigenTracking: Robust Matching and Tracking of Articulated Objects Using a View-based Representation. Int. J. Comput. Vis. 1998, 26, 63–84.

30. Li, C.M.; Li, Y.S.; Zhuang, Q.D.; Li, Q.M.; Wu, R.H.; Li, Y. Moving Object Segmentation and Tracking in Video. In Proceedings of the Fourth International Conference on Machine Learning and Cybernetics, Guangzhou, China, 18–21 August 2005; pp. 4957–4960.

31. Comaniciu, D.; Ramesh, V.; Meer, P. Kernel-Based Object Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 564–577.

32. Comaniciu, D.; Ramesh, V.; Meer, P. Real-time Tracking of Non-Rigid Objects Using Mean Shift. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Guangzhou, China, 18–21 August 2000; Volume 2, pp. 142–149.

33. Namboodiri, V.P.; Ghorawat, A.; Chaudhuri, S. Improved Kernel-Based Object Tracking Under Occluded Scenarios. Comput. Vis. Graph. Image Process. Lect. Notes Comput. Sci. 2006, 4338, 504–515.

34. Dargazany, A.; Soleimani, A.; Ahmadyfard, A. Multibandwidth Kernel-Based Object Tracking. Adv. Artif. Intell. 2010, 2010, 175603.

35. Arulampalam, M.S.; Maskell, S.; Gordon, N.; Clapp, T. A Tutorial on Particle Filters for Online Nonlinear/Non-Gaussian Bayesian Tracking. IEEE Trans. Signal Process. 2002, 50, 174–188.

36. Gustafsson, F.; Gunnarsson, F.; Bergman, N.; Forssell, U.; Jansson, J.; Karlsson, R.; Nordlund, P.J. Particle Filters for Positioning, Navigation, and Tracking. IEEE Trans. Signal Process. 2002, 50, 425–437.

37. Pérez, P.; Hue, C.; Vermaak, J.; Gangnet, M. Color-Based Probabilistic Tracking. Comput. Vis. Lect. Notes Comput. Sci. 2002, 2350, 661–675.

38. Gupta, N.; Mittal, P.; Patwardhan, K.S.; Roy, S.D.; Chaudhury, S.; Banerjee, S. On Line Predictive Appearance-Based Tracking. In Proceedings of the IEEE International Conference on Image Processing, Singapore, 24–27 October 2004; pp. 1041–1044.

39. Ho, J.; Lee, K.C.; Yang, M.H.; Kriegman, D. Visual Tracking Using Learned Linear Subspaces. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 27 June–2 July 2004; Volume 1, pp. 782–789.

40. Tripathi, S.; Chaudhury, S.; Roy, S.D. Online Improved Eigen Tracking. In Proceedings of the Seventh International Conference on Advances in Pattern Recognition, Kolkata, India, 4–6 February 2009; pp. 278–281.

41. Adam, A.; Rivlin, E.; Shimshoni, I. Robust Fragments-based Tracking Using the Integral Histogram. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–23 June 2006; pp. 798–805.

42. Porikli, F. Integral Histogram: A Fast Way to Extract Histograms in Cartesian Spaces. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 829–836.

43. Ahmed, J.; Ali, A.; Khan, A. Stabilized Active Camera Tracking System. J. Real Time Image Process. 2016, 11, 315–334.

44. Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-Learning-Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1409–1422.

45. Smeulders, A.W.M.; Cucchiara, R.; Dehghan, A. Visual Tracking: An Experimental Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1442–1468.

46. Johnston, C.T.; Gribbon, K.T.; Bailey, D.G. FPGA based Remote Object Tracking for Real-time Control. In Proceedings of the First International Conference on Sensing Technology, Palmerston North, New Zealand, 21–23 November 2005; pp. 66–71.

47. Yamaoka, K.; Morimoto, T.; Adachi, H.; Awane, K.; Koide, T.; Mattausch, H.J. Multi-Object Tracking VLSI Architecture Using Image-Scan based Region Growing and Feature Matching. In Proceedings of the IEEE International Symposium on Circuits and Systems, San Diego, CA, USA, 20–25 June 2006; pp. 5575–5578.

48. Liu, S.; Papakonstantinou, A.; Wang, H.; Chen, D. Real-Time Object Tracking System on FPGAs. In Proceedings of the Symposium on Application Accelerators in High-Performance Computing, Knoxville, TN, USA, 19–20 July 2011; pp. 1–7.

49. Kristensen, F.; Hedberg, H.; Jiang, H.; Nilsson, P.; Öwall, V. An Embedded Real-Time Surveillance System: Implementation and Evaluation. J. Signal Process. Syst. 2008, 52, 75–94.

50. Chan, S.C.; Zhang, S.; Wu, J.F.; Tan, H.J.; Ni, J.Q.; Hung, Y.S. On the Hardware/Software Design and Implementation of a High Definition Multiview Video Surveillance System. IEEE J. Emerg. Sel. Top. Circuits Syst. 2013, 3, 248–262.

51. Xu, J.; Dou, Y.; Li, J.; Zhou, X.; Dou, Q. FPGA Accelerating Algorithms of Active Shape Model in People Tracking Applications. In Proceedings of the 10th Euromicro Conference on Digital System Design Architectures, Methods and Tools, Lubeck, Germany, 29–31 August 2007; pp. 432–435.

52. Shahzad, M.; Zahid, S. Image Coprocessor: A Real-time Approach towards Object Tracking. In Proceedings of the International Conference on Digital Image Processing, Bangkok, Thailand, 7–9 March 2009; pp. 220–224.

53. Raju, K.S.; Baruah, G.; Rajesham, M.; Phukan, P.; Pandey, M. Implementation of Moving Object Tracking using EDK. Int. J. Comput. Sci. Issues 2012, 9, 43–50.

54. Raju, K.S.; Borgohain, D.; Pandey, M. A Hardware Implementation to Compute Displacement of Moving Object in a Real Time Video. Int. J. Comput. Appl. 2013, 69, 41–44.

55. McErlean, M. An FPGA Implementation of Hierarchical Motion Estimation for Embedded Object Tracking. In Proceedings of the IEEE International Symposium on Signal Processing and Information Technology, Vancouver, BC, Canada, 12–14 December 2006; pp. 242–247.

56. Hsu, Y.P.; Miao, H.C.; Tsai, C.C. FPGA Implementation of a Real-Time Image Tracking System. In Proceedings of the SICE Annual Conference, Taipei, Taiwan, 18–21 August 2010; pp. 2878–2884.

57. Elkhatib, L.N.; Hussin, F.A.; Xia, L.; Sebastian, P. An Optimal Design of Moving Object Tracking Algorithm on FPGA. In Proceedings of the International Conference on Intelligent and Advanced Systems, Kuala Lumpur, Malaysia, 12–14 June 2012; pp. 745–749.

58. Wong, S.; Collins, J. A Proposed FPGA Architecture for Real-Time Object Tracking using Commodity Sensors. In Proceedings of the 19th International Conference on Mechatronics and Machine Vision in Practice, Auckland, New Zealand, 28–30 November 2012; pp. 156–161.

59. Popescu, D.; Patarniche, D. FPGA Implementation of Video Processing-Based Algorithm for Object Tracking. Univ. Politeh. Buchar. Sci. Bull. Ser. C 2010, 72, 121–130.

60. Lu, X.; Ren, D.; Yu, S. FPGA-based Real-Time Object Tracking for Mobile Robot. In Proceedings of the International Conference on Audio Language and Image Processing, Shanghai, China, 23–25 November 2010; pp. 1657–1662.

61. El-Halym, H.A.A.; Mahmoud, I.I.; Habib, S.E.D. Efficient Hardware Architecture for Particle Filter Based Object Tracking. In Proceedings of the 17th International Conference on Image Processing, Rio de Janeiro, Brazil, 17–19 June 2010; pp. 4497–4500.

62. Agrawal, S.; Engineer, P.; Velmurugan, R.; Patkar, S. FPGA Implementation of Particle Filter based Object Tracking in Video. In Proceedings of the International Symposium on Electronic System Design, Kolkata, India, 19–22 December 2012; pp. 82–86.

63. Cho, J.U.; Jin, S.H.; Pham, X.D.; Kim, D.; Jeon, J.W. A Real-Time Color Feature Tracking System Using Color Histograms. In Proceedings of the International Conference on Control, Automation and Systems, Seoul, Korea, 17–20 October 2007; pp. 1163–1167.

64. Cho, J.U.; Jin, S.H.; Pham, X.D.; Kim, D.; Jeon, J.W. FPGA-Based Real-Time Visual Tracking System Using Adaptive Color Histograms. In Proceedings of the IEEE International Conference on Robotics and Biomimetics, Bangkok, Thailand, 22–25 February 2007; pp. 172–177.

65. Nummiaro, K.; Koller-Meier, E.; Van Gool, L. A Color-based Particle Filter. Image Vis. Comput. 2003, 21, 99–110.

66. Kang, S.; Paik, J.K.; Koschan, A.; Abidi, B.R.; Abidi, M.A. Real-time Video Tracking using PTZ Cameras. Proc. SPIE 2003, 5132, 103–111.

67. Dinh, T.; Yu, Q.; Medioni, G. Real Time Tracking using an Active Pan-Tilt-Zoom Network Camera. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 3786–3793.

68. Varcheie, P.D.Z.; Bilodeau, G.A. Active People Tracking by a PTZ Camera in IP Surveillance System. In Proceedings of the IEEE International Workshop on Robotic and Sensors Environments, Lecco, Italy, 6–7 November 2009; pp. 98–103.

69. Al Haj, M.; Bagdanov, A.D.; Gonzalez, J.; Roca, F.X. Reactive Object Tracking with a Single PTZ Camera. In Proceedings of the International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 1690–1693.

70. Lee, S.G.; Batkhishig, R. Implementation of a Real-Time Image Object Tracking System for PTZ Cameras. Converg. Hybrid Inf. Technol. Commun. Comput. Inf. Sci. 2011, 206, 121–128.

| Resources | Camera Interface | DVI Display Interface | Camera Movement Controller | DDR2 External Memory Interface | Proposed and Designed Object Tracking Architecture |
|---|---|---|---|---|---|
| Slice Registers | 391 | 79 | 162 | 3609 | 23,174 |
| Slice LUTs | 434 | 101 | 487 | 2568 | 34,157 |
| Route-thrus | 42 | 39 | 48 | 113 | 9033 |
| Occupied Slices | 199 | 33 | 160 | 1616 | 10,426 |
| BRAMs (36 Kb) | 3 | 0 | 0 | 16 | 84 |
| Memory (Kb) | 108 | 0 | 0 | 576 | 3024 |
| DSP Slices | 3 | 0 | 0 | 0 | 0 |
| IOs | 16 | 22 | 5 | 255 | 292 |

| Resources | Complete System (Object Tracking Architecture + Four Interfaces) | Total Available Resources | Percentage of Utilization |
|---|---|---|---|
| Slice Registers | 27,396 | 81,920 | 33.44% |
| Slice LUTs | 37,715 | 81,920 | 46.04% |
| Route-thrus | 9265 | 163,840 | 5.65% |
| Occupied Slices | 12,414 | 20,840 | 59.56% |
| BRAMs (36 Kb) | 103 | 298 | 34.56% |
| Memory (Kb) | 3708 | 10,728 | 34.56% |
| DSP Slices | 3 | 320 | 0.94% |

| Target FPGA Device | Implementation | Video Resolution | Frame Rate (fps) |
|---|---|---|---|
| Virtex5 (xc5vfx130t-2ff1738) | Our Implementation | PAL (720 × 576) | 303 |
| Virtex5 (xc5vlx110t) | Our Implementation | PAL (720 × 576) | 303 |
| Virtex5 (xc5vlx110t) | [62] | PAL (720 × 576) | 197 |
| Virtex4 (xc4vlx200) | Our Implementation | PAL (720 × 576) | 200 |
| Virtex4 (xc4vlx200) | [63] | VGA (640 × 480) | 81 |
| Virtex4 (xc4vlx200) | [64] | VGA (640 × 480) | 81 |
| Virtex-IIPro (xc2vp30) | [49] | QVGA (320 × 240) | 25 |
| Spartan3 (xc3sd100a) | [59] | PAL (720 × 576) | 30 |

| Number of Particles | Tracked Object Size | Number of Histogram Bins in Each R, G and B Color Channel | Video Resolution | Maximum Clock Frequency | Frame Rate (fps) |
|---|---|---|---|---|---|
| 121 | 100 × 100 Pixels | 26 Bins | 720 × 576 | 125.8 MHz | 303 |
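The frame rate in the table above is consistent with a pipeline that processes one pixel per clock cycle: 125.8 MHz divided by the 414,720 pixels of a PAL frame gives roughly 303 frames per second. The sketch below makes that assumption explicit (one pixel per cycle, blanking intervals ignored); it is an arithmetic check, not a description of the authors' timing analysis, and the function name is illustrative.

```python
# Relates the reported maximum clock frequency to the reported frame rate.
# Assumption: one pixel is consumed per clock cycle and blanking is ignored.


def max_frame_rate(clock_hz: float, width: int, height: int) -> float:
    """Frames per second if exactly one pixel is processed per clock cycle."""
    return clock_hz / (width * height)


cycles_per_frame = 720 * 576               # 414,720 pixel clocks per PAL frame
fps = max_frame_rate(125.8e6, 720, 576)    # about 303.3 fps
```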

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Singh, S.; Shekhar, C.; Vohra, A. Real-Time FPGA-Based Object Tracker with Automatic Pan-Tilt Features for Smart Video Surveillance Systems. J. Imaging 2017, 3, 18. https://doi.org/10.3390/jimaging3020018
