Machine-Vision Systems Selection for Agricultural Vehicles: A Guide

Abstract

1. Introduction

- Trade-off between vision system specifications and performance. The operating spectral ranges must be identified (multispectral or hyperspectral, covering the visible, infrared, thermal or ultraviolet bands). The sensor's spectral and spatial resolutions must also be considered, together with its intrinsic parameters.

- Definition of the region of interest and panoramic view. Apart from the spatial resolution mentioned above, the optical system plays an important role in acquiring images of sufficient quality, which depends on the lens aperture. Lens distortions and aberrations must also be determined, as must the field of view in conjunction with the sensor resolution.

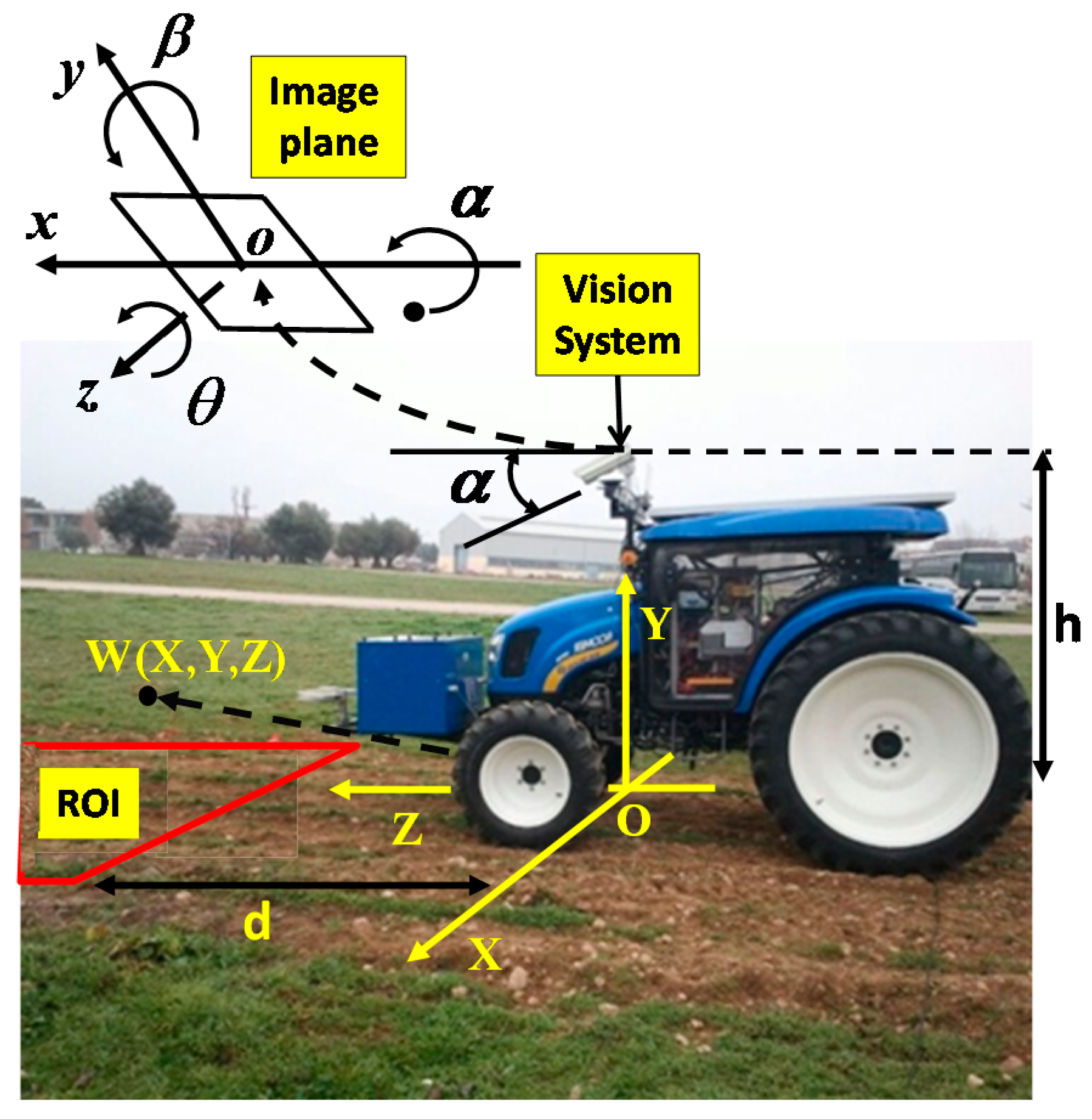

- Vision system arrangement with specific poses onboard the vehicles (ground or aerial). All issues concerning this point relate to the location of the vision system: height above the ground, distance to the working area or region of interest, and rotation angles (roll, yaw and pitch). The extrinsic parameters are involved here.

2. Spectral-Band Selection

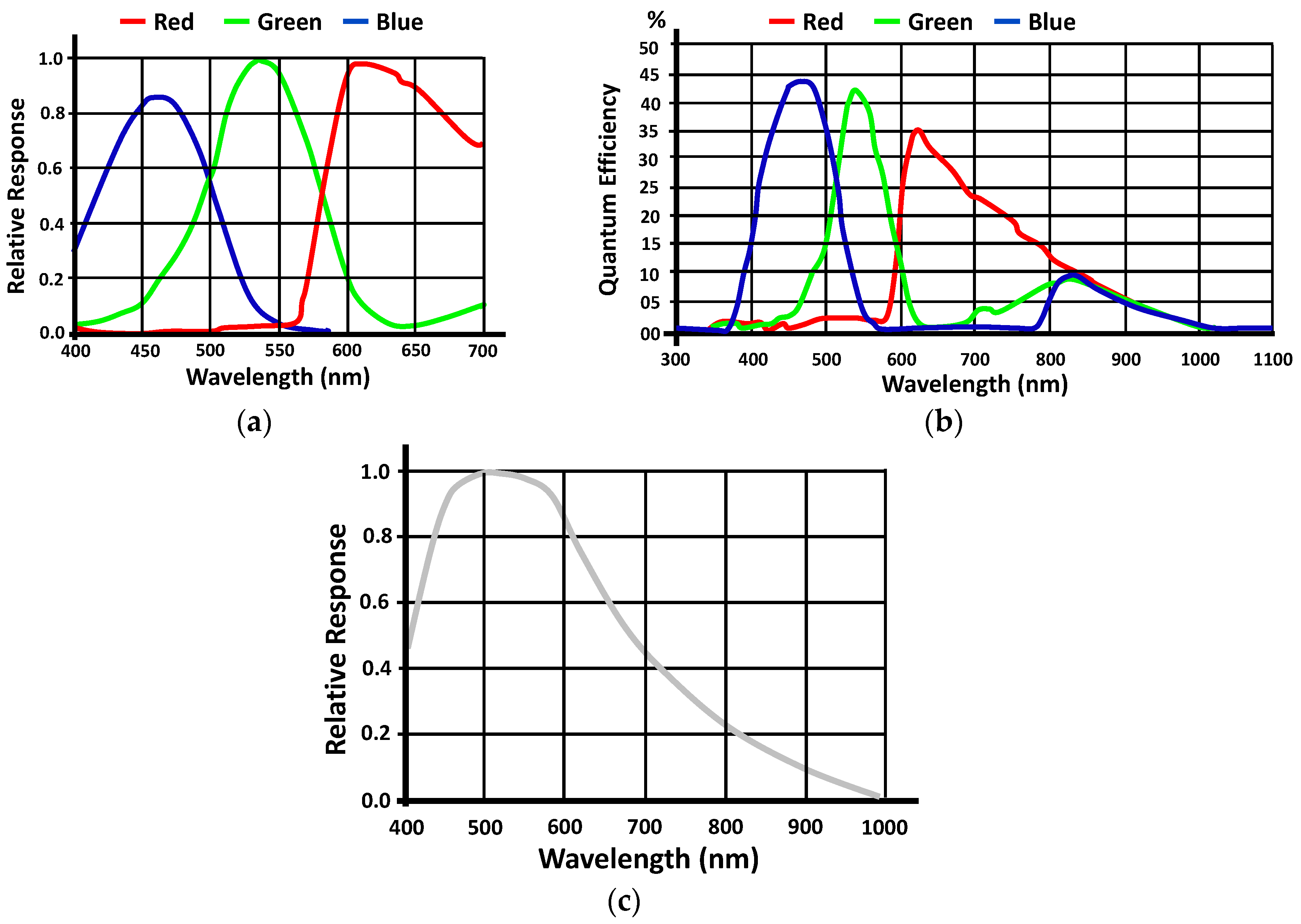

2.1. Visible Spectrum

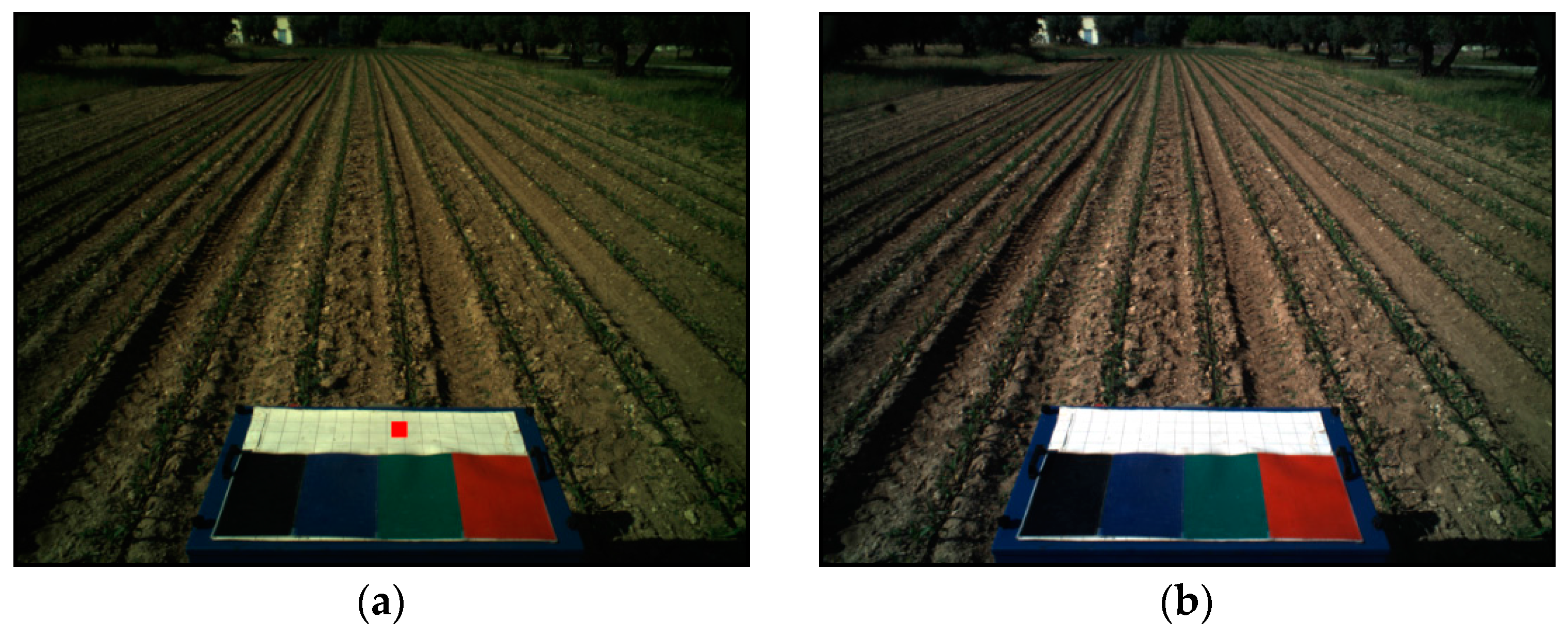

2.2. Spectral Corrections: Vignetting Effect and White Balance

2.3. Infrared Spectrum

2.4. Illustrative Examples and Summary

- Broad spectral dynamic range with adjustable parameters to control the amount of charge received by the sensor, given the adverse environmental conditions that cause highly variable illumination in outdoor environments. Specific considerations apply depending on the vehicle (ground or aerial) on which the machine vision system is installed. Of particular relevance is the effect known as bidirectional reflectance, which appears on sunny days due to angular variations and may become critical in aerial vehicles [45].

- Ability to produce images with the highest possible spectral quality, avoiding or removing undesired effects such as vignetting.

- A system robust enough to cope with adverse situations and with responses as deterministic as possible.

3. Imaging Sensors and Optical Systems Selection

3.1. Imaging Sensors

3.2. Optical Systems

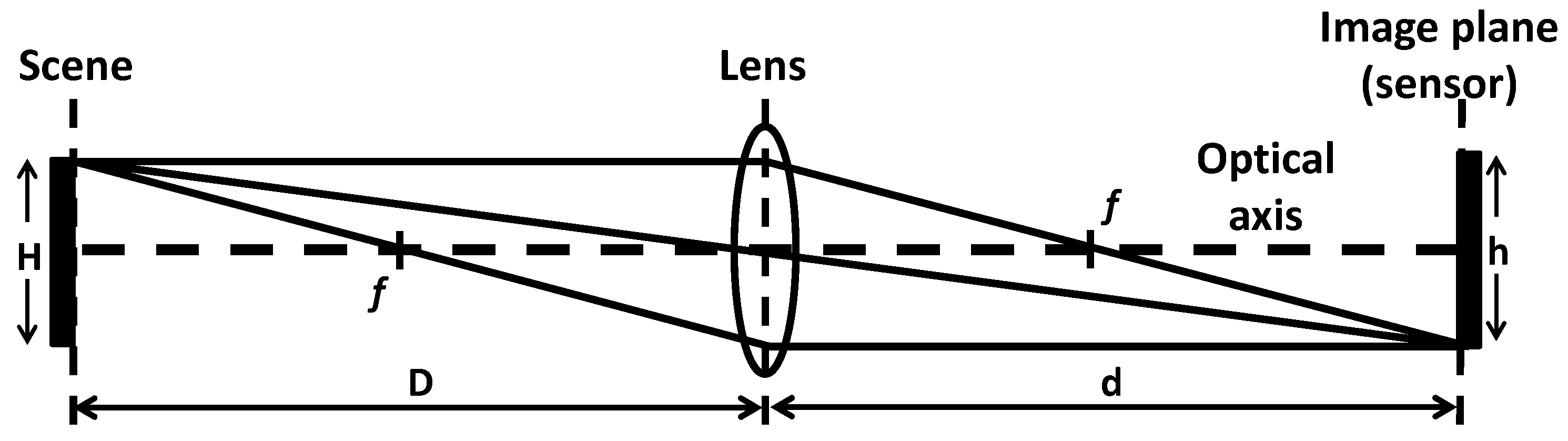

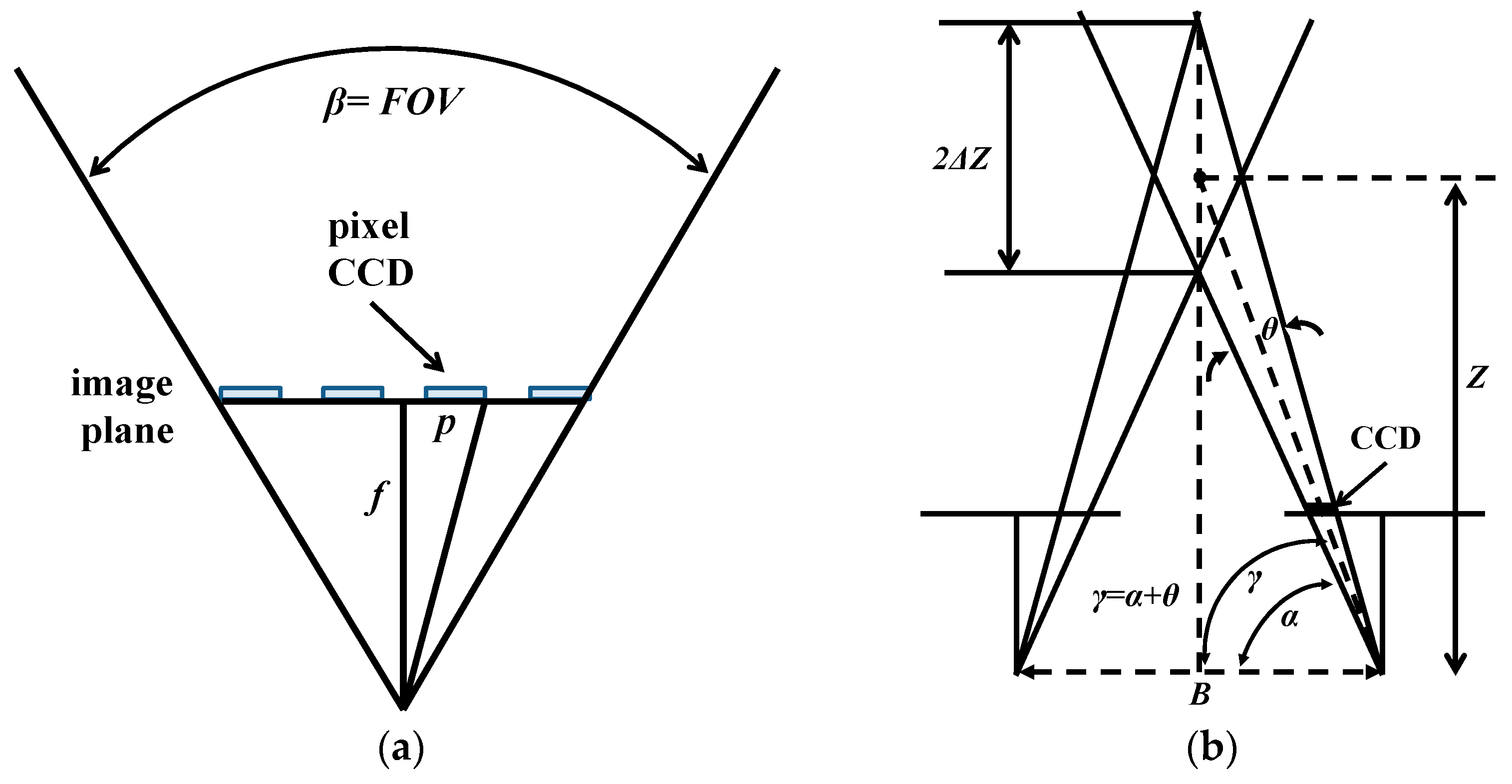

- Set of lenses, which is the main part of the optical system. Manufacturers provide information about the focal length (f) and related parameters. Some systems include a manual focus setting or autofocus to image objects with the appropriate sharpness. Systems with variable focal length also exist, based on motorized equipment with external control. The focal length is a critical parameter in agricultural applications and is considered later for the geometric arrangement of the machine vision system.

- Format. This specifies the area of the sensor to be illuminated, which should be compatible with the type of imaging sensor specified above. An optical system that does not illuminate the full sensor area creates severe image distortions. Figure 6 displays a sensor of type 2/3″ and a lens of 1/2″, i.e., the full sensor area is greater than the area illuminated by the lens.

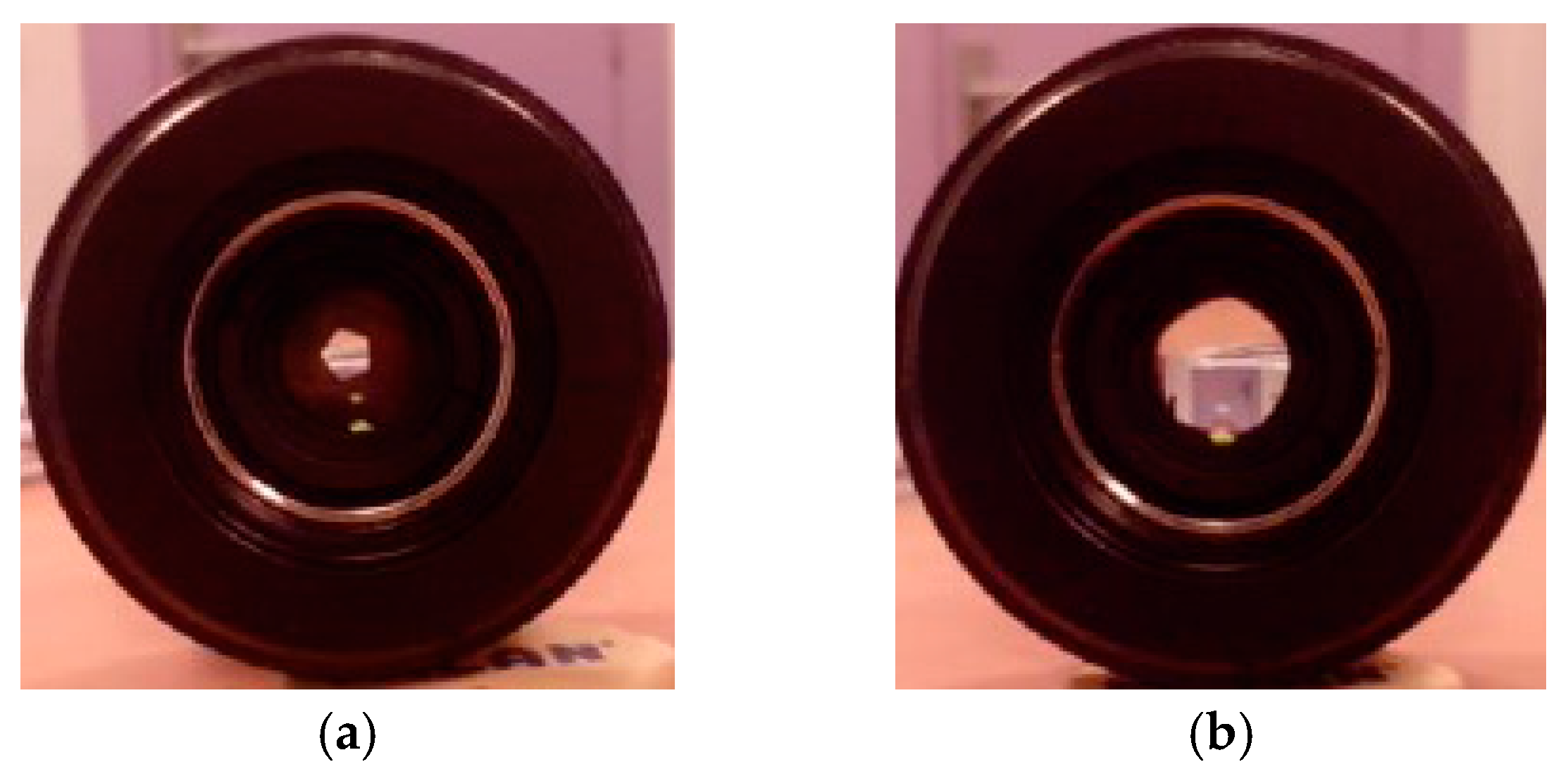

- Iris diaphragm, automatic or manual. This consists of a structure with movable blades producing an aperture that controls the area through which light passes on its way to the sensor. Manufacturers specify it in terms of a value called the f-stop or f-number, which is the ratio of the focal length f to the diameter A of the aperture, i.e., $N = f/A$. The aperture setting is defined in steps or f-numbers, where each step halves the light intensity with respect to the previous stop and consequently reduces the aperture diameter by a factor of $2^{-1/2}$. Figure 7 displays a lens aperture according to the f-number: the aperture is minimum in (a), with f-number 16, and maximum in (b), with f-number 1.9. Depending on the system, the scale varies and may be expressed in fractional stops. To compute the scaled f-numbers for step indices N = 0, 1, 2, … (here N denotes the step index) with scale s, the following sequence is normally used: $2^{0.5\,N s}$. The scales are defined as full stop (s = 1), half stop (s = 1/2), third stop (s = 1/3) and so on. As an illustrative example, if s = 1/3 the scaled numbers are: 1, 1.1, 1.3, …, 2.5, …, 16, …

- Holders and interfaces. Filter holders are specified so that the required accessories can be fitted. The type of mount (C/F) is also provided by manufacturers.

- Relative illumination and lens distortion. Relative illumination and distortion (barrel and pincushion) are provided as a function of focal distances.

- Transmittance (T): the fraction of incident light power transmitted through the optical system. Typical lens transmittances vary from 60% to 90%. A T-stop is defined as the f-number divided by the square root of the lens transmittance. If the T-stop is N, the image has the same intensity as that of an ideal lens with 100% transmittance and f-number N. The relative spectral transmittance with respect to wavelength is also usually provided. Special care should be taken to ensure proper transmission of the desired wavelengths toward the sensor.

- Optical filters. Used to attenuate or enhance the intensity of specific spectral bands, they transmit or reflect specific wavelengths. To achieve maximum efficiency, their parameters should be considered, including central wavelength, bandwidth, blocking range, optical density and cut-on/cut-off wavelength [52]. A common manufacturing technique consists of depositing alternating layers of materials with high and low refractive indices. An example of a filter is the Schneider UV/IR 486 cut-off filter [32].
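The aperture relations in the list above (the f-number N = f/A, the fractional-stop sequence, and the T-stop) can be checked numerically. The following is a minimal sketch in Python; the function names are hypothetical and chosen only for illustration, and the 10 mm focal length and 80% transmittance in the usage lines are assumed example values.

```python
import math


def f_number(step, scale=1.0):
    """f-number at a step index for a given scale s (1 = full stop, 1/3 = third stop).

    Implements the sequence 2 ** (0.5 * step * scale) quoted in the text.
    """
    return 2.0 ** (0.5 * step * scale)


def aperture_diameter(focal_length_mm, n):
    """Aperture diameter A in mm, from the definition N = f / A."""
    if n <= 0:
        raise ValueError("f-number must be positive")
    return focal_length_mm / n


def t_stop(n, transmittance):
    """T-stop: f-number divided by the square root of the transmittance (0 < T <= 1)."""
    if not 0.0 < transmittance <= 1.0:
        raise ValueError("transmittance must be in (0, 1]")
    return n / math.sqrt(transmittance)


# Third-stop scale: the first few rounded values of the sequence.
print([round(f_number(k, 1.0 / 3.0), 1) for k in range(9)])

# Assumed example: a 10 mm lens at f/1.9 with 80% transmittance.
print(round(aperture_diameter(10.0, 1.9), 2), round(t_stop(1.9, 0.8), 2))
```

One full stop multiplies the f-number by the square root of 2, so the aperture diameter shrinks by the same factor and the admitted light is halved, which is the behaviour described for the iris diaphragm.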

3.3. Focal Length Selection

4. Geometric Visual System Attitude

4.1. Initial Considerations

- Crop row detection: sometimes a fixed number of crop rows must be detected for crop and weed discrimination in site-specific treatments or for precise guidance [5,7,10,11,12,13,14,15,16]. Depending on the number of crop rows to be detected or followed during guidance, the vision system must be designed so that the required number of rows, including the inter-row spaces, can be imaged with sufficient resolution.

- Plant leaves, weed patches, fruits, diseases: different applications have been developed based on the sizes of structures. In [54], leaf morphology is used for weed and crop discrimination by applying neural networks to extracted features. Apples are identified and counted in their context on the trees in [55]. Fungal and powdery mildew diseases are identified in [56,57]. The machine vision system must provide sufficient information, and the structures (leaves, patches, fruits) must be imaged at a sufficient size to obtain discriminant features for the required classification or identification. In this regard, small mapped areas may be insufficient.

- Tracking stubble lines: a machine vision system for tracking accumulations of straw for automatic baling in cereals is addressed in [58], where a specific width is required to guide the tractor pulling the baling machine.

- Spatial variations: plant height, fruit yield, and topographic features (slope and elevation) are analyzed in [59], where specific machine vision system arrangements are studied.

- 3D structure and guidance: stereovision systems are intended for 3D structure determination and guidance [20,21]. Multispectral analysis is carried out to improve the interpretation of crop/field status with respect to the 2D image plane. The panoramic 3D structure obtained must have sufficient resolution for such interpretation and must also provide a map on which the autonomous ground vehicle can apply path planning and obstacle avoidance for safe navigation. A variable field-of-view setup was tested for guidance in [22]. An adapted NDVI was used in [60] to distinguish soil and plants through a camera-based system for precise guidance of small vehicles.
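As a rough illustration of how the number of imaged crop rows follows from the optics, the sketch below uses the pinhole relation between sensor width, focal length and distance. It assumes a camera looking perpendicularly at a flat scene, which is a simplification of the tilted arrangements discussed in this guide. The function names, the 8.8 mm sensor width (the nominal width of a 2/3″ sensor), the 1280-pixel image width and the 0.75 m row spacing are illustrative assumptions, not values taken from the text.

```python
import math


def horizontal_fov(sensor_width_mm, focal_length_mm):
    """Horizontal field of view in radians for an ideal pinhole camera."""
    return 2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm))


def footprint_width(distance_m, sensor_width_mm, focal_length_mm):
    """Width (m) of the scene imaged on a plane perpendicular to the optical axis."""
    return distance_m * sensor_width_mm / focal_length_mm


def max_rows_visible(width_m, row_spacing_m):
    """Upper bound on the rows that fit in the footprint (one row on the edge)."""
    return math.floor(width_m / row_spacing_m) + 1


def pixels_per_metre(image_width_px, width_m):
    """Horizontal sampling density on the scene plane."""
    return image_width_px / width_m


# Assumed example: 2/3" sensor (8.8 mm wide), 10 mm lens, scene 4 m away.
width = footprint_width(4.0, 8.8, 10.0)
print(round(math.degrees(horizontal_fov(8.8, 10.0)), 1), "degrees")
print(round(width, 2), "m wide,", max_rows_visible(width, 0.75), "rows at most")
print(round(pixels_per_metre(1280, width), 1), "pixels per metre")
```

Shortening the focal length widens the footprint and admits more rows, but lowers the pixels available per metre, which is the trade-off the applications listed above have to balance.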

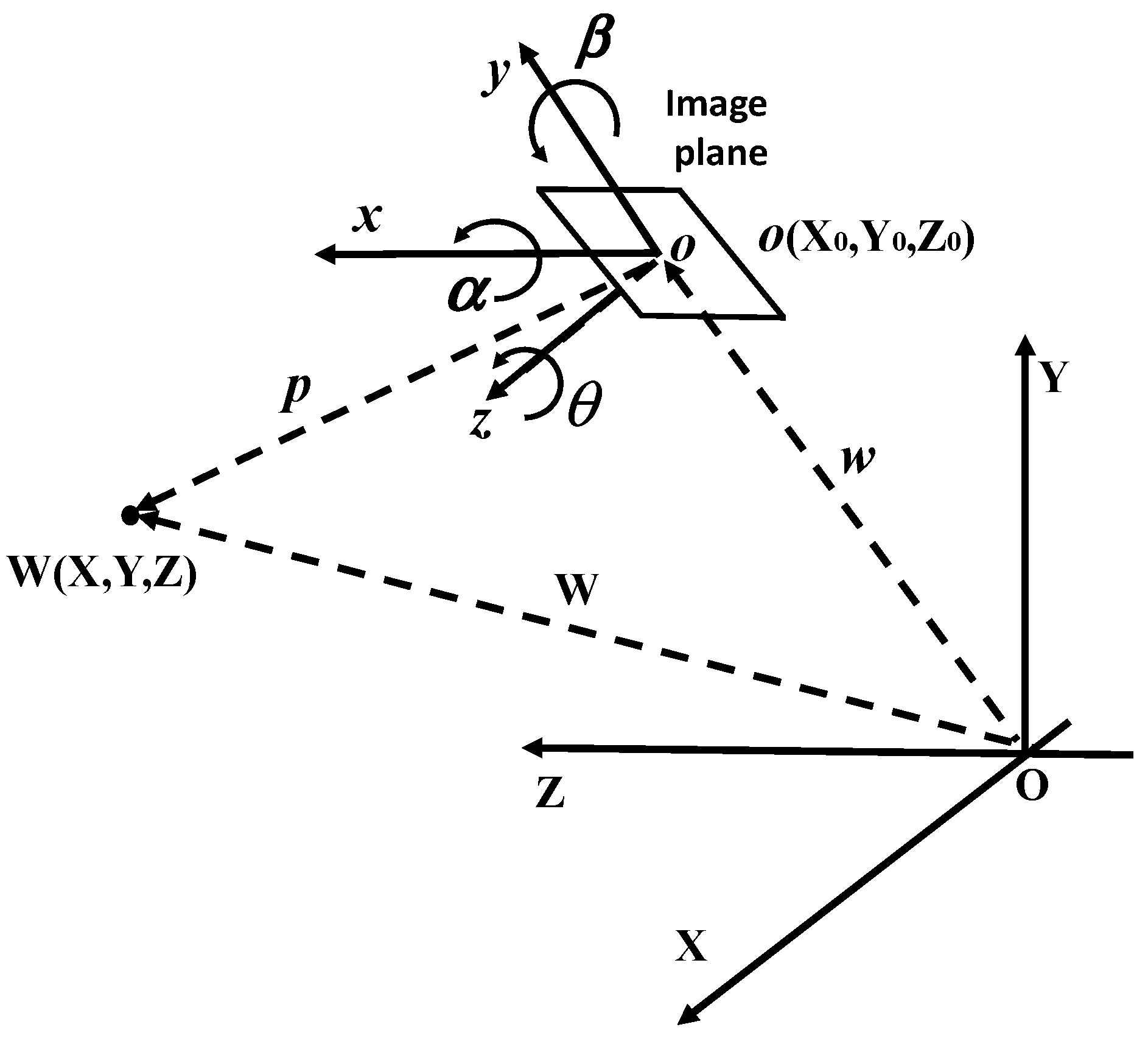

4.2. System Geometry

- Fix the position of the machine vision Cartesian system onboard the vehicle.

- Take as reference the central point of the sensor o, i.e., the point where the two diagonals in the image plane intersect. This point will be the origin of the secondary coordinate system oxyz, with axes (x,y,z).

- Fix the origin O and associated Cartesian axes (X,Y,Z) of the primary world coordinate system OXYZ. This is an imaginary system to which the 3D points in the scene are referenced. It must be positioned so as to facilitate the agricultural tasks.

- Initially the systems OXYZ and oxyz are both coincident, including their origins.

- Move the origin of oxyz to a new spatial position located at W0(X0,Y0,Z0), which is the point chosen to place the central point of the image plane, i.e., the origin of the oxyz system. This operation is carried out by applying a translation operation through the matrix G.

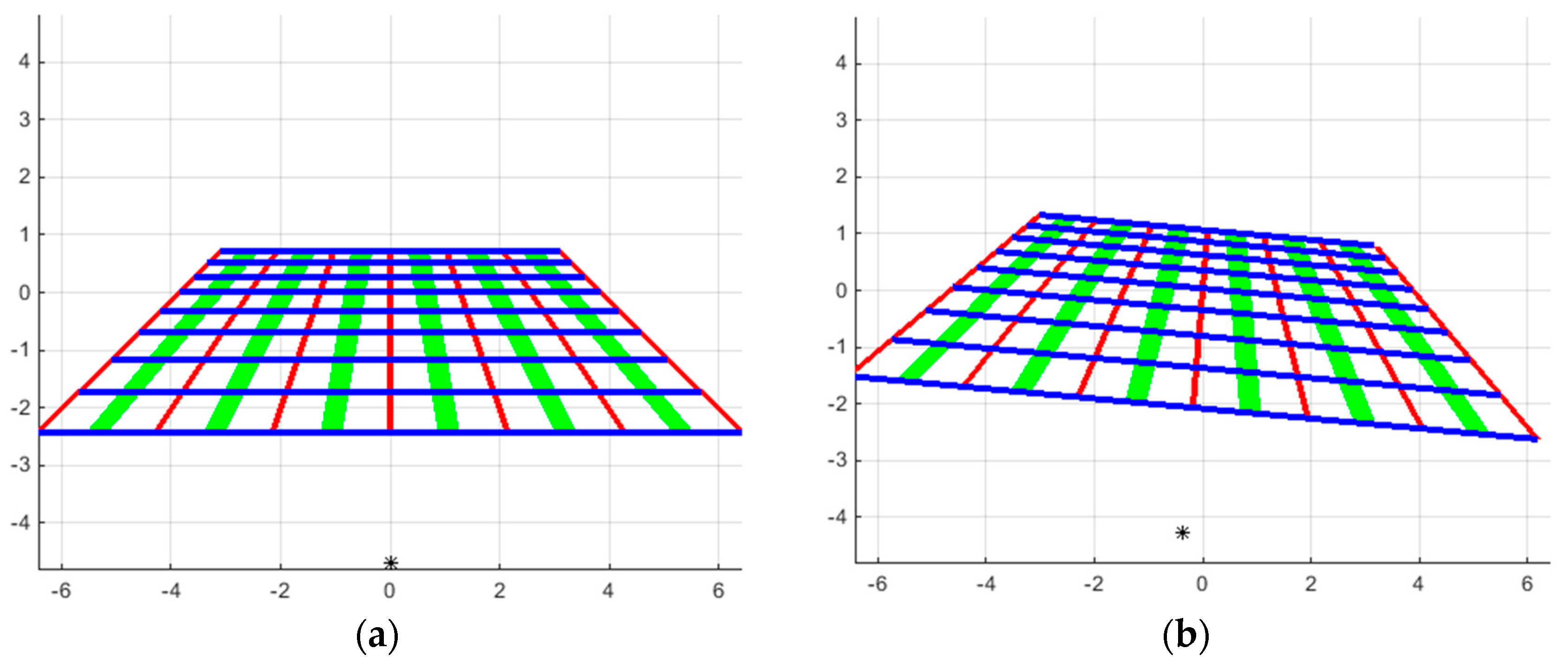

- Rotate about the x, y and z axes by angles α, β and θ, respectively. These rotations are the elementary movements that orient the image plane toward the 3D scene (ROI) to be analyzed. They are carried out by applying the respective operations Rα, Rβ and Rθ.

- Once the image plane is oriented toward the scene, the point W(X,Y,Z) is to be mapped onto the image plane to form its corresponding image. This is based on the image perspective projection by applying the perspective transformation matrix P.

- Mapping of specific areas: determine the number of pixels that an area occupies in the image, which indicates whether the imaged area is sufficient for subsequent image processing, such as morphological operations in which areas are sometimes eroded. For example, it is very important to determine whether such areas can provide discriminatory information based on shape descriptors for distinguishing dicotyledons from monocotyledons or other species. The maximum and minimum dimensions of weed patches are also of interest [6,7,10,11,12,13,14,16,62].

- Crop lines in wide-row crops: determination of the maximum number of crop lines that can be fully seen widthwise; the maximum resolution achievable together with discriminant capabilities; the separation between crop lines, to decide whether weed patches can be distinguished or appear overlapped with the crop lines; and crop line width and coverage [6,7,10].

- Fruits: sizes of fruits for robust identification [63], where the imaged dimensions determine specific shapes based on sufficient fruit areas.

- Canopy: plant heights or other dimensions can be used as the basis for different applications, such as plant counting to determine the number of small young peach trees in a seedling nursery [64].

- The loss of the third dimension when the 3D scene is mapped onto 2D requires additional considerations to guarantee imaged working areas (ROIs) of sufficient resolution and quality.

- Camera system arrangements onboard agricultural vehicles, together with the definition of the sensor resolutions and optical systems, are to be considered.

- Simulation studies are appropriate for determining the best resolutions, based on geometric transformations from 3D to 2D.
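The translation, rotation and perspective steps listed in this section can be sketched as a small 3D-to-2D simulation. This is a minimal illustration under stated assumptions, not the formulation of the Appendix: it uses a standard pinhole model with the optical axis along the camera z axis, applies the rotations in the order Rα, Rβ, Rθ directly to the point coordinates, and all function names are hypothetical.

```python
import math


def rot_x(a):
    """Rotation by angle a (radians) about the x axis (role of R_alpha)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(b):
    """Rotation by angle b about the y axis (role of R_beta)."""
    c, s = math.cos(b), math.sin(b)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(t):
    """Rotation by angle t about the z axis (role of R_theta)."""
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def world_to_image(w, w0, alpha, beta, theta, f):
    """Map a world point w = (X, Y, Z) to image-plane coordinates (x, y).

    w0 is the camera origin W0 = (X0, Y0, Z0); f is the focal length, in the
    same units as the returned coordinates. Sign and order conventions are
    assumptions of this sketch.
    """
    # Translation (role of G): express the point relative to the camera origin.
    p = [w[i] - w0[i] for i in range(3)]
    # Elementary rotations, applied one after another.
    for m in (rot_x(alpha), rot_y(beta), rot_z(theta)):
        p = apply(m, p)
    xc, yc, zc = p
    if zc <= 0.0:
        raise ValueError("point is not in front of the camera")
    # Perspective projection (role of P): similar triangles with focal length f.
    return f * xc / zc, f * yc / zc


def to_pixels(x, y, pixel_size, width_px, height_px):
    """Convert image-plane coordinates to pixel coordinates (origin at a corner)."""
    return x / pixel_size + width_px / 2.0, y / pixel_size + height_px / 2.0
```

Projecting the corners of a ground region for several candidate heights, angles and focal lengths, and then counting the pixels enclosed, is one way to run the kind of simulation study mentioned above before committing to a camera arrangement.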

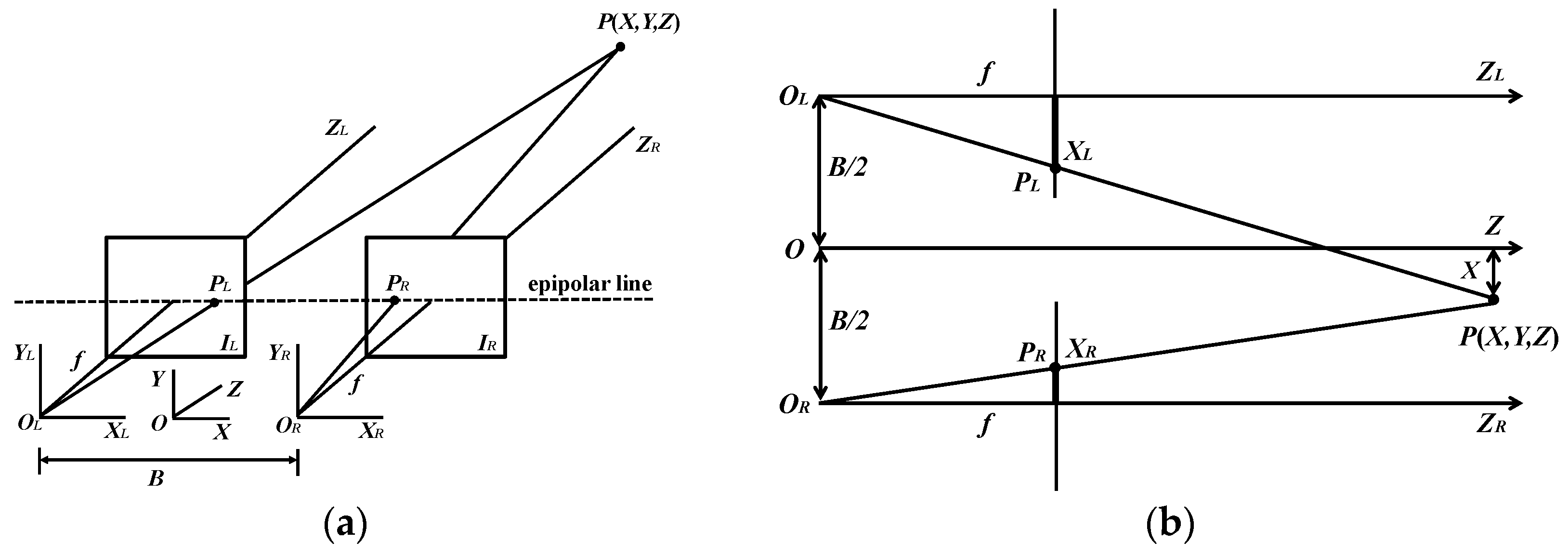

4.3. Stereovision Systems

5. A Case Study: Machine Vision Onboard an Autonomous Vehicle in the RHEA Project

5.1. Machine Vision System Specifications

5.2. 3D Mapping onto 2D Imaging

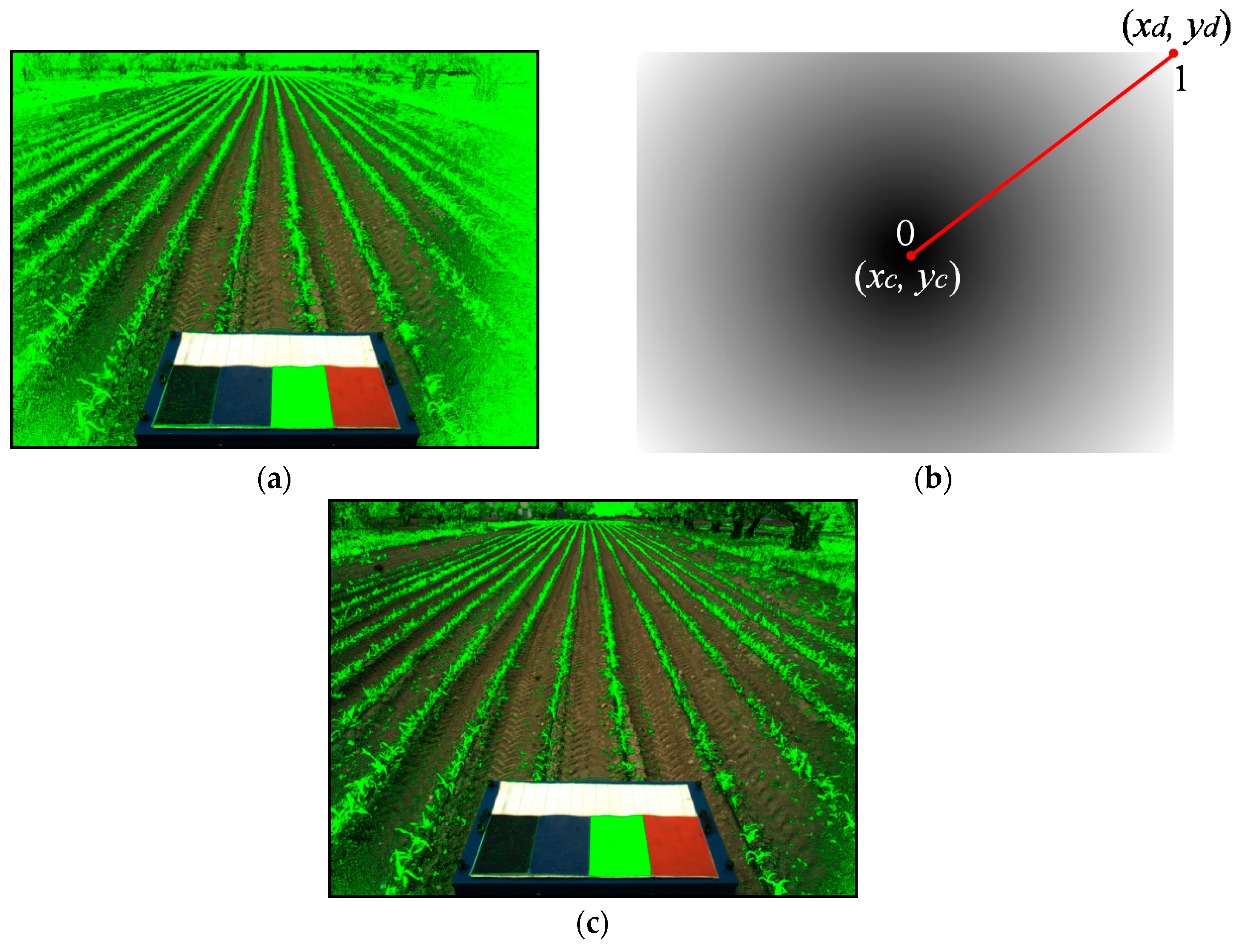

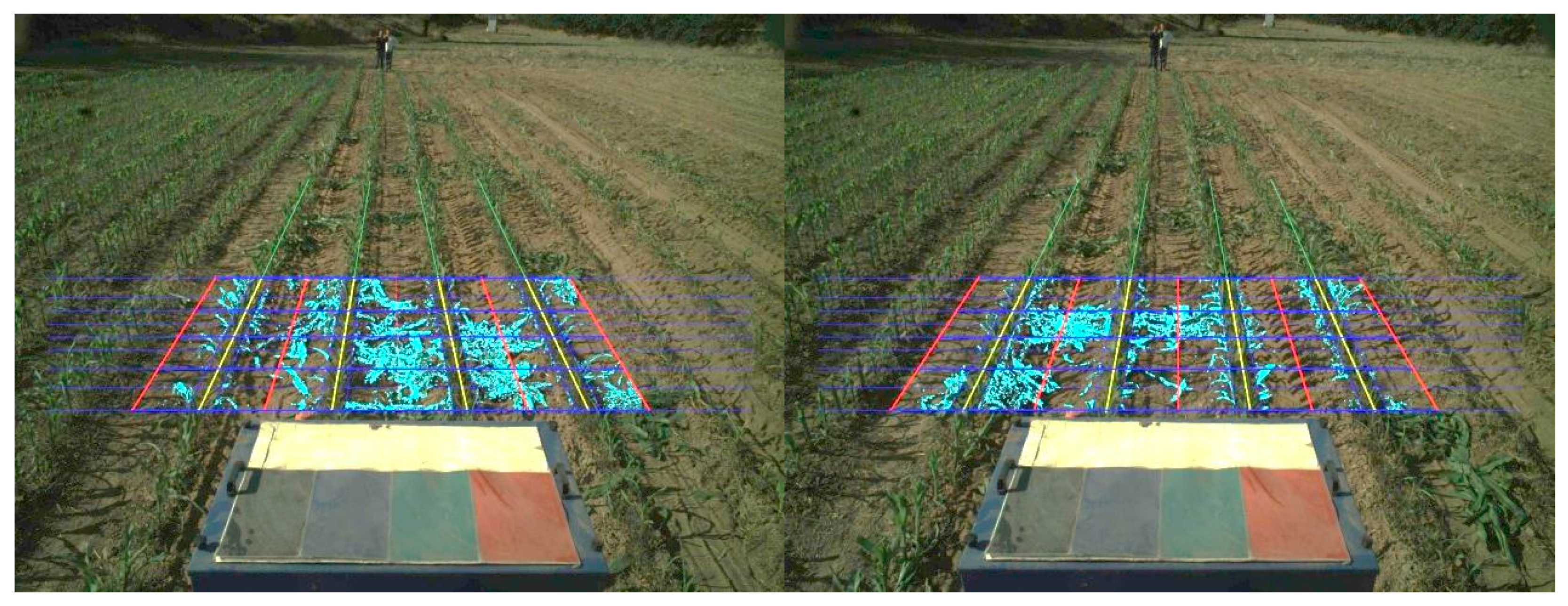

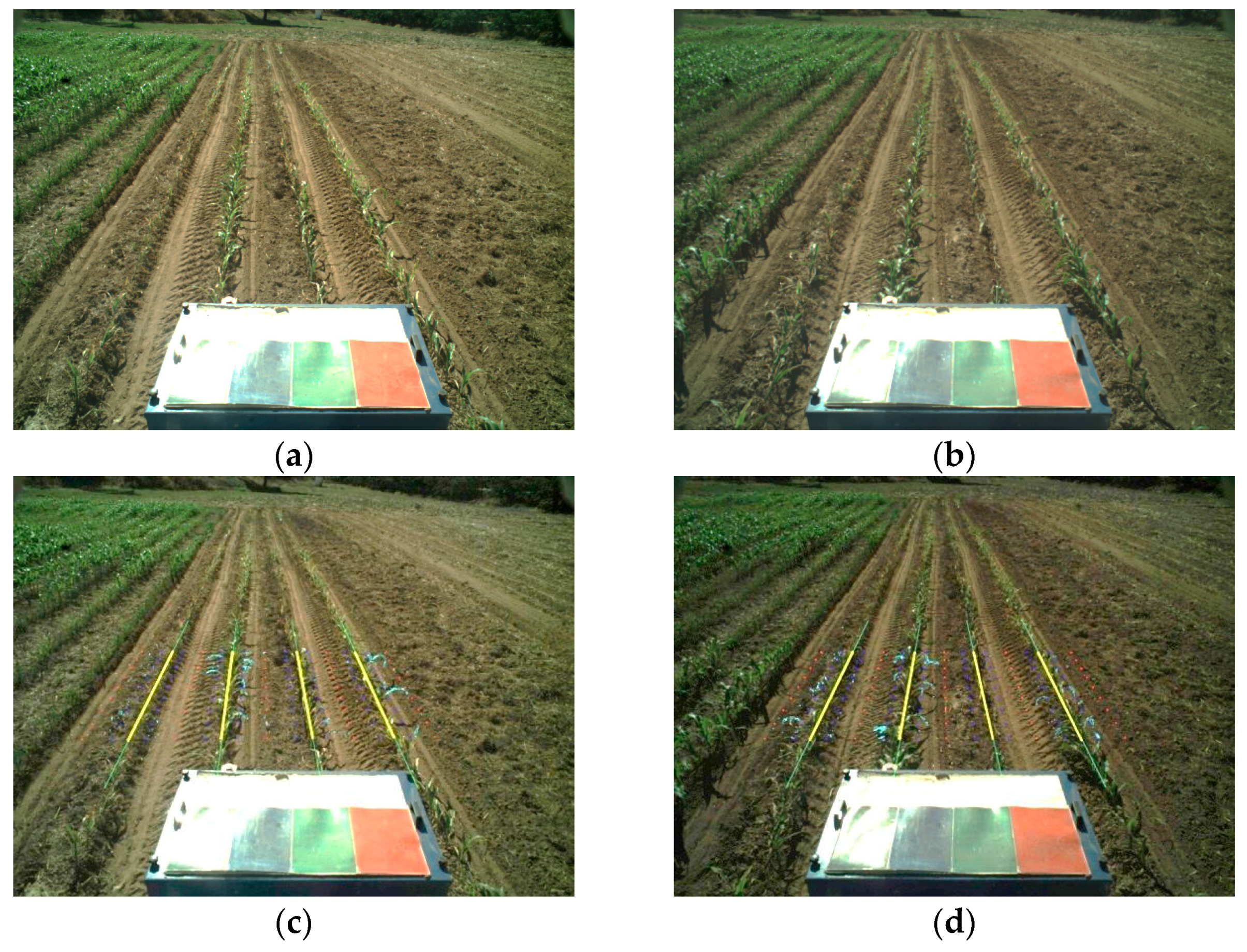

5.3. Crop Rows Detection and Weed Coverage

- Once the crop lines are identified, they are confined to the ROI in the image (yellow lines).

- To the left and right of each crop line, parallel lines are drawn (red). They divide the inter-crop space into two parts.

- Horizontal lines (in blue) are spaced conveniently in pixels so that each line corresponds to a distance of 0.25 m from the base line of the spatial ROI in the scene.

- The above lines define 8 × 8 trapezoidal cells, each trapezoid with its corresponding area $A_{ij}$ expressed in pixels. For each cell, the number of pixels identified as green, $G_{ij}$, was computed (drawn as cyan pixels in the image). Pixels close to the crop rows were excluded, with a margin of tolerance equal to 10% of the cell width along horizontal displacements, because this margin contains mainly crop plants rather than weeds. The weed coverage for each cell is finally computed as $d_{ij} = G_{ij}/A_{ij}$, expressed as a percentage. The $d_{ij}$ values are the elements of the density matrix.
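The last step of the procedure above can be sketched as follows, taking the coverage of a cell as the share of its pixels labeled green, expressed as a percentage. This is a minimal sketch, assuming the per-cell green counts and areas have already been measured; the function name and the small 2 × 2 example values are hypothetical.

```python
def weed_coverage(green_pixels, cell_areas):
    """Density matrix: percentage of green pixels in each cell.

    green_pixels[i][j] is the green-pixel count of cell (i, j) and
    cell_areas[i][j] is its area in pixels; both are lists of rows
    with the same shape.
    """
    if len(green_pixels) != len(cell_areas):
        raise ValueError("inputs must have the same number of rows")
    density = []
    for g_row, a_row in zip(green_pixels, cell_areas):
        if len(g_row) != len(a_row):
            raise ValueError("rows must have the same length")
        out_row = []
        for g, a in zip(g_row, a_row):
            if a <= 0 or g < 0 or g > a:
                raise ValueError("need 0 <= green pixels <= area and area > 0")
            out_row.append(100.0 * g / a)
        density.append(out_row)
    return density


# Hypothetical 2 x 2 example (the text uses an 8 x 8 grid of cells).
print(weed_coverage([[10, 0], [25, 50]], [[100, 80], [50, 200]]))
```

Because the cells are trapezoids of different pixel area, normalizing each count by its own cell area keeps cells near the camera and far from it comparable.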

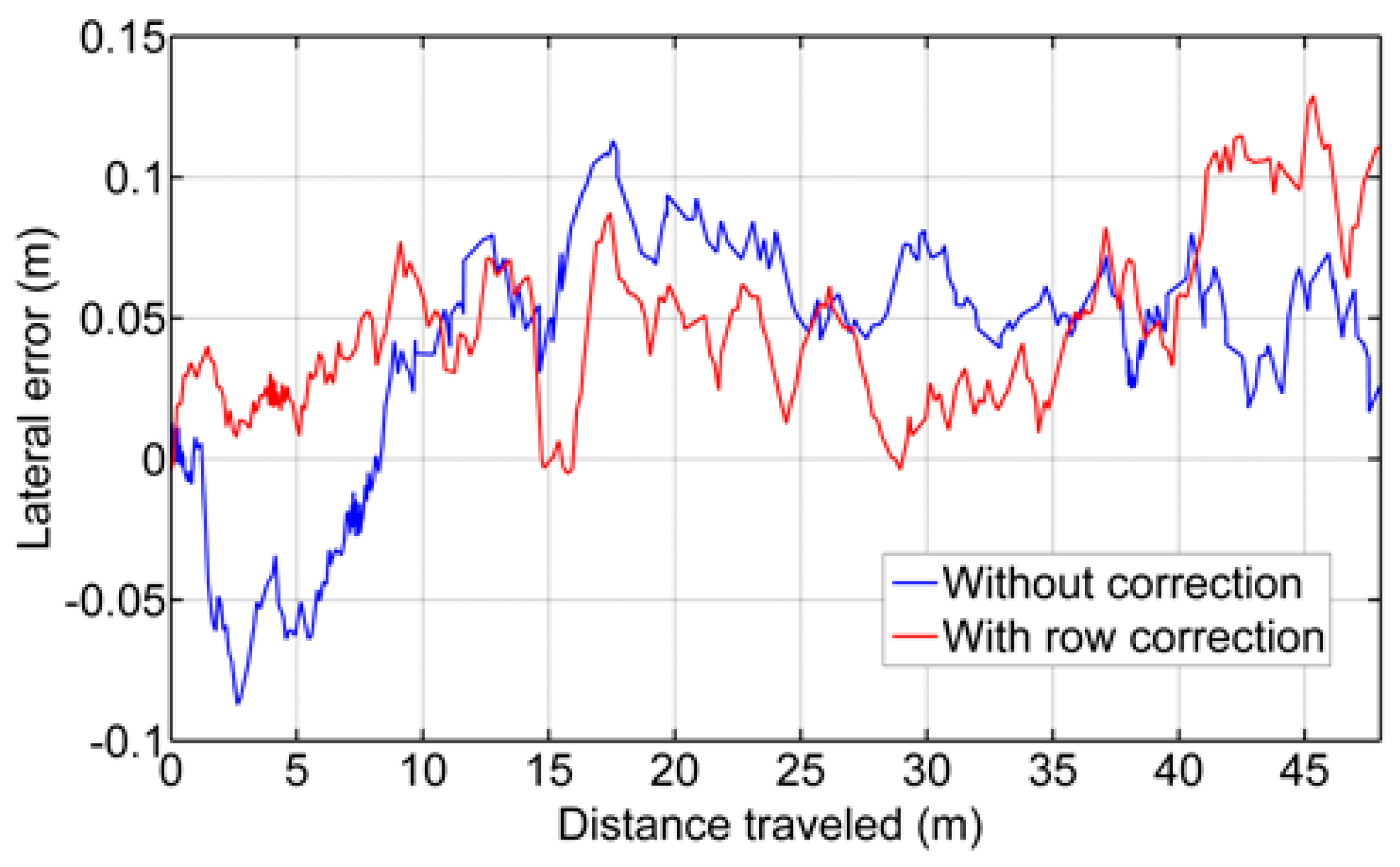

5.4. Guidance

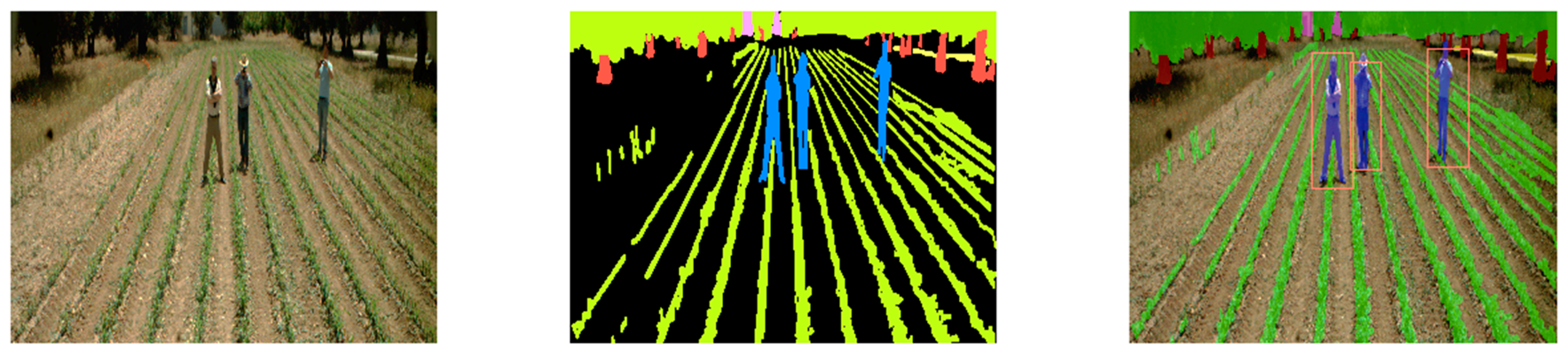

5.5. Security: Obstacle Detection

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix: Camera System Geometry

References

1. Slaughter, D.C.; Giles, D.K.; Downey, D. Autonomous robotic weed control systems: A review. Comput. Electron. Agric. 2008, 61, 63–78.

2. Shalal, N.; Low, T.; McCarthy, C.; Hancock, N. A review of autonomous navigation systems in agricultural environments. In Proceedings of the SEAg 2013: Innovative Agricultural Technologies for a Sustainable Future, Barton, Australia, 22–25 September 2013; Available online: http://eprints.usq.edu.au/24779/ (accessed on 20 July 2015).

3. Mousazadeh, H. A technical review on navigation systems of agricultural autonomous off-road vehicles. J. Terramech. 2013, 50, 211–23.

4. López-Granados, F. Weed detection for site-specific weed management: Mapping and real-time approaches. Weed Res. 2011, 51, 1–11.

5. Romeo, J.; Pajares, G.; Montalvo, M.; Guerrero, J.M.; Guijarro, M.; Ribeiro, A. Crop row detection in maize fields inspired on the human visual perception. Sci. World J. 2012, 2012, 484390.

6. Romeo, J.; Pajares, G.; Montalvo, M.; Guerrero, J.M.; Guijarro, M.; de la Cruz, J.M. A new expert system for greenness identification in agricultural images. Exp. Syst. Appl. 2013, 40, 2275–2286.

7. Guerrero, J.M.; Pajares, G.; Montalvo, M.; Romeo, J.; Guijarro, M. Support vector machines for crop/weeds identification in maize fields. Exp. Syst. Appl. 2012, 39, 11149–11155.

8. Gée, Ch.; Bossu, J.; Jones, G.; Truchetet, F. Crop/weed discrimination in perspective agronomic images. Comput. Electron. Agric. 2008, 60, 49–59.

9. Zheng, L.; Zhang, J.; Wang, Q. Mean-shift-based color segmentation of images containing green vegetation. Comput. Electron. Agric. 2009, 65, 93–98.

10. Montalvo, M.; Pajares, G.; Guerrero, J.M.; Romeo, J.; Guijarro, M.; Ribeiro, A.; Ruz, J.J.; de la Cruz, J.M. Automatic detection of crop rows in maize fields with high weeds pressure. Exp. Syst. Appl. 2012, 39, 11889–11897.

11. Guijarro, M.; Pajares, G.; Riomoros, I.; Herrera, P.J.; Burgos-Artizzu, X.P.; Ribeiro, A. Automatic segmentation of relevant textures in agricultural images. Comput. Electron. Agric. 2011, 75, 75–83.

12. Burgos-Artizzu, X.P.; Ribeiro, A.; Tellaeche, A.; Pajares, G.; Fernández-Quintanilla, C. Improving weed pressure assessment using digital images from an experience-based reasoning approach. Comput. Electron. Agric. 2009, 65, 176–185.

13. Sainz-Costa, N.; Ribeiro, A.; Burgos-Artizzu, X.P.; Guijarro, M.; Pajares, G. Mapping wide row crops with video sequences acquired from a tractor moving at treatment speed. Sensors 2011, 11, 7095–7109.

14. Tellaeche, A.; Burgos-Artizzu, X.P.; Pajares, G.; Ribeiro, A. A new vision-based approach to differential spraying in precision agriculture. Comput. Electron. Agric. 2008, 60, 144–155.

15. Jones, G.; Gée, Ch.; Truchetet, F. Assessment of an inter-row weed infestation rate on simulated agronomic images. Comput. Electron. Agric. 2009, 67, 43–50.

16. Tellaeche, A.; Burgos-Artizzu, X.P.; Pajares, G.; Ribeiro, A. A vision-based method for weeds identification through the Bayesian decision theory. Pattern Recognit. 2008, 41, 521–530.

17. Li, M.; Imou, K.; Wakabayashi, K.; Yokoyama, S. Review of research on agricultural vehicle autonomous guidance. Int. J. Agric. Biol. Eng. 2009, 2, 1–26.

18. Reid, J.F.; Searcy, S.W. Vision-based guidance of an agricultural tractor. IEEE Control. Syst. 1997, 7, 39–43.

19. Billingsley, J.; Schoenfisch, M. Vision-guidance of agricultural vehicles. Auton. Robots 1995, 2, 65–76.

20. Rovira-Más, F.; Zhang, Q.; Reid, J.F.; Will, J.D. Machine vision based automated tractor guidance. Int. J. Smart Eng. Syst. Des. 2003, 5, 467–480.

21. Kise, M.; Zhang, Q. Development of a stereovision sensing system for 3D crop row structure mapping and tractor guidance. Biosyst. Eng. 2008, 101, 191–198.

22. Xue, J.; Zhang, L.; Grift, T.E. Variable field-of-view machine vision based row guidance of an agricultural robot. Comput. Electron. Agric. 2012, 84, 85–91.

23. Wei, J.; Rovira-Mas, F.; Reid, J.F.; Han, S. Obstacle detection using stereo vision to enhance safety autonomous machines. Trans. ASABE 2005, 48, 2389–2397.

24. Nissimov, S.; Goldberger, J.; Alchanatis, V. Obstacle detection in a greenhouse environment using the Kinect sensor. Comput. Electron. Agric. 2015, 113, 104–115.

25. Campos, Y.; Sossa, H.; Pajares, G. Spatio-temporal analysis for obstacle detection in agricultural videos. Appl. Soft Comput. 2016, 45, 86–97.

26. Cheein, F.A.; Steiner, G.; Paina, G.P.; Carelli, R. Optimized EIF-SLAM algorithm for precision agriculture mapping based on stems detection. Comput. Electron. Agric. 2011, 78, 195–207.

27. Pajares, G. Overview and Current Status of Remote Sensing Applications Based on Unmanned Aerial Vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–329.

28. RHEA. Robot Fleets for Highly Effective Agriculture and Forestry Management. Available online: http://www.rhea-project.eu/ (accessed on 19 August 2016).

29. Exelis Visual Information Solutions. Available online: http://www.exelisvis.com/docs/VegetationIndices.html (accessed on 19 August 2016).

30. Meyer, G.E.; Camargo-Neto, J. Verification of color vegetation indices for automated crop imaging applications. Comput. Electron. Agric. 2008, 63, 282–293.

31. Point Grey Innovation and Imaging. How to Evaluate Camera Sensitivity. Available online: https://www.ptgrey.com/white-paper/id/10912 (accessed on 19 August 2016).

32. Schneider Kreuznach. Tips and Tricks. Available online: http://www.schneiderkreuznach.com/en/photo-imaging/product-field/b-w-fotofilter/products/filtertypes/special-filters/486-uvir-cut/ (accessed on 22 August 2016).

33. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

34. Ollinger, S.V. Sources of variability in canopy reflectance and the convergent properties of plants. New Phytol. 2011, 189, 375–394.

35. Rabatel, G.; Gorretta, N.; Labbé, S. Getting NDVI Spectral Bands from a Single Standard RGB Digital Camera: A Methodological Approach. In Proceedings of the 14th Conference of the Spanish Association for Artificial Intelligence, CAEPIA 2011, La Laguna, Spain, 7–11 November 2011; Volume 7023, pp. 333–342.

36. Xenics Infrared Solutions. Bobcat-640-GigE High Resolution Small form Factor InGaAs Camera. Available online: http://www.applied-infrared.com.au/images/pdf/Bobcat-640-GigE_Industrial_LowRes.pdf (accessed on 22 August 2016).

37. Kiani, S.; Kamgar, S.; Raoufat, M.H. Machine Vision and Soil Trace-based Guidance-Assistance System for Farm Tractors in Soil Preparation Operations. J. Agric. Sci. 2012, 4, 1–5.

38. Hague, T.; Tillet, N.; Wheeler, H. Automated crop and weed monitoring in widely spaced cereals. Precis. Agric. 2006, 1, 95–113.

39. JAI 2CCD Cameras. Available online: http://www.jai.com/en/products/ad-080ge (accessed on 22 August 2016).

40. 3CCD Color cameras. Image acquisition. Resource Mapping. Remote Sensing and GIS for Conservation. Available online: http://www.resourcemappinggis.com/image_technical.html (accessed on 22 August 2016).

41. Kise, M.; Zhang, Q.; Rovira-Más, F. A Stereovision-based Crop Row Detection Method for Tractor-automated Guidance. Biosyst. Eng. 2005, 90, 357–367.

42. Rovira-Más, F.; Zhang, Q.; Reid, J.F. Stereo vision three-dimensional terrain maps for precision agriculture. Comput. Electron. Agric. 2008, 60, 133–143.

43. Svensgaard, J.; Roitsch, T.; Christensen, S. Development of a Mobile Multispectral Imaging Platform for Precise Field Phenotyping. Agronomy 2014, 4, 322–336.

44. Li, L.; Zhang, Q.; Huang, D. A Review of Imaging Techniques for Plant Phenotyping. Sensors 2014, 14, 20078–20111.

45. Rasmussen, J.; Ntakos, G.; Nielsen, J.; Svensgaard, J.; Poulsen, R.N.; Christensen, S. Are vegetation indices derived from consumer-grade cameras mounted on UAVs sufficiently reliable for assessing experimental plots? Eur. J. Agron. 2016, 74, 75–92.

46. Bockaert, V. Sensor sizes. Digital Photography Review. Available online: http://www.dpreview.com/glossary/camera-system/sensor-sizes (accessed on 22 August 2016).

47. Emmi, L.; Gonzalez-de-Soto, M.; Pajares, G.; Gonzalez-de-Santos, P. Integrating Sensory/Actuation Systems in Agricultural Vehicles. Sensors 2014, 14, 4014–4049.

48. Choosing the Right Camera Bus. Available online: http://www.ni.com/white-paper/5386/en/ (accessed on 22 August 2016).

49. Cambridge in Colour. Available online: http://www.cambridgeincolour.com/tutorials/camera-exposure.htm (accessed on 22 August 2016).

50. Montalvo, M.; Guerrero, J.M.; Romeo, J.; Guijarro, M.; de la Cruz, J.M.; Pajares, G. Acquisition of Agronomic Images with Sufficient Quality by Automatic Exposure Time Control and Histogram Matching. Lecture Notes in Computer Science. In Proceedings of the Advanced Concepts for Intelligent Vision Systems (ACIVS’13), Poznan, Poland, 28–31 October 2013; Volume 8192, pp. 37–48.

51. Cinegon 1.9/10 Ruggedized Lens. Available online: http://www.schneiderkreuznach.com/fileadmin/user_upload/bu_industrial_solutions/industrieoptik/16mm_Lenses/Compact_Lenses/Cinegon_1.9–10_ruggedized.pdf (accessed on 27 March 2015).

52. Optical Filters. Edmund Optics. Available online: http://www.edmundoptics.com/technical-resources-center/optics/optical-filters/?&#guide (accessed on 22 August 2016).

53. Point Grey Innovation and Imaging. Selecting a lens for Your Camera. Available online: https://www.ptgrey.com/KB/10694 (accessed on 22 August 2016).

54. Jeon, H.Y.; Tian, L.F.; Zhu, H. Robust Crop and Weed Segmentation under Uncontrolled Outdoor Illumination. Sensors 2011, 11, 6270–6283.

55. Linker, R.; Cohen, O.; Naor, A. Determination of the number of green apples in RGB images recorded in orchard. Comput. Electron. Agric. 2012, 81, 45–57.

56. Moshou, D.; Bravo, D.; Oberti, R.; West, J.S.; Ramon, H.; Vougioukas, S.; Bochtis, D. Intelligent multi-sensor system for the detection and treatment of fungal diseases in arable crops. Biosyst. Eng. 2011, 108, 311–321.

57. Oberti, R.; Marchi, M.; Tirelli, P.; Calcante, A.; Iriti, M.; Borghese, A.N. Automatic detection of powdery mildew on grapevine leaves by image analysis: Optimal view-angle range to increase the sensitivity. Comput. Electron. Agric. 2014, 104, 1–8.

58. Blas, M.R.; Blanke, M. Stereo vision with texture learning for fault-tolerant automatic baling. Comput. Electron. Agric. 2011, 75, 159–168.

59. Farooque, A.A.; Chang, Y.K.; Zaman, Q.U.; Groulx, D.; Schumann, A.W.; Esau, T.J. Performance evaluation of multiple ground based sensors mounted on a commercial wild blueberry harvester to sense plant height, fruit yield and topographic features in real-time. Comput. Electron. Agric. 2012, 84, 85–91.

60. Dworak, V.; Huebner, M.; Selbeck, J. Precise navigation of small agricultural robots in sensitive areas with a smart plant camera. J. Imaging 2015, 1, 115–133.

61. Fu, K.S.; Gonzalez, R.C.; Lee, C.S.G. Robótica: Control, Detección, Visión e Inteligencia; McGraw-Hill: Madrid, Spain, 1988.

62. Herrera, P.J.; Dorado, J.; Ribeiro, A. A Novel Approach for Weed Type Classification Based on Shape Descriptors and a Fuzzy Decision-Making Method. Sensors 2014, 14, 15304–15324.

63. Li, P.; Lee, S.H.; Hsu, H.Y. Review on fruit harvesting method for potential use of automatic fruit harvesting systems. Procedia Eng. 2011, 23, 351–366.

64. Nguyen, T.T.; Slaughter, D.C.; Hanson, B.D.; Barber, A.; Freitas, A.; Robles, D.; Whelan, E. Automated mobile system for accurate outdoor tree crop enumeration using an uncalibrated camera. Sensors 2015, 15, 18427–18442.

65. Vázquez-Arellano, M.; Griepentrog, H.W.; Reiser, D.; Paraforos, D.S. 3-D imaging systems for agricultural applications-a review. Sensors 2016, 16, 618.

66. Rong, X.; Huanyu, J.; Yibin, Y. Recognition of clustered tomatoes based on binocular stereo vision. Comput. Electron. Agric. 2014, 106, 75–90.

67. Steen, K.A.; Christiansen, P.; Karstoft, H.; Jørgensen, R.N. Using deep learning to challenge safety standard for highly autonomous machines in agriculture. J. Imaging 2016, 2, 6.

68. Barnard, S.; Fishler, M. Computational stereo. ACM Comput. Surv. 1982, 14, 553–572.

69. Cochran, S.D.; Medioni, G. 3-D Surface Description from binocular stereo. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 981–994.

70. Pajares, G.; de la Cruz, J.M. On combining support vector machines and simulated annealing in stereovision matching. IEEE Trans. Syst. Man Cybern. Part B 2004, 34, 1646–1657.

71. Correal, R.; Pajares, G.; Ruz, J.J. Automatic expert system for 3D terrain reconstruction based on stereo vision and histogram matching. Expert Syst. Appl. 2014, 41, 2043–2051.

72. Rovira-Más, F.; Wang, Q.; Zhang, Q. Design parameters for adjusting the visual field of binocular stereo cameras. Biosyst. Eng. 2010, 105, 59–70.

73. Pajares, G.; de la Cruz, J.M. Visión por Computador: Imágenes Digitales y Aplicaciones; RA-MA: Madrid, Spain, 2007. (In Spanish)

74. MicroStrain Sensing Systems. Available online: http://www.microstrain.com/inertial/3dm-gx3–35 (accessed on 22 August 2016).

75. SVS-VISTEK. Available online: https://www.svs-vistek.com/en/svcam-cameras/svs-svcam-search-result.php (accessed on 22 August 2016).

76. National Instruments. CompactRIO. Available online: http://sine.ni.com/nips/cds/view/p/lang/es/nid/210001 (accessed on 22 August 2016).

77. National Instruments. LabView. Available online: http://www.ni.com/labview/esa/ (accessed on 22 August 2016).

78. Cyberbotics. Webots Robot Simulator. Available online: https://www.cyberbotics.com/ (accessed on 24 August 2016).

79. Gazebo. Available online: http://gazebosim.org/ (accessed on 24 August 2016).

80. Guerrero, J.M.; Guijarro, M.; Montalvo, M.; Romeo, J.; Emmi, L.; Ribeiro, A.; Pajares, G. Automatic expert system based on images for accuracy crop row detection in maize fields. Exp. Syst. Appl. 2013, 40, 656–664.

81. Gonzalez-de-Santos, P.; Ribeiro, A.; Fernandez-Quintanilla, C.; López-Granados, F.; Brandstoetter, M.; Tomic, S.; Pedrazzi, S.; Peruzzi, A.; Pajares, G.; Kaplanis, G.; et al. Fleets of robots for environmentally-safe pest control in agriculture. Precis. Agric. 2016, 1–41.

82. Conesa-Muñoz, J.; Pajares, G.; Ribeiro, A. Mix-opt: A new route operator for optimal coverage path planning for a fleet in an agricultural environment. Exp. Syst. Appl. 2016, 54, 364–378.

| Spectrum (S) | Band | λ (nm) |
|---|---|---|
| UV | | 1–380 |
| Visible | Full range | 380–780 |
| Visible | Blue | 450–500 |
| Visible | Green | 500–600 |
| Visible | Red | 600–760 |
| IR | Near | 760–1400 |
| IR | Short-Wave (SWIR) | 1400–3000 |
| IR | Mid-Wave (MWIR) | 3000–8000 |
| IR | Long-Wave (LWIR) | 8000–15,000 |

| Vegetation index | RR for 560 nm (Figure 1a): r = 0.20; g = 0.80; b = 0.01 | QE for 560 nm (Figure 1b): r = 0.02; g = 0.35; b = 0.03 |
|---|---|---|
| GRVI = (g − r)/(g + r) | 0.60 | 0.89 |
| ExG = 2g − r − b | 1.39 | 0.65 |
| ExR = 1.4r − g | −0.52 | −0.32 |
| ExGR = ExG − ExR | 1.91 | 0.97 |
| CIVE = 0.441r − 0.811g + 0.385b + 18.78745 | 18.23 | 18.52 |
| VEG = $g\,r^{-a}\,b^{a-1}$, with a = 0.667 as defined in [38] | 10.85 | 15.29 |
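The index values tabulated above can be reproduced from the listed r, g and b values. The following minimal sketch evaluates the six formulas in Python; the function name is hypothetical, and VEG is written as g divided by r^a · b^(1−a), which is equivalent to the exponent form of the table.

```python
def vegetation_indices(r, g, b, a=0.667):
    """Evaluate the six vegetation indices of the table for one (r, g, b) triple.

    r and b must be positive (VEG divides by their powers) and g + r must be
    non-zero (GRVI divides by it).
    """
    if r <= 0.0 or b <= 0.0 or (g + r) == 0.0:
        raise ValueError("need r > 0, b > 0 and g + r != 0")
    exg = 2.0 * g - r - b
    exr = 1.4 * r - g
    return {
        "GRVI": (g - r) / (g + r),
        "ExG": exg,
        "ExR": exr,
        "ExGR": exg - exr,
        "CIVE": 0.441 * r - 0.811 * g + 0.385 * b + 18.78745,
        "VEG": g / (r ** a * b ** (1.0 - a)),
    }


# The two columns of the table: RR values and QE values at 560 nm.
for label, rgb in (("RR", (0.20, 0.80, 0.01)), ("QE", (0.02, 0.35, 0.03))):
    values = vegetation_indices(*rgb)
    print(label, {name: round(v, 2) for name, v in values.items()})
```

Comparing the two columns shows how strongly the same index shifts when the sensor response, rather than the scene, changes, which is why the spectral characteristics of the sensor matter when an index threshold is chosen.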

| α (°) | f (mm) | Area (pixels) at 3 m from O | Area (pixels) at 4 m from O | Area (pixels) at 5 m from O | Area (pixels) at 6 m from O |
|---|---|---|---|---|---|
| 15 | 3.5 | 756 | 371 | 212 | 120 |
| 15 | 8.0 | 3901 | 1900 | 1070 | 637 |
| 15 | 10.0 | 6136 | 2934 | 1675 | 1026 |
| 15 | 12.0 | 8840 | 4312 | 2415 | 1428 |
| 20 | 3.5 | 710 | 342 | 188 | 110 |
| 20 | 8.0 | 3645 | 1806 | 1007 | 620 |
| 20 | 10.0 | 5684 | 2818 | 1596 | 998 |
| 20 | 12.0 | 8296 | 4128 | 2320 | 1388 |

| Crop Lines Detection | | | |
|---|---|---|---|
| Maize growth stage | Low | Medium | High |
| % of success | 95 | 93 | 90 |

| Maize Growth Stage | | | |
|---|---|---|---|
| Weed densities | Low | Medium | High |
| % of success | 92 | 90 | 88 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Pajares, G.; García-Santillán, I.; Campos, Y.; Montalvo, M.; Guerrero, J.M.; Emmi, L.; Romeo, J.; Guijarro, M.; Gonzalez-de-Santos, P. Machine-Vision Systems Selection for Agricultural Vehicles: A Guide. J. Imaging 2016, 2, 34. https://doi.org/10.3390/jimaging2040034
