1. Introduction

The hippocampus, a structure of the medial temporal lobe, is one of the most extensively investigated regions in neuroscience because of its fundamental role in episodic memory consolidation, spatial cognition, and neuroplasticity [1]. This crescent-shaped structure, with its intricate cytoarchitecture and distinct laminar organization, has become an important imaging biomarker for numerous neurological and psychiatric disorders, including Alzheimer’s disease, temporal lobe epilepsy, major depressive disorder, and post-traumatic stress disorder. Morphometric analysis of hippocampal volume, surface area, and shape has important clinical implications for tracking disease progression, monitoring therapeutic response, and stratifying prognosis across diverse patient populations [2].

The advent of high-resolution magnetic resonance imaging (MRI) has greatly improved our ability to visualize and quantify hippocampal morphology [3]. However, accurately delineating hippocampal boundaries remains a formidable computational challenge because of the structure’s complex three-dimensional geometry, its substantial inter-individual anatomical variability, and the ambiguous boundaries it shares with adjacent structures such as the amygdala, parahippocampal gyrus, and ventricular system [4]. Manual segmentation, although considered the gold standard, is prohibitively time-consuming, subject to significant inter-rater variability, and impractical for large-scale neuroimaging studies and clinical applications that require rapid, automated analysis [5].

Automated segmentation methods have evolved from atlas-based approaches to machine learning, with deep learning architectures proving particularly effective at handling the complexities of neuroanatomical segmentation [6]. The U-Net architecture, originally designed for biomedical image segmentation, has become a foundational framework because its encoder–decoder structure with skip connections preserves fine-grained spatial information while enabling hierarchical feature extraction [7]. However, conventional U-Net implementations often struggle with the subtle spatial relationships and depth-dependent features that characterize hippocampal morphology, particularly in pathological conditions where alterations may appear as small volumetric changes or irregular surface deformations [8].

The integration of attention mechanisms into deep networks has substantially changed computer vision and medical image analysis by allowing networks to focus on salient features while suppressing irrelevant information. Spatial attention mechanisms weight feature maps dynamically according to their spatial significance, improving the network’s ability to distinguish anatomically relevant structures from background noise [9]. Depth attention mechanisms, in turn, selectively emphasize features across different network layers, promoting the integration of multi-scale information that is crucial for accurate boundary delineation in complex anatomical structures [9].

Recent advances in hippocampal segmentation have demonstrated the potential of hybrid architectures that combine multiple attention mechanisms [10]. However, existing approaches often implement attention mechanisms in isolation or combine them simplistically, failing to exploit the complementarity between spatial and depth attention [11]. Furthermore, most current methods lack a cascaded, coarse-to-fine processing scheme, which is essential for achieving clinically acceptable accuracy in hippocampal segmentation [12]. Cascaded processing is a biologically inspired approach that mimics the hierarchical processing observed in the visual cortex, where information passes through successive stages of increasing complexity and specialization [12]. In hippocampal segmentation, a cascaded architecture progressively refines the segmentation mask over multiple stages, each focusing on finer anatomical detail: gross anatomical boundaries are identified first, after which complex surface topology is delineated and ambiguous boundaries are resolved [13].

The convergence of spatial attention, depth attention, and cascaded processing within a unified U-Net framework offers a promising way to address longstanding challenges in automated hippocampal segmentation [14]. This integrated approach exploits the complementary strengths of each component: spatial attention for boundary localization, depth attention for feature integration across network layers, and cascaded processing for progressive refinement of the segmentation. Together, these components may achieve accuracy approaching or exceeding that of manual segmentation while retaining the computational efficiency needed for clinical deployment [15].

Clinical applications demand segmentation algorithms that are not only accurate but also robust across imaging protocols, scanner manufacturers, and patient populations. The heterogeneity of clinical MRI data, including variations in field strength, sequence parameters, and image quality, poses additional challenges that must be addressed through careful architectural design and training strategies [16]. Moreover, the growing emphasis on analyzing hippocampal subfields, including CA1, CA2, CA3, the dentate gyrus, and the subicular complex, requires algorithms that can resolve fine anatomical distinctions with sub-millimeter precision [17]. The clinical significance of accurate hippocampal segmentation also extends beyond volumetric quantification to shape analysis, surface-based morphometry, and longitudinal change detection. Segmentation algorithms must therefore provide not only accurate boundaries but also geometrically consistent representations that support downstream analysis. Integrating attention mechanisms into cascaded architectures has the potential to meet these requirements while remaining computationally tractable for large-scale studies [18].

Hippocampus segmentation plays a crucial clinical role in diagnosing and monitoring neurodegenerative diseases, particularly Alzheimer’s disease and mild cognitive impairment. The hippocampus is one of the first brain regions to atrophy as Alzheimer’s disease progresses, so accurate segmentation is essential for early detection and therapeutic monitoring [19]. Quantitative hippocampal volume measurements derived from MRI segmentation enable clinicians to track disease progression, evaluate treatment efficacy, and help predict cognitive decline [3]. Furthermore, automated segmentation facilitates large-scale population studies and reduces the inter-observer variability of manual delineation, providing reliable biomarkers for clinical decision-making. Recent studies have shown that deep learning-based segmentation can reach clinical-grade accuracy, making it a valuable tool for routine clinical practice and longitudinal patient monitoring [14].

This study presents a novel Cascaded Spatial and Depth Attention UNet (CSDA-UNet) architecture designed to address the multifaceted challenges of hippocampal segmentation. The proposed framework incorporates attention mechanisms within a cascaded processing paradigm, progressively refining segmentation masks through multiple stages of increasing specificity [20]. The spatial attention component dynamically weights feature maps according to their anatomical relevance, while the depth attention mechanism optimizes feature integration across network layers. The cascaded architecture processes the imaging data hierarchically, from coarse anatomical localization to fine-grained boundary refinement, thereby achieving segmentation accuracy that surpasses existing state-of-the-art methods [20].

We hypothesize that integrating spatial attention, depth attention, and cascaded processing within a unified U-Net framework will yield better hippocampal segmentation than conventional approaches. This hypothesis rests on the observation that hippocampal segmentation requires simultaneous consideration of spatial context, multi-scale feature integration, and hierarchical processing, which the proposed architecture is designed to provide together. The following sections test this hypothesis through experiments on the ADNI and Medical Segmentation Decathlon hippocampus datasets and comparisons with baseline models.

3. Proposed Method

3.1. Network Architecture

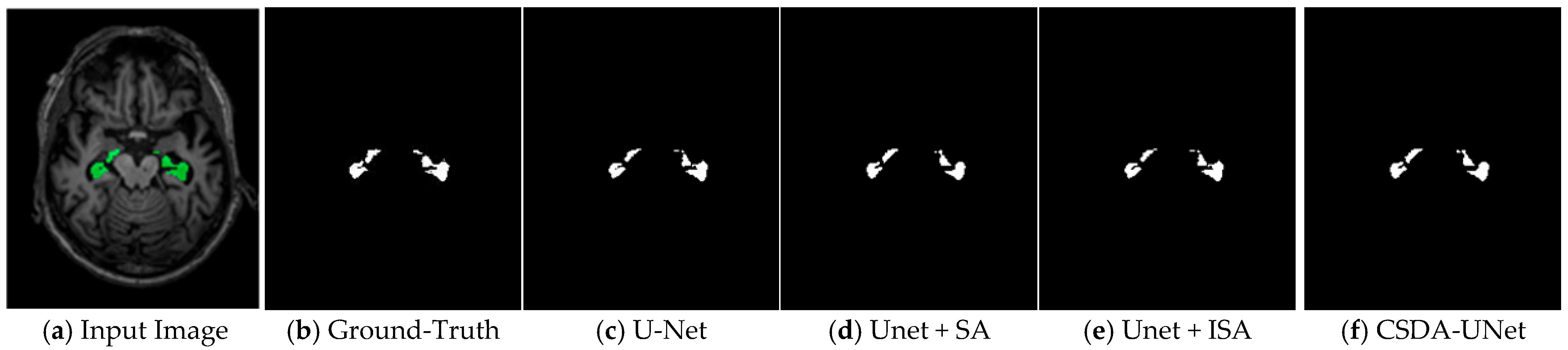

Figure 1 illustrates the proposed CSDA-UNet architecture. It follows the U-Net design and adds a Spatial Attention (SA) module and an Inter-Slice Attention (ISA) module. The input to CSDA-UNet is a single-channel MRI slice of size 256 × 256 × 1.

The encoding path consists of five layers. In each layer, the input feature map passes through two successive 3 × 3 convolutions, each followed by a ReLU activation; in the first four layers, a 2 × 2 max pooling operation then halves the spatial size of the feature map. The output sizes are as follows: the first layer outputs 256 × 256 × 32 (128 × 128 × 32 after pooling); the second, 128 × 128 × 64 (64 × 64 × 64 after pooling); the third, 64 × 64 × 128 (32 × 32 × 128 after pooling); the fourth, 32 × 32 × 256 (16 × 16 × 256 after pooling); and the fifth, 16 × 16 × 512, which is further refined by the SA module.
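As a consistency check on the sizes listed above, the following plain-Python sketch traces the encoder shapes. It assumes "same" padding for the 3 × 3 convolutions (implied by the unchanged spatial sizes) and stride-2 pooling; `encoder_shapes` is a hypothetical helper, not part of the authors' code.

```python
def encoder_shapes(size=256, widths=(32, 64, 128, 256, 512)):
    """Return [(conv_output, pooled_output), ...] as (H, W, C) tuples, one per encoder layer."""
    shapes = []
    h = size
    for level, channels in enumerate(widths, start=1):
        conv_out = (h, h, channels)  # two 3x3 convs with padding=1 keep H and W unchanged
        if level < len(widths):
            h //= 2  # 2x2 max pooling with stride 2 halves H and W
            pooled = (h, h, channels)
        else:
            pooled = None  # the fifth layer is not pooled; it feeds the SA module
        shapes.append((conv_out, pooled))
    return shapes


for layer, (conv_out, pooled) in enumerate(encoder_shapes(), start=1):
    print(layer, conv_out, pooled)
```

The last entry, `((16, 16, 512), None)`, is the bottleneck that the SA module receives.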

The decoding path restores the spatial dimensions of the feature maps through successive upsampling (deconvolution) and convolution operations. Each decoder layer upsamples its input with a 2 × 2 upsampling layer, applies two 3 × 3 convolutions, and receives a skip connection from the encoder layer of matching resolution. The first decoder layer upsamples to 32 × 32 × 256 and connects with the fourth encoder layer; the second upsamples to 64 × 64 × 128 and connects with the third encoder layer; the third upsamples to 128 × 128 × 64 and connects with the second encoder layer; and the fourth upsamples to 256 × 256 × 32 and connects with the first encoder layer. Finally, a 1 × 1 convolution followed by a Sigmoid activation produces a 256 × 256 × 1 segmentation map. After the decoding path, the ISA module further processes the feature map to improve segmentation accuracy.
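To check the decoder sizes listed above, this plain-Python sketch traces each decoder layer. It assumes the standard U-Net pattern in which each upsampling step halves the channel count and the skip connection is a channel-wise concatenation; the text does not say whether skip features are concatenated or added. `decoder_shapes` is a hypothetical helper.

```python
def decoder_shapes(bottleneck_size=16, skip_channels=(256, 128, 64, 32)):
    """Trace (upsampled, concatenated, conv_output) (H, W, C) shapes per decoder layer."""
    h = bottleneck_size
    trace = []
    for channels in skip_channels:
        h *= 2                                # 2x2 upsampling doubles H and W
        upsampled = (h, h, channels)          # assumed: upsampling also halves the channels
        concatenated = (h, h, 2 * channels)   # assumed: concat with the matching encoder map
        conv_out = (h, h, channels)           # two 3x3 convs reduce the channels again
        trace.append((upsampled, concatenated, conv_out))
    final = (h, h, 1)                         # 1x1 conv + Sigmoid -> one probability channel
    return trace, final


trace, final = decoder_shapes()
for layer, shapes in enumerate(trace, start=1):
    print(layer, shapes)
print("output", final)
```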

3.2. Spatial Attention (SA) Module

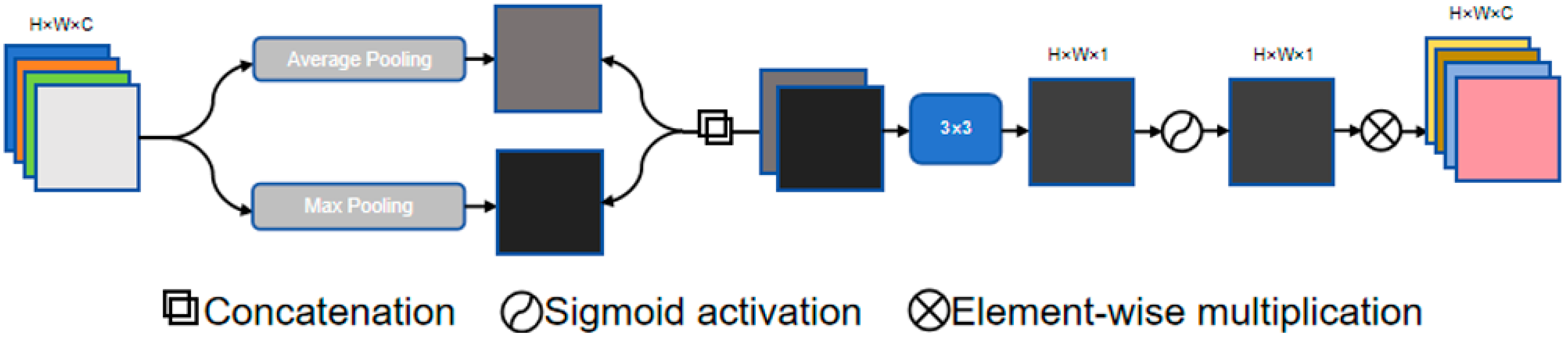

The SA module, inserted at the bottom of the network, generates a spatial attention map that reweights the features of the convolutional layers, as shown in Figure 2. The module first applies max pooling and average pooling to the input feature map to generate two feature descriptor maps, which are concatenated and processed by a convolution. Kernel sizes of 1 × 1, 3 × 3, 5 × 5, and 7 × 7 were considered for this convolution, and its output is passed through a Sigmoid activation to produce the attention map. The attention map emphasizes the informative spatial regions of the features captured by the convolutional layers, thereby improving segmentation accuracy.
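A minimal NumPy sketch of the SA forward pass is shown below. It assumes CBAM-style pooling along the channel axis, a single convolution whose kernel size is set by the shape of `weight` (1, 3, 5, or 7), and element-wise multiplication of the attention map with the input features. Arrays are channels-first `(C, H, W)`, unlike the H × W × C notation in the text. The helper names and random weights are illustrative only; in a trained network the kernel would be a learned parameter, for example a `torch.nn.Conv2d(2, 1, k, padding=k // 2)` layer in PyTorch.

```python
import numpy as np


def conv2d_same(x, weight, bias=0.0):
    """Single-output 2D convolution (cross-correlation) with zero 'same' padding.

    x: (C, H, W) input; weight: (C, k, k) kernel with odd k.
    """
    c, h, w = x.shape
    k = weight.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H and W
    out = np.full((h, w), bias, dtype=float)
    for dy in range(k):
        for dx in range(k):
            # add the contribution of kernel tap (dy, dx), summed over all channels
            out += np.einsum("c,chw->hw", weight[:, dy, dx], xp[:, dy:dy + h, dx:dx + w])
    return out


def spatial_attention(features, weight, bias=0.0):
    """CBAM-style spatial attention on (C, H, W) features; returns (refined, attention_map)."""
    max_map = features.max(axis=0)               # channel-wise max pooling -> (H, W)
    avg_map = features.mean(axis=0)              # channel-wise average pooling -> (H, W)
    descriptors = np.stack([max_map, avg_map])   # concatenated descriptors -> (2, H, W)
    attn = 1.0 / (1.0 + np.exp(-conv2d_same(descriptors, weight, bias)))  # Sigmoid
    return features * attn[None, :, :], attn     # reweight every channel by the same map


rng = np.random.default_rng(0)
bottleneck = rng.standard_normal((512, 16, 16))  # stand-in for the 16x16x512 bottleneck
kernel = 0.1 * rng.standard_normal((2, 3, 3))    # random 3x3 kernel, illustration only
refined, attn_map = spatial_attention(bottleneck, kernel)
print(refined.shape, attn_map.shape)  # (512, 16, 16) (16, 16)
```

With an all-zero kernel the map is a uniform 0.5, so the module simply halves every feature; learned weights make the map vary across spatial positions.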

3.3. Inter-Slice Attention (ISA) Module

The ISA module is integrated at the final stage of the decoding path to improve cross-slice contextual awareness in 3D medical image segmentation. As illustrated in Figure 3, it exploits spatial dependencies between adjacent slices by generating attention maps from the feature maps of the neighboring slices (Slice i − 1 and Slice i + 1) with a lightweight attention mechanism. These attention maps are applied to the current slice’s feature map (Slice i) by element-wise multiplication, weighting the relevant spatial regions. The refined features are fused by element-wise addition and passed through a 1 × 1 convolution followed by a Sigmoid activation to produce the final 256 × 256 × 1 segmentation output.
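The ISA computation can be sketched in NumPy as follows. The text does not specify the "lightweight attention mechanism", so this sketch assumes a hypothetical shared 1 × 1 convolution (a per-pixel weighted sum over channels) followed by a Sigmoid on each neighboring slice's features; `inter_slice_attention`, `conv1x1`, `w_att`, and `w_out` are illustrative names, and the arrays are channels-first `(C, H, W)`.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conv1x1(weights, features, bias=0.0):
    """1x1 convolution to one channel: per-pixel weighted sum over the channels of (C, H, W)."""
    return np.einsum("c,chw->hw", weights, features) + bias


def inter_slice_attention(f_prev, f_cur, f_next, w_att, w_out, b_out=0.0):
    """Sketch of ISA for slice i; f_* are decoder features (C, H, W) of slices i-1, i, i+1."""
    a_prev = sigmoid(conv1x1(w_att, f_prev))  # attention map from slice i-1
    a_next = sigmoid(conv1x1(w_att, f_next))  # attention map from slice i+1
    # weight the current slice by each neighbour's map, then fuse by element-wise addition
    fused = f_cur * a_prev[None] + f_cur * a_next[None]
    return sigmoid(conv1x1(w_out, fused, b_out))  # (H, W) probability map for slice i


rng = np.random.default_rng(1)
prev_f, cur_f, next_f = (rng.standard_normal((32, 256, 256)) for _ in range(3))
prob = inter_slice_attention(prev_f, cur_f, next_f,
                             0.1 * rng.standard_normal(32), 0.1 * rng.standard_normal(32))
print(prob.shape)  # (256, 256)
```

For the first and last slices of a volume one neighbor is missing; repeating the current slice in its place is one possible (assumed) choice.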

3.4. Image Preprocessing and Augmentation

To improve the model’s ability to recognize image features and to mitigate overfitting, several strategies were employed during training. Data augmentation with random horizontal and vertical flips increased the diversity of the training data without altering the inherent details and features of the images. Horizontal flipping simulates the left–right anatomical differences that may occur among individuals, while vertical flipping helps the model learn the effect of vertical position changes on pathological tissue features. Image enhancement was also applied, using Contrast Limited Adaptive Histogram Equalization (CLAHE) to improve local contrast and Gaussian filtering to suppress noise while preserving structural boundaries. In addition, early stopping based on validation loss and learning rate scheduling were used to prevent overfitting.

Figure 4 presents a sample image from the dataset alongside two randomly augmented versions: one horizontally flipped and one vertically flipped.
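The flip augmentation and Gaussian denoising described above can be sketched as follows. The key detail is that each random flip is applied identically to the image and its mask so that the labels stay aligned. The flip probabilities of 0.5 and the `sigma` value are assumptions, not reported settings. CLAHE is omitted here; it is available, for example, as `skimage.exposure.equalize_adapthist` or OpenCV's `cv2.createCLAHE`.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def random_flip(image, mask, rng, p_horizontal=0.5, p_vertical=0.5):
    """Apply the same random flips to a 2D image and its segmentation mask."""
    if rng.random() < p_horizontal:
        image, mask = np.fliplr(image), np.fliplr(mask)  # left-right flip
    if rng.random() < p_vertical:
        image, mask = np.flipud(image), np.flipud(mask)  # up-down flip
    return image, mask


def denoise(image, sigma=1.0):
    """Gaussian smoothing to suppress noise; sigma=1.0 is an assumed value."""
    return gaussian_filter(image.astype(float), sigma=sigma)


rng = np.random.default_rng(42)
slice_img = rng.random((256, 256))
slice_mask = slice_img > 0.7  # toy mask tied to intensities, for illustration only
aug_img, aug_mask = random_flip(slice_img, slice_mask, rng)
smoothed = denoise(aug_img)
print(aug_img.shape, aug_mask.shape, smoothed.shape)
```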

After training, a confusion matrix is used to calculate the evaluation metrics for assessing model performance; the relevant metrics are defined in Table 1. The confusion matrix compares the model’s predictions with the ground truth pixel by pixel, counting true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). From these counts we calculate Accuracy, Precision, Recall, F1 score, the Dice coefficient, and the Intersection over Union (IoU).

The accuracy formula is provided in Equation (1). It is the ratio of correctly classified pixels to the total number of pixels and serves as a basic measure of the overall performance of the model.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Equation (2) provides the formula for precision, the proportion of pixels predicted as positive that are actually positive; it reflects how reliable the model’s positive predictions are.

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{2}$$

Equation (3) provides the formula for recall, also known as the true positive rate or sensitivity. Recall measures the proportion of actual positive pixels that the model correctly identifies and is an important measure of the model’s ability to detect the target structure. In contexts such as clinical diagnosis, a high recall indicates a lower rate of missed diagnoses.

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{3}$$

The Dice coefficient, defined in Equation (4), is twice the number of true positives divided by the sum of predicted positives (TP + FP) and actual positives (TP + FN); it is widely used in medical image segmentation. For binary segmentation it is identical to the F1 score.

$$\mathrm{Dice} = \frac{2\,TP}{2\,TP + FP + FN} \tag{4}$$

Equation (5) provides the formula for the Intersection over Union (IoU), also known as the Jaccard index, which is the ratio of the overlap between predicted and actual positives to their union.

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN} \tag{5}$$
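The metric definitions above translate directly into code. This plain-Python sketch computes them from flattened binary masks. Returning 1.0 when a denominator is zero (for example, a slice in which neither the prediction nor the ground truth contains hippocampus) is an assumed convention, since the text does not state how empty slices are handled.

```python
def _ratio(num, den):
    # Assumed convention: an empty denominator counts as perfect agreement.
    return num / den if den else 1.0


def segmentation_metrics(pred, target):
    """pred, target: flat sequences of 0/1 pixel labels of equal length."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same number of pixels")
    tp = sum(1 for p, t in zip(pred, target) if p and t)
    fp = sum(1 for p, t in zip(pred, target) if p and not t)
    fn = sum(1 for p, t in zip(pred, target) if not p and t)
    tn = len(pred) - tp - fp - fn
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": _ratio(tp + tn, len(pred)),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "dice": _ratio(2 * tp, 2 * tp + fp + fn),
        "iou": _ratio(tp, tp + fp + fn),
    }


print(segmentation_metrics([1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0]))
```

For this toy input (TP = 2, FP = 1, FN = 1, TN = 2) the sketch gives Dice = 4/6 ≈ 0.667 and IoU = 2/4 = 0.5, and the F1 score equals the Dice coefficient.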

To evaluate the proposed CSDA-UNet and the baseline models (U-Net, U-Net + SA, U-Net + ISA) robustly, we used statistical methods to validate performance differences. Paired t-tests were conducted to compare the Dice coefficient and IoU scores of CSDA-UNet with those of each baseline model on the ADNI and Decathlon datasets. The null hypothesis is that there is no difference in the performance metrics between models, with a significance level of α = 0.05. Additionally, we used 5-fold cross-validation to assess model generalizability, which is particularly important given the limited size of the ADNI dataset. Confidence intervals (95%) were calculated for the key metrics (Dice, IoU) to quantify the precision of the reported results. These analyses assess whether the performance improvements of CSDA-UNet are statistically significant and reliable enough to support clinical applications.
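A paired comparison of two models can be sketched with SciPy's `scipy.stats.ttest_rel`, together with a t-based 95% confidence interval for the mean difference. The sketch assumes that the per-case (or per-fold) scores of both models are available as equal-length lists in the same order; the numbers below are made up for illustration and are not results from this study.

```python
from statistics import mean, stdev

from scipy import stats


def paired_comparison(scores_a, scores_b, alpha=0.05):
    """Paired t-test of scores_a vs scores_b plus a (1 - alpha) CI for the mean difference."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equal-length score lists with at least two pairs")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    result = stats.ttest_rel(scores_a, scores_b)  # two-sided by default
    se = stdev(diffs) / n ** 0.5                  # standard error of the mean difference
    t_crit = stats.t.ppf(1 - alpha / 2, df=n - 1)
    m = mean(diffs)
    return {
        "t": float(result.statistic),
        "p": float(result.pvalue),
        "mean_diff": m,
        "ci": (m - t_crit * se, m + t_crit * se),
        "significant": bool(result.pvalue < alpha),
    }


# Illustrative (made-up) per-case Dice scores for two models on the same five cases.
model_a = [0.90, 0.92, 0.91, 0.93, 0.94]
model_b = [0.88, 0.89, 0.90, 0.90, 0.91]
print(paired_comparison(model_a, model_b))
```

For these illustrative scores the mean difference is 0.024 and t = 6.0 with 4 degrees of freedom, giving p < 0.05 and a confidence interval that excludes zero.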

3.5. Experimental Environment and Dataset

The experimental framework for this study was implemented using Python 3.8 and the PyTorch 2.0.1 deep learning library, executed within a CUDA 11.7-enabled environment to leverage GPU acceleration. The hardware and software configurations utilized for model development and training are summarized in Table 2.

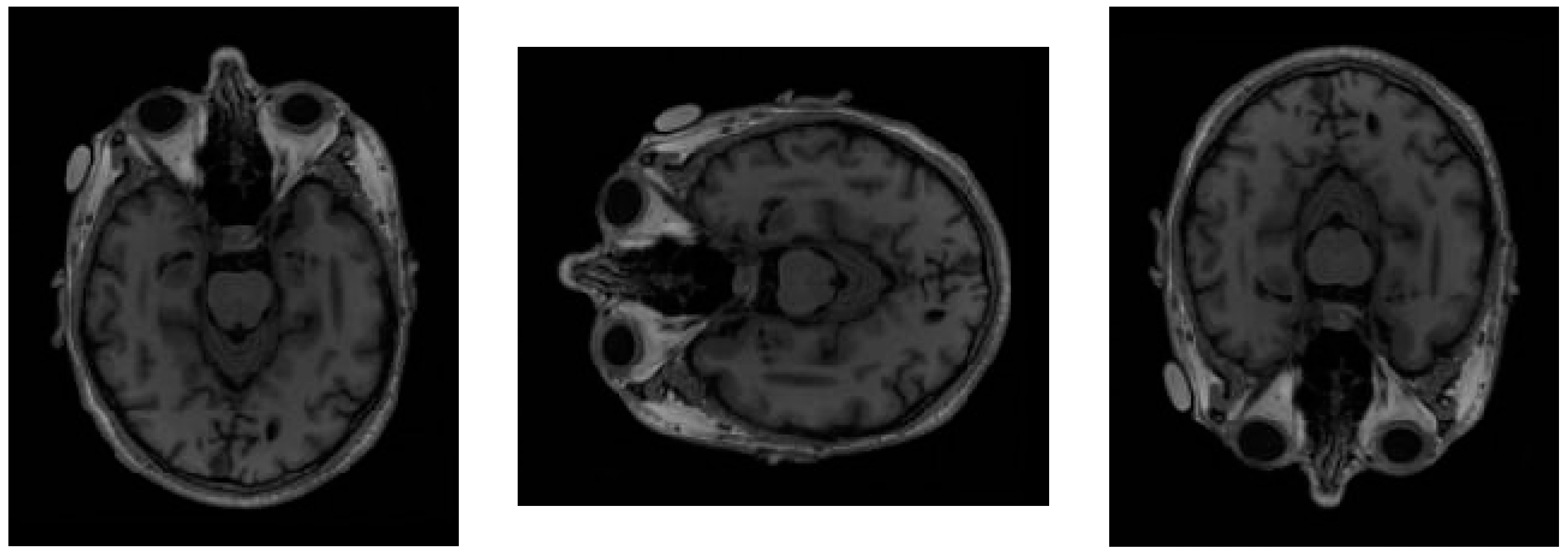

For empirical validation, we employed two hippocampus segmentation datasets: the Alzheimer’s Disease Neuroimaging Initiative (ADNI) dataset [

34] and the Decathlon Hippocampus dataset [

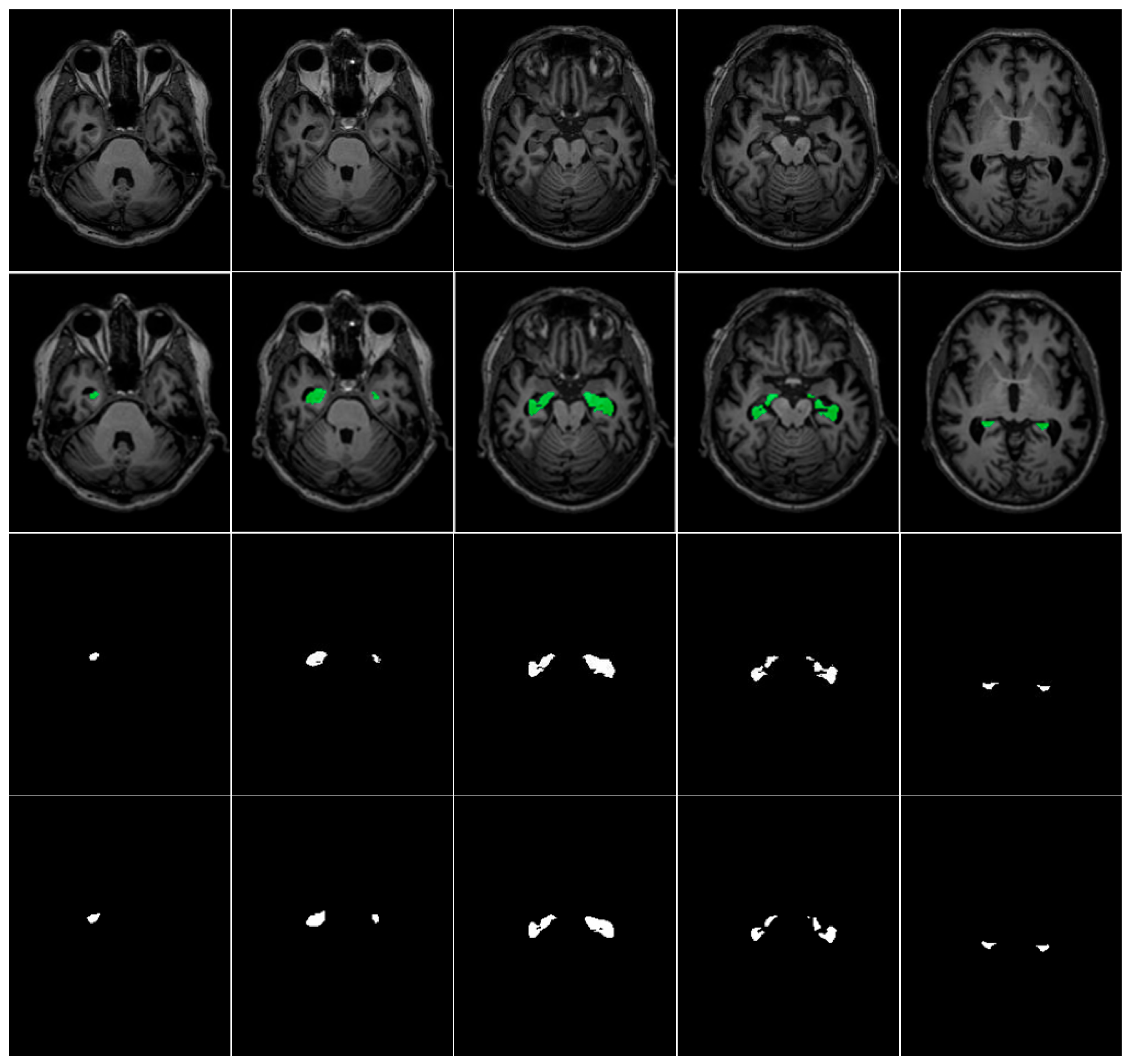

35]. Both datasets contain T1-weighted MRI scans and are publicly accessible resources for advancing Alzheimer’s Disease understanding and early diagnosis. The ADNI dataset comprises cross-sectional T1-weighted MRI scans from 135 subjects, with each subject contributing 189 axial slices (30 slices containing visible hippocampal structures on average). For model training and evaluation, data from 100 subjects (18,900 images) were allocated to the training set, while the remaining 35 subjects (6615 images) formed the test set. The Decathlon Hippocampus dataset includes T1-weighted MRI scans from 390 patients, with 30–34 slices per patient (9–10 slices with masks). The dataset was split into 260 patients (9270 images) for training and 130 patients (4499 images) for testing.
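Splitting by subject rather than by slice keeps all 189 slices of a subject in the same partition, so no subject's anatomy leaks from training into testing. The sketch below reproduces the ADNI slice counts under that scheme; the random shuffling, the seed, and the helper name `subject_level_split` are assumptions, since the text does not say how subjects were assigned.

```python
import random

SLICES_PER_SUBJECT = 189  # axial slices per ADNI subject, as stated in the text


def subject_level_split(subject_ids, n_train, seed=0):
    """Shuffle subject IDs (assumed random assignment) and split them into train/test."""
    ids = list(subject_ids)
    random.Random(seed).shuffle(ids)
    return ids[:n_train], ids[n_train:]


train_ids, test_ids = subject_level_split(range(135), n_train=100)
print(len(train_ids) * SLICES_PER_SUBJECT, len(test_ids) * SLICES_PER_SUBJECT)  # 18900 6615
```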

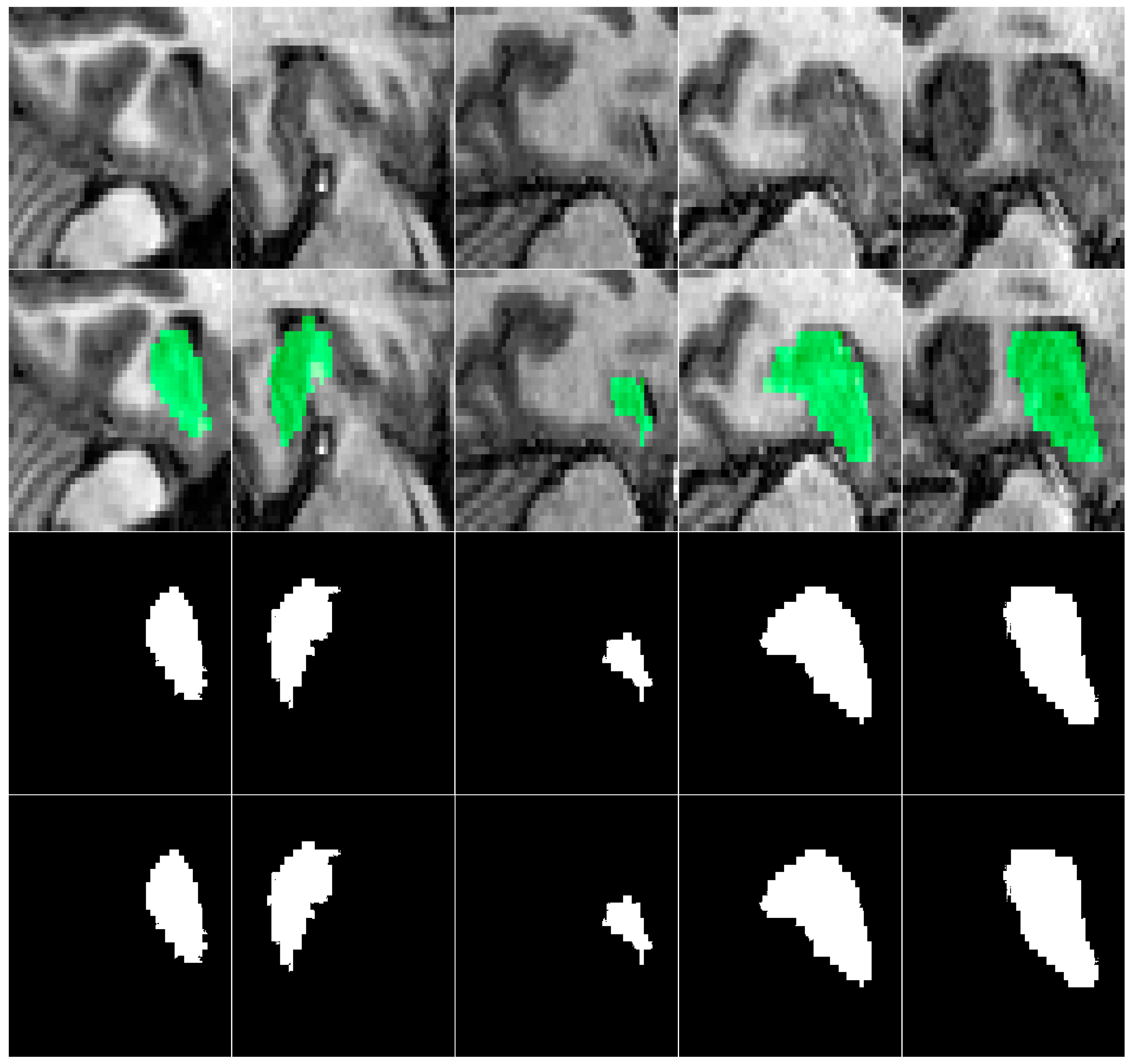

Table 3 summarizes the ADNI and Decathlon datasets used for hippocampus segmentation in our experiments. A representative visualization of hippocampal ground-truth annotations overlaid on the corresponding MRI slices from the ADNI dataset is presented in Figure 5. These carefully annotated data provide a reliable reference for benchmarking hippocampus segmentation models, particularly for neurodegenerative disease assessment and clinical deployment.

Training uses the Adam optimizer with a binary cross-entropy loss, a learning rate of 0.001, a batch size of 4, and 200 training epochs. Images are zero-padded to 256 × 256 before being input into the model, and the outputs are cropped back to the original size, which avoids the distortion that resizing would introduce.
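The zero-padding and cropping step can be sketched in NumPy as below. Centering the image inside the 256 × 256 canvas is an assumption (the text only states that zero-padding is used); the important property is that the crop exactly inverts the padding, so predictions are mapped back to the original grid without resampling. The 35 × 51 input is an arbitrary small size chosen for illustration.

```python
import numpy as np

TARGET = 256


def pad_to_square(image, size=TARGET):
    """Zero-pad a 2D slice to size x size, centring it (assumed placement)."""
    h, w = image.shape
    if h > size or w > size:
        raise ValueError(f"slice {h}x{w} is larger than {size}x{size}; padding cannot shrink it")
    top, left = (size - h) // 2, (size - w) // 2
    canvas = np.zeros((size, size), dtype=image.dtype)
    canvas[top:top + h, left:left + w] = image
    return canvas, (top, left, h, w)


def crop_back(output, box):
    """Undo pad_to_square on a model output of shape (size, size)."""
    top, left, h, w = box
    return output[top:top + h, left:left + w]


slice_img = np.random.default_rng(0).random((35, 51))
padded, box = pad_to_square(slice_img)
restored = crop_back(padded, box)
print(padded.shape, restored.shape)  # (256, 256) (35, 51)
```

The remaining settings map onto standard PyTorch components, for example `torch.optim.Adam(model.parameters(), lr=1e-3)`, `torch.nn.BCELoss()` applied to the Sigmoid output, and a `torch.utils.data.DataLoader` with `batch_size=4`.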

5. Conclusions

This research applies a U-Net-based deep learning architecture augmented with a Spatial Attention (SA) module and an Inter-Slice Attention (ISA) module to segment the hippocampal region in MRI images precisely. By applying the proposed technique to human brain MRI scans from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) and Decathlon databases, this study validates the effectiveness of the U-Net architecture for medical image segmentation and indicates its potential utility in clinical applications. Experimental evaluations show that a 3 × 3 convolutional kernel within the SA module yields the best segmentation performance among the kernel sizes tested on T1-weighted hippocampal MRI images. The highest IoU, Dice coefficient, and accuracy reached 0.9345, 0.9512, and 0.98, respectively, on the ADNI dataset [34], while on the Decathlon dataset [35] the model achieved IoU scores of 0.9816/0.8132 (train/validation), Dice scores of 0.9907/0.8963 (train/validation), and accuracy of 0.9952/0.9832 (train/validation), outperforming existing approaches on both datasets [34,35]. While the ADNI-derived hippocampus dataset provides high-quality annotations, its small size may limit generalization and increase the risk of overfitting for complex 3D architectures, and its single-source origin may reduce robustness to inter-scanner variability. To address these limitations, we validated the model on the Medical Segmentation Decathlon hippocampus dataset, where it showed consistent performance improvements, supporting its broader clinical generalizability. Statistical validation via paired t-tests (p < 0.05) and 5-fold cross-validation indicates significant improvements over the baselines.

This study enhances the conventional U-Net architecture by integrating dual attention mechanisms and optimizing the convolutional kernel size within the spatial attention module. These modifications substantially improve the model’s ability to capture the complex spatial features of the hippocampal region, which is important given the structure’s variability in brain MRI scans. Precise hippocampal segmentation is critical for early detection and monitoring of neurodegenerative disorders such as Alzheimer’s disease. The proposed framework advances automated hippocampal volumetric assessment, and incorporating such AI-driven segmentation into clinical decision-support systems could improve diagnostic accuracy and operational efficiency.

Future work will focus on enhancing clinical translational value through volumetric consistency analysis, hippocampal subfield segmentation, and correlation studies with cognitive assessments to bridge the gap between technical accuracy and clinical utility.