From Fragment to One Piece: A Review on AI-Driven Graphic Design

Abstract

1. Introduction

2. Background

- A raster image is a two-dimensional array of pixel values. The pixel is its fundamental unit, so the level of detail an image can represent is bounded by its resolution.

- Scalable Vector Graphics (SVG) records content as mathematical descriptions, such as the endpoint parameters needed to draw a straight line, so it can be rendered at any scale without loss of quality.
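To make the contrast between the two representations concrete, the sketch below stores the same diagonal line both ways. It is illustrative only: the helper names `rasterize_line` and `svg_line` are hypothetical, and the rasterizer uses simple evenly spaced sampling rather than any production algorithm. The raster grid has to be regenerated, and grows quadratically, as resolution increases, while the SVG description remains a handful of parameters.

```python
# Illustrative sketch: one diagonal line stored as a raster grid and as SVG.
# rasterize_line and svg_line are hypothetical helper names.

def rasterize_line(width, height, x0, y0, x1, y1):
    """Return a height x width grid of 0/1 pixel values approximating a line
    from (x0, y0) to (x1, y1) by sampling evenly spaced points along it."""
    grid = [[0] * width for _ in range(height)]
    steps = max(abs(x1 - x0), abs(y1 - y0)) or 1
    for i in range(steps + 1):
        t = i / steps
        x = round(x0 + t * (x1 - x0))
        y = round(y0 + t * (y1 - y0))
        if 0 <= x < width and 0 <= y < height:
            grid[y][x] = 1
    return grid


def svg_line(x1, y1, x2, y2, size=8, stroke="black"):
    """Return an SVG document that stores only the line's endpoint parameters.
    The viewBox lets a viewer scale it to any display size."""
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 {size} {size}">'
        f'<line x1="{x1}" y1="{y1}" x2="{x2}" y2="{y2}" stroke="{stroke}"/>'
        "</svg>"
    )


# The raster must be regenerated for each resolution, and its cell count grows
# quadratically with the side length: 8x8 = 64 cells versus 64x64 = 4096 cells.
small = rasterize_line(8, 8, 0, 0, 7, 7)
large = rasterize_line(64, 64, 0, 0, 63, 63)
print(len(small) * len(small[0]), len(large) * len(large[0]))  # 64 4096

# The vector description stays the same few parameters at every scale.
print(svg_line(0, 0, 7, 7))
```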

3. Perception Tasks

3.1. Non-Text Element Perception

3.1.1. Object Recognition in Raster Image

3.1.2. SVG Recognition

3.2. Text Element Perception

3.2.1. Optical Character Recognition (OCR)

3.2.2. Font Recognition

3.3. Layout Analysis

3.4. Aesthetic Understanding

3.4.1. Color Palettes Recommendation

3.4.2. Other Aesthetic Attributes

3.5. Summary

4. Generation Tasks

4.1. Non-Text Element Generation

4.1.1. SVG Generation

4.1.2. Vectorization of Artist-Generated Imagery

4.2. Text Element Generation

4.2.1. Artistic Typography Generation

4.2.2. Visual Text Rendering

4.3. Layout Generation

4.3.1. Automatic Layout Generation

4.3.2. Glyph Layout Generation

4.4. Colorization

4.5. Summary

5. Present and Future

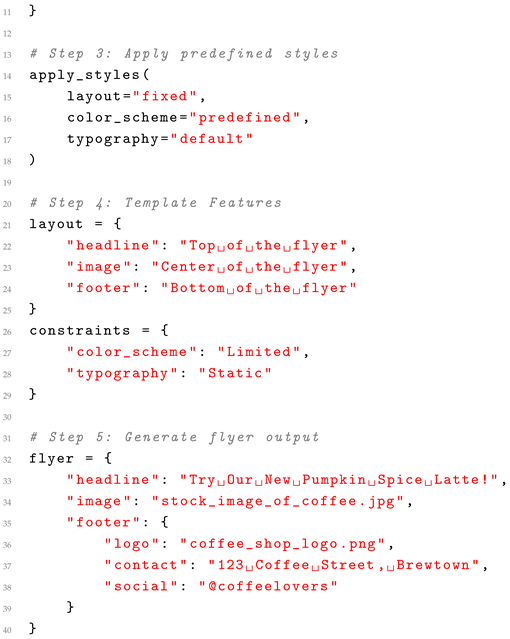

5.1. Analysis Within Perception Tasks

5.2. Analysis Within Generation Tasks

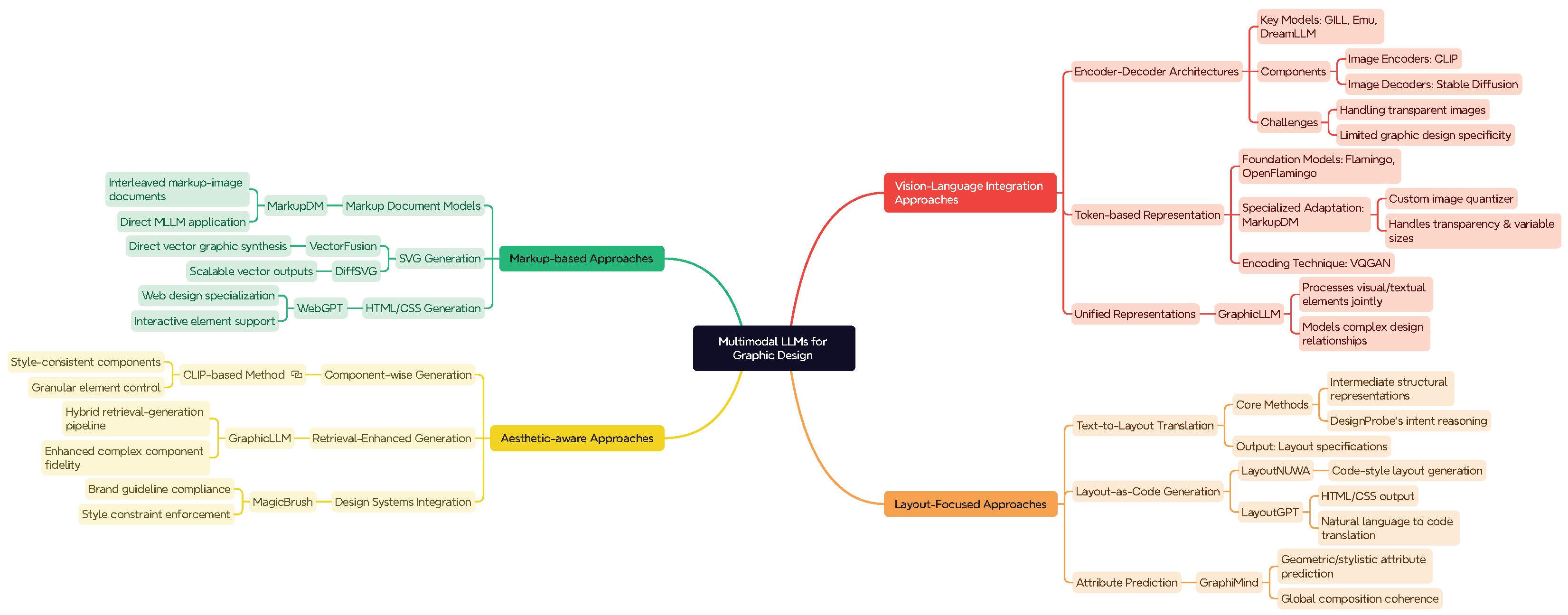

5.3. MLLM for Graphic Design

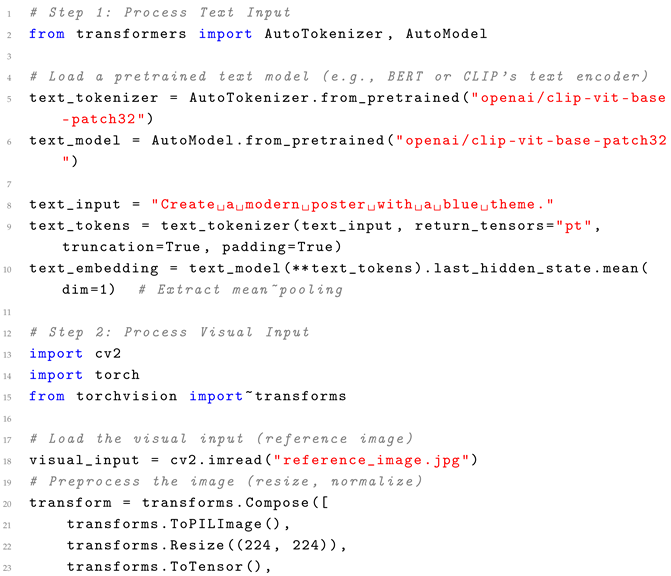

Listing 1. Pseudocode for Multimodal Input Processing with Practical Integration.

- Encoder–Decoder Architectures: Models such as DreamLLM [247] integrate LLMs with pre-trained image encoders (e.g., CLIP [171]) and decoders (e.g., Stable Diffusion [241]). While powerful for general image generation, these approaches struggle with the transparent images common in graphic design [248]. OpenCOLE [249] begins by translating user intentions into a design plan using GPT-3.5 with in-context learning. Image and typography generation modules then synthesize design elements according to that plan, and a graphic renderer assembles the final image.

- Token-based Representation: An alternative approach represents images as discrete tokens [10,250,251]. This method encodes images into token sequences via image quantizers like VQGAN [252]. The MarkupDM approach [248] adapts this methodology specifically for graphic design by developing a custom quantizer that handles transparency and varying image sizes.

- Unified Models: GraphicLLM [253] proposes a multimodal model that processes both visual and textual design elements within a unified framework, modeling the complex relationships between them.

- Text-to-Layout Translation: The authors of [254] utilize LLMs to translate descriptions into intermediate structural representations that guide subsequent layout generation. DesignProbe [19] extends this by introducing a reasoning mechanism where LLMs analyze design intent before generating structured layout specifications.

- Attribute Prediction: GraphiMind [25] employs MLLMs to predict geometric and stylistic attributes for design elements while maintaining global coherence across the entire composition.

- Component-wise Generation: The authors of [255] propose a method that leverages CLIP embeddings to generate stylistically consistent design components. VASCAR [256] performs content-aware layout generation with a large vision–language model. Design-o-meter [257] is the first work to score and refine designs within a unified framework, adjusting the layout of design elements to achieve higher aesthetic scores.

- Retrieval-Enhanced Generation: GraphicLLM [253] combines generative capabilities with retrieval mechanisms that reuse existing design elements, achieving higher-fidelity results for complex graphic components.

- Design Systems Integration: MagicBrush [258] integrates with design systems to ensure generated elements conform to established brand guidelines and stylistic constraints.

- Markup Document Models: MarkupDM [248] introduces a novel approach that treats graphic designs as interleaved multimodal documents consisting of markup language and images. This representation allows multimodal LLMs to be applied directly to graphic design tasks.

- SVG Generation: VectorFusion [126] focuses on generating vector graphics (SVG) directly, providing the resolution independence that professional graphic design workflows require.

- HTML/CSS Generation: WebGPT [259] generates web-based designs by producing HTML and CSS code, demonstrating the potential of code-centric approaches for interactive designs.
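The token-based representation described above hinges on a quantizer that turns continuous image features into discrete indices. The following minimal sketch shows only the nearest-codebook lookup used by VQ-style tokenizers such as VQGAN; it assumes the patch features are already computed and uses a small hand-written codebook, whereas a real quantizer learns its encoder, codebook, and decoder jointly. The names `quantize` and `dequantize` are hypothetical.

```python
# Minimal sketch of the codebook lookup at the heart of VQ-style image tokenizers.
# Assumption: patch features are precomputed; a real VQGAN learns the encoder,
# codebook, and decoder, which this toy example replaces with fixed values.
from typing import List, Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(features: List[Sequence[float]], codebook: List[Sequence[float]]) -> List[int]:
    """Map each patch feature vector to the index of its nearest codebook entry."""
    return [
        min(range(len(codebook)), key=lambda k: squared_distance(f, codebook[k]))
        for f in features
    ]


def dequantize(tokens: List[int], codebook: List[Sequence[float]]) -> List[Sequence[float]]:
    """Replace each token with its codebook vector (a decoder's input in a real model)."""
    return [codebook[t] for t in tokens]


# Toy 2-D "patch features" for a 2x2 grid of patches, flattened in raster order.
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
features = [(0.1, 0.2), (0.9, 0.1), (0.2, 0.8), (0.95, 0.9)]
tokens = quantize(features, codebook)
print(tokens)  # [0, 1, 2, 3]
```

The resulting integer sequence is the kind of input an LLM can consume alongside text tokens. This toy version ignores transparency and variable image sizes, which are exactly the cases the custom quantizer in MarkupDM [248] is described as handling.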

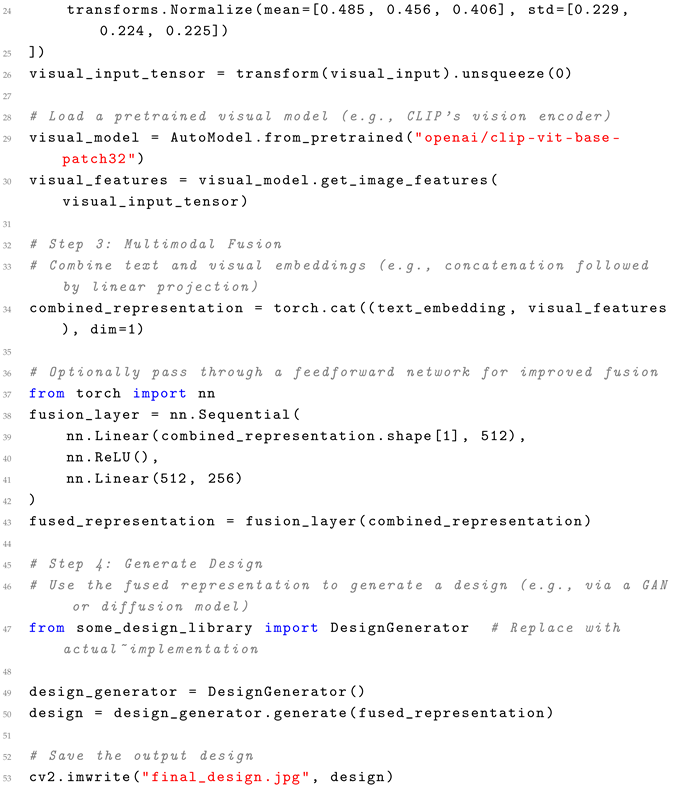

Listing 2. Case study: traditional template-based graphic design.

5.4. Existing Challenges

5.5. Potential Directions

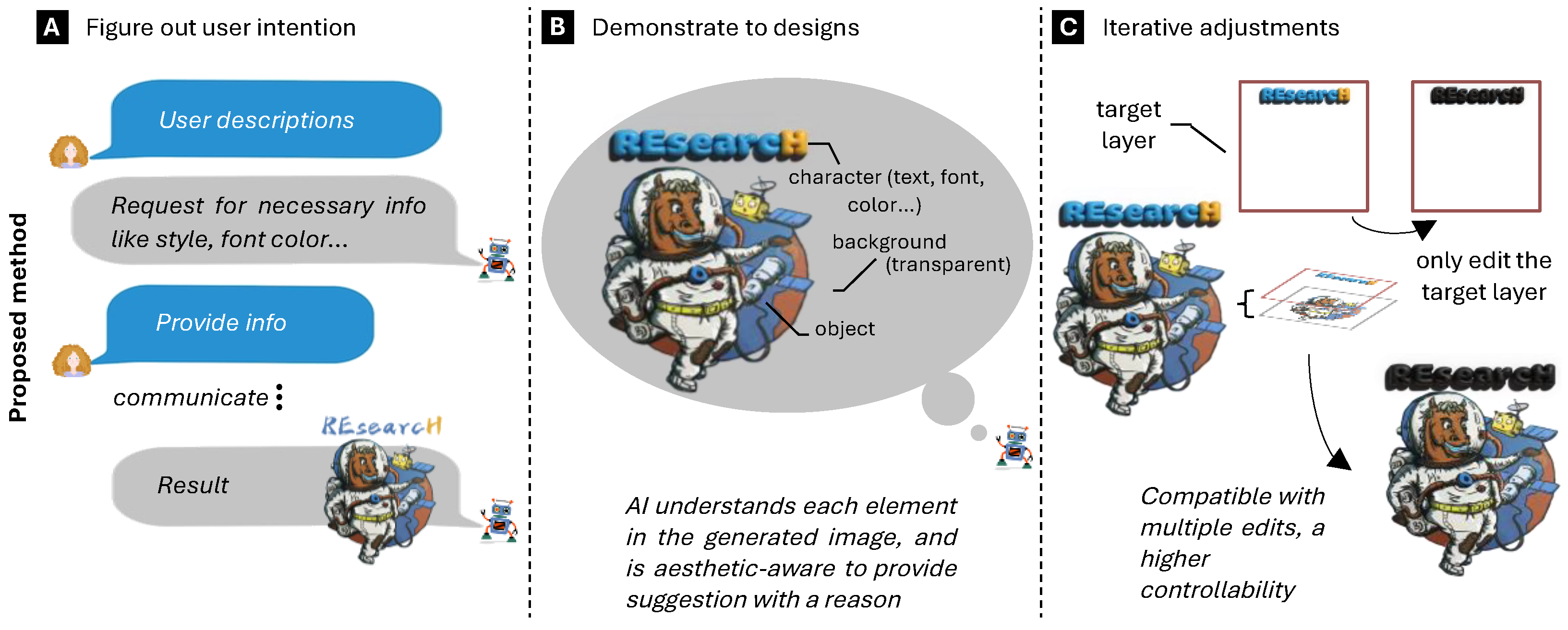

- Multimodal Intent Understanding. Current multimodal models integrating dialogue and visual recognition provide a foundation for intent understanding but require significant enhancement in several key areas: (1) Graphic design presents unique challenges with artistic images featuring diverse fonts and complex layouts that exceed the capabilities of general-purpose recognition systems. (2) Three-dimensional designs, text with special effects (overlapping, bending, distortion), and artistic typography demand specialized recognition approaches. (3) Enhanced communicative abilities in large language models are needed to translate ambiguous user inputs into coherent, actionable design specifications.

- Knowledge-Enhanced Layout Reasoning. The computational representation of abstract design principles presents significant challenges. Drawing inspiration from advanced reasoning models like OpenAI o1, research should focus on the following: (1) Encoding established design theories within computational frameworks. (2) Developing inference mechanisms that can apply these principles contextually. (3) Creating evaluation metrics that align with human aesthetic judgment. (4) Building models that can explain their layout decisions with reference to design principles.

- High-Quality Visual Element Generation. Layer diffusion techniques show promise for creating images with transparent backgrounds, a critical requirement for graphic design. However, text generation still requires substantial improvement, particularly for artistic typography, where models like Flux.1 show promise but lack sufficient fidelity. Meanwhile, LLM-guided approaches for generating vector graphics, exemplified by tools like SVGDreamer, offer precision and scalability advantages. Research should focus on enhancing text rendering and incorporating deeper reasoning about design principles. Finally, models that move seamlessly between raster and vector formats could greatly improve workflow efficiency by combining the advantages of both paradigms, as suggested by [196].
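Transparency matters because a design is assembled by stacking elements: a generated element without an alpha channel would cover everything beneath it with its own backdrop. As an illustration of what a renderer does with transparent layers, here is a minimal sketch of Porter–Duff "over" compositing for single pixels, assuming straight (non-premultiplied) alpha with channels in [0, 1]. The function names are hypothetical, and a real renderer would operate on whole images rather than individual pixels.

```python
# Minimal sketch of Porter-Duff "over" compositing for single RGBA pixels,
# assuming straight (non-premultiplied) alpha with all channels in [0, 1].
from typing import Iterable, Tuple

RGBA = Tuple[float, float, float, float]


def over(top: RGBA, bottom: RGBA) -> RGBA:
    """Composite `top` over `bottom` and return the resulting straight-alpha pixel."""
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1.0 - ta)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)  # both layers fully transparent

    def blend(tc: float, bc: float) -> float:
        # Weight each color by its effective coverage, then un-premultiply.
        return (tc * ta + bc * ba * (1.0 - ta)) / out_a

    return (blend(tr, br), blend(tg, bg), blend(tb, bb), out_a)


def composite(layers: Iterable[RGBA]) -> RGBA:
    """Stack layers listed bottom-to-top, starting from a fully transparent pixel."""
    result: RGBA = (0.0, 0.0, 0.0, 0.0)
    for layer in layers:
        result = over(layer, result)
    return result


background = (1.0, 1.0, 1.0, 1.0)  # opaque white canvas
element_edge = (1.0, 0.0, 0.0, 0.5)  # half-transparent red pixel from a design element
print(composite([background, element_edge]))  # (1.0, 0.5, 0.5, 1.0)
```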

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jiang, S.; Wang, Z.; Hertzmann, A.; Jin, H.; Fu, Y. Visual font pairing. IEEE Trans. Multimed. 2019, 22, 2086–2097.

- Weng, H.; Huang, D.; Zhang, T.; Lin, C.Y. Learn and Sample Together: Collaborative Generation for Graphic Design Layout. In Proceedings of the International Joint Conference on Artificial Intelligence, Macao, China, 19–25 August 2023; pp. 5851–5859.

- Feng, W.; Zhu, W.; Fu, T.J.; Jampani, V.; Akula, A.; He, X.; Basu, S.; Wang, X.E.; Wang, W.Y. LayoutGPT: Compositional visual planning and generation with LLMs. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Volume 36, pp. 18225–18250.

- Tan, J.; Lien, J.M.; Gingold, Y. Decomposing images into layers via RGB-space geometry. ACM Trans. Graph. (TOG) 2016, 36, 1–14.

- Tan, J.; Echevarria, J.; Gingold, Y. Efficient palette-based decomposition and recoloring of images via RGBXY-space geometry. ACM Trans. Graph. (TOG) 2018, 37, 1–10.

- Wang, Y.; Liu, Y.; Xu, K. An improved geometric approach for palette-based image decomposition and recoloring. In Proceedings of the International Joint Conference on Artificial Intelligence, Porto, Portugal, 3–7 June 2019; pp. 11–22.

- Zhang, R.; Isola, P.; Efros, A.A. Colorful image colorization. In Computer Vision—ECCV 2016. ECCV 2016; Springer: Cham, Switzerland, 2016; pp. 649–666.

- Vitoria, P.; Raad, L.; Ballester, C. Adversarial picture colorization with semantic class distribution. In Proceedings of the Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 11–14 October 2020; pp. 45–54.

- Huang, D.; Guo, J.; Sun, S.; Tian, H.; Lin, J.; Hu, Z.; Lin, C.Y.; Lou, J.G.; Zhang, D. A survey for graphic design intelligence. arXiv 2023, arXiv:2309.01371.

- Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual instruction tuning. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Volume 36, pp. 34892–34916.

- Hu, H.; Chan, K.C.; Su, Y.C.; Chen, W.; Li, Y.; Sohn, K.; Zhao, Y.; Ben, X.; Gong, B.; Cohen, W.; et al. Instruct-Imagen: Image generation with multi-modal instruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 4754–4763.

- Epstein, Z.; Hertzmann, A.; Investigators of Human Creativity; Akten, M.; Farid, H.; Fjeld, J.; Frank, M.R.; Groh, M.; Herman, L.; Leach, N.; et al. Art and the science of generative AI. Science 2023, 380, 1110–1111.

- Tian, X.; Günther, T. A survey of smooth vector graphics: Recent advances in representation, creation, rasterization and image vectorization. IEEE Trans. Vis. Comput. Graph. 2022, 30, 1652–1671.

- Shi, Y.; Shang, M.; Qi, Z. Intelligent layout generation based on deep generative models: A comprehensive survey. Inf. Fusion 2023, 100, 140.

- Liu, Z.; Liu, F.; Zhang, M. Intelligent Graphic Layout Generation: Current Status and Future Perspectives. In Proceedings of the International Conference on Computer Supported Cooperative Work in Design, Tianjin, China, 8–10 May 2024; pp. 2632–2637.

- Tang, Y.; Ciancia, M.; Wang, Z.; Gao, Z. What’s Next? Exploring Utilization, Challenges, and Future Directions of AI-Generated Image Tools in Graphic Design. arXiv 2024, arXiv:2406.13436.

- Zhang, J.; Huang, J.; Jin, S.; Lu, S. Vision-language models for vision tasks: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 5625–5644.

- Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping language-image pre-training for unified vision-language understanding and generation. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 12888–12900.

- Lin, J.; Huang, D.; Zhao, T.; Zhan, D.; Lin, C.Y. DesignProbe: A Graphic Design Benchmark for Multimodal Large Language Models. arXiv 2024, arXiv:2404.14801.

- Cheng, Y.; Zhang, Z.; Yang, M.; Nie, H.; Li, C.; Wu, X.; Shao, J. Graphic Design with Large Multimodal Model. arXiv 2024, arXiv:2404.14368.

- Xiao, S.; Wang, Y.; Zhou, J.; Yuan, H.; Xing, X.; Yan, R.; Li, C.; Wang, S.; Huang, T.; Liu, Z. OmniGen: Unified image generation. arXiv 2024, arXiv:2409.11340.

- Zhou, C.; Yu, L.; Babu, A.; Tirumala, K.; Yasunaga, M.; Shamis, L.; Kahn, J.; Ma, X.; Zettlemoyer, L.; Levy, O. Transfusion: Predict the next token and diffuse images with one multi-modal model. arXiv 2024, arXiv:2408.11039.

- Meggs, P.B. Type and Image: The Language of Graphic Design; John Wiley & Sons: Hoboken, NJ, USA, 1992.

- Dou, S.; Jiang, X.; Liu, L.; Ying, L.; Shan, C.; Shen, Y.; Dong, X.; Wang, Y.; Li, D.; Zhao, C. Hierarchical Recognizing Vector Graphics and A New Chart-based Dataset. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 7556–7573.

- Huang, Q.; Lu, M.; Lanir, J.; Lischinski, D.; Cohen-Or, D.; Huang, H. GraphiMind: LLM-centric Interface for Information Graphics Design. arXiv 2024, arXiv:2401.13245.

- Ding, S.; Chen, X.; Fang, Y.; Liu, W.; Qiu, Y.; Chai, C. DesignGPT: Multi-Agent Collaboration in Design. In Proceedings of the International Symposium on Computational Intelligence and Design, Hangzhou, China, 16–17 December 2023; pp. 204–208.

- Weng, H.; Huang, D.; Qiao, Y.; Hu, Z.; Lin, C.Y.; Zhang, T.; Chen, C.L. Desigen: A Pipeline for Controllable Design Template Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 21–32.

- Luo, W.; Zhang, H.; Li, J.; Wei, X.S. Learning semantically enhanced feature for fine-grained image classification. IEEE Signal Process. Lett. 2020, 27, 1545–1549.

- O’Gorman, L. The document spectrum for page layout analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 1162–1173.

- Long, S.; Qin, S.; Panteleev, D.; Bissacco, A.; Fujii, Y.; Raptis, M. Towards end-to-end unified scene text detection and layout analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1049–1059.

- Cheng, H.; Zhang, P.; Wu, S.; Zhang, J.; Zhu, Q.; Xie, Z.; Li, J.; Ding, K.; Jin, L. M6Doc: A large-scale multi-format, multi-type, multi-layout, multi-language, multi-annotation category dataset for modern document layout analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 15138–15147.

- Luo, C.; Shen, Y.; Zhu, Z.; Zheng, Q.; Yu, Z.; Yao, C. LayoutLLM: Layout Instruction Tuning with LLMs for Document Understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 15630–15640.

- Chen, Y.; Zhang, J.; Peng, K.; Zheng, J.; Liu, R.; Torr, P.; Stiefelhagen, R. RoDLA: Benchmarking the Robustness of Document Layout Analysis Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 15556–15566.

- Zhang, N.; Cheng, H.; Chen, J.; Jiang, Z.; Huang, J.; Xue, Y.; Jin, L. M2Doc: A Multi-Modal Fusion Approach for Document Layout Analysis. In Proceedings of the Association for the Advancement of Artificial Intelligence, London, UK, 17–19 October 2024; pp. 7233–7241.

- Chen, R.; Cheng, J.K.; Ma, J. A Fusion Framework of Whitespace Smear Cutting and Swin Transformer for Document Layout Analysis. In Proceedings of the International Conference on Intelligent Computing, Tianjin, China, 5–8 August 2024; pp. 338–353.

- Kong, Y.; Luo, C.; Ma, W.; Zhu, Q.; Zhu, S.; Yuan, N.; Jin, L. Look closer to supervise better: One-shot font generation via component-based discriminator. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13482–13491.

- He, H.; Chen, X.; Wang, C.; Liu, J.; Du, B.; Tao, D.; Yu, Q. Diff-Font: Diffusion model for robust one-shot font generation. Int. J. Comput. Vis. 2024, 132, 5372–5386.

- Tang, L.; Cai, Y.; Liu, J.; Hong, Z.; Gong, M.; Fan, M.; Han, J.; Liu, J.; Ding, E.; Wang, J. Few-shot font generation by learning fine-grained local styles. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7895–7904.

- Chen, L.; Lee, F.; Chen, H.; Yao, W.; Cai, J.; Chen, Q. Automatic Chinese font generation system reflecting emotions based on generative adversarial network. Appl. Sci. 2020, 10, 5976.

- Wang, C.; Zhou, M.; Ge, T.; Jiang, Y.; Bao, H.; Xu, W. CF-Font: Content fusion for few-shot font generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 1858–1867.

- Wang, Y.; Gao, Y.; Lian, Z. Attribute2Font: Creating fonts you want from attributes. ACM Trans. Graph. 2020, 39, 69.

- Liu, W.; Liu, F.; Ding, F.; He, Q.; Yi, Z. XMP-Font: Self-supervised cross-modality pre-training for few-shot font generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7905–7914.

- Yan, S. ReDualSVG: Refined Scalable Vector Graphics Generation. In International Conference on Artificial Neural Networks; Springer: Berlin/Heidelberg, Germany, 2023; pp. 87–98.

- Cao, D.; Wang, Z.; Echevarria, J.; Liu, Y. SVGformer: Representation learning for continuous vector graphics. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 10093–10102.

- Zhao, Z.; Chen, Y.; Hu, Z.; Chen, X.; Ni, B. Vector Graphics Generation via Mutually Impulsed Dual-domain Diffusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 4420–4428.

- Podell, D.; English, Z.; Lacey, K.; Blattmann, A.; Dockhorn, T.; Müller, J.; Penna, J.; Rombach, R. SDXL: Improving latent diffusion models for high-resolution image synthesis. arXiv 2023, arXiv:2307.01952.

- Singh, J.; Gould, S.; Zheng, L. High-fidelity guided image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5997–6006.

- Cheng, G.; Yuan, X.; Yao, X.; Yan, K.; Zeng, Q.; Xie, X.; Han, J. Towards large-scale small object detection: Survey and benchmarks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13467–13488.

- Chen, J.; Guo, H.; Yi, K.; Li, B.; Elhoseiny, M. VisualGPT: Data-efficient adaptation of pretrained models for image captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 18030–18040.

- Wan, J.; Song, S.; Yu, W.; Liu, Y.; Cheng, W.; Huang, F.; Bai, X.; Yao, C.; Yang, Z. OmniParser: A Unified Framework for Text Spotting, Key Information Extraction and Table Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 15641–15653.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 213–229.

- Locteau, H.; Adam, S.; Trupin, E.; Labiche, J.; Héroux, P. Symbol spotting using full visibility graph representation. In Proceedings of the Graphics Recognition, Curitiba, Brazil, 20–21 September 2007; pp. 49–50.

- Ramel, J.Y.; Vincent, N.; Emptoz, H. A structural representation for understanding line-drawing images. Doc. Anal. Recognit. 2000, 3, 58–66.

- Jiang, X.; Liu, L.; Shan, C.; Shen, Y.; Dong, X.; Li, D. Recognizing vector graphics without rasterization. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–14 December 2021; Volume 34, pp. 24569–24580.

- Bi, T.; Zhang, X.; Zhang, Z.; Xie, W.; Lan, C.; Lu, Y.; Zheng, N. Text Grouping Adapter: Adapting Pre-trained Text Detector for Layout Analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 28150–28159.

- Liao, M.; Shi, B.; Bai, X.; Wang, X.; Liu, W. TextBoxes: A fast text detector with a single deep neural network. In Proceedings of the Association for the Advancement of Artificial Intelligence Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Tian, Z.; Huang, W.; He, T.; He, P.; Qiao, Y. Detecting text in natural image with connectionist text proposal network. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 56–72.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, Y.; Shen, C.; Jin, L.; He, T.; Chen, P.; Liu, C.; Chen, H. ABCNet v2: Adaptive Bezier-curve network for real-time end-to-end text spotting. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 8048–8064.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Meng, D.; Chen, X.; Fan, Z.; Zeng, G.; Li, H.; Yuan, Y.; Sun, L.; Wang, J. Conditional DETR for fast training convergence. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 3651–3660.

- Wang, Y.; Zhang, X.; Yang, T.; Sun, J. Anchor DETR: Query design for transformer-based detector. In Proceedings of the Association for the Advancement of Artificial Intelligence, Virtual, 22 February–1 March 2022; Volume 36, pp. 2567–2575.

- Li, F.; Zhang, H.; Liu, S.; Guo, J.; Ni, L.M.; Zhang, L. DN-DETR: Accelerate DETR training by introducing query denoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13619–13627.

- Li, F.; Zhang, H.; Xu, H.; Liu, S.; Zhang, L.; Ni, L.M.; Shum, H.Y. Mask DINO: Towards a unified transformer-based framework for object detection and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 3041–3050.

- Jaderberg, M.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Reading text in the wild with convolutional neural networks. Int. J. Comput. Vis. 2016, 116, 1–20.

- Liu, W.; Chen, C.; Wong, K.Y. Char-Net: A character-aware neural network for distorted scene text recognition. In Proceedings of the Association for the Advancement of Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Cheng, Z.; Xu, Y.; Bai, F.; Niu, Y.; Pu, S.; Zhou, S. AON: Towards arbitrarily-oriented text recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5571–5579.

- Li, H.; Wang, P.; Shen, C.; Zhang, G. Show, attend and read: A simple and strong baseline for irregular text recognition. In Proceedings of the Association for the Advancement of Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8610–8617.

- Liu, Y.; Wang, Z.; Jin, H.; Wassell, I. Synthetically supervised feature learning for scene text recognition. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 435–451.

- Chen, T.; Wang, Z.; Xu, N.; Jin, H.; Luo, J. Large-scale tag-based font retrieval with generative feature learning. In Proceedings of the International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9116–9125.

- Zhu, Y.; Tan, T.; Wang, Y. Font recognition based on global texture analysis. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1192–1200.

- Chen, G.; Yang, J.; Jin, H.; Brandt, J.; Shechtman, E. Large-scale visual font recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3598–3605.

- Wang, Z.; Yang, J.; Jin, H.; Shechtman, E.; Agarwala, A.; Brandt, J.; Huang, T.S. DeepFont: Identify your font from an image. In Proceedings of the ACM Multimedia, Brisbane, Australia, 26–30 October 2015; pp. 451–459.

- Bharath, V.; Rani, N.S. A font style classification system for English OCR. In Proceedings of the International Conference on Intelligent Computing and Control, Coimbatore, India, 23–24 June 2017; pp. 1–5.

- Liu, Y.; Wang, Z.; Jin, H.; Wassell, I. Multi-task adversarial network for disentangled feature learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3743–3751.

- Sun, Q.; Cui, J.; Gu, Z. Extending CLIP for Text-to-font Retrieval. In Proceedings of the International Conference on Multimedia Retrieval, Phuket, Thailand, 10–14 June 2024; pp. 1170–1174.

- Stoffel, A.; Spretke, D.; Kinnemann, H.; Keim, D.A. Enhancing document structure analysis using visual analytics. In Proceedings of the ACM Symposium on Applied Computing, Sierre, Switzerland, 22–26 March 2010; pp. 8–12.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Patil, A.G.; Ben-Eliezer, O.; Perel, O.; Averbuch-Elor, H. READ: Recursive autoencoders for document layout generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 544–545.

- Sun, H.M. Page segmentation for Manhattan and non-Manhattan layout documents via selective CRLA. In Proceedings of the Document Analysis and Recognition, Seoul, Republic of Korea, 31 August–1 September 2005; pp. 116–120.

- Agrawal, M.; Doermann, D. Voronoi++: A dynamic page segmentation approach based on Voronoi and Docstrum features. In Proceedings of the Document Analysis and Recognition, Barcelona, Spain, 26–29 July 2009; pp. 1011–1015.

- Simon, A.; Pret, J.C.; Johnson, A.P. A fast algorithm for bottom-up document layout analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 273–277.

- Tran, T.A.; Na, I.S.; Kim, S.H. Page segmentation using minimum homogeneity algorithm and adaptive mathematical morphology. Int. J. Doc. Anal. Recognit. 2016, 19, 191–209.

- Vil’kin, A.M.; Safonov, I.V.; Egorova, M.A. Algorithm for segmentation of documents based on texture features. Pattern Recognit. Image Anal. 2013, 23, 153–159.

- Grüning, T.; Leifert, G.; Strauß, T.; Michael, J.; Labahn, R. A two-stage method for text line detection in historical documents. Int. J. Doc. Anal. Recognit. 2019, 22, 285–302.

- Xu, Y.; Yin, F.; Zhang, Z.; Liu, C.L. Multi-task Layout Analysis for Historical Handwritten Documents Using Fully Convolutional Networks. In Proceedings of the International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 1057–1063.

- Luo, S.; Ivison, H.; Han, S.C.; Poon, J. Local interpretations for explainable natural language processing: A survey. ACM Comput. Surv. 2024, 56, 1–36.

- Kong, W.; Jiang, Z.; Sun, S.; Guo, Z.; Cui, W.; Liu, T.; Lou, J.; Zhang, D. Aesthetics++: Refining graphic designs by exploring design principles and human preference. IEEE Trans. Vis. Comput. Graph. 2022, 29, 3093–3104.

- Son, K.; Oh, S.Y.; Kim, Y.; Choi, H.; Bae, S.H.; Hwang, G. Color sommelier: Interactive color recommendation system based on community-generated color palettes. In Proceedings of the ACM Symposium on User Interface Software & Technology, Charlotte, NC, USA, 8–11 November 2015; pp. 95–96.

- Jahanian, A.; Liu, J.; Lin, Q.; Tretter, D.; O’Brien-Strain, E.; Lee, S.C.; Lyons, N.; Allebach, J. Recommendation system for automatic design of magazine covers. In Proceedings of the Conference on Intelligent User Interfaces, Santa Monica, CA, USA, 19–22 March 2013; pp. 95–106.

- Yang, X.; Mei, T.; Xu, Y.Q.; Rui, Y.; Li, S. Automatic generation of visual-textual presentation layout. ACM Trans. Multimed. Comput. Commun. Appl. 2016, 9, 39.

- Maheshwari, P.; Jain, N.; Vaddamanu, P.; Raut, D.; Vaishay, S.; Vinay, V. Generating Compositional Color Representations from Text. In Proceedings of the Conference on Information & Knowledge Management, Gold Coast, QLD, Australia, 1–5 November 2021; pp. 1222–1231.

- Bahng, H.; Yoo, S.; Cho, W.; Park, D.K.; Wu, Z.; Ma, X.; Choo, J. Coloring with words: Guiding colorization via text-based palette generation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 431–447.

- Lu, K.; Feng, M.; Chen, X.; Sedlmair, M.; Deussen, O.; Lischinski, D.; Cheng, Z.; Wang, Y. Palettailor: Discriminable colorization for categorical data. IEEE Trans. Vis. Comput. Graph. 2020, 27, 475–484.

- Yuan, L.P.; Zhou, Z.; Zhao, J.; Guo, Y.; Du, F.; Qu, H. InfoColorizer: Interactive recommendation of color palettes for infographics. IEEE Trans. Vis. Comput. Graph. 2021, 28, 4252–4266.

- Qiu, Q.; Otani, M.; Iwazaki, Y. An intelligent color recommendation tool for landing page design. In Proceedings of the Conference on Intelligent User Interfaces, Helsinki, Finland, 22–25 March 2022; pp. 26–29.

- Qiu, Q.; Wang, X.; Otani, M.; Iwazaki, Y. Color recommendation for vector graphic documents on multi-palette representation. In Proceedings of the Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–7 January 2023; pp. 3621–3629.

- Kikuchi, K.; Inoue, N.; Otani, M.; Simo-Serra, E.; Yamaguchi, K. Generative colorization of structured mobile web pages. In Proceedings of the Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–7 January 2023; pp. 3650–3659.

- Ke, Y.; Tang, X.; Jing, F. The design of high-level features for photo quality assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; Volume 1, pp. 419–426.

- Wong, L.K.; Low, K.L. Saliency-enhanced image aesthetics class prediction. In Proceedings of the IEEE International Conference on Image Processing, Cairo, Egypt, 7–10 November 2009; pp. 997–1000.

- Dhar, S.; Ordonez, V.; Berg, T.L. High level describable attributes for predicting aesthetics and interestingness. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1657–1664. [Google Scholar]

- Obrador, P.; Saad, M.A.; Suryanarayan, P.; Oliver, N. Towards category-based aesthetic models of photographs. In Proceedings of the ACM Multimedia, Nara, Japan, 29 October–2 November 2012; pp. 63–76. [Google Scholar]

- Reinecke, K.; Yeh, T.; Miratrix, L.; Mardiko, R.; Zhao, Y.; Liu, J.; Gajos, K.Z. Predicting users’ first impressions of website aesthetics with a quantification of perceived visual complexity and colorfulness. In Proceedings of the Conference on Human Factors in Computing Systems, Paris, France, 27 April–2 May 2013; pp. 2049–2058.

- Lu, X.; Lin, Z.; Jin, H.; Yang, J.; Wang, J.Z. RAPID: Rating pictorial aesthetics using deep learning. In Proceedings of the ACM Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 457–466.

- Lu, X.; Lin, Z.; Shen, X.; Mech, R.; Wang, J.Z. Deep multi-patch aggregation network for image style, aesthetics, and quality estimation. In Proceedings of the International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 990–998.

- Cui, C.; Lin, P.; Nie, X.; Jian, M.; Yin, Y. Social-sensed image aesthetics assessment. ACM Trans. Multimed. Comput. Commun. Appl. 2020, 16, 1–19.

- Cui, C.; Yang, W.; Shi, C.; Wang, M.; Nie, X.; Yin, Y. Personalized image quality assessment with social-sensed aesthetic preference. Inf. Sci. 2020, 512, 780–794.

- Chen, Q.; Zhang, W.; Zhou, N.; Lei, P.; Xu, Y.; Zheng, Y.; Fan, J. Adaptive fractional dilated convolution network for image aesthetics assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Carlier, A.; Danelljan, M.; Alahi, A.; Timofte, R. DeepSVG: A Hierarchical Generative Network for Vector Graphics Animation. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Volume 33, pp. 16351–16361.

- Ha, D.; Eck, D. A Neural Representation of Sketch Drawings. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Lopes, R.G.; Ha, D.; Eck, D.; Shlens, J. A learned representation for scalable vector graphics. In Proceedings of the International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7930–7939.

- Wang, Y.; Lian, Z. DeepVecFont: Synthesizing high-quality vector fonts via dual-modality learning. ACM Trans. Graph. (TOG) 2021, 40, 1–15.

- Wu, R.; Su, W.; Ma, K.; Liao, J. IconShop: Text-guided vector icon synthesis with autoregressive transformers. ACM Trans. Graph. (TOG) 2023, 42.

- Das, A.; Yang, Y.; Hospedales, T.; Xiang, T.; Song, Y.Z. BézierSketch: A generative model for scalable vector sketches. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 632–647.

- Li, T.M.; Lukáč, M.; Gharbi, M.; Ragan-Kelley, J. Differentiable vector graphics rasterization for editing and learning. ACM Trans. Graph. (TOG) 2020, 39, 1–15.

- Ma, X.; Zhou, Y.; Xu, X.; Sun, B.; Filev, V.; Orlov, N.; Fu, Y.; Shi, H. Towards layer-wise image vectorization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 16314–16323.

- Reddy, P.; Gharbi, M.; Lukáč, M.; Mitra, N.J. Im2Vec: Synthesizing vector graphics without vector supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 7342–7351.

- Shen, I.C.; Chen, B.Y. ClipGen: A deep generative model for clipart vectorization and synthesis. IEEE Trans. Vis. Comput. Graph. 2021, 28, 4211–4224.

- Song, Y.; Shao, X.; Chen, K.; Zhang, W.; Jing, Z.; Li, M. CLIPVG: Text-guided image manipulation using differentiable vector graphics. In Proceedings of the Association for the Advancement of Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 2312–2320.

- Su, H.; Liu, X.; Niu, J.; Cui, J.; Wan, J.; Wu, X.; Wang, N. Marvel: Raster gray-level manga vectorization via primitive-wise deep reinforcement learning. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 2677–2693.

- Xing, X.; Wang, C.; Zhou, H.; Zhang, J.; Yu, Q.; Xu, D. DiffSketcher: Text guided vector sketch synthesis through latent diffusion models. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023.

- Yamaguchi, K. CanvasVAE: Learning to generate vector graphic documents. In Proceedings of the International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 5481–5489.

- Frans, K.; Soros, L.; Witkowski, O. CLIPDraw: Exploring text-to-drawing synthesis through language-image encoders. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022.

- Vinker, Y.; Pajouheshgar, E.; Bo, J.Y.; Bachmann, R.C.; Bermano, A.H.; Cohen-Or, D.; Zamir, A.; Shamir, A. CLIPasso: Semantically-aware object sketching. ACM Trans. Graph. (TOG) 2022, 41, 1–11.

- Jain, A.; Xie, A.; Abbeel, P. VectorFusion: Text-to-SVG by abstracting pixel-based diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Iluz, S.; Vinker, Y.; Hertz, A.; Berio, D.; Cohen-Or, D.; Shamir, A. Word-as-image for semantic typography. ACM Trans. Graph. (TOG) 2023, 42, 1–11.

- Gal, R.; Vinker, Y.; Alaluf, Y.; Bermano, A.; Cohen-Or, D.; Shamir, A.; Chechik, G. Breathing Life Into Sketches Using Text-to-Video Priors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 4325–4336.

- Xing, X.; Zhou, H.; Wang, C.; Zhang, J.; Xu, D.; Yu, Q. SVGDreamer: Text guided SVG generation with diffusion model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 4546–4555.

- Zhang, P.; Zhao, N.; Liao, J. Text-to-Vector Generation with Neural Path Representation. ACM Trans. Graph. (TOG) 2024, 43, 1–13.

- Sun, J.; Liang, L.; Wen, F.; Shum, H.Y. Image vectorization using optimized gradient meshes. ACM Trans. Graph. (TOG) 2007, 26, 11-es.

- Xia, T.; Liao, B.; Yu, Y. Patch-based image vectorization with automatic curvilinear feature alignment. ACM Trans. Graph. (TOG) 2009, 28, 1–10.

- Lai, Y.K.; Hu, S.M.; Martin, R.R. Automatic and topology-preserving gradient mesh generation for image vectorization. ACM Trans. Graph. (TOG) 2009, 28, 1–8.

- Zhang, S.H.; Chen, T.; Zhang, Y.F.; Hu, S.M.; Martin, R.R. Vectorizing cartoon animations. IEEE Trans. Vis. Comput. Graph. 2009, 15, 618–629.

- Sýkora, D.; Buriánek, J.; Žára, J. Sketching Cartoons by Example. In Proceedings of the Eurographics Workshop on Sketch-Based Interfaces and Modeling, Philadelphia, PA, USA, 13–16 March 2005; pp. 27–33.

- Bessmeltsev, M.; Solomon, J. Vectorization of line drawings via polyvector fields. ACM Trans. Graph. (TOG) 2019, 38, 1–12.

- Dominici, E.A.; Schertler, N.; Griffin, J.; Hoshyari, S.; Sigal, L.; Sheffer, A. PolyFit: Perception-aligned vectorization of raster clip-art via intermediate polygonal fitting. ACM Trans. Graph. 2020, 39, 77:1–77:16.

- Zhu, H.; Chong, J.I.; Hu, T.; Yi, R.; Lai, Y.K.; Rosin, P.L. SAMVG: A multi-stage image vectorization model with the segment-anything model. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing, Seoul, Republic of Korea, 14–19 April 2024; pp. 4350–4354.

- Chen, Y.; Ni, B.; Chen, X.; Hu, Z. Editable image geometric abstraction via neural primitive assembly. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 23514–23523.

- Hu, T.; Yi, R.; Qian, B.; Zhang, J.; Rosin, P.L.; Lai, Y.K. SuperSVG: Superpixel-based scalable vector graphics synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 24892–24901.

- Zhou, B.; Wang, W.; Chen, Z. Easy generation of personal Chinese handwritten fonts. In Proceedings of the IEEE International Conference on Multimedia & Expo, Barcelona, Spain, 11–15 July 2011; pp. 1–6.

- Lian, Z.; Zhao, B.; Xiao, J. Automatic generation of large-scale handwriting fonts via style learning. In Proceedings of the SIGGRAPH Asia, Macao SAR, China, 5–8 December 2016; pp. 1–4.

- Phan, H.Q.; Fu, H.; Chan, A.B. FlexyFont: Learning transferring rules for flexible typeface synthesis. In Proceedings of the Computer Graphics Forum, Beijing, China, 7–9 October 2015; Volume 34, pp. 245–256.

- Tenenbaum, J.; Freeman, W. Separating style and content. In Proceedings of the Advances in Neural Information Processing Systems, Denver, CO, USA, 2–5 December 1996; Volume 9.

- Goda, Y.; Nakamura, T.; Kanoh, M. Texture transfer based on continuous structure of texture patches for design of artistic Shodo fonts. In Proceedings of the ACM SIGGRAPH ASIA, Seoul, Republic of Korea, 15–18 December 2010; pp. 1–2.

- Murata, K.; Nakamura, T.; Endo, K.; Kanoh, M.; Yamada, K. Japanese Kanji-calligraphic font design using onomatopoeia utterance. In Proceedings of the Congress on Evolutionary Computation, Vancouver, BC, Canada, 24–29 July 2016; pp. 1708–1713.

- Tian, Y. zi2zi: Master Chinese calligraphy with conditional adversarial networks. GitHub repository, 2017. Available online: https://github.com/kaonashi-tyc/zi2zi

- Jiang, Y.; Lian, Z.; Tang, Y.; Xiao, J. DCFont: An end-to-end deep Chinese font generation system. In Proceedings of the SIGGRAPH Asia, Bangkok, Thailand, 27–30 November 2017; pp. 1–4.

- Huang, Y.; He, M.; Jin, L.; Wang, Y. RD-GAN: Few/zero-shot Chinese character style transfer via radical decomposition and rendering. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2020; pp. 156–172.

- Zhang, Y.; Zhang, Y.; Cai, W. Separating style and content for generalized style transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 847–855.

- Gao, Y.; Guo, Y.; Lian, Z.; Tang, Y.; Xiao, J. Artistic glyph image synthesis via one-stage few-shot learning. ACM Trans. Graph. 2019, 38, 185.

- Xie, Y.; Chen, X.; Sun, L.; Lu, Y. DG-Font: Deformable generative networks for unsupervised font generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021.

- Yang, S.; Liu, J.; Lian, Z.; Guo, Z. Awesome typography: Statistics-based text effects transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7464–7473.

- Men, Y.; Lian, Z.; Tang, Y.; Xiao, J. A common framework for interactive texture transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6353–6362.

- Azadi, S.; Fisher, M.; Kim, V.G.; Wang, Z.; Shechtman, E.; Darrell, T. Multi-content GAN for few-shot font style transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7564–7573.

- Chen, F.; Wang, Y.; Xu, S.; Wang, F.; Sun, F.; Jia, X. Style transfer network for complex multi-stroke text. Multimed. Syst. 2023, 9, 91–100.

- Xue, M.; Ito, Y.; Nakano, K. An Art Font Generation Technique using Pix2Pix-based Networks. Bull. Netw. Comput. Syst. Softw. 2023, 12, 6–12.

- Wang, C.; Wu, L.; Liu, X.; Li, X.; Meng, L.; Meng, X. Anything to glyph: Artistic font synthesis via text-to-image diffusion model. In Proceedings of the SIGGRAPH Asia, Sydney, Australia, 12–15 December 2023; pp. 1–11.

- Xu, J.; Kaplan, C.S. Calligraphic packing. In Proceedings of the Graphics Interface, Montreal, QC, Canada, 27–29 May 2007; pp. 43–50.

- Zou, C.; Cao, J.; Ranaweera, W.; Alhashim, I.; Tan, P.; Sheffer, A.; Zhang, H. Legible compact calligrams. ACM Trans. Graph. (TOG) 2016, 35, 1–12.

- Zhang, J.; Wang, Y.; Xiao, W.; Luo, Z. Synthesizing ornamental typefaces. In Proceedings of the Computer Graphics Forum, Yokohama, Japan, 12–16 June 2017; Volume 36, pp. 64–75.

- Tanveer, M.; Wang, Y.; Mahdavi-Amiri, A.; Zhang, H. DS-Fusion: Artistic typography via discriminated and stylized diffusion. In Proceedings of the International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 374–384.

- Gupta, A.; Vedaldi, A.; Zisserman, A. Synthetic data for text localisation in natural images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2315–2324.

- Zhan, F.; Lu, S.; Xue, C. Verisimilar image synthesis for accurate detection and recognition of texts in scenes. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 249–266.

- Liao, M.; Song, B.; Long, S.; He, M.; Yao, C.; Bai, X. SynthText3D: Synthesizing scene text images from 3D virtual worlds. Sci. China Inf. Sci. 2020, 63, 120105.

- Chen, J.; Huang, Y.; Lv, T.; Cui, L.; Chen, Q.; Wei, F. TextDiffuser-2: Unleashing the power of language models for text rendering. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2024; pp. 386–402.

- Tuo, Y.; Xiang, W.; He, J.Y.; Geng, Y.; Xie, X. AnyText: Multilingual visual text generation and editing. arXiv 2023, arXiv:2311.03054.

- Esser, P.; Kulal, S.; Blattmann, A.; Entezari, R.; Müller, J.; Saini, H.; Levi, Y.; Lorenz, D.; Sauer, A.; Boesel, F.; et al. Scaling rectified flow transformers for high-resolution image synthesis. In Proceedings of the International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

- Saharia, C.; Chan, W.; Saxena, S.; Li, L.; Whang, J.; Denton, E.L.; Ghasemipour, K.; Gontijo Lopes, R.; Karagol Ayan, B.; Salimans, T.; et al. Photorealistic text-to-image diffusion models with deep language understanding. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 36479–36494.

- Xue, L.; Barua, A.; Constant, N.; Al-Rfou, R.; Narang, S.; Kale, M.; Roberts, A.; Raffel, C. ByT5: Towards a token-free future with pre-trained byte-to-byte models. Trans. Assoc. Comput. Linguist. 2022, 10, 291–306.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763.

- Ma, J.; Zhao, M.; Chen, C.; Wang, R.; Niu, D.; Lu, H.; Lin, X. GlyphDraw: Seamlessly Rendering Text with Intricate Spatial Structures in Text-to-Image Generation. arXiv 2023, arXiv:2303.17870.

- Yang, Y.; Gui, D.; Yuan, Y.; Liang, W.; Ding, H.; Hu, H.; Chen, K. GlyphControl: Glyph conditional control for visual text generation. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Volume 36, pp. 44050–44066.

- Zhang, L.; Chen, X.; Wang, Y.; Lu, Y.; Qiao, Y. Brush your text: Synthesize any scene text on images via diffusion model. In Proceedings of the Association for the Advancement of Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 7215–7223.

- Li, Z.; Shu, Y.; Zeng, W.; Yang, D.; Zhou, Y. First Creating Backgrounds Then Rendering Texts: A New Paradigm for Visual Text Blending. arXiv 2024, arXiv:2410.10168.

- Lee, B.; Srivastava, S.; Kumar, R.; Brafman, R.; Klemmer, S.R. Designing with interactive example galleries. In Proceedings of the Conference on Human Factors in Computing Systems, Atlanta, GA, USA, 10–15 April 2010; pp. 2257–2266.

- Dayama, N.R.; Todi, K.; Saarelainen, T.; Oulasvirta, A. GRIDS: Interactive layout design with integer programming. In Proceedings of the Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–13.

- O’Donovan, P.; Agarwala, A.; Hertzmann, A. DesignScape: Design with interactive layout suggestions. In Proceedings of the Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015; pp. 1221–1224.

- Todi, K.; Weir, D.; Oulasvirta, A. Sketchplore: Sketch and explore with a layout optimiser. In Proceedings of the Conference on Designing Interactive Systems, Brisbane, Australia, 4–8 June 2016; pp. 543–555.

- Lee, H.Y.; Jiang, L.; Essa, I.; Le, P.B.; Gong, H.; Yang, M.H.; Yang, W. Neural design network: Graphic layout generation with constraints. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 491–506.

- Li, J.; Yang, J.; Zhang, J.; Liu, C.; Wang, C.; Xu, T. Attribute-conditioned layout GAN for automatic graphic design. IEEE Trans. Vis. Comput. Graph. 2020, 27, 4039–4048.

- Jing, Q.; Zhou, T.; Tsang, Y.; Chen, L.; Sun, L.; Zhen, Y.; Du, Y. Layout generation for various scenarios in mobile shopping applications. In Proceedings of the Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–18.

- Zhang, W.; Zheng, Y.; Miyazono, T.; Uchida, S.; Iwana, B.K. Towards book cover design via layout graphs. In Document Analysis and Recognition; Springer: Berlin/Heidelberg, Germany, 2021; pp. 642–657.

- Chai, S.; Zhuang, L.; Yan, F.; Zhou, Z. Two-stage Content-Aware Layout Generation for Poster Designs. In Proceedings of the ACM Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 8415–8423.

- Guo, S.; Jin, Z.; Sun, F.; Li, J.; Li, Z.; Shi, Y.; Cao, N. Vinci: An intelligent graphic design system for generating advertising posters. In Proceedings of the Conference on Human Factors in Computing Systems, Virtual, 8–13 May 2021; pp. 1–17.

- Damera-Venkata, N.; Bento, J.; O’Brien-Strain, E. Probabilistic document model for automated document composition. In Proceedings of the ACM Symposium on Document Engineering, Mountain View, CA, USA, 19–22 September 2011; pp. 3–12.

- Hurst, N.; Li, W.; Marriott, K. Review of automatic document formatting. In Proceedings of the ACM Symposium on Document Engineering, Munich, Germany, 15–18 September 2009; pp. 99–108.

- O’Donovan, P.; Agarwala, A.; Hertzmann, A. Learning layouts for single-page graphic designs. IEEE Trans. Vis. Comput. Graph. 2014, 20, 1200–1213.

- Tabata, S.; Yoshihara, H.; Maeda, H.; Yokoyama, K. Automatic layout generation for graphical design magazines. In Proceedings of the ACM SIGGRAPH, Los Angeles, CA, USA, 28 July 2019; pp. 1–2.

- Bylinskii, Z.; Kim, N.W.; O’Donovan, P.; Alsheikh, S.; Madan, S.; Pfister, H.; Durand, F.; Russell, B.; Hertzmann, A. Learning visual importance for graphic designs and data visualizations. In Proceedings of the ACM Symposium on User Interface Software and Technology, Québec City, QC, Canada, 22–25 October 2017; pp. 57–69.

- Pang, X.; Cao, Y.; Lau, R.W.; Chan, A.B. Directing user attention via visual flow on web designs. ACM Trans. Graph. 2016, 35, 1–11.

- Kikuchi, K.; Simo-Serra, E.; Otani, M.; Yamaguchi, K. Constrained graphic layout generation via latent optimization. In Proceedings of the ACM Multimedia, Virtual, 20–24 October 2021; pp. 88–96.

- Chai, S.; Zhuang, L.; Yan, F. LayoutDM: Transformer-based diffusion model for layout generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Zhang, J.; Guo, J.; Sun, S.; Lou, J.G.; Zhang, D. LayoutDiffusion: Improving graphic layout generation by discrete diffusion probabilistic models. In Proceedings of the International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 7226–7236.

- Hui, M.; Zhang, Z.; Zhang, X.; Xie, W.; Wang, Y.; Lu, Y. Unifying layout generation with a decoupled diffusion model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 1942–1951.

- Shabani, M.A.; Wang, Z.; Liu, D.; Zhao, N.; Yang, J.; Furukawa, Y. Visual Layout Composer: Image-Vector Dual Diffusion Model for Design Layout Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024; pp. 9222–9231.

- Jyothi, A.A.; Durand, T.; He, J.; Sigal, L.; Mori, G. LayoutVAE: Stochastic scene layout generation from a label set. In Proceedings of the International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9895–9904.

- Gupta, K.; Lazarow, J.; Achille, A.; Davis, L.S.; Mahadevan, V.; Shrivastava, A. LayoutTransformer: Layout generation and completion with self-attention. In Proceedings of the International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 1004–1014.

- Arroyo, D.M.; Postels, J.; Tombari, F. Variational transformer networks for layout generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 13642–13652.

- Kong, X.; Jiang, L.; Chang, H.; Zhang, H.; Hao, Y.; Gong, H.; Essa, I. BLT: Bidirectional layout transformer for controllable layout generation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 474–490.

- Inoue, N.; Kikuchi, K.; Simo-Serra, E.; Otani, M.; Yamaguchi, K. LayoutDM: Discrete diffusion model for controllable layout generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Zhang, Z.; Zhang, Y.; Liang, Y.; Xiang, L.; Zhao, Y.; Zhou, Y.; Zong, C. LayoutDIT: Layout-aware end-to-end document image translation with multi-step conductive decoder. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; pp. 10043–10053.

- Levi, E.; Brosh, E.; Mykhailych, M.; Perez, M. DLT: Conditioned layout generation with joint discrete-continuous diffusion layout transformer. In Proceedings of the International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 2106–2115.

- Zheng, X.; Qiao, X.; Cao, Y.; Lau, R.W. Content-aware generative modeling of graphic design layouts. ACM Trans. Graph. 2019, 38, 1–15.

- Cao, Y.; Ma, Y.; Zhou, M.; Liu, C.; Xie, H.; Ge, T.; Jiang, Y. Geometry aligned variational transformer for image-conditioned layout generation. In Proceedings of the ACM Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 1561–1571.

- Hsu, H.Y.; He, X.; Peng, Y.; Kong, H.; Zhang, Q. PosterLayout: A new benchmark and approach for content-aware visual-textual presentation layout. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6018–6026.

- Yu, N.; Chen, C.C.; Chen, Z.; Meng, R.; Wu, G.; Josel, P.; Niebles, J.C.; Xiong, C.; Xu, R. LayoutDETR: Detection transformer is a good multimodal layout designer. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 169–187.

- Tang, Z.; Wu, C.; Li, J.; Duan, N. LayoutNUWA: Revealing the hidden layout expertise of large language models. arXiv 2023, arXiv:2309.09506.

- Li, S.; Wang, R.; Hsieh, C.J.; Cheng, M.; Zhou, T. MuLan: Multimodal-LLM Agent for Progressive Multi-Object Diffusion. arXiv 2024, arXiv:2402.12741.

- Chen, J.; Zhang, R.; Zhou, Y.; Healey, J.; Gu, J.; Xu, Z.; Chen, C. TextLap: Customizing Language Models for Text-to-Layout Planning. arXiv 2024, arXiv:2410.12844.

- Lin, J.; Guo, J.; Sun, S.; Yang, Z.; Lou, J.G.; Zhang, D. LayoutPrompter: Awaken the design ability of large language models. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Volume 36, pp. 43852–43879.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Volume 33, pp. 1877–1901.

- Wang, Y.; Pu, G.; Luo, W.; Wang, Y.; Xiong, P.; Kang, H.; Lian, Z. Aesthetic text logo synthesis via content-aware layout inferring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2436–2445.

- He, J.; Wang, Y.; Wang, L.; Lu, H.; He, J.Y.; Li, C.; Chen, H.; Lan, J.P.; Luo, B.; Geng, Y. GLDesigner: Leveraging Multi-Modal LLMs as Designer for Enhanced Aesthetic Text Glyph Layouts. arXiv 2024, arXiv:2411.11435.

- Lakhanpal, S.; Chopra, S.; Jain, V.; Chadha, A.; Luo, M. Refining Text-to-Image Generation: Towards Accurate Training-Free Glyph-Enhanced Image Generation. arXiv 2024, arXiv:2403.16422.

- Ouyang, D.; Furuta, R.; Shimizu, Y.; Taniguchi, Y.; Hinami, R.; Ishiwatari, S. Interactive manga colorization with fast flat coloring. In Proceedings of the SIGGRAPH Asia, Tokyo, Japan, 14–17 December 2021; pp. 1–2.

- Yuan, M.; Simo-Serra, E. Line art colorization with concatenated spatial attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 3946–3950.

- Zhang, Q.; Wang, B.; Wen, W.; Li, H.; Liu, J. Line art correlation matching feature transfer network for automatic animation colorization. In Proceedings of the Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 3872–3881.

- Wang, N.; Niu, M.; Wang, Z.; Hu, K.; Liu, B.; Wang, Z.; Li, H. Region assisted sketch colorization. IEEE Trans. Image Process. 2023, 32, 6142–6154.

- Zabari, N.; Azulay, A.; Gorkor, A.; Halperin, T.; Fried, O. Diffusing Colors: Image Colorization with Text Guided Diffusion. In Proceedings of the SIGGRAPH Asia, Sydney, Australia, 12–15 December 2023; pp. 1–11.

- Dou, Z.; Wang, N.; Li, B.; Wang, Z.; Li, H.; Liu, B. Dual color space guided sketch colorization. IEEE Trans. Image Process. 2021, 30, 7292–7304.

- Yun, J.; Lee, S.; Park, M.; Choo, J. iColoriT: Towards propagating local hints to the right region in interactive colorization by leveraging vision transformer. In Proceedings of the Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 1787–1796.

- Zhang, L.; Li, C.; Simo-Serra, E.; Ji, Y.; Wong, T.T.; Liu, C. User-guided line art flat filling with split filling mechanism. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 9889–9898.

- Bai, Y.; Dong, C.; Chai, Z.; Wang, A.; Xu, Z.; Yuan, C. Semantic-sparse colorization network for deep exemplar-based colorization. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022.

- Li, H.; Sheng, B.; Li, P.; Ali, R.; Chen, C.P. Globally and locally semantic colorization via exemplar-based broad-GAN. IEEE Trans. Image Process. 2021, 30, 8526–8539.

- Li, Y.K.; Lien, Y.H.; Wang, Y.S. Style-structure disentangled features and normalizing flows for diverse icon colorization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- Li, Z.; Geng, Z.; Kang, Z.; Chen, W.; Yang, Y. Eliminating gradient conflict in reference-based line-art colorization. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 579–596.

- Wang, H.; Zhai, D.; Liu, X.; Jiang, J.; Gao, W. Unsupervised deep exemplar colorization via pyramid dual non-local attention. IEEE Trans. Image Process. 2023, 32, 4114–4127.

- Wu, S.; Yan, X.; Liu, W.; Xu, S.; Zhang, S. Self-driven dual-path learning for reference-based line art colorization under limited data. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 1388–1402.

- Wu, S.; Yang, Y.; Xu, S.; Liu, W.; Yan, X.; Zhang, S. FlexIcon: Flexible icon colorization via guided images and palettes. In Proceedings of the ACM Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 8662–8673.

- Zhang, J.; Xu, C.; Li, J.; Han, Y.; Wang, Y.; Tai, Y.; Liu, Y. SCSNet: An efficient paradigm for learning simultaneously image colorization and super-resolution. In Proceedings of the Association for the Advancement of Artificial Intelligence, Virtual, 22 February–1 March 2022; Volume 36, pp. 3271–3279.

- Zou, C.; Wan, S.; Blanch, M.G.; Murn, L.; Mrak, M.; Sock, J.; Yang, F.; Herranz, L. Lightweight Exemplar Colorization via Semantic Attention-Guided Laplacian Pyramid. IEEE Trans. Vis. Comput. Graph. 2024, 31, 4257–4269.

- Wang, Y.; Xia, M.; Qi, L.; Shao, J.; Qiao, Y. PalGAN: Image colorization with palette generative adversarial networks. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 271–288.

- Chang, Z.; Weng, S.; Zhang, P.; Li, Y.; Li, S.; Shi, B. L-CoIns: Language-based colorization with instance awareness. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023.

- Weng, S.; Zhang, P.; Li, Y.; Li, S.; Shi, B. L-CAD: Language-based colorization with any-level descriptions using diffusion priors. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Volume 36, pp. 77174–77186.

- Zhang, L.; Rao, A.; Agrawala, M. Adding conditional control to text-to-image diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 3836–3847.

- Zhang, L.; Li, C.; Wong, T.T.; Ji, Y.; Liu, C. Two-stage sketch colorization. ACM Trans. Graph. (TOG) 2018, 37, 1–14.

- Cheng, Z.; Yang, Q.; Sheng, B. Deep colorization. In Proceedings of the International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 415–423.

- Endo, Y.; Iizuka, S.; Kanamori, Y.; Mitani, J. DeepProp: Extracting deep features from a single image for edit propagation. In Proceedings of the 37th Annual Conference of the European Association for Computer Graphics, Lisbon, Portugal, 9–13 May 2016; Volume 35, pp. 189–201.

- Chang, H.; Fried, O.; Liu, Y.; DiVerdi, S.; Finkelstein, A. Palette-based photo recoloring. ACM Trans. Graph. (TOG) 2015, 34, 139.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 10684–10695.

- Tatsukawa, Y.; Shen, I.C.; Qi, A.; Koyama, Y.; Igarashi, T.; Shamir, A. FontCLIP: A Semantic Typography Visual-Language Model for Multilingual Font Applications. Comput. Graph. Forum 2024, 43, e15043.

- Olsen, L.H.; Glad, I.K.; Jullum, M.; Aas, K. Using Shapley values and variational autoencoders to explain predictive models with dependent mixed features. J. Mach. Learn. Res. 2022, 23, 1–51.

- Chen, J.; Huang, Y.; Lv, T.; Cui, L.; Chen, Q.; Wei, F. TextDiffuser: Diffusion models as text painters. Adv. Neural Inf. Process. Syst. 2023, 36, 9353–9387.

- Zhang, J.; Zhou, Y.; Gu, J.; Wigington, C.; Yu, T.; Chen, Y.; Sun, T.; Zhang, R. ARTIST: Improving the generation of text-rich images with disentangled diffusion models and large language models. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Tucson, AZ, USA, 28 February–4 March 2025; pp. 1268–1278.

- Li, J.; Yang, J.; Hertzmann, A.; Zhang, J.; Xu, T. LayoutGAN: Synthesizing graphic layouts with vector-wireframe adversarial networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2388–2399.

- Dong, R.; Han, C.; Peng, Y.; Qi, Z.; Ge, Z.; Yang, J.; Zhao, L.; Sun, J.; Zhou, H.; Wei, H.; et al. DreamLLM: Synergistic multimodal comprehension and creation. arXiv 2023, arXiv:2309.11499.

- Kikuchi, K.; Inoue, N.; Otani, M.; Simo-Serra, E.; Yamaguchi, K. Multimodal Markup Document Models for Graphic Design Completion. arXiv 2024, arXiv:2409.19051.

- Inoue, N.; Masui, K.; Shimoda, W.; Yamaguchi, K. OpenCOLE: Towards Reproducible Automatic Graphic Design Generation. arXiv 2024, arXiv:2406.08232.

- Alayrac, J.B.; Donahue, J.; Luc, P.; Miech, A.; Barr, I.; Hasson, Y.; Lenc, K.; Mensch, A.; Millican, K.; Reynolds, M.; et al. Flamingo: A visual language model for few-shot learning. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 23716–23736.

- Awadalla, A.; Gao, I.; Gardner, J.; Hessel, J.; Hanafy, Y.; Zhu, W.; Marathe, K.; Bitton, Y.; Gadre, S.; Sagawa, S.; et al. OpenFlamingo: An open-source framework for training large autoregressive vision-language models. arXiv 2023, arXiv:2308.01390.

- Esser, P.; Rombach, R.; Ommer, B. Taming transformers for high-resolution image synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12873–12883.

- Zou, X.; Zhang, W.; Zhao, N. From Fragment to One Piece: A Survey on AI-Driven Graphic Design. arXiv 2025, arXiv:2503.18641.

- Qu, L.; Wu, S.; Fei, H.; Nie, L.; Chua, T.S. LayoutLLM-T2I: Eliciting layout guidance from LLM for text-to-image generation. In Proceedings of the ACM Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 643–654.

- Gao, P.; Geng, S.; Zhang, R.; Ma, T.; Fang, R.; Zhang, Y.; Li, H.; Qiao, Y. CLIP-Adapter: Better vision-language models with feature adapters. Int. J. Comput. Vis. 2024, 132, 581–595.

- Zhang, J.; Yoshihashi, R.; Kitada, S.; Osanai, A.; Nakashima, Y. VASCAR: Content-Aware Layout Generation via Visual-Aware Self-Correction. arXiv 2024, arXiv:2412.04237v3.

- Goyal, S.; Mahajan, A.; Mishra, S.; Udhayanan, P.; Shukla, T.; Joseph, K.J.; Srinivasan, B.V. Design-o-meter: Towards Evaluating and Refining Graphic Designs. In Proceedings of the 2025 IEEE/CVF Winter Conference on Applications of Computer Vision, Tucson, AZ, USA, 28 February–4 March 2025; Volume 5, pp. 5676–5686.

- Zhang, K.; Mo, L.; Chen, W.; Sun, H.; Su, Y. MagicBrush: A Manually Annotated Dataset for Instruction-Guided Image Editing. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Volume 36, pp. 31428–31449.

- Nakano, R.; Hilton, J.; Balaji, S.; Wu, J.; Ouyang, L.; Kim, C.; Hesse, C.; Jain, S.; Kosaraju, V.; Saunders, W.; et al. WebGPT: Browser-assisted question-answering with human feedback. arXiv 2021, arXiv:2112.09332.

- Le, D.H.; Pham, T.; Lee, S.; Clark, C.; Kembhavi, A.; Mandt, S.; Krishna, R.; Lu, J. One Diffusion to Generate Them All. In Proceedings of the Computer Vision and Pattern Recognition Conference, Music City Center, Nashville, TN, USA, 11–15 June 2025; pp. 2671–2682.

- Wang, Z.; Hsu, J.; Wang, X.; Huang, K.H.; Li, M.; Wu, J.; Ji, H. Visually Descriptive Language Model for Vector Graphics Reasoning. arXiv 2024, arXiv:2404.06479.

| Surveys | Focus Area | Key Contribution | Papers Reviewed |
|---|---|---|---|
| Tian et al. (2022) [13] | Mathematical foundations and content creation stages of vector graphics | Provides a foundation for understanding vector graphic representation and its mathematical principles | 147 |
| Shi et al. (2023) [14] | Layout generation aesthetics and technologies | Explores technologies for automatic layout generation, focusing on aesthetic rules | 131 |
| Huang et al. (2023) [9] | Taxonomy of graphic design intelligence | Categorizes approaches to graphic design tasks into a taxonomy of AI-driven intelligence | 77 |
| Liu et al. (2024) [15] | Graphic layout generation: implementation and interactivity | Reviews techniques for interactive and automated layout generation | 40 |
| Tang et al. (2024) [16] | Challenges and future needs for AI tools in graphic design | Identifies challenges and future functional needs based on designer interviews | 39 |
| Ours | Unification of cognitive and generative tasks in design workflows | Proposes a framework that integrates cognitive (e.g., reasoning, decision-making) and generative (e.g., image and layout generation) tasks, highlighting the interplay and potential synergies of AI-driven tools in holistic design processes | 267 |

| Technology | Key Features | Strengths | Limitations |
|---|---|---|---|
| Object Recognition | MLLMs, DETR-based detection | High accuracy; text integration | Limited coverage; semantic gaps |
| SVG Recognition | Graph-matching; YOLaT, GNNs | Effective for vectors; abstraction | Ignores high-level info; GNN limits |
| OCR | TextBoxes, Mask R-CNN, DETR | Diverse scenes; generative support | Complex post-processing; training issues |
| Font Recognition | CNNs, SVMs, FontCLIP, GANs | High accuracy; flexible fonts | Font variability; dataset complexity |
| Layout Analysis | Top-down, bottom-up, hybrid; transformers | Handles irregular layouts; pixel-based | Computationally heavy; table issues |
| Color Palettes | Regression, VAEAC, transformers | Dynamic, region-specific | Semantic oversight; suboptimal predictions |
| Other Attributes | CNNs, DMA-Net; personalized models | Global layout encoding; user-focused | Subjective; lacks benchmarks |
| SVG Generation | SketchRNN, DeepSVG, VectorFusion; VAEs | Scalable; precise; text-conditioned | Dataset dependency; complex synthesis |
| Vectorization | PolyFit, LIVE, SAMVG; optimization | Preserves detail; high quality | Boundary alignment; perceptual issues |
| Artistic Typography | DC-Font, Diff-Font; GANs, diffusion | Flexible; supports complex scripts | Data scarcity; readability trade-offs |
| Visual Text Rendering | TextDiffuser, GlyphControl; multilingual | Accurate segmentation; interactive | Limited dataset diversity; clarity issues |
| Automatic Layout | LayoutGAN, LayoutGPT; VAEs, LLMs | Diverse needs; language-driven | Complex layouts; visual feature loss |
| Glyph Layout | GLDesigner; vision–language models | High fidelity; constraint handling | Long text issues; limited data |
| Colorization | ControlNet, Palette-based; CNNs, GANs | Automated; semantic-aware | Long-range dependencies; inconsistencies |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zou, X.; Zhang, W.; Zhao, N. From Fragment to One Piece: A Review on AI-Driven Graphic Design. J. Imaging 2025, 11, 289. https://doi.org/10.3390/jimaging11090289
