1. Introduction

Global firearm-related violence has escalated in recent years, amplifying the demand for rapid, AI-based gunshot detection systems. These technologies analyze acoustic signatures—frequency, amplitude, and temporal waveforms—to differentiate various firearm discharges in real time. AI-enabled systems offer law enforcement a non-invasive, automated alternative to traditional forensic ballistics, delivering location-based alerts for immediate response.

In 2022, the United States experienced over 650 mass shootings (incidents involving four or more victims, excluding the shooter), and total firearm-related deaths surpassed 43,000. This alarming trend has driven urban centers to adopt advanced acoustic surveillance. ShotSpotter, deployed in more than 100 U.S. cities (including Chicago, New York, and San Francisco), uses sensor networks to triangulate gunfire and dispatch alerts in under a minute. Independent studies report up to 97% system accuracy under controlled conditions, although real-world performance varies. Estimated annual deployment and maintenance costs can exceed $65,000 per square mile [1].

Research on ShotSpotter reveals mixed outcomes. Some evaluations note faster police response and improved evidence collection, including assistance in incidents not reported via 911, but criminological analyses suggest no statistically significant reduction in violent crime or firearm homicides. Critics also cite false positives, racial profiling, and high infrastructure costs, and some cities have terminated their contracts over doubts about the system's efficacy [2].

Internationally, Latin America faces severe gun violence. Brazil, for example, recorded nearly 38,800 homicides in 2024, an intentional homicide rate of 17.9 per 100,000, with firearms implicated in the majority of cases [3]. Although this is the country’s lowest rate in over a decade, gun violence remains widespread, driven by organized crime, firearm accessibility, and social inequality [4].

Gunfire detection systems are therefore critically important, especially in high-risk urban environments where a rapid law enforcement response can reduce the severity of incidents and enhance public safety.

In recent years, artificial intelligence (AI), machine learning (ML), and deep learning (DL) have made substantial progress in audio signal classification, particularly in gunshot detection. A core principle of this research is to apply image processing and pattern recognition techniques to audio data. Audio signals are converted into time-frequency representations that can be visualized as images, and these spectrogram-like images allow deep-learning models originally developed for computer vision to analyze and classify firearm sounds [3, 5, 6].

Time-frequency features integrate the time and frequency domains in a single representation, whereas traditional methods analyze each domain in isolation. Techniques such as spectrograms, wavelet transforms, and Mel-Frequency Cepstral Coefficients (MFCCs) enable models to extract both temporal and spectral characteristics. Spectrograms in particular, which visualize how a signal’s frequency content evolves over time, are well suited to pattern recognition with convolutional neural networks (CNNs) in gunshot classification [7].
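To make the idea of a time-frequency representation concrete, the following sketch computes a log-magnitude STFT spectrogram with NumPy: the signal is cut into overlapping Hann-windowed frames, and the magnitude spectrum of each frame becomes one column of the image. The function name `stft_spectrogram`, the 1024-sample window, and the 256-sample hop are illustrative assumptions, not the settings used in this study.

```python
import numpy as np


def stft_spectrogram(x, n_fft=1024, hop=256):
    """Log-magnitude STFT spectrogram with shape (n_fft // 2 + 1, n_frames).

    Hann window, no padding: the signal must be at least n_fft samples long.
    n_fft and hop are illustrative defaults, not this study's settings.
    """
    x = np.asarray(x, dtype=float)
    if x.size < n_fft:
        raise ValueError("signal is shorter than one analysis frame")
    window = np.hanning(n_fft)
    n_frames = 1 + (x.size - n_fft) // hop
    # Column i holds the i-th windowed frame of the signal.
    frames = np.stack(
        [x[i * hop : i * hop + n_fft] * window for i in range(n_frames)], axis=1
    )
    magnitude = np.abs(np.fft.rfft(frames, axis=0))
    return 20.0 * np.log10(magnitude + 1e-10)  # dB; epsilon avoids log(0)


# Example: a 1 kHz tone sampled at 16 kHz for one second.
fs = 16000
t = np.arange(fs) / fs
spec = stft_spectrogram(np.sin(2 * np.pi * 1000 * t))
```

With these settings each frequency bin spans 16000 / 1024 = 15.625 Hz, so the 1 kHz tone appears as a horizontal line in bin 64 of every column.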

To strengthen the link with image processing and create a richer, multi-dimensional input, the concept of the RGB spectrogram is applied. In this approach, each color channel (red, green, blue) of an image represents a different time-frequency feature. For instance, the red (R) channel might hold the standard Mel spectrogram, the green (G) channel the delta features (the rate of spectral change), and the blue (B) channel the delta-delta features (the acceleration of that change). The result is a “color image” of the audio signal that carries more information than a standard grayscale spectrogram, allowing a CNN to learn more intricate patterns and better distinguish gunshots from environmental noise [5, 6, 8, 9].
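The delta-stacking variant of the RGB spectrogram described above can be sketched in NumPy as follows. The sketch assumes a precomputed log-Mel spectrogram (Mel bands by frames) as input, uses the common regression formula for deltas with a window of two frames on each side, and min-max scales each channel to 0 to 255 independently. The function names, the window size, and the per-channel scaling are assumptions for illustration. This is not necessarily how the 12 RGB spectrogram types in this study were produced, and resizing to the CNN input size is omitted.

```python
import numpy as np


def delta(feat, n=2):
    """Regression delta along the time axis (columns).

    d_t = sum_{k=1..n} k * (c_{t+k} - c_{t-k}) / (2 * sum_{k=1..n} k^2),
    with the first and last frames replicated at the edges.
    """
    feat = np.asarray(feat, dtype=float)
    n_frames = feat.shape[1]
    padded = np.pad(feat, ((0, 0), (n, n)), mode="edge")
    num = np.zeros_like(feat)
    for k in range(1, n + 1):
        # Frame t sits at padded column t + n.
        num += k * (padded[:, n + k : n + k + n_frames] - padded[:, n - k : n - k + n_frames])
    return num / (2 * sum(k * k for k in range(1, n + 1)))


def to_uint8(channel):
    """Min-max scale one channel to the 0..255 range of an 8-bit image."""
    lo, hi = channel.min(), channel.max()
    if hi == lo:
        return np.zeros(channel.shape, dtype=np.uint8)
    return np.round(255 * (channel - lo) / (hi - lo)).astype(np.uint8)


def rgb_spectrogram(log_mel):
    """Stack log-Mel (R), delta (G), and delta-delta (B) into an H x W x 3 image."""
    d1 = delta(log_mel)
    d2 = delta(d1)
    return np.stack([to_uint8(log_mel), to_uint8(d1), to_uint8(d2)], axis=-1)
```

A linear ramp in time has a delta of exactly 1 away from the edges, which is a quick sanity check on the formula; the resulting image can then be resized and fed to any three-channel pretrained CNN.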

The efficacy of CNN models on image-like data is well established in other fields, most notably medical imaging, including the analysis of MRI and CT scans [10] and pathology detection [11]. This success motivates applying the same principles to audio spectrograms. Deep-learning models can automatically extract spatial and temporal features from spectrograms and can thereby surpass traditional ML algorithms that often depend on manual feature engineering [12].

Therefore, this research explores AI and DL techniques built on transforming gunshot audio signals into image-based spectrograms (including RGB spectrograms) that serve as input to image-classification models. The methodology aims not only to improve classification accuracy but also to increase robustness against confounding sounds with similar acoustic characteristics, such as fireworks or vehicle backfires, which is a critical challenge for reliably deploying automated gunshot detection systems in semi-controlled outdoor environments.

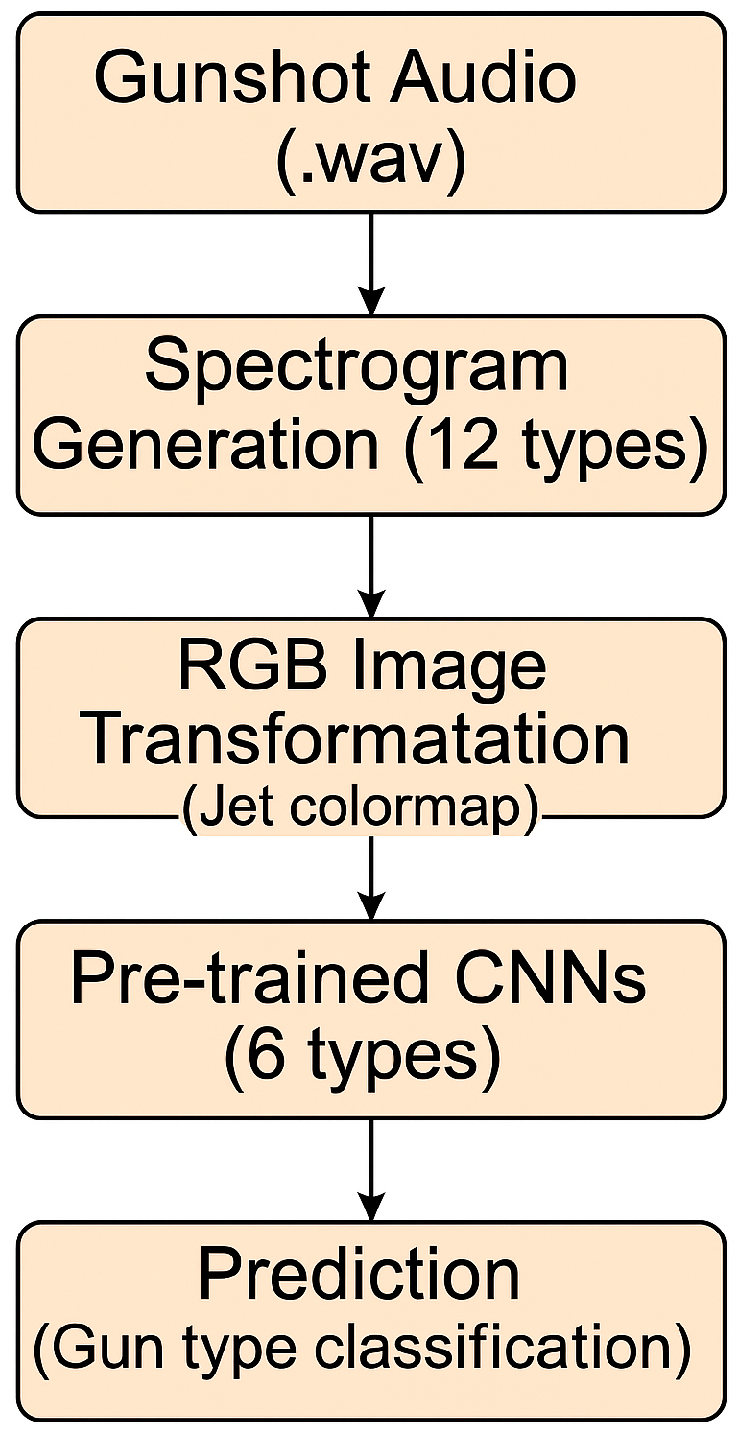

This study presents a comprehensive evaluation of deep-learning-based firearm sound classification using time-frequency representations. By converting 12 spectrogram types—including perceptual, Fourier-based, feature-specific, and wavelet-based methods—into RGB images and feeding them into six pretrained CNN architectures, the study establishes a reproducible benchmark and demonstrates the feasibility of applying deep image models to acoustic recognition tasks. The investigation lays a foundation for future work in intelligent acoustic surveillance systems.

The key contributions of this study are summarized as follows:

A systematic benchmark of 12 time-frequency spectrogram representations—transformed into RGB images—for gunshot classification.

Comparative evaluation of six state-of-the-art pretrained CNN architectures (ResNet18, ResNet50, ResNet101, GoogLeNet, Inception-v3, and InceptionResNetV2) under consistent 5-fold cross-validation, which gives a more reliable estimate of generalization than a single train/test split and helps expose overfitting when training on a relatively small dataset.

Identification of optimal spectrogram–CNN pairs (e.g., CQT + InceptionResNetV2) achieving up to 95.81% accuracy, 0.9447 F1-score, and 0.9957 AUC.

Inclusion of misclassification analysis and computational cost comparison to support real-time or edge deployment.

The remainder of this paper is organized as follows:

Section 2 formulates the problem and defines the operational constraints of firearm sound recognition.

Section 3 describes the dataset, spectrogram generation methods, CNN architectures, and experimental settings.

Section 4 presents the classification outcomes, misclassification analysis, and computational cost evaluation.

Finally, Section 5 summarizes the findings and outlines potential directions for future work.

4. Results and Discussion

This study introduces a framework for firearm sound classification that integrates audio signal processing with deep convolutional neural networks (CNNs). The proposed system comprises three main stages: audio preprocessing, spectrogram-based feature extraction, and deep-learning-driven classification. Gunshot audio recordings are converted into time-frequency representations (spectrograms), which serve as input to pretrained CNN models. Five-fold cross-validation is used throughout training and evaluation so that the reported performance reflects generalization to unseen recordings rather than fit to a single data split.
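A minimal sketch of a 5-fold split is shown below. This section does not state whether the folds were stratified; the sketch assumes stratification by firearm class, so each fold keeps roughly the overall class proportions. The function name `stratified_kfold` and the fixed random seed are hypothetical.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_indices, test_indices) for stratified k-fold cross-validation.

    The indices of each class are shuffled and dealt round-robin into k folds,
    so every fold receives a near-equal share of every class.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for j, i in enumerate(indices):
            folds[j % k].append(i)
    for f in range(k):
        test = sorted(folds[f])
        # Training set = every sample that is not in the held-out fold.
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train, test
```

With, say, 50 recordings per firearm class (a made-up count), every test fold receives exactly 10 recordings of each class and each recording is tested exactly once across the five folds. Stratification matters here because a fold that under-represents one firearm would distort that class's recall.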

The classification task targets four firearm types: Smith & Wesson .38 caliber, Glock 17 Gen3 9 mm, Remington Model 870 12-gauge, and Ruger AR-556 .223 caliber. The dataset is derived from the work of Kabealo et al. [13], which features recordings captured in semi-controlled outdoor environments using multiple edge devices to reflect diverse acoustic conditions.

Transfer learning is applied using several established CNN architectures (GoogLeNet, Inception-v3, InceptionResNetV2, ResNet18, ResNet50, and ResNet101) pretrained on the ImageNet dataset. Each model is fine-tuned for spectrogram classification by replacing its final layers with new fully connected, softmax, and classification layers whose WeightLearnRateFactor and BiasLearnRateFactor are set to 20, so that the new layers train with an effective learning rate 20 times the base rate (0.002). Input images are resized to each network’s native input size: 224 × 224 pixels for GoogLeNet and the ResNet variants, and 299 × 299 pixels for the Inception-based models. All models are trained with the Adam optimizer using a batch size of 32, an initial learning rate of 0.0001, and a maximum of 500 epochs.
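To illustrate what a learn-rate factor of 20 does, the sketch below implements a bare-bones Adam update in NumPy in which each parameter's step size is the base learning rate (0.0001) multiplied by a per-parameter factor. The class name, the parameter names `backbone.w` and `fc.w`, and the decay-rate and epsilon defaults are assumptions for illustration; this is not MATLAB's implementation. In PyTorch the same effect is usually achieved with optimizer parameter groups that carry different `lr` values.

```python
import numpy as np


class FactorAdam:
    """Adam with a per-parameter learn-rate factor (illustrative sketch).

    The effective step size of parameter k is base_lr * factors.get(k, 1.0),
    mirroring the idea behind a layer-level learn-rate factor.
    """

    def __init__(self, params, factors, base_lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-8):
        self.params = params    # dict: name -> np.ndarray, updated in place
        self.factors = factors  # dict: name -> learn-rate multiplier
        self.base_lr, self.beta1, self.beta2, self.eps = base_lr, beta1, beta2, eps
        self.m = {k: np.zeros_like(v) for k, v in params.items()}  # 1st moment
        self.v = {k: np.zeros_like(v) for k, v in params.items()}  # 2nd moment
        self.t = 0

    def step(self, grads):
        self.t += 1
        for k, g in grads.items():
            self.m[k] = self.beta1 * self.m[k] + (1 - self.beta1) * g
            self.v[k] = self.beta2 * self.v[k] + (1 - self.beta2) * g * g
            # Bias correction for the zero-initialized moment estimates.
            m_hat = self.m[k] / (1 - self.beta1 ** self.t)
            v_hat = self.v[k] / (1 - self.beta2 ** self.t)
            lr = self.base_lr * self.factors.get(k, 1.0)
            self.params[k] -= lr * m_hat / (np.sqrt(v_hat) + self.eps)


# Pretrained backbone weights use factor 1; the new classification head uses 20.
params = {"backbone.w": np.zeros(3), "fc.w": np.zeros(4)}
opt = FactorAdam(params, factors={"fc.w": 20.0}, base_lr=1e-4)
opt.step({"backbone.w": np.ones(3), "fc.w": np.ones(4)})
```

Because Adam normalizes each update by the running gradient magnitude, the first step moves each parameter by roughly its effective learning rate: about 0.0001 for the transferred layers and about 0.002 for the new head, so the head adapts quickly while the pretrained features change slowly.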

The following subsections present and analyze the results obtained with each of the 12 spectrogram types as input features. Each spectrogram type is examined individually to assess its effect on classification performance across the six CNN models, and the comparison shows how discriminative each time-frequency representation is for firearm sound classification.

4.1. Performance Metrics for Bark Spectrogram with CNN Architectures

The experiment tested six CNN architectures (GoogLeNet, InceptionResNetV2, Inception-v3, ResNet18, ResNet50, and ResNet101) on the classification task using Bark spectrograms. As shown in Table 3, all models struggled to achieve strong performance, with none exceeding 44% accuracy. ResNet18 delivered the best accuracy at 43.81%, which still indicates a limited capability to generalize from the data. GoogLeNet and InceptionResNetV2 achieved perfect precision (1.0000), but their recall values were extremely low (0.0700 and 0.0500, respectively). In other words, these models produced no false positives but also missed most actual positives, and their F1-scores were correspondingly very low, reflecting the imbalance between precision and recall.
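Because the F1-score is the harmonic mean of precision P and recall R, it is dominated by the smaller of the two. Plugging in the rounded values reported above for GoogLeNet (P = 1.0000, R = 0.0700) and InceptionResNetV2 (P = 1.0000, R = 0.0500) shows how perfect precision cannot compensate for near-zero recall; these values are computed from the rounded precision and recall rather than read from Table 3.

```latex
F_1 = \frac{2PR}{P+R}, \qquad
F_1^{\mathrm{GoogLeNet}} = \frac{2(1.0000)(0.0700)}{1.0000 + 0.0700} = \frac{0.1400}{1.0700} \approx 0.131, \qquad
F_1^{\mathrm{InceptionResNetV2}} = \frac{2(1.0000)(0.0500)}{1.0000 + 0.0500} = \frac{0.1000}{1.0500} \approx 0.095
```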

Inception-v3 performed slightly better than the previous two, with a more moderate precision (0.2819) and higher recall (0.4200), resulting in an F1-score of 0.3374. While still underwhelming, this balance shows marginal improvement in capturing true positives. ResNet18 stood out for its more balanced performance, with a precision of 0.4050 and recall of 0.4852, giving it the highest F1-score at 0.4414. It also had a solid specificity of 0.7825 and AUC of 0.6714, which suggests better overall class separation than other models. ResNet50 and ResNet101 had high specificity (above 0.92), but their recall and F1-scores were low, showing they were heavily biased toward negative predictions. These models would miss many true positives, which is problematic for most real-world classification tasks.
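Precision, recall, specificity, and false positive rate all derive from the same confusion matrix, which makes patterns such as perfect precision with very low recall, or high specificity with low recall, easy to read off. The sketch below computes one-vs-rest metrics for a single class of a multiclass confusion matrix. How the study aggregates per-class values is not stated in this section; the function name is hypothetical, and the confusion matrix in the example is made up to reproduce the GoogLeNet pattern (precision 1.0, recall 0.07).

```python
def one_vs_rest_metrics(cm, c):
    """Metrics for class c of a square confusion matrix.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    Class c is the "positive" class; all other classes are "negative".
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = cm[c][c]
    fn = sum(cm[c][j] for j in range(n)) - tp  # true c, predicted another class
    fp = sum(cm[i][c] for i in range(n)) - tp  # another class, predicted c
    tn = total - tp - fn - fp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # also called sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0      # equals 1 - specificity
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(cm[i][i] for i in range(n)) / total  # overall, all classes
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "f1": f1, "specificity": specificity, "fpr": fpr}


# Made-up 4-class example: class 0 is predicted only 7 times, always correctly,
# so its precision is 1.0 while its recall is only 7 / 100 = 0.07.
cm = [
    [7, 60, 20, 13],
    [0, 80, 10, 10],
    [0, 10, 85, 5],
    [0, 5, 5, 90],
]
metrics = one_vs_rest_metrics(cm, 0)
```

The example also shows why high specificity alone is not reassuring: class 0 never attracts a false positive, so its specificity is 1.0 even though the model misses 93 of its 100 samples.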

In summary, the Bark spectrogram alone does not appear to provide enough discriminative power for CNN architectures to perform reliably. Although ResNet18 showed relative promise, its accuracy and F1-score are still below acceptable thresholds for deployment. The dataset or feature type may be inadequate, or the task may require more sophisticated model tuning or feature engineering.

Based on these results, the use of Bark spectrograms with standard CNN architectures is not currently suitable for practical applications. Further improvements in data preprocessing, feature selection, or model design would be necessary before these systems could be considered viable.
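One possible contributor to the weak Bark results, offered here as a hypothesis rather than a finding of this study, is the coarse frequency resolution of critical-band features. The sketch below implements the Zwicker and Terhardt approximation of the Bark scale alongside the HTK-style Mel formula for comparison. The number of Bark bands and the sampling rate used in this study are not given in this section.

```python
import math


def hz_to_mel(f):
    """HTK-style Mel scale: 2595 * log10(1 + f / 700)."""
    return 2595.0 * math.log10(1.0 + f / 700.0)


def hz_to_bark(f):
    """Zwicker and Terhardt approximation of the Bark (critical-band) scale."""
    return 13.0 * math.atan(0.00076 * f) + 3.5 * math.atan((f / 7500.0) ** 2)


# Width of an assumed 0-8 kHz band on each perceptual scale.
bark_span = hz_to_bark(8000.0)
mel_span = hz_to_mel(8000.0)
```

Under the assumed 8 kHz bandwidth, the whole signal spans only about 21 Bark, so a representation with one band per Bark has roughly 21 frequency rows, whereas Mel and STFT spectrograms are usually computed with many more bands or bins.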

4.2. Performance Metrics for Chroma Spectrogram with CNN Architectures

As shown in Table 4, Chroma spectrograms yielded strong performance across all six CNN architectures (GoogLeNet, InceptionResNetV2, Inception-v3, ResNet18, ResNet50, and ResNet101), with every accuracy exceeding 84%, suggesting that Chroma spectrograms provide informative input representations for this classification task. InceptionResNetV2 achieved the highest accuracy at 88.92%, closely followed by ResNet50 at 88.78% and Inception-v3 at 88.32%. These results suggest that deeper and more complex architectures are somewhat better suited to learning from Chroma-based features.

In terms of precision, all models scored above 0.85, with InceptionResNetV2 leading at 0.9231. This high precision reflects a strong ability to minimize false positives. Recall values were similarly high, ranging from 0.7800 (GoogLeNet) to 0.8800 (Inception-v3 and ResNet50), showing that the models were also effective at detecting true positives. The F1-scores reflect balanced performance across precision and recall, with InceptionResNetV2 and ResNet50 both achieving 0.8800 or higher, making them strong candidates for deployment in scenarios requiring consistent classification reliability.

Specificity was also high, with all models above 0.95, indicating minimal false positive rates. The lowest FPR was recorded by InceptionResNetV2 at just 0.0213, showing excellent discriminatory power. The AUC scores further confirm these results, with all models scoring above 0.96. ResNet50 reached an AUC of 0.9796, while ResNet18 achieved a very close 0.9794 despite slightly lower accuracy. This suggests a strong ability to distinguish between classes under varying decision thresholds.
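The AUC summarizes how well a model separates classes across all decision thresholds. For one class treated as positive, it equals the probability that a randomly chosen positive example receives a higher score (for instance, the softmax probability for that class) than a randomly chosen negative one. The sketch below computes it with the rank-based Mann-Whitney formulation in plain Python; the function name is hypothetical, and how the study averages AUC over the four firearm classes is not stated in this section.

```python
def auc_one_vs_rest(scores, is_positive):
    """ROC AUC via the Mann-Whitney U statistic; tied scores count as 1/2."""
    pairs = sorted(zip(scores, is_positive))
    n_pos = sum(1 for _, p in pairs if p)
    n_neg = len(pairs) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative example")
    rank_sum_pos = 0.0
    i = 0
    while i < len(pairs):
        # Find the run of tied scores pairs[i:j] and give each the mean rank.
        j = i
        while j < len(pairs) and pairs[j][0] == pairs[i][0]:
            j += 1
        mean_rank = (i + 1 + j) / 2.0  # mean of 1-based ranks i+1 .. j
        rank_sum_pos += mean_rank * sum(1 for k in range(i, j) if pairs[k][1])
        i = j
    u = rank_sum_pos - n_pos * (n_pos + 1) / 2.0
    return u / (n_pos * n_neg)
```

Because only the ranking of scores matters, the AUC is unaffected by the choice of decision threshold, which is why it complements accuracy and F1 in the comparisons that follow.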

Based on the performance shown in Table 4, Chroma spectrograms paired with CNN architectures are a promising option for practical applications. Their performance is stable, accurate, and consistent across architectures, making them a reasonable candidate for real-world audio-based classification systems.

4.3. Performance Metrics for Cochleagram Spectrogram with CNN Architectures

As shown in Table 5, the CNN architectures evaluated with Cochleagram spectrogram features achieved outstanding performance across all metrics. All models reached accuracies above 91%, with ResNet101 and ResNet50 performing best at 93.9964% and 93.9516%, respectively. This indicates that Cochleagram features offer highly discriminative information for CNN-based classification.
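Cochleagrams are commonly built from a gammatone filterbank whose center frequencies are spaced uniformly on the ERB-rate scale of Glasberg and Moore, so that frequency resolution follows that of the auditory system. The sketch below computes only those center frequencies and bandwidths, not the full filterbank. The function names and the 64-channel, 50 Hz to 8 kHz configuration are assumptions, since the study's cochleagram settings are not given in this section.

```python
import math


def erb_bandwidth(f):
    """Equivalent rectangular bandwidth (Hz) of the auditory filter at f Hz."""
    return 24.7 * (4.37 * f / 1000.0 + 1.0)


def erb_rate(f):
    """ERB-rate scale: number of ERBs below frequency f (Hz)."""
    return 21.4 * math.log10(4.37 * f / 1000.0 + 1.0)


def erb_rate_to_hz(e):
    """Inverse of erb_rate."""
    return (10.0 ** (e / 21.4) - 1.0) * 1000.0 / 4.37


def erb_center_frequencies(f_lo, f_hi, n):
    """n filter center frequencies spaced uniformly on the ERB-rate scale."""
    lo, hi = erb_rate(f_lo), erb_rate(f_hi)
    return [erb_rate_to_hz(lo + (hi - lo) * k / (n - 1)) for k in range(n)]


centers = erb_center_frequencies(50.0, 8000.0, 64)
```

The resulting channels are densely packed at low frequencies and spread out at high frequencies; at 1 kHz the equivalent rectangular bandwidth is about 133 Hz.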

Precision values across the models were consistently high, ranging from 0.9109 (ResNet18) to 0.9394 (InceptionResNetV2), indicating the models were very effective in minimizing false positives. Recall was also excellent across all architectures, with most models exceeding 0.91. ResNet50 achieved the highest recall at 0.9505, while ResNet101 was nearly identical at 0.9500. These high recall values suggest strong sensitivity to actual positive cases, a critical factor in many real-world applications.

F1-scores, which balance precision and recall, were equally impressive. All models scored above 0.91, with ResNet50 leading at 0.9412, followed by ResNet101 at 0.9314. These results confirm the ability of these networks to maintain balanced classification performance without favoring one metric at the expense of another.

Specificity was also strong, with all models scoring above 0.97. InceptionResNetV2 recorded the highest specificity at 0.9818, indicating its strong capability to correctly identify negative instances. The corresponding false positive rates (FPR) were extremely low, all under 0.03, reinforcing the overall reliability of the models in minimizing classification errors. The AUC scores, representing the overall ability of the models to distinguish between classes across all thresholds, were exceptionally high. Every model exceeded 0.98, with ResNet101 achieving the highest AUC of 0.9938, followed closely by ResNet50 at 0.9930. These near-perfect AUC values reflect consistent and robust performance.

Based on the metrics presented in Table 5, Cochleagram spectrograms are highly effective features for CNN architectures. All evaluated models delivered high accuracy, balanced precision-recall performance, and excellent class separation, making Cochleagram-based CNN classification a strong candidate for real-world deployment.

4.4. Performance Metrics for CQT Spectrogram with CNN Architectures

As shown in Table 6, CNN architectures using CQT (Constant-Q Transform) spectrogram features perform remarkably well across all evaluated metrics. Every model achieved over 92% accuracy, with InceptionResNetV2 reaching the top accuracy of 95.8124%, followed closely by ResNet101 at 95.1647% and ResNet50 at 94.7835%. These results highlight the strong compatibility between CQT features and deep convolutional architectures.
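Part of what distinguishes the CQT from a fixed-resolution STFT is its geometric frequency spacing. With f_min the lowest analyzed frequency, b the number of bins per octave, and f_s the sampling rate (symbols introduced here for illustration; the study's CQT parameters are not given in this section), the standard definitions of the k-th center frequency, the constant quality factor, and the window length in samples are:

```latex
f_k = f_{\min}\, 2^{k/b}, \qquad
Q = \frac{f_k}{\Delta f_k} = \frac{1}{2^{1/b} - 1}, \qquad
N_k = Q\,\frac{f_s}{f_k}
```

With b = 12 (semitone resolution), Q ≈ 16.8, so each bin's window spans about 17 cycles of its center frequency: long windows at low frequencies give fine frequency resolution, and short windows at high frequencies give fine time resolution.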

Precision values are also notably high, ranging from 0.9029 (ResNet18) to 0.9691 (Inception-v3), showing that all models consistently predicted positive classes with minimal false positives. In terms of recall, performance was outstanding across the board, with ResNet50 and ResNet101 both achieving 0.9700. These models were particularly effective at identifying true positive instances, making them strong choices for tasks where missing positive cases would be costly.

F1-scores, which reflect the balance between precision and recall, further support the models’ robustness. Inception-v3 achieved the highest F1-score at 0.9543, followed closely by ResNet50 at 0.9463 and InceptionResNetV2 at 0.9447. These values confirm that the models did not trade off precision for recall, maintaining consistent strength in both areas.

Specificity was very high across all models, indicating strong performance in correctly classifying negative cases. Inception-v3 achieved the highest specificity at 0.9909, with a corresponding FPR of only 0.0092—the lowest among all models tested. These values suggest exceptional reliability in distinguishing between classes with minimal misclassification.

The AUC values underscore the overall excellence of these models. All AUC scores exceeded 0.989, with ResNet50 and ResNet101 both reaching the highest value of 0.9964. These near-perfect scores show that the models are highly effective across different classification thresholds, making them robust to varying decision criteria.

In conclusion, the results in Table 6 confirm that CQT spectrograms are highly effective input features for CNN-based classification. The consistently strong metrics across all architectures demonstrate reliable, accurate, and balanced performance. These models are well-suited for deployment in real-world applications where high precision and sensitivity are essential.

4.5. Performance Metrics for FFT Spectrogram with CNN Architectures

Table 7 presents the results of CNN models trained on FFT spectrogram features. The performance across all models is consistently strong, with accuracy ranging from 90.0728% (GoogLeNet) to 93.6021% (InceptionResNetV2). These values indicate that FFT spectrograms are effective for CNN-based classification, although they slightly trail the performance seen with CQT or Cochleagram features.

Precision values are generally high across all architectures, with InceptionResNetV2 achieving the best score at 0.9515, reflecting a strong ability to minimize false positives. Other models, such as GoogLeNet and ResNet50, also perform well in this regard, both exceeding 0.93. Recall scores, however, are slightly more varied. While GoogLeNet reached 0.8566 and ResNet50 achieved a solid 0.8784, InceptionResNetV2 dropped to 0.8290. This indicates that some models, despite high precision, may miss a notable number of true positive cases.

F1-scores, which provide a balanced measure of model performance, ranged from 0.8538 (ResNet18) to 0.9038 (ResNet50). ResNet50 stands out as offering the best trade-off between precision and recall, suggesting it is the most well-rounded performer among the group. Overall, most models delivered F1-scores above 0.87, demonstrating reliable classification performance.

Specificity was also robust across the board, with all models exceeding 0.96. InceptionResNetV2 had the highest specificity at 0.9701, accompanied by a low false positive rate of 0.0299. These metrics indicate that the models were generally effective at correctly identifying negative cases and limiting misclassifications.

AUC values for all architectures remained high, ranging from 0.9736 (GoogLeNet) to 0.9846 (InceptionResNetV2), further confirming strong overall classification ability. ResNet50 and Inception-v3 also performed well in this area, each scoring above 0.983, suggesting consistent discrimination across decision thresholds.

Considering the data in Table 7, CNNs trained with FFT spectrogram features deliver solid and reliable performance. Although marginally behind other spectrogram types in some metrics, FFT remains a viable and effective input representation for deep-learning-based audio classification tasks.

4.6. Performance Metrics for Mel Spectrogram with CNN Architectures

Performance results for CNN models using Mel spectrogram features are detailed in Table 8. The metrics indicate that Mel spectrograms are highly effective for audio classification tasks. All models surpassed 89% accuracy, with the best-performing architecture, ResNet50, achieving 94.8352%, followed closely by InceptionResNetV2 at 94.5173%. These figures suggest strong overall model generalization using Mel-based input.

Precision was consistently high across all models. ResNet101 and InceptionResNetV2 led with scores of 0.9681 and 0.9500, respectively, while the other models maintained values above 0.90, indicating that false positives were kept low. Recall values were similarly strong, with ResNet50 achieving the highest at 0.9700, indicating high sensitivity to true positive instances. Most models maintained recall above 0.90, reflecting dependable detection performance.

The F1-scores reinforce the strength of the models by balancing precision and recall. InceptionResNetV2 reaches an F1-score of 0.9500, and ResNet50 follows closely at 0.9463. Even the lowest F1-score, from GoogLeNet, remains strong at 0.9026, confirming consistent predictive performance across the architectures.

Specificity values were high across all models, with ResNet101 achieving a peak score of 0.9909, indicating a very low false positive rate (FPR = 0.0091). Other models also maintained strong specificity, all above 0.96. This indicates reliability in correctly rejecting negative cases.

AUC scores offer further confirmation of overall performance, all exceeding 0.98. ResNet50 recorded the highest AUC at 0.9965, suggesting excellent class separation. InceptionResNetV2 and ResNet101 followed closely with AUCs of 0.9940 and 0.9945, respectively, reinforcing their effectiveness across decision thresholds.

Based on the results in Table 8, CNN architectures paired with Mel spectrograms are highly effective for classification tasks. The consistent performance across accuracy, precision, recall, F1-score, specificity, and AUC makes this approach well-suited for practical deployment in real-world audio analysis systems.

4.7. Performance Metrics for MFCC Spectrogram with CNN Architectures

The performance metrics in Table 9 highlight the effectiveness of CNN architectures using MFCC (Mel-Frequency Cepstral Coefficient) spectrograms. All models achieved high classification accuracy, with InceptionResNetV2 leading at 94.7438%, followed closely by ResNet101 at 94.4135% and then by ResNet50 at 92.9236%. These results demonstrate that MFCCs are a strong input representation for deep-learning models in audio classification tasks.

Precision scores were consistently high across all architectures. InceptionResNetV2 reached the highest at 0.9515, while others such as ResNet101 (0.9412) and Inception-v3 (0.9381) also performed well, suggesting the models were effective at minimizing false positives. Recall was equally strong, exceeding 0.90 for every model. InceptionResNetV2 achieved the highest recall at 0.9703, followed closely by ResNet50 (0.9604), highlighting the models’ ability to detect true positives reliably.

F1-scores, which balance precision and recall, were uniformly strong. InceptionResNetV2 again led with 0.9608, confirming its well-rounded performance. ResNet101 (0.9458) and ResNet50 (0.9238) also demonstrated strong overall classification consistency. Even the lowest F1-score, from GoogLeNet at 0.9091, remained robust, indicating that all models maintained a reliable precision-recall balance.

Specificity scores were high, with most models scoring above 0.96. InceptionResNetV2 achieved 0.9848, and Inception-v3 followed with 0.9818, indicating strong performance in correctly identifying negative cases. Corresponding false positive rates (FPR) remained low, under 0.04 for all models, further demonstrating their classification stability.

AUC values reinforced these findings, with all architectures scoring above 0.988. InceptionResNetV2 achieved the highest AUC at 0.9973, showing near-perfect class separability. ResNet101 (0.9953) and ResNet50 (0.9935) were also excellent in this regard.

Overall, the results in Table 9 confirm that MFCC spectrograms are highly effective for CNN-based classification. The combination of high accuracy, balanced precision and recall, low false positive rates, and strong AUC scores makes this approach reliable and well-suited for deployment in real-world audio recognition systems.

4.8. Performance Metrics for Reassigned Spectrogram with CNN Architectures

Table 10 displays the results of CNN models trained on Reassigned spectrogram features, showing high performance across all evaluated metrics. All architectures achieved over 89% accuracy, with ResNet101 performing best at 93.6214%, followed by InceptionResNetV2 at 93.1639%. These results suggest that the Reassigned spectrogram is an effective input representation for CNN-based classification.

Precision values were strong across the board, ranging from 0.8611 (ResNet18) to 0.9406 (ResNet101). The higher scores for InceptionResNetV2, Inception-v3, and ResNet101 indicate that these models effectively minimized false positives. Recall values were even more consistent, with all models exceeding 0.92. ResNet18, despite a lower precision, reached a recall of 0.9300, highlighting its ability to identify true positives effectively.

F1-scores, which measure the balance between precision and recall, were all above 0.89. ResNet101 achieved the highest F1-score at 0.9406, with InceptionResNetV2 and Inception-v3 following closely at 0.9353 and 0.9307, respectively. Even GoogLeNet and ResNet50 maintained respectable F1-scores above 0.90, showing reliable overall classification performance.

Specificity scores were similarly high, with all models above 0.95. ResNet101 again led with 0.9817, closely followed by InceptionResNetV2 at 0.9787. These values reflect strong performance in correctly identifying negative instances. Corresponding false positive rates were all low, ranging from 0.0183 to 0.0455, with most models staying under 0.03.

AUC values further confirmed the models’ strength, with all architectures achieving scores above 0.985. ResNet101 reached the highest AUC at 0.9956, with Inception-v3 and ResNet50 also performing exceptionally well. These results suggest excellent class separation and model robustness across decision thresholds.

In summary, the performance metrics in Table 10 demonstrate that Reassigned spectrograms are highly suitable for CNN-based classification. The models consistently achieved strong accuracy, balanced precision-recall performance, and high specificity and AUC values. These results indicate that reassigned spectrograms offer a reliable and effective approach for real-world audio classification tasks.

4.9. Performance Metrics for Spectral Contrast Spectrogram with CNN Architectures

Table 11 presents the performance of CNN architectures trained on Spectral Contrast spectrogram features for firearm sound classification. Among the models evaluated, ResNet101 achieved the highest accuracy at 74.8619%, followed closely by InceptionResNetV2 (74.6378%) and ResNet18 (72.3024%). These results suggest that architectures with residual connections, whether purely residual or combined with inception modules, are more effective at extracting relevant patterns from spectral contrast inputs.

Even so, the accuracy of every model remains well below that observed with other spectrogram types such as Mel or MFCC. Precision ranged from 0.6941 (GoogLeNet) to 0.7742 (ResNet18), and recall from 0.5842 (GoogLeNet) to 0.7426 (ResNet101). These comparatively low values, particularly GoogLeNet’s weak recall, highlight the difficulty CNNs face in capturing the necessary temporal and spectral information from spectral contrast features alone.
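Spectral contrast summarizes each frequency sub-band by the difference between its spectral peaks and valleys, which may help explain the limited information it provides: an entire band collapses to a single value per frame. The sketch below is a simplified version of the common octave-band formulation. For each band and frame it takes the log of the mean of the strongest fraction alpha of bins minus the log of the mean of the weakest fraction. The band edges, alpha = 0.02, and the function name are assumptions; the study's spectral contrast settings are not given in this section.

```python
import numpy as np


def spectral_contrast(mag, freqs, band_edges, alpha=0.02):
    """Peak-minus-valley contrast (log10 units) per sub-band and frame.

    mag:        magnitude spectrogram, shape (n_bins, n_frames)
    freqs:      frequency of each bin in Hz, shape (n_bins,)
    band_edges: increasing band edges in Hz, e.g. octave bands
    alpha:      fraction of a band's bins averaged for the peak and the valley
    """
    mag = np.asarray(mag, dtype=float)
    freqs = np.asarray(freqs, dtype=float)
    rows = []
    for lo, hi in zip(band_edges[:-1], band_edges[1:]):
        band = mag[(freqs >= lo) & (freqs < hi)]
        if band.shape[0] == 0:
            raise ValueError(f"no frequency bins between {lo} and {hi} Hz")
        band = np.sort(band, axis=0)  # ascending magnitudes within each frame
        n = max(1, int(round(alpha * band.shape[0])))
        valley = band[:n].mean(axis=0)
        peak = band[-n:].mean(axis=0)
        rows.append(np.log10(peak + 1e-10) - np.log10(valley + 1e-10))
    return np.array(rows)  # shape (n_bands, n_frames)


# Five octave bands from 200 Hz to 6.4 kHz on a 257-bin, 0-8 kHz grid.
freqs = np.linspace(0.0, 8000.0, 257)
edges = [200.0, 400.0, 800.0, 1600.0, 3200.0, 6400.0]
flat = np.ones((257, 5))
peaky = flat.copy()
peaky[32, :] = 100.0  # bin 32 sits at exactly 1000 Hz, inside the 800-1600 Hz band
```

With these assumed octave bands, each frame is reduced to five numbers, compared with hundreds of rows in a typical STFT spectrogram. A flat spectrum yields zero contrast everywhere, while a single strong peak raises only the value of the band that contains it.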

F1-scores reflect similar trends. ResNet18 achieved the highest F1-score at 0.7461, followed closely by InceptionResNetV2 (0.7423) and ResNet101 (0.7389). These values suggest that while the models are reasonably balanced in their precision and recall, their overall performance is still constrained by the limitations of the input representation. The results also indicate that deeper models do not consistently outperform shallower ones, pointing to the importance of architectural design over sheer depth when working with contrast-based inputs.

Specificity scores remained high across the board, with values ranging from 0.9119 to 0.9360, indicating that the models were generally good at identifying negative cases. However, false positive rates (FPR) were noticeably higher than those seen with other spectrogram types, reaching up to 0.0882 in the case of ResNet50. This contributes to lower reliability and robustness in classification.

AUC values ranged from 0.8685 (GoogLeNet) to 0.9268 (ResNet101), demonstrating moderate class separability. Although these scores confirm that CNNs trained on spectral contrast features have some discriminative power, they are still inferior to models trained on richer time-frequency representations.

In summary, while Spectral Contrast spectrograms can provide moderate performance for firearm sound classification using CNNs, they do not offer the same robustness or reliability as other spectrogram types. These findings suggest that future approaches should consider combining spectral contrast with other features or adopting alternative architectures to improve classification outcomes.

4.10. Performance Metrics for STFT Spectrogram with CNN Architectures

Table 12 summarizes the performance of CNN architectures trained on STFT (Short-Time Fourier Transform) spectrograms. The results demonstrate that STFT is a highly effective input representation for firearm sound classification using deep learning. All models achieved high accuracy, with InceptionResNetV2 leading at 94.7901%, followed by Inception-v3 (93.5834%) and ResNet101 (93.1165%). These results highlight the effectiveness of both residual and inception-based architectures in modeling time-frequency representations.

Precision scores were strong across all models, with Inception-v3 achieving the highest at 0.9681. InceptionResNetV2 and ResNet50 also performed well, with precision values above 0.92. Recall scores were equally impressive. InceptionResNetV2 achieved the highest recall at 0.9800, indicating an exceptional ability to detect true positive instances. GoogLeNet, despite being a shallower architecture, also showed strong recall at 0.9400 but suffered from lower precision (0.7402), leading to a more modest F1-score.

F1-scores for most models were high, reflecting a strong balance between precision and recall. InceptionResNetV2 stood out with an F1-score of 0.9561, followed closely by ResNet50 (0.9412) and Inception-v3 (0.9381). These results affirm that deeper models with sophisticated architectures are capable of extracting rich and meaningful patterns from STFT spectrograms.

Specificity values were generally high, with Inception-v3 achieving the best score at 0.9909. Most other models also exceeded 0.96, which kept false positive rates low. GoogLeNet was the exception, with a specificity of 0.8994 and a correspondingly high FPR of 0.1006. In other words, it produced considerably more false positives than the deeper models.
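Specificity and false positive rate are complementary, $\mathrm{FPR} = 1 - \mathrm{specificity} = FP/(FP + TN)$. This is why GoogLeNet's specificity of 0.8994 corresponds to an FPR of 0.1006. The sketch below derives the per-class metrics reported in these tables from one-vs-rest confusion counts. The counts and the `BinaryCounts` helper are hypothetical and serve only as an illustration.

```python
from dataclasses import dataclass

@dataclass
class BinaryCounts:
    """One-vs-rest confusion counts for a single firearm class (hypothetical helper)."""
    tp: int
    fp: int
    tn: int
    fn: int

    def metrics(self) -> dict:
        precision = self.tp / (self.tp + self.fp)
        recall = self.tp / (self.tp + self.fn)        # sensitivity / true positive rate
        specificity = self.tn / (self.tn + self.fp)   # true negative rate
        return {
            "accuracy": (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn),
            "precision": precision,
            "recall": recall,
            "f1": 2 * precision * recall / (precision + recall),
            "specificity": specificity,
            "fpr": 1 - specificity,                   # equals fp / (fp + tn)
        }

# Hypothetical counts: 94 of 100 target clips detected, 10 false alarms among 500 others.
m = BinaryCounts(tp=94, fp=10, tn=490, fn=6).metrics()
for name, value in m.items():
    print(f"{name:>11}: {value:.4f}")
```

In a multi-class setting, FP for a class counts clips of all other classes that are predicted as that class.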

AUC scores support the overall conclusion of strong model performance. InceptionResNetV2 achieved the highest AUC at 0.9976, indicating near-perfect class discrimination. All other models recorded AUC values above 0.97, reinforcing their ability to maintain high performance across different classification thresholds.
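AUC can be read as the probability that a randomly chosen positive clip receives a higher score than a randomly chosen negative one, with ties counting one half. The pairwise sketch below computes AUC directly from that definition for a single one-vs-rest class. The scores are hypothetical. For the multi-class tables we assume per-class AUCs averaged over classes, which the text does not state explicitly.

```python
def roc_auc(pos_scores, neg_scores):
    """AUC as P(score_pos > score_neg) + 0.5 * P(tie), over all positive/negative pairs."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))

# Hypothetical softmax scores for one class (positives) versus all others (negatives).
positives = [0.97, 0.91, 0.88, 0.62]
negatives = [0.05, 0.12, 0.30, 0.70, 0.08]
print(roc_auc(positives, negatives))  # 0.95: only the pair (0.62, 0.70) is misordered
```

Because the statistic depends only on score ordering, it is threshold-free, which is why AUC summarizes performance "across different classification thresholds". The quadratic pairwise loop is for clarity only. Rank-based formulas scale better.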

In conclusion, the data in Table 12 confirm that STFT spectrograms, when paired with advanced CNN architectures, provide an excellent foundation for accurate and reliable firearm sound classification. The deeper models in particular consistently deliver high precision, recall, F1-score, and AUC, making them well suited for real-world implementation in intelligent acoustic recognition systems.

4.11. Performance Metrics for Wavelet Spectrogram with CNN Architectures

Table 13 presents the evaluation results of CNN architectures trained on Wavelet spectrogram features for firearm sound classification. The overall performance is strong across all models, with accuracy values ranging from 84.5927% (ResNet18) to 92.8849% (ResNet101). These results confirm that Wavelet-based time-frequency representations are effective for deep-learning-based audio classification, offering a good balance between time and frequency localization.
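The paper does not specify the mother wavelet or the scales used for the Wavelet spectrograms. As an illustration of how wavelets trade resolution (short analysis windows at high frequencies, long ones at low frequencies), the NumPy sketch below computes a continuous wavelet transform with a complex Morlet wavelet by direct convolution. The Morlet choice, `w0 = 6`, the frequency grid, and the name `morlet_scalogram` are all assumptions.

```python
import numpy as np

def morlet_scalogram(signal, sr, freqs, w0=6.0):
    """Magnitude of a complex-Morlet CWT evaluated at the given frequencies (Hz).

    Admissibility correction is omitted, which is negligible for w0 = 6.
    """
    out = np.empty((len(freqs), len(signal)))
    for row, f in enumerate(freqs):
        scale = w0 / (2 * np.pi * f)            # wavelet scale in seconds for centre frequency f
        half = int(np.ceil(4 * scale * sr))     # support of +/- 4 Gaussian standard deviations
        t = np.arange(-half, half + 1) / sr
        x = t / scale
        wavelet = np.pi ** -0.25 * np.exp(1j * w0 * x) * np.exp(-0.5 * x ** 2) / np.sqrt(scale)
        if wavelet.size > signal.size:
            raise ValueError("signal too short for the lowest requested frequency")
        # Correlating with the wavelet equals convolving with its time-reversed conjugate.
        out[row] = np.abs(np.convolve(signal, np.conj(wavelet[::-1]), mode="same"))
    return out

# Hypothetical check: a 1 kHz tone should respond most strongly in the 1 kHz row.
sr = 16_000
t = np.arange(sr // 4) / sr                     # 0.25 s
tone = np.sin(2 * np.pi * 1000 * t)
freqs = np.array([250, 500, 1000, 2000, 4000], dtype=float)
scal = morlet_scalogram(tone, sr, freqs)
print(freqs[np.argmax(scal[:, 1000:3000].mean(axis=1))])  # 1000.0
```

Each row uses a window whose length scales with 1/f. This produces the balance between time and frequency localization described above.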

Precision scores across the architectures were consistently high, with ResNet101 achieving the best value at 0.9406. Other models, including ResNet50 (0.9126), Inception-v3 (0.9167), and GoogLeNet (0.9011), also showed strong performance in minimizing false positives. Recall scores were similarly robust, with all models exceeding 0.82. InceptionResNetV2, ResNet50, and ResNet101 all reached a recall of 0.9400 or above, indicating excellent sensitivity to true positive instances.

F1-scores reflect the models’ balanced performance. ResNet101 stood out, with precision and recall both at 0.9406, which yields an F1-score of the same value. ResNet50 and InceptionResNetV2 followed closely with F1-scores of 0.9261 and 0.9216, respectively. Even the lowest score, GoogLeNet’s 0.8586, remained competitive, reaffirming the effectiveness of Wavelet spectrograms as input features.

Specificity values were high for all models, indicating a strong ability to correctly identify negative cases. ResNet101 achieved the highest specificity at 0.9817, and most other models also exceeded 0.96. The corresponding false positive rates remained low, ranging from 0.0183 (ResNet101) to 0.0424 (ResNet18), supporting the models’ reliability in avoiding false alarms.

AUC scores further validate the models’ classification capabilities. All models scored above 0.977, with ResNet101 reaching the highest AUC at 0.9933. This indicates excellent class separability and robustness across decision thresholds. ResNet50 and InceptionResNetV2 also performed exceptionally well, with AUC values above 0.99.

In summary, the results in Table 13 demonstrate that Wavelet spectrograms, when paired with modern CNN architectures, offer highly reliable and accurate performance for firearm sound classification. These models show a strong balance of precision, recall, and specificity, making Wavelet-based CNN classifiers suitable for real-world applications in intelligent acoustic detection systems.

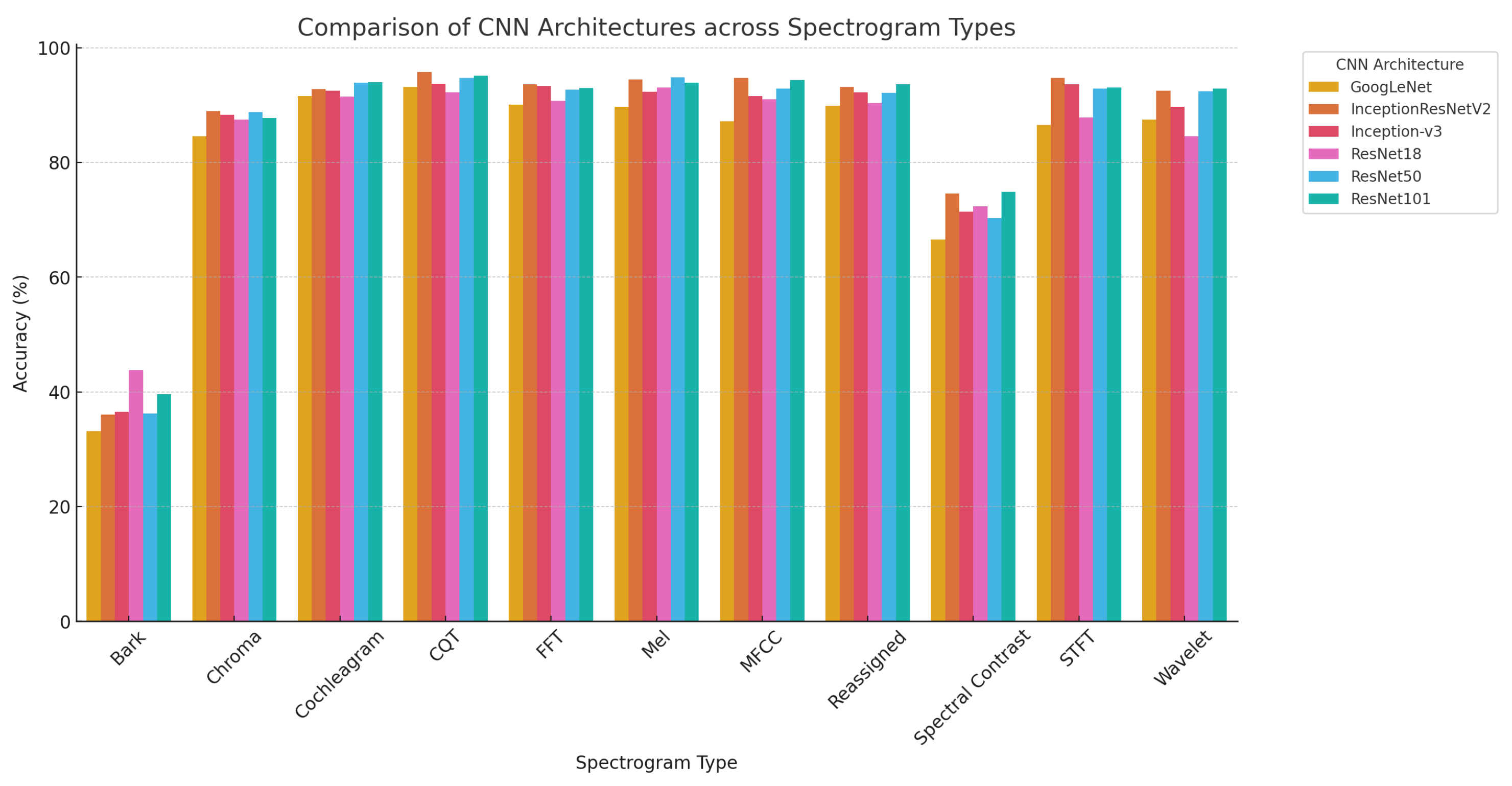

4.12. Discussion on CNN Performance Variation by Spectrogram Type

This study presents a rigorous comparative analysis of convolutional neural network (CNN) architectures applied to a comprehensive set of time-frequency spectrogram representations for firearm sound classification. The experimental findings, derived from the performance metrics detailed in Tables 6, 7, 8, 9, 10, 11, 12, and 13 and visualized in Figure 2, offer critical insights into the interplay between spectrogram encoding techniques and deep-learning model performance.

The results affirm that most image-based spectrogram representations are highly effective for firearm audio recognition, supporting their potential for deployment in intelligent acoustic surveillance systems aimed at mitigating firearm-related incidents. Among the evaluated features, the Constant-Q Transform (CQT) demonstrated superior performance, particularly when paired with deep architectures such as the hybrid InceptionResNetV2 and the residual ResNet101. These models achieved classification accuracies of 95.8124% and 95.1647%, respectively. This underscores the efficacy of combining logarithmic frequency scaling with deep residual and Inception-style networks for capturing complex spectral patterns.
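The logarithmic frequency scaling of the CQT comes from geometrically spaced bin centers, $f_k = f_{\min} \cdot 2^{k/B}$ for $B$ bins per octave. The quality factor is constant, $Q = 1/(2^{1/B} - 1)$, so the window length $N_k = Q \cdot sr / f_k$ shrinks as frequency rises. The sketch below tabulates these quantities using the classic constant-Q formulation. `f_min = 32.70` Hz (C1), `B = 12`, 84 bins, the 22.05 kHz rate, and the helper name `cqt_bins` are illustrative assumptions, not the study's settings.

```python
import math

def cqt_bins(f_min: float, bins_per_octave: int, n_bins: int, sr: float):
    """Center frequencies and window lengths (samples) of a constant-Q filterbank."""
    q = 1.0 / (2 ** (1.0 / bins_per_octave) - 1)   # constant ratio f_k / bandwidth_k
    rows = []
    for k in range(n_bins):
        f_k = f_min * 2 ** (k / bins_per_octave)     # geometric (log-spaced) center frequency
        n_k = math.ceil(q * sr / f_k)                # longer windows at low frequencies
        rows.append((f_k, n_k))
    return q, rows

q, rows = cqt_bins(f_min=32.70, bins_per_octave=12, n_bins=84, sr=22_050)
print(f"Q = {q:.2f}")                                # Q = 16.82
for f_k, n_k in rows[::12]:                          # one line per octave
    print(f"{f_k:9.2f} Hz  window {n_k:6d} samples")
```

Every octave receives the same number of bins. This gives fine frequency resolution at low frequencies and fine time resolution at high frequencies.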

Biologically inspired representations such as the Cochleagram also yielded high classification performance, with ResNet101 achieving an accuracy of 93.9964%. MFCC and Mel spectrograms, which are widely used in speech and environmental sound recognition, maintained consistently strong results, with multiple models exceeding 94% accuracy. These findings reaffirm their suitability for firearm detection tasks.
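Mel and Cochleagram features both warp the frequency axis toward auditory resolution. The sketch below illustrates this with two formulas. The first is the common HTK-style Mel formula, $m = 2595 \log_{10}(1 + f/700)$. The second is the Glasberg and Moore ERB-number scale, $21.4 \log_{10}(1 + 0.00437 f)$, often used to space gammatone filters in cochleagrams. The study does not state which variants it used, so both formulas, the frequency ranges, and the helper names are assumptions.

```python
import numpy as np

def hz_to_mel(f):
    """HTK-style Mel scale."""
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)

def hz_to_erb_number(f):
    """Glasberg & Moore ERB-number scale."""
    return 21.4 * np.log10(1.0 + 0.00437 * np.asarray(f, dtype=float))

def erb_number_to_hz(e):
    return (10.0 ** (np.asarray(e, dtype=float) / 21.4) - 1.0) / 0.00437

def auditory_centers(f_lo, f_hi, n, to_scale, from_scale):
    """n center frequencies equally spaced on an auditory scale between f_lo and f_hi."""
    return from_scale(np.linspace(to_scale(f_lo), to_scale(f_hi), n))

mel_centers = auditory_centers(0, 11_025, 8, hz_to_mel, mel_to_hz)
erb_centers = auditory_centers(50, 11_025, 8, hz_to_erb_number, erb_number_to_hz)
print(np.round(mel_centers))
print(np.round(erb_centers))
```

On both scales, the spacing in hertz widens with frequency. Filters are therefore dense at low frequencies and sparse at high ones, mirroring cochlear frequency selectivity.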

Conversely, Bark and Spectral Contrast spectrograms exhibited markedly lower performance. Bark, despite its psychoacoustic basis, reached a maximum accuracy of only 43.8075% (ResNet18), suggesting limited utility without further enhancement. Spectral Contrast, while moderately effective, lacked the feature richness required to support robust classification across CNN variants.

The comparative evaluation of CNN architectures revealed that deeper and hybrid models, notably ResNet50, ResNet101, and InceptionResNetV2, outperformed shallower networks like GoogLeNet. The consistent superiority of these models, reflected in their higher accuracy, recall, and AUC values, highlights the importance of architectural depth and modular design for modeling complex audio representations. Inception-based models also performed favorably with high-detail spectrograms such as Reassigned and Spectral Contrast, further confirming their flexibility.

These findings address the Research Objectives and Scope of Research stated earlier, demonstrating the effectiveness of CNN-based classification across diverse spectrogram inputs. By identifying the strongest feature-model combinations, the study provides a foundation for developing practical, deployable firearm detection systems.

While our study provides a thorough comparative analysis across different spectrogram types and CNN architectures, it does not currently employ explicit feature fusion strategies within the network architectures. Recent advances in object detection and scene understanding have demonstrated that multi-scale feature fusion can significantly improve performance, especially when input representations vary in scale or frequency emphasis.

Notable approaches such as Feature Pyramid Networks (FPN) [51], Zoom Text Detector [52], Asymptotic Feature Pyramid Networks (AFPN) [53], and CM-Net [54] showcase effective mechanisms for aggregating hierarchical feature maps, enhancing the model’s ability to capture fine-grained and contextual patterns.
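The aggregation idea can be sketched with the top-down pathway that FPN-style designs share. Each backbone level is projected to a common width with a 1 × 1 convolution, and each coarser map is upsampled 2× and added to the next finer one. This NumPy sketch omits FPN's final 3 × 3 smoothing convolutions and does not reproduce the different fusion schemes of AFPN, Zoom Text Detector, or CM-Net. Random weights stand in for learned ones, and the feature-map sizes are hypothetical.

```python
import numpy as np

def conv1x1(x, weight):
    """1x1 convolution on an (H, W, C_in) map with a (C_in, C_out) weight matrix."""
    return np.einsum("hwc,cd->hwd", x, weight)

def upsample2x(x):
    """Nearest-neighbor 2x upsampling of an (H, W, C) map."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def fpn_top_down(features, lateral_weights):
    """Top-down fusion of backbone maps ordered fine-to-coarse (each half the previous size)."""
    laterals = [conv1x1(f, w) for f, w in zip(features, lateral_weights)]
    fused = [laterals[-1]]                    # start from the coarsest, most semantic level
    for lat in reversed(laterals[:-1]):
        # Add upsampled coarse context to the finer lateral map.
        fused.insert(0, lat + upsample2x(fused[0]))
    return fused

rng = np.random.default_rng(0)
channels, width = [64, 128, 256], 32
feats = [rng.random((56 // 2**i, 56 // 2**i, c)) for i, c in enumerate(channels)]
weights = [rng.standard_normal((c, width)) * 0.1 for c in channels]
outs = fpn_top_down(feats, weights)
print([o.shape for o in outs])  # [(56, 56, 32), (28, 28, 32), (14, 14, 32)]
```

Every output level now mixes fine local detail with coarse context. This is the property that motivates applying such fusion to spectrogram inputs of differing resolution.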

In the context of firearm sound classification, integrating multi-spectrogram fusion—e.g., combining CQT and Mel features—may allow CNNs to extract both perceptual and pitch-related features simultaneously. This could be implemented via channel-wise concatenation, attention modules, or late-fusion ensemble strategies. Future work could investigate embedding such fusion mechanisms into the CNN backbone to further boost classification robustness, especially under real-world noisy conditions or ambiguous gunshot signatures.
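Two of the fusion options named above can be sketched in a few lines. The NumPy sketch below assumes hypothetical CQT and Mel images already resized to the same 224 × 224 grid. Early fusion stacks the two images along the channel axis, so the network's first convolution must accept six channels instead of three. Late fusion averages the class probabilities of two separately trained models. The shapes, the equal fusion weight, the logits, and the function names are illustrative assumptions.

```python
import numpy as np

def early_fusion(cqt_img: np.ndarray, mel_img: np.ndarray) -> np.ndarray:
    """Channel-wise concatenation of two (H, W, C) spectrogram images."""
    if cqt_img.shape[:2] != mel_img.shape[:2]:
        raise ValueError("both spectrogram images must share height and width")
    return np.concatenate([cqt_img, mel_img], axis=-1)

def softmax(logits: np.ndarray) -> np.ndarray:
    z = logits - logits.max(axis=-1, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def late_fusion(logits_cqt: np.ndarray, logits_mel: np.ndarray, w: float = 0.5) -> np.ndarray:
    """Weighted average of per-model class probabilities; returns predicted class indices."""
    probs = w * softmax(logits_cqt) + (1.0 - w) * softmax(logits_mel)
    return probs.argmax(axis=-1)

rng = np.random.default_rng(0)
fused = early_fusion(rng.random((224, 224, 3)), rng.random((224, 224, 3)))
print(fused.shape)  # (224, 224, 6)

# Hypothetical logits for two clips and three firearm classes from each model.
logits_cqt = np.array([[2.0, 0.5, 0.1], [0.2, 0.3, 1.5]])
logits_mel = np.array([[1.0, 1.2, 0.1], [0.1, 2.5, 0.4]])
print(late_fusion(logits_cqt, logits_mel))  # [0 1]
```

Early fusion lets the network learn cross-feature interactions, but a six-channel input no longer matches the first layer of an ImageNet-pretrained model without adaptation. Late fusion keeps each pretrained backbone intact at the cost of running two models.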

5. Conclusions

This study proposed a deep-learning framework for firearm sound classification based on image-based time-frequency feature extraction. By transforming raw gunshot audio recordings into 12 types of spectrograms—converted into RGB images—the system enables the application of powerful computer vision techniques to acoustic data. These spectrograms serve as two-dimensional feature maps, allowing pretrained convolutional neural networks (CNNs), originally designed for image recognition, to effectively learn firearm-specific acoustic patterns.
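The spectrogram-to-image step can be sketched as min-max normalization, resizing, and expansion to three channels. This is a simplified stand-in. The study itself used color maps (the Future Work paragraph mentions Jet and Viridis), whereas this sketch replicates a grayscale image across R, G, and B. The 224 × 224 target, nearest-neighbor resizing, the vertical flip, and the name `spectrogram_to_rgb` are assumptions. The 224 × 224 size is the usual ResNet and GoogLeNet input, while Inception-v3 and InceptionResNetV2 typically take 299 × 299.

```python
import numpy as np

def spectrogram_to_rgb(spec_db: np.ndarray, size=(224, 224)) -> np.ndarray:
    """Map a 2-D dB spectrogram to a uint8 (H, W, 3) image for an ImageNet-style CNN."""
    lo, hi = spec_db.min(), spec_db.max()
    norm = (spec_db - lo) / (hi - lo) if hi > lo else np.zeros_like(spec_db, dtype=float)
    norm = norm[::-1, :]                       # put low frequencies at the bottom of the image
    # Nearest-neighbor resize by index sampling (no external imaging library needed).
    rows = np.linspace(0, norm.shape[0] - 1, size[0]).round().astype(int)
    cols = np.linspace(0, norm.shape[1] - 1, size[1]).round().astype(int)
    resized = norm[np.ix_(rows, cols)]
    gray = (resized * 255).astype(np.uint8)
    return np.repeat(gray[:, :, None], 3, axis=2)   # grayscale copied into R, G and B

rng = np.random.default_rng(1)
img = spectrogram_to_rgb(rng.normal(-40, 10, size=(513, 83)))
print(img.shape, img.dtype)  # (224, 224, 3) uint8
```

Swapping the last line for a color-map lookup would produce the RGB encodings the study describes. Comparing both variants is exactly the ablation proposed in the Future Work paragraph.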

Among the spectrogram types evaluated, perceptually motivated representations such as the Constant-Q Transform (CQT), Mel, and Cochleagram consistently delivered superior classification performance. Combined with deep and hybrid CNN architectures, particularly ResNet101 and InceptionResNetV2, the best feature-model combinations achieved accuracies above 95% (CQT with InceptionResNetV2 and ResNet101), F1-scores exceeding 0.94, and AUC values approaching 0.996. These results highlight the importance of both feature representation and model architecture in building robust and accurate gunshot classification systems.

Importantly, this work demonstrates that transforming audio signals into visual representations is not merely a preprocessing step but a powerful strategy for feature extraction. This paradigm effectively bridges the domains of audio signal processing and computer vision, supporting the development of intelligent acoustic surveillance and forensic analysis tools.

Future Work. Future research will focus on enhancing real-time classification by integrating the proposed pipeline into edge computing platforms, enabling low-latency alerts in smart surveillance systems. We also aim to explore sequential modeling approaches to capture temporal patterns in bursts of gunfire and to distinguish gunshots from acoustically similar events such as fireworks or vehicle backfires. Expanding the dataset to include more firearm types, varied recording conditions, and background noise should further improve model generalization.

In addition, several methodological improvements will be prioritized in response to limitations identified in this study. First, we plan to conduct an ablation study to validate the impact of different colormap choices by comparing RGB (Jet/Viridis), grayscale, and multi-channel spectrogram encodings. Second, we aim to perform a sensitivity analysis on spectrogram parameters, such as hop length, window size, FFT size, and Mel filterbank configuration, to assess their influence on model performance and reproducibility. Third, future work will benchmark the proposed CNN-based pipeline against alternative learning paradigms, including raw waveform models (e.g., 1D CNNs), sequential models (e.g., LSTM/GRU), and attention-based models such as Audio Spectrogram Transformers (AST), Perceiver AR, and HTS-AT. We also intend to evaluate audio-specific front ends and architectures, such as LEAF and HEARNet, to assess their suitability for firearm classification tasks.
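Part of what such a sensitivity analysis would probe is plain arithmetic. The FFT size fixes the frequency bin spacing ($\Delta f = sr / n_{\mathrm{fft}}$), and the hop length fixes the frame step ($\Delta t = \mathrm{hop} / sr$) and the number of frames. The sketch below tabulates these quantities for a few settings. The 44.1 kHz rate, the 2 s clip, the parameter grid, and the helper name `stft_geometry` are illustrative assumptions.

```python
def stft_geometry(sr: int, n_samples: int, n_fft: int, hop: int) -> dict:
    """Resolution and shape of an unpadded STFT whose window length equals n_fft."""
    return {
        "freq_bins": n_fft // 2 + 1,
        "delta_f_hz": sr / n_fft,              # spacing between FFT bins
        "hop_ms": 1000 * hop / sr,             # time step between frames
        "window_ms": 1000 * n_fft / sr,        # duration each frame integrates over
        "frames": 1 + (n_samples - n_fft) // hop,
    }

sr, n_samples = 44_100, 2 * 44_100            # hypothetical 2 s clip
for n_fft, hop in [(512, 128), (1024, 256), (2048, 512)]:
    g = stft_geometry(sr, n_samples, n_fft, hop)
    print(n_fft, hop, g)
```

Doubling `n_fft` halves the bin spacing but doubles the time span each frame averages over. This can smear the sharp onset of a gunshot. Libraries that center frames with padding (for example librosa's default `center=True`) yield `1 + n_samples // hop` frames instead.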

Furthermore, we plan to investigate lightweight CNN and transformer-based architectures, as well as self-supervised learning methods, to support deployment in resource-constrained environments. Although high-performing models such as ResNet101 are effective, their higher inference time and computational demand highlight the need for lightweight, optimized models for real-time surveillance applications. Finally, to promote scientific transparency, we intend to apply statistical tests (e.g., ANOVA, t-tests) and publicly release the spectrogram generation pipeline and training code. While our results are promising, future studies should also explore how these models perform in uncontrolled, noise-heavy urban environments to ensure practical applicability in real-world scenarios.
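As an illustration of the statistical testing envisaged, a paired t-test compares two models evaluated on the same cross-validation folds. The sketch below computes the paired statistic $t = \bar{d} / (s_d / \sqrt{n})$ with $n - 1$ degrees of freedom. The fold accuracies and the helper name `paired_t` are hypothetical. Obtaining a p-value additionally requires the t distribution, for example via `scipy.stats.ttest_rel`.

```python
import math
from statistics import mean, stdev

def paired_t(scores_a, scores_b):
    """Paired t statistic and degrees of freedom for per-fold scores of two models."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equal-length lists with at least two folds")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    sd = stdev(diffs)                          # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("identical fold differences: t statistic is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1

# Hypothetical 5-fold accuracies (%) for two spectrogram/CNN combinations.
model_a = [95.1, 95.9, 96.2, 95.4, 96.0]
model_b = [94.8, 95.0, 95.7, 95.1, 95.3]
t_stat, dof = paired_t(model_a, model_b)
print(f"t = {t_stat:.3f}, df = {dof}")
```

Pairing by fold removes the variance shared by both models on the same data split. This makes the test more sensitive than comparing the two sets of accuracies independently.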