Digital Image Processing and Convolutional Neural Network Applied to Detect Mitral Stenosis in Echocardiograms: Clinical Decision Support

Abstract

1. Introduction

2. Materials and Methods

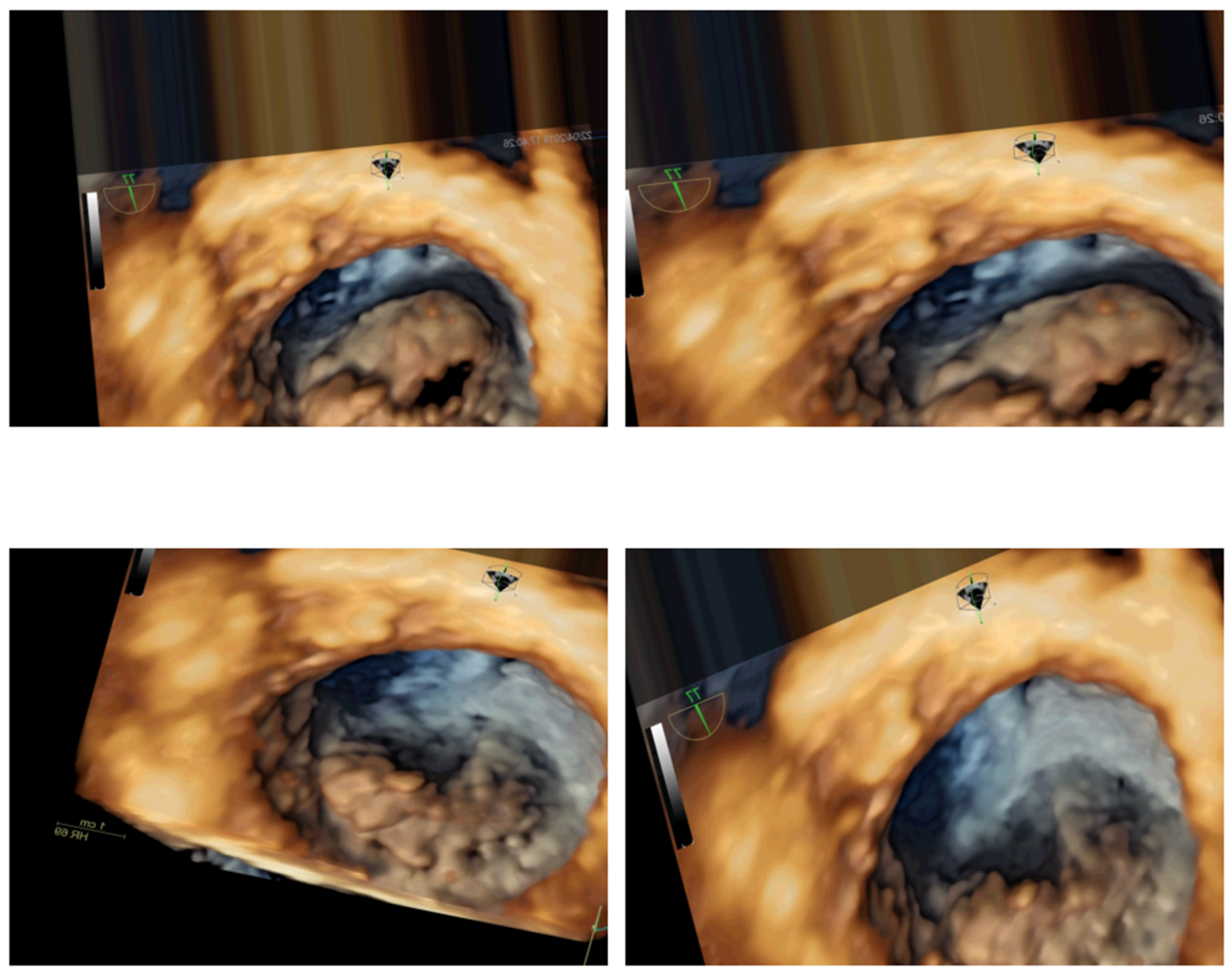

2.1. Data Acquisition

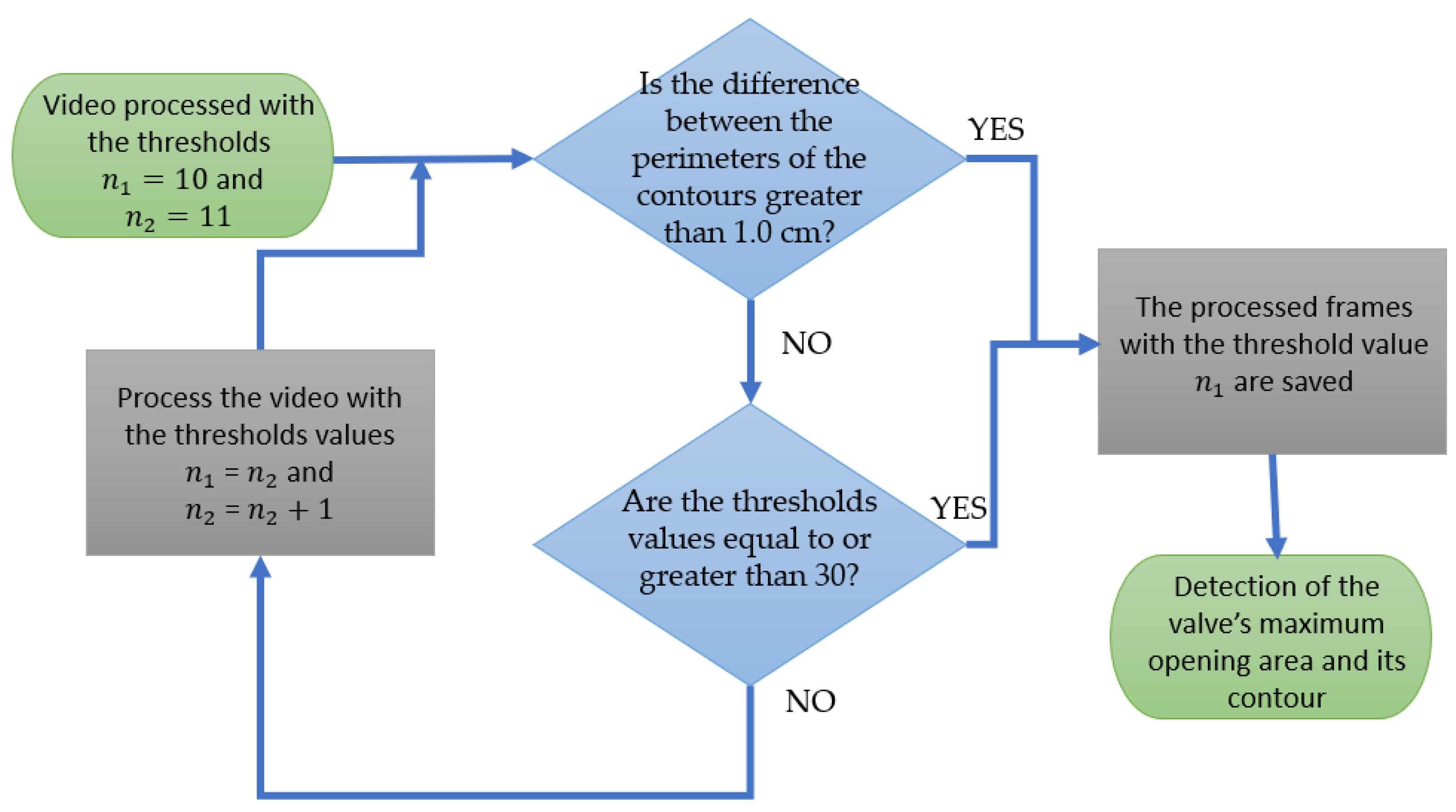

2.2. Digital Image Processing

2.3. Augmentation

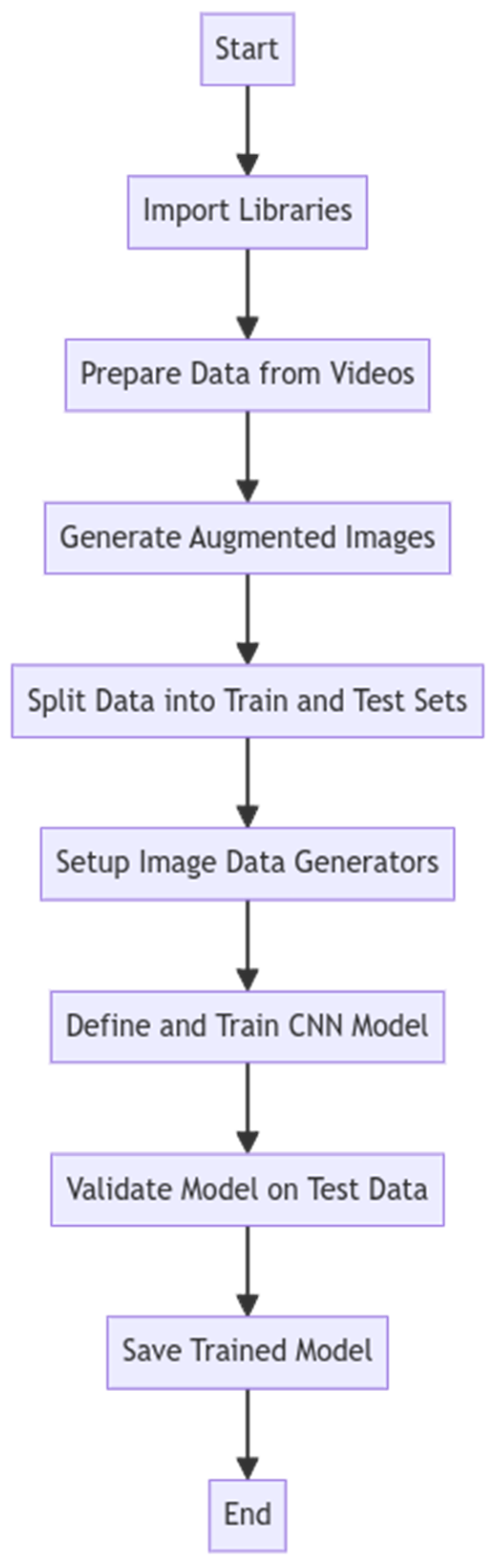

2.4. Convolutional Neural Network

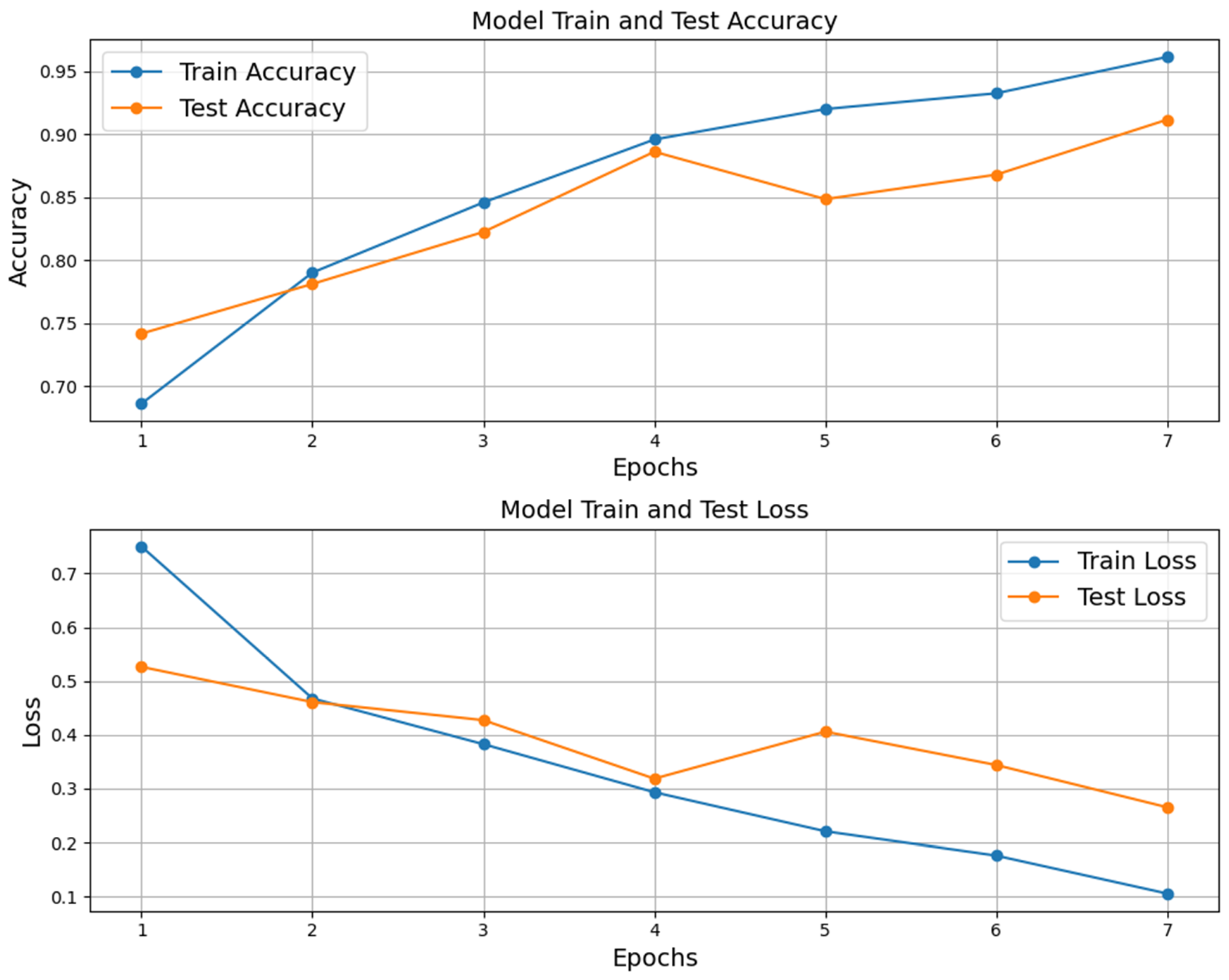

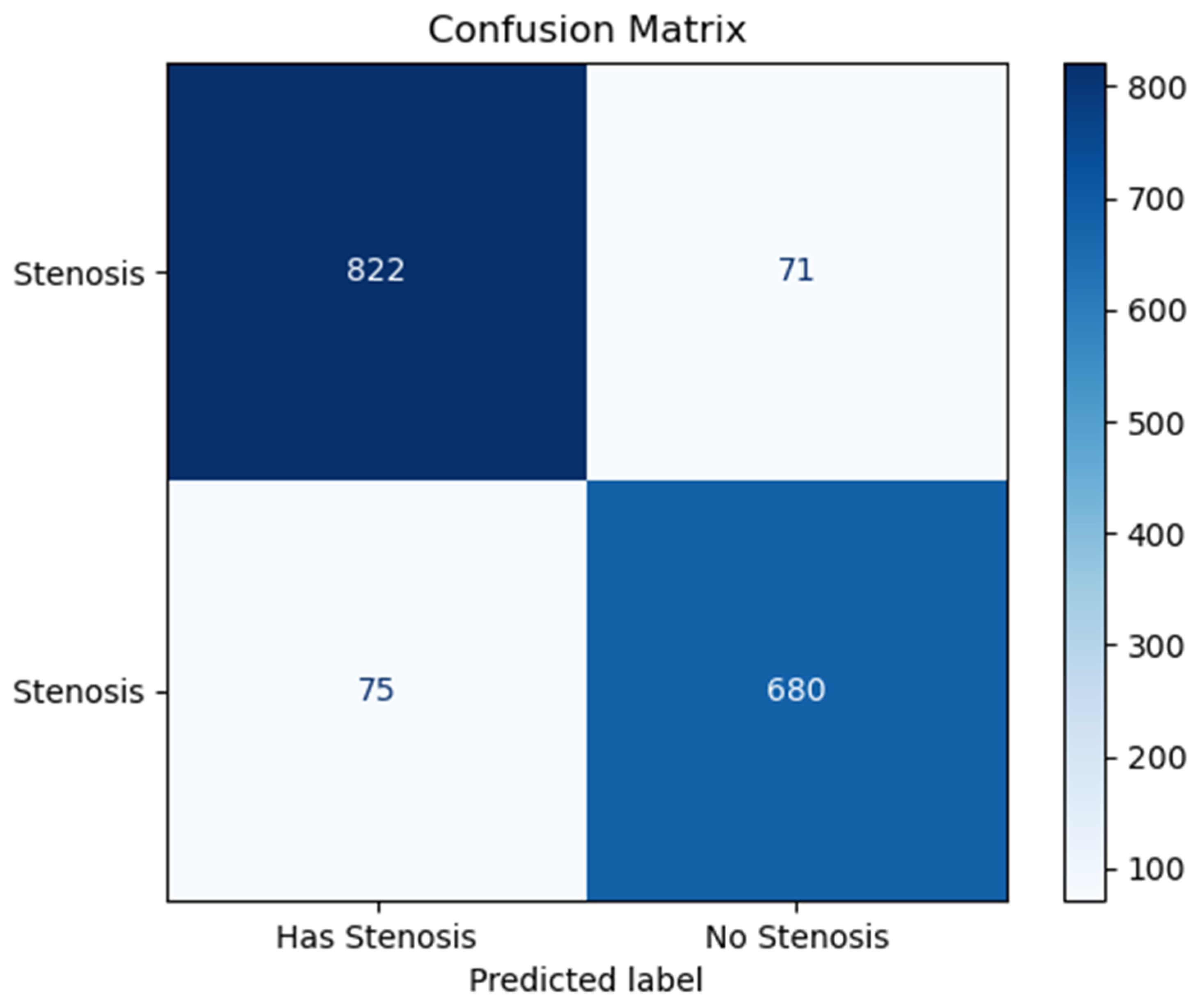

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Echocardiogram Exam Video | Area of Maximum Mitral Valve Opening Calculated by DIP (cm²) | Classification |
|---|---|---|
| 1 | 4.2 | No Stenosis |
| 2 | 5.3 | No Stenosis |
| 3 | 5.1 | No Stenosis |
| 4 | 4.0 | No Stenosis |
| 5 | 5.5 | No Stenosis |
| 6 | 4.9 | No Stenosis |
| 7 | 7.4 | No Stenosis |
| 8 | 4.5 | No Stenosis |
| 9 | 2.7 | Mild Stenosis |
| 10 | 1.6 | Mild Stenosis |
| 11 | 1.6 | Mild Stenosis |
| 12 | 1.8 | Mild Stenosis |
| 13 | 3.7 | Mild Stenosis |
| 14 | 2.6 | Mild Stenosis |
| 15 | 3.2 | Mild Stenosis |
| 16 | 1.6 | Mild Stenosis |
| 17 | 1.0 | Moderate Stenosis |
| 18 | 1.1 | Moderate Stenosis |
| 19 | 1.0 | Moderate Stenosis |
| 20 | 1.1 | Moderate Stenosis |
| 21 | 0.7 | Severe Stenosis |
| 22 | 0.9 | Severe Stenosis |
| 23 | 0.5 | Severe Stenosis |
| 24 | 0.4 | Severe Stenosis |
| 25 | 0.6 | Severe Stenosis |
| 26 | 0.4 | Severe Stenosis |
| 27 | 0.7 | Severe Stenosis |
| 28 | 0.6 | Severe Stenosis |
| 29 | 0.7 | Severe Stenosis |
| 30 | 0.7 | Severe Stenosis |
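The grading in the table above can be illustrated with a minimal Python sketch. The cut-off values below are assumptions: this section does not state them, and they were chosen only because they reproduce every row of the table (they also resemble commonly cited echocardiographic ranges). The function name `classify_mitral_area` and the constant names are hypothetical and are not taken from the authors' DIP pipeline.

```python
# Assumed cut-offs in cm^2 (not stated in this section; chosen to match the table).
SEVERE_BELOW = 1.0        # area < 1.0 is graded severe
MODERATE_UP_TO = 1.5      # 1.0 <= area <= 1.5 is graded moderate
NO_STENOSIS_FROM = 4.0    # area >= 4.0 is graded as no stenosis


def classify_mitral_area(area_cm2: float) -> str:
    """Map a maximum mitral valve opening area (cm^2) to a severity class."""
    if area_cm2 <= 0:
        raise ValueError("area must be a positive value in cm^2")
    if area_cm2 < SEVERE_BELOW:
        return "Severe Stenosis"
    if area_cm2 <= MODERATE_UP_TO:
        return "Moderate Stenosis"
    if area_cm2 < NO_STENOSIS_FROM:
        return "Mild Stenosis"
    return "No Stenosis"


# A few (video, area) pairs copied from the table above.
examples = [(4, 4.0), (13, 3.7), (17, 1.0), (22, 0.9)]
for video, area in examples:
    print(f"video {video}: {area} cm^2 -> {classify_mitral_area(area)}")
```

Under these assumed thresholds the sketch agrees with all 30 rows of the table; different cut-offs would be needed if the study used another grading scheme.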

| DIP Diagnosis | CNN Diagnosis |
|---|---|
| No Stenosis | No Stenosis |
| Mild Stenosis | Has Stenosis |
| Moderate Stenosis | Has Stenosis |
| Severe Stenosis | Has Stenosis |
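The correspondence between the four DIP classes and the two CNN labels can be sketched as a simple lookup. This is an illustrative sketch only: the names `DIP_TO_CNN_LABEL`, `to_cnn_label`, and `count_cnn_labels` are hypothetical, and the per-class video counts in the usage lines are read from the DIP classification table earlier in this section.

```python
from collections import Counter
from typing import Iterable

# Mapping taken from the DIP/CNN table above: every stenosis grade
# collapses into the single positive CNN label.
DIP_TO_CNN_LABEL = {
    "No Stenosis": "No Stenosis",
    "Mild Stenosis": "Has Stenosis",
    "Moderate Stenosis": "Has Stenosis",
    "Severe Stenosis": "Has Stenosis",
}


def to_cnn_label(dip_class: str) -> str:
    """Return the binary CNN label for a four-level DIP class."""
    try:
        return DIP_TO_CNN_LABEL[dip_class]
    except KeyError:
        raise ValueError(f"unknown DIP class: {dip_class!r}") from None


def count_cnn_labels(dip_classes: Iterable[str]) -> Counter:
    """Count how many items fall under each binary CNN label."""
    return Counter(to_cnn_label(c) for c in dip_classes)


# Class sizes as listed in the DIP table: 8 none, 8 mild, 4 moderate, 10 severe.
dip_classes = (
    ["No Stenosis"] * 8
    + ["Mild Stenosis"] * 8
    + ["Moderate Stenosis"] * 4
    + ["Severe Stenosis"] * 10
)
print(count_cnn_labels(dip_classes))
```

Keeping the mapping in a single dictionary makes the label merge explicit and raises an error on any class name that is not one of the four DIP grades.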

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Barros Filho, G.d.F.; Firmino, J.F.d.M.; Solha, I.; Medeiros, E.F.d.; Felix, A.d.S.; Lima Júnior, J.C.d.; Melo, M.D.T.d.; Rodrigues, M.C. Digital Image Processing and Convolutional Neural Network Applied to Detect Mitral Stenosis in Echocardiograms: Clinical Decision Support. J. Imaging 2025, 11, 272. https://doi.org/10.3390/jimaging11080272
