Abstract

Large-scale models trained on extensive datasets have become the standard due to their strong generalizability across diverse tasks. In-context learning (ICL), widely used in natural language processing, leverages these models by providing task-specific prompts without modifying their parameters. This paradigm is increasingly being adapted for computer vision, where models receive an input–output image pair, known as an in-context pair, alongside a query image to illustrate the desired output. However, the success of visual ICL largely hinges on the quality of these prompts. To address this, we propose Enhanced Instruct Me More (E-InMeMo), a novel approach that incorporates learnable perturbations into in-context pairs to optimize prompting. Through extensive experiments on standard vision tasks, E-InMeMo demonstrates superior performance over existing state-of-the-art methods. Notably, it improves mIoU scores by 7.99 for foreground segmentation and by 17.04 for single object detection when compared to the baseline without learnable prompts. These results highlight E-InMeMo as a lightweight yet effective strategy for enhancing visual ICL.

1. Introduction

Recent years have witnessed significant progress in large-scale models, which have shown impressive generalization capabilities and strong potential across various downstream tasks [,,]. Representative models like GPT [] and Gemini [] exemplify the effectiveness of in-context learning (ICL) in Natural Language Processing (NLP) [,,,,,,,,]. Through ICL, models can tackle novel tasks by leveraging prompt-based inputs to infer predictions on previously unseen data, without requiring updates to their parameters, thus substantially reducing training costs. Despite its promise as a foundational paradigm for deploying large-scale models in real-world scenarios, the exploration of ICL in computer vision applications is still at a relatively early stage [,,].

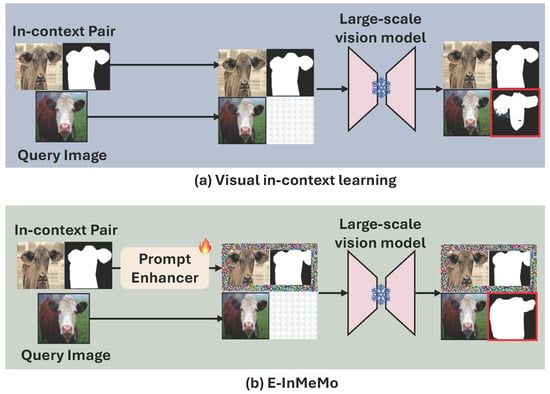

MAE-VQGAN [] marks a groundbreaking development by showcasing the potential of applying ICL to a range of computer vision tasks, such as image segmentation, inpainting, and style transfer. This method introduces visual prompts arranged in a four-cell grid canvas, as shown in Figure 1a, which includes a query image alongside an input–output example known as an in-context pair. These in-context pairs illustrate the task at hand by pairing an input image with its corresponding label or output image. Prior work [] has emphasized the importance of such pairs in effectively guiding the model toward producing accurate results. In visual ICL, it is particularly important that the in-context image closely resembles the query image in terms of semantics, perspective, and other attributes [], as illustrated in Figure 2. Consequently, selecting appropriate in-context pairs becomes a critical component of the overall process.

Figure 1.

A schematic comparison between standard visual ICL and E-InMeMo. (a) Visual ICL constructs a four-cell grid canvas composed of a query image, an in-context pair, and an empty cell (bottom-right) where the model generates the prediction. This canvas serves as the prompt, and the output (marked in the red box) is produced by passing it through a frozen large-scale vision model. (b) E-InMeMo enhances this paradigm by introducing a learnable prompt, a trainable perturbation designed to adjust the distribution of the in-context pair, thereby improving task guidance and prediction accuracy.

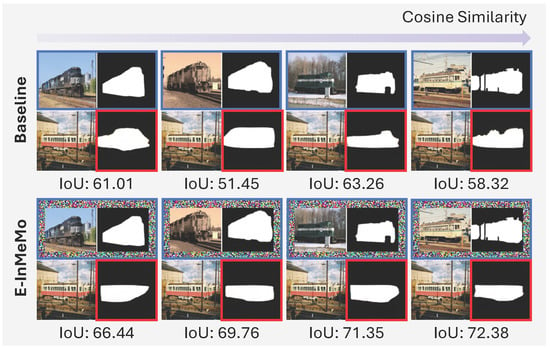

Figure 2.

Performance comparison of visual ICL on a foreground segmentation task. Blue boxes indicate in-context pairs, while red boxes represent the predicted label images (query images are unmarked). The quality and similarity of the in-context pair significantly influence the prediction results. In the absence of a learnable prompt, performance is highly dependent on the semantic closeness between the query and in-context images. In contrast, E-InMeMo, with its learnable prompt, produces more stable and accurate predictions across varying input conditions.

Although visual ICL methods have shown promising results [,], the in-context pairs retrieved are not always optimal. This is often due to limitations in the available retrieval dataset and a mismatch between the prompt content and the latent knowledge embedded within large-scale vision models. This raises a critical question: Is it possible to refine or transform the prompt to better guide the model for downstream visual ICL tasks?

The concept of learnable prompting [,,] provides a promising solution. (In [], modifying images with learnable pixel-level changes is called visual prompting. Since our approach also uses visual prompts consisting of a query and an in-context example, we refer to these pixel-level modifications as a learnable prompt.) It adjusts the model’s input without altering the model parameters, enabling adaptation to various tasks. This approach, a form of parameter-efficient fine-tuning (PEFT) [,,,], is especially suitable for large models, where conventional fine-tuning can be prohibitively expensive due to their scale [,,,]. Learnable prompts have demonstrated a remarkable ability to adapt, even in cases with significant semantic or distributional differences [,,].

In this work, we introduce a novel visual ICL method called Enhanced Instruct Me More (E-InMeMo), which leverages learnable visual prompts to instruct large vision models. After retrieving an initial in-context pair, we refine it using a dedicated prompt enhancer inspired by []. As with previous visual ICL approaches, the refined in-context pair is combined with the query into a unified image canvas, which is then processed by a pre-trained large-scale vision model []. The learnable prompt is trained in a supervised fashion to produce the correct label image for the query.

Contributions. E-InMeMo offers a PEFT-based solution that enables efficient training while achieving high performance. By customizing a learnable prompt for a given task, the framework reshapes the prompt distribution to be more aligned with the task objectives, thereby improving both the encoding and decoding stages within the large-scale model. Our experiments establish new state-of-the-art (SOTA) results in tasks such as foreground segmentation and single object detection. Although E-InMeMo requires training, it substantially mitigates the limitations posed by suboptimal visual prompts.

This paper extends our earlier conference work published at WACV 2024 []. The enhancements in this version include the following:

- We introduce a new strategy that applies the learnable prompt directly to the in-context pair, which yields strong robustness.

- We remove the need for resizing the in-context pairs, thus reducing the number of trainable parameters from 69,840 to 27,540.

- We replace CLIP [] with DINOv2 [] for feature extraction, improving representation quality.

- We validate E-InMeMo’s consistent outperformance across diverse datasets, including natural and medical images. Furthermore, we present comprehensive experiments to highlight E-InMeMo’s robustness and generalizability.

2. Related Work

2.1. In-Context Learning

In-Context Learning (ICL) has emerged as a powerful framework in Natural Language Processing (NLP), particularly with the advent of large language models (LLMs) such as GPT-3 []. By presenting several input–output examples pertaining to a specific task, this paradigm enables autoregressive models to perform inference without adjusting their parameters []. ICL has gained significant traction due to its various strengths [], including an intuitive and interpretable user interface [,,], its resemblance to human-like reasoning [], and its suitability for plug-and-play deployment as a service []. It has opened doors to new possibilities across different areas [,,], such as solving complex reasoning tasks [], question answering [,], and compositional generalization [,].

In the computer vision field, ICL has sparked growing interest [,,,,]. Unlike NLP, where instructions are text-based, visual ICL presents a unique challenge: how to clearly define the task for the model using visual inputs. Bar et al. [] introduced a novel method that combines an input–output image pair with a query image to specify the task. This setup, presented as a single image, reframes the task as a form of image inpainting. Subsequent efforts have built upon this setting, including Painter [], which adopts the MAE architecture instead of VQGAN, and SegGPT [], which generalizes the method to image and video segmentation. LVM [] further pushes the boundaries by tokenizing visual inputs and applying an autoregressive learning strategy akin to that used in LLMs.

Beyond developing new architectures, recent studies underscore the critical role of in-context image quality in determining performance [,]. Zhang et al. [] proposed a supervised approach for prompt selection, emphasizing that both the number and the relevance of in-context pairs significantly affect results. Similarly, Sun et al. [] explored pixel-level retrieval methods for selecting in-context pairs, experimenting with multiple layout configurations to improve ICL effectiveness.

While existing research has firmly established the importance of in-context pairs for downstream tasks [,,], little attention has been paid to altering or enhancing these pairs to boost visual ICL performance. Our study seeks to fill this gap by introducing learnable perturbations to refine in-context pairs and ultimately enhance downstream outcomes.

2.2. Learnable Prompting

In the NLP field, prompting is widely used to adapt LLMs for specific downstream applications []. For example, while GPT-3 [] demonstrates strong generalization across many tasks, it often relies on carefully engineered prompts, an approach that can be labor-intensive. Additionally, fully fine-tuning these massive models incurs high computational costs. Parameter-efficient fine-tuning (PEFT) addresses these challenges by modifying only a small fraction of the model parameters or by adding auxiliary components, such as adapters [,,] or learned prompt vectors [,], achieving performance comparable to full fine-tuning with far less overhead.

Motivated by the success of PEFT in NLP, researchers have extended these strategies to computer vision [,,,] and vision-language models [,,,]. A common approach involves augmenting the input image with learnable prompt embeddings or perturbations. Several recent methods have demonstrated how trainable modifications to visual inputs can enhance model performance [,,]. For instance, Bahng et al. [] proposed adding learnable, input-agnostic pixel-level prompts to input images to better adapt large, frozen models like CLIP [] to new tasks. ILM-VP [] extended this by introducing task-aware prompts that incorporate label mapping for improved transfer learning. Since this method involves optimizing only a small number of parameters relative to the full model, it offers a practical and scalable solution—particularly well-suited for integration into visual ICL pipelines. In this work, we explore the integration of visual prompting to improve task performance in vision models using in-context learning.

3. Method

3.1. Preliminary: MAE-VQGAN

MAE-VQGAN is a pioneering effort that first introduced visual ICL via inpainting []. Consider a query image denoted by $x_q$, alongside an example input $x$ and its corresponding ground-truth output $y$ (e.g., a segmentation result). Together, these components form what is referred to as the prompt. The prompt is created by concatenating $x_q$, $x$, and $y$ into a single canvas $c = [x, y, x_q, r]$, where $[\cdot]$ represents image concatenation into a four-cell grid canvas. (Following [], we add a two-pixel gap between images.) The region $r$ remains blank and is reserved for the task output $\hat{y}_q$. (In [], the arrangement of $x_q$, $x$, and $y$ is flexible. A mask is used to specify the region $r$ to be filled. For brevity, these details are omitted here.) The region $r$ is subdivided into $L$ patch regions, forming a patch set $\{r_l\}_{l=1}^{L}$. MAE-VQGAN computes the assignment score vector $s_l$ for the patch region $r_l$ as follows:

$$s_l = g_l(c) \in \mathbb{R}^{|\mathcal{V}|}, \tag{1}$$

where $\mathcal{V}$ represents the pre-trained VQGAN codebook, and $g_l$ is the MAE function that outputs the assignment scores of $r_l$, with each element $s_{lv}$ indicating the score for visual token $v \in \mathcal{V}$. These scores can be interpreted as the probability that the visual token assigned to $l$, denoted by $z_l$, is $v$. After determining the appropriate visual token for $l$ as $\hat{z}_l = \arg\max_{v} s_{lv}$, the VQGAN decoder $f$ generates the prediction $\hat{y}_q$, expressed as follows:

$$\hat{y}_q = f(\hat{z}), \quad \hat{z} = (\hat{z}_1, \dots, \hat{z}_L). \tag{2}$$

3.2. Overview of E-InMeMo

Let $\mathcal{D} = \{(x, y)\}$ represent a dataset consisting of input images $x$ paired with their corresponding label (or output) images $y$, designated for a specific downstream task. Given this dataset and a query image $x_q$, the goal is to predict the output $\hat{y}_q$ for the query.

An outline of the proposed E-InMeMo framework is illustrated in Figure 3. First, the query image $x_q$ is passed through a retriever, which selects a similar in-context pair $(x, y)$ from the dataset $\mathcal{D}$. This selected pair is combined with the query image into a grid format, forming what we refer to as a canvas. The canvas is expressed as $c = [x, y, x_q, r]$, where $r$ denotes an empty cell.
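The canvas layout described above can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: it assumes single-channel images stored as nested lists, takes the 111 × 111 cell size and the two-pixel gap from the implementation details reported later in the paper, and uses the hypothetical helper names `make_canvas` and `constant_image`.

```python
def make_canvas(x, y, x_q, cell=111, gap=2, fill=0):
    """Place x (top-left), y (top-right), and x_q (bottom-left) on a square grid.

    Each image is a single-channel list of rows of size cell x cell. The
    bottom-right cell r keeps the fill value so that a model could inpaint it.
    """
    side = 2 * cell + gap  # 2 * 111 + 2 = 224 with the default values
    canvas = [[fill] * side for _ in range(side)]
    second = cell + gap  # first row/column index of the second cell
    placements = ((x, (0, 0)), (y, (0, second)), (x_q, (second, 0)))
    for image, (row0, col0) in placements:
        for i in range(cell):
            # Copy one image row into the matching slice of the canvas row.
            canvas[row0 + i][col0:col0 + cell] = image[i][:cell]
    return canvas


def constant_image(value, cell=111):
    """Toy image filled with a single value (illustration only)."""
    return [[value] * cell for _ in range(cell)]


canvas = make_canvas(constant_image(1), constant_image(2), constant_image(3))
print(len(canvas), len(canvas[0]))  # 224 224
```

With these defaults the canvas is 224 × 224, which matches the usual input resolution of MAE-style vision transformers; the empty cell is simply left at the fill value in this sketch.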

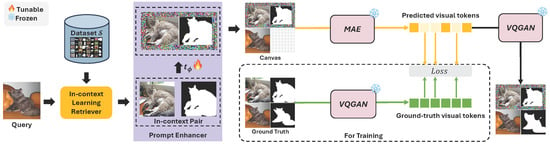

Figure 3.

Overview of the proposed E-InMeMo framework. The process begins with the In-context Learning Retriever, which selects an in-context pair from the dataset for a given query image. A Prompt Enhancer then applies learnable perturbations to the in-context pair, producing an enhanced version. These enhanced in-context images, along with the query and an empty cell (bottom-right), are arranged into a four-cell grid canvas. This canvas is passed through a frozen MAE, which outputs predicted visual tokens corresponding to the empty cell. For visualization, the predicted tokens are decoded into an image using the decoder of VQGAN. During training, a ground-truth canvas, comprising the original in-context pair and the true label for the query, is encoded using a pre-trained VQGAN encoder to produce ground-truth tokens. A cross-entropy loss is computed on the empty cell to update only the parameters of the Prompt Enhancer, $\phi$.

The prompt enhancer, which integrates the learnable prompt, then refines the in-context pair $(x, y)$ to produce the enhanced pair $(x', y')$. This results in an enhanced version of the canvas: $c' = [x', y', x_q, r]$. This enhanced canvas is then input to a frozen, pre-trained large-scale vision model $g$, which outputs a sequence of visual tokens $\hat{z}$. Simultaneously, the complete ground-truth canvas is encoded using a VQGAN encoder to generate the reference visual tokens $z$.

To optimize the learnable prompt, we compute a cross-entropy loss over the visual tokens corresponding to the empty cell location. The predicted tokens $\hat{z}$ encode the model’s prediction, which is decoded by a function $f$ that maps tokens back into the pixel domain, producing the final output $\hat{y}_q$.

At the heart of E-InMeMo lies the prompt enhancer, denoted as $t_\phi$, parameterized by a learnable set $\phi$. This component is trained on the dataset $\mathcal{D}$ to generate instructive and task-specific transformations, ensuring effective guidance even in scenarios where the retrieved in-context pair is suboptimal.

3.3. In-Context Learning Retriever

Selecting a high-quality in-context pair that aligns well with a given query image is essential for enhancing ICL performance []. To improve this selection process, we advocate for leveraging rich and comprehensive visual features during retrieval. To this end, we follow the Feature-Map-Level Retrieval (FMLR) [] strategy.

In particular, unlike previous InMeMo work, we employ a pre-trained visual encoder from DINOv2 [] to extract $\ell_2$-normalized visual features for both the query image $x_q$ and every candidate image in the dataset $\mathcal{D}$. Given that our downstream tasks are entirely based on image features, and considering the differing pre-training paradigms of CLIP and DINOv2, it is reasonable to adopt DINOv2, which is pre-trained on large-scale image data, as our feature extractor. Unlike methods that rely on global features, FMLR preserves the full feature map, flattens it into a one-dimensional representation, and uses it directly for similarity comparison.

Among all available in-context candidates in $\mathcal{D}$, the one whose visual representation is most similar to that of the query is selected as the in-context pair $(x, y)$. Formally, the selection is given by

$$(x, y) = \underset{(\tilde{x}, \tilde{y}) \in \mathcal{D}}{\arg\max}\; h(\tilde{x})^{\top} h(x_q), \tag{3}$$

where $h(\cdot)$ denotes the extracted and normalized visual features.
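The retrieval step can be illustrated with a small, dependency-free sketch. It assumes that similarity is measured as the dot product of flattened, L2-normalized feature maps (i.e., cosine similarity), which is one natural reading of the description above; the tiny feature maps and the function names `flatten`, `l2_normalize`, and `retrieve` are hypothetical stand-ins for real DINOv2 outputs and for the authors' code.

```python
import math


def flatten(feature_map):
    """Flatten a [positions][channels] feature map into a single vector."""
    return [value for row in feature_map for value in row]


def l2_normalize(vector):
    """Scale a vector to unit L2 norm (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(value * value for value in vector))
    return [value / norm for value in vector] if norm > 0 else list(vector)


def retrieve(query_map, candidate_maps):
    """Return the index of the candidate most similar to the query."""
    query = l2_normalize(flatten(query_map))
    best_index, best_similarity = -1, float("-inf")
    for index, candidate_map in enumerate(candidate_maps):
        candidate = l2_normalize(flatten(candidate_map))
        similarity = sum(a * b for a, b in zip(query, candidate))
        if similarity > best_similarity:
            best_index, best_similarity = index, similarity
    return best_index


# Toy 2-position, 2-channel feature maps (illustrative values only).
query_features = [[1.0, 0.0], [0.0, 1.0]]
candidates = [
    [[0.0, 1.0], [1.0, 0.0]],  # orthogonal to the query
    [[2.0, 0.0], [0.0, 2.0]],  # same direction as the query
    [[1.0, 1.0], [1.0, 1.0]],  # partially aligned
]
print(retrieve(query_features, candidates))  # 1
```

Because the whole feature map is flattened before comparison, spatial layout contributes to the similarity, which is the property that distinguishes feature-map-level retrieval from retrieval on a single global feature.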

3.4. Prompt Enhancer

Learnable visual prompting, initially introduced in [], draws inspiration from prompt-based learning in NLP [,,]. It offers an effective solution for addressing domain shifts by enabling input adaptation for downstream tasks, without requiring any fine-tuning of the base model. In our setting, we follow the formulation in [], introducing pixel-level learnable perturbations near the image boundaries to guide the model more effectively.

In the E-InMeMo framework, the Prompt Enhancer applies such learnable prompts to the in-context image pair using different formats. These enhanced examples provide additional instructions that help the frozen model interpret the task more accurately, thereby narrowing the domain gap between the query image and the provided in-context pairs. Notably, the learnable prompt is input-agnostic; a single shared prompt is used across all in-context pairs for the same task. This allows the learned prompt to act as a task-specific identifier.

Given a retrieved in-context pair $(x, y)$, the prompt enhancer applies a learnable perturbation $\delta_\phi$, controlled by a parameter set $\phi$, to produce the modified pair $(x', y')$ as follows:

$$[x', y'] = [x, y] + \epsilon\, \delta_\phi, \tag{4}$$

where $\epsilon$ defines the perturbation’s strength. Unlike previous InMeMo approaches, we add a single padding-style visual prompt that frames the two-cell in-context pair as a whole, rather than adding a separate prompt to the in-context image and to its mask. This format provides the ICL model with more learnable parameters and fewer occlusions. The prompt resides in the image space and consists of learnable pixels concentrated along the image borders. All other pixels are zeroed out. These parameters are optimized through backpropagation during training.
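To make the border-only perturbation concrete, the sketch below adds a scaled perturbation to a toy single-channel grid while zeroing every pixel outside the border. It is an illustration under stated assumptions, not the released code: the grid size, the border width, and the names `border_mask` and `enhance` are hypothetical, and a real prompt would be a trainable tensor rather than a fixed list.

```python
def border_mask(height, width, pad):
    """Return a 0/1 grid that is 1 only within pad pixels of any edge."""
    return [
        [
            1 if (i < pad or i >= height - pad or j < pad or j >= width - pad) else 0
            for j in range(width)
        ]
        for i in range(height)
    ]


def enhance(pair, delta, mask, eps=1.0):
    """Compute pair + eps * (mask * delta) element-wise."""
    return [
        [pair[i][j] + eps * mask[i][j] * delta[i][j] for j in range(len(pair[0]))]
        for i in range(len(pair))
    ]


# Toy example: a 6 x 8 grid stands in for the in-context pair.
height, width, pad = 6, 8, 2
pair = [[0.0] * width for _ in range(height)]
delta = [[1.0] * width for _ in range(height)]
mask = border_mask(height, width, pad)
enhanced = enhance(pair, delta, mask, eps=0.5)
print(enhanced[0][0], enhanced[2][2])  # 0.5 0.0
```

Since the mask is fixed and the same perturbation is reused for every pair, only the border values of the perturbation would receive gradients in training, which is what keeps the number of trainable parameters small.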

3.5. Prediction

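Following [], we adopt the MAE-VQGAN model, in which the pre-trained MAE [] $g$ generates visual tokens $\hat{z}$ from the enhanced canvas $c'$. The VQGAN [] decoder $f$, also pre-trained, generates the resulting image from $\hat{z}$.

After compiling the enhanced in-context pair $(x', y')$ and the query $x_q$ into a canvas $c' = [x', y', x_q, r]$, $g$ predicts latent visual tokens $\hat{z} = (\hat{z}_1, \dots, \hat{z}_L)$, specifically,

$$\hat{z}_l = \underset{v \in \mathcal{V}}{\arg\max}\; g_{lv}(c'), \tag{5}$$

where $\hat{z}_l$ is a visual token in the vocabulary $\mathcal{V}$ at spatial position $l$, and $g_{lv}(c')$ gives the probability of $v$ for $l$. The VQGAN decoder $f$ then generates a label image by

$$\hat{y} = f(\hat{z}). \tag{6}$$

We obtain the prediction for the query, $\hat{y}_q$, as the content of $\hat{y}$ in the cell $r$.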
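The token selection step amounts to an argmax over per-position scores. The following sketch shows this with toy numbers; the three-entry vocabulary, the score values, and the function names are illustrative assumptions, and the actual decoding of tokens into pixels by the VQGAN decoder is not reproduced here.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities in a numerically stable way."""
    peak = max(scores)
    exps = [math.exp(score - peak) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def predict_tokens(score_rows):
    """Pick, for each spatial position, the most probable visual token index."""
    tokens = []
    for scores in score_rows:
        probabilities = softmax(scores)
        tokens.append(max(range(len(probabilities)), key=probabilities.__getitem__))
    return tokens


# Two spatial positions, a toy vocabulary of three visual tokens.
toy_scores = [[0.1, 2.0, -1.0], [3.0, 0.5, 0.2]]
print(predict_tokens(toy_scores))  # [1, 0]
```

The softmax does not change which index wins; it is included only to make the probabilistic interpretation of the scores explicit.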

3.6. Training

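The only learnable parameters in E-InMeMo are those of the prompt, $\phi$. We train it for a specific task on the dataset $\mathcal{D}$. The loss is the same as in [], while all parameters except for $\phi$ are frozen.

We first randomly choose a pair $(x_q, y_q)$ as the query from $\mathcal{D}$. The E-InMeMo prediction process, starting from the ICL retriever, is then applied to $x_q$ to compute $\hat{z}$, but the retriever uses $\mathcal{D} \setminus \{(x_q, y_q)\}$ instead of $\mathcal{D}$.

The label image $y_q$ is used for training. We compile the retrieved in-context pair $(x, y)$ and the query pair $(x_q, y_q)$ into a ground-truth canvas $c_{\mathrm{gt}} = [x, y, x_q, y_q]$. The pre-trained VQGAN encoder $E$ associated with $f$ gives the ground-truth visual tokens $z$ that reconstruct $y_q$ with $f$, i.e.,

$$z_l = \underset{v \in \mathcal{V}}{\arg\max}\; E_{lv}(c_{\mathrm{gt}}), \tag{7}$$

where again $E_{lv}(c_{\mathrm{gt}})$ is the probability of $v$ for position $l$. The loss function to train our learnable prompt is given by

$$\mathcal{L}(\phi) = \mathbb{E}\big[\mathrm{CE}\big(g_l(c'), z_l\big)\big], \tag{8}$$

where CE is the cross-entropy loss, $g_l(c')$ is the vector of probabilities of the respective tokens in $\mathcal{V}$, and the expectation is computed over all $(x_q, y_q) \in \mathcal{D}$ as well as all visual tokens corresponding to $y_q$ (i.e., over the latent visual tokens of $r$, represented as masked indices).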
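A cross-entropy restricted to the masked token positions can be sketched as follows. This is a plain-Python illustration with toy logits, not the training code: the function names, the two-token vocabulary, and the list of masked positions are assumptions, and a real implementation would compute the same quantity on tensors so that gradients can flow back to the prompt.

```python
import math


def log_softmax(scores):
    """Numerically stable log-probabilities for one position."""
    peak = max(scores)
    log_norm = peak + math.log(sum(math.exp(score - peak) for score in scores))
    return [score - log_norm for score in scores]


def masked_token_cross_entropy(logits, targets, masked_positions):
    """Average cross-entropy over the masked positions only.

    logits[l][v] is the score of token v at position l, and targets[l] is the
    ground-truth token index at position l.
    """
    total = 0.0
    for position in masked_positions:
        total -= log_softmax(logits[position])[targets[position]]
    return total / len(masked_positions)


# Three positions, two tokens; only positions 1 and 2 belong to the empty cell.
toy_logits = [[2.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
toy_targets = [0, 1, 0]
loss = masked_token_cross_entropy(toy_logits, toy_targets, masked_positions=[1, 2])
print(round(loss, 4))  # 0.6931
```

Positions outside the empty cell do not contribute to the loss, which reflects the statement above that the expectation runs only over the tokens of the region to be filled.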

3.7. Interpretation

Adding the perturbation $\delta_\phi$ to images in a visual prompt as in Equation (4) translates the distribution of the prompt in a certain direction. Determining the parameters $\phi$ by Equation (8) will encode some ideas about the task described by the dataset $\mathcal{D}$ in $\delta_\phi$, supplying complementary information that is not fully conveyed by the in-context pair $(x, y)$. We consider that our training roughly aligns the distributions of image patches in the enhanced canvas and in the ground-truth canvas in the latent space before visual token classification, with smaller degrees of freedom in $\delta_\phi$. This can be particularly effective as these distributions are inherently different due to the lack of the ground-truth label image $y_q$ in the canvas. Therefore, our best expectation is that $\delta_\phi$ captures the distribution of $y_q$ collectively to bring the distribution of prompts closer to the ground-truth prompts (containing the ground-truth label $y_q$). With this, the decoder $f$ will have better access to more plausible visual tokens that decode a label image closer to the ground-truth label.

4. Experiments

4.1. Experimental Setup

Datasets and Downstream Tasks. We follow the experimental settings of [] to evaluate E-InMeMo. As downstream tasks, we perform foreground segmentation and single object detection. (1) Foreground segmentation aims to extract apparent objects from the query image with the in-context pair. We use the Pascal-5$^i$ dataset [], which is split into four folds, each containing five classes. (2) Single object detection evaluates whether a model can capture fine-grained features specified by a coarse-grained bounding box in the in-context pair. We conduct experiments on images and bounding boxes from the PASCAL VOC 2012 dataset []. To align with [], we use a subset of the dataset whose samples contain only a single object as our dataset $\mathcal{D}$.

We also verify E-InMeMo on two medical datasets for foreground segmentation. (1) The Kvasir-SEG dataset [] targets gastrointestinal polyp segmentation. It contains 1000 polyp images and their corresponding binary segmentation masks. Since there is no official test split, we randomly divide the whole dataset into five folds to conduct the experiments. Notably, methods such as DCNet [] have demonstrated strong performance in similar polyp segmentation tasks. (2) The skin lesion dataset (ISIC) [] is a dermoscopic image benchmark challenge for the diagnosis of skin cancer. It contains 900 images for training and 379 images for testing. Each image has a binary segmentation mask labeled by experts. For this specific task, approaches like PMFSNet [] have yielded promising results.

Methods for Comparison. All experiments utilize MAE-VQGAN [] as the pre-trained large-scale vision model. E-InMeMo is compared against SOTA methods for visual ICL. Below are the details of the comparison methods.

- Random [] randomly retrieves in-context pairs from the training set.

- UnsupPR [] uses CLIP as the feature extractor to retrieve in-context pairs based on features after the classification head.

- SupPR [] introduces contrastive learning to develop a similarity metric for in-context pair selection.

- SCS [] proposes a stepwise context search method to adaptively search the well-matched in-context pairs based on a small yet rich candidate pool.

- Partial2Global [] proposes a transformer-based list-wise ranker and ranking aggregator to approximately identify the global optimal in-context pair.

- Prompt-SelF [] applies an ensemble of eight different prompt arrangements, combined using a voting strategy with a pre-defined threshold.

- FMLR (CLIP) utilizes CLIP as the feature extractor for feature-map-level retrieval.

- FMLR (DINOv2) utilizes DINOv2 as the feature extractor for feature-map-level retrieval.

Additionally, we compare E-InMeMo with few-shot segmentation methods derived from meta-learning, such as OSLSM [] and co-FCN []. For medical datasets, E-InMeMo is compared with visual ICL methods and FMLR using different feature extractors.

Implementation details. For the foreground segmentation task, E-InMeMo is trained separately on each fold of the training dataset, treating each fold as an independent task and learning a unique prompt for each one. In the case of single object detection, the model is trained using the entire training set, with in-context pairs being retrieved from the same set. During evaluation, each test image is treated as a query, and its corresponding in-context pair is selected from the training set.

All images are resized to 111 × 111 pixels to construct the canvas. The learnable prompt is applied around the borders of the in-context pair, occupying 15 pixels along each edge. This configuration results in a total of 27,540 trainable parameters for the prompt $\delta_\phi$. The canvas layout follows the standard format from [], where the two images of the enhanced in-context pair $(x', y')$, the query image $x_q$, and an empty cell $r$ are placed at the top-left, top-right, bottom-left, and bottom-right positions, respectively. The perturbation strength $\epsilon$ is set to 1.
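The two parameter counts quoted in the paper can be reproduced by counting the pixels of a border frame. The sketch below is a sanity check under assumptions that the text does not state explicitly: it supposes three color channels, a 224 × 224 frame with a 30-pixel border for the earlier 69,840-parameter prompt, and a 112 × 224 region with the reported 15-pixel border for the 27,540-parameter prompt. These dimensions were chosen because they match the reported totals, and the function name is hypothetical.

```python
def border_parameter_count(height, width, pad, channels=3):
    """Count learnable values in a frame of width pad around a height x width area."""
    inner_area = (height - 2 * pad) * (width - 2 * pad)
    return channels * (height * width - inner_area)


# Assumed configuration for the earlier prompt: full 224 x 224 frame, 30-pixel border.
print(border_parameter_count(224, 224, 30))  # 69840

# Assumed configuration for the current prompt: 112 x 224 pair region, 15-pixel border.
print(border_parameter_count(112, 224, 15))  # 27540
```

Under these assumptions the reduction comes from framing only the top half of the canvas with a thinner border, rather than framing separately resized images.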

Our implementation is built with PyTorch (version 1.12.1). Training is conducted for 70 epochs using the Adam optimizer [], starting with an initial learning rate of 15. The learning rate follows a cosine annealing schedule with warm restarts. A key strength of E-InMeMo lies in its efficiency—the model can be trained on a single NVIDIA Tesla V100-SXM2-32GB GPU using a batch size of 32. Code is publicly available at: https://github.com/Jackieam/E-InMeMo (accessed on 20 May 2025).
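For readers unfamiliar with the schedule, the standard cosine annealing rule with warm restarts can be written out directly. The sketch uses the initial learning rate of 15 reported above; the restart period of 10 epochs, the minimum learning rate of 0, and the fixed cycle length are assumptions made only for illustration, since the text does not specify them.

```python
import math


def cosine_warm_restart_lr(epoch, base_lr=15.0, min_lr=0.0, period=10):
    """Learning rate at a given epoch with fixed-length cosine cycles.

    The rate starts at base_lr, decays along a half cosine toward min_lr,
    and jumps back to base_lr every `period` epochs (assumed value).
    """
    epochs_since_restart = epoch % period
    cosine = math.cos(math.pi * epochs_since_restart / period)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + cosine)


# Learning rates over the 70 training epochs reported in the text.
schedule = [cosine_warm_restart_lr(epoch) for epoch in range(70)]
print(schedule[0], schedule[10])  # 15.0 15.0
```

Each restart returns the learning rate to its initial value, which lets the optimizer escape a poor region found during the previous cycle.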

4.2. Comparison with State-of-the-Art

We present a comparative analysis of E-InMeMo against existing visual in-context learning (ICL) methods and meta-learning-based few-shot learning approaches, as shown in Table 1. The results demonstrate that E-InMeMo sets a new SOTA in both downstream tasks, with particularly strong gains in single object detection. Notably, it significantly outperforms the FMLR baseline and consistently surpasses meta-learning-based methods across nearly all folds as well as in average performance. These findings underscore the effectiveness of incorporating a learnable prompt into the visual ICL framework.

Table 1.

Performance of the foreground segmentation and single object detection downstream tasks. The best scores in in-context learning are highlighted in bold. Seg. and Det. stand for the foreground segmentation and single object detection tasks, respectively.

One point of comparison is prompt-SelF [], whose performance benefits from a bagging mechanism. It applies the model across eight different canvas configurations and aggregates the results, thereby leveraging diverse perspectives from the large vision model. In contrast, E-InMeMo requires only a single inference per query, yet achieves superior results. While bagging may offer a performance boost without significant overhead, the improvements achieved by E-InMeMo remain substantial.

E-InMeMo particularly excels in single object detection, where it exceeds prompt-SelF by 13.38 points, highlighting its ability to accurately capture fine-grained visual cues and detect small objects with high precision.

These findings illustrate the strength of our direct and efficient strategy. By refining the in-context pair through learnable prompting, E-InMeMo effectively enhances the model’s task comprehension. Furthermore, the approach remains lightweight, adding only 27,540 trainable parameters and requiring minimal computational resources. The task-specific, shared learnable prompts not only simplify the training process but also point toward a scalable and efficient direction for future visual ICL research.

4.3. Results on Medical Datasets

In addition to natural images, we also evaluate E-InMeMo on two medical datasets, treating each as a foreground segmentation task. The results are shown in Table 2. E-InMeMo achieves SOTA results on both datasets, demonstrating that it is also effective in the medical domain. On the ISIC dataset, SupPR and FMLR (DINOv2) perform slightly worse than their nominally weaker counterparts, UnsupPR and FMLR (CLIP). This suggests that the gains of previous methods do not reliably carry over to other datasets, such as medical ones. In contrast, E-InMeMo retains the best performance under this change of dataset.

Table 2.

The performance of E-InMeMo on ISIC and Kvasir datasets with different diseases.

4.4. Domain Shifts Analysis

In practical applications, domain shifts frequently occur, representing variations between the training dataset and the target environment. These discrepancies can lead to reduced performance when models are applied beyond their original training distribution. These shifts are prevalent across datasets and are commonly used as benchmarks to evaluate the robustness of machine learning models [].

To investigate E-InMeMo’s resilience to domain shifts, we adopt the COCO dataset [] for out-of-domain inference, following the evaluation protocol established in []. Specifically, we use a variant known as COCO-5$^i$, which aligns its category divisions with those of Pascal-5$^i$ []. In this setup, referred to as COCO → Pascal, the in-context pairs are sampled from COCO-5$^i$, while the query images come from the Pascal-5$^i$ validation split, consistent with the configuration in [].

The domain-shift evaluation results are summarized in Table 3. Under the COCO → Pascal setting, the FMLR baseline using DINOv2 experiences a 2.32-point drop in mIoU, equivalent to a 6.46% relative decrease. E-InMeMo, on the other hand, achieves an mIoU of 41.42%, reflecting a 2.49-point drop (a 5.67% relative decrease). Although its absolute drop is slightly larger, by 0.17 points, E-InMeMo exhibits the smaller relative decrease. In relative terms, this indicates that E-InMeMo is at least as robust to domain shifts as the baseline.
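The relative decreases quoted above can be checked with a few lines of arithmetic. The sketch uses only numbers stated in the text, plus one assumption that should be read with care: the in-domain score of the FMLR (DINOv2) baseline is inferred from the 7.99-point foreground segmentation gain reported in the abstract, taking that baseline to be FMLR (DINOv2).

```python
def relative_drop(in_domain, out_of_domain):
    """Relative decrease in percent when moving from in-domain to out-of-domain."""
    return 100.0 * (in_domain - out_of_domain) / in_domain


# E-InMeMo: out-of-domain mIoU and absolute drop are stated in the text.
e_out = 41.42
e_in = e_out + 2.49  # implied in-domain mIoU, about 43.91

# FMLR (DINOv2): in-domain mIoU inferred from the 7.99-point gap (assumption).
f_in = e_in - 7.99   # about 35.92
f_out = f_in - 2.32  # about 33.60

print(round(relative_drop(e_in, e_out), 2))  # 5.67
print(round(relative_drop(f_in, f_out), 2))  # 6.46
```

Both values agree with the percentages reported for Table 3, which supports the reading that the percentages are relative decreases with respect to each method's in-domain mIoU.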

Table 3.

Domain shifts analysis on E-InMeMo. Pascal → Pascal means in-context pairs and query images are both from PASCAL. COCO → Pascal indicates that in-context pairs are from COCO while query images are from PASCAL.

These findings suggest that incorporating learnable prompts into visual ICL not only improves in-domain performance but also enhances cross-domain generalization. The robustness and adaptability of E-InMeMo make it a promising approach for real-world applications where domain shifts are inevitable.

4.5. Further Analyses on E-InMeMo

This section further investigates the capabilities of E-InMeMo through a series of experiments, primarily focusing on the fine-grained foreground segmentation task.

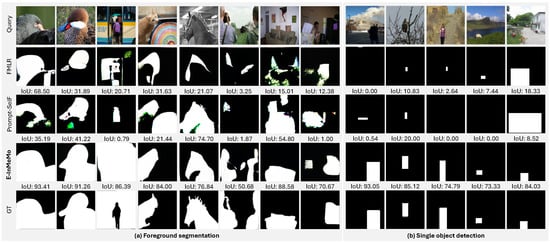

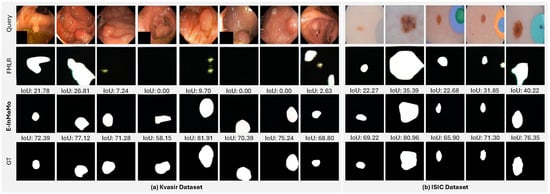

Qualitative comparison. We conduct a qualitative comparison of E-InMeMo against FMLR (DINOv2), prompt-SelF [], and the ground-truth (GT) labels across both foreground segmentation and single object detection tasks, as illustrated in Figure 4.

Figure 4.

Qualitative comparisons across baseline methods, prompt-SelF, and our proposed E-InMeMo on two downstream tasks: (a) Foreground segmentation and (b) Single-object detection. For each task, the top row shows the query image. The subsequent rows (from top to bottom) present results from FMLR (DINOv2), prompt-SelF, E-InMeMo, and the ground-truth (GT) label, respectively. E-InMeMo consistently enables visual ICL to capture finer details and demonstrates robustness to mismatches between in-context and query images. Notably, it also appears to mitigate the negative impact of low-quality in-context pairs—an important advantage when the retriever fails to find highly similar samples.

In the foreground segmentation task (Figure 4a), E-InMeMo produces segmentation results that closely align with the GT labels, capturing fine-grained details with high fidelity. Notably, E-InMeMo demonstrates strong robustness to various visual discrepancies. For instance, it performs well even when the in-context image is achromatic or when there is a substantial color difference between the in-context and query images. Additionally, E-InMeMo remains stable in cases involving significant foreground size variation and is capable of accurately distinguishing foreground from background in challenging scenes.

For the single object detection task (Figure 4b), E-InMeMo continues to exhibit consistent attention to detail. Its performance remains unaffected by changes in color or object scale within the in-context pair. Impressively, it is able to detect and localize objects even when the corresponding foreground in the in-context example is minimal, showcasing its adaptability and strong visual reasoning.

Figure 5 further showcases examples from two medical imaging datasets, Kvasir and ISIC. In these more complex and domain-specific tasks, E-InMeMo is compared with FMLR (DINOv2). The results reveal that E-InMeMo accurately segments the regions of interest, including gastrointestinal polyps and skin lesions, while FMLR consistently fails to deliver meaningful segmentation outputs. These visual results highlight the capability of E-InMeMo to generalize to medical imagery and reinforce its potential for real-world applications in specialized domains.

Figure 5.

Qualitative results of FMLR (DINOv2) and our proposed E-InMeMo on two medical datasets: (a) Kvasir and (b) ISIC. For each dataset, the top row shows the query image. The following rows are arranged from top to bottom in the order of FMLR (DINOv2), E-InMeMo, and the ground-truth label (GT), respectively.

Where should the learnable prompt be applied? We advocate applying the learnable prompt exclusively to in-context pairs, as they play a crucial role in guiding the model during inference and are central to the effectiveness of visual ICL. To systematically investigate the impact of prompt placement, we evaluate four variants of E-InMeMo with different prompt application strategies: (1) applying the prompt only to the in-context image (I); (2) only to the query image (Q); (3) to both the in-context image and query image (I & Q); and (4) to the in-context image and in-context label (I & L), which corresponds to the original E-InMeMo formulation.
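To make the placements concrete, the following minimal sketch marks which pixels of a four-cell canvas would receive the learnable perturbation under each variant. It assumes a border-shaped prompt (in line with the padding-size setting of the prompt enhancer) and a hypothetical arrangement of cells on the canvas. The names `CELLS`, `VARIANTS`, `border_mask`, `canvas_mask`, and `apply_prompt`, as well as the toy cell size, are illustrative and are not taken from the authors' implementation.

```python
# Hypothetical cell positions (row, col) on the 2x2 canvas; the exact
# arrangement is an assumption made for illustration only.
CELLS = {"I": (0, 0), "L": (0, 1), "Q": (1, 0)}

# Prompt placement variants named as in the text.
VARIANTS = {
    "I": ["I"],
    "Q": ["Q"],
    "I & Q": ["I", "Q"],
    "I & L": ["I", "L"],
}


def border_mask(cell, pad):
    """Return a cell x cell grid with 1 on a border of width `pad`, 0 inside."""
    return [
        [1 if min(r, c, cell - 1 - r, cell - 1 - c) < pad else 0
         for c in range(cell)]
        for r in range(cell)
    ]


def canvas_mask(variant, cell=8, pad=2):
    """Mark which canvas pixels receive the perturbation for a variant."""
    size = 2 * cell
    mask = [[0] * size for _ in range(size)]
    frame = border_mask(cell, pad)
    for name in VARIANTS[variant]:
        row0, col0 = (i * cell for i in CELLS[name])
        for r in range(cell):
            for c in range(cell):
                mask[row0 + r][col0 + c] = frame[r][c]
    return mask


def apply_prompt(canvas, delta, mask):
    """Add the perturbation `delta` only where the mask is set."""
    return [
        [pix + d * m for pix, d, m in zip(prow, drow, mrow)]
        for prow, drow, mrow in zip(canvas, delta, mask)
    ]
```

Because the same `delta` values are reused wherever the mask is set, the sketch also reflects the input-agnostic character of the prompt: the perturbation does not depend on the image content of the cell it is added to.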

The evaluation results are reported in Table 4. Across all variants, the introduction of a learnable prompt improves performance over the baseline without the learnable prompt, regardless of where it is applied. Interestingly, while applying the prompt to in-context images alone (I) yields lower performance than the other E-InMeMo variants, it still surpasses prompt-SelF. This finding reinforces that refining in-context pairs, even minimally, can significantly enhance visual ICL effectiveness.

Table 4.

Segmentation performance for different combinations of images in the canvas to which the learnable prompt is applied. I, L, and Q denote the in-context image, in-context label image, and query image, respectively.

Surprisingly, the configuration where the prompt is added to both the in-context and query images (I & Q) performs worse than adding it to either one individually. We attribute this to the input-agnostic nature of the prompt: when the same perturbation is applied to both images, it may hinder the model’s ability to distinguish task-specific cues, thus reducing its capacity to bridge the semantic gap between the query and the support examples.

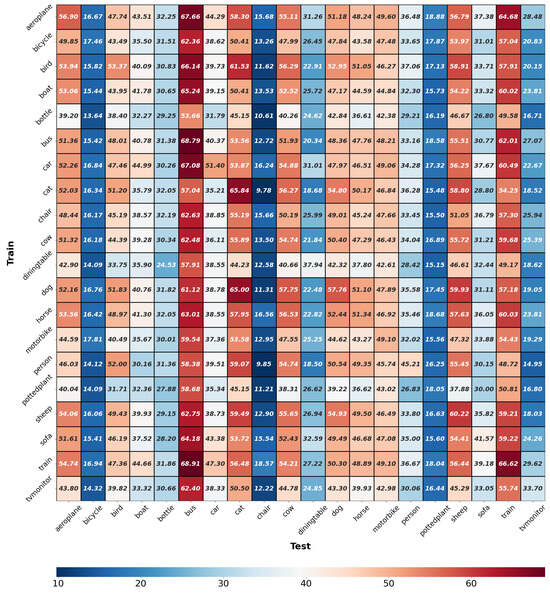

Intra- and inter-class generalizability of E-InMeMo. We have demonstrated that E-InMeMo performs well on low-quality datasets and now explore its generalizability to unseen classes not included in the training dataset. To this end, we train a separate learnable prompt for each of the 20 classes. Specifically, for a given class, we take the training subset of images and label images in Pascal-5$^i$ that belong to that class. During E-InMeMo training, an image from this subset is used as a query, paired with an image and label from the same subset as an in-context pair. For inter-class generalizability, predictions are made using test-set images of a different class as queries, with in-context pairs retrieved from the corresponding training set. The full results are shown in Figure 6. Diagonal values indicate intra-class results. The analysis reveals varying levels of difficulty across classes: (1) Prompts trained on their own class achieve good results for classes such as bus, cat, and train. (2) Prompts trained on their own class perform poorly for classes such as bicycle, chair, and pottedplant.

We also observed that, for both challenging and general classes, the overall performance of prompts adapted from different classes aligns with intra-class performance (best viewed vertically in Figure 6). For instance, bus, cat, sheep, and train are the most generalizable classes, with prompts trained on different classes achieving high accuracy (IoU close to 50%). In contrast, classes such as bicycle, chair, and pottedplant are the least generalizable, performing poorly across all prompts. These results suggest that, for challenging classes, learned prompts struggle to deliver good performance, whereas for general classes, learned prompts consistently achieve strong results.

Figure 6.

Intra- and inter-class generalization performance in mIoU. The horizontal and vertical axes are the classes used for prediction and training, respectively. The diagonal elements show intra-class performance.

In our inter-class analysis (best viewed horizontally in Figure 6), we observe that the effectiveness of prompts trained on different classes varies based on the inherent difficulty of each target class. Notably, certain patterns of generalizability emerge across related categories. Within the transportation super-class—comprising classes such as aeroplane, train, and car—prompts exhibit strong cross-class generalization. For example, prompts trained on aeroplane generalize well to train and bus, and similarly for car-train and motorbike-train pairs.

In contrast, prompts from transportation classes tend to generalize poorly to the animal category (e.g., dog, horse). Within the animal group, most classes show good mutual generalizability, with the exception of cow, which consistently underperforms. However, prompts from animal classes also struggle to generalize to transportation classes.

There are a few interesting exceptions. For instance, the aeroplane class generalizes surprisingly well to cat, and bird performs well when applied to bus. These anomalies may be attributed to class-specific segmentation properties—for example, cat and bus may inherently be easier to segment, regardless of the prompt source.

The mean mIoU score over all pairs of 20 classes is 39.24%. This score surpasses most methods in Table 1 but represents a significant drop compared to E-InMeMo’s mean score over all folds. This finding underscores the importance of tuning the learnable prompt for target tasks.
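As a small illustration of how such a summary can be computed from a train-class by test-class score matrix, the sketch below separates the diagonal (intra-class) entries from the off-diagonal (inter-class) ones. The text does not state whether the reported mean includes the diagonal, so the sketch returns all three averages. The function name `summarize_pairs` and the 3 x 3 toy values are hypothetical and are not results from the paper.

```python
def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


def summarize_pairs(scores):
    """scores[i][j]: mIoU when the prompt trained on class i predicts class j."""
    n = len(scores)
    intra = [scores[i][i] for i in range(n)]
    inter = [scores[i][j] for i in range(n) for j in range(n) if i != j]
    every = [value for row in scores for value in row]
    return {"intra": mean(intra), "inter": mean(inter), "all": mean(every)}


# Toy matrix with made-up numbers (rows: training class, columns: test class).
toy = [
    [50.0, 30.0, 10.0],
    [40.0, 60.0, 20.0],
    [30.0, 20.0, 40.0],
]
summary = summarize_pairs(toy)
```

Reading a column of the matrix shows how hard a target class is across all prompts, while reading a row shows how well one prompt transfers to other classes.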

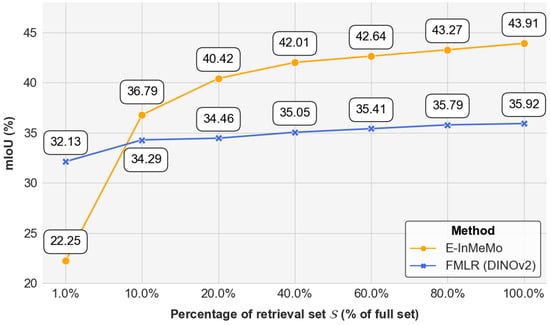

Is E-InMeMo sensitive to the size of the retrieval dataset? Following [], we examine whether E-InMeMo is sensitive to the size of the retrieval set. We use seven subsets (1%, 10%, 20%, 40%, 60%, 80%, and 100%) of each fold, which serve both as retrieval sets and as training sets for E-InMeMo. The results are shown in Figure 7. Both FMLR (DINOv2) and E-InMeMo benefit from larger retrieval sets. E-InMeMo shows a clear upward trend, with a particularly large increase in mIoU when going from 1% to 10%. E-InMeMo is the more data-sensitive of the two: when the retrieval set grows from 1% to 100%, its mIoU improves by 21.66 points, whereas FMLR (DINOv2) improves by only 3.79 points. Notably, with parameter-efficient fine-tuning, E-InMeMo needs only 10% of the retrieval set to achieve significantly better results than FMLR (DINOv2) with the full retrieval set, which underscores that E-InMeMo is a data-efficient method.

Figure 7.

The performance of E-InMeMo and FMLR (DINOv2) in mIoU on each fold for different retrieval set sizes. Scores are annotated in the figure.
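The retrieval-set experiment can be pictured with the following minimal sketch: a fraction of the pool is sampled, and the in-context candidate closest to the query is then retrieved from that subset. Cosine similarity over precomputed feature vectors is an assumption made here for illustration, since this section does not specify the similarity measure. The helpers `subsample` and `retrieve` and the `feat` field are hypothetical names.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two non-zero feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def subsample(pool, fraction, seed=0):
    """Keep a random fraction of the retrieval pool (at least one item)."""
    keep = max(1, round(len(pool) * fraction))
    return random.Random(seed).sample(pool, keep)


def retrieve(query_feat, pool):
    """Return the pool item whose feature is most similar to the query."""
    return max(pool, key=lambda item: cosine(query_feat, item["feat"]))


# Toy pool with made-up 2-D features standing in for image embeddings.
pool = [{"id": i, "feat": [1.0, float(i)]} for i in range(10)]
for fraction in (0.01, 0.1, 0.2, 0.4, 0.6, 0.8, 1.0):
    subset = subsample(pool, fraction)
    best = retrieve([1.0, 3.0], subset)
```

With a small subset, the best available match may be far from the query, which is one way to picture why both methods benefit from a larger retrieval set.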

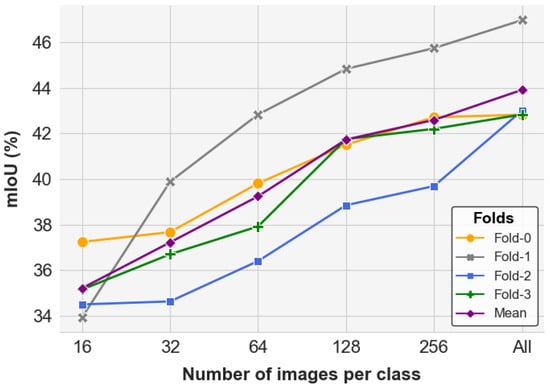

Is E-InMeMo sensitive to dataset size? To better understand how the performance of E-InMeMo scales with the size of the training dataset, we investigate its sensitivity to varying data volumes. Given the lightweight and efficient nature of the learnable prompt, we systematically varied the dataset size by randomly selecting 16, 32, 64, 128, and 256 images per class for each fold. The performance trends are illustrated in Figure 8.

Figure 8.

The performance of E-InMeMo in mIoU on each fold for different numbers of images per class in the training set. "All" denotes using all images in the training set.
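The per-class subsampling described above can be sketched as follows. This is only an illustration under stated assumptions: the training set is represented as hypothetical `(image_id, class_name)` tuples, the function name `sample_per_class` is made up, and classes with fewer than `k` images simply keep all of them, a choice the text does not specify.

```python
import random
from collections import defaultdict


def sample_per_class(samples, k, seed=0):
    """Randomly keep at most k image ids per class.

    samples: iterable of (image_id, class_name) tuples.
    """
    by_class = defaultdict(list)
    for image_id, class_name in samples:
        by_class[class_name].append(image_id)
    rng = random.Random(seed)
    return {
        class_name: rng.sample(ids, min(k, len(ids)))
        for class_name, ids in sorted(by_class.items())
    }


# Toy training set with made-up ids: five "bus" images and three "cat" images.
toy_train = [(f"bus_{i}", "bus") for i in range(5)]
toy_train += [(f"cat_{i}", "cat") for i in range(3)]
subset = sample_per_class(toy_train, k=4)
```

Fixing the seed keeps the sampled subset reproducible, so runs with different values of `k` can be compared on the same fold.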

The experimental results indicate that E-InMeMo outperforms the baseline mIoU score of 35.92% once the dataset includes at least 64 images per class, achieving 37.22% at that point. Across all folds, performance generally improves as more data is provided. For instance, in Fold-0, E-InMeMo reaches 37.24% with only 16 images per class and shows rapid gains starting from 32 images, eventually saturating at 256. Both Fold-1 and Fold-2 demonstrate steady and significant improvements with increasing data volume.

In Fold-3, a more complex fold, a notable jump in performance is observed between 64 and 128 images per class. Beyond this, the improvement plateaus, with only marginal gains when utilizing the full dataset.

Overall, these results suggest that E-InMeMo requires fewer training examples for simpler folds, yet benefits from larger datasets in more complex scenarios. This data efficiency makes E-InMeMo particularly suitable for tasks where labeled data is limited, while still being capable of leveraging larger datasets when available to further boost performance.

Effect of Padding Size in the Prompt Enhancer. In our implementation, the padding size for E-InMeMo’s learnable prompt enhancer is set to 15 pixels. To investigate the influence of different padding sizes on model performance, we conducted a comprehensive evaluation, with the results summarized in Table 5.

Table 5.

The mIoU scores for varying padding sizes of the prompt enhancer. Para. denotes the number of tunable parameters. The highest score in each fold is marked in bold.

E-InMeMo consistently outperformed all baseline methods across different padding sizes in terms of Mean performance. As the padding size increased from 5 to 15, we observed a steady improvement, suggesting that a moderate spatial footprint for the prompt allows the model to better guide the in-context pair without excessive interference.

However, further increasing the padding beyond 15 pixels degraded performance. Specifically, increasing the padding size to 20 resulted in a noticeable drop in Mean performance, from 43.91% to 40.22%. Although a small gain was observed when moving from 20 to 25 pixels, performance remained below the optimum. When the padding was increased to 30, the performance continued to decline.

Interestingly, at a padding size of 10, the model achieved the best results on Fold-0 (43.59%) and Fold-1 (47.86%), yet overall Mean performance was slightly lower than that of padding size 15. This suggests that, while smaller prompts can be beneficial in specific scenarios, they may lack the generalization capacity required across more diverse or complex folds. We hypothesize that larger padding sizes (e.g., larger than 20) cause the learnable prompt to occupy too much of the original image, potentially overwriting critical visual information from the in-context pair. This over-perturbation compromises the prompt’s guiding ability and leads to a drop in performance.

In summary, our findings indicate that a padding size of 15 offers the best trade-off between expressive capacity and preservation of the original visual content, making it the empirically optimal setting for E-InMeMo. Expanding the padding further introduces unnecessary parameters and can degrade performance.
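To see why a larger padding adds tunable parameters, consider a prompt that occupies only a border of width `pad` around an image. A minimal sketch of the count follows, assuming a square 224-pixel, three-channel input; this size and the function name `border_param_count` are assumptions for illustration, and the values in Table 5 are not reproduced here.

```python
def border_param_count(height, width, pad, channels=3):
    """Number of values in a border of width `pad` around an image."""
    if pad < 0 or 2 * pad > min(height, width):
        raise ValueError("padding must fit inside the image")
    inner = (height - 2 * pad) * (width - 2 * pad)
    return channels * (height * width - inner)


# Parameter counts for the padding sizes discussed in the text,
# under the assumed 224 x 224 x 3 input.
counts = {pad: border_param_count(224, 224, pad) for pad in (5, 10, 15, 20, 25, 30)}
```

The count grows with `pad` while the untouched interior shrinks, which mirrors the trade-off described above between expressive capacity and preservation of the original visual content.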

5. Discussion

We proposed E-InMeMo, a parameter-efficient method for applying vision foundation models to specific tasks and domains. E-InMeMo has broad applicability in fields such as medicine and environmental science, where ease of adaptation and high accuracy are essential. The basic performance of visual in-context learning (ICL) can be further enhanced depending on the strength of the underlying vision foundation models [,]. Leveraging powerful models and integrating E-InMeMo with data- and parameter-efficient strategies could be a promising direction for real-world deployment.

Limitations. Despite its strengths, E-InMeMo requires a minimum of 32 images per class to achieve competitive performance relative to the baseline. Furthermore, the learned prompt is task-specific: a prompt trained for one class or task does not generalize well to others. Therefore, designing dedicated prompts for each target task remains essential to maximizing performance.

6. Conclusions

E-InMeMo achieves SOTA performance on two downstream tasks by introducing a learnable prompt to the in-context pairs, a lightweight yet powerful approach that enhances the effectiveness of visual in-context learning (ICL). This learnable prompt improves the model’s ability to recover fine-grained details in predictions and mitigates the negative impact of low-quality or semantically distant in-context pairs. Furthermore, our experiments demonstrate that E-InMeMo exhibits robustness to domain shifts, such as transferring from the Pascal dataset to COCO.

Author Contributions

Conceptualization, J.Z. and B.W.; methodology, J.Z. and B.W.; investigation, J.Z.; formal analysis, J.Z.; validation, J.Z.; visualization, J.Z.; writing—original draft preparation, J.Z.; writing—review and editing, B.W., H.L., L.L., and Y.N.; supervision, B.W., H.L., L.L., Y.N., and H.N.; funding acquisition, Y.N. and H.N. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by FOREST Grant No. JPMJFR216O.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available in Pascal-5$^i$: https://github.com/Seokju-Cho/Volumetric-Aggregation-Transformer (accessed on 20 May 2025); COCO: https://cocodataset.org/#home (accessed on 20 May 2025); ISIC: https://challenge.isic-archive.com/data (accessed on 20 May 2025); Kvasir SEG: https://datasets.simula.no/kvasir-seg (accessed on 20 May 2025).

Conflicts of Interest

The author L.L. was employed by Xiamen Meet You Co., Ltd. All authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the opportunities and risks of foundation models. arXiv 2021, arXiv:2108.07258.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763.

- GPT-4o. Available online: https://openai.com/index/hello-gpt-4o/ (accessed on 21 February 2025).

- Team, G.; Anil, R.; Borgeaud, S.; Alayrac, J.B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; Millican, K.; et al. Gemini: A family of highly capable multimodal models. arXiv 2023, arXiv:2312.11805.

- Rubin, O.; Herzig, J.; Berant, J. Learning to retrieve prompts for in-context learning. arXiv 2021, arXiv:2112.08633.

- Gonen, H.; Iyer, S.; Blevins, T.; Smith, N.A.; Zettlemoyer, L. Demystifying prompts in language models via perplexity estimation. arXiv 2022, arXiv:2212.04037.

- Wu, Z.; Wang, Y.; Ye, J.; Kong, L. Self-adaptive in-context learning. arXiv 2022, arXiv:2212.10375.

- Sorensen, T.; Robinson, J.; Rytting, C.M.; Shaw, A.G.; Rogers, K.J.; Delorey, A.P.; Khalil, M.; Fulda, N.; Wingate, D. An information-theoretic approach to prompt engineering without ground truth labels. arXiv 2022, arXiv:2203.11364.

- Honovich, O.; Shaham, U.; Bowman, S.R.; Levy, O. Instruction induction: From few examples to natural language task descriptions. arXiv 2022, arXiv:2205.10782.

- Wang, Y.; Kordi, Y.; Mishra, S.; Liu, A.; Smith, N.A.; Khashabi, D.; Hajishirzi, H. Self-instruct: Aligning language model with self generated instructions. arXiv 2022, arXiv:2212.10560.

- Min, S.; Lewis, M.; Hajishirzi, H.; Zettlemoyer, L. Noisy channel language model prompting for few-shot text classification. arXiv 2021, arXiv:2108.04106.

- Zhang, J.; Yoshihashi, R.; Kitada, S.; Osanai, A.; Nakashima, Y. VASCAR: Content-Aware Layout Generation via Visual-Aware Self-Correction. arXiv 2024, arXiv:2412.04237.

- Wang, B.; Chang, J.; Qian, Y.; Chen, G.; Chen, J.; Jiang, Z.; Zhang, J.; Nakashima, Y.; Nagahara, H. DiReCT: Diagnostic Reasoning for Clinical Notes via Large Language Models. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024; Volume 37, pp. 74999–75011.

- Bar, A.; Gandelsman, Y.; Darrell, T.; Globerson, A.; Efros, A. Visual prompting via image inpainting. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 25005–25017.

- Zhang, Y.; Zhou, K.; Liu, Z. What Makes Good Examples for Visual In-Context Learning? In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2023; Volume 36, pp. 17773–17794.

- Wang, X.; Wang, W.; Cao, Y.; Shen, C.; Huang, T. Images speak in images: A generalist painter for in-context visual learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 6830–6839.

- Sun, Y.; Chen, Q.; Wang, J.; Wang, J.; Li, Z. Exploring Effective Factors for Improving Visual In-Context Learning. arXiv 2023, arXiv:2304.04748.

- Bahng, H.; Jahanian, A.; Sankaranarayanan, S.; Isola, P. Exploring visual prompts for adapting large-scale models. arXiv 2022, arXiv:2203.17274.

- Chen, A.; Yao, Y.; Chen, P.Y.; Zhang, Y.; Liu, S. Understanding and improving visual prompting: A label-mapping perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 19133–19143.

- Oh, C.; Hwang, H.; Lee, H.y.; Lim, Y.; Jung, G.; Jung, J.; Choi, H.; Song, K. BlackVIP: Black-Box Visual Prompting for Robust Transfer Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 24224–24235.

- Jia, M.; Tang, L.; Chen, B.C.; Cardie, C.; Belongie, S.; Hariharan, B.; Lim, S.N. Visual prompt tuning. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 709–727.

- Lester, B.; Al-Rfou, R.; Constant, N. The power of scale for parameter-efficient prompt tuning. arXiv 2021, arXiv:2104.08691.

- Li, X.L.; Liang, P. Prefix-tuning: Optimizing continuous prompts for generation. arXiv 2021, arXiv:2101.00190.

- Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Learning to prompt for vision-language models. Int. J. Comput. Vis. 2022, 130, 2337–2348.

- Elsayed, G.F.; Goodfellow, I.; Sohl-Dickstein, J. Adversarial reprogramming of neural networks. arXiv 2018, arXiv:1806.11146.

- Neekhara, P.; Hussain, S.; Du, J.; Dubnov, S.; Koushanfar, F.; McAuley, J. Cross-modal adversarial reprogramming. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 2427–2435.

- Zhang, J.; Wang, B.; Li, L.; Nakashima, Y.; Nagahara, H. Instruct me more! random prompting for visual in-context learning. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 4–8 January 2024; pp. 2597–2606.

- Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. Dinov2: Learning robust visual features without supervision. arXiv 2023, arXiv:2304.07193.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; Volume 33, pp. 1877–1901.

- Dong, Q.; Li, L.; Dai, D.; Zheng, C.; Wu, Z.; Chang, B.; Sun, X.; Xu, J.; Sui, Z. A survey for in-context learning. arXiv 2022, arXiv:2301.00234.

- Liu, J.; Shen, D.; Zhang, Y.; Dolan, B.; Carin, L.; Chen, W. What Makes Good In-Context Examples for GPT-3? arXiv 2021, arXiv:2101.06804.

- Lu, Y.; Bartolo, M.; Moore, A.; Riedel, S.; Stenetorp, P. Fantastically ordered prompts and where to find them: Overcoming few-shot prompt order sensitivity. arXiv 2021, arXiv:2104.08786.

- Winston, P.H. Learning and reasoning by analogy. Communications of the ACM 1980, 23, 689–703.

- Sun, T.; Shao, Y.; Qian, H.; Huang, X.; Qiu, X. Black-box tuning for language-model-as-a-service. In Proceedings of the International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 20841–20855.

- Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S.; et al. Palm: Scaling language modeling with pathways. arXiv 2022, arXiv:2204.02311.

- Min, S.; Lewis, M.; Zettlemoyer, L.; Hajishirzi, H. Metaicl: Learning to learn in context. arXiv 2021, arXiv:2110.15943.

- Kim, H.J.; Cho, H.; Kim, J.; Kim, T.; Yoo, K.M.; Lee, S.g. Self-generated in-context learning: Leveraging auto-regressive language models as a demonstration generator. arXiv 2022, arXiv:2206.08082.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 24824–24837.

- Press, O.; Zhang, M.; Min, S.; Schmidt, L.; Smith, N.A.; Lewis, M. Measuring and narrowing the compositionality gap in language models. arXiv 2022, arXiv:2210.03350.

- An, S.; Lin, Z.; Fu, Q.; Chen, B.; Zheng, N.; Lou, J.G.; Zhang, D. How Do In-Context Examples Affect Compositional Generalization? arXiv 2023, arXiv:2305.04835.

- Hosseini, A.; Vani, A.; Bahdanau, D.; Sordoni, A.; Courville, A. On the compositional generalization gap of in-context learning. arXiv 2022, arXiv:2211.08473.

- Alayrac, J.B.; Donahue, J.; Luc, P.; Miech, A.; Barr, I.; Hasson, Y.; Lenc, K.; Mensch, A.; Millican, K.; Reynolds, M.; et al. Flamingo: A visual language model for few-shot learning. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 23716–23736.

- Bai, Y.; Geng, X.; Mangalam, K.; Bar, A.; Yuille, A.L.; Darrell, T.; Malik, J.; Efros, A.A. Sequential modeling enables scalable learning for large vision models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 22861–22872.

- Wang, X.; Zhang, X.; Cao, Y.; Wang, W.; Shen, C.; Huang, T. Seggpt: Towards segmenting everything in context. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 1130–1140.

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Comput. Surv. 2023, 55, 1–35.

- Pfeiffer, J.; Kamath, A.; Rücklé, A.; Cho, K.; Gurevych, I. AdapterFusion: Non-destructive task composition for transfer learning. arXiv 2020, arXiv:2005.00247.

- Houlsby, N.; Giurgiu, A.; Jastrzebski, S.; Morrone, B.; De Laroussilhe, Q.; Gesmundo, A.; Attariyan, M.; Gelly, S. Parameter-efficient transfer learning for NLP. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 2790–2799.

- Zhang, R.; Han, J.; Liu, C.; Gao, P.; Zhou, A.; Hu, X.; Yan, S.; Lu, P.; Li, H.; Qiao, Y. Llama-adapter: Efficient fine-tuning of language models with zero-init attention. arXiv 2023, arXiv:2303.16199.

- Hu, S.; Ding, N.; Wang, H.; Liu, Z.; Wang, J.; Li, J.; Wu, W.; Sun, M. Knowledgeable prompt-tuning: Incorporating knowledge into prompt verbalizer for text classification. arXiv 2021, arXiv:2108.02035.

- Tsai, Y.Y.; Chen, P.Y.; Ho, T.Y. Transfer learning without knowing: Reprogramming black-box machine learning models with scarce data and limited resources. In Proceedings of the International Conference on Machine Learning, Virtual, 13–18 July 2020; pp. 9614–9624.

- Chen, S.; Ge, C.; Tong, Z.; Wang, J.; Song, Y.; Wang, J.; Luo, P. Adaptformer: Adapting vision transformers for scalable visual recognition. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; Volume 35, pp. 16664–16678.

- Zhou, K.; Yang, J.; Loy, C.C.; Liu, Z. Conditional prompt learning for vision-language models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16816–16825.

- Gao, P.; Geng, S.; Zhang, R.; Ma, T.; Fang, R.; Zhang, Y.; Li, H.; Qiao, Y. Clip-adapter: Better vision-language models with feature adapters. Int. J. Comput. Vis. 2024, 132, 581–595.

- Tsao, H.A.; Hsiung, L.; Chen, P.Y.; Liu, S.; Ho, T.Y. Autovp: An automated visual prompting framework and benchmark. arXiv 2023, arXiv:2310.08381.

- Han, X.; Zhao, W.; Ding, N.; Liu, Z.; Sun, M. Ptr: Prompt tuning with rules for text classification. AI Open 2022, 3, 182–192.

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 16000–16009.

- Esser, P.; Rombach, R.; Ommer, B. Taming transformers for high-resolution image synthesis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 12873–12883.

- Shaban, A.; Bansal, S.; Liu, Z.; Essa, I.; Boots, B. One-shot learning for semantic segmentation. arXiv 2017, arXiv:1709.03410.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Jha, D.; Smedsrud, P.H.; Riegler, M.A.; Halvorsen, P.; De Lange, T.; Johansen, D.; Johansen, H.D. Kvasir-seg: A segmented polyp dataset. In Proceedings of the International Conference on Multimedia Modeling, Daejeon, Republic of Korea, 5–8 January 2020; pp. 451–462.

- Yue, G.; Xiao, H.; Xie, H.; Zhou, T.; Zhou, W.; Yan, W.; Zhao, B.; Wang, T.; Jiang, Q. Dual-constraint coarse-to-fine network for camouflaged object detection. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 3286–3298.

- Gutman, D.; Codella, N.C.; Celebi, E.; Helba, B.; Marchetti, M.; Mishra, N.; Halpern, A. Skin lesion analysis toward melanoma detection: A challenge at the international symposium on biomedical imaging (ISBI) 2016, hosted by the international skin imaging collaboration (ISIC). arXiv 2016, arXiv:1605.01397.

- Zhong, J.; Tian, W.; Xie, Y.; Liu, Z.; Ou, J.; Tian, T.; Zhang, L. PMFSNet: Polarized multi-scale feature self-attention network for lightweight medical image segmentation. Comput. Methods Programs Biomed. 2025, 108611.

- Suo, W.; Lai, L.; Sun, M.; Zhang, H.; Wang, P.; Zhang, Y. Rethinking and Improving Visual Prompt Selection for In-Context Learning Segmentation. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 18–35.

- Xu, C.; Liu, C.; Wang, Y.; Yao, Y.; Fu, Y. Towards Global Optimal Visual In-Context Learning Prompt Selection. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024; Volume 37, pp. 74945–74965.

- Rakelly, K.; Shelhamer, E.; Darrell, T.; Efros, A.; Levine, S. Conditional networks for few-shot semantic segmentation. In Proceedings of the International Conference on Learning Representations Workshop, Vancouver, BC, Canada, 30 April–3 May 2018.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Zhou, K.; Liu, Z.; Qiao, Y.; Xiang, T.; Loy, C.C. Domain generalization: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 4396–4415.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).