1. Introduction

Medical visualization is crucial for assisting physicians in analyzing diseases [1,2], particularly for different lesions. Meningiomas are the most common primary intracranial tumors in adults. Most meningiomas can be surgically resected to reduce the risk of recurrence [3]. Visualization of meningiomas helps radiologists assess the location and volume of the tumor within the brain and facilitates neurosurgeons’ surgery planning.

However, traditional medical 3D visualization methods struggle to distinguish lesion areas from normal tissue because the pixel value ranges of tumor and non-tumor regions overlap [1,4]. Conventional methods usually fail to identify lesion areas accurately and to highlight them automatically. For a more effective analysis of the 3D structure of tumors, tumor segmentation is frequently required. In recent years, with the development of deep learning, automatic segmentation techniques have improved significantly [5]. However, these techniques rely on meticulously annotated, high-quality data, which is expensive and time-consuming to acquire. A growing number of deep learning methods have therefore adopted semi-supervised learning (SSL) strategies to reduce the dependence on annotations [6,7,8,9]. Accordingly, we embed an SSL-based segmentation method into our visualization system to reduce the cost of manual annotation.

The visualization of segmentation results is also crucial. Traditional approaches extract meshes from segmented masks, yet mesh renderings make it difficult to analyze tumor positions relative to the rest of the brain. Direct volume rendering (DVR) can reveal the internal structure of volume data and is a pivotal technique in medical visualization [1,10,11,12]. It helps display richer hierarchical details in the rendered results. Existing DVR technologies [10,13] primarily focus on various color effects, with little attention given to how to highlight regions of interest for the user. Most of these technologies assign material properties based solely on non-semantic features of the volumetric data, without considering semantic information. For volumetric data that includes pre-extracted structures of interest, there is a lack of research on how to better display the shape of these structures and their positions relative to surrounding tissue. For the target users (medical professionals) in particular, an important research question is how to achieve good visualization results with minimal operation and without requiring a background in visualization theory.

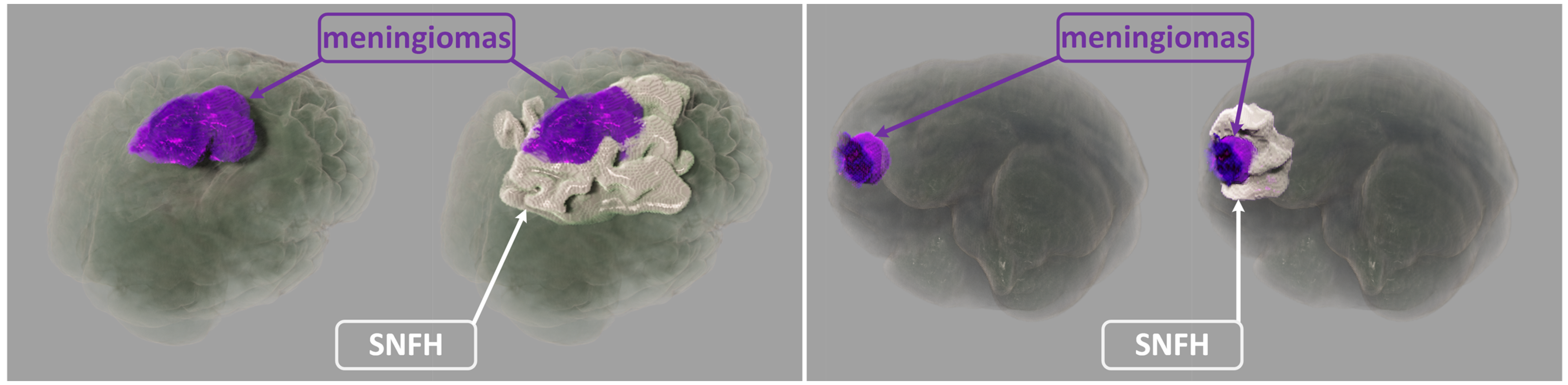

In this paper, we introduce SegR3D, our realistic rendering system. It aims to enhance tumor visualization to assist physicians in surgical planning. Two examples of the visualization results produced by SegR3D are provided in Figure 1. Our main contributions are as follows: (1) We present an interactive visualization system that integrates a segmentation pipeline to obtain lesion regions of meningiomas. Our system offers a visualization method that fuses the original medical images and the segmentation results. (2) We propose an SSL-based segmentation model, named the uncertainty correction pyramid model based on probability-aware cropping (UCPPA), to acquire the lesion area of meningiomas. This model offers a simple training process, as it eliminates the need for multiple forward passes [14], thereby enhancing SegR3D’s inference efficiency [9]. The probability-aware weighted random cropping uses a finite set of labels to construct a cropping probability mask. The mask is used to extract more sub-volumes from the lesion regions in both labeled and unlabeled images, making better use of the data. (3) We propose a novel importance transfer function that enhances the rendering outcome by emphasizing the areas we consider more significant for identifying tumors. We integrate advanced illumination techniques to enhance the stereoscopic quality of the rendering outcome [10,11,15,16,17,18]. A spatial partitioning acceleration technique is used to enable real-time interaction [19,20].

In our SegR3D system, users can simply drag and drop data onto the GUI, and the segmentation algorithm executes automatically, providing visual results within 5 s. Users can choose between real-time denoising [22] and progressive convergence to obtain noise-free visualization results. The interactive frame rate can exceed 80 frames per second on an Nvidia 2080Ti. Users only need to adjust the importance values for different regions, without requiring any further knowledge of DVR or transfer functions, to obtain visualizations that highlight specific areas. The interaction is intuitive and user-friendly.

Based on the evaluation conducted by multiple clinicians, our system shows outstanding performance in tumor analysis and surgical planning compared to conventional methods. It can be used as a useful tool for medical visualization.

2. Materials and Methods

We used a publicly available dataset from the Brain Tumor Segmentation 2023 Meningioma Challenge (BraTS2023-MEN) [21]. This dataset consists of 1650 cases from six medical centers, with an annotated training set of 1000 cases, each providing multiparametric MRI (mpMRI) (T1-weighted, T2-weighted, T2-FLAIR, T1Gd) and ground truth annotations by radiologists with 10+ years of experience. The annotations consist of non-enhancing tumor core (NETC), enhancing tumor (ET) and surrounding non-enhancing FLAIR hyperintensity (SNFH).

In our experiments, NETC and ET were categorized as meningiomas, representing the primary surgical targets for gross total resection. We divided the 1000 T2-FLAIR series into three subsets: 666 for training, 134 for validation, and 200 for testing. Patient demographics are given in Table 1.

2.1. The Realistic 3D Medical Visualization System

In this section, we describe the realistic 3D medical visualization system, SegR3D. It combines a semi-supervised segmentation model with interactive realistic rendering for the visual analysis of lesion regions, as illustrated in Figure 2.

2.1.1. Semi-Supervised Segmentation Model

Uncertainty Correction Pyramid Model. The SegR3D system adopted a 3D semi-supervised segmentation method for outlining lesion areas of meningiomas in MRI. The training set was divided into a labeled data set $D_l$ and an unlabeled data set $D_u$, where $x$ was the input volume and $y$ was the ground-truth annotation (2 foreground categories). We followed the design of uncertainty rectified pyramid consistency (URPC) [9]. Auxiliary segmentation heads were added to the V-Net [23] decoder at different resolution levels. For the input image $x$, the network generated a set of segmentation results at different scales. The results were resized to match the dimensions of $x$ by upsampling, yielding the sequence $p_1, \dots, p_S$. For labeled inputs with annotation $y$, the supervised optimization objective $\mathcal{L}_{sup}$ combined two loss functions, the robust cross-entropy loss $\mathcal{L}_{ce}$ and the soft Dice loss $\mathcal{L}_{dice}$, each computed between the predictions and $y$.
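The combination of a cross-entropy term and a soft Dice term over several prediction scales can be illustrated with a small sketch. It is not the authors' implementation: it assumes a single foreground class, flattened probability lists, and an equal 0.5 weighting of the two terms averaged over scales, all of which are illustrative choices. The function names are hypothetical.

```python
import math

def soft_dice_loss(probs, target, eps=1e-5):
    """Soft Dice loss for one foreground class on flattened voxels."""
    intersection = sum(p * y for p, y in zip(probs, target))
    denominator = sum(probs) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)

def cross_entropy_loss(probs, target, clip=1e-7):
    """Mean binary cross-entropy; probabilities are clipped for stability."""
    total = 0.0
    for p, y in zip(probs, target):
        p = min(max(p, clip), 1.0 - clip)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)

def supervised_loss(multi_scale_probs, target):
    """Average of 0.5 * (CE + Dice) over all upsampled scale predictions.

    The equal 0.5 weighting is an assumption made for this sketch.
    """
    per_scale = [
        0.5 * (cross_entropy_loss(p, target) + soft_dice_loss(p, target))
        for p in multi_scale_probs
    ]
    return sum(per_scale) / len(per_scale)
```

In a real training loop the same idea would be applied to multi-class tensors; the list-based version only makes the arithmetic explicit.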

For unlabeled data, we calculated the loss $\mathcal{L}_{unsup}$ through a scale-level uncertainty-aware approach [9]. The total optimization objective includes the supervised loss $\mathcal{L}_{sup}$ and the unsupervised loss, and is formulated as:

$$\mathcal{L}_{total} = \mathcal{L}_{sup} + \lambda(t)\,\mathcal{L}_{unsup}$$

where $\lambda(t)$ is a widely used time-dependent Gaussian warm-up function [9]. It controls the trade-off between the supervised and unsupervised losses and is defined as:

$$\lambda(t) = \lambda_{max}\, e^{-5\left(1 - t/t_{max}\right)^{2}}$$

where $\lambda_{max}$ is the final regularization weight, $t$ denotes the current training step, and $t_{max}$ is the maximal training step.
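To make the schedule concrete, the following sketch evaluates a Gaussian warm-up weight of the commonly used form, final weight times exp(-5(1 - t/t_max)^2), and combines the two losses. The default final weight of 0.1 and the function names are assumptions for illustration, not values stated in this paper.

```python
import math

def gaussian_warmup(t, t_max, lambda_max):
    """Gaussian ramp-up weight: lambda_max * exp(-5 * (1 - t / t_max) ** 2)."""
    if t_max <= 0:
        return lambda_max
    # Clamp progress so the weight stays at lambda_max once t exceeds t_max.
    progress = min(max(t / t_max, 0.0), 1.0)
    return lambda_max * math.exp(-5.0 * (1.0 - progress) ** 2)

def total_loss(loss_sup, loss_unsup, t, t_max, lambda_max=0.1):
    """Supervised loss plus the warm-up-weighted unsupervised loss.

    lambda_max=0.1 is a hypothetical default chosen for this sketch.
    """
    return loss_sup + gaussian_warmup(t, t_max, lambda_max) * loss_unsup
```

Early in training the unsupervised term is almost switched off (the weight starts at about 0.7% of its final value), so the noisy consistency signal only gains influence once the supervised branch has stabilised.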

Probability-Aware Weighted Random Cropping. Most meningiomas are small in comparison to the brain and appear in a limited number of sequences in MRI. Inspired by the work of Lin et al. [24], we developed the probability-aware weighted random cropping strategy to make the model focus more on the lesion region. For each labeled image $x_i$, we established a list $L_i$ of length $D$, where each element’s index represents a starting point on the depth axis for cropping. The element at index $j$ in list $L_i$ is assigned a value of 1 only if the cropping window starting at $j$ contains more than $k$ voxels labeled as foreground. These lists are then aggregated across all labeled images to form a list $L$. The element values in $L$ serve as selection weights, and the index used as the cropping start is drawn from $L$ through a weighted random selection process. Empirically, we set the threshold $k$ to 50 in our experiments.
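The cropping strategy above can be sketched in a few lines. This is a simplified illustration rather than the authors' code: each image is represented only by its per-slice foreground voxel count, aggregation is assumed to be an element-wise sum, and the uniform fallback for an all-zero weight list is an added assumption. All function names are hypothetical.

```python
import random

def window_flags(fg_per_slice, window_depth, k=50):
    """Flag each depth start j whose window holds more than k foreground voxels.

    fg_per_slice[d] is the number of foreground voxels in depth slice d.
    """
    depth = len(fg_per_slice)
    flags = [0] * depth
    for j in range(depth - window_depth + 1):
        if sum(fg_per_slice[j:j + window_depth]) > k:
            flags[j] = 1
    return flags

def aggregate_flags(flag_lists):
    """Element-wise sum of the per-image flag lists (assumed aggregation)."""
    return [sum(column) for column in zip(*flag_lists)]

def sample_crop_start(weights, window_depth, rng=random):
    """Draw a depth start index with probability proportional to its weight."""
    valid_starts = len(weights) - window_depth + 1
    if sum(weights) == 0:
        # Assumed fallback: uniform sampling over valid starts.
        return rng.randrange(valid_starts)
    return rng.choices(range(len(weights)), weights=weights, k=1)[0]
```

Because the aggregated weights are built from labeled images only, the same list can be reused to crop unlabeled volumes, which is how the text describes extracting more lesion sub-volumes from both kinds of data.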

2.1.2. Visualization

Our research work focused on three key aspects for the visualization of meningiomas in MRI: (1) We integrated and displayed lesion segmentation results with the original medical images. This allows physicians to easily identify lesion areas and perform an objective volumetric assessment of meningiomas. (2) We incorporated advanced illumination and shadowing to enhance the 3D sense. (3) We emphasized efficient real-time computation to meet interactive requirements.

Visualization of Non-Scalar Data. Each voxel of the input data for the renderer comprised a 2D vector $(v, g)$, representing the original medical image value $v$ and the mask value $g$ generated by the segmentation pipeline. A 2D transfer function $T$ was defined, which mapped voxel values to material attributes [1]. The material attributes commonly used in realistic DVR include opacity, phase function coefficients, albedo, and smoothness [25].

We designated the foreground in the segmentation results as “important regions”. In visualization, it is imperative to prevent unimportant regions from obscuring important regions in the rendered results. To address this issue, we proposed a novel importance transfer function as the second dimension of $T$. This transfer function translates the mask value $g$ into the importance value $I$, which is defined as:

The material attribute values, namely opacity, smoothness and albedo, were readjusted based on the importance value to highlight regions of interest: each new attribute value was obtained by multiplying the original value by $I$, so an importance value below 1 scaled the attribute down proportionally. $I$ is a nonlinear transformation of $g$, providing finer control over regions with higher importance. In this equation, a tunable parameter controls the level of precision, with higher values enabling more detailed control over regions of greater importance. The effects of this function are discussed in Section 3.2.
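The attribute rescaling described above can be illustrated as follows. The exact form of the importance transfer function is not reproduced in this text, so the power-law mapping and its exponent `gamma` below are purely hypothetical stand-ins for "a nonlinear transformation of g"; only the multiplication of opacity, smoothness and albedo by the importance value follows the description. Albedo is treated as a scalar for brevity.

```python
def importance(g, gamma=2.0):
    """Hypothetical nonlinear mapping from a mask value g in [0, 1] to importance.

    The power law and the parameter name gamma are assumptions for this sketch.
    """
    g = min(max(g, 0.0), 1.0)
    return g ** gamma

def apply_importance(material, importance_value):
    """Scale opacity, smoothness and albedo by the importance value.

    Other attributes (e.g. phase function coefficients) are left unchanged.
    """
    scaled = dict(material)
    for key in ("opacity", "smoothness", "albedo"):
        scaled[key] = material[key] * importance_value
    return scaled
```

With such a scheme, a region given low importance becomes both more transparent and less glossy, so it neither occludes the lesion nor adds distracting highlights.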

Realistic DVR. A hybrid volumetric scattering and surface scattering model [25,26] was employed as the shading model. This shading model yields rendering outcomes with realistic material appearances. The radiative transfer equation (RTE) describes light propagation within a volumetric space, and solving it is the basis of realistic DVR [17,25,27]. The Monte Carlo-based null-scattering algorithm [27] is an advanced method for solving the RTE and was used in SegR3D.
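As a rough illustration of the null-collision idea behind such solvers, the sketch below implements classic delta (Woodcock) tracking along a single ray. It is a simpler relative of the null-scattering path integral formulation cited above, not the algorithm used in SegR3D. The callable `density_at`, the bound `majorant`, and the function name are assumptions of this example.

```python
import math
import random

def sample_free_path(density_at, majorant, t_max, rng):
    """Delta tracking: distance to the next real collision, or None on exit.

    density_at(t) returns the extinction at distance t along the ray and
    must never exceed majorant (> 0). Tentative collisions are sampled
    from the homogeneous majorant and accepted with probability
    density_at(t) / majorant; rejected ones are null collisions.
    """
    t = 0.0
    while True:
        # Exponential step with rate equal to the majorant.
        t -= math.log(1.0 - rng.random()) / majorant
        if t >= t_max:
            return None  # The ray left the volume without a real collision.
        if rng.random() * majorant < density_at(t):
            return t
```

The looser the majorant is compared with the local density, the more tentative collisions are rejected, which is the inefficiency that spatial acceleration structures aim to reduce.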

Acceleration Structure. The maximum density value in the volumetric space is much higher than the density in the majority of regions. When this global maximum is used as the sampling bound, the average sampling step length is significantly reduced [19,20,27], which greatly impairs sampling efficiency. In SegR3D, the volumetric space was therefore divided into multiple macrocells, and the 3D-DDA traversal algorithm was used to enhance sampling efficiency [19,20].
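A minimal sketch of the first half of this idea, building a grid of per-macrocell density maxima, is shown below. It assumes a nested-list volume indexed as `volume[z][y][x]` with non-negative densities and cubic macrocells of edge length `cell`; the 3D-DDA traversal that would step a ray through these cells is omitted. The function name is hypothetical.

```python
def build_macrocell_maxima(volume, cell):
    """Return maxima[cz][cy][cx], the largest density inside each macrocell.

    volume is a nested list indexed volume[z][y][x] with non-negative
    densities; cell is the macrocell edge length in voxels.
    """
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    # Ceiling division so partially filled border cells are included.
    cz, cy, cx = (-(-n // cell) for n in (nz, ny, nx))
    maxima = [[[0.0] * cx for _ in range(cy)] for _ in range(cz)]
    for z in range(nz):
        for y in range(ny):
            row = maxima[z // cell][y // cell]
            for x in range(nx):
                if volume[z][y][x] > row[x // cell]:
                    row[x // cell] = volume[z][y][x]
    return maxima
```

A renderer can then use each cell's local maximum as the sampling bound while the ray is inside that cell, so sparse regions are crossed in long steps instead of being limited by the densest voxel in the whole volume.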

2.2. User Evaluation

To compare our system with alternatives, we implemented three other widely used rendering approaches. Mesh [9] refers to renderings of meshes extracted from masks, a common way of displaying 3D segmentation outcomes in the academic literature. Realistic Rendering (RR) [10] refers to realistic rendering of the original medical data. Ray Casting (RC) [1] refers to ray-casting renderings that use the original data and the segmentation results. We conducted a user survey with a cohort of 15 physicians, comprising 8 radiologists and 7 surgeons. The statistical results of the survey ratings were presented using a Gantt chart.

2.3. Implementation Details

Before training the segmentation model, the input scans were normalized to zero mean and unit variance. The size of each image was 240 × 240 × 150. During training, the patch size was 128 × 128 × 32. The total number of training epochs was set to 500. The initial learning rate was set to 0.001 and was adaptively adjusted using the ReduceLROnPlateau method. Stochastic gradient descent (SGD) was used as the optimizer. The batch size was set to 4, comprising 2 labeled images and 2 unlabeled images. Data augmentation methods included probability-aware weighted random cropping, flipping and rotation. The segmentation network was implemented in PyTorch 1.8 and trained on a GRID V100D-8Q. The segmentation layers of the network are listed in Table 2.

Our rendering system, including the realistic DVR, was implemented in C++ and CUDA with an embedded Python environment, and it ran on an RTX 4070 Ti GPU.

3. Results

3.1. Segmentation Metrics and Results

Dice and 95% Hausdorff Distance (HD95) were employed as segmentation evaluation metrics. The segmentation performance of various methods on the testing set is presented in Table 3, with the first row detailing the outcomes for V-Net [23] trained via supervised learning. For comparison, we also implemented several state-of-the-art SSL segmentation techniques, including calibrating label distribution (CLD) [24] and URPC (baseline) [9]. Reducing the proportion of labels in the training set diminished segmentation accuracy across all categories. In the experiments with 20% labeled data, UCPPA achieved Dice scores of 72.9% and 80.0% on meningiomas and SNFH, respectively, improving on both CLD and URPC. In the experiments with 40% labeled data, the segmentation performance of UCPPA on meningiomas and SNFH was nearly comparable to that of the fully supervised V-Net (meningiomas Dice: 79.5% vs. 80.0%; SNFH Dice: 82.3% vs. 83.0%). Furthermore, compared to URPC, UCPPA achieved superior segmentation performance while maintaining identical computational complexity (FLOPs).
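For readers unfamiliar with the two metrics, the sketch below computes them on small sets of foreground voxel coordinates. It is an illustration only: practical HD95 implementations usually work on surface voxels, and conventions differ on how the two directed distance sets are combined; here the larger of the two directed 95th percentiles is taken, which is one common choice and an assumption of this example. Both sets passed to `hd95` must be non-empty.

```python
import math

def dice_score(pred, truth):
    """Dice overlap between two sets of foreground voxel coordinates."""
    if not pred and not truth:
        return 1.0
    return 2.0 * len(pred & truth) / (len(pred) + len(truth))

def percentile(values, q):
    """Percentile with linear interpolation, q in [0, 100]."""
    ordered = sorted(values)
    position = (len(ordered) - 1) * q / 100.0
    low, high = math.floor(position), math.ceil(position)
    return ordered[low] + (ordered[high] - ordered[low]) * (position - low)

def hd95(pred, truth, spacing=(1.0, 1.0, 1.0)):
    """95% Hausdorff distance (in spacing units) between two point sets."""
    def to_physical(point):
        return tuple(c * s for c, s in zip(point, spacing))

    def directed(source, destination):
        dest = [to_physical(q) for q in destination]
        return [min(math.dist(to_physical(p), q) for q in dest) for p in source]

    return max(percentile(directed(pred, truth), 95),
               percentile(directed(truth, pred), 95))
```

Dice rewards volumetric overlap, while HD95 penalises boundary outliers, which is why the two are typically reported together.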

Segmentation examples from different networks (based on the 20% labeled experiments) were visualized using the SegR3D system, as illustrated in Figure 3. UCPPA produced precise segmentation outcomes for both meningioma and SNFH regions. This demonstrates the effectiveness of probability-aware weighted random cropping.

3.2. The Role of Importance Transfer Function

Lowering the albedo and smoothness values of unimportant regions helps mitigate the accumulation of highlights, which could otherwise interfere with observation. Lowering the opacity of unimportant regions prevents them from obstructing important regions. Various visual effects can be created by adjusting the importance transfer function, as illustrated in Figure 4.

3.3. User Evaluation of the SegR3D System

In the user evaluation, we presented four descriptions, and the physicians rated their level of agreement with each of them. Ratings ranged from 1 to 5, with 1 indicating “strongly disagree” and 5 indicating “strongly agree.” The first three descriptions were used to assess the visualization algorithms, as depicted in Figure 5. They ask (Q1) whether our approach is more conducive to perceiving the location and distribution of lesions, (Q2) whether it facilitates better perception of tumor shape, and (Q3) whether it holds advantages over other methods. We excluded methods that require complex manual operations to achieve the desired visualization. The fourth description asks (Q4) whether our system’s visualization outcomes are sufficiently precise for lesion analysis and surgical planning when compared to those obtained from the ground truth.

The statistical results of the user experiment ratings are presented in Figure 6 using a Gantt chart. The statistical results (mean/standard deviation) for the four questions are as follows: Q1 (4.47/0.52), Q2 (4.53/0.52), Q3 (4.40/0.51), and Q4 (4.40/0.51). It can be observed that all participating physicians consider our system the optimal visualization tool. They can selectively emphasize lesion areas by adjusting the importance transfer function, thereby improving their understanding of tumor location and morphology.
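As a worked illustration of how such mean/standard deviation pairs arise from 5-point ratings, the sketch below summarises a list of scores with the sample standard deviation. The individual ratings are not reported in this text, so the two example lists are hypothetical; they merely show one distribution of 15 ratings that happens to be consistent with the reported Q1 and Q3 summaries.

```python
import statistics

def summarize(ratings):
    """Return (mean, sample standard deviation), both rounded to 2 decimals."""
    return (round(statistics.mean(ratings), 2),
            round(statistics.stdev(ratings), 2))

# Hypothetical ratings from 15 participants, NOT the study's raw data.
hypothetical_q1 = [5] * 7 + [4] * 8
hypothetical_q3 = [5] * 6 + [4] * 9

print(summarize(hypothetical_q1))  # (4.47, 0.52)
print(summarize(hypothetical_q3))  # (4.4, 0.51)
```

The example suggests the reported deviations are compatible with the sample (n - 1) formula, although this cannot be confirmed without the underlying ratings.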

Supplementary Material Video S1 demonstrates the interactive effects of SegR3D.

4. Discussion

In this study, we developed a realistic 3D medical visualization system named SegR3D, which combines a segmentation pipeline and a realistic rendering pipeline. The segmentation pipeline automatically segments the tumor region and the SNFH region in meningiomas. The realistic rendering pipeline provides an interactive visualization of the region of interest and allows the user to adjust the target object’s appearance and the viewing direction. SegR3D helps radiologists assess the distribution and volume of meningiomas in the brain and helps neurosurgeons make surgical plans.

To reduce the reliance on expert annotations, the segmentation pipeline used a semi-supervised training approach. The proposed UCPPA network achieved results comparable to the fully supervised V-Net using only 20% of the annotated data (meningiomas: Dice of 72.9% vs. 80.0%, HD95 of 12.8 mm vs. 9.2 mm; SNFH: Dice of 80.0% vs. 83.0%, HD95 of 10.8 mm vs. 9.7 mm). This success can be attributed to the novel probability-aware weighted random cropping we introduced, which enabled UCPPA to focus more effectively on the lesion regions.

Our visualization system employs AI segmentation technology as a pre-classifier to distinguish between diseased and healthy tissue regions. The visualization engine integrates medical image segmentation with photorealistic rendering to effectively highlight regions of interest, such as lesions. The system not only displays the shape of the lesions but also provides a clear representation of their distribution within the surrounding brain tissue. Furthermore, the design of the importance transfer function simplifies user interaction, allowing users to adjust the importance of different tissues to emphasize specific areas. In the user evaluation, physicians agreed that our approach holds advantages over the other visualization methods (Q3: 4.40/0.51). In addition, through discussions with physicians, we learned that the majority of user experiment participants were willing to consider the visualization outcomes of SegR3D in clinical practice. However, they also expressed a desire for SegR3D to visualize the relationship between tumors, blood vessels, and nerves to mitigate surgical risks. This is a direction for our future research.

Although this work used a large publicly available dataset of meningiomas (BraTS2023-MEN), the segmentation model was trained and evaluated on a single dataset. This may limit the generalizability of SegR3D, and validation in a variety of clinical settings would be beneficial. Additionally, our system cannot yet visualize the relationship between tumors, blood vessels and nerves, whose relative positions are extremely important for surgical planning. Overall, future work will focus on improving the accuracy and versatility of the segmentation model, enhancing the visual quality of the rendering, and improving the quality of real-time interaction, thereby making SegR3D a more suitable tool for physicians.

5. Conclusions

In this paper, we proposed SegR3D, an interactive visualization system for meningiomas that integrates a semi-supervised segmentation pipeline and a realistic rendering pipeline. Considering that meningiomas are small relative to the brain, we introduced probability-aware weighted random cropping into the segmentation model, substantially enhancing segmentation performance beyond the baseline. To highlight the lesion location in the visualization results, we proposed an importance transfer function that adjusts the material parameters according to the importance of different regions. Furthermore, we introduced realistic rendering to enhance the spatial three-dimensionality of the rendered results. SegR3D has been evaluated by multiple clinicians and has been recognized as highly valuable for tumor analysis and surgical planning.

Author Contributions

Conceptualization, J.Z.; methodology, J.Z. and C.X.; software, J.Z. and C.X.; validation, J.Z. and C.X.; formal analysis, C.X.; investigation, X.X.; resources, J.Z.; data curation, Y.Z.; writing—original draft preparation, J.Z. and C.X.; writing—review and editing, X.X. and L.Z.; visualization, C.X.; supervision, Y.Z.; project administration, L.Z.; funding acquisition, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research and the APC were funded by the National Natural Science Foundation of China, grant number 82473472, and the Suzhou Basic Research Pilot Project, grant number SJC2021022.

Institutional Review Board Statement

Ethical review and approval were waived for this study because it does not involve any human or animal experiments. The data and research content used do not involve clinical application feedback. The data employed in this study are anonymized and de-identified, with no impact on any patient’s surgery or treatment.

Informed Consent Statement

Patient consent was waived because we used a de-identified public dataset.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Preim, B.; Botha, C.P. Visual Computing for Medicine: Theory, Algorithms, and Applications; Meg, D., Heather, S., Newnes, Eds.; Morgan Kaufmann Publishers: Burlington, MA, USA, 2013.

2. Linsen, L.; Hagen, H.; Hamann, B. Visualization in Medicine and Life Sciences; Gerald, F., Hans-Christian, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2008.

3. Ogasawara, C.; Philbrick, B.D.; Adamson, D.C. Meningioma: A review of epidemiology, pathology, diagnosis, treatment, and future directions. Biomedicines 2021, 9, 319.

4. Agus, M.; Aboulhassan, A.; Al Thelaya, K.; Pintore, G.; Gobbetti, E.; Calì, C.; Schneider, J. Volume Puzzle: Visual analysis of segmented volume data with multivariate attributes. In Proceedings of the 2022 IEEE Visualization and Visual Analytics (VIS), LNCS, Oklahoma City, OK, USA, 16–21 October 2022; pp. 130–134.

5. Jiao, R.; Zhang, Y.; Ding, L.; Xue, B.; Zhang, J.; Cai, R.; Jin, C. Learning with limited annotations: A survey on deep semi-supervised learning for medical image segmentation. Comput. Biol. Med. 2023, 169, 107840.

6. Zeng, L.L.; Gao, K.; Hu, D.; Feng, Z.; Hou, C.; Rong, P.; Wang, W. SS-TBN: A Semi-Supervised Tri-Branch Network for COVID-19 Screening and Lesion Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10427–10442.

7. Wang, X.; Yuan, Y.; Guo, D.; Huang, X.; Cui, Y.; Xia, M.; Wang, Z.; Bai, C.; Chen, S. SSA-Net: Spatial self-attention network for COVID-19 pneumonia infection segmentation with semi-supervised few-shot learning. Med. Image Anal. 2022, 79, 102459.

8. Huang, W.; Chen, C.; Xiong, Z.; Zhang, Y.; Chen, X.; Sun, X.; Wu, F. Semi-supervised neuron segmentation via reinforced consistency learning. IEEE Trans. Med. Imaging 2022, 41, 3016–3028.

9. Luo, X.; Wang, G.; Liao, W.; Chen, J.; Song, T.; Chen, Y.; Zhang, S.; Metaxas, D.N.; Zhang, S. Semi-supervised medical image segmentation via uncertainty rectified pyramid consistency. Med. Image Anal. 2022, 80, 102517.

10. Denisova, E.; Manetti, L.; Bocchi, L.; Iadanza, E. AR2T: Advanced Realistic Rendering Technique for Biomedical Volumes. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2023; Bertino, E., Gao, W., Steffen, B., Yung, M., Eds.; LNCS; Springer: Berlin/Heidelberg, Germany, 2023; Volume 14225, pp. 347–357.

11. Rowe, S.P.; Johnson, P.T.; Fishman, E.K. Initial experience with cinematic rendering for chest cardiovascular imaging. Br. J. Radiol. 2018, 91, 20170558.

12. Li, Q.; Nishikawa, R.M. Computer-Aided Detection and Diagnosis in Medical Imaging; Taylor & Francis: Abingdon, UK, 2015.

13. Igouchkine, O.; Zhang, Y.; Ma, K.L. Multi-Material Volume Rendering with a Physically-Based Surface Reflection Model. IEEE Trans. Vis. Comput. Graph. 2017, 24, 3147–3159.

14. Wang, Y.; Zhang, Y.; Tian, J.; Zhong, C.; Shi, Z.; Zhang, Y.; He, Z. Double-Uncertainty Weighted Method for Semi-Supervised Learning. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2020; Martel, A.L., Abolmaesumi, P., Stoyanov, D., Mateus, D., Zuluaga, M.A., Zhou, S.K., Racoceanu, D., Joskowicz, L., Eds.; LNCS; Springer: Berlin/Heidelberg, Germany, 2020; Volume 12261, pp. 542–551.

15. Lindemann, F.; Ropinski, T. About the Influence of Illumination Models on Image Comprehension in Direct Volume Rendering. IEEE Trans. Vis. Comput. Graph. 2011, 17, 1922–1931.

16. Jönsson, D.; Sundén, E.; Ynnerman, A.; Ropinski, T. A Survey of Volumetric Illumination Techniques for Interactive Volume Rendering. Comput. Graph. Forum 2014, 33, 27–51.

17. Dappa, E.; Higashigaito, K.; Fornaro, J.; Leschka, S.; Wildermuth, S.; Alkadhi, H. Cinematic rendering – an alternative to volume rendering for 3D computed tomography imaging. Insights Into Imaging 2016, 7, 849–856.

18. Kraft, V.; Schumann, C.; Salzmann, D.; Weyhe, D.; Zachmann, G.; Schenk, A. A Clinical User Study Investigating the Benefits of Adaptive Volumetric Illumination Sampling. IEEE Trans. Vis. Comput. Graph. 2024, 30, 7571–7578.

19. Szirmay-Kalos, L.; Tóth, B.; Magdics, M. Free path sampling in high resolution inhomogeneous participating media. Comput. Graph. Forum 2011, 30, 85–97.

20. Pharr, M.; Jakob, W.; Humphreys, G. Physically Based Rendering: From Theory to Implementation; MIT Press: Cambridge, MA, USA, 2023.

21. LaBella, D.; Adewole, M.; Alonso-Basanta, M.; Altes, T.; Anwar, S.M.; Baid, U.; Bergquist, T.; Bhalerao, R.; Chen, S.; Chung, V.; et al. The ASNR-MICCAI Brain Tumor Segmentation (BraTS) Challenge 2023: Intracranial Meningioma. arXiv 2023, arXiv:2305.07642.

22. Xu, C.; Cheng, H.; Chen, Z.; Wang, J.; Chen, Y.; Zhao, L. Real-time Realistic Volume Rendering of Consistently High Quality with Dynamic Illumination. IEEE Trans. Vis. Comput. Graph. 2024. Early Access.

23. Milletari, F.; Navab, N.; Ahmadi, S.A. V-net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; IEEE: Stanford, CA, USA, 2016; pp. 565–571.

24. Lin, Y.; Yao, H.; Li, Z.; Zheng, G.; Li, X. Calibrating Label Distribution for Class-Imbalanced Barely-Supervised Knee Segmentation. In Medical Image Computing and Computer Assisted Intervention – MICCAI 2022; Bertino, E., Gao, W., Steffen, B., Yung, M., Eds.; LNCS; Springer: Cham, Switzerland, 2022; Volume 13438, pp. 109–118.

25. Kroes, T.; Post, F.H.; Botha, C.P. Exposure render: An interactive photo-realistic volume rendering framework. PLoS ONE 2012, 7, e38586.

26. von Radziewsky, P.; Kroes, T.; Eisemann, M.; Eisemann, E. Efficient stochastic rendering of static and animated volumes using visibility sweeps. IEEE Trans. Vis. Comput. Graph. 2016, 23, 2069–2081.

27. Miller, B.; Georgiev, I.; Jarosz, W. A null-scattering path integral formulation of light transport. ACM Trans. Graph. 2019, 38, 1–13.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).