Abstract

Chest imaging plays a pivotal role in screening and monitoring patients, and various predictive artificial intelligence (AI) models have been developed to support this. However, little is known about the effect of decreasing the radiation dose, and thus image quality, on AI performance. This study aims to design a low-dose simulation and to evaluate its effect on the performance of convolutional neural networks (CNNs) in plain chest radiography. Seven pathology labels and the corresponding images from the Medical Information Mart for Intensive Care (MIMIC) datasets were used to train AI models at two spatial resolutions. These 14 models were tested on the original images and on 50% and 75% low-dose simulations. The area under the receiver operating characteristic curve (AUROC) for the original images was compared with that for both simulations using the DeLong test. The average absolute change in AUROC related to simulated dose reduction was <0.005 for both resolutions, and no change exceeded 0.014. Of the 28 comparisons, 6 were significantly different. Analyses with the data split by gender and by patient positioning showed a similar trend. Although significant in 6 of 28 cases, the effect of simulated dose reduction on CNN performance has minimal clinical impact. The effect of patient positioning exceeds that of dose reduction.

1. Introduction

Plain chest radiography is one of the most common radiological examinations. It plays a pivotal role in patient management and screening due to its accessibility and broad range of indications [1,2]. The diagnostic accuracy of screening by radiologists is directly affected by image quality [3,4,5], which, in turn, is limited by radiation exposure. Decreasing the radiation dose increases image noise, which reduces the ability to distinguish structures in the image and, thus, overall image quality [3,4,5,6,7,8]. Although image noise can be reduced by increasing the dose, this is constrained by the linear relationship between radiation exposure and carcinogenic risk [9].

Noise reduction and, thus, an increase in image quality, can also be achieved by post-processing with noise reduction techniques [10]. These techniques enable increased image quality while implementing an ‘as low as reasonably achievable’ radiation exposure, referred to as the ALARA principle [11,12]. It is important to note that although these techniques reduce noise, they can also reduce the perceptibility of detail and thereby hinder diagnostic accuracy [13]. Hence, the implementation of post-processing should be carefully considered.

More recently, artificial intelligence (AI) has led to the development of more complex image-processing techniques aimed at noise reduction [5,14,15,16,17]. In computed tomography (CT), for example, AI-based noise reduction has enabled significantly lower doses while maintaining diagnostic accuracy in pediatric patients, a group that is especially sensitive to radiation [16]. Likewise, AI-based noise reduction has been shown to increase lung nodule detection in CT imaging [15]. It is important to note that these AI techniques aim to improve image quality for the diagnostic accuracy of human observers.

In addition to image optimization, AI models have increasingly been implemented as diagnostic tools in radiology [18,19]. Chest imaging has been a recurring application for the development of these AI models [20,21,22,23,24,25]. Initially, the clinical representativeness of popular datasets used for AI development was limited (e.g., the low resolution of the popular ChestX-ray14 dataset introduced in 2017) [26,27]. More recently, high-quality data have been released, such as the Medical Information Mart for Intensive Care Chest X-ray (MIMIC-CXR) dataset, which includes full bit-depth images at clinically representative resolution [28,29,30,31,32]. AI models trained on either dataset tend to perform at a level comparable to radiologists in well-defined tasks, with an area under the receiver operating characteristic curve (AUROC) > 0.90 [20,21,22,23,24,25]. Because models trained on datasets of varying quality achieve comparable performance, image resolution in plain chest radiography may have a limited impact on the training of AI models.

This observation was substantiated in a study by Sabottke et al. (2021), which showed that AI models maintain performance between resolutions of 600 × 600 pixels and 128 × 128 pixels, both of which are lower than those used in clinical practice (>1024 × 1024 pixels) [33]. This sustained performance is likely attributable to the convolutions and progressive down-scaling of resolution implemented by convolutional neural networks (CNNs), a commonly used model architecture. As noise in chest imaging is expressed mainly at high resolutions, we hypothesize that the impact of dose-reduction-induced noise on CNN performance differs from its impact on human observers. To the best of our knowledge, the use of dose reduction to assess the impact of noise on CNN performance in plain chest imaging has not yet been investigated.
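The hypothesized mechanism can be illustrated with a minimal sketch. Assuming independent (white) pixel noise and down-scaling by averaging non-overlapping k × k blocks, the noise standard deviation falls by roughly a factor of k. Both are assumptions for illustration: real radiographic noise is partly correlated, and the resampling used in a given CNN pipeline may differ (interpolation without anti-aliasing suppresses less noise). `block_average` is a hypothetical helper.

```python
import numpy as np


def block_average(image, factor):
    """Down-scale a 2-D array by averaging non-overlapping factor x factor blocks."""
    h, w = image.shape
    h, w = h - h % factor, w - w % factor  # crop so both axes divide evenly
    blocks = image[:h, :w].reshape(h // factor, factor, w // factor, factor)
    return blocks.mean(axis=(1, 3))


rng = np.random.default_rng(0)
noise = rng.normal(0.0, 1.0, size=(1024, 1024))  # white noise with SD = 1
for factor in (2, 4, 8):
    # For independent pixel noise the SD after averaging is roughly 1 / factor.
    print(factor, round(float(block_average(noise, factor).std()), 3))
```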

Assessing the impact of dose-reduction noise on CNN performance in plain chest radiography is preferably performed using images of the same cases acquired at different dose levels. However, this requires multiple exposures per case, which is ethically not feasible due to the aforementioned carcinogenic nature of radiation exposure. One way to circumvent this challenge is to simulate lower doses from an individual image, an approach previously applied in computed tomography [34,35].

The aim of this experimental study is to design a novel low-dose simulation and to evaluate the effect of this low-dose simulation on the performance of CNNs in plain chest radiography.

2. Materials and Methods

Two datasets were used in this study. The first dataset consisted of plain chest phantom images acquired for this study to develop a low-dose simulation (see Section 2.1). The second dataset, MIMIC, provided by the Massachusetts Institute of Technology (MIT), was used for CNN training, validation, and testing (see Section 2.2) [28,29,36,37].

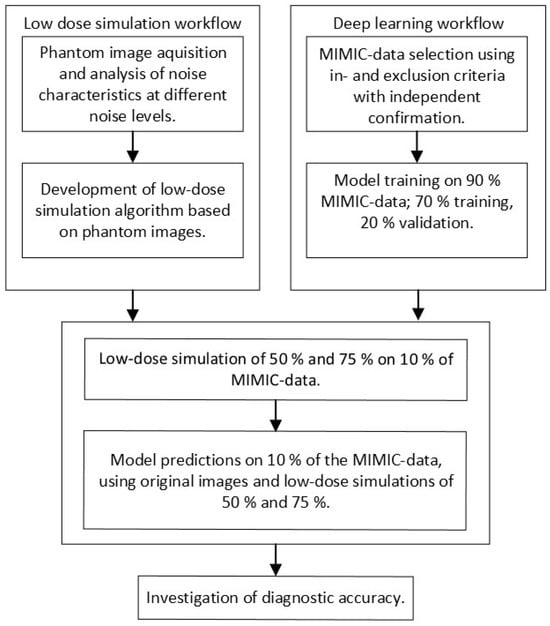

This study was performed in four steps, which are schematically represented in Figure 1. First, a low-dose imaging simulation procedure was developed based on X-ray images obtained for this study using an anthropomorphic phantom. This simulation made it possible to generate versions of a single examination at different dose levels. Second, the MIMIC dataset, containing original chest radiographs, was used to train CNNs. Third, the developed low-dose simulation was applied to the 10% of MIMIC radiographs reserved for independent testing. Fourth, the effect of simulated dose reduction on CNN performance was investigated. During the investigation, subpopulations were considered by stratifying by gender (male and female) and positioning (posterior–anterior (PA) and anterior–posterior (AP)).

Figure 1.

Visualization of the low-dose simulation, deep learning, and testing workflows used in this study.

2.1. Simulated Low-Dose Imaging

To analyze the relationship between noise and exposure parameters, a set of chest images was acquired using an anthropomorphic Alderson phantom containing tissue-equivalent materials representing different organs. A common range of clinically relevant exposure parameters for chest radiography was used, with tube voltages of 80, 100, and 120 kVp and manually set tube current–time products of 0.6, 0.8, 1.2, 1.6, and 2 mAs, resulting in 15 separate images. Note that the tube voltages within MIMIC have a mean (SD) of 101 (±12) kVp and fall within this range. All images were acquired at a source-to-image distance (SID) of 150 cm. This approximates the distances used in the MIMIC dataset, which have a mean (SD) of 183 (±0.6) cm and 165 (±3.2) cm for PA and AP images, respectively. The resulting phantom images were analyzed using 10 × 10-pixel sliding windows to measure the mean and standard deviation of the grey values, the latter serving as an approximation of noise. For each combination of tube voltage and tube current–time product, linear regression was used to approximate the relationship between the mean grey value (horizontal axis) and the standard deviation (vertical axis). To overcome systematic error in direct exposure areas, where grey values are 0 and the standard deviation is negligible, the regression line was forced through the origin (both intercepts set to 0). The resulting regression slope (standard deviation per unit of mean grey value, i.e., the inverse of the signal-to-noise ratio, SNR) describes how noise varies with attenuation across the 10 × 10-pixel windows and, thus, across anatomical regions. The slopes of the lower-dose images were used as ‘goal slopes’ to create dose reductions from the original-dose images per tube voltage.
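A minimal sketch of the window analysis described above follows, assuming NumPy ≥ 1.20 (for `sliding_window_view`) and a window step equal to the window size; the step used in the study is not stated. The helper names are hypothetical. The least-squares slope of a line forced through the origin is Σxy / Σx², and the synthetic image, whose noise SD is 5% of the local mean, should give a slope close to 0.05.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def window_stats(image, size=10, step=10):
    """Mean and standard deviation of grey values in size x size windows.

    `step` sets how far the window slides; step=size gives non-overlapping windows.
    """
    windows = sliding_window_view(image.astype(np.float64), (size, size))[::step, ::step]
    return windows.mean(axis=(-2, -1)).ravel(), windows.std(axis=(-2, -1)).ravel()


def slope_through_origin(x, y):
    """Least-squares slope of y = b * x with the intercept fixed at 0."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    return float(np.dot(x, y) / np.dot(x, x))


# Synthetic check: 20 horizontal bands whose noise SD is 5% of the band mean.
rng = np.random.default_rng(0)
row_means = np.repeat(np.linspace(100, 1000, 20), 10)[:, None]  # 200 rows
phantom_like = row_means + rng.normal(size=(200, 200)) * 0.05 * row_means
means, stds = window_stats(phantom_like)
print(round(slope_through_origin(means, stds), 3))  # close to 0.05
```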

The ‘goal slope’ derived from the phantom images was used in a custom Python (version 3.7) script to introduce generated noise into images (hereafter referred to as InGen). InGen applies three steps to add noise to existing X-ray images, such as those available in MIMIC.

First, the grey values of the original-dose full-resolution images were divided into twenty equal-sized threshold windows (e.g., 0/20th–1/20th, 1/20th–2/20th, etc.). The number of thresholds was determined during a preliminary phase, based on visual inspection of simulations with various numbers of thresholds. For each window, the mean grey value was multiplied by the ‘goal slope’ related to the intended low dose. This product was then multiplied by an array of random values between 0.0 and 1.0 of the same size as the image. Combining these multiplications resulted in a noise mask.
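The first InGen step can be sketched as follows. This is a hypothetical reconstruction from the description above, not the published InGen script. In particular, the assumption that the twenty windows span the image's own minimum-to-maximum grey value range (rather than the full bit depth) is ours, and `make_noise_mask` is an illustrative name.

```python
import numpy as np


def make_noise_mask(image, goal_slope, n_windows=20, rng=None):
    """Hypothetical sketch of InGen step 1: a grey-value-dependent noise mask."""
    rng = np.random.default_rng() if rng is None else rng
    img = image.astype(np.float64)
    # Assumption: equal-width grey value windows spanning the image's own range.
    edges = np.linspace(img.min(), img.max(), n_windows + 1)
    window_idx = np.digitize(img, edges[1:-1])  # 0 .. n_windows - 1 for every pixel
    uniform = rng.random(img.shape)  # random values in [0.0, 1.0), same size as the image
    mask = np.zeros_like(img)
    for w in range(n_windows):
        in_window = window_idx == w
        if in_window.any():
            # mean grey value of the window x goal slope x random values
            mask[in_window] = img[in_window].mean() * goal_slope * uniform[in_window]
    return mask
```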

Second, the resulting noise mask was scaled by a multiplication factor of 6 or 9 to match the ‘goal noise level’ of the phantom images and to increase visual agreement with real noise. These multiplication factors were determined during the preliminary visual inspection by comparing the resulting slope with the ‘goal slope’, ensuring the clinical representativeness of the generated low-dose simulations. The noise was then softened by applying a zero-centered Gaussian filter with unit standard deviation.
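The second step amounts to a scaling followed by Gaussian smoothing. A minimal sketch using `scipy.ndimage.gaussian_filter` is shown below; which of the factors 6 and 9 belongs to which dose level is not stated in the text, so the factor is left as a parameter, and the function name is hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def scale_and_soften(noise_mask, multiplication_factor, sigma=1.0):
    """Sketch of InGen step 2: scale the noise mask, then soften it with a Gaussian filter."""
    scaled = np.asarray(noise_mask, dtype=np.float64) * multiplication_factor
    # Zero-centred Gaussian kernel with unit standard deviation (sigma = 1 pixel).
    return gaussian_filter(scaled, sigma=sigma)
```

Because the kernel sums to one, smoothing leaves the mean of the mask unchanged while reducing its pixel-to-pixel variation, which is what gives the ‘softened’ appearance.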

In the last step, the processed noise mask was added to the original image, after which a correction was applied to restore the range of grey values present in the original image. This step, based on grey value thresholding followed by histogram correction, is crucial because it ensures that the grey values in the simulated low-dose image match those of a normal exposure, which are shifted by adding the noise mask. The result is a simulated low-dose version of an image originally acquired with a normal exposure.
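For the final step, the sketch below substitutes a simple linear min-max rescaling for the thresholding and histogram correction described above. It restores the original grey value range but is not the authors' correction; the function name is hypothetical.

```python
import numpy as np


def add_noise_and_restore_range(original, processed_mask):
    """Sketch of InGen step 3, with min-max rescaling standing in for histogram correction."""
    noisy = original.astype(np.float64) + processed_mask
    lo, hi = float(original.min()), float(original.max())
    span = noisy.max() - noisy.min()
    if span > 0:
        # Map the noisy image linearly back onto the original grey value range.
        noisy = (noisy - noisy.min()) / span * (hi - lo) + lo
    if np.issubdtype(original.dtype, np.integer):
        noisy = np.round(noisy)  # avoid truncation when casting back to integers
    return noisy.astype(original.dtype)
```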

The above steps were performed using Python 3.7 in combination with SciPy (version 1.4.1) and scikit-image (version 0.16.2).

2.2. AI Model Training

The MIMIC-CXR v2.0.0, MIMIC-CXR-JPG v2.0.0, and MIMIC-IV v0.4 datasets were used for training [28,29,37]. These consist of anonymized patient information (e.g., date of birth and gender), full-resolution and full bit-depth DICOM-format chest radiographs, and their fourteen corresponding diagnoses. Each diagnosis is expressed as a label indicating the presence or absence of the related pathology. All MIMIC data were used in accordance with the PhysioNet Credentialed Health Data Use Agreement 1.5.0.

The primary data source of this study is the MIMIC-CXR containing 227,835 chest radiographs. Examples of these images are shown in the Results section (see Figure 2 and Figure 3 for close-ups and Figure 4 for images of varying quality). The corresponding pathology labels for the images in MIMIC-CXR were taken from MIMIC-CXR-JPG [29,37]. Metadata from the MIMIC-CXR DICOM images were extracted using Pydicom (version 1.4.2). The relevant variables derived were tube voltage (kVp), exposure time product (mAs), dose area product (DAP, unit unknown), exposure index (EI), relative exposure index (REX) (collectively referred to as exposure parameters), and ‘acquisition date’. Both ‘anchor age’ and ‘anchor year’ from MIMIC-IV were used in combination with the ‘acquisition date’ from the DICOM metadata to derive the patient age at the time of exposure [36].
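The metadata extraction and age derivation can be sketched as below. The mapping from the exposure parameters named above to DICOM keywords (for example, `RelativeXRayExposure` for the REX) is an assumption, as MIMIC-CXR files may store these values differently. The age calculation follows the MIMIC-IV definition of `anchor_age` as the patient's age in `anchor_year`; the function names are hypothetical.

```python
from types import SimpleNamespace  # only used for the stand-in dataset below

# Assumed mapping from the exposure parameters in the text to pydicom keywords.
EXPOSURE_KEYWORDS = {
    "kvp": "KVP",                                 # tube voltage (0018,0060)
    "mas": "Exposure",                            # tube current-time product, mAs (0018,1152)
    "dap": "ImageAndFluoroscopyAreaDoseProduct",  # dose area product (0018,115E)
    "ei": "ExposureIndex",                        # (0018,1411)
    "rex": "RelativeXRayExposure",                # (0018,1405), assumed to hold the REX
    "acquisition_date": "AcquisitionDate",        # (0008,0022), formatted YYYYMMDD
}


def extract_exposure(ds):
    """Read exposure metadata from a pydicom Dataset; missing elements become None."""
    return {name: getattr(ds, keyword, None) for name, keyword in EXPOSURE_KEYWORDS.items()}


def age_at_exposure(anchor_age, anchor_year, acquisition_date):
    """Age at the time of the radiograph from MIMIC-IV anchor_age and anchor_year."""
    acquisition_year = int(str(acquisition_date)[:4])
    return anchor_age + (acquisition_year - anchor_year)


# With a real file (requires pydicom):
#   import pydicom
#   ds = pydicom.dcmread(path, stop_before_pixels=True)
#   meta = extract_exposure(ds)
example = extract_exposure(SimpleNamespace(KVP="100", AcquisitionDate="21530614"))
```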

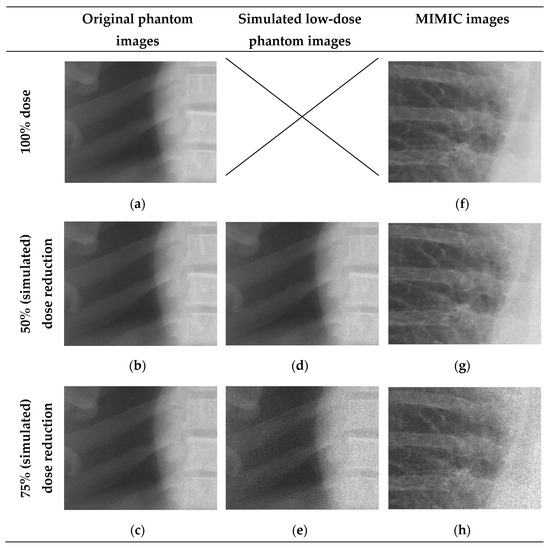

Figure 2.

Images representative of the real and simulated low-dose images based on phantom images and MIMIC-CXR. Left: images (a–c) were acquired using an anthropomorphic phantom (2 mAs, 1.2 mAs, 0.6 mAs). Middle: images (d,e) show 50% and 75% simulated dose reductions based on image (a). Right: images (f–h) show an original MIMIC-CXR image and its simulated 50% and 75% dose reductions.

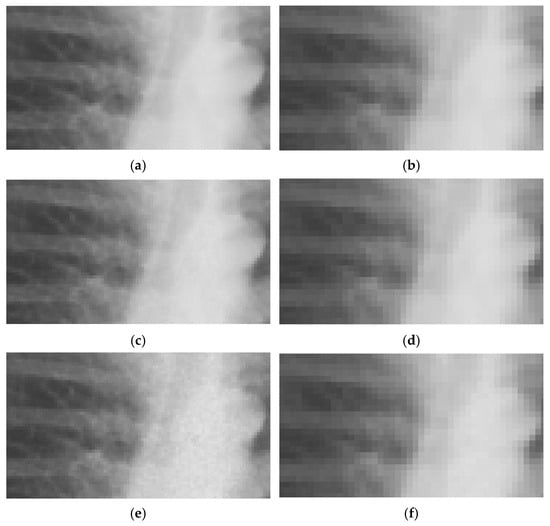

Figure 3.

Representative examples of MIMIC-CXR images with different noise levels at the training and testing resolutions: LR (a–c; 254 × 305 px) and ULR (d–f; 127 × 152 px), at normal exposure (a,d) and with 50% (b,e) and 75% (c,f) simulated dose reductions.

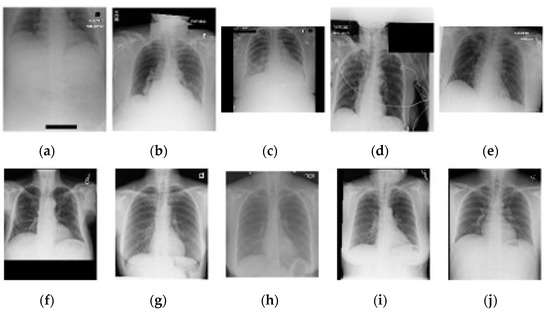

Figure 4.

A selection of five random AP (a–e) and PA (f–j) chest images from the complete included dataset. Note the difference in image quality related to exposure, orientation, and cropping between AP and PA positioning.

The inclusion and exclusion of pathologies and related images were performed by the first author, a radiographer with over ten years of experience, and validated using the literature [21,38,39,40,41]. Of the fourteen available pathologies, eight were included based on clinical relevance: No Finding, Fracture, Enlarged Cardiomediastinum, Cardiomegaly, Atelectasis, Edema, Pneumonia, and Lung Lesion (see Table 1). Pneumothorax was excluded because drains were present on 30% of 100 randomly selected pneumothorax images; previous studies have shown that CNNs trained to detect pneumothorax tend to overfit to the presence of drains [33,38]. Secondly, ambiguous language or limited differences in image characteristics led to the exclusion of Lung Opacity, Consolidation, Pleural Other, and Pleural Effusion [29,33]. Lastly, images positively labelled under Support Devices were excluded from classification due to their limited clinical relevance. A radiologist with over twenty years of experience independently confirmed these choices.

Table 1.

Overview of included and excluded pathologies.

Only pathology labels with clear certainty (1 and 0) were included. The uncertain label (−1), occurring in 2% of the cases, was excluded to limit the inclusion of false positives and false negatives [24,29]. The presence and absence labels were treated as ground-truth positives (1) and negatives (0) during the training and validation of the CNNs. No additional exclusion based on image quality was performed, to avoid overfitting to clinically unrealistic image quality.

For each of the seven pathologies, an image set was created, with 50% of the images showing the presence of the specific pathology and 50% showing its absence. Each image set was limited to one pathology label, resulting in seven separate pathology-based image sets. The maximum size per image set was 10,000 images, and each set was saved separately. The final number of images used for training, validation, and testing per pathology ranged from 4670 to 10,000, depending on the number of images available for each pathology (see Table 2). Each image set was randomly divided into training, validation, and test sets with a split ratio of 0.7, 0.2, and 0.1, ensuring independence among samples.
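The label filtering and balanced split described above can be sketched in NumPy. The function is hypothetical and splits at the image level, as the text describes; grouping the split by patient would be a stricter guarantee of independence, since MIMIC-CXR contains multiple images per patient.

```python
import numpy as np


def build_balanced_split(labels, max_size=10_000, ratios=(0.7, 0.2, 0.1), seed=0):
    """Hypothetical sketch: balanced 50/50 image set for one label, split 0.7/0.2/0.1.

    `labels` holds 1 (present), 0 (absent), -1 (uncertain) or NaN (not mentioned);
    only the certain labels 1 and 0 are kept.
    """
    labels = np.asarray(labels, dtype=np.float64)
    rng = np.random.default_rng(seed)
    pos = np.flatnonzero(labels == 1.0)
    neg = np.flatnonzero(labels == 0.0)  # -1 and NaN never compare equal to 0 or 1
    per_class = min(len(pos), len(neg), max_size // 2)
    idx = np.concatenate([rng.choice(pos, per_class, replace=False),
                          rng.choice(neg, per_class, replace=False)])
    rng.shuffle(idx)
    n_train = int(round(ratios[0] * len(idx)))
    n_val = int(round(ratios[1] * len(idx)))
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]
```

For example, a label with 3000 certain positives yields a 6000-image set (4200 / 1200 / 600), while a label with ample positives and negatives is capped at 10,000 images (7000 / 2000 / 1000).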

Table 2.

Total number of images per pathology per image set for training, validation, and test sets.

Training was performed on an EfficientNetB4 model pretrained on ImageNet, using Keras (version 2.4.3) with a TensorFlow (version 2.4.1) backend [42]. The model was customized with a top layer (Dropout (0.2), Flatten, and Dense (1) with sigmoid activation) to enable binary classification per pathology.

The resolutions were chosen based on the approach by Sabottke et al. and the available hardware resources, which allowed a batch size of four at 254 px × 305 px and of eight at 127 px × 152 px (referred to as low resolution (‘LR’) and ultra-low resolution (‘ULR’), respectively) [33]. A custom image data loader was used to support 16-bit images and augmentations simulating a broad range of geometric variations in image acquisition. Image augmentation was based on the literature and applied during training using Keras' built-in augmentation, comprising rotation (±10 degrees), vertical flip, and fractional changes in zoom (±0.01), height shift (±0.05), width shift (±0.1), and shear (±0.1), with a ‘constant’ fill mode [39,40,41,43]. No augmentations were applied during testing, so that any change in test performance could be attributed to the simulated low dose.
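The augmentation settings above can be expressed, for example, as keyword arguments for Keras' `ImageDataGenerator`. This mapping is an assumption (the study used a custom 16-bit data loader), and two caveats apply: Keras interprets `shear_range` as an angle in degrees rather than a fraction, and the order of the two pixel dimensions is taken as written in the text.

```python
# Training-time augmentation from the text, as ImageDataGenerator keyword arguments
# (assumed mapping; the study's own loader is not reproduced here).
AUGMENTATION_KWARGS = dict(
    rotation_range=10,        # degrees, +/-
    vertical_flip=True,
    zoom_range=0.01,          # zoom factor drawn from [0.99, 1.01]
    height_shift_range=0.05,  # fraction of image height
    width_shift_range=0.1,    # fraction of image width
    shear_range=0.1,          # NOTE: Keras interprets this value in degrees
    fill_mode="constant",
)

# Input sizes and batch sizes per resolution, as stated in the text
# (the width/height order of "size" is an assumption).
RESOLUTIONS = {
    "LR": {"size": (254, 305), "batch_size": 4},
    "ULR": {"size": (127, 152), "batch_size": 8},
}

# Usage (requires TensorFlow 2.x):
#   from tensorflow.keras.preprocessing.image import ImageDataGenerator
#   train_gen = ImageDataGenerator(**AUGMENTATION_KWARGS)
#   test_gen = ImageDataGenerator()  # no augmentation at test time
```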

The hyperparameter values for model training were based on the literature and tuned through preliminary testing [33,39,40]. An Adam optimizer with an initial learning rate of 1 × 10⁻³ and a binary cross-entropy loss function gave the largest reduction in validation loss during these preliminary tests. Preliminary testing also led to the implementation of a learning rate scheduler and an adjustment in the number of steps per epoch. The learning rate scheduler halved the learning rate after each epoch, while the number of steps per epoch was reduced by a factor of 4 to increase the effect of backpropagation (each epoch was therefore a ‘semi-epoch’). Preliminary experiments indicated that the training process stabilized after six epochs.
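The learning rate schedule and the ‘semi-epoch’ step count can be written as two small functions. This is a sketch under the stated settings (initial rate 1 × 10⁻³, halved after every epoch, steps divided by 4); the function names are hypothetical, and the schedule uses the `(epoch, lr)` signature accepted by Keras' `LearningRateScheduler` callback.

```python
import math

INITIAL_LEARNING_RATE = 1e-3


def halving_schedule(epoch, lr=None):
    """Learning rate for a zero-based epoch index: 1e-3, halved after every epoch."""
    return INITIAL_LEARNING_RATE * 0.5 ** epoch


def semi_epoch_steps(n_train_images, batch_size, reduction=4):
    """Steps per 'semi-epoch': a full pass over the training set divided by `reduction`."""
    return math.ceil(n_train_images / batch_size / reduction)


# With TensorFlow 2.x, the schedule can be passed as a callback:
#   tf.keras.callbacks.LearningRateScheduler(halving_schedule)
# e.g. 7000 training images (0.7 x 10,000) at batch size 4 (LR) give 438 steps.
```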

The resulting fourteen models (seven pathologies × two resolutions) were trained end-to-end. Each model output a score between 0 and 1 for the presence of its pathology: scores close to 0 indicated absence with high certainty, and scores close to 1 indicated presence with high certainty.

An overall investigation of the impact of simulated low-dose imaging on CNN performance was performed by applying InGen to all images in the test sets. Simulations were based on the ‘goal slopes’ and multiplication factors derived from the phantom images. Both types of parameters corresponded to a tube voltage of 100 kVp, the phantom setting closest to the mean tube voltage in the MIMIC-CXR metadata. Three dose levels were evaluated for both LR and ULR: the original image dose and simulated dose reductions of 50% and 75% generated using InGen. Note that these simulated reductions represent images with 50% and 25% of the original dose, respectively.
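As a reference point for the two simulated dose levels, the noise increase expected from a quantum-limited detector can be computed directly. This sketch assumes pure Poisson photon noise and ignores electronic noise, scatter, and detector post-processing, so it is a rough yardstick rather than a model of the MIMIC images; `relative_noise` is a hypothetical helper.

```python
import math


def relative_noise(dose_fraction):
    """Noise relative to the original dose, assuming purely quantum (Poisson) noise.

    N detected photons have mean N and SD sqrt(N); scaling the dose by f scales N
    by f, so the relative noise SD / mean = 1 / sqrt(N) grows by 1 / sqrt(f).
    """
    if not 0.0 < dose_fraction <= 1.0:
        raise ValueError("dose_fraction must be in (0, 1]")
    return 1.0 / math.sqrt(dose_fraction)


for reduction in (0.50, 0.75):
    remaining = 1.0 - reduction
    print(f"{reduction:.0%} reduction -> {remaining:.0%} of dose, "
          f"relative noise x{relative_noise(remaining):.2f}")
# 50% reduction -> 50% of dose, relative noise x1.41
# 75% reduction -> 25% of dose, relative noise x2.00
```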

2.3. Data Analysis

A visual face validity check was performed to investigate the clinical representativeness of the simulated doses using 15 images (three dose levels for each of five randomly selected images). The corresponding author and an experienced radiologist independently performed this check. Image quality was further assessed using the structural similarity index (SSIM) and the peak signal-to-noise ratio (PSNR), both determined at the original resolution, LR, and ULR [44].
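PSNR follows directly from the mean squared error between a reference and a test image: PSNR = 10 · log10(data_range² / MSE). The sketch below implements that definition in NumPy; taking `data_range` as the dynamic range of the reference image is an assumption. scikit-image's `skimage.metrics.peak_signal_noise_ratio` and `skimage.metrics.structural_similarity` provide library versions; whichever is used, the `data_range` should be reported, since it shifts the PSNR values.

```python
import numpy as np


def psnr(reference, test, data_range=None):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range**2 / MSE)."""
    ref = np.asarray(reference, dtype=np.float64)
    tst = np.asarray(test, dtype=np.float64)
    if data_range is None:
        # Assumption: use the dynamic range of the reference image.
        data_range = ref.max() - ref.min()
    mse = np.mean((ref - tst) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))
```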

The performance of the trained CNNs was operationalized as the AUROC obtained from predictions on the independent test sets for each pathology, resolution, and dose level. The AUROC facilitates comparison with the relevant literature and, because it summarizes sensitivity and specificity across all decision thresholds, a higher AUROC indicates better discriminative performance. To assess the significance of the effect of simulated dose reductions on CNN performance, the ROC curves of the original image predictions were compared with those of the simulated dose reductions using the DeLong test [45,46]. This comparison was performed for each of the seven pathologies and for both resolutions independently.
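Because both ROC curves are computed on the same test images, the DeLong test must account for the correlation between them. The sketch below uses the midrank-based formulation of DeLong's paired test with NumPy and SciPy; it is an illustration, not the implementation used in the study. In this setting, `scores_a` would be the predictions on the original-dose test images and `scores_b` the predictions on the same images after simulated dose reduction.

```python
import numpy as np
from scipy.stats import norm, rankdata


def _auc_components(pos_scores, neg_scores):
    """AUROC and DeLong placement values, computed with midranks (ties count 0.5)."""
    m, n = len(pos_scores), len(neg_scores)
    ranks_all = rankdata(np.concatenate([pos_scores, neg_scores]))
    ranks_pos = rankdata(pos_scores)
    ranks_neg = rankdata(neg_scores)
    auc = (ranks_all[:m].sum() - m * (m + 1) / 2) / (m * n)
    # For each positive: fraction of negatives scored lower.
    v10 = (ranks_all[:m] - ranks_pos) / n
    # For each negative: fraction of positives scored higher.
    v01 = 1.0 - (ranks_all[m:] - ranks_neg) / m
    return auc, v10, v01


def delong_paired_test(y_true, scores_a, scores_b):
    """Two-sided DeLong test for two correlated AUROCs; returns (auc_a, auc_b, p)."""
    y = np.asarray(y_true).astype(bool)
    comps = []
    for s in (scores_a, scores_b):
        s = np.asarray(s, dtype=np.float64)
        comps.append(_auc_components(s[y], s[~y]))
    aucs = np.array([c[0] for c in comps])
    v10 = np.vstack([c[1] for c in comps])  # shape (2, n_positives)
    v01 = np.vstack([c[2] for c in comps])  # shape (2, n_negatives)
    cov = np.cov(v10) / v10.shape[1] + np.cov(v01) / v01.shape[1]
    var_diff = cov[0, 0] + cov[1, 1] - 2.0 * cov[0, 1]
    diff = aucs[0] - aucs[1]
    if var_diff <= 0:  # e.g. identical score vectors: no detectable difference
        return float(aucs[0]), float(aucs[1]), 1.0 if diff == 0 else 0.0
    z = diff / np.sqrt(var_diff)
    return float(aucs[0]), float(aucs[1]), float(2.0 * norm.sf(abs(z)))
```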

To provide insight into potentially clinically relevant outcomes, additional exploratory analyses were carried out. Differences in exposure parameters and age between PA and AP positioning were tested using the Mann–Whitney U test for continuous, non-normally distributed data. AUROCs were computed using scikit-learn (version 0.22.1), and all other statistical analyses were performed using SciPy (version 1.4.1), with a significance level of p < 0.05.

Finally, a cursory visual assessment of acquisition-related image quality was performed on 100 randomly selected images for each of the AP and PA positions.

3. Results

3.1. Simulated Low-Dose Radiography

A visual face validity check was performed on three dose levels for five randomly selected full-resolution MIMIC images. The first author and an experienced radiologist independently agreed on the clinical representativeness of the low-dose simulations. The representative images in Figure 2 show a difference in noise levels between high- and low-exposure areas (e.g., lung versus bone) and a softened ‘salt and pepper’ appearance. Note also the higher degree of noise at lower doses, most notably in the 75% low-dose phantom image compared with the 75% low-dose simulations, indicating a larger-than-expected increase in noise.

Objective evaluation of image quality on the five full-resolution images at the three noise levels, comparing the original images with the 50% and 75% low-dose simulations, yielded an SSIM > 0.992. The PSNR values for the phantom images were 53.1 dB and 50.0 dB, while the simulated low-dose full-resolution images yielded 55.7 ± 0.5 dB and 46.1 ± 0.5 dB for the 50% and 75% simulations, respectively. This indicates a similar effect of increased noise on the phantom and MIMIC images, supporting the face validity.

After reducing the image resolution to the LR and ULR used as CNN input, the noise generated by InGen is less prominent than in the original full-resolution images, as shown in Figure 3. Note the difference in the expression of noise between the examples in Figure 2 and Figure 3. SSIM values were comparable to those at full resolution, all > 0.998, indicating a smaller impact of noise on structural similarity. PSNR values were higher at lower resolutions, as shown in Table 3, which also indicates a smaller effect of noise at lower resolutions.

Table 3.

PSNR results of the comparison between the original-dose images to 50% and 75% low-dose simulations of four randomly chosen images.

3.2. Investigation of Performance Through AUROC

3.2.1. Overall Investigation

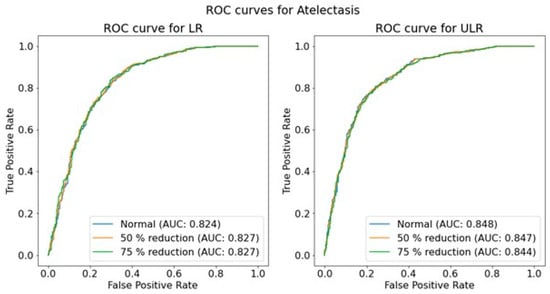

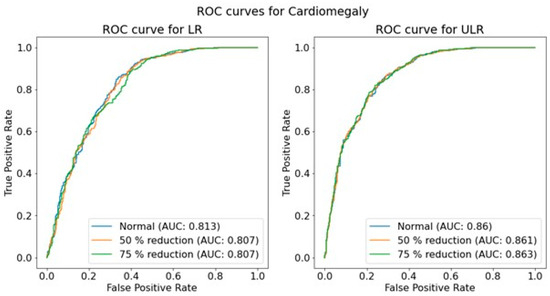

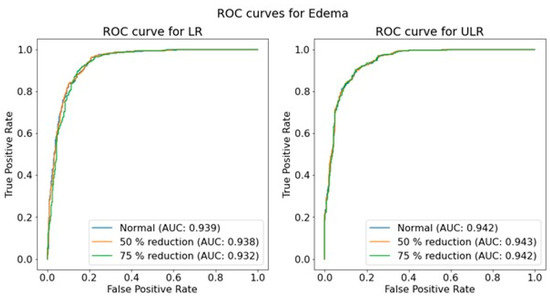

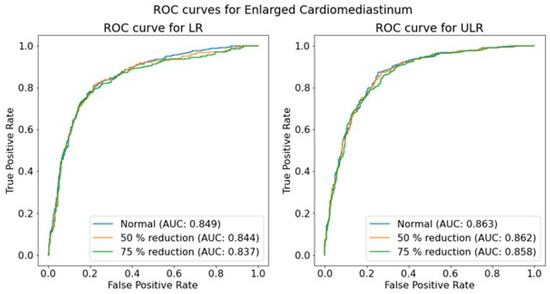

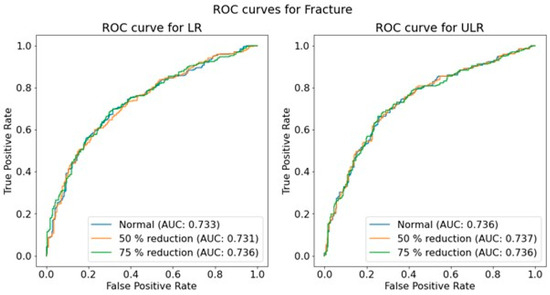

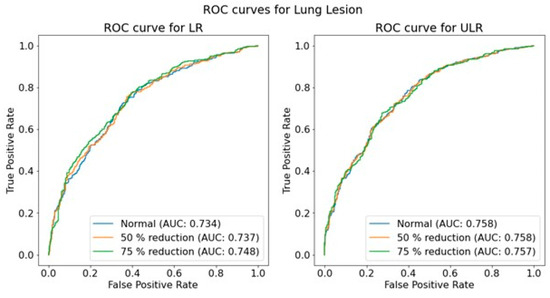

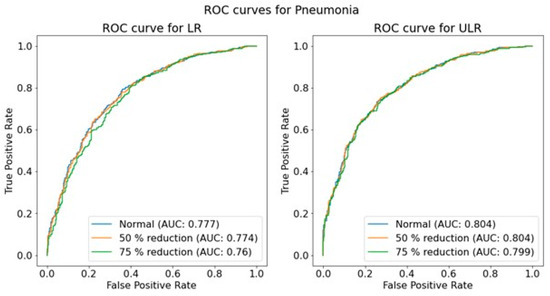

Simulated dose reductions resulted in minimal changes in CNN performance, as expressed by the AUROC, for all models. The CNN models at ULR appear more stable than those at LR (see Table 4 for the AUROC values). The mean absolute change in AUROC for all LR predictions is 0.005 (SD = 0.004), with the largest change being −0.014 for LR Pneumonia. The change in AUROC for ULR predictions is smaller, with a mean absolute change of 0.002 (SD = 0.001) and a maximum change of −0.005 for Pneumonia. The change is consistent across the ROC curves, as shown in Figure A1, Figure A2, Figure A3, Figure A4, Figure A5, Figure A6 and Figure A7 in Appendix A.

Table 4.

AUROC for all model prediction test sets (original dose and simulated dose reductions of 50% and 75%) at different resolutions.

Comparison of the ROC curves of the original image predictions with those of the low-dose simulation predictions using the DeLong test resulted in p < 0.040 in 6 of 28 cases (see Table 4). Five of these significant differences were found for the LR predictions and one for the ULR predictions.

3.2.2. Investigation of Division by Gender

The changes related to simulated low-dose imaging are similar for both genders: the mean absolute change for LR male images is 0.006 (SD = 0.004) and that for LR female images is 0.006 (SD = 0.005). The mean absolute changes for ULR male and female images are lower, at 0.003 (SD = 0.003) and 0.002 (SD = 0.002), respectively (Table 5). Comparisons of the original ROC curves with the simulated low-dose ROC curves using the DeLong test show nine significant differences (Table 5).

Table 5.

AUROC for all model predictions (original dose and simulation dose reductions of 50% and 75%) at different resolutions separated by gender.

The overall effect of the dose reductions is comparable to that in the overall investigation, and the difference in AUROC between males and females is at most 0.044 (LR Lung Lesion, 50%), with a mean absolute difference of 0.017 (SD = 0.011) across all AUROCs.

3.2.3. Investigation of Division by View Position

The predictions separated into PA and AP show a comparable effect of simulated low-dose imaging. The mean absolute changes for LR PA and LR AP predictions are 0.015 (SD = 0.017) and 0.007 (SD = 0.005), respectively, while those for ULR PA and ULR AP predictions are 0.002 (SD = 0.003) and 0.003 (SD = 0.004), respectively (Table 6). DeLong tests comparing the original ROC curves with those of the 50% and 75% dose reductions show p < 0.049 in nine comparisons, five of which belong to LR PA. The remaining four significant differences are spread across the other resolution and positioning combinations (Table 6).

Table 6.

AUROC for all model predictions (original dose and dose reductions of 50% and 75%) at different resolutions separated by PA and AP positioning.

However, the AUROCs differ between PA and AP positioning for all models, with a maximum difference of 0.189 for LR Cardiomegaly and a mean absolute difference of 0.087 (SD = 0.061). To investigate this difference between PA and AP further, an additional analysis was performed.

Further analysis of the DAP, EI, REX, mAs, and age showed a higher median and interquartile range (IQR) for AP images (see Table A1 in Appendix B). In contrast, the tube voltage was significantly lower for AP images. The Mann–Whitney U test comparing the exposure parameters and age between PA and AP resulted in p < 0.001 for all variables, indicating significant differences.

Supplementary information on image quality was obtained from a cursory inspection of 100 random PA and 100 random AP images, some representative examples of which are shown in Figure 4. Acquisition-related deviations from average image quality were visible in 15 PA cases and 52 AP cases. The largest deviations appeared on AP images and were related to inspiration, field size, exclusion of anatomy, rotation (longitudinal and AP axis), and cropping. Lines (e.g., intravenous drips, ECG leads, and port-a-caths) were visible in 13 PA and 73 AP cases.

4. Discussion

The aim of this study was to design a low-dose simulation and to evaluate its effect on CNN performance in plain chest radiography. The low-dose simulation produces clinically representative images; however, the visual impact of the generated noise is limited at the lower resolutions often used in CNN development. The effect of the simulated low dose on performance, expressed by the AUROC, is minimal, although significant in 6 of 28 cases. These results are comparable across all included pathologies and both resolutions. These findings indicate that CNNs are robust to noise and suggest that dose reductions may be feasible for CNN applications in chest imaging.

This study focused on developing a low-dose imaging simulation; it is important to note that the approach used only approximates the noise characteristics of radiography. Clinical representativeness was improved by applying grey value thresholds and correction factors. The validation of the low-dose simulations involved two steps. First, a visual face validity check was performed at full resolution with the help of an experienced radiologist, indicating a clinically relevant simulation. Second, an objective analysis using PSNR at the original resolution was performed to verify the visual assessment. The low-dose simulation does not account for variations in SID, specific exposure parameters, patient characteristics, or the equipment used for image acquisition. Due to the two-dimensional nature of the images and the limited information on patient and equipment influences, individualized low-dose simulations based on MIMIC are not feasible. The low-dose simulation in this study was based on the mean tube voltage and SID from the MIMIC data, ensuring a relevant low-dose simulation at the population level. Separate noise components (e.g., thermal and scatter noise) were also not considered. However, as the overall noise intensity exceeds that of the individual components, the current approach is comparable to other approaches in the literature [34,35]. Before this simulation is applied to smaller groups or individual patients, its representativeness at that level should be assessed.

The chosen approach to low-dose simulation may not be as accurate as more complex methods [34,35]. However, the subjective and objective results show that a reduction in resolution has a large impact on the expression of noise. Any deviations caused by limitations of the low-dose simulation are therefore expected to be largely attenuated by the reduction in resolution. This is supported by the DeLong tests, which show five significant differences for the LR AUROCs compared with one for the ULR AUROCs in the overall analysis. Given this dependence on resolution, further investigations comparing higher and lower resolutions are needed. Nonetheless, the findings remain scientifically relevant, as ensemble models combining low and high resolutions could combine robustness to the effect of lower doses with access to more information.

A benefit of the low-dose simulation compared with multiple exposures is that patient positioning did not vary between doses, which kept the focus on the effect of the simulated dose. A more comprehensive clinical validation could use human cadavers, which, unlike living subjects, can be exposed repeatedly without the risks associated with radiation exposure [47]. Although this study shows the feasibility of dose reduction in plain chest imaging when using CNNs, further investigation is required. The findings are also encouraging for modalities involving higher doses, such as computed tomography and fluoroscopy.

The CNN performance observed in this study, with an average AUROC of 0.812 ± 0.066 across all LR and ULR models, is comparable to that described in other publications [24,33,38,48]. However, the AUROCs are lower than the top results, which exceed 0.95 [22]. Nonetheless, the AUROC achieved in this study is encouraging considering the limited training optimization (e.g., a limited number of epochs) and the inhomogeneous nature of the data (e.g., the negative impact of positioning). While further optimization of CNN performance, for example by extending the number of training epochs, is feasible, it falls outside the scope of this study.

Combining the poorer performance on AP images with the significantly older age of patients in this group, this study suggests a potential age-related bias (ageism) in the implementation of AI models. This is further supported by the fact that AP imaging is often the only option for patients who are unable to stand upright due to ill health. This limitation often results in inferior positioning and decreased image quality. It is important for radiographers to be aware of this, as they play a crucial role in optimizing image acquisition. Another option is to differentiate between PA and AP images when implementing CNNs for the diagnosis of chest X-rays.

5. Conclusions

The low-dose simulation developed during this study provides clinically representative low-dose images from original exposure images. The effect of these low-dose simulations on CNN performance is very small, although significant in 6 of 28 cases. This limited effect is likely caused by reduced noise at the low resolutions used in this study. The robustness of the models toward dose reduction suggests the potential for further dose reductions in CNN-based medical imaging applications, warranting further research.

Additionally, CNN performance was lower for AP images than for PA images, which may disproportionately affect elderly patients. This highlights the importance of tailored image acquisition by radiographers as AI is implemented in clinical practice.

Author Contributions

Conceptualization, H.E.; methodology, H.E.; software, H.E.; validation, W.P.K., A.v.d.H.-M. and P.v.O.; formal analysis, H.E., W.P.K., A.v.d.H.-M. and P.v.O.; investigation, H.E.; resources, H.E.; data curation, H.E.; writing – original draft preparation, H.E.; writing – review and editing, H.E., W.P.K., A.v.d.H.-M. and P.v.O.; visualization, H.E.; supervision, W.P.K., A.v.d.H.-M. and P.v.O.; project administration,. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Collection of the MIMIC data was originally approved by the Institutional Review Board of Beth Israel Deaconess Medical Center (Boston, MA), which also waived the requirement for individual patient consent [22]. In this study, all MIMIC data were used in accordance with the PhysioNet Credentialed Health Data Use Agreement 1.5.0. The additional phantom data collected in this study do not involve patient data.

Informed Consent Statement

Not applicable due to the use of an open dataset.

Data Availability Statement

The MIMIC data used in this study are available online; project information and access to the data are provided by MIT through https://mimic.mit.edu/docs/ (accessed on 17 March 2025). The InGen script itself is available at https://github.com/HendrikBE/LDSim/blob/main/noise_gen (accessed on 17 March 2025); data used for the calibration of InGen are also available through this repository.

Acknowledgments

The authors would like to acknowledge the contribution of R. F. E. Wolf to the inclusion and exclusion of pathologies and the visual investigation of the simulated low-dose images.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Figure A1. ROC curves for Atelectasis at low and ultra-low resolution for all dose levels.

Figure A2. ROC curves for Cardiomegaly at low and ultra-low resolution for all dose levels.

Figure A3. ROC curves for Edema at low and ultra-low resolution for all dose levels.

Figure A4. ROC curves for Enlarged Cardiomediastinum at low and ultra-low resolution for all dose levels.

Figure A5. ROC curves for Fracture at low and ultra-low resolution for all dose levels.

Figure A6. ROC curves for Lung Lesion at low and ultra-low resolution for all dose levels.

Figure A7. ROC curves for Pneumonia at low and ultra-low resolution for all dose levels.

Appendix B

Table A1. Median and interquartile range (IQR) of acquisition parameters and patient age for AP and PA images. DAP, dose-area product.


| Parameter | PA median | PA IQR | PA n | AP median | AP IQR | AP n |
|---|---|---|---|---|---|---|
| Age (y) | 57 | 27 | 5772 | 68 | 24 | 6508 |
| DAP (unit not reported) | 1.1 | 0.8 | 4376 | 2.1 | 1.6 | 4282 |
| EI (n.a.) | 157.5 | 47.4 | 5866 | 464.7 | 322.7 | 3898 |
| REX (n.a.) | 1321 | 1167 | 5770 | 1762 | 265 | 6508 |
| Tube voltage (kVp) | 110 | 10 | 5766 | 90 | 0 | 4682 |
| Tube current-time product (mAs) | 2.5 | 1.5 | 5766 | 3.2 | 0.8 | 4682 |

References

1. Speets, A.M.; Van Der Graaf, Y.; Hoes, A.W.; Kalmijn, S.; Sachs, A.P.; Rutten, M.J.; Gratama, J.W.C.; Van Swijndregt, A.D.M.; Mali, W.P. Chest radiography in general practice: Indications, diagnostic yield and consequences for patient management. Br. J. Gen. Pract. 2006, 56, 574.

2. World Health Organization. Chest Radiography in Tuberculosis Detection: Summary of Current WHO Recommendations and Guidance on Programmatic Approaches; World Health Organization: Geneva, Switzerland, 2016.

3. Veldkamp, W.J.; Kroft, L.J.; Geleijns, J. Dose and perceived image quality in chest radiography. Eur. J. Radiol. 2009, 72, 209–217.

4. Metz, S.; Damoser, P.; Hollweck, R.; Roggel, R.; Engelke, C.; Woertler, K.; Renger, B.; Rummeny, E.J.; Link, T.M. Chest radiography with a digital flat-panel detector: Experimental receiver operating characteristic analysis. Radiology 2005, 234, 776–784.

5. Qu, T.; Guo, Y.; Li, J.; Cao, L.; Li, Y.; Chen, L.; Sun, J.; Lu, X.; Guo, J. Iterative reconstruction vs. deep learning image reconstruction: Comparison of image quality and diagnostic accuracy of arterial stenosis in low-dose lower extremity CT angiography. Br. J. Radiol. 2022, 95, 20220196.

6. Oh, S.; Kim, J.H.; Yoo, S.-Y.; Jeon, T.Y.; Kim, Y.J. Evaluation of the image quality and dose reduction in digital radiography with an advanced spatial noise reduction algorithm in pediatric patients. Eur. Radiol. 2021, 31, 8937–8946.

7. Tugwell-Allsup, J.; Morris, R.; Hibbs, R.; England, A. Optimising image quality and radiation dose for neonatal incubator imaging. Radiography 2020, 26, e258–e263.

8. Jin, M.X.; Gilotra, K.; Young, A.; Gould, E. Call to Action: Creating Resources for Radiology Technologists to Capture Higher Quality Portable Chest X-rays. Cureus 2022, 14, e29197.

9. ICRP. Low-Dose Extrapolation of Radiation-Related Cancer Risk; ICRP Publication: Ottawa, ON, Canada, 2005; p. 99.

10. Kolck, J.; Ziegeler, K.; Walter-Rittel, T.; Hermann, K.-G.K.G.; Hamm, B.; Beck, A. Clinical utility of postprocessed low-dose radiographs in skeletal imaging. Br. J. Radiol. 2022, 95, 20210881.

11. European Commission: Directorate-General for Energy; Chateil, J.F.; Cavanagh, P.; Ashford, N.; Remedios, D.; Bezzi, M.; Grenier, P. Referral Guidelines for Medical Imaging–Availability and Use in the European Union; European Commission Publications Office: Luxembourg, 2014; Available online: https://data.europa.eu/doi/10.2833/18118 (accessed on 17 March 2025).

12. Mc Fadden, S.; Roding, T.; de Vries, G.; Benwell, M.; Bijwaard, H.; Scheurleer, J. Digital imaging and radiographic practise in diagnostic radiography: An overview of current knowledge and practice in Europe. Radiography 2018, 24, 137–141.

13. Fukui, R.; Ishii, R.; Kodani, K.; Kanasaki, Y.; Suyama, H.; Watanabe, M.; Nakamoto, M.; Fukuoka, Y. Evaluation of a Noise Reduction Procedure for Chest Radiography. Yonago Acta Med. 2013, 56, 85–91.

14. Kang, E.; Min, J.; Ye, J.C. A deep convolutional neural network using directional wavelets for low-dose X-ray CT reconstruction. Med. Phys. 2017, 44, e360–e375.

15. Jiang, B.; Li, N.; Shi, X.; Zhang, S.; Li, J.; de Bock, G.H.; Vliegenthart, R.; Xie, X. Deep Learning Reconstruction Shows Better Lung Nodule Detection for Ultra–Low-Dose Chest CT. Radiology 2022, 303, 202–212.

16. Brendlin, A.S.; Schmid, U.; Plajer, D.; Chaika, M.; Mader, M.; Wrazidlo, R.; Männlin, S.; Spogis, J.; Estler, A.; Esser, M.; et al. AI Denoising Improves Image Quality and Radiological Workflows in Pediatric Ultra-Low-Dose Thorax Computed Tomography Scans. Tomography 2022, 8, 1678–1689.

17. Brendlin, A.S.; Plajer, D.; Chaika, M.; Wrazidlo, R.; Estler, A.; Tsiflikas, I.; Artzner, C.P.; Afat, S.; Bongers, M.N. AI Denoising Significantly Improves Image Quality in Whole-Body Low-Dose Computed Tomography Staging. Diagnostics 2022, 12, 225.

18. Pesapane, F.; Codari, M.; Sardanelli, F. Artificial intelligence in medical imaging: Threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur. Radiol. Exp. 2018, 2, 1–10.

19. Van Leeuwen, K.G.; Schalekamp, S.; Rutten, M.J.C.M.; Van Ginneken, B.; De Rooij, M. Artificial intelligence in radiology: 100 commercially available products and their scientific evidence. Eur. Radiol. 2021, 31, 3797–3804.

20. Rajpurkar, P.; Irvin, J.; Ball, R.L.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.P.; et al. Deep learning for chest radiograph diagnosis: A retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS Med. 2018, 15, e1002686.

21. Rajpurkar, P.; Irvin, J.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.; Shpanskaya, K.; et al. CheXNet: Radiologist-Level Pneumonia Detection on Chest X-Rays with Deep Learning. arXiv 2017.

22. Yuan, Z.; Yan, Y.; Sonka, M.; Yang, T. Large-scale Robust Deep AUC Maximization: A New Surrogate Loss and Empirical Studies on Medical Image Classification. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

23. van Beek, E.J.R.; Ahn, J.S.; Kim, M.J.; Murchison, J.T. Validation study of machine-learning chest radiograph software in primary and emergency medicine. Clin. Radiol. 2022, 78, 1–7.

24. Irvin, J.; Rajpurkar, P.; Ko, M.; Yu, Y.; Ciurea-Ilcus, S.; Chute, C.; Marklund, H.; Haghgoo, B.; Ball, R.; Shpanskaya, K.; et al. CheXpert: A Large Chest Radiograph Dataset with Uncertainty Labels and Expert Comparison. Proc. AAAI Conf. Artif. Intell. 2019, 33, 590–597.

25. Castiglioni, I.; Ippolito, D.; Interlenghi, M.; Monti, C.B.; Salvatore, C.; Schiaffino, S.; Polidori, A.; Gandola, D.; Messa, C.; Sardanelli, F. Machine learning applied on chest x-ray can aid in the diagnosis of COVID-19: A first experience from Lombardy, Italy. Eur. Radiol. Exp. 2021, 5, 1–10.

26. Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale Chest X-ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases. arXiv 2017.

27. Oakden-Rayner, L. Exploring the ChestXray14 Dataset: Problems. 2017. Available online: https://lukeoakdenrayner.wordpress.com/2017/12/18/the-chestxray14-dataset-problems/ (accessed on 7 June 2020).

28. Johnson, A.E.W.; Pollard, T.J.; Berkowitz, S.J.; Greenbaum, N.R.; Lungren, M.P.; Deng, C.-Y.; Mark, R.G.; Horng, S. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Sci. Data 2019, 6, 317.

29. Johnson, A.E.; Pollard, T.J.; Greenbaum, N.R.; Lungren, M.P.; Deng, C.Y.; Peng, Y.; Lu, Z.; Mark, R.G.; Berkowitz, S.J.; Horng, S. MIMIC-CXR-JPG, a large publicly available database of labeled chest radiographs. arXiv 2019.

30. Cohen, J.P.; Hashir, M.; Brooks, R.; Bertrand, H. On the limits of cross-domain generalization in automated X-ray prediction. Med. Imaging Deep. Learn. PMLR 2020, 121, 136–155.

31. Hayat, N.; Geras, K.J.; Shamout, F.E. MedFuse: Multi-modal fusion with clinical time-series data and chest X-ray images. arXiv 2022.

32. Kamal, U.; Zunaed, M.; Nizam, N.B.; Hasan, T. Anatomy-XNet: An Anatomy Aware Convolutional Neural Network for Thoracic Disease Classification in Chest X-Rays. IEEE J. Biomed. Health Inform. 2022, 26, 5518–5528.

33. Sabottke, C.F.; Spieler, B.M. The Effect of Image Resolution on Deep Learning in Radiography. Radiol. Artif. Intell. 2020, 2, e190015.

34. Båth, M.; Håkansson, M.; Tingberg, A.; Månsson, L.G. Method of simulating dose reduction for digital radiographic systems. Radiat. Prot. Dosim. 2005, 114, 253–259.

35. Murakami, R.; Katsuragawa, S. Development of a computer simulation technique for low-dose chest radiographs: A phantom study. Radiol. Phys. Technol. 2020, 13, 111–118.

36. Johnson, A.; Bulgarelli, L.; Pollard, T.; Horng, S.; Celi, L.A.; Mark, R. MIMIC-IV (version 1.0). PhysioNet 2019.

37. Johnson, A.; Lungren, M.; Peng, Y.; Lu, Z.; Mark, R.; Berkowitz, S.; Horng, S. MIMIC-CXR-JPG-chest radiographs with structured labels (version 2.0.0). PhysioNet 2019.

38. Rueckel, J.; Trappmann, L.; Schachtner, B.; Wesp, P.; Hoppe, B.F.; Fink, N.; Ricke, J.; Dinkel, J.; Ingrisch, M.; Sabel, B.O. Impact of Confounding Thoracic Tubes and Pleural Dehiscence Extent on Artificial Intelligence Pneumothorax Detection in Chest Radiographs. Investig. Radiol. 2020, 55, 792–798.

39. Irfan, A.; Adivishnu, A.L.; Sze-To, A.; Dehkharghanian, T.; Rahnamayan, S.; Tizhoosh, H. Classifying Pneumonia among Chest X-Rays Using Transfer Learning. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020.

40. Hashmi, M.F.; Katiyar, S.; Keskar, A.G.; Dhanraj Bokde, N.; Geem, Z.W. Efficient Pneumonia Detection in Chest Xray Images Using Deep Transfer Learning. Diagnostics 2020, 10, 417.

41. Pham, H.H.; Le, T.T.; Tran, D.Q.; Ngo, D.T.; Nguyen, H.Q. Interpreting chest X-rays via CNNs that exploit hierarchical disease dependencies and uncertainty labels. Neurocomputing 2020, 437, 186–194.

42. Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, Long Beach, CA, USA, 9–15 June 2019.

43. Mosquera, C.; Diaz, F.N.; Binder, F.; Rabellino, J.M.; Benitez, S.E.; Beresñak, A.D.; Seehaus, A.; Ducrey, G.; Ocantos, J.A.; Luna, D.R. Chest X-ray automated triage: A semiologic approach designed for clinical implementation, exploiting different types of labels through a combination of four Deep Learning architectures. Comput. Methods Programs Biomed. 2021, 206, 106130.

44. Mason, A.; Rioux, J.; Clarke, S.E.; Costa, A.; Schmidt, M.; Keough, V.; Huynh, T.; Beyea, S. Comparison of Objective Image Quality Metrics to Expert Radiologists’ Scoring of Diagnostic Quality of MR Images. IEEE Trans. Med. Imaging 2019, 39, 1064–1072.

45. DeLong, E.R.; DeLong, D.M.; Clarke-Pearson, D.L. Comparing the Areas under Two or More Correlated Receiver Operating Characteristic Curves: A Nonparametric Approach. Biometrics 1988, 44, 837–845.

46. Sun, X.; Xu, W. Fast implementation of DeLong’s algorithm for comparing the areas under correlated receiver operating characteristic curves. IEEE Signal Process. Lett. 2014, 21, 1389–1393.

47. Niu, C.; Wang, G.; Yan, P.; Hahn, J.; Lai, Y.; Jia, X.; Krishna, A.; Mueller, K.; Badal, A.; Zeng, R. Noise Entangled GAN For Low-Dose CT Simulation. arXiv 2021.

48. Larrazabal, A.J.; As Nieto, N.; Peterson, V.; Milone, D.H.; Ferrante, E. Gender imbalance in medical imaging datasets produces biased classifiers for computer-aided diagnosis. Proc. Natl. Acad. Sci. USA 2020, 117, 12592–12594.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).