Abstract

Recycled glass has attracted attention for its role in reducing plastic waste, further increasing the demand for glass containers. Cosmetic glass bottles require strict quality inspections because of frequent handling, safety concerns, and other factors. During manufacturing, glass bottles sometimes develop chips on the top surface, rim, or screw threads of the bottle mouth. Conventionally, these chips are checked visually by inspectors; however, this process is time-consuming and prone to inaccuracies. To address these issues, automatic inspection using image processing has been explored. Existing methods, such as dynamic luminance value correction and ring-shaped inspection gates, have limitations: the former relies on visible light, which is strongly affected by natural light, and the latter acquires images directly from above, resulting in low accuracy in detecting chips on the lower part of screw threads. To overcome these challenges, this study proposes a method that combines infrared backlighting and image processing to determine the range of screw threads and detect chips accurately. Experiments were conducted in an environment replicating an actual factory production line. The results confirmed an overall chip detection accuracy of 99.6% across good and defective bottles combined. This approach reduces equipment complexity compared to conventional methods while maintaining high inspection accuracy, contributing to the productivity and quality control of glass bottle manufacturing.

1. Introduction

1.1. Research Background

In recent years, Japanese cosmetic glass bottles have attracted attention for their improved manufacturing technology and for their environmental friendliness through the use of recycled glass [1], and the number of glass bottles exported overseas is rising [2].

Glass bottles used as cosmetic bottles are treated as high-end products and are subject to strict quality inspections. In particular, the mouths of glass bottles are often equipped with screw threads for opening and closing the caps, and their complex shapes easily cause defects during production. In visual inspection, where defective products are judged by humans, research by Hida et al. has shown that different workers have different criteria for judgment and that long hours of work can cause a loss of accuracy [3]. This calls for the development of inspection equipment that uses image processing to improve productivity.

1.2. Related Work

To improve productivity, it is necessary to develop a detection device using image processing [1,2,3]. Many inspection methods using image processing have been proposed [4,5,6,7]. However, these methods target plastics and metals; transparent glass bottles cannot be inspected reliably with them because glass transmits light. Methods aimed specifically at the glass bottle mouth include one employing dynamic brightness value correction [8] and one using ring-shaped inspection gates [9]. The former uses six cameras for side inspections and two cameras for top inspections to perform omnidirectional inspection. However, it is limited to simple-shaped glass bottles without screw threads, and its flaw detection accuracy is low because it relies on visible light, which is strongly affected by natural light. The latter captures images from above but cannot inspect the entire screw-threaded portion effectively. To address these challenges, we propose improving the detection accuracy by using an infrared backlight and limiting the inspection area to critical regions.

2. Proposed Method

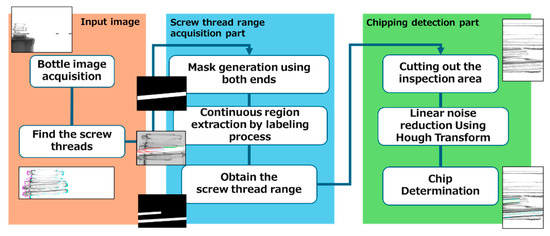

The proposed method focuses on determining the range of the screw threads from captured images and detecting flaws. Figure 1 provides an overview of the proposed method. Each step is explained in detail in the following sections.

Figure 1. Overview of the proposed method.

2.1. Capturing Bottle Images

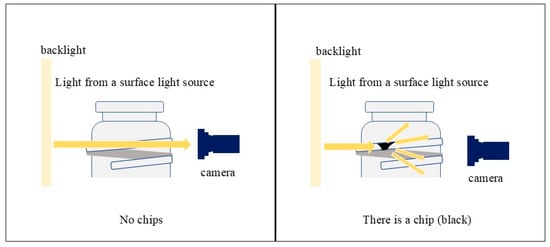

An infrared surface light source and an infrared camera are employed for image capture. Since the glass bottles used in this study are transparent, light penetrates the smooth, undamaged surface when photographed under an infrared surface light source, resulting in a uniformly white appearance. However, the presence of chips or scratches causes diffuse reflection of light, making these areas appear darker. This optical property is utilized for defect detection. Figure 2 shows an overview of the glass bottle photography process, which simulates the way chips appear when infrared light is used. Additionally, a cut filter is applied to block ambient light, ensuring that inspections remain unaffected by external lighting conditions in the factory or inspection area.

Figure 2. Overview of glass bottle photography.

2.2. Reasons for Limiting the Scope

During manufacturing, chipping is less likely to occur near the beginning and end of the screw threads and on the bottle surface. These areas are more exposed to air than the center and top of the screw threads, so their temperature distribution is more uniform during the cooling process [10]. To minimize false detections caused by noise, these low-risk areas are masked and excluded from the detection process.

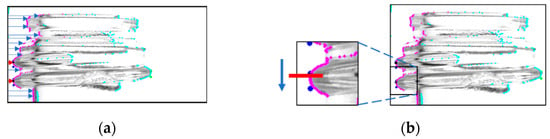

2.3. Detection of the Screw Thread Endpoints

To locate the screw threads, a search was first performed from the outer edges of the glass bottle. Figure 3a shows the scan starting from the upper-left corner of the captured image. The coordinates of the first pixel with the lowest luminance value, which corresponds to the outline of the glass bottle, were retained. Based on the outline coordinates, the leftmost and second-leftmost points were retained as the endpoints of the screw threads. Subsequently, the top and bottom edges of the screw threads were determined by scanning a few pixels above and below the retained coordinates and selecting the two coordinates that fell within the specified pixel range. A similar scan was performed on the right side to locate the other end of the screw thread. Figure 3b illustrates this process. The black dots indicate the ends of the screw threads, and the blue dots indicate the top and bottom coordinates determined during this process.

Figure 3. Process for locating both ends of the screw threads: (a) search for both ends; (b) further search of the screw thread region.
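The row-by-row outline scan described in this section could be sketched as follows. This is a minimal illustration in Python with NumPy, not the authors' implementation: the function names, the darkness threshold `dark_thresh`, and the rule that treats local leftward protrusions of the outline as thread endpoints are all assumptions made for the example.

```python
import numpy as np

def left_outline(gray, dark_thresh=50):
    """Return, for each row, the x of the first dark pixel from the left (-1 if none)."""
    dark = gray < dark_thresh            # backlit glass is bright; the outline is dark
    has_dark = dark.any(axis=1)
    return np.where(has_dark, dark.argmax(axis=1), -1)

def leftmost_protrusions(first_x, width, count=2):
    """Pick the `count` outline rows that stick out furthest to the left."""
    xs = np.where(first_x < 0, width, first_x)   # rows without an outline never win
    candidates = []
    for y in range(1, len(xs) - 1):
        # Local minimum in x: further left than the row above,
        # and not further right than the row below.
        if xs[y] < xs[y - 1] and xs[y] <= xs[y + 1]:
            candidates.append((y, int(xs[y])))
    candidates.sort(key=lambda p: p[1])          # smallest x first
    return candidates[:count]

# Synthetic example: a straight outline at x=10 with two protrusions (hypothetical data)
img = np.full((20, 20), 255, dtype=np.uint8)
img[2:18, 10] = 0
img[5, 6] = 0
img[12, 7] = 0
endpoints = leftmost_protrusions(left_outline(img), img.shape[1])
```

Under the same assumptions, the right side can be handled by applying the two functions to the horizontally flipped image `img[:, ::-1]` and converting the x coordinates back.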

2.4. Mask Generation and Screw Thread Range Detection

The screw thread range was determined by connecting the coordinates obtained in the previous section. However, if a screw thread is cut in the middle, as illustrated in Figure 4, the coordinate information on one side is retained, while the coordinate information on the other side is unavailable. This makes it impossible to correctly determine the extent of the screw thread.

Figure 4. Screw thread range drawn from the identified endpoints. Solid lines: screw threads with endpoints identified on both sides. Dotted lines: screw threads with an endpoint on only one side, completed by prediction.

Therefore, a mask image was generated to accurately identify the screw thread missing on one side. As shown in Figure 5, the mask image was created with a thickness sufficient to cover the top and bottom of the screw thread. The endpoint of the screw thread was then located by detecting a shadow area that is continuous above and below the screw thread. This mask image was generated based on the slope obtained during the determination of the screw-thread range when both endpoints were successfully connected.

Figure 5. Generated mask image and the resulting composite image.
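One way to picture the mask generation is as a band drawn along a line whose slope is taken from a thread with both endpoints known. The sketch below is only illustrative: `slope_from_endpoints`, `band_mask`, the half-thickness, and the sample coordinates are hypothetical, and the authors' actual mask construction may differ.

```python
import numpy as np

def slope_from_endpoints(p_left, p_right):
    """Slope dy/dx of a thread whose two endpoints (x, y) were both found."""
    (x0, y0), (x1, y1) = p_left, p_right
    return (y1 - y0) / (x1 - x0)

def band_mask(shape, start, slope, half_thickness, x_end):
    """255 inside a band of +/- half_thickness rows around the predicted thread line."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w]
    x0, y0 = start
    center = y0 + slope * (xs - x0)              # predicted thread centre line
    lo, hi = min(x0, x_end), max(x0, x_end)
    inside = (np.abs(ys - center) <= half_thickness) & (xs >= lo) & (xs <= hi)
    return inside.astype(np.uint8) * 255

# Slope measured on a complete thread, then reused for a thread with one known endpoint
slope = slope_from_endpoints((0, 2), (8, 6))
mask = band_mask((10, 10), start=(0, 2), slope=slope, half_thickness=1, x_end=9)
```

The band is deliberately thicker than the thread so that the shadows above and below it stay inside the mask.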

2.5. Image Processing

The image of the mouth of a cosmetic glass bottle often contains minute noise, which was removed using a bilateral filter [11]. This filter removes fine noise while preserving image contours. In addition, a contrast transformation was applied to enhance the distinction between light and dark areas, making it easier to distinguish scratched from unscratched regions [12].

2.6. Screw Thread Range Detection Using Labeling

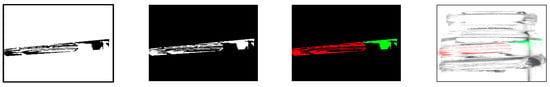

For missing screw threads, the thread range was determined by identifying contiguous areas through the labeling process. This process used OpenCV’s “connectedComponentsWithStats” function [13]. As shown in Figure 6, the generated mask image was combined with the contrast-enhanced image to generate an image containing only the screw thread portion. Labeling was then performed by tracking the shadows of the upper and lower portions of the screw threads to determine their full extent.

Figure 6. Labeling process.

Based on the labeling information obtained, a mask image was generated to match the screw thread range. The generated mask image and the bottle image synthesized using the mask are shown in Figure 7. For example, the red label information is used to identify the screw thread regions contiguous from the left, and the green label information is used to identify the screw thread regions contiguous from the right.

Figure 7. Generated mask and synthesized images. (a) Label image, (b) mask image, (c) composite image.

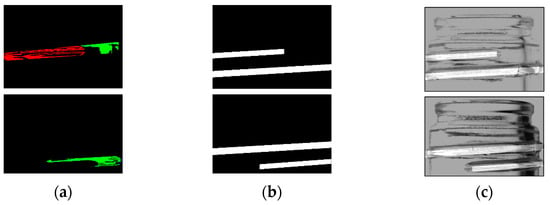

2.7. Cutting Out the Inspection Area

Chipping detection performed on the entire bottle image can be significantly affected by shadows in areas not visible in the front view. To minimize the influence of these shadows and improve chip detection, only the center portion of the bottle image was cropped using the coordinates of the two endpoints identified in Section 2.3. An example of this cropping method is shown in Figure 8. Because one image was acquired by the left camera, two images by the center camera, and one image by the right camera for flaw detection, it was possible to perform 180° flaw detection even when only the center portion of the image was used.

Figure 8. Cutout portion of the bottle image used for detection. (The left image shows the region to be cropped, outlined in red.)

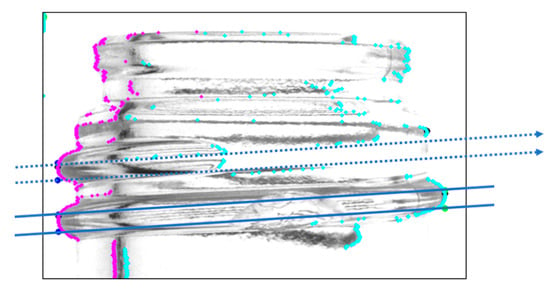

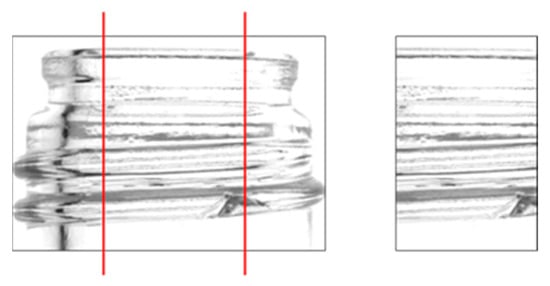

2.8. Mask Generation Using Hough Transform

As described in the previous sections, an image containing only the screw threads was obtained; however, it included significant linear noise owing to the shadows cast by the screw threads. To remove this noise, straight-line detection using the Hough transform was performed [14,15]. Labels identified as straight lines were removed by generating a mask that excludes them from the detection target. Figure 9 illustrates the mask generation process.

Figure 9. Hough transform process. (a) Hough transforms for each label, (b) generated mask image, (c) composite image.

2.9. Chip Detection

From the generated images, chips were detected based on the area, width, and height of each label. The detection criteria and the corresponding label colors are listed in Table 1. The chip detection process is illustrated in Figure 10.

Table 1. Criteria for chip detection and corresponding label colors.

Figure 10. Process for extracting missing parts.
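Filtering labels by area, width, and height can be sketched as below. The thresholds are invented for illustration, since the actual criteria of Table 1 are not reproduced here; the column order follows the `stats` array returned by OpenCV's `connectedComponentsWithStats`.

```python
import numpy as np

# Column order of OpenCV's stats array: left, top, width, height, area
LEFT, TOP, WIDTH, HEIGHT, AREA = range(5)

def select_chips(stats, min_area=20, min_width=3, min_height=3):
    """Indices of labels whose area, width and height all reach the (hypothetical) minimums."""
    stats = np.asarray(stats)
    ok = ((stats[:, AREA] >= min_area)
          & (stats[:, WIDTH] >= min_width)
          & (stats[:, HEIGHT] >= min_height))
    ok[0] = False                      # row 0 describes the background
    return np.flatnonzero(ok).tolist()

stats = [
    [0, 0, 100, 100, 9000],   # background
    [10, 10, 6, 5, 25],       # compact blob: kept as a chip candidate
    [40, 12, 2, 2, 4],        # speck: too small
    [60, 20, 30, 1, 30],      # thin streak: not tall enough
]
chips = select_chips(stats)
```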

3. Experiments

Two experiments were conducted to verify the effectiveness of the proposed method. In Experiment 1, one good bottle and two defective bottles were rotated in 30° steps, and images were acquired 10 times at each angle to confirm that 180° side detection was achievable. In Experiment 2, the orientation of the glass bottles was adjusted to match that of an actual factory production line. A total of 100 good bottles were placed on the conveyor four times each, while 29 defective bottles were placed on the conveyor twice each.

3.1. Experimental Environment

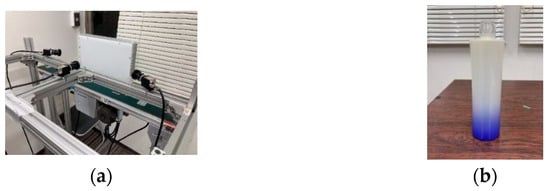

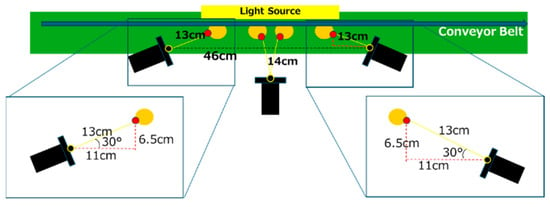

Figure 11 illustrates the experimental environment and the glass bottles used in the study. Table 2 lists the components and their specifications for the experimental setup. In addition, Figure 12 provides a detailed diagram of the camera arrangement. A constant voltage controller was utilized for image capture, with the light intensity set to its maximum level and maintained at a constant value. A cut filter was applied to block wavelengths other than 850 nm, effectively eliminating ambient light interference.

Figure 11. Experimental environment. (a) Experimental setup and (b) glass bottle used in the study.

Table 2. Equipment used in the experiment.

Figure 12. Schematic drawing of the experimental environment.

3.2. Taking Bottle Images

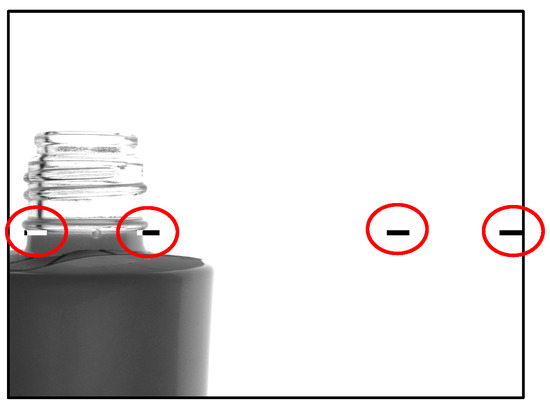

The bottles transported onto the conveyor appear as shown in Figure 13 when captured by the camera. As the bottles move along the conveyor, images are taken based on two reference markers (indicated by red circles), and the relevant regions are extracted. In this example, the central camera is depicted, capturing two images—one from each side of the bottle.

Figure 13. How to take bottle images.

3.3. Detection Experiment on the Side of the Bottle

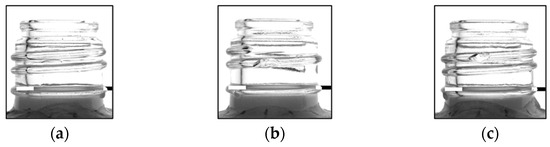

The proposed method covers half of the bottle circumference (180°). To verify the detection rate over this half-face, one good bottle and two defective bottles (D1 and D2) were placed on the conveyor belt, and images were acquired 10 times at each 30° step, giving a total of 210 images. One bottle with chips on both the top and bottom of the screw threads was selected as a sample to confirm that flaws could still be detected after the screw threads had been located. Figure 14 shows images of the good bottle and the two defective bottles acquired using an infrared camera.

Figure 14. Examples of good bottles and defective bottles presenting chips. (a) Good, (b) D1, (c) D2.

3.4. Experimental Results (Experiment on the Side of the Bottle)

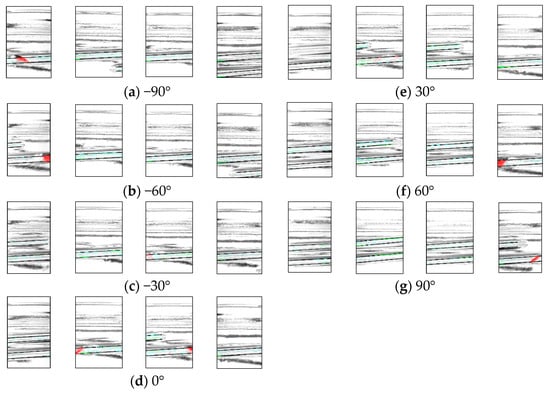

Figure 15 shows part of the resulting images, with the successfully detected chipped areas indicated in red. The image shows D1 rotated by 30°. In addition, Table 3, Table 4, and Table 5 present a breakdown of the experimental results. Table 3 presents the results of inspecting the good bottle 10 times per angle, Table 4 shows the results of inspecting D1 10 times per angle, and Table 5 shows the results of inspecting D2 10 times per angle.

Figure 15. Experimental results: images of defective bottle D1 at different angles.

Table 3. Experimental results for the good bottle.

Table 4. Experimental results for D1.

Table 5. Experimental results for D2.

3.5. Discussion of Results (Experiment on the Side of the Bottle)

The chip detection rate was 98.6% for the defective bottles overall (97.1% for D1 and 100% for D2). In the experiment involving the good bottle, 100% of the images were correctly identified as good. The overall inspection accuracy for the entire set, including both good and defective bottles, was 99.0%. The cameras detected the chips even when the bottles were rotated in 30° steps, confirming that the three-camera system can detect chips over half of the bottle circumference. Figure 16 shows an example of a false detection, in which the chips were treated as noise.

Figure 16. Example of the false detection.
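The reported rates for Experiment 1 can be cross-checked with a short calculation. The image counts per bottle follow from the 210 images stated in Section 3.3; the assumption that the seven angles run from 0° to 180° and the counts of correct detections (68 for D1, 70 for D2, 70 for the good bottle) are inferred from the percentages, not taken from Tables 3 to 5.

```python
def rate(correct, total):
    """Percentage of correctly judged images."""
    return 100.0 * correct / total

angles = len(range(0, 181, 30))        # assumed angles: 0, 30, ..., 180 degrees
per_bottle = angles * 10               # 10 captures per angle
total_images = 3 * per_bottle          # good bottle, D1 and D2

d1 = rate(68, per_bottle)              # inferred count of correct detections
d2 = rate(70, per_bottle)
defective = rate(68 + 70, 2 * per_bottle)
overall = rate(70 + 68 + 70, total_images)
```

Under these assumptions the values round to 97.1%, 100%, 98.6%, and 99.0%, matching the figures quoted in the text.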

3.6. Experiments Simulating a Production Plant

In an actual factory production line, glass bottles flow on a conveyor at a specific angle because of the product labeling process. To simulate this, we conducted an experiment in which glass bottles were aligned in the same direction as they would be in a production plant. The sample set for this experiment included 100 good bottles and 29 defective bottles. In the experiment, good bottles were placed on the conveyor four times each to acquire 400 images, and defective bottles were placed twice to acquire 58 images.

3.7. Experimental Results (Experiments Simulating a Production Plant)

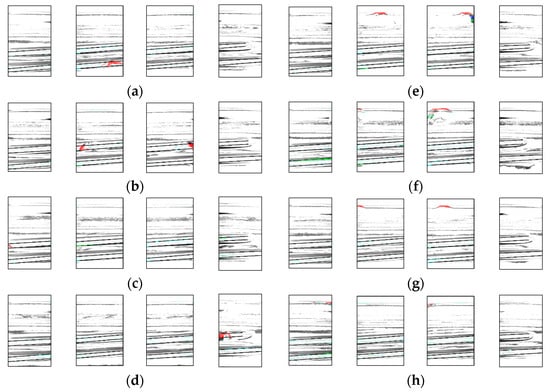

Figure 17 shows an example of a resulting image from a normal inspection by each camera. The areas shown in red indicate the chips that should be detected using this method. The image also displays a portion of the 29 bottles containing chips. A breakdown of the experimental results is presented in Table 6.

Figure 17. Example of detection. Result for (a) chip1, (b) chip2, (c) chip3, (d) chip4, (e) chip5, (f) chip6, (g) chip7, (h) chip8.

Table 6. Experimental results.

3.8. Discussion of Results (Experiments Simulating a Production Plant)

In this experiment, 22 false detections occurred for good bottles (3 at the screw threads and 19 at the top surface), resulting in an inspection accuracy of 94.5%. For defective bottles, no false detections occurred, giving an inspection accuracy of 100%. Figure 18 shows an example of a false-positive detection for a good bottle.

Figure 18. Example of false positive.

3.9. Additional Experiments Based on the Experimental Results

Based on the results of Experiments 1 and 2, additional experiments were conducted to study the reflection of the chips at different angles using a single camera. Examples of chip detection obtained by applying minute angle changes are shown in Figure 19.

Figure 19. Example for minute angle changes.

4. Proposed Enhancements and Results

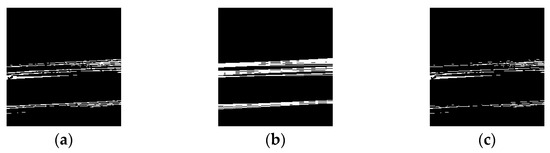

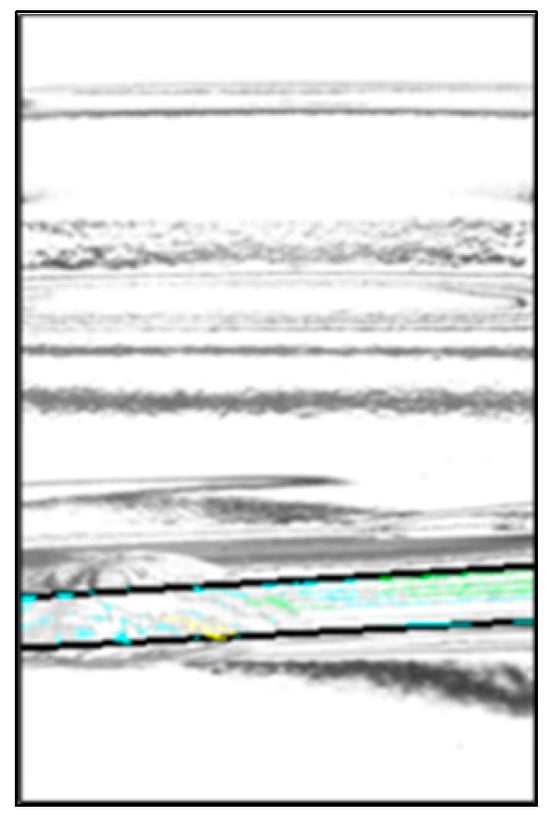

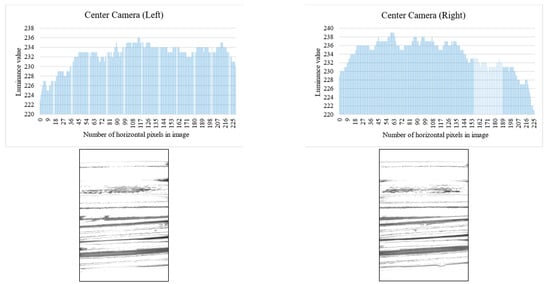

4.1. Binarization Process According to the Curved Surface of the Bottle

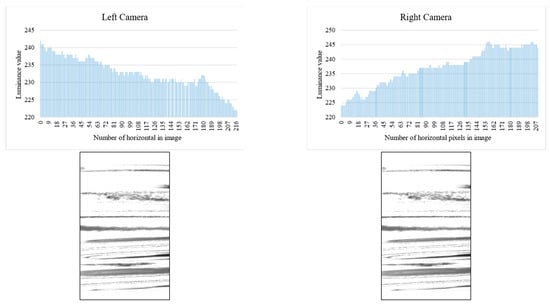

Before the labeling process for chip detection is applied, the contrast-adjusted image is binarized. The glass bottle being inspected has a curved surface. When light from a surface light source strikes a curved surface, more of it is refracted and reflected, and the luminance value decreases. Figure 20 and Figure 21 illustrate the relationship between the average luminance values and the curved surface.

Figure 20. Relationship between inspection image and luminance value (center camera).

Figure 21. Relationship between inspection image and luminance value (left and right camera).

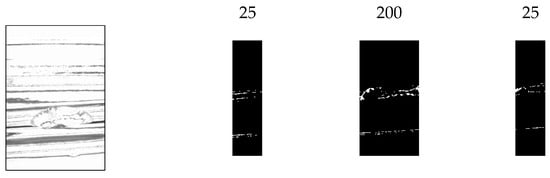

In addition, when images of bottles with chips were acquired from multiple angles, it was observed that chips located at the front of the bottle in the image reflected light only weakly, making them difficult to identify. Based on this observation, the inspection image was divided vertically, as shown in Figure 22, and a different binarization threshold was applied to each section.

Figure 22. Threshold change process.
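Section-wise binarization might be sketched as follows. It is assumed here that "divided vertically" means splitting the image into equal-width vertical strips and that pixels darker than the strip's threshold become chip candidates; the number of strips and the threshold values are hypothetical.

```python
import numpy as np

def sectioned_binarize(gray, thresholds):
    """Binarize each vertical strip with its own threshold (dark pixels -> 255)."""
    h, w = gray.shape
    edges = np.linspace(0, w, len(thresholds) + 1).astype(int)   # strip boundaries
    out = np.zeros((h, w), dtype=np.uint8)
    for t, x0, x1 in zip(thresholds, edges[:-1], edges[1:]):
        strip = gray[:, x0:x1]
        out[:, x0:x1] = np.where(strip < t, 255, 0).astype(np.uint8)
    return out

# Uniform test image: only the centre strip has a threshold above the pixel value
gray = np.full((4, 9), 100, dtype=np.uint8)
binary = sectioned_binarize(gray, thresholds=[80, 120, 80])
```

A higher threshold in the centre strip makes the weakly reflecting chips at the front of the bottle easier to pick up, at the cost of admitting more noise there.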

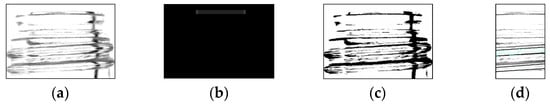

4.2. Subtractive Processing of the Top Surface Area

In Experiment 2, false detections due to noise were observed on the top surface area of the bottles. To address this, we propose a method that involves adding a certain pixel value to the top surface area. In the proposed method, the contrast was adjusted to emphasize the chips. However, this process also emphasized noise along with the chips. As shown in Figure 23, by adding a certain pixel value to the top surface of the entire inspection area, it may be possible to emphasize the chips while reducing the noise.

Figure 23. Pixel value addition process. (a) Photographic image, (b) added image, (c) enhanced image, and (d) result image.
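The pixel value addition can be sketched as a saturating add applied to the rows that contain the top surface. The number of rows and the offset are placeholders, and `brighten_top` is a hypothetical helper, not the authors' code.

```python
import numpy as np

def brighten_top(gray, top_rows, offset):
    """Add `offset` to the first `top_rows` rows, saturating at 255."""
    out = gray.astype(np.int16)          # widen to avoid uint8 wrap-around
    out[:top_rows] += offset
    return np.clip(out, 0, 255).astype(np.uint8)

# Bright background (250), faint noise (200) and a dark chip (40)
gray = np.array([[250, 200, 40],
                 [250, 200, 40],
                 [250, 200, 40]], dtype=np.uint8)
result = brighten_top(gray, top_rows=2, offset=60)
```

In this toy example the faint noise saturates to white while the dark chip stays clearly darker than its surroundings, which is the effect the subsequent contrast enhancement relies on.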

4.3. Experimental Setup

In the third experiment, the proposed enhancements were added, and the experiment was conducted in the same manner as Experiment 2, reusing the images acquired in Experiment 2 (100 good bottles captured four times each and 29 defective bottles captured twice each).

4.4. Experimental Results

The experimental results are shown in Table 7.

Table 7. Experimental results.

4.5. Discussion of Results

Table 7, Figure 24, and Figure 25 show that the additional proposed method improved inspection accuracy for good bottles. However, for defective bottles, two false detections occurred that had not occurred in Experiment 2. An example is shown in Figure 26. The accuracy decreased because adding pixel values caused part of the chipped region to disappear from the image, making it difficult to identify the chips accurately.

Figure 24. Comparison with Experiment 2 (screw thread). (a) Experiment 2 and (b) Experiment 3.

Figure 25. Comparison with Experiment 2 (top). (a) Experiment 2 and (b) Experiment 3.

Figure 26. Comparison with Experiment 2 (defect bottle). (a) Experiment 2 and (b) Experiment 3.
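The accuracies for Experiments 2 and 3 can be recomputed from the image counts (400 good, 58 defective) and the error counts mentioned in the text. This is only a consistency check: the counts are read from the prose, not from Tables 6 and 7.

```python
def accuracy(errors, total):
    """Percentage of images judged correctly."""
    return 100.0 * (total - errors) / total

GOOD, DEFECTIVE = 400, 58      # images per class in Experiments 2 and 3

exp2 = {"good": accuracy(3 + 19, GOOD), "defective": accuracy(0, DEFECTIVE)}
exp3 = {"good": accuracy(0, GOOD), "defective": accuracy(2, DEFECTIVE)}
exp3_overall = accuracy(0 + 2, GOOD + DEFECTIVE)
```

Under these counts, Experiment 2 gives 94.5% and 100%, and Experiment 3 gives 100% for good bottles and about 96.55% for defective bottles (56 of 58 images), which corresponds to the 96.5% quoted in the conclusions if the value is truncated rather than rounded. The combined Experiment 3 accuracy is about 99.6%, in line with the abstract.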

4.6. Comparison with Conventional Method

Table 8 and Table 9 summarize the inspection results of a previous study [4] and those of the proposed method.

Table 8. Detection accuracy of the conventional method [4].

Table 9. Detection accuracy of the proposed method.

The inspection accuracy was comparable to that of the previous study. However, the prior study used six cameras for the sides of the bottle and three cameras for the top surface to achieve 360° detection [4]. In this study, although only half of the bottle (180°) was covered, the side and top surfaces could be inspected with only three cameras. We believe that this approach will contribute to reducing the weight of the equipment used.

5. Conclusions

In this study, we aimed to improve inspection accuracy by using an infrared backlight and limiting the inspection area. The results of Experiment 1 showed that good and defective bottles were correctly identified when rotated in 30° steps, with sufficient accuracy to inspect the sides of the bottles. Experiment 2 demonstrated inspection accuracies for good and defective bottles of 94.5% and 100%, respectively. The results of Experiments 1 and 2 confirm that the reflection of scratches depends on the refractive index and reflectivity of the curved surface area of the glass bottle. Based on these findings, we proposed methods to adjust the binarization threshold and to add specific pixel values to the top surface to improve inspection accuracy in Experiment 3. The results of Experiment 3 confirmed the effectiveness of these methods, achieving a 100% detection rate for good bottles and an inspection accuracy of 96.5% for defective bottles. The parameters of the proposed method exhibit a trade-off between good and defective bottles, and future work will focus on optimizing these parameters to enhance detection accuracy. In addition, we plan to prepare two experimental apparatuses to check the accuracy of 360° inspection and to test whether the method can be applied to different types of glass bottles. Overall, the method proposed in this study has the potential to improve productivity in glass bottle production.

Author Contributions

The individual contributions were investigation, methodology, validation, software, writing (first draft preparation, revision, and editing): D.T.; supervision, project administration, writing (editing): Y.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Acknowledgments

We thank Koa Glass Co., Ltd. for providing the glass bottles used in the experiment and Nakajima of the Technical Department for his cooperation with the experiment.

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Japan Glass Bottle Association. Returnable Bottle. Available online: https://glassbottle.org/ecology/returnable/ (accessed on 27 September 2024).

2. Japan Cosmetic Industry Association. Cosmetics Statistics. Available online: https://www.jcia.org/user/statistics/trade (accessed on 27 September 2024).

3. Hida, T.; Chihara, T.; Seo, A. Effect of object handling on accuracy of visual inspection. Proc. Annu. Conf. Jpn. Ergon. Soc. 2012, 48, 454–455.

4. Zhang, Y.; Zhen, J.; Liu, T.; Yang, Y.; Cheng, Y. Adaptive Differentiation Siamese Fusion Network for Remote Sensing Change Detection. IEEE Geosci. Remote Sens. Lett. 2025, 22, 6002405.

5. Kubik, C.; Molitor, D.A.; Varchmin, S.; Leininger, D.S.; Ohrenberg, J.; Groche, P. Image-based feature extraction for inline quality assurance and wear classification in high-speed blanking processes. Int. J. Adv. Manuf. Technol. 2023, 129, 4883–4897.

6. Hu, Z.; Yin, Z.; Qin, L.; Xu, F. A Novel Method of Fault Diagnosis for Injection Molding Systems Based on Improved VGG16 and Machine Vision. Sustainability 2022, 14, 14280.

7. Gruber, D.P.; Berger, G.; Pacher, G.; Friesenbichler, W. Novel approach to the measurement of the visual perceptibility of sink marks on injection molding parts. Polym. Test. 2011, 30, 651–656.

8. Ma, H.-M.; Su, G.-D.; Wang, J.-Y.; Ni, Z. A glass bottle defect detection system without touching. In Proceedings of the First International Conference on Machine Learning and Cybernetics, Beijing, China, 4–5 November 2002; Volume 2, pp. 628–632.

9. Harada, T.; Suzuki, G. Container Mouth Inspection Method and Device. Patent No. 4986255, 8 August 2011.

10. Kunugi, M. Chemistry of Glass. Material 1977, 26, 495–502. (In Japanese)

11. Urahama, K. Noise reduction and generation of illustration-like images using a bilateral filter that brings out the essence of the image. J. Inst. Image Inf. Telev. Eng. 2008, 62, 1268–1273.

12. Kannan, P.; Deepa, S.; Ramakrishnan, R. Contrast enhancement of sports images using modified sigmoid mapping function. In Proceedings of the International Conference on Communication Control and Computing Technologies, Nagercoil, India, 7–9 October 2010; pp. 651–656.

13. Bolelli, F.; Allegretti, S.; Baraldi, L.; Grana, C. Spaghetti Labeling: Directed Acyclic Graphs for Block-Based Connected Components Labeling. IEEE Trans. Image Process. 2020, 29, 1999–2012.

14. Trivedi, J.; Devi, M.S.; Dhara, D. OpenCV and MATLAB based Car Parking System Module for Smart City using Circle Hough Transform. In Proceedings of the 2017 International Conference on Energy, Communication, Data Analytics and Soft Computing (ICECDS), Chennai, India, 1–2 August 2017; pp. 2461–2464.

15. Watanabe, S.; Seki, M.; Haga, T. Lane marks detection using particle filter based on voting results of hough transform. IEEJ Trans. Electron. Inf. Syst. 2013, 144, 1384–1391.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).