1. Introduction

The theoretical foundation of Salient Object Detection (SOD) originates from the visual attention mechanism, whose core principle is to emulate the human visual system’s ability to prioritize the most distinctive regions of a scene and thereby localize the areas of maximal visual contrast in an image. As research has progressed, visual attention mechanisms have been widely integrated into various computer vision tasks, including image retrieval, object detection [1], image editing, image quality assessment, arc detection [2], and image classification. SOD itself has evolved into several research branches, such as Natural Scene Image SOD (NSI-SOD), Video SOD for dynamic sequences, and Remote Sensing Image SOD (RSI-SOD) for high-resolution remote sensing data, which together form a comprehensive research framework. Methodologically, SOD has progressed from early center-surround difference-based computational approaches to feature-driven traditional machine learning methods, and ultimately to deep learning-dominated architectures. The incorporation of deep learning [3] has not only substantially improved detection performance but also broadened the applicability of SOD to complex and multimodal environments [4].

Compared with natural scene images (NSIs), optical remote sensing images (RSIs) exhibit significant differences in object types, scale variations, illumination conditions, imaging perspectives [5], and background complexity. Consequently, directly transferring natural scene salient object detection (NSI-SOD) methods to remote sensing scenarios often yields suboptimal results. Nevertheless, the development of RSI-SOD has been largely inspired by NSI-SOD, particularly by convolutional neural network (CNN)-based approaches, which have provided valuable technical foundations for this field.

Among existing representative methods, LVNet enhances adaptability to multi-scale targets through multi-resolution feature fusion, while PDFNet further designs a five-branch, five-scale fusion architecture to achieve more comprehensive detection capability. EMFINet integrates multi-resolution inputs, edge supervision, and hybrid loss functions, yielding improved edge awareness but still exhibiting limitations under low-contrast and complex backgrounds. DAFNet employs shallow attention mechanisms to capture edge and texture information and deep features to capture semantic and positional cues; however, its interaction between high- and low-level features remains limited, and its decoder structure is relatively simple, leading to insufficient contextual information extraction.

Despite significant progress in RSI-SOD, existing studies share several limitations. First, many methods struggle with complex and heterogeneous backgrounds, producing incomplete or noisy saliency maps. Second, cross-level feature interactions are often insufficient, limiting the fusion of low-level spatial details with high-level semantic information. Third, decoder architectures are typically simple, which restricts the exploitation of contextual cues and multi-scale dependencies. Finally, most approaches focus on specific datasets or scenarios, which reduces their generalizability to diverse remote sensing conditions. This study therefore explicitly accounts for the complex background content inherent to optical remote sensing images. To improve robustness, hierarchical semantic interaction modules are incorporated at multiple feature scales and deployed within a generic encoder–decoder backbone, so that the modules benefit from the backbone’s progressive inference process. Accordingly, we propose a simple yet effective Hierarchical Semantic Interaction Network that highlights salient regions of varying scales and object types while remaining adaptable to challenging optical remote sensing scenarios.

The main contributions of this study are summarized as follows:

(1) A Hierarchical Semantic Interaction Network (HSIMNet) is proposed to explore the complementarity of multi-content feature representations in optical remote sensing images for salient region perception, enabling the joint utilization of both local and global image-level information.

(2) The Hierarchical Semantic Interaction Module is embedded across multiple feature scales within an encoder–decoder architecture, thereby seamlessly integrating the feature complementarity of the proposed module with the inference capability of the backbone network.

(3) The module incorporates an Efficient Channel Adaptive Enhancement component to adaptively adjust channel weights, capture global contextual information, and maintain computational efficiency. Combined with a Position-Aware Spatial Selection component that focuses on critical spatial regions, enhances spatial perception, and improves robustness, the two components synergistically integrate complementary information, enhance generalization, and improve detection accuracy and efficiency.

(4) Comprehensive experiments conducted on two RSI-SOD benchmark datasets demonstrate that the proposed method outperforms state-of-the-art approaches on most evaluation metrics, and ablation studies validate the effectiveness of each component in the overall framework.

To provide a clear roadmap for readers, the remainder of this paper is organized as follows.

Section 2 reviews related work on salient object detection in optical remote sensing images.

Section 3 presents the proposed method, including the Hierarchical Semantic Interaction Module (HSIM) and the hybrid loss design.

Section 4 describes the experimental setup, datasets, and evaluation metrics, and presents the results and ablation studies.

Finally, Section 5 concludes the paper and discusses potential directions for future research.

3. Hierarchical Semantic Interaction-Based Salient Object Detection Method for Remote Sensing Images

3.1. Network Architecture Design

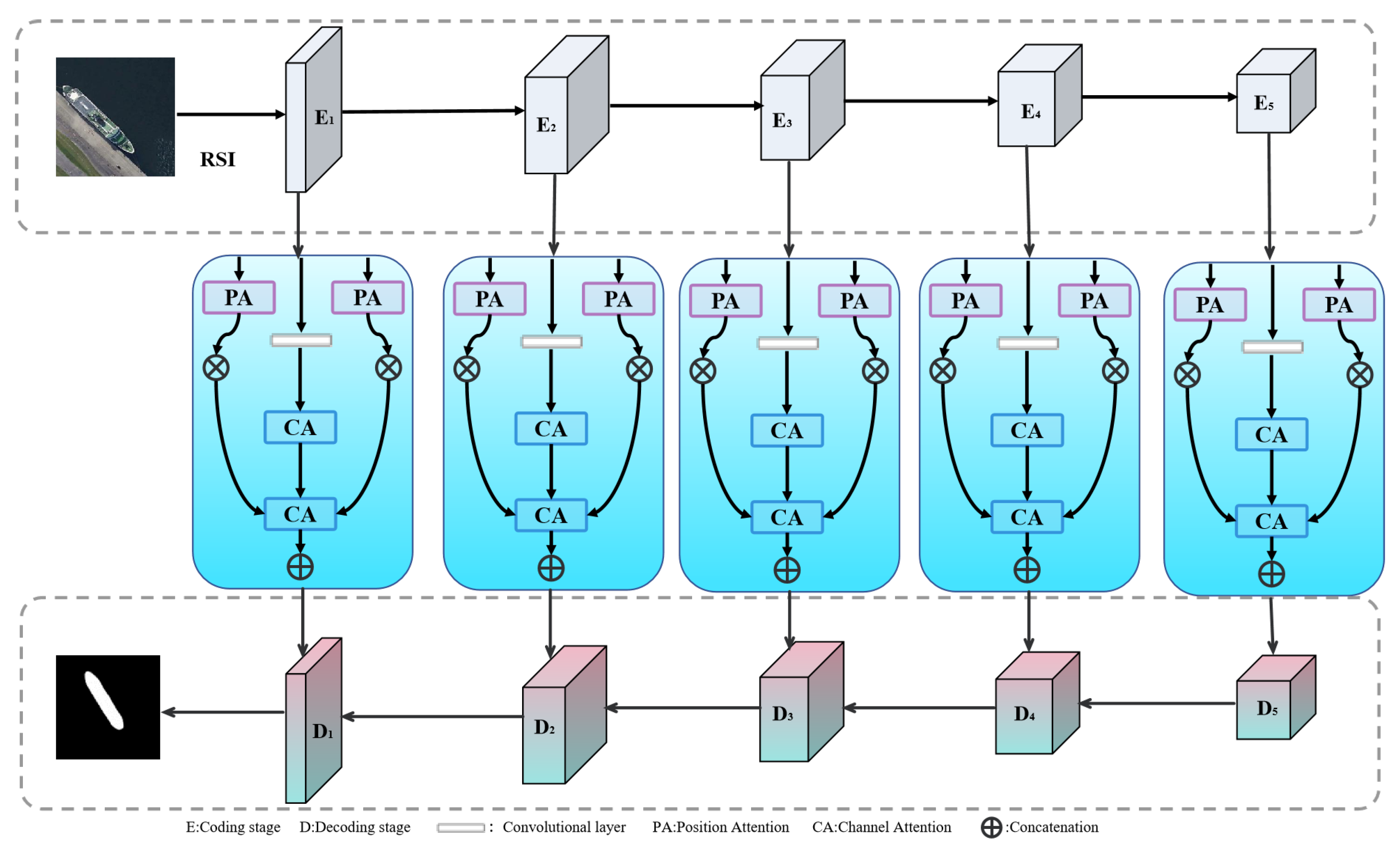

In this work, we propose a novel salient object detection architecture, HSIMNet, specifically designed for optical remote sensing images. The overall framework follows an encoder–decoder structure, as illustrated in Figure 1. HSIMNet consists of three main components: an encoder network, a Hierarchical Semantic Interaction Module (HSIM), and a decoder network. In contrast to existing architectures, HSIMNet emphasizes hierarchical semantic interaction and multi-scale complementary feature aggregation to enhance saliency perception and preserve object structure.

The encoder network is constructed based on a modified VGG-16 backbone, which is adapted to better capture multi-level semantic and spatial cues from remote sensing imagery. This provides a strong feature foundation for handling complex scenes that typically exhibit heterogeneous content and weak object boundaries.

The core contribution of our framework lies in the Hierarchical Semantic Interaction Module, which we propose to address the limitations of insufficient global understanding and poor boundary awareness in existing SOD models. The module generates multi-scale complementary saliency features by jointly modeling foreground–background contrast, edge structure information, and global contextual semantics. Through hierarchical interaction, the module progressively enhances feature discriminability and significantly improves the representation of small and low-contrast salient targets.

The decoder network subsequently reconstructs high-resolution saliency maps using progressive upsampling and multi-level feature fusion. By aligning semantic and spatial information at different stages, the decoder enables fine-grained delineation of object boundaries and accurate pixel-level localization.
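As a rough illustration of progressive upsampling with multi-level fusion, the NumPy sketch below fuses encoder features from the deepest level back to the shallowest. It assumes nearest-neighbor 2× upsampling, element-wise addition as the fusion operator, and features that already share one channel count; the actual HSIMNet decoder presumably uses learned convolutions, which are omitted here. The function names are hypothetical.

```python
import numpy as np


def upsample2x(feat):
    """Nearest-neighbor 2x upsampling of a (C, H, W) feature map."""
    return feat.repeat(2, axis=1).repeat(2, axis=2)


def progressive_decode(features):
    """Fuse multi-level features from deep to shallow.

    features: list of (C, H_l, W_l) arrays ordered from shallow (largest)
    to deep (smallest), where each level halves the spatial size.
    """
    decoded = features[-1]                     # start from the deepest level
    for skip in reversed(features[:-1]):
        # Hypothetical additive fusion of the upsampled map with the skip feature.
        decoded = upsample2x(decoded) + skip
    return decoded
```

Each loop iteration restores one level of resolution, so the output has the spatial size of the shallowest feature map, which is where fine boundary detail is available.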

Overall, HSIMNet introduces a complete architectural pipeline that integrates hierarchical interaction, semantic complementarity, and structural restoration, effectively improving robustness and detection accuracy across diverse remote sensing environments.

3.2. Hierarchical Semantic Interaction Module

To enhance cross-level semantic interaction and improve feature discrimination under complex backgrounds in optical remote sensing images, we propose a Hierarchical Semantic Interaction Module (HSIM). Unlike traditional SOD methods that rely solely on foreground priors or independent attention mechanisms for highlighting salient regions, the proposed HSIM performs hierarchical semantic guidance and dual-attention fusion to jointly utilize foreground, background, and boundary cues, enabling more accurate salient object perception.

As shown in Figure 1, the HSIM receives multi-scale features from the backbone network and extracts fine-grained semantic representations through a hierarchical interaction structure. Conventional convolution-based feature extraction is limited in capturing the correlations between channel-wise semantic information and spatial structural cues. To overcome this limitation, the proposed module integrates a Channel Attention Mechanism (CAM) [24] and a Position Attention Mechanism (PAM) [25] to model global channel dependencies and spatial positional relationships, respectively. This design enhances the perception of salient targets while suppressing background interference [26].

The processing pipeline of the HSIM is summarized as follows: the backbone first generates initial feature maps, which are fed into the CAM to adaptively recalibrate channel responses based on global semantic dependencies [27]. Meanwhile, the PAM highlights spatially important regions by encoding pixel-wise relationships across the feature map. Finally, the outputs of the CAM and PAM branches are fused to produce a content-enhanced multi-dimensional semantic feature representation, enabling more precise detection of salient objects with clear boundaries and robust context understanding.

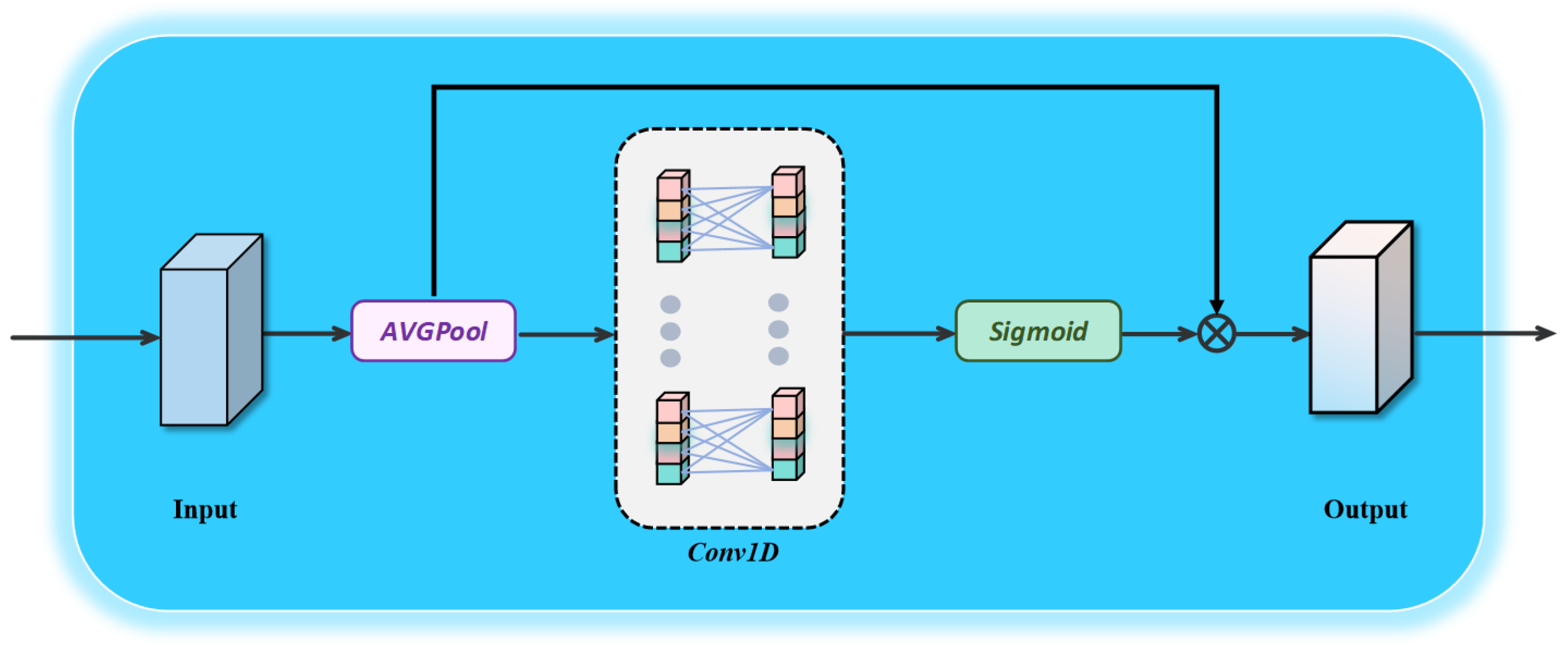

The detailed process of the Channel Adaptive Enhancement Module (CAE) [28] is illustrated in Figure 2. Within the Hierarchical Semantic Interaction Module, the primary purpose of the CAE is to model inter-channel relationships globally by adaptively weighting feature maps across different channels. This enables the network to better capture the associations and dependencies among various regions of the image, thereby effectively identifying salient regions in optical remote sensing images characterized by complex backgrounds and diverse object types. The process of the Channel Adaptive Enhancement Module includes the following steps:

(1) Channel-wise Global Average Pooling: The input feature map $X \in \mathbb{R}^{C \times H \times W}$ undergoes Global Average Pooling (GAP) to compute a global feature descriptor for each channel. The specific formulation is as follows:

$$y_c = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} x_c(i, j)$$

where $H$ and $W$ denote the height and width of the feature map, and $x_c(i, j)$ represents the feature value of channel $c$ at position $(i, j)$. This operation extracts the global information of each channel without reducing the channel dimensionality.

(2) Channel Weighting: The channel weights are generated by applying a one-dimensional convolution to the globally pooled features $y = [y_1, \dots, y_C]$. To better capture inter-channel dependencies, the one-dimensional convolution models the local interactions between each channel and its $k$ nearest neighboring channels. The kernel size $k$, a hyperparameter, defines the interaction range of each channel within its local neighborhood. This process can be expressed as follows:

$$\omega = \sigma\left(\mathrm{C1D}_k(y)\right)$$

where $\omega$ denotes the channel weight, $\sigma$ represents the Sigmoid activation function, and $\mathrm{C1D}_k$ refers to the one-dimensional convolution operation used to capture local cross-channel interactions with a kernel size of $k$.

(3) Relationship Between Channel Dimension and Kernel Size: To adaptively adjust the relationship between kernel size and channel dimension across different network architectures, the following formulation is proposed:

$$C = \phi(k) = 2^{(\gamma \cdot k - b)}$$

where $C$ denotes the number of channels, $k$ is the kernel size, and $\gamma$ and $b$ are parameters controlling the proportional relationship between kernel size and channel dimension.

The kernel size $k$ can be inversely derived from the channel dimension $C$ as follows:

$$k = \psi(C) = \left| \frac{\log_2 C}{\gamma} + \frac{b}{\gamma} \right|_{odd}$$

where $|\cdot|_{odd}$ denotes rounding to the nearest odd integer. This adaptive formulation allows the model to adjust the kernel size automatically according to the channel dimension, enabling more effective inter-channel interaction modeling and a favorable balance between computational efficiency and detection performance.

(4) Feature Weighting: The generated channel weights are multiplied with the input feature map to obtain the weighted feature map.
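Steps (1) to (4) can be sketched in NumPy as follows. This is an illustrative, ECA-style sketch under stated assumptions rather than the authors' code: the two parameters that relate kernel size to channel count are set to γ = 2 and b = 1, the values used in ECA-Net, because this section does not specify them; the 1D convolution uses zero padding so that each channel receives exactly one weight; and the kernel weights are passed in explicitly instead of being learned. The function names are hypothetical.

```python
import math

import numpy as np


def adaptive_kernel_size(channels, gamma=2.0, b=1.0):
    """Step (3): k = |log2(C)/gamma + b/gamma|_odd (gamma=2, b=1 assumed)."""
    t = math.log2(channels) / gamma + b / gamma
    return 2 * math.floor(t / 2) + 1          # nearest odd integer to t


def channel_adaptive_enhancement(x, kernel):
    """Steps (1), (2), and (4) for a (C, H, W) feature map x.

    kernel: 1D array of odd length k (hypothetical fixed weights here;
    in a trained network these would be learned parameters).
    """
    c = x.shape[0]
    k = kernel.shape[0]
    y = x.mean(axis=(1, 2))                   # (1) GAP: one descriptor per channel
    y_pad = np.pad(y, k // 2)                 # zero padding keeps C outputs
    # (2) 1D convolution across neighboring channels (cross-correlation form).
    z = np.array([np.dot(y_pad[i:i + k], kernel) for i in range(c)])
    weights = 1.0 / (1.0 + np.exp(-z))        # (2) Sigmoid -> channel weights
    return x * weights[:, None, None], weights  # (4) reweight each channel
```

For the standard VGG-16 stage widths of 64, 128, 256, and 512 channels, `adaptive_kernel_size` returns 3, 5, 5, and 5, so wider layers get a slightly larger cross-channel interaction range at negligible parameter cost.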

The detailed process of the Position-Aware Spatial Selection Module (PSS) is illustrated in Figure 3. The objective of this module is to enhance the model’s understanding of spatial structural information by assigning adaptive weights to spatial locations, thereby emphasizing the most salient regions. The process involves the following steps:

A. Horizontal and Vertical Pooling: The input feature map $X \in \mathbb{R}^{C \times H \times W}$ is first pooled along the horizontal and vertical directions to extract spatial structural information. The pooling operations are defined as follows:

$$z_c^{h}(i) = \frac{1}{W} \sum_{j=1}^{W} x_c(i, j), \qquad z_c^{w}(j) = \frac{1}{H} \sum_{i=1}^{H} x_c(i, j)$$

where $z^{h}$ and $z^{w}$ represent the horizontal and vertical features, respectively, and $W$ and $H$ denote the width and height of the feature map. The pooling operations preserve spatial structural information within the image.

B. Generation of Positional Attention Coordinates: The pooled features from the horizontal and vertical directions are concatenated and processed through a convolution operation to generate the positional attention coordinates. The computation is defined as follows:

$$f = \mathrm{Conv}\left(\mathrm{Concat}\left(z^{h}, z^{w}\right)\right)$$

where Conv denotes the convolution operation and Concat represents the concatenation operation. The resulting positional attention coordinates $f$ capture the weighted information of each spatial location.

C. Segmentation of Positional Attention Features: The generated attention coordinates are partitioned to obtain feature maps in the horizontal and vertical directions:

$$f^{h}, f^{w} = \mathrm{Split}(f)$$

where Split denotes the segmentation operation, and $f^{h}$ and $f^{w}$ represent the feature maps in the horizontal and vertical directions, respectively. This step ensures that the Position-Aware Spatial Selection Module can independently extract features along different spatial dimensions, thereby enhancing the model’s spatial representation capability.

D. Final Output: The features from the channel and position-aware spatial selection modules are integrated and weighted to generate the final feature output:

$$Y = X \otimes E$$

where $E$ denotes the combined channel and position attention weight matrix, $\otimes$ denotes element-wise multiplication, and $Y$ represents the multi-content feature map integrating both channel and spatial information, effectively capturing image details and spatial structural characteristics.

(5) Multi-Content Feature Fusion Module: By integrating the feature maps generated by the Channel Adaptive Enhancement Module and the Position-Aware Spatial Selection Module, a multi-level feature representation of the image is obtained. These feature maps jointly capture both channel-wise and spatial positional information. The final feature fusion is formulated as follows:

In this manner, the Hierarchical Semantic Interaction Module fully leverages the strengths of both the channel and position-aware spatial selection modules, thereby enhancing its performance in complex tasks.
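Steps A to D of the Position-Aware Spatial Selection Module can be sketched in NumPy as below. The sketch follows a coordinate-attention-style design and rests on assumptions the text does not state: the convolution in step B is modeled as a 1×1 convolution (a C×C matrix applied to the concatenated pooled features), a Sigmoid is applied to each split branch to obtain weights in (0, 1), and only the spatial branch of the weighting in step D is shown. The function name is hypothetical.

```python
import numpy as np


def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))


def position_aware_spatial_selection(x, conv_weight):
    """x: (C, H, W) feature map; conv_weight: (C, C) matrix acting as a 1x1 conv."""
    c, h, w = x.shape
    z_h = x.mean(axis=2)                       # A: average over width  -> (C, H)
    z_w = x.mean(axis=1)                       # A: average over height -> (C, W)
    # B: concatenate along the spatial axis and mix channels with the 1x1 conv.
    f = conv_weight @ np.concatenate([z_h, z_w], axis=1)   # (C, H + W)
    f_h, f_w = f[:, :h], f[:, h:]              # C: split back into two directions
    a_h = sigmoid(f_h)[:, :, None]             # (C, H, 1) row weights
    a_w = sigmoid(f_w)[:, None, :]             # (C, 1, W) column weights
    return x * a_h * a_w                       # D: weight every spatial location
```

Because each location is weighted by the product of a row weight and a column weight, the module can emphasize a salient region's extent along both axes while keeping the cost linear in H + W rather than H × W.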

3.3. Loss Function

To develop a successful CNN-based model, an effective training strategy, in addition to an efficient architecture and well-designed modules, can further improve performance without increasing the number of parameters. During training, deep supervision is applied to intermediate saliency maps of varying scales, encouraging the network to learn multi-scale salient features. Moreover, inspired by the successful application of hybrid and complementary losses in salient object detection, the loss function integrates the classical pixel-level binary cross-entropy (BCE) loss, the map-level IoU loss, and a metric-aware F-measure loss to further facilitate network optimization. Consequently, a comprehensive loss function $\mathcal{L}$ is formulated to supervise the predicted saliency map $S$, defined as follows:

$$\mathcal{L}(S, G) = \ell_{bce}(S, G) + \ell_{iou}(S, G) + \ell_{f}(S, G)$$

where $G$ denotes the ground truth, and $\ell_{bce}$, $\ell_{iou}$, and $\ell_{f}$ represent the BCE loss, IoU loss, and F-measure loss, respectively. Their formulations are as follows:

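$$\ell_{bce} = -\frac{1}{N}\sum_{i=1}^{N}\left[g_i \log s_i + (1 - g_i)\log(1 - s_i)\right]$$

$$\ell_{iou} = 1 - \frac{\sum_{i=1}^{N} s_i g_i}{\sum_{i=1}^{N}\left(s_i + g_i - s_i g_i\right)}$$

$$\ell_{f} = 1 - F_{\beta} = 1 - \frac{(1 + \beta^2) \cdot P \cdot R}{\beta^2 \cdot P + R}$$

where $g_i \in \{0, 1\}$ and $s_i \in [0, 1]$ denote the ground truth label and the predicted saliency score of the $i$-th pixel, respectively, and $N$ is the number of pixels. The parameter $\beta^2$ is set to 0.3.

Precision ($P$) and Recall ($R$) are defined as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

where $TP = \sum_{i} s_i g_i$, $FP = \sum_{i} s_i (1 - g_i)$, and $FN = \sum_{i} (1 - s_i) g_i$.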
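The hybrid saliency loss can be checked numerically with the NumPy sketch below. It assumes equally weighted BCE, IoU, and F-measure terms, a pixel-averaged BCE, β² = 0.3, and soft counts TP = Σ s·g, FP = Σ s·(1 − g), and FN = Σ (1 − s)·g; the small constant `eps` is an added guard against log(0) and division by zero. It covers a single prediction only, without deep supervision or the edge loss, and the function name is hypothetical.

```python
import numpy as np


def hybrid_saliency_loss(s, g, beta2=0.3, eps=1e-7):
    """BCE + IoU + F-measure loss for one saliency map s and binary mask g.

    Equal weighting of the three terms and the eps guard are assumptions.
    """
    s = np.clip(s.astype(float), eps, 1.0 - eps)     # avoid log(0)
    g = g.astype(float)
    bce = -np.mean(g * np.log(s) + (1.0 - g) * np.log(1.0 - s))
    inter = np.sum(s * g)
    union = np.sum(s + g - s * g)
    iou = 1.0 - inter / union
    tp = inter                                       # soft true positives
    fp = np.sum(s * (1.0 - g))                       # soft false positives
    fn = np.sum((1.0 - s) * g)                       # soft false negatives
    precision = tp / (tp + fp + eps)
    recall = tp / (tp + fn + eps)
    f_beta = (1.0 + beta2) * precision * recall / (beta2 * precision + recall + eps)
    f_loss = 1.0 - f_beta
    return bce + iou + f_loss, (bce, iou, f_loss)
```

For a maximally uncertain prediction (every pixel at 0.5) against a mask that is half foreground, the three terms are ln 2 ≈ 0.693, 2/3, and 0.5, which shows how the map-level IoU and metric-aware F-measure terms add pressure beyond the pixel-wise BCE.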

Additionally, an auxiliary model is employed to learn and generate the edge map $S_e$, which is trained by minimizing the edge loss $\ell_{edge}$ between $S_e$ and the ground-truth edge map $G_e$; $G_e$ is generated following the method described in [17]. Finally, the total loss of the network during training, $\mathcal{L}_{total}$, combines the saliency loss $\mathcal{L}$ applied to the deeply supervised predictions with the edge loss $\ell_{edge}$.

4. Experiments

4.1. Datasets

The experiments in this study are conducted on two publicly available remote sensing salient object detection datasets: ORSSD [2] and EORSSD [5]. ORSSD contains 800 optical remote sensing images with manually annotated pixel-level saliency masks, of which 600 are used for training and 200 for testing. EORSSD, an extended version of ORSSD, consists of 2,000 images, divided into 1,400 for training and 600 for testing.

Both datasets include diverse and challenging real-world remote sensing scenes, such as strong illumination, shadow occlusion, cloud interference, land–sea boundaries, and complex background textures. These conditions pose significant difficulty for salient object detection tasks and provide a comprehensive benchmark for evaluating model robustness and generalization. In our experiments, all images and ground-truth masks are used in their original resolutions, and standard data-augmentation strategies (random flipping, rotation, and cropping) are applied during training to enhance model stability and mitigate overfitting.
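The essential requirement of this augmentation is that every geometric transform is applied identically to an image and its mask, so the labels stay aligned. The minimal NumPy sketch below assumes horizontal flips with probability 0.5, rotations restricted to multiples of 90°, and a uniformly placed crop of a caller-chosen size; the exact probabilities, angles, and crop size used for HSIMNet are not given in the text, and the function name is hypothetical.

```python
import numpy as np


def joint_augment(image, mask, crop_size, rng):
    """Apply the same random flip, 90-degree rotation, and crop to image and mask.

    image: (H, W, C) array; mask: (H, W) array; crop_size: (crop_h, crop_w).
    """
    if rng.random() < 0.5:                       # random horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    k = int(rng.integers(0, 4))                  # rotation by k * 90 degrees
    image = np.rot90(image, k, axes=(0, 1))
    mask = np.rot90(mask, k, axes=(0, 1))
    h, w = mask.shape
    crop_h, crop_w = crop_size
    top = int(rng.integers(0, h - crop_h + 1))   # random crop position
    left = int(rng.integers(0, w - crop_w + 1))
    image = image[top:top + crop_h, left:left + crop_w]
    mask = mask[top:top + crop_h, left:left + crop_w]
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)
```

Passing an explicit `np.random.default_rng(seed)` makes the augmentation reproducible, and restricting rotations to multiples of 90° avoids interpolating the binary mask.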

4.2. Implementation Details

The model is implemented using the PyTorch framework (version 1.13.1), and all experiments are conducted on a workstation equipped with an Intel Core i9-9900X @ 3.50 GHz CPU, 32 GB RAM, and an NVIDIA GeForce RTX 3090 GPU. The proposed HSIMNet model is trained end-to-end using the Adam optimizer.

To configure the training hyperparameters of RSI-SOD, we performed systematic empirical tuning, taking into account the characteristics of high-resolution remote sensing imagery. The initial learning rate () was chosen based on preliminary experiments and common practices for salient object detection networks using the Adam optimizer. We evaluated several candidate learning rates in the range of to and found that produced the most stable optimization behavior while avoiding gradient explosion and oscillations common in complex remote sensing scenes.

Since the multi-scale fusion architecture of HSIMNet leads to high GPU memory consumption, we set the batch size to 4. Experiments with batch sizes of 2, 4, and 8 showed that a batch size of 4 achieved a good balance between training stability and GPU utilization, while a batch size of 8 exceeded the memory capacity of an RTX 3090 GPU.

The maximum number of training epochs was determined through convergence analysis. During training, both the MAE and F-measure curves stabilized before 120 epochs, with minimal improvement thereafter. We therefore set the maximum number of epochs to 120 to ensure efficient training and avoid unnecessary computational cost.

Finally, the decay step size of 40 in the learning rate scheduling was derived through validation experiments. A decay interval of 40 epochs ensures that the learning rate decreases at an appropriate rate, preventing overfitting in the later stages of training while maintaining sufficient learning capacity to handle the complex spatial structures in optical remote sensing imagery.
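The step decay described above can be written as a small function. Only the step size of 40 comes from the text; the initial learning rate and the decay factor are not given in this section, so `base_lr` and `decay_factor` below are placeholders (0.1 is merely an illustrative default). In PyTorch, the same schedule corresponds to `torch.optim.lr_scheduler.StepLR(optimizer, step_size=40, gamma=decay_factor)` with `scheduler.step()` called once per epoch.

```python
def step_decay_lr(epoch, base_lr, step_size=40, decay_factor=0.1):
    """Learning rate at a 0-indexed epoch under step decay.

    base_lr and decay_factor are placeholders; step_size=40 is from the text.
    """
    return base_lr * decay_factor ** (epoch // step_size)
```

With `base_lr=1.0` and a 120-epoch run, epochs 0 to 39 use 1.0, epochs 40 to 79 use 0.1, and epochs 80 to 119 use about 0.01, giving three training stages of equal length.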

4.3. Quantitative Comparison

To validate the effectiveness of the proposed network, it is compared with 12 state-of-the-art methods.

The proposed model is evaluated against SOD methods designed for both NSI and ORSI scenarios, including CorrNet [13], DAFNet [18], EGNet [11], EMFINet [29], F3Net [30], GateNet [31], LVNet [17], MJRBM [32], R3Net [12], SARNet [2], U2Net [33], and SUCA [34]. For a fair comparison, the results of all benchmark methods were computed from the saliency maps publicly released by the original authors on the ORSSD and EORSSD datasets, which minimizes the influence of differing parameter configurations and keeps the evaluation comparable. These methods are standard benchmarks in the field of remote sensing SOD [26].

Table 1 presents the quantitative results for four evaluation metrics: Sm, MAE, maxE, and maxF. Lower MAE values indicate better performance, whereas higher values are better for the other three metrics. Compared with the 12 competing methods, the proposed approach performs strongly across all four metrics, with DAFNet being the closest competitor. On the ORSSD dataset, the proposed method attains the best MAE value and the best maxF score, exceeding the second-best maxF result by 0.0156. It also achieves a notable improvement in Sm, reaching 0.94415, and competitive performance in maxE, ranking second only to DAFNet.
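To make two of these metrics concrete, the NumPy sketch below computes MAE and a simplified maxE for a single image. The E-measure follows the enhanced-alignment formulation (bias matrices of prediction and ground truth, an alignment matrix, and the quadratic enhancement (1 + ξ)²/4), but it omits the special handling that reference implementations apply when the ground truth is entirely foreground or background; the 256 uniformly spaced thresholds are also an assumption. Dataset-level maxE is typically obtained by averaging the per-threshold E-measure over all images before taking the maximum. Sm is not reproduced here because its region- and object-aware terms are considerably longer.

```python
import numpy as np


def mae(pred, gt):
    """Mean absolute error between a saliency map in [0, 1] and a binary mask."""
    return float(np.mean(np.abs(pred.astype(float) - gt.astype(float))))


def e_measure(binary_pred, gt, eps=1e-8):
    """Enhanced-alignment measure for one binarized prediction.

    Simplified: the special cases for all-foreground or all-background
    ground truth used by reference implementations are omitted.
    """
    fm = binary_pred.astype(float)
    g = gt.astype(float)
    phi_fm = fm - fm.mean()                  # bias matrix of the prediction
    phi_gt = g - g.mean()                    # bias matrix of the ground truth
    align = 2.0 * phi_gt * phi_fm / (phi_gt ** 2 + phi_fm ** 2 + eps)
    enhanced = (align + 1.0) ** 2 / 4.0      # maps alignment [-1, 1] to [0, 1]
    return float(enhanced.mean())


def max_e_measure(pred, gt, num_thresholds=256):
    """maxE for one image: best E-measure over uniform binarization thresholds."""
    thresholds = np.linspace(0.0, 1.0, num_thresholds)
    return max(e_measure(pred >= t, gt) for t in thresholds)
```

A prediction identical to the mask scores an E-measure of about 1, while its inverse scores about 0, which illustrates why higher maxE is better whereas lower MAE is better.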

On the EORSSD dataset, the proposed method achieves the best Sm and maxF scores, 0.9205 and 0.9100, respectively. It ranks second in MAE and maxE, trailing DAFNet by only small margins in these two metrics.

To comprehensively evaluate the strengths and weaknesses of each comparative method, the analysis covers multiple dimensions, including the quantitative results presented in Table 1 and the corresponding visualizations.

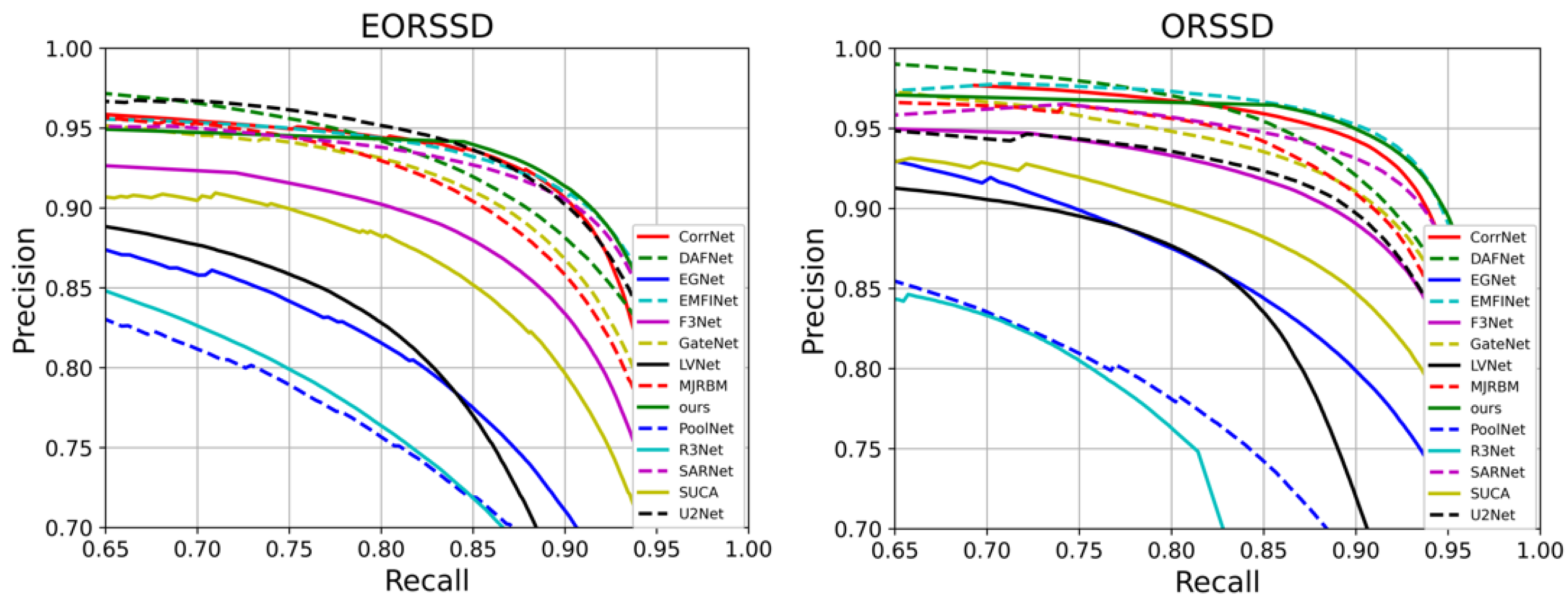

Figure 4 illustrates the PR curves of all methods, where curves closer to the top-right corner indicate superior performance. The results demonstrate that HSIMNet outperforms all competing approaches on both the EORSSD and ORSSD datasets.

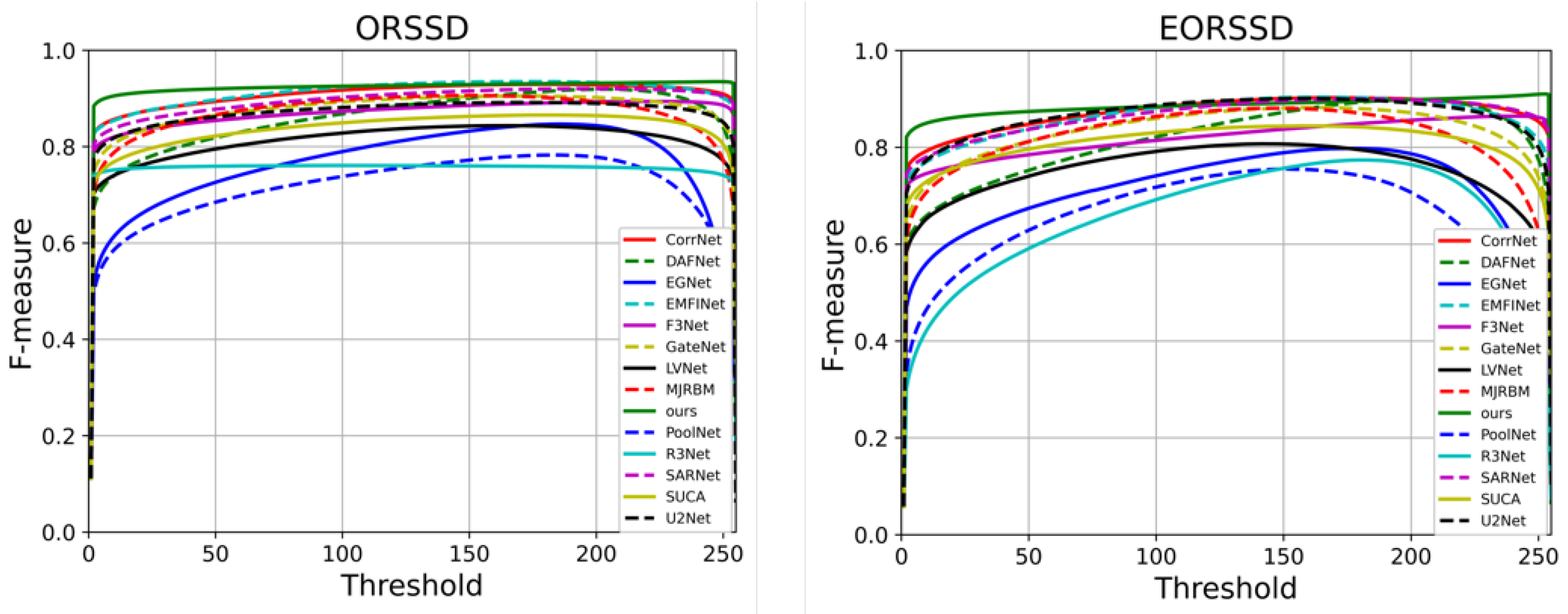

Furthermore, to provide a more detailed comparison, Figure 5 presents the F-measure curves, where larger enclosed areas correspond to better performance. HSIMNet achieves superior results on both the PR and F-measure curves: its PR curve lies closest to the point (1, 1), and its F-measure curve encloses the largest area, confirming the model’s overall advantage.
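PR and F-measure curves of the kind shown in Figures 4 and 5 are obtained by binarizing each saliency map at a sweep of thresholds. The NumPy sketch below does this for a single image with β² = 0.3, the value used in the loss; the 256 thresholds and the `eps` guard are assumptions, and dataset-level curves are usually formed by averaging precision and recall over all images at each threshold, with maxF taken as the maximum of the resulting F-measure curve. The function name is hypothetical.

```python
import numpy as np


def pr_and_f_curves(pred, gt, num_thresholds=256, beta2=0.3, eps=1e-8):
    """Precision, recall, and F-measure of one saliency map at each threshold."""
    gt = gt.astype(bool)
    thresholds = np.linspace(0.0, 1.0, num_thresholds)
    precision = np.empty(num_thresholds)
    recall = np.empty(num_thresholds)
    for idx, t in enumerate(thresholds):
        binary = pred >= t                             # binarize the saliency map
        tp = np.logical_and(binary, gt).sum()          # correctly detected pixels
        precision[idx] = tp / (binary.sum() + eps)
        recall[idx] = tp / (gt.sum() + eps)
    f_measure = (1 + beta2) * precision * recall / (beta2 * precision + recall + eps)
    return thresholds, precision, recall, f_measure
```

For example, `_, p, r, f = pr_and_f_curves(pred, gt)` yields the points of a PR curve as `(r, p)` pairs, and `f.max()` gives maxF. Low thresholds push recall toward 1 at the cost of precision, which is why a curve hugging the top-right corner indicates a better detector.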

4.4. Qualitative Comparison

Figure 6 presents the qualitative visual comparison of various saliency models applied to optical remote sensing images containing targets such as lakes, roads, and both large and small buildings. All models, including HSIMNet and other deep learning-based approaches, were trained on the same datasets. By accurately localizing and segmenting salient objects in complex and challenging scenes, HSIMNet demonstrates superior overall visual performance compared with the other models.

To demonstrate the advantages of HSIMNet more intuitively, several challenging test samples were selected for comparative analysis. The first column shows the original images, the second displays the corresponding ground truth, the third presents the results of HSIMNet, and the remaining columns depict the outputs of other state-of-the-art salient object detection (SOD) methods. As illustrated in Figure 6, HSIMNet exhibits strong robustness in handling globally salient targets, which we attribute primarily to the Hierarchical Semantic Interaction Module, as it extracts semantic features of optical remote sensing images from multiple perspectives and scales.

Furthermore, Figure 6 includes several small-scale salient targets, which pose a significant challenge in optical remote sensing saliency detection. HSIMNet accurately captures the shapes of these smaller salient objects and generates fine-grained saliency maps. For instance, in the fourth row of Figure 6, which depicts a scene containing large buildings, most methods are affected by a narrow road outside the salient region that shares a similar color with the boundary. In contrast, HSIMNet precisely delineates the boundary of the salient region, effectively mitigating interference and accurately localizing the target area. These results further demonstrate the strong saliency detection capability and robustness of HSIMNet across diverse scenarios.

4.5. Ablation Study

In this section, the effectiveness of the different modules within the proposed network is analyzed through a series of ablation experiments, focusing on the Channel Adaptive Enhancement Module and the Position-Aware Spatial Selection Module within the Hierarchical Semantic Interaction Module. A U-shaped encoder–decoder network with skip connections, excluding the Hierarchical Semantic Interaction Module, is adopted as the baseline model, and the contribution of each component is then investigated individually and in combination. The quantitative results for the various module combinations are presented in Table 2 and Table 3.

As shown in Table 2 (EORSSD) and Table 3 (ORSSD), the model’s performance metrics (maxF, maxE, and Sm) consistently improve with the progressive integration of the key modules, CA (Channel Adaptive Enhancement) and PA (Position-Aware Spatial Selection). Each module improves performance on its own, and their combination strengthens the model further. Introducing the CA module alone yields a noticeable improvement, demonstrating its effectiveness in capturing global contextual information and refining saliency detection. Including the PA module also yields significant gains, particularly in maxF and Sm on the EORSSD dataset, highlighting its contribution to fine-grained local feature representation and small-object detection. With both modules integrated, the model achieves its best overall performance, confirming that CA and PA are complementary and jointly strengthen global and local feature extraction.

The baseline model alone struggles to capture both global and detailed salient features. With the integration of the CA and PA modules, maxF, maxE, and Sm increase by 0.0274, 0.0184, and 0.0189, respectively, on the EORSSD dataset, a clear improvement that demonstrates the modules’ effectiveness in complex scenes featuring diverse salient targets such as lakes and roads. On the ORSSD dataset, the addition of the CA and PA modules yields gains of 0.0138, 0.0056, and 0.0123 in maxF, maxE, and Sm, respectively. Although these margins are smaller than those on EORSSD, the improvements are consistent across all three metrics.

Overall, these findings confirm that the CA and PA modules significantly enhance model performance, validating the proposed module design. The larger improvement on EORSSD underscores the modules’ robustness in complex environments, while the consistent gains on ORSSD indicate that the benefits carry over to simpler scenarios as well.

We performed an ablation study to evaluate the contribution of each loss term (Table 4). Using only the CE loss results in lower performance (maxF = 0.8750, maxE = 0.9550, Sm = 0.8950). Adding the IoU loss or the F-measure loss individually improves spatial consistency and metric alignment, respectively. The combination of all three losses achieves the best results (maxF = 0.9100, maxE = 0.9727, Sm = 0.9205), indicating that each component contributes complementary information and justifying the hybrid loss design.

4.6. Limitations and Future Work

Although the proposed HSIMNet achieves state-of-the-art performance on multiple optical remote sensing salient object detection datasets, several limitations remain. First, the model is trained on datasets that may not fully cover the diversity of real-world remote sensing conditions, such as extreme illumination variations, haze, dense cloud coverage, or sensor-dependent radiometric differences. As a result, the model’s generalization to unseen sensors or complex environmental conditions may be limited. Second, while the hierarchical semantic interaction modules effectively capture multi-level features, HSIMNet may still struggle with very small or low-contrast salient objects, or with highly cluttered backgrounds, due to feature loss in deeper layers. Third, the computational cost during training is relatively high because of the multi-branch interaction structure and high-resolution input. Although inference is efficient on high-end GPUs, deploying HSIMNet on low-resource or edge devices may require model compression, pruning, or knowledge distillation. In future work, we plan to address these limitations by:

(1) Extending the training datasets to include more diverse and challenging remote sensing scenarios to improve generalization.

(2) Exploring adaptive multi-scale feature enhancement and attention mechanisms to better capture very small or low-contrast salient objects.

(3) Developing lightweight and resource-efficient variants of HSIMNet to enable deployment on edge platforms while maintaining competitive accuracy.