Knowledge-Guided Symbolic Regression for Interpretable Camera Calibration

Abstract

1. Introduction

1.1. Motivation and Contributions

- restricts the solution space to interpretable, physically plausible models, reducing the risk of overfitting;

- retains flexibility to discover new hybrid models by composing known distortions;

- enables closed-form, differentiable expressions that can be refined via traditional nonlinear optimization (e.g., Levenberg–Marquardt [6]).

1.2. Summary of Contributions

- A symbolic regression framework for intrinsic calibration, combining GP-based model discovery with domain-specific symbolic grammars;

- An extensible model library incorporating classical and modern distortion formulations;

- An empirical evaluation across diverse simulated lenses, demonstrating competitive or superior reprojection accuracy versus standard models.

2. Related Work

3. Background

3.1. Coordinate Systems

3.2. Projective Geometry

3.2.1. Pinhole Camera Model

3.2.2. Lens Camera Models

Brown–Conrady Model

Rational Distortion Model (Extended Brown–Conrady)

Kannala–Brandt Model

Mei–Rives Model

Equidistant Model

Double-Sphere Model

4. Methodologies

- Optimization Methods

4.1. Planar Pattern-Based Calibration

- Projection and Homography

4.2. Symbolic Camera Calibration

4.2.1. Selecting Camera Models via Symbolic Regression

Genetic Programming Setup

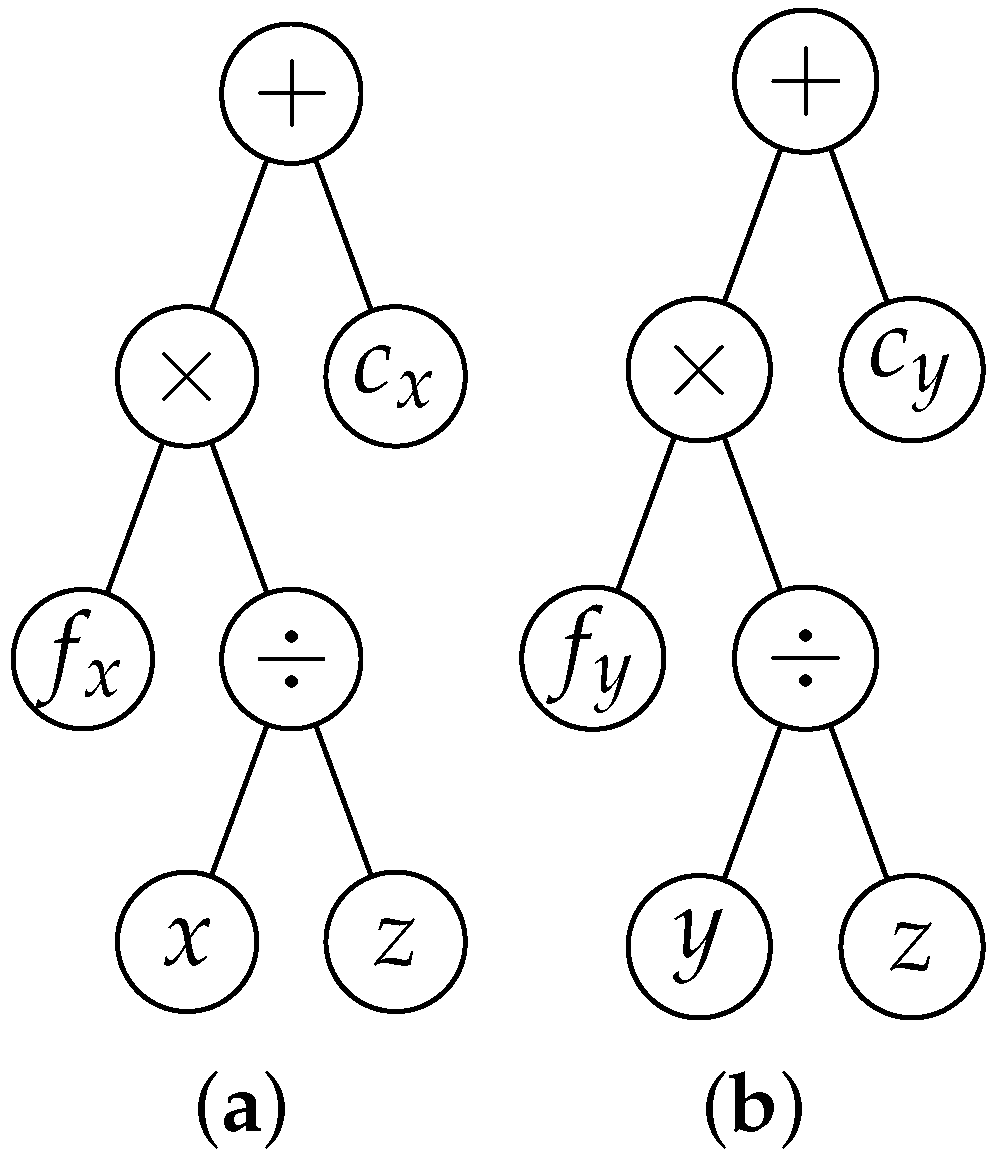

- Representation: Candidate solutions encode parameterized, predefined camera models (e.g., Brown–Conrady, Mei, Kannala–Brandt) as expression trees, combining fixed model structures with variables and constants.

- Initialization: The initial population consists of variants of these predefined models with randomized parameters.

- Fitness: Each candidate is scored by the mean squared error (MSE) between its predicted projections and the observed image points; lower is better.

- Selection: Roulette-wheel or tournament selection probabilistically favors fitter individuals.

- Genetic Operators:

  - Crossover: Exchange parameters or subtrees between parent models, preserving structural validity.

  - Mutation: Randomly perturb parameters or substitute subexpressions within models.

- Evolution: New generations are formed through elitism combined with genetic operators until convergence or stopping criteria are met.
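The loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the three-entry `MODELS` library, the one-dimensional radial mappings, the tournament size, and the mutation scale are all hypothetical, and the multi-island scheme is omitted. Only the default population size, generation count, and operator probabilities are taken from the parameter table.

```python
import math
import random

# Hypothetical mini-library of radial mappings r -> r_d (illustrative only).
# Each entry: (number of parameters, function of radius r and parameter list p).
MODELS = {
    "pinhole": (0, lambda r, p: r),
    "radial": (2, lambda r, p: r * (1 + p[0] * r**2 + p[1] * r**4)),
    "fisheye": (1, lambda r, p: p[0] * math.atan(r)),
}

def random_individual(rng):
    """A predefined model structure with randomized parameters."""
    name = rng.choice(sorted(MODELS))
    return (name, [rng.uniform(-1.0, 1.0) for _ in range(MODELS[name][0])])

def mse(ind, data):
    """Fitness: mean squared error between predictions and observations."""
    name, params = ind
    f = MODELS[name][1]
    return sum((f(r, params) - target) ** 2 for r, target in data) / len(data)

def tournament(pop, data, rng, k=3):
    """Pick the fittest of k randomly drawn individuals."""
    return min(rng.sample(pop, k), key=lambda ind: mse(ind, data))

def crossover(a, b, rng):
    """Exchange parameters only between parents that share a structure."""
    if a[0] != b[0]:
        return (a[0], list(a[1]))
    return (a[0], [rng.choice(pair) for pair in zip(a[1], b[1])])

def mutate(ind, rng, sigma=0.05):
    """Perturb parameters, or occasionally substitute the whole model."""
    if rng.random() < 0.1:
        return random_individual(rng)
    return (ind[0], [p + rng.gauss(0.0, sigma) for p in ind[1]])

def evolve(data, pop_size=100, generations=100, p_cx=0.7, p_mut=0.3, seed=0):
    rng = random.Random(seed)
    pop = [random_individual(rng) for _ in range(pop_size)]
    history = []  # best MSE per generation
    for _ in range(generations):
        elite = min(pop, key=lambda ind: mse(ind, data))
        history.append(mse(elite, data))
        children = [elite]  # elitism: the best individual survives unchanged
        while len(children) < pop_size:
            child = tournament(pop, data, rng)
            if rng.random() < p_cx:
                child = crossover(child, tournament(pop, data, rng), rng)
            if rng.random() < p_mut:
                child = mutate(child, rng)
            children.append(child)
        pop = children
    best = min(pop, key=lambda ind: mse(ind, data))
    history.append(mse(best, data))
    return best, history

# Synthetic observations from a mild barrel distortion (assumed ground truth).
data = [(i / 20, (i / 20) * (1 - 0.2 * (i / 20) ** 2)) for i in range(1, 21)]
best, history = evolve(data, pop_size=30, generations=15)
```

Because the elite is copied unchanged into each new generation, the best MSE recorded in `history` can never increase, which is the practical benefit of combining elitism with stochastic operators.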

4.2.2. Parameter Optimization via Levenberg–Marquardt
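As an illustration of this refinement step, the sketch below fits two parameters of a closed-form projection with a hand-written Levenberg–Marquardt loop. Everything here is an assumption made for the example: the one-coordinate model `u = f * x * (1 + k1 * r^2) + cx`, the fixed principal point, the synthetic noise-free data, and the factor-of-ten damping schedule. It shows the general technique rather than the specific optimizer configuration used in the paper.

```python
def project_u(x, y, f, k1, cx=320.0):
    """Horizontal pixel coordinate for normalized (x, y) with one radial term."""
    r2 = x * x + y * y
    return f * x * (1.0 + k1 * r2) + cx

def residuals_and_jacobian(points, observed, f, k1, cx):
    """Residuals u_pred - u_obs and their analytic derivatives w.r.t. (f, k1)."""
    res, jac = [], []
    for (x, y), u_obs in zip(points, observed):
        r2 = x * x + y * y
        res.append(project_u(x, y, f, k1, cx) - u_obs)
        jac.append((x * (1.0 + k1 * r2), f * x * r2))
    return res, jac

def levenberg_marquardt(points, observed, f, k1, cx=320.0, lam=1e-3, iters=50):
    res, jac = residuals_and_jacobian(points, observed, f, k1, cx)
    cost = sum(r * r for r in res)
    for _ in range(iters):
        if cost < 1e-20:
            break
        # Damped normal equations: (J^T J + lam * diag(J^T J)) delta = -J^T r
        a = sum(j[0] * j[0] for j in jac)
        b = sum(j[0] * j[1] for j in jac)
        d = sum(j[1] * j[1] for j in jac)
        g0 = sum(j[0] * r for j, r in zip(jac, res))
        g1 = sum(j[1] * r for j, r in zip(jac, res))
        a_d, d_d = a * (1.0 + lam), d * (1.0 + lam)
        det = a_d * d_d - b * b
        if det == 0.0:
            break
        df = (-g0 * d_d + b * g1) / det  # 2x2 solve by Cramer's rule
        dk = (-g1 * a_d + b * g0) / det
        new_res, new_jac = residuals_and_jacobian(points, observed, f + df, k1 + dk, cx)
        new_cost = sum(r * r for r in new_res)
        if new_cost < cost:  # accept the step and trust the model more
            f, k1, res, jac, cost = f + df, k1 + dk, new_res, new_jac, new_cost
            lam /= 10.0
        else:  # reject the step and increase damping
            lam *= 10.0
    return f, k1

# Synthetic, noise-free observations from assumed ground truth f = 500, k1 = -0.1.
points = [(i / 10, j / 10) for i in range(-5, 6) for j in range(-5, 6)]
observed = [project_u(x, y, 500.0, -0.1) for x, y in points]
f_est, k1_est = levenberg_marquardt(points, observed, f=400.0, k1=0.0)
```

The analytic Jacobian is available only because the candidate model is a closed-form, differentiable expression, which is the property that makes symbolic models amenable to this kind of refinement.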

5. Experiments

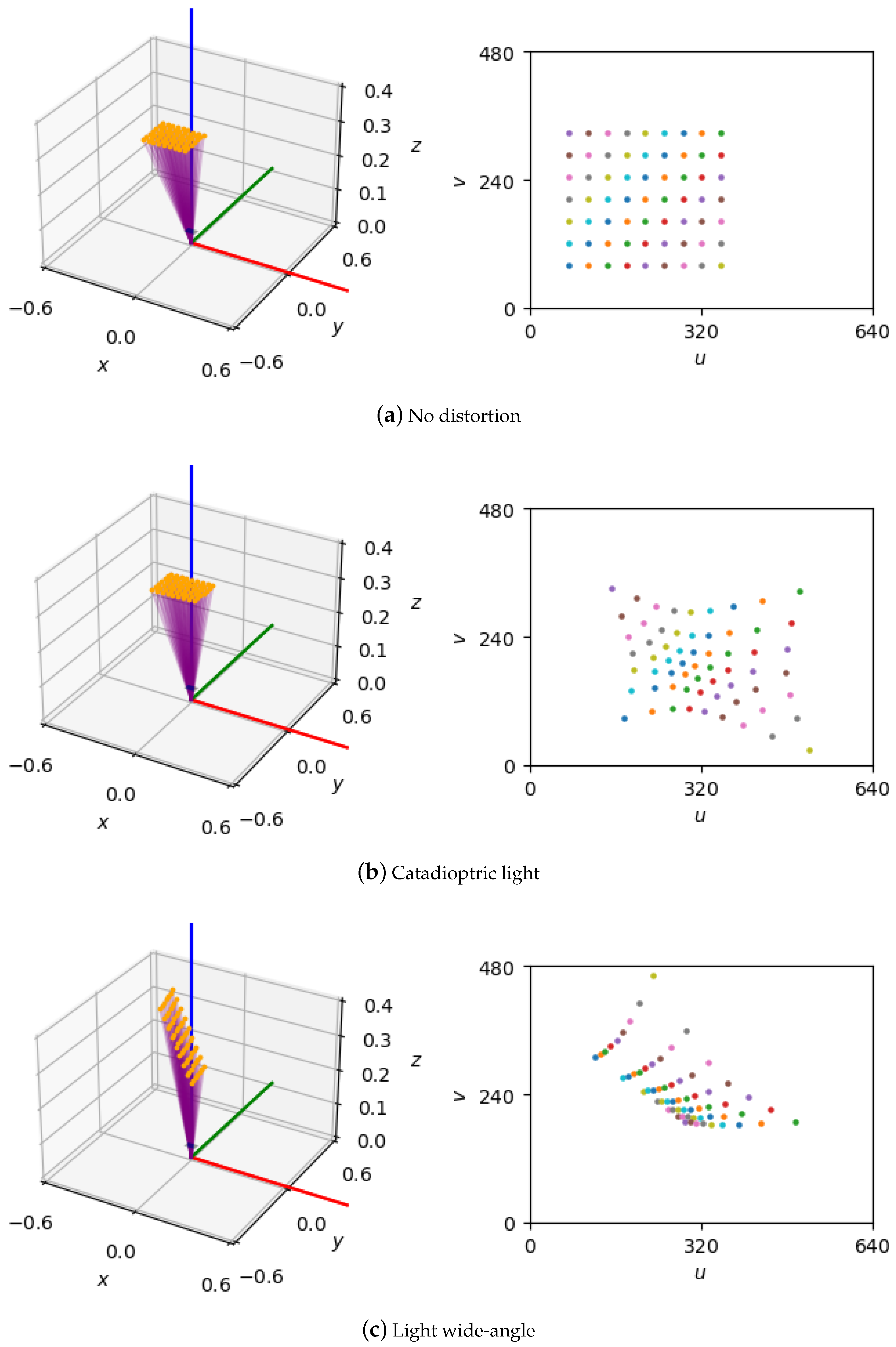

5.1. Numerical Dataset Generation for Camera Calibration

5.2. Estimating the Search Space Size

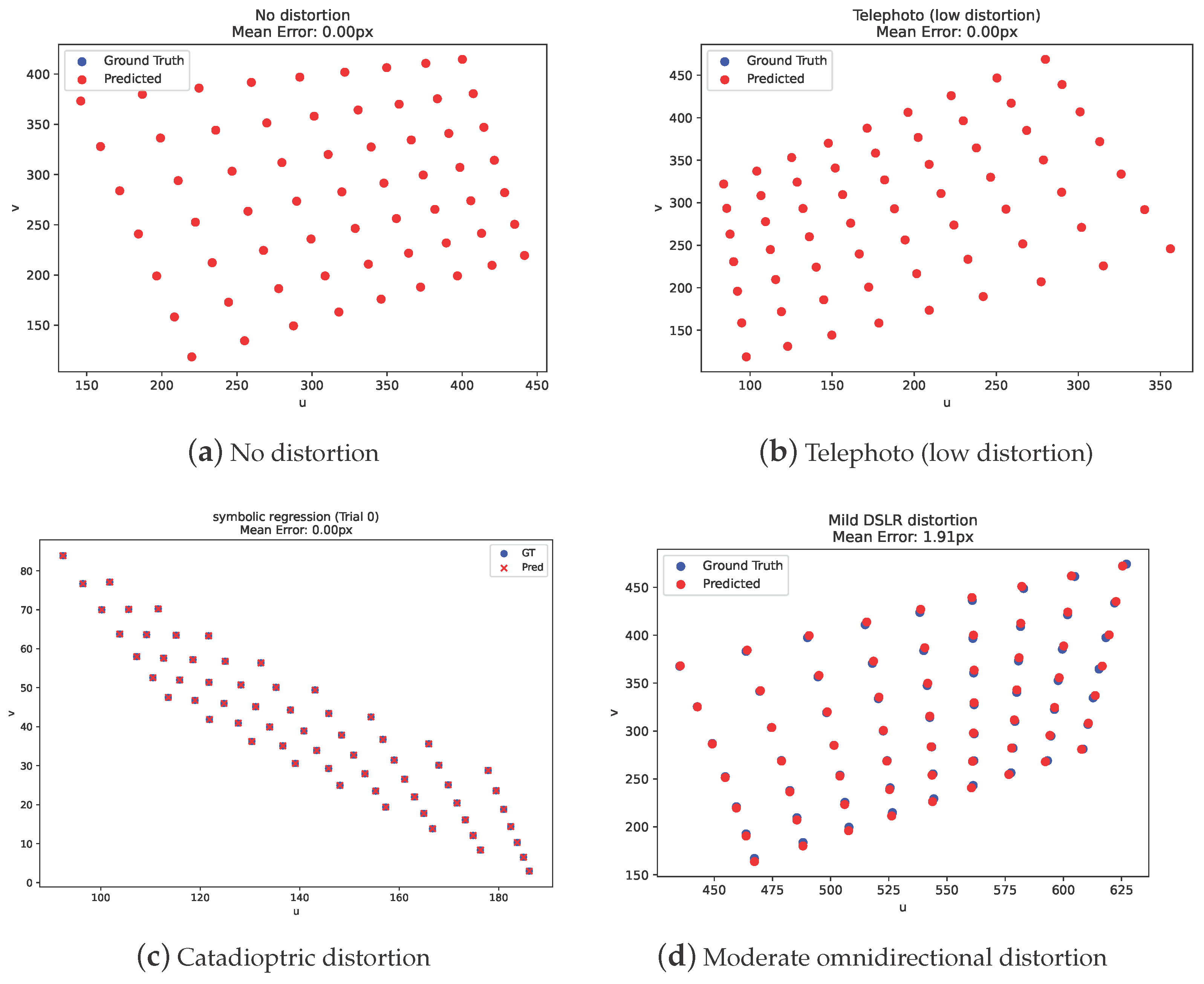

5.3. Evaluating Calibration Performance

- Reprojection Error
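A common way to compute this metric is the Euclidean distance between each predicted and observed image point, summarized as a mean and a standard deviation. The sketch below assumes that definition and assumes the "±" figures reported in the results table are standard deviations; the function names are illustrative.

```python
import math
import statistics

def reprojection_errors(predicted, observed):
    """Per-point Euclidean distance between predicted and observed pixels."""
    return [
        math.hypot(pu - ou, pv - ov)
        for (pu, pv), (ou, ov) in zip(predicted, observed)
    ]

def summarize(errors):
    """Mean and population standard deviation of the per-point errors."""
    return statistics.fmean(errors), statistics.pstdev(errors)

# Two points: one projected exactly, one off by a 3-4-5 triangle.
errors = reprojection_errors([(0.0, 0.0), (3.0, 4.0)], [(0.0, 0.0), (0.0, 0.0)])
mean_err, std_err = summarize(errors)
```

Note that this distance-based summary differs from the MSE used as GP fitness, which averages squared deviations.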

6. Conclusions

Current Limitations and Future Work

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Brown, D.C. Close-range camera calibration. Photogramm. Eng. 1971, 37, 855–866.

2. Zhang, Z. A Flexible New Technique for Camera Calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

3. Scaramuzza, D.; Martinelli, A.; Siegwart, R. A Flexible Technique for Accurate Omnidirectional Camera Calibration and Structure from Motion. In Proceedings of the IEEE International Conference on Computer Vision Systems, New York, NY, USA, 4–7 January 2006.

4. Scharstein, D.; Szeliski, R. A Taxonomy and Evaluation of Dense Two-Frame Stereo Correspondence Algorithms. In Proceedings of the IEEE Workshop on Stereo and Multi-Baseline Vision (SMBV 2001), Kauai, HI, USA, 9–10 December 2001; Volume 47, pp. 7–42.

5. Li, X.; Zhang, B.; Sander, P.V.; Liao, J. Blind Geometric Distortion Correction on Images Through Deep Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 16536–16546.

6. Levenberg, K. A Method for the Solution of Certain Non-Linear Problems in Least Squares. Q. Appl. Math. 1944, 2, 164–168.

7. Durrant-Whyte, H.; Bailey, T. Simultaneous Localization and Mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110.

8. Davison, A.J. Real-Time Simultaneous Localization and Mapping with a Single Camera. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Nice, France, 13–16 October 2003; pp. 1403–1410.

9. Kormushev, P.; Calinon, S.; Caldwell, D.G. Robot Motor Skill Coordination with EM-based Reinforcement Learning. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Taipei, Taiwan, 18–22 October 2010; pp. 3232–3237.

10. Levinson, J.; Thrun, S. Robust Vehicle Localization in Urban Environments Using Probabilistic Maps. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–7 May 2010; pp. 4372–4378.

11. Li, J.; Pi, J.; Wei, P.; Luo, Z.; Yan, G. Automatic Multi-Camera Calibration and Refinement Method in Road Scene for Self-Driving Car. IEEE Trans. Intell. Vehicles 2024, 9, 2429–2438.

12. Azuma, R.T. A Survey of Augmented Reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385.

13. Debevec, P.E.; Malik, J. Modeling and Rendering Architecture from Photographs: A Hybrid Geometry- and Image-Based Approach. In Proceedings of the SIGGRAPH, New Orleans, LA, USA, 4–9 August 1996; pp. 11–20.

14. Ranganathan, P.; Olson, E. Gaussian Process for Lens Distortion Modeling. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 3620–3625.

15. Heikkilä, J.; Silvén, O. A Four-step Camera Calibration Procedure with Implicit Image Correction. In Proceedings of the 1997 Conference on Computer Vision and Pattern Recognition, San Juan, Puerto Rico, 17–19 June 1997; pp. 1106–1112.

16. Mei, C.; Rives, P. Single View Point Omnidirectional Camera Calibration from Planar Grids. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Rome, Italy, 10–14 April 2007; pp. 3945–3950.

17. Schmidt, M.; Lipson, H. Distilling Free-Form Natural Laws from Experimental Data. Science 2009, 324, 81–85.

18. Bongard, J.; Lipson, H. Automated Reverse Engineering of Nonlinear Dynamical Systems. Proc. Natl. Acad. Sci. USA 2007, 104, 9943–9948.

19. Brown, D.C. Decentering Distortion of Lenses. Photogramm. Eng. 1966, 32, 444–462.

20. Kannala, J.; Brandt, S.S. A Generic Camera Model and Calibration Method for Conventional, Wide-Angle, and Fish-Eye Lenses. IEEE Trans. Pattern Anal. Mach. Intell. (PAMI) 2006, 28, 1335–1340.

21. Usenko, V.; Engel, J.; Stückler, J.; Cremers, D. The Double Sphere Camera Model. In Proceedings of the International Conference on 3D Vision (3DV), Verona, Italy, 5–8 September 2018; pp. 552–560.

22. Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle Adjustment—A Modern Synthesis. In Vision Algorithms: Theory and Practice; Lecture Notes in Computer Science; Kanade, T., Kryszczyk, A., Pajdla, T., Shafique, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2000; Volume 1883, pp. 298–372.

23. O’Reilly, U.-M. Genetic Programming II: Automatic Discovery of Reusable Programs. Artif. Life 1994, 1, 439–441.

24. Makke, N.; Chawla, S. Interpretable scientific discovery with symbolic regression: A review. Artif. Intell. Rev. 2024, 57, 2.

25. Wiener, R. Expression Trees. In Generic Data Structures and Algorithms in Go: An Applied Approach Using Concurrency, Genericity and Heuristics; Apress: Berkeley, CA, USA, 2022; pp. 387–399.

26. Manti, S.; Lucantonio, A. Discovering interpretable physical models using symbolic regression and discrete exterior calculus. Mach. Learn. Sci. Technol. 2024, 5, 015005.

27. Bartlett, D.J.; Desmond, H.; Ferreira, P.G. Exhaustive Symbolic Regression. IEEE Trans. Evol. Comput. 2023, 28, 950–964.

28. Xu, Q.S.; Liang, Y.Z. Monte Carlo cross validation. Chemom. Intell. Lab. Syst. 2001, 56, 1–11.

29. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: Cambridge, UK, 2003.

| Parameter | Value |
|---|---|
| Resolution | 640 × 480 pixels |
| Focal length | 35 mm |
| Skew | 0 |
| Principal point | (320, 240) |
| Aspect ratio | 0.75 |

| Label | Model | Coefficients |
|---|---|---|
| No distortion | Pinhole | [] |
| Telephoto (low distortion) | Brown–Conrady | [−0.01, 0.001, 0.0001, −0.0002, 0.0] |
| Light fisheye | Kannala–Brandt | [0.05, −0.01, 0.005, −0.001] |
| Catadioptric light | Mei–Rives | [0.5] |
| Moderate omnidirectional | Mei–Rives | [1.0] |
| 360 camera | Mei–Rives | [1.5] |
| Extreme hyperbolic | Mei–Rives | [2.0] |
| Light wide-angle | Equidistant | [0.01, −0.005, 0.0, 0.0] |

| Primitive Name | Description | Purpose in Camera Modeling | Arguments |
|---|---|---|---|
| normalize | Computes normalized image plane coordinates: $x = X/Z$ or $y = Y/Z$ | Projects 3D points to 2D before applying distortion or intrinsics | X or Y, Z |
| linear_affine | Applies $\text{scale} \cdot x + \text{offset}$ | Models scaling and shifting (e.g., focal length, principal point) | x or y, scale, offset |
| brown_conrady | Classical Brown–Conrady radial–tangential model | Captures lens distortion using radial and tangential terms | x or y, y or x, k1, k2, p1, p2, k3 |
| kannala_brandt | Odd-order polynomial fisheye model | Models extreme wide-angle distortions | x or y, y or x, k0, k1, k2, k3 |
| mei_rives | Spherical projection with mirror parameter $\xi$ | For central catadioptric (mirror-based) systems | X or Y, Y or X, Z, $\xi$ |
| equidistant | Equidistant fisheye model | Ensures angle from optical axis maps linearly to radius | x or y, y or x, k1, k2, k3, k4 |
| double_sphere | Two-sphere projection model with $\xi$, $\alpha$ | Models ultra-wide FOV more accurately than pinhole | X or Y, Y or X, Z, $\xi$, $\alpha$ |
| rational | Rational model: $\frac{1 + k_1 r^2 + k_2 r^4 + k_3 r^6}{1 + k_4 r^2 + k_5 r^4 + k_6 r^6}$ | Flexible model using polynomial numerator/denominator | x, y, k1–k6 |
| omnidirectional | Polynomial mapping for omnicameras | Approximates wide-angle views with polynomial terms | x or y, y or x, c0–c3 |
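To make the primitive library concrete, the sketch below implements four of the primitives and composes them into a full projection. Several details are assumptions rather than facts from the paper: the tangential terms follow the common OpenCV-style convention, `brown_conrady` returns both coordinates at once instead of one per call, the focal length of 500 pixels is hypothetical (the 35 mm value cannot be converted without a sensor size), and the default coefficients are the telephoto row of the dataset table read in the order k1, k2, p1, p2, k3.

```python
import math


def normalize(coord, Z):
    """Normalized image-plane coordinate, e.g. x = X / Z or y = Y / Z."""
    return coord / Z


def linear_affine(coord, scale, offset):
    """Affine map scale * coord + offset (focal length and principal point)."""
    return scale * coord + offset


def brown_conrady(x, y, k1, k2, p1, p2, k3):
    """Radial-tangential distortion of normalized (x, y).

    Assumption: OpenCV-style ordering of the tangential coefficients.
    """
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return x_d, y_d


def mei_rives(coord, other, Z, xi):
    """Unified spherical projection of one coordinate with mirror parameter xi."""
    d = math.sqrt(coord * coord + other * other + Z * Z)
    return coord / (Z + xi * d)


def project(X, Y, Z, f=500.0, cx=320.0, cy=240.0,
            dist=(-0.01, 0.001, 0.0001, -0.0002, 0.0)):
    """Compose primitives: normalize, distort, then apply intrinsics."""
    x, y = normalize(X, Z), normalize(Y, Z)
    x_d, y_d = brown_conrady(x, y, *dist)
    return linear_affine(x_d, f, cx), linear_affine(y_d, f, cy)


# A point on the optical axis lands on the principal point.
u, v = project(0.0, 0.0, 1.0)
```

With `xi = 0` the `mei_rives` primitive reduces to `normalize`, which illustrates how an expression tree built from these primitives can move smoothly between pinhole-like and omnidirectional behavior.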

| Parameter | Value |
|---|---|
| Population Size | 100 |
| Generations | 100 |
| Multi-Island Model | 10 |
| Crossover Probability | 0.7 |
| Mutation Probability | 0.3 |

| Dataset | Pinhole model | Rational model | Symbolic regression model |
|---|---|---|---|
| No distortion | 0.000 ± 0.000 | 0.000 ± 0.000 | 0.000 ± 0.000 |
| Telephoto (low distortion) | 0.000 ± 0.000 | 0.000 ± 0.000 | 0.000 ± 0.000 |
| Light fisheye | 0.716 ± 0.505 | 0.764 ± 0.536 | 0.000 ± 0.000 |
| Catadioptric light | 0.147 ± 0.091 | 0.125 ± 0.089 | 0.000 ± 0.000 |
| Moderate omnidirectional | 0.406 ± 0.331 | 0.376 ± 0.322 | 0.286 ± 1.648 |
| 360 camera | 0.745 ± 0.487 | 0.725 ± 0.484 | 3.323 ± 4.915 |
| Extreme hyperbolic | 0.358 ± 0.217 | 0.644 ± 0.480 | 1.778 ± 2.611 |
| Light wide-angle | 0.291 ± 0.203 | 0.265 ± 0.175 | 0.000 ± 0.000 |

| Dataset | Symbolic Model |
|---|---|
| No distortion (Best U) | |
| No distortion (Best V) | |
| Telephoto (low distortion) (Best U) | |
| Telephoto (low distortion) (Best V) | |
| Light fisheye (Best U) | |
| Light fisheye (Best V) | |
| Catadioptric light (Best U) | |
| Catadioptric light (Best V) | |
| Moderate omnidirectional (Best U) | |
| Moderate omnidirectional (Best V) | |
| 360 camera (Best U) | |
| 360 camera (Best V) | |
| Extreme hyperbolic (Best U) | |
| Extreme hyperbolic (Best V) | |
| Light wide-angle (Best U) | |
| Light wide-angle (Best V) | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pimentel de Figueiredo, R. Knowledge-Guided Symbolic Regression for Interpretable Camera Calibration. J. Imaging 2025, 11, 389. https://doi.org/10.3390/jimaging11110389
