Multi-Channel Spectro-Temporal Representations for Speech-Based Parkinson’s Disease Detection

Abstract

1. Introduction

2. Materials and Methods

2.1. Dataset

2.2. Data Preprocessing

2.3. Multi-Channel Feature Extraction

2.4. Deep-Learning Models

2.5. Experimental Setup

2.6. Evaluation Metrics

2.7. Interpreting Model Predictions with SHAP

3. Results and Discussion

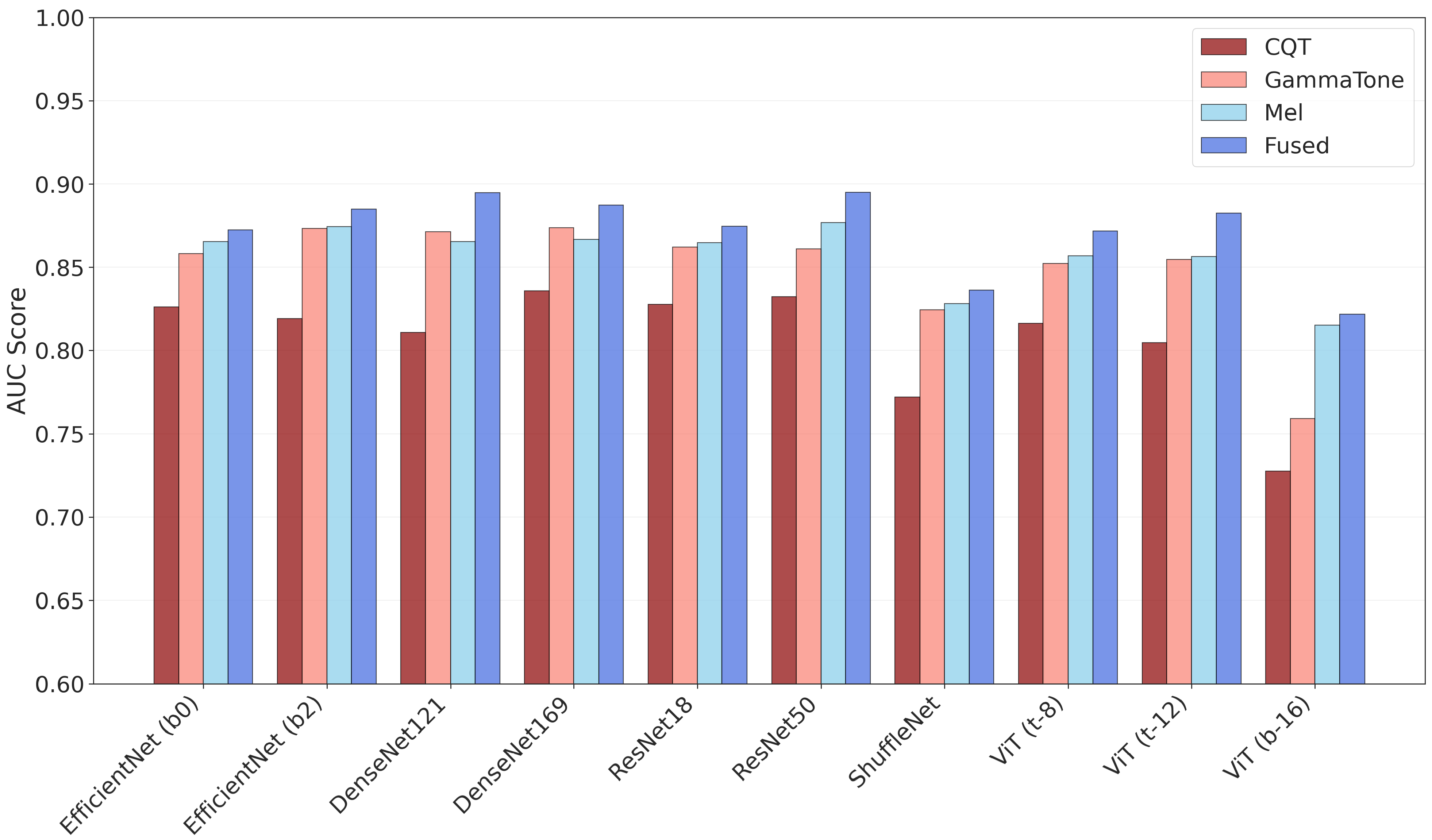

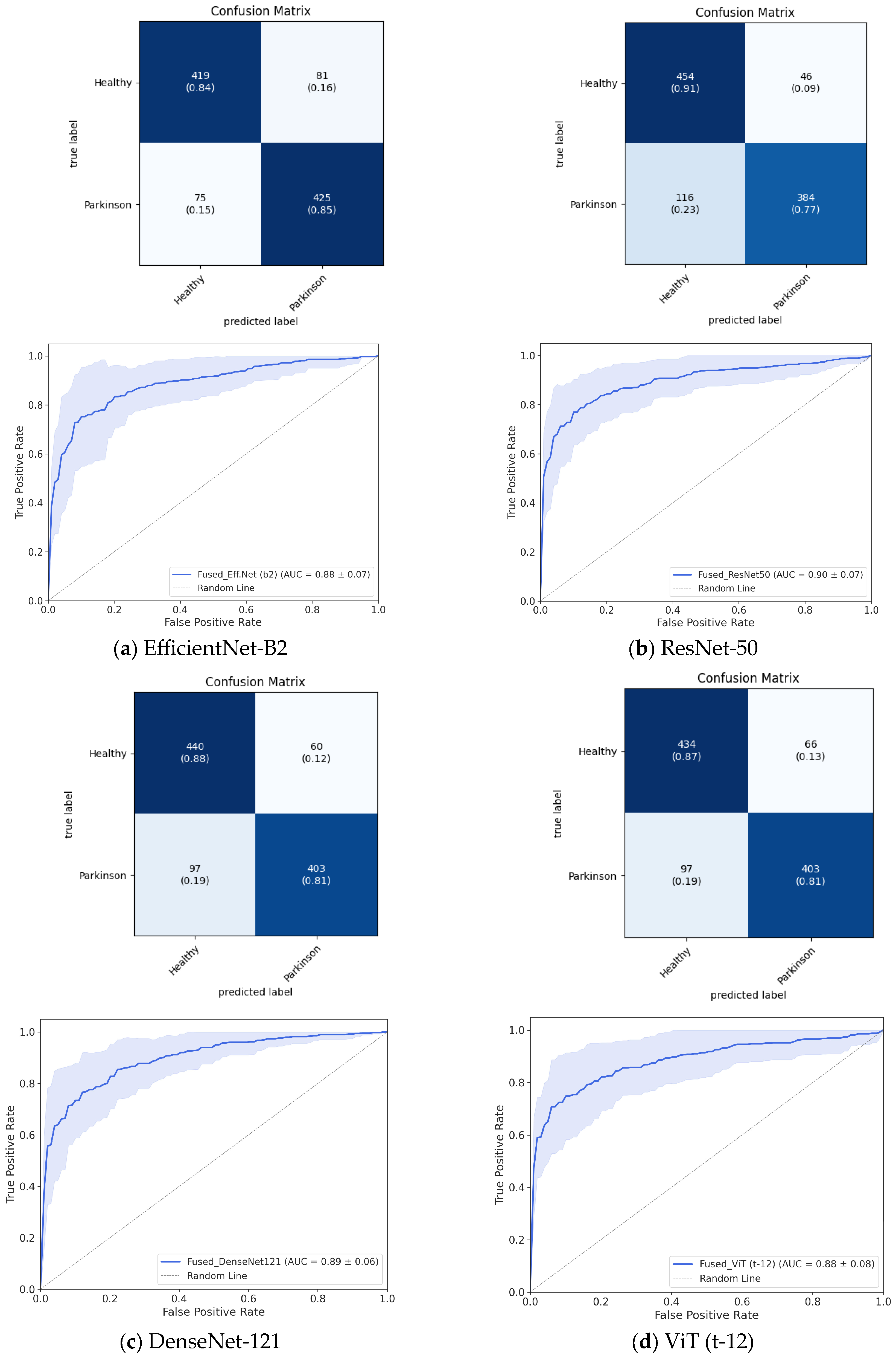

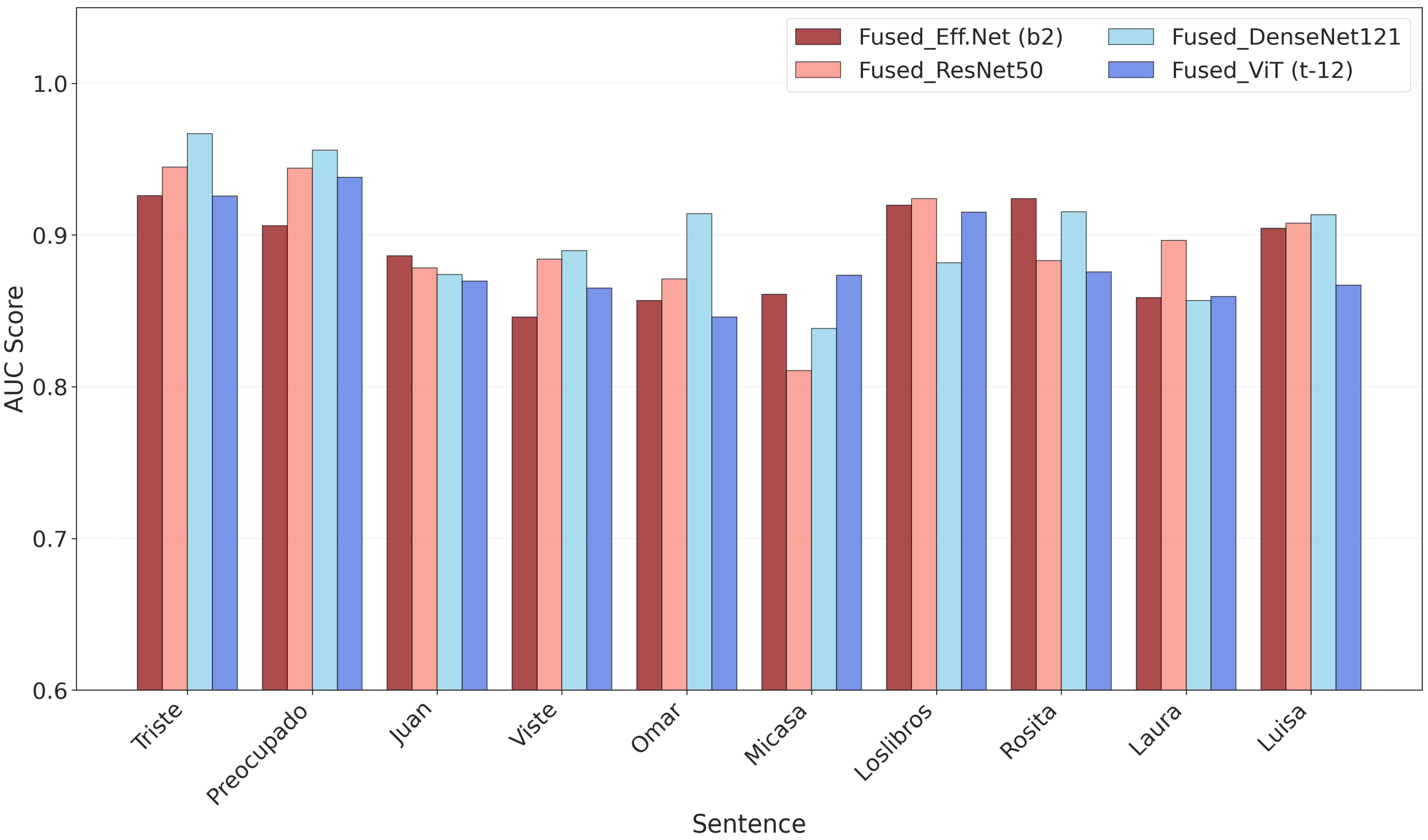

3.1. Classification Performance

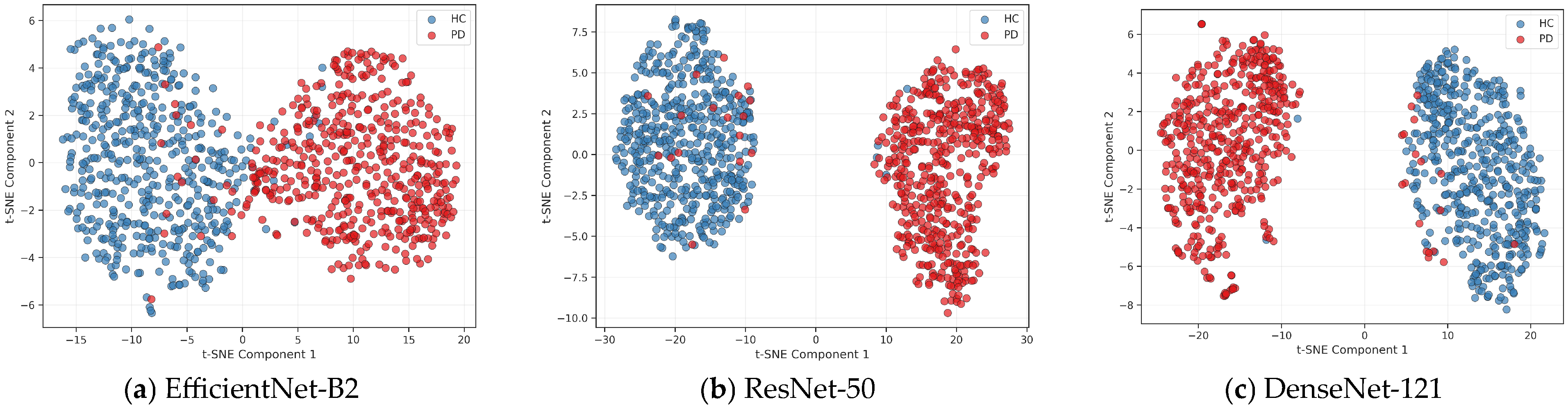

3.2. SHAP and t-SNE Feature Visualization

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PD | Parkinson’s Disease |
| HC | Healthy Controls |
| CQT | Constant-Q Transform |
| CNN | Convolutional Neural Network |
| ViT | Vision Transformer |
| SHAP | SHapley Additive exPlanations |
| AUC | Area Under the ROC Curve |
| ROC | Receiver Operating Characteristic |
| TP | True Positive |
| TN | True Negative |
| FP | False Positive |
| FN | False Negative |
| ERB | Equivalent Rectangular Bandwidth |
| F1 | F1-Score |
| PC-GITA | Parkinson’s Spanish Speech Corpus |
| RGB | Red–Green–Blue |
| t-SNE | t-Distributed Stochastic Neighbor Embedding |
| AdamW | Adaptive Moment Estimation with Weight Decay |
| dB | Decibel |
| UPDRS | Unified Parkinson’s Disease Rating Scale |
| Mel | Mel spectrogram (used contextually) |
| Gamma | Gammatone spectrogram (used contextually) |

References

1. Moustafa, A.A.; Chakravarthy, S.; Phillips, J.R.; Gupta, A.; Keri, S.; Polner, B.; Frank, M.J.; Jahanshahi, M. Motor Symptoms in Parkinson’s Disease: A Unified Framework. Neurosci. Biobehav. Rev. 2016, 68, 727–740.
2. Mei, J.; Desrosiers, C.; Frasnelli, J. Machine Learning for the Diagnosis of Parkinson’s Disease: A Review of Literature. Front. Aging Neurosci. 2021, 13, 633752.
3. Ramig, L.A.; Fox, C.M. Treatment of Dysarthria in Parkinson Disease. In Therapy of Movement Disorders; Reich, S.G., Factor, S.A., Eds.; Current Clinical Neurology; Springer International Publishing: Cham, Switzerland, 2019; pp. 37–39. ISBN 978-3-319-97896-3.
4. Rusz, J.; Cmejla, R.; Ruzickova, H.; Ruzicka, E. Quantitative Acoustic Measurements for Characterization of Speech and Voice Disorders in Early Untreated Parkinson’s Disease. J. Acoust. Soc. Am. 2011, 129, 350–367.
5. Cao, F.; Vogel, A.P.; Gharahkhani, P.; Renteria, M.E. Speech and Language Biomarkers for Parkinson’s Disease Prediction, Early Diagnosis and Progression. NPJ Park. Dis. 2025, 11, 57.
6. Bridges, B.; Taylor, J.; Weber, J.T. Evaluation of the Parkinson’s Remote Interactive Monitoring System in a Clinical Setting: Usability Study. JMIR Hum. Factors 2024, 11, e54145.
7. Hssayeni, M.D.; Jimenez-Shahed, J.; Burack, M.A.; Ghoraani, B. Symptom-Based, Dual-Channel LSTM Network for The Estimation of Unified Parkinson’s Disease Rating Scale III. In Proceedings of the 2019 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI), Chicago, IL, USA, 19–22 May 2019; pp. 1–4.
8. Ngo, Q.C.; Motin, M.A.; Pah, N.D.; Drotár, P.; Kempster, P.; Kumar, D. Computerized Analysis of Speech and Voice for Parkinson’s Disease: A Systematic Review. Comput. Methods Programs Biomed. 2022, 226, 107133.
9. Govindu, A.; Palwe, S. Early Detection of Parkinson’s Disease Using Machine Learning. Procedia Comput. Sci. 2023, 218, 249–261.
10. Wang, Q.; Fu, Y.; Shao, B.; Chang, L.; Ren, K.; Chen, Z.; Ling, Y. Early Detection of Parkinson’s Disease from Multiple Signal Speech: Based on Mandarin Language Dataset. Front. Aging Neurosci. 2022, 14, 1036588.
11. Motin, M.A.; Pah, N.D.; Raghav, S.; Kumar, D.K. Parkinson’s Disease Detection Using Smartphone Recorded Phonemes in Real World Conditions. IEEE Access 2022, 10, 97600–97609.
12. Mamun, M.; Mahmud, M.I.; Hossain, M.I.; Islam, A.M.; Ahammed, M.S.; Uddin, M.M. Vocal Feature Guided Detection of Parkinson’s Disease Using Machine Learning Algorithms. In Proceedings of the 2022 IEEE 13th Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (UEMCON), New York, NY, USA, 26–29 October 2022; IEEE: New York, NY, USA, 2022; pp. 566–572.
13. Hireš, M.; Drotár, P.; Pah, N.D.; Ngo, Q.C.; Kumar, D.K. On the Inter-Dataset Generalization of Machine Learning Approaches to Parkinson’s Disease Detection from Voice. Int. J. Med. Inf. 2023, 179, 105237.
14. Van Gelderen, L.; Tejedor-García, C. Innovative Speech-Based Deep Learning Approaches for Parkinson’s Disease Classification: A Systematic Review. Appl. Sci. 2024, 14, 7873.
15. Hireš, M.; Gazda, M.; Drotár, P.; Pah, N.D.; Motin, M.A.; Kumar, D.K. Convolutional Neural Network Ensemble for Parkinson’s Disease Detection from Voice Recordings. Comput. Biol. Med. 2022, 141, 105021.
16. Quan, C.; Ren, K.; Luo, Z.; Chen, Z.; Ling, Y. End-to-End Deep Learning Approach for Parkinson’s Disease Detection from Speech Signals. Biocybern. Biomed. Eng. 2022, 42, 556–574.
17. Balaji, E.; Brindha, D.; Vinodh, K.E.; Vikrama, R. Automatic and Non-Invasive Parkinson’s Disease Diagnosis and Severity Rating Using LSTM Network. Appl. Soft Comput. 2021, 108, 107463.
18. Rey-Paredes, M.; Pérez, C.J.; Mateos-Caballero, A. Time Series Classification of Raw Voice Waveforms for Parkinson’s Disease Detection Using Generative Adversarial Network-Driven Data Augmentation. IEEE Open J. Comput. Soc. 2025, 6, 72–84.
19. Moro-Velazquez, L.; Gomez-Garcia, J.A.; Arias-Londoño, J.D.; Dehak, N.; Godino-Llorente, J.I. Advances in Parkinson’s Disease Detection and Assessment Using Voice and Speech: A Review of the Articulatory and Phonatory Aspects. Biomed. Signal Process. Control 2021, 66, 102418.
20. Costantini, G.; Cesarini, V.; Di Leo, P.; Amato, F.; Suppa, A.; Asci, F.; Pisani, A.; Calculli, A.; Saggio, G. Artificial Intelligence-Based Voice Assessment of Patients with Parkinson’s Disease Off and On Treatment: Machine vs. Deep-Learning Comparison. Sensors 2023, 23, 2293.
21. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023, arXiv:1706.03762v7.
22. Tougui, I.; Zakroum, M.; Karrakchou, O.; Ghogho, M. Transformer-Based Transfer Learning on Self-Reported Voice Recordings for Parkinson’s Disease Diagnosis. Sci. Rep. 2024, 14, 30131.
23. Klempir, O.; Skryjova, A.; Tichopad, A.; Krupicka, R. Ranking Pre-Trained Speech Embeddings in Parkinson’s Disease Detection: Does Wav2Vec 2.0 Outperform Its 1.0 Version across Speech Modes and Languages? Comput. Struct. Biotechnol. J. 2025, 27, 2584–2601.
24. Klempíř, O.; Krupička, R. Analyzing Wav2Vec 1.0 Embeddings for Cross-Database Parkinson’s Disease Detection and Speech Features Extraction. Sensors 2024, 24, 5520.
25. Favaro, A.; Tsai, Y.-T.; Butala, A.; Thebaud, T.; Villalba, J.; Dehak, N.; Moro-Velázquez, L. Interpretable Speech Features vs. DNN Embeddings: What to Use in the Automatic Assessment of Parkinson’s Disease in Multi-Lingual Scenarios. Comput. Biol. Med. 2023, 166, 107559.
26. Hemmerling, D.; Wodzinski, M.; Orozco-Arroyave, J.R.; Sztaho, D.; Daniol, M.; Jemiolo, P.; Wojcik-Pedziwiatr, M. Vision Transformer for Parkinson’s Disease Classification Using Multilingual Sustained Vowel Recordings. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2023, 2023, 1–4.
27. Adnan, T.; Abdelkader, A.; Liu, Z.; Hossain, E.; Park, S.; Islam, M.S.; Hoque, E. A Novel Fusion Architecture for Detecting Parkinson’s Disease Using Semi-Supervised Speech Embeddings. NPJ Park. Dis. 2025, 11, 176.
28. van der Maaten, L.; Hinton, G. Visualizing Data Using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.
29. Lundberg, S.M.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.
30. Ibarra, E.J.; Arias-Londoño, J.D.; Zañartu, M.; Godino-Llorente, J.I. Towards a Corpus (and Language)-Independent Screening of Parkinson’s Disease from Voice and Speech through Domain Adaptation. Bioengineering 2023, 10, 1316.
31. Liu, Y.; Liu, Z.; Luo, X.; Zhao, H. Diagnosis of Parkinson’s Disease Based on SHAP Value Feature Selection. Biocybern. Biomed. Eng. 2022, 42, 856–869.
32. Meral, M.; Ozbilgin, F.; Durmus, F. Fine-Tuned Machine Learning Classifiers for Diagnosing Parkinson’s Disease Using Vocal Characteristics: A Comparative Analysis. Diagnostics 2025, 15, 645.
33. Alalayah, K.M.; Senan, E.M.; Atlam, H.F.; Ahmed, I.A.; Shatnawi, H.S.A. Automatic and Early Detection of Parkinson’s Disease by Analyzing Acoustic Signals Using Classification Algorithms Based on Recursive Feature Elimination Method. Diagnostics 2023, 13, 1924.
34. Shen, M.; Mortezaagha, P.; Rahgozar, A. Explainable Artificial Intelligence to Diagnose Early Parkinson’s Disease via Voice Analysis. Sci. Rep. 2025, 15, 11687.
35. Oiza-Zapata, I.; Gallardo-Antolín, A. Alzheimer’s Disease Detection from Speech Using Shapley Additive Explanations for Feature Selection and Enhanced Interpretability. Electronics 2025, 14, 2248.
36. Orozco-Arroyave, J.R.; Nöth, E. New Spanish Speech Corpus Database for the Analysis of People Suffering from Parkinson’s Disease. In Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC’14), Reykjavik, Iceland, 26–31 May 2014; European Language Resources Association (ELRA): Paris, France, 2014; pp. 342–347.
37. Patterson, R.D.; Robinson, K.; Holdsworth, J.; McKeown, D.; Zhang, C.; Allerhand, M. Complex Sounds and Auditory Images. In Auditory Physiology and Perception; Elsevier: Amsterdam, The Netherlands, 1992; pp. 429–446. ISBN 978-0-08-041847-6.
38. Ayoub, B.; Jamal, K.; Arsalane, Z. Gammatone Frequency Cepstral Coefficients for Speaker Identification over VoIP Networks. In Proceedings of the 2016 International Conference on Information Technology for Organizations Development (IT4OD), Fez, Morocco, 30 March–1 April 2016; pp. 1–5.
39. Lidy, T.; Schindler, A. CQT-Based Convolutional Neural Networks for Audio Scene Classification. In Proceedings of the Workshop on Detection and Classification of Acoustic Scenes and Events, Budapest, Hungary, 3 September 2016.
40. Cnockaert, L.; Schoentgen, J.; Auzou, P.; Ozsancak, C.; Defebvre, L.; Grenez, F. Low-Frequency Vocal Modulations in Vowels Produced by Parkinsonian Subjects. Speech Commun. 2008, 50, 288–300.
41. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009. Available online: https://ieeexplore.ieee.org/document/5206848 (accessed on 20 August 2025).
42. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.
43. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.
44. Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.
45. Ma, N.; Zhang, X.; Zheng, H.-T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In Proceedings of the Computer Vision–ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 122–138.
46. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.
47. Gong, Y.; Chung, Y.-A.; Glass, J. AST: Audio Spectrogram Transformer. In Proceedings of the Interspeech 2021, Brno, Czech Republic, 30 August–3 September 2021; pp. 571–575.
48. Jeong, S.-M.; Kim, S.; Lee, E.C.; Kim, H.J. Exploring Spectrogram-Based Audio Classification for Parkinson’s Disease: A Study on Speech Classification and Qualitative Reliability Verification. Sensors 2024, 24, 4625.
49. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Advances in Neural Information Processing Systems 32, Vancouver, BC, Canada, 8–14 December 2019; Wallach, H., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2019; pp. 8024–8035.
50. McKinney, W. Data Structures for Statistical Computing in Python. In Proceedings of the 9th Python in Science Conference, Austin, TX, USA, 28 June–3 July 2010; pp. 56–61.
51. Harris, C.R.; Millman, K.J.; van der Walt, S.J.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N.J.; et al. Array Programming with NumPy. Nature 2020, 585, 357–362.
52. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.
53. Hunter, J.D. Matplotlib: A 2D Graphics Environment. Comput. Sci. Eng. 2007, 9, 90–95.
54. Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2019, arXiv:1711.05101.
55. Narendra, N.P.; Alku, P. Dysarthric Speech Classification from Coded Telephone Speech Using Glottal Features. Speech Commun. 2019, 110, 47–55.
56. Quatra, M.L.; Turco, M.F.; Svendsen, T.; Salvi, G.; Orozco-Arroyave, J.R.; Siniscalchi, S.M. Exploiting Foundation Models and Speech Enhancement for Parkinson’s Disease Detection from Speech in Real-World Operative Conditions. In Proceedings of the Interspeech 2024, Kos, Greece, 1–5 September 2024; pp. 1405–1409.
57. Zhong, T.Y.; Janse, E.; Tejedor-Garcia, C.; ten Bosch, L.; Larson, M. Evaluating the Usefulness of Non-Diagnostic Speech Data for Developing Parkinson’s Disease Classifiers. In Proceedings of the Interspeech 2025, Rotterdam, The Netherlands, 17–21 August 2025; pp. 3738–3742.
58. Yang, S.; Wang, F.; Yang, L.; Xu, F.; Luo, M.; Chen, X.; Feng, X.; Zou, X. The Physical Significance of Acoustic Parameters and Its Clinical Significance of Dysarthria in Parkinson’s Disease. Sci. Rep. 2020, 10, 11776.
59. Villa-Cañas, T.; Arias-Londoño, J.D.; Orozco-Arroyave, J.R.; Vargas-Bonilla, J.F.; Nöth, E. Low-Frequency Components Analysis in Running Speech for the Automatic Detection of Parkinson’s Disease. In Proceedings of the Interspeech 2015, Dresden, Germany, 6–10 September 2015; pp. 100–104.

| Hyperparameter | Value |
|---|---|
| Epochs | 50 |
| Batch size | 16 |
| Initial learning rate | 3 × 10⁻⁴ |
| Optimizer | AdamW (β₁ = 0.9, β₂ = 0.999, weight decay = 0.01) |
| Scheduler | Cosine annealing with initial warm-up |
| Loss | Cross-entropy |
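The scheduler row above can be illustrated with a small, framework-free sketch. It takes the initial learning rate and epoch count from the table; the warm-up length (`WARMUP_EPOCHS = 5`), the linear warm-up shape, the per-epoch update, and the decay floor of zero are assumptions made only for illustration, since the table does not state them. The function name `lr_at_epoch` is likewise hypothetical.

```python
import math

BASE_LR = 3e-4      # initial learning rate, from the hyperparameter table
TOTAL_EPOCHS = 50   # number of epochs, from the hyperparameter table
WARMUP_EPOCHS = 5   # assumption: the warm-up length is not given in the source


def lr_at_epoch(epoch, base_lr=BASE_LR, total=TOTAL_EPOCHS, warmup=WARMUP_EPOCHS):
    """Learning rate for a 0-indexed epoch: linear warm-up, then cosine decay."""
    if not 0 <= epoch < total:
        raise ValueError("epoch must be in [0, total)")
    if epoch < warmup:
        # Linear ramp that reaches base_lr on the last warm-up epoch.
        return base_lr * (epoch + 1) / warmup
    # Cosine annealing over the remaining epochs, decaying toward zero.
    progress = (epoch - warmup) / (total - warmup)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))


# One learning-rate value per training epoch.
schedule = [lr_at_epoch(e) for e in range(TOTAL_EPOCHS)]
```

In a PyTorch training loop, an equivalent schedule would typically be attached to the AdamW optimizer through a learning-rate scheduler rather than computed by hand; the plain function is used here only to make the shape of the curve explicit.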

| Model | Input Rep. a | Accuracy | F1-Score | Sensitivity | Precision | Specificity | AUC |
|---|---|---|---|---|---|---|---|
| EfficientNet-B2 | CQT | 0.772 ± 0.086 | 0.770 ± 0.085 | 0.768 ± 0.126 | 0.787 ± 0.108 | 0.778 ± 0.126 | 0.819 ± 0.094 |
| EfficientNet-B2 | Mel | 0.828 ± 0.084 | 0.825 ± 0.089 | 0.828 ± 0.145 | 0.837 ± 0.084 | 0.831 ± 0.112 | 0.874 ± 0.094 |
| EfficientNet-B2 | Gamma | 0.822 ± 0.074 | 0.806 ± 0.092 | 0.766 ± 0.156 | 0.876 ± 0.079 | 0.881 ± 0.084 | 0.873 ± 0.075 |
| EfficientNet-B2 | Fused | 0.843 ± 0.051 | 0.843 ± 0.055 | 0.850 ± 0.098 | 0.845 ± 0.066 | 0.838 ± 0.081 | 0.884 ± 0.073 |
| DenseNet-121 | CQT | 0.777 ± 0.084 | 0.781 ± 0.087 | 0.806 ± 0.131 | 0.768 ± 0.084 | 0.748 ± 0.112 | 0.810 ± 0.116 |
| DenseNet-121 | Mel | 0.830 ± 0.072 | 0.819 ± 0.087 | 0.798 ± 0.151 | 0.868 ± 0.083 | 0.864 ± 0.118 | 0.865 ± 0.106 |
| DenseNet-121 | Gamma | 0.818 ± 0.068 | 0.808 ± 0.077 | 0.778 ± 0.121 | 0.853 ± 0.072 | 0.861 ± 0.080 | 0.871 ± 0.075 |
| DenseNet-121 | Fused | 0.842 ± 0.061 | 0.831 ± 0.077 | 0.806 ± 0.148 | 0.881 ± 0.075 | 0.883 ± 0.091 | 0.894 ± 0.063 |
| ResNet-50 | CQT | 0.784 ± 0.083 | 0.764 ± 0.111 | 0.736 ± 0.179 | 0.824 ± 0.090 | 0.836 ± 0.094 | 0.832 ± 0.091 |
| ResNet-50 | Mel | 0.799 ± 0.067 | 0.783 ± 0.092 | 0.764 ± 0.183 | 0.844 ± 0.091 | 0.839 ± 0.120 | 0.876 ± 0.095 |
| ResNet-50 | Gamma | 0.817 ± 0.073 | 0.807 ± 0.083 | 0.778 ± 0.128 | 0.854 ± 0.079 | 0.858 ± 0.089 | 0.861 ± 0.086 |
| ResNet-50 | Fused | 0.837 ± 0.064 | 0.823 ± 0.072 | 0.768 ± 0.099 | 0.895 ± 0.067 | 0.908 ± 0.061 | 0.895 ± 0.074 |
| ShuffleNet | CQT | 0.727 ± 0.072 | 0.707 ± 0.092 | 0.676 ± 0.133 | 0.753 ± 0.065 | 0.774 ± 0.078 | 0.772 ± 0.091 |
| ShuffleNet | Mel | 0.790 ± 0.082 | 0.774 ± 0.098 | 0.750 ± 0.168 | 0.824 ± 0.080 | 0.833 ± 0.094 | 0.828 ± 0.106 |
| ShuffleNet | Gamma | 0.771 ± 0.066 | 0.755 ± 0.076 | 0.718 ± 0.133 | 0.822 ± 0.109 | 0.824 ± 0.123 | 0.824 ± 0.065 |
| ShuffleNet | Fused | 0.800 ± 0.079 | 0.790 ± 0.089 | 0.774 ± 0.152 | 0.831 ± 0.104 | 0.829 ± 0.122 | 0.836 ± 0.087 |
| ViT (t) | CQT | 0.751 ± 0.086 | 0.743 ± 0.088 | 0.720 ± 0.105 | 0.776 ± 0.096 | 0.782 ± 0.119 | 0.804 ± 0.106 |
| ViT (t) | Mel | 0.789 ± 0.080 | 0.768 ± 0.114 | 0.738 ± 0.183 | 0.839 ± 0.084 | 0.842 ± 0.136 | 0.856 ± 0.095 |
| ViT (t) | Gamma | 0.818 ± 0.085 | 0.800 ± 0.110 | 0.764 ± 0.173 | 0.865 ± 0.063 | 0.876 ± 0.065 | 0.854 ± 0.103 |
| ViT (t) | Fused | 0.836 ± 0.073 | 0.828 ± 0.081 | 0.806 ± 0.134 | 0.867 ± 0.080 | 0.867 ± 0.100 | 0.882 ± 0.087 |
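The threshold-based metrics reported in the table above follow from the confusion-matrix counts listed in the abbreviations (TP, TN, FP, FN). The sketch below uses the standard definitions, treating PD as the positive class; the function name `binary_metrics` and the example counts are hypothetical and are not taken from the study. AUC is omitted because it requires the continuous prediction scores rather than the four counts.

```python
def binary_metrics(tp, tn, fp, fn):
    """Standard binary-classification metrics from confusion-matrix counts."""

    def safe_div(num, den):
        # Return 0.0 instead of raising when a denominator is empty.
        return num / den if den else 0.0

    sensitivity = safe_div(tp, tp + fn)   # recall on the positive (PD) class
    specificity = safe_div(tn, tn + fp)   # recall on the negative (HC) class
    precision = safe_div(tp, tp + fp)
    accuracy = safe_div(tp + tn, tp + tn + fp + fn)
    # F1 is the harmonic mean of precision and sensitivity.
    f1 = safe_div(2 * precision * sensitivity, precision + sensitivity)
    return {
        "accuracy": accuracy,
        "f1": f1,
        "sensitivity": sensitivity,
        "precision": precision,
        "specificity": specificity,
    }


# Hypothetical counts for illustration only.
example = binary_metrics(tp=40, tn=45, fp=5, fn=10)
```

Reading sensitivity and specificity side by side, as the table does, shows whether a model's errors fall mainly on PD speakers (low sensitivity) or on healthy controls (low specificity), which accuracy alone does not reveal.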

| Demographic Property | Model | Group | Number of Samples | Group Accuracy | p-Value | Significant? |
|---|---|---|---|---|---|---|
| Gender | DenseNet-121 | Male | 500 | 0.8420 | 0.9037 | No |
| Gender | DenseNet-121 | Female | 500 | 0.8440 | | |
| Gender | ResNet-50 | Male | 500 | 0.8080 | 0.0993 | No |
| Gender | ResNet-50 | Female | 500 | 0.8680 | | |
| Gender | EfficientNet-B2 | Male | 500 | 0.8260 | 0.3845 | No |
| Gender | EfficientNet-B2 | Female | 500 | 0.8520 | | |
| Age | DenseNet-121 | Above 62 | 530 | 0.8532 | 0.5275 | No |
| Age | DenseNet-121 | 62 and below | 470 | 0.8340 | | |
| Age | ResNet-50 | Above 62 | 530 | 0.8787 | 0.1197 | No |
| Age | ResNet-50 | 62 and below | 470 | 0.8019 | | |
| Age | EfficientNet-B2 | Above 62 | 530 | 0.8553 | 0.4260 | No |
| Age | EfficientNet-B2 | 62 and below | 470 | 0.8245 | | |

| Study | F1-Score [%] | Approach |
|---|---|---|
| A. Favaro et al. [25] | 57% a | XGBoost on prosodic, linguistic, and cognitive handcrafted descriptors. |
| A. Favaro et al. [25] | 82% a | Wav2Vec2.0/HuBERT/TRILLsson embeddings from raw audio; pooled + simple classifier. |
| N. Narendra et al. [55] | 68.52 ± 8.85 | 1D-CNN on raw glottal flow (QCP inverse filtering); compared to SVM on handcrafted features. |
| M. L. Quatra et al. [56] | 81.99 ± 8.34 | WavLM base fine-tuned; layer-weighted sum + attention pooling. |
| M. L. Quatra et al. [56] | 80.84 ± 9.17 | HuBERT base, with the same setup, combined with WavLM for optimal performance. |
| T. Yi Zhong et al. [57] | 74.19 ± 10.80 | WavLM base fine-tuned; attention pooling; tested on conversational (TT) vs. clinical (PC-GITA) data. |
| Current Study | 84.35 ± 5.52 | EfficientNet-B2, using multi-channel spectrograms. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Malekroodi, H.S.; Madusanka, N.; Lee, B.-i.; Yi, M. Multi-Channel Spectro-Temporal Representations for Speech-Based Parkinson’s Disease Detection. J. Imaging 2025, 11, 341. https://doi.org/10.3390/jimaging11100341
