1. Introduction

Fatigue driving refers to the impairment of a driver’s physiological and psychological functions due to sleep deprivation or prolonged driving, leading to slower reaction times and diminished driving skills. The resulting delayed operations and imprecise steering corrections significantly increase the risk of traffic accidents [1, 2, 3]. To improve road safety, researchers worldwide are actively developing real-time driver state monitoring systems to detect fatigue status and issue timely warnings.

Extensive research has been carried out both domestically and internationally in the field of driver fatigue detection, yielding significant advancements and valuable insights. The primary methods for fatigue driving detection can currently be categorized into three types: (1) methods based on driver facial features [4, 5], (2) methods based on driver physiological features [6, 7], and (3) methods based on vehicle driving information [8, 9]. As computer vision, artificial intelligence, and CPU/GPU hardware technology continue to develop, fatigue detection methods based on facial features have made significant progress, achieving high accuracy and real-time performance under ideal conditions. Under fatigued conditions, a driver’s facial features typically undergo noticeable changes, such as increased blink frequency, reduced eye opening, and frequent yawning [10, 11]. Facial-feature methods use machine vision technology and convolutional neural networks to extract features and assess fatigue from driver images, offering advantages such as non-invasiveness, efficiency, and low cost. However, in complex real-world driving scenarios, the accuracy of these methods is still challenged by factors such as individual differences, lighting changes, and occlusions. Further research is necessary to enhance their robustness and reliability in actual application environments.

Current fatigue detection methods that are based on facial features, such as the eyes, mouth, and head posture, primarily rely on extracting various features and comparing them to fixed thresholds that are set for each feature. However, since a uniform average threshold is typically used for each feature, this approach struggles to adapt to individual differences among drivers, thereby affecting the overall accuracy of detection. Additionally, if fatigue assessment relies solely on one type of feature (e.g., the eyes or mouth), the method is not adaptable in complex driving scenarios and is easily influenced by environmental factors, such as pose occlusion and changes in lighting conditions. Furthermore, achieving a balance between high detection accuracy and real-time performance in limited computational environments remains a critical challenge for fatigue detection systems when deployed in real-world embedded settings. System design must focus not only on improving algorithm performance, but also on prioritizing model lightweighting and response efficiency to meet the real-time operational requirements of embedded platforms.

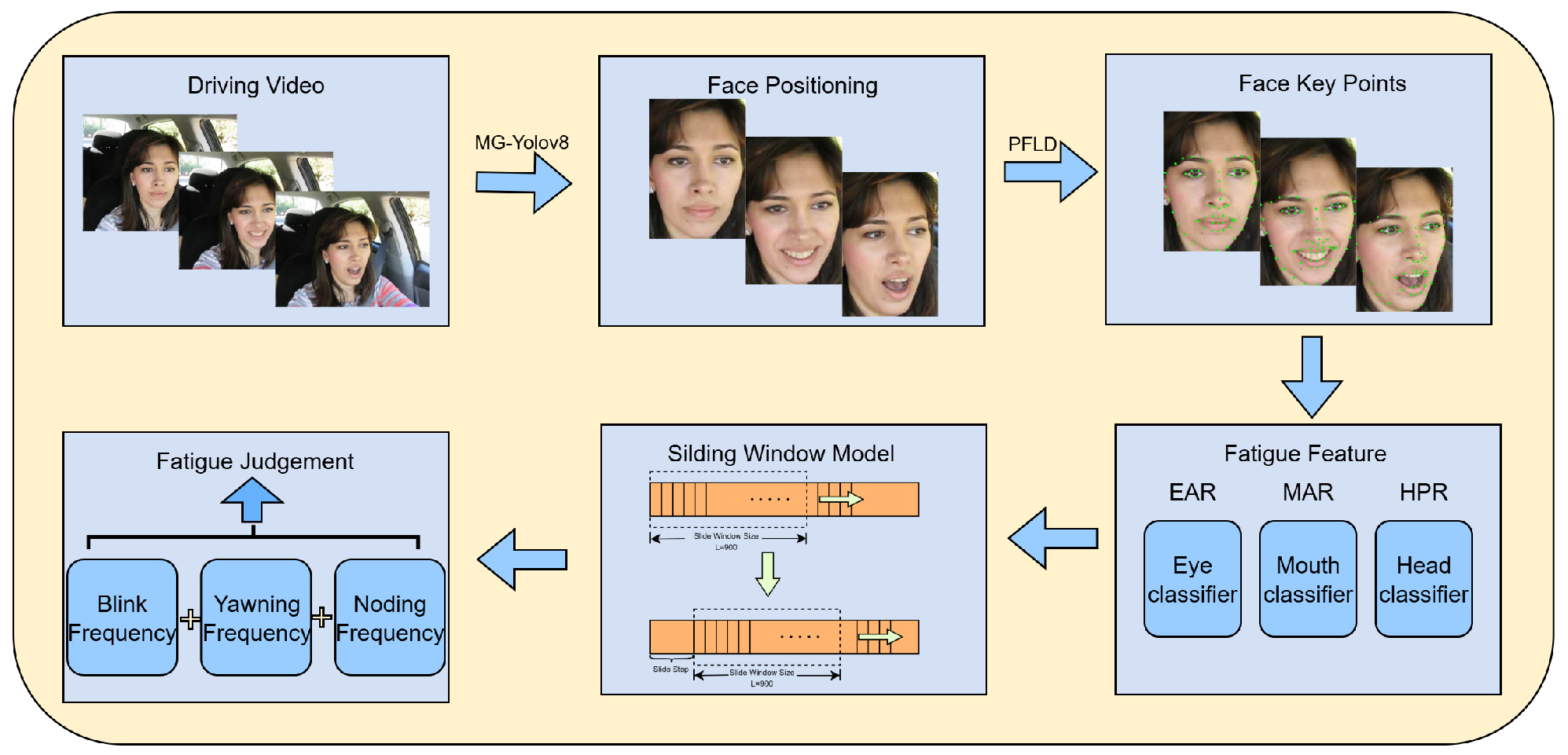

To address the aforementioned challenges, this paper proposes a face-based, multi-feature driver-fatigue detection algorithm built upon an improved YOLOv8 architecture. Our principal contributions are as follows:

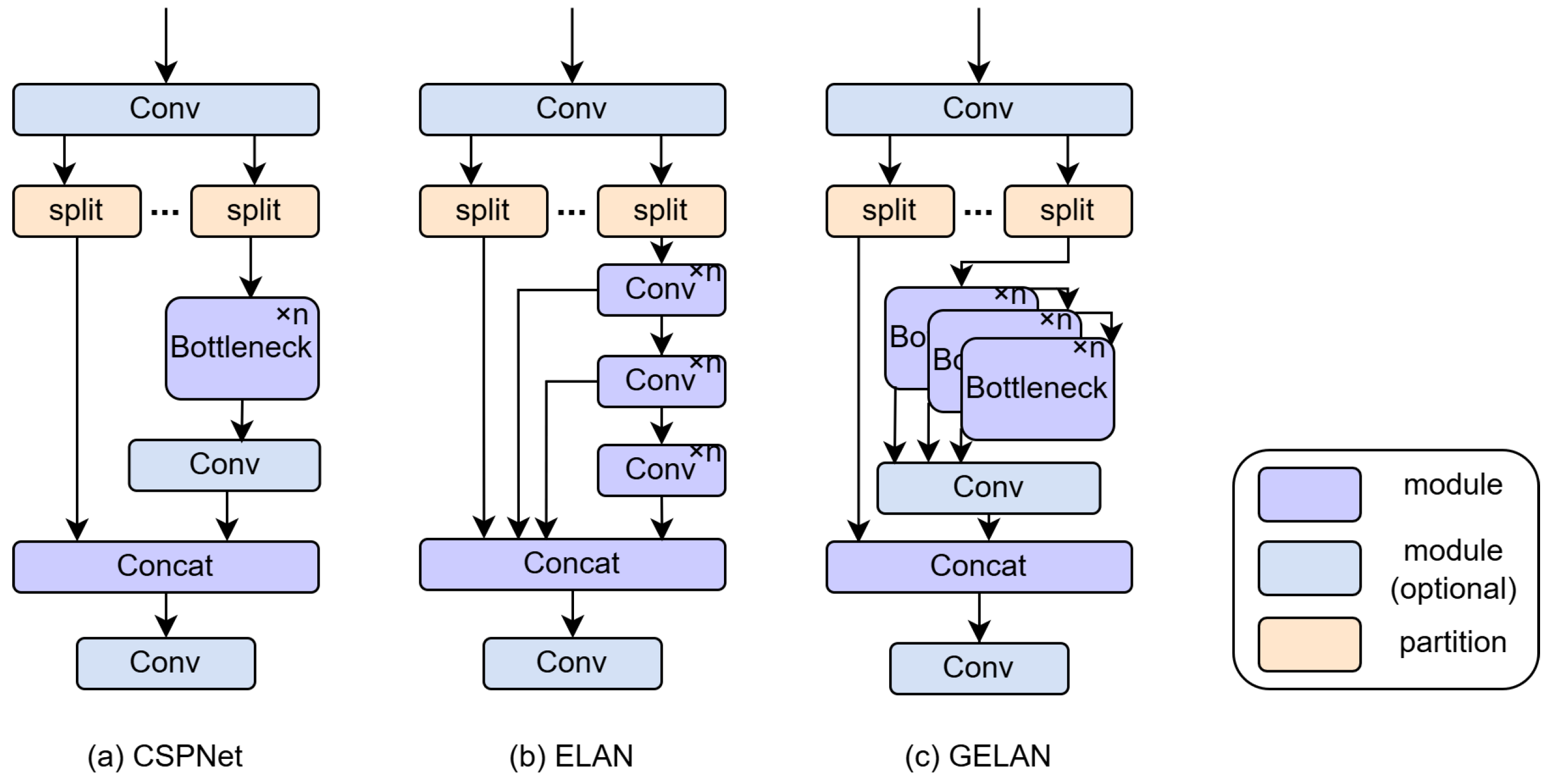

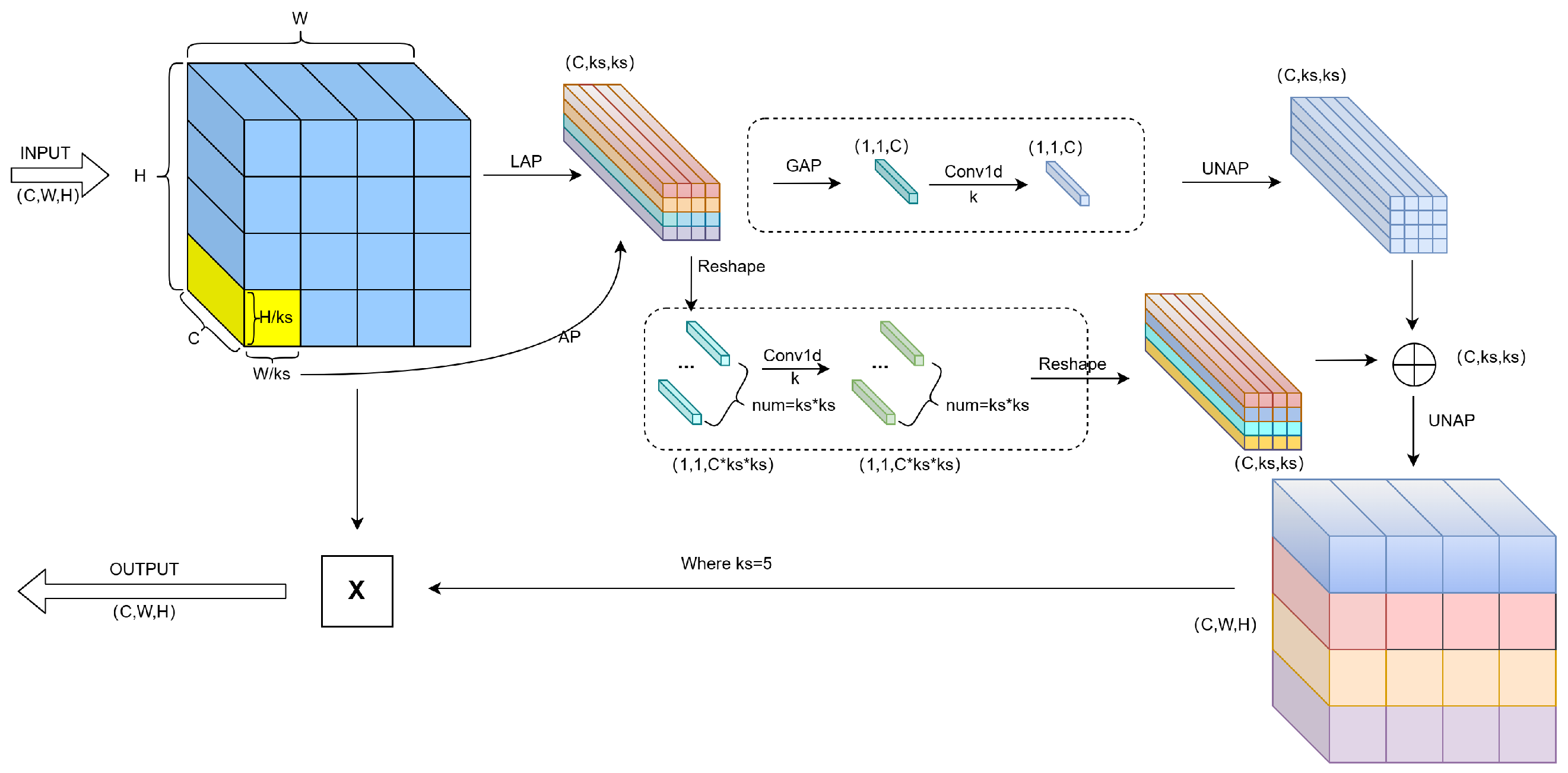

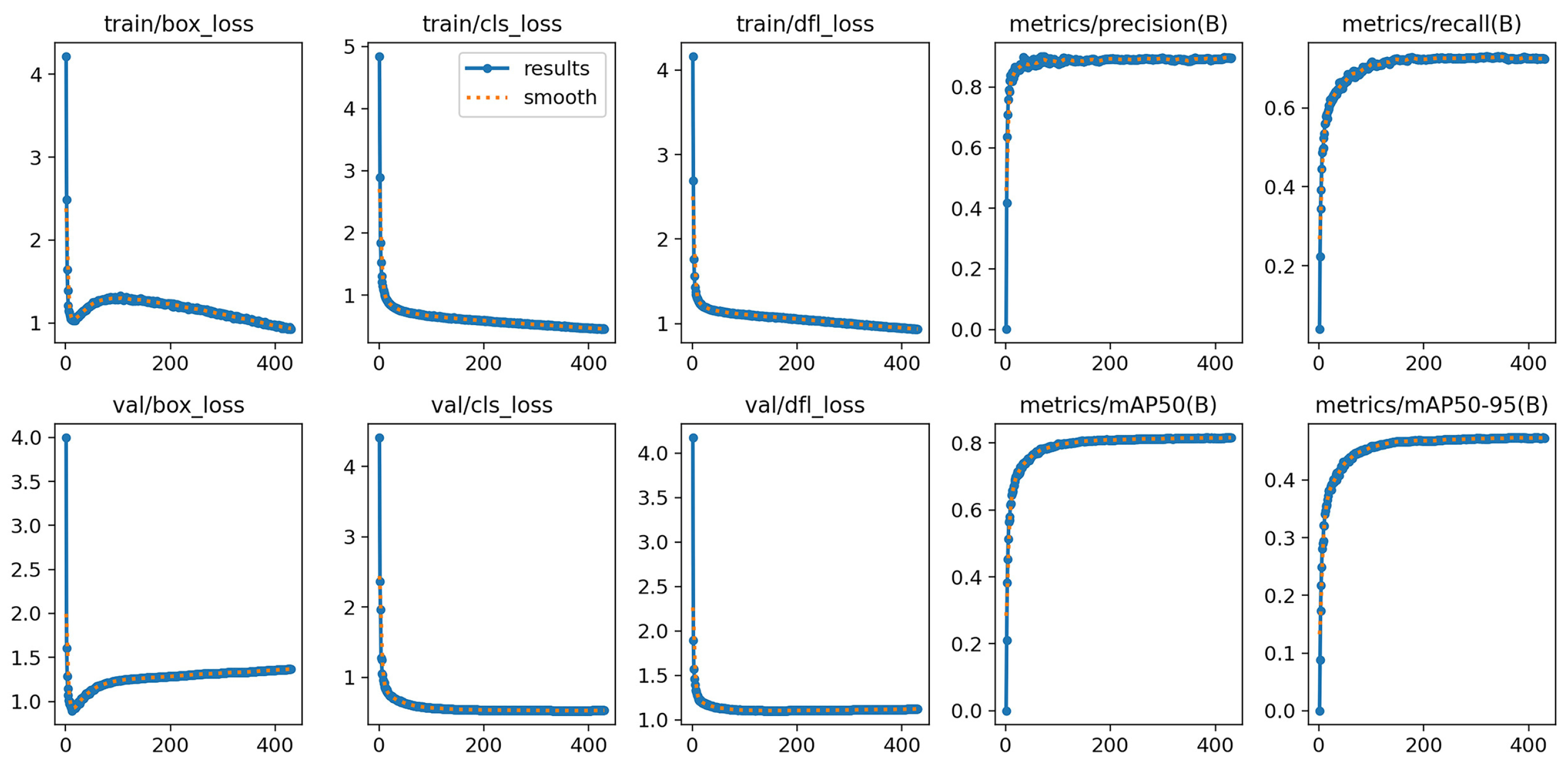

The improved YOLOv8 model introduces the GELAN module, the Mixed Local Channel Attention (MLCA) mechanism, and the EIoU loss function. These enhancements improve face detection accuracy in complex scenes and effectively optimize network structure and inference efficiency.

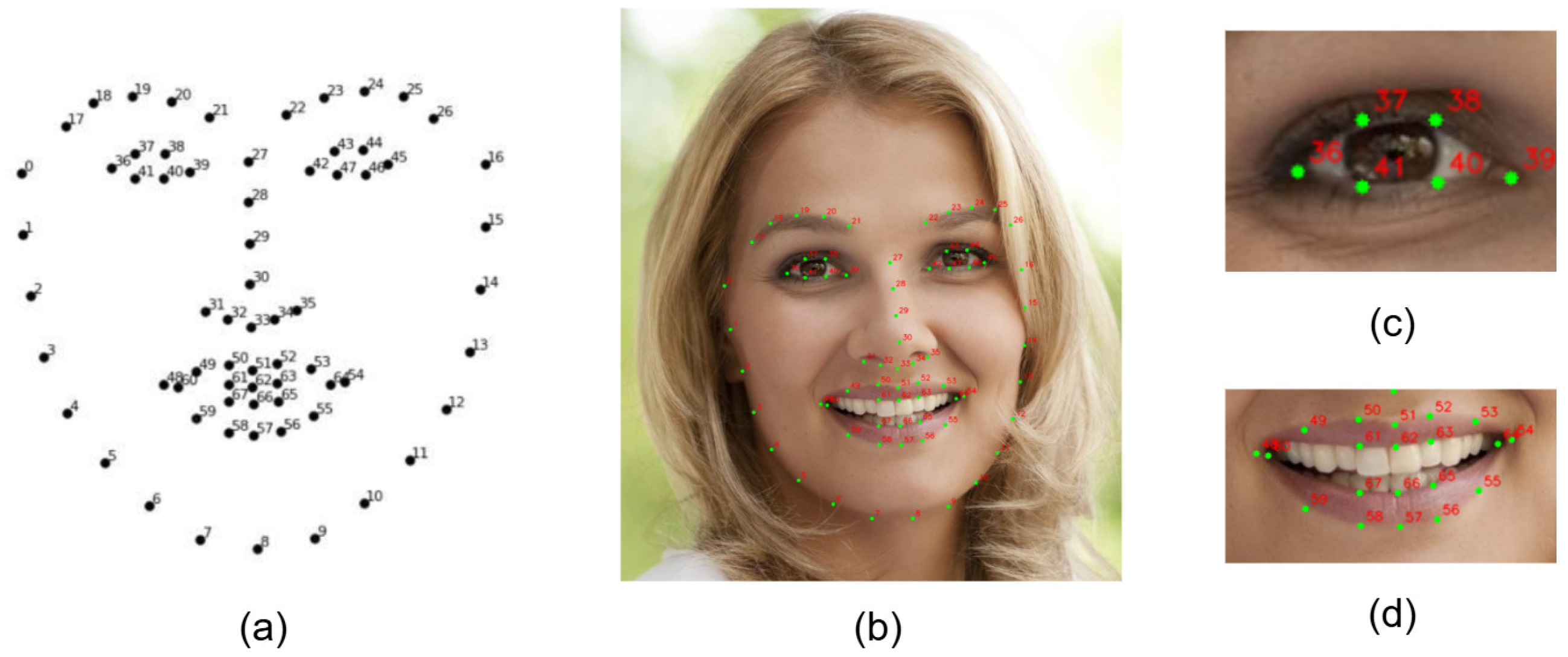

A fatigue detection metric system that integrates multiple dynamic facial features has been developed. This system comprehensively incorporates blink frequency (BF), yawn frequency (YF), nod frequency (NF), and eyelid closure percentage (PERCLOS). This replaces traditional, single-metric discrimination methods and significantly improves the stability and adaptability of the fatigue detection system under multiple scenarios and subjects.

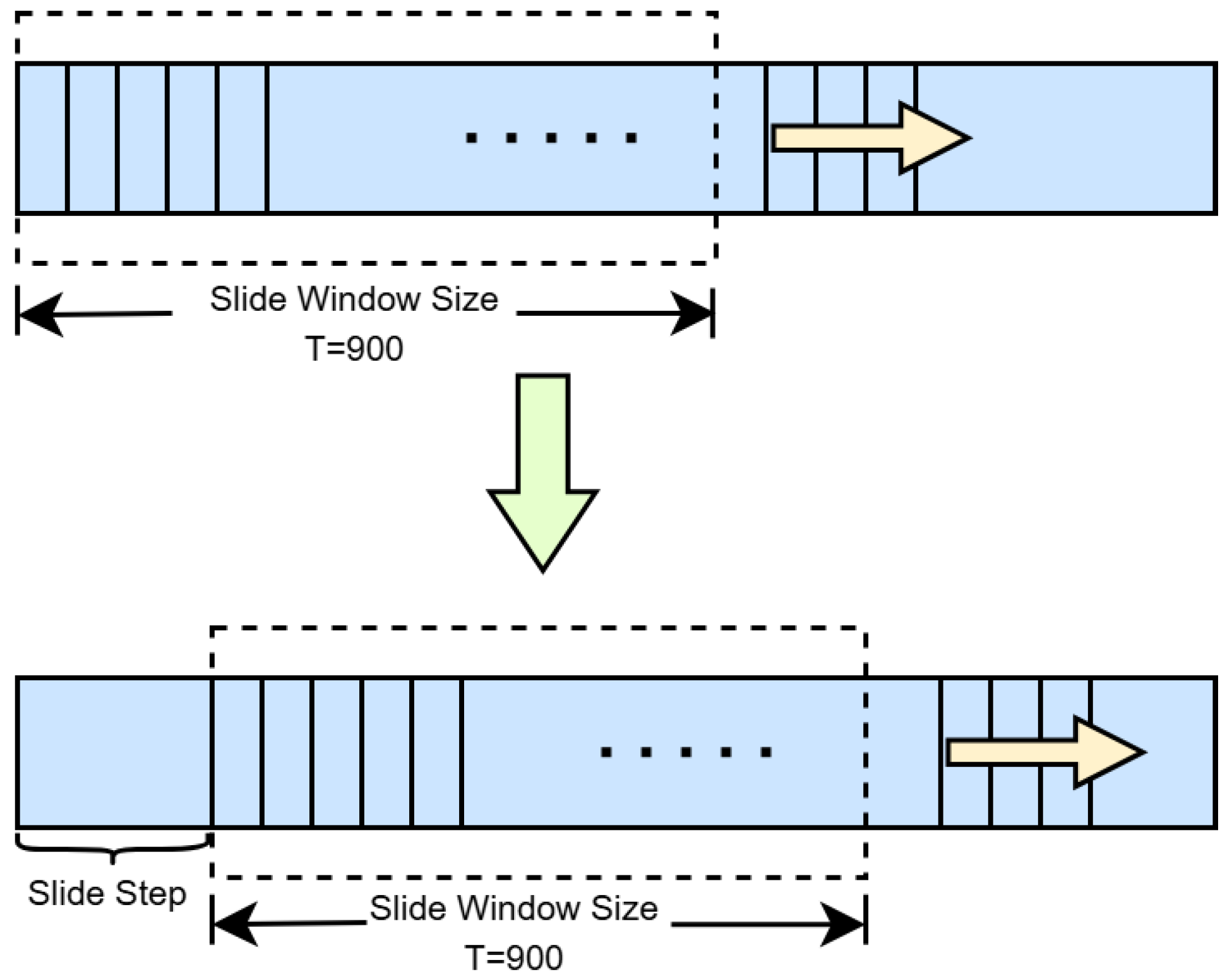

A novel sliding window model based on a dynamic threshold adjustment strategy and an exponential weighted moving average (EWMA) algorithm has been proposed. This model can better adapt to physiological differences among drivers and the temporal evolution patterns of fatigue behavior. Thus, it enhances the stability and individual adaptability of fatigue detection.

Systematic experimental validation was conducted using the publicly available YawDD fatigue driving dataset and a simulated driving platform. The results demonstrate that the proposed method achieves high detection accuracy and real-time processing across various driving environments, indicating strong potential for practical application.
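To make the idea behind the third contribution concrete, the following minimal Python sketch shows one way an EWMA baseline can drive a per-driver threshold. It is an illustration under stated assumptions, not the implementation used in this paper: the class name `AdaptiveEyeThreshold`, the smoothing factor `alpha`, the scaling factor `ratio`, the use of a scalar eye-openness value per frame, and the choice to update the baseline only on open-eye frames are all hypothetical.

```python
from typing import Optional


class AdaptiveEyeThreshold:
    """Per-driver eye-closure threshold derived from an EWMA baseline.

    All parameter values are illustrative assumptions, not values from the paper.
    """

    def __init__(self, alpha: float = 0.05, ratio: float = 0.7) -> None:
        self.alpha = alpha      # assumed EWMA smoothing factor, 0 < alpha <= 1
        self.ratio = ratio      # assumed fraction of the baseline used as threshold
        self.baseline: Optional[float] = None  # running estimate of open-eye value

    def update(self, openness: float) -> bool:
        """Process one frame and return True if the eye counts as closed."""
        if self.baseline is None:
            # The first frame initializes the baseline and is treated as open.
            self.baseline = openness
            return False
        closed = openness < self.ratio * self.baseline
        if not closed:
            # EWMA update: s_t = alpha * x_t + (1 - alpha) * s_{t-1}.
            # Closed frames are skipped so that blinks do not drag the baseline down.
            self.baseline = self.alpha * openness + (1.0 - self.alpha) * self.baseline
        return closed
```

Because the threshold is a fraction of each driver's own running baseline, a driver with naturally narrow eyes is not judged against a population-average value, which is the limitation of fixed thresholds noted above.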

2. Related Work

In recent years, with the rapid advancement of deep learning and computer vision, driver fatigue detection has evolved from traditional static image analysis toward dynamic behavior recognition and multimodal feature fusion. Research in this domain primarily focuses on two core aspects: effective feature representation and optimization of detection performance. In the broader field of general computer vision, multi-feature fusion techniques have achieved remarkable progress, providing essential methodological insights for fatigue detection. For instance, ref. [12] proposed a unified framework for video fusion that enhances temporal feature modeling through multi-frame learning and large-scale benchmarking; ref. [13] explored the integration of vision–language models for image fusion, strengthening cross-modal semantic alignment; ref. [14] introduced an equivariant multimodality image fusion approach that improves robustness to pose and illumination variations through geometric invariance design; ref. [15] employed denoising diffusion models to achieve consistent multi-modality fusion under complex noise conditions; and ref. [16] developed a correlation-driven dual-branch feature decomposition mechanism that refines fused representations by separating shared and modality-specific features. Although these studies were not explicitly designed for driver fatigue detection, they provide a solid theoretical foundation and valuable technical references for developing multi-feature fusion strategies in this domain. Building upon these advances, current research on driver fatigue detection increasingly emphasizes the synergistic utilization of multimodal features to achieve more robust and generalizable recognition of fatigue-related behaviors.

Early studies primarily inferred fatigue from static spatial cues, such as the opening and closing of the eyes and mouth. Viola et al. [17] introduced the AdaBoost cascade classifier to achieve efficient and rapid face detection. With the advent of convolutional neural networks and contemporary object detectors, models such as Faster R-CNN [18], SSD [19], improved MTCNN [20], and YOLO [21] have gained widespread adoption. Wang et al. [22] combined spatial eye-state cues with multi-feature temporal fusion for fatigue recognition, while Zhang et al. [23] used MTCNN to localize facial landmarks. Ji et al. [11] designed ESR and MSR networks on top of MTCNN to identify spatial differences in facial states, and Adhinata et al. [24] paired FaceNet embeddings with a multi-class SVM to analyze temporal blinking patterns. Meanwhile, Deng et al. [25] employed RetinaFace to enhance spatial detection precision, and Liu et al. [26] fused MB-LBP texture features with AdaBoost for graded detection. Despite these advances, many approaches remain sensitive to illumination and occlusion, and do not sufficiently exploit joint spatiotemporal cues. In parallel, the YOLO family has catalyzed real-time detection. Li et al. [27] combined YOLOv3, Dlib, and SVM, validating the real-time capability of this combination on DSD and WIDER FACE. You et al. [28] applied this framework to YawDD, further enhancing spatiotemporal matching and underscoring YOLO’s advantages in coordinated spatiotemporal modeling and lightweight, real-time detection.

In existing studies utilizing driver behavior data, spatial and temporal features remain the dominant focus. Researchers generally acknowledge that spatial features extracted from single-frame images are insufficient to capture the dynamic evolution of fatigue behaviors, necessitating the integration of temporal information for precise recognition. For example, ref. [29] constructed a multi-task fusion system by combining the spatial information of facial features with the temporal sequence of yawning behaviors, achieving recall rates of 84–85% on the YawDD, MiraclHB, and DEAP datasets. Similarly, ref. [30] focused exclusively on temporal characteristics, tracking the time-series variations in the pupil center and the horizontal distance between the eyes using occlusion-based standards, and achieved 89% accuracy with an SVM classifier on public benchmark datasets. Building upon these efforts, ref. [31] proposed a spatio-temporal graph convolutional network (ST-GCN) that employs a dual-stream architecture to capture both the spatial structural features of facial landmarks and the temporal dynamics of fatigue behaviors, fusing first- and second-order spatio-temporal information. This approach achieved average accuracies of 93.4% and 92.7% on the YawDD and NTHU-DDD datasets, respectively, demonstrating a deep synergy between spatial and temporal feature learning. In addition, ref. [32] centered on temporal features supported by spatial information extracted via Dlib, analyzing the time-series pattern of blink duration, and obtained 94.2% accuracy in open-eye state detection on the IMM face dataset after occlusion preprocessing. Ref. [33] explicitly adopted a spatial–temporal dual-feature framework, designing two model variants: one used LSTM to extract temporal features of fatigue behavior combined with Dlib and a linear SVM to achieve 79.9% accuracy, while the other combined CNN-based spatial extraction with LSTM temporal modeling and a Softmax classifier, achieving 97.5% accuracy, though with higher computational cost due to the large multi-feature matrices. Likewise, ref. [34] employed MTCNN and Dlib to extract spatial features of facial, mouth, and eye regions, combining them with temporal sequences at constant frame rates to compute fatigue states, yielding 98.81% accuracy on the YawDD and NTHU-DDD datasets and achieving fundamental spatio-temporal fusion. Ref. [35] further extracted facial keypoints using MTCNN and refined localization accuracy via Dlib, transforming each frame’s spatial feature vector into temporal sequences input to an LSTM to model fatigue evolution, reaching 88% and 90% accuracy on the YawDD and self-constructed datasets, respectively.

Real-time driver drowsiness detection remains a challenging research problem, and several studies have made progress by optimizing detection speed and adapting models to real-time data streams. Ref. [36] proposed a hybrid architecture combining Haar cascades with a lightweight CNN, validated through fivefold cross-validation on the UTA-RLDD dataset, achieving a detection speed of 8.4 frames per second. When tested on a custom real-time video dataset collected from 122 participants, the model achieved 96.8% accuracy after hyperparameter tuning with a batch size of 200 and 500 epochs. Similarly, ref. [28] developed an entropy-based real-time analysis system using an improved YOLOv3-tiny CNN for rapid face-region detection, coupled with Dlib-based extraction of the spatial coordinates of facial triangles and temporal sequences of facial landmarks to construct fatigue-related feature vectors. The proposed system achieved speeds exceeding 20 frames per second and an accuracy of 94.32% on the YawDD dataset. In another effort, ref. [37] established a four-class real-time detection framework using real-world data from 223 participants, selecting 245 five-minute videos from 10 subjects with a 3:1 training-validation split. Trained on real-time data, this framework achieved a maximum accuracy of 64%, highlighting the difficulties in maintaining robustness and accuracy under natural driving conditions.

To enhance robustness and balance model performance under complex driving environments, researchers have explored architectural optimization and multi-feature fusion strategies. Ref. [38] addressed challenges such as eyeglass reflections and variable illumination by proposing a 3DcGAN + TLABiLSTM spatio–temporal fusion architecture, which achieved 91.2% accuracy on the NTHU-DDD dataset and improved adaptability to environmental variations. Meanwhile, ref. [39] introduced a multi-feature fusion system that integrates Haar-like features, 68 facial landmarks, PERCLOS, and MAR metrics, combined with VGG16 and SSD algorithms, achieving 90% accuracy on the NTHU-DDD dataset while effectively mitigating the effects of lighting variation and eyeglass interference. Regarding model lightweighting and generalization, ref. [40] compared multiple deep networks including Xception, ResNet101, InceptionV4, ResNeXt101, and MobileNetV2, revealing that MobileNetV2 significantly reduces computational demand while maintaining 90.5% accuracy, whereas a Weibull-optimized ResNeXt101 achieved 93.80% accuracy and 84.21% generalization accuracy on the NTHU-DDD dataset, offering a practical balance between model efficiency and generalization. Finally, ref. [41] optimized temporal fatigue-state modeling on the RLDD dataset using an HM-LSTM network, improving accuracy from 61.4% (baseline LSTM) to 65.2%, thus enhancing model generalization capacity for real-world driving fatigue recognition.

Overall, driver fatigue detection has transitioned from single facial spatial cues to a framework that deeply fuses spatial and temporal features, jointly optimizing real-time performance and robustness. Coordinated spatiotemporal modeling has become the core of behavior-driven analysis, while advances in the YOLO family and lightweight backbones provide the critical support required for real-time deployment in driving scenarios. At the same time, multi-feature fusion and adaptation to complex environments remain key challenges for future work, and they directly inform the model design and performance optimizations pursued in this study.

5. Conclusions

This study proposes a multi-feature fusion fatigue detection method based on an improved YOLOv8 model. The system adopts a lightweight PFLD model to replace the conventional Dlib framework, achieving efficient and accurate facial landmark localization. By integrating head posture, eye, and mouth features, a sliding window mechanism is designed to compute fatigue-related indicators in real time, including Percentage of Eye Closure (PERCLOS), Blink Frequency (BF), Yawn Frequency (YF), and Head Posture Ratio (HPR). The system then employs a comprehensive fatigue evaluation algorithm to classify the driver’s fatigue level and issue prompt alerts. Experimental results demonstrate that, on the YawDD dataset, the proposed method achieves a detection accuracy of 94.5% with a stable inference speed exceeding 30 FPS. Moreover, the results of the driving simulator experiments indicate a low false detection rate, confirming that the proposed system effectively balances accuracy and real-time performance, making it well suited for deployment in resource-constrained embedded environments.
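The sliding-window computation of PERCLOS and blink frequency summarized above can be sketched as follows. This is a minimal illustration rather than the system's actual code: the class name `FatigueWindow`, the frame rate, the window length, the per-frame boolean eye-closed input, and the definition of a blink as an open-to-closed transition are all assumptions introduced here.

```python
from collections import deque


class FatigueWindow:
    """Fixed-length window of per-frame eye states (illustrative sketch)."""

    def __init__(self, fps: int = 30, seconds: int = 60) -> None:
        self.fps = fps  # assumed camera frame rate
        # deque(maxlen=...) silently drops the oldest frame once the window is full.
        self.closed = deque(maxlen=fps * seconds)

    def push(self, eye_closed: bool) -> None:
        """Append the eye state of the newest frame."""
        self.closed.append(bool(eye_closed))

    def perclos(self) -> float:
        """Fraction of frames in the window with the eyes closed."""
        return sum(self.closed) / len(self.closed) if self.closed else 0.0

    def blink_count(self) -> int:
        """Number of open-to-closed transitions inside the window."""
        frames = list(self.closed)
        return sum(1 for prev, cur in zip(frames, frames[1:]) if cur and not prev)

    def blinks_per_minute(self) -> float:
        """Blink count scaled by the duration currently covered by the window."""
        if not self.closed:
            return 0.0
        minutes = len(self.closed) / (self.fps * 60)
        return self.blink_count() / minutes
```

Yawn frequency could be tracked in the same way by feeding a per-frame mouth-open flag into a second window; that extension is likewise an assumption rather than a description of the proposed system.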

It is worth emphasizing that the configuration of feature weights plays a critical role in the overall detection performance. The current weight settings were determined through experimental validation and empirical tuning. Future studies will conduct systematic investigations on larger and more diverse datasets, enabling fine-grained optimization of feature weights to further enhance model robustness and generalization capability. In addition, we plan to expand the number of participants and experimental scenarios and to introduce more comprehensive statistical performance metrics, such as recall and F1-score, to achieve a more systematic and standardized evaluation of fatigue-state classification. These efforts will help verify the model’s effectiveness and stability under a broader range of real-world driving conditions. Furthermore, as the current system occasionally misclassifies speech-related mouth movements as yawning, future research will focus on dynamic mouth behavior modeling and the fusion of multimodal features (e.g., combining vocal and oral motion patterns) to improve recognition accuracy and adaptability in complex interactive environments.
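To illustrate why the feature weights discussed above matter, the following sketch combines normalized indicators into a single score using a weighted average. It is a hypothetical example only: the function names, the indicator keys, the assumption that each indicator is already scaled to [0, 1], and the `mild` and `severe` cut-offs are placeholders and do not reproduce the weights or thresholds used in this study.

```python
from typing import Dict


def fatigue_score(indicators: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of indicators assumed to be normalized to [0, 1]."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(w * indicators[name] for name, w in weights.items()) / total


def fatigue_level(score: float, mild: float = 0.3, severe: float = 0.6) -> str:
    """Map a score to a coarse level; both cut-offs are hypothetical."""
    if score >= severe:
        return "severe"
    if score >= mild:
        return "mild"
    return "alert"


# Hypothetical inputs, chosen only to show how the weights shift the outcome.
example_indicators = {"perclos": 0.5, "bf": 0.0, "yf": 1.0, "hpr": 0.0}
example_weights = {"perclos": 2.0, "bf": 1.0, "yf": 1.0, "hpr": 0.0}
example_level = fatigue_level(fatigue_score(example_indicators, example_weights))
```

Changing a single weight can move the same set of indicators across a level boundary, which is why a systematic, data-driven calibration of the weights is identified here as future work.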