Automatic Visual Inspection for Industrial Application

Abstract

1. Introduction

1. Development of a dataset containing ground-truth annotations of defects in pharmaceutical bottles, gathered in both controlled and real-world industrial environments (the dataset can be made available upon request), to enable assessment under real conditions.

2. A set of baseline experiments that monitors how diverse object detection models cope with the varied conditions presented by the proposed datasets, providing insight into their performance in a real industrial scenario.

3. Implementation of a visual defect detection model and of training strategies based on incremental learning through Learning without Forgetting (LwF), capable of addressing the real requirements of a production line.
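
The third contribution relies on Learning without Forgetting, in which a frozen copy of the previous model (the teacher) regularises the model being updated (the student). The sketch below shows the general shape of such a loss for a single classification output: a task cross-entropy on the ground-truth label plus a weighted distillation term between temperature-softened teacher and student distributions. It is a simplified illustration under stated assumptions, not the authors' implementation; `lwf_loss`, `temperature` and `distill_weight` are hypothetical names and values, and a detector such as YOLO would apply the idea to its class (and possibly box) outputs rather than to one logit vector.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target_probs, pred_probs, eps=1e-12):
    """H(target, pred) = -sum_i target_i * log(pred_i)."""
    return -sum(t * math.log(p + eps) for t, p in zip(target_probs, pred_probs))


def lwf_loss(student_logits, teacher_logits, label, temperature=2.0, distill_weight=1.0):
    """Task loss plus weighted distillation loss (hypothetical default values)."""
    # Task term: cross entropy between the student's prediction and the true class.
    task = -math.log(softmax(student_logits)[label] + 1e-12)
    # Distillation term: keep the student's softened outputs close to the frozen teacher's.
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    distill = cross_entropy(soft_teacher, soft_student)
    return task + distill_weight * distill


student = [2.0, 0.5, -1.0]
teacher = [1.0, 1.5, -0.5]
print(lwf_loss(student, teacher, label=0, distill_weight=1.0))
print(lwf_loss(student, teacher, label=0, distill_weight=0.1))  # weaker teacher influence
```

Assuming the two terms are combined additively, lowering `distill_weight` plays the same role as the lower distillation weight used in Experiments 9 and 10 when the teacher is weak.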

2. Literature Review

2.1. Region-of-Interest Object Detection

2.2. Defect Detection

2.3. Incremental Learning

2.4. Related Datasets

3. Data Acquisition and Preparation

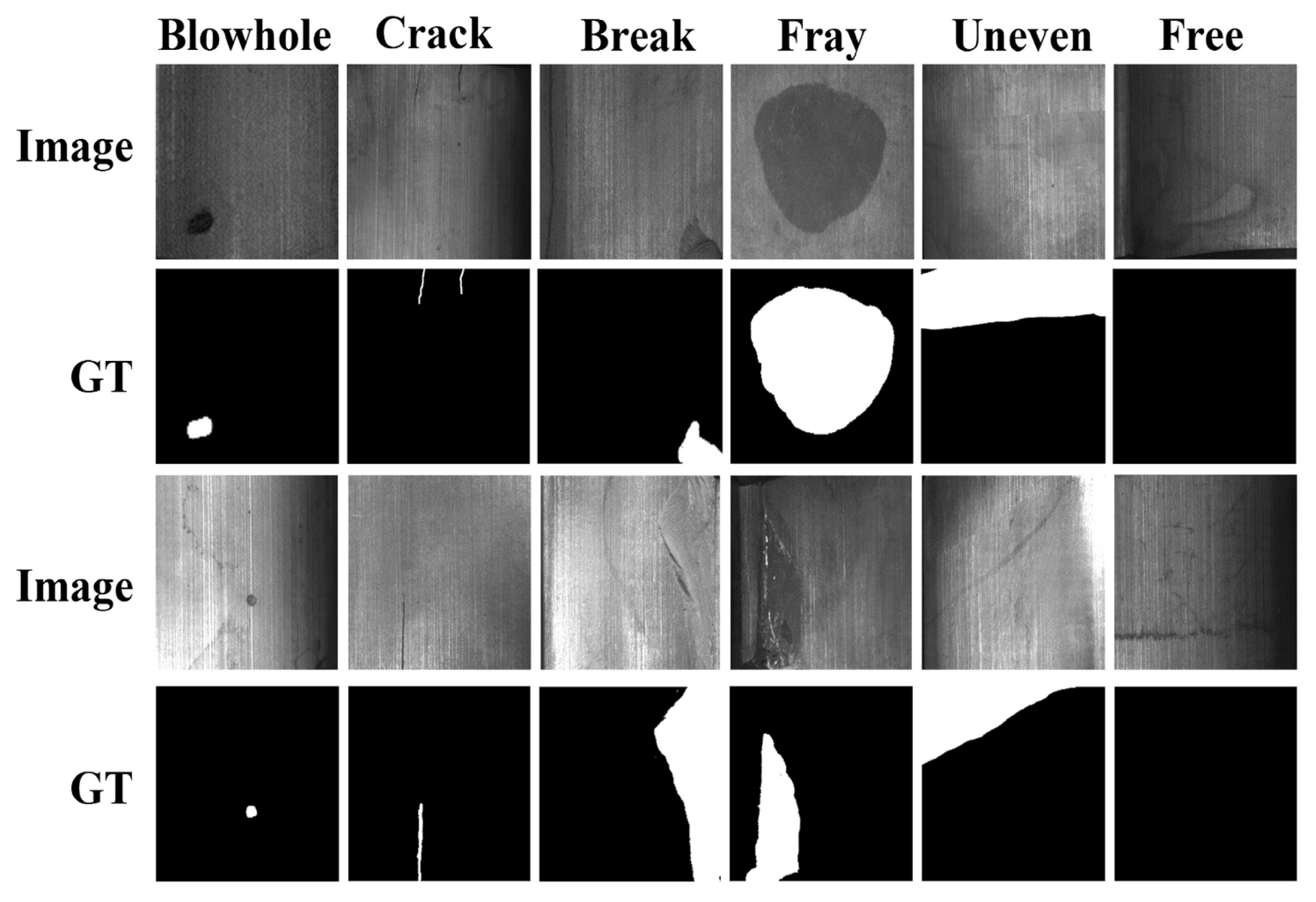

3.1. Metal Surface Defect Dataset

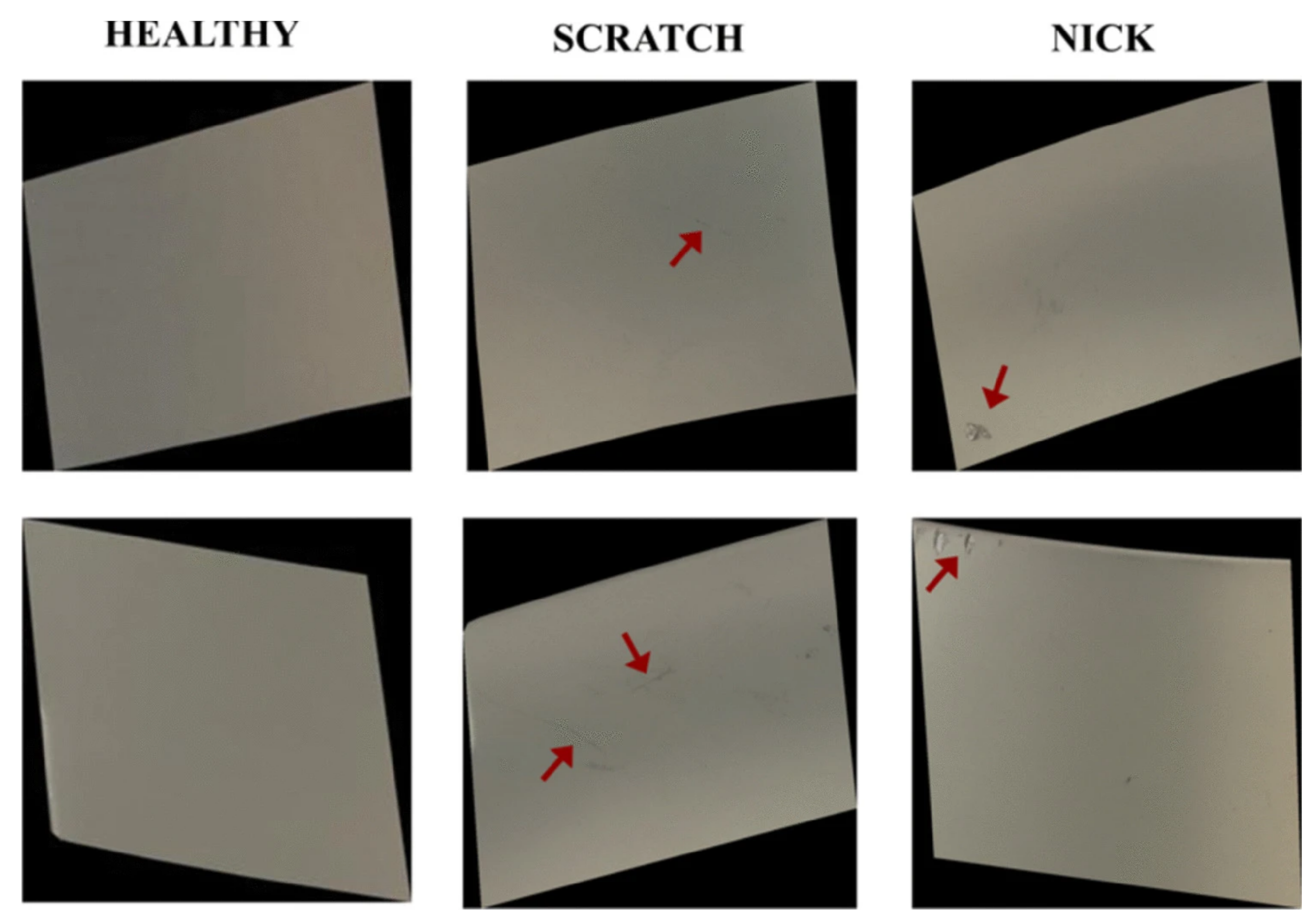

3.2. Blade Surface Defect Dataset

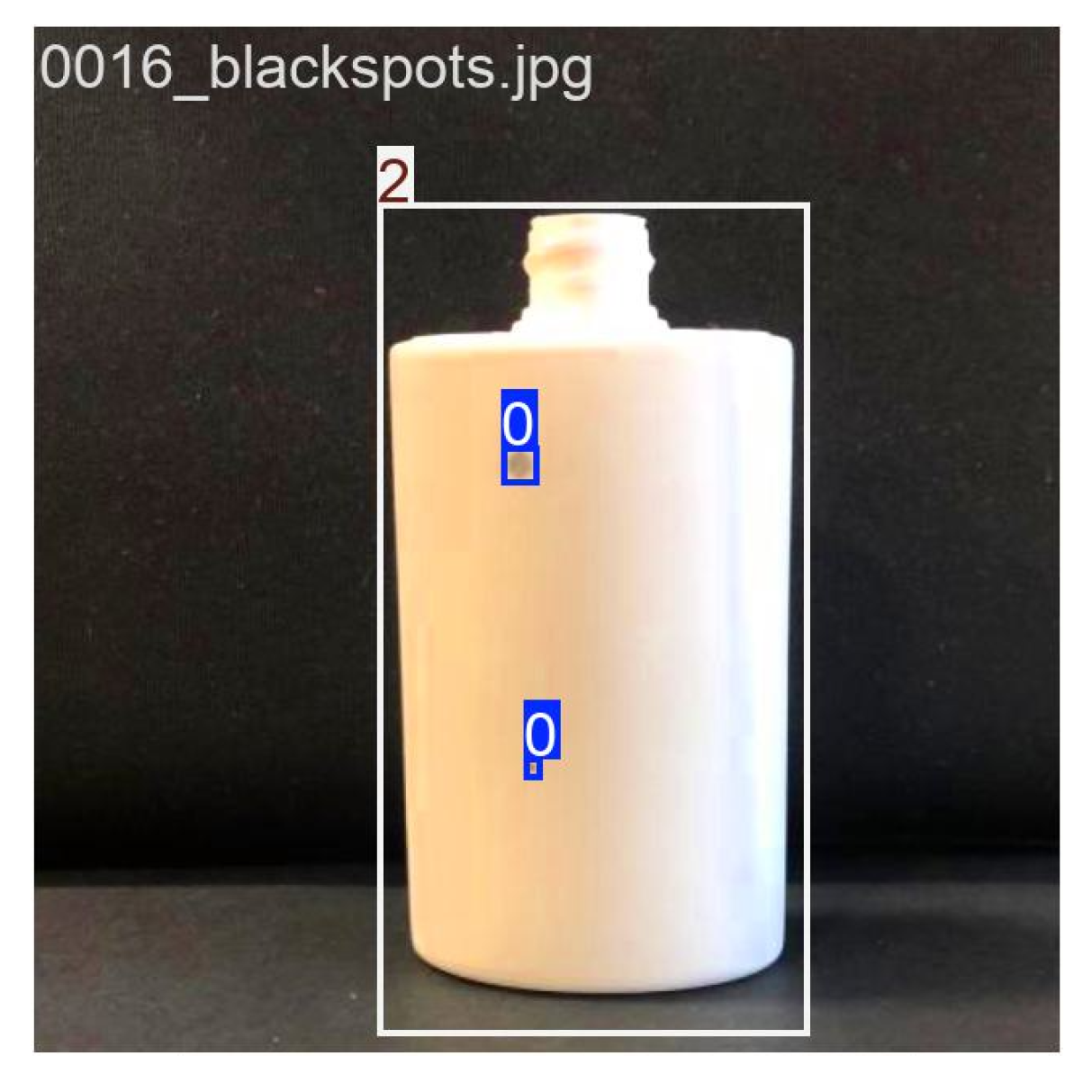

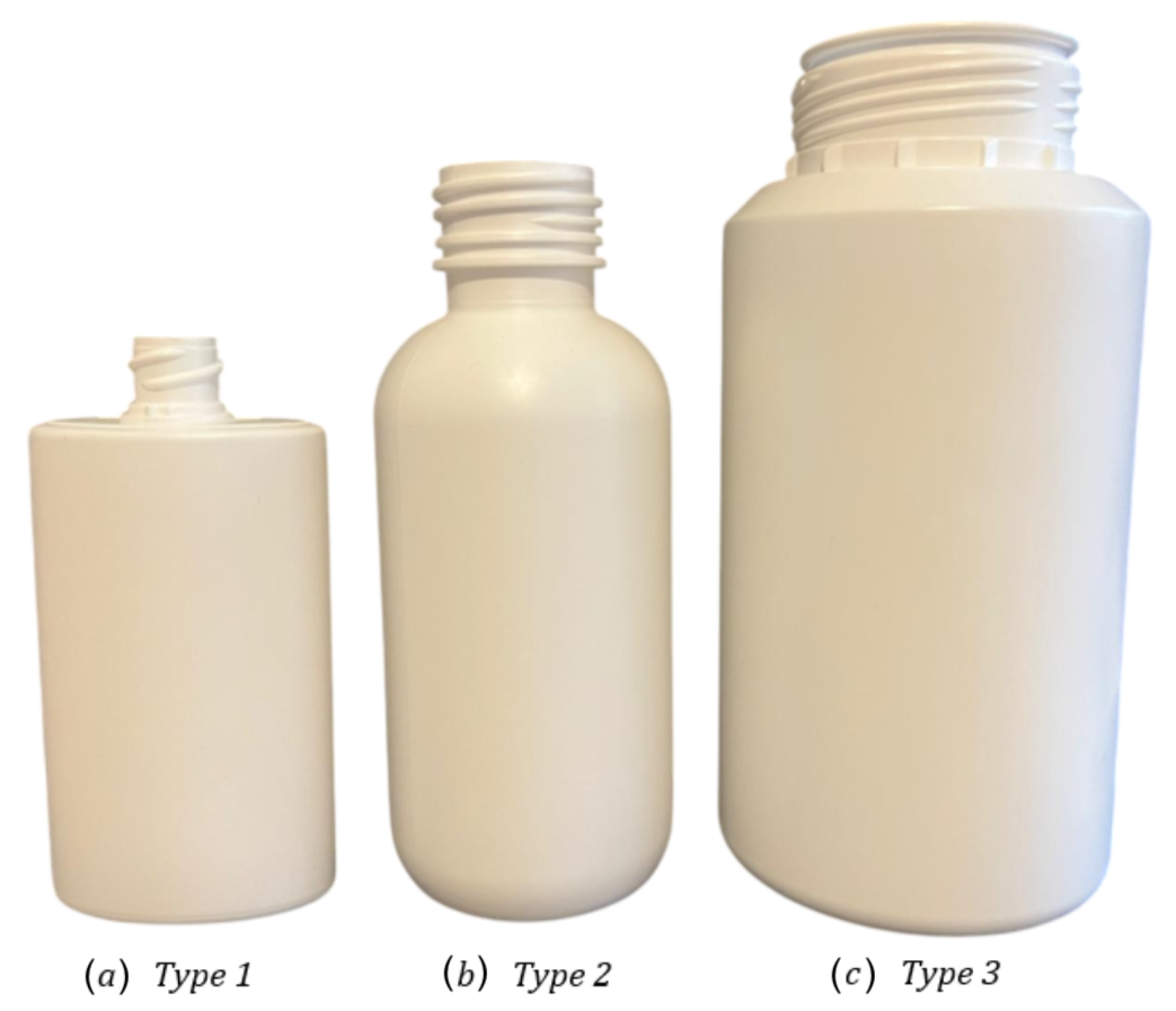

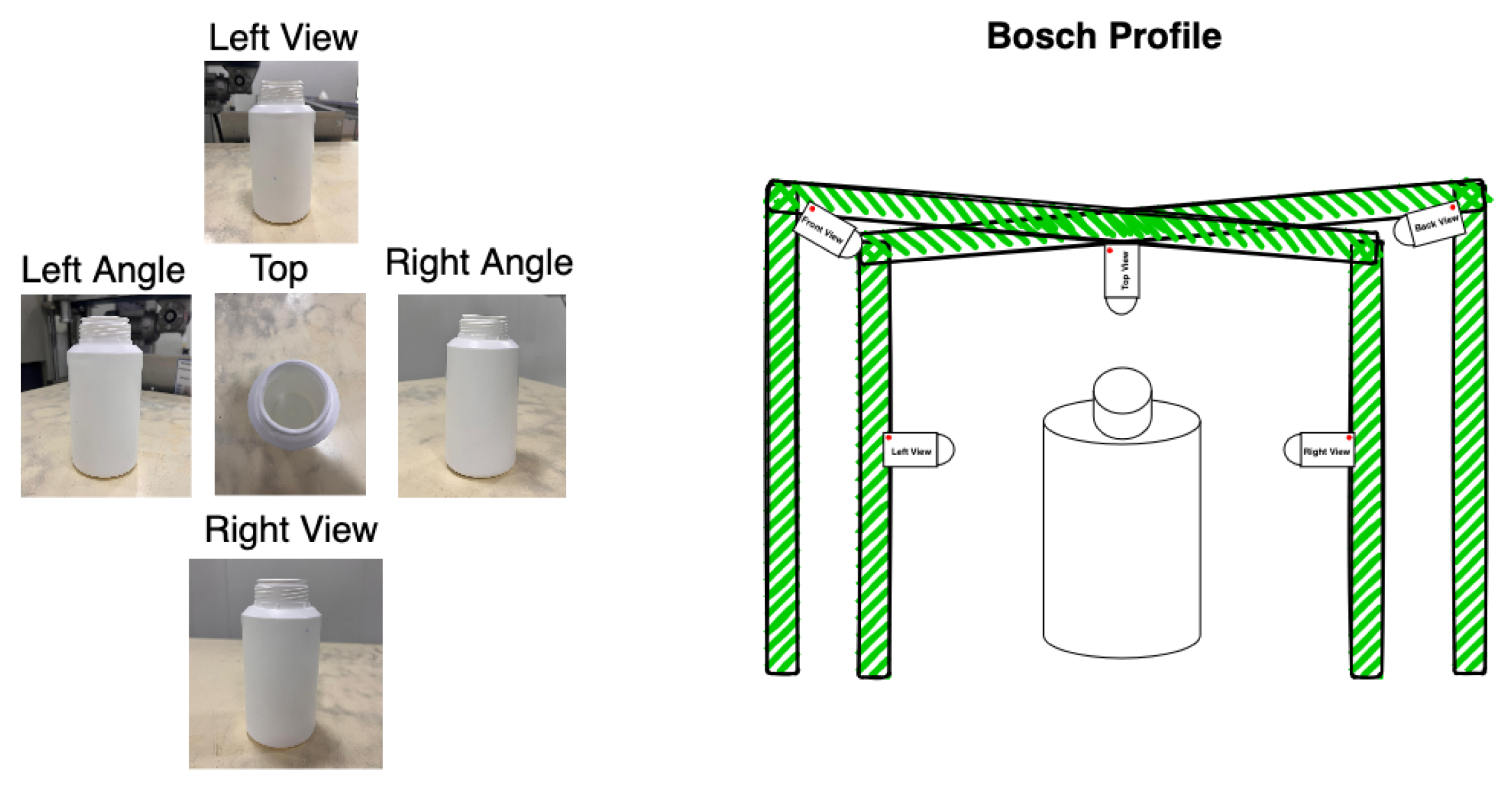

3.3. Bottle Defect Dataset Within a Controlled Environment

3.4. Bottle Defect Dataset Within an Industrial Environment

4. Incremental Learning for Visual Inspection Proposal

4.1. Data Distribution
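
The baseline tables report three train/validation/test ratios (80/10/10, 70/20/10 and 70/10/20). A minimal, reproducible way to produce such partitions is a seeded shuffle followed by cutting at the requested proportions. This is a sketch, not the authors' procedure: `split_dataset` is a hypothetical helper, and it performs a plain random split without stratifying by class.

```python
import random


def split_dataset(items, ratios=(0.8, 0.1, 0.1), seed=0):
    """Shuffle `items` with a fixed seed and cut them into train/val/test lists."""
    if len(ratios) != 3 or abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("expected three ratios summing to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded, so the split is reproducible
    n_train = round(len(shuffled) * ratios[0])
    n_val = round(len(shuffled) * ratios[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder goes to test
    return train, val, test


# The three ratios used in the baseline tables, applied to a toy list of 1000 ids.
for ratios in [(0.8, 0.1, 0.1), (0.7, 0.2, 0.1), (0.7, 0.1, 0.2)]:
    train, val, test = split_dataset(range(1000), ratios)
    print(ratios, len(train), len(val), len(test))
```

Giving the remainder to the test set guarantees that every item lands in exactly one partition even when the ratios do not divide the dataset size evenly.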

4.2. Implementation Strategy

4.2.1. Baseline Approach

4.2.2. Incremental Learning Approach

4.3. Performance Metrics
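
The results tables report precision, recall, mAP@50 and mAP@50-95. The sketch below illustrates how these box-level metrics are commonly computed for one class: detections are matched greedily to ground-truth boxes in order of descending confidence using Intersection over Union (IoU), average precision (AP) is the area under the resulting precision-recall curve (all-point interpolation, as in PASCAL VOC), and mAP@50-95 averages AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05 and then over classes. It is an illustrative sketch with hypothetical function names; COCO-style evaluators use a 101-point interpolation instead, so values can differ slightly.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, gt_boxes, iou_thr=0.5):
    """AP for one class; detections are (confidence, box) pairs."""
    if not gt_boxes:
        return 0.0
    matched = [False] * len(gt_boxes)
    precisions, recalls = [], []
    tp = 0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    for k, (_, box) in enumerate(ranked, start=1):
        # Greedy match: best-overlapping ground truth that is still unmatched.
        best, best_j = 0.0, -1
        for j, gt in enumerate(gt_boxes):
            overlap = iou(box, gt)
            if not matched[j] and overlap > best:
                best, best_j = overlap, j
        if best_j >= 0 and best >= iou_thr:
            matched[best_j] = True
            tp += 1
        precisions.append(tp / k)
        recalls.append(tp / len(gt_boxes))
    # All-point interpolation: area under the monotone precision envelope.
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_recall) * max(precisions[i:])
        prev_recall = r
    return ap


def map_50_95(per_class):
    """per_class: list of (detections, gt_boxes) pairs, one per class."""
    thresholds = [0.50 + 0.05 * i for i in range(10)]
    class_aps = [
        sum(average_precision(d, g, t) for t in thresholds) / len(thresholds)
        for d, g in per_class
    ]
    return sum(class_aps) / len(class_aps)


gt = [(0, 0, 10, 10)]
dets = [(0.95, (50, 50, 60, 60)), (0.9, (0, 0, 10, 10))]
print(average_precision(dets, gt))  # 0.5: a false positive is ranked above the true positive
```

mAP@50 uses only the 0.50 threshold, whereas mAP@50-95 also rewards tight localisation, which is why it is consistently lower in the tables.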

5. Results and Discussion

5.1. Baseline Models

5.1.1. YOLOv8

5.1.2. YOLOv11

5.1.3. Faster R-CNN

5.2. Incremental Learning

5.2.1. Knowledge Distillation

5.2.2. Incremental Learning Validation

5.2.3. Learning Rate Study

5.2.4. Simulations

- Experiment 1 (BDDCE, all classes): The first experiment simulates low-resource industrial conditions by introducing only 50 images per class from BDDCE.

- Experiment 2 (BDDCE, first three classes): This experiment also simulates low-resource conditions on BDDCE but limits training to 50 images from the first three classes, with the teacher model trained solely on these classes.

- Experiment 3 (BDDIE, all classes): This experiment mirrors the setup of Experiment 1 but uses BDDIE for both training and testing, with 50 images per class. The teacher model is trained on BDDIE using all classes.

- Experiment 4 (BDDIE, first three classes): Similarly to Experiment 2, this experiment uses BDDIE instead of BDDCE, training on only 50 images from the first three classes. The teacher model is trained on BDDCE using only the first three classes.

- Experiment 5 (BDDCE + BDDIE, all classes): This experiment trains on images from both BDDCE and BDDIE (50 images per class from each), while testing is conducted exclusively on BDDIE. The teacher model is trained on both datasets.

- Experiment 6 (BDDCE + BDDIE, first three classes): This experiment builds on Experiment 5 by limiting training to the first three classes from both BDDCE and BDDIE (50 images each), with testing still conducted on BDDIE.

- Experiment 7 (BDDCE baseline): This experiment takes the best-performing baseline model trained on BDDCE as the teacher and evaluates it on BDDIE.

- Experiment 8 (BDDCE baseline, first three classes): Using the teacher model from the incremental learning validation experiment, this experiment simulates a scenario in which only three known classes were trained in a controlled setting (BDDCE) and the model is then evaluated on BDDIE.

- Experiment 9 (BDDCE baseline): This experiment follows the setup of Experiment 7 but lowers the distillation weight to reduce the teacher’s influence, given the teacher’s poor performance.

- Experiment 10 (BDDCE baseline, first three classes): Similarly to Experiment 8, this experiment applies a lower distillation weight to mitigate the impact of a weak teacher model trained on only three classes.
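
Each simulated scenario above draws a small, class-balanced training subset (50 images per class, optionally restricted to the first three classes). The hypothetical helper below sketches that sampling step under the simplifying assumption that each image carries one image-level defect label, as in the per-class image counts reported for the bottle datasets. For Experiments 5 and 6, the same call would be made once per dataset and the results concatenated; excluding images already used in earlier cycles is left out for brevity.

```python
import random
from collections import defaultdict


def sample_per_class(samples, n_per_class=50, classes=None, seed=0):
    """Draw up to `n_per_class` images for each class.

    samples: list of (image_id, class_name) pairs, one image-level label each.
    classes: optional set of class names to keep, e.g. only the first three.
    """
    by_class = defaultdict(list)
    for image_id, label in samples:
        if classes is None or label in classes:
            by_class[label].append(image_id)
    rng = random.Random(seed)  # seeded for reproducible subsets
    subset = []
    for label in sorted(by_class):
        ids = by_class[label]
        picked = rng.sample(ids, min(n_per_class, len(ids)))
        subset.extend((image_id, label) for image_id in picked)
    return subset


# Toy pool with hypothetical class names "c0" to "c4", 80 images each.
pool = [(f"{c}_{i}", c) for c in ["c0", "c1", "c2", "c3", "c4"] for i in range(80)]
print(len(sample_per_class(pool)))                              # 250: all classes
print(len(sample_per_class(pool, classes={"c0", "c1", "c2"})))  # 150: first three classes
```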

5.3. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| BCE | Binary Cross Entropy |
| BDDCE | Bottle Defect Dataset in Controlled Environment |
| BDDIE | Bottle Defect Dataset in Industrial Environment |
| BSDD | Blade Surface Defect Dataset |
| CE | Cross Entropy |
| CIoU | Complete Intersection over Union |
| CNN | Convolutional Neural Network |
| CV | Computer Vision |
| CVAT | Computer Vision Annotation Tool |
| DFL | Distribution Focal Loss |
| DL | Deep Learning |
| EWC | Elastic Weight Consolidation |
| IoU | Intersection over Union |
| KD | Knowledge Distillation |
| LwF | Learning without Forgetting |
| mAP | Mean Average Precision |
| ML | Machine Learning |
| MSDD | Metal Surface Defect Dataset |
| NN | Neural Network |
| RCNN | Region-based Convolutional Neural Network |
| RILOD | Real-Time Incremental Learning for Object Detection |
| ROI | Region of Interest |
| SOA | State-of-the-Art |
| YOLO | You Only Look Once |

Appendix A


| Packaging Type | Width (cm) | Height (cm) | Length (cm) |
|---|---|---|---|
| Type 1 | 4.45 | 9 | 2.45 |
| Type 2 | 4.5 | 11.5 | 4.5 |
| Type 3 | 6.5 | 13.3 | 6.5 |

| Defect Type | Number of Images |
|---|---|
| Black spots | 374 |
| Color variation | 367 |
| Deformed thread | 368 |
| Oval neck | 372 |
| No defect | 371 |

| Defect Type | Number of Images |
|---|---|
| Black Spots | 499 |
| Color Variation | 512 |
| Deformed Thread | 499 |
| Oval Neck | 494 |
| No Defect | 484 |

| Dataset | Model | Split | Epochs | Batch | Training Time (h) | Class Loss | Box Loss | Precision | Recall | mAP@50 | mAP@50-95 | Inference (ms) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BDDCE | YOLOv8n | 80/10/10 | 50 | 32 | 0.338 | 0.367 | 0.237 | 0.917 | 0.896 | 0.912 | 0.731 | 2.8 |
| | | 70/20/10 | | 64 | 0.106 | 0.368 | 0.243 | 0.917 | 0.936 | 0.938 | 0.752 | 2.8 |
| | | 70/10/20 | | 32 | 0.063 | 0.370 | 0.248 | 0.924 | 0.896 | 0.905 | 0.729 | 2.8 |
| | YOLOv8l | 80/10/10 | 50 | 32 | 0.338 | 0.373 | 0.212 | 0.915 | 0.914 | 0.913 | 0.733 | 23.6 |
| | | 70/20/10 | | 16 | 0.353 | 0.371 | 0.209 | 0.919 | 0.940 | 0.945 | 0.759 | 23.1 |
| | | 70/10/20 | | 8 | 0.193 | 0.380 | 0.233 | 0.914 | 0.916 | 0.921 | 0.738 | 22.6 |
| BDDIE | YOLOv8n | 80/10/10 | 50 | 16 | 0.147 | 0.60293 | 0.35877 | 0.912 | 0.928 | 0.927 | 0.726 | 2.8 |
| | | 70/20/10 | | 8 | 0.214 | 0.60480 | 0.38183 | 0.889 | 0.914 | 0.928 | 0.737 | 2.8 |
| | | 70/10/20 | | 8 | 0.189 | 0.60915 | 0.38136 | 0.899 | 0.915 | 0.922 | 0.722 | 2.8 |
| | YOLOv8l | 80/10/10 | 50 | 8 | 0.532 | 0.58354 | 0.33198 | 0.932 | 0.936 | 0.953 | 0.744 | 23.7 |
| | | 70/20/10 | | 16 | 0.490 | 0.58677 | 0.32988 | 0.924 | 0.929 | 0.958 | 0.750 | 23.3 |
| | | 70/10/20 | | 8 | 0.469 | 0.73761 | 0.60451 | 0.813 | 0.840 | 0.858 | 0.645 | 11.0 |
| MSDD | YOLOv8n | 80/10/10 | 50 | 8 | 0.084 | 0.23885 | 0.27360 | 0.907 | 0.957 | 0.948 | 0.739 | 2.8 |
| | | 70/20/10 | | 32 | 0.078 | 0.28267 | 0.28311 | 0.879 | 0.927 | 0.928 | 0.653 | 2.8 |
| | | 70/10/20 | | 32 | 0.067 | 0.21248 | 0.25980 | 0.870 | 0.940 | 0.924 | 0.695 | 2.7 |
| | YOLOv8l | 80/10/10 | 50 | 16 | 0.253 | 0.22931 | 0.24993 | 0.605 | 0.482 | 0.490 | 0.340 | 24.2 |
| | | 70/20/10 | | 16 | 0.174 | 0.37128 | 0.22089 | 0.901 | 0.895 | 0.897 | 0.584 | 24.2 |
| | | 70/10/20 | | 32 | 0.223 | 0.22072 | 0.25048 | 0.833 | 0.902 | 0.893 | 0.649 | 11.8 |
| BSDD | YOLOv8n | 80/10/10 | 50 | 64 | 0.074 | 0.78502 | 0.85533 | 0.737 | 0.679 | 0.621 | 0.470 | 3.1 |
| | | 70/20/10 | | 8 | 0.051 | 0.74818 | 0.88053 | 0.500 | 0.766 | 0.563 | 0.421 | 3.0 |
| | | 70/10/20 | | 32 | 0.062 | 0.84231 | 0.90273 | 0.589 | 0.642 | 0.568 | 0.433 | 3.1 |
| | YOLOv8l | 80/10/10 | 50 | 8 | 0.111 | 0.91288 | 1.02700 | 0.474 | 0.703 | 0.602 | 0.453 | 23.5 |
| | | 70/20/10 | | 8 | 0.113 | 0.90840 | 0.97775 | 0.461 | 0.715 | 0.573 | 0.397 | 23.7 |
| | | 70/10/20 | | 32 | 0.120 | 0.80387 | 0.72388 | 0.586 | 0.652 | 0.579 | 0.435 | 23.8 |

| Dataset | Model | Split | Epochs | Batch | Training Time (h) | Class Loss | Box Loss | Precision | Recall | mAP@50 | mAP@50-95 | Inference (ms) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BDDCE | YOLOv11n | 80/10/10 | 50 | 16 | 0.162 | 0.37530 | 0.23405 | 0.889 | 0.909 | 0.894 | 0.728 | 2.7 |
| | | 70/20/10 | | 32 | 0.119 | 0.36844 | 0.23969 | 0.928 | 0.928 | 0.929 | 0.744 | 2.5 |
| | | 70/10/20 | | 64 | 0.098 | 0.36493 | 0.25005 | 0.916 | 0.902 | 0.913 | 0.727 | 2.1 |
| | YOLOv11l | 80/10/10 | 50 | 32 | 0.382 | 0.38924 | 0.22975 | 0.913 | 0.903 | 0.907 | 0.727 | 20.3 |
| | | 70/20/10 | | 16 | 0.353 | 0.39942 | 0.23687 | 0.918 | 0.929 | 0.941 | 0.747 | 20.3 |
| | | 70/10/20 | | 8 | 0.365 | 0.39520 | 0.23602 | 0.877 | 0.908 | 0.910 | 0.732 | 20.3 |
| BDDIE | YOLOv11n | 80/10/10 | 50 | 32 | 0.158 | 0.60507 | 0.35816 | 0.913 | 0.920 | 0.936 | 0.739 | 2.7 |
| | | 70/20/10 | | 8 | 0.251 | 0.60995 | 0.38729 | 0.909 | 0.914 | 0.925 | 0.724 | 2.7 |
| | | 70/10/20 | | 32 | 0.076 | 0.60327 | 0.36607 | 0.904 | 0.906 | 0.920 | 0.725 | 3.1 |
| | YOLOv11l | 80/10/10 | 50 | 8 | 0.508 | 0.60285 | 0.35625 | 0.889 | 0.928 | 0.939 | 0.727 | 15.2 |
| | | 70/20/10 | | 32 | 0.450 | 0.60907 | 0.36075 | 0.905 | 0.920 | 0.944 | 0.745 | 15.3 |
| | | 70/10/20 | | 16 | 0.402 | 0.63718 | 0.45083 | 0.886 | 0.929 | 0.926 | 0.724 | 21.2 |
| MSDD | YOLOv11n | 80/10/10 | 50 | 16 | 0.110 | 0.20390 | 0.27131 | 0.858 | 0.945 | 0.951 | 0.709 | 3.1 |
| | | 70/20/10 | | 32 | 0.082 | 0.20086 | 0.24453 | 0.790 | 0.901 | 0.892 | 0.621 | 3.0 |
| | | 70/10/20 | | 32 | 0.078 | 0.21455 | 0.26217 | 0.878 | 0.952 | 0.951 | 0.698 | 3.0 |
| | YOLOv11l | 80/10/10 | 50 | 16 | 0.250 | 0.21000 | 0.23468 | 0.881 | 0.934 | 0.945 | 0.677 | 21.1 |
| | | 70/20/10 | | 32 | 0.241 | 0.28630 | 0.27654 | 0.866 | 0.890 | 0.911 | 0.617 | 21.0 |
| | | 70/10/20 | | 16 | 0.133 | 0.21832 | 0.28172 | 0.838 | 0.927 | 0.923 | 0.687 | 21.1 |
| BSDD | YOLOv11n | 80/10/10 | 50 | 64 | 0.031 | 0.78892 | 1.07391 | 0.606 | 0.654 | 0.611 | 0.457 | 3.7 |
| | | 70/20/10 | | 8 | 0.059 | 0.74901 | 0.92073 | 0.509 | 0.773 | 0.564 | 0.417 | 3.8 |
| | | 70/10/20 | | 16 | 0.021 | 0.85119 | 0.99043 | 0.605 | 0.626 | 0.541 | 0.422 | 3.5 |
| | YOLOv11l | 80/10/10 | 50 | 32 | 0.086 | 1.05403 | 0.90116 | 0.522 | 0.704 | 0.599 | 0.469 | 20.8 |
| | | 70/20/10 | | 32 | 0.098 | 0.80929 | 0.75795 | 0.502 | 0.732 | 0.554 | 0.414 | 20.9 |
| | | 70/10/20 | | 8 | 0.102 | 0.93782 | 0.95878 | 0.514 | 0.627 | 0.508 | 0.408 | 21.0 |

| Dataset | Model | Split | Epochs | Batch | Training Time (h) | Class Loss | Box Loss | Precision | Recall | mAP@50 | mAP@50-95 | Inference (ms) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BDDCE | Faster R-CNN | 80/10/10 | 10 | 16 | 1.03 | 0.058 | 0.053 | 0.3891 | 0.8120 | 0.5993 | 0.4967 | 50.11 |
| | | | 50 | 16 | 4.53 | 0.032 | 0.027 | 0.6320 | 0.7540 | 0.6910 | 0.6100 | 50.35 |
| | Faster R-CNN | 70/20/10 | 10 | 32 | 1.58 | 0.070 | 0.066 | 0.3038 | 0.8679 | 0.5847 | 0.4449 | 69.39 |
| | | | 50 | 16 | 7.133 | 0.034 | 0.028 | 0.5855 | 0.7710 | 0.6765 | 0.5879 | 69.93 |
| | Faster R-CNN | 70/10/20 | 10 | 16 | 1.067 | 0.057 | 0.053 | 0.3680 | 0.7946 | 0.5801 | 0.4790 | 69.84 |
| | | | 50 | 16 | 7.0167 | 0.032 | 0.028 | 0.6081 | 0.7449 | 0.6748 | 0.5884 | 68.31 |
| BDDIE | Faster R-CNN | 80/10/10 | 10 | 16 | 1.37 | 0.0587 | 0.0544 | 0.2528 | 0.7487 | 0.5001 | 0.4019 | 68.92 |
| | | | 50 | 8 | 6.72 | 0.0157 | 0.0194 | 0.7054 | 0.7095 | 0.7055 | 0.6075 | 68.61 |
| | Faster R-CNN | 70/20/10 | 10 | 16 | 1.53 | 0.0550 | 0.0550 | 0.2378 | 0.7557 | 0.4960 | 0.3947 | 68.86 |
| | | | 50 | 8 | 6.93 | 0.0160 | 0.0200 | 0.6511 | 0.7210 | 0.6842 | 0.5838 | 68.65 |
| | Faster R-CNN | 70/10/20 | 10 | 16 | 1.18 | 0.0560 | 0.0580 | 0.2303 | 0.7655 | 0.4972 | 0.3938 | 50.90 |
| | | | 50 | 8 | 5.83 | 0.0160 | 0.0200 | 0.6480 | 0.7170 | 0.6800 | 0.5700 | 51.05 |
| MSDD | Faster R-CNN | 80/10/10 | 10 | 8 | 0.63 | 0.020 | 0.025 | 0.4485 | 0.9370 | 0.6905 | 0.6495 | 68.36 |
| | | | 50 | 8 | 2.83 | 0.008 | 0.007 | 0.6290 | 0.9260 | 0.7740 | 0.7280 | 68.60 |
| | Faster R-CNN | 70/20/10 | 10 | 8 | 1.01 | 0.022 | 0.022 | 0.2558 | 0.9245 | 0.5889 | 0.5401 | 68.27 |
| | | | 50 | 16 | 5.42 | 0.012 | 0.010 | 0.3425 | 0.9209 | 0.6300 | 0.5834 | 68.50 |
| | Faster R-CNN | 70/10/20 | 10 | 8 | 0.82 | 0.026 | 0.027 | 0.2570 | 0.9270 | 0.5910 | 0.5480 | 67.97 |
| | | | 50 | 16 | 2.58 | 0.013 | 0.010 | 0.5050 | 0.9290 | 0.7150 | 0.6730 | 68.46 |
| BSDD | Faster R-CNN | 80/10/10 | 10 | 16 | 0.2710 | 0.065 | 0.110 | 0.4709 | 1.0000 | 0.7331 | 0.5602 | 68.25 |
| | | | 50 | 8 | 1.2667 | 0.014 | 0.016 | 0.5780 | 0.8610 | 0.7170 | 0.5780 | 68.60 |
| | Faster R-CNN | 70/20/10 | 10 | 8 | 0.2875 | 0.027 | 0.027 | 0.2542 | 0.9701 | 0.6109 | 0.4767 | 68.42 |
| | | | 50 | 16 | 1.1167 | 0.011 | 0.011 | 0.4827 | 0.8678 | 0.6731 | 0.5215 | 68.05 |
| | Faster R-CNN | 70/10/20 | 10 | 16 | 0.2875 | 0.055 | 0.096 | 0.3480 | 0.9720 | 0.6580 | 0.5170 | 68.57 |
| | | | 50 | 8 | 1.1500 | 0.017 | 0.021 | 0.5530 | 0.8690 | 0.7090 | 0.5430 | 69.25 |
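
Experiment 7 relies on the best-performing baseline trained on BDDCE. Ranking the BDDCE rows of the YOLOv8, YOLOv11 and Faster R-CNN tables is simple arithmetic. The sketch below assumes mAP@50-95 as the ranking criterion (with mAP@50 as tie-breaker), which the text does not state explicitly, and includes only the 50-epoch Faster R-CNN rows; the optional inference-time budget is a hypothetical constraint showing how a production-line latency limit changes the choice.

```python
from typing import NamedTuple, Optional


class Run(NamedTuple):
    model: str
    split: str
    map50: float
    map50_95: float
    inference_ms: float


# BDDCE rows copied from the baseline tables (Faster R-CNN: 50-epoch rows only).
BDDCE_RUNS = [
    Run("YOLOv8n", "80/10/10", 0.912, 0.731, 2.8),
    Run("YOLOv8n", "70/20/10", 0.938, 0.752, 2.8),
    Run("YOLOv8n", "70/10/20", 0.905, 0.729, 2.8),
    Run("YOLOv8l", "80/10/10", 0.913, 0.733, 23.6),
    Run("YOLOv8l", "70/20/10", 0.945, 0.759, 23.1),
    Run("YOLOv8l", "70/10/20", 0.921, 0.738, 22.6),
    Run("YOLOv11n", "80/10/10", 0.894, 0.728, 2.7),
    Run("YOLOv11n", "70/20/10", 0.929, 0.744, 2.5),
    Run("YOLOv11n", "70/10/20", 0.913, 0.727, 2.1),
    Run("YOLOv11l", "80/10/10", 0.907, 0.727, 20.3),
    Run("YOLOv11l", "70/20/10", 0.941, 0.747, 20.3),
    Run("YOLOv11l", "70/10/20", 0.910, 0.732, 20.3),
    Run("Faster R-CNN", "80/10/10", 0.6910, 0.6100, 50.35),
    Run("Faster R-CNN", "70/20/10", 0.6765, 0.5879, 69.93),
    Run("Faster R-CNN", "70/10/20", 0.6748, 0.5884, 68.31),
]


def best_run(runs, max_inference_ms: Optional[float] = None) -> Run:
    """Highest mAP@50-95 (tie-break on mAP@50), optionally under a latency budget."""
    eligible = [r for r in runs
                if max_inference_ms is None or r.inference_ms <= max_inference_ms]
    if not eligible:
        raise ValueError("no run satisfies the inference-time budget")
    return max(eligible, key=lambda r: (r.map50_95, r.map50))


print(best_run(BDDCE_RUNS))       # unconstrained
print(best_run(BDDCE_RUNS, 5.0))  # hypothetical 5 ms budget
```

With these numbers the unconstrained choice is YOLOv8l with the 70/20/10 split (mAP@50-95 of 0.759 at 23.1 ms), while a 5 ms budget selects YOLOv8n with the same split (0.752 at 2.8 ms), a much faster model for a loss of 0.007 mAP@50-95.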

| Class | Precision | Recall | mAP@50 | mAP@50-95 |
|---|---|---|---|---|
| all | 0.897 | 0.881 | 0.887 | 0.72 |
| black spots | 0.773 | 0.609 | 0.619 | 0.264 |
| color variation | 0.985 | 0.988 | 0.995 | 0.973 |
| bottle | 0.997 | 1 | 0.995 | 0.965 |
| deformed thread | 0.717 | 0.698 | 0.727 | 0.273 |
| oval neck | 0.941 | 1 | 0.995 | 0.897 |
| no defect | 0.967 | 0.993 | 0.993 | 0.944 |
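
The `all` row of the per-class table above is consistent with an unweighted (macro) mean of the six per-class values, which is how mAP is usually defined: AP is averaged over classes, so hard classes such as black spots and deformed thread pull the aggregate down as much as easy classes push it up. A quick check using only the values in the table:

```python
# (precision, recall, mAP@50, mAP@50-95) per class, copied from the table above.
PER_CLASS = {
    "black spots": (0.773, 0.609, 0.619, 0.264),
    "color variation": (0.985, 0.988, 0.995, 0.973),
    "bottle": (0.997, 1.0, 0.995, 0.965),
    "deformed thread": (0.717, 0.698, 0.727, 0.273),
    "oval neck": (0.941, 1.0, 0.995, 0.897),
    "no defect": (0.967, 0.993, 0.993, 0.944),
}
METRICS = ("precision", "recall", "mAP@50", "mAP@50-95")


def macro_average(per_class):
    """Unweighted mean of each metric over all classes."""
    n = len(per_class)
    return {
        name: sum(values[i] for values in per_class.values()) / n
        for i, name in enumerate(METRICS)
    }


for name, value in macro_average(PER_CLASS).items():
    print(f"{name}: {value:.3f}")
```

The means come out at 0.897, 0.881, 0.887 and 0.719, matching the reported `all` row (0.897, 0.881, 0.887 and 0.72).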

| Train: Time (h) | Train: Box Loss | Train: Class Loss | Class | Test: Precision | Test: Recall | Test: mAP@50 | Test: mAP@50-95 |
|---|---|---|---|---|---|---|---|
| 0.580 | 0.429 | 0.287 | all | 0.932 | 0.910 | 0.913 | 0.745 |
| | | | black spots | 0.806 | 0.739 | 0.748 | 0.302 |
| | | | color variation | 0.991 | 0.991 | 0.995 | 0.966 |
| | | | bottle | 0.998 | 1 | 0.995 | 0.968 |

| Train: Time (h) | Train: Student Loss | Train: Teacher Loss | Train: Class Loss | Train: Box Loss | Class | Test: Precision | Test: Recall | Test: mAP@50 | Test: mAP@50-95 |
|---|---|---|---|---|---|---|---|---|---|
| 2.247 | 1.189 | 2.020 | 0.007 | 0.908 | all | 0.778 | 0.836 | 0.809 | 0.617 |
| | | | | | black spots | 0.722 | 0.467 | 0.503 | 0.155 |
| | | | | | color variation | 0.827 | 1 | 0.990 | 0.901 |
| | | | | | bottle | 0.969 | 0.996 | 0.992 | 0.918 |
| | | | | | deformed thread | 0.636 | 0.676 | 0.599 | 0.198 |
| | | | | | oval neck | 0.876 | 0.919 | 0.937 | 0.778 |
| | | | | | no defect | 0.640 | 0.957 | 0.836 | 0.753 |

| Train: Time (min) | Train: Box Loss | Train: Class Loss | Class | Test: Precision | Test: Recall | Test: mAP@50 | Test: mAP@50-95 |
|---|---|---|---|---|---|---|---|
| 7.5 | 0.400 | 0.379 | all | 0.858 | 0.873 | 0.880 | 0.686 |
| | | | black spots | 0.638 | 0.573 | 0.576 | 0.197 |
| | | | color variation | 0.953 | 1 | 0.988 | 0.952 |
| | | | bottle | 0.981 | 1 | 0.993 | 0.952 |
| | | | deformed thread | 0.746 | 0.775 | 0.772 | 0.275 |
| | | | oval neck | 0.929 | 1 | 0.995 | 0.854 |
| | | | no defect | 0.901 | 0.889 | 0.954 | 0.887 |

| Cycle | Time (min) | Student Loss | Teacher Loss | Class Loss | Box Loss | Precision | Recall | mAP@50 | mAP@50-95 | Inference (ms) |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 3.360 | 1.2649 | 1.8762 | 0.1122 | 0.8059 | 0.671 | 0.642 | 0.632 | 0.517 | 2.7 |
| 5 | 2.940 | 0.9691 | 1.6530 | 0.0218 | 0.7704 | 0.756 | 0.724 | 0.729 | 0.575 | 2.6 |
| 10 | 4.200 | 0.9110 | 1.7382 | 0.0137 | 0.7631 | 0.819 | 0.762 | 0.778 | 0.594 | 2.7 |
| 15 | 2.460 | 0.8854 | 1.8447 | 0.0131 | 0.7641 | 0.731 | 0.795 | 0.780 | 0.612 | 2.6 |
| 20 | 3.240 | 0.9999 | 2.0175 | 0.0117 | 0.7649 | 0.790 | 0.813 | 0.809 | 0.636 | 2.6 |

| Experiment | Recall, First Cycle (%) | Recall, Final Cycle (%) | mAP@50, First Cycle (%) | mAP@50, Final Cycle (%) |
|---|---|---|---|---|
| 1 | 63.0 | 83.0 | 61.2 | 81.4 |
| 2 | 61.3 | 79.0 | 57.4 | 80.2 |
| 3 | 53.0 | 80.8 | 52.1 | 76.1 |
| 4 | 60.2 | 77.1 | 31.1 | 71.5 |
| 5 | 60.2 | 78.3 | 33.1 | 71.8 |
| 6 | 60.9 | 76.2 | 34.2 | 72.4 |
| 7 | 62.8 | 76.2 | 55.3 | 74.0 |
| 8 | 60.5 | 72.8 | 32.7 | 73.7 |
| 9 | 59.8 | 80.1 | 31.4 | 74.5 |
| 10 | 61.5 | 72.9 | 45.7 | 73.8 |
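
The change from the first to the final incremental cycle can be read directly off this table. The short script below computes it in percentage points, using only the reported values.

```python
# (recall_first, recall_final, map50_first, map50_final) in %, per experiment.
RESULTS = {
    1: (63.0, 83.0, 61.2, 81.4),
    2: (61.3, 79.0, 57.4, 80.2),
    3: (53.0, 80.8, 52.1, 76.1),
    4: (60.2, 77.1, 31.1, 71.5),
    5: (60.2, 78.3, 33.1, 71.8),
    6: (60.9, 76.2, 34.2, 72.4),
    7: (62.8, 76.2, 55.3, 74.0),
    8: (60.5, 72.8, 32.7, 73.7),
    9: (59.8, 80.1, 31.4, 74.5),
    10: (61.5, 72.9, 45.7, 73.8),
}


def cycle_gains(results):
    """Percentage-point gain in (recall, mAP@50) from the first to the final cycle."""
    return {
        exp: (round(r_final - r_first, 1), round(m_final - m_first, 1))
        for exp, (r_first, r_final, m_first, m_final) in results.items()
    }


gains = cycle_gains(RESULTS)
for exp, (d_recall, d_map) in gains.items():
    print(f"Experiment {exp}: recall +{d_recall} pp, mAP@50 +{d_map} pp")
```

Every experiment improves on both metrics. The largest mAP@50 gain is in Experiment 9 (from 31.4% to 74.5%, +43.1 points) and the smallest in Experiment 7 (+18.7 points), while Experiment 1 ends with the highest final mAP@50 (81.4%).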

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ribeiro, A.G.; Vilaça, L.; Costa, C.; Soares da Costa, T.; Carvalho, P.M. Automatic Visual Inspection for Industrial Application. J. Imaging 2025, 11, 350. https://doi.org/10.3390/jimaging11100350
