Effects of Biases in Geometric and Physics-Based Imaging Attributes on Classification Performance

Abstract

1. Introduction

PART I

1.1. Current Thinking About Generalization in Learned Systems

1.2. The Issue of Bias in Data

- i. In reading-up on the field (5);

- ii. In specifying and selecting the study sample (22);

- iii. In executing the experimental manoeuvre (or exposure) (5);

- iv. In measuring exposures and outcomes (13);

- v. In analysing the data (5);

- vi. In interpreting the analysis (5);

- vii. In publishing the results.

- Popularity bias: The admission of patients to some practices, institutions or procedures (surgery, autopsy) is influenced by the interest stirred up by the presenting condition and its possible causes. In other words, the more researchers, companies, or governments are interested in a particular type of image (e.g., challenges sponsored by a conference or by a prize, the financial lure of start-ups and funding competitions, etc.), the more likely samples of that type (or studies of this type as a whole) are to be prioritized and others excluded. A potential example is generative AI models that produce images with colour palettes most similar to those of commercial images.

- Wrong sample size bias: Samples which are too small can prove nothing; samples which are too large can prove anything. How does one determine sample population size for a given task and domain? Most in the field (perhaps all) follow the maxim ‘the more data the better’ (e.g., Anderson [50]) with little regard to its suitability for the desired outcome. One could imagine that, for a specific field or application, some type of bias is present in all available data or data-gathering methods, so that more data could even degrade performance along a biased dimension.

- Missing clinical data bias: Missing clinical data may be missing because they are normal, negative, never measured, or measured but never recorded. Tsotsos et al. [51] show that the range of possible camera parameters is not well sampled in modern datasets and that their settings make a difference in performance. Similarly, datasets are not typically annotated with imaging geometry or lighting characteristics for each image, yet it is clear that each affects an image. Finally, there are many cases of particular data being missing, and several research efforts to ameliorate this problem were given in Section 1.1 above.

- Diagnostic purity bias: When ‘pure’ diagnostic groups exclude co-morbidity, they may become non-representative. The computer vision counterpart here reflects what Marr [52] prescribed in his theory: that it was designed for stimuli where target and background have a clear psychophysical boundary. That is, object context, clutter, occlusions, and lighting effects that somehow prevent a clear figure–ground segregation are not included in his theory. Many research works to this day still insist on images of this kind even though it is clear they represent only a small subset of all realistic images.

- Unacceptable disease bias: When disorders are socially unacceptable (V.D., suicide, insanity), they tend to be under-reported. In modern computer vision, ‘nuisance’ or ‘adversarial’ situations are under-reported or avoided by engineering around them, while others suggest they can be ignored.

1.3. Setting up the Problem

1.4. The Approach

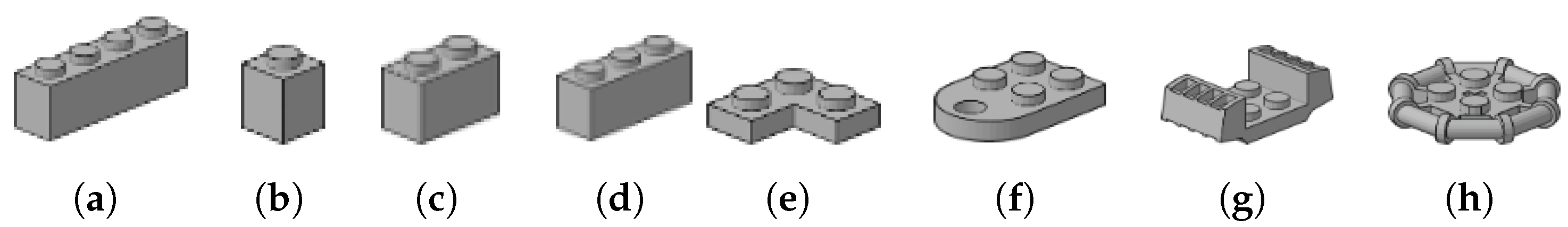

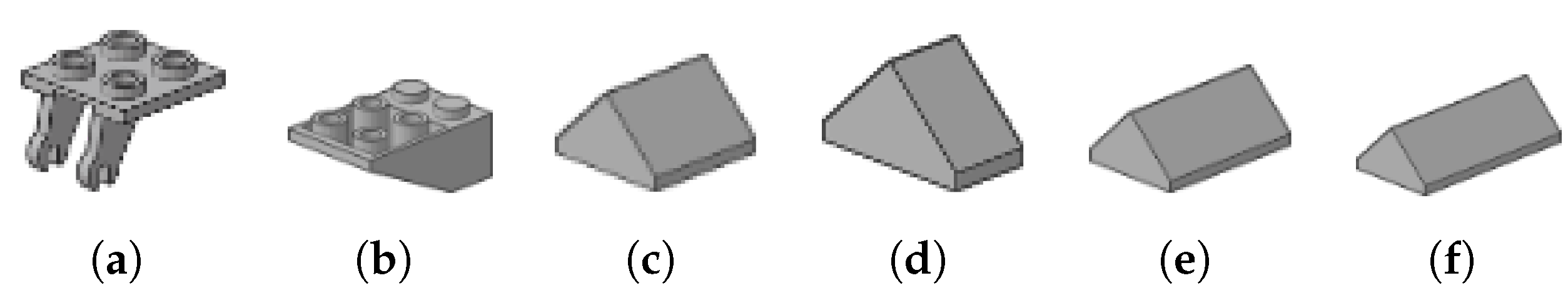

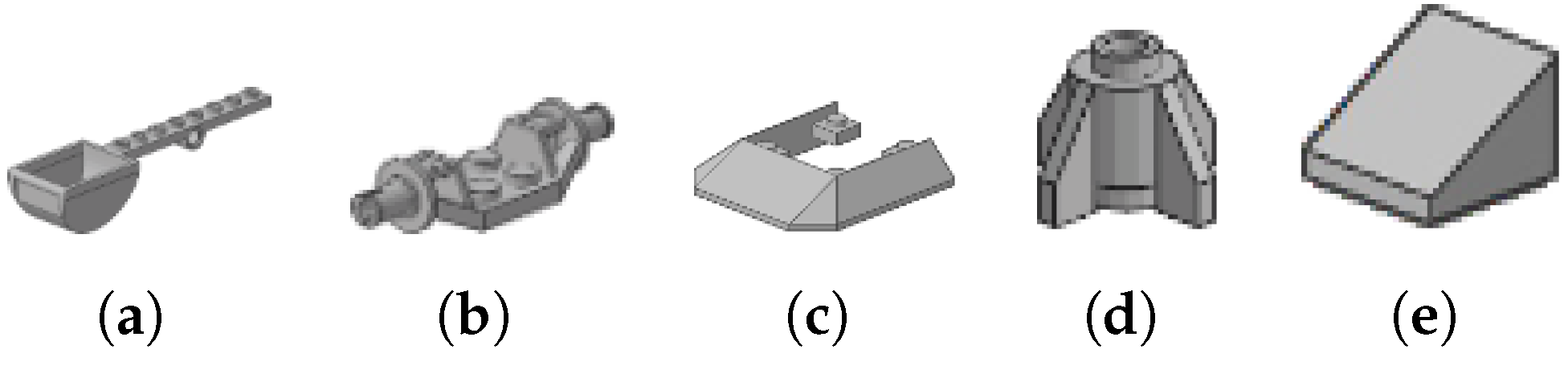

2. The Domain of LEGO Bricks

2.1. The LEGO Brick World

| Category | Number of Sub-Types in Each Category |
|---|---|
| Plates 1 × N | 48 |
| Plates 2 × N | 49 |
| Plates 3 × N–4 × N | 25 |
| Bricks 1 × N | 48 |
| Bricks 2 × N | 33 |
| Wedge-Plates | 29 |
| Tiles | 49 |
| Slopes | 106 |
| Plates 5 × N–6 × N plus Plates 5 × N+ | 22 |
| Hinges | 66 |
| Wedge-Bricks | 45 |

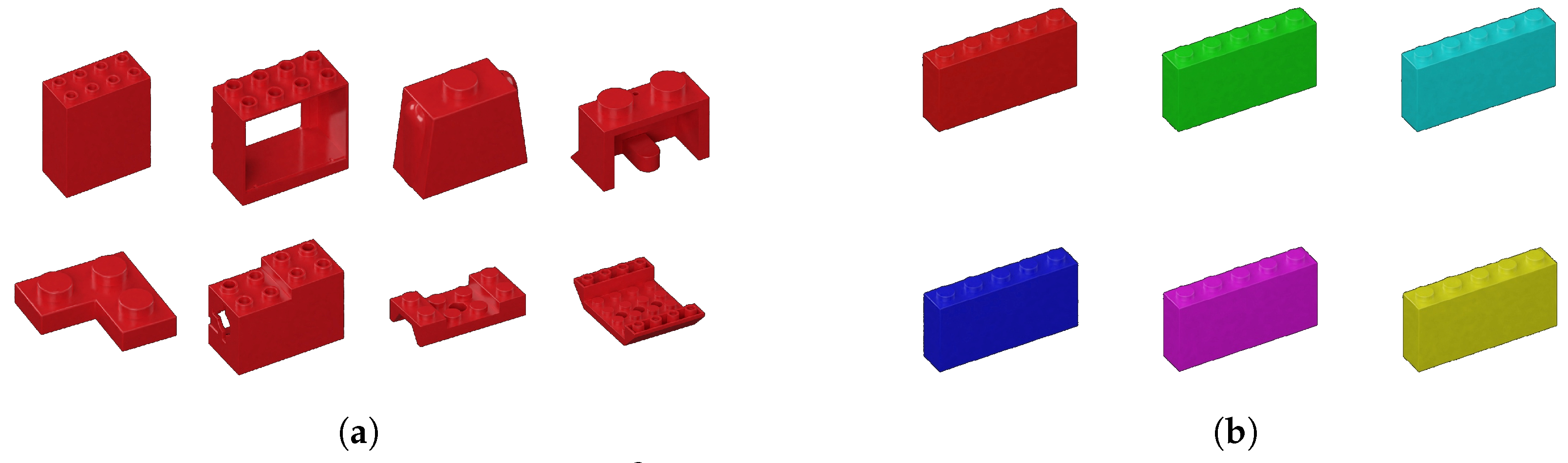

- LEGO® went from 12,000 different pieces to 6800 in the last few years, a number that includes the colour variations.

- Staple colours are red, yellow, blue, green, black, and white. We thus assume equal numbers of each of the 6 colours, which gives approximately 1133 unique brick types (6800/6 ≈ 1133).

- Approximately 19 billion LEGO® elements are produced per year. Moreover, 2.16 million are moulded every hour, 36,000 every minute.

- Additionally, 18 elements in every million produced fail to meet the company’s high standards.
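The figures in the list above can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the constant names are ours, and the yearly total assumes round-the-clock moulding for 365 days, which the text does not state.

```python
# Figures quoted in the list above.
TOTAL_PIECES = 6_800            # distinct pieces, colour variations included
STAPLE_COLOURS = 6              # red, yellow, blue, green, black, white
MOULDED_PER_HOUR = 2_160_000
REJECTS_PER_MILLION = 18

# Equal numbers of pieces per colour (the assumption made in the text).
types_per_colour = TOTAL_PIECES / STAPLE_COLOURS

per_minute = MOULDED_PER_HOUR // 60
per_second = per_minute // 60

# Assumption (not in the text): moulding runs 24 h a day, 365 days a year.
per_year = MOULDED_PER_HOUR * 24 * 365
rejects_per_year = per_year * REJECTS_PER_MILLION // 1_000_000

print(f"brick types per colour: {types_per_colour:.1f}")
print(f"moulded per minute:     {per_minute:,}")
print(f"moulded per second:     {per_second:,}")
print(f"moulded per year:       {per_year:,}")
print(f"rejects per year:       {rejects_per_year:,}")
```

Under that assumption the hourly rate reproduces the quoted 36,000 elements per minute and comes to roughly 19 billion elements per year.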

2.2. Detection and Binning Scenario

- A conveyor belt moves at a speed compatible with the speed of production of the bricks; using the figures given earlier (36,000 elements moulded every minute), this means 600 elements must be processed per second. Several conveyor belts may operate in parallel.

- Lighting is fixed; camera position and optical parameters are fixed and known; the camera’s optical axis is perpendicular to the conveyor belt and centred at the belt’s centreline (the camera is directly overhead and pointing down to the centre of the conveyor belt); shadows are minimized or non-existent; the appearance of the conveyor belt permits easy figure–ground segregation.

- An appropriate system exists for understanding a customer request (e.g., “I need 500 bricks, only yellow and with fewer than 6 studs”) and deploying the appropriate systems for its realization.

- The pose of each brick on the conveyor belt may be upright, upside-down, or on one of its other stable sides. Each brick has its own set of stable sides on which it might lie: at least 2 stable positions, and perhaps as many as 6 (the sides of a cube). We conservatively assume 3 as the average, but this is likely low.

- When in a stable position, bricks may be at any orientation relative to the plane of the conveyor belt; we assume the recognition system understands orientation quantized to 5°, i.e., 72 orientations. (360 orientations seems unnecessarily fine-grained, whereas 4 seems too coarse. The assumption is made to enable the ‘back-of-the-envelope’ kind of counting argument made here, and some other sensible value could easily be used instead. It also seems sensible given the restrictions on camera viewpoint described earlier.)

- We assume that bricks do not overlap and thus do not occlude each other for the camera.

- The number of possible images using the complete library of bricks can be given by 3 (poses) × 520 (brick types) × 141 (colours) × 72 (orientations) = 15,837,120 (single instance of each part at each orientation and pose). In our argument, we will use the actual production numbers described earlier, namely, 3 (poses) × 6800 (bricks of the standard 6 colours) × 72 (orientations) = 1,468,800.
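The counting argument above can be reproduced directly. The following is a minimal sketch: the dictionary simply copies the sub-type counts from the category table in Section 2.1, and all variable names are ours.

```python
# Sub-type counts copied from the category table in Section 2.1.
sub_types = {
    "Plates 1 x N": 48,
    "Plates 2 x N": 49,
    "Plates 3 x N-4 x N": 25,
    "Bricks 1 x N": 48,
    "Bricks 2 x N": 33,
    "Wedge-Plates": 29,
    "Tiles": 49,
    "Slopes": 106,
    "Plates 5 x N-6 x N plus Plates 5 x N+": 22,
    "Hinges": 66,
    "Wedge-Bricks": 45,
}

POSES = 3             # assumed average number of stable poses
COLOURS = 141         # colours in the complete library
ORIENTATIONS = 72     # quantized in-plane orientations
PRODUCED = 6_800      # pieces in production, colour variations included

brick_types = sum(sub_types.values())
step_degrees = 360 / ORIENTATIONS

full_library = POSES * brick_types * COLOURS * ORIENTATIONS
production = POSES * PRODUCED * ORIENTATIONS

print(f"brick types:          {brick_types}")
print(f"orientation step:     {step_degrees} degrees")
print(f"full-library images:  {full_library:,}")
print(f"production images:    {production:,}")
```

The category counts sum to the 520 brick types used in the first product, and 72 orientations correspond to a 5° step.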

3. The Thought Experiment: Setup

- Challenge (or even refute) a prevailing theory, often involving proof via contradiction (Reductio ad Absurdum);

- Confirm a prevailing theory;

- Establish a new theory;

- Simultaneously refute a prevailing theory and establish a new theory through a process of mutual exclusion.

4. The Thought Experiment: Cases Considered

4.1. Training Set Missing One Brick Colour

- Green is created by combining equal parts of blue and yellow;

- Black can be made with equal parts red, yellow, and blue, or blue and orange, red and green, or yellow and purple (The author of https://brickarchitect.com/color/ (accessed on 30 April 2022) points out that the LEGO Black is not a true black, but rather a very dark gray, and LEGO White is actually a light orange-ish gray);

- White can be created by mixing red, green, and blue; alternatively, a yellow (say, 580 nm) and a blue (420 nm) will also give white.

- Red is created by mixing only magenta and yellow;

- Green is created by mixing only cyan and yellow;

- Blue is created by mixing only cyan and magenta;

- Black can be approximated by mixing cyan, magenta, and yellow, but pure black is nearly impossible to achieve.
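The cyan, magenta, and yellow rules in the list above can be illustrated with an idealized subtractive model. This is a sketch under a strong assumption of ours: each pigment either fully reflects or fully absorbs each of the red, green, and blue bands. Real pigments are not ideal in this way, which is consistent with the remark that pure black is nearly impossible to achieve. The function name `mix` is hypothetical.

```python
# Idealized pigments as (R, G, B) reflectances: 1 = reflects, 0 = absorbs.
CYAN = (0, 1, 1)
MAGENTA = (1, 0, 1)
YELLOW = (1, 1, 0)

NAMES = {
    (1, 0, 0): "red",
    (0, 1, 0): "green",
    (0, 0, 1): "blue",
    (0, 0, 0): "black",
}


def mix(*pigments):
    """Subtractive mix: a band survives only if every pigment reflects it."""
    return tuple(min(band) for band in zip(*pigments))


for label, pigments in [
    ("magenta + yellow", (MAGENTA, YELLOW)),
    ("cyan + yellow", (CYAN, YELLOW)),
    ("cyan + magenta", (CYAN, MAGENTA)),
    ("cyan + magenta + yellow", (CYAN, MAGENTA, YELLOW)),
]:
    print(f"{label:24s} -> {NAMES[mix(*pigments)]}")
```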

4.2. Training Set Missing One Brick Size

4.3. Training Set Missing One Brick Orientation

4.4. Training Set Missing One Brick 3D Pose

4.5. Training Set Missing One Brick Shape

4.6. Part I Summary

PART II

5. Empirical Counterpart

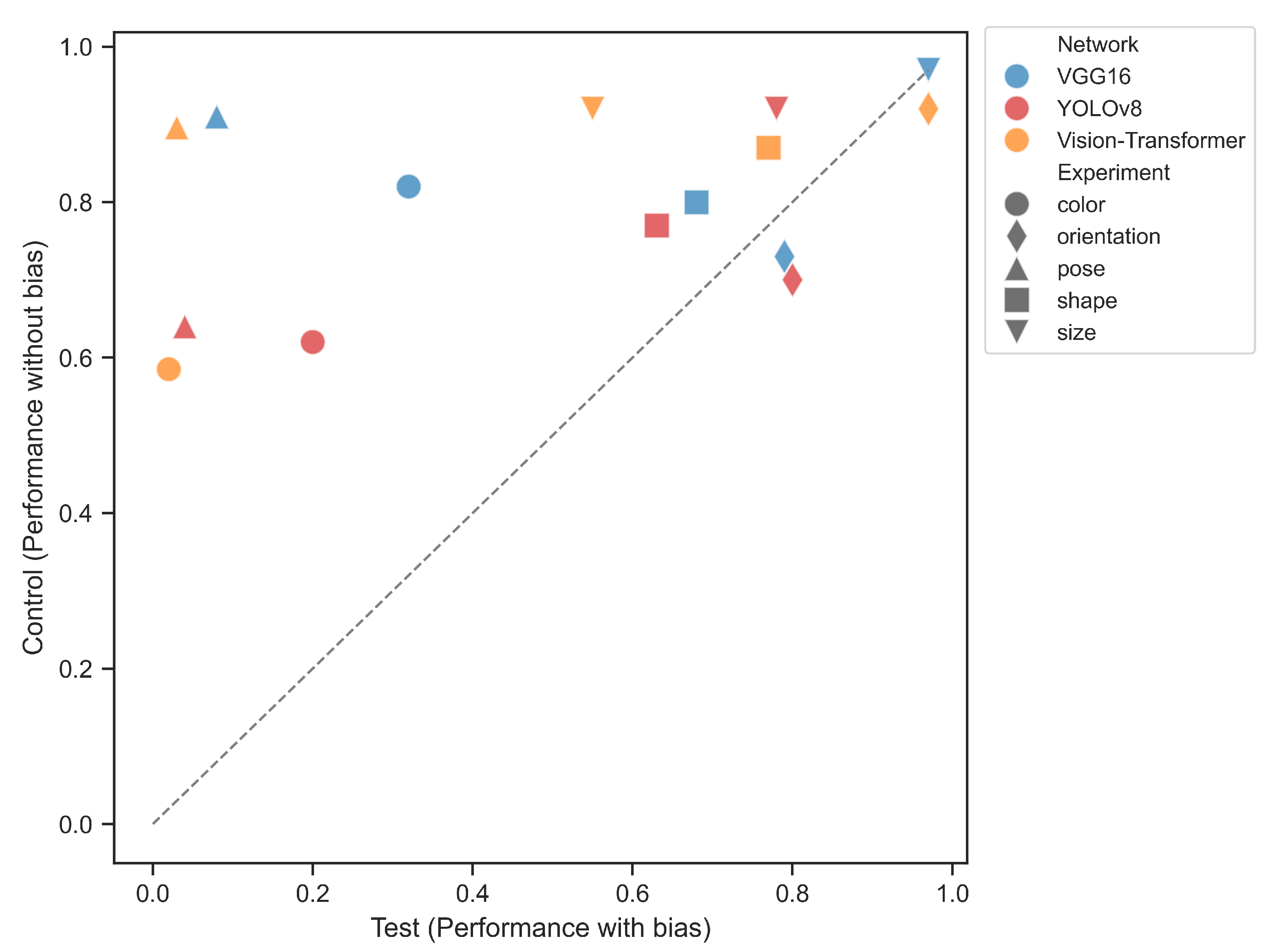

- Training set: This is a biased dataset used to train the models. In each experiment, the training set is biased by excluding certain values of a specific attribute; for example, an experiment might exclude a particular colour, shape, or orientation. As a result, the training data does not represent the full range of variation for that attribute.

- Control set: A subset of the training set is set aside as a control set to evaluate generalization under the same bias. These samples are excluded from the training process but share the same distribution as the training set. This allows us to isolate the effect of the training-test gap by ensuring any performance drop is not simply due to overfitting or memorization.

- Test set: The test set contains samples with attribute values that are entirely missing from the training set. For instance, these samples might include shapes, colours, or poses that the model never encountered during training. These samples are considered out-of-distribution (OOD) relative to the biased training set.
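The three sets described above can be sketched as a simple partition over annotated samples. This is not the pipeline used in the experiments; the function `biased_split`, its parameters, the 20% control fraction, and the toy samples are all hypothetical and serve only to make the definitions concrete.

```python
import random


def biased_split(samples, attribute, held_out, control_fraction=0.2, seed=0):
    """Partition samples into (train, control, test) for one biased attribute.

    Samples whose `attribute` value is in `held_out` form the OOD test set.
    The remaining samples share one distribution; a random fraction of them
    is set aside as the control set and never used for training.
    """
    test = [s for s in samples if s[attribute] in held_out]
    in_dist = [s for s in samples if s[attribute] not in held_out]

    rng = random.Random(seed)
    rng.shuffle(in_dist)
    n_control = int(len(in_dist) * control_fraction)
    control, train = in_dist[:n_control], in_dist[n_control:]
    return train, control, test


# Toy data: 60 samples cycling through the six staple colours.
colours = ["red", "yellow", "blue", "green", "black", "white"]
samples = [{"id": i, "colour": colours[i % 6]} for i in range(60)]

train, control, test = biased_split(samples, "colour", {"green"})
print(len(train), len(control), len(test))
```

Because the control set is drawn from the same pool as the training set, a gap between control and test performance can be attributed to the missing attribute value and not to ordinary overfitting.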

5.1. Dataset—Official LEGO Models

The Size Specific Dataset

5.2. Experiments

5.2.1. Colour Experiment

5.2.2. Orientation Experiment

5.2.3. Pose Experiments

5.2.4. Shape Experiment

5.2.5. Size Experiment

5.3. Experiment Results

5.4. Part II Summary

6. General Discussion

6.1. Methodology and Assumption

6.2. Cases Outside the Brick Binning Task

6.3. Limits on Generalization

6.4. Problems with Data Augmentation

6.5. Bias in Datasets

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Shorten, C.; Khoshgoftaar, T. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

2. Xu, H.; Ma, Y.; Liu, H.; Deb, D.; Liu, H.; Tang, J.; Jain, A. Adversarial attacks and defenses in images, graphs and text: A review. Int. J. Autom. Comput. 2020, 17, 151–178.

3. Bartlett, P.L.; Maass, W. Vapnik-Chervonenkis dimension of neural nets. In The Handbook of Brain Theory and Neural Networks; MIT Press: Cambridge, MA, USA, 2003; pp. 1188–1192.

4. Zhang, C.; Bengio, S.; Hardt, M.; Recht, B.; Vinyals, O. Understanding deep learning requires rethinking generalization. arXiv 2016, arXiv:1611.03530.

5. Keskar, N.S.; Mudigere, D.; Nocedal, J.; Smelyanskiy, M.; Tang, P.T.P. On large-batch training for deep learning: Generalization gap and sharp minima. arXiv 2016, arXiv:1609.04836.

6. Neyshabur, B.; Bhojanapalli, S.; McAllester, D.; Srebro, N. Exploring generalization in deep learning. Adv. Neural Inf. Process. Syst. 2017, 30, 5949–5958.

7. Kawaguchi, K.; Kaelbling, L.P.; Bengio, Y. Generalization in deep learning. arXiv 2017, arXiv:1710.05468.

8. Wu, L.; Zhu, Z. Towards understanding generalization of deep learning: Perspective of loss landscapes. arXiv 2017, arXiv:1706.10239.

9. Novak, R.; Bahri, Y.; Abolafia, D.A.; Pennington, J.; Sohl-Dickstein, J. Sensitivity and generalization in neural networks: An empirical study. arXiv 2018, arXiv:1802.08760.

10. Fetaya, E.; Jacobsen, J.H.; Grathwohl, W.; Zemel, R. Understanding the Limitations of Conditional Generative Models. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019.

11. Wang, H.; Wu, X.; Huang, Z.; Xing, E. High-frequency component helps explain the generalization of convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8684–8694.

12. Zhang, C.; Zhang, M.; Zhang, S.; Jin, D.; Zhou, Q.; Cai, Z.; Liu, Z. Delving deep into the generalization of vision transformers under distribution shifts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 7277–7286.

13. Wang, M.; Ma, C. Generalization error bounds for deep neural networks trained by sgd. arXiv 2022, arXiv:2206.03299.

14. Galanti, T.; Xu, M.; Galanti, L.; Poggio, T. Norm-based generalization bounds for sparse neural networks. Adv. Neural Inf. Process. Syst. 2023, 36, 42482–42501.

15. Zhao, C.; Sigaud, O.; Stulp, F.; Hospedales, T. Investigating generalisation in continuous deep reinforcement learning. arXiv 2019, arXiv:1902.07015.

16. Barbu, A.; Mayo, D.; Alverio, J.; Luo, W.; Wang, C.; Gutfreund, D.; Tenenbaum, J.; Katz, B. Objectnet: A large-scale bias-controlled dataset for pushing the limits of object recognition models. Adv. Neural Inf. Process. Syst. 2019, 32, 9453–9463.

17. Afifi, M.; Brown, M.S. What else can fool deep learning? Addressing color constancy errors on deep neural network performance. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 243–252.

18. Senhaji, A.; Raitoharju, J.; Gabbouj, M.; Iosifidis, A. Not all domains are equally complex: Adaptive Multi-Domain Learning. arXiv 2020, arXiv:2003.11504.

19. Bengio, Y.; Gingras, F. Recurrent neural networks for missing or asynchronous data. Adv. Neural Inf. Process. Syst. 1995, 8, 395–401.

20. Van Buuren, S. Flexible Imputation of Missing Data; CRC Press: Boca Raton, FL, USA, 2018.

21. Lin, W.C.; Tsai, C.F. Missing value imputation: A review and analysis of the literature (2006–2017). Artif. Intell. Rev. 2020, 53, 1487–1509.

22. Śmieja, M.; Struski, L.; Tabor, J.; Zieliński, B.; Spurek, P. Processing of missing data by neural networks. Adv. Neural Inf. Process. Syst. 2018, 31, 2719–2729.

23. Chai, X.; Gu, H.; Li, F.; Duan, H.; Hu, X.; Lin, K. Deep learning for irregularly and regularly missing data reconstruction. Sci. Rep. 2020, 10, 3302.

24. Zhou, K.; Yang, Y.; Qiao, Y.; Xiang, T. MixStyle Neural Networks for Domain Generalization and Adaptation. Int. J. Comput. Vis. 2024, 132, 822–836.

25. García-Laencina, P.J.; Sancho-Gómez, J.L.; Figueiras-Vidal, A.R. Pattern classification with missing data: A review. Neural Comput. Appl. 2010, 19, 263–282.

26. Polikar, R.; DePasquale, J.; Mohammed, H.S.; Brown, G.; Kuncheva, L.I. Learn++. MF: A random subspace approach for the missing feature problem. Pattern Recognit. 2010, 43, 3817–3832.

27. Fridovich-Keil, S.; Bartoldson, B.; Diffenderfer, J.; Kailkhura, B.; Bremer, T. Models out of line: A fourier lens on distribution shift robustness. Adv. Neural Inf. Process. Syst. 2022, 35, 11175–11188.

28. Simsek, B.; Hall, M.; Sagun, L. Understanding out-of-distribution accuracies through quantifying difficulty of test samples. arXiv 2022, arXiv:2203.15100.

29. Hendrycks, D.; Basart, S.; Mu, N.; Kadavath, S.; Wang, F.; Dorundo, E.; Desai, R.; Zhu, T.; Parajuli, S.; Guo, M.; et al. The Many Faces of Robustness: A Critical Analysis of Out-of-Distribution Generalization. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; IEEE Computer Society: Washington, DC, USA, 2021; pp. 8320–8329.

30. Hendrycks, D.; Zhao, K.; Basart, S.; Steinhardt, J.; Song, D. Natural adversarial examples. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15262–15271.

31. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

32. Ridnik, T.; Ben-Baruch, E.; Noy, A.; Zelnik-Manor, L. Imagenet-21k pretraining for the masses. arXiv 2021, arXiv:2104.10972.

33. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

34. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

35. Alcorn, M.A.; Li, Q.; Gong, Z.; Wang, C.; Mai, L.; Ku, W.S.; Nguyen, A. Strike (with) a pose: Neural networks are easily fooled by strange poses of familiar objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4845–4854.

36. Zong, Y.; Yang, Y.; Hospedales, T. MEDFAIR: Benchmarking fairness for medical imaging. arXiv 2022, arXiv:2210.01725.

37. Larrazabal, A.J.; Nieto, N.; Peterson, V.; Milone, D.H.; Ferrante, E. Gender imbalance in medical imaging datasets produces biased classifiers for computer-aided diagnosis. Proc. Natl. Acad. Sci. USA 2020, 117, 12592–12594.

38. Drenkow, N.; Pavlak, M.; Harrigian, K.; Zirikly, A.; Subbaswamy, A.; Farhangi, M.M.; Petrick, N.; Unberath, M. Detecting dataset bias in medical AI: A generalized and modality-agnostic auditing framework. arXiv 2025, arXiv:2503.09969.

39. Chen, I.; Johansson, F.D.; Sontag, D. Why is my classifier discriminatory? In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2018; Volume 31.

40. Seyyed-Kalantari, L.; Liu, G.; McDermott, M.; Chen, I.Y.; Ghassemi, M. CheXclusion: Fairness gaps in deep chest X-ray classifiers. In Biocomputing 2021: Proceedings of the Pacific Symposium; World Scientific: Singapore, 2020; pp. 232–243.

41. Li, X.; Chen, Z.; Zhang, J.M.; Sarro, F.; Zhang, Y.; Liu, X. Bias behind the wheel: Fairness testing of autonomous driving systems. ACM Trans. Softw. Eng. Methodol. 2025, 34, 1–24.

42. Fernández Llorca, D.; Frau, P.; Parra, I.; Izquierdo, R.; Gómez, E. Attribute annotation and bias evaluation in visual datasets for autonomous driving. J. Big Data 2024, 11, 137.

43. Buolamwini, J.; Gebru, T. Gender shades: Intersectional accuracy disparities in commercial gender classification. In Proceedings of the Conference on Fairness, Accountability and Transparency, New York, NY, USA, 23–24 February 2018; pp. 77–91.

44. Kortylewski, A.; Egger, B.; Schneider, A.; Gerig, T.; Morel-Forster, A.; Vetter, T. Empirically analyzing the effect of dataset biases on deep face recognition systems. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2093–2102.

45. Kortylewski, A.; Egger, B.; Schneider, A.; Gerig, T.; Morel-Forster, A.; Vetter, T. Analyzing and reducing the damage of dataset bias to face recognition with synthetic data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; pp. 2261–2268.

46. Balding, D.J. A tutorial on statistical methods for population association studies. Nat. Rev. Genet. 2006, 7, 781–791.

47. Gordis, L. Epidemiology; Elsevier Health Sciences: Amsterdam, The Netherlands, 2014.

48. Sackett, D. Bias in analytic research. J. Chronic Dis. 1979, 32, 51–63.

49. Grimes, D.A.; Schulz, K.F. Bias and causal associations in observational research. Lancet 2002, 359, 248–252.

50. Anderson, C. The end of theory: The data deluge makes the scientific method obsolete. Wired Magazine, 23 June 2008.

51. Tsotsos, J.; Kotseruba, I.; Andreopoulos, A.; Wu, Y. A Possible Reason for why Data-Driven Beats Theory-Driven Computer Vision. arXiv 2019, arXiv:1908.10933.

52. Marr, D. Vision: A Computational Investigation into the Human Representation and Processing of Visual Information; MIT Press: Cambridge, MA, USA, 1982.

53. Pavlidis, T. The number of all possible meaningful or discernible pictures. Pattern Recognit. Lett. 2009, 30, 1413–1415.

54. Andreopoulos, A.; Tsotsos, J.K. On sensor bias in experimental methods for comparing interest-point, saliency, and recognition algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 110–126.

55. Wu, Y.; Tsotsos, J. Active control of camera parameters for object detection algorithms. arXiv 2017, arXiv:1705.05685.

56. Tsotsos, J. The Complexity of Perceptual Search Tasks. In Proceedings of the International Joint Conference on Artificial Intelligence, Detroit, MI, USA, 20–25 August 1989; pp. 1571–1577.

57. Ahmad, S.; Tresp, V. Some solutions to the missing feature problem in vision. Adv. Neural Inf. Process. Syst. 1992, 5, 393–400.

58. Boen, J. BrickGun Storage Labels. Available online: https://brickgun.com/Labels/BrickGun_Storage_Labels.html (accessed on 30 April 2022).

59. Diaz, J. Everything You Always Wanted to Know About LEGO®. 2009. Available online: https://gizmodo.com/everything-you-always-wanted-to-know-about-LEGO-5019797 (accessed on 23 April 2025).

60. Robinson, J.B. Futures under glass: A recipe for people who hate to predict. Futures 1990, 22, 820–842.

61. Xu, M.; Bai, Y.; Ghanem, B.; Liu, B.; Gao, Y.; Guo, N.; Olmschenk, G. Missing Labels in Object Detection. In Proceedings of the CVPR Workshops, Long Beach, CA, USA, 16–20 June 2019.

62. Koenderink, J.J. Color for the Sciences; MIT Press: Cambridge, MA, USA, 2010.

63. Rumelhart, D.E.; McClelland, J.L.; Hinton, G.E.; PDP Research Group. Distributed representations. In Parallel Distributed Processing, Volume 1: Explorations in the Microstructure of Cognition: Foundations; MIT Press: Cambridge, MA, USA, 1986; Chapter 3.

64. Rasouli, A.; Tsotsos, J.K. The effect of color space selection on detectability and discriminability of colored objects. arXiv 2017, arXiv:1702.05421.

65. Cutler, B. Aspect Graphs Under Different Viewing Models. 2003. Available online: http://people.csail.mit.edu/bmcutler/6.838/project/aspect_graph.html#22 (accessed on 30 April 2022).

66. Plantinga, H.; Dyer, C.R. Visibility, occlusion, and the aspect graph. Int. J. Comput. Vis. 1990, 5, 137–160.

67. Rosenfeld, A. Recognizing unexpected objects: A proposed approach. Int. J. Pattern Recognit. Artif. Intell. 1987, 1, 71–84.

68. Dickinson, S.J.; Pentland, A.; Rosenfeld, A. 3-D shape recovery using distributed aspect matching. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 174–198.

69. Engstrom, L.; Tran, B.; Tsipras, D.; Schmidt, L.; Madry, A. A rotation and a translation suffice: Fooling CNNs with simple transformations. arXiv 2017, arXiv:1712.02779.

70. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. Adv. Neural Inf. Process. Syst. 2014, 27, 2672–2680.

71. Rosenfeld, A.; Zemel, R.; Tsotsos, J.K. The elephant in the room. arXiv 2018, arXiv:1808.03305.

72. Clowes, M.B. On seeing things. Artif. Intell. 1971, 2, 79–116.

73. Waltz, D.L. Understanding line drawings of scenes with shadows. In The Psychology of Computer Vision; Winston, P.H., Horn, B.K.P., Eds.; McGraw–Hill: New York, NY, USA, 1975; pp. 19–91.

74. Kirousis, L.M.; Papadimitriou, C.H. The complexity of recognizing polyhedral scenes. J. Comput. Syst. Sci. 1988, 37, 14–38.

75. Parodi, P. The Complexity of Understanding Line Drawings of Origami Scenes. Int. J. Comput. Vis. 1996, 18, 139–170.

76. Sugihara, K. Mathematical structures of line drawings of polyhedrons. IEEE Trans. Pattern Anal. Mach. Intell. 1982, 4, 458–469.

77. Malik, J. Interpreting line drawings of curved objects. Int. J. Comput. Vis. 1987, 1, 73–103.

78. LDraw.org. LDraw (Version LDraw.org Parts Update 2023-05). 2023. Available online: https://www.ldraw.org (accessed on 20 December 2023).

79. Blender Studio. Blender. Version 2.79. Available online: https://www.blender.org/ (accessed on 20 December 2023).

80. Nelson, T. ImportLDraw: A plugin for the LDraw library in Blender, Version 1.1.10. 2019. Available online: https://github.com/TobyLobster/ImportLDraw (accessed on 17 September 2025).

81. Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

82. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLO Version 8. Ultralytics. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 1 October 2023).

83. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the ICLR, San Diego, CA, USA, 7–9 May 2015.

84. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

85. Gavrilescu, R.; Zet, C.; Foșalău, C.; Skoczylas, M.; Cotovanu, D. Faster R-CNN: An approach to real-time object detection. In Proceedings of the 2018 International Conference and Exposition on Electrical and Power Engineering (EPE), Iasi, Romania, 18–19 October 2018; pp. 0165–0168.

86. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

87. Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin transformer v2: Scaling up capacity and resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12009–12019.

88. Chen, X.; Wang, X.; Changpinyo, S.; Piergiovanni, A.; Padlewski, P.; Salz, D.; Goodman, S.; Grycner, A.; Mustafa, B.; Beyer, L.; et al. PaLI: A Jointly-Scaled Multilingual Language-Image Model. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

89. Faugeras, O. Three-Dimensional Computer Vision: A Geometric Viewpoint; MIT Press: Cambridge, MA, USA, 1993.

90. Horn, B. Robot Vision; MIT Press: Cambridge, MA, USA, 1986.

91. Bajcsy, R.; Aloimonos, Y.; Tsotsos, J.K. Revisiting active perception. Auton. Robot. 2018, 42, 177–196.

92. Kratsios, A.; Bilokopytov, I. Non-euclidean universal approximation. Adv. Neural Inf. Process. Syst. 2020, 33, 10635–10646.

93. van Dyk, D.; Meng, X.L. The Art of Data Augmentation. J. Comput. Graph. Stat. 2001, 10, 1–50.

94. Kukačka, J.; Golkov, V.; Cremers, D. Regularization for deep learning: A taxonomy. arXiv 2017, arXiv:1710.10686.

95. Hernández-García, A.; König, P. Data augmentation instead of explicit regularization. arXiv 2018, arXiv:1806.03852.

96. Hill, H.; Bruce, V. Independent effects of lighting, orientation, and stereopsis on the hollow-face illusion. Perception 1993, 22, 887–897.

97. Carroll, J.B. Human Cognitive Abilities: A Survey of Factor-Analytic Studies; Number 1; Cambridge University Press: Cambridge, UK, 1993.

98. Itti, L.; Rees, G.; Tsotsos, J.K. Neurobiology of Attention; Elsevier: Amsterdam, The Netherlands, 2005.

99. Rasouli, A.; Tsotsos, J.K. Autonomous vehicles that interact with pedestrians: A survey of theory and practice. IEEE Trans. Intell. Transp. Syst. 2019, 21, 900–918.

100. Akhtar, N.; Mian, A. Threat of adversarial attacks on deep learning in computer vision: A survey. IEEE Access 2018, 6, 14410–14430.

| Training Set Bias | Learned Network | Generalization Error |
|---|---|---|
| Unbiased | A | |
| Colour Biased | , | |
| , | | |
| Size Biased | | |
| Orientation Biased | | |
| 3D Pose Biased | | |
| Shape Biased | | |

| ⏹ ff36ff | ⏹ 3636ff | ⏹ 36ffff | ⏹ 36ff36 | ⏹ ffff36 |
|---|---|---|---|---|
| ⏹ ff8082 | ⏹ ff80ff | ⏹ 8080ff | ⏹ 80ffff | ⏹ 80ff80 |
| ⏹ ffff80 | ⏹ 800002 | ⏹ 800080 | ⏹ 000080 | ⏹ 008080 |
| ⏹ 008000 | ⏹ 808000 | ⏹ ff3333 | ⏹ 808080 | ⏹ 000000 |

| Attribute | VGG 16 Test | VGG 16 Train | VGG 16 Ctrl | YOLOv8 Test | YOLOv8 Train | YOLOv8 Ctrl | ViT Test | ViT Train | ViT Ctrl |
|---|---|---|---|---|---|---|---|---|---|
| Size | 97 | 97 | 97 | 78 | 90 | 92 | 55 | 85 | 92 |
| Shape | 68 | 80 | 80 | 63 | 77 | 77 | 77 | 87 | 87 |
| Orientation | 79 | 73 | 73 | 80 | 69 | 70 | 97 | 96 | 92 |
| Pose | 8 | 90 | 90 | 4 | 64 | 64 | 3 | 89 | 89 |
| Colour | 33 | 84 | 82 | 20 | 63 | 62 | 2 | 59 | 58 |
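One way to read the results table above is as a gap between control and test scores for each model and attribute. The sketch below assumes that the nine value columns are Test, Train, and Ctrl for VGG 16, YOLOv8, and ViT, in that order, as the table header suggests; the helper `gap` and the dictionary layout are ours.

```python
# (test, train, control) scores copied from the results table, assuming the
# columns are Test, Train, Ctrl for each model in header order.
scores = {
    "VGG 16": {
        "Size": (97, 97, 97), "Shape": (68, 80, 80),
        "Orientation": (79, 73, 73), "Pose": (8, 90, 90),
        "Colour": (33, 84, 82),
    },
    "YOLOv8": {
        "Size": (78, 90, 92), "Shape": (63, 77, 77),
        "Orientation": (80, 69, 70), "Pose": (4, 64, 64),
        "Colour": (20, 63, 62),
    },
    "ViT": {
        "Size": (55, 85, 92), "Shape": (77, 87, 87),
        "Orientation": (97, 96, 92), "Pose": (3, 89, 89),
        "Colour": (2, 59, 58),
    },
}


def gap(model, attribute):
    """Control score minus test score; positive means the test set is harder."""
    test, _train, control = scores[model][attribute]
    return control - test


for model, by_attribute in scores.items():
    worst = max(by_attribute, key=lambda a: gap(model, a))
    gaps = ", ".join(f"{a}: {gap(model, a):+d}" for a in by_attribute)
    print(f"{model:7s} {gaps}  (largest gap: {worst})")
```

On this reading, the missing 3D pose produces the largest control-to-test drop for all three models, while the orientation gap is slightly negative.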

| Bias Class |
|---|
| Bias dimensions evaluated |
| Interpretation within current domain |
| Bias not applicable by design |
| Evaluated impact on performance requirements |
| Bias dimensions not evaluated |
| Estimated impact of non-evaluated biases |

| Bias Class | missing data |
|---|---|
| Bias dimensions evaluated | size, shape, orientation, colour, 3D pose |
| Interpretation within current domain | there are no training samples representing a particular value of one or more of these dimensions |
| Bias not applicable by design | the following are assumed constant across all training/test samples: imaging geometry, lighting, occlusion |
| Evaluated impact on performance requirements | See Table 1 above |
| Bias dimensions not evaluated | conjunctions of size, shape, orientation, colour, 3D pose; low sample size of some dimension |
| Estimated impact of non-evaluated biases | less predictable: |
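The bias breakdown above could accompany a dataset as a small structured record. The following is one possible encoding, not a format defined in this article: the class `BiasBreakdown` and its field names are hypothetical, and the values are copied from the filled-in table.

```python
from dataclasses import asdict, dataclass


@dataclass
class BiasBreakdown:
    """One row set of the bias breakdown table, as a machine-readable record."""
    bias_class: str
    dimensions_evaluated: list[str]
    interpretation: str
    not_applicable_by_design: list[str]
    evaluated_impact: str
    dimensions_not_evaluated: list[str]
    estimated_impact_not_evaluated: str


missing_data = BiasBreakdown(
    bias_class="missing data",
    dimensions_evaluated=["size", "shape", "orientation", "colour", "3D pose"],
    interpretation=(
        "there are no training samples representing a particular value "
        "of one or more of these dimensions"
    ),
    not_applicable_by_design=["imaging geometry", "lighting", "occlusion"],
    evaluated_impact="See Table 1 above",
    dimensions_not_evaluated=[
        "conjunctions of size, shape, orientation, colour, 3D pose",
        "low sample size of some dimension",
    ],
    estimated_impact_not_evaluated="less predictable",
)

for name, value in asdict(missing_data).items():
    print(f"{name}: {value}")
```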


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rouhani, B.; Tsotsos, J.K. Effects of Biases in Geometric and Physics-Based Imaging Attributes on Classification Performance. J. Imaging 2025, 11, 333. https://doi.org/10.3390/jimaging11100333
