Abstract

This paper reviews the background and contextual literature on deep learning (DL), an evolving technology, in order to provide a comprehensive analysis of its application to a specific problem: pneumonia detection from chest X-ray (CXR) images. CXR is the most common and cost-effective imaging technique available worldwide for pneumonia diagnosis. The review focuses on the period associated with COVID-19, 2020–2023, and aims to explain, analyze, and systematically evaluate the limitations of existing approaches and to determine their relative effectiveness. The context in which DL is applied, both as an aid to and as an automated substitute for expert radiologists, who are often in limited supply, is described in detail. The rationale for the research is provided, along with a justification of the resources adopted and their relevance. The explanatory text and subsequent analyses are intended to describe the problem, existing solutions, and their limitations in sufficient detail, ranging from the specific to the more general. Our analysis and evaluation agree with the generally held view that transformers, specifically vision transformers (ViTs), are the most promising technique for obtaining further improvements in pneumonia detection using CXR images. However, ViTs require extensive further research to address several limitations, specifically: biased CXR datasets, data and code availability, model explainability, systematic methods for accurate model comparison, class imbalance in CXR datasets, and the possibility of adversarial attacks, the last of which remains an area of fundamental research.

1. Introduction

Pneumonia is a lung infection caused by various pathogens, including viruses, bacteria, and fungi [1,2]. It affects one or both lungs and causes inflammation of the lung parenchyma, i.e., the portion of the lung tissue responsible for gas exchange, including the pulmonary alveoli. The inflammation causes the alveoli to fill with pus or fluid, resulting in symptoms such as shortness of breath, cough, chest pain, and fever. Common pathogens responsible for viral pneumonia include influenza viruses, respiratory syncytial virus, and SARS-CoV-2, while the most common cause of bacterial pneumonia is Streptococcus pneumoniae [3].

Pneumonia is a significant global health threat with high mortality worldwide. It is a major cause of death among children under five years of age in developing countries and among people over 65 in developed countries [4,5,6]. More recently, the SARS-CoV-2 outbreak has caused severe disruption across the world. As of the time of writing, there have been more than 702 million confirmed cases of the virus globally, resulting in around 6.9 million deaths [7]. In many cases, SARS-CoV-2 infection leads to pneumonia [8], which may require hospitalization. Because SARS-CoV-2 has a relatively high transmission rate, with an R0 of around 2.5 for the original variant [9], the healthcare systems of even developed countries have at times been overwhelmed by the number of COVID-19 patients [10]. For these reasons, timely and accurate pneumonia diagnosis is needed to enable prompt treatment, which in turn helps mitigate the pneumonia-associated crisis.

Common tools for diagnosing pneumonia include chest X-rays (CXRs), chest computed tomography (CT) scans, magnetic resonance imaging (MRI) of the chest, and chest ultrasound scans [11]. Although CXRs have lower sensitivity for detecting pneumonia than some other diagnostic tools, such as chest CT and chest ultrasound [12,13], they are still considered the gold standard for diagnosing pneumonia by most clinical guidelines worldwide [14]. Moreover, CXRs are very economical [15] and easily accessible, and they involve much lower radiation doses than CT scans [16]. For example, a CXR delivers only 0.1 millisievert (mSv) of ionizing radiation to the patient, compared with 7 mSv for a chest CT scan; i.e., the CT scan delivers a dose seventy times higher. CXRs also have advantages over MRI: they are cheaper, much quicker to perform, and far more widely available, even in resource-constrained parts of the world. For these reasons, CXRs are the most commonly performed radiological examinations in the world [17].

In the context of the COVID-19 pandemic, CXRs enable the rapid triaging of patients during a COVID-19 wave [18]. This is because the gold-standard diagnostic test for SARS-CoV-2, reverse transcription-polymerase chain reaction (RT-PCR) [19], is time-consuming, involves a laborious manual process [20], and suffers from relatively low sensitivity [20]. Using CXR-based diagnosis in parallel with the slower RT-PCR test can help prioritize patients and improve survival rates. In addition, portable CXR units help maintain patient isolation and prevent the spread of the virus [18,21]. All of this relies on the fact that COVID-19 pneumonia has characteristic manifestations on CXRs [21] that differ from those of other forms of pneumonia.

Unfortunately, despite these benefits of CXRs as a diagnostic tool for pneumonia, there is a shortage of expert radiologists who can accurately interpret CXRs [6,15,22], especially in developing countries; i.e., there is often a serious imbalance between the number of patients and the number of available radiologists. Moreover, because the resolution of CXRs is lower than that of CT and MRI scans, even an expert radiologist or clinician may miss an important pattern or manifestation in a CXR [6]. Computer-aided diagnosis (CAD) tools can assist radiologists and clinicians with pneumonia diagnosis, and deep learning (DL)-based methods have been extensively evaluated as an underlying CAD technology for this purpose [1,2,6,8,11,15,18,23,24,25,26].

Over the past decade, DL methods have dramatically improved the state of the art in visual recognition tasks [27,28], including diagnostic accuracy in medical imaging [29], such as pneumonia diagnosis from CXRs. Unlike traditional machine learning (ML) approaches, DL methods do not require domain expertise to hand-craft features, because features are learned directly from the data using a general-purpose learning procedure called backpropagation [27]. This enables automatic, end-to-end feature extraction and image classification. A convolutional neural network (CNN) is a type of deep neural network specialized for visual recognition tasks such as pneumonia diagnosis in CXRs [30]. An important challenge in exploiting CNNs for diagnostic tasks is the scarcity of large, high-quality, correctly labeled medical image datasets. Training on small datasets often leads to problems such as overfitting and poor generalization, and training on poorly labeled datasets is likely to lead to misleading diagnoses. The public availability of several high-quality CXR datasets [1,31,32,33,34,35] has helped promote research on automated chest disease diagnosis. In some studies [36,37], synthetic CXR images generated with generative adversarial networks (GANs) have been used successfully to mitigate overfitting and poor generalization.

In this paper, we review research on pneumonia identification in CXR images using DL over the last eleven years, i.e., from 2012 to 2023. The year 2012 was chosen as the starting point because the computer vision community adopted deep learning as its mainstay after its remarkable performance in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012 [27,38]. However, from 2012 to 2016, almost all research in this area used ML approaches [39,40] rather than DL, so in effect this paper covers studies published after 2016. To reflect the current state of the art, this study mainly focuses on work published from 2020 onwards. The last search was performed on 30 November 2023; therefore, this study only considers research published up to that date. Articles were searched in four electronic research databases, namely IEEE Xplore, SpringerLink, ScienceDirect, and ACM Digital Library. This paper provides a comprehensive review and critical analysis of the retrieved literature.

2. Research Questions

Research questions provide motivation and guidance throughout the research process [41], and a research study is normally built around them [42]. The key research question for this survey is as follows: “How can we draw a comprehensive picture of the state of research on pneumonia detection from CXRs using deep learning?” To answer this main question comprehensively, the following research questions were formulated:

RQ-1: What are the basic concepts behind DL-based pneumonia detection using CXRs?

Rationale: Answering this question will establish the theoretical foundation of this research field.

RQ-2: What are the publicly available pneumonia-specific CXR datasets?

Rationale: The answer to this question will involve listing and describing the various publicly available, labelled, and pneumonia-specific CXR datasets.

RQ-3: What are some key statistics in this research area?

Rationale: Answering this question will unveil key statistics in this research area. It will indicate how interest in this field has evolved over the course of time. Moreover, it will also reveal other important statistics, such as the relative frequencies of the commonly exploited methods, the number of studies focusing on COVID-19 pneumonia detection, etc.

RQ-4: What are some of the recent techniques that have been thoroughly evaluated and have yielded promising results?

Rationale: The answer to this question will elaborate on the various recent DL algorithms and architectures involved in solving the problem of pneumonia detection using CXRs.

RQ-5: What are some key issues and challenges in this field?

Rationale: The answer to this question will involve highlighting key challenges and issues that the researchers in this field need to resolve.

3. Research Method

An initial search was performed on Google Scholar with phrases like “pneumonia detection in CXR using deep learning” and “COVID-19 detection in CXR using deep learning”. After finding some relevant papers, a list of keywords was prepared, and search venues were selected. The keywords selected were “pneumonia”, “chest X-ray”, “CXR”, “chest radiograph”, “deep learning”, “convolutional neural network”, and “cnn”. The list of keywords was finalized based on the most frequently occurring keywords in the relevant papers. Four electronic research databases [43] were finalized based on their credibility and relevance. They were “IEEE Xplore”, “ScienceDirect”, “SpringerLink”, and “ACM Digital Library”. The following search query [17] was designed based on the selected keywords: “pneumonia” AND (“CXR” OR “chest X-ray” OR “chest radiograph”) AND (“deep learning” OR “CNN” OR “convolutional neural network”).
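The Boolean structure of this query can be made explicit with a small sketch that checks whether a title or abstract would match it. This is illustrative only: it assumes plain case-insensitive substring matching, whereas each database applies its own field restrictions, stemming, and phrase rules; `QUERY_GROUPS` and `matches_query` are hypothetical names.

```python
# Term groups from the search query: the groups are AND-ed together,
# and the terms inside each group are OR-ed.
QUERY_GROUPS = [
    ["pneumonia"],
    ["cxr", "chest x-ray", "chest radiograph"],
    ["deep learning", "cnn", "convolutional neural network"],
]


def matches_query(text: str) -> bool:
    """Return True if `text` satisfies the Boolean query.

    Hypothetical helper: case-insensitive substring matching only
    (no stemming, field restrictions, or database-specific syntax).
    """
    lowered = text.lower()
    return all(any(term in lowered for term in group) for group in QUERY_GROUPS)


if __name__ == "__main__":
    print(matches_query("Deep learning for pneumonia detection in chest X-ray images"))  # True
    print(matches_query("Pneumonia detection from CT scans using a CNN"))  # False
```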

Along with specifying the above search query, a number of other search filters were also applied to narrow down the search criteria. For example, the subject area or discipline was restricted to “computer science”, and article type was restricted to “research articles”. When this search query along with relevant filters was run on the four selected research databases, a large number of items were returned. The precise number of items returned by each database is given in Table 1 below.

Table 1.

Number of search items returned by the research databases.

In total, 789 research items were returned by the four research databases. These research items were then short-listed by following strict inclusion/exclusion criteria, which are outlined below:

3.1. Inclusion Criteria

All included studies satisfy the following three criteria:

- Empirical studies that address the problem of DL-based pneumonia detection using CXRs;

- Studies published in a peer-reviewed journal or conference proceeding;

- Studies published between 2020 and 2023, inclusive.

3.2. Exclusion Criteria

If a paper or a publication fell into any of the following categories, it was excluded:

- All reviews and survey papers;

- All non-peer-reviewed publications;

- Short papers fewer than 5 pages long;

- Book chapters, as these are usually reviews of a research area;

- All papers that were scientifically unsound, i.e., papers in which the methodology is not clearly presented or in which the hypothesis or proposed solution is not methodically evaluated;

- All papers not written in English;

- Duplicate papers (if the same item was returned by two or more databases, only a single copy was retained);

- Papers focusing on the detection of other chest diseases, such as tuberculosis, pneumothorax, or cardiomegaly, in which pneumonia detection is not considered;

- All papers exclusively exploiting traditional ML approaches;

- All papers exclusively based on non-CXR modalities like CT scans, ultrasound scans, etc.

The fifth exclusion criterion states that scientifically unsound papers were discarded. It is important to define precisely what constitutes a scientifically unsound paper, so quality assessment criteria were formulated. For a paper to be considered scientifically sound, the answer to all of the following questions had to be ‘yes’:

- Are the research objectives clear and well defined?

- Is the methodology comprehensively explained?

- Is the proposed solution thoroughly evaluated?

- Are the limitations of the research clearly stated?

- Is the research work published in a reputable journal or conference proceeding? For example, any relevant paper published in a journal with an impact factor below 3 was discarded.
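The screening logic above can be summarized as a filter. The sketch below is a simplified illustration, not the tooling used in this study: the `Candidate` record and its field names are hypothetical, conference papers are assumed to have no impact factor (so the impact-factor threshold is applied to journals only), and the modality check is reduced to a single label.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    """Hypothetical record of one retrieved item; field names are illustrative."""
    title: str
    year: int
    peer_reviewed: bool
    is_review_or_chapter: bool
    pages: int
    in_english: bool
    modality: str                    # simplified: "CXR", "CT", "ultrasound", ...
    uses_deep_learning: bool         # False for purely traditional-ML papers
    considers_pneumonia: bool
    impact_factor: Optional[float]   # assumption: None for conference papers
    objectives_clear: bool = True
    method_explained: bool = True
    thoroughly_evaluated: bool = True
    limitations_stated: bool = True


def passes_quality(c: Candidate) -> bool:
    """All five quality questions must be answered 'yes'."""
    reputable_venue = c.impact_factor is None or c.impact_factor >= 3
    return all([c.objectives_clear, c.method_explained, c.thoroughly_evaluated,
                c.limitations_stated, reputable_venue])


def screen(candidates: list[Candidate]) -> list[Candidate]:
    """Apply the inclusion/exclusion criteria, keeping one copy of duplicates."""
    selected: list[Candidate] = []
    seen_titles: set[str] = set()
    for c in candidates:
        key = c.title.strip().lower()
        if key in seen_titles:  # same item returned by another database
            continue
        seen_titles.add(key)
        included = (c.peer_reviewed and 2020 <= c.year <= 2023
                    and c.modality == "CXR" and c.uses_deep_learning
                    and c.considers_pneumonia)
        excluded = (c.is_review_or_chapter or c.pages < 5
                    or not c.in_english or not passes_quality(c))
        if included and not excluded:
            selected.append(c)
    return selected
```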

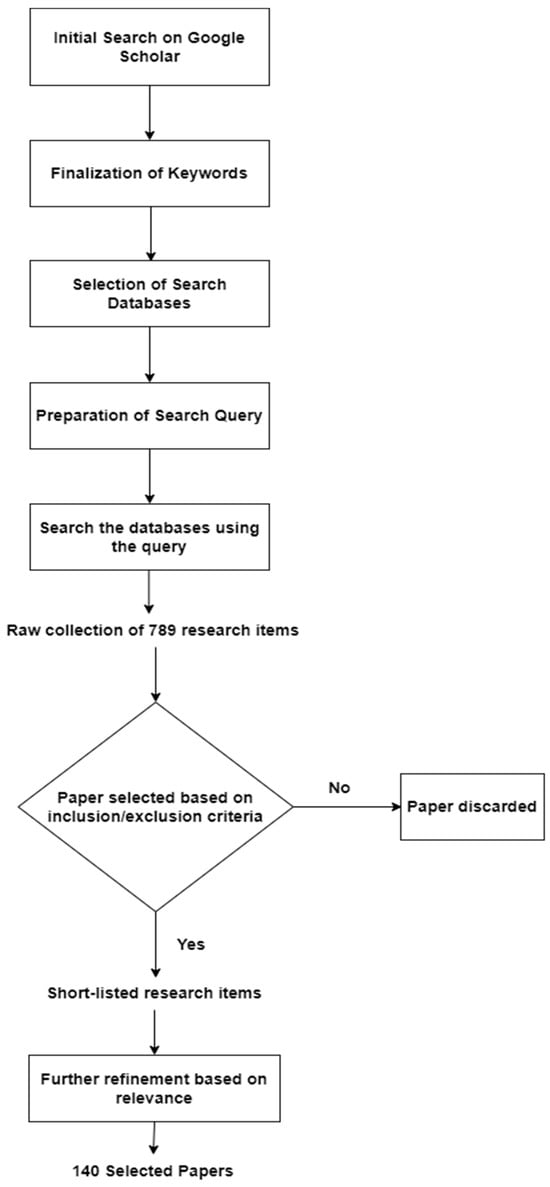

Figure 1 depicts the research paper selection process followed in this study. The first few steps, which resulted in a raw collection of 789 research items, were explained earlier in this section. This raw collection of research items was short-listed and refined by following the above inclusion/exclusion criteria, including the assessment of paper quality. Finally, a limited number of the most relevant papers from the short-listed papers were selected for in-depth review. In total, 140 research papers were selected and reviewed thoroughly to provide an updated and comprehensive picture of this research area.

Figure 1.

The research paper selection process.

A Microsoft Excel spreadsheet was created to record and maintain important information about the selected publications. This spreadsheet was updated as more and more selected publications were reviewed. The spreadsheet recorded the following information: paper title, authors, publication year, journal/conference name, keywords, publication type (possible entries: “Journal Paper” or “Conference Paper”), search database, task (possible entries: “classification”, “localization”, or “both”), pneumonia type (possible entries: “covid”, “non-covid”, or “both”), number of classes (possible entries: “binary” or “multi-class”), dataset(s), method, evaluation metrics, (best) results, and critical remarks.
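For readers who want to replicate this record keeping programmatically, a minimal sketch follows. It assumes a CSV file as a plain-text stand-in for the Excel spreadsheet; the column names mirror the fields listed above, and the `ReviewRecord` class and `to_csv` function are hypothetical. The constructor rejects values outside the permitted entries for the categorical columns.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

# Permitted entries for the categorical columns described in the text.
ALLOWED = {
    "publication_type": {"Journal Paper", "Conference Paper"},
    "task": {"classification", "localization", "both"},
    "pneumonia_type": {"covid", "non-covid", "both"},
    "num_classes": {"binary", "multi-class"},
}


@dataclass
class ReviewRecord:
    title: str
    authors: str
    year: int
    venue: str              # journal/conference name
    keywords: str
    publication_type: str
    database: str
    task: str
    pneumonia_type: str
    num_classes: str
    datasets: str
    method: str
    metrics: str
    best_results: str
    remarks: str            # critical remarks

    def __post_init__(self) -> None:
        # Validate every categorical column against its permitted entries.
        for column, allowed in ALLOWED.items():
            value = getattr(self, column)
            if value not in allowed:
                raise ValueError(f"{column}={value!r} not in {sorted(allowed)}")


def to_csv(records: list[ReviewRecord]) -> str:
    """Serialize records to CSV text with one header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ReviewRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()
```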

4. Basic Concepts

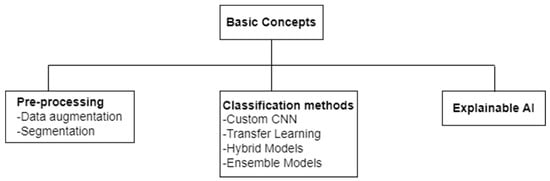

This section elaborates on some basic concepts in this research area, divided into three broad categories: pre-processing, classification methods, and explainable AI. Two pre-processing techniques, data augmentation and segmentation, are briefly overviewed in Section 4.1 and Section 4.2, respectively. The classification methods employed for deep-learning-based pneumonia detection using CXRs are divided into four broad categories, namely CNNs, transfer learning, hybrid models, and ensemble models, which are described in Section 4.3, Section 4.4, Section 4.5 and Section 4.6, respectively. Finally, explainable AI is briefly discussed in Section 4.7. Each of Sections 4.1–4.7 also lists the pneumonia detection studies that have exploited the concept it overviews. Figure 2 presents the taxonomy of the basic concepts discussed.

Figure 2.

Taxonomy of basic concepts in the area of CXR-based pneumonia detection using deep learning.

4.1. Data Augmentation

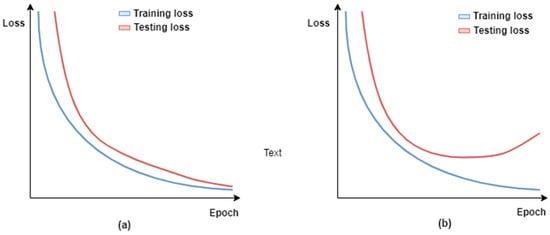

Medical image classification tasks, such as pneumonia detection using CXRs, usually suffer from limited data [44]. This may lead to model overfitting, meaning that the trained model lacks generalization ability: it performs very well on training data but poorly on test data. For a model to be useful, its test set accuracy should be high and close to its training set accuracy. Figure 3 illustrates training and validation loss curves for two scenarios: (1) the trained model is useful because the validation loss decreases along with the training loss; and (2) the trained model loses its generalization ability, i.e., it fits the training data too closely, as shown by its validation loss starting to increase during training. Figure 3a,b illustrate the first and second scenarios, respectively.

Figure 3.

Visualization of training and testing loss over training epochs for two different scenarios: (a) the trained model is useful because the validation loss decreases with the training loss; and (b) the trained model loses its generalization ability, i.e., overfitted.
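One common way to act on scenario (b) is early stopping, a technique not discussed in the text above and included here only as an illustration: training is halted once the validation loss has not improved for a number of epochs (the `patience`, an assumed parameter), and the weights from the best epoch are kept. The sketch below only identifies that epoch from a list of per-epoch validation losses; the function name `best_epoch` is hypothetical.

```python
from typing import Optional


def best_epoch(val_losses: list[float], patience: int = 3) -> Optional[int]:
    """Return the index of the lowest-validation-loss epoch once the loss has
    failed to improve for `patience` consecutive epochs (scenario (b));
    return None if that never happens (scenario (a))."""
    best_loss = float("inf")
    best_idx = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_idx = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_idx  # validation loss stopped improving after this epoch
    return None
```

For example, with the synthetic losses `[1.0, 0.7, 0.5, 0.55, 0.6, 0.7]` and the default `patience=3`, the function returns `2`, the epoch after which validation loss started to rise; with steadily decreasing losses it returns `None`.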

Several techniques can reduce overfitting, and data augmentation is one of them. Data augmentation has been extensively exploited for pneumonia detection using CXRs [11,45,46,47]. It involves artificially creating new training samples that are realistic variants of the original ones, which inflates the size of the training set and helps mitigate the problem of limited data. New training samples are generated either through data warping [48] or through synthetic over-sampling [49]. Data-warping augmentations typically generate new samples by applying geometric transformations, such as shearing, scaling, rotation, reflection, and translation, to existing images, and the newly generated images are added to the training set. Although there are other types of data-warping augmentation, such as color transformations, random erasing [50], and adversarial training [51], geometric transformations remain the most widely exploited data-warping approach for medical image classification [52]. Data warping preserves the label, so each new sample has the same label as the original sample. Data-warping transformations are often used in combination; for example, a new sample may be created by applying both geometric and color transformations to the original image.
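The label-preserving geometric transformations described above can be sketched on a small grayscale image stored as a list of rows. This is a minimal illustration rather than a production pipeline (libraries such as torchvision or Albumentations provide optimized versions); the rotation range of ±10 degrees and translation range of ±2 pixels are assumptions chosen for the example. Note that horizontal reflection mirrors anatomy such as the heart, so some CXR studies may prefer to avoid it.

```python
import math
import random

Image = list[list[float]]  # grayscale image as rows of pixel intensities


def reflect_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def translate(img: Image, dx: int, dy: int, fill: float = 0.0) -> Image:
    """Shift content right by dx and down by dy, filling exposed pixels."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            src_y, src_x = y - dy, x - dx
            if 0 <= src_y < h and 0 <= src_x < w:
                out[y][x] = img[src_y][src_x]
    return out


def rotate(img: Image, degrees: float, fill: float = 0.0) -> Image:
    """Rotate about the image centre using nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    theta = math.radians(degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: locate the source pixel for each output pixel.
            src_x = cos_t * (x - cx) + sin_t * (y - cy) + cx
            src_y = -sin_t * (x - cx) + cos_t * (y - cy) + cy
            sx, sy = round(src_x), round(src_y)
            if 0 <= sy < h and 0 <= sx < w:
                out[y][x] = img[sy][sx]
    return out


def augment(img: Image, label: str, rng: random.Random) -> tuple[Image, str]:
    """Apply a random combination of transforms; the label is left unchanged."""
    out = rotate(img, rng.uniform(-10, 10))
    out = translate(out, rng.randint(-2, 2), rng.randint(-2, 2))
    if rng.random() < 0.5:
        out = reflect_horizontal(out)
    return out, label
```

Calling `augment` repeatedly with a seeded `random.Random` yields several distinct variants of one CXR, all carrying the original label.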

In addition to the above mechanisms, generative adversarial networks (GANs) [53,54] have been exploited to augment CXR image data in various studies [37,55,56,57,58]. Jabbar et al. [59] state that the GAN is the most common unsupervised learning model in machine learning, and that GANs can generate high-quality synthetic image data at a fast rate and generally perform better than other generative models. Ideally, a properly trained GAN generates synthetic images that are difficult to distinguish from real data samples.
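For reference, the original GAN formulation trains a generator G, which maps noise z drawn from a prior p_z to synthetic images, against a discriminator D, which estimates the probability that an image is real, using the minimax objective below. The GAN-based CXR augmentation studies cited above may use variants of this objective.

```latex
\min_{G}\max_{D} V(D,G) =
  \mathbb{E}_{\mathbf{x}\sim p_{\text{data}}}\left[\log D(\mathbf{x})\right]
  + \mathbb{E}_{\mathbf{z}\sim p_{\mathbf{z}}}\left[\log\left(1 - D(G(\mathbf{z}))\right)\right]
```

D is trained to maximize V by assigning high probability to real images and low probability to generated ones, while G is trained to minimize it. At the theoretical optimum, the generator's distribution matches p_data and D outputs 1/2 for every input, which is the formal sense in which generated images become indistinguishable from real ones.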

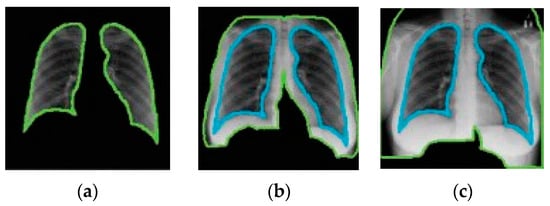

4.2. Segmentation

Like data augmentation, segmentation is a pre-processing step applied to CXR images [60]. Segmentation is typically performed to locate objects (e.g., the lungs) and boundaries (e.g., lung boundaries) in images such as CXRs [61], enabling the extraction of the desired regions of interest. Manual segmentation is tedious and time-consuming and relies on the expertise of radiologists, so DL-based segmentation models have been used to obtain the lung region in CXR images. For example, Narayanan et al. [62] used the DL-based segmentation model U-Net [63] as a pre-processing step. Through segmentation, they found that lung shape is key to differentiating between viral and bacterial pneumonia, and incorporating segmentation improved the performance of their classification model.

A number of DL-based segmentation models exist, such as fully convolutional networks (FCNs) [64], U-Net [63], and V-Net [65], of which U-Net is the most popular [60]. The following studies have exploited segmentation in CXR-based pneumonia detection: [66,67,68,69,70,71].
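As a sketch of how a segmentation output is typically used for region-of-interest extraction, the code below thresholds a per-pixel lung probability map (such as a U-Net might produce), masks out non-lung pixels, and crops the image to the lung bounding box. The 0.5 threshold and the function names are assumptions for illustration, not details taken from the cited studies.

```python
Image = list[list[float]]
Mask = list[list[int]]


def binarize(prob_map: Image, threshold: float = 0.5) -> Mask:
    """Turn per-pixel lung probabilities into a binary lung mask."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob_map]


def apply_mask(img: Image, mask: Mask) -> Image:
    """Zero out pixels that fall outside the lung mask."""
    return [[px if m else 0.0 for px, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(img, mask)]


def crop_to_mask(img: Image, mask: Mask) -> Image:
    """Crop the image to the bounding box of the mask's foreground pixels."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        raise ValueError("mask has no foreground pixels")
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    return [row[cols[0]:cols[-1] + 1] for row in img[rows[0]:rows[-1] + 1]]
```

A typical call chain would be `crop_to_mask(apply_mask(img, mask), mask)` with `mask = binarize(prob_map)`, after which the cropped region is passed to the classifier.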

4.3. Convolutional Neural Networks

CNNs are inspired by the brain’s visual cortex and are the type of DL model best suited to computer vision tasks [72] such as classification and localization. Although the concept of CNNs has existed since the 1980s, CNNs were adopted as the mainstay for computer vision tasks only after achieving remarkable, state-of-the-art results in the ILSVRC from 2012 onwards [27,38]. This relatively recent, meteoric rise is largely due to three key factors: (1) the availability of more sophisticated CNN techniques, (2) the availability of high-performance graphics processing units (GPUs), and (3) the availability of large volumes of training data [60]. Apart from producing higher accuracy, CNNs also require much less data pre-processing than traditional machine learning methods [73]. The following studies have designed and evaluated their own custom CNNs for CXR-based pneumonia detection: [71,74,75,76,77,78,79,80,81,82,83,84,85,86].

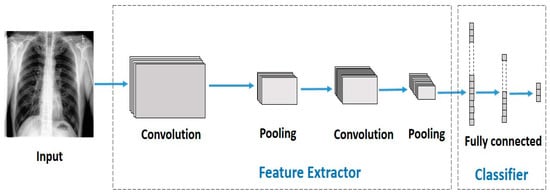

CNNs are multi-layered neural networks built by stacking layers [87]. A CNN has three main layer types: (1) convolutional layers, (2) pooling layers, and (3) fully connected layers. Figure 4 depicts a sample CNN architecture.

Figure 4.

A sample CNN architecture.
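The three layer types can be illustrated with a deliberately tiny, pure-Python forward pass: one convolutional filter, a ReLU, 2 × 2 max pooling, and a single fully connected output unit with a sigmoid, giving a binary pneumonia-versus-normal score. The kernel and weights are hypothetical placeholders; a real CNN uses many channels and filters, learns its weights through backpropagation, and is built with a framework such as PyTorch or TensorFlow.

```python
import math

Matrix = list[list[float]]


def conv2d(img: Matrix, kernel: Matrix, bias: float = 0.0) -> Matrix:
    """'Valid' 2-D cross-correlation with stride 1 (what DL libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [
        [
            sum(img[y + i][x + j] * kernel[i][j] for i in range(kh) for j in range(kw)) + bias
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; leftover rows/columns that do not fill a window are dropped."""
    return [
        [max(fmap[y + i][x + j] for i in range(size) for j in range(size))
         for x in range(0, len(fmap[0]) - size + 1, size)]
        for y in range(0, len(fmap) - size + 1, size)
    ]


def dense_sigmoid(features: list[float], weights: list[float], bias: float) -> float:
    """Fully connected layer with one output unit and a sigmoid activation."""
    z = sum(f * w for f, w in zip(features, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def tiny_cnn(img: Matrix, kernel: Matrix, weights: list[float], bias: float) -> float:
    """Convolution -> ReLU -> pooling -> flatten -> fully connected output."""
    fmap = max_pool(relu(conv2d(img, kernel)))
    flat = [v for row in fmap for v in row]
    return dense_sigmoid(flat, weights, bias)
```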

4.4. Transfer Learning

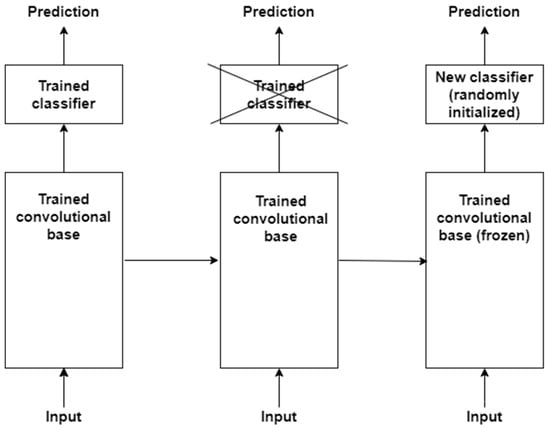

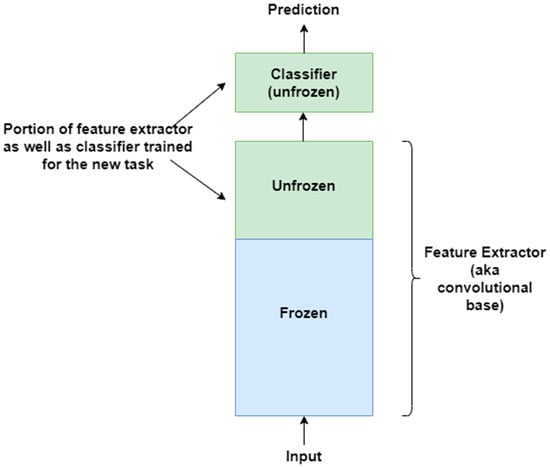

The basic idea behind transfer learning is that a model trained on a large dataset of images from one domain (e.g., natural object images) is repurposed to classify images from a different domain (e.g., CXR images) [88,89]. The target dataset is usually small, with a limited number of images [90]. Transfer learning is very effective when well-labelled data are scarce in the target domain but plentiful in some other (source) domain, even though the source data may lie in a different feature space or follow a different distribution. The generic features learned by the model during training on the large dataset are reused for the target task, so such models do not need to be trained from scratch, which saves time and resources [91]. There are two approaches to transferring knowledge from one domain to another [88]. In the first approach, a pre-trained model is treated as a feature extractor, and its weights are kept frozen [92]. The original trained classifier is removed from the top of the feature extractor and replaced by an untrained, randomly initialized classifier, which is then trained on top of the frozen architecture for the new task. This approach is depicted in Figure 5. The second approach, referred to as fine-tuning, involves unfreezing part or all of the feature extractor [45,93]. During fine-tuning, the pre-trained weights of the feature extractor serve as the starting point rather than being randomly initialized, which enables faster convergence during training. Fine-tuning is based on the idea that low-level visual features are transferable from one domain to another. In a CNN, low-level visual features are extracted by the initial layers of the feature extractor, so these layers are typically kept frozen.
The last layers of the feature extractor are unfrozen and trained for the new task along with the added classifier. This second approach to transfer learning is depicted in Figure 6. The following are some of the studies that have exploited transfer learning for CXR-based pneumonia detection: [45,47,79,88,91,92,94,95,96,97,98,99,100,101,102].

Figure 5.

First approach to performing transfer learning is based on training a new classifier on top of a frozen feature extractor, i.e., convolutional base.

Figure 6.

Second approach to transfer learning involves fine-tuning the unfrozen portion of the feature extractor as well as classifier for the new task.
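The difference between the two approaches lies in which layers are trainable. The sketch below models only that freezing pattern with hypothetical `Layer` and `Model` records (weights are omitted); in real frameworks the same effect is typically obtained by setting `layer.trainable = False` in Keras or `requires_grad = False` on parameters in PyTorch.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    name: str
    trainable: bool = True  # weights are omitted in this sketch


@dataclass
class Model:
    base: list[Layer]                                 # convolutional base (feature extractor)
    head: list[Layer] = field(default_factory=list)   # classifier on top


def as_feature_extractor(pretrained: Model, new_head: list[str]) -> Model:
    """Approach 1: freeze every base layer, drop the old classifier, add a new one."""
    frozen_base = [Layer(layer.name, trainable=False) for layer in pretrained.base]
    return Model(base=frozen_base, head=[Layer(name) for name in new_head])


def fine_tune_last(model: Model, n: int) -> Model:
    """Approach 2: keep early (low-level) layers frozen, unfreeze the last n base layers."""
    cutoff = len(model.base) - n
    base = [Layer(layer.name, trainable=i >= cutoff) for i, layer in enumerate(model.base)]
    return Model(base=base, head=model.head)


def trainable_layers(model: Model) -> list[str]:
    """Names of the layers whose weights would be updated during training."""
    return [layer.name for layer in model.base + model.head if layer.trainable]
```

With a four-layer base, `fine_tune_last(as_feature_extractor(pretrained, ["fc_new"]), 2)` leaves the first two convolutional layers frozen and trains the last two together with the new classifier.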

4.5. Hybrid Deep Learning Models

Hybrid models fuse different neural network architectures and machine learning algorithms into a single architecture [103], aiming to solve challenging problems by combining the strengths of the various approaches. Fundamentally, hybrid models try to overcome the limitations of a standalone deep neural network by combining it with other neural network architectures and/or machine learning techniques. The choice of constituent architectures and techniques in a hybrid model is driven by considerations such as performance, efficiency, and interpretability. The following studies have exploited hybrid models for CXR-based pneumonia detection: [11,104,105,106,107,108].

4.6. Ensemble Deep Learning Models

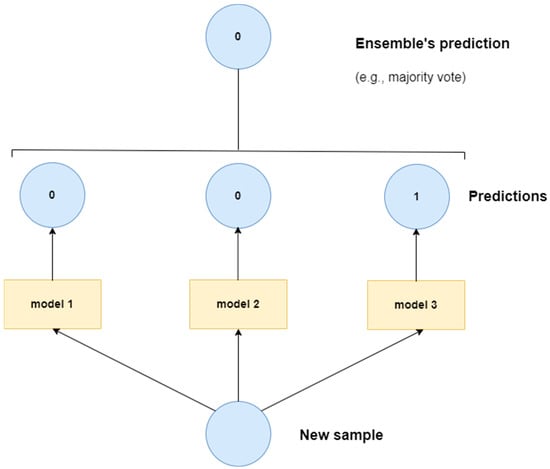

Ensemble models in deep learning combine multiple machine learning models, often neural networks, to improve overall performance. By combining the strengths of different models, ensemble methods can generate more accurate and robust predictions. The individual models work independently, each producing its own classification output, and these outputs are combined through a voting scheme, e.g., a majority vote, to produce the final classification. The core idea is that combining or averaging the predictions of multiple models tends to yield better performance than any individual model on its own. Figure 7 depicts a simplified diagram of a sample ensemble model. The following studies have exploited some form of ensemble model for CXR-based pneumonia detection: [109,110,111,112,113,114,115].

Figure 7.

Sample ensemble model.
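The voting step can be sketched as follows. `majority_vote` implements the hard (majority) voting mentioned above; `soft_vote`, which averages the models' class probabilities, is a common alternative included for comparison. Both functions and the class names used with them are illustrative assumptions.

```python
from collections import Counter


def majority_vote(predictions: list[str]) -> str:
    """Hard voting: the most frequent label wins; ties go to the label seen first."""
    counts = Counter(predictions)
    top = max(counts.values())
    return next(label for label in predictions if counts[label] == top)


def soft_vote(probabilities: list[dict[str, float]]) -> str:
    """Soft voting: average each class's probability across models, pick the largest."""
    classes = list(probabilities[0])
    mean = {c: sum(p[c] for p in probabilities) / len(probabilities) for c in classes}
    return max(mean, key=mean.get)
```

For three models predicting `["pneumonia", "normal", "pneumonia"]`, `majority_vote` returns `"pneumonia"`. Soft voting can differ from hard voting when one model is very confident, which is one reason studies choose between the two.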

4.7. Explainable Artificial Intelligence (XAI)

Deep learning methods have shown great promise for pneumonia detection using CXRs. However, medical experts and physicians still lack confidence in them, primarily because of their black-box nature [116]. Since human lives are at stake, numerous issues need to be addressed [117]: Can the reasons for a correct diagnosis be explained? How can these methods be exploited for further system improvement? Who is responsible if things go wrong? XAI is a research field that aims to address these issues by making DL systems more transparent and understandable to humans [118]. XAI has been extensively exploited in DL-based pneumonia detection using CXRs [8,47,71,75,78,80,88,92,119,120,121,122,123,124,125,126]. Despite this, a recent study [127] has argued that the explanations produced by XAI methods are either unreliable or offer only superficial explanations for individual cases, concluding that XAI explanations are often misleading with respect to individual decisions.

5. Datasets

Medical imaging datasets have become the foundation of contemporary diagnostic research: they are the building blocks upon which machine learning and deep learning algorithms for pneumonia and COVID-19 diagnosis are created and tested. This study explores a wide range of publicly accessible CXR image datasets, around 10 in total, which are essential for developing and validating algorithms for the detection of COVID-19 and pneumonia and which serve as the benchmarks against which the algorithms in the reviewed publications are assessed. Some of these datasets are devoted specifically to COVID-19 and contain only CXR images associated with the virus, while others contain a more varied selection of images, including those of healthy patients and CXRs showing manifestations of viral or bacterial pneumonia. The use of such a varied group of datasets reflects the research community’s overarching goal of developing flexible algorithms capable of detecting pneumonia across a variety of radiographic images. As part of our analysis of key datasets, Table 2 also provides quick download links, making it easier for researchers to access the data and promoting further investigation in this important area.

Table 2.

Some common publicly available CXR image datasets used for COVID and non-COVID pneumonia detection.

Table 2 summarizes several public CXR datasets commonly used for pneumonia detection and COVID-19 diagnosis, listing for each dataset the number of images, the number of classes, and the specific classes it contains, together with references to studies that have used it. Each dataset has distinct characteristics, and together they support the creation and validation of deep learning models that improve the accuracy and efficiency of CXR analysis and enable the early detection of pneumonia, including COVID-19 pneumonia, which is critical in clinical settings. The table is intended to help researchers and practitioners in medical image analysis choose the dataset best suited to their research aims and computational requirements.

6. Key Statistics

In this review, we explored a total of 262 studies that utilized deep learning architectures to detect pneumonia, covering COVID pneumonia, non-COVID pneumonia, or both. Our research focused on convolutional neural networks (CNNs), including custom CNNs, transfer learning, ensemble models, and hybrid models. We also examined the use of generative adversarial networks (GANs), explainable AI, and vision transformers, among other techniques. In addition to these models, we analyzed multiple datasets for pneumonia identification from X-ray images and consulted various survey papers to gain a thorough understanding of the current landscape.

Specifically, 140 papers on non-COVID and COVID pneumonia were reviewed in this study. As Table 3 shows, 70 of these 140 papers address both COVID and non-COVID pneumonia, 48 consider only COVID pneumonia, and 22 consider only non-COVID pneumonia. The greater recent focus on COVID pneumonia is unsurprising given the COVID-19 pandemic.

Table 3.

Number of studies for each of the two pneumonia types.

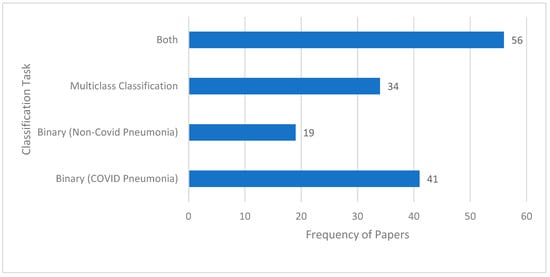

Another aspect of the reviewed research studies is that they are based on a binary classification task, on multiclass classification, or on both. In the context of this paper, binary classification involves classifying a CXR image into one of the two mutually exclusive classes, e.g., “pneumonia” class or “normal” class. Multiclass classification, in contrast, classifies a CXR image into a class out of more than two possible classes. An example of multiclass classification is a “normal” vs. “viral” vs. “bacterial” vs. “COVID” classification task. Figure 8 illustrates the frequency of papers focusing on each of these classification tasks. It can be seen that there are many studies that consider both classification tasks. Examples of binary and multiclass classification tasks are given in Table 4.

Figure 8.

Frequency of papers for the two types of classification tasks.

Table 4.

Examples of binary and multiclass classification tasks.

To give a detailed overview of the methods used to diagnose pneumonia from chest X-ray (CXR) images, we grouped the techniques into four key categories, which illustrate the expanding landscape of diagnostic approaches.

The most common technique in the reviewed studies is the use of custom-designed convolutional neural networks (CNNs) [71,74,77,80,81,83,85]. These customized CNNs show promise in capturing subtle patterns in CXR images for accurate pneumonia detection. Transfer learning follows closely [94,95,96,99,100,101]. It applies models pre-trained on large datasets to the specific problem of pneumonia identification, reusing knowledge learned on a different task and thereby reducing the data and training effort required.
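To make the transfer-learning idea concrete, the following minimal NumPy sketch keeps a "pre-trained" feature extractor frozen and trains only a new classification head. It is an illustration under stated assumptions, not the method of any cited study: the frozen backbone `W_FROZEN` is a hypothetical stand-in (a fixed random projection plus ReLU) for an ImageNet-trained CNN, and the data are synthetic.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pre-trained backbone: a fixed random projection
# plus ReLU. In practice this would be, e.g., an ImageNet-trained CNN whose
# weights are frozen.
W_FROZEN = rng.normal(size=(64, 32))

def frozen_features(x):
    """Map flattened images (n, 64) to features (n, 32); W_FROZEN is never updated."""
    return np.maximum(x @ W_FROZEN, 0.0)

def train_head(feats, y, lr=0.1, epochs=500):
    """Fit only a new logistic-regression head (the task-specific layer)."""
    w, b = np.zeros(feats.shape[1]), 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(feats @ w + b)))
        err = p - y                        # d(binary cross-entropy)/d(logit)
        w -= lr * feats.T @ err / len(y)
        b -= lr * err.mean()
    return w, b

# Synthetic toy data: "pneumonia" (1) images are brighter on average.
X = np.vstack([rng.normal(0.0, 1.0, (100, 64)), rng.normal(1.0, 1.0, (100, 64))])
y = np.concatenate([np.zeros(100), np.ones(100)])

F = frozen_features(X)
F = (F - F.mean(axis=0)) / (F.std(axis=0) + 1e-8)   # standardize features for the head
w, b = train_head(F, y)
accuracy = float(((F @ w + b > 0) == y).mean())
print(f"training accuracy of the new head: {accuracy:.2f}")
```

In a real pipeline, some backbone layers are often also fine-tuned with a small learning rate once the new head has converged.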

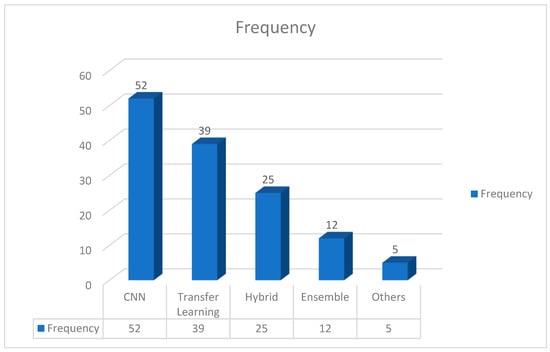

In addition to these main methods, other studies [11,46,108,185,186] investigate hybrid models. Hybrid models combine multiple distinct models to achieve better classification results; the constituent models collaborate on decision making by feeding their outputs into one another, resulting in a synergistic effect. Ensemble approaches [119,128,184] offer another route to pneumonia diagnosis. Unlike hybrid models, ensemble models consist of independent constituent models: each model generates its own classification output, and the final diagnosis is determined by a voting scheme. This strategy exploits the diversity of the constituent models to produce more robust and dependable predictions.

Figure 9 depicts the distribution of these approaches in the reviewed studies. Custom-designed CNNs are the most common technique, with a frequency of 52, reflecting their broad use and efficacy in capturing subtle patterns within CXR images. Transfer learning follows closely with a frequency of 39, indicating the popularity of adapting pre-trained models for pneumonia diagnosis. Hybrid models are used 25 times, showing growing interest in synergistic model combinations, and ensemble methods, which combine independent constituent models through voting, are used 12 times. An “Others” category with a frequency of five covers less frequent or alternative procedures, suggesting that the field is still exploring new approaches. Overall, this quantitative analysis sheds light on current trends and research priorities in pneumonia detection techniques.
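The difference between hybrid and ensemble models lies in how the constituent models are wired together. The sketch below uses hypothetical, hand-written toy "models" (thresholds on two made-up image statistics, `opacity` and `texture`) purely to show the two wirings: in the hybrid, one model's output is the next model's input; in the ensemble, independent models each predict and then vote.

```python
from collections import Counter

def feature_extractor(image_stats):
    """Hybrid stage 1 (hypothetical): turn raw inputs into an intermediate representation."""
    return [image_stats["opacity"], image_stats["texture"]]

def downstream_classifier(features):
    """Hybrid stage 2 (hypothetical): consumes stage 1's output, like an SVM on CNN features."""
    return "pneumonia" if 0.7 * features[0] + 0.3 * features[1] > 0.5 else "normal"

def hybrid_predict(image_stats):
    # Hybrid: models are chained, so stage 2 depends on stage 1's output.
    return downstream_classifier(feature_extractor(image_stats))

def ensemble_predict(image_stats, models):
    # Ensemble: each model predicts independently; the majority label wins.
    votes = [model(image_stats) for model in models]
    return Counter(votes).most_common(1)[0][0]

def model_a(image_stats):
    return "pneumonia" if image_stats["opacity"] > 0.6 else "normal"

def model_b(image_stats):
    return "pneumonia" if image_stats["texture"] > 0.4 else "normal"

model_c = hybrid_predict          # any classifier, even a hybrid, can be an ensemble member

case = {"opacity": 0.65, "texture": 0.3}
print(hybrid_predict(case))                                # pneumonia
print(ensemble_predict(case, [model_a, model_b, model_c]))  # pneumonia (2 votes to 1)
```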

Figure 9.

Various methods employed for pneumonia detection using CXR images.

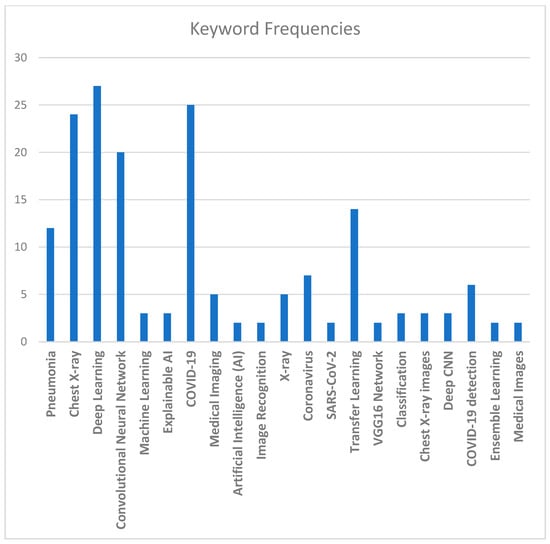

Another aspect used to evaluate the studies statistically is the set of commonly occurring keywords in the relevant literature and their relative frequencies, shown in Figure 10. These keywords can guide researchers when searching the related literature. The most commonly used keywords, in descending order of frequency, are “Deep Learning”, “COVID-19”, “Chest X-ray”, “Convolutional Neural Network”, “Transfer Learning”, and “Pneumonia”.

Figure 10.

Common keywords and their relative frequencies.

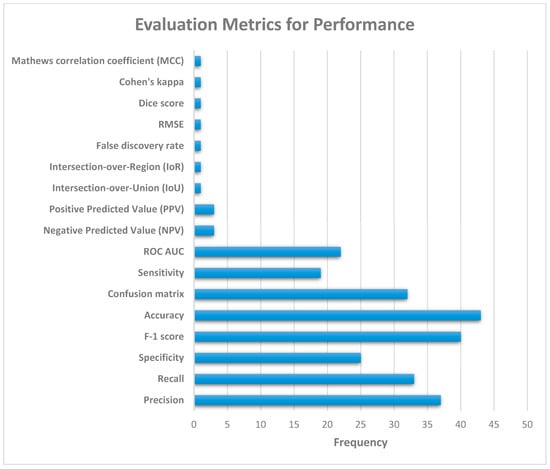

Figure 11 illustrates the evaluation metrics used to assess the performance of pneumonia detection systems. The most commonly used metrics, in order of their relative usage, are accuracy, F1 score, precision, recall, confusion matrix, specificity, ROC AUC, and sensitivity. Note that recall and sensitivity denote the same quantity (the true positive rate); they are counted separately here because studies report it under both names.
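Most of these metrics derive from the same 2 × 2 confusion matrix. The following sketch computes them for a hypothetical binary task (1 = pneumonia, 0 = normal) on toy labels; it is illustrative only, not the evaluation code of any reviewed study.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Common metrics derived from a 2 x 2 confusion matrix (positive class = pneumonia)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    def safe_div(a, b):
        return a / b if b else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)             # recall and sensitivity are the same quantity
    specificity = safe_div(tn, tn + fp)
    f1 = safe_div(2 * precision * recall, precision + recall)
    return {
        "confusion_matrix": [[tn, fp], [fn, tp]],  # rows: true class, columns: predicted class
        "accuracy": safe_div(tp + tn, len(pairs)),
        "precision": precision,
        "recall": recall,
        "sensitivity": recall,
        "specificity": specificity,
        "f1": f1,
    }

# Toy example: 4 pneumonia (1) and 6 normal (0) images.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
m = binary_metrics(y_true, y_pred)
print(m["confusion_matrix"], m["accuracy"], m["f1"])  # [[5, 1], [1, 3]] 0.8 0.75
```

On the same toy labels, a model that predicts "normal" for every image still reaches 0.6 accuracy with zero recall, which is why accuracy alone can be misleading on imbalanced data.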

Figure 11.

Performance evaluation metrics for pneumonia detection systems.

In summary, this analysis of studies on pneumonia diagnosis, specifically on discriminating between COVID and non-COVID pneumonia, covered various deep learning architectures. The investigation focused on convolutional neural networks (CNNs), including customized CNNs, transfer learning, ensemble models, and hybrid models, and also considered generative adversarial networks (GANs), explainable AI, and vision transformers. To obtain a comprehensive picture of the existing landscape, we combined information from different X-ray pneumonia datasets and from survey studies. The review of 140 papers on non-COVID and COVID pneumonia found a growing emphasis on COVID pneumonia, likely driven by the pandemic. We also examined classification tasks, distinguishing between binary and multiclass classification and providing examples. A statistical review of frequent keywords in the literature can further help researchers find relevant studies. Finally, we covered the assessment measures used to evaluate pneumonia detection systems, including accuracy, F1 score, precision, recall, confusion matrix, specificity, ROC AUC, and sensitivity. The figures in this section illustrate the distributions and patterns found in the examined studies, providing insight into the methods and evaluation criteria that shape current pneumonia detection research.

7. Current Trends

The detection of pneumonia from chest radiographs (X-rays), and specifically the distinction between COVID and non-COVID pneumonia, is a growing area of research. Deep learning approaches have emerged as critical tools for improving the precision and efficiency of diagnostic processes.

Convolutional neural networks (CNNs) are the foundation of this landscape and are valued for their ability to detect subtle patterns in images. Their application to visual data such as chest X-rays has proven useful for identifying pneumonia: CNN-based architectures extract essential information from radiographic images, allowing the detection of subtle findings suggestive of different pneumonia types, including those linked with COVID-19. Transfer learning has also emerged as a strong paradigm, using models pre-trained on large datasets such as ImageNet. By fine-tuning these models on pneumonia-specific datasets, researchers reuse previously learned features, reducing computational costs and data requirements; this can considerably improve a model's ability to generalize and to classify pneumonia cases. Ensemble learning methods strengthen this toolkit further by combining the outputs of several models or architectures. Such combinations can improve classification accuracy by drawing on the complementary capabilities of multiple models, increasing precision and robustness in pneumonia classification, including the distinction between COVID and non-COVID cases.

Hybrid techniques complement these approaches by combining disparate methods, or by combining chest radiographs with other data sources such as clinical records or laboratory findings. Combining multiple sources of information aims to increase diagnostic accuracy, make diagnostic systems more comprehensive and dependable, and provide a more holistic view of pneumonia, allowing COVID and non-COVID pneumonia patients to be categorized accurately. These developments have the potential to advance the diagnosis of respiratory illness and, ultimately, to improve patient care and treatment.

Research in this field focuses not only on accurately discriminating between COVID and non-COVID pneumonia but also on tackling issues such as data scarcity, class imbalance, and the interpretability of deep learning models in medical settings. Advances in deep learning, together with the availability of larger and more diverse datasets, are expected to improve the accuracy and reliability of pneumonia detection systems, contributing substantially to the diagnosis and treatment of respiratory infections. Table 5 presents current trends and some of the latest research methods for pneumonia detection and classification.

Table 5 summarizes the reported outcomes by methodology. Studies using specialized convolutional neural networks report good accuracies; for example, the authors of [187,188] used specialized network topologies, a DCNN and an enhanced CNN+ResNet-50, to achieve accuracies of 96.09% and 92.4%, respectively. They emphasized the importance of preprocessing techniques, such as intensity normalization, and of specific network designs in achieving acceptable accuracies. Transfer learning architectures such as VGG-19, CDC_Net, and DenseNet201 were used in [143,189,190] to achieve high accuracies ranging from 96.48% to 99.39% on multiclass tasks, demonstrating the value of pre-trained models for feature extraction and classification. Ensemble learning approaches were employed in [133,191,192,193]. These studies combined several models or techniques, including convolutional networks, self-attention mechanisms, EfficientNet, and stacked ensembles, and achieved accuracies ranging from 98% to 99.21%, relying on collective knowledge or on the selection of optimal features to increase performance. Studies [193,194] used hybrid algorithms that combined transfer learning and deep learning (C+EffxNet and an AlexNet-based model) and achieved accuracies of 99.2% and 97.9%, respectively, while reducing model complexity and improving decision support through feature merging. Finally, transformer-based architectures [163,195] achieved accuracies of 94.96% and 99.13%, respectively, with an emphasis on reducing computational complexity and increasing accuracy.

Table 5.

Current trends in pneumonia detection.

| Ref./Year | COVID/Non-COVID Pneumonia | Binary/Multiclass Classification | Methodology | Results (Accuracy) | Contribution | Research Gap |
|---|---|---|---|---|---|---|
| [189] 2023 | Both | Multiclass Classification | Transfer Learning, VGG-19+CNN | 96.48% | Good accuracy | Powerful segmentation models for precise ROI identification are required. |
| [194] 2023 | Non-COVID Pneumonia | Binary Classification | Hybrid technique | 97.9% | Simplified the model by reducing advanced feature extraction. | Unbalanced data distribution |
| [143] 2023 | Both | Multiclass Classification | CDC_Net | 99.39% | Structured noise reduced | NA |
| [191] 2023 | Non-COVID Pneumonia | Binary Classification | Ensemble CNN+Transformer Encoder | 99.21% | Self-attention mechanism provided more accurate results | Annotated text data required |
| [195] 2023 | COVID Pneumonia | Binary Classification | Multi-level self-attention mechanism Transformer | 99.13% | Reduced computing complexity to increase the efficiency of the recognition process. | Multiclass classification model could be enhanced |
| [187] 2023 | Non-COVID Pneumonia | Binary Classification | DCNN | 96.09% | Impactful preprocessing techniques | NA |
| [190] 2023 | Both | Multiclass Classification | DenseNet201 | 99.1% | DenseNet provides collective knowledge | NA |
| [192] 2023 | Both | Multiclass Classification | Ensemble Learning (EfficientNet) | 98% | NA | Attention-based feature fusion may reduce complexity |
| [188] 2023 | Non-COVID Pneumonia | Binary Classification | Enhanced CNN+ResNet-50 | 92.4% | NA | NA |
| [193] 2023 | Non-COVID Pneumonia | Multiclass Classification | Hybrid deep learning model (C+EffxNet) | 99.2% | The application of feature merging improved the decision support system. | To better predict chest infections, more classes can be included |
| [133] 2023 | Non-COVID Pneumonia | Multiclass Classification | Stacked ensemble learning | 98.3% | Reduced features are passed to the stacking classifier. | Preprocessing and reinforcement learning can improve results |
| [163] 2023 | Both | Multiclass Classification | Vision Transformer (PneuNet) | 94.96% | Binary pneumonia classification model achieved 99.29% accuracy | Channel-wise transformer encoder can enhance results |

Considering Table 5, the research gaps identified by each study point to critical areas for advancement, such as refining segmentation, addressing data imbalance, reducing noise, enhancing interpretability, improving computational efficiency, and exploring preprocessing and feature extraction techniques for more robust and reliable pneumonia detection models. The study in [189] achieved high accuracy in diagnosing six types of COVID and non-COVID pneumonia but identified an important research gap: the need for more robust segmentation models that make it easier to identify regions of interest (ROIs) on chest radiographs. With better segmentation, a model could target affected areas more precisely, refining the classification process for greater precision and dependability. The study in [194] highlighted data imbalance in pneumonia datasets and suggested using generative adversarial networks (GANs) to balance the representation of classes, particularly those with few instances. It also recommended investigating capsule networks because of their ability to perform well with less training data, pointing to a research gap in approaches that require fewer data while maintaining robust performance in pneumonia classification. Despite achieving outstanding accuracy and controlling the impact of structured noise, the study in [143] stressed the importance of further refining segmentation models to improve the identification and delineation of abnormal features in chest radiographs; further reducing the negative impact of structured noise also remains a key area for more noise-resilient classification models.
The study in [191] underlined the importance of improving interpretability while achieving high accuracy with a self-attention technique. Its authors suggest including textual explanations alongside visual heatmaps once annotated text datasets become available, and they emphasized improving feature extraction to enhance both interpretability and performance. The study in [187] indicated the need for a broader investigation of preprocessing techniques. Finally, the study in [163] raised interpretability concerns despite achieving high accuracy in binary pneumonia classification: it highlighted the black-box character of the proposed PneuNet model and the need for more representative feature extraction methods, and it recommended improving the channel-wise transformer encoder within the architecture to enhance interpretability and feature representation.

The findings of numerous pneumonia classification studies highlight the importance of transformer designs for improving the accuracy and efficiency of detection models. Several studies have used transformer models, or recommended their use, across different techniques and classification tasks (binary and multiclass). The salient points regarding the application of transformer models to pneumonia detection in CXR images are as follows:

- Transformer-based designs have been successfully employed in both multiclass and binary classification settings. Among the transformer-based entries in Table 5, models equipped with self-attention mechanisms report accuracies ranging from 94.96% [163] to 99.21% [191].

- Some studies report that transformer designs reduce computational complexity without sacrificing accuracy [195], enabling efficient recognition, which is critical in medical applications that require prompt diagnosis.

- Despite their usefulness, transformer models have raised interpretability difficulties in some studies. There is a clear need to improve feature extraction approaches within transformer designs to enhance interpretability and feature representation.

- Transformers have demonstrated adaptability and resilience, having been deployed successfully for both COVID and non-COVID pneumonia detection across a range of classification tasks.
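Self-attention is the mechanism behind these transformer results. The sketch below implements single-head scaled dot-product self-attention in NumPy, with random matrices standing in for learned query, key, and value projections and illustrative sizes (196 patch tokens of dimension 64); it is a simplified illustration, not the architecture of [163], [191], or [195].

```python
import numpy as np

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    tokens: (n, d_model); w_q, w_k, w_v: (d_model, d_k).
    Every output token is a weighted mix of *all* input tokens, which is how
    attention relates distant image regions (patches) to one another.
    """
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])          # (n, n) pairwise similarities
    scores -= scores.max(axis=-1, keepdims=True)     # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)   # softmax over keys
    return weights @ v, weights

rng = np.random.default_rng(0)
n_patches, d_model, d_k = 196, 64, 32   # e.g., 14 x 14 patches; sizes are illustrative
x = rng.normal(size=(n_patches, d_model))
out, attn = self_attention(x, *(rng.normal(size=(d_model, d_k)) for _ in range(3)))
print(out.shape, attn.shape)   # (196, 32) (196, 196)
```

The (n, n) weight matrix also shows that the cost of standard self-attention grows quadratically with the number of patches, so efficiency claims depend on the specific transformer design.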

We propose that vision transformers (ViTs) are the most suitable method for future work on pneumonia detection using CXR images. ViTs have shown exceptional potential in a variety of image-processing tasks, including natural image classification, and have performed competitively against convolutional neural networks (CNNs), which have long been the go-to architecture for image processing.

ViTs have the following advantages for X-ray image analysis:

- Handling Global Information: ViTs capture global relationships within an image. This understanding of context and of the relationships between different regions may be especially important in medical imaging, in which the context of an anomaly in an X-ray may be critical for diagnosis;

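- Fewer Parameters: Because ViTs process images through patch embeddings and self-attention rather than deep stacks of convolutions, compact ViT variants may require fewer parameters than typical CNNs, making them more efficient; this is not universal, however, since the standard ViT-Base model (about 86 million parameters) is larger than ResNet-50 (about 25 million);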

- Transfer Learning: ViTs have demonstrated promise in transfer learning. Pre-trained ViT models developed on large-scale datasets can be fine-tuned on smaller medical datasets, which is especially useful when labeled medical data are limited.
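A ViT first turns an image into a sequence of patch tokens, which is what allows self-attention to relate any region of a CXR to any other. The sketch below assumes a 224 × 224 single-channel CXR, 16 × 16 patches, and a 768-dimensional embedding (the sizes of the standard ViT-Base configuration); the random patch embedding, class token, and position embeddings are hypothetical stand-ins for learned parameters.

```python
import numpy as np

def patchify(image, patch=16):
    """Split an (H, W) grayscale image into flattened, non-overlapping patches."""
    h, w = image.shape
    assert h % patch == 0 and w % patch == 0, "image size must be a multiple of the patch size"
    return (image.reshape(h // patch, patch, w // patch, patch)
                 .transpose(0, 2, 1, 3)          # group pixels by (patch row, patch col)
                 .reshape(-1, patch * patch))    # one row per patch, row-major order

rng = np.random.default_rng(0)
cxr = rng.random((224, 224))                         # stand-in for a resized CXR
patches = patchify(cxr)                              # 14 x 14 = 196 patches of 256 pixels
w_embed = rng.normal(scale=0.02, size=(256, 768))    # linear patch embedding (learned in practice)
cls_token = np.zeros((1, 768))                       # [CLS] token (learned in practice)
pos = rng.normal(scale=0.02, size=(197, 768))        # position embeddings (learned in practice)
tokens = np.vstack([cls_token, patches @ w_embed]) + pos
print(patches.shape, tokens.shape)   # (196, 256) (197, 768)
```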

However, there are challenges as well, which can provide a clear future direction to address theoretical gaps:

- Data Efficiency: ViTs frequently require significant amounts of data for training, and collecting labeled datasets in medical imaging can be difficult due to privacy concerns and data scarcity;

- Computational Needs: Training ViTs can be computationally demanding, necessitating significant resources and effort;

- Interpretability: Understanding why a ViT makes a certain decision may be more difficult than with typical CNNs, which is a concern in critical applications such as medical diagnosis.

Transformers are emerging as the most promising option for future pneumonia detection for several compelling reasons. First, their ability to achieve high accuracies, from 94.96% to 99.13% [163,195], shows that they are effective across a variety of classification tasks. Transformers excel at capturing complex global dependencies within images, which is crucial for detecting subtle irregularities in chest X-rays that traditional CNNs may miss. In addition, the transformer in [195] was reported to reduce computational complexity by means of a multi-level self-attention mechanism, increasing the efficiency of the recognition process. Such efficiency is critical in medical applications in which speedy and precise analysis is required.

Furthermore, transformers are highly effective in transfer learning scenarios. Models pre-trained on large datasets such as ImageNet can be fine-tuned on smaller, more specialized medical datasets, which is especially useful given the scarcity of annotated medical images. This ability to adapt and generalize from large-scale pre-training to specific medical tasks helps overcome the prevalent problem of data scarcity in medical imaging [163].

Interpretability and data efficiency remain challenges, but both are being actively improved. Transformers can also be integrated into hybrid models or combined with approaches such as transfer learning and ensemble learning, which increases their robustness and versatility. These characteristics make transformers an excellent choice for further research and development, promising improvements in both diagnostic accuracy and operational efficiency for pneumonia detection.

8. Discussion

This section discusses the key issues and challenges faced in the area of CXR-based pneumonia detection using deep learning.

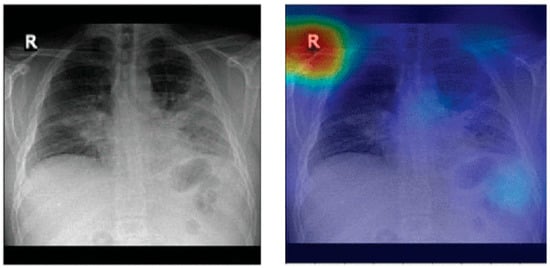

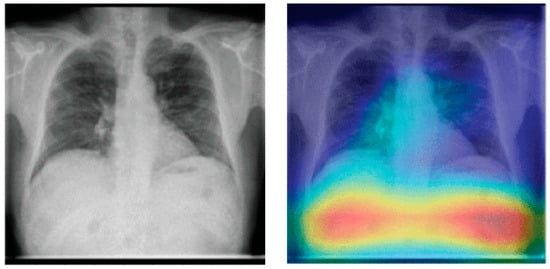

8.1. Biased Datasets

It has been shown that pneumonia detection models tend to focus on irrelevant features when classifying CXR images. A detailed bias analysis is presented in [121]. The classification network used in that study was VGG16 [196], pre-trained on the ImageNet dataset [197] and then fine-tuned on domain data via transfer learning. Figure 12 shows CXR images that were fed to the model, together with the corresponding heatmaps generated using Grad-CAM [198], which highlight the image areas most critical to the model's prediction. In both heatmaps in Figure 12, the most highly activated regions (shown in red) lie outside the boundaries of the lung tissue, indicating that features of the lung tissue were not used in the classification decision. The study [121] attributes this behavior to inherent biases in the publicly available pneumonia and COVID-19 CXR image datasets [31,34,158].

Figure 12.

Lung heatmaps generated through Grad-CAM (all images taken from [121]).
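Grad-CAM [198] weights each feature map A^k of a convolutional layer by the spatial average of the class score's gradient, α_k = mean(∂y^c/∂A^k), and keeps the positive part of the weighted sum ReLU(Σ_k α_k A^k). The NumPy sketch below assumes that the activations and gradients have already been extracted (in practice via framework hooks on, e.g., the last convolutional layer of VGG16); the toy arrays are hypothetical.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM map for one convolutional layer.

    activations: (K, H, W) feature maps A^k for one input image.
    gradients:   (K, H, W) gradients of the class score y^c w.r.t. A^k.
    Returns an (H, W) map scaled to [0, 1]; in practice it is upsampled to the
    input size and overlaid on the CXR.
    """
    alpha = gradients.mean(axis=(1, 2))               # global-average-pooled gradients
    cam = np.tensordot(alpha, activations, axes=1)    # sum_k alpha_k * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                        # ReLU keeps positive evidence only
    return cam / cam.max() if cam.max() > 0 else cam

# Toy layer with two channels: channel 0 supports the class, channel 1 opposes it.
acts = np.zeros((2, 4, 4))
acts[0, 0, 0] = 1.0      # channel 0 fires in the top-left corner
acts[1, 3, 3] = 1.0      # channel 1 fires in the bottom-right corner
grads = np.stack([np.full((4, 4), 0.5), np.full((4, 4), -0.5)])
heat = grad_cam(acts, grads)
peak = tuple(int(i) for i in np.unravel_index(heat.argmax(), heat.shape))
print(peak)   # (0, 0)
```

If the peak of such a map falls outside the lung mask, as in Figure 12, the model is relying on non-lung content.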

These dataset biases can arise for a variety of reasons. For example, one CXR machine may be used for a group of subjects with a low likelihood of disease and a separate machine for subjects with a high likelihood of disease. This can happen when patients strongly suspected of having a contagious disease, such as COVID-19, are sent to another area of the hospital, or even to another healthcare center, for CXR scanning to avoid spreading the disease. If one of the two machines places a white rectangular box in the top-right corner of every CXR image and the other does not, the model may learn to classify images based on this simple feature rather than on true class features.

Another possible scenario involves an imbalanced dataset, i.e., one with too few images of one class and many more of another, e.g., too few COVID-19 CXR images and many more normal CXR images. To balance such a dataset, COVID-19 samples are often drawn from different data sources, each likely based on different equipment and acquisition protocols. The samples of the two classes then come from different sources, so the resulting dataset is inherently biased, and any classifier trained on it may learn to classify CXR images based on source-specific features rather than true class features.

In addition to acquisition-site-specific features, the demographic traits of the subjects can be significant confounding factors [199]. For instance, datasets that mix adult COVID-19 patients with non-COVID-19 controls from the Guangzhou pediatric dataset (ages 1–5 years) [1] can produce models that erroneously link age-related anatomical features to the diagnosis.

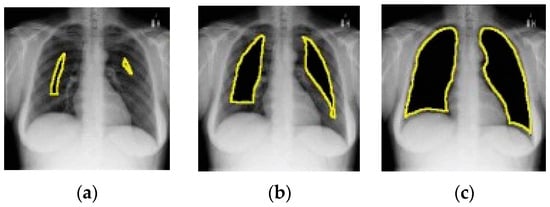

Catalá et al. [121] experimented with background expansion and lung occlusion in CXR images. In background expansion, background is gradually added to images that initially contain only the lungs; Figure 13 shows an example. Ideally, the ROC AUC should not be affected by background expansion. However, the empirical results show a significant increase in ROC AUC as the background is expanded in two of the three datasets used, meaning that features outside the lung area often play a critical role in correct predictions.

Figure 13.

Example of background expansion (all images taken from [121]). From (a–c) the background is gradually expanded.
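The masking behind such experiments can be sketched with a lung mask. The exact expansion scheme of [121] is not reproduced here: we assume, for illustration, that the background grows as a band of `margin` pixels around the lung mask (a 4-connected binary dilation), and that full lung occlusion simply replaces every lung pixel. The one-pixel "lung" is a toy example.

```python
import numpy as np

def dilate(mask, steps):
    """Grow a boolean mask by `steps` pixels (4-connected binary dilation)."""
    out = mask.copy()
    for _ in range(steps):
        grown = out.copy()
        grown[1:, :] |= out[:-1, :]     # shift down
        grown[:-1, :] |= out[1:, :]     # shift up
        grown[:, 1:] |= out[:, :-1]     # shift right
        grown[:, :-1] |= out[:, 1:]     # shift left
        out = grown
    return out

def expand_background(image, lung_mask, margin):
    """Keep the lungs plus a band of `margin` background pixels around them (assumed scheme)."""
    return np.where(dilate(lung_mask, margin), image, 0.0)

def occlude_lungs(image, lung_mask, fill=0.0):
    """Full lung occlusion: replace every lung pixel and keep the background."""
    return np.where(lung_mask, fill, image)

image = np.ones((9, 9))
lungs = np.zeros((9, 9), dtype=bool)
lungs[4, 4] = True                      # toy one-pixel "lung"
print([int(expand_background(image, lungs, m).sum()) for m in (0, 1, 2)])  # [1, 5, 13]
```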

Figure 14 shows an example of lung occlusion, i.e., the gradual removal of the lung area from CXR images. Ideally, the ROC AUC should drop to about 0.5 (chance level) when the lungs are fully occluded. However, a ROC AUC of up to 0.88 was achieved with full lung occlusion. This is remarkable, because it means that the model can predict the class of a CXR image correctly without seeing any lung tissue. These findings suggest that the performance reported for pneumonia detection models in various studies may be overly optimistic and may not represent the true capabilities of those models.

Figure 14.

Example of lung occlusion (all images taken from [121]). From (a–c) the lung area is gradually occluded.
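Why 0.5 is the reference value follows from one standard reading of ROC AUC: it equals the probability that a randomly chosen positive image receives a higher score than a randomly chosen negative one (ties counting as half). If occlusion removed all class information, the scores would be uninformative and this probability would be about 0.5. The pure-Python sketch below computes AUC this way on hypothetical scores.

```python
def roc_auc(labels, scores):
    """ROC AUC as P(score of random positive > score of random negative); ties count 0.5.

    O(n_pos * n_neg) pairwise comparison, which is fine for illustration.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

labels = [1, 1, 1, 0, 0, 0]
print(roc_auc(labels, [0.9, 0.8, 0.7, 0.3, 0.2, 0.1]))  # 1.0: perfect separation
print(roc_auc(labels, [0.5] * 6))                        # 0.5: uninformative scores
```

An AUC of 0.88 with the lungs fully occluded therefore means that the remaining (non-lung) pixels still separate the classes well.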

Recent studies [200,201,202] have proposed ways to mitigate the effects of biased datasets. One approach [200] employs style randomization modules at both the image and feature levels to create style-perturbed features while keeping the content intact, which encourages CNNs to focus on the relevant content of CXRs rather than on the many uninformative styles present in biased datasets. Another approach [201] uses federated deep learning, with the aim that no single data source can dominate model training. Finally, bias-auditing tools such as Aequitas [202] can be used to check for biases related to gender, race, age, etc., helping to obtain results that better meet ethical and responsible standards.
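The two training-side ideas can be sketched as follows. The exact modules of [200] and the federated setup of [201] are not reproduced here; `randomize_style` is an assumed, AdaIN-style feature-level randomization (channel means and standard deviations treated as "style", the normalized spatial pattern as "content"), and `fed_avg` is the standard FedAvg weighted average of client parameters. All inputs are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def randomize_style(feats, strength=0.5, eps=1e-5):
    """Perturb per-channel style statistics of (C, H, W) feature maps.

    Channel mean/std are treated as "style" (e.g., scanner brightness or
    contrast); the normalized spatial pattern ("content") is left unchanged.
    """
    mu = feats.mean(axis=(1, 2), keepdims=True)
    sigma = feats.std(axis=(1, 2), keepdims=True) + eps
    content = (feats - mu) / sigma
    new_mu = mu + strength * sigma * rng.normal(size=mu.shape)
    new_sigma = sigma * np.exp(strength * rng.normal(size=sigma.shape))  # stays positive
    return content * new_sigma + new_mu

def fed_avg(client_params, client_sizes):
    """FedAvg: average client parameter vectors, weighted by local dataset size."""
    total = sum(client_sizes)
    return sum(p * (n / total) for p, n in zip(client_params, client_sizes))

feats = rng.normal(size=(8, 14, 14))
styled = randomize_style(feats)
global_params = fed_avg([np.array([1.0, 1.0]), np.array([3.0, 3.0])], [100, 300])
print(global_params)   # [2.5 2.5]
```

With size-proportional weights, a very large site still has proportionally large influence; passing equal `client_sizes` is one simple way to cap any single site's weight.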

8.2. Data and Code Availability

Most of the studies surveyed for this paper did not make their code and data public. Most studies that created datasets by combining publicly available data sources did provide details of the original sources, but, with a few exceptions such as [35,203,204], even these studies did not provide links to their final combined datasets. Open data and code enable other researchers to validate results [205] and to reproduce the results reported in a study, thereby helping to confirm them. Open-source code and data also help improve existing models and techniques. Because code and data are rarely shared, the reproducibility of pneumonia detection models has become a major issue [60], and current and future research in this area should make code and data openly available.
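One lightweight way to share a combined dataset without redistributing the images themselves is to publish a manifest listing each image's file name, original source, label, and a SHA-256 checksum, so that others can rebuild the exact dataset from the original sources and verify it. This is our suggestion rather than a practice reported by the surveyed studies; the file names, source names, and byte contents below are hypothetical.

```python
import csv
import hashlib
import io

def build_manifest(records):
    """Return a CSV manifest describing every image in a combined dataset.

    records: iterable of (file_name, source_dataset, label, file_bytes).
    The SHA-256 digest lets others check that they rebuilt exactly the same files.
    """
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["file", "source_dataset", "label", "sha256"])
    for file_name, source, label, data in records:
        writer.writerow([file_name, source, label, hashlib.sha256(data).hexdigest()])
    return buf.getvalue()

# Hypothetical records; in practice `data` would be the bytes of each image file.
records = [
    ("img_0001.png", "source_A", "covid", b"fake image bytes 1"),
    ("img_0002.png", "source_B", "normal", b"fake image bytes 2"),
]
manifest = build_manifest(records)
print(manifest)
```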

8.3. Explainability of Models

The transparency of pneumonia detection models is likely to be critical to their acceptance by medical practitioners [149]. An interpretable and transparent pneumonia detection model can give medical practitioners more confidence in the underlying algorithm and its final prediction [206]. Explainable AI (XAI) can provide a behavioral understanding of these models [119]. The most widely used XAI method for pneumonia detection models is Grad-CAM [71,169,191,207,208,209,210], followed by CAM [133,135]. Other state-of-the-art XAI methods for pneumonia detection models include LIME [211], SHAP [212], and Grad-CAM++ [213]. Recently, new XAI approaches specifically designed for CXR-based pneumonia detection models have been presented [119,149].

To date, XAI techniques have mostly been based on visualizations, i.e., highlighting the image areas that contribute to a model's prediction [210]. These visualizations help in understanding a model's behavior, but a more standardized, quantitative form of explanation is also required. Standardized quantitative XAI metrics for explainability and interpretability are therefore needed, so that the behavior of pneumonia detection models can be understood in a more standardized and regulated way. There is also a need for a universally agreed definition of ‘explanation’ in the context of XAI [214,215], and the scope and applicability of XAI should be clearly defined by a recognized regulatory authority. Future XAI approaches should output explanations in language that humans can readily interpret [127].
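As one example of what a simple quantitative metric could look like, the sketch below measures the share of a heatmap's (non-negative) saliency that falls inside a region of interest such as a lung mask, similar in spirit to the "energy-based pointing game" used in some XAI evaluations. It is an illustrative assumption, not a standard endorsed by the cited works; the 4 × 4 arrays are toy data.

```python
import numpy as np

def saliency_in_roi(heatmap, roi_mask):
    """Share of total (non-negative) saliency that falls inside a region of interest.

    With a lung mask as the ROI, values near 1 mean the explanation focuses on
    lung tissue; low values flag reliance on background such as markers or text.
    """
    heat = np.clip(heatmap, 0.0, None)
    total = heat.sum()
    return float(heat[roi_mask].sum() / total) if total > 0 else 0.0

# Toy 4 x 4 example: the "lungs" are the left half of the image.
lungs = np.zeros((4, 4), dtype=bool)
lungs[:, :2] = True
uniform = np.ones((4, 4))
marker_focused = np.zeros((4, 4))
marker_focused[0, 3] = 1.0      # all saliency on a corner marker outside the lungs
print(saliency_in_roi(uniform, lungs), saliency_in_roi(marker_focused, lungs))  # 0.5 0.0
```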

8.4. Fair Comparison

Suppose there are two pneumonia detection models, ‘A’ and ‘B’, and two separate CXR image datasets, ‘X’ and ‘Y’. If model ‘A’ is evaluated on dataset ‘X’ and model ‘B’ on dataset ‘Y’, their performance cannot be compared directly, because the performance metrics obtained in each case are data-dependent. Nevertheless, many studies [206] have compared their method(s), evaluated on a particular dataset, with other state-of-the-art methods evaluated on different datasets. Such comparisons are of limited use and may even be misleading [60]. There is a need for a broad range of labeled benchmark datasets for training and evaluating pneumonia detection models, so that all models being compared can be evaluated on the same datasets and performance comparisons become meaningful. The availability of code from published studies also helps here, because it allows a model to be regenerated and evaluated on benchmark datasets if this has not already been done.
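Once two models are evaluated on the same test set, a paired statistical test can indicate whether a difference in accuracy is likely to be real. McNemar's test, which we name here as one standard choice rather than a method used in the cited studies, looks only at the images on which the two models disagree. The sketch uses the continuity-corrected statistic and the chi-square (1 degree of freedom) upper tail, computed via `math.erfc`; the predictions are hypothetical.

```python
import math

def mcnemar(y_true, pred_a, pred_b):
    """McNemar's test (continuity-corrected) for two classifiers on the SAME test set.

    b = images only model A classifies correctly; c = images only model B does.
    Returns (chi-square statistic, p-value).
    """
    b = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a == t and p != t)
    c = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a != t and p == t)
    if b + c == 0:
        return 0.0, 1.0                                  # the models never disagree
    chi2 = max(abs(b - c) - 1, 0) ** 2 / (b + c)
    p_value = math.erfc(math.sqrt(chi2 / 2))             # upper tail of chi-square with 1 df
    return chi2, p_value

# Hypothetical predictions of models A and B on one shared test set of 15 images.
y_true = [1] * 15
pred_a = [1] * 14 + [0]
pred_b = [1] * 4 + [0] * 10 + [1]
chi2, p = mcnemar(y_true, pred_a, pred_b)
print(f"chi2 = {chi2:.2f}, p = {p:.3f}")
```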

8.5. Class Imbalance in CXR Datasets

A key challenge in CXR image classification is dealing with imbalanced datasets [42]. Class imbalance is very common in COVID-19 CXR datasets [60] because few COVID-19 samples were available early in the pandemic. As a result, many COVID-19 datasets contain very few COVID-19 samples, and the algorithm sees mostly non-COVID-19 samples during training. An imbalanced dataset can therefore lead to inaccurate results through issues such as model overfitting [216,217,218], in both binary and multiclass classification.

Standard deep learning algorithms, such as CNNs, implicitly assume a roughly equal number of images per class [219], so class imbalance needs to be addressed with an appropriate technique. Some commonly used approaches are briefly described here. Rajaraman et al. [220] designed the loss function of their model so that the class with fewer samples is given more weight than the class with more samples. Another mechanism for countering class imbalance is resampling, whose objective is an even class distribution. This can be achieved either by increasing the number of samples of the minority class or by taking fewer samples from the class with a disproportionately large number of samples. In [221], for example, data augmentation was used to increase the number of samples in the COVID-19 class, which had far fewer samples. A recent study [222] addresses class imbalance with the focal loss (FL) function, which adds a modulating factor that reduces the contribution of well-classified examples. This concentrates training on difficult, misclassified examples; by de-emphasizing easy examples, FL allows the model to focus more on the minority class.
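The three remedies can be sketched in a few lines of plain Python. These are illustrative versions, not the implementations of [220], [221], or [222]: `inverse_frequency_weights` uses one common weighting rule (n / (k · n_c)), `focal_loss` is the per-example focal loss −α(1 − p_t)^γ log(p_t), and `random_oversample` duplicates minority samples, whereas [221] generated new samples by augmentation. The 9:1 label split is hypothetical.

```python
import math
import random
from collections import Counter

def inverse_frequency_weights(labels):
    """Class weights n / (k * n_c): the rarer a class, the larger its loss weight."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * cnt) for cls, cnt in counts.items()}

def focal_loss(p_t, gamma=2.0, alpha=1.0):
    """Focal loss for one example: -alpha * (1 - p_t)**gamma * log(p_t).

    p_t is the predicted probability of the true class; gamma = 0 gives
    ordinary (alpha-weighted) cross-entropy.
    """
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)

def random_oversample(indices_by_class, seed=0):
    """Duplicate randomly chosen minority-class samples until all classes are equal in size."""
    rng = random.Random(seed)
    target = max(len(idx) for idx in indices_by_class.values())
    return {cls: idx + [rng.choice(idx) for _ in range(target - len(idx))]
            for cls, idx in indices_by_class.items()}

labels = ["normal"] * 90 + ["covid"] * 10             # hypothetical 9:1 imbalance
print(inverse_frequency_weights(labels))              # covid weight 5.0, normal weight ~0.56
print(focal_loss(0.9) / focal_loss(0.9, gamma=0))     # ~0.01: easy example strongly down-weighted
print(focal_loss(0.1) / focal_loss(0.1, gamma=0))     # ~0.81: hard example mostly kept
balanced = random_oversample({"normal": list(range(90)), "covid": list(range(90, 100))})
print({cls: len(idx) for cls, idx in balanced.items()})   # {'normal': 90, 'covid': 90}
```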

8.6. Adversarial Attacks

Although CXR-based pneumonia detection models can be highly accurate, they are vulnerable to adversarial attacks [223,224,225,226,227,228], i.e., small, deliberately crafted perturbations of the input image that cause the model to make incorrect predictions. Paschali et al. [229] first suggested that deep learning models in medical imaging should be evaluated for robustness and not just for generalizability, and they showed that adversarial examples are a much better means of evaluating a model's robustness than random noise such as Gaussian noise. Ma et al. [230] demonstrated that deep learning models trained and evaluated on medical images such as CXRs are even more vulnerable to adversarial examples than models trained on natural images. This high vulnerability can make automated diagnostic systems, such as CXR-based pneumonia detection models, unreliable and a potential source of fraud or diagnostic confusion.
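Why adversarial perturbations probe robustness better than random noise can be seen in a deliberately simplified setting. The sketch below assumes a hypothetical logistic-regression "pneumonia detector" on a flattened 64×64 image (random weights, not a trained CXR model) and applies the well-known fast gradient sign method (FGSM), then compares it with Gaussian noise clipped to the same per-pixel budget ε. For a linear model, FGSM lowers the pneumonia logit by exactly ε·‖w‖₁, the largest change any perturbation within that budget can cause, while random noise largely cancels out.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_logistic(x, y, w, b, eps):
    """One-step FGSM for a hypothetical logistic 'detector' p = sigmoid(w.x + b)."""
    p = sigmoid(w @ x + b)
    # For binary cross-entropy, the loss gradient w.r.t. the input is (p - y) * w.
    grad_x = (p - y) * w
    # Move every pixel by eps in the direction that increases the loss.
    return x + eps * np.sign(grad_x)

rng = np.random.default_rng(0)
d = 64 * 64                   # hypothetical flattened 64x64 image
w = rng.normal(0.0, 0.05, d)  # hypothetical model weights
b = 0.0
x = rng.uniform(0.0, 1.0, d)  # hypothetical input image with label "pneumonia"
y = 1.0
eps = 0.01

x_adv = fgsm_logistic(x, y, w, b, eps)
# Gaussian noise clipped to the same per-pixel (L-infinity) budget, for comparison.
x_noise = x + np.clip(rng.normal(0.0, eps, d), -eps, eps)

def logit(v):
    return w @ v + b

drop_adv = logit(x) - logit(x_adv)     # decrease in the "pneumonia" logit
drop_noise = logit(x) - logit(x_noise)
print(f"FGSM: {drop_adv:.3f}  Gaussian noise: {drop_noise:.3f}")
```

Clipping the perturbed image back to the valid pixel range is omitted here for brevity; for deep networks the gradient is obtained by backpropagation rather than in closed form.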

In [231], a strong, image-independent adversarial attack called a universal adversarial perturbation (UAP) [232] was studied for pneumonia detection. The study [231] exploits a variant of UAP based on a single perturbation that is added to every input image, rather than one computed separately for each image; it is therefore very easy to apply and computationally much less expensive than image-specific attacks. The results show that the attack has a success rate greater than 80% for both targeted and untargeted attacks. Moreover, adversarial retraining [51], a defense mechanism, largely failed to mitigate the effect of the UAP attack [231]. These results highlight the need for future research to focus more on model reliability under adversarial attack.
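The defining property of a UAP, one perturbation shared by all images, and the way its effect is usually measured can be sketched as follows. This is an illustrative toy, not the algorithm of [231] or [232]: it assumes a hypothetical linear classifier, for which the universal perturbation has a closed form (−ε·sign(w)), whereas UAP methods for deep networks build the perturbation iteratively over a set of training images. The helper `fooling_rate` is a hypothetical name for the fraction of images whose prediction changes.

```python
import numpy as np

def fooling_rate(predict, X, v):
    """Fraction of images whose predicted label changes when the same v is added to all of them."""
    return float(np.mean(predict(X) != predict(X + v)))

rng = np.random.default_rng(1)
d = 32 * 32
w = rng.normal(0.0, 0.05, d)  # hypothetical linear model: 1 = pneumonia, 0 = normal
b = -0.5

def predict(X):
    return (X @ w + b > 0).astype(int)

X = rng.uniform(0.0, 1.0, (500, d))  # hypothetical batch of flattened images

# For a linear model, v = -eps * sign(w) lowers every image's logit by the same
# maximal amount eps * ||w||_1, so a single image-independent vector pushes all
# inputs toward class 0 ("normal"), i.e., a targeted universal perturbation.
eps = 0.05
v = -eps * np.sign(w)
rate = fooling_rate(predict, X, v)
targeted = float(np.mean(predict(X + v) == 0))  # share of images now labelled "normal"
print(f"fooling rate: {rate:.2f}, targeted success: {targeted:.2f}")
```

Because `v` is computed once and then merely added, attacking a new image costs a single vector addition, which illustrates why a single-perturbation attack is cheap to apply.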

Some recent studies [233,234] have focused on developing effective defense mechanisms against adversarial attacks on CXR images. Dai et al. [233] employ global attention noise injection to counter adversarial attacks, while Sheikh and Zafar [234] use a denoising technique called total variation minimization to mitigate adversarial noise in images. Both techniques have yielded promising results.
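The idea behind total variation (TV) minimization as a denoising defense can be sketched with a generic, smoothed ROF-style formulation: find an image u close to the input f whose total variation is small, i.e., minimize 0.5·‖u − f‖² + λ·TV(u). This is an assumption-laden illustration rather than the method of [234]: the parameters `lam`, `eps`, and `n_iter`, the gradient-descent solver, and the toy square image are all hypothetical choices, and additive Gaussian noise merely stands in for an adversarial perturbation.

```python
import numpy as np

def grad(u):
    # Forward differences; the last column/row difference is zero (Neumann boundary).
    gx = np.zeros_like(u)
    gy = np.zeros_like(u)
    gx[:, :-1] = u[:, 1:] - u[:, :-1]
    gy[:-1, :] = u[1:, :] - u[:-1, :]
    return gx, gy

def div(px, py):
    # Discrete divergence, defined as the negative adjoint of grad().
    dx = np.zeros_like(px)
    dy = np.zeros_like(py)
    dx[:, 0] = px[:, 0]
    dx[:, 1:-1] = px[:, 1:-1] - px[:, :-2]
    dx[:, -1] = -px[:, -2]
    dy[0, :] = py[0, :]
    dy[1:-1, :] = py[1:-1, :] - py[:-2, :]
    dy[-1, :] = -py[-2, :]
    return dx + dy

def total_variation(u, eps=0.1):
    # Smoothed isotropic TV: sum over pixels of sqrt(|grad u|^2 + eps^2).
    gx, gy = grad(u)
    return float(np.sum(np.sqrt(gx**2 + gy**2 + eps**2)))

def tv_denoise(f, lam=0.1, eps=0.1, n_iter=200):
    # Minimise 0.5 * ||u - f||^2 + lam * TV_eps(u) by gradient descent with step 1/L,
    # where L = 1 + 8 * lam / eps bounds the Lipschitz constant of the energy's gradient.
    tau = 1.0 / (1.0 + 8.0 * lam / eps)
    u = f.copy()
    for _ in range(n_iter):
        gx, gy = grad(u)
        norm = np.sqrt(gx**2 + gy**2 + eps**2)
        u = u - tau * ((u - f) - lam * div(gx / norm, gy / norm))
    return u

rng = np.random.default_rng(0)
clean = np.zeros((32, 32))
clean[8:24, 8:24] = 1.0                           # toy piecewise-constant "image"
noisy = clean + rng.normal(0.0, 0.1, clean.shape)  # stand-in for a perturbation
denoised = tv_denoise(noisy)
print(total_variation(noisy), total_variation(denoised))
```

Larger values of λ remove more noise but also blur fine structures, so in a defense setting λ trades robustness against loss of diagnostically relevant detail.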

9. Conclusions

It is evident from the introductory and explanatory material detailed in Sections 1–6, and from the references supplied, that a significant body of knowledge and expertise has evolved over time, addressing both research related to deep learning and its eventual application, especially to pneumonia detection using X-rays. This work has enabled the key statistics derived in Section 7 regarding the effectiveness, and hence the relative accuracy, of techniques for both detecting and discriminating between forms of COVID-19 and non-COVID-19 pneumonia.

Given the range of past and present deep learning techniques and approaches, as well as the evaluations and effectiveness metrics detailed herein for pneumonia detection using X-rays, two conclusions can be drawn. First, adversarial attacks are a viable means of identifying, analyzing, and hence determining the effectiveness and reliability of models used for the detection of pneumonia via X-rays, and so of ensuring their vital integrity and accuracy.

Second, one particular field of research may prove especially useful in such endeavors, namely the use of vision transformers (ViTs), although ViTs have inherent limitations that must ultimately be addressed and resolved.

Author Contributions

Conceptualization, R.S.; Data curation, R.S. and S.J.; Formal analysis, R.S. and S.J.; Investigation, R.S. and S.J.; Methodology, R.S.; Project administration, R.S.; Visualization, R.S. and S.J.; Writing—original draft, R.S. and S.J.; Writing—review & editing, R.S. and S.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

We would like to thank Christopher John Harrison for his guidance and assistance in this research.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kermany, D.S.; Goldbaum, M.; Cai, W.; Valentim, C.C.S.; Liang, H.; Baxter, S.L.; McKeown, A.; Yang, G.; Wu, X.; Yan, F.; et al. Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell 2018, 172, 1122–1131.e9.

- Ibrahim, A.U.; Ozsoz, M.; Serte, S.; Al-Turjman, F.; Yakoi, P.S. Pneumonia Classification Using Deep Learning from Chest X-ray Images During COVID-19. Cogn. Comput. 2024, 16, 1589–1601.

- Pneumonia | CDC. Available online: https://www.cdc.gov/pneumonia/index.html (accessed on 14 September 2022).

- Ruuskanen, O.; Lahti, E.; Jennings, L.C.; Murdoch, D.R. Viral pneumonia. Lancet 2011, 377, 1264–1275.

- World Health Organization (WHO). Available online: https://www.who.int/ (accessed on 14 September 2022).

- Khan, W.; Zaki, N.; Ali, L. Intelligent Pneumonia Identification From Chest X-Rays: A Systematic Literature Review. IEEE Access 2021, 9, 51747–51771.

- Johns Hopkins Coronavirus Resource Center. Johns Hopkins University & Medicine. Available online: https://coronavirus.jhu.edu/ (accessed on 14 September 2022).

- Ieracitano, C.; Mammone, N.; Versaci, M.; Varone, G.; Ali, A.-R.; Armentano, A.; Calabrese, G.; Ferrarelli, A.; Turano, L.; Tebala, C.; et al. A fuzzy-enhanced deep learning approach for early detection of COVID-19 pneumonia from portable chest X-ray images. Neurocomputing 2022, 481, 202–215.

- D’Arienzo, M.; Coniglio, A. Assessment of the SARS-CoV-2 basic reproduction number, R0, based on the early phase of COVID-19 outbreak in Italy. Biosaf. Health 2020, 2, 57–59.

- Rossman, H.; Meir, T.; Somer, J.; Shilo, S.; Gutman, R.; Ben Arie, A.; Segal, E.; Shalit, U.; Gorfine, M. Hospital load and increased COVID-19 related mortality in Israel. Nat. Commun. 2021, 12, 1904.

- Yaseliani, M.; Hamadani, A.Z.; Maghsoodi, A.I.; Mosavi, A. Pneumonia Detection Proposing a Hybrid Deep Convolutional Neural Network Based on Two Parallel Visual Geometry Group Architectures and Machine Learning Classifiers. IEEE Access 2022, 10, 62110–62128.

- Self, W.H.; Courtney, D.M.; McNaughton, C.D.; Wunderink, R.G.; Kline, J.A. High discordance of chest X-ray and computed tomography for detection of pulmonary opacities in ED patients: Implications for diagnosing pneumonia. Am. J. Emerg. Med. 2013, 31, 401–405.

- Ticinesi, A.; Lauretani, F.; Nouvenne, A.; Mori, G.; Chiussi, G.; Maggio, M.; Meschi, T. Lung ultrasound and chest X-ray for detecting pneumonia in an acute geriatric ward. Medicine 2016, 95, e4153.

- Htun, T.P.; Sun, Y.; Chua, H.L.; Pang, J. Clinical features for diagnosis of pneumonia among adults in primary care setting: A systematic and meta-review. Sci. Rep. 2019, 9, 7600.

- Siddiqi, R. Efficient Pediatric Pneumonia Diagnosis Using Depthwise Separable Convolutions. SN Comput. Sci. 2020, 1, 343.

- Mettler, F.A.; Huda, W.; Yoshizumi, T.T.; Mahesh, M. Effective Doses in Radiology and Diagnostic Nuclear Medicine: A Catalog. Radiology 2008, 248, 254–263.

- Çallı, E.; Sogancioglu, E.; van Ginneken, B.; van Leeuwen, K.G.; Murphy, K. Deep learning for chest X-ray analysis: A survey. Med. Image Anal. 2021, 72, 102125.

- Wang, L.; Lin, Z.Q.; Wong, A. COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. Sci. Rep. 2020, 10, 19549.

- Ai, T.; Yang, Z.; Hou, H.; Zhan, C.; Chen, C.; Lv, W.; Tao, Q.; Sun, Z.; Xia, L. Correlation of Chest CT and RT-PCR Testing for Coronavirus Disease 2019 (COVID-19) in China: A Report of 1014 Cases. Radiology 2020, 296, E32–E40.

- Fang, Y.; Zhang, H.; Xie, J.; Lin, M.; Ying, L.; Pang, P.; Ji, W. Sensitivity of Chest CT for COVID-19: Comparison to RT-PCR. Radiology 2020, 296, E115–E117.

- Jacobi, A.; Chung, M.; Bernheim, A.; Eber, C. Portable chest X-ray in coronavirus disease-19 (COVID-19): A pictorial review. Clin. Imaging 2020, 64, 35–42.

- Pal, B.; Gupta, D.; Rashed-Al-Mahfuz, M.; Alyami, S.A.; Moni, M.A. Vulnerability in Deep Transfer Learning Models to Adversarial Fast Gradient Sign Attack for COVID-19 Prediction from Chest Radiography Images. Appl. Sci. 2021, 11, 4233.

- Rajaraman, S.; Guo, P.; Xue, Z.; Antani, S.K. A Deep Modality-Specific Ensemble for Improving Pneumonia Detection in Chest X-rays. Diagnostics 2022, 12, 1442.

- Kundu, R.; Das, R.; Geem, Z.W.; Han, G.-T.; Sarkar, R. Pneumonia detection in chest X-ray images using an ensemble of deep learning models. PLoS ONE 2021, 16, e0256630.

- Mousavi, Z.; Shahini, N.; Sheykhivand, S.; Mojtahedi, S.; Arshadi, A. COVID-19 detection using chest X-ray images based on a developed deep neural network. SLAS Technol. 2022, 27, 63–75.

- Cha, S.-M.; Lee, S.-S.; Ko, B. Attention-Based Transfer Learning for Efficient Pneumonia Detection in Chest X-ray Images. Appl. Sci. 2021, 11, 1242.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Sarker, I.H. Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications and Research Directions. SN Comput. Sci. 2021, 2, 420.

- Zhou, S.K.; Greenspan, H.; Davatzikos, C.; Duncan, J.S.; Van Ginneken, B.; Madabhushi, A.; Prince, J.L.; Rueckert, D.; Summers, R.M. A Review of Deep Learning in Medical Imaging: Imaging Traits, Technology Trends, Case Studies With Progress Highlights, and Future Promises. Proc. IEEE 2021, 109, 820–838.

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629.

- Irvin, J.; Rajpurkar, P.; Ko, M.; Yu, Y.; Ciurea-Ilcus, S.; Chute, C.; Marklund, H.; Haghgoo, B.; Ball, R.; Shpanskaya, K.; et al. CheXpert: A Large Chest Radiograph Dataset with Uncertainty Labels and Expert Comparison. Proc. AAAI Conf. Artif. Intell. 2019, 33, 590–597.

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-Ray8: Hospital-Scale Chest X-Ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3462–3471.

- Johnson, A.E.W.; Pollard, T.J.; Berkowitz, S.J.; Greenbaum, N.R.; Lungren, M.P.; Deng, C.; Mark, R.G.; Horng, S. MIMIC-CXR, a de-identified publicly available database of chest radiographs with free-text reports. Sci. Data 2019, 6, 317.

- Bustos, A.; Pertusa, A.; Salinas, J.-M.; de la Iglesia-Vayá, M. PadChest: A large chest X-ray image dataset with multi-label annotated reports. Med. Image Anal. 2020, 66, 101797.

- Tabik, S.; Gómez-Ríos, A.; Martín-Rodríguez, J.L.; Sevillano-García, I.; Rey-Area, M.; Charte, D.; Guirado, E.; Suárez, J.L.; Luengo, J.; Valero-González, M.A.; et al. COVIDGR Dataset and COVID-SDNet Methodology for Predicting COVID-19 Based on Chest X-Ray Images. IEEE J. Biomed. Health Inform. 2020, 24, 3595–3605.

- Srivastav, D.; Bajpai, A.; Srivastava, P. Improved Classification for Pneumonia Detection using Transfer Learning with GAN based Synthetic Image Augmentation. In Proceedings of the 2021 11th International Conference on Cloud Computing, Data Science & Engineering, Noida, India, 28–29 January 2021; pp. 433–437.

- Motamed, S.; Rogalla, P.; Khalvati, F. Data augmentation using Generative Adversarial Networks (GANs) for GAN-based detection of Pneumonia and COVID-19 in chest X-ray images. Inform. Med. Unlocked 2021, 27, 100779.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Sousa, R.T.; Marques, O.; Soares, F.A.A.M.N.; Sene, I.I.G.; de Oliveira, L.L.G.; Spoto, E.S. Comparative Performance Analysis of Machine Learning Classifiers in Detection of Childhood Pneumonia Using Chest Radiographs. Procedia Comput. Sci. 2013, 18, 2579–2582.

- Khobragade, S.; Tiwari, A.; Patil, C.Y.; Narke, V. Automatic detection of major lung diseases using Chest Radiographs and classification by feed-forward artificial neural network. In Proceedings of the 2016 IEEE 1st International Conference on Power Electronics, Intelligent Control and Energy Systems (ICPEICES), Delhi, India, 4–6 July 2016; pp. 1–5.

- Hussain, K.; Mohd Salleh, M.N.; Cheng, S.; Shi, Y. Metaheuristic research: A comprehensive survey. Artif. Intell. Rev. 2019, 52, 2191–2233.

- Meedeniya, D.; Kumarasinghe, H.; Kolonne, S.; Fernando, C.; Díez, I.D.l.T.; Marques, G. Chest X-ray analysis empowered with deep learning: A systematic review. Appl. Soft Comput. 2022, 126, 109319.

- Alghamdi, H.S.; Amoudi, G.; Elhag, S.; Saeedi, K.; Nasser, J. Deep Learning Approaches for Detecting COVID-19 From Chest X-Ray Images: A Survey. IEEE Access 2021, 9, 20235–20254.

- Shorten, C.; Khoshgoftaar, T.M. A survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Ahmed, K.B.; Goldgof, G.M.; Paul, R.; Goldgof, D.B.; Hall, L.O. Discovery of a Generalization Gap of Convolutional Neural Networks on COVID-19 X-Rays Classification. IEEE Access 2021, 9, 72970–72979.