Abstract

The main objective of this work is to use convolutional neural networks (CNNs) to improve on the baseline results previously reported for pollen grain classification by improving the performance of eight popular architectures: InceptionV3, VGG16, VGG19, ResNet50, NASNet, Xception, DenseNet201 and InceptionResNetV2, which are benchmarks on several classification tasks, such as ImageNet. We use POLLEN73S, a well-known annotated public image dataset of pollen grains from the Brazilian savanna composed of 2523 images. The models are evaluated with the holdout cross-validation method. The experiments show that DenseNet201 and ResNet50 outperform the other CNNs tested, achieving accuracies of 97.217% and 94.257%, respectively, which exceed the existing results by 1.517 and 0.257 percentage points. VGG19 is the architecture with the lowest performance, with an accuracy of 89.463%.

MSC:

35A01; 65L10; 65L12; 65L20; 65L70

1. Introduction

Pollen grains are tiny particles, carried by the wind or by pollinators, that are essential to the reproduction of flowering plants. They are produced by the male organs of flowers, the stamens, and more precisely by the anthers, which contain the pollen grains. Pollinators in general ensure the reproduction of flowering plants, contributing to biodiversity and playing a crucial role in climate regulation, although climate change may disrupt this synergy [1]. The classification of pollen grains is a major challenge in plant biology and paleoecology (the study of the complex relationships between living organisms, their physical environment and their evolution over time, using fossil, archaeological and sedimentological data). This process is of great importance because it enables the identification of the plants that produced the grains and, through sediment analysis, the reconstruction of environmental history over the ages [2,3].

Pollen analysis is also widely used to detect and monitor allergenic particles in the air. Pollen seasons have grown longer in recent years due to global warming and changing climate conditions [4]. As a result, individuals exposed to high levels of allergenic pollen in the air have experienced an increase in seasonal allergies [5]. Recognizing pollen grains in palynological investigations is crucial for developing effective treatments for patients with allergic rhinitis: it allows patients and healthcare providers to track the level of allergenic pollen in the air, plan outdoor activities, and manage medical treatments accordingly [6]. The study of pollen grains found on individuals, objects, air, pollinators and beekeeping products also contributes to the protection, surveillance and monitoring of flora in order to preserve this ecosystem [4].

Automating pollen grain analysis requires image datasets containing numerous examples categorized by experts. Building such datasets demands considerable work and specialized equipment, such as an optical microscope and slides, which explains the lack of pollen grain image datasets designed specifically for computer vision [4]. A limited number of examples can hinder the training of efficient convolutional neural networks (CNNs) and lead to poorly performing models [4]. CNNs are deep neural networks capable of learning complex patterns from images. They have been used successfully to classify pollen grain images, making it possible to accurately classify images of pollen grains from different species.

The main goal of this work is to improve the accuracy of the eight architectures evaluated by Astolfi et al. [4] for the classification of POLLEN73S, using the holdout cross-validation method [7,8]. The paper is structured as follows: Section 2 reviews the state of the art in pollen grain classification using CNNs. Section 3 describes the POLLEN73S dataset of Astolfi et al. [4] used in this work. Section 4 gives an overview of the methods used, and Section 5 presents and discusses the results. Section 6 concludes the paper.

2. Literature Review of Related Work

Pollen grain classification has been a focus for several state-of-the-art convolutional neural networks (CNNs) in recent years. Daood et al. [9] developed a seven-layer deep convolutional neural network trained on a dataset of 30 pollen grain classes, achieving a correct classification rate of 94%. In [10], a pretrained AlexNet was used to classify a dataset of 46 pollen grain classes; combined with data augmentation and cross-validation, it reached a precision of 98%. In [11], AlexNet and SmallerVGGNet were used to categorize five pollen grain classes from 13,000 images, achieving precisions of 89.63% and 89.73%, respectively. Astolfi et al. [4] analyzed a pollen dataset with 73 pollen grain categories and compared the performance of eight state-of-the-art CNNs: InceptionV3, VGG16, VGG19, ResNet50, NASNet, Xception, DenseNet201 and InceptionResNetV2. They showed that DenseNet201 and ResNet50 outperformed the other CNNs, with precisions of 95.7% and 94.0%, respectively. Li et al. [6] analyzed two genera of the Urticaceae family, Parietaria and Urtica, which show strong morphological similarities, using a three-category dataset of 6472 pollen grain images. To find a better classifier, they used both machine learning and deep learning methods. For the former, they measured texture and moment features from the pollen grain images, combined with feature selection techniques and a hierarchical strategy. For the latter, they compared the performance of six popular CNNs: VGG16, AlexNet, ResNet50, VGG19, MobileNetV1 and MobileNetV2. The machine learning-based methods achieved a precision of 94.5% and the deep learning-based methods 99.4%.

Beyond CNNs, other works rely on classical computer vision techniques. Treloar et al. [12] introduced a dataset of 12 pollen grain types, with a handful of grayscale images per category, collected on Henderson Island, Polynesia. To establish a baseline, they used geometric characteristics of the pollen grains, such as perimeter, roundness and surface area, as input to generate texture features, and classified the grains with Fisher's linear discriminant. They report that, depending on the subset of variables used, the proportion of correctly classified pollen ranges from 81% to 100%, with the best set of variables achieving an average classification rate of around 95%. In [13], Travieso et al. used a dataset of 564 images captured from 22 plant species in Costa Rica, Central America, and categorized 47 distinct types of pollen grains using hidden Markov models (HMMs) and support vector machines (SVMs), achieving an average classification accuracy of 93.8%. García et al. [14] also classified pollen grains with an HMM classifier, but extracted features from the grains using binarization and edge detection. Their dataset contains 426 pollen grains of 17 types from 11 distinct tropical honeybee plant families in Costa Rica, Central America, and their method achieved an average classification rate of 98.77%. Ticay-Rivas et al. [15] established a baseline on the same dataset as [14]. They proposed combining geometric parameters, Fourier descriptors of morphological features computed with the discrete cosine transform (DCT), and color information to characterize pollen grains.
These features are used to train a multilayer neural network classifier, which achieves an average accuracy of 96.49%.

Unlike deep learning methods, which extract features from images automatically [9,10,16,17,18,19], classical machine learning methods rely on features that are manually selected and extracted from images [20,21]. These handcrafted features describe the shape, texture and other properties of the pollen grains, and classification performance depends heavily on them. Choosing suitable feature selection methods and classifiers is therefore also important for machine learning-based classification.

In [22], the authors proposed fusing geometric and textural characteristics as distinctive intrinsic attributes for a pollen dataset containing 17 classes. Combining linear discriminant analysis (LDA) with least squares support vector machines (LS-SVMs) gave the best performance, with a precision of 94.92%. Marcos et al. [23] extracted four types of texture features, namely gray-level co-occurrence matrices (GLCM), log-Gabor filters (LGF), local binary patterns (LBP) and discrete Tchebychev moments (DTM), from a set of pollen images spanning 15 classes. They then used Fisher's discriminant analysis (FDA) for dimensionality reduction and K-nearest neighbors (KNN) for multivariate classification, achieving an accuracy of 95%. Manikis et al. [21] used texture features derived from GLCM and seven geometric properties computed from the binary mask of each pollen image, and classified them with a random forest (RF), obtaining an accuracy of 88.24% for six pollen classes. The results of machine learning methods vary considerably and seem to depend on the dataset under consideration.

A recent study by Djoulde et al. [24] focused on classifying pepper seeds using color filter array (CFA) images. The research specifically addressed Penja pepper, a variety of Piper nigrum grown mainly in the Littoral region of Cameroon. Although its production is modest compared with that of large producers such as India and Brazil, Penja pepper is highly valued on the market for its distinctive quality. The study aimed to address the difficulty of distinguishing pepper varieties based solely on seed appearance. Using 5618 samples of white and black Penja pepper and other varieties, the researchers applied image processing techniques and a supervised machine learning approach to extract 18 attributes from the images and compared several classification models. The support vector machine (SVM) performed best, with an accuracy of 0.87, a precision of 0.874, a recall of 0.873 and an F1-score of 0.874. This study represents a significant advance in pepper seed classification, combining image analysis and machine learning to differentiate pepper cultivars. Other works on pollen classification using machine learning can be found in [25,26,27,28,29].

3. Description of the POLLEN73S Dataset

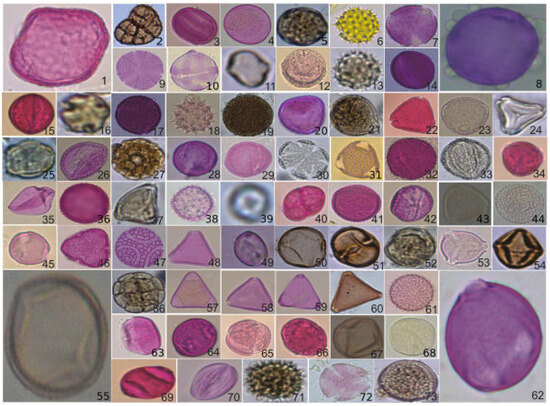

Astolfi et al. in [4] developed a dataset called POLLEN73S containing annotated images of pollen grains present in the Brazilian savannah. This dataset includes 2523 images of pollen grains from 73 different types, captured at various angles. The plant species in bloom, from which these pollen grains were sourced, were gathered within a 1.5 km distance from coordinates 20°23′16.8″ S 54°36′36.3″ W, situated in the urban vicinity of Campo Grande City, the capital of the state of Mato Grosso do Sul in Brazil.

The POLLEN73S dataset consists of images obtained by resizing larger originals. Because pollen grains vary in size, image dimensions in POLLEN73S also vary: 88.6% of the images have an average size of 512 × 512 pixels or less, and the remaining 11.4% are larger. The images were resized to 224 × 224 pixels, the standard input size used for all the trained models described in Section 4.2. This size was chosen for compatibility with the pretrained models, as a balance between image quality and computational cost, and to simplify the processing pipeline. Figure 1 shows one example pollen grain for each category. Every category contains 35 images, except Gomphrena sp. with 10 images, Trema micrantha with 34 images, and Zea mays with 29 images [4]. POLLEN73S offers a broad variety of pollen grains, with good categorical coverage and diversity of examples, to support the progress of computer vision in palynology.

Figure 1.

Sample images from the POLLEN73S dataset, one per category (see [4] for the name of each image).
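As a quick consistency check, the per-class counts given in this section add up to the 2523 images of POLLEN73S. The short Python sketch below only redoes this arithmetic from the numbers quoted above (it does not read the dataset files); the dictionary of exceptions is written by hand for illustration.

```python
# Per-class image counts of POLLEN73S as stated in Section 3.
NUM_CLASSES = 73
DEFAULT_IMAGES_PER_CLASS = 35
EXCEPTIONS = {"Gomphrena sp.": 10, "Trema micrantha": 34, "Zea mays": 29}


def total_images(num_classes, default_count, exceptions):
    """Total images when every class has `default_count` images except those listed in `exceptions`."""
    regular_classes = num_classes - len(exceptions)
    return regular_classes * default_count + sum(exceptions.values())


total = total_images(NUM_CLASSES, DEFAULT_IMAGES_PER_CLASS, EXCEPTIONS)
imbalance_ratio = DEFAULT_IMAGES_PER_CLASS / min(EXCEPTIONS.values())
print(total)            # 70 * 35 + (10 + 34 + 29) = 2450 + 73 = 2523
print(imbalance_ratio)  # 35 / 10 = 3.5
```

The largest-to-smallest class ratio is 35/10 = 3.5, so the dataset is only mildly imbalanced, which is relevant to the caveat on accuracy in Section 4.5.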

4. Methods

4.1. Block Diagram of the Proposed Methodology

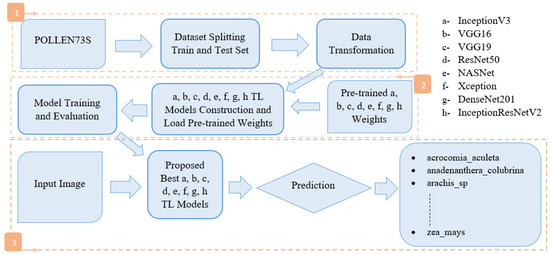

Figure 2 depicts the methodology used in this work.

Figure 2.

A block diagram of the proposed methodology.

We divided the POLLEN73S dataset into two distinct sets, a training set and a test set (Section 4.3): the training set is used to train the models, and the test set to validate and test them. We then configured the training procedure with data augmentation, dropout layers, fine-tuning and selected hyperparameters (Section 4.4), and set up the transfer learning (TL) models (a, b, c, d, e, f, g and h in Figure 2, detailed in Section 4.2). For each TL model, the pretrained weights are loaded, and the model is trained and evaluated. Finally, an input image is passed to each of the best TL models, which predicts the expected result, namely the probability (in percent) that the image belongs to each class.
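The last step of the pipeline turns the network's output into a probability for each class. In the models described in Section 4.2 this is done by the final softmax layer; the NumPy sketch below reproduces that computation on hypothetical logits and ranks the most likely classes, in the spirit of the predictions shown in Appendix B. The class names and logit values are made up for illustration, and the helper names (`softmax`, `top_k_classes`) are our own.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)


def top_k_classes(probabilities, class_names, k=3):
    """Return the k most probable (class name, percentage) pairs, highest first."""
    order = np.argsort(probabilities)[::-1][:k]
    return [(class_names[i], 100.0 * float(probabilities[i])) for i in order]


# Hypothetical logits for a 4-class toy example (POLLEN73S itself has 73 classes).
names = ["class_a", "class_b", "class_c", "class_d"]
probs = softmax(np.array([2.0, 0.5, 1.0, -1.0]))
for name, pct in top_k_classes(probs, names, k=2):
    print(f"{name}: {pct:.1f}%")
```

Subtracting the maximum logit before exponentiating does not change the result but avoids overflow for large logits.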

4.2. CNN Architectures for Pollen Grain Classification

A transfer learning approach using ImageNet pretrained weights was implemented to overcome the limitations of the small training dataset and to avoid overfitting. In this study, the final layers of seven CNN models (VGG16, VGG19, DenseNet201, InceptionResNetV2, InceptionV3, ResNet50 and Xception) were tuned while the pretrained model was used only as a feature extractor. To adapt these models to our classification problem, the original final layers (fully connected layers with softmax activation) were replaced by a flatten layer that reshapes the feature maps into a vector, followed by a dropout layer for regularization; hyperparameters were set to control the learning process. Finally, a dense layer with softmax activation was added to generate probabilities for the 73 classes of POLLEN73S.
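A minimal Keras sketch of the head described above, assuming TensorFlow 2.x with `tf.keras`: a frozen ImageNet base (DenseNet201 here), followed by `Flatten`, `Dropout` and a 73-way softmax `Dense` layer. The Adam learning rate of 0.001 comes from Section 4.4; the loss function, the default dropout rate and the function name `build_transfer_model` are our assumptions, and the per-model hyperparameters of Table 1 are not reproduced. Other bases from `tf.keras.applications` (for example `ResNet50` or `VGG16`) can be swapped in the same way.

```python
import tensorflow as tf

NUM_CLASSES = 73             # POLLEN73S categories
INPUT_SHAPE = (224, 224, 3)  # images resized to 224 x 224 (Section 3)


def build_transfer_model(dropout_rate=0.5, learning_rate=1e-3, weights="imagenet"):
    """Frozen pretrained base + Flatten + Dropout + softmax Dense (assumed head layout)."""
    # Convolutional base without its ImageNet classifier.
    base = tf.keras.applications.DenseNet201(
        include_top=False, weights=weights, input_shape=INPUT_SHAPE
    )
    base.trainable = False  # the base is used only as a feature extractor

    inputs = tf.keras.Input(shape=INPUT_SHAPE)
    x = base(inputs, training=False)
    x = tf.keras.layers.Flatten()(x)              # feature maps -> vector
    x = tf.keras.layers.Dropout(dropout_rate)(x)  # regularization
    outputs = tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",  # assumes one-hot labels
        metrics=["accuracy"],
    )
    return model
```

With `weights="imagenet"` the pretrained weights are downloaded on first use; `weights=None` builds the same graph with random weights, which is enough to inspect shapes. For DenseNet201 at 224 × 224, the flattened feature vector has 7 × 7 × 1920 = 94,080 entries, so the new softmax layer alone has 94,080 × 73 + 73 = 6,867,913 trainable parameters.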

4.2.1. Inception V3

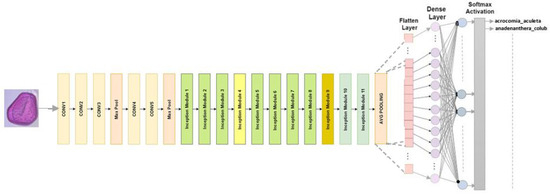

The InceptionV3 network used for our classification is 48 layers deep and contains 11 Inception modules. Its default image input size is 299 × 299. Each module combines convolution filters, pooling layers, ReLU activations and other elements [30]. To reduce the number of parameters without compromising network efficiency, large convolutions are factorized into smaller ones. Figure 3 shows the refined InceptionV3 model used for our classification.

Figure 3.

InceptionV3 architecture designed for multiclass classification.
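To make the parameter savings of factorization concrete, the sketch below counts convolution weights (biases ignored) for the two factorizations used in InceptionV3: a 5 × 5 convolution replaced by two stacked 3 × 3 convolutions, and an n × n convolution replaced by a 1 × n convolution followed by an n × 1 convolution. The channel count `C = 64` is an arbitrary assumption; the ratios do not depend on it as long as input and output channels are equal.

```python
def conv_weights(kernel_h, kernel_w, in_channels, out_channels):
    """Number of weights in a 2D convolution layer (biases ignored)."""
    return kernel_h * kernel_w * in_channels * out_channels


C = 64  # arbitrary channel count, same for input and output

# 5x5 convolution -> two stacked 3x3 convolutions (same receptive field).
ratio_5x5 = 2 * conv_weights(3, 3, C, C) / conv_weights(5, 5, C, C)

# 7x7 convolution -> 1x7 convolution followed by a 7x1 convolution.
ratio_7x7 = (conv_weights(1, 7, C, C) + conv_weights(7, 1, C, C)) / conv_weights(7, 7, C, C)

print(ratio_5x5)  # 18 / 25 = 0.72, i.e. 28% fewer weights
print(ratio_7x7)  # 14 / 49, about 0.286
```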

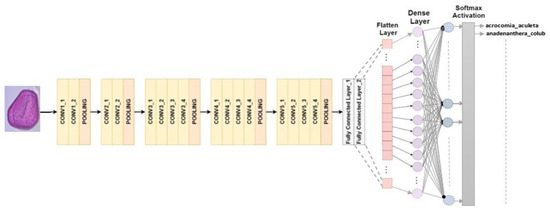

4.2.2. VGG 16

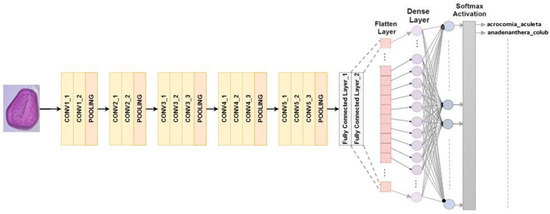

The VGG16 architecture processes RGB images with a fixed input size of 224 × 224 pixels. Its 16 weight layers consist of 13 convolutional layers and 3 fully connected layers. Max-pooling layers reduce the spatial size of the feature maps, and classification is performed by the last fully connected layer with softmax activation [30]. In this study, we replaced this last fully connected layer with our own classifier, as shown in Figure 4.

Figure 4.

VGG16 architecture for multiclass classification.

4.2.3. VGG 19

VGG19 has 19 weight layers: 16 convolutional layers and three fully connected layers. As in VGG16, max-pooling reduces the spatial size of the feature maps, and classification is carried out by the last fully connected layer with softmax activation [30]. In this study, we replaced this last fully connected layer with our own classifier, as illustrated in Figure 5.

Figure 5.

VGG19 architecture for multiclass classification.
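Because all 13 (VGG16) or 16 (VGG19) convolutional layers use 3 × 3 kernels, the parameter counts follow directly from the standard VGG channel configuration. The sketch below, which assumes that standard configuration and a 224 × 224 input, shows that most of the parameters sit in the three ImageNet fully connected layers, which are the layers replaced by our classifier. The `pollen_head` line assumes the flatten, dropout and 73-way dense head of Section 4.2 (flatten and dropout have no parameters); it is an illustration, not a reading of Figures 4 and 5.

```python
# Output channels of each 3x3 convolution, grouped by block (standard VGG configuration).
VGG16_BLOCKS = [[64, 64], [128, 128], [256, 256, 256], [512, 512, 512], [512, 512, 512]]
VGG19_BLOCKS = [[64, 64], [128, 128], [256] * 4, [512] * 4, [512] * 4]


def conv_base_params(blocks, in_channels=3, kernel=3):
    """Weights + biases of all convolution layers (max-pooling has no parameters)."""
    total = 0
    for block in blocks:
        for out_channels in block:
            total += kernel * kernel * in_channels * out_channels + out_channels
            in_channels = out_channels
    return total


def dense_params(n_in, n_out):
    """Weights + biases of a fully connected layer."""
    return n_in * n_out + n_out


FLAT = 7 * 7 * 512  # flattened size after the fifth max-pooling for a 224 x 224 input
imagenet_head = dense_params(FLAT, 4096) + dense_params(4096, 4096) + dense_params(4096, 1000)
pollen_head = dense_params(FLAT, 73)  # Flatten + Dropout + Dense(73); only Dense has weights

for name, blocks in [("VGG16", VGG16_BLOCKS), ("VGG19", VGG19_BLOCKS)]:
    base = conv_base_params(blocks)
    print(name, "conv base:", base, "with ImageNet head:", base + imagenet_head)
print("ImageNet head:", imagenet_head, "73-class head:", pollen_head)
```

This gives 14,714,688 convolutional parameters for VGG16 and 20,024,384 for VGG19, against 123,642,856 in the ImageNet head, consistent with the usual totals of about 138 and 144 million; the 73-class head has 1,831,497 parameters.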

4.2.4. ResNet 50

ResNet50 is a 50-layer variant of the Residual Network (ResNet). It comprises 49 convolution layers, a max pooling layer and an average pooling layer. Every convolution block and every identity block contains three convolution layers. ResNet50 has over 23 million trainable parameters [30]. Figure 6 shows our adapted ResNet50 model used to classify POLLEN73S.

Figure 6.

ResNet50 architecture for multiclass classification.
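The layer count in the name can be checked from the standard ResNet50 stage configuration (3, 4, 6 and 3 bottleneck blocks, each with three convolutions). The sketch below assumes that configuration and, as is conventional, does not count the 1 × 1 projection convolutions on the shortcut paths. Its second part is a toy NumPy illustration of an identity shortcut, y = ReLU(F(x) + x), with a hypothetical residual branch F: if F outputs zeros, a non-negative input passes through unchanged, which is why adding residual blocks does not have to degrade a deep network.

```python
import numpy as np

# Standard ResNet50 configuration: bottleneck blocks per stage (conv2_x to conv5_x).
BLOCKS_PER_STAGE = [3, 4, 6, 3]
CONVS_PER_BLOCK = 3  # 1x1 reduce, 3x3, 1x1 expand

stem_conv = 1  # initial 7x7 convolution
conv_layers = stem_conv + CONVS_PER_BLOCK * sum(BLOCKS_PER_STAGE)
weighted_layers = conv_layers + 1  # plus the ImageNet fully connected layer
print(conv_layers, weighted_layers)  # 49 50


def residual_block(x, residual_fn):
    """y = ReLU(F(x) + x): the identity shortcut adds the input to the branch output."""
    return np.maximum(residual_fn(x) + x, 0.0)


x = np.array([0.5, 1.0, 2.0])
y = residual_block(x, lambda v: np.zeros_like(v))  # hypothetical branch that outputs zeros
print(y)  # same values as x
```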

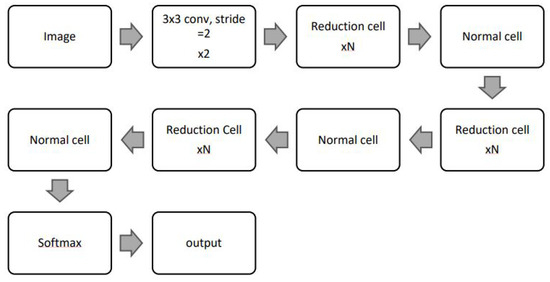

4.2.5. NASNet

The NASNetLarge model, based on the Neural Architecture Search Network (NASNet), is built from two cell types: normal cells, which return feature maps of the same spatial size as their input, and reduction cells, which halve the height and width. The default input size for NASNetLarge is 331 × 331. Details of the two cell types are shown in Figure 7.

Figure 7.

NASNetLarge architecture [31].

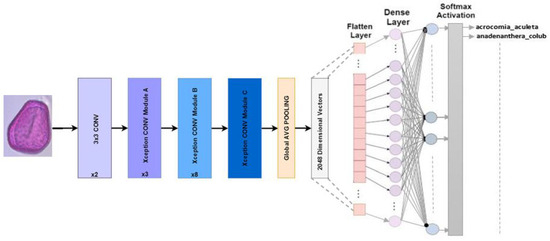

4.2.6. Xception

Chollet, the creator of the Keras library, proposed Xception as an adaptation of the Inception architecture in 2016. Xception replaces Inception modules with depthwise separable convolutions. On ImageNet, Xception exceeds InceptionV3 in both Top-1 and Top-5 accuracy, with approximately the same number of parameters (around 23 million) [30]. Our refined Xception model for POLLEN73S classification is illustrated in Figure 8.

Figure 8.

Xception architecture for multiclass classification.
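The gain from depthwise separable convolutions can be seen by counting weights: a standard k × k convolution needs k·k·C_in·C_out weights, whereas a depthwise k × k convolution followed by a 1 × 1 pointwise convolution needs k·k·C_in + C_in·C_out. The sketch below assumes k = 3 and 728 channels, the width of Xception's middle flow; biases are ignored.

```python
def standard_conv_weights(k, c_in, c_out):
    """Weights of a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_weights(k, c_in, c_out):
    """Weights of a depthwise k x k convolution followed by a 1x1 pointwise convolution."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1x1 convolution mixing the channels
    return depthwise + pointwise


k, c_in, c_out = 3, 728, 728  # 728 channels, as in Xception's middle flow
std = standard_conv_weights(k, c_in, c_out)
sep = depthwise_separable_weights(k, c_in, c_out)
print(std, sep, round(std / sep, 2))  # 4769856 536536 8.89
```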

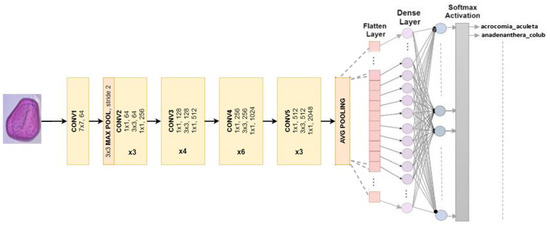

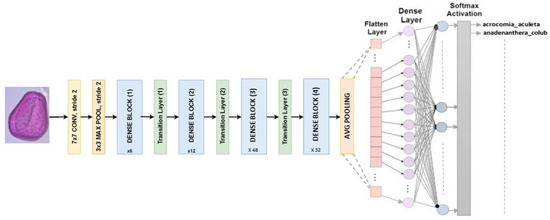

4.2.7. DenseNet 201

DenseNet201 has a fixed input size of 224 × 224 pixels for RGB images. It consists of 201 layers with over 19.9 million parameters. The network is divided into dense blocks, within which the spatial dimensions of the feature maps stay the same while the number of feature maps grows. Between dense blocks, transition layers (batch normalization, a 1 × 1 convolution and 2 × 2 average pooling) perform the downsampling [30]. In this study, our classifier replaces the last fully connected layer with softmax activation, as shown in Figure 9.

Figure 9.

DenseNet201 architecture for multiclass classification.
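The channel and layer counts of DenseNet201 follow from its standard configuration: a growth rate of 32, dense blocks of 6, 12, 48 and 32 layers, and transition layers that halve the number of channels. The sketch below assumes that configuration (as in the original DenseNet design and the Keras implementation) and counts each dense layer as two convolutions (a 1 × 1 bottleneck and a 3 × 3 convolution).

```python
# Standard DenseNet201 configuration.
GROWTH_RATE = 32                # feature maps added by each dense layer
BLOCK_LAYERS = [6, 12, 48, 32]  # dense layers per dense block
COMPRESSION = 0.5               # each transition layer halves the channel count
INITIAL_CHANNELS = 64           # output of the 7x7 stem convolution

channels = INITIAL_CHANNELS
after_block = []
for i, n_layers in enumerate(BLOCK_LAYERS):
    channels += n_layers * GROWTH_RATE  # each layer concatenates GROWTH_RATE new maps
    after_block.append(channels)
    if i < len(BLOCK_LAYERS) - 1:
        channels = int(channels * COMPRESSION)  # transition: BN, 1x1 conv, 2x2 average pooling

# Depth: two convolutions per dense layer, the stem convolution,
# three transition convolutions and the final fully connected layer.
depth = 2 * sum(BLOCK_LAYERS) + 1 + (len(BLOCK_LAYERS) - 1) + 1

print(after_block)      # [256, 512, 1792, 1920]
print(channels, depth)  # 1920 201
```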

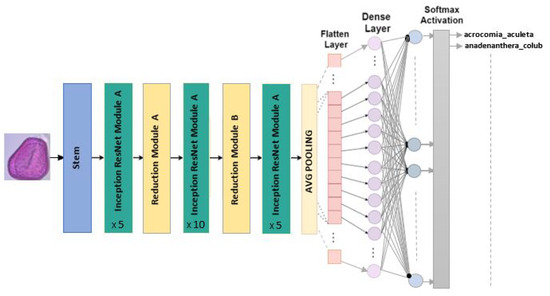

4.2.8. InceptionResNet V2

The fundamental module of this model is the residual Inception block. Each block is followed by a 1 × 1 convolution layer that scales up the dimensionality of the filter bank to match the depth of the input before the residual addition. Batch normalization is applied only on top of the traditional layers, not on top of the residual summations. InceptionResNetV2 is 164 layers deep, has an image input size of 299 × 299, and uses residual connections to combine convolutional filters of different sizes. These residual connections avoid the degradation problem associated with very deep networks and reduce training time [30]. Our refined InceptionResNetV2 model for the classification of POLLEN73S is illustrated in Figure 10.

Figure 10.

InceptionResNetV2 architecture for multiclass classification.

4.3. Holdout Cross-Validation Method

The holdout cross-validation method is a simple model validation method: the dataset is split once into two disjoint sets, the first used to train the model and the second to test its performance. Here, 80% of the data is used for training and 20% for testing [7,8].
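A minimal NumPy sketch of the 80/20 holdout split applied to the 2523 POLLEN73S images. It shuffles once with a fixed seed and is a plain random split; whether the original experiments stratified the split by class is not stated, and `holdout_split` is a hypothetical helper. Because `validation_split=0.2` (Section 4.4) is taken from the training portion, the effective proportions are roughly 64% for fitting, 16% for validation and 20% for testing.

```python
import numpy as np


def holdout_split(n_samples, test_fraction=0.2, seed=0):
    """Shuffle sample indices once and split them into disjoint train/test index arrays."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    return indices[n_test:], indices[:n_test]


train_idx, test_idx = holdout_split(2523, test_fraction=0.2)

# validation_split=0.2 is then taken from the training portion.
n_val = int(round(len(train_idx) * 0.2))
n_fit = len(train_idx) - n_val
print(n_fit, n_val, len(test_idx))  # 1614 404 505
```

A stratified split, which keeps the proportion of each of the 73 classes equal in both sets, could be obtained instead with scikit-learn's `train_test_split(..., stratify=labels)`.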

4.4. Experimental Setup

- Data augmentation: In deep learning, this is a common way to increase the amount of training data available to the model, which can improve generalization and reduce overfitting. The Keras (https://keras.io/api/ accessed on 9 November 2022) parameters we used are as follows:
  - rescale: 1./255, scales pixel values to the range [0, 1] by dividing each pixel by 255;
  - shear_range: 0.2, applies a random shear transformation to the image;
  - zoom_range: 0.2, randomly zooms the image in or out (by a factor between 0.8 and 1.2);
  - featurewise_center: True, subtracts the mean of the training images from each image;
  - featurewise_std_normalization: True, divides each image by the standard deviation of the training images;
  - rotation_range: 20, applies a random rotation of up to 20 degrees in either direction;
  - width_shift_range: 0.2, applies a random horizontal shift of up to 20% of the image width;
  - height_shift_range: 0.2, applies a random vertical shift of up to 20% of the image height;
  - horizontal_flip: True, randomly flips the image horizontally;
  - vertical_flip: True, randomly flips the image vertically;
  - validation_split: 0.2, keeps 80% of the training data for training and reserves 20% for validation.

- Dropout layers: Connecting all features directly to the fully connected (FC) layer can cause overfitting to the training dataset. Overfitting occurs when a model fits the training data too closely, which degrades its performance on new data. To mitigate this, a dropout layer randomly deactivates a fraction of the neurons at each training step, reducing the effective complexity of the model. For ResNet50, a dropout rate of 0.7 is used, meaning that 70% of the units of the dropout layer are randomly dropped at each training step.

- Fine-tuning: This relies on transfer learning, a machine learning methodology in which knowledge gained while training on one problem is reused to train on a related task or domain. In transfer learning, the final layers of the pretrained network are removed, and new layers are trained for the intended task. For InceptionResNetV2, this process was combined with data augmentation, and the batch size was changed from 12 to 16. The top layer of this model was truncated and replaced by a new fully connected softmax layer. Fine-tuning used the Adam (adaptive moment estimation) optimizer [32] with a learning rate of 0.001.

- Hyperparameters: These are parameters whose values control the learning process. They were selected using grid search and random search, which evaluate candidate combinations of hyperparameter values and keep the best-performing ones for each CNN model. The hyperparameters used in the experimental setup are listed in Table 1.
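The augmentation settings above can be collected into a single dictionary of keyword arguments for Keras's `ImageDataGenerator`. The sketch assumes `rotation_range` was given as 20 degrees (Keras interprets this argument in degrees) and only builds the configuration, so it runs without TensorFlow; `zoom_interval` is a hypothetical helper that shows how Keras reads a float `zoom_range`.

```python
# Augmentation settings of Section 4.4 as keyword arguments for Keras's ImageDataGenerator.
AUGMENTATION_PARAMS = {
    "rescale": 1.0 / 255,                  # pixel values to [0, 1]
    "shear_range": 0.2,
    "zoom_range": 0.2,                     # zoom factor drawn from [0.8, 1.2]
    "featurewise_center": True,            # needs statistics from fit() on training images
    "featurewise_std_normalization": True,
    "rotation_range": 20,                  # assumed 20 degrees: rotation drawn from [-20, 20]
    "width_shift_range": 0.2,              # fraction of the image width
    "height_shift_range": 0.2,             # fraction of the image height
    "horizontal_flip": True,
    "vertical_flip": True,
    "validation_split": 0.2,               # 20% of the training data held out for validation
}


def zoom_interval(zoom_range):
    """Hypothetical helper: Keras reads a float zoom_range z as the interval [1 - z, 1 + z]."""
    return (1.0 - zoom_range, 1.0 + zoom_range)


print(zoom_interval(AUGMENTATION_PARAMS["zoom_range"]))
```

The dictionary would be passed as `tf.keras.preprocessing.image.ImageDataGenerator(**AUGMENTATION_PARAMS)`; because `featurewise_center` and `featurewise_std_normalization` are enabled, the generator must first be fitted on a sample of training images with its `fit` method. The TensorFlow documentation marks `ImageDataGenerator` as not recommended for new code, in favor of Keras preprocessing layers.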

Table 1. Hyperparameters for different models.


4.5. Performance Evaluation Measures

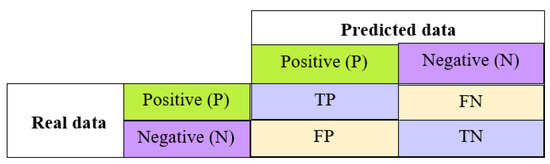

The confusion matrix, precision, recall, F1-score and accuracy are the standard measures used to evaluate classification models.

- Confusion matrix: This gives a comprehensive view of the model's performance through four counts: true positives (TP), false positives (FP), false negatives (FN) and true negatives (TN). They are arranged in a table whose rows represent the actual classes and whose columns represent the predicted classes (see Figure 11). For a multiclass problem such as POLLEN73S, the matrix has one row and one column per class, and TP, FP, FN and TN are computed for each class by treating it as the positive class and all other classes as negative.

Figure 11. Confusion matrix.

- Precision: This is the proportion of correctly identified cases among all cases predicted as positive, TP / (TP + FP).

- Recall: This is the proportion of actual positive cases that are correctly identified, TP / (TP + FN). It measures a model's ability to find all positive cases.

- F1-score: This is the harmonic mean of precision and recall, 2 × Precision × Recall / (Precision + Recall). It provides a compromise between the two.

- Accuracy: This is the ratio of correct predictions to the total number of observations (total input samples), (TP + TN) / (TP + TN + FP + FN) in the binary case. However, this measure can be misleading when the classes are imbalanced.
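The sketch below computes these measures from a multiclass confusion matrix with NumPy, using one-vs-rest counts per class. The paper does not state how per-class precision, recall and F1-score are averaged over the 73 classes; macro averaging (the unweighted mean over classes) is assumed here, and the six toy labels are invented.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows = actual classes, columns = predicted classes (as in Figure 11)."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm


def macro_metrics(cm):
    """Macro-averaged precision, recall and F1-score, plus accuracy, from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as class c, actually another class
    fn = cm.sum(axis=1) - tp  # actually class c, predicted as another class
    with np.errstate(divide="ignore", invalid="ignore"):
        precision = np.where(tp + fp > 0, tp / (tp + fp), 0.0)
        recall = np.where(tp + fn > 0, tp / (tp + fn), 0.0)
        f1 = np.where(precision + recall > 0,
                      2 * precision * recall / (precision + recall), 0.0)
    accuracy = tp.sum() / cm.sum()
    return precision.mean(), recall.mean(), f1.mean(), accuracy


# Toy example with 3 classes and 6 invented predictions.
cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
precision, recall, f1, accuracy = macro_metrics(cm)
print(cm)
print(round(precision, 4), round(recall, 4), round(f1, 4), round(accuracy, 4))
```

A weighted average (each class weighted by its number of true samples) would give slightly different values; on a nearly balanced dataset such as POLLEN73S the two are expected to be close.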

5. Results and Discussion

5.1. Architectural Performance Summary Table

Table 2 shows the different results obtained by the eight models.

Table 2.

Performance summary of the different architectures used.

We achieved the highest precision and accuracy, 97.5545% and 97.202%, using the DenseNet201 architecture with a batch size of 16. DenseNet201, InceptionResNetV2 and ResNet50 performed best with a batch size of 16; VGG19, VGG16 and Xception with a batch size of 8; and InceptionV3 and NASNet with batch sizes of 4 and 12, respectively. Notably, DenseNet201 achieved an F1-score of 97.26%, indicating a good balance between precision and recall, together with an accuracy of 97.217%. ResNet50's results diverged from those of DenseNet201, with a precision 2.50% lower, and were closer to those of InceptionV3, with a difference of 0.818% in precision. VGG19 gave the least favorable results of all the CNNs, whereas InceptionResNetV2, VGG16, NASNet and Xception produced comparable results. In Table 2, the best value of each metric is highlighted in bold. The accuracies reached by DenseNet201 and ResNet50, 97.217% and 94.257%, respectively, are significant given the diversity of pollen types in POLLEN73S. For example, Khanzhina et al. [33] achieved 99% accuracy on a dataset of 5 pollen types, which dropped to 95% when the dataset was expanded to 11 pollen types, showing how hard it is to maintain high accuracy as the number of pollen types grows. By comparison, Sevillano and Aznarte [16] used three CNNs to classify the 23 pollen types of the POLEN23E dataset [20] and achieved an accuracy of 97%. POLEN23E contains 23 pollen grain types, 22 of which are also present in POLLEN73S. This comparison indicates that the performance of DenseNet201 and ResNet50 on POLLEN73S is satisfactory.

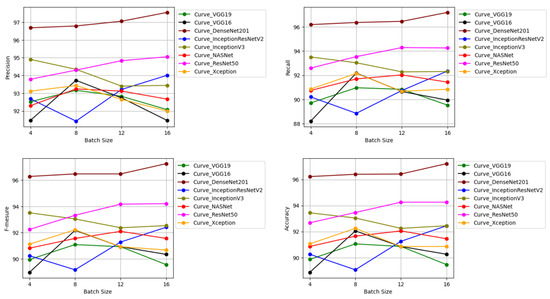

5.2. CNN Architectures’ Performances

Figure 12 depicts CNN architectures’ performances.

Figure 12.

Performances of the eight architectures in terms of (top left) precision, (top right) recall, (bottom left) F1-score and (bottom right) accuracy.

For all evaluation measures, the DenseNet201 architecture outperforms the other architectures at every batch size.

5.3. Performance Comparison of CNN Architectures for Pollen Grain Classification: Current Results Compared to [4]

Table 3 summarizes the POLLEN73S classification results obtained with two methods: the holdout cross-validation method (current work), in the first column of each metric, and the five-fold cross-validation method of [4], in the second column of each metric.

Table 3.

Comparison between the current results and the results of [4] for the eight architectures (P = Precision, R = Recall, F = F1-score, A = Accuracy, Aut = Authors [4]).

Performance graphs for the eight architectures are displayed in Appendix A, and graphs of the POLLEN73S class probabilities predicted for a pollen grain by the best-performing architecture (DenseNet201) are displayed in Appendix B.

6. Conclusions

The aim of this work was to classify pollen grains from the annotated image dataset POLLEN73S and to improve the performance of eight state-of-the-art convolutional neural networks (CNNs), already used in existing work, for classifying pollen grains from the Brazilian savannah (Cerrado).

We used the holdout cross-validation method with tuned hyperparameters and techniques such as dropout, data augmentation and fine-tuning, in contrast to the five-fold cross-validation method used in previous work. The DenseNet201 and ResNet50 architectures outperformed the other CNNs tested, achieving accuracies of 97.217% and 94.257%, respectively, which exceed the best results previously reported [4] by 1.517 and 0.257 percentage points.

In future work, we plan to explore CNN hyperparameters further. We also intend to investigate the VGG19 architecture in more depth in order to design a convolutional architecture specific to the POLLEN73S dataset, because even with the architecture defined for the ImageNet dataset, it performed reasonably well in the present work. Additionally, we plan to combine different CNNs to further improve performance.

Author Contributions

Conceptualization, B.G., H.A. and L.B.; methodology, B.G. and H.A.; software, B.G., R.S.S. and D.L.L.G.; validation, B.G., H.A. and L.B.; formal analysis, B.G. and H.A.; investigation, B.G. and H.A.; resources, B.G. and H.A.; data curation, B.G.; writing—original draft preparation, B.G., H.A., R.S.S. and D.L.L.G.; writing—review and editing, H.A. and L.B.; visualization, B.G., H.A. and L.B.; supervision, H.A. and L.B.; project administration, H.A. and L.B.; funding acquisition, H.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this work are available from Astolfi et al. [4].

Acknowledgments

The authors thank the Academic Editor and the anonymous Reviewers for their comments and suggestions, which helped improve the manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

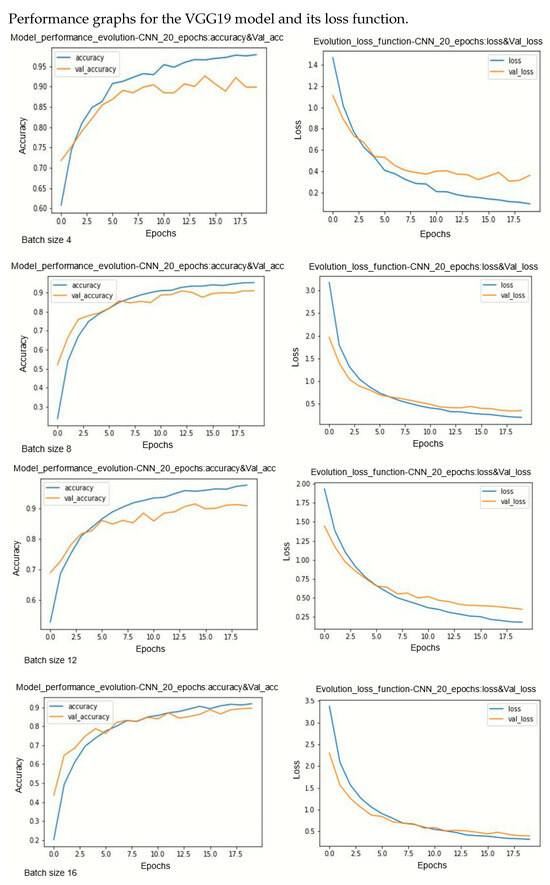

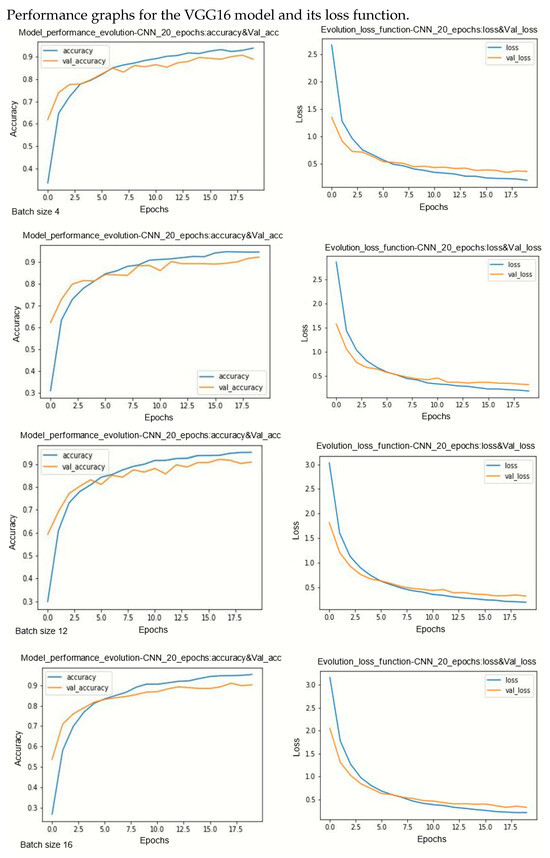

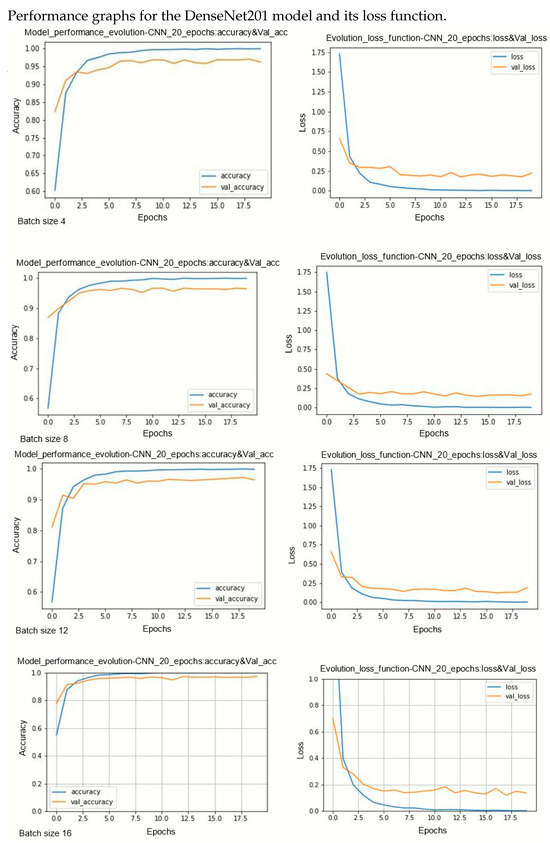

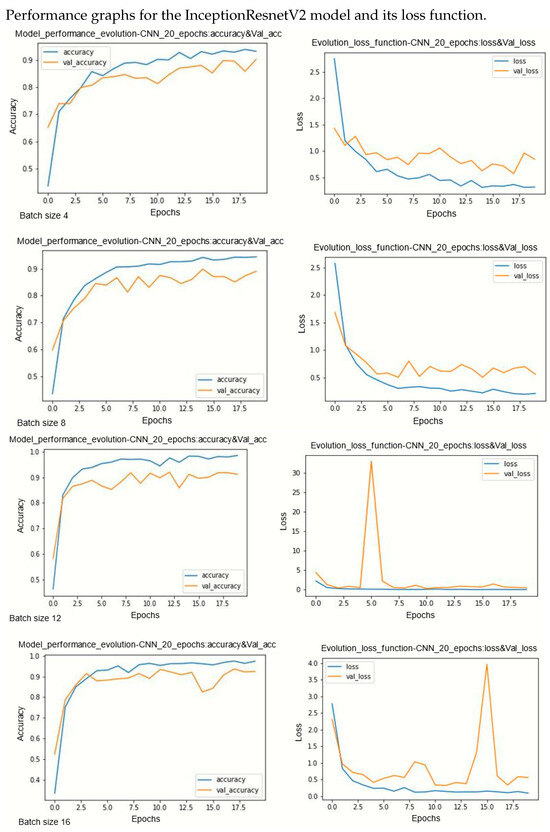

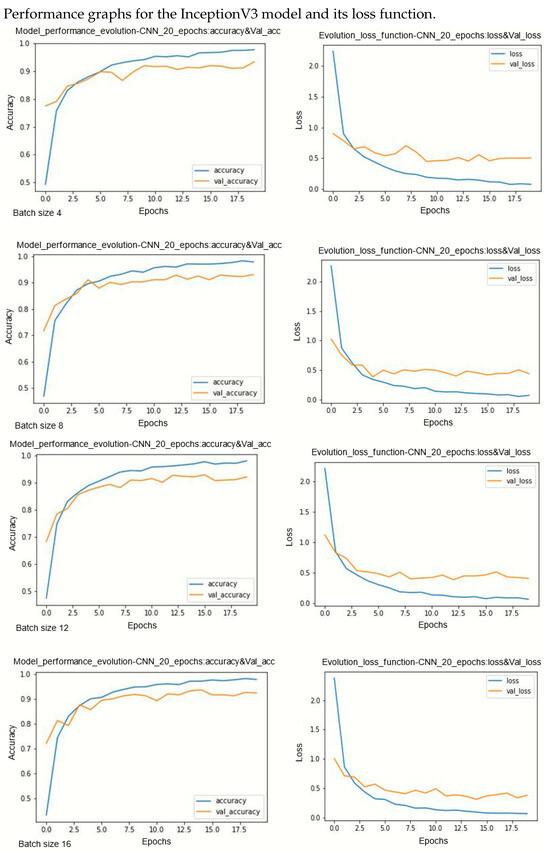

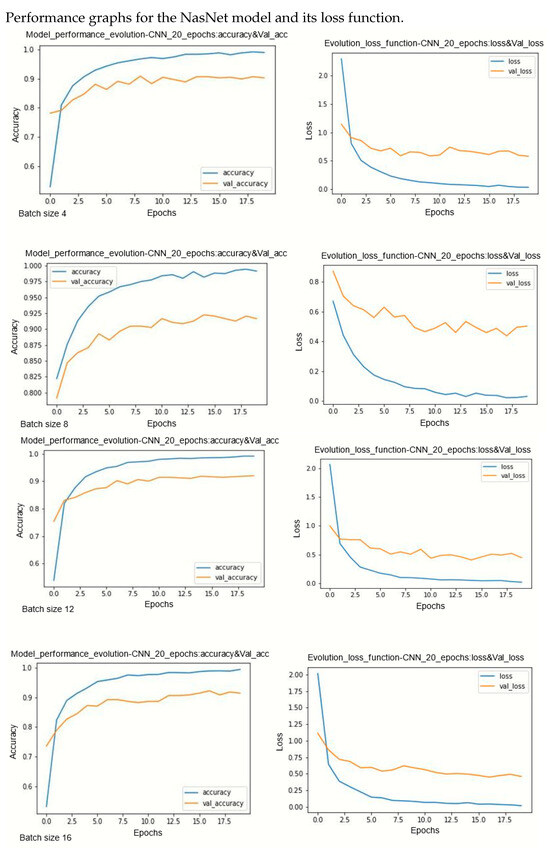

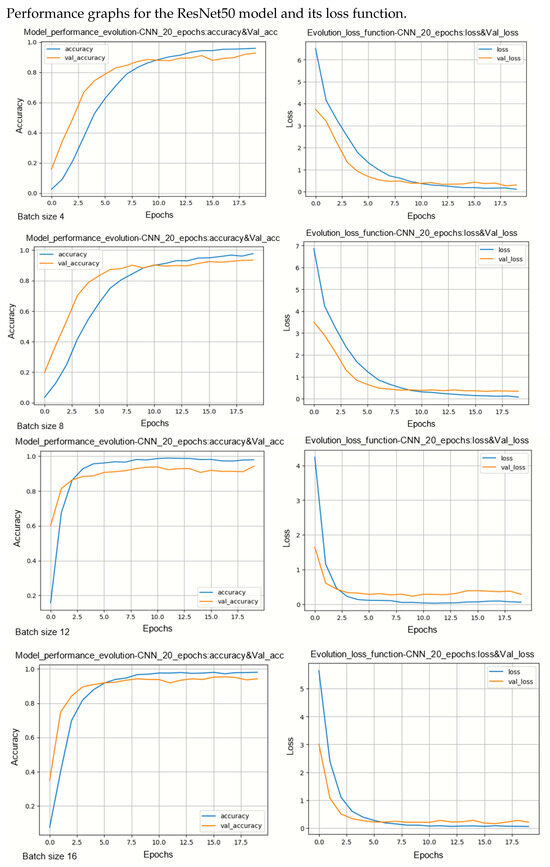

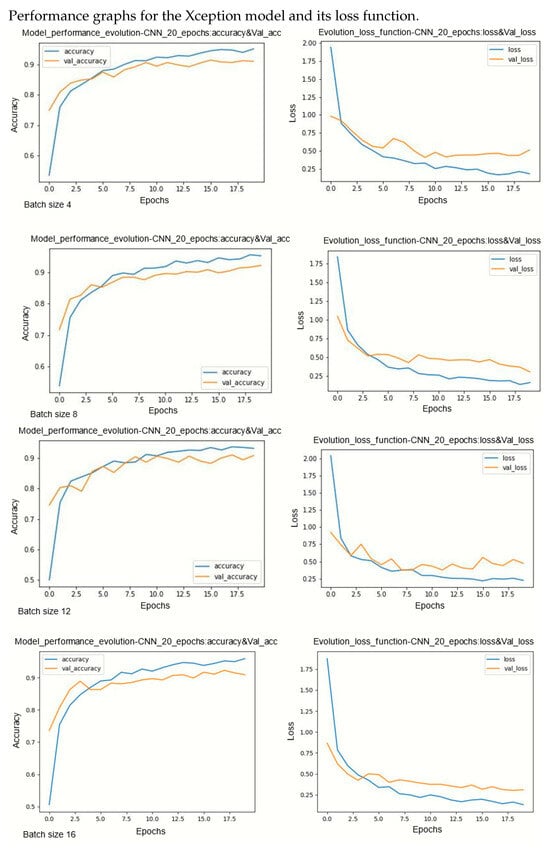

Appendix A. Performance Graphs for the Eight Architectures

This appendix illustrates the training and validation accuracy and loss curves of the models detailed in Section 4.2 (VGG19, VGG16, DenseNet201, InceptionResNetV2, InceptionV3, NASNet, ResNet50 and Xception) with batch sizes of 4, 8, 12 and 16. These results were recorded during the training and testing phases over 20 epochs. All architectures were trained with accuracy as the overall evaluation metric.

Two main problems are often encountered during model training: underfitting and overfitting. Underfitting occurs when both the training and validation losses remain high, indicating that the model is not learning effectively from the training data. Overfitting occurs when the validation loss starts to exceed the training loss and diverges from it as the number of epochs increases. The loss curves show how the model progresses toward its target across epochs, with lower loss values indicating a better-fitting model. Inspection of the curves suggests that the models are not strongly affected by either underfitting or overfitting.
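The overfitting pattern described above can be expressed as a simple check on the recorded loss curves. The function below is a hypothetical heuristic, not the procedure used to produce Figures A1 to A8: it finds the epoch with the lowest validation loss and flags the case in which validation loss ends above that minimum while training loss keeps decreasing.

```python
def diagnose_loss_curves(train_loss, val_loss):
    """Summarize per-epoch training and validation losses.

    Returns the 1-based epoch with the lowest validation loss, whether the
    overfitting pattern is present (validation loss ends above its minimum
    while training loss keeps falling after that epoch), and the final gap
    between validation and training loss.
    """
    best = min(range(len(val_loss)), key=val_loss.__getitem__)
    val_rises_after_best = val_loss[-1] > val_loss[best]
    train_keeps_falling = train_loss[-1] < train_loss[best]
    return {
        "best_epoch": best + 1,
        "overfitting_pattern": val_rises_after_best and train_keeps_falling,
        "final_gap": val_loss[-1] - train_loss[-1],
    }


# Invented 5-epoch curves: validation loss bottoms out at epoch 3, then rises.
print(diagnose_loss_curves([1.0, 0.6, 0.4, 0.3, 0.2], [1.1, 0.7, 0.5, 0.55, 0.65]))
```

The epoch of minimum validation loss is also the point at which an early-stopping rule would keep the model weights.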

Figure A1.

Performance evolution and losses of VGG19.

Figure A2.

Performance evolution and losses of VGG16.

Figure A3.

Performance evolution and losses of DenseNet201.

Figure A4.

Performance evolution and losses of InceptionResNetV2.

Figure A5.

Performance evolution and losses of InceptionV3.

Figure A6.

Performance evolution and losses of NASNet.

Figure A7.

Performance evolution and losses of ResNet50.

Figure A8.

Performance evolution and losses of Xception.

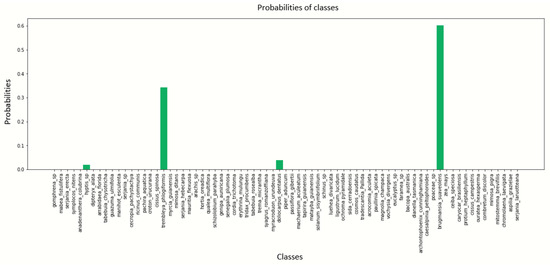

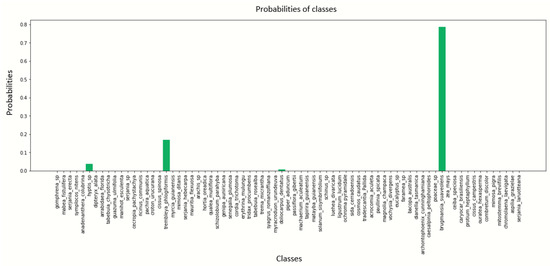

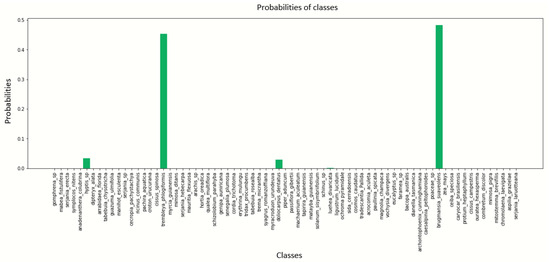

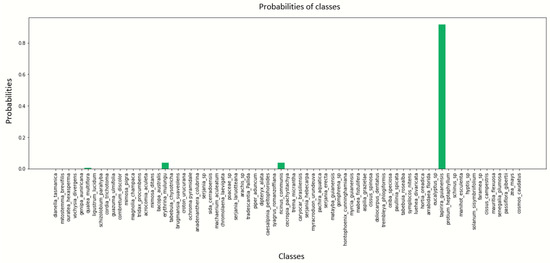

Appendix B. Graphs of POLLEN73S Class Probabilities When Predicting a Pollen Grain Using the Best-Performing Architecture (DenseNet201)

Appendix B illustrates the per-class prediction probabilities produced by the best-performing architecture, DenseNet201, trained on POLLEN73S for 20 epochs with batch sizes of 4, 8, 12 and 16. Figure A9 shows the input image, and Figures A10, A11, A12 and A13 show the predictions obtained with each batch size.
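Each probability chart can be read as the output of a softmax layer over the POLLEN73S classes, assuming (as is standard for multi-class CNN classifiers) that the network's final layer produces raw scores that are normalized with softmax. The sketch below converts such scores into probabilities, ranks the classes, and compares the top-1 prediction across models trained with different batch sizes. The class names and score values in the usage example are hypothetical placeholders, not values read from Figures A10 to A13.

```python
import math
from typing import Dict, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(probs: Sequence[float], class_names: Sequence[str],
          k: int = 3) -> List[Tuple[str, float]]:
    """Return the k most probable classes, highest probability first."""
    ranked = sorted(zip(class_names, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def compare_runs(logits_by_batch: Dict[int, Sequence[float]],
                 class_names: Sequence[str]) -> Dict[int, Tuple[str, float]]:
    """Top-1 class and its probability for each batch-size model."""
    return {bs: top_k(softmax(z), class_names, k=1)[0]
            for bs, z in logits_by_batch.items()}


if __name__ == "__main__":
    names = ["class_a", "class_b", "class_c"]           # hypothetical labels
    runs = {4: [0.2, 3.1, 1.0], 16: [0.4, 2.7, 1.9]}    # hypothetical scores
    for bs, (label, p) in compare_runs(runs, names).items():
        print(f"batch={bs}: {label} ({p:.3f})")
```

Comparing the top-1 label and its probability across batch sizes gives a compact summary of how stable the prediction for a single grain is.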

Figure A9.

Image to be classified (source: https://gapp.org/your-pollinator-garden-online/, accessed on 10 November 2023).

Figure A10.

Pollen image prediction using the DenseNet201 architecture with a batch size of 4.

Figure A11.

Pollen image prediction using the DenseNet201 architecture with a batch size of 8.

Figure A12.

Pollen image prediction using the DenseNet201 architecture with a batch size of 12.

Figure A13.

Pollen image prediction using the DenseNet201 architecture with a batch size of 16.

References

- Potts, S.G.; Biesmeijer, J.C.; Kremen, C.; Neumann, P.; Schweiger, O.; Kunin, W.E. Global pollinator declines: Trends, impacts and drivers. Trends Ecol. Evol. 2010, 25, 345–353.

- Demers, I. État des Connaissances sur le Pollen et les Allergies; desLibris, Institut national de santé publique du Québec: Québec, QC, Canada, 2013.

- Girard, M. La Mélissopalynologie: L’étude des Pollens dans le Miel; Université Laval: Québec, QC, Canada, 2014.

- Astolfi, G.; Goncalves, A.B.; Menezes, G.V.; Borges, F.S.B.; Astolfi, A.C.M.N.; Matsubara, E.T.; Alvarez, M.; Pistori, H. POLLEN73S: An image dataset for pollen grains classification. Ecol. Inform. 2020, 60, 101165.

- Bianco, S.; Cadene, R.; Celona, L.; Napoletano, P. Benchmark analysis of representative deep neural network architectures. IEEE Access 2018, 6, 64270–64277.

- Li, C.; Polling, M.; Cao, L.; Gravendeel, B.; Verbeek, F.J. Analysis of automatic image classification methods for Urticaceae pollen classification. Neurocomputing 2023, 522, 181–193.

- Bates, S.; Hastie, T.; Tibshirani, R. Cross-validation: What does it estimate and how well does it do it? J. Am. Stat. Assoc. 2023, 119, 1–12.

- Kee, E.; Chong, J.J.; Choong, Z.J.; Lau, M. A comparative analysis of cross-validation techniques for a smart and lean pick-and-place solution with deep learning. Electronics 2023, 12, 2371.

- Daood, A.; Ribeiro, E.; Bush, M. Pollen grain recognition using deep learning. In Proceedings of the Advances in Visual Computing: 12th International Symposium, ISVC 2016, Las Vegas, NV, USA, 12–14 December 2016; Proceedings, Part I 12. Springer: Berlin/Heidelberg, Germany, 2016; pp. 321–330.

- Sevillano, V.; Holt, K.; Aznarte, J.L. Precise automatic classification of 46 different pollen types with convolutional neural networks. PLoS ONE 2020, 15, e0229751.

- Battiato, S.; Ortis, A.; Trenta, F.; Ascari, L.; Politi, M.; Siniscalco, C. Detection and classification of pollen grain microscope images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 980–981.

- Treloar, W.; Taylor, G.; Flenley, J. Towards automation of palynology 1: Analysis of pollen shape and ornamentation using simple geometric measures, derived from scanning electron microscope images. J. Quat. Sci. Publ. Quat. Res. Assoc. 2004, 19, 745–754.

- Travieso, C.M.; Briceño, J.C.; Ticay-Rivas, J.R.; Alonso, J.B. Pollen classification based on contour features. In Proceedings of the 2011 15th IEEE International Conference on Intelligent Engineering Systems, Poprad, Slovakia, 23–25 June 2011; pp. 17–21.

- García, N.M.; Chaves, V.A.E.; Briceño, J.C.; Travieso, C.M. Pollen Grains Contour Analysis on Verification Approach. In Proceedings of the Hybrid Artificial Intelligent Systems, Salamanca, Spain, 8–12 March 2012; Corchado, E., Snášel, V., Abraham, A., Woźniak, M., Graña, M., Cho, S.B., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 521–532.

- Ticay-Rivas, J.R.; del Pozo-Baños, M.; Travieso, C.M.; Arroyo-Hernández, J.; Pérez, S.T.; Alonso, J.B.; Mora-Mora, F. Pollen Classification Based on Geometrical, Descriptors and Colour Features Using Decorrelation Stretching Method. In Proceedings of the Artificial Intelligence Applications and Innovations, Wroclaw, Poland, 23–25 May 2011; Iliadis, L., Maglogiannis, I., Papadopoulos, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 342–349.

- Sevillano, V.; Aznarte, J.L. Improving classification of pollen grain images of the POLEN23E dataset through three different applications of deep learning convolutional neural networks. PLoS ONE 2018, 13, e0201807.

- Polling, M.; Li, C.; Cao, L.; Verbeek, F.; de Weger, L.A.; Belmonte, J.; De Linares, C.; Willemse, J.; de Boer, H.; Gravendeel, B. Neural networks for increased accuracy of allergenic pollen monitoring. Sci. Rep. 2021, 11, 11357.

- Gallardo, R.; García-Orellana, C.J.; González-Velasco, H.M.; García-Manso, A.; Tormo-Molina, R.; Macías-Macías, M.; Abengózar, E. Automated multifocus pollen detection using deep learning. Multimed. Tools Appl. 2024, 1–16.

- Gallardo-Caballero, R.; García-Orellana, C.J.; García-Manso, A.; González-Velasco, H.M.; Tormo-Molina, R.; Macías-Macías, M. Precise pollen grain detection in bright field microscopy using deep learning techniques. Sensors 2019, 19, 3583.

- Gonçalves, A.B.; Souza, J.S.; Silva, G.G.d.; Cereda, M.P.; Pott, A.; Naka, M.H.; Pistori, H. Feature extraction and machine learning for the classification of Brazilian Savannah pollen grains. PLoS ONE 2016, 11, e0157044.

- Manikis, G.C.; Marias, K.; Alissandrakis, E.; Perrotto, L.; Savvidaki, E.; Vidakis, N. Pollen grain classification using geometrical and textural features. In Proceedings of the 2019 IEEE International Conference on Imaging Systems and Techniques (IST), Abu Dhabi, UAE, 8–10 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–6.

- del Pozo-Banos, M.; Ticay-Rivas, J.R.; Alonso, J.B.; Travieso, C.M. Features extraction techniques for pollen grain classification. Neurocomputing 2015, 150, 377–391.

- Marcos, J.V.; Nava, R.; Cristóbal, G.; Redondo, R.; Escalante-Ramírez, B.; Bueno, G.; Déniz, Ó.; González-Porto, A.; Pardo, C.; Chung, F.; et al. Automated pollen identification using microscopic imaging and texture analysis. Micron 2015, 68, 36–46.

- Djoulde, K.; Ousman, B.; Abboubakar, H.; Bitjoka, L.; Tchiegang, C. Classification of Pepper Seeds by Machine Learning Using Color Filter Array Images. J. Imaging 2024, 10, 41.

- Chen, X.; Ju, F. Automatic classification of pollen grain microscope images using a multi-scale classifier with SRGAN deblurring. Appl. Sci. 2022, 12, 7126.

- Kubera, E.; Kubik-Komar, A.; Kurasiński, P.; Piotrowska-Weryszko, K.; Skrzypiec, M. Detection and recognition of pollen grains in multilabel microscopic images. Sensors 2022, 22, 2690.

- Minowa, Y.; Shigematsu, K.; Takahara, H. A deep learning-based model for tree species identification using pollen grain images. Appl. Sci. 2022, 12, 12626.

- Tsiknakis, N.; Savvidaki, E.; Manikis, G.C.; Gotsiou, P.; Remoundou, I.; Marias, K.; Alissandrakis, E.; Vidakis, N. Pollen grain classification based on ensemble transfer learning on the Cretan Pollen Dataset. Plants 2022, 11, 919.

- Viertel, P.; König, M. Pattern recognition methodologies for pollen grain image classification: A survey. Mach. Vis. Appl. 2022, 33, 18.

- Shazia, A.; Xuan, T.Z.; Chuah, J.H.; Usman, J.; Qian, P.; Lai, K.W. A comparative study of multiple neural network for detection of COVID-19 on chest X-ray. EURASIP J. Adv. Signal Process. 2021, 2021, 50.

- Albahli, S.; Albattah, W. Detection of coronavirus disease from X-ray images using deep learning and transfer learning algorithms. J. X-ray Sci. Technol. 2020, 28, 841–850.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Khanzhina, N.; Putin, E.; Filchenkov, A.; Zamyatina, E. Pollen grain recognition using convolutional neural network. In Proceedings of the ESANN, Bruges, Belgium, 25–27 April 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).