FishSegSSL: A Semi-Supervised Semantic Segmentation Framework for Fish-Eye Images

Abstract

1. Introduction

- We present a comprehensive study on fish-eye image segmentation by adopting conventional semi-supervised learning (SSL) methods from the perspective image domain. Our study shows that such methods perform suboptimally because they were not originally designed for fish-eye images.

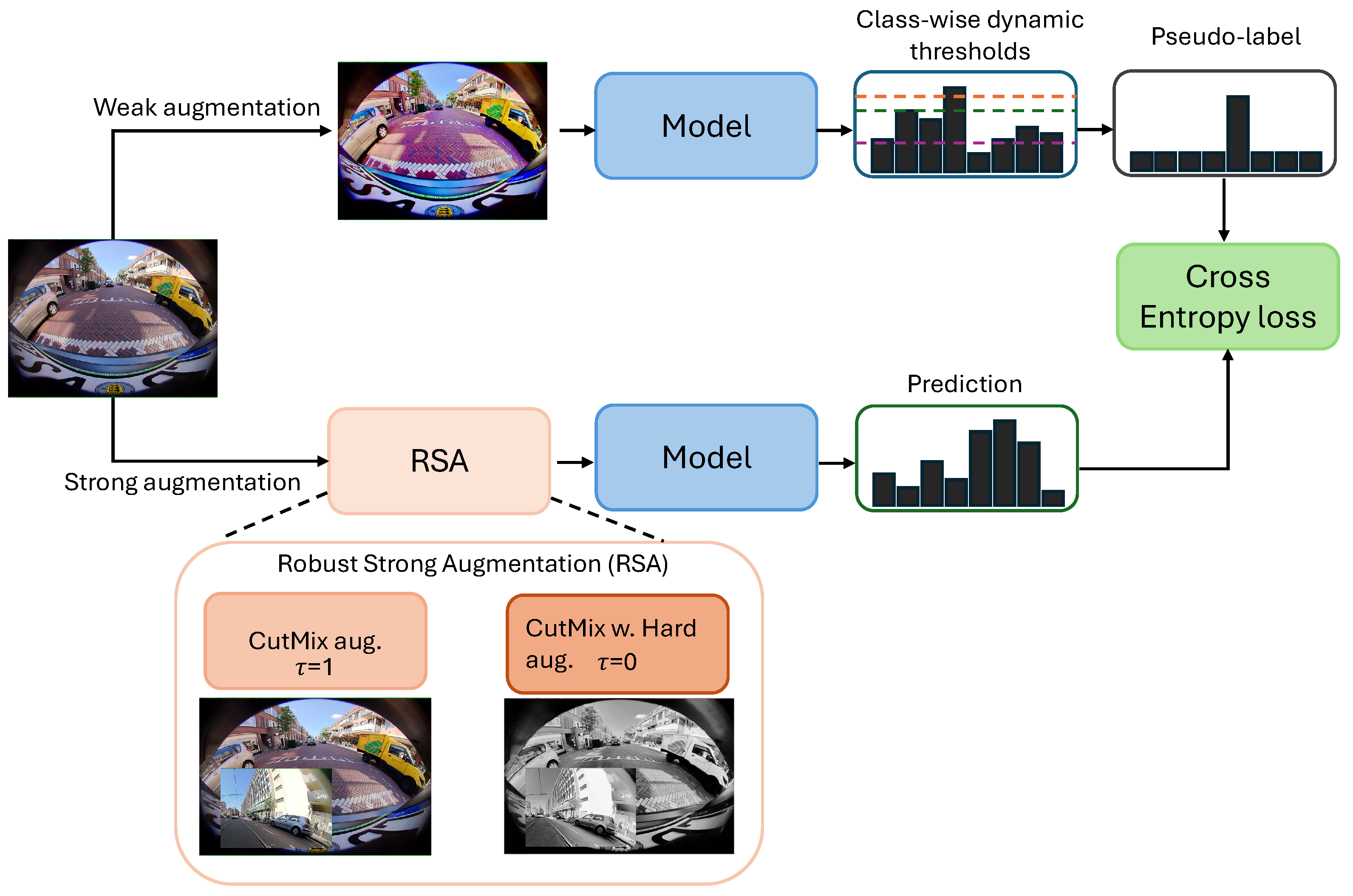

- We propose a new SSL-based semantic segmentation method that incorporates three key concepts: pseudo-label filtering to reduce noisy pseudo-labels, dynamic thresholding for adaptive filtering based on class difficulty, and robust strong augmentation as a regularizer on easy samples.

- This is the first work on semi-supervised fish-eye image segmentation that is uni-modal and single-task.

- We perform an extensive experimental analysis with a detailed ablation and sensitivity study on the WoodScape dataset, showing a 10.49 percentage-point mIoU improvement (from 54.32 to 64.81) over fully supervised learning with the same amount of labeled data and demonstrating the efficacy of the introduced method.

- We make the code available at: https://github.com/snehaputul/FishSegSSL (accessed on 15 January 2024).
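
As a rough illustration of the first two ideas in the list above (pseudo-label filtering and class-dependent thresholds), the sketch below drops low-confidence pixels from a pseudo-label map and lowers the threshold for classes that appear harder. The per-class scaling rule (in the spirit of curriculum-style thresholding such as FlexMatch), the `IGNORE` marker, the `learned_fraction` statistic, and all function names are assumptions made for illustration; they are not taken from the paper's implementation.

```python
from typing import Dict, List

IGNORE = -1  # hypothetical marker: pixel is excluded from the unsupervised loss


def class_thresholds(base: float, learned_fraction: Dict[int, float]) -> Dict[int, float]:
    """Scale a base threshold per class (assumed rule, not the paper's).

    learned_fraction[c] is an assumed difficulty proxy: the share of pixels of
    class c that the model already predicts confidently. Harder classes (lower
    share) receive a lower threshold so they are not filtered out entirely.
    """
    top = max(learned_fraction.values()) or 1.0  # avoid division by zero
    return {c: base * (f / top) for c, f in learned_fraction.items()}


def filter_pseudo_labels(probs: List[List[float]], thresholds: Dict[int, float]) -> List[int]:
    """Keep the argmax class of each pixel only if its confidence passes
    the threshold of that class; otherwise mark the pixel as IGNORE."""
    labels = []
    for p in probs:
        cls = max(range(len(p)), key=p.__getitem__)  # argmax over classes
        labels.append(cls if p[cls] >= thresholds[cls] else IGNORE)
    return labels


# Four pixels, two classes; class 1 is treated as the harder class.
probs = [[0.9, 0.1], [0.55, 0.45], [0.3, 0.7], [0.48, 0.52]]
thresholds = class_thresholds(base=0.9, learned_fraction={0: 0.8, 1: 0.4})
filtered = filter_pseudo_labels(probs, thresholds)
print(filtered)  # [0, -1, 1, 1]
```

With a single fixed threshold of 0.9, the last two pixels would also be discarded; the class-dependent threshold keeps them because class 1 is assumed to be harder.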

2. Related Works

2.1. Perspective Image Segmentation

2.2. Fish-Eye Image Segmentation

2.3. Segmentation for Autonomous Driving

3. Method

3.1. Preliminaries

3.2. Proposed Method

3.2.1. Pseudo-Label Filtering

3.2.2. Dynamic Confidence Thresholding

3.2.3. Robust Strong Augmentation

4. Experiments

4.1. Dataset and Implementation Details

4.2. Baseline

4.3. Main Results

4.4. Ablation Study

4.5. Sensitivity Study

4.5.1. Hyperparameter Sensitivity

4.5.2. Confidence Thresholding

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yogamani, S.; Hughes, C.; Horgan, J.; Sistu, G.; Varley, P.; O’Dea, D.; Uricár, M.; Milz, S.; Simon, M.; Amende, K.; et al. Woodscape: A multi-task, multi-camera fisheye dataset for autonomous driving. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9308–9318.

- Talaoubrid, H.; Vert, M.; Hayat, K.; Magnier, B. Human tracking in top-view fisheye images: Analysis of familiar similarity measures via hog and against various color spaces. J. Imaging 2022, 8, 115.

- Deng, L.; Yang, M.; Qian, Y.; Wang, C.; Wang, B. CNN based semantic segmentation for urban traffic scenes using fisheye camera. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 231–236.

- Kumar, V.R.; Klingner, M.; Yogamani, S.; Milz, S.; Fingscheidt, T.; Mäder, P. Syndistnet: Self-supervised monocular fisheye camera distance estimation synergized with semantic segmentation for autonomous driving. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 61–71.

- Shi, H.; Li, Y.; Yang, K.; Zhang, J.; Peng, K.; Roitberg, A.; Ye, Y.; Ni, H.; Wang, K.; Stiefelhagen, R. FishDreamer: Towards Fisheye Semantic Completion via Unified Image Outpainting and Segmentation. arXiv 2023, arXiv:2303.13842.

- Khayretdinova, G.; Apprato, D.; Gout, C. A Level Set-Based Model for Image Segmentation under Geometric Constraints and Data Approximation. J. Imaging 2023, 10, 2.

- Apud Baca, J.G.; Jantos, T.; Theuermann, M.; Hamdad, M.A.; Steinbrener, J.; Weiss, S.; Almer, A.; Perko, R. Automated Data Annotation for 6-DoF AI-Based Navigation Algorithm Development. J. Imaging 2021, 7, 236.

- Feng, D.; Haase-Schütz, C.; Rosenbaum, L.; Hertlein, H.; Glaeser, C.; Timm, F.; Wiesbeck, W.; Dietmayer, K. Deep multi-modal object detection and semantic segmentation for autonomous driving: Datasets, methods, and challenges. IEEE Trans. Intell. Transp. Syst. 2020, 22, 1341–1360.

- Peng, X.; Wang, K.; Zhang, Z.; Geng, N.; Zhang, Z. A Point-Cloud Segmentation Network Based on SqueezeNet and Time Series for Plants. J. Imaging 2023, 9, 258.

- Valada, A.; Oliveira, G.L.; Brox, T.; Burgard, W. Deep multispectral semantic scene understanding of forested environments using multimodal fusion. In Proceedings of the 2016 International Symposium on Experimental Robotics, Tokyo, Japan, 3–6 October 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 465–477.

- Sun, Y.; Zuo, W.; Liu, M. RTFNet: RGB-thermal fusion network for semantic segmentation of urban scenes. IEEE Robot. Autom. Lett. 2019, 4, 2576–2583.

- Paul, S.; Patterson, Z.; Bouguila, N. Semantic Segmentation Using Transfer Learning on Fisheye Images. In Proceedings of the 22nd IEEE International Conference on Machine Learning and Applications (ICMLA), Jacksonville, FL, USA, 15–17 December 2023; pp. 1–8.

- Ye, Y.; Yang, K.; Xiang, K.; Wang, J.; Wang, K. Universal semantic segmentation for fisheye urban driving images. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; pp. 648–655.

- Blott, G.; Takami, M.; Heipke, C. Semantic segmentation of fisheye images. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Playout, C.; Ahmad, O.; Lecue, F.; Cheriet, F. Adaptable deformable convolutions for semantic segmentation of fisheye images in autonomous driving systems. arXiv 2021, arXiv:2102.10191.

- Sáez, Á.; Bergasa, L.M.; López-Guillén, E.; Romera, E.; Tradacete, M.; Gómez-Huélamo, C.; Del Egido, J. Real-time semantic segmentation for fisheye urban driving images based on ERFNet. Sensors 2019, 19, 503.

- Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. Adv. Neural Inf. Process. Syst. 2017, 30.

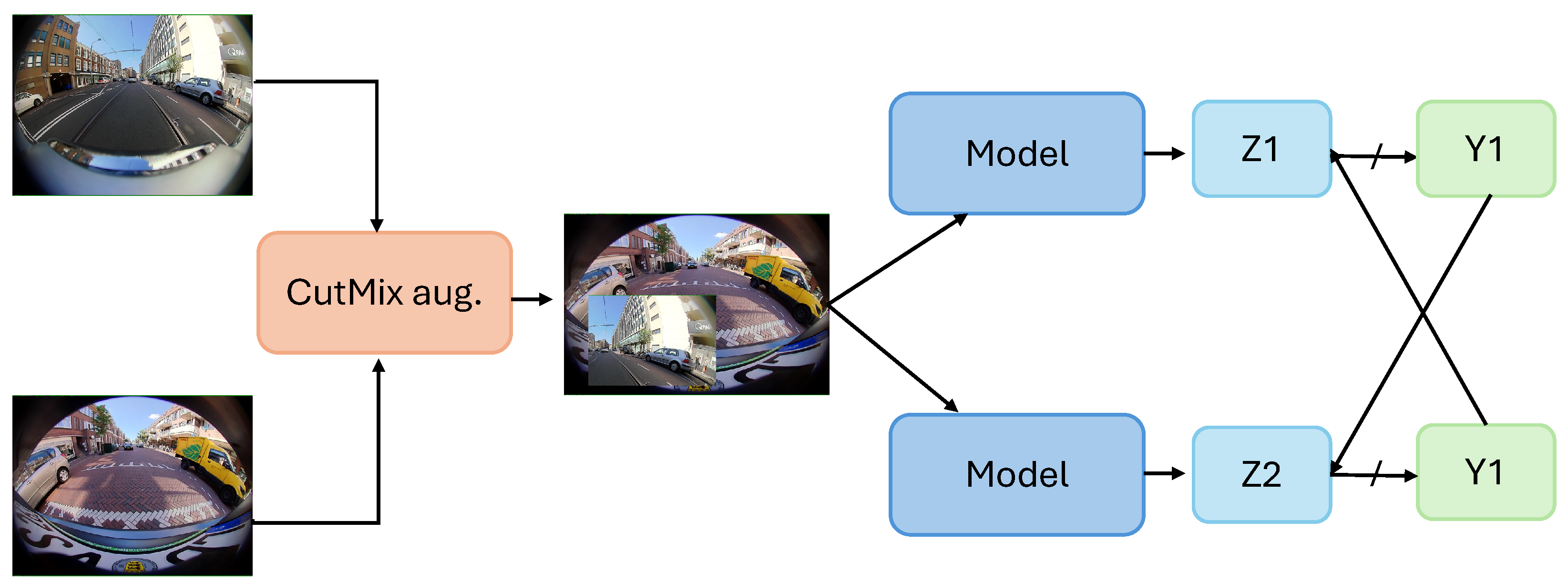

- Chen, X.; Yuan, Y.; Zeng, G.; Wang, J. Semi-supervised semantic segmentation with cross pseudo supervision. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2613–2622.

- Cui, L.; Jing, X.; Wang, Y.; Huan, Y.; Xu, Y.; Zhang, Q. Improved Swin Transformer-Based Semantic Segmentation of Postearthquake Dense Buildings in Urban Areas Using Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 16, 369–385.

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.; et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6881–6890.

- Zhang, B.; Tian, Z.; Tang, Q.; Chu, X.; Wei, X.; Shen, C. Segvit: Semantic segmentation with plain vision transformers. Adv. Neural Inf. Process. Syst. 2022, 35, 4971–4982.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

- Arsenali, B.; Viswanath, P.; Novosel, J. Rotinvmtl: Rotation invariant multinet on fisheye images for autonomous driving applications. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27–28 October 2019.

- Cho, J.; Lee, J.; Ha, J.; Resende, P.; Bradaï, B.; Jo, K. Surround-view Fisheye Camera Viewpoint Augmentation for Image Semantic Segmentation. IEEE Access 2023, 11, 48480–48492.

- Kumar, V.R.; Hiremath, S.A.; Bach, M.; Milz, S.; Witt, C.; Pinard, C.; Yogamani, S.; Mäder, P. Fisheyedistancenet: Self-supervised scale-aware distance estimation using monocular fisheye camera for autonomous driving. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 574–581.

- Ramachandran, S.; Sistu, G.; McDonald, J.; Yogamani, S. Woodscape Fisheye Semantic Segmentation for Autonomous Driving–CVPR 2021 OmniCV Workshop Challenge. arXiv 2021, arXiv:2107.08246.

- Kumar, V.R.; Yogamani, S.; Rashed, H.; Sistu, G.; Witt, C.; Leang, I.; Milz, S.; Mäder, P. Omnidet: Surround view cameras based multi-task visual perception network for autonomous driving. IEEE Robot. Autom. Lett. 2021, 6, 2830–2837.

- Schneider, L.; Jasch, M.; Fröhlich, B.; Weber, T.; Franke, U.; Pollefeys, M.; Rätsch, M. Multimodal neural networks: RGB-D for semantic segmentation and object detection. In Proceedings of the Image Analysis: 20th Scandinavian Conference, SCIA 2017, Tromsø, Norway, 12–14 June 2017; Proceedings, Part I 20. Springer: Berlin/Heidelberg, Germany, 2017; pp. 98–109.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Teichmann, M.; Weber, M.; Zoellner, M.; Cipolla, R.; Urtasun, R. Multinet: Real-time joint semantic reasoning for autonomous driving. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 1013–1020.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Erkent, Ö.; Laugier, C. Semantic segmentation with unsupervised domain adaptation under varying weather conditions for autonomous vehicles. IEEE Robot. Autom. Lett. 2020, 5, 3580–3587.

- Kalluri, T.; Varma, G.; Chandraker, M.; Jawahar, C. Universal semi-supervised semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5259–5270.

- Huo, X.; Xie, L.; He, J.; Yang, Z.; Zhou, W.; Li, H.; Tian, Q. ATSO: Asynchronous teacher-student optimization for semi-supervised image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1235–1244.

- Hung, W.C.; Tsai, Y.H.; Liou, Y.T.; Lin, Y.Y.; Yang, M.H. Adversarial learning for semi-supervised semantic segmentation. arXiv 2018, arXiv:1802.07934.

- Novosel, J.; Viswanath, P.; Arsenali, B. Boosting semantic segmentation with multi-task self-supervised learning for autonomous driving applications. In Proceedings of the NeurIPS-Workshops, Vancouver, BC, Canada, 13 December 2019; Volume 3.

- French, G.; Laine, S.; Aila, T.; Mackiewicz, M.; Finlayson, G. Semi-supervised semantic segmentation needs strong, varied perturbations. arXiv 2019, arXiv:1906.01916.

- Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.A.; Cubuk, E.D.; Kurakin, A.; Li, C.L. Fixmatch: Simplifying semi-supervised learning with consistency and confidence. Adv. Neural Inf. Process. Syst. 2020, 33, 596–608.

- Zhang, B.; Wang, Y.; Hou, W.; Wu, H.; Wang, J.; Okumura, M.; Shinozaki, T. Flexmatch: Boosting semi-supervised learning with curriculum pseudo labeling. Adv. Neural Inf. Process. Syst. 2021, 34, 18408–18419.

- Gui, G.; Zhao, Z.; Qi, L.; Zhou, L.; Wang, L.; Shi, Y. Enhancing Sample Utilization through Sample Adaptive Augmentation in Semi-Supervised Learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 15880–15889.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

| Method | mIoU |
|---|---|
| Fully supervised (baseline) | 54.32 |
| MeanTeacher [17] | 54.20 |
| CPS [18] | 60.31 |
| CPS with CutMix [18] | 62.47 |
| FishSegSSL (ours) | 64.81 |
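
The gap between the fully supervised baseline and FishSegSSL can be read either as an absolute difference in mIoU points or as a relative gain. The short sketch below works through both readings using only the values from the table above; the helper name `gains` is hypothetical.

```python
def gains(baseline: float, method: float) -> tuple:
    """Return (absolute gain in mIoU points, relative gain in percent)."""
    absolute = method - baseline
    relative = 100.0 * absolute / baseline
    return absolute, relative


# mIoU values copied from the results table above.
abs_gain, rel_gain = gains(baseline=54.32, method=64.81)
print(f"{abs_gain:.2f} points absolute, {rel_gain:.2f}% relative")
# 10.49 points absolute, 19.31% relative

# Gain over the strongest listed SSL baseline, CPS with CutMix.
abs_vs_cps, _ = gains(baseline=62.47, method=64.81)
print(f"{abs_vs_cps:.2f} points over CPS with CutMix")  # 2.34 points
```

The 10.49 figure quoted in the contributions therefore corresponds to the absolute difference in mIoU points.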

| Components enabled (PLF, RSA, DCT) | mIoU |
|---|---|
| All three | 64.81 |
| Two of three | 62.89 |
| Two of three | 63.94 |
| One of three | 62.49 |
| None | 62.47 |

| LR | CPS weight = 1.5 | CPS weight = 1 | CPS weight = 0.5 |
|---|---|---|---|
| 0.01 | 47.36 | 46.46 | 46.67 |
| 0.001 | 57.28 | 57.19 | 56.10 |
| 0.0001 | 60.31 | 59.86 | 60.40 |
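
To make the pattern in this grid easier to see, the sketch below locates the best cell and measures how much the score varies with the CPS weight at each learning rate. The values are copied from the table above and are assumed to be mIoU; the variable names are illustrative only.

```python
# Rows: learning rate; columns: CPS weight; values copied from the table above.
grid = {
    0.01: {1.5: 47.36, 1.0: 46.46, 0.5: 46.67},
    0.001: {1.5: 57.28, 1.0: 57.19, 0.5: 56.10},
    0.0001: {1.5: 60.31, 1.0: 59.86, 0.5: 60.40},
}

# Best (learning rate, CPS weight, score) triple over the whole grid.
best = max(
    ((lr, w, score) for lr, row in grid.items() for w, score in row.items()),
    key=lambda cell: cell[2],
)

# Spread across CPS weights at a fixed learning rate.
spread_by_lr = {
    lr: round(max(row.values()) - min(row.values()), 2) for lr, row in grid.items()
}

print(best)          # (0.0001, 0.5, 60.4)
print(spread_by_lr)  # {0.01: 0.9, 0.001: 1.18, 0.0001: 0.54}
```

On these numbers the learning rate matters far more than the CPS weight: changing the weight moves the score by at most 1.18 points, whereas lowering the learning rate from 0.01 to 0.0001 adds roughly 13 points.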

| Threshold Value | mIoU |
|---|---|
| 0.5 | 60.56 |
| 0.75 | 60.66 |
| 0.9 | 59.96 |

| Threshold Value | mIoU |
|---|---|
| 0.5 | 62.38 |
| 0.75 | 61.98 |
| 0.9 | 62.12 |
| 0.95 | 55.60 |

| Method Setting | mIoU |
|---|---|
| Default (box number = 3; range = 0.25–0.5) | 62.47 |
| Box number = 2; range = 0.1–0.25 | 62.13 |
| Box number = 2; range = 0.25–0.5 | 61.60 |
| Box number = 2; range = 0.4–0.75 | 61.29 |
| Box number = 3; range = 0.1–0.25 | 61.02 |
| Box number = 3; range = 0.4–0.75 | 61.59 |
| Box number = 4; range = 0.1–0.25 | 61.00 |
| Box number = 4; range = 0.25–0.5 | 60.91 |
| Box number = 4; range = 0.4–0.75 | 62.71 |
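
The two settings varied in this table, the number of boxes and the box size range, are the usual knobs of a CutMix-style mask. The sketch below shows one way such a mask could be sampled and applied. It assumes that "range" bounds each box's side length as a fraction of the image side; the table does not state this, and the function names `sample_box_mask` and `cutmix` are hypothetical.

```python
import random
from typing import List, Optional, Tuple

Grid = List[List[int]]


def sample_box_mask(
    height: int,
    width: int,
    num_boxes: int = 3,
    size_range: Tuple[float, float] = (0.25, 0.5),
    rng: Optional[random.Random] = None,
) -> Grid:
    """Return a binary mask with `num_boxes` random rectangles set to 1.

    Assumption: each box side is a fraction of the image side, drawn
    uniformly from `size_range`. Boxes may overlap.
    """
    rng = rng or random.Random()
    mask = [[0] * width for _ in range(height)]
    for _ in range(num_boxes):
        frac = rng.uniform(*size_range)
        box_h = max(1, int(height * frac))
        box_w = max(1, int(width * frac))
        top = rng.randint(0, height - box_h)   # inclusive bounds keep the box inside
        left = rng.randint(0, width - box_w)
        for y in range(top, top + box_h):
            for x in range(left, left + box_w):
                mask[y][x] = 1
    return mask


def cutmix(image_a: Grid, image_b: Grid, mask: Grid) -> Grid:
    """Take pixels from image_b where mask is 1 and from image_a elsewhere."""
    return [
        [b if m else a for a, b, m in zip(row_a, row_b, row_m)]
        for row_a, row_b, row_m in zip(image_a, image_b, mask)
    ]


mask = sample_box_mask(8, 8, num_boxes=3, size_range=(0.25, 0.5), rng=random.Random(0))
zeros = [[0] * 8 for _ in range(8)]
ones = [[1] * 8 for _ in range(8)]
mixed = cutmix(zeros, ones, mask)  # equals the mask itself for these inputs
```

In a segmentation setting the same mask would be applied to both the images and their pseudo-label maps so that pixels and labels stay aligned.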

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Paul, S.; Patterson, Z.; Bouguila, N. FishSegSSL: A Semi-Supervised Semantic Segmentation Framework for Fish-Eye Images. J. Imaging 2024, 10, 71. https://doi.org/10.3390/jimaging10030071
