Abstract

The Plug-and-Play framework has demonstrated that a denoiser can implicitly serve as the image prior for model-based methods for solving various inverse problems such as image restoration tasks. This characteristic enables the integration of the flexibility of model-based methods with the effectiveness of learning-based denoisers. However, the regularization strength induced by denoisers in the traditional Plug-and-Play framework lacks a physical interpretation, necessitating demanding parameter tuning. This paper addresses this issue by introducing the Constrained Plug-and-Play (CPnP) method, which reformulates the traditional PnP as a constrained optimization problem. In this formulation, the regularization parameter directly corresponds to the amount of noise in the measurements. The solution to the constrained problem is obtained through the design of an efficient method based on the Alternating Direction Method of Multipliers (ADMM). Our experiments demonstrate that CPnP outperforms competing methods in terms of stability and robustness while also achieving competitive performance for image quality.

1. Introduction

The challenge of reconstructing a high-quality image from its degraded measurement is commonly formulated as a linear inverse problem. Such problems arise in several imaging fields, such as medicine [1,2,3,4], microscopy [5,6,7], and astronomy [8,9,10]. Although these fields are quite different, they share a common linear model [11] for the image acquisition process: namely,

$$\mathbf{y} = \mathbf{H}\mathbf{x} + \boldsymbol{\eta}, \tag{1}$$

where $\mathbf{x}$ is the unknown image, $\mathbf{y}$ is the measured data, $\mathbf{H}$ is a known blur operator associated with the Point Spread Function (PSF) [12], and $\boldsymbol{\eta}$ represents additive random noise with a standard deviation of $\sigma_{\eta}$. Linear inverse problems, due to the physics underlying the data acquisition process, often suffer from ill-posedness [12], necessitating the formulation of the solution as a minimizer of a regularized objective function.
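
As an illustration of the acquisition model described above (blur followed by additive Gaussian noise), the following is a minimal sketch in Python with NumPy. It assumes periodic boundary conditions so that the blur is a circular convolution; the function names, the toy image, and the values of the blur width and noise level are hypothetical choices made only for this example.

```python
import numpy as np

def gaussian_psf(shape, sigma):
    """Normalized Gaussian PSF centred in an array of the given shape."""
    rows, cols = shape
    r = np.arange(rows) - rows // 2
    c = np.arange(cols) - cols // 2
    rr, cc = np.meshgrid(r, c, indexing="ij")
    psf = np.exp(-(rr**2 + cc**2) / (2.0 * sigma**2))
    return psf / psf.sum()

def blur(image, psf):
    """Apply the blur operator as a circular convolution computed with FFTs."""
    otf = np.fft.fft2(np.fft.ifftshift(psf))  # move the PSF centre to index (0, 0)
    return np.real(np.fft.ifft2(otf * np.fft.fft2(image)))

def degrade(image, psf, noise_std, rng):
    """Blur the image, then add zero-mean white Gaussian noise."""
    return blur(image, psf) + noise_std * rng.standard_normal(image.shape)

rng = np.random.default_rng(0)
x_true = np.zeros((64, 64))
x_true[24:40, 24:40] = 1.0                         # toy ground truth: a bright square
psf = gaussian_psf(x_true.shape, sigma=2.0)        # illustrative blur width (assumption)
y = degrade(x_true, psf, noise_std=0.05, rng=rng)  # illustrative noise level (assumption)
```

Because the PSF is normalized to unit sum, the blur preserves the total intensity of the image, which is a convenient sanity check for this kind of simulation.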

The standard model-based approach involves minimizing a regularized objective function of the form:

$$\arg\min_{\mathbf{x}} \; \ell(\mathbf{x};\mathbf{y}) + \lambda\,\rho(\mathbf{x}), \tag{2}$$

where $\ell$ encodes data fidelity information, $\rho$ is the regularization term, and $\lambda > 0$ is the regularization parameter. The choice of the function ℓ depends on the statistics of the noise perturbing the data. In the presence of additive white Gaussian noise (AWGN), the natural choice is the least-squares function, whilst signal-dependent noise requires tailored functionals: for example, Poisson noise leads to the Kullback–Leibler functional [13,14,15]. The choice of the regularization parameter is crucial, and it is often made by hand. The imaging community has developed several techniques for the automatic selection of this parameter. For example, well-established methods such as the discrepancy principle, the L-curve, or cross-validation [16] have been considered for Gaussian noise, while the discrepancy principle has also been adapted to Poisson noise [17,18]. Alternatively, another interesting approach consists of recasting the optimization problem (2) as a constrained one [19,20,21]: namely,

$$\arg\min_{\mathbf{x}} \; \rho(\mathbf{x}) \quad \text{subject to} \quad \ell(\mathbf{x};\mathbf{y}) \le c. \tag{3}$$

The positive scalar c represents the strength of the constraint, and unlike the parameter $\lambda$ in (2), it has a physical meaning. For example, the value of c in (3) usually depends on the amount of noise.

Another major challenge for the model-based approach is the design of an effective regularization functional capable of capturing intricate image features. Some examples include the well-known Tikhonov regularization [22], known as ridge regression in statistical contexts, and its variant that promotes diffuse components in the final reconstruction; the total variation functional, which aims to preserve sharp edges [2,23,24]; the $\ell_p$-norm regularizers, with $0 < p \le 1$, which induce sparsity in the image and/or gradient domains [19,25,26]; and the elastic-net functional [4], which is a convex combination of the $\ell_1$ and $\ell_2$ norms.

Learning-based techniques are now widely regarded as among the most effective strategies for solving imaging inverse problems. The advancements in deep learning for imaging problems were driven by the application of neural networks to learn an inverse mapping from the measurement space to the image space in order to obtain an approximate solution. In particular, a dataset is built from several acquisitions and their corresponding ground truths, and a neural network is trained to minimize the empirical risk. This end-to-end approach is attractive because the forward degradation model (1) is not needed, which is extremely useful when the physics of the problem is unknown or hard to express analytically. Another appealing characteristic of these inverse learning methods is their computational efficiency at inference time, where they are typically faster than standard variational techniques. However, the major limitation of learning approaches concerns the stability of the models [27]. The presence of noise in the measurement can produce various artifacts in the reconstruction obtained through a neural network [28]. Moreover, when a measurement falls outside the training set distribution, the model may produce hallucinated reconstructions containing misleading artifacts [29]. Such undesired behavior is particularly problematic in some applications. In addition, unlike variational approaches, learned models have to be retrained whenever the acquisition model changes.

A popular technique to construct a model that is adaptable to various imaging tasks with loose dependence on the training data consists of decoupling the degradation model from the learning-based prior. The fast development of learning-based techniques in the field of imaging inverse problems has made it possible to define data-driven regularizers that have largely outperformed handcrafted ones [30]. This approach is often referred to as Plug-and-Play (PnP) and represents a versatile and innovative paradigm to impose a statistically learned prior within a variational framework. The PnP prior framework [31,32,33,34,35] has emerged as a potent approach that leverages advanced denoisers as regularizers without explicitly defining $\rho$. However, the lack of an explicit objective function complicates theoretical analyses [35]. Regularization by denoising (RED) [36] addresses this by formulating an explicit regularization functional, but practical challenges persist, especially in ensuring denoisers align with the manifold of natural images.

The complexity of selecting an appropriate regularizer prompts exploration beyond traditional handcrafted terms. While model-based approaches often rely on handcrafted terms, this paper advocates for the PnP framework and demonstrates that closed-form regularizers are not always optimal for inducing prior information. The PnP approach, rooted in proximal algorithms, allows substituting the regularization term with off-the-shelf denoisers, diversifying prior information sources. The paper concludes with an overview of existing PnP studies that highlights the modular structure’s flexibility and the versatility of employing various proximal algorithms and denoisers.

The presented work is organized as follows. Section 2 provides a brief introduction to the PnP method (Section 2.1) and then presents the novel constrained approach (Section 2.2). Section 3 addresses the performance of the proposed model. In particular, we define the implementation settings (Section 3.1); we discuss the selection of the optimal denoiser, examining how different denoising priors influence the overall performance of the proposed model (Section 3.2); we show the robustness of the proposed method with respect to its parameters (Section 3.3 and Section 3.4); and we provide quantitative and qualitative comparisons with similar state-of-the-art algorithms (Section 3.5). Finally, Section 4 draws the conclusions and outlines future perspectives.

Notations

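Bold small letters refer to vectors, while bold capital letters refer to matrices. The operator $\mathrm{prox}_{f}$ stands for the proximity operator of f: $\mathrm{prox}_{f}(\mathbf{x}) = \arg\min_{\mathbf{z}} f(\mathbf{z}) + \frac{1}{2}\|\mathbf{z} - \mathbf{x}\|_2^2$. The projection on a set $C$ is denoted with $\Pi_{C}$. Square or rectangular images are vectorized: an image $\mathbf{X} \in \mathbb{R}^{m \times p}$ is seen as a vector $\mathbf{x}$ belonging to $\mathbb{R}^{n}$, where $n = mp$ and the elements of $\mathbf{X}$ are stacked column-wise. The term $\iota_{C}$ denotes the indicator function of the set $C$. $\mathbb{R}_{+}$ denotes the set of positive real numbers.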
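
To make the notions of projection and indicator function concrete, the sketch below implements both for a Euclidean ball centred at the origin. It relies on the standard fact that the proximity operator of the indicator function of a closed convex set is the projection onto that set. The function names and the test points are hypothetical and serve only as an illustration.

```python
import numpy as np

def project_onto_ball(z, radius):
    """Euclidean projection of z onto the ball {w : ||w||_2 <= radius}."""
    norm = np.linalg.norm(z)
    if norm <= radius:
        return z.copy()            # points inside the ball are left unchanged
    return z * (radius / norm)     # points outside are rescaled onto the boundary

def indicator_ball(z, radius):
    """Indicator function of the ball: 0 inside, +infinity outside."""
    return 0.0 if np.linalg.norm(z) <= radius else np.inf

outside = np.array([3.0, 4.0])     # norm 5, lies outside the unit ball
inside = np.array([0.3, 0.4])      # norm 0.5, lies inside the unit ball
p_out = project_onto_ball(outside, 1.0)
p_in = project_onto_ball(inside, 1.0)
```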

2. Constrained PnP Model

This section first briefly introduces the Plug-and-Play approach, showing its main idea and convergence properties. The second part is devoted to presenting the novel constrained strategy.

2.1. Plug-and-Play Models: A Brief Overview

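The building block of Plug-and-Play methods is the established Alternating Direction Method of Multipliers (ADMM) used to solve Problem (2): this method introduces an auxiliary variable $\mathbf{v}$ that induces a further constraint; in this way, one is led to solve the following problem:

$$\arg\min_{\mathbf{x},\mathbf{v}} \; \ell(\mathbf{x};\mathbf{y}) + \lambda\,\rho(\mathbf{v}) \quad \text{subject to} \quad \mathbf{x} = \mathbf{v}. \tag{4}$$

The augmented Lagrangian function for (4) reads as

$$\mathcal{L}_{\beta}(\mathbf{x},\mathbf{v},\boldsymbol{\mu}) = \ell(\mathbf{x};\mathbf{y}) + \lambda\,\rho(\mathbf{v}) + \boldsymbol{\mu}^{\top}(\mathbf{x} - \mathbf{v}) + \frac{\beta}{2}\|\mathbf{x} - \mathbf{v}\|_2^2,$$

where $\beta > 0$ is a penalty parameter and $\boldsymbol{\mu}$ is the Lagrange multiplier relative to the constraint $\mathbf{x} = \mathbf{v}$. After minimal algebraic manipulations, the new unconstrained problem to be solved is

$$\arg\min_{\mathbf{x},\mathbf{v}} \max_{\mathbf{u}} \; \ell(\mathbf{x};\mathbf{y}) + \lambda\,\rho(\mathbf{v}) + \frac{\beta}{2}\|\mathbf{x} - \mathbf{v} + \mathbf{u}\|_2^2 - \frac{\beta}{2}\|\mathbf{u}\|_2^2, \qquad \mathbf{u} = \boldsymbol{\mu}/\beta.$$

Such a problem is addressed by the Alternating Direction Method of Multipliers, which is shown in Algorithm 1. Steps 3 and 4 in Algorithm 1 are the proximity operators [37] of the suitably scaled ℓ and $\rho$ computed at $\mathbf{v}^{k} - \mathbf{u}^{k}$ and at $\mathbf{x}^{k+1} + \mathbf{u}^{k}$, respectively. For classical choices for ℓ, such as the least-squares or the Kullback–Leibler functionals, the proximity operators have explicit expressions (see [38] for a comprehensive list of proximity operators for several families of functions). A closed-form expression for the proximity operator of $\rho$ is available for particular regularizations: for example, $\rho = \|\cdot\|_1$, whose prox is the soft thresholding operator, and Tikhonov regularization, whose prox is simply a rescaling of the input [38].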
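
The two closed-form proximity operators mentioned above can be sketched as follows. This is an illustrative NumPy version, assuming the regularizers are written as t times the 1-norm and (t/2) times the squared 2-norm for a hypothetical weight t; the function names are not taken from any library.

```python
import numpy as np

def prox_l1(v, t):
    """Soft thresholding: prox of t * ||x||_1 evaluated at v."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def prox_tikhonov(v, t):
    """Prox of (t / 2) * ||x||_2^2 evaluated at v: a plain rescaling of v."""
    return v / (1.0 + t)

v = np.array([-2.0, -0.3, 0.0, 0.5, 1.5])
shrunk = prox_l1(v, 0.5)          # entries with magnitude <= 0.5 are set to zero
rescaled = prox_tikhonov(v, 1.0)  # every entry is halved
```

Soft thresholding sets small entries exactly to zero, which is the mechanism behind the sparsity-inducing effect of the 1-norm, whereas the Tikhonov prox only shrinks the input uniformly.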

Algorithm 1: Alternating Direction Method of Multipliers (ADMM).

Under the framework presented in [31], Step 3 of Algorithm 1 is considered as a reconstruction step and provides the maximum a posteriori estimate given the data $\mathbf{y}$ and the operator $\mathbf{H}$. Step 4 is the denoising step: this obviously depends on the design choice in (2), namely, on the choice of $\rho$. The strategy depicted in [31] instead suggests bypassing this design choice and directly employing a denoising operator D in Step 4.

This is tantamount to still considering an objective function as in (2), but with an unknown regularization functional. Algorithm 2 assumes that the operator D is the proximity operator of the unknown function $\rho$ computed at $\mathbf{x}^{k+1} + \mathbf{u}^{k}$, i.e., at the sum of the current reconstruction and the scaled multiplier:

$$\mathbf{v}^{k+1} = D\left(\mathbf{x}^{k+1} + \mathbf{u}^{k}\right).$$

After its first presentation to the scientific community, several researchers showed that, under suitable hypotheses, Plug-and-Play methods converge to a solution of the original problem. In [39], the authors showed fixed-point convergence for denoisers belonging to the bounded denoiser class. The authors in [40] adopt an incremental version of the PnP algorithm and prove its convergence under some explicit hypotheses on ℓ and on the chosen denoiser. Ref. [41] shows that, under the hypotheses of D being averaged [41] (Definition 2.1) and ℓ being convex, Algorithm 2 converges; moreover, it proves that some denoisers are actually the prox of some particular functions (e.g., the non-local-mean filter is the prox of a quadratic convex function).

Algorithm 2: Plug-and-Play method (PnP).
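
The Plug-and-Play idea can be sketched in a few lines of NumPy. The version below is a minimal illustration rather than the reference implementation: it assumes a least-squares data term, a blur acting as a circular convolution (so that the reconstruction step is solved with FFTs), a scaled multiplier, and a fixed penalty `beta`. The simple smoothing filter is a hypothetical stand-in for a real denoiser such as BM3D or DnCNN.

```python
import numpy as np

def smooth_denoiser(z):
    """Placeholder denoiser: separable [1, 2, 1] / 4 smoothing, periodic boundaries."""
    z = 0.25 * np.roll(z, 1, axis=0) + 0.5 * z + 0.25 * np.roll(z, -1, axis=0)
    return 0.25 * np.roll(z, 1, axis=1) + 0.5 * z + 0.25 * np.roll(z, -1, axis=1)

def pnp_admm(y, otf, denoiser, beta=1.0, iters=50):
    """PnP-ADMM sketch for 0.5 * ||H x - y||^2 with an implicit denoising prior."""
    Y = np.fft.fft2(y)
    v = np.zeros_like(y)
    u = np.zeros_like(y)           # scaled Lagrange multiplier
    x = v
    denom = np.abs(otf) ** 2 + beta
    for _ in range(iters):
        # Reconstruction step: prox of the data term at v - u, solved in Fourier space.
        x = np.real(np.fft.ifft2((np.conj(otf) * Y + beta * np.fft.fft2(v - u)) / denom))
        # Denoising step: the denoiser replaces the prox of the regularizer.
        v = denoiser(x + u)
        # Multiplier update.
        u = u + x - v
    return x

# Sanity check on a trivial case: no blur (otf = 1) and an identity "denoiser",
# for which the iterates must converge to the data itself.
rng = np.random.default_rng(0)
y = rng.standard_normal((8, 8))
x_id = pnp_admm(y, np.ones((8, 8)), lambda z: z, beta=1.0, iters=60)
x_smooth = pnp_admm(y, np.ones((8, 8)), smooth_denoiser, beta=1.0, iters=60)
```

Swapping `smooth_denoiser` for another callable changes the prior without touching the reconstruction step, which is the modularity that the Plug-and-Play framework is built around.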

2.2. The Proposed Constrained Model

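Under the hypothesis of additive noise in (1) and following the constrained approach [42] shown in (3), Problem (2) is reformulated as

$$\arg\min_{\mathbf{x}} \; \rho(\mathbf{x}) \quad \text{subject to} \quad \ell(\mathbf{x};\mathbf{y}) \le c,$$

where $c = \delta^{2}/2$, with $\delta = \tau\sigma_{\eta}\sqrt{n}$, $\tau > 0$, and $\sigma_{\eta}$ being the known noise level. Hereafter, we assume an AWGN framework, i.e., additive white Gaussian noise perturbs the image, and hence, the fit-to-data functional is the least-squares one:

$$\ell(\mathbf{x};\mathbf{y}) = \frac{1}{2}\|\mathbf{H}\mathbf{x} - \mathbf{y}\|_2^2.$$

The constrained formulation, hence, has the following form:

$$\arg\min_{\mathbf{x}} \; \rho(\mathbf{x}) \quad \text{subject to} \quad \|\mathbf{H}\mathbf{x} - \mathbf{y}\|_2 \le \delta. \tag{6}$$

We make the following assumption: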

Assumption 1.

The function ρ is continuous.

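The constraint set is compact; thus, under Assumption 1, Problem (6) has at least one solution by the Weierstrass theorem. Problem (6) can be equivalently recast into the following form:

$$\arg\min_{\mathbf{x},\mathbf{v},\mathbf{w}} \; \rho(\mathbf{v}) + \iota_{B_{\delta}}(\mathbf{w}) \quad \text{subject to} \quad \mathbf{x} = \mathbf{v}, \quad \mathbf{H}\mathbf{x} - \mathbf{y} = \mathbf{w}, \tag{7}$$

where $B_{\delta}$ is a closed disk with a zero center and radius $\delta$, the discrepancy bound of Problem (6). Adopting the approach of Section 2.1, the augmented Lagrangian function relative to Equation (7) reads as

$$\mathcal{L}_{\beta_1,\beta_2}(\mathbf{x},\mathbf{v},\mathbf{w},\boldsymbol{\mu}_1,\boldsymbol{\mu}_2) = \rho(\mathbf{v}) + \iota_{B_{\delta}}(\mathbf{w}) + \boldsymbol{\mu}_1^{\top}(\mathbf{x} - \mathbf{v}) + \frac{\beta_1}{2}\|\mathbf{x} - \mathbf{v}\|_2^2 + \boldsymbol{\mu}_2^{\top}(\mathbf{H}\mathbf{x} - \mathbf{y} - \mathbf{w}) + \frac{\beta_2}{2}\|\mathbf{H}\mathbf{x} - \mathbf{y} - \mathbf{w}\|_2^2,$$

where $\boldsymbol{\mu}_1$ and $\boldsymbol{\mu}_2$ are the Lagrange multipliers, and $\beta_1 > 0$ and $\beta_2 > 0$ are the proximity penalties. The new task to be addressed is, hence,

$$\arg\min_{\mathbf{x},\mathbf{v},\mathbf{w}} \max_{\boldsymbol{\mu}_1,\boldsymbol{\mu}_2} \; \mathcal{L}_{\beta_1,\beta_2}(\mathbf{x},\mathbf{v},\mathbf{w},\boldsymbol{\mu}_1,\boldsymbol{\mu}_2),$$

and then the PnP-ADMM approach can be adopted to solve the above optimization problem. As previously shown, this amounts to substituting the proximity operator of $\rho$ computed at $\mathbf{x}^{k+1} + \boldsymbol{\mu}_1^{k}/\beta_1$ with a denoiser computed at the same point. Algorithm 3 shows the implementation of this strategy.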

The noise level may be known a priori; on the other hand, when only the type of noise is known, several methods to estimate the noise level can be found in the literature (see, for example, [43]). The computation of the reconstruction update $\mathbf{x}^{k+1}$ may seem to pose some computational issues since it requires the inversion of a matrix, which could be cumbersome in terms of computational cost. Nonetheless, under a suitable hypothesis for the operator $\mathbf{H}$, which is practically satisfied in real-life applications, such an update can be easily carried out in Fourier space via FFTs.
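
The following sketch illustrates why the matrix inversion is cheap in Fourier space. It assumes, as a hypothesis of this example only, that the blur acts as a circular convolution (periodic boundary conditions), so that it is diagonalized by the 2D FFT, and that the system to solve has the form (beta1 I + beta2 HᵀH) x = rhs, which is what a two-penalty ADMM splitting with a least-squares constraint would produce. The function names and the small kernel are hypothetical.

```python
import numpy as np

def solve_x_update(rhs, otf, beta1, beta2):
    """Solve (beta1 * I + beta2 * H^T H) x = rhs for a circular-convolution blur H."""
    denom = beta1 + beta2 * np.abs(otf) ** 2   # eigenvalues of the system matrix
    return np.real(np.fft.ifft2(np.fft.fft2(rhs) / denom))

def apply_system(x, otf, beta1, beta2):
    """Apply beta1 * I + beta2 * H^T H to x (used here only to verify the solve)."""
    hth_x = np.real(np.fft.ifft2(np.abs(otf) ** 2 * np.fft.fft2(x)))
    return beta1 * x + beta2 * hth_x

# Small symmetric blur kernel, already centred at index (0, 0).
psf = np.zeros((16, 16))
psf[0, 0] = 0.5
psf[0, 1] = psf[0, -1] = psf[1, 0] = psf[-1, 0] = 0.125
otf = np.fft.fft2(psf)

rng = np.random.default_rng(1)
rhs = rng.standard_normal((16, 16))
x = solve_x_update(rhs, otf, beta1=0.5, beta2=2.0)
```

The solve costs two FFTs and one pointwise division, instead of the factorization of an n-by-n matrix.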

Remark 1 (Convergence of the ADMM approach). We discuss some observations about the convergence of the scheme presented in Algorithm 3.

- A well-established result [44] states that ADMM converges even when more than two variables are considered in the formulation.

- The substitution of the proximity operator in Step 4 may hinder the convergence behavior of the whole algorithm. Coupling the result from [44] with [41], for example, assures the convergence for a suitable denoiser D.

Algorithm 3: Constrained Plug-and-Play approach (CPnP).
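
A constrained Plug-and-Play iteration can be sketched as below. This is not the authors' code (which is available in the repository linked in Section 3.1) but a minimal NumPy illustration under several assumptions: the discrepancy constraint is a Euclidean ball of radius `delta` on the residual, two auxiliary variables are introduced (one for the denoiser and one for the residual), the multipliers are scaled, the penalties `beta1` and `beta2` are kept fixed, the blur is a circular convolution, and a simple smoothing filter stands in for the denoiser. All names and numerical values are hypothetical.

```python
import numpy as np

def smooth_denoiser(z):
    """Placeholder denoiser: separable [1, 2, 1] / 4 smoothing, periodic boundaries."""
    z = 0.25 * np.roll(z, 1, axis=0) + 0.5 * z + 0.25 * np.roll(z, -1, axis=0)
    return 0.25 * np.roll(z, 1, axis=1) + 0.5 * z + 0.25 * np.roll(z, -1, axis=1)

def project_ball(z, radius):
    """Projection onto the Euclidean ball of the given radius centred at zero."""
    norm = np.linalg.norm(z)
    return z if norm <= radius else z * (radius / norm)

def cpnp_sketch(y, otf, denoiser, delta, beta1=1.0, beta2=1.0, iters=30):
    """Sketch of: minimize an implicit prior subject to ||H x - y||_2 <= delta."""
    Y = np.fft.fft2(y)
    x, v = y.copy(), y.copy()
    w = np.zeros_like(y)                        # auxiliary variable for H x - y
    u1, u2 = np.zeros_like(y), np.zeros_like(y)  # scaled multipliers
    denom = beta1 + beta2 * np.abs(otf) ** 2
    for _ in range(iters):
        # x-update: (beta1 I + beta2 H^T H) x = beta1 (v - u1) + beta2 H^T (y + w - u2)
        rhs = beta1 * np.fft.fft2(v - u1) + beta2 * np.conj(otf) * (Y + np.fft.fft2(w - u2))
        x = np.real(np.fft.ifft2(rhs / denom))
        residual = np.real(np.fft.ifft2(otf * np.fft.fft2(x))) - y
        v = denoiser(x + u1)                    # denoiser in place of the prox of the prior
        w = project_ball(residual + u2, delta)  # enforce the discrepancy constraint
        u1 = u1 + x - v
        u2 = u2 + residual - w
    return x, w

# Toy data: blurred square plus Gaussian noise of known standard deviation.
rng = np.random.default_rng(0)
x_true = np.zeros((32, 32))
x_true[10:22, 10:22] = 1.0
psf = np.zeros((32, 32))
psf[0, 0] = 0.5
psf[0, 1] = psf[0, -1] = psf[1, 0] = psf[-1, 0] = 0.125
otf = np.fft.fft2(psf)
sigma = 0.05
y = np.real(np.fft.ifft2(otf * np.fft.fft2(x_true))) + sigma * rng.standard_normal(x_true.shape)

tau = 1.0                                       # illustrative safety factor
delta = tau * sigma * np.sqrt(y.size)           # radius tied to the noise level
x_rec, w = cpnp_sketch(y, otf, smooth_denoiser, delta)
```

The only quantity that must be supplied besides the denoiser is the radius `delta`, which is computed from the noise standard deviation and the number of pixels rather than tuned by hand.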

Remark 2. We point out that the constrained approach has a remarkable positive outcome: it avoids the selection of the regularization parameter in Problem (2).

3. Results

In this section, we delve into the outcomes of our study. The first subsection begins with an overview of the methodological setting. This encompasses the experimental setup, the metrics employed for the evaluation of the results, and a comprehensive presentation of the comparative methods. Subsequently, we focus on the analysis of the proposed method with respect to the choice of the ADMM penalty sequences. The final section offers a detailed examination, both qualitatively and quantitatively, of our approach in comparison to its competitors.

3.1. Settings, Evaluation Metrics, and Competing Baseline Methods

As a case study, we focus on the task of image deblurring under the AWGN assumption. Accordingly, in Equation (1), $\mathbf{H}$ represents a Gaussian blurring operator with a standard deviation of $\sigma_{H}$, and $\boldsymbol{\eta}$ denotes zero-mean Gaussian noise with a standard deviation of $\sigma_{\eta}$. We generate blurry and noisy data by applying the image formation model (1) to the images from Set5 [45] and Set24 [46], which are referred to as the ground truths (GTs).

Our method is compared with two baselines: (1) the original unconstrained Plug-and-Play model [47] solved via the half-quadratic splitting (HQS) algorithm and (2) the unconstrained RED formulation [36] solved via ADMM. The former is referred to as PnP and the latter is referred to as RED in the following.

We evaluate the quality of restored images using the peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) metrics. PSNR offers a numerical perspective by measuring the ratio between the maximum possible power of a signal and the power of the corrupting noise that affects the fidelity of its representation. It quantifies the degree of distortion present in the restored image compared to the original and considers the full range of pixel values. Higher PSNR values typically indicate better-quality reconstruction as they suggest lower levels of distortion. SSIM evaluates the structural similarity between the restored image and the original from a perceptual standpoint. It considers factors such as luminance, contrast, and structure, mimicking human visual perception. SSIM scores closer to 1 indicate greater similarity between the restored and original images and reflect higher perceived quality. Additionally, from a theoretical standpoint, considering a ground truth image $\mathbf{x}_{GT}$ and its blurred and noisy simulated data $\mathbf{y}$, we use $\|\mathbf{H}\mathbf{x}_{GT} - \mathbf{y}\|_2^2 / n$ as an unbiased estimator of $\sigma_{\eta}^{2}$. Thus, we compare the real noise standard deviation $\sigma_{\eta}$ with $\hat{\sigma} = \|\mathbf{H}\hat{\mathbf{x}} - \mathbf{y}\|_2 / \sqrt{n}$, where $\hat{\mathbf{x}}$ refers to the output of the algorithms.
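
For reference, PSNR and the residual-based noise estimate can be computed as in the following sketch. It assumes images scaled to the range [0, 1] (hence a default peak value of 1) and takes the already blurred reconstruction as input; the function names and the toy arrays are hypothetical. SSIM is omitted because it is more involved and is normally taken from an image-processing library.

```python
import numpy as np

def psnr(reference, restored, peak=1.0):
    """Peak signal-to-noise ratio in dB between a reference and a restored image."""
    mse = np.mean((reference - restored) ** 2)
    return 10.0 * np.log10(peak**2 / mse)

def residual_noise_std(blurred_estimate, data):
    """||H x - y||_2 / sqrt(n): noise level implied by the residual of an estimate."""
    return np.linalg.norm(blurred_estimate - data) / np.sqrt(data.size)

# PSNR of an image with a uniform error of 0.1 on a [0, 1] scale.
reference = np.full((4, 4), 0.5)
value_db = psnr(reference, reference + 0.1)

# Residual-based estimate when the estimate is exact and the noise std is 0.05.
rng = np.random.default_rng(0)
clean = np.zeros(10000)
data = clean + 0.05 * rng.standard_normal(clean.shape)
sigma_hat = residual_noise_std(clean, data)
```

A reconstruction whose residual-based estimate is far below the true noise level has fitted the noise, while one far above it has been over-smoothed.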

We point out that, as one could expect, the quality of the restored images computed using PnP and RED strongly depends on the choice of the regularization parameters (since they consider unconstrained models). In the experiments, the regularization parameters are selected in order to maximize the PSNR metric. We stress that the proposed CPnP automatically selects the strength of the regularization, thus avoiding highly demanding parameter tuning.

Finally, as the stopping criterion, we choose the relative difference of the iterates within a tolerance of . The maximum number of iterations is set to 100 for all the methods.
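
A stopping rule of this kind can be sketched as follows. The tolerance value used in the example is a hypothetical placeholder, and the helper names are not taken from the released code; the toy update map is chosen only because its fixed point is known.

```python
import numpy as np

def relative_change(x_new, x_old, eps=1e-12):
    """Relative difference between consecutive iterates (guarded against division by zero)."""
    return np.linalg.norm(x_new - x_old) / max(np.linalg.norm(x_old), eps)

def run_until_converged(step, x0, tol, max_iter=100):
    """Iterate x <- step(x) until the relative change drops below tol or max_iter is hit."""
    x = x0
    for k in range(1, max_iter + 1):
        x_next = step(x)
        if relative_change(x_next, x) < tol:
            return x_next, k
        x = x_next
    return x, max_iter

# Toy contraction with fixed point 2: x <- 0.5 * x + 1.
x_final, n_iter = run_until_converged(lambda x: 0.5 * x + 1.0, np.array([0.0]), tol=1e-6)
```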

All the experiments are conducted on a PC with an Intel(R) Core(TM) i7-8565U CPU @ 1.80 GHz 1.99 GHz (Intel Corporation, Santa Clara, CA, USA), running Windows 10 Pro and MATLAB 2023b. The codes of the proposed CPnP are available at https://github.com/AleBenfe/CPNP (accessed on 25 January 2024).

3.2. On the Choice of the Denoiser

In this section, we evaluate the CPnP method’s efficacy: specifically, by analyzing its performance when considering various state-of-the-art denoising techniques as denoising engines. Our investigation encompasses the Block-Matching and 3D Filtering (BM3D) [48], Non-Local Means (NLM) [49], and Deep Convolutional Neural Network (DnCNN) [47] methods.

BM3D [48] employs a multi-step process wherein it partitions the image into blocks, identifies similar blocks, collaboratively filters them to estimate clean signals, applies 3D transform filtering to reduce noise, and aggregates these filtered blocks to produce the final denoised image. In contrast, NLM [49] compares local image patches, averages similar patches to estimate clean pixels, and then outputs a denoised image. Lastly, DnCNN [47] utilizes a deep neural network to directly map noisy images to denoised counterparts, utilizing residual learning to boost its performance.

In this section, the focus is on images belonging to the Set5 dataset. We simulate blurred and noisy data by employing the linear image formation model defined in Equation (1) with parameters set to (, ). Through this setup, we aim to assess how each of the aforementioned denoising methods impacts the quality of the restored images with respect to PSNR and SSIM metrics and visual quality.

In Table 1, we report the mean values of PSNR and SSIM for images in the Set5 dataset for NLM, BM3D, and DnCNN. DnCNN consistently demonstrates superior performance compared to both BM3D and NLM in terms of both PSNR and SSIM. This suggests that DnCNN induces better regularization than BM3D and NLM.

Table 1.

Mean values of PSNR and SSIM for the images in Set5 by varying the embedded denoiser: namely, NLM, BM3D, and DnCNN. The best results are highlighted in bold. DnCNN outperforms both BM3D and NLM in terms of the considered metrics.

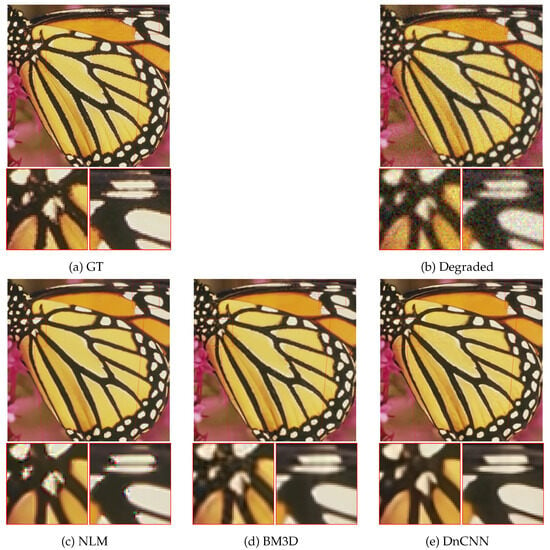

In Figure 1, we report the restored images using DnCNN, BM3D, and NLM alongside their respective ground truth and corrupted input data. DnCNN (Figure 1e) produces reconstructions that are noticeably more accurate and clearer (less blurred) and with fine details better preserved. In contrast, NLM (Figure 1c) tends to overly smooth out details, while the BM3D reconstruction (Figure 1d) appears out of focus.

Figure 1.

Restoration of the Butterfly image from Set5 obtained when selecting different denoising engines: namely, NLM, BM3D, and DnCNN. From left to right: two close-ups of ground truth, degraded image, and the CPnP restorations with NLM, BM3D, and DnCNN. Our CPnP provides more reliable restorations characterized by enhanced clarity and reduced noise when using DnCNN as the denoiser.

These observations align with the findings from Table 1. The visual assessment further reinforces the superiority of DnCNN and highlights its ability to preserve image details and enhance overall image clarity when compared to traditional denoising methods like NLM and BM3D. Based on these findings, we solely consider DnCNN as the embedded denoiser in our CPnP framework for the subsequent sections.

3.3. On the Choice of the Penalty Sequence for the Proposed CPnP

In this section, we investigate the performance of the implemented CPnP method by systematically varying the ADMM penalty sequences. We define increasing penalty sequences according to the relations:

where . Our experimental setup involves initializing the pair of parameters from the set and testing different values of : specifically, .

We set , and for the purposes of our experiments, we assume a known level of noise denoted by . Further discussion on the rationale behind choosing these parameters will be provided in the subsequent section.

As discussed in the previous section, we adopt the DnCNN introduced in [47] as the denoising prior due to its state-of-the-art performance in the field and its fast computation.

We consider the sole butterfly image from Set5. We simulate a degraded acquisition by setting and . We investigate the stability of CPnP with respect to the choice of , , and . We point out that in the experiments, we did not observe any significant difference when choosing different images.

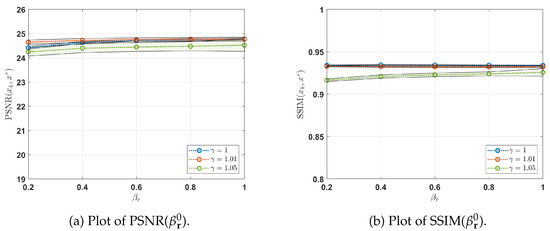

The stability of the implemented CPnP is depicted in Figure 2a,b. The figures illustrate the distribution of the PSNR and SSIM metrics, respectively, while varying the starting points (, ) of the increasing ADMM penalty sequences for different values of . More specifically, for each , we consider the distribution of the considered metrics (average ± standard deviation) with respect to for different values of . In-depth analysis reveals that CPnP consistently maintains high performance levels, as assessed by PSNR and SSIM metrics, even when varying the parameter and employing different initializations for and . This robustness across different settings underscores the reliability and effectiveness of the CPnP approach for deblurring and denoising tasks. For all subsequent sections, we set , , and as fixed parameters.

Figure 2.

Distribution of PSNR (a) and SSIM (b) by varying the starting points (, ) of the increasing ADMM penalty sequences for different values of . In (a,b), for each , we present the PSNR and SSIM distributions (average ± standard deviation), respectively, with respect to . The solid lines represent the means of these distributions. The results demonstrate the stability of CPnP and reveal comparable performance in terms of PSNR and SSIM across various values and initializations of and .

3.4. On the Choice of the Constraint Parameters for the Proposed CPnP

In this section, we consider the images belonging to Set5. We generate blurred and noisy data by applying the linear image formation model (1) with two different degradation settings (, ) and (, ). We examine the impact on the restored images of with ranging in [0,1]. Two distinct scenarios are taken into account. In the first, which is referred to as the ideal scenario in the following, we assume that the magnitude of is exactly known. In the second, which is referred to as the realistic scenario in the following, we assume that only an estimate of is provided. The estimation is computed following the approach outlined in [43].

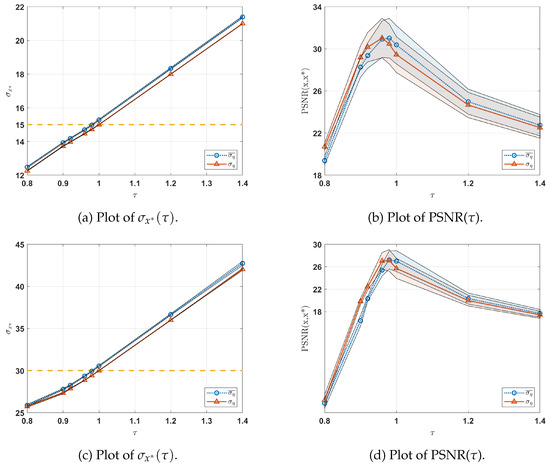

In Figure 3a–d, we illustrate the variations in and PSNR as a function of for the two different degradation levels considered. For each , we display the distribution (average ± standard deviation) across all images in Set5 (depicted as shaded regions). The red and blue lines denote the means of these distributions for the idealized and realistic scenarios, respectively. Additionally, in Figure 3a,c, the yellow dashed line represents the true standard deviations (namely and ) of the Gaussian noise affecting all the data in Set5.

Figure 3.

Distribution of (a,c) and PSNR (b,d) by varying for the ideal (red line) and realistic (blue line) scenarios. In (a,c), the dashed yellow line represents the standard deviation of the Gaussian noise corrupting the degraded data ( and , respectively). In (a–d), for each , we present the and PSNR distributions (average ± standard deviation) with respect to all the images in Set5 for the two different degradation levels considered. The solid red and blue lines represent the means of these distributions.

In Figure 3a,c, the ideal scenario (red lines) demonstrates that the computed closely aligns with when across all images in Set5 (thin shading). In contrast, within the realistic scenario, where only an estimate of the Gaussian noise level is provided, the optimal approximation of is achieved at for both degradation levels. Multiple experiments have consistently revealed that this phenomenon stems from the algorithm in [43], which tends to overestimate the noise level in the simulated data .

Figure 3b,d illustrate the behavior of the PSNR metric with respect to . Notably, comparable performance in terms of PSNR is observed for both scenarios. Additionally, Figure 3b,d emphasizes that the highest PSNR is achieved when setting .

Lastly, Figure 3a indicates that smaller values of tend to underestimate, while larger values of tend to overestimate . Figure 3b shows that the PSNR metric is negatively affected by these under/overestimations of the noise level.

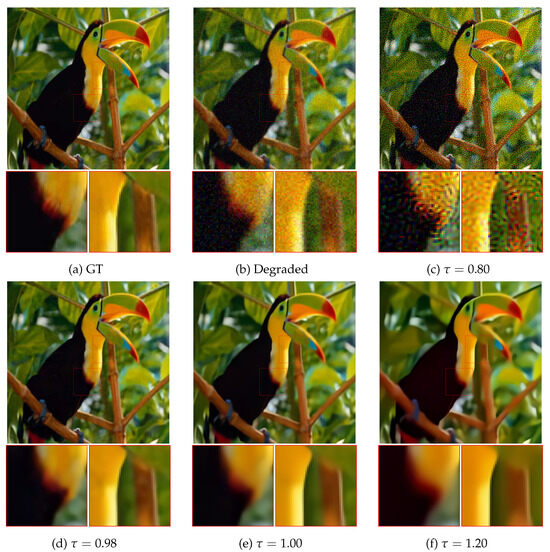

Figure 4 provides a visual inspection of the behavior of CPnP with respect to values. Small values produce several artifacts in the recovered images, while higher ones induce an oversmoothing effect on the final result. The most reliable results are obtained for close to , i.e., for values close to 1 but still smaller than 1. This behavior is due to overestimation of the noise level given by the employed algorithm [43].

Figure 4.

Restoration of the Bird image obtained with CPnP for different values of . From left to right: two close-ups of ground truth, , , , , and . Small values of produce several artifacts on the restored images, whilst large values induce smoothing of the result. The optimal value for is close to one; due to the overestimation of the noise level given by [43], has to be set strictly less than 1.

To enhance the reliability of our CPnP testing, we employ the noise level estimation method proposed by [43] to estimate , and consistently set for all subsequent experiments.

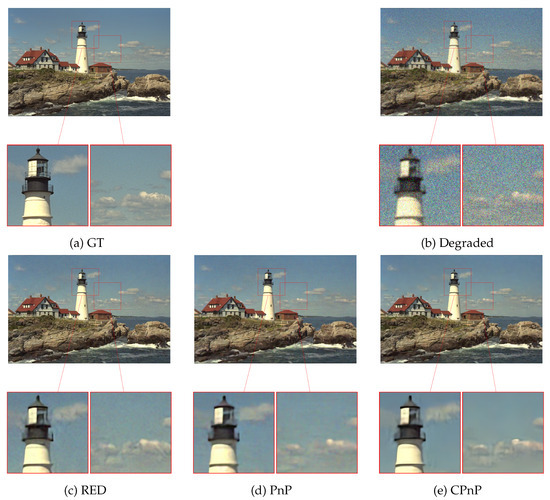

3.5. Comparisons with PnP and RED

In this section, we conduct a comparative analysis between our CPnP method and two competing methods: PnP and RED. The quantitative assessment uses images from Set24 under different degradation levels: () and (). Table 2 presents the mean PSNR and SSIM values. For the competing methods, PnP and RED, the regularization parameters are selected to achieve optimal performance in terms of the PSNR metric. The results indicate that our CPnP method outperforms both RED and PnP in terms of both performance measures. Furthermore, the effectiveness of our CPnP approach is also assessed from a visual perspective. Figure 5 compares CPnP with RED and PnP by providing two close-up views of the restored images. These close-ups highlight the better reconstruction capabilities of CPnP in terms of clarity and noise reduction compared to both PnP and RED. This visual evidence confirms the quantitative results presented in Table 2 and emphasizes the enhanced performance and reliability of CPnP for deblurring and denoising tasks. Finally, we remark that CPnP exhibits robustness regarding the choice of hyperparameters, unlike RED and PnP, as underlined in the previous sections. The physical interpretation of CPnP hyperparameters enhances the interpretability and practicality of CPnP compared to traditional methods such as RED and PnP.

Table 2.

Mean values of PSNR and SSIM for the images in Set5 and Set24 when varying the degradation levels. The best results are highlighted in bold. Our CPnP outperforms both RED and PnP in terms of the considered metrics.

Figure 5.

Restoration of the kodim21 image from Set24 obtained with different methods. From left to right: two close-ups of ground truth, degraded image, RED, PnP, and CPnP. Our CPnP provides more reliable restorations characterized by enhanced clarity and reduced noise.

4. Conclusions

In this paper, a novel constrained formulation of the well-established Plug-and-Play framework is presented and is denoted as CPnP. Within this model, the minimizer of the regularization functional is constrained to satisfy a discrepancy-based bound. The solution to the CPnP model is obtained within the ADMM framework and offers a straightforward yet effective approach for image restoration while allowing the usage of different denoising priors.

The determination of the threshold, serving as the regularization parameter, holds physical significance and involves estimating the standard deviation of the noise affecting the data. Efficient assessment of the noise level in the degraded data is achieved through the method outlined in [43] and eliminates the need for extensive parameter tuning as required by unconstrained models like PnP and RED.

In the experimental section, CPnP demonstrates stability and robustness concerning both the model and algorithm hyperparameters. Furthermore, it performs comparably to, if not better than, both PnP and RED in terms of PSNR and SSIM metrics as well as visual inspection. This performance, coupled with its stability and robustness, positions CPnP as a promising choice for various image restoration applications.

Author Contributions

Conceptualization, A.B. and P.C.; methodology, A.B. and P.C.; software, A.B. and P.C.; validation, A.B. and P.C.; formal analysis, A.B. and P.C.; investigation, A.B. and P.C.; data curation, A.B. and P.C.; writing—original draft preparation, A.B. and P.C.; writing—review and editing, A.B. and P.C.; visualization, A.B. and P.C.; supervision, A.B. and P.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been conducted under the activities of Gruppo Nazionale Calcolo Scientifico (GNCS) of Istituto Nazionale di Alta Matematica (INDAM) and under the activities of the 2022 PRIN project “Sustainable Tomographic Imaging with Learning and rEgularization”. This study was carried out within the MICS (Made in Italy—Circular and Sustainable) Extended Partnership and received funding from the European Union Next-GenerationEU (Piano Nazionale di ripresa e resilienza (PNRR)—Missione 4 Componente 2, Investimento 1.3—D.D. 1551.11-10-2022, PE00000004).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The code is available at https://github.com/AleBenfe/CPNP (accessed on 25 January 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Sapienza, D.; Franchini, G.; Govi, E.; Bertogna, M.; Prato, M. Deep Image Prior for medical image denoising, a study about parameter initialization. Front. Appl. Math. Stat. 2022, 8, 995225.

- Cascarano, P.; Sebastiani, A.; Comes, M.C.; Franchini, G.; Porta, F. Combining Weighted Total Variation and Deep Image Prior for natural and medical image restoration via ADMM. In Proceedings of the 2021 21st International Conference on Computational Science and Its Applications (ICCSA), Cagliari, Italy, 13–16 September 2021; pp. 39–46.

- Coli, V.; Piccolomini, E.L.; Morotti, E.; Zanni, L. A fast gradient projection method for 3D image reconstruction from limited tomographic data. J. Phys. Conf. Ser. 2021, 904, 012013.

- Benfenati, A.; Causin, P.; Lupieri, M.; Naldi, G. Regularization Techniques for Inverse Problem in DOT Applications. J. Phys. Conf. Ser. 2020, 1476, 012007.

- Calisesi, G.; Ghezzi, A.; Ancora, D.; D’Andrea, C.; Valentini, G.; Farina, A.; Bassi, A. Compressed sensing in fluorescence microscopy. Prog. Biophys. Mol. Biol. 2022, 168, 66–80.

- Benfenati, A. upU-Net Approaches for Background Emission Removal in Fluorescence Microscopy. J. Imaging 2022, 8, 142.

- Cascarano, P.; Comes, M.C.; Sebastiani, A.; Mencattini, A.; Loli Piccolomini, E.; Martinelli, E. DeepCEL0 for 2D single-molecule localization in fluorescence microscopy. Bioinformatics 2022, 38, 1411–1419.

- Štěpán, J.; del Pino Alemán, T.; Bueno, J.T. Novel framework for the three-dimensional NLTE inverse problem. Astron. Astrophys. 2022, 659, A137.

- Benfenati, A.; La Camera, A.; Carbillet, M. Deconvolution of post-adaptive optics images of faint circumstellar environments by means of the inexact Bregman procedure. Astron. Astrophys. 2016, 586, A16.

- Conroy, K.E.; Kochoska, A.; Hey, D.; Pablo, H.; Hambleton, K.M.; Jones, D.; Giammarco, J.; Abdul-Masih, M.; Prša, A. Physics of eclipsing binaries. V. General framework for solving the inverse problem. Astrophys. J. Suppl. Ser. 2020, 250, 34.

- Bertero, M.; Boccacci, P. Introduction to Inverse Problems in Imaging; CRC Press: Boca Raton, FL, USA, 2020.

- Bertero, M.; Boccacci, P.; Ruggiero, V. Inverse Imaging with Poisson Data; IOP Publishing: Bristol, UK, 2018; pp. 2053–2563.

- Benfenati, A.; Ruggiero, V. Image regularization for Poisson data. J. Phys. Conf. Ser. 2015, 657, 012011.

- Di Serafino, D.; Landi, G.; Viola, M. Directional TGV-based image restoration under Poisson noise. J. Imaging 2021, 7, 99.

- Bevilacqua, F.; Lanza, A.; Pragliola, M.; Sgallari, F. Whiteness-based parameter selection for Poisson data in variational image processing. Appl. Math. Model. 2023, 117, 197–218.

- Bertero, M. Regularization methods for linear inverse problems. In Inverse Problems: Lectures Given at the 1st 1986 Session of the Centro Internazionale Matematico Estivo (CIME) Held at Montecatini Terme, Italy, May 28–June 5 1986; Springer: Berlin/Heidelberg, Germany, 2006; pp. 52–112.

- Zanni, L.; Benfenati, A.; Bertero, M.; Ruggiero, V. Numerical methods for parameter estimation in Poisson data inversion. J. Math. Imaging Vis. 2015, 52, 397–413.

- Bevilacqua, F.; Lanza, A.; Pragliola, M.; Sgallari, F. Nearly exact discrepancy principle for low-count Poisson image restoration. J. Imaging 2021, 8, 1.

- Mylonopoulos, D.; Cascarano, P.; Calatroni, L.; Piccolomini, E.L. Constrained and unconstrained inverse Potts modelling for joint image super-resolution and segmentation. Image Process. Line 2022, 12, 92–110.

- Cascarano, P.; Franchini, G.; Kobler, E.; Porta, F.; Sebastiani, A. Constrained and unconstrained deep image prior optimization models with automatic regularization. Comput. Optim. Appl. 2023, 84, 125–149.

- Wen, Y.W.; Chan, R.H. Parameter selection for total-variation-based image restoration using discrepancy principle. IEEE Trans. Image Process. 2011, 21, 1770–1781.

- Golub, G.H.; Von Matt, U. Tikhonov Regularization for Large Scale Problems. In Scientific Computing: Proceedings of the Workshop, Hong Kong, 10–12 March 1997; Springer: Berlin/Heidelberg, Germany, 1997; pp. 3–26.

- Campagna, R.; Crisci, S.; Cuomo, S.; Marcellino, L.; Toraldo, G. Modification of TV-ROF denoising model based on Split Bregman iterations. Appl. Math. Comput. 2017, 315, 453–467.

- Rudin, L.I.; Osher, S. Total variation based image restoration with free local constraints. In Proceedings of the 1st International Conference on Image Processing, Austin, TX, USA, 13–16 November 1994; Volume 1, pp. 31–35.

- Lingenfelter, D.J.; Fessler, J.A.; He, Z. Sparsity regularization for image reconstruction with Poisson data. In Computational Imaging VII; SPIE: Bellingham, WA, USA, 2009; Volume 7246, pp. 96–105.

- Cascarano, P.; Calatroni, L.; Piccolomini, E.L. Efficient ℓ0 Gradient-Based Super-Resolution for Simplified Image Segmentation. IEEE Trans. Comput. Imaging 2021, 7, 399–408.

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

- Gottschling, N.M.; Antun, V.; Adcock, B.; Hansen, A.C. The troublesome kernel: Why deep learning for inverse problems is typically unstable. arXiv 2020, arXiv:2001.01258.

- Antun, V.; Renna, F.; Poon, C.; Adcock, B.; Hansen, A.C. On instabilities of deep learning in image reconstruction and the potential costs of AI. Proc. Natl. Acad. Sci. USA 2020, 117, 30088–30095.

- Arridge, S.; Maass, P.; Öktem, O.; Schönlieb, C.B. Solving inverse problems using data-driven models. Acta Numer. 2019, 28, 1–174.

- Venkatakrishnan, S.V.; Bouman, C.A.; Wohlberg, B. Plug-and-play priors for model based reconstruction. In Proceedings of the 2013 IEEE Global Conference on Signal and Information Processing, Austin, TX, USA, 3–5 December 2013; pp. 945–948.

- Pendu, M.L.; Guillemot, C. Preconditioned Plug-and-Play ADMM with Locally Adjustable Denoiser for Image Restoration. SIAM J. Imaging Sci. 2023, 16, 393–422.

- Cascarano, P.; Piccolomini, E.L.; Morotti, E.; Sebastiani, A. Plug-and-Play gradient-based denoisers applied to CT image enhancement. Appl. Math. Comput. 2022, 422, 126967.

- Kamilov, U.S.; Mansour, H.; Wohlberg, B. A plug-and-play priors approach for solving nonlinear imaging inverse problems. IEEE Signal Process. Lett. 2017, 24, 1872–1876.

- Hurault, S.; Kamilov, U.; Leclaire, A.; Papadakis, N. Convergent Bregman Plug-and-Play Image Restoration for Poisson Inverse Problems. arXiv 2023, arXiv:2306.03466.

- Romano, Y.; Elad, M.; Milanfar, P. The little engine that could: Regularization by denoising (RED). SIAM J. Imaging Sci. 2017, 10, 1804–1844.

- Combettes, P.L.; Pesquet, J.C. Proximal splitting methods in signal processing. In Fixed-Point Algorithms for Inverse Problems in Science and Engineering; Springer: Berlin/Heidelberg, Germany, 2011; pp. 185–212.

- Chierchia, G.; Chouzenoux, E.; Combettes, P.L.; Pesquet, J.C. The Proximity Operator Repository. Available online: http://proximity-operator.net/index.html (accessed on 25 January 2024).

- Chan, S.H.; Wang, X.; Elgendy, O.A. Plug-and-Play ADMM for Image Restoration: Fixed-Point Convergence and Applications. IEEE Trans. Comput. Imaging 2017, 3, 84–98.

- Sun, Y.; Wu, Z.; Xu, X.; Wohlberg, B.; Kamilov, U.S. Scalable plug-and-play ADMM with convergence guarantees. IEEE Trans. Comput. Imaging 2021, 7, 849–863.

- Nair, P.; Gavaskar, R.G.; Chaudhury, K.N. Fixed-Point and Objective Convergence of Plug-and-Play Algorithms. IEEE Trans. Comput. Imaging 2021, 7, 337–348.

- Cascarano, P.; Benfenati, A.; Kamilov, U.S.; Xu, X. Constrained Regularization by Denoising with Automatic Parameter Selection. IEEE Signal Process. Lett. 2024, 31, 556–560.

- Immerkaer, J. Fast noise variance estimation. Comput. Vis. Image Underst. 1996, 64, 300–302.

- Lin, T.; Ma, S.; Zhang, S. On the Global Linear Convergence of the ADMM with MultiBlock Variables. SIAM J. Optim. 2015, 25, 1478–1497.

- Bevilacqua, M.; Roumy, A.; Guillemot, C.; Alberi-Morel, M.L. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In Proceedings of the 23rd British Machine Vision Conference (BMVC), Surrey, UK, 3–7 September 2012.

- Kodak Lossless True Color Image Suite. Available online: https://r0k.us/graphics/kodak/ (accessed on 25 January 2024).

- Zhang, K.; Zuo, W.; Gu, S.; Zhang, L. Learning Deep CNN Denoiser Prior for Image Restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform-domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

- Buades, A.; Coll, B.; Morel, J.M. Non-local means denoising. Image Process. Line 2011, 1, 208–212.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).