Enhanced Waste Sorting Technology by Integrating Hyperspectral and RGB Imaging

Abstract

1. Introduction

2. Results

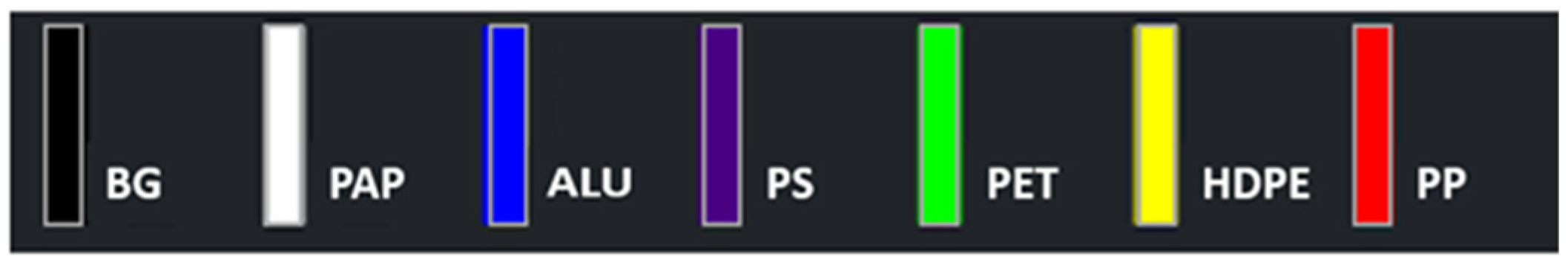

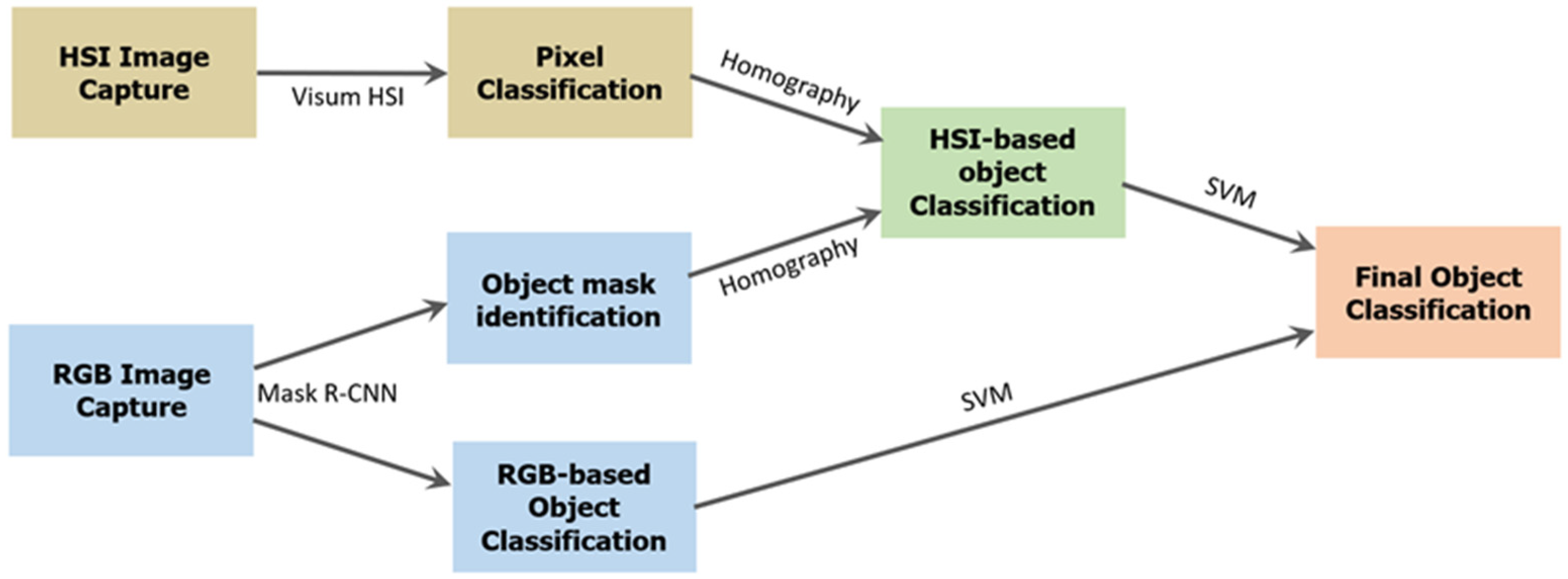

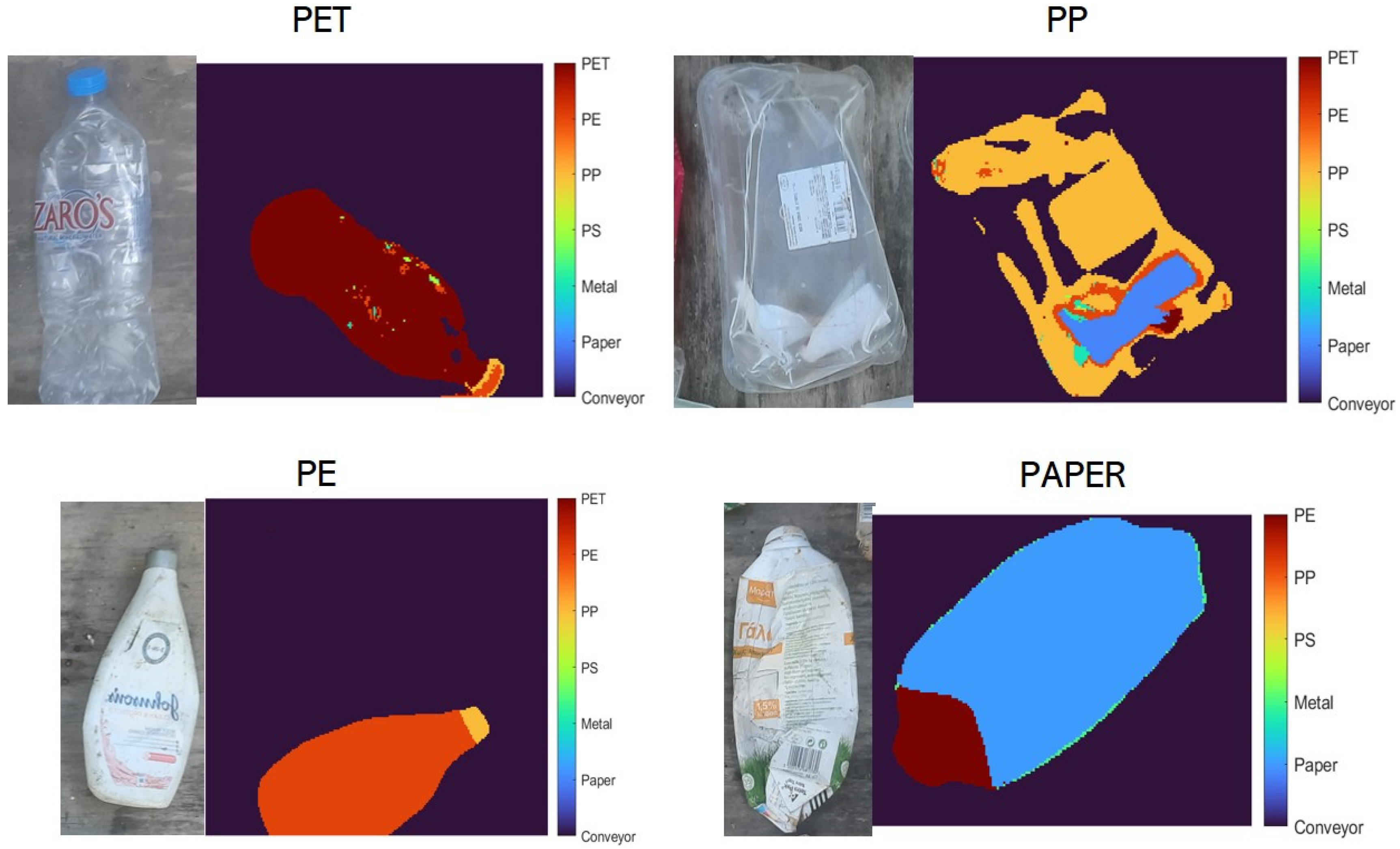

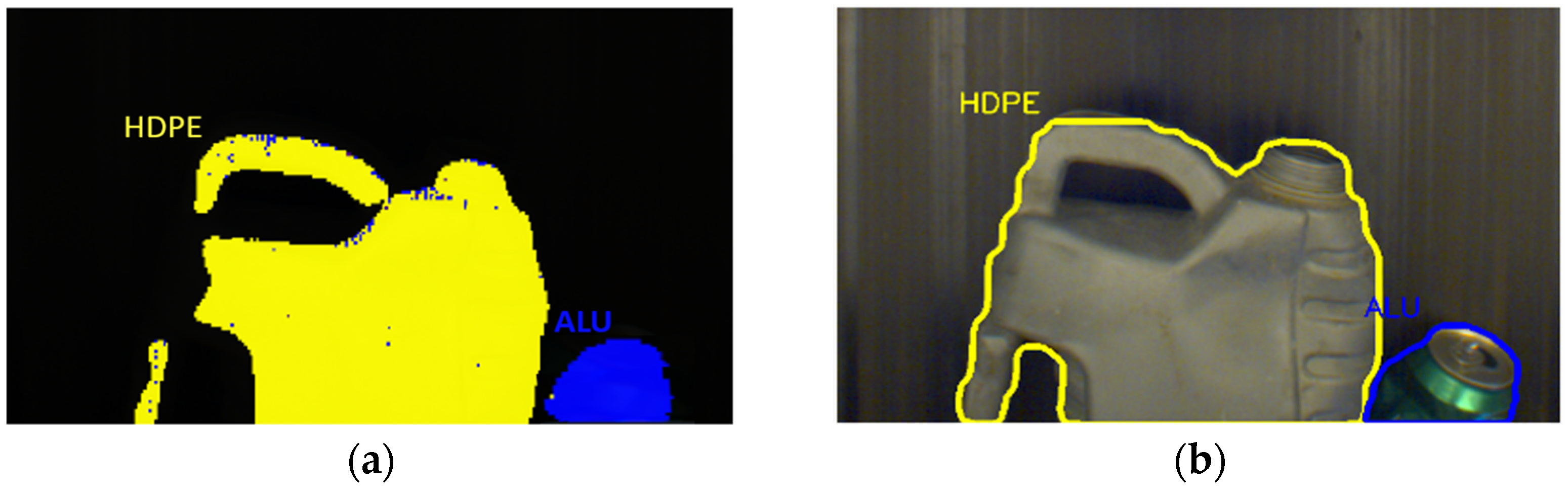

2.1. HSI-Based Waste Classification

2.2. RGB-Based Waste Classification

2.3. Integrated HSI and RGB Decision Making

3. Discussion

- An HSI module operating in the infrared spectrum, performing pixel-level material classification using spectral signatures.

- An RGB-based module operating in the visible spectrum to detect and classify waste objects based on visual appearance.

- A bi-modal system integrating HSI and RGB outputs to combine spectral and visual information, improving classification accuracy.

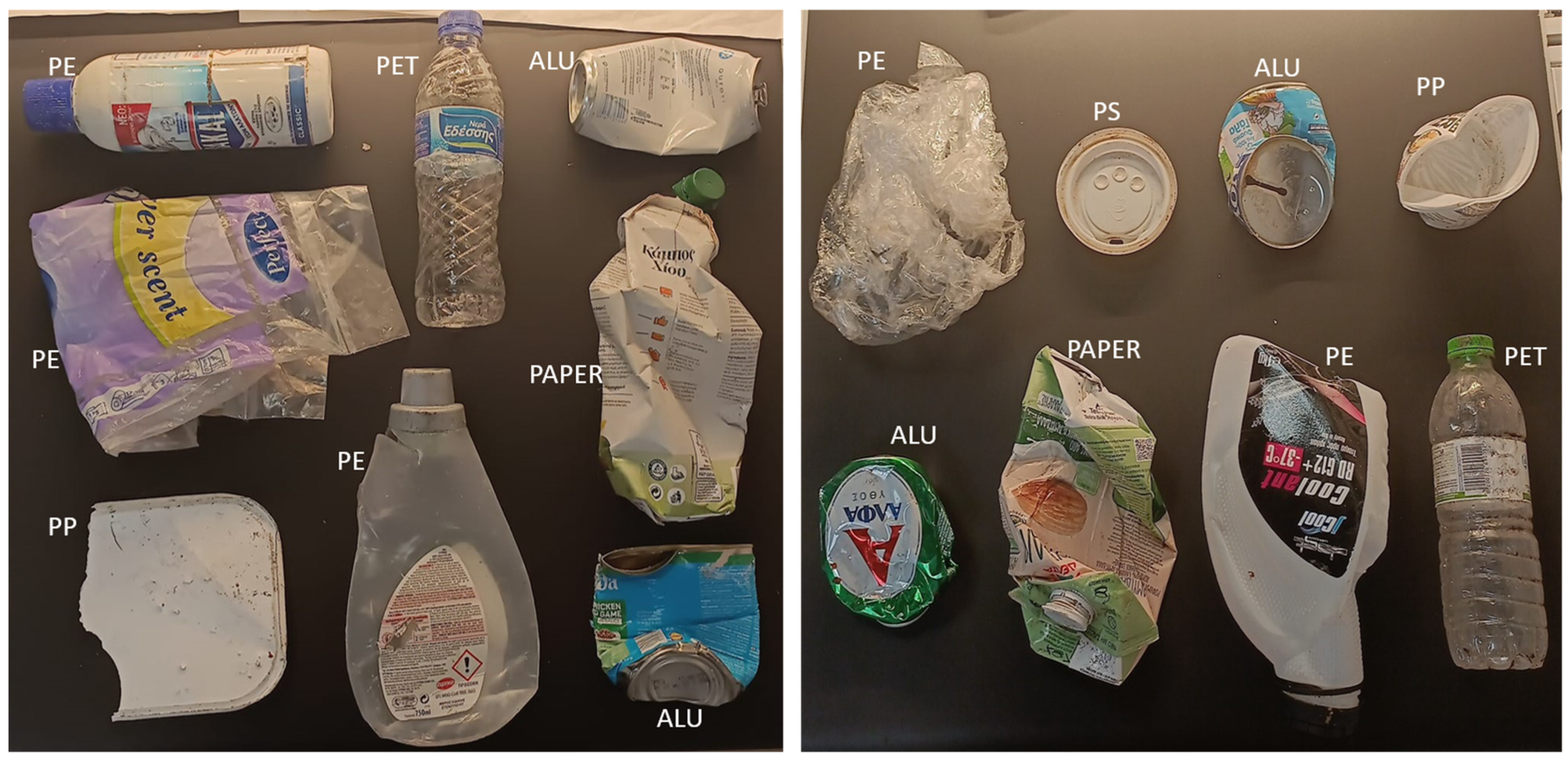

4. Materials and Methods

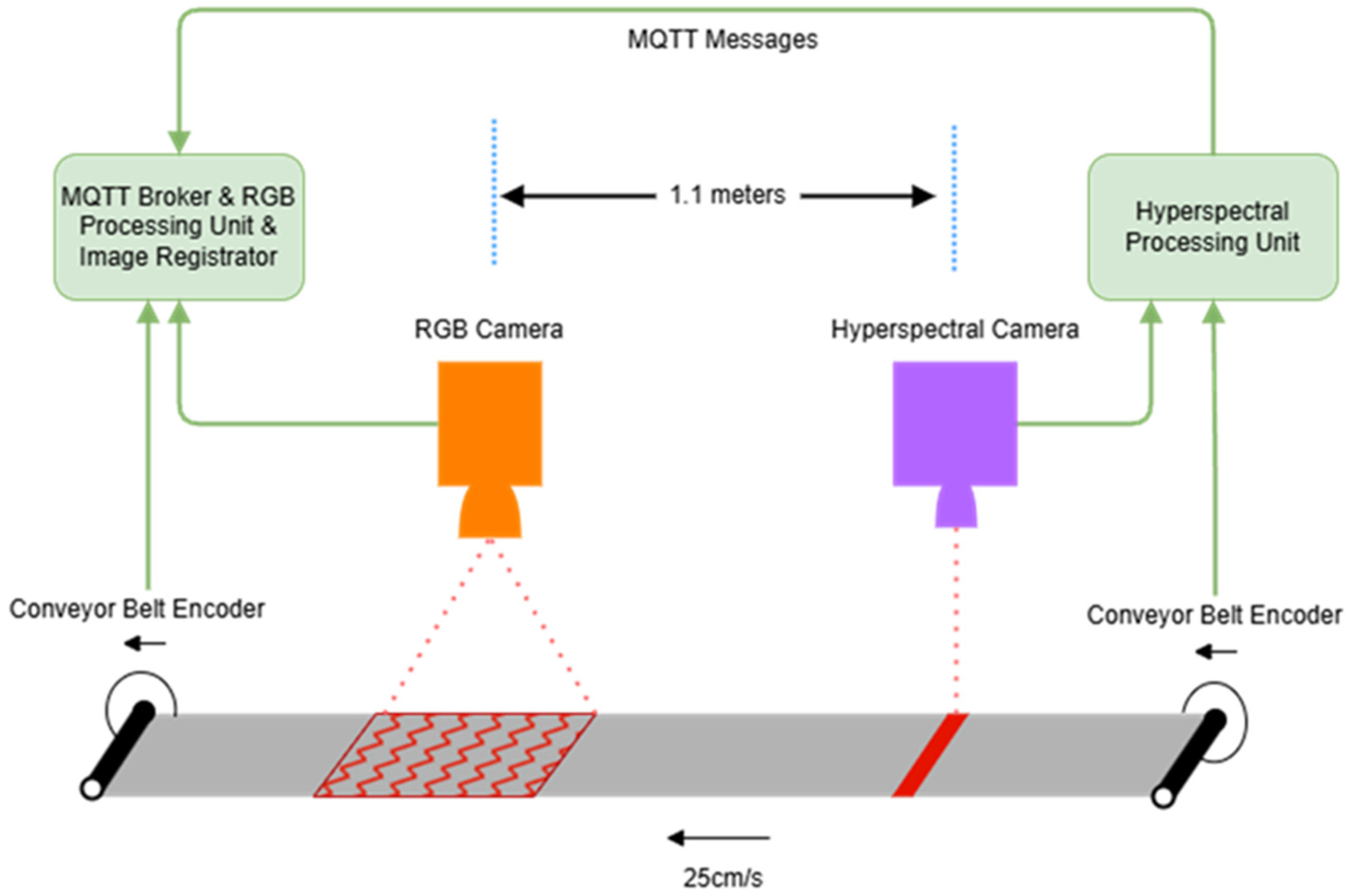

4.1. Experimental Setup

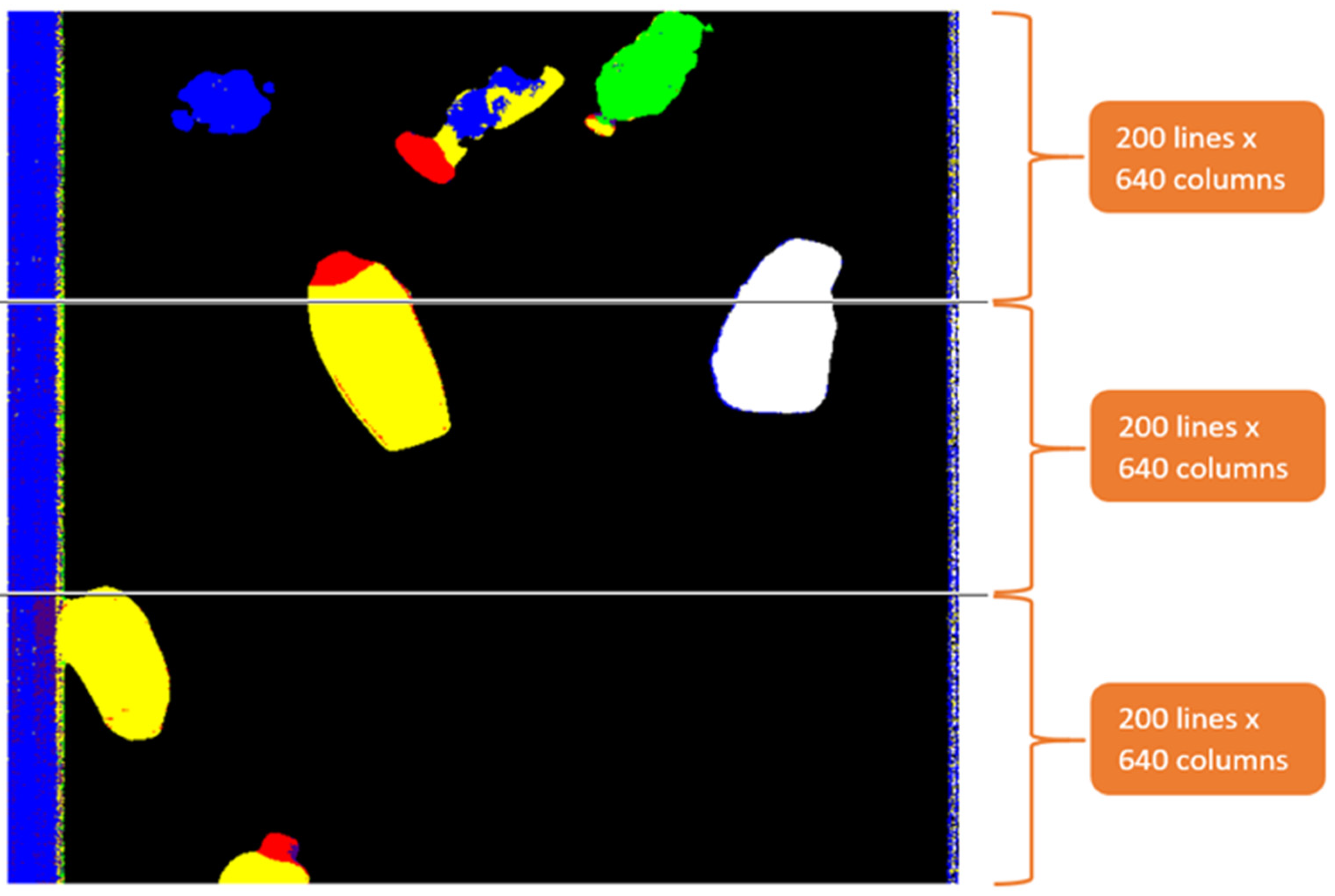

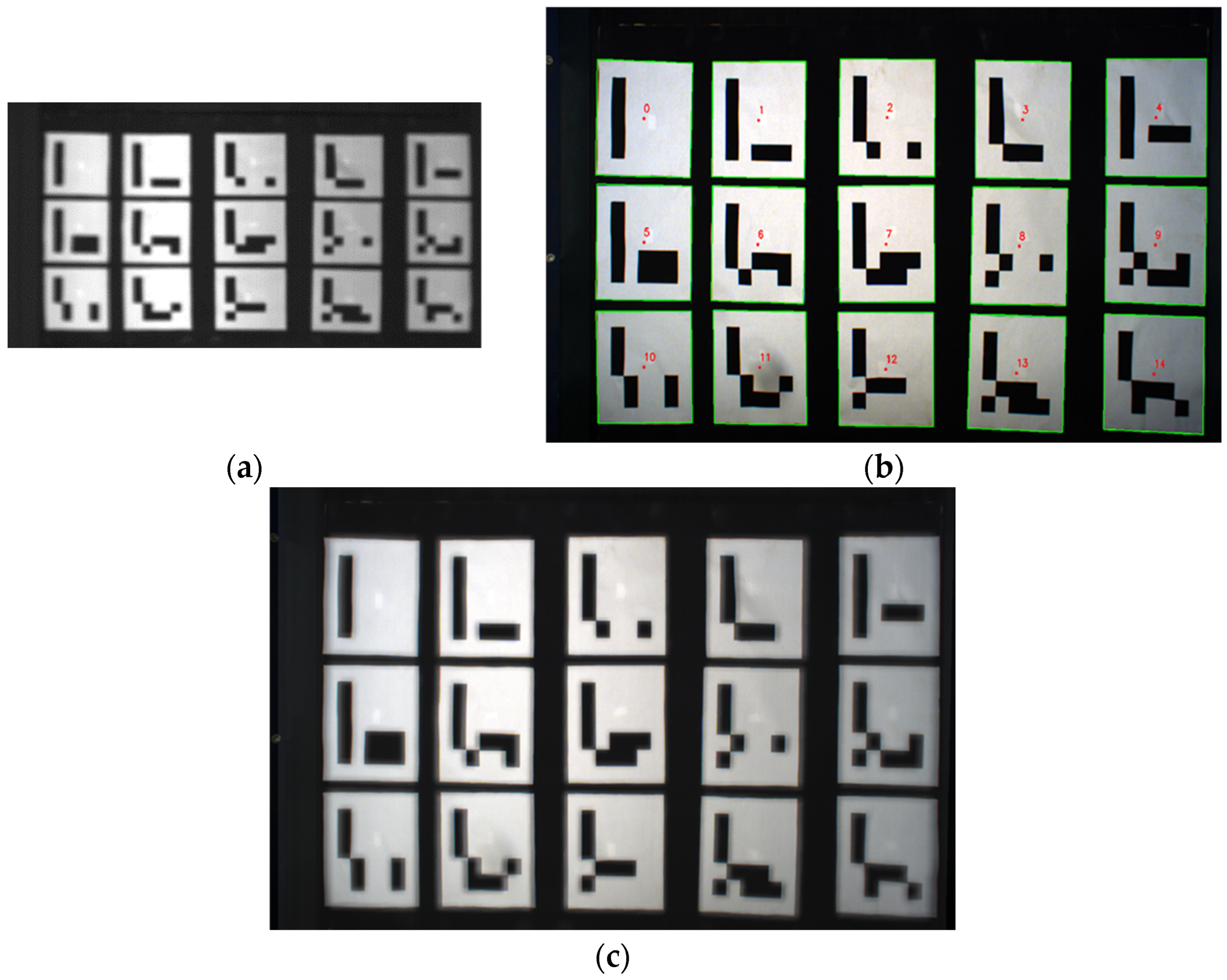

4.2. HSI System and Data Acquisition

4.3. Waste Classification in the RGB Domain

4.4. Integrated Decision Making from HSI and RGB Waste Classification

- The sizes of the two images are different: HSI batches of size 200 × 640 vs. RGB images of size 1280 × 1080.

- The view angles of the two cameras are different: vertical HSI line scans vs. two-dimensional RGB scans with perspective distortions that depend on the relative position of the camera and the object.
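
The size mismatch alone (not the perspective difference) can be illustrated with nearest-neighbour resampling of an HSI class-label map onto a larger pixel grid. The following is a minimal sketch, not the registration procedure used in this work: it assumes both images cover the same area of the conveyor, and the function name `resample_labels` is hypothetical. Whether 200 × 640 and 1280 × 1080 denote rows × columns or width × height is not stated here, so the target shape is passed explicitly.

```python
def resample_labels(labels, out_rows, out_cols):
    """Nearest-neighbour resampling of a 2D label map (list of lists).

    Assumption: source and target images cover the same physical area,
    so only the pixel grid differs. Perspective correction is NOT handled.
    """
    in_rows = len(labels)
    in_cols = len(labels[0])
    out = []
    for r in range(out_rows):
        # Map the target row index back to the closest source row.
        src_row = labels[min(in_rows - 1, r * in_rows // out_rows)]
        out.append([
            src_row[min(in_cols - 1, c * in_cols // out_cols)]
            for c in range(out_cols)
        ])
    return out


# Tiny example: a 2 x 2 label map stretched to 4 x 4.
small = [[1, 2],
         [3, 4]]
stretched = resample_labels(small, 4, 4)
```

Nearest-neighbour sampling is chosen here because class labels are categorical, so interpolating between them would produce meaningless values.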

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Class | Precision | Sensitivity | Specificity | FNR | FPR | F1-Score |
|---|---|---|---|---|---|---|
| PET | 0.740 | 0.987 | 0.950 | 0.013 | 0.050 | 0.846 |
| PP | 0.900 | 0.918 | 0.980 | 0.082 | 0.020 | 0.909 |
| PE | 0.920 | 0.786 | 0.983 | 0.214 | 0.017 | 0.848 |
| PS | 0.960 | 0.980 | 0.992 | 0.020 | 0.008 | 0.970 |
| PAPER | 0.950 | 0.979 | 0.990 | 0.021 | 0.010 | 0.964 |
| ALU | 0.990 | 0.861 | 0.998 | 0.139 | 0.002 | 0.921 |
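
The columns of the table above are consistent with the standard one-vs-rest definitions of these metrics. As a minimal sketch, assuming those standard definitions (the function names and the confusion counts in the example are hypothetical, not data from this study), they can be computed as follows:

```python
def f1_from(precision, sensitivity):
    """Harmonic mean of precision and sensitivity (recall)."""
    if precision + sensitivity == 0:
        return 0.0
    return 2 * precision * sensitivity / (precision + sensitivity)


def binary_metrics(tp, fp, fn, tn):
    """One-vs-rest metrics from confusion counts for a single class."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return {
        "precision": precision,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "fnr": 1.0 - sensitivity,   # false negative rate
        "fpr": 1.0 - specificity,   # false positive rate
        "f1": f1_from(precision, sensitivity),
    }


# Hypothetical counts, for illustration only.
example = binary_metrics(tp=8, fp=2, fn=2, tn=88)
```

For instance, `f1_from(0.740, 0.987)` rounds to 0.846 and `f1_from(0.900, 0.918)` rounds to 0.909, matching the PET and PP rows.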

| Class | Object Classification >50/70% Pixels | Object Classification >90% Pixels |
|---|---|---|
| PET | 5/5 | 1/5 |
| PP | 10/10 | 6/10 |
| PE | 8/8 | 4/8 |
| PS | 2/2 | 2/2 |
| PAPER | 8/8 | 7/8 |
| ALU | 6/6 | 6/6 |
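
The object-level counts above depend on what fraction of an object's pixels must agree on a class. A minimal sketch of such a pixel-vote rule is given below; it assumes that background pixels are excluded from the vote and that an object stays unclassified when no class exceeds the threshold. Both choices, as well as the names `object_class` and `"Background"`, are illustrative assumptions rather than the exact rule used here.

```python
from collections import Counter


def object_class(pixel_labels, threshold=0.5, background="Background"):
    """Return the majority class of an object if its share of the
    non-background pixels exceeds `threshold`, otherwise None."""
    votes = Counter(label for label in pixel_labels if label != background)
    total = sum(votes.values())
    if total == 0:
        return None  # nothing but background inside the object
    label, count = votes.most_common(1)[0]
    return label if count / total > threshold else None


# Hypothetical object: 70% of its pixels labelled PET, 30% labelled PP.
pixels = ["PET"] * 7 + ["PP"] * 3
loose = object_class(pixels, threshold=0.5)
strict = object_class(pixels, threshold=0.9)
```

With these hypothetical pixels the object is accepted as PET at the 50% threshold and rejected at the 90% threshold, which mirrors how the stricter column of the table can contain fewer classified objects.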

| Material | Precision | Sensitivity | Specificity | FNR | FPR | F1-Score |
|---|---|---|---|---|---|---|
| PET | 0.94 | 0.93 | 0.995 | 0.064 | 0.005 | 0.93 |
| PP/PS | 0.84 | 0.87 | 0.986 | 0.159 | 0.014 | 0.85 |
| PE | 0.96 | 0.98 | 0.985 | 0.036 | 0.015 | 0.99 |
| PAPER | 0.97 | 0.96 | 0.990 | 0.031 | 0.010 | 0.96 |
| ALU | 0.99 | 0.99 | 0.999 | 0.010 | 0.001 | 0.99 |

| Material | Precision | Sensitivity | Specificity | FNR | FPR | F1-Score |
|---|---|---|---|---|---|---|
| PET | 1.00 | 0.97 | 1.00 | 0.0 | 0.0 | 1.00 |
| PP | 0.88 | 1.00 | 0.97 | 0.0 | 0.03 | 0.97 |
| PE | 1.00 | 0.97 | 1.00 | 0.04 | 0.0 | 0.98 |
| PS | 1.0 | 0.67 | 1.00 | 0.0 | 0.0 | 1.00 |
| PAPER | 1.00 | 1.00 | 1.00 | 0.0 | 0.0 | 1.00 |
| ALU | 1.00 | 1.00 | 1.00 | 0.0 | 0.0 | 1.00 |
| Dark_PP | 1.00 | 0.50 | 1.00 | 0.0 | 0.0 | 0.67 |

| Material | RGB Success Rate (%) | HSI (>50%) Success Rate (%) | JOINT/SVM Success Rate (%) |
|---|---|---|---|
| PET | 92.79 | 86.75 | 98.99 |
| PP | 89.66 | 94.25 | 98.85 |
| PE | 97.89 | 97.89 | 97.89 |
| PS | 0.0 | 100.0 | 100.0 |
| PAPER | 98.15 | 98.25 | 100.0 |
| ALU | 100.0 | 88.82 | 100.0 |
| Dark_PP | 0.0 | 0.0 | 83.33 |

| Material Class | Description |
|---|---|
| PET | Polyethylene-terephthalate-based waste materials |
| PP | Polypropylene-based waste materials |
| PE | Polyethylene-based waste materials |
| PS | Polystyrene-based waste materials |
| PAPER | Paper and tetrapak-like waste materials |
| ALU | Aluminium waste materials |

| Feature | Description |
|---|---|
| Mask RCNN_Class | Instance segmentation class |
| Mask RCNN_Confidence | Instance segmentation score |
| P_Total | Number of pixels in the RGB mask |
| P_nonB_HSI | Number of pixels in the HSI mask |
| HSI_PET_Percentage | Percentage of mask pixels classified as PET |
| HSI_PE_Percentage | Percentage of mask pixels classified as PE |
| HSI_ALU_Percentage | Percentage of mask pixels classified as ALU |
| HSI_PAP_Percentage | Percentage of mask pixels classified as PAPER |
| HSI_PP_Percentage | Percentage of mask pixels classified as PP |
| HSI_PS_Percentage | Percentage of mask pixels classified as PS |
| HSI_Background_Percentage | Percentage of mask pixels classified as Background |
| Cross_Check_Class | Ground truth |
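
To illustrate how the features listed above could be assembled for one detected object, a minimal sketch follows. It rests on several assumptions that are not confirmed by the table: the HSI labels are taken to be already aligned with the RGB mask, percentages are computed over all mask pixels, and P_nonB_HSI is interpreted as the count of non-background HSI pixels. The function name `build_feature_row` is hypothetical, and Cross_Check_Class is omitted because it is the target label rather than an input.

```python
from collections import Counter

# Class abbreviations as they appear in the feature names above.
HSI_CLASSES = ["PET", "PE", "ALU", "PAP", "PP", "PS", "Background"]


def build_feature_row(rgb_class, rgb_confidence, hsi_labels_in_mask):
    """Build one feature row for a single RGB instance mask.

    `hsi_labels_in_mask` holds one HSI class label per mask pixel
    (assumed to be already registered to the RGB image).
    """
    total = len(hsi_labels_in_mask)
    counts = Counter(hsi_labels_in_mask)
    row = {
        "Mask RCNN_Class": rgb_class,
        "Mask RCNN_Confidence": rgb_confidence,
        "P_Total": total,
        # Assumed meaning: HSI pixels in the mask that are not background.
        "P_nonB_HSI": total - counts["Background"],
    }
    for cls in HSI_CLASSES:
        share = 100.0 * counts[cls] / total if total else 0.0
        row[f"HSI_{cls}_Percentage"] = share
    return row


# Hypothetical object: RGB says PET, HSI pixels mostly agree.
labels = ["PET"] * 6 + ["PP"] * 2 + ["Background"] * 2
row = build_feature_row("PET", 0.93, labels)
```

Rows of this form, paired with the ground-truth class, are the kind of input a joint classifier such as the SVM reported in the success-rate table could be trained on.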

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alexakis, G.; Pellegrino, M.; Rodriguez-Turienzo, L.; Maniadakis, M. Enhanced Waste Sorting Technology by Integrating Hyperspectral and RGB Imaging. Recycling 2025, 10, 179. https://doi.org/10.3390/recycling10050179
