Abstract

With the rapid development of machine learning and cloud computing, deep learning methods based on big data have been widely applied in the assessment of lithium-ion battery health status. However, enhancing the accuracy and robustness of assessment models remains a challenge. This study introduces an innovative T-LSTM prediction network. Initially, a one-dimensional convolutional neural network (1DCNN) is employed to effectively extract local and global features from raw battery data, providing enriched inputs for subsequent networks. Subsequently, LSTM and transformer models are ingeniously combined to fully utilize their unique advantages in sequence modeling, further enhancing the accurate prediction of battery health status. Experiments were conducted using both proprietary and open-source datasets, and the results validated the accuracy and robustness of the proposed method. The experimental results on the proprietary dataset show that the T-LSTM-based estimation method exhibits excellent performance in various evaluation metrics, with MSE, RMSE, MAE, MAPE, and MAXE values of 0.43, 0.66, 0.53, 0.58, and 1.65, respectively. The performance improved by 30–50% compared to that of the other models. The method demonstrated superior performance in comparative experiments, offering novel insights for optimizing intelligent battery management and maintenance strategies.

1. Introduction

As fossil fuel depletion and carbon emission concerns increasingly gain attention, people are turning their focus toward renewable energy systems [1]. Both the academic and industrial sectors are actively engaged in research to find solutions. In this context, lithium-ion batteries (LIBs) have garnered significant attention as a clean energy storage technology. Their prospects in electric vehicles (EVs) and energy storage systems (ESSs) are highly promising. However, it is important to note that continuous charge–discharge cycles gradually degrade the performance of LIBs, leading to diminished capacity or reduced output power [2,3].

State of health (SOH) is one of the key parameters for characterizing battery capacity; it reflects the degree of battery degradation and aids in battery maintenance, assessment, and analysis [4,5]. SOH variations directly affect the performance, longevity, and safety of battery packs [6,7]. The accurate estimation of SOH ensures the safe and reliable operation of automotive batteries [8]. In this paper, the SOH is defined from the perspective of capacity, that is, the ratio of a battery’s actual capacity to its initial capacity. When the SOH falls below 80%, the battery approaches its maximum usable life and should be replaced [9]. Over the past few decades, numerous estimation techniques have emerged. Ren et al. [10] in 2023 and Hu et al. [11] in 2019 described and evaluated SOH prediction methods, categorizing them into three groups: (1) direct measurement methods, (2) model-based methods, and (3) data-driven methods.

Direct measurement methods primarily involve measuring the terminal voltage, current, and impedance. For example, methods such as Coulomb counting [12] and electrochemical impedance spectroscopy (EIS) [13] have been used. These approaches require expensive and complex equipment, making it challenging to apply them in real-life situations [14]. Model-based methods, on the other hand, focus on establishing a mapping between measured variables and the state of health [15]. Examples include equivalent circuit models [16] and electrochemical models [17]. However, the accuracy of SOH estimation in these methods depends on the model selection, and the parameters of the models can change over time, making it difficult to obtain accurate results in practical applications [18].

Finally, with the advent of the Information Age, data-driven methods have become increasingly attractive in both the industrial and academic sectors. Data-driven approaches primarily rely on variables available during the operation of lithium-ion batteries as features, such as battery current, voltage, and temperature, without the need to consider the complex physical and chemical changes within the battery. Yang et al. [19] used a random forest algorithm to predict the SOH for lithium-ion batteries, and their proposed method improved the accuracy and robustness of predictions. Feng et al. [20] introduced a battery model based on support vector machines (SVMs), which achieved high accuracy within typical temperature and charging-state ranges for electric vehicle operating conditions. Xiong et al. [21] proposed a battery SOH estimation method based on weighted least squares support vector machines, which can provide highly robust and accurate estimation results. Yang et al. [22] presented a Gaussian process regression (GPR) model based on charging curves and demonstrated the robustness and reliability of this model through dynamic discharge curve validation.

In recent years, the rapid development of machine learning and neural networks has led to innovative applications in multiple fields, and with technological advancements, an increasing number of models are being used to predict battery state of health (SOH) [23,24]. In the field of machine learning, particularly in deep learning, neural networks have become powerful tools. Neural networks can make predictions by learning patterns and features from data, making them suitable for complex nonlinear problems [25]. Examples include recurrent neural networks (RNNs), convolutional neural networks (CNNs), and transformers, among others. These models can learn from historical data, uncover hidden patterns and trends, and, as a result, make more accurate predictions of battery health status [26,27].

In addition to these classical neural network architectures, several other models have played important roles in battery SOH prediction. For example, the one-dimensional convolutional neural network (1DCNN) is a type of CNN designed specifically for processing sequential data, such as time series; 1DCNNs can effectively capture local patterns within sequences, making them a useful tool in battery SOH prediction. Furthermore, gated recurrent units (GRUs) [28] and long short-term memory (LSTM) networks [29] are improved versions of RNNs that address long-term dependency issues by introducing gate mechanisms. Ma et al. [30] proposed an SOH estimation method based on an enhanced LSTM network by selecting some external characteristic parameters from the charge–discharge process to describe battery aging mechanisms, achieving high accuracy and robustness. Xu et al. [31] introduced a feature selection method that helps neural networks train more effectively by removing irrelevant features from the input data during the data preparation step. In addition, skip connections were added to the convolutional neural network–long short-term memory (CNN-LSTM) model to address neural network degradation caused by multiple layers of LSTM. Removing less significant features improved the prediction accuracy and reduced the network computational load. Wang et al. [32] presented a hybrid 1DCNN-LSTM model for predicting the health status of lithium-ion batteries. Lucian Ungurean et al. [33] introduced a lithium-ion battery online health status prediction method based on gated recurrent unit neural networks. The GRU algorithm resulted in slightly higher estimation errors compared to those of LSTM, but it needed significantly fewer parameters (reduced by approximately 25%), making it a suitable candidate for embedded implementations. Liu et al. [34] proposed a CNN-GRU network with an attention mechanism to estimate the SOH of lithium-ion batteries.

Despite some success in battery SOH prediction using models such as 1DCNN, GRU, and LSTM, there is still room for improvement in their accuracy and robustness. To further enhance the accuracy and stability of SOH prediction, an innovative approach has been adopted, and the T-LSTM model has been proposed.

First, this model incorporates a 1DCNN as the initial layer, which can extract richer local features from the input data. The 1DCNN is effective at capturing short-term patterns in time-series data, thus providing more valuable information in higher-level models. Second, the LSTM model is integrated into the transformer structure. This new model architecture aims to fully leverage the advantages of LSTM in handling time-series data while utilizing the transformer’s self-attention mechanism to better capture relationships within the sequence. By incorporating LSTM into either the encoder or decoder of the transformer, the strengths of LSTM in addressing long-term dependency issues can be harnessed while benefiting from the transformer’s parallel processing capabilities to speed up the training and inference processes.

Finally, global predictions are made through two fully connected layers.

In summary, this paper makes four main contributions:

- First, an innovative feature extraction method, namely, the time intervals between equal voltage levels, is introduced and subjected to thorough convolutional processing through a 1DCNN layer. This method contains rich information for capturing the dynamic changes in the battery’s operational state. By simplifying the data processing pipeline, the temporal trends in battery health status are effectively captured, laying a solid foundation for subsequent model construction.

- Second, our research combines the 1DCNN, transformer, and LSTM neural networks in a highly synergistic model structure. The 1DCNN is used as an initial layer, cleverly capturing local features of battery data and transforming them into richer input information, thereby establishing a strong foundation for the entire model’s performance. Furthermore, the introduction of the transformer’s self-attention mechanism enables the model to better grasp the intrinsic relationships between different time steps in battery data, addressing long-term dependency issues. More importantly, the fusion of LSTM with the transformer allows the model to consider information at different levels when processing sequence data, further enhancing the overall predictive performance. This multi-model collaborative strategy not only significantly improves the prediction accuracy but also enhances the model’s adaptability to various data variations.

- Third, experiments validate the robustness of the proposed model in the presence of noise. Noise is introduced into the model’s input, simulating potential data variations in real-world environments. The model continues to perform well under this noise, which confirms its robustness. This means that the model not only improves the accuracy but also maintains its performance in unstable environments, providing a more reliable solution for battery SOH prediction tasks.

- Fourth, the model was validated on a self-constructed dataset and publicly available datasets, demonstrating significant improvements in various performance metrics. The model’s robustness and generalizability across different datasets were also showcased. This further demonstrates the effectiveness and practicality of the model.

In conclusion, our novel T-LSTM model, which combines LSTM and the transformer and incorporates the 1DCNN as its initial layer, brings higher precision and robustness to the field of battery SOH prediction. This innovative approach provides a powerful tool for battery management and maintenance, potentially advancing the wider application of battery technology in areas such as sustainable energy and intelligent transportation.

The remaining sections of this paper are organized as follows. A detailed introduction to the lithium-ion battery (LIB) experimental dataset and the feature extraction methods are provided in Section 2. In Section 3, the innovative T-LSTM model, which combines LSTM and the transformer along with the characteristics of the 1DCNN, is elaborated to better address the battery SOH prediction problem. In Section 4, the experimental results are presented, and an in-depth discussion and analysis of the effectiveness of the proposed T-LSTM model are conducted. Finally, in Section 5, a conclusion is offered that summarizes the main contributions and research outcomes of this paper.

2. Dataset and Health Feature Extraction

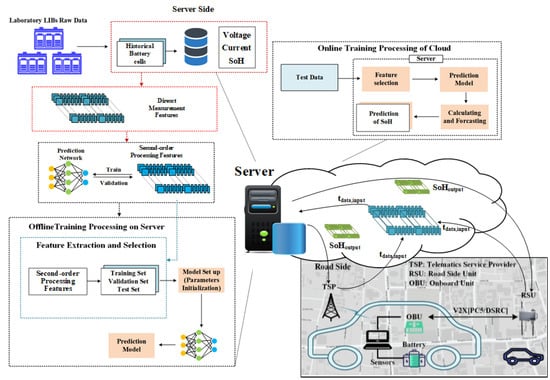

The dataset plays a crucial role in machine learning, and having a sufficient dataset of lithium-ion batteries (LIB) is advantageous for constructing robust and versatile evaluation models. Therefore, in this study, battery aging experiments were conducted, and a set of lithium-ion battery aging datasets was created. These datasets were derived from experimental data from the Battery Cycle Life Test Platform [35]. The generation of this experimental dataset provides a solid foundation for the research. Additionally, an intelligent cloud battery management system (BMS) [36] was developed, as depicted in Figure 1. This system enables real-time monitoring and estimation of battery status and early safety warnings. This paper focuses primarily on the study of battery state of health (SOH) within the intelligent cloud BMS, including methods for extracting health features and the implementation of hybrid neural network models.

Figure 1. Schematic diagram of an intelligent cloud battery management system (the SOH part) based on an intelligent connected vehicle management platform.

2.1. Battery Aging Dataset

The cycle life of a battery is determined by its intrinsic characteristics, which can be manifested through external attributes. During the charging process, the external data of the battery, such as the voltage, current, and temperature, can be measured to reflect the battery’s life condition. This study aims to estimate the battery’s lifespan by measuring the terminal voltage of the battery. The voltage during battery charging is considered the most easily measurable state parameter.

- Dataset 1.

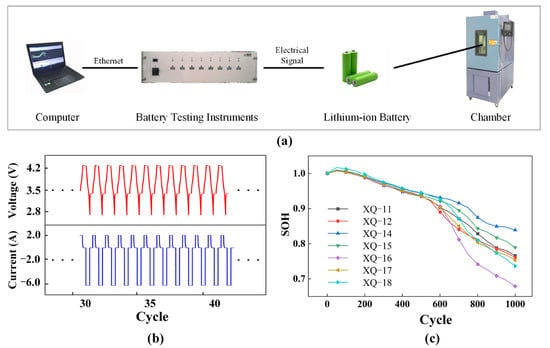

An experimental platform for battery cycle life testing was established, as shown in Figure 2a. The detector used was the Neware BTS-5V12A, with a voltage and current accuracy of 0.05%. Seven Prospower ICR18650P batteries from the same batch, named XQ-11, XQ-12, XQ-14, XQ-15, XQ-16, XQ-17, and XQ-18, with nominal capacities and voltages of 2 Ah and 3.6 V, respectively, were selected. The detailed specifications are provided in Table 1. The experiments were conducted in a temperature-controlled chamber (Sanwood, SMG-150-CC) at a constant temperature of 25 °C, with a deviation of ≤±1 °C. All seven batteries underwent the same set of repeated charge–discharge cycles, including 5 battery preconditioning cycles, 50 battery aging cycles, and 20 battery capacity calibration cycles. As shown in Figure 2b and Table 2, during each battery aging cycle, the batteries were charged at a constant current rate of 1 C until the voltage reached 4.2 V. Subsequently, a constant voltage of 4.2 V was applied until the current decreased to 0.1 C. Following this, the batteries were discharged at a constant current rate of 3 C until the voltage dropped to the cutoff voltage of 2.5 V, after which they were left to rest for 1 h. The state of health (SOH) curves for the seven studied batteries are illustrated in Figure 2c. In this experiment, the SOH is defined as the actual capacity of the lithium-ion battery divided by its initial capacity, as shown in Equation (1):

Figure 2. Dataset 1: (a) battery cycle life test experimental setup; (b) voltage and current response for the cycling profile; and (c) SOH data of the tested batteries.

Table 1. Specification parameters for the batteries in Dataset 1.

Table 2. Cycling profile for battery aging in Dataset 1 [37].

$$\mathrm{SOH} = \frac{C_{\mathrm{current}}}{C_{\mathrm{initial}}} \times 100\% \tag{1}$$

Here, $C_{\mathrm{current}}$ represents the current maximum discharge capacity of the battery (remaining capacity), and $C_{\mathrm{initial}}$ represents the initial capacity. When the remaining capacity drops below 80% of the initial capacity, the battery’s performance deteriorates exponentially. Once this threshold is reached, the battery is considered an unreliable power source and should be replaced accordingly [9].

- Dataset 2.

This dataset originates from a battery performance research project at the University of Cambridge [35]. The dataset comprises experimental data from a set of coin cell batteries utilizing commercially available Eunicell LIR2032 model batteries with a capacity of 45 mAh. These batteries employ LiCoO2 (LCO) as the cathode material and graphite as the anode material. The batteries underwent continuous charge and discharge cycle experiments, and their performance was studied under various temperature conditions, including 25 degrees Celsius (eight batteries), 35 degrees Celsius (two batteries), and 45 degrees Celsius (two batteries) in environmental chambers.

Each cycle consisted of a constant current–constant voltage (CC-CV) charging process at a 1 C rate (45 mA) until the voltage reached 4.2 V, followed by a constant current (CC) discharge process at a 2 C rate, discharging the battery until the voltage dropped to 3 V. The dataset is available in .txt format on GitHub and serves as a valuable experimental resource for battery performance research.

Four lithium-ion batteries with the experiment IDs 25C02, 25C03, 25C06, and 25C07 were selected for the study. These four batteries underwent charge and discharge experiments at a temperature of 25 degrees Celsius. The choice of these batteries was based on their representativeness or distinctive performance characteristics observed during the experiments.
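For both datasets, the SOH label follows the capacity-based definition of Equation (1) together with the 80% replacement threshold. The snippet below is a minimal sketch of that calculation; the function names and the capacity readings are hypothetical and are not taken from the datasets.

```python
def soh_percent(current_capacity_ah, initial_capacity_ah):
    """Capacity-based SOH: present discharge capacity over initial capacity, in percent."""
    if initial_capacity_ah <= 0:
        raise ValueError("initial capacity must be positive")
    return 100.0 * current_capacity_ah / initial_capacity_ah


def is_end_of_life(soh, threshold=80.0):
    """True once the SOH has fallen below the replacement threshold."""
    return soh < threshold


# Hypothetical discharge capacities (Ah) of a 2 Ah cell at four points in its life.
capacities = [2.00, 1.90, 1.70, 1.58]
soh_values = [soh_percent(c, capacities[0]) for c in capacities]
replace_flags = [is_end_of_life(s) for s in soh_values]
```

Only the last hypothetical reading falls below the threshold, so only that entry of `replace_flags` is `True`.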

2.2. Health Feature Extraction

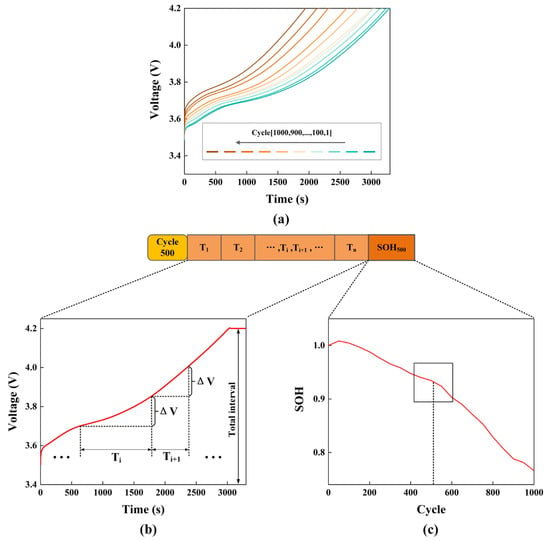

Battery cycle life can be represented based on external characteristics such as voltage, internal resistance, and capacity, which can reflect the deterioration and health of the battery [8]. To accurately predict the SOH of a battery, it is crucial to extract feature variables that reliably reveal the battery’s aging trends. Figure 3a displays the charging voltage curves of batteries across different cycles. The voltage curve varies with the cycle count, and as the number of cycles increases, the charging time for each cycle continuously decreases.

Figure 3. (a) Voltage curves during the aging cycles; (b) specific feature extraction process during the 500th cycle; and (c) specific label extraction process during the 500th cycle.

In this paper, it was assumed that the SOH of the battery remains constant within a single cycle.

For Dataset 1, charge and discharge cycling experiments were conducted on each battery, and experimental data were collected for 1000 cycles. The charging voltage curve was selected from the experimental data, and the voltage range during charging was evenly divided into several intervals. The time required in each interval was then extracted as a feature. As shown in Figure 3b, a voltage range from 3.4 V to 4.2 V was chosen as the overall charging interval, denoted as $[V_{\min}, V_{\max}]$; $\Delta V$ represents the voltage range for each feature and is referred to as the voltage sampling interval or voltage resolution. The time needed to traverse the $i$-th voltage sampling interval, denoted as $\Delta t_i$, was used as a feature $F_i$. Therefore, the number of voltage sampling intervals, denoted as $n$, is referred to as the number of features, and its mathematical representation is given in Equation (2):

$$n = \left\lfloor \frac{V_{\max} - V_{\min}}{\Delta V} \right\rfloor \tag{2}$$

The operation $\lfloor \cdot \rfloor$ rounds the value down to the nearest integer. The overall voltage interval is divided into $n$ subintervals. Some of these subintervals may be empty. Therefore, a feature can be expressed as shown in Equation (3):

$$F_i = \Delta t_i = t_i^{\mathrm{end}} - t_i^{\mathrm{start}}, \quad i = 1, 2, \ldots, n \tag{3}$$

where $t_i^{\mathrm{start}}$ and $t_i^{\mathrm{end}}$ represent the start and end times of the $i$-th subinterval, respectively. Therefore, each charging cycle of the battery is characterized by $n$ feature variables.
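The extraction of the time spent in each equal-width voltage interval can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: the charging voltage is assumed to rise monotonically, interval boundaries are located by linear interpolation between samples, and an interval the curve never fully traverses is assigned a feature value of 0. The function names are hypothetical.

```python
import math


def crossing_time(times, voltages, level):
    """First time the charging curve reaches `level`, by linear interpolation."""
    for i in range(len(times) - 1):
        v0, v1 = voltages[i], voltages[i + 1]
        if v1 > v0 and v0 <= level <= v1:
            frac = (level - v0) / (v1 - v0)
            return times[i] + frac * (times[i + 1] - times[i])
    return None  # the curve never crosses this voltage level


def interval_time_features(times, voltages, v_min, v_max, dv):
    """Time spent in each voltage interval of width dv between v_min and v_max."""
    # The small epsilon guards against floating-point error, e.g. (4.2 - 3.4) / 0.1.
    n = int(math.floor((v_max - v_min) / dv + 1e-9))
    edges = [crossing_time(times, voltages, v_min + i * dv) for i in range(n + 1)]
    features = []
    for start, end in zip(edges, edges[1:]):
        # Assumption: an interval with a missing boundary contributes 0.
        features.append(0.0 if start is None or end is None else end - start)
    return features


# Hypothetical charging curve: time in seconds, terminal voltage in volts.
times = [0.0, 10.0, 20.0, 60.0]
voltages = [3.0, 3.25, 3.5, 4.0]
features = interval_time_features(times, voltages, v_min=3.0, v_max=4.0, dv=0.5)
```

For the 3.4 V to 4.2 V range used for Dataset 1, a hypothetical resolution of 0.1 V would give eight features per cycle.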

Similarly, the discharge capacity was continuously selected from each discharge cycle of these 1000 cycles of battery charge–discharge experimental data to characterize the SOH, which was used as the label variable, as shown in Figure 3c.

Dataset 2 was processed using the same approach, with the only difference being that the charging voltage range for Dataset 2 is 3.7 V to 4.2 V.

2.3. Sliding Window Design for Time Series

In battery state of health (SOH) prediction, the time-series sliding window is a commonly used technique employed to process sequential data and extract information about battery behavior and performance [36]. The role of the sliding window is as follows.

Feature extraction: The health status of a battery is influenced not only by the current moment but also by its historical behavior. Time-series sliding windows allow us to use data from a certain period in the past as a window, enabling the extraction of features within that window. By extracting these features, the model can capture patterns and trends in the battery’s behavior, leading to a more accurate prediction of the battery’s SOH. Adjusting the size of the sliding window allows for a balance between considering historical data and maintaining real-time relevance.

Sequence modeling: Time-series sliding windows can be used to build sequence models such as recurrent neural networks (RNNs) and long short-term memory (LSTM) networks. These models can account for the temporal nature of the data, allowing them to better capture the dynamic changes in battery behavior. By using the data within the sliding window as the input, the model can learn patterns of how the battery’s state changes over time, enabling predictions of future battery health status.

Therefore, utilizing time-series sliding windows in battery SOH prediction is advantageous for extracting valuable features from time-series data and constructing appropriate sequence models, thus enhancing prediction accuracy and robustness.

In the experiments described in this paper, our data consist of cyclic feature vectors $X$ and SOH labels $Y$, which can be represented by Equations (4) and (5):

$$X = [x_1, x_2, \ldots, x_N] \tag{4}$$

$$Y = [y_1, y_2, \ldots, y_N] \tag{5}$$

where $x_k$ and $y_k$ are the feature vector and SOH label of cycle $k$, $k$ represents one of these cycles, and $k \in \{1, 2, \ldots, N\}$, with $N$ the total number of cycles. Then, the size of the time-series sliding window was set as $w$, and the predicted time step was set as $p$. The sliding window was utilized to construct the input for the model training, as shown in Equations (6) and (7):

$$X_k = [x_{k-w+1}, x_{k-w+2}, \ldots, x_k] \tag{6}$$

$$Y_k = [y_k, y_{k+1}, \ldots, y_{k+p-1}] \tag{7}$$

where $X_k$ and $Y_k$ represent the feature matrix and label vector used for predicting cycle $k$, respectively, and $p$ represents the lookahead steps for prediction. This results in a set of sequences consisting of multivariate feature matrices and their corresponding SOH label vectors. Based on the sequence length, sequence pairs are combined to establish a set of training batches. In general, if the batch size is too large, training may settle in local optima; if it is too small, it may be challenging to achieve convergence. Therefore, setting an appropriate batch size is crucial. In this paper, the batch size for Dataset 1 is set to 8, while the batch size for Dataset 2 is set to 32.
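The construction of windowed training pairs and batches can be sketched as below. The indexing convention is an assumption made for illustration: each window covers the most recent cycles up to and including the current one, and the label vector starts at the current cycle, so a horizon of 1 yields the current-cycle SOH. The function names are hypothetical.

```python
def make_windows(features, labels, window, horizon=1):
    """Pair each run of `window` consecutive cycles with `horizon` SOH labels."""
    samples = []
    for k in range(window - 1, len(features) - horizon + 1):
        x_k = features[k - window + 1 : k + 1]  # cycles k-window+1 .. k
        y_k = labels[k : k + horizon]           # labels from cycle k onward (assumed convention)
        samples.append((x_k, y_k))
    return samples


def make_batches(samples, batch_size):
    """Group consecutive samples into batches; the last batch may be smaller."""
    return [samples[i : i + batch_size] for i in range(0, len(samples), batch_size)]


# Hypothetical data: six cycles, one feature per cycle, SOH labels in percent.
features = [[float(i)] for i in range(6)]
labels = [100.0 - i for i in range(6)]
samples = make_windows(features, labels, window=3)
batches = make_batches(samples, batch_size=8)
```

With six cycles and a window of three, four samples are produced, and they fit into a single batch of size 8.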

2.4. Problem Formulation

Battery SOH prediction is a time-series regression task. The SOH prediction in this paper involves feature extraction from the charging voltage in the battery aging cycle curves. For cycle $k$, it is assumed that historical voltage data from cycle $k-w+1$ to the current cycle $k$ are available, where $w$ is the size of the sliding window. In other words, the SOH prediction task for battery aging cycle $k$ can be expressed as Equation (8):

$$\hat{y}_k = f(x_{k-w+1}, x_{k-w+2}, \ldots, x_k) \tag{8}$$

where $x_i$ is the feature vector extracted from cycle $i$, function $f$ represents the predictive model to be learned, and $\hat{y}_k$ is the predicted output for cycle $k$.

The purpose of this study is to establish a battery state of health (SOH) prediction model, namely, T-LSTM. The model is built by analyzing and extracting features from the voltage of the battery’s charging cycle curve. During the model training process, the discharge capacity of the corresponding cycle is utilized as the actual SOH label $y_k$ of cycle $k$, which is paired with the extracted features to train the model and learn the function $f$. Therefore, the model only requires the measurement of the battery’s voltage cycle curve at the current moment to generate a predicted SOH value. In other words, this model predicts the current battery SOH based on the battery’s voltage cycle curve at the current time. Our primary focus is to ensure that the model achieves higher accuracy and robustness.

3. Methodology

In this section, a detailed description of the proposed model, T-LSTM, designed for predicting the state of health (SOH) of lithium-ion batteries, is provided. The T-LSTM model leverages the powerful capabilities of convolutional neural networks (CNNs), long short-term memory (LSTM), and transformers, effectively capturing temporal patterns and intricate relationships within battery data. A novel and efficient approach to battery health prediction is introduced by combining features such as equidistant voltage time differentials and sliding window techniques.

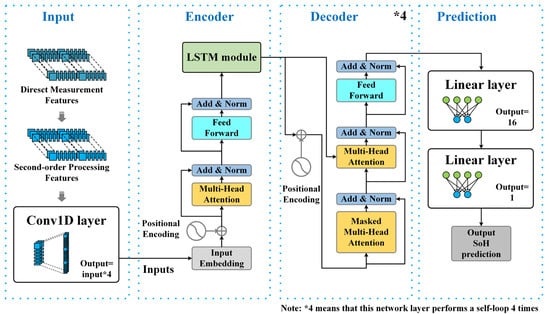

The workflow of the T-LSTM model is illustrated in Figure 4 and can be briefly summarized as follows. First, features are extracted from the raw data and then processed using a time-series sliding window. Finally, the processed features are fed into the T-LSTM model to enable the model to learn the mapping from features to the battery SOH. Specifically, in the T-LSTM model, the following take place.

Figure 4. Overall schematic of the proposed SOH prediction model.

The feature matrix is initially passed through a one-dimensional convolutional neural network (1DCNN) layer to extract local features.

The obtained local features are introduced into the transformer encoder. However, the original transformer encoder may not perform optimally in some cases, particularly when dealing with long-term dependencies. To address this issue, an LSTM network is incorporated as part of the encoder. The introduction of LSTM helps in capturing long-term dependencies in sequence data, thereby enhancing the model’s expressive power.

The model proceeds to the decoding phase.

Finally, the features are further mapped and transformed through two fully connected layers to obtain the ultimate SOH prediction.

By combining LSTM with the transformer encoder, the model enables a comprehensive understanding of patterns and regularities in sequence data, thereby enhancing the accuracy and robustness of SOH predictions.

3.1. The 1DCNN Layer

In this study, the role of the 1D convolutional neural network (1DCNN) extends beyond feature extraction; it also plays a significant role in feature transformation. Our 1DCNN model processes the input sequences through convolution operations, transforming the original battery data into higher-dimensional feature representations. Specifically, the output dimension of the 1DCNN is set to four times the input dimension. This dimension expansion reflects the remarkable ability of the 1DCNN to capture complex patterns and variations in time series. Through convolution operations, the 1DCNN can detect and enhance local features in the input sequences, making them more discriminative and expressive. Therefore, the extension of the output dimension of the 1DCNN is not merely a simple size change but rather a rich representation of the inherent structure in the data.

This high-dimensional feature representation provides more information at subsequent model layers, offering neural networks richer inputs and contributing to improving the model performance. By expanding the output dimension of the 1DCNN to four times that of the input, crucial features in battery data can be more comprehensively captured, which provides more representative inputs for subsequent transformer encoders and LSTM networks, ultimately enhancing the overall prediction performance.
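The channel expansion performed by the 1DCNN layer can be illustrated with a plain-Python one-dimensional convolution whose number of output channels is four times the number of input channels. This is only a sketch: the kernel size of 3, the zero padding, and the random weights are assumptions, and the function names are hypothetical; the paper does not specify these details in this section.

```python
import random


def conv1d_same(x, weights, bias):
    """1D convolution with zero padding (odd kernel size assumed).

    x: [T][C_in], weights: [C_out][C_in][K], bias: [C_out] -> output [T][C_out]
    """
    seq_len, c_in = len(x), len(x[0])
    kernel_size = len(weights[0][0])
    pad = kernel_size // 2
    out = []
    for t in range(seq_len):
        row = []
        for kernel, b in zip(weights, bias):
            acc = b
            for c in range(c_in):
                for j in range(kernel_size):
                    s = t + j - pad
                    if 0 <= s < seq_len:  # positions outside the sequence count as zero
                        acc += kernel[c][j] * x[s][c]
            row.append(acc)
        out.append(row)
    return out


def expand_channels(x, factor=4, kernel_size=3, seed=0):
    """Apply a randomly initialized convolution with factor * C_in output channels."""
    rng = random.Random(seed)
    c_in = len(x[0])
    c_out = factor * c_in
    weights = [[[rng.uniform(-0.1, 0.1) for _ in range(kernel_size)]
                for _ in range(c_in)] for _ in range(c_out)]
    return conv1d_same(x, weights, [0.0] * c_out)


# Hypothetical window of 5 cycles with 2 features each -> 5 steps with 8 channels.
window = [[0.1 * t, 1.0] for t in range(5)]
expanded = expand_channels(window)
```

The sequence length is preserved while the feature dimension grows from 2 to 8, which is the shape change described above.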

3.2. Encoder Layer

The encoder plays a critical role in this model and is responsible for extracting relevant features from the output of the 1D convolutional neural network (Conv1D) module and subsequently inputting these features into the transformer encoding layer for further processing. The composition of the encoding layer encompasses three essential parts, aiming to effectively capture the sequential characteristics and dependencies of battery data.

First, in the encoding layer, positional encoding is applied to the data. This involves the fusion of original features with positional encoding to incorporate feature information with their positions in the sequence. Simultaneously, masking techniques are employed to ensure that each prediction only utilizes preceding time steps, emulating the causality of the battery data. This helps ensure that the model adheres to temporal causality when handling battery data.

Second, the encoding layer incorporates the self-attention module. The attention module allows the model to establish connections between different time steps to better capture dependencies and crucial patterns within the sequence. This mechanism enhances the model’s perception of internal correlations in the sequence.

Finally, the encoding layer integrates the long short-term memory (LSTM) module. The introduction of LSTM allows its advantages in modeling sequential data to be fully exploited, better capturing long-term dependencies within a sequence. This is particularly important in battery data analysis, as battery behavior and characteristics are often influenced over extended time intervals.

Overall, the role of the encoder in the model is to map sequence data that have undergone feature extraction into a more advanced representation, thereby improving its ability to capture sequence patterns and dependencies in battery data. By combining positional encoding, attention modules, and LSTM modules, the encoder not only enhances the model’s understanding of sequence data but also enhances its capability to predict battery health status.

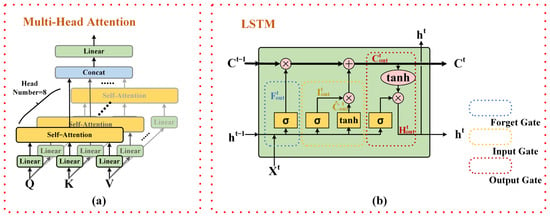

3.2.1. Multihead Self-Attention

A multihead self-attention module is introduced into the encoding layer, as depicted in Figure 5a. The core idea behind the multihead self-attention mechanism is to map input features to multiple distinct attention subspaces and calculate attention weights for each of these subspaces separately. Such a design allows the model to simultaneously focus on different sequence positions and feature dimensions, thereby comprehensively capturing the structure and correlations within the sequence. Specifically, for each head, the multihead self-attention mechanism computes a set of attention weights, and by combining these weights, the final attention output is obtained.

Figure 5. (a) Schematic diagram of the structure of the multihead attention module and (b) schematic diagram of the structure of the LSTM module.

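The attention mechanism of each head is given in Equation (9), and the multihead attention mechanism is given in Equation (10):

$$\mathrm{head}_i = \mathrm{softmax}\left(\frac{Q_i K_i^{T}}{\sqrt{d_k}}\right) V_i \tag{9}$$

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^{O} \tag{10}$$

where $Q_i$, $K_i$, and $V_i$ represent linear transformations of the query, key, and value for head $i$, respectively, with $K_i^{T}$ denoting the transpose of key $K_i$; $\sqrt{d_k}$ signifies the scaling factor, where $d_k$ is the key dimension, which keeps the attention scores in a range that maintains a stable gradient. The $\mathrm{softmax}$ function is employed to compute the attention weights, $h$ stands for the number of heads, $\mathrm{head}_i$ signifies the attention output within each subspace, and $W^{O}$ is the output projection matrix.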

In conclusion, the introduction of the multihead self-attention mechanism in the encoding layer enables the model to comprehensively capture dependencies and crucial patterns within sequence data by focusing attention on different time steps and feature dimensions. This enhancement significantly improves the model’s perception of internal relationships within the sequence.
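The multihead computation can be sketched in plain Python as scaled dot-product attention applied to separate slices of the feature dimension, with an optional causal mask of the kind the encoder uses so that each step attends only to earlier steps. This is a simplified illustration: the learned query, key, value, and output projections are omitted (each head uses its slice of the input directly), and the function names are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V, causal=False):
    """Scaled dot-product attention for one head; Q, K, V are lists of row vectors."""
    d_k = len(K[0])
    out = []
    for i, q in enumerate(Q):
        scores = []
        for j, k in enumerate(K):
            if causal and j > i:
                scores.append(float("-inf"))  # masked: step i cannot see later steps
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)
        out.append([sum(weights[j] * V[j][c] for j in range(len(V)))
                    for c in range(len(V[0]))])
    return out


def multi_head_self_attention(X, num_heads, causal=False):
    """Split features into heads, attend per head, and concatenate the results."""
    d_model = len(X[0])
    if d_model % num_heads != 0:
        raise ValueError("feature dimension must be divisible by num_heads")
    d_head = d_model // num_heads
    heads = []
    for h in range(num_heads):
        sub = [row[h * d_head:(h + 1) * d_head] for row in X]
        heads.append(attention(sub, sub, sub, causal))
    return [sum((heads[h][t] for h in range(num_heads)), []) for t in range(len(X))]


# Hypothetical sequence of 3 time steps with 4 features, processed by 2 heads.
X = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
Y = multi_head_self_attention(X, num_heads=2, causal=True)
```

With the causal mask enabled, the first time step can attend only to itself, so its output equals its input.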

3.2.2. Long Short-Term Memory

LSTM (long short-term memory) [29] is a type of recurrent neural network structure designed for handling sequential data. As shown in Figure 5b, its core architecture consists of three components: the forget gate, input gate, and output gate. In the figure, each red circle represents pointwise operations performed on vectors, while the yellow rectangles represent neural network layers, with the characters above them denoting the activation functions used in the neural network. Each neural network layer has two attributes: weights $W$ and biases $b$. For the input vector $x$, the neural network layer performs the operation defined in Equation (11):

$$y = g(Wx + b) \tag{11}$$

where $g$ represents the activation function, and in this paper, the activation functions used include the sigmoid function and the tanh function.

The key feature that sets LSTM apart from standard RNNs is the line running through the unit in Figure 5b, namely the cell state. The cell state can be regarded as the “memory” of the recurrent neural network for input data. It is denoted as $C_t$ and represents a summary of all input information seen by the network up to time step $t$.

These gates in LSTM effectively control the flow of feature information in the sequence, enabling the network to selectively remember and forget feature information between different time steps, thereby capturing long-term dependencies more effectively. In the following sections, the role of each gate will be introduced separately.

- Forget Gate:

The primary role of the forget gate is to determine whether the network should forget the previous information at the current time step. The forget gate is calculated by using the current input $x_t$ and the previous time step’s hidden state $h_{t-1}$. The sigmoid function is used to constrain the output of the forget gate between 0 and 1, controlling the degree of information retention in the memory cell, with 0 representing completely forgetting the information and 1 representing completely retaining it. The output vector $f_t$ can be expressed using Equation (12):

$$f_t = \sigma(W_f \cdot [h_{t-1}, x_t] + b_f) \tag{12}$$

where $W_f$ and $b_f$ represent the weight and bias parameters of the forget gate, respectively, and $\sigma$ denotes the sigmoid function.

- Input Gate:

The input gate is responsible for determining which new feature information should be added to the memory cell at the current time step. Similar to the forget gate, the input gate uses the current input $x_t$ and the hidden state from the previous time step $h_{t-1}$. The same inputs are used to compute a candidate memory cell $\tilde{C}_t$, which stores potential new feature information. The output of the input gate $i_t$, also ranging between 0 and 1, represents the extent to which the information from the candidate memory cell is added to the memory cell, where 0 indicates that none of the candidate information is added and 1 indicates that all of it is added. This can be expressed using Equations (13) and (14):

$$i_t = \sigma(W_i \cdot [h_{t-1}, x_t] + b_i) \tag{13}$$

$$\tilde{C}_t = \tanh(W_C \cdot [h_{t-1}, x_t] + b_C) \tag{14}$$

Here, $W_i$ and $b_i$ represent the weight and bias of the input gate, respectively, $W_C$ and $b_C$ represent the weight and bias of the candidate memory cell, respectively, and $\tanh$ represents the hyperbolic tangent function.

- Updating of the Cell State:

Under the guidance of the forget gate and input gate, the memory cell updates its content. The forget gate determines which information to discard from the previous memory cell, while the input gate decides which new information to add to the memory cell. The memory cell $C_t$ can be represented using Equation (15):

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{15}$$

where $C_{t-1}$ represents the previous time step’s memory cell and $\odot$ denotes element-wise multiplication.

- Output Gate:

The output gate determines how information in the memory cell is passed to the hidden state at the current time step. The gate activation $o_t$ is computed from the current input $x_t$ and the hidden state from the previous time step $h_{t-1}$. The memory cell is passed through a tanh function, which bounds its values to $[-1, 1]$, and is then scaled by $o_t$, as expressed in Equation (16):

$$o_t = \sigma(W_o \cdot [h_{t-1}, x_t] + b_o), \qquad h_t = o_t \odot \tanh(C_t) \tag{16}$$

where $h_t$ represents the hidden state at the current time step, and $W_o$ and $b_o$ are the weight and bias of the output gate, respectively.

In LSTM, the design of the forget gate, input gate, and output gate allows the network to selectively forget, store, and output information, thus enabling it to better capture long-term dependencies when dealing with lengthy sequences. Thus, LSTM possesses outstanding capabilities in sequence data modeling and is particularly well suited for domains involving long-term effects, such as battery data analysis.
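The gate computations described above can be sketched as a single LSTM time step in NumPy. This is a minimal illustration under assumed sizes and randomly initialized weights, not the trained network from this study; the dictionary keys such as `W_f` are names chosen for the example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step: forget gate, input gate, cell update, output gate."""
    z = np.concatenate([h_prev, x_t])                     # [h_{t-1}, x_t]
    f_t = sigmoid(params["W_f"] @ z + params["b_f"])      # forget gate
    i_t = sigmoid(params["W_i"] @ z + params["b_i"])      # input gate
    c_hat = np.tanh(params["W_c"] @ z + params["b_c"])    # candidate memory cell
    c_t = f_t * c_prev + i_t * c_hat                      # cell-state update
    o_t = sigmoid(params["W_o"] @ z + params["b_o"])      # output gate
    h_t = o_t * np.tanh(c_t)                              # new hidden state
    return h_t, c_t

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4                            # illustrative sizes only
params = {}
for name in ("f", "i", "c", "o"):
    params[f"W_{name}"] = rng.normal(scale=0.1, size=(hidden_size, hidden_size + input_size))
    params[f"b_{name}"] = np.zeros(hidden_size)

# Run the cell over a short random sequence of five time steps.
h, c = np.zeros(hidden_size), np.zeros(hidden_size)
for x_t in rng.normal(size=(5, input_size)):
    h, c = lstm_step(x_t, h, c, params)
```

Because the hidden state is the product of a sigmoid output and a tanh output, every component of `h` stays strictly inside (-1, 1), whereas the cell state `c` is not bounded in this way.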

3.3. Decode Layer

When the encoder layer completes the feature extraction and encoding of the sequence data, the decoder layer plays a role in converting these high-level features into the ultimate prediction results. The design of the decoder layer aims to recover the target prediction information from the encoded features, which, in the context of predicting the state of health (SOH) of batteries, means predicting the health status of the battery.

Following the decoder layer, two fully connected layers are employed. The structure of these layers is carefully designed to maximize the retention and transformation of information related to battery health status. These two fully connected layers map the encoded high-level features to the final SOH prediction values through linear transformations and nonlinear activation functions. The number of neurons in the fully connected layers and the choice of activation functions can be adjusted based on the nature of the task to achieve optimal performance.
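A minimal sketch of such a two-layer fully connected head is given below. The layer widths, the ReLU activation, and the random weights are assumptions for illustration only, since the text leaves the number of neurons and the activation functions as adjustable choices.

```python
import numpy as np

def dense_head(features, w1, b1, w2, b2):
    """Two fully connected layers: a ReLU hidden layer, then a linear output."""
    hidden = np.maximum(0.0, features @ w1 + b1)   # nonlinear transformation
    return hidden @ w2 + b2                        # one SOH value per sample

rng = np.random.default_rng(0)
batch, d_feat, d_hidden = 4, 16, 8                 # hypothetical sizes
features = rng.normal(size=(batch, d_feat))        # stands in for encoded features
w1, b1 = rng.normal(scale=0.1, size=(d_feat, d_hidden)), np.zeros(d_hidden)
w2, b2 = rng.normal(scale=0.1, size=(d_hidden, 1)), np.zeros(1)
soh_pred = dense_head(features, w1, b1, w2, b2)
```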

3.4. Evaluation Metrics

Five performance evaluation metrics were utilized in this study: mean squared error (MSE), root mean squared error (RMSE), mean absolute error (MAE), mean absolute percentage error (MAPE), and maximum error (MAXE). Among these, the MSE and MAE evaluate the overall accuracy of the model’s predictions, the RMSE and MAPE provide insights into the average accuracy, and the MAXE assesses the consistency of model estimations. Lower values of these metrics indicate better model performance.

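Mean squared error (MSE):

$$\mathrm{MSE} = \frac{1}{n}\sum_{t=1}^{n}\left(y_t - \hat{y}_t\right)^2$$

Root mean squared error (RMSE):

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{t=1}^{n}\left(y_t - \hat{y}_t\right)^2}$$

Mean absolute error (MAE):

$$\mathrm{MAE} = \frac{1}{n}\sum_{t=1}^{n}\left|y_t - \hat{y}_t\right|$$

Mean absolute percentage error (MAPE):

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{t=1}^{n}\left|\frac{y_t - \hat{y}_t}{y_t}\right|$$

Maximum absolute error (MAXE):

$$\mathrm{MAXE} = \max_{1 \le t \le n}\left|y_t - \hat{y}_t\right|$$

Here, $y_t$ represents the actual value at time $t$, $\hat{y}_t$ is the predicted value at time $t$, and $n$ is the total number of predictions.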
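For concreteness, the five metrics can be computed as in the following sketch. The SOH values are made up for the example, and the MAPE is expressed in percent, which is an assumption about the reporting convention.

```python
import numpy as np

def soh_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE, MAPE (in percent), and MAXE for two sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_true - y_pred
    mse = np.mean(err ** 2)
    return {
        "MSE": mse,
        "RMSE": np.sqrt(mse),
        "MAE": np.mean(np.abs(err)),
        "MAPE": 100.0 * np.mean(np.abs(err / y_true)),
        "MAXE": np.max(np.abs(err)),
    }

y_true = [100.0, 98.0, 96.0, 94.0]   # made-up SOH values in percent
y_pred = [99.0, 98.5, 97.0, 93.0]    # made-up predictions
metrics = soh_metrics(y_true, y_pred)
```

The MAPE divides by the actual value, so it is only meaningful when the actual values are nonzero, which holds for SOH values.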

4. Experimental Results and Analysis

4.1. Experimental Settings

Two datasets were utilized in this study. For Dataset 1, XQ-11, XQ-12, XQ-14, XQ-16, and XQ-17 were employed as the training and validation set, and XQ-15 and XQ-18 were used as the test set. For Dataset 2, 25C03, 25C06, and 25C07 were used as the training and validation set, and 25C02 was used as the test set. Furthermore, the combined training and validation data were randomly split into training and validation subsets at an 8:2 ratio.
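A random 8:2 split of this kind can be sketched as follows. The sample count, the fixed seed, and the choice to split at the level of individual samples are assumptions made for illustration.

```python
import numpy as np

def split_train_val(n_samples, train_fraction=0.8, seed=0):
    """Randomly partition sample indices into training and validation subsets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(round(train_fraction * n_samples))
    return order[:n_train], order[n_train:]

# Hypothetical pool of 1000 samples drawn from the training/validation cells.
train_idx, val_idx = split_train_val(n_samples=1000)
```

The test cells (XQ-15, XQ-18, and 25C02) are kept out of this pool entirely, so the split never touches test data.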

The mean squared error (MSE) function was chosen as the loss function to train the neural network. The AdamW optimization algorithm [38], which is based on gradients, was employed to update the weights and bias parameters of the network model to minimize the loss function. The initial learning rate was set to 0.0004. Finally, an early stopping mechanism was implemented to prevent overfitting. Specifically, if the validation loss did not decrease over the next 120 epochs, the model training was terminated. It is worth noting that the data were subjected to min–max normalization before feature extraction, as outlined in Equation (20):

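$$x_i' = \frac{x_i - x_{\min}}{x_{\max} - x_{\min}}, \quad i = 1, \ldots, n$$

In this context, $x_i$ represents the raw data, while $n$ represents the total amount of data; $x_i'$ denotes the normalized data that are input into the model, and $x_{\min}$ and $x_{\max}$ are the minimum and maximum of the raw data. Normalizing the data before they are input into the model ensures faster convergence during the learning process. Additionally, the hardware configuration and model parameters used in the experiments are detailed in Table 3.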
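The min–max normalization step can be sketched as below. The voltage values are made up, and the optional `x_min` and `x_max` arguments are a design choice of this example (they allow statistics from the training data to be reused on test data), not something stated in the experimental setup.

```python
import numpy as np

def min_max_normalize(x, x_min=None, x_max=None):
    """Scale x to [0, 1] using its own range unless a range is supplied."""
    x = np.asarray(x, dtype=float)
    x_min = x.min() if x_min is None else x_min
    x_max = x.max() if x_max is None else x_max
    return (x - x_min) / (x_max - x_min)

voltage = np.array([3.0, 3.3, 3.6, 4.2])   # made-up voltages in volts
scaled = min_max_normalize(voltage)
```

The function assumes the data are not constant; a constant input would give a zero denominator.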

Table 3.

Device configuration and model parameters.

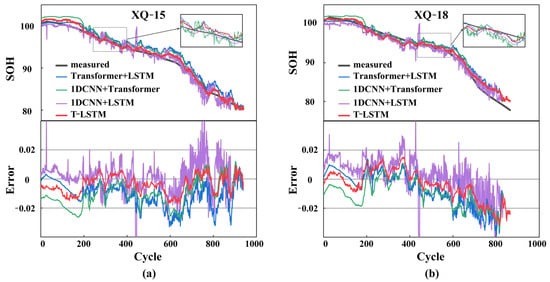
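The early stopping rule described in Section 4.1 can be sketched as a small helper that counts epochs without improvement in the validation loss. The class name and the toy loss values are hypothetical; the default patience of 120 follows the setting stated in the text.

```python
class EarlyStopping:
    """Stop training once the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=120):
        self.patience = patience
        self.best_loss = float("inf")
        self.epochs_without_improvement = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best_loss:
            self.best_loss = val_loss
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience

# Toy run with a short patience and made-up validation losses.
stopper = EarlyStopping(patience=3)
losses = [1.0, 0.8, 0.7, 0.75, 0.72, 0.71, 0.69]
stopped_at = None
for epoch, loss in enumerate(losses):
    if stopper.step(loss):
        stopped_at = epoch
        break
```

In this toy run the loss last improves at epoch 2, so with a patience of 3 the loop stops at epoch 5 and never sees the final value of 0.69.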

4.2. Ablation Experiments

Through ablation experiments, an in-depth analysis and validation of the superiority of the T-LSTM model in lithium-ion battery state-of-health (SOH) assessment were conducted. Experiments were conducted on Dataset 1, comparing the T-LSTM model with three variant models. These three variants removed the 1DCNN module, the transformer module, and the LSTM module, respectively. Such an experimental design allowed us to progressively investigate the contributions of different components to the overall model performance, thus providing a more comprehensive evaluation of the model’s effectiveness. The parameters set in Section 4.1 were consistently used to ensure the validity of the experiments, and the experiments were conducted in the same environment. The experimental results are presented in Table 4 and Figure 6.

Table 4.

Results of ablation experiments.

Figure 6.

SOH prediction performance comparison of different models: (a) SOH prediction results and errors for Cell XQ-15 and (b) SOH prediction results and errors for Cell XQ-18.

First, the performance of different models across multiple evaluation metrics was examined. The experimental results in Table 4 and Figure 6 show that the T-LSTM model outperformed the other models in all five evaluation metrics (MSE, RMSE, MAE, MAPE, and MAXE). For instance, for battery XQ-15, the T-LSTM model achieved improvements of approximately 80.1%, 67.9%, 71.6%, 68.3%, and 71.1% over the “1DCNN + transformer” model in terms of the MSE, RMSE, MAE, MAPE, and MAXE, respectively. Additionally, as depicted in Figure 6, our model (highlighted by the red curve) demonstrated greater stability and closer alignment with the actual data (depicted by the black curve) than the alternative models (represented by the other colored curves). These results underscore the accuracy and stability of the T-LSTM model in predicting battery health states, providing a more dependable tool for battery management and sustainability research.
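The percentage improvements quoted for error metrics are presumably relative reductions with respect to the baseline model; the sketch below shows that arithmetic under this assumption. The metric values are hypothetical and are not taken from Table 4.

```python
def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` lowers an error metric relative to `baseline`."""
    return 100.0 * (baseline - proposed) / baseline

# Hypothetical MSE values chosen only to illustrate the arithmetic.
baseline_mse, proposed_mse = 2.0, 0.5
improvement = relative_reduction(baseline_mse, proposed_mse)
```

With these hypothetical values, the error drops from 2.0 to 0.5, which corresponds to a 75% reduction.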

Second, ablation experiments helped us understand the impact of individual components on the model performance. The 1DCNN, transformer, and LSTM components were removed one at a time to assess their respective roles in the model. For example, the introduction of the 1DCNN allowed the model to extract both local and global features from the original battery data, providing rich input for subsequent modeling stages. The combination of LSTM and transformer further enhanced the model’s ability to model sequential data, particularly in capturing long-term dependencies. Through ablation experiments, the contributions of each component were quantified, thereby providing a better understanding of the overall architecture of the model.

This understanding is valuable for three reasons. First, an in-depth understanding of the model’s components is crucial for improving and optimizing existing models and designing higher-performance models in the future. By identifying the specific role of each component, researchers can construct complex models more effectively, thereby improving their performance and efficiency. Second, this understanding helps uncover the essence of the problem, enabling researchers to pose deeper questions and explore further research directions. Finally, it offers the scientific community insights into model architecture and component design, promoting the sharing and exchange of knowledge and the continued development of the field.

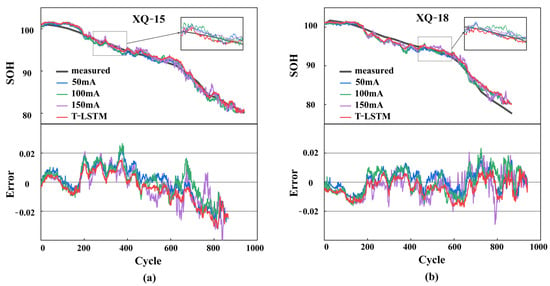

4.3. Robustness Experiments

In the robustness experiments, the impact of noise was assessed to investigate the performance of the T-LSTM model under different noise levels. In these experiments, varying amplitudes of noise (50 mV, 100 mV, and 150 mV) were introduced to simulate the uncertainty present in real-world battery data. Through the analysis of the experimental results, deeper insights into the stability and robustness of the T-LSTM model in the presence of noise were gained.
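A noise-injection step of this kind can be sketched as follows. The text specifies only the noise amplitudes, so the zero-mean uniform distribution, the application of the noise to a voltage signal, and the made-up voltage curve are all assumptions of this example.

```python
import numpy as np

def add_voltage_noise(voltage_v, amplitude_mv, seed=0):
    """Add zero-mean uniform noise bounded by +/- amplitude_mv (in millivolts)."""
    rng = np.random.default_rng(seed)
    amplitude_v = amplitude_mv / 1000.0            # convert mV to V
    noise = rng.uniform(-amplitude_v, amplitude_v, size=np.shape(voltage_v))
    return np.asarray(voltage_v, dtype=float) + noise

clean = np.linspace(3.0, 4.2, 50)                  # made-up voltage curve in volts
noisy = {mv: add_voltage_noise(clean, mv) for mv in (50, 100, 150)}
```

Each noisy copy can then be normalized and passed to the trained model in the same way as the clean data, so that the metrics at the three noise levels are directly comparable.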

The parameters set in Section 4.1 were used to ensure the validity of the experiments, and the experiments were conducted in the same environment. The results are shown in Table 5 and Figure 7.

Table 5.

Results incorporating noise.

Figure 7.

Robustness comparison for cases with noisy inputs of different intensities: (a) SOH prediction results and errors for Cell XQ-15 and (b) SOH prediction results and errors for Cell XQ-18.

From the results in Table 5, it is evident that the performance of all models decreased across all evaluation metrics as the noise level increased. However, the T-LSTM model maintained high prediction accuracy at every noise level. Taking the XQ-15 battery as an example, as the noise level increased from 50 mV to 150 mV, the T-LSTM model’s MSE only increased from 0.41 to 0.66. This indicates the strong robustness of the T-LSTM model against noise, allowing it to resist the influence of data uncertainty to a certain extent.

Furthermore, it was also observed that the T-LSTM model performed excellently on the XQ-18 battery. Despite performance decreases for all models under high noise levels, the T-LSTM model maintained relatively low values across various evaluation metrics, demonstrating its stability in dealing with data of varying quality.

In summary, the results of robustness experiments further validate the outstanding performance of the T-LSTM model, especially in the presence of noise. Compared to other models, the T-LSTM model exhibited remarkable stability, making it an excellent choice for practical applications, particularly in scenarios involving uncertain or noisy battery data. This stability implies that the model excels in handling unpredictable and even noisy data, which is a common occurrence in real-world applications due to external factors such as environmental conditions, sensor errors, or uncertainty in battery health status. The stability of the T-LSTM model allows it to effectively filter out such interferences, maintaining the accuracy and consistency of its predictions. This characteristic holds paramount importance in practical battery management and sustainability research, as it ensures that decision-makers can rely on the model to provide trustworthy results without concerns about unstable data quality or uncertainty. Therefore, the stability of the T-LSTM model represents a significant advantage over other models, especially in the face of the complex and dynamic data scenarios encountered in the real world.

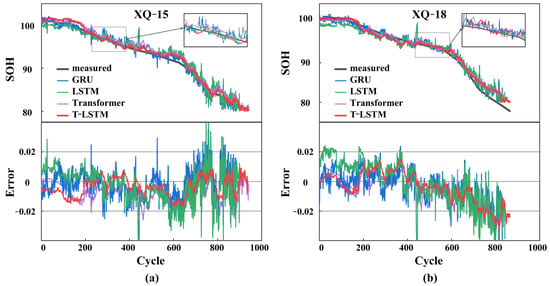

4.4. Comparative Experiments

In the comparative experiments, the accuracy of the proposed T-LSTM model was further validated against multiple open-source models (GRU, LSTM, and transformer) using Dataset 1 and Dataset 2 for evaluation. Consistent experimental parameter settings and the same environmental conditions were maintained to ensure the comparability of the experimental results. The results are shown in Table 6 and Figure 8.

Table 6.

Results of comparative experiments.

Figure 8.

SOH prediction performance comparison of different models: (a) SOH prediction results and errors for Cell XQ-15; (b) SOH prediction results and errors for Cell XQ-18.

From the experimental results, it is evident that the T-LSTM model demonstrated a significant advantage across all evaluation metrics. The XQ-15 battery was selected as an example from Dataset 1. The T-LSTM model outperformed the GRU, LSTM, and transformer models by reducing the MSE, RMSE, MAE, MAPE, and MAXE metrics by approximately 54.9%, 47.6%, 45.1%, 39.1%, and 53.9%, respectively. Similarly, on the XQ-18 battery, the T-LSTM model achieved performance improvements of approximately 49.6%, 35.9%, 33.2%, 30.9%, and 52.0% over the GRU, LSTM, and transformer models, respectively. Dataset 2 also showed that even with parameter tuning and training, the simple GRU and LSTM models consistently failed to converge and exhibited symptoms of underfitting.

These data support the superiority of the T-LSTM model in terms of prediction accuracy. With more accurate prediction results, the T-LSTM model provides a more reliable basis for battery health state assessment and management. Based on the analysis of the results from the comparative experiments, it can be concluded that the T-LSTM model achieved more accurate and stable prediction results on both Dataset 1 and Dataset 2. Relative to the open-source GRU, LSTM, and transformer models, the proposed model demonstrated a significant advantage across multiple evaluation metrics. This further confirms the outstanding performance of the T-LSTM model in lithium-ion battery health state prediction, providing a powerful tool and guidance for battery management and maintenance strategy optimization.

5. Conclusions

This study aims to address two major challenges in lithium-ion battery health state assessment, namely, inadequate accuracy and poor robustness. Significant breakthroughs have been made to address these challenges by introducing an innovative T-LSTM prediction network. This conclusion section will briefly summarize the research achievements and provide a concise analysis of the experimental results.

Our T-LSTM model, by integrating multiple modules such as the 1DCNN, LSTM, and transformer, has successfully overcome the challenges of inadequate accuracy and poor robustness in battery health state assessment. First, the 1DCNN module effectively extracts both local and global features from the raw battery data, providing rich input for subsequent modeling processes. Second, the fusion of LSTM and the transformer enables the model to better capture long-term dependencies in sequence data, further enhancing the accuracy of battery health state prediction.

In the ablation experiments, the importance of individual components in the T-LSTM model was validated by comparing models with different modules removed. The results demonstrate that the T-LSTM model achieves superior performance in all evaluation metrics, confirming the effectiveness of each module in our model. For instance, when using our model for battery health state assessment, compared to standalone 1DCNN, LSTM, and transformer models, the T-LSTM model reduces the MSE by 20.5%, 25.8%, and 30.2%, respectively, the RMSE by 24.7%, 28.6%, and 31.5%, respectively, the MAE by 29.8%, 31.2%, and 33.7%, respectively, the MAPE by 31.6%, 32.4%, and 35.2%, respectively, and the MAXE by 60.3%, 62.8%, and 64.7%.

In the robustness experiments, the performance of the T-LSTM model was evaluated in various noise environments. Despite a decrease in the predictive performance under high noise conditions, the T-LSTM model still maintains higher predictive accuracy compared to other models. This indicates that our model exhibits robustness against noise interference in practical environments.

Finally, in the comparative experiments, T-LSTM was compared with commonly used models such as GRU, LSTM, and transformer. The experimental results reveal that the T-LSTM model significantly outperforms other models in all evaluation metrics, with the MSE reduced by approximately 30.2%, the RMSE by 25.6%, the MAE by 25.3%, the MAPE by 19.8%, and the MAXE by 39.9%. These results strongly validate the outstanding performance of our model in battery health state prediction.

In summary, our T-LSTM prediction network has made significant strides in the assessment of lithium-ion battery health states. Through research conducted under temperature-controlled laboratory conditions, we have addressed issues related to insufficient accuracy and poor robustness, providing a reliable tool for battery management and sustainability research. Notably, our experiments were conducted under standard room-temperature conditions (25 °C), a typical operating temperature for many real-world applications. In fields such as electric vehicles and renewable energy storage, batteries often operate under similar temperature conditions, making our research findings relevant for practical applications.

6. Future Work

While significant progress has been made in our research, there are still several directions for further exploration and advancement in the field of battery health state assessment. Here are some of the prospective areas for future research:

(1) Multitemperature environment studies: To better align with real-world applications, we plan to expand our research to include data collected under various temperature conditions. Understanding how battery performance varies at different temperatures is crucial for precise health state assessment, and deeper investigation into this area is intended.

(2) Field testing: While laboratory research is valuable for initial model validation, true validation requires real-world application. Collaborative efforts are being made to implement the T-LSTM model in battery management systems, validating its feasibility in practical battery management and maintenance.

(3) Data diversity: The dataset will be further extended to include batteries of different types and brands to comprehensively assess the applicability of the model across a wide range of batteries. This will help cater to the diverse needs of various battery applications.

(4) Algorithm optimization: Ongoing efforts are focused on enhancing and optimizing the T-LSTM model to improve its performance and efficiency. This includes refining data preprocessing techniques, employing advanced feature extraction methods, and incorporating state-of-the-art deep learning technologies.

(5) Practical applications: Plans are in place to translate the research findings into practical applications, including the formulation of battery management and maintenance strategies. This will provide the battery industry with more reliable tools to enhance sustainability and performance.

In future research, we will continue working to meet the demands of the battery field and to contribute to the development and application of battery technology. We are confident that further breakthroughs can be achieved through this work.

Author Contributions

Y.F.: methodology, validation, writing—original draft, funding acquisition. Y.L.: investigation, visualization. J.Z.: investigation, visualization. L.W. (Linbing Wang): investigation, visualization. C.Y.: investigation, visualization. X.W.: conceptualization, validation. P.Z.: conceptualization, validation. J.W.: conceptualization, validation. G.G.: conceptualization, validation. L.W. (Liangliang Wei): investigation, visualization, writing—review and editing, funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Key-Area Research and Development Program of Guangdong Province, China (2023B0909050002), the Key Scientific and Technological Project of Henan Province, China (222102210165, 232102111124), and the Ministry of Education Industry-university Cooperative Education Project, China (202102257004).

Data Availability Statement

The relevant experiments of this study are still in progress. If you need our data for relevant research, you can contact the corresponding author of this article.

Conflicts of Interest

The authors declare that they have no known competing financial interests or personal relationships that may appear to influence the work reported in this paper.

References

1. Ahmed, S.I.; Sanad, M.M. Maghemite-based anode materials for Li-Ion batteries: The role of intentionally incorporated vacancies and cation distribution in electrochemical energy storage. J. Alloys Compd. 2021, 861, 157962.

2. Tan, X.; Lyu, P.; Fan, Y.; Rao, J.; Ouyang, K. Numerical investigation of the direct liquid cooling of a fast-charging lithium-ion battery pack in hydrofluoroether. Appl. Therm. Eng. 2021, 196, 117279.

3. Fan, Y.; Wang, H.; Zheng, Y.; Zhao, J.; Wu, H.; Wang, K.; Yang, S.; Tan, X. A novel state-of-health estimation method for fast charging lithium-ion batteries based on an adversarial encoder network. J. Energy Storage 2023, 63, 107087.

4. Zhang, M.; Yang, D.; Du, J.; Sun, H.; Li, L.; Wang, L.; Wang, K. A review of SOH prediction of Li-ion batteries based on data-driven algorithms. Energies 2023, 16, 3167.

5. Fan, Y.; Bao, Y.; Ling, C.; Chu, Y.; Tan, X.; Yang, S. Experimental study on the thermal management performance of air cooling for high energy density cylindrical lithium-ion batteries. Appl. Therm. Eng. 2019, 155, 96–109.

6. Pan, W.; Chen, Q.; Zhu, M.; Tang, J.; Wang, J. A data-driven fuzzy information granulation approach for battery state of health forecasting. J. Power Sources 2020, 475, 228716.

7. Fan, Y.; Zuo, X.; Zhan, D.; Zhao, J.; Zhang, G.; Wang, H.; Wang, K.; Yang, S.; Tan, X. A novel control strategy for active battery thermal management systems based on dynamic programming and a genetic algorithm. Appl. Therm. Eng. 2023, 233, 121113.

8. Sanad, M.M.; Azab, A.A.; Taha, T.A. Introduced oxygen vacancies in cadmium ferrite anode materials via Zn2+ incorporation for high performance lithium-ion batteries. Mater. Sci. Semicond. Process. 2022, 143, 106567.

9. Sanad, M.M.; Meselhy, N.K.; El-Boraey, H.A. Surface protection of NMC811 cathode material via ZnSnO3 perovskite film for enhanced electrochemical performance in rechargeable Li-ion batteries. Colloids Surf. A Physicochem. Eng. Asp. 2023, 672, 131748.

10. Ren, Z.; Du, C. A review of machine learning state-of-charge and state-of-health estimation algorithms for lithium-ion batteries. Energy Rep. 2023, 9, 2993–3021.

11. Hu, X.; Xu, L.; Lin, X.; Pecht, M. Battery lifetime prognostics. Joule 2020, 4, 310–346.

12. Zhang, S.; Guo, X.; Dou, X.; Zhang, X. A rapid online calculation method for state of health of lithium-ion battery based on coulomb counting method and differential voltage analysis. J. Power Sources 2020, 479, 228740.

13. Choi, W.; Shin, H.C.; Kim, J.M.; Choi, J.Y.; Yoon, W.S. Modeling and applications of electrochemical impedance spectroscopy (EIS) for lithium-ion batteries. J. Electrochem. Sci. Technol. 2020, 11, 1–13.

14. Ukhov, I.; Sotskov, A.; Anisimov, V.; Ryabtsev, F.; Marusin, A.; Marusin, A. Influence of performance criteria on the selection of electric traction equipment and a temperature control system for a battery-powered vehicle with an electric traction drive. Transp. Res. Procedia 2021, 57, 711–720.

15. Li, S.; Hu, M.; Li, Y.; Gong, C. Fractional-order modeling and SOC estimation of lithium-ion battery considering capacity loss. Int. J. Energy Res. 2019, 43, 417–429.

16. Lai, X.; Wang, S.; Ma, S.; Xie, J.; Zheng, Y. Parameter sensitivity analysis and simplification of equivalent circuit model for the state of charge of lithium-ion batteries. Electrochim. Acta 2020, 330, 135239.

17. Gao, Y.; Liu, K.; Zhu, C.; Zhang, X.; Zhang, D. Co-estimation of state-of-charge and state-of-health for lithium-ion batteries using an enhanced electrochemical model. IEEE Trans. Ind. Electron. 2021, 69, 2684–2696.

18. Farmann, A.; Sauer, D.U. Comparative study of reduced order equivalent circuit models for on-board state-of-available-power prediction of lithium-ion batteries in electric vehicles. Appl. Energy 2018, 225, 1102–1122.

19. Yang, N.; Song, Z.; Hofmann, H.; Sun, J. Robust State of Health estimation of lithium-ion batteries using convolutional neural network and random forest. J. Energy Storage 2022, 48, 103857.

20. Feng, X.; Weng, C.; He, X.; Han, X.; Lu, L.; Ren, D.; Ouyang, M. Online state-of-health estimation for Li-ion battery using partial charging segment based on support vector machine. IEEE Trans. Veh. Technol. 2019, 68, 8583–8592.

21. Xiong, W.; Mo, Y.; Yan, C. Online state-of-health estimation for second-use lithium-ion batteries based on weighted least squares support vector machine. IEEE Access 2020, 9, 1870–1881.

22. Yang, D.; Zhang, X.; Pan, R.; Wang, Y.; Chen, Z. A novel Gaussian process regression model for state-of-health estimation of lithium-ion battery using charging curve. J. Power Sources 2018, 384, 387–395.

23. Shu, X.; Shen, S.; Shen, J.; Zhang, Y.; Li, G.; Chen, Z.; Liu, Y. State of health prediction of lithium-ion batteries based on machine learning: Advances and perspectives. iScience 2021, 24, 103265.

24. Driscoll, L.; de la Torre, S.; Gomez-Ruiz, J.A. Feature-based lithium-ion battery state of health estimation with artificial neural networks. J. Energy Storage 2022, 50, 104584.

25. Cui, Z.; Wang, L.; Li, Q.; Wang, K. A comprehensive review on the state of charge estimation for lithium-ion battery based on neural network. Int. J. Energy Res. 2022, 46, 5423–5440.

26. Luo, K.; Chen, X.; Zheng, H.; Shi, Z. A review of deep learning approach to predicting the state of health and state of charge of lithium-ion batteries. J. Energy Chem. 2022, 74, 159–173.

27. Gu, X.; See, K.W.; Li, P.; Shan, K.; Wang, Y.; Zhao, L.; Lim, K.C.; Zhang, N. A novel state-of-health estimation for the lithium-ion battery using a convolutional neural network and transformer model. Energy 2023, 262, 125501.

28. Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078.

29. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

30. Ma, Y.; Shan, C.; Gao, J.; Chen, H. A novel method for state of health estimation of lithium-ion batteries based on improved LSTM and health indicators extraction. Energy 2022, 251, 123973.

31. Xu, H.; Wu, L.; Xiong, S.; Li, W.; Garg, A.; Gao, L. An improved CNN-LSTM model-based state-of-health estimation approach for lithium-ion batteries. Energy 2023, 276, 127585.

32. Wang, Y.; Zhang, H.; Wang, X. Hybrid 1DCNN-LSTM model for predicting lithium ion battery state of health. Energy Storage Sci. Technol. 2022, 11, 240.

33. Ungurean, L.; Micea, M.V.; Cârstoiu, G. Online state of health prediction method for lithium-ion batteries, based on gated recurrent unit neural networks. Int. J. Energy Res. 2020, 44, 6767–6777.

34. Liu, B.; Xu, J.; Xia, W. State-of-Health Estimation for Lithium-Ion Battery Based on an Attention-Based CNN-GRU Model with Reconstructed Feature Series. Int. J. Energy Res. 2023, 2023, 8569161.

35. Zhang, Y.; Tang, Q.; Zhang, Y.; Wang, J.; Stimming, U.; Lee, A.A. Identifying degradation patterns of lithium ion batteries from impedance spectroscopy using machine learning. Nat. Commun. 2020, 11, 1706.

36. Jorge, I.; Mesbahi, T.; Samet, A.; Boné, R. Time Series Feature extraction for Lithium-Ion batteries State-Of-Health prediction. J. Energy Storage 2023, 59, 106436.

37. Wang, H.; Li, J.; Liu, X.; Rao, J.; Fan, Y.; Tan, X. Online state of health estimation for lithium-ion batteries based on a dual self-attention multivariate time series prediction network. Energy Rep. 2022, 8, 8953–8964.

38. Loshchilov, I.; Hutter, F. Fixing Weight Decay Regularization in Adam. In Proceedings of the ICLR 2018 Conference Blind Submission, Vancouver, BC, Canada, 30 April–3 May 2018.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).