1. Introduction

Lithium-ion batteries have emerged as a cornerstone technology across multiple industries worldwide. Notably, they play a crucial role in the rapid advancement of electric vehicles (EVs) and large-scale battery energy storage systems (BESSs), two sectors that are pivotal to the transition towards sustainable energy solutions. For both of these applications, the associated Battery Management System (BMS) is responsible for optimising battery performance by managing parameters such as voltage, current, and temperature [1]. There is considerable motivation from researchers globally to improve the performance of BMSs [2], as they can extend battery life, reduce failure risks, and mitigate thermal runaway.

EVs rely heavily on efficient battery management to operate safely. The BMS is responsible for several critical functions, including temperature control, cell equalisation, charging and discharging management, and fault analysis. Effective battery state estimation, encompassing the State of Charge (SoC), State of Health (SoH), and Remaining Useful Life (RUL), is vital for ensuring EV safety and performance [3]. Traditional estimation methods, such as the Kalman filter, often lack the adaptability needed to account for battery ageing effects, potentially compromising BMS performance [4].

In addition, BESSs are increasingly crucial in efforts to decarbonise the power sector. By facilitating the integration of renewable energy sources into the power grid, BESSs also contribute to grid stability and reliability. The BMS is vital to the viability of BESS projects. It is responsible for safety, the efficient management of performance, and determining the SoH of batteries. SoH predictions provide crucial information for the maintenance and replacement of batteries. Furthermore, trading strategies for BESSs must consider and optimise the SoH to balance short-term gains and long-term battery life.

However, the gains generated by BESSs through providing flexibility services can be offset by the degradation cost of the lithium-ion batteries. In addition to lifetime and number of cycles, this degradation is sensitive to energy throughput, temperature, SoC, depth of discharge (DoD) and charge/discharge rate (C-rate) [5]. Furthermore, accurate SoH estimation is crucial as it dictates the energy throughput, which determines the energy available for trading. This directly impacts the revenue generated from BESS projects and influences key financial metrics such as the Levelised Cost of Storage (LCOS) and Internal Rate of Return (IRR), ultimately governing the economic viability of these projects.

A major difficulty for SoH predictions in both EV and BESS applications is the inherent path dependency [6], i.e., the impact of the precise chronological usage of the battery on the SoH. Accounting for path dependency is not inherent to model-based methods, whether they are based on fundamental electrochemical equations [7,8,9] or circuit-based equivalent models combined with filtering techniques [10], because path dependency is still not well understood. Moreover, capturing long-term SoH dependencies on the degradation stress factors mentioned previously within such frameworks requires a large quantity of data to be collected over long periods of time, as demonstrated in Ref. [11].

A more efficient approach to SoH prediction is to employ data-driven methods [12] that can store and update key information from degradation data as they become available. Traditional data-driven methods for SoH prediction often involve handcrafted feature extraction followed by regression techniques. For instance, Feng et al. [13] used Gaussian process regression (GPR) and polynomial regression to predict SoH and RUL, extracting health indicators from charging current. These methods, while effective, can be time-consuming and labour-intensive, requiring expertise in feature design. Additionally, kernel methods, like support vector machines (SVMs), have been applied for SoH estimation [14]. However, selecting dominant data points can dilute path dependency and reduce prediction accuracy. Neural network (NN)-based techniques can be effective because they have an internal state that can represent path information.

The long short-term memory (LSTM) neural network architecture is a popular approach for time-series forecasting due to its ability to capture some long-term dependencies. Zhang et al. [15] studied LSTM-based neural networks for SoH predictions. Their results indicated that LSTM networks were generally more accurate and precise than SVM-based models. More recent studies have focused on end-to-end deep learning approaches using measurement data such as voltage, current, and temperature. Li et al. [16] combined LSTM and convolutional neural network (CNN) models to separately predict SoH and RUL in an end-to-end mode. LSTM models are well suited to time-series data, and their ability to capture some long-term dependencies is essential for battery SoH predictions. However, they have limitations such as long training times and a restricted ability to capture very long-term dependencies, which can affect their practical application. This is particularly relevant for SoH prediction as accurate modelling over a large number of cycles is desirable.

Recognising the limitations of LSTM models, researchers have explored more advanced architectures. Cai et al. [17] demonstrated that transformers, when combined with deep neural networks (DNNs), were more effective for battery SoH prediction. The attention mechanism in transformers allows them to assign importance to specific timesteps, thereby capturing long-term dependencies more efficiently than LSTM models. Song et al. [18] further enhanced prediction accuracy by incorporating positional and temporal encoding in transformers to predict battery RUL, addressing the challenge of capturing sequence information.
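To make the idea of "assigning importance to specific timesteps" concrete, the following is a minimal sketch of scaled dot-product attention for a single query, written in plain Python. It is an illustration of the general mechanism only, not the implementation used in Refs. [17] or [18]; the feature vectors, the capacity-like values, and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Returns (context, weights), where weights[t] is the importance
    assigned to timestep t and context is the weighted sum of values.
    """
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    weights = softmax(scores)
    context = [
        sum(w * v[i] for w, v in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return context, weights


# Hypothetical per-cycle feature vectors (illustrative numbers only).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[0.99], [0.97], [0.95]]
query = [1.0, 1.0]

context, weights = attention(query, keys, values)
```

Because the weights are computed directly between the query and every timestep, a distant cycle can receive a large weight without information having to pass through all intermediate steps, which is the contrast with a recurrent model drawn above.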

Zhang et al. [19] integrated an attention layer into a gated recurrent unit (GRU) architecture, combining it with particle filter information to make accurate RUL predictions. This approach leveraged the strengths of both attention mechanisms and traditional filtering techniques, enhancing the robustness of the predictions.

Fan et al. [20] designed a gated recurrent unit–convolutional neural network (GRU-CNN) for direct SoH prediction, which combined the temporal processing capabilities of GRUs with the spatial feature extraction power of CNNs. Gu et al. [21] created a model by combining a CNN and transformer for SoH prediction. The CNN was used to incorporate time-dependent features, whereas the transformer modelled time-independent features. This hybrid approach effectively utilised different neural network architectures to enhance prediction accuracy.

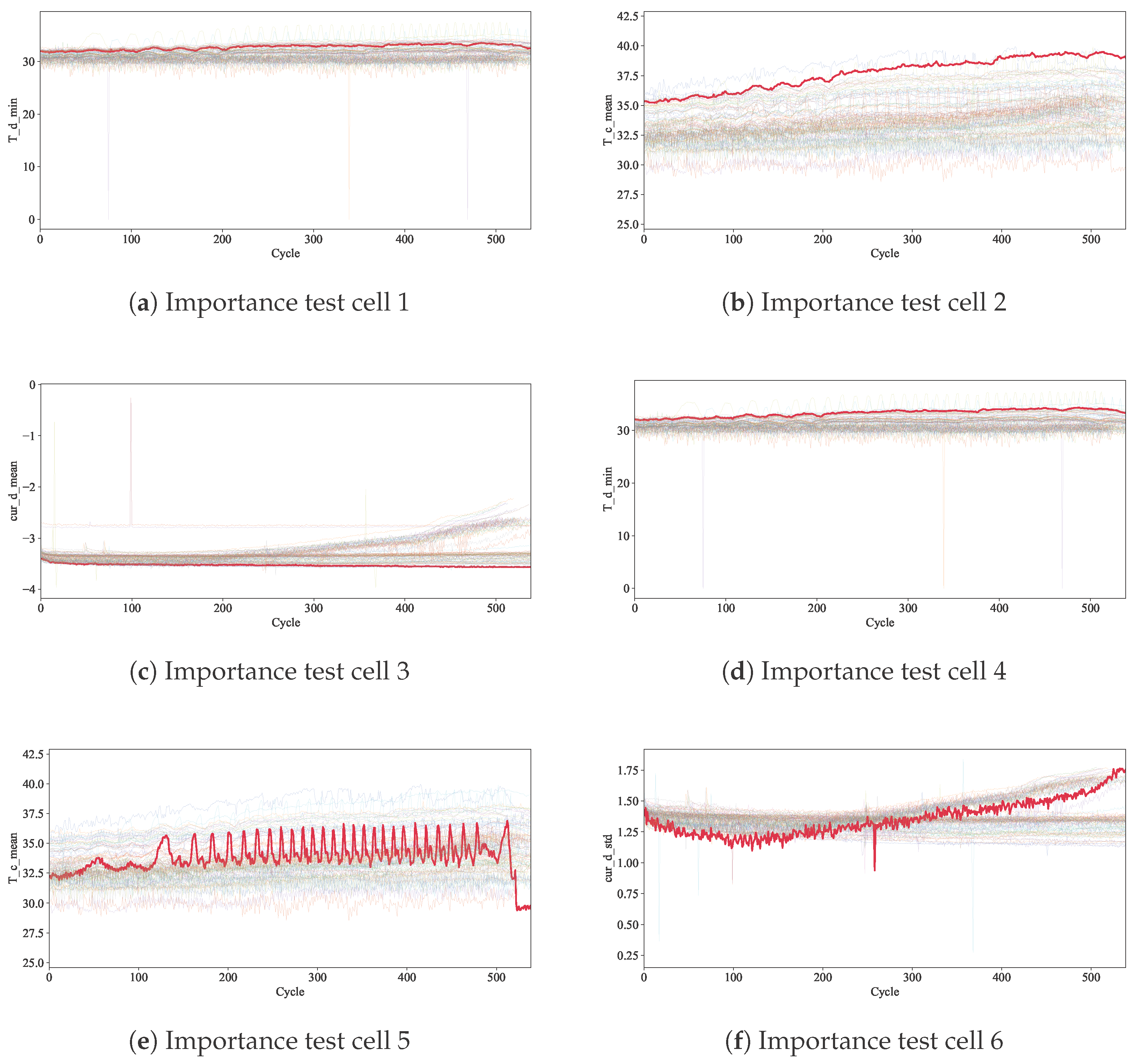

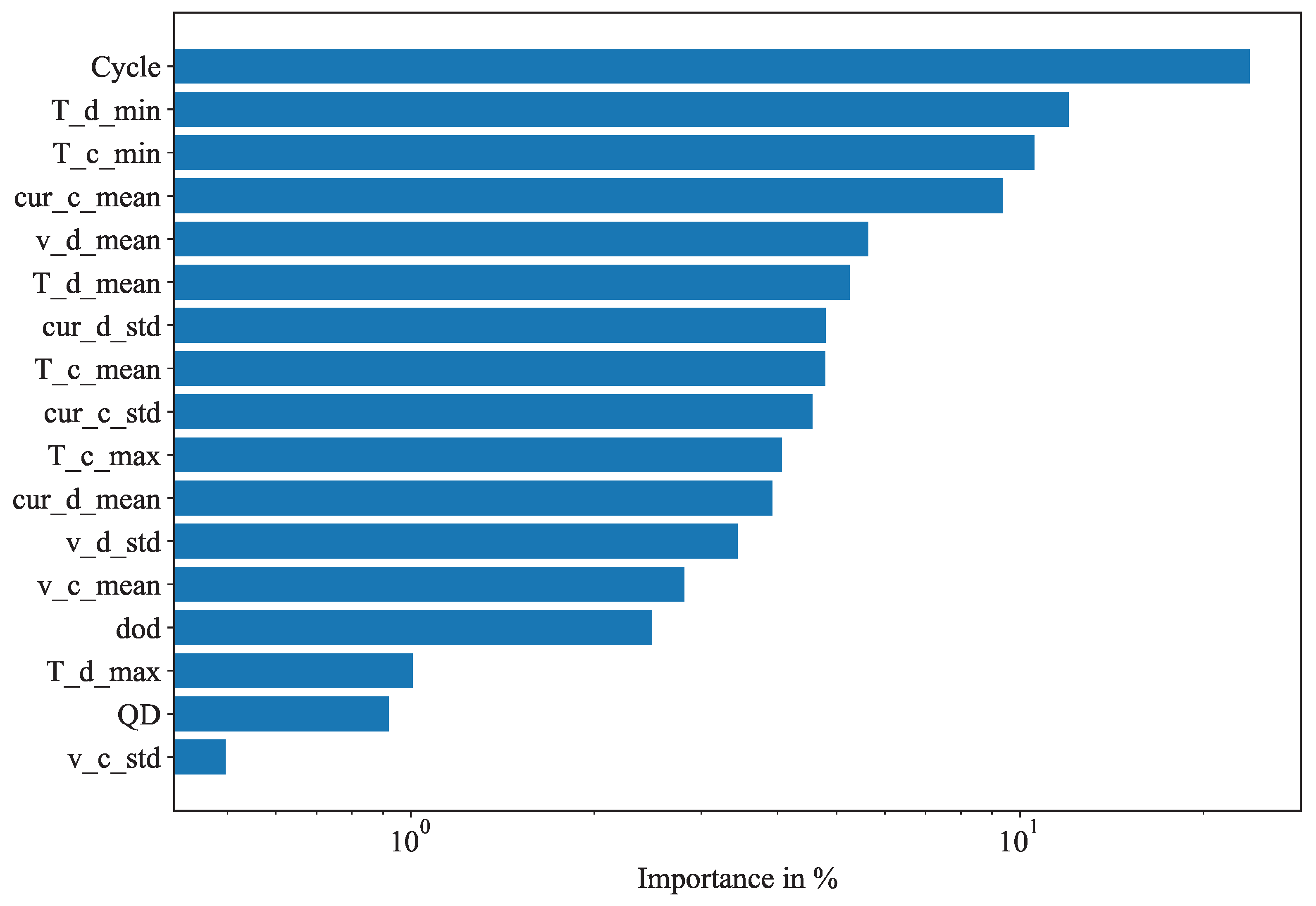

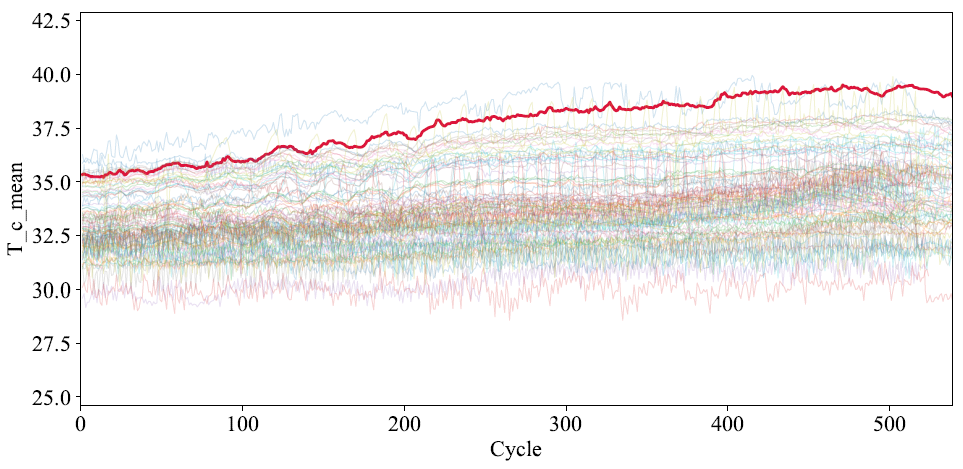

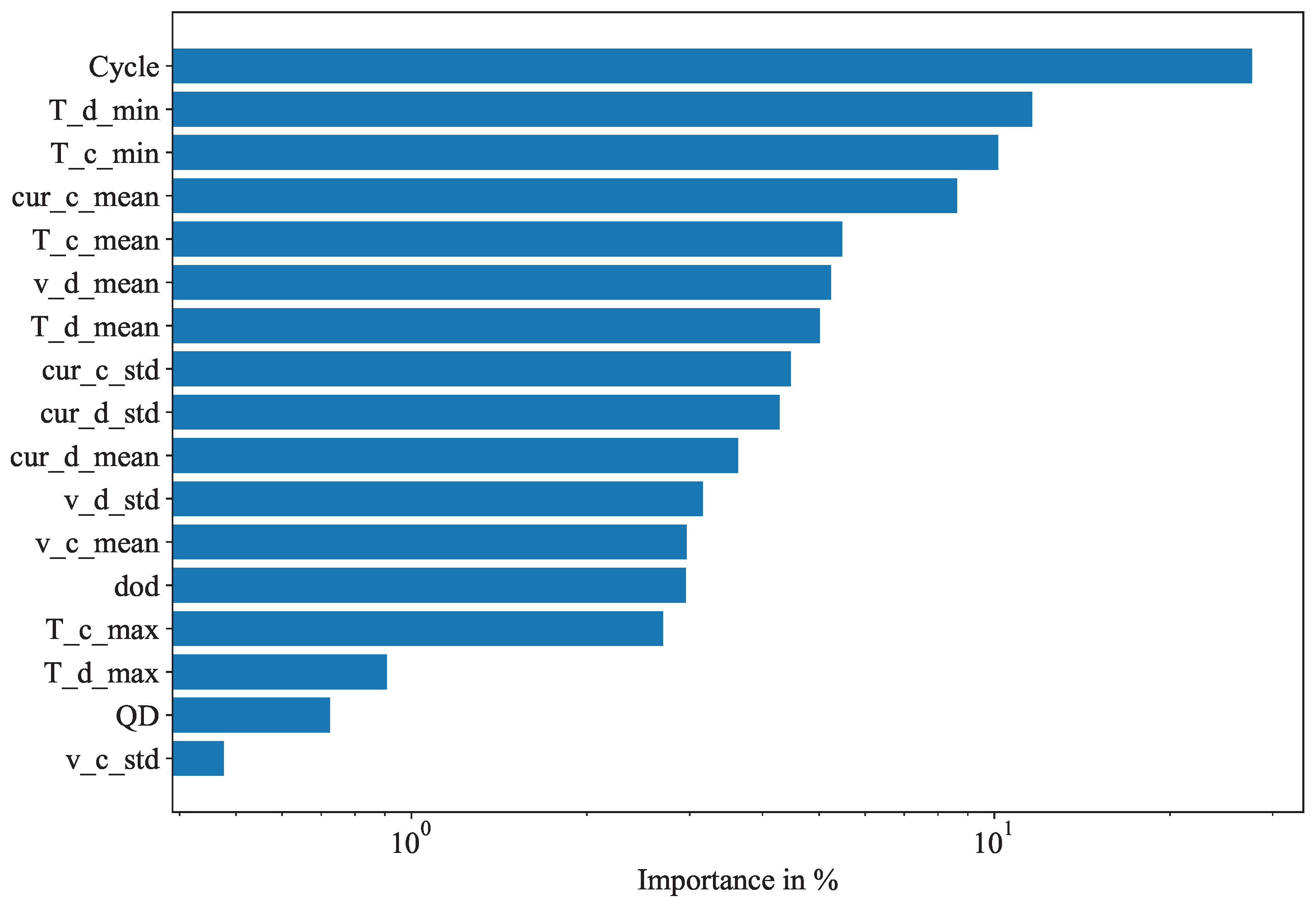

Despite these advancements, previous approaches often struggle to capture complex dependencies and require extensive computational resources for training. This study presents a novel method for modelling battery degradation using a Temporal Fusion Transformer (TFT) [22], with particular attention to the interpretability of the underlying stress factors. The TFT model proves more accurate than standard deep learning approaches across various sequence lengths. It leverages historical conditions and known future inputs to make predictions from as few as 100 input cycles. The model integrates specialised components to provide interpretability, offering insights into path-dependent degradation processes. By incorporating static metadata, our approach provides a robust solution applicable to various battery chemistries and operating conditions. This work aims to enhance the reliability and safety of BMS SoH estimation for EV and BESS applications, providing valuable insights into battery degradation processes and informing optimal management strategies.
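As a rough illustration of the three kinds of input described above (static metadata, historical conditions, and known future inputs), the sketch below splits one cell's cycling record into a 100-cycle input window and a forecast horizon. It is a sketch under stated assumptions rather than the data pipeline used in this study: the field names (`c_rate`, `temperature_c`, `capacity`, `chemistry`), the `TFTSample` container, and the synthetic cell are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TFTSample:
    static: dict         # per-cell metadata, constant over time
    past_observed: list  # input-window cycles, including measured capacity
    future_known: list   # planned operating conditions, capacity excluded
    target: list         # capacities to be predicted over the horizon


def make_sample(cell, n_input=100, known_keys=("c_rate", "temperature_c")):
    """Split one cell's record into encoder inputs, decoder inputs and targets."""
    cycles = cell["cycles"]
    if len(cycles) <= n_input:
        raise ValueError("need more cycles than the input window")
    past, future = cycles[:n_input], cycles[n_input:]
    return TFTSample(
        static=cell["static"],
        past_observed=past,
        # Only quantities known in advance are exposed for future cycles.
        future_known=[{k: c[k] for k in known_keys} for c in future],
        target=[c["capacity"] for c in future],
    )


# Synthetic, hypothetical cell with 150 cycles of linear capacity fade.
cell = {
    "static": {"chemistry": "LFP"},
    "cycles": [
        {"c_rate": 1.0, "temperature_c": 25.0, "capacity": 1.0 - 0.0001 * i}
        for i in range(150)
    ],
}

sample = make_sample(cell)
```

Keeping measured capacity out of `future_known` reflects the distinction between quantities that are only observed in the past and operating conditions that can be planned ahead.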

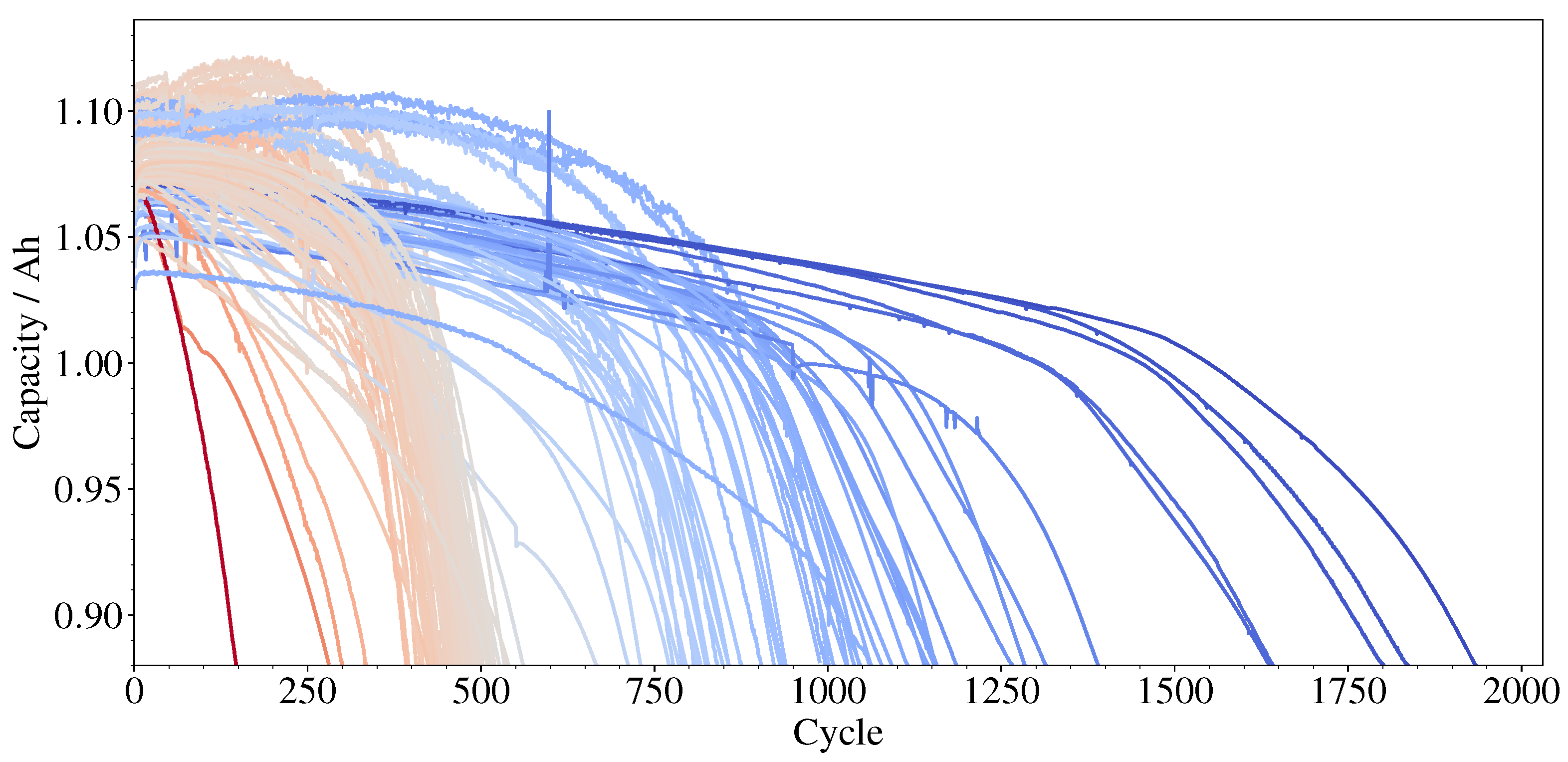

In this study, we outline our approach, apply it to a dataset comprising over 100 cells, evaluate its performance relative to other standard neural networks, and demonstrate its potential for interpretability.

4. Conclusions

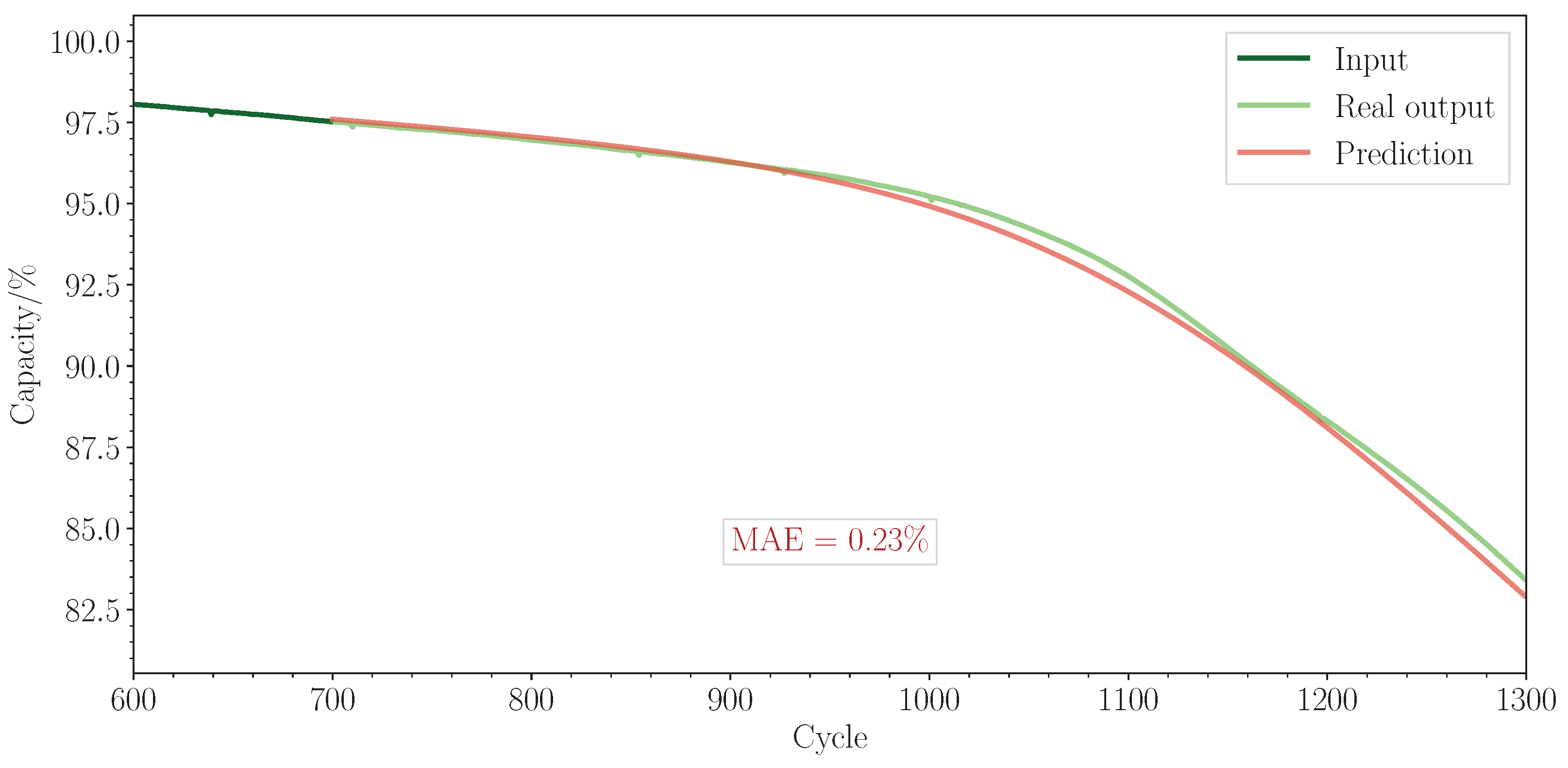

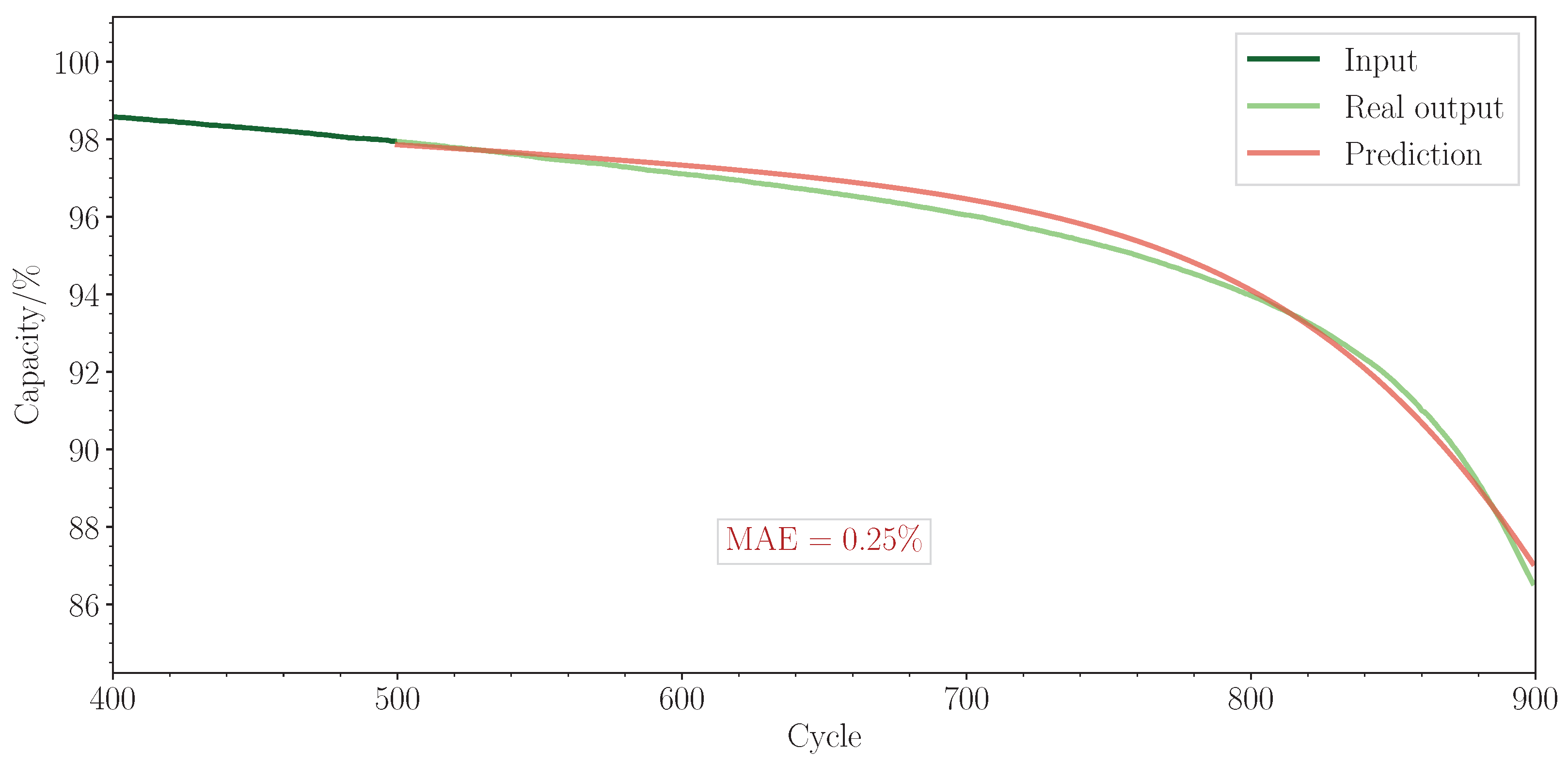

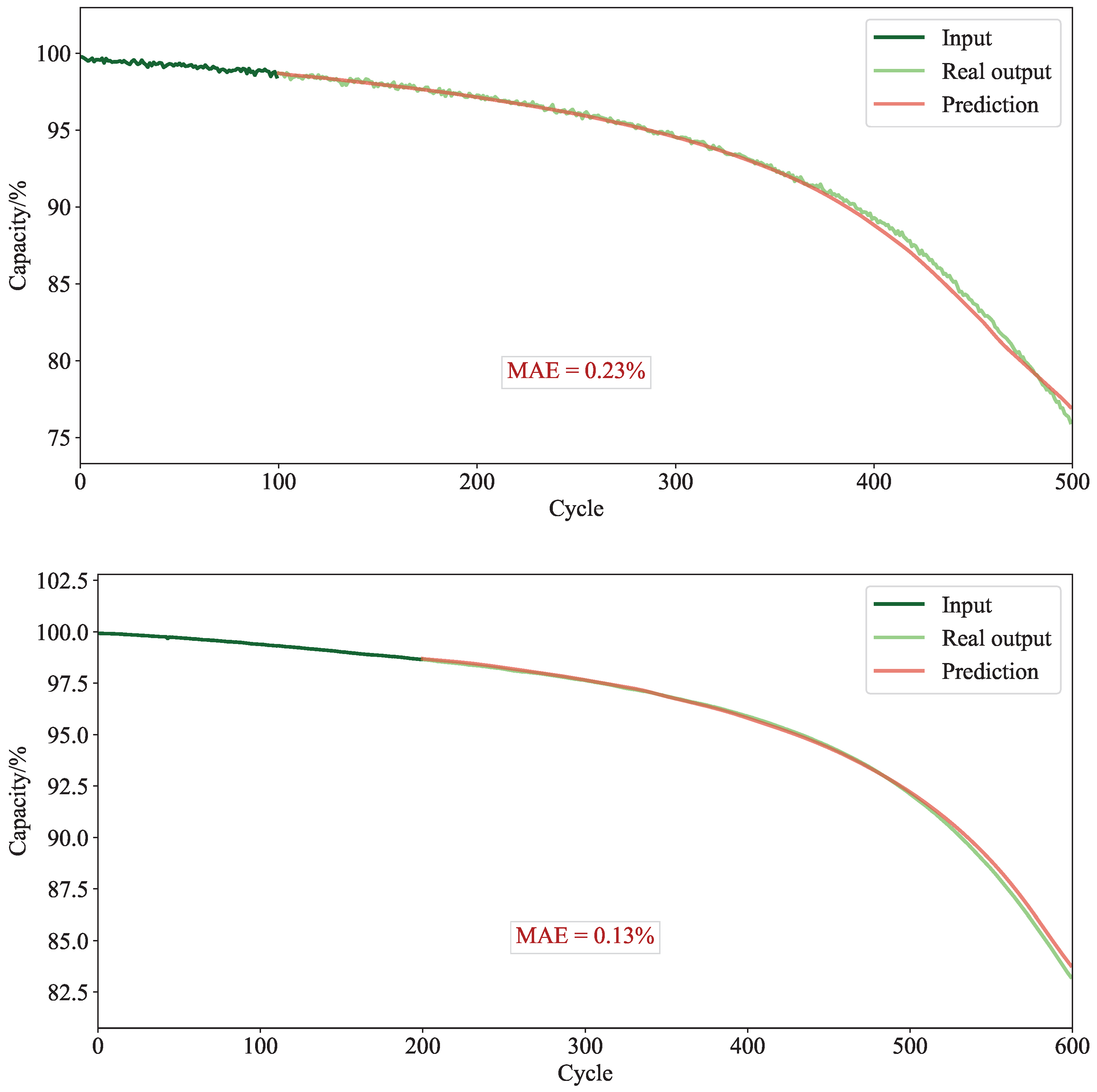

This study presented a novel approach for modelling battery degradation using a Temporal Fusion Transformer. The proposed model demonstrated superior accuracy compared to standard deep learning methods across various sequence lengths. The mean absolute error for the predicted capacity curve was found to be between 0.67% and 0.85%, compared with 0.87–1.40% for the benchmark models.
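For readers unfamiliar with the metric, the mean absolute error over a capacity curve can be computed as in the minimal sketch below. It assumes that capacity is expressed as a percentage of nominal capacity, which is an assumption made here for illustration; the numbers are invented and are not results from this study.

```python
def mean_absolute_error(actual, predicted):
    """Mean of the absolute per-cycle differences between two curves."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("curves must be non-empty and of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical capacity curves in percent of nominal capacity.
actual_pct = [100.0, 99.0, 98.0, 97.0]
predicted_pct = [99.5, 99.5, 97.0, 97.0]

mae = mean_absolute_error(actual_pct, predicted_pct)
```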

The model effectively integrates both continuous and categorical inputs, which can be either static or time-varying. In addition, it offers interpretable outputs, yielding valuable insights into specific factors that impact a given battery’s degradation. This could enable a user to extend the life of a battery during operation. Moreover, the model is capable of accurately forecasting degradation curves with as few as 100 input cycles.

The model presented in this study has great potential to enhance the reliability and safety of lithium-ion batteries in electric vehicles or energy storage systems. Moreover, the model is agnostic to cell chemistry and can be readily applied to other battery types, provided that suitable training data are available.