1. Introduction

Promoting the development and implementation of Battery Electric Vehicles (BEVs) is one of the key ongoing measures in the transition towards a low-carbon energy model. Despite their many advantages over conventional vehicles, BEV adoption remains limited by certain constraints compared with Internal Combustion Engine Vehicles (ICEVs), of which driving range and battery recharging time are two of the most significant.

However, the average range of BEVs has more than doubled in the last decade, while recharging times have been significantly reduced over the same period. As a result, BEVs can now undertake long trips with only small time differences compared with ICEVs: depending on the BEV’s range and charging capabilities, BEVs require between 11% and 27% more time than ICEVs [1,2].

Provided that there are enough high-power DC fast chargers along the route, the duration of a long-distance trip in a BEV essentially depends on two factors: the battery capacity, and therefore the BEV’s range, and the charging power the battery can handle. BEV long-distance trip times are expected to converge with ICEV times as ultra-fast chargers become widely adopted [3]. Users consider overall travel time, which includes both driving time and charging time, more important than the charging duration itself, as they typically stop to rest every 2–3 h during long trips, and these breaks can be taken at fast-charging stations [4].

Charging power gradually decreases from about 30–40% State of Charge (SoC) onward and drops significantly beyond 70–80%. Therefore, stopping the charge at 80% SoC instead of reaching 100% significantly reduces total travel time, including charging stops [3,5]. Additionally, charging above 80% SoC accelerates battery degradation because of undesirable secondary electrochemical reactions [1,6]. An important exception concerns Lithium Iron Phosphate (LFP) batteries, whose voltage remains almost constant over most of the SoC range. Their SoC estimation therefore relies mostly on Coulomb counting, i.e., integrating the current flowing into and out of the battery, which requires frequent recalibration to remain accurate. For this reason, LFP batteries should regularly be charged to 100% so that the Battery Management System (BMS) can recalibrate its SoC estimate, since an accurate SoC estimate helps regulate the charging process [7].
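To illustrate why Coulomb counting needs recalibration, the following minimal Python sketch integrates a measured current into an SoC estimate and resets it at full charge. The class name, the 1 s sampling step, the 2% sensor gain error and the reset-at-full rule are illustrative assumptions, not the logic of any particular BMS.

```python
"""Coulomb-counting SoC estimate with recalibration at full charge (sketch)."""


class CoulombCounter:
    """Track SoC by integrating current; drift accumulates until recalibration."""

    def __init__(self, capacity_ah: float, initial_soc: float) -> None:
        self.capacity_ah = capacity_ah  # usable capacity in ampere-hours
        self.soc = initial_soc          # estimated state of charge in percent

    def update(self, current_a: float, dt_s: float) -> float:
        """Add current_a (positive = charging) flowing for dt_s seconds."""
        delta_ah = current_a * dt_s / 3600.0
        self.soc += 100.0 * delta_ah / self.capacity_ah
        self.soc = min(max(self.soc, 0.0), 100.0)  # keep within 0-100 %
        return self.soc

    def recalibrate_full(self) -> None:
        """Reset the estimate once the pack is known to be fully charged."""
        self.soc = 100.0


# Hypothetical case: a 100 Ah pack at 20 % is charged for one hour at a true
# 50 A, but the current sensor reads 2 % high (51 A).
counter = CoulombCounter(capacity_ah=100.0, initial_soc=20.0)
for _ in range(3600):
    counter.update(current_a=51.0, dt_s=1.0)
print(f"estimated {counter.soc:.1f} %, true 70.0 %")  # prints: estimated 71.0 %, true 70.0 %
```

A one-point error after a single hour grows with every unrecalibrated session. Because an LFP cell’s flat voltage curve gives the BMS little independent evidence to correct it, a full charge, where the end of charge is typically unambiguous, serves as the reset point (`recalibrate_full` in this sketch).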

Moreover, DC fast charging above 80% SoC prevents full use of the capacity of fast-charging stations: the reduced charging power keeps each charger occupied for longer, lowering charger availability and potentially causing unnecessary waiting times for other users [8,9]. These delays may occur occasionally during the daily peak hours of fast-charging station usage and can become significant in exceptional cases, such as holiday rush-hour traffic [10]. They may also be very frequent if the DC fast-charging network is not sufficiently developed [11,12].

In addition, prolonged DC fast charging causes a significant increase in the battery’s internal temperature, which, besides reducing power delivery, can in severe cases lead to internal short circuits that may destroy the battery and even cause explosions [1,13]. Furthermore, high battery temperatures have been shown to cause fragmentation of electrode materials due to thermal expansion mismatch, leading to cell destruction and consequent battery degradation [7,14].

It is neither necessary nor sustainable to size BEV batteries for the maximum requirements of long trips [3]. Although manufacturers such as NIO and GAC are developing BEVs with ranges exceeding 1000 km, the weight and cost of the required batteries lead to several drawbacks for these vehicles. Notably, these include poorer longitudinal (acceleration and braking) and lateral (cornering) dynamics, a higher Total Cost of Ownership (TCO) than vehicles with lower-capacity batteries, and lower energy efficiency [15]. A more effective approach is to size BEV batteries for a range of around 400 km and to design optimal fast-charging strategies that shorten charging sessions and reduce total travel times.

Choosing a DC fast-charging station whose charging power matches the maximum power the vehicle’s battery can handle, together with preconditioning (heating) the battery before charging, can reduce charging times by up to 50% in some cases [1,16,17]. Battery preconditioning is key to achieving optimal charging performance [13], especially when DC fast charging at very low temperatures [18,19]. Under such conditions, not only does charging efficiency decrease significantly [20], leading to considerably longer charging times [21], but battery degradation also increases, reducing battery lifespan [22]. For this reason, most EV manufacturers, including Audi, Hyundai, MG and Tesla, incorporate automatic battery preconditioning that is activated when a DC fast-charging station is set as the destination in the vehicle’s navigation system.

Given that the main parameters influencing a battery’s charging session are well known, two key factors play an important role in optimizing the DC fast charging of battery electric vehicles on long-distance trips. The first is the user, who is usually not aware of the SoC range that maximizes charging power. This, combined with the fact that most BEV drivers experience so-called “range anxiety” [23,24], leads them to charge the battery outside its optimal SoC window, which can reduce efficiency and increase charging times. For example, a user may stop at a fast-charging station at 30% SoC and charge the battery up to 95%. This would result in a charging session approximately 50% longer than a session from 10% to 75% SoC, even though both recover the same range.
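The effect of the SoC window can be illustrated by integrating charging time over a power curve. In this Python sketch, the power-vs-SoC breakpoints and the 75 kWh net capacity are hypothetical, chosen only to mimic the typical taper; the time for each SoC increment is the energy added (capacity × ΔSoC) divided by the interpolated power at that SoC.

```python
"""Compare two DC charging windows that add the same energy (hypothetical curve)."""

# Hypothetical power-vs-SoC breakpoints: (SoC in %, power in kW). Not measured data.
CURVE = [(0, 150.0), (30, 150.0), (50, 120.0), (70, 90.0),
         (80, 60.0), (90, 35.0), (100, 10.0)]


def power_kw(soc: float) -> float:
    """Linearly interpolate charging power at a given SoC."""
    for (s0, p0), (s1, p1) in zip(CURVE, CURVE[1:]):
        if s0 <= soc <= s1:
            return p0 + (p1 - p0) * (soc - s0) / (s1 - s0)
    raise ValueError(f"SoC {soc} outside curve")


def session_minutes(soc_from: float, soc_to: float, capacity_kwh: float,
                    steps: int = 1000) -> float:
    """Midpoint-rule integral of dt = (capacity * dSoC / 100) / P(SoC), in minutes."""
    h = (soc_to - soc_from) / steps
    hours = sum(capacity_kwh * h / 100.0 / power_kw(soc_from + (i + 0.5) * h)
                for i in range(steps))
    return 60.0 * hours


low_window = session_minutes(10, 75, capacity_kwh=75.0)
high_window = session_minutes(30, 95, capacity_kwh=75.0)
print(f"10->75 %: {low_window:.1f} min, 30->95 %: {high_window:.1f} min")
# prints: 10->75 %: 24.1 min, 30->95 %: 39.1 min
```

Both windows add 65 percentage points of SoC, yet with this hypothetical curve the 30→95% session takes roughly 60% longer, because it spends its last points in the low-power tail.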

The second factor is the difference between the charging power curves of the BEVs on the market, which vary significantly across models and brands and also play a crucial role in minimizing long-distance trip times. Charging power is higher at low SoC levels and decreases as the SoC increases. This trend results from battery management strategies that accelerate charging when the battery is less charged and reduce charging power as it approaches higher SoC levels in order to preserve battery health.

This paper analyzes data from a broad sample of BEVs available on the market, based on testing and recording the main parameters of their charging sessions, and establishes the optimal SoC window for maximizing charging power and reducing stop times. The goal is to provide users with practical information to optimize their vehicles’ DC fast-charging sessions for long-distance trips.

2. Materials and Methodology

This section describes the materials used in this research and explains the methodology used to obtain the optimal DC fast-charging strategies for a wide range of battery electric vehicles during long-distance trips.

In order to obtain sufficient data to draw solid conclusions, 62 BEVs from different segments were tested. All tested vehicles are available in the European market.

Table 1 shows the number of EVs tested in this research, classified by segment. As can be seen, segments C, D, and E are the most numerous, as these types of vehicles are more likely to be used for long-distance trips. However, segments A, B, F and commercial vehicles were also tested to provide useful information for their respective users.

High-power DC fast-charging stations of up to 350 kW were used in this research to recharge the EVs’ batteries. Choosing appropriate charging equipment ensured that each EV’s battery could be charged at its maximum admissible power, avoiding any limitation in charging speed caused by the charging station. Therefore, DC fast-charging equipment was selected specifically for each vehicle according to its battery architecture (400 or 800 V) and maximum charging power. All these charging stations were equipped with CCS Combo 2 connectors, as all tested vehicles have this type of DC fast-charging port.

The first step in this research was to obtain the fast-charging power (kW) vs. SoC (%) curve for each BEV, since manufacturers usually provide only the maximum DC fast-charging power achievable under ideal conditions. This value is not constant throughout the charging session and is typically reached only at the beginning, if the battery’s SoC is sufficiently low and the battery has been properly preconditioned. The fast-charging curve is essential for evaluating the EVs’ travel capability and for determining the optimal fast-charging strategy for each vehicle, as it directly affects recharging times [5].

To obtain the fast-charging power curves, all BEVs were tested at a high-power DC fast-charging station. To ensure optimal fast-charging conditions, all vehicles equipped with battery preconditioning underwent 45 min of preconditioning before the charging session began. Charging power, SoC and battery temperature were recorded using both the data provided by the charging station and the information retrieved from each vehicle’s Battery Management System (BMS) via the EOBD connector. Data were collected at 1% SoC intervals from 10% to 90% SoC during each charging session.

Figure 1 shows two of the tested vehicles during testing, together with the data recorded during each fast-charging session.

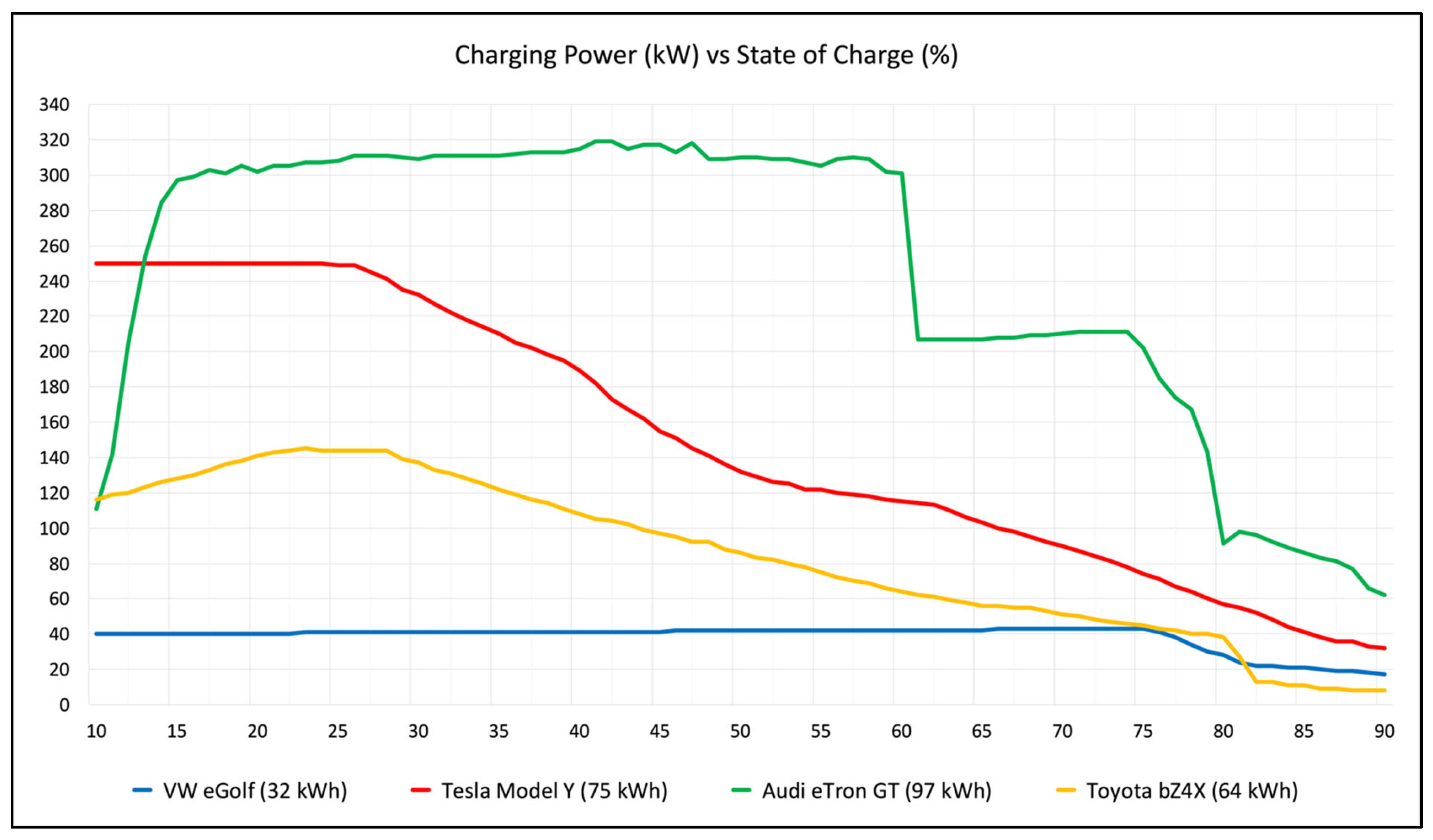

Additionally, Figure 2 shows, as a reference, the fast-charging power curves of four of the 62 tested vehicles (Audi eTron GT, Tesla Model Y, Toyota bZ4X and Volkswagen eGolf). These vehicles were chosen to highlight the significant differences in fast-charging power curves among technologies (e.g., 400 V vs. 800 V battery architectures, or passive vs. active thermal management systems). The figure in brackets corresponds to the net capacity of each vehicle’s battery, expressed in kWh.

The amount of energy stored in a battery during a charging session depends on the charging power the battery can handle over that time. In Figure 2, charging power (kW) is plotted against SoC (%). Because the energy needed to cover a given SoC interval is fixed by the battery’s net capacity, the time needed for that interval is inversely proportional to the power sustained over it: the higher the curve stays, the faster that energy is delivered to the battery.

Consequently, fast-charging capability does not depend merely on the maximum charging power an EV can reach under ideal conditions, as this level is often maintained only for a short period. Maintaining a high power level over a wide SoC range is more important for recharging the battery quickly.

A curve that stays high over a wide SoC range therefore corresponds to faster energy transfer to the battery. For example, the Audi eTron GT (green line) maintains significantly higher charging power over a wider SoC range than the Tesla Model Y (red), Toyota bZ4X (yellow), and VW eGolf (blue), indicating that it can store more energy in a shorter time. Conversely, vehicles with lower power curves accumulate energy more slowly, extending the charging session and, therefore, prolonging long-distance trips.
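The point that a sustained plateau matters more than the peak can be sketched numerically. The two power curves below are hypothetical (one with a 250 kW peak that tapers early, one with a flat 150 kW plateau), both applied to an assumed 75 kWh net capacity; charging time is integrated as energy added divided by instantaneous power.

```python
"""Peak power vs. sustained power: charging time over the same SoC window."""

# Hypothetical curves as (SoC %, kW) breakpoints; not data from the study.
PEAKY = [(0, 250.0), (20, 250.0), (40, 120.0), (60, 80.0), (80, 50.0), (100, 10.0)]
FLAT = [(0, 150.0), (70, 150.0), (80, 100.0), (100, 20.0)]


def power_kw(curve, soc):
    """Linear interpolation of charging power on a breakpoint curve."""
    for (s0, p0), (s1, p1) in zip(curve, curve[1:]):
        if s0 <= soc <= s1:
            return p0 + (p1 - p0) * (soc - s0) / (s1 - s0)
    raise ValueError(f"SoC {soc} outside curve")


def session_minutes(curve, soc_from, soc_to, capacity_kwh, steps=1000):
    """Midpoint-rule integral of (capacity * dSoC / 100) / P(SoC), in minutes."""
    h = (soc_to - soc_from) / steps
    return 60.0 * sum(
        capacity_kwh * h / 100.0 / power_kw(curve, soc_from + (i + 0.5) * h)
        for i in range(steps))


for name, curve in (("peaky", PEAKY), ("flat", FLAT)):
    minutes = session_minutes(curve, 10, 80, capacity_kwh=75.0)
    print(f"{name}: {minutes:.1f} min")
# prints: peaky: 30.1 min
#         flat: 21.6 min
```

Despite a peak 100 kW lower, the hypothetical flat curve completes the same 10→80% session in roughly 30% less time.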

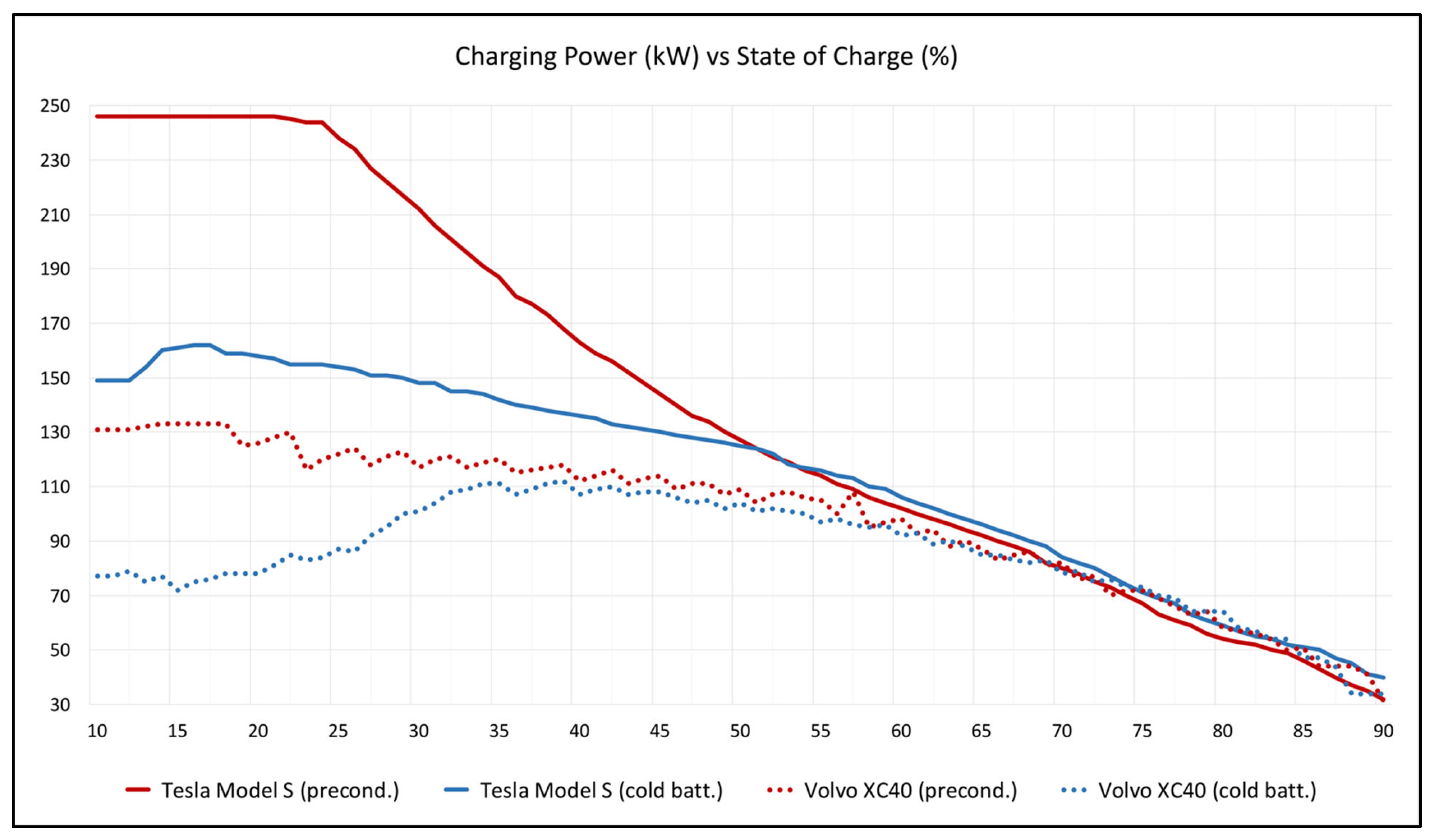

Moreover, as the battery’s fast-charging capability depends significantly on its temperature, the fast-charging power curves of several vehicles were recorded both with a cold battery and with a battery preconditioned prior to the charging session. Cold battery temperatures ranged from 8 to 14 °C, while preconditioning was carried out for 45 min prior to fast charging, raising battery temperatures to between 41 and 52 °C. This was done to show the influence of battery temperature and preconditioning on the batteries’ fast-charging capabilities.

Figure 3 shows the difference between the fast-charging power curves of a cold and a preconditioned battery for both a Tesla Model S and a Volvo XC40. As can be seen, the initial charging power of a cold battery can be almost half that of the preconditioned battery. However, both power curves tend to converge over time, as the thermal management system and the charging process itself gradually warm up the cold battery. For the same charging duration within the 10% to 50% SoC range, a preconditioned battery can store up to twice as much energy as a cold battery. This difference can significantly shorten fast-charging sessions and, consequently, overall long-distance trip times.

In addition to the fast-charging curve, two other factors play an essential role in an EV’s long-distance travel capability. One of them is the battery’s net capacity, typically expressed in kWh. This net capacity refers to the usable energy content of the battery, as the actual capacity—known as gross or nominal capacity—is higher but partially unavailable for safety and durability reasons. The difference between the gross and net capacity is called the battery buffer and usually ranges from 5 to 10% of the nominal capacity.

The other factor that influences an EV’s long-distance travel capability is its energy consumption at highway speeds, typically measured in Wh/km or kWh/100 km. Although every EV has a standard rated consumption and range, these values vary significantly depending on the standardized test procedure under which the vehicle was tested and type-approved. The most common standard test protocols are WLTP [25] for Europe, EPA [26] for the USA, and CLTC [27] for China.

However, the standardized rated consumption of any of these protocols reflects a mix of city, interurban, and highway driving. Since EVs, unlike ICE vehicles, have significantly lower energy consumption in city driving, the standardized ratings are not representative of real-world consumption on long-distance trips.

For this reason, this research considers actual highway consumption values obtained under real-world conditions, as published by EV Database (https://ev-database.org/, accessed on 8 March 2025). Moreover, as EV energy consumption can vary considerably (by up to 30%) depending on weather conditions, two scenarios were considered. The first, called Cold Weather, represents a worst-case scenario with an outside temperature of −10 °C and cabin heating in use; the second, Mild Weather, represents a best-case scenario with an outside temperature of 23 °C and no air conditioning. The low temperature of −10 °C was selected because it represents a cold climate in which vehicles are still commonly operated in many countries.

Table 2 shows the battery specifications and highway energy consumption for a sample of the 62 EVs analyzed in this study. The battery net capacity and the Mild and Cold Weather highway consumption of every vehicle analyzed are provided in Table A4 of Appendix A.

Since most new battery electric vehicles can travel more than 300 km on a single charge, a 1000 km journey at highway speed was considered as the long-distance trip. The first leg starts with a fully charged battery (100% SoC), and the first charging stop occurs when the battery reaches 10% SoC. The rest of the trip is divided into several legs, depending on the vehicle’s needs, with each subsequent leg also ending at 10% SoC. For each charging stop, the time required to recharge the battery from 10% to 90% SoC is calculated from the vehicle’s previously obtained charging power curve.
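The leg structure described above can be sketched as a short Python function that walks along the route and records where each stop falls. The function name and the 350 km range in the usage example are hypothetical; the function also reports the arrival SoC of the final leg, which in general ends above the 10% threshold when every stop recharges to a fixed 90%.

```python
"""Leg-by-leg plan for a trip where every stop recharges from 10 % to 90 % SoC."""


def plan_legs(distance_km, range_km, start_soc=100.0, stop_soc=10.0,
              target_soc=90.0):
    """Return (leg_km, soc_at_start, soc_at_end) tuples for each driving leg.

    range_km is the distance covered by 100 % of the net capacity, so each
    percentage point of SoC is worth range_km / 100 kilometres.
    """
    if target_soc <= stop_soc or start_soc <= stop_soc:
        raise ValueError("start_soc and target_soc must exceed stop_soc")
    legs = []
    remaining_km = distance_km
    soc = start_soc
    while True:
        reach_km = (soc - stop_soc) / 100.0 * range_km  # km until stop_soc
        if remaining_km <= reach_km:
            # Final leg: the destination is reached before hitting stop_soc.
            legs.append((remaining_km, soc, soc - remaining_km / range_km * 100.0))
            return legs
        legs.append((reach_km, soc, stop_soc))
        remaining_km -= reach_km
        soc = target_soc  # recharge at the stop


legs = plan_legs(1000.0, range_km=350.0)  # hypothetical 350 km real range
for i, (km, soc_in, soc_out) in enumerate(legs, start=1):
    print(f"Leg {i}: {km:.0f} km, SoC {soc_in:.0f} % -> {soc_out:.0f} %")
print("Charging stops:", len(legs) - 1)
```

With the hypothetical 350 km range, the first leg covers 315 km (90% of the range), each intermediate leg 280 km (80%), and three stops are needed.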

To ensure uniform and comparable testing conditions across all vehicles, the following assumptions were established:

All electric vehicles are assumed to support the Plug & Charge protocol, eliminating any time delays associated with initiating or ending the charging session.

Charging stations are assumed to consistently supply the power requested by the vehicles’ BMS throughout the charging process.

Battery degradation is not considered: all batteries are assumed to have a State of Health (SoH) of 100%.

Environmental conditions align with the previously defined “Mild” and “Cold” weather scenarios.

The assumed traffic conditions and road network enable continuous driving at a constant speed of 120 km/h.

Figure 4 presents an infographic outlining the testing methodology and the procedure used to determine the optimal charging strategy for each vehicle.

The real range $R$ (km) of each vehicle is calculated, according to Equation (1), by dividing the battery’s net capacity $C_{\text{net}}$ (kWh) by the vehicle’s real energy consumption $c$ (Wh/km), where the factor of 1000 converts kWh into Wh:

$$R = \frac{1000 \cdot C_{\text{net}}}{c} \tag{1}$$

Next, the total charging time $t_{\text{ch}}$ (min) is equal to the number of charging stops $n$ multiplied by the sum of the charging session duration $t_{\text{s}}$ and the detour time $t_{\text{d}}$ (both in min), as shown in Equation (2):

$$t_{\text{ch}} = n \left( t_{\text{s}} + t_{\text{d}} \right) \tag{2}$$

After that, the total trip time $t_{\text{trip}}$ (h) is calculated by adding the total charging time to the driving time. The driving time is obtained by dividing the total distance $d = 1000$ km by the cruising speed $v = 120$ km/h, which yields Equation (3):

$$t_{\text{trip}} = \frac{d}{v} + \frac{t_{\text{ch}}}{60} \tag{3}$$

This results in a driving time of 8.33 h, to which the total charging time must be added to obtain the full trip duration. The minimum number of charging stops is then determined from the previously calculated real range, given that each charging stop occurs when the battery reaches 10% SoC.
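Equations (1) to (3) and the minimum-stop count can be expressed as a few Python functions. This is a sketch only: the function names, the 70 kWh and 200 Wh/km inputs and the 30 min session duration in the example are hypothetical, and the minimum-stop rule assumes, as in the methodology, a first leg from 100% to 10% SoC followed by legs from 90% to 10% SoC.

```python
"""Equations (1)-(3) and the minimum number of charging stops (sketch)."""
import math

DISTANCE_KM = 1000.0  # trip length used in the study
SPEED_KMH = 120.0     # constant cruising speed used in the study


def real_range_km(net_capacity_kwh, consumption_wh_per_km):
    """Eq. (1): range = 1000 * net capacity / consumption (kWh -> Wh)."""
    return net_capacity_kwh * 1000.0 / consumption_wh_per_km


def total_charging_min(n_stops, session_min, detour_min=5.0):
    """Eq. (2): n x (session duration + detour time), in minutes."""
    return n_stops * (session_min + detour_min)


def total_trip_h(charging_min):
    """Eq. (3): driving time d / v plus charging time converted to hours."""
    return DISTANCE_KM / SPEED_KMH + charging_min / 60.0


def min_stops(range_km, start_soc=100.0, stop_soc=10.0, target_soc=90.0):
    """Smallest n such that the first leg plus n full legs cover the trip."""
    first_leg = (start_soc - stop_soc) / 100.0 * range_km
    later_leg = (target_soc - stop_soc) / 100.0 * range_km
    if DISTANCE_KM <= first_leg:
        return 0
    return math.ceil((DISTANCE_KM - first_leg) / later_leg)


rng = real_range_km(70.0, 200.0)          # hypothetical vehicle: 350 km
n_min = min_stops(rng)                    # 3 stops
t_ch = total_charging_min(n_min, 30.0)    # hypothetical 30 min sessions
print(f"{rng:.0f} km, {n_min} stops, trip {total_trip_h(t_ch):.2f} h")
# prints: 350 km, 3 stops, trip 10.08 h
```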

Finally, a MATLAB script calculates the total time required to recharge the battery for every number of stops from the minimum up to a maximum of 15. It thereby determines the optimal number of stops, i.e., the one that minimizes total charging time and therefore total trip time, under the assumption that there are sufficient fast-charging stations along the route and that each recharge includes a 5 min detour.
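The search performed by the MATLAB script can be sketched in Python as follows. This is not the authors’ script: the power curve and vehicle inputs are hypothetical, and the sketch assumes that the energy to be recharged is split evenly across the n stops, so that every stop starts at 10% SoC and ends at the same cutoff SoC; values of n whose cutoff would exceed 90% are skipped, which reproduces the minimum-stop bound.

```python
"""Search for the number of stops that minimises total charging time (sketch)."""

# Hypothetical power-vs-SoC breakpoints (SoC %, kW); not measured data.
CURVE = [(0, 150.0), (30, 150.0), (50, 120.0), (70, 90.0),
         (80, 60.0), (90, 35.0), (100, 10.0)]


def power_kw(soc):
    """Linear interpolation of charging power at a given SoC."""
    for (s0, p0), (s1, p1) in zip(CURVE, CURVE[1:]):
        if s0 <= soc <= s1:
            return p0 + (p1 - p0) * (soc - s0) / (s1 - s0)
    raise ValueError(f"SoC {soc} outside curve")


def session_min(soc_from, soc_to, capacity_kwh, steps=1000):
    """Charging time in minutes, integrating (capacity * dSoC / 100) / P(SoC)."""
    h = (soc_to - soc_from) / steps
    return 60.0 * sum(capacity_kwh * h / 100.0 / power_kw(soc_from + (i + 0.5) * h)
                      for i in range(steps))


def optimal_stops(distance_km, capacity_kwh, consumption_wh_km,
                  detour_min=5.0, max_stops=15, stop_soc=10.0, max_soc=90.0):
    """Return (n_stops, total_charging_min, cutoff_soc) minimising Eq. (2)."""
    range_km = capacity_kwh * 1000.0 / consumption_wh_km       # Eq. (1)
    # SoC points to recharge so that the car arrives with stop_soc left.
    needed = distance_km / range_km * 100.0 - (100.0 - stop_soc)
    if needed <= 0:
        return 0, 0.0, None                                     # no stop needed
    best = None
    for n in range(1, max_stops + 1):
        cutoff = stop_soc + needed / n                          # even split
        if cutoff > max_soc:
            continue                                            # below n_min
        total = n * (session_min(stop_soc, cutoff, capacity_kwh) + detour_min)
        if best is None or total < best[1]:
            best = (n, total, cutoff)
    if best is None:
        raise ValueError("trip not feasible within max_stops")
    return best


n, total, cutoff = optimal_stops(1000.0, capacity_kwh=70.0, consumption_wh_km=200.0)
print(f"{n} stops, {total:.1f} min charging, charge to {cutoff:.1f} % SoC")
# prints: 4 stops, 80.6 min charging, charge to 58.9 % SoC
```

With these hypothetical inputs the search picks four stops rather than the minimum of three, charging each time only to about 59% SoC: the extra 5 min detour costs less than the time saved by avoiding the low-power part of the curve.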

3. Results and Analysis

This section presents the key findings of the study for a representative subset of the 62 battery electric vehicles analyzed. Comprehensive results for all tested vehicles are provided in tabular form in Appendix A.

Table 3 presents the five EVs, among all 62, that achieve the largest reductions in total trip time when optimized charging strategies are applied under Mild Weather conditions. For each model, the table compares the minimum number of charging stops required to complete the 1000 km journey with the optimal number of stops derived from the charging power curves and consumption data. The “Time saved” column quantifies the reduction in total recharging time achieved by adopting the optimal strategy. The minimum and optimal charging stops, along with the time saved under Mild Weather conditions, are provided for every vehicle analyzed in Table A1 of Appendix A.

Under Mild Weather conditions, the Toyota bZ4X (64 kWh) exhibits the largest absolute time saving, reducing total recharging time by 49.73 min when the number of charging stops increases from three to six, thereby taking advantage of shorter, higher-power charging sessions. This corresponds to a 32.85% reduction compared with the minimum-stop strategy.

The Nissan Townstar (45 kWh) achieves a total recharging time reduction of 34.61 min, from 242.72 min with six stops to 208.11 min with nine stops, a 14.26% improvement. This gain is particularly notable for a vehicle with a small-capacity battery that already requires frequent fast-charging stops.

The Volvo C40 (75 kWh) benefits from a time saving of 23.04 min, achieved by increasing stops from three to five, corresponding to an 18.00% reduction. The Polestar 2 (61 kWh) follows closely, with a 20.48 min reduction (16.33%) when increasing stops from three to five.

Finally, the MG Cyberster (75 kWh) achieves a time saving of 16.53 min (17.45%) by increasing stops from two to four, highlighting that even high-capacity battery EVs can benefit from optimized charging intervals under favorable ambient conditions.

These results indicate that, for most EV models, increasing the frequency of charging stops—thus operating more frequently within the battery’s higher-power charging range—can significantly reduce total recharging time, enhancing long-distance travel efficiency.

Table 4 presents the five battery electric vehicles achieving the largest time savings with optimized charging strategies under Cold Weather conditions. As in Table 3 (Mild Weather conditions), the results indicate that increasing the number of charging stops, thereby keeping the battery within a higher charging power range, can significantly reduce total recharging time. However, the time savings in cold conditions are substantially greater because of the more pronounced negative impact of low temperatures on energy consumption and charging performance. The minimum and optimal charging stops, along with the time saved under Cold Weather conditions, are provided for every vehicle analyzed in Table A2 of Appendix A.

Under Cold Weather conditions, absolute time savings are markedly higher due to the higher energy consumption and reduced charging power at low temperatures. The Toyota bZ4X (64 kWh) achieves the greatest improvement, reducing total recharging time by 116.00 min (45.09%) when increasing stops from four to eight.

The Nissan Townstar (45 kWh) records a 105.36 min saving (26.68%) when increasing stops from seven to ten. This result underscores the significant impact of optimization in small-battery vehicles affected by low-temperature charging limitations.

The Polestar 2 (61 kWh) achieves a 49.88 min reduction (25.01%) when increasing stops from four to eight, while the Volvo C40 (75 kWh) shows a 41.69 min saving (22.27%) with an increase from four to seven stops.

The Hyundai Inster (46 kWh) records the smallest absolute gain in this set, with a 35.45 min reduction (16.77%) when increasing stops from five to six, reflecting the influence of both battery size and charging profile on optimization outcomes.

A cross-comparison of Table 3 and Table 4 reveals that Cold Weather conditions amplify the absolute benefits of optimal charging strategies, with time savings more than doubling for three of the four vehicles that appear in both tables. For example, the Toyota bZ4X increases its absolute gain from 49.73 min to 116.00 min, and the Nissan Townstar from 34.61 min to 105.36 min. This disparity is primarily attributable to the higher energy consumption at low temperatures and the lower charging power attainable at reduced battery temperatures, which prolongs each individual charging session; breaking recharging into more frequent but shorter sessions therefore mitigates the overall time penalty.
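The cold-to-mild ratio of the savings can be checked directly from the values reported for the four vehicles that appear in both Table 3 and Table 4; the snippet below only reuses those reported figures.

```python
"""Cold/Mild ratio of the time savings reported in Tables 3 and 4."""

# (Mild Weather saving, Cold Weather saving) in minutes, as reported above.
SAVINGS_MIN = {
    "Toyota bZ4X": (49.73, 116.00),
    "Nissan Townstar": (34.61, 105.36),
    "Polestar 2": (20.48, 49.88),
    "Volvo C40": (23.04, 41.69),
}

ratios = {model: cold / mild for model, (mild, cold) in SAVINGS_MIN.items()}
for model, ratio in ratios.items():
    print(f"{model}: {ratio:.2f}x")
# prints: Toyota bZ4X: 2.33x, Nissan Townstar: 3.04x,
#         Polestar 2: 2.44x, Volvo C40: 1.81x (one per line)
```

The Volvo C40 is the only one of the four whose saving grows by less than a factor of two.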

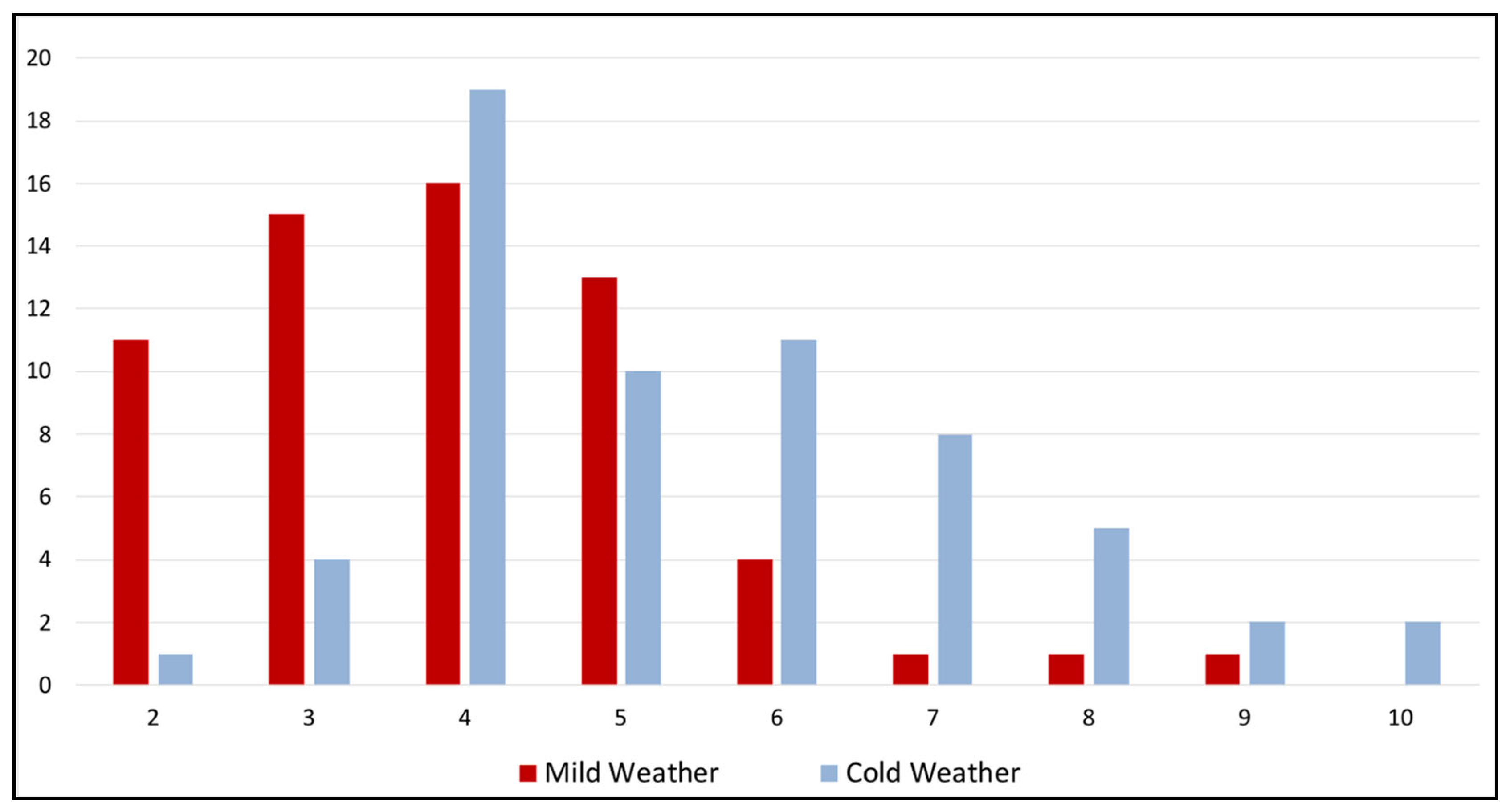

Figure 5 synthesizes the time-saving performance, in minutes, of the vehicles listed in Table 3 and Table 4, providing a direct visual comparison between Mild and Cold Weather scenarios. The bar plots highlight the substantial increase in absolute time savings under Cold Weather conditions across all models, confirming the trends identified in the tabular analysis. Notably, the Toyota bZ4X and Nissan Townstar display the most pronounced improvements, while vehicles with larger battery capacities, such as the Volvo C40 and MG Cyberster, show more moderate, though still significant, gains. This visualization underscores the importance of adapting charging strategies to environmental conditions in order to minimize total travel time during long-distance trips.

In addition, Figure 6 illustrates the distribution of the optimal number of charging stops for the 62 EVs analyzed in this study, comparing Mild and Cold Weather scenarios. Under Mild Weather conditions, the optimal strategy typically involves between 3 and 5 charging stops, with most vehicles clustering at 3 and 4 stops. By contrast, Cold Weather conditions lead to a systematic increase in the optimal number of stops, with most EVs requiring between 4 and 7. The mean value shifts from 3.94 stops in Mild Weather to 5.48 stops in Cold Weather, with several vehicles even exceeding 8 stops under adverse thermal conditions.

These aggregated results complement the findings presented in Table 3 and Table 4, where individual vehicle case studies highlighted significant time savings achieved through optimized charging strategies. While Table 3 and Table 4 demonstrated the magnitude of time reduction for specific EVs, Figure 6 generalizes these observations by showing that colder climates consistently increase the optimal number of charging stops. Taken together, the results confirm that Cold Weather not only extends charging times but also alters the optimal trip planning strategy, reinforcing the role of thermal management and battery preconditioning in mitigating performance losses.

Finally, the research determined an optimal mean SoC cutoff point of 58.7% under Mild Weather conditions and 59.6% under Cold Weather conditions, with standard deviations of 10.1 and 10.4 percentage points, respectively. These findings provide valuable guidance for EV users seeking to minimize long-distance trip times, as they indicate that charging beyond 70% SoC is generally not advantageous unless specific factors, such as the distance to the next fast-charging station, require it. The optimal SoC cutoff point for each vehicle analyzed, under Mild and Cold Weather conditions, is provided in Table A3 of Appendix A.
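One way to relate the 70% threshold to these statistics is to add one standard deviation (in percentage points, p.p.) to each mean cutoff, which places 70% SoC at roughly one standard deviation above the mean in both scenarios:

$$
\begin{aligned}
\text{Mild Weather:}\quad & 58.7\% + 10.1\ \text{p.p.} = 68.8\% \\
\text{Cold Weather:}\quad & 59.6\% + 10.4\ \text{p.p.} = 70.0\%
\end{aligned}
$$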

5. Conclusions

The results show an optimal charging cutoff point well below 80% SoC, which is the typical manufacturer recommendation. This indicates that, in general, charging beyond 65% SoC is inefficient for minimizing trip duration unless dictated by station availability constraints. Moreover, low ambient temperatures have been shown to increase the optimal number of charging stops. For both reasons, the optimal strategy is to increase the number of stops while keeping them shorter, generally every 2–3 h of driving.

From a user perspective, the results provide actionable insights for planning long-distance trips. By ending charging sessions at around 60–70% SoC and making more frequent, shorter, high-power stops, drivers can reduce total recharging time by up to about 33% under Mild Weather conditions and up to about 45% under Cold Weather conditions, which translates into a smaller but still meaningful reduction in overall travel time. In colder climates, preconditioning emerges as a key enabler, mitigating the otherwise severe penalties on charging efficiency.

Despite the robustness of the findings, certain limitations must be acknowledged. Firstly, the analysis assumes ideal charging infrastructure availability, whereas in real-world scenarios station occupancy, detours, and charging power fluctuations may alter the optimal strategies. Secondly, the study focused on highway driving at a constant speed of 120 km/h, which may not capture the full variability of on-road driving conditions. Additionally, the results are based on data from currently available EVs; future models with higher charging capabilities or larger battery capacities may shift the identified optimal cutoff point. Future research should therefore integrate dynamic traffic simulations, station availability models, and seasonal energy mix assessments to provide a more comprehensive evaluation of EV long-distance travel feasibility.