1. Introduction

The tomato originates from the western coast of South America and the high Andes, in a region stretching from central Ecuador to northern Chile and including the Galápagos Islands [1]. Tomatoes are among the most popular vegetables worldwide, valued for their taste and nutritional content. According to the Food and Agriculture Organization (FAO), approximately 189 million tons of tomatoes are produced worldwide, with China accounting for 67.5 million tons, followed by India and Turkey with 21.1 million tons and 13 million tons, respectively [2]. Tomatoes contain carbohydrates, organic acids, amino acids, vitamins, pigments, various minerals, and phenolic compounds that are beneficial for human health [3]. Minerals such as calcium (Ca), potassium (K), sodium (Na), phosphorus (P), magnesium (Mg), sulfur (S), and chlorine (Cl) are crucial for human health, and the human body requires them at levels of more than 50 mg/day [4]. Although minerals such as calcium, magnesium, potassium, phosphorus, and sodium make up only a small fraction of a vegetable’s dry matter, they are critical to its nutritional value and quality [1,2,3]. Analyzing and optimizing the mineral content of crops grown for human consumption is therefore vital for improving crop quality [5].

The analysis of macro- and micro-elements, which play a crucial role in human health and nutrition, is an important part of studies on cultivation, processing, and breeding. These elements are quantified through a series of laboratory analyses that demand significant time, manpower, and energy and incur high costs. Processing and interpreting the large volume of data these analyses produce is a further, growing challenge. This highlights the need for alternative, efficient, and cost-effective approaches. In this context, the present study explores the potential of machine learning techniques for predicting the mineral content of tomato (Solanum lycopersicum L.) fruits. The specific research objective is to develop predictive models that can estimate macro- and micro-element concentrations based on spectral and image data, thus reducing reliance on conventional laboratory procedures. The study also addresses the scarcity of research on machine learning for mineral prediction in tomatoes, compared to relatively well-studied parameters such as color, size, or phytochemical content.

In recent years, significant research has been carried out on the use of machine learning and advanced analytical methods to predict tomato quality and nutrient content. For example, spectrophotometric analyses and multiple regression models have been successfully applied to determine chlorophyll and carotenoid dynamics during the development of tomato fruit [6]. Similarly, the prediction of phytochemicals such as lycopene and β-carotene with artificial neural networks and portable spectroscopic devices demonstrated the potential of these techniques in nutrient profile analysis [7,8]. In addition, machine vision and deep learning have provided effective results in the assessment of physical properties and disease status of tomatoes [9,10]. These studies indicate that machine learning has a wide range of applications in the prediction of tomato quality parameters and holds promise for less studied parameters such as mineral content. However, a significant research gap exists in the application of machine learning techniques to predict the mineral element composition of tomatoes, an underexplored area with the potential to advance both agricultural practices and nutritional assessment. Therefore, this study aims to develop and validate machine learning models for accurately predicting the macro- and micro-element content of tomatoes, thereby addressing the limitations of traditional analytical approaches and providing a foundation for more efficient quality assessment methods.

Environmental and postharvest factors affect the nutritional components of tomatoes and therefore play a critical role in the development of prediction models. In one study, the lycopene content of tomatoes stored at different temperatures and for different durations was estimated by multiple regression analysis. Hydroponically grown tomatoes were stored at 10, 15, 20, 25, and 30 °C for 7 d after harvest, and the a* and b* values were obtained by measuring color before and after storage with a colorimeter. The lycopene content was modeled using the pre-storage color, temperature, and time variables, and it was found to increase when the tomatoes were stored at 20 °C and above (R2 = 0.76) [11]. In another study, the sensitivity of tomatoes to mechanical impacts was evaluated and bruise prediction models were developed [12]. The impact forces to which the tomatoes were exposed during transportation and processing were measured with acceleration sensors, and the highest impact levels were found to occur during placement in and removal from harvest crates. The bruise prediction models were developed using linear regression, artificial neural networks, and logistic regression. The findings showed that high temperature (>20 °C) and low relative humidity increased bruise formation and that the probability of bruising increased with the impact force [12]. A further study investigated the effects of storage temperature and duration on bruise formation and quality deterioration in tomatoes. Steel balls of known mass were dropped on tomatoes from different heights (20, 40, and 60 cm) to determine the bruise formation threshold; the samples were then stored at 10 and 22 °C for 10 d, and physical, chemical, and nutritional changes were analyzed every 2 d. Six regression models were created to predict bruise area and quality changes, and it was determined that factors such as storage time, bruise area, weight loss, color changes, total soluble solids, and pigment content changed significantly (p < 0.05) as the impact level and temperature increased. Firmness, brightness, and color saturation decreased significantly at 22 °C and at high impact levels. Regression models with R2 values ranging from 0.76 to 0.95 showed strong performance in predicting bruise formation and quality losses [13]. In addition, a Decision Support System (DSS) was developed to determine the ideal harvest time by estimating the total soluble solids (°Brix) content in industrial tomato cultivation. A data set of 33 input variables was created, including quality data (pH, Bostwick, L, a/b, average weight, °Brix), the hybrid type used, weather conditions, and soil data for six growing periods in the northwestern Peloponnese region of Greece. Thirteen machine learning algorithms were tested, and the k-nearest neighbor (kNN) algorithm was selected as the fastest and most effective method. The estimated °Brix values followed a pattern similar to the measured °Brix values. This DSS, which uses real-time weather data as an input, is considered an important tool to help farmers determine the ideal time to harvest the best-quality tomatoes [14]. Finally, a study was conducted to determine the yield gap, analyze production constraints, and increase yield in greenhouse tomato cultivation [15]. A total of 110 greenhouse tomato crops in the southern region of Uruguay were studied during the 2014/15 and 2015/16 seasons, and their yields were compared with the potential and achievable yields. The potential yield was calculated with a simulation model based on photosynthetically active radiation (PAR) and light use efficiency, and the assimilate distribution and fruit yield were estimated using the TOMSIM model. The yield gap was determined as 44% or 10.7 kg/m² compared to the potential yield. The study showed that yield can be increased by reducing leaf pruning and increasing plant density in long summer and short spring/summer cultivation, while higher yields can be achieved in autumn cultivation by early planting, reducing leaf pruning, and increasing greenhouse light transmittance [15].

A similar approach is suggested for the estimation of selected minerals in beef-type tomatoes, integrating data on both agronomic conditions and postharvest processes into machine learning algorithms. However, studies focusing on the estimation of the mineral content of tomatoes are limited in the current literature. In particular, the rapid analysis of minerals such as potassium, calcium, and magnesium is of great importance for agricultural productivity and human nutrition. This study addresses the lack of robust, non-destructive techniques for estimating the mineral content of tomatoes, with a particular focus on potassium, calcium, and magnesium. Inspired by previous methods such as vis–NIR spectroscopy and multivariate analysis [16,17], the current study aims to present an optimized machine learning model for the estimation of the mineral content in beef-type tomatoes. The findings are expected to contribute both to mineral optimization by producers and to consumer health.

In line with this objective, the nutritional elements of a beef-type tomato variety were predicted using machine learning methods. Three supervised machine learning techniques were used: k-nearest neighbors (kNN), artificial neural networks (ANNs), and Support Vector Regression (SVR). The prediction performance of the models was evaluated using various metrics, including mean absolute percentage error (MAPE), mean absolute error (MAE), root mean square error (RMSE), mean square error (MSE), and R-squared (R2). Additionally, box plots and scatter plots were used to visually represent the model performances.
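The evaluation metrics named above can be written out in a few lines. The following is a minimal sketch using the standard textbook definitions; the function names and the sample values are hypothetical and are not taken from the study's data or code.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mse(y_true, y_pred):
    """Mean square error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean square error, in the same units as the target."""
    return math.sqrt(mse(y_true, y_pred))

def mape(y_true, y_pred):
    """Mean absolute percentage error in percent; assumes no true value is zero."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Hypothetical measured and predicted concentrations (mg/100 g), for illustration only
y_true = [100.0, 200.0, 300.0, 400.0]
y_pred = [110.0, 190.0, 310.0, 390.0]

scores = {
    "MAE": mae(y_true, y_pred),
    "MSE": mse(y_true, y_pred),
    "RMSE": rmse(y_true, y_pred),
    "MAPE": mape(y_true, y_pred),
    "R2": r2(y_true, y_pred),
}
print(scores)
```

MAE, MSE, and RMSE carry the units (or squared units) of the target, whereas MAPE and R2 are unitless, which is why the latter two are easier to compare across elements with very different concentration ranges.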

3. Results and Discussion

Descriptive statistics from the quality analyses of the tomatoes used in the experiments are shown in Table 1. All variables used in the study are continuous.

The mean concentration of calcium is 360.62 mg/100 g, with a standard deviation of 87.62, indicating moderate variability. The negative kurtosis (−0.57) suggests a platykurtic distribution, flatter than normal, with lighter tails and fewer outliers. Potassium exhibits the highest mean (2305.29 mg/100 g) and variance (132,832.67), reflecting substantial variation across samples. A kurtosis of −0.35 indicates a slightly platykurtic distribution without extreme tails. Magnesium displays a stable distribution with a mean of 115.41 mg/100 g and low variability (SD = 23.58); its kurtosis (−0.41) is close to that of a normal distribution. The most notable feature of phosphorus is its high kurtosis (3.67), indicating a leptokurtic distribution: most values are concentrated around the mean, while the tails are heavier than those of a normal distribution, so occasional extreme values occur. This suggests that P levels are generally consistent across most samples.

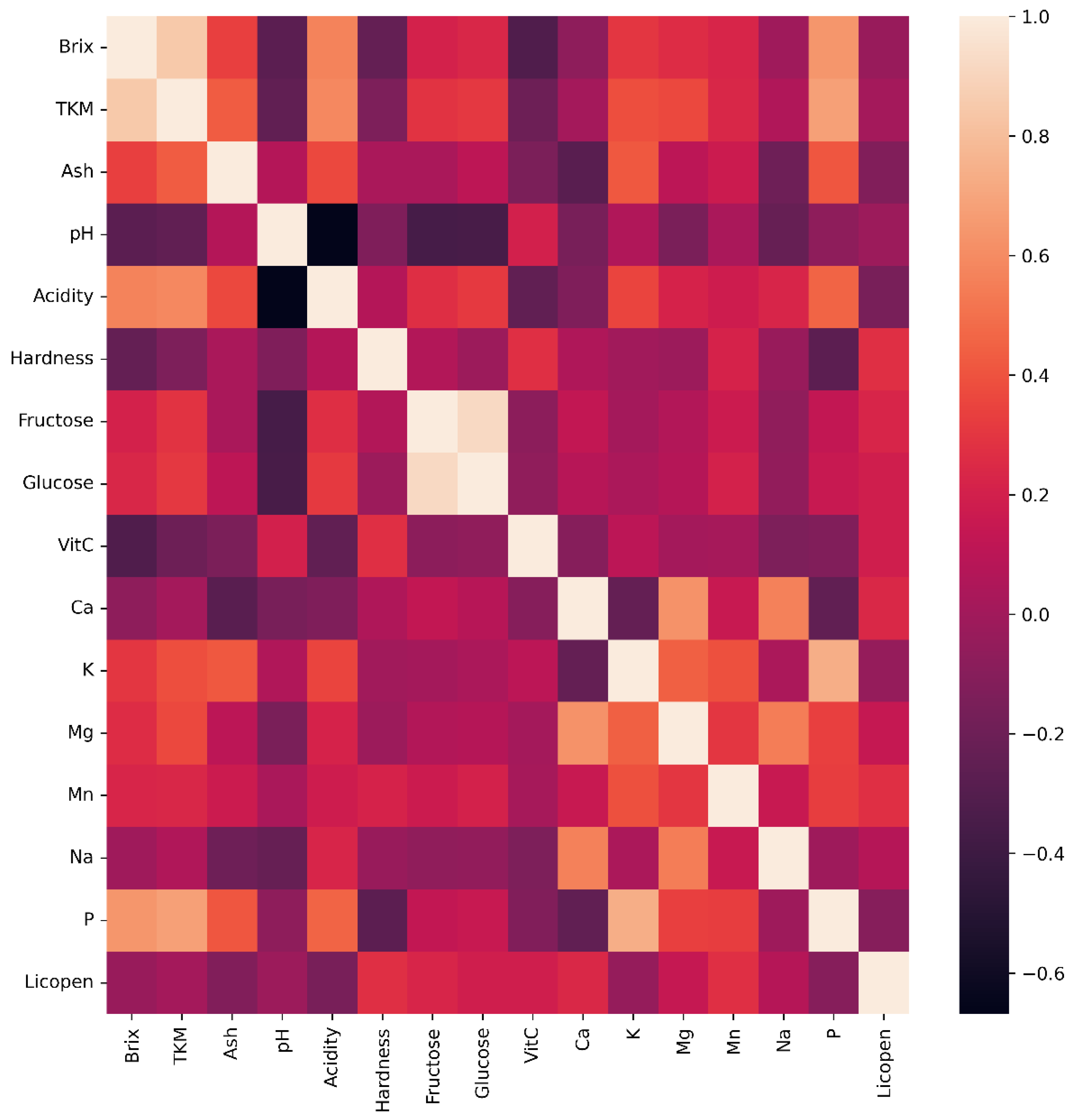
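To make the kurtosis values discussed above easier to interpret, the sketch below computes excess kurtosis for two small synthetic samples. The text does not state which estimator was used; this example assumes the population form (fourth central moment divided by the squared variance, minus 3), so that a normal distribution scores 0. The function name and data are hypothetical.

```python
def excess_kurtosis(values):
    """Population excess kurtosis: m4 / m2**2 - 3 (0 for a normal distribution)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n  # second central moment
    m4 = sum((v - mean) ** 4 for v in values) / n  # fourth central moment
    return m4 / m2 ** 2 - 3.0

# Evenly spread values: flatter than normal (platykurtic, negative result)
flat = [1.0, 2.0, 3.0, 4.0, 5.0]

# Most values at the center plus two extremes: peaked with heavy tails
# (leptokurtic, positive result)
peaked = [5.0] * 8 + [0.0, 10.0]

print(excess_kurtosis(flat), excess_kurtosis(peaked))
```

A negative result corresponds to the platykurtic pattern reported for Ca, K, and Mg, and a positive result to the leptokurtic pattern reported for P.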

The heat map (Figure 3) shows the correlations between the variables used. Feature selection means eliminating unnecessary or ineffective independent variables when predicting the target variable. Forward and backward feature selection were used to exclude such variables from the analysis. Both methods excluded the color-related variables L (lightness coordinate in the CIE L*a*b* color space), a (green-red color component), b (blue-yellow color component), c (chroma), and h (hue angle), as well as vitamin C, from the model.
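Forward selection as described above can be sketched as a greedy loop that adds one variable at a time while the score keeps improving. This is an illustrative outline only: the scoring function, the stopping tolerance `min_gain`, and the feature names `x1` to `x3` are assumptions, and the study's actual selection criterion is not given in this section. Backward elimination mirrors the same idea, starting from all variables and removing one at a time.

```python
def forward_select(candidates, score_fn, min_gain=1e-6):
    """Greedy forward selection.

    score_fn(features) must return a score where higher is better
    (for example, a cross-validated R2 of a model fitted on those features).
    """
    selected = []
    best_score = float("-inf")
    remaining = list(candidates)
    while remaining:
        # Score every candidate when added to the current selection
        trials = [(score_fn(selected + [f]), f) for f in remaining]
        trial_best, feature = max(trials, key=lambda t: t[0])
        if trial_best - best_score <= min_gain:
            break  # no remaining variable improves the score enough
        selected.append(feature)
        remaining.remove(feature)
        best_score = trial_best
    return selected, best_score

# Toy scoring function: each hypothetical feature adds a fixed gain.
# In practice score_fn would fit and validate a model.
gains = {"x1": 0.50, "x2": 0.30, "x3": 0.0}

def toy_score(features):
    return sum(gains[f] for f in features)

chosen, final_score = forward_select(["x3", "x1", "x2"], toy_score)
print(chosen, final_score)
```

In this toy run the uninformative variable `x3` is never added, which is the same outcome the text reports for the color variables and vitamin C.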

In this study, which focused on estimating the nutritional content of tomatoes, all samples were obtained under consistent growing conditions to minimize the influence of external environmental factors such as temperature and humidity. This controlled approach allowed for a more accurate assessment of the ability of machine learning models to predict mineral content based solely on the internal properties of tomatoes. Three machine learning methods were used in this context: artificial neural networks, Support Vector Regression, and k-nearest neighbor. Model performance was assessed with the evaluation metrics and is presented in Table 2.

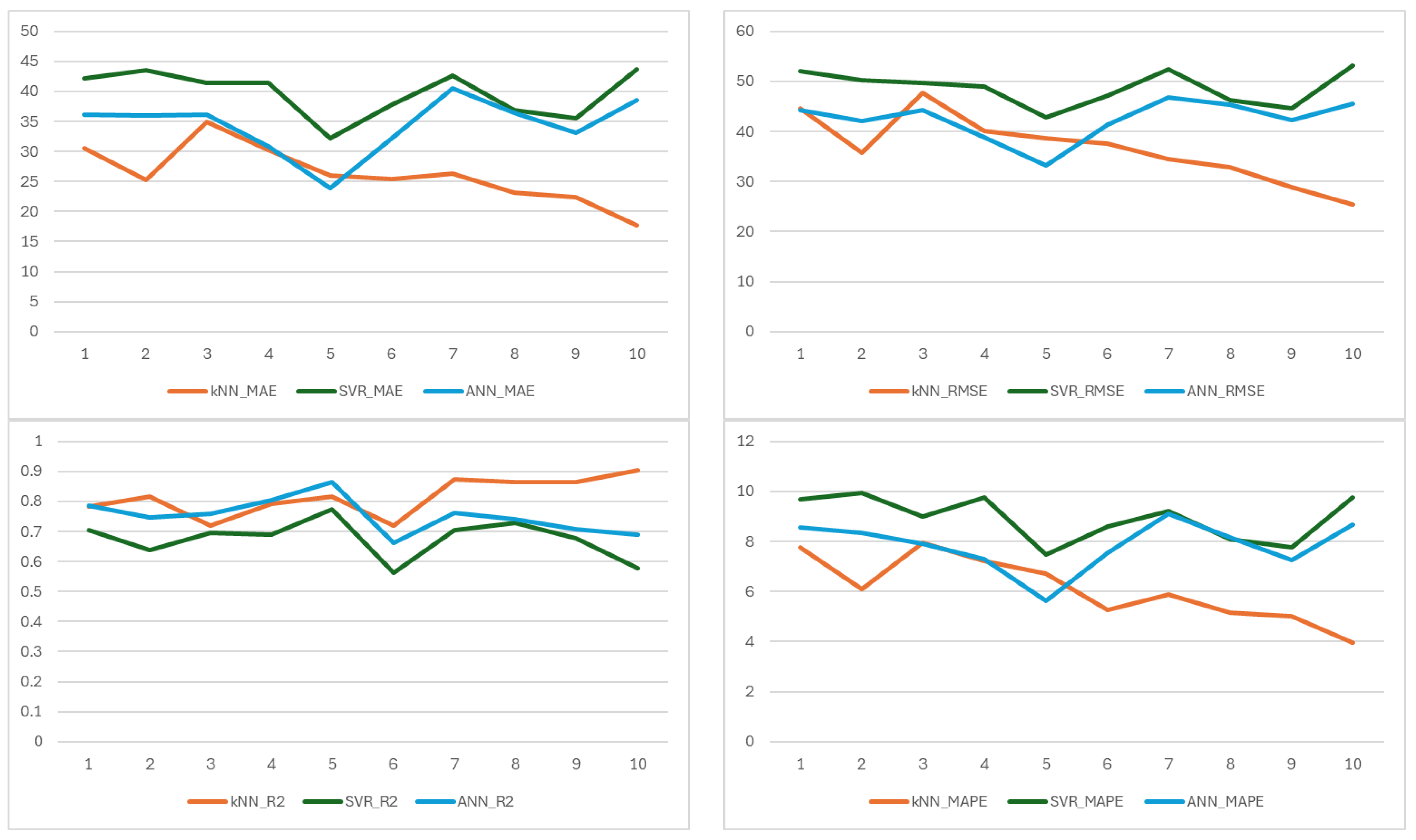
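The fold-wise evaluation reported in the following paragraphs can be illustrated with a small k-nearest-neighbor regressor evaluated by k-fold cross-validation. This is a minimal sketch under stated assumptions: the number of neighbors (`k=2`), the Euclidean distance, the interleaved fold assignment, and the synthetic data are all hypothetical, since the study's settings are not given in this section. With real measurements, features would typically be scaled before computing distances.

```python
import math

def knn_predict(train_X, train_y, x, k):
    """Average the targets of the k nearest training points (Euclidean distance)."""
    order = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))
    nearest = order[:k]
    return sum(train_y[i] for i in nearest) / len(nearest)

def kfold_rmse(X, y, n_folds, k):
    """Return one RMSE per fold; fold f holds out samples f, f + n_folds, ..."""
    scores = []
    for f in range(n_folds):
        test_idx = [i for i in range(len(X)) if i % n_folds == f]
        train_idx = [i for i in range(len(X)) if i % n_folds != f]
        train_X = [X[i] for i in train_idx]
        train_y = [y[i] for i in train_idx]
        sq_err = [
            (y[i] - knn_predict(train_X, train_y, X[i], k)) ** 2 for i in test_idx
        ]
        scores.append(math.sqrt(sum(sq_err) / len(sq_err)))
    return scores

# Synthetic data, not from the study: one feature, target y = 2x
X = [[float(i)] for i in range(40)]
y = [2.0 * i for i in range(40)]

fold_rmse = kfold_rmse(X, y, n_folds=10, k=2)
print(fold_rmse)
```

Reporting one score per fold, rather than a single pooled value, is what makes it possible to see whether a model is consistently accurate or fails on particular data partitions.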

The fold-wise metric results of each ML model built to estimate potassium content are shown in Figure 4. kNN consistently achieved the highest R2 values across all 10 folds. This consistency highlights kNN’s strong generalization ability and its effectiveness in capturing non-linear relationships in the data. The SVR model performed poorly in several folds (e.g., folds 6, 9, and 10), suggesting inadequate model fit and a tendency toward underfitting. ANN achieved a high R2 in fold 4, which suggests that the relationships in this partition were ones the network could model effectively. However, its lower values in the other folds indicate that, as configured here, the ANN did not capture the underlying non-linear interactions consistently.

kNN achieved the lowest RMSE values in 8 out of 10 folds. The low RMSE in folds 5, 8, and 10 reflects high predictive accuracy in those subsets. SVR recorded the highest RMSE values across most folds. The exceptionally high error in fold 6 (RMSE = 312.48) suggests a significant prediction failure, possibly due to poor handling of outliers or non-linear patterns. ANN showed intermediate performance. While ANN outperformed kNN in fold 4, it generally exhibited higher errors in other folds, indicating inconsistent performance.

MAE provides a robust measure of average prediction error, and it is less sensitive to extreme values than RMSE. kNN again demonstrated superior performance. The consistently low MAE across folds underscores kNN’s ability to minimize average prediction deviation. SVR had the highest MAE in all folds except fold 2. This indicates that SVR’s predictions deviate substantially from actual values on average. ANN performed better than SVR but worse than kNN.

MAPE expresses prediction error as a percentage of actual values, enabling scale-independent comparison. kNN achieved the lowest MAPE values in all folds. SVR exhibited the highest MAPE; values exceeding 10% in fold 6 indicate unacceptably high relative errors in certain data partitions. ANN showed its best performance in fold 4 (4.63%). Although its errors were acceptable, it consistently underperformed compared to kNN.

The fold-wise metric results of each ML model built to estimate phosphorus content are shown in Figure 5. The kNN model demonstrates the lowest and most consistent MAE values across the 10 folds. In contrast, the SVR model exhibits higher errors, with an average MAE of around 15.30. The ANN model shows intermediate performance. Notably, ANN’s performance deteriorates significantly in fold 6, indicating potential instability or sensitivity to data partitioning.

In terms of RMSE, the kNN model again demonstrates the most favorable performance. SVR shows higher RMSE values, fluctuating between 15.50 (fold 10) and 27.07 (fold 7), with a mean RMSE of about 20.69. The ANN model exhibits the highest variability. The notably high RMSE values for both SVR and ANN in folds 6 and 7 suggest that these models struggle with certain data subsets, possibly due to overfitting, inadequate generalization, or sensitivity to outlier instances within those folds.

The kNN model achieves the lowest MAPE values, with a mean MAPE of approximately 4.04%. This indicates that, on average, kNN’s predictions deviate from actual values by less than 5%, reflecting high relative accuracy. In contrast, the ANN and SVR models show greater variability. The exceptionally high MAPE in fold 6 for ANN (over 10%) highlights a significant failure in relative prediction accuracy in that partition, further underscoring its instability.

The kNN model consistently achieves high R2 values. This indicates that kNN explains a substantial portion of the target variance across all folds. SVR performs moderately, with a mean of approximately 0.681. The ANN model displays the widest variation in R2. The extremely low R2 in fold 6 suggests a near-complete failure of ANN to capture the underlying data structure in that fold, likely due to poor convergence, overfitting, or suboptimal hyperparameter settings.

The fold-wise metric results of each ML model built to estimate magnesium content are shown in Figure 6. Analysis of MAPE values reveals that the kNN model achieved the lowest average MAPE of 4.77% across all folds, outperforming both SVR (6.05%) and ANN (5.48%). kNN demonstrated highly accurate percentage-wise predictions and consistent performance across folds. In contrast, the SVR model exhibited notably high MAPE values in certain folds, such as 7.91% in fold 9, suggesting poor generalization and instability in prediction accuracy under specific data splits.

With respect to RMSE, the kNN model demonstrated the best average performance, achieving particularly low RMSE values in folds 4 and 5. The SVR model showed high RMSE values, especially in fold 9 (14.26), indicating the presence of significant prediction errors and inconsistent model behavior. Although the ANN model performed comparably to kNN in some folds, it exhibited higher variability, with elevated RMSE values in folds 3 and 9 (11.98 and 12.76), reflecting less stable predictive accuracy.

In terms of R2, the kNN model achieved the highest average coefficient of determination (0.838), surpassing SVR (0.770) and ANN (0.802). kNN consistently explained a high proportion of variance, with R2 values exceeding 0.85 in folds 1, 4, 5, and 7. The SVR model, on the other hand, showed a notably lower average R2, indicating limited explanatory power. While the ANN model performed moderately, it generally underperformed compared to kNN across most folds, except in fold 5, where it achieved the highest R2 (0.920).

Overall, among the three models evaluated, kNN consistently demonstrated superior performance across all evaluation metrics—MAPE, RMSE, and R2—indicating robustness, accuracy, and reliability. SVR exhibited the weakest average performance with high variance across folds, while ANN showed moderate but less stable results.

The fold-wise metric results of each ML model built to estimate calcium content are shown in Figure 7. In terms of MAE, the kNN model consistently achieved the lowest error values across all folds. The ANN model achieved a relatively low MAE of 23.87 in fold 5 but generally performed worse than kNN and comparably to or slightly better than SVR in other folds.

Regarding MAPE, kNN achieved an average value of 6.20%, significantly outperforming SVR (8.83%) and ANN (7.54%). In contrast, SVR exhibited consistently high MAPE values. ANN showed moderate performance in MAPE, surpassing SVR but remaining less accurate than kNN.

For RMSE, the average values were 36.51 for kNN, 47.84 for SVR, and 42.05 for ANN. kNN achieved a notably low RMSE in folds 9 and 10 (28.89 and 25.41), demonstrating robustness in minimizing large errors. SVR, however, showed a high RMSE across all folds, indicating instability and sensitivity to outliers. Although ANN achieved the lowest RMSE (33.19) in fold 5, it generally underperformed compared to kNN in the remaining folds.

The R2 results revealed that kNN explained an average of 81.8% of the variance in the target variable, significantly higher than SVR (68.7%) and ANN (75.7%). kNN achieved R2 values above 0.75 in 8 out of 10 folds, indicating strong and consistent explanatory power. SVR, by contrast, remained below 0.60 in folds 6, 9, and 10 and only exceeded 0.70 in two folds, reflecting poor generalization. ANN achieved the highest R2 (0.865) in fold 5 but exhibited higher variability and lower overall consistency compared to kNN.

Paired t-tests and Wilcoxon signed-rank tests were performed on the per-fold MAPE values (10 folds) for each nutrient element to evaluate whether the differences in performance between models were statistically significant. The results are presented in Table 2. The ML models were compared with each other. Because kNN was the best of the ML models, the Partial Least Squares (PLS) model was compared only with the kNN model.
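The two tests compare models on the same folds, so they operate on the per-fold differences rather than on the two sets of scores separately. The sketch below computes the paired t statistic and the Wilcoxon signed-rank statistic with the standard library only. The per-fold MAPE values are hypothetical, not the study's, and the function names are illustrative. Obtaining p-values additionally requires the t distribution (with n − 1 degrees of freedom) and the signed-rank distribution; library routines such as `scipy.stats.ttest_rel` and `scipy.stats.wilcoxon` provide them.

```python
import math
import statistics

def paired_t_statistic(a, b):
    """t = mean(d) / (sd(d) / sqrt(n)) for the paired differences d = a - b."""
    d = [x - y for x, y in zip(a, b)]
    return statistics.mean(d) / (statistics.stdev(d) / math.sqrt(len(d)))

def wilcoxon_statistic(a, b):
    """Return min(W+, W-): rank the non-zero |differences| (average ranks for
    ties), then sum the ranks of positive and negative differences separately."""
    d = [x - y for x, y in zip(a, b) if x != y]
    order = sorted(range(len(d)), key=lambda i: abs(d[i]))
    ranks = [0.0] * len(d)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and abs(d[order[end + 1]]) == abs(d[order[pos]]):
            end += 1
        avg_rank = (pos + end) / 2 + 1  # ranks are 1-based
        for j in range(pos, end + 1):
            ranks[order[j]] = avg_rank
        pos = end + 1
    w_plus = sum(r for r, v in zip(ranks, d) if v > 0)
    w_minus = sum(r for r, v in zip(ranks, d) if v < 0)
    return min(w_plus, w_minus)

# Hypothetical per-fold MAPE values (%) for two models on the same five folds
mape_model_a = [5.0, 6.0, 7.0, 8.0, 6.0]
mape_model_b = [4.0, 4.0, 4.0, 4.0, 6.5]

t_stat = paired_t_statistic(mape_model_a, mape_model_b)
w_stat = wilcoxon_statistic(mape_model_a, mape_model_b)
print(t_stat, w_stat)
```

The t-test assumes the differences are approximately normal, whereas the signed-rank test uses only their ranks, which is why reporting both is a reasonable safeguard with as few as 10 folds.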

Potassium: kNN achieved a lower MAPE than SVR in 9 out of 10 folds and a lower MAPE than ANN in 8 out of 10 folds. The mean MAPE difference is 3.18 percentage points (kNN vs. SVR) and 1.65 percentage points (kNN vs. ANN). Both the parametric (t-test) and non-parametric (Wilcoxon) tests confirm that kNN significantly outperforms both SVR and ANN in predicting potassium.

Phosphorus: kNN achieved a lower MAPE than both SVR and ANN in all 10 folds. The average MAPE reduction is 3.21 percentage points (vs. SVR) and 3.38 percentage points (vs. ANN), which is substantial in practical terms. Both statistical tests strongly support the superiority of kNN.

Magnesium: kNN achieved a lower MAPE than SVR in 9 out of 10 folds and a lower MAPE than ANN in 8 out of 10 folds.

Calcium: kNN outperformed SVR in 9 out of 10 folds and ANN in 8 out of 10 folds. The average MAPE difference is 2.83 percentage points (vs. SVR) and 1.74 percentage points (vs. ANN), and both differences are statistically significant.

Finally, the kNN model consistently outperforms both ANN and SVR across all four nutrients. The performance advantage of kNN is statistically significant (p < 0.05) in all pairwise comparisons against ANN and SVR. The most dramatic improvement is observed for phosphorus (P), where kNN reduces MAPE from ~7.2% to 3.95%, nearly halving the prediction error. No significant difference was found between SVR and ANN for any nutrient.

Table 3 summarizes the performance of the three machine learning algorithms (ANN, SVR, and kNN) and a traditional statistical method, Partial Least Squares (PLS) regression, for predicting each nutrient element using the R2, RMSE, MAE, and MAPE metrics.

Machine Learning Model Results

Calcium: The best performance was achieved by kNN (R2 = 0.8150, RMSE = 36.59, MAE = 26.17, MAPE = 6.10%), followed by ANN (R2 = 0.7521) and SVR (R2 = 0.6749). The R2 value of 0.815 indicates that kNN explains over 81% of the variance in Ca content, and the low MAPE (6.10%) confirms high predictive accuracy. Ca has a roughly normal, slightly platykurtic distribution. kNN benefits from local similarity in feature space, making it effective when data points cluster naturally. Although SVR is powerful, especially for non-linear structures, the kernel selection or hyperparameter tuning may have been insufficient here. Similarly, ANN may not have reached its full potential because of suboptimal hyperparameter tuning.

Potassium: kNN is again the best model (R2 = 0.7818, RMSE = 164.53, MAE = 113.13, MAPE = 4.48%), outperforming ANN (R2 = 0.6929) and SVR (R2 = 0.5573). The MAPE of 4.48% indicates very good prediction accuracy. ANN ranks second, whereas SVR is considerably weaker. Potassium’s broad distribution (variance: 132,832.67) and high mean may create generalization difficulties for margin-based models such as SVR. kNN may have made more accurate predictions by properly evaluating the neighborhood of high-value examples. Although ANN’s RMSE is high, its low MAPE indicates that, while the absolute error is large for high values, the proportional error is small.

Magnesium: For Mg, all models performed relatively well, reflecting the variable’s narrow distribution and lower variance (553.16). kNN again achieved the best performance (R2 = 0.8349, RMSE = 9.19, MAE = 6.43, MAPE = 4.76%), slightly outperforming ANN (R2 = 0.8142) and SVR (R2 = 0.7781). Stable target variables such as Mg are easier to predict, and kNN excels particularly in cases of low error tolerance.

Phosphorus: For P, kNN also provided the best results (R2 = 0.8723, RMSE = 12.75, MAE = 8.35, MAPE = 3.95%), while ANN and SVR showed lower but comparable performance (R2 = 0.6596 and R2 = 0.6709, respectively). kNN dramatically outperforms ANN and SVR. R2 = 0.8723 is exceptional, explaining nearly 87% of P’s variability. MAPE of 3.95% indicates very high practical accuracy. P’s leptokurtic distribution (kurtosis = 3.67) means that values are tightly clustered around the mean. kNN excels in such dense regions by leveraging proximity. ANN underperformed, possibly due to limited model complexity or insufficient hyperparameter optimization.
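Because the four elements occur at very different concentrations, the RMSE values above are easier to compare once they are expressed relative to each element's mean. The short calculation below does this for the kNN models using only figures reported in the text (the means from the descriptive statistics and the kNN RMSE values). It assumes the RMSE is in the same units as the means (mg/100 g), and phosphorus is omitted because its mean is not stated in this section.

```python
# Means and kNN RMSE values as reported in the text (assumed mg/100 g)
reported = {
    "Ca": {"mean": 360.62, "rmse": 36.59},
    "K": {"mean": 2305.29, "rmse": 164.53},
    "Mg": {"mean": 115.41, "rmse": 9.19},
}

# RMSE as a percentage of the mean concentration
relative_rmse = {
    element: 100.0 * values["rmse"] / values["mean"]
    for element, values in reported.items()
}

for element, pct in relative_rmse.items():
    print(f"{element}: RMSE is {pct:.1f}% of the mean")
```

On this relative scale the three elements fall in a similar range (roughly 7% to 10% of the mean), even though the absolute RMSE for potassium is about 18 times that for magnesium.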

Overall, kNN consistently outperformed both ANN and SVR across all nutrient elements. This indicates that the relationships between explanatory variables and nutrient elements may be highly local and non-linear, conditions under which kNN performs well. ANN generally ranked second, where it performed moderately well, particularly on Mg and K, suggesting its potential for capturing non-linear interactions, albeit with a limited data size. SVR exhibited the weakest performance, likely due to suboptimal kernel and parameter selection, especially for high-variance targets such as Ca and K.
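The point about local, non-linear relationships can be illustrated on synthetic data. In the sketch below, a straight-line fit and a leave-one-out kNN prediction are compared on a quadratic relationship; the data, the choice of `k=2`, and the function names are hypothetical and unrelated to the study's measurements. Note that the linear fit is scored in-sample while kNN is scored leave-one-out, so the comparison is, if anything, tilted in favor of the linear model.

```python
import math

def linear_fit(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return slope, my - slope * mx

def knn_loo_predictions(xs, ys, k):
    """Predict each point from its k nearest neighbors, excluding the point itself."""
    preds = []
    for i, x in enumerate(xs):
        others = [j for j in range(len(xs)) if j != i]
        nearest = sorted(others, key=lambda j: abs(xs[j] - x))[:k]
        preds.append(sum(ys[j] for j in nearest) / k)
    return preds

def rmse(y_true, y_pred):
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

# Synthetic non-linear relationship: y = x^2 on x in [-5, 5]
xs = [i * 0.5 for i in range(-10, 11)]
ys = [x * x for x in xs]

slope, intercept = linear_fit(xs, ys)
linear_rmse = rmse(ys, [slope * x + intercept for x in xs])
knn_rmse = rmse(ys, knn_loo_predictions(xs, ys, k=2))
print(linear_rmse, knn_rmse)
```

The straight line cannot follow the curvature at all (its fitted slope is essentially zero on this symmetric data), while averaging nearby points tracks it closely except at the edges of the range.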

Traditional Statistical Model Results

Calcium: The PLS model performs strongly (R2 = 0.798, MAPE = 6.78%), outperforming ANN and SVR, which confirms its status as a robust baseline for spectral data. However, the kNN model still achieves statistically significant improvements over PLS in all metrics (p < 0.05 via paired t-test on MAPE). This demonstrates that kNN effectively captures both linear and non-linear relationships in the data.

Potassium: PLS performs very strongly, achieving R2 = 0.776 and MAPE = 5.81%, and outperforms ANN and SVR. However, kNN still achieves better performance in all metrics, especially in MAPE (4.48% vs. 5.81%). According to the paired t-test on per-fold MAPE, the difference between kNN and PLS is statistically significant (p = 0.0043).

Magnesium: PLS performs well (R2 = 0.828), slightly better than ANN and SVR. kNN marginally outperforms PLS in all metrics. According to the paired t-test on per-fold MAPE, the difference between kNN and PLS is significant at α = 0.05 (p = 0.0021).

Phosphorus: PLS is highly effective (R2 = 0.861, MAPE = 4.32%) and close to kNN, but kNN still outperforms PLS in all metrics. According to the paired t-test on per-fold MAPE, the difference between kNN and PLS is significant (p = 0.0005).

kNN consistently outperforms PLS in all metrics. The performance advantage of kNN is statistically significant (p < 0.05) in all cases. SVR performs poorly compared to PLS and kNN.

Abdipour et al. [36] reported R2 = 0.861, RMSE = 0.563, and MAE = 0.432 with an ANN model. Guo et al. [37] reported R2 = 0.871 and RMSE = 1.474 with a Support Vector Machine model. Huang et al. [5] reported R2 = 0.856, RMSE = 0.1020, and MAE = 0.0793 with an ANN model. Lan et al. [38] reported R2 = 0.974 and RMSE = 0.258 with an ANN model. Torkashvand et al. [39] reported R2 = 0.850 and RMSE = 0.539 with an ANN model. In this study, the best results for all four elements were obtained with the kNN models: R2 = 0.8150, RMSE = 36.59, MAE = 26.17, and MAPE = 6.10% for calcium; R2 = 0.7818, RMSE = 164.53, MAE = 113.13, and MAPE = 4.48% for potassium; R2 = 0.8349, RMSE = 9.19, MAE = 6.43, and MAPE = 4.76% for magnesium; and R2 = 0.8723, RMSE = 12.75, MAE = 8.35, and MAPE = 3.95% for phosphorus. Because RMSE and MAE depend on the units and scale of the target variable, R2 is the more meaningful basis for comparison across these studies.

Many ML methods are used to solve problems in the field of agriculture [40,41,42]. In this study, three methods with different working principles, namely SVR, ANN, and kNN, were used to make predictions, and their results were compared. kNN was the most successful of the models established. Kumar et al. (2024) highlighted the success of the kNN algorithm in creating recommendation systems for precision agriculture [43].

Although this study focuses on the application of machine learning techniques (kNN, ANN, and SVR), comparing these approaches with traditional chemical analysis methods helps to put the research in context. Traditional methods for nutrient analysis, such as inductively coupled plasma (ICP) and atomic absorption spectroscopy (AAS), are highly accurate but have significant disadvantages, including high costs, long analysis times, and the need to destroy the sample [44]. In contrast, machine-learning-based approaches, as shown in this study, offer a faster and more scalable solution for nutrient prediction [37]. Several recent studies have explored the integration of machine learning with spectroscopic methods such as near-infrared (NIR) and hyperspectral imaging for nutrient prediction and have demonstrated their potential to offer comparable or superior accuracy while being significantly more efficient [45,46]. Furthermore, our study highlights the advantages of machine learning models over simpler statistical approaches. For example, compared to linear regression, machine learning models such as ANN and kNN are better equipped to process complex, high-dimensional data sets and can capture non-linear relationships between features that traditional methods might miss [47,48]. This flexibility and robustness make machine learning an increasingly popular choice for agricultural data analysis, as it allows various variables to be included without strong assumptions about the data structure.

According to the results of the models established for the prediction of nutrient elements, the training and test phase values are very close to each other, which suggests that the models did not overfit during training. It also indicates that the established models can make consistent predictions of nutrient elements in tomatoes. The ability to predict nutrient levels in tomatoes non-destructively using machine learning has important practical implications, especially in precision agriculture. These models can be integrated into automated systems for real-time nutrient analysis and provide agricultural enterprises with accurate and immediate data to optimize fertilization, increase crop yield, and improve quality control [39,49]. In addition, the models can be adapted to other crops, facilitating their use in large-scale agricultural and greenhouse operations, which are increasingly adopting digital tools for monitoring and decision-making [37,47]. Such models can also support sustainability goals by reducing resource wastage and minimizing the need for chemical testing, which is both costly and time-consuming [46,50].

Compared to conventional analytical methods widely used for mineral content determination, such as atomic absorption spectroscopy (AAS) and inductively coupled plasma–optical emission spectroscopy (ICP-OES) [51], the machine-learning-based approach proposed in this study offers several practical advantages. While conventional methods provide highly accurate and reliable quantitative results, they require complex sample preparation and are time-consuming, destructive, and costly. In contrast, the integration of machine learning with non-destructive measurements (e.g., vis–NIR spectroscopy or image-based features) provides rapid and relatively low-cost predictions suitable for in situ or real-time applications. Several previous studies have successfully applied regression models or artificial neural networks to predict chemical or physical properties of tomatoes; however, limited attention has been paid to the prediction of specific mineral elements. The current study addresses this gap by focusing on the prediction of essential minerals such as potassium, calcium, and magnesium and demonstrates that the proposed models can achieve promising predictive performance with minimal preprocessing. This approach may be particularly useful for large-scale agricultural monitoring and decision-making, where efficiency and non-destructiveness are critical [48]. The practical applications of this research are therefore important not only for improving current agricultural practices but also for advancing the transition to more efficient and environmentally friendly agriculture.