1. Introduction

Lentinula edodes is the second most cultivated mushroom species worldwide, with a history of over 800 years of cultivation. It is not only delicious and unique in flavor but also possesses health benefits such as tumor prevention and immunity enhancement [1,2]. In breeding, cultivation, and quality evaluation, the phenotypic features of Lentinula edodes, such as pileus diameter and stipe thickness, are crucial: they are not only the key basis for optimizing production and screening good varieties but also an important reference for consumers to intuitively judge the appearance and freshness of products [3]. Since the beginning of the 21st century, the production of Lentinula edodes has continued to increase. In some years, market demand has exceeded supply, leading to an imbalance between supply and demand [4]. Accurate prediction of the yield of Lentinula edodes fruiting bodies can optimize planting plans and resource allocation, stabilize market supply and demand, and ensure industrial profitability. Therefore, real-time detection of phenotypic features and fresh weight of Lentinula edodes is particularly important in the cultivation process and market regulation.

Traditional methods for measuring the phenotypic features and yield of Lentinula edodes primarily rely on manual measurement, which is cumbersome and inefficient. As with Lentinula edodes, the size and length characteristics of various crops can intuitively reflect their growth status and quality, representing a key aspect of crop phenomics research. With the support of machine vision technology, methods for measuring phenotypic parameters of various crops have been successively developed [5,6,7]. He et al. [8] extracted the edge contours of soybean pods using a Gaussian filter function and Canny edge detector and calculated the length, width, and area of the pods by combining the minimum bounding rectangle method and the maximum inscribed circle method. Lou et al. [9] used a UNet model with an attention mechanism to segment images of broadleaf tree seedlings, combined with a generative adversarial network to restore occluded parts of branches, and extracted phenotypic data such as seedling height, stem diameter, and canopy width. Xu et al. [10] used three digital cameras to acquire images of maize plants and reconstructed 3D point clouds to extract detailed phenotypic parameters such as stem length, branch length, number of branches, and branch angle. Based on measured crop size and length data, crop and fruit yield can also be inferred, providing data support for crop breeding and yield prediction [11,12,13]. Fass et al. [14] used the SAM model to segment tomatoes in images and counted the number of pixels in the tomato regions, successfully predicting seven indicators of tomato yield, firmness, and total soluble solids. However, the above methods for phenotypic feature measurement still suffer from low automation, high requirements for detection environments, and low efficiency, making them unsuitable for direct application to phenotypic feature extraction of Lentinula edodes fruiting bodies.

In recent years, object detection and segmentation models have developed rapidly, facilitating the automated identification of crop types, quantities, and characteristics such as pests and diseases, and injecting new vitality into crop phenomics research [15,16,17,18,19]. Sun et al. [20] used the YOLOv5 model to detect moldy areas in rice grains contaminated by Aspergillus niger, Penicillium citrinum, and Aspergillus griseus, with accuracy rates of 89.26%, 91.15%, and 90.19%, respectively. Ding et al. [21] used MobileNetV2 as the backbone of the DeepLabV3+ model to identify and segment lesions on apple surfaces, addressing issues such as blurred edges and large shape variations. Bai et al. [22] designed the RiceNet network to process RGB images of rice fields collected by unmanned aerial vehicles. RiceNet integrates a feature extractor frontend and a feature decoder module, enabling counting, locating, and sizing of rice plants. Ding et al. [23] improved the YOLOv8 model to enhance its detection capability for small objects and accurately identify downy mildew and powdery mildew in cucumbers. The above models are all optimized for specific crops and have effectively promoted the development of the related crop phenotype detection technologies. However, these models generally have a large parameter count and high computational complexity, which places strict computing power demands on deployment platforms and makes efficient deployment on portable devices such as the Raspberry Pi difficult. This limitation greatly restricts the promotion and application of these technologies in production practice, so the efficiency of phenotype detection struggles to meet practical needs. Therefore, the core of developing a practical detection and yield prediction system for Lentinula edodes fruiting bodies lies in constructing a lightweight object detection and segmentation model that meets the recognition needs of Lentinula edodes fruiting bodies.

YOLOv11 is the latest generation of real-time object detection models developed by Ultralytics, known for its low computational requirements and strong recognition capabilities. Within this family, YOLOv11-Seg is a class of instance segmentation models with a small model size and powerful segmentation performance [24,25]. In this study, a lightweight YOLO-SFCB model was constructed on the basis of the YOLOv11n-Seg model to accurately identify and segment the stipe and pileus of Lentinula edodes in different growth states. Combined with spatial information, the YOLO-SFCB model can measure the stipe height, stipe diameter, pileus thickness, and pileus width of Lentinula edodes fruiting bodies and predict their fresh and dry weight.

2. Materials and Methods

2.1. Dataset Collection

In this study, a self-built dataset was used to train the segmentation model for Lentinula edodes fruiting bodies. From March to June 2024, 56 different batches of Lentinula edodes samples from 40 mushroom sticks were selected as subjects for image-based phenotypic feature collection in Fuping County, Baoding City, Hebei Province. Images were captured using the 12-megapixel rear camera of an iPhone 11, with the image resolution set to 4032 × 3024 pixels and the shooting distance fixed at 15 cm. The shooting angle was kept perpendicular to the long axis of the Lentinula edodes stick to obtain a standard side view for measurement. After emergence, Lentinula edodes fruiting bodies mature in 5–7 days. To ensure that the dataset covers the complete growth stages of Lentinula edodes fruiting bodies from early development to maturation and harvest, images of the front and both sides of the Lentinula edodes were taken daily between 8:00 and 10:00 and between 16:00 and 18:00.

Figure 1 visually illustrates the morphological changes in Lentinula edodes throughout the growth cycle.

During the image acquisition process, vernier calipers were used to measure and record four types of phenotypic features (stipe height, stipe diameter, pileus thickness, and pileus width) for each sample of Lentinula edodes fruiting body. After the fruiting bodies reached maturity, 120 samples were selected from the mushroom shed, and their phenotypic data and fresh weight were recorded. These mature Lentinula edodes were then dried in an oven, and the dry weight was measured using the constant-temperature atmospheric pressure drying method [26].

2.2. Dataset Processing

The four types of phenotypic features of Lentinula edodes can be obtained from side-view images of its fruiting bodies. After excluding frontal images from the collected data, a total of 722 side-view images of Lentinula edodes fruiting bodies were retained. These side-view images were divided into training, validation, and test sets in an 8:1:1 ratio to ensure independence between the datasets [27,28]. To improve the generalization ability of the model, data augmentation was performed on the training, validation, and test sets, including brightness adjustment, rotation, and noise addition. During augmentation, the brightness was randomly adjusted by ±20%, the rotation angle was drawn from the range −45° to +45°, and Gaussian noise with a mean of 0 and a standard deviation of 10 was added. After data augmentation, the training set contained 2304 images, the validation set 292 images, and the test set 292 images.
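The augmentation settings above can be illustrated with a minimal sketch. It assumes 8-bit images held as NumPy arrays; the function names and the uniform sampling of the brightness factor and rotation angle are assumptions made for illustration, not the authors' implementation. Only the brightness and noise steps are applied here, while the rotation angle is merely sampled, since the rotation itself would normally be delegated to an image library.

```python
import numpy as np

def adjust_brightness(img, rng, max_change=0.20):
    """Scale intensities by a random factor in [1 - max_change, 1 + max_change]."""
    factor = rng.uniform(1.0 - max_change, 1.0 + max_change)
    out = img.astype(np.float32) * factor
    return np.clip(out, 0, 255).astype(np.uint8)

def add_gaussian_noise(img, rng, mean=0.0, std=10.0):
    """Add zero-mean Gaussian noise (std in grey levels) and clip to 8 bits."""
    noise = rng.normal(mean, std, size=img.shape)
    out = img.astype(np.float32) + noise
    return np.clip(out, 0, 255).astype(np.uint8)

def sample_rotation_angle(rng, max_angle=45.0):
    """Draw a rotation angle in degrees from [-max_angle, +max_angle]."""
    return float(rng.uniform(-max_angle, max_angle))

rng = np.random.default_rng(0)
image = np.full((64, 64, 3), 128, dtype=np.uint8)  # synthetic grey test image

bright = adjust_brightness(image, rng)
noisy = add_gaussian_noise(image, rng)
angle = sample_rotation_angle(rng)
print(bright[0, 0], noisy.mean(), angle)
```

When an image is rotated, its polygon annotations have to be transformed with the same angle so that the masks stay aligned with the fruiting bodies.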

Image annotations were created using Labelme, with polygon masks outlining the stipe and pileus of each Lentinula edodes. The resulting JSON files were converted to the TXT format compatible with YOLOv11-Seg. The data augmentation and annotation process is shown in Figure 2.
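The JSON-to-TXT conversion can be sketched as follows. The sketch assumes the usual Labelme fields (`shapes`, `label`, `points`, `imageWidth`, `imageHeight`) and the YOLO segmentation line layout of a class id followed by normalized polygon coordinates. The label names `stipe` and `pileus` and their class ids are hypothetical, as the source does not state them.

```python
import json

# Hypothetical label names and class ids; the source does not list them.
CLASS_IDS = {"stipe": 0, "pileus": 1}

def labelme_to_yolo_seg(labelme_json: str) -> str:
    """Convert one Labelme annotation (as a JSON string) to YOLO-seg text lines."""
    data = json.loads(labelme_json)
    width, height = data["imageWidth"], data["imageHeight"]
    lines = []
    for shape in data["shapes"]:
        if shape.get("shape_type", "polygon") != "polygon":
            continue  # only polygon masks are converted
        coords = []
        for x, y in shape["points"]:
            coords.append(f"{x / width:.6f}")   # x normalized by image width
            coords.append(f"{y / height:.6f}")  # y normalized by image height
        lines.append(" ".join([str(CLASS_IDS[shape["label"]])] + coords))
    return "\n".join(lines)

example = json.dumps({
    "imageWidth": 640,
    "imageHeight": 480,
    "shapes": [{
        "label": "pileus",
        "shape_type": "polygon",
        "points": [[160, 120], [480, 120], [320, 360]],
    }],
})
converted = labelme_to_yolo_seg(example)
print(converted)
```

Each output line describes one instance, so an image with one stipe and one pileus yields two lines in its TXT file.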

2.3. Construction of the YOLO-SFCB Model

2.3.1. Introduction of the ShuffleNetV2 Network into the Backbone

Cross stage partial darknet (CSP Darknet) is the backbone network of YOLOv11-Seg; it improves the efficiency and accuracy of feature extraction by introducing Cross Stage Partial Networks (CSPNet) into the traditional Darknet architecture [29]. However, the stacking of multiple convolutional layers and C3k2 modules increases the complexity of the model. Lentinula edodes cultivation is intensive, and the computing resources of portable devices are limited, so a lightweight model is required. ShuffleNetV2 maintains rich feature expression ability while significantly reducing the number of parameters through its channel shuffle operation, making it suitable for extracting the texture features and overall morphological features of Lentinula edodes. The structure of the ShuffleNetV2 unit is shown in Figure 3.

ShuffleNetV2 is an improved version of ShuffleNet that introduces a unique channel shuffle operation to enhance inter-channel information exchange and fusion, making it more accurate than other lightweight networks of similar complexity [30]. By rearranging the channel order of the original feature maps, the channel shuffle operation breaks information isolation between channels, thereby strengthening the model’s representational capacity and generalization ability.

Figure 3 illustrates the unit structure of ShuffleNetV2. It can be observed that the unit module divides the feature channels into two parts: one part remains unchanged, while the other undergoes convolutional and depthwise convolution operations. The two feature channels are then merged, followed by a channel shuffle operation to enhance inter-channel feature communication. In the downsampling module, depthwise convolution and standard convolution are applied to the unchanged feature channels based on the unit structure. Owing to its lightweight network design, ShuffleNetV2 significantly reduces model complexity while maintaining baseline performance.
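The channel shuffle step can be written in a few lines. The sketch below uses NumPy on an array laid out as (batch, channels, height, width) and assumes two groups, matching the two branches of the unit. The function name is chosen for illustration, and the convolutions of the unit are not shown.

```python
import numpy as np

def channel_shuffle(x, groups=2):
    """Interleave the channels of the groups: reshape, swap axes, flatten back."""
    n, c, h, w = x.shape
    assert c % groups == 0, "channel count must be divisible by the group count"
    x = x.reshape(n, groups, c // groups, h, w)
    x = x.transpose(0, 2, 1, 3, 4)  # swap the group axis and the per-group axis
    return x.reshape(n, c, h, w)

# Eight channels labelled 0..7; channels 0-3 and 4-7 stand for the two branches.
x = np.arange(8).reshape(1, 8, 1, 1)
order = channel_shuffle(x)[0, :, 0, 0].tolist()
print(order)  # [0, 4, 1, 5, 2, 6, 3, 7]
```

After the shuffle, neighbouring channels come from different branches, so the next unit's channel split mixes information from both.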

2.3.2. Incorporation of C3k2-FasterBlock Lightweight Feature Extractor into Neck

The C3k2 module of YOLOv11-Seg is responsible for important feature extraction and feature fusion in the network, but it contains many convolutional layers, so its computational burden remains relatively large. To further lighten the model, the Bottleneck module in the C3k module was replaced by the FasterBlock module. The structure of the new C3k2-FasterBlock lightweight feature extraction module is shown in Figure 4.

The FasterBlock module serves as the core building unit of the FasterNet network. It integrates Partial Convolution (PConv) and Pointwise Convolution (PWConv) to achieve efficient feature extraction and information aggregation [31]. PConv, a distinctive component within the FasterBlock module, enhances computational efficiency by minimizing redundant computation and memory access. For a feature map with input and output dimensions of (c, h, w) and a convolution kernel of size k × k, the floating point operations (FLOPs) and memory access cost (MAC) of a standard convolution operation are given in Equation (1), while the FLOPs and MAC for PConv are provided in Equation (2).

Here, cp represents the number of convolution channels of PConv. When cp = c/2, the FLOPs of PConv are only 1/4 of those in standard convolution (Conv), and the MAC is reduced to 1/2. The FasterBlock module connects two PWConv after the PConv and employs residual connections at the end to add the input feature map to the output feature map. This design not only reduces computational load but also enhances training stability. The introduction of the C3k2-FasterBlock module forms a collaborative optimization with the ShuffleNetV2 backbone network. The partial convolution design of this module is aimed at addressing the computational bottleneck of the neck network, while maintaining multi-scale feature fusion capability and further reducing computational overhead.
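Equations (1) and (2) are not reproduced in this text. As a reference point, the block below writes out the expressions commonly used for these quantities, with the symbols c, h, w, k, and c_p as defined above. Treat it as a reconstruction under that assumption, not as the authors' exact notation.

```latex
\begin{align}
\mathrm{FLOPs}_{\mathrm{Conv}} &= h \times w \times k^{2} \times c^{2}, &
\mathrm{MAC}_{\mathrm{Conv}} &= h \times w \times 2c + k^{2} \times c^{2} \approx h \times w \times 2c \\
\mathrm{FLOPs}_{\mathrm{PConv}} &= h \times w \times k^{2} \times c_{p}^{2}, &
\mathrm{MAC}_{\mathrm{PConv}} &= h \times w \times 2c_{p} + k^{2} \times c_{p}^{2} \approx h \times w \times 2c_{p}
\end{align}
```

Under these expressions the FLOPs ratio is (c_p/c)^2 and the MAC ratio is approximately c_p/c, which gives 1/4 and 1/2 for c_p = c/2, in agreement with the figures stated above.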

2.3.3. CBAM Attention Mechanism Integration in Front of Small Object Detection Head

After the aforementioned lightweight improvements, the model has achieved reductions in both size and computational requirements. However, the original model exhibits insufficient recognition capability for small-sized Lentinula edodes fruiting bodies. To address this, a CBAM attention module is incorporated before the small object detection head in the improved model, enhancing the new model’s ability to detect and segment small Lentinula edodes. The structure of the CBAM attention mechanism is shown in Figure 5.

The convolutional block attention module (CBAM) is a dual attention structure that integrates a channel attention module (CAM) and a spatial attention module (SAM), enhancing the model’s ability to extract salient features [32]. CAM performs global max pooling and global average pooling on each channel to generate channel attention weights. Multiplying each input channel by its channel attention weight dynamically highlights the feature channels that matter most for the task. SAM generates spatial attention weights by applying max pooling and average pooling to the input feature map along the channel dimension. Weighting the original feature map with the spatial attention weights highlights important image regions and reduces the influence of unimportant ones.

By sequentially connecting CAM and SAM, the CBAM attention mechanism effectively incorporates both channel-wise and spatial feature representations. The dual attention of CBAM can optimize model performance from both feature channels and spatial position dimensions. Channel attention enhances the key features that distinguish Lentinula edodes from complex backgrounds, while spatial attention can effectively focus on specific areas where small volume Lentinula edodes are located.

In summary, the structure of the Lentinula edodes instance segmentation model developed in this study, based on YOLOv11-Seg, is illustrated in Figure 6. The improved model has been named YOLO-SFCB.

2.4. Model Evaluation Metrics

The evaluation of model complexity incorporates three primary metrics: parameter count (Params), FLOPs, and inference time. Lower Params and FLOPs signify a more lightweight model that requires less computational power. The inference time reflects the recognition and segmentation speed of the model. It was measured on an NVIDIA RTX 4060 Ti 8 GB graphics card: after 10 warm-up iterations, inference was run on 500 test images and the average time per image was calculated. The batch size was 1 to simulate real-time application scenarios.
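The timing protocol described above (warm-up runs, then an average over single-image calls) can be sketched as a small harness. The `dummy_infer` function is a hypothetical stand-in for the segmentation model, and the harness itself is an illustration, not the authors' benchmarking code.

```python
import time

def average_inference_ms(infer, inputs, warmup=10):
    """Run `warmup` untimed calls, then time one call per input (batch size 1)."""
    for item in inputs[:warmup]:
        infer(item)  # warm-up iterations are excluded from the timing
    start = time.perf_counter()
    for item in inputs:
        infer(item)
    elapsed = time.perf_counter() - start
    return elapsed * 1000.0 / len(inputs)

def dummy_infer(image):
    """Hypothetical placeholder for a model forward pass."""
    return sum(image)

images = [[1, 2, 3]] * 500  # 500 synthetic "images"
avg_ms = average_inference_ms(dummy_infer, images)
fps = 1000.0 / avg_ms  # frames per second implied by the average latency
print(f"{avg_ms:.6f} ms per image, {fps:.1f} FPS")
```

When the model runs on a GPU, the device should be synchronized before each clock reading (for example with `torch.cuda.synchronize()` in PyTorch); otherwise asynchronous kernel launches make the measured time too short.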

Model performance evaluation includes Precision, Recall, and mean average precision (mAP). Precision and Recall represent the detection accuracy and recognition ability of the model, respectively, while mAP comprehensively reflects the overall performance of target recognition and segmentation. Their calculation methods are shown in Equations (3)–(5).

In these equations, TP (true positive) is the number of positive samples correctly detected; FP (false positive) is the number of negative samples incorrectly labeled as positive; FN (false negative) is the number of positive samples that were not detected; N is the total number of single-target samples; j is the index of the single-target sample; and L is the number of targets.
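As a worked illustration of these metrics, the sketch below computes Precision and Recall from TP, FP, and FN counts and an average precision as the area under a precision-recall curve. The all-point interpolation used for AP is one common convention and is an assumption here; evaluation toolkits differ in how they sample the curve. All numbers are made up for the example.

```python
def precision(tp, fp):
    """Share of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0

def recall(tp, fn):
    """Share of actual positives that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0

def average_precision(recalls, precisions):
    """Area under the PR curve with a monotone precision envelope.

    `recalls` must be sorted in ascending order.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])  # make precision non-increasing in recall
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))

def mean_average_precision(ap_per_class):
    """mAP is the mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)

p_val = precision(tp=90, fp=10)                     # 0.9
r_val = recall(tp=90, fn=30)                        # 0.75
ap = average_precision([0.5, 1.0], [1.0, 0.5])      # 0.75
m_ap = mean_average_precision([ap, 0.85])           # 0.8
print(p_val, r_val, ap, m_ap)
```

mAP50 uses an IoU threshold of 0.5 to decide which predictions count as TP, while mAP50-95 averages the result over thresholds from 0.5 to 0.95.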

2.5. The Relationship Between the Distance of the Object and the Pixel-to-Physical Length Ratio

Using a fixed object-distance method to capture images of Lentinula edodes fruiting bodies and calculate phenotypic features is straightforward in process, but it imposes high requirements for imaging conditions, which can also limit measurement efficiency. Calculating the pixel-to-physical length ratio of the camera at different shooting distances can reduce the difficulty of image acquisition and improve measurement efficiency.

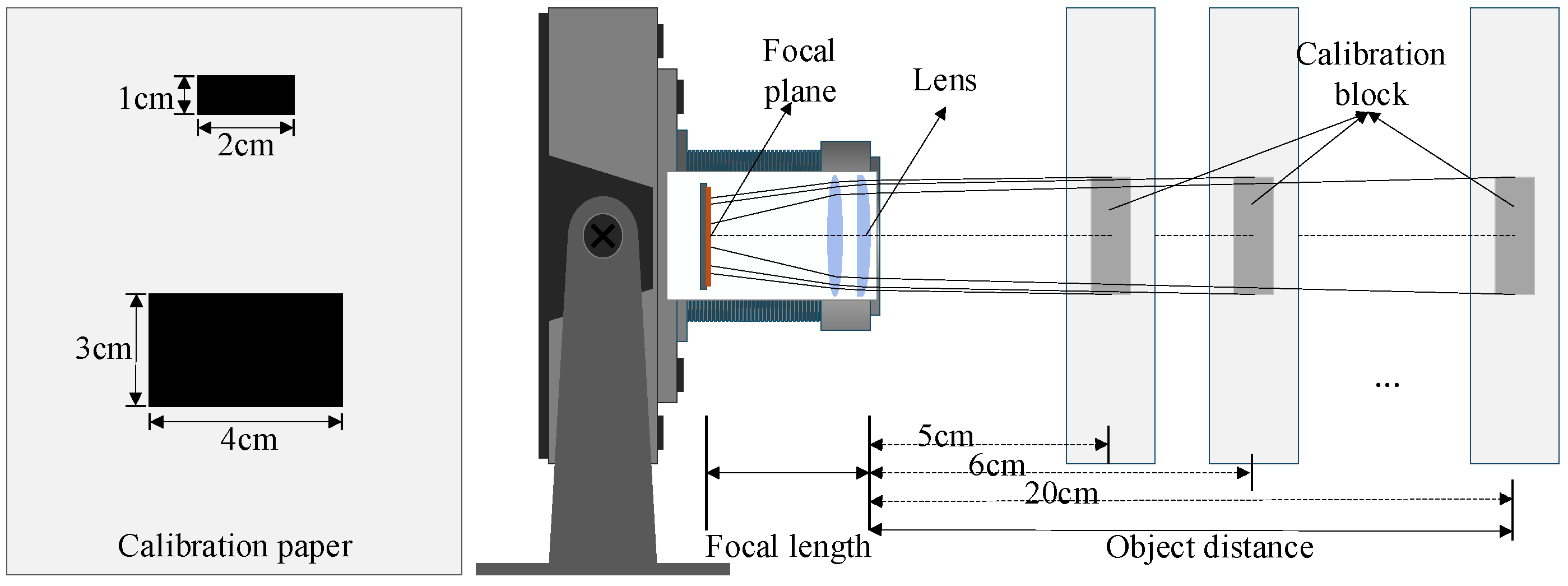

To study the relationship between the pixel-to-physical length ratio and shooting distance, the standard block method was employed to determine the pixel-to-physical length ratio of an industrial camera at distances ranging from 5 cm to 20 cm. The experiment used an industrial camera equipped with a 2.8 mm ultra-wide-angle lens (aperture F1.0–2.6) with an integrated real-time digital distortion correction function, which ensures reliable geometric accuracy of the image data under the complex lighting conditions of the Lentinula edodes cultivation environment. The standard block and imaging setup are illustrated in Figure 7. First, images of the standard block were captured using the industrial camera at 1 cm intervals from 5 cm to 20 cm. The resolution of the captured images was then reduced to 640 × 640 pixels, and the actual ratio between pixels and physical length was calculated for each distance. Finally, a regression equation was fitted with shooting distance as the independent variable and the pixel ratio as the dependent variable.

After capturing images of the Lentinula edodes fruiting bodies, this fitted equation can be used to calculate the phenotypic parameters of Lentinula edodes at various shooting distances.
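The calibration step can be illustrated with an ordinary least-squares line fit. The sketch assumes a straight-line model, which is the form reported in the results section, and uses synthetic placeholder ratios because the measured calibration values are not listed in the text. The function names and the example coefficients are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def r_squared(xs, ys, slope, intercept):
    """Coefficient of determination of the fitted line."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot

# Shooting distances at 1 cm intervals from 5 cm to 20 cm, as in the protocol.
distances_cm = list(range(5, 21))
# Synthetic placeholder ratios (cm per pixel), NOT measurements from the study.
ratios = [0.002 * d + 0.001 for d in distances_cm]

slope, intercept = fit_line(distances_cm, ratios)
r2 = r_squared(distances_cm, ratios, slope, intercept)

def pixels_to_cm(pixel_length, distance_cm):
    """Convert a length in pixels to centimeters at a given shooting distance."""
    return pixel_length * (slope * distance_cm + intercept)

print(slope, intercept, r2, pixels_to_cm(100, 10))
```

With the fitted line in hand, any pixel length measured on a 640 × 640 image can be converted to a physical length once the shooting distance is known.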

2.6. Extraction Method of the Phenotypic Features of the Fruiting Body of Lentinula edodes

The growth angles of Lentinula edodes vary significantly, leading to considerable angular differences among the stipe, pileus, and the cultivation stick. This requires a measurement method capable of adapting to diverse angle variations. To address this, the OpenCV library was used to process the segmented images of Lentinula edodes and calculate the phenotypic parameters of the mushroom bodies [33]. The procedures for measuring the stipe height, stipe diameter, pileus thickness, and pileus width are as follows:

(1) The YOLO-SFCB model was employed to identify and segment the stipe and pileus of Lentinula edodes in the images.

(2) OpenCV was used to extract the minimum-area rotated bounding rectangle of the stipe and pileus regions to mitigate measurement errors caused by variations in the growth angle of Lentinula edodes.

(3) Direction determination: For the pileus region, the longer side of the minimum rotated bounding rectangle is taken as the pileus width direction, and the shorter side as the thickness direction. For the stipe region, the direction parallel to the longer side of the pileus bounding rectangle is used as the stipe diameter direction, while the direction with the larger angular deviation relative to the stick is considered the stipe height direction.

Measurement location: the pileus thickness is measured as the length across the mask region at the center point of the rotated bounding rectangle along the thickness direction. The stipe diameter is measured similarly at the center location along the diameter direction. The pileus width and stipe height are taken directly as the side lengths of the rotated bounding rectangle in their respective directions, as shown in Figure 8.

(4) The number of pixels corresponding to the four phenotypic features is calculated, and the actual physical dimensions—stipe height, stipe diameter, pileus thickness, and pileus width—are derived based on the physical length per pixel.
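Step (2) of the procedure can be illustrated without any dependency. OpenCV provides the minimum-area rotated rectangle through `cv2.minAreaRect`. The sketch below re-implements the same idea in plain Python (convex hull, then one candidate rectangle per hull edge) so that it is self-contained. It is an illustration, not the authors' code, and the pixel-to-millimeter factor is a hypothetical value.

```python
import math

def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def min_area_rect(points):
    """Return (long_side, short_side, long_side_angle_deg) of the min-area rectangle.

    The minimum-area rectangle shares a side direction with some hull edge,
    so every edge direction is tried and the smallest rectangle is kept.
    """
    hull = convex_hull(points)
    best = None
    for i in range(len(hull)):
        (x1, y1), (x2, y2) = hull[i], hull[(i + 1) % len(hull)]
        theta = math.atan2(y2 - y1, x2 - x1)
        c, s = math.cos(theta), math.sin(theta)
        us = [x * c + y * s for x, y in hull]    # extent along the edge
        vs = [-x * s + y * c for x, y in hull]   # extent across the edge
        w, h = max(us) - min(us), max(vs) - min(vs)
        if best is None or w * h < best[0]:
            angle = theta if w >= h else theta + math.pi / 2
            best = (w * h, max(w, h), min(w, h), math.degrees(angle) % 180.0)
    return best[1], best[2], best[3]

def rotate(p, deg):
    a = math.radians(deg)
    return (p[0] * math.cos(a) - p[1] * math.sin(a),
            p[0] * math.sin(a) + p[1] * math.cos(a))

# Synthetic mask outline: a 40 x 10 pixel region tilted by 30 degrees,
# plus one interior point that the hull discards.
outline = [rotate(p, 30) for p in [(0, 0), (40, 0), (40, 10), (0, 10), (20, 5)]]
long_px, short_px, angle_deg = min_area_rect(outline)

MM_PER_PIXEL = 0.5  # hypothetical ratio taken from the distance calibration
print(long_px * MM_PER_PIXEL, short_px * MM_PER_PIXEL, angle_deg)
```

For the pileus, the long side would correspond to the pileus width. The thickness and stipe diameter in the procedure are read across the mask at the rectangle center, which this sketch does not cover.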

2.7. Prediction Method of Lentinula edodes Fruiting Body Yield

After obtaining the measurements of the four phenotypic features of Lentinula edodes fruiting bodies, along with fresh weight and dry weight data, the relationship between phenotypic parameters and yield can be investigated, providing support for yield prediction in Lentinula edodes. The yield prediction dataset consists of 120 samples containing measured phenotypic parameters and yield values of Lentinula edodes. To construct the dataset, all samples were first sorted by weight from low to high. Then, 2 out of every 10 consecutive samples were randomly selected for the test set, while the remaining 8 were assigned to the training set, ensuring that both sets evenly cover the complete yield prediction interval. The final training set contains 96 samples, and the test set contains 24 samples [34].
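The sorted-block split described above can be sketched in a few lines. The weights below are synthetic placeholders, and the function name and fixed random seed are assumptions for illustration.

```python
import random

def split_by_sorted_blocks(weights, block=10, n_test=2, seed=0):
    """Sort samples by weight, then draw n_test test samples from every block."""
    rng = random.Random(seed)
    order = sorted(range(len(weights)), key=lambda i: weights[i])
    train, test = [], []
    for start in range(0, len(order), block):
        chunk = order[start:start + block]
        picked = set(rng.sample(chunk, min(n_test, len(chunk))))
        for idx in chunk:
            (test if idx in picked else train).append(idx)
    return train, test

# 120 synthetic fresh weights in grams, NOT data from the study.
weights = [5.0 + 0.3 * i for i in range(120)]
train_idx, test_idx = split_by_sorted_blocks(weights)
print(len(train_idx), len(test_idx))  # 96 24
```

Because every block of ten consecutive weights contributes exactly two test samples, light and heavy fruiting bodies are represented in both sets.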

To explore the mathematical relationship between phenotypic parameters and yield, pileus width, stipe diameter, and total height of Lentinula edodes were used as inputs, while fresh weight and dry weight were set as outputs. Yield simulation experiments were conducted using the least squares method [35], BP neural network (back propagation neural network) [36], and random forest [37], respectively.

3. Results

3.1. Experimental Platform and Model Training Parameters

Under the Windows 11 operating system, the YOLOv11-Seg model and the improved model were each trained in a single deterministic run, without pretrained weights or gradient optimization. The hardware consisted of an Intel(R) Core(TM) i5-12600KF @ 3.60 GHz processor, 32 GB RAM, and an NVIDIA RTX 4060 Ti 8 GB graphics card. The software environment was CUDA (compute unified device architecture) version 11.7 with the PyTorch 2.0.1 deep learning framework, run in PyCharm 2024.1.6 with Python 3.10. Table 1 shows the hyperparameters used during model training [38].

3.2. Comparison of the Effects of Different Attention Mechanisms

The attention mechanism mimics the human ability to filter irrelevant information and focus on key features, thereby enhancing the model’s ability to capture important characteristics. To evaluate the impact of different attention mechanisms on the performance of the YOLOv11-Seg model, several attention modules, including SE (squeeze and excitation attention) [39], ECA (efficient channel attention) [40], CA (coordinate attention) [41], EMA (expectation maximization attention) [42], GAM (global attention mechanism) [43], and the CBAM mechanism used in this study, were incorporated ahead of the small-object detection head. A comparative analysis of their performance improvements is presented in Table 2.

Table 2 shows that pure channel attention improves the model less than mixed attention does. This is because the Lentinula edodes fruiting bodies are always located at the center of the image during shooting, which fixes the target position and allows the spatial attention component of mixed attention to play a role. Furthermore, after adding CBAM attention, the model’s mAP50-95 reached 82.0%, surpassing that of GAM attention. This indicates that the sequential integration of channel attention and spatial attention is better suited to the fruiting body detection and segmentation task in this study.

3.3. Ablation Experiment of YOLO-SFCB Model

To verify the effect of the proposed improvements, ablation experiments were carried out on the three modifications, and the effects of the ShuffleNetV2 lightweight network, the C3k2-FasterBlock feature extraction module, and CBAM attention on the original model’s recognition and segmentation of Lentinula edodes were analyzed. The results of the ablation experiment are shown in Table 3.

It can be observed that after incorporating the ShuffleNetV2 network into the Backbone section, the model’s Params and FLOPs decreased by 29% and 23%, respectively, and mAP50-95 decreased to 77.7%. This indicates that the ShuffleNetV2 network can effectively reduce the complexity of the YOLOv11-Seg model, but at the cost of sacrificing some model performance.

Replacing the C3k2 module in the original model’s Neck section with the C3k2-FasterBlock module resulted in a further reduction in Params and FLOPs, along with improvements in both Precision and Recall. This demonstrates that the PConv module can reduce computational load while slightly enhancing model performance.

Introducing the CBAM attention mechanism before the small target detection head led to a 0.7% increase in Precision and a 1.3% improvement in Recall. This suggests that CBAM attention, through its dual mechanism, enhances the model’s focus on important feature channels and critical spatial regions, thereby improving its ability to identify and segment Lentinula edodes fruiting bodies.

Compared with the original model, the improved YOLO-SFCB model reduces Params and FLOPs by 29% and 25%, respectively, shortens inference time by 9.8%, and increases Precision and Recall by 1% and 1.3%, achieving an mAP50-95 of 80.5%. All key performance indicators show a noticeable improvement.

To more intuitively demonstrate YOLO-SFCB’s improved performance in identifying and segmenting Lentinula edodes fruiting bodies, Grad-CAM (gradient weighted class activation mapping) was employed for visual evaluation. Attention heatmaps of YOLOv11-Seg and YOLO-SFCB generated by Grad-CAM are shown in Figure 9. The figure displays four Lentinula edodes fruiting bodies in different growth stages. Warm-colored regions indicate areas of greater model attention, with darker colors representing a higher contribution of the corresponding regions to the model’s decision.

By comparing Figure 9a and Figure 9c, it is evident that YOLO-SFCB demonstrates stronger recognition capability for Lentinula edodes in early growth stages, with higher confidence levels. This indicates that incorporating the CBAM attention mechanism before the small object detection head effectively enhances the model’s ability to recognize small Lentinula edodes. Notably, while YOLOv11n-Seg encounters misidentification issues in segmentation tasks, YOLO-SFCB effectively reduces such interference while maintaining high recognition accuracy.

Observation of the heatmaps in Figure 9b,d reveals that YOLOv11-Seg is relatively sensitive to background interference, resulting in lower confidence and misidentification problems. In contrast, the improved YOLO-SFCB model significantly enhances its ability to filter out background disturbances through the dual optimization of PConv and CBAM attention, showing stronger focus on the Lentinula edodes fruiting bodies.

The combined results from ablation experiments and heatmap analysis indicate that eliminating irrelevant feature channels while strengthening the model’s spatial attention capability contributes to improved segmentation performance in Lentinula edodes recognition. Coupled with the lightweight efficiency of the ShuffleNetV2 architecture, these improvements make YOLO-SFCB particularly suitable for Lentinula edodes recognition and segmentation tasks under low-computation conditions.

3.4. Performance Comparison with Different Segmentation Models

To further explore the recognition and segmentation performance of the improved YOLO-SFCB model on the fruiting body of Lentinula edodes, the classical two-stage instance segmentation model Mask R-CNN (mask region-based convolutional neural network), the single-stage segmentation model YOLACT (you only look at coefficients), and the previous YOLO segmentation models were selected for performance comparison. To ensure fairness in comparison, all models were trained and evaluated under identical training data, hardware platforms, and hyperparameter settings. The segmentation effect is shown in Figure 10, and the performance comparison results are shown in Figure 11.

Figure 10 indicates that all models perform well in segmenting Lentinula edodes fruiting bodies, accurately capturing their overall contours. However, Figure 11 reveals considerable differences in computational complexity: Mask R-CNN and YOLACT exhibit FLOPs of 239.3 G and 89.7 G, respectively, with large model sizes, which makes them unsuitable for deployment on mobile platforms. In contrast, the YOLO series models show significantly lower FLOPs and model sizes. This advantage stems from their single-stage architecture, which unifies detection and segmentation, along with a lightweight design that effectively reduces computational costs. As a result, the YOLO models achieve notably higher inference speeds.

Furthermore, the volume variation in Lentinula edodes at different growth stages influences recognition performance: models identify larger Lentinula edodes better than smaller ones. The pileus is easier to recognize due to its distinct color contrast with the background, whereas the stipe, being similar in color to the substrate, poses greater recognition challenges. The mAP50 values for the pileus and stipe across different growth stages and models in Figure 11 indicate that the recognition accuracy of Lentinula edodes in the early growth stage is usually lower than that in the growth and maturation stages. The overall performance of Mask R-CNN and YOLACT in the recognition task of Lentinula edodes is not as good as that of the YOLO series models, while the YOLO-SFCB model enhances its feature extraction ability for Lentinula edodes by introducing the CBAM attention mechanism, thus exhibiting better adaptability than the other comparison models in this scenario.

Compared to other models, the YOLO-SFCB model proposed in this study requires the least computation and has the smallest model size, achieving 58.26 frames per second (FPS), higher than the original YOLOv11n-Seg model. In addition, YOLO-SFCB achieves average mAP50 values of 97.5% for the Lentinula edodes stipe and 98.4% for the pileus across different growth stages, surpassing all other segmentation models. These results demonstrate that the YOLO-SFCB model offers a more balanced performance, delivering excellent segmentation accuracy for Lentinula edodes identification while maintaining low computational requirements suitable for deployment on lightweight devices.

3.5. Model Deployment Experiment

The lightweight YOLO-SFCB model is capable of recognizing and segmenting Lentinula edodes fruiting bodies on low-computing-power platforms. To evaluate the detection model’s operational performance, it was deployed on a Raspberry Pi 5. The Raspberry Pi 5 is compact and cost-effective, equipped with a 64-bit quad-core ARM Cortex-A76 processor running at 2.4 GHz and a VideoCore VII GPU operating at 800 MHz, delivering a 2 to 3 times performance improvement over the previous generation. Testing was conducted using the 8 GB memory version of the device, with a rated power of 25 W, as shown in Figure 12.

To evaluate and compare the inference efficiency of the YOLOv11n-Seg and YOLO-SFCB models deployed on the Raspberry Pi 5, 50 images of Lentinula edodes fruiting bodies were randomly selected as the test set. Both models were converted to ONNX format, and segmentation inference was run on each image using CPU processing. The average inference time per image was calculated for each model to quantitatively assess its computational performance. YOLOv11n-Seg required an average of 308 ms per image, while the YOLO-SFCB model reduced this time to 236 ms, a 23.5% decrease compared to the original model. This experiment demonstrates that the lightweight improvements of the YOLO-SFCB model are effective, making it more suitable for deployment on mobile and edge computing devices.

3.6. Pixel-Physical Length Ratio Fitting Results

Images of standard blocks were captured with an industrial camera, and the pixel-physical length ratio was calculated at object distances of 5–20 cm. Repeated experiments showed that the physical length represented by a single pixel increases linearly with object distance. The fitted relationship is shown in Equation (6).

In the equation, OD represents the object distance, and PD represents the actual physical length represented by a single pixel, with units measured in centimeters. The coefficient of determination (R²) of the fit reached 0.998. In randomly selected measurements of block length and width at distances of 5–20 cm, the values calculated with Equation (6) differed from the actual block dimensions by less than 0.5 mm.
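A linear calibration of this kind can be sketched with an ordinary least squares fit. The calibration values below are hypothetical and do not reproduce the coefficients of Equation (6); `fit_line` and `pixels_to_cm` are illustrative names.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit y = slope * x + intercept, plus R^2."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return slope, intercept, 1.0 - ss_res / ss_tot


def pixels_to_cm(n_pixels, object_distance_cm, slope, intercept):
    """Convert a pixel length to centimeters using the fitted ratio."""
    return n_pixels * (slope * object_distance_cm + intercept)


# Hypothetical calibration data: object distance (cm) -> cm per pixel.
distances_cm = [5.0, 10.0, 15.0, 20.0]
cm_per_pixel = [0.0030, 0.0059, 0.0091, 0.0120]

slope, intercept, r2 = fit_line(distances_cm, cm_per_pixel)
length_cm = pixels_to_cm(500, 12.0, slope, intercept)
print(f"R^2 = {r2:.4f}, 500 px at 12 cm is about {length_cm:.2f} cm")
```

Once the slope and intercept are known, any pixel length measured at a known object distance can be converted to a physical length with a single multiplication.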

3.7. Effect Analysis of Phenotypic Feature Extraction of Lentinula edodes Fruiting Bodies

An additional 100 samples of Lentinula edodes fruiting bodies were photographed and measured for phenotype parameters. Four phenotype parameters were measured using the method of this study and compared with the values measured with a vernier caliper. The measurement errors of each phenotype parameter were then calculated separately, and the results are shown in Figure 13.

As Figure 13 shows, the four phenotype parameters correlate strongly overall with the values measured by the vernier caliper. The R² values of stipe diameter, stipe height, pileus width, and pileus thickness reached 0.81, 0.95, 0.99, and 0.96, respectively, indicating good consistency between the image-based and manual measurements. The R² for stipe diameter is somewhat lower because its range of values is small, so even small measurement differences noticeably reduce R².
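The effect of a narrow value range on R² can be illustrated numerically. The sketch below assumes R² is computed as the squared Pearson correlation between the two measurement series, which may differ from the authors' exact definition, and it uses hypothetical value ranges with an identical alternating error of ±0.5 mm.

```python
def r_squared(xs, ys):
    """Squared Pearson correlation between two measurement series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy ** 2 / (sxx * syy)


def linspace(start, stop, count):
    """Evenly spaced values from start to stop, inclusive."""
    step = (stop - start) / (count - 1)
    return [start + step * i for i in range(count)]


def with_error(values, amplitude=0.5):
    """Add the same alternating +/- amplitude error to a series."""
    return [v + amplitude * (1 if i % 2 == 0 else -1)
            for i, v in enumerate(values)]


# Hypothetical ranges in mm: a narrow trait and a wide trait.
narrow = linspace(8.0, 12.0, 20)
wide = linspace(20.0, 80.0, 20)

r2_narrow = r_squared(narrow, with_error(narrow))
r2_wide = r_squared(wide, with_error(wide))
print(f"narrow range R^2 = {r2_narrow:.3f}, wide range R^2 = {r2_wide:.3f}")
```

The measurement error is the same in both cases, yet the narrow series yields a clearly lower R², because the error is large relative to the spread of the true values.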

Manual measurement with a vernier caliper mainly introduces two types of error. The first is operational error: because damage to the Lentinula edodes body must be avoided, the applied force and the reading are difficult to standardize. The second is morphological adaptability error: the natural bending and irregular shape of the parts of the fruiting body make it impossible to unify the measurement angle and position. These errors affect the measurement of different traits to varying degrees. According to the Bland–Altman analysis, the average residuals for stipe diameter and pileus thickness are greater than 0, at 0.12 mm and 0.10 mm, respectively. The reason is that the vernier caliper cannot press against the Lentinula edodes body, which introduces an overall bias in the manual measurement values. The average residuals for stipe height and pileus width are −0.35 mm and −0.10 mm, respectively, meaning that the image-based values are higher than those measured with the vernier caliper. This suggests that the measurement angle determined by the minimum rotated bounding rectangle is more accurate, avoiding the difficulty of determining the angle manually.

Overall, the magnitude of the average residual of the image-based phenotypic parameter measurements of Lentinula edodes fruiting bodies does not exceed 0.35 mm, with a standard deviation of less than 1.4 mm. The absolute difference does not increase with physical size, indicating that image-based measurement can accurately determine the phenotypic parameters of Lentinula edodes fruiting bodies.
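The quantities reported by a Bland–Altman analysis (mean residual, standard deviation, and limits of agreement) can be computed as in the following sketch. The sample values are hypothetical, and taking the residual as caliper value minus image value is an assumption inferred from the signs discussed in this section.

```python
from statistics import mean, stdev


def bland_altman(reference, method):
    """Return the mean residual, its standard deviation, and the
    95% limits of agreement (mean +/- 1.96 * SD)."""
    residuals = [r - m for r, m in zip(reference, method)]
    bias = mean(residuals)
    sd = stdev(residuals)
    return bias, sd, (bias - 1.96 * sd, bias + 1.96 * sd)


# Hypothetical paired measurements in mm.
caliper_mm = [10.2, 11.5, 9.8, 12.1, 10.9]
image_mm = [10.0, 11.4, 9.9, 11.9, 10.8]

bias, sd, (lower, upper) = bland_altman(caliper_mm, image_mm)
print(f"bias = {bias:.2f} mm, SD = {sd:.2f} mm, "
      f"limits of agreement = [{lower:.2f}, {upper:.2f}] mm")
```

Under this convention, a positive bias means the caliper reads larger than the image-based method on average, and a negative bias means the opposite.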

3.8. Analysis of Prediction Results for Lentinula edodes Fruiting Bodies

This study used three methods (the least squares method, a BP neural network, and random forest) to fit the fresh and dry weight training set data of Lentinula edodes fruiting bodies. The BP neural network architecture consisted of an input layer (3 neurons), two hidden layers (64 and 32 neurons, ReLU activation), and a linear output layer (1 neuron). It was trained using the Adam optimizer with L2 regularization (λ = 0.001). The random forest model was configured with 100 estimators, a maximum tree depth of 10, and a random state of 42. All models were evaluated using 10-fold cross-validation repeated 5 times. The model performance metrics are summarized in Table 4, while the training set data distribution and Bland–Altman analysis are presented in Figure 14.
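The evaluation protocol, 10-fold cross-validation repeated 5 times, produces 50 train/test splits per model. The following standard-library sketch shows one way to generate such splits; `repeated_kfold`, the sample count of 100, and the seed are hypothetical choices for illustration, not details taken from the study.

```python
import random


def repeated_kfold(n_samples, n_splits=10, n_repeats=5, seed=0):
    """Yield (train_indices, test_indices) for repeated k-fold CV.

    Each repeat reshuffles the samples, then every fold serves
    once as the held-out test set.
    """
    rng = random.Random(seed)
    for _ in range(n_repeats):
        indices = list(range(n_samples))
        rng.shuffle(indices)
        for fold in range(n_splits):
            test = indices[fold::n_splits]
            held_out = set(test)
            train = [i for i in indices if i not in held_out]
            yield train, test


# Hypothetical training set of 100 samples.
splits = list(repeated_kfold(n_samples=100))
print(len(splits), "train/test splits")
```

Fitting a model on each training subset and scoring it on the matching test subset gives 50 scores per model, from which a mean and standard deviation of R² can be summarized.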

As shown in Table 4, the least squares method performed best in predicting the fresh and dry weight of Lentinula edodes fruiting bodies, with average R² values of 0.94 and 0.90, respectively, and standard deviations of only 0.03 and 0.07. The sample distribution and Bland–Altman analysis in Figure 14 indicate that the fresh and dry weight values predicted by the least squares method are closer to the true values, with smaller residuals, giving the lowest overall error. In contrast, random forest performed relatively poorly, with average R² values of 0.91 and 0.88, respectively, and relative errors greater than those of the least squares method and the BP neural network.

The results of the three models indicate a strong linear relationship between the selected phenotype parameters and the weight of Lentinula edodes. Among them, pileus width is most closely related to weight and is the feature with the highest contribution value. The contribution of total height is less than 0.17 in all three models, indicating the lowest correlation with weight. The least squares method was then used to predict the fresh and dry weight of 24 Lentinula edodes fruiting body test set samples that did not participate in training; the sample distribution is shown in Figure 15.

As shown in Figure 15, the fresh weight and dry weight of Lentinula edodes fruiting bodies predicted using these formulas are close to the actual measured values. The mean absolute error of fresh weight is 0.66 g, and the mean relative error is 3.11%. The mean absolute error of dry weight is 0.16 g, and the mean relative error is 7.19%. Across the training and test sets, the mean absolute errors of fresh weight and dry weight using the least squares method are 1.56 g and 0.39 g, respectively, which can meet the requirements for predicting the yield of Lentinula edodes fruiting bodies.
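The two error measures quoted above can be computed as in this short sketch. The weights are hypothetical, and the function names are illustrative.

```python
def mean_absolute_error(actual, predicted):
    """Average of |actual - predicted|, in the unit of the inputs."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mean_relative_error(actual, predicted):
    """Average of |actual - predicted| / actual, as a percentage."""
    ratios = [abs(a - p) / a for a, p in zip(actual, predicted)]
    return 100.0 * sum(ratios) / len(ratios)


# Hypothetical fresh weights in grams.
actual_g = [20.0, 25.0, 40.0]
predicted_g = [21.0, 24.0, 38.0]

mae = mean_absolute_error(actual_g, predicted_g)
mre = mean_relative_error(actual_g, predicted_g)
print(f"MAE = {mae:.2f} g, mean relative error = {mre:.2f}%")
```

Because the relative error divides by the actual weight, a trait with small values, such as dry weight, can show a lower absolute error but a higher relative error than fresh weight.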

4. Discussion

4.1. Segmentation Model

In the development of automated measurement technologies for crop phenotypic parameters, the size and computational complexity of segmentation models remain critical factors limiting their widespread application [9, 10, 14]. For instance, the CSW-YOLO model proposed by Xu et al. [44] achieved an mAP50 of 96.7% in bitter melon detection but has a model size of 20.7 MB. Similarly, the YOLOTree model developed by Luo et al. [45] for estimating crown volume contains 3.0 M parameters. Models of this kind generally suffer from large size and high computational demands, making them difficult to deploy directly in real production environments for phenotypic parameter measurement.

To overcome these challenges, the YOLO-SFCB model proposed in this study substantially reduces model size and computational burden through a series of lightweight design strategies. By incorporating the ShuffleNetV2 backbone and the C3k2-FasterBlock module, both Params and FLOPs are significantly reduced. In addition, the CBAM attention mechanism embedded before the detection head enhances the model’s ability to accurately segment Lentinula edodes fruiting bodies of different sizes. As a result, YOLO-SFCB has only 2.0 M Params and 7.8 G FLOPs, giving it the potential for direct deployment on portable devices.

However, this study still has certain limitations. The datasets used are all based on a single strain and culture medium, with limited sample size and scene diversity; in addition, the performance of the model under complex conditions such as low light, water mist interference, or blurred target boundaries has not been systematically validated, and these factors may affect its generalization ability in diverse production environments. In future research, we will further collect images of Lentinula edodes under different substrates, strains, and growth environments to systematically verify the robustness of the model under variable conditions and continuously improve the adaptability and reliability of YOLO-SFCB in practical applications.

4.2. Phenotypic Feature Detection and Yield Prediction of Lentinula edodes

Crop phenotype feature detection based on visible light imaging can be divided mainly into two types: three-dimensional reconstruction and two-dimensional plane analysis. In recent years, 3D reconstruction methods have received widespread attention due to their high measurement accuracy [46, 47]. For example, Xu et al. [48] segmented the fruiting body of Lentinula edodes with the YOLOv8x model and measured parameters such as stipe width and pileus thickness using 3D reconstruction technology, controlling the average error to around 10%. Although this method has high accuracy, its process is complex and its efficiency is low, making it difficult to meet the requirements of high-throughput phenotype detection. In contrast, measurement methods based on two-dimensional images are more convenient. For example, Lu et al. [49] used the YOLOv3 model to identify Lentinula edodes pilei and estimate their diameter, thereby predicting harvest time. However, the phenotype parameters that can be obtained by this method are limited, and the overall measurement accuracy is low. The phenotype detection and yield prediction method for Lentinula edodes proposed in this study balances measurement accuracy with operational convenience, combining the advantages of both approaches. It places few restrictions on the image capture distance and can extract multiple phenotype parameters, including stipe diameter, stipe height, pileus width, and pileus thickness. The average residuals of these indicators are 0.12 mm, −0.35 mm, −0.10 mm, and 0.10 mm, respectively, supporting the feasibility of high-precision phenotype measurement based on two-dimensional images. The fresh weight and dry weight prediction models established with the least squares method have R² values of 0.94 and 0.90, respectively, indicating a strong correlation between the extracted phenotype parameters and yield and providing a reliable basis for yield estimation.

However, the measurement accuracy of this method is still affected by the segmentation quality. Any deviation in the segmented area directly interferes with the parameter estimates. In addition, the current method still places certain requirements on the shooting angle, which to some extent limits the efficiency of phenotype extraction. In future research, we will further expand the types of measurable phenotype features, develop a more comprehensive Lentinula edodes phenotype collection system, and, on this basis, construct an automatic grading system for Lentinula edodes to support quality grading and intelligent decision-making in actual production.

5. Conclusions

This study proposes a method for measuring the phenotype characteristics and predicting the yield of Lentinula edodes based on instance segmentation models, which addresses the low efficiency of traditional phenotype feature detection methods and their tendency to damage the Lentinula edodes body. A segmentation network, YOLO-SFCB, is proposed for recognizing and segmenting Lentinula edodes fruiting bodies on mobile devices. By introducing the ShuffleNetV2 network, the C3k2-FasterBlock module, and the CBAM attention mechanism, the model is made lightweight while its ability to recognize and segment small targets is enhanced. The experimental results show that the YOLO-SFCB model achieves an mAP50-95 of 80.5% on a self-built dataset and an inference time of 236 ms on a Raspberry Pi, balancing accuracy and efficiency. The magnitude of the average residual of the phenotype measurement method combined with OpenCV does not exceed 0.35 mm. The MAEs of the least squares predictions for fresh weight and dry weight are 1.56 g and 0.39 g, respectively, and the overall error is within an acceptable range.

In summary, the phenotype feature measurement and yield prediction method proposed in this study is a solution with low computing power requirements and high measurement accuracy and has the potential to be promoted in the field of automatic detection and evaluation of Lentinula edodes. Future research will focus on developing phenotype feature measurement methods that integrate multiple angles, reducing dependence on shooting angles and further improving measurement accuracy. In addition, we will continue to expand the image data of different edible fungi to further improve the applicability of the model and assist in the development of automated cultivation and harvesting technology for edible fungi.