1. Introduction

The citrus industry, including the cultivation of Citrus reticulata Blanco, contributes significantly to global agriculture, especially in tropical and subtropical regions, but faces serious threats from Huanglongbing (HLB). Rapid and accurate detection is crucial for maintaining productivity and farmer incomes [1,2]. Machine learning (ML) has advanced disease diagnosis through digital imaging; however, traditional ML methods rely on manual feature extraction, whereas deep learning (DL) overcomes this limitation by automatically learning discriminative features, thereby improving efficiency and accuracy [3,4]. Although DL models have high parameter counts and training costs, they offer fast inference, which aids early disease identification and prevents economic losses [5,6,7,8]. ML has gained traction in object detection owing to its strengths in feature extraction and pattern recognition [9]. Convolutional Neural Networks (CNNs) such as DenseNet-121 have been applied to citrus disease detection, achieving 95% accuracy with pre-trained weights [10,11]. While You Only Look Once (YOLO) achieves 90% precision through comprehensive data augmentation [12], its design generates many redundant bounding boxes. This necessitates Non-Maximum Suppression (NMS), a post-processing step that may inadvertently suppress valid detections of small objects because of their lower confidence scores [13]. Transformer-based models such as the Detection Transformer (DETR) use self-attention to capture global relationships and have demonstrated superior generalization [14,15,16,17]. DETR combines a CNN backbone with a transformer encoder–decoder, eliminating NMS and simplifying the detection pipeline [18,19,20]. However, DETR converges slowly and struggles with small object detection, which has prompted various improvements. Zhu et al. [21] developed Deformable-DETR, whose deformable attention attends only to a small set of sampling points around each reference point, accelerating convergence and improving small object detection. Meng et al. [22] introduced Conditional-DETR, which learns a conditional spatial query from the decoder embedding so that cross-attention focuses on distinct object regions, accelerating training convergence. Gao et al. [23] presented Spatially Modulated Co-Attention DETR (SMCA-DETR), which constrains co-attention to regions around initially estimated object locations and incorporates multi-scale features, accelerating convergence and improving small object detection. Cao et al. [24] proposed CF-DETR, a coarse-to-fine framework that enhances detection accuracy through local context refinement. Zhang et al. [25] proposed DINO (DETR with Improved deNoising anchOr boxes), which uses contrastive denoising training to achieve faster convergence and better performance.

Notably, the Real-Time Detection Transformer (RT-DETR) [26] has made breakthroughs in real-time object detection with its efficient hybrid encoder, which effectively handles multi-scale features. In evaluations on the Common Objects in Context (COCO) dataset, RT-DETR has outperformed similarly sized YOLO detectors in both speed and accuracy. Zhu et al. [27] proposed an improved RT-DETR that integrates features produced by multi-scale perception, strengthening feature extraction and achieving precisions of 95.6% and 97.8% on two drone detection datasets. Li et al. [28] introduced EMSC-DETR, a high-precision and robust model for free-range chicken detection based on RT-DETR that substantially improves the computational efficiency of the transformer. Although EMSC-DETR is faster than other transformer-based models, its parameter count and computational complexity remain well above those of lightweight models.

To achieve high precision and recall in identifying HLB in citrus while also considering cost and training time, this study adopts a lightweight model from the DETR family instead of YOLO, selecting the real-time end-to-end detector RT-DETR as the baseline for improvement. Compared with YOLO, RT-DETR features a hybrid encoder that efficiently handles multi-scale features and Intersection over Union (IoU)-aware query selection, enabling it to achieve higher computational efficiency than YOLO models of comparable accuracy and strong performance in detecting small objects in agricultural contexts. Huangfu et al. [29] used an improved lightweight HHS-RT-DETR model for citrus HLB detection; the experimental results show that it achieves an accuracy of 92.4%, outperforming YOLOv5m and YOLOv8n, although there remains room for further improvement. Li et al. [30] demonstrated the applicability of RT-DETR-SoilCuc to cucumber germination detection in soil environments, showing certain advantages over similarly sized YOLO models. However, these studies primarily focused on large groups or individual objects without adequately addressing challenges such as severe occlusion or small object detection.

Based on the challenges identified in detecting Huanglongbing (HLB) in citrus, including low detection accuracy and inefficiency, this paper proposes an improved HLB detection model called MSHLB-DETR. Built on RT-DETR, this model integrates a novel transformer module (SDRM) and a contextual information learning module (CG Block), significantly enhancing detection accuracy for HLB in complex environments while reducing model complexity and increasing detection speed. This advancement provides robust technical support for precise and efficient HLB detection in citrus orchards.

The main contributions of this study are summarized as follows:

(1) A dataset for citrus Huanglongbing (HLB) disease is constructed, capturing images of HLB-affected citrus in natural orchard environments. The dataset records the growth conditions of citrus leaves under natural settings. Additionally, a novel RGB image enhancement algorithm is proposed, specifically addressing the challenge of subtle visual color features associated with HLB symptoms on citrus leaves.

(2) To address the issue of small target feature loss, a novel transformer module called the Smart Disease Recognition Multi-scale Transformer (SDRM) is proposed. SDRM incorporates a space-to-depth (SPD) module and an inverted residual mobile block (IRMB), which facilitate deep interaction and information flow between local and global features, minimizing the loss of critical information and significantly enhancing the computational efficiency of the transformer.

(3) Additionally, to address the detection and differentiation challenges caused by occlusions in diseased citrus leaves, this study introduces an innovative feature learning module within the transformer encoder called the Context-Guided Block (CG Block). Inspired by traditional self-attention mechanisms and the human visual system’s reliance on contextual information for scene comprehension, the CG Block learns both the local features of target objects and contextual information regarding their surroundings. This results in a more precise feature representation, enhancing the fusion of adjacent feature maps. By fully utilizing the global context information obtained from adjacent high-level features in the backbone network, the model achieves a more accurate localization of overlapping diseased leaf targets.

2. Materials and Methods

2.1. Data Acquisition and Preprocessing

The dataset used in this study consists of 4347 citrus Huanglongbing (HLB) images captured in a citrus orchard in Ganzhou, Jiangxi Province, China, during February and July 2024. The specific experimental plot was located at geographical coordinates 25°48′ N, 114°55′ E. All images were acquired using a REDMI K40 (Xiaomi Technology Co., Ltd., Beijing, China) with a resolution of 3024 × 3024 pixels. The images were captured at varying distances ranging from approximately 0.5 to 3 m from the citrus trees, representing typical working distances for in-field plant phenotyping and manual inspection. This distance range ensures the acquisition of both close-up leaf details and broader canopy-level features, effectively capturing the multi-scale characteristics of HLB symptoms in natural growing conditions. Environmental conditions during image acquisition were systematically recorded to ensure experimental transparency. Data collection was conducted during daylight hours (8:00–17:00) under natural lighting conditions, with illumination intensities ranging from 15,000 to 80,000 lux depending on weather conditions and the time of day. The temperature varied between 12 and 28 °C during February and 25 and 35 °C during July, reflecting the typical seasonal variations in the region. Relative humidity ranged from 55% to 85% across different collection days. All images were acquired under clear to partly cloudy conditions to ensure consistent lighting quality. These environmental parameters cover the typical growing conditions of citrus orchards in this region and provide important context for understanding the visual characteristics of the captured leaf images.

Prior to augmentation, all collected images underwent manual quality screening. Images were removed if they were blurry, had severe lighting issues, contained irrelevant subjects, or were near-duplicates of other shots, ensuring the quality and relevance of the base dataset. To enhance model robustness and prevent overfitting, a series of data augmentation techniques was applied to the image data [31,32]. To improve detection accuracy in the HLB-affected regions of citrus images, a new data augmentation method targeting the features of diseased leaves was proposed. Based on an analysis of the visual characteristics of these diseased leaves, a transformation formula was developed for image processing, as shown in Equation (1).

$$ I = \alpha R + \beta G - \left(1 - \frac{R + G}{255}\right)(\gamma + \beta) \tag{1} $$

Equation (1) provides a physiologically informed transformation that amplifies HLB symptoms and suppresses confounding noise by processing the digital intensity values (0–255) of the RGB channels. Here, “I” denotes the adjusted pixel intensity after enhancement, applied uniformly to each pixel. The constants α, β, and γ are empirically tuned to emphasize the red and green spectral regions (R: 620–750 nm; G: 495–570 nm) most affected by HLB, leveraging the correspondence between the camera’s color filters and human visual perception. The first component, α·R + β·G, is tailored to the pathology of HLB: it enhances the red and green bands that signal the loss of chlorophyll and the emergence of yellowing pigments, while the diagnostically irrelevant blue channel is omitted because of its susceptibility to noise. The second component, −(1 − (R + G)/255)·(γ + β), acts as an adaptive corrector: it counters uneven lighting by boosting contrast in dimly lit areas while suppressing common dark background noise such as soil and shadows. Together, the two terms robustly enhance HLB signatures against complex orchard backgrounds, providing a cleaner input for the detection model. Prior to training and detection, this method is applied to the collected images of HLB-infected citrus. The leaf epidermis takes on a deeper orange-yellow hue, overall brightness increases, and the contrast between diseased and healthy leaves is enhanced. In particular, the diseased leaf areas become a more pronounced orange-yellow, which improves the recognition accuracy of the diseased regions. This enhancement provides better input images for subsequent object detection and thereby increases the recognition accuracy of the HLB detection model for citrus leaves.

As shown in Figure 1, this study employed several image enhancement methods, including Histogram Equalization, RGB Enhancement, Gaussian Noise addition, and Horizontal Rotation, to expand the dataset. The augmented dataset consists of 4367 images in total, which were split into training, validation, and testing sets at an approximately 7:2:1 ratio. Image annotation was performed using the Python-based labeling tool LabelImg (https://github.com/tzutalin/labelImg, accessed on 8 October 2025), which uses rectangular annotation frames and generates label files in VOC format. Because HLB symptoms occurring naturally in orchards typically involve systemic pathological changes across the entire leaf rather than localized lesions, and because the overall health status of the citrus tree must be evaluated, this study adopts a whole-leaf annotation approach. This strategy ensures the accurate capture of systemic disease characteristics and provides a more comprehensive basis for assessing tree health in orchard management. The categories are Huanglongbing (HLB), healthy (health), and other minor diseases (ill), with respective proportions of 75%, 18%, and 7%. The “ill” category comprises conditions that may visually resemble HLB or cause detection challenges, including citrus scab, citrus canker, and nutritional deficiencies (particularly zinc and magnesium deficiency). This grouping reflects field conditions in which multiple pathologies may co-occur and allows the model’s specificity against non-HLB conditions to be evaluated. All images retained their natural backgrounds so that the model was trained and evaluated under realistic orchard conditions.
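The RGB enhancement of Equation (1) can be sketched in NumPy as follows. This is an illustration, not the authors' code: the tuned values of α, β, and γ are not reported, so the constants in the usage line are hypothetical placeholders, and clipping the result to 0–255 is our assumption. The function returns the single-channel intensity map I; how that intensity is mapped back to the orange-yellow RGB rendering described above is not specified in the text.

```python
import numpy as np


def hlb_enhance(rgb: np.ndarray, alpha: float, beta: float, gamma: float) -> np.ndarray:
    """Sketch of Equation (1): I = a*R + b*G - (1 - (R + G)/255) * (g + b).

    rgb: uint8 array of shape (H, W, 3) in R, G, B channel order.
    Returns a uint8 intensity map of shape (H, W).
    """
    rgb = rgb.astype(np.float64)          # avoid uint8 overflow in the sums below
    r, g = rgb[..., 0], rgb[..., 1]       # the blue channel is deliberately ignored
    # Adaptive corrector: largest subtraction for dark pixels (R + G near 0);
    # its sign flips (it adds brightness) once R + G exceeds 255.
    corrector = (1.0 - (r + g) / 255.0) * (gamma + beta)
    intensity = alpha * r + beta * g - corrector
    # Assumption: clip to the valid 8-bit range (not stated in the source).
    return np.clip(intensity, 0, 255).astype(np.uint8)


# Hypothetical constants; the tuned values are not reported in the paper.
pixels = np.array([[[100, 150, 30], [0, 0, 0], [255, 255, 255]]], dtype=np.uint8)
print(hlb_enhance(pixels, alpha=1.0, beta=0.5, gamma=10.0))
```

In this example the dark pixel is pushed to 0 by the corrector term, while the bright pixel saturates at 255, which matches the described behavior of suppressing dark background regions.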

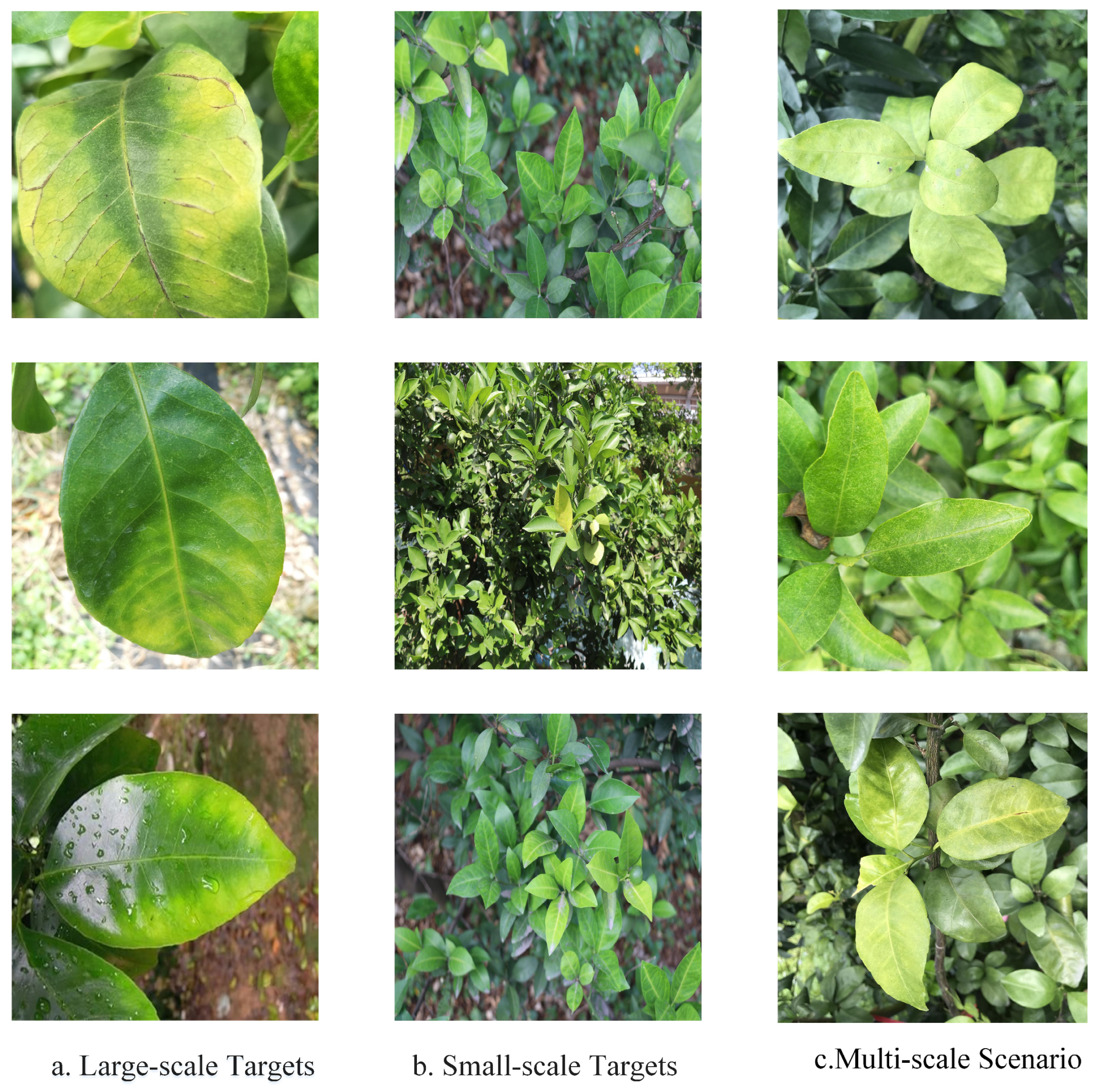

2.2. Structure and Features of Datasets

The structure and characteristics of the dataset have a decisive impact on model performance. In total, the training, validation, and testing sets in this study contain 3056, 874, and 437 images, respectively. In some datasets, the distribution of target sizes is uneven, with a predominance of small targets and only a few large targets, as illustrated in Figure 2. Following the COCO evaluation protocol established by Lin et al. [33], targets can be categorized as small (area < 32²), medium (32² ≤ area < 96²), or large (area ≥ 96²), where “area” refers to the number of pixels covered by the target, measured in square pixels.

Table 1 summarizes the total number of bounding boxes and the proportions of different target sizes for each dataset, with “boxes” indicating the total number of bounding boxes [34]. In total, the datasets contain 24,258 targets, with small targets comprising the largest proportion at 55.12%. Consequently, object detection in HLB images faces several significant challenges.
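The size rule above can be expressed as a small helper that also yields the per-bucket proportions reported in Table 1. This sketch assumes axis-aligned boxes given as pixel width and height, with box area standing in for object area; the official COCO tooling reads the annotation's own area field, so counts computed this way may differ slightly. The function names are illustrative.

```python
from collections import Counter

SMALL_MAX = 32 ** 2   # 1024 square pixels
MEDIUM_MAX = 96 ** 2  # 9216 square pixels


def size_category(width: float, height: float) -> str:
    """Assign a box to the COCO-style small/medium/large bucket by pixel area."""
    area = width * height
    if area < SMALL_MAX:
        return "small"
    if area < MEDIUM_MAX:
        return "medium"
    return "large"


def size_proportions(boxes):
    """Return the fraction of (width, height) boxes falling into each size bucket."""
    counts = Counter(size_category(w, h) for w, h in boxes)
    total = sum(counts.values())
    return {name: counts[name] / total if total else 0.0
            for name in ("small", "medium", "large")}


print(size_proportions([(10, 10), (40, 40), (100, 100), (20, 20)]))
# {'small': 0.5, 'medium': 0.25, 'large': 0.25}
```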

Environment: Detecting diseased citrus leaves in outdoor environments is significantly more challenging than in controlled settings. Complex backgrounds and highly variable lighting conditions impede the discrimination of diseased leaves from healthy ones based on color and shape. These factors necessitate the development of robust models capable of distinguishing subtle symptomatic features within cluttered visual scenes.

Target Scale: When detecting diseased citrus leaves in images, the apparent sizes of leaves and diseased areas vary with the distance between the camera and the leaves. As the distance from the camera to a target increases, the number of pixels occupied by the leaves and diseased areas decreases, resulting in noticeable scale variation among leaves within a single image. This issue is particularly pronounced in side-angle shots, where perspective changes can cause even greater variation in leaf size and shape, further increasing the difficulty of accurately detecting diseased leaves.

Target Characteristics: In the natural environment of citrus trees, leaves often cluster together, creating additional challenges for image-based disease detection due to overlap and occlusion. The dense arrangement of leaves complicates the precise localization and regression of bounding boxes, particularly under mutual occlusion. These occlusions and the need for tightly positioned bounding boxes increase the difficulty of feature extraction and can lead to convergence issues during model training.

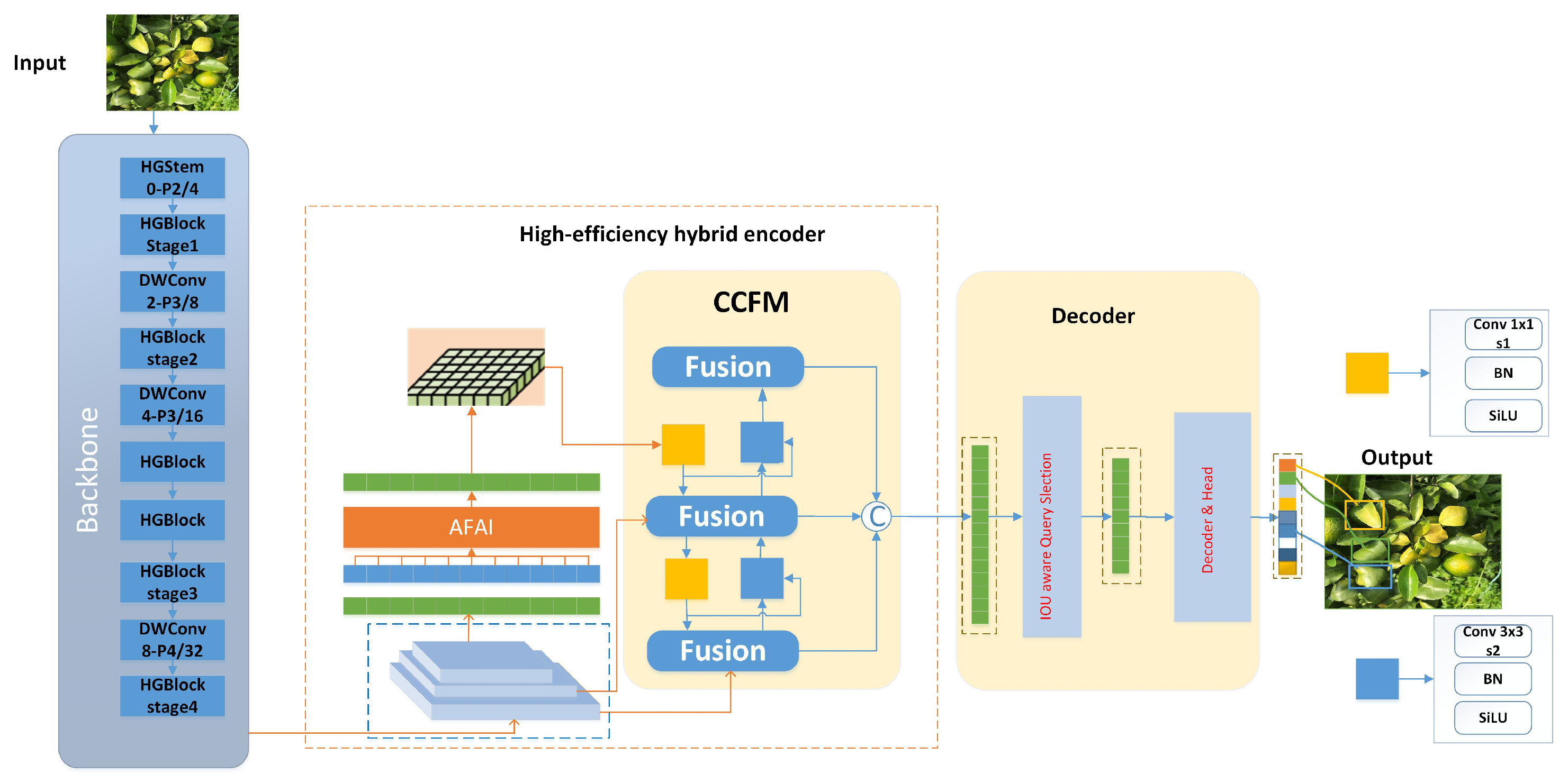

2.3. RT-DETR Structure

RT-DETR is a real-time end-to-end object detector that incorporates the multi-scale feature processing capabilities of Vision Transformers (ViTs), achieving high speed without sacrificing accuracy. It consists of a backbone network, a hybrid encoder, a transformer decoder, and auxiliary prediction heads, eliminating handcrafted components such as the Non-Maximum Suppression (NMS) used in the YOLO series. The CNN backbone extracts features at three levels: high-level features (downsampled 32 times), mid-level features (downsampled 16 times), and low-level features (downsampled 8 times). The hybrid encoder enhances multi-scale feature interaction through the Attention-based Intra-scale Feature Interaction (AIFI) and CNN-based Cross-scale Feature Fusion (CCFM) modules, improving feature representation. In addition, IoU-aware query selection optimizes object query initialization, while an adjustable number of decoder layers allows the inference speed to be tuned flexibly. Reported results show that RT-DETR outperforms YOLO models of similar size in both speed and accuracy on the COCO dataset. Given its high detection performance, RT-DETR serves as a strong foundation for detecting HLB in citrus. The structure of RT-DETR is illustrated in Figure 3.

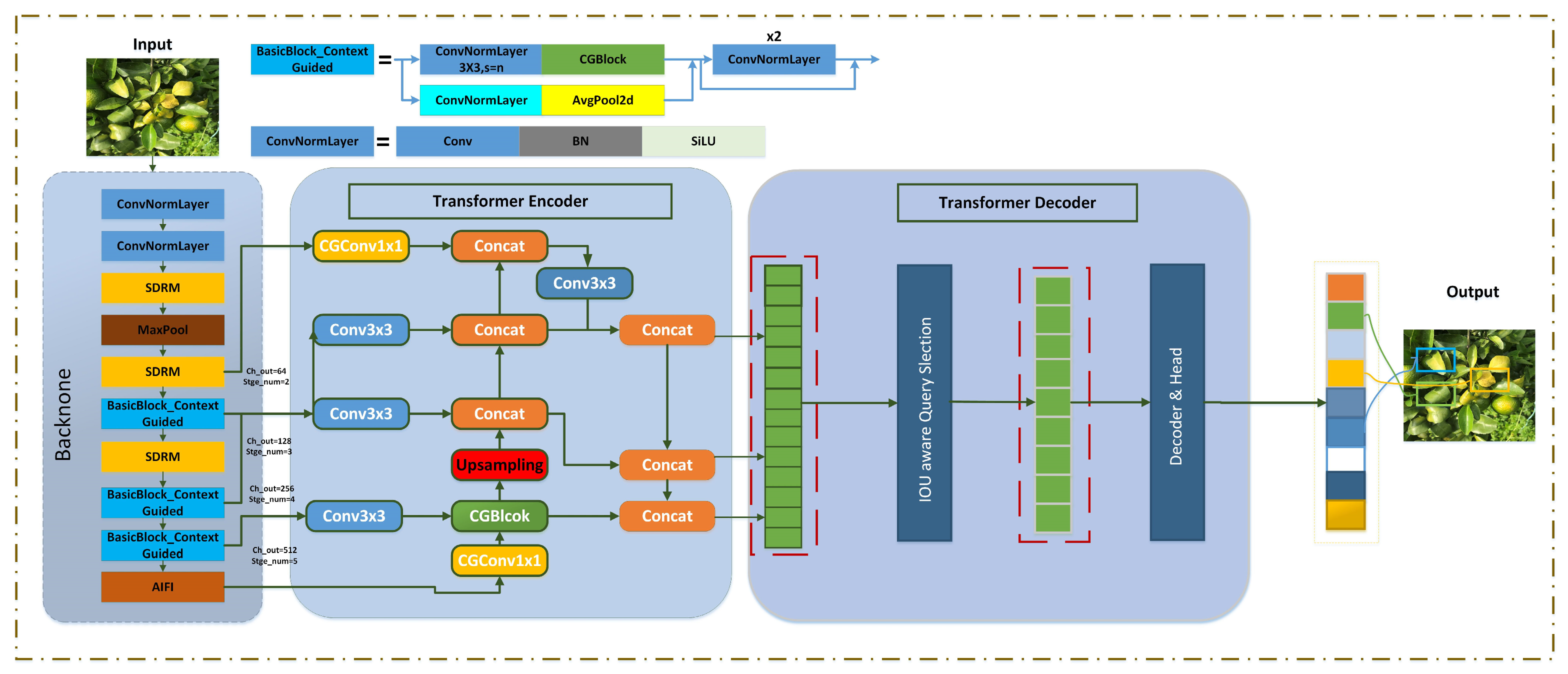

2.4. The Improved MSHLB-DETR Model

To address the challenges of multi-scale object detection in complex agricultural environments, this study proposes an enhanced and efficient detection model based on RT-DETR, named Multi-Scale Huanglongbing DETR (MSHLB-DETR), whose architecture is depicted in Figure 4. Although the model is extensively validated on the specific task of citrus HLB detection, its design principles are generalizable. MSHLB-DETR comprises four key components: a backbone feature extraction network, an optimized encoder, a feature fusion network, and a decoder. In the ResNet-18 backbone, the novel SDRM replaces the first downsampling layer in Stage 2, substituting the original depthwise convolution (DWConv) with stride = 2. This modification enables more efficient feature extraction for small targets in the early stages of the network. The SDRM integrates a space-to-depth (SPD) module and an inverted residual mobile block (IRMB). The SPD component, consisting of an SPD layer and a non-strided convolutional layer, mitigates the information loss typical of strided convolution and pooling operations, thereby preserving finer image details. The IRMB combines the lightweight characteristics of CNNs with the dynamic modeling capability of transformers, enhancing accuracy in dense prediction tasks. Crucially, the SDRM converts spatial features into depth features, facilitating deep interaction between local and global features while boosting computational efficiency. Furthermore, the model enhances the original transformer encoder with the Context-Guided Block (CG Block), a feature learning module that captures the combined features of local details and their surrounding context, thereby improving the integration of global contextual information. These improved modules are explained in more detail below.

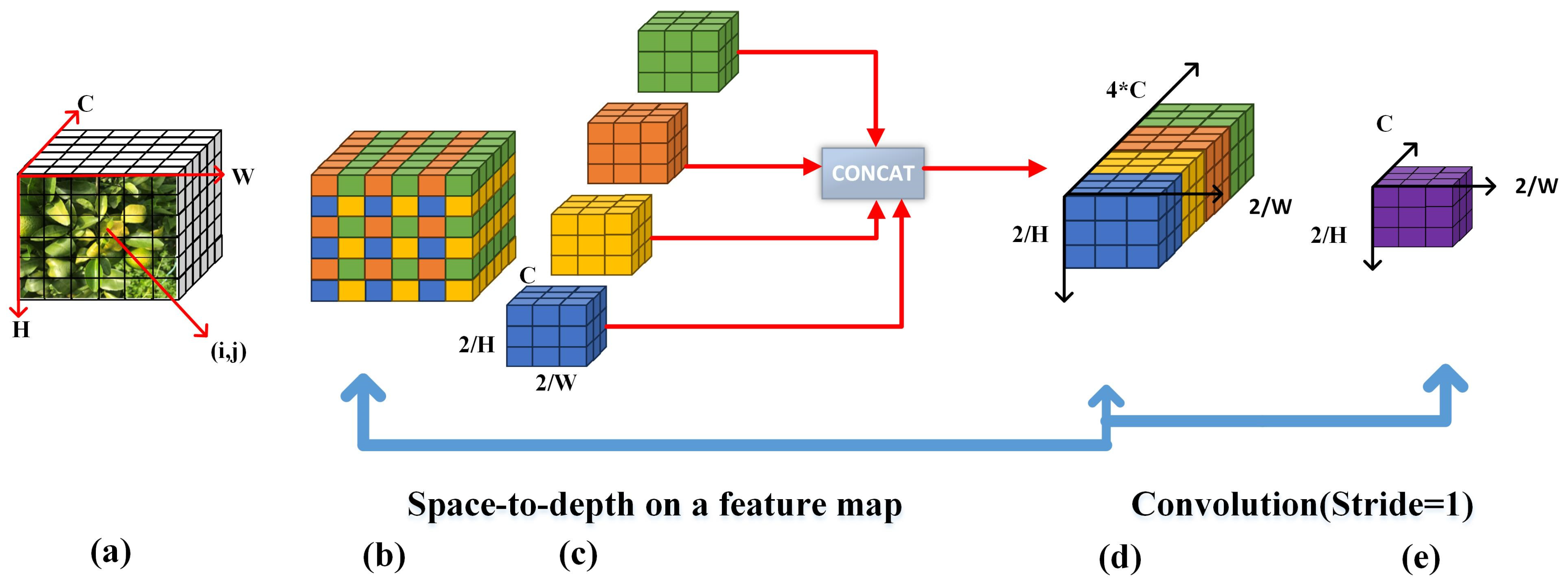

2.4.1. Space-to-Depth (SPD)

Detecting diseased citrus leaves in orchard images presents two main challenges arising from multi-scale targets: small targets are difficult to detect accurately, and targets at the image edges are also harder to detect. These challenges often overlap, because small targets located at the image edges are both small and edge targets and are therefore even harder to detect. The combination of small target size and incomplete information for edge targets requires detection algorithms with greater precision and robustness to reliably recognize these challenging targets [35]. Classical CNNs such as AlexNet [36] and ResNet [37] play key roles in visual recognition but rely on strided convolution and pooling, which may cause information loss and degrade small target detection owing to low resolution and limited contextual information. In multi-scale citrus leaf images, models tend to prioritize larger targets because they occupy more prominent areas, leading to suboptimal small target detection.

To address low-resolution and small target detection challenges, this study introduces Space-to-Depth Convolution (SPD-Conv), which replaces strided convolution and pooling with two CNN building blocks, a space-to-depth (SPD) layer and a non-strided convolution layer, enhancing feature preservation for small and edge targets. As shown in Figure 5, the feature map in Figure 5a is the input feature map with channel count C, height H, and width W. Through the space-to-depth operation (Figure 5b), each 2 × 2 block of pixels is rearranged into the depth (channel) dimension, increasing the channel count fourfold (to 4C) while halving the height and width. Channel merging is then applied (Figure 5c), where the four channel groups are combined along the channel dimension. The merged feature map is then added to other processed feature maps (Figure 5d). Finally, a convolution with a stride of 1 is applied to the resulting feature map (Figure 5e), reducing the channel dimension while keeping the downsampled spatial resolution of H/2 × W/2, i.e., one quarter of the original area. Liu et al. [38] have demonstrated the effectiveness of SPD-Conv in mitigating information loss during downsampling, and Han et al. [39] have shown its strong performance in small target detection and segmentation tasks.
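The space-to-depth rearrangement can be illustrated in NumPy for a scale of 2. This is a sketch, not the authors' implementation: it uses a channel-first (C, H, W) layout without a batch dimension, models the non-strided convolution as a 1 × 1 (pointwise) convolution for brevity, and omits the addition step of Figure 5d. The inverse function shows that the rearrangement discards no pixels, unlike a stride-2 convolution or pooling.

```python
import numpy as np


def space_to_depth(x: np.ndarray, scale: int = 2) -> np.ndarray:
    """(C, H, W) -> (scale**2 * C, H/scale, W/scale) by interleaved sub-sampling."""
    _, h, w = x.shape
    if h % scale or w % scale:
        raise ValueError("H and W must be divisible by scale")
    subs = [x[:, i::scale, j::scale] for i in range(scale) for j in range(scale)]
    return np.concatenate(subs, axis=0)  # merge the sub-maps along the channel axis


def depth_to_space(y: np.ndarray, scale: int = 2) -> np.ndarray:
    """Exact inverse of space_to_depth, used here to show that no pixel is lost."""
    c = y.shape[0] // (scale * scale)
    h, w = y.shape[1] * scale, y.shape[2] * scale
    x = np.empty((c, h, w), dtype=y.dtype)
    k = 0
    for i in range(scale):
        for j in range(scale):
            x[:, i::scale, j::scale] = y[k * c:(k + 1) * c]
            k += 1
    return x


def pointwise_conv(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """Stride-1, 1x1 convolution; weight has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


x = np.arange(2 * 4 * 4, dtype=np.float64).reshape(2, 4, 4)
y = space_to_depth(x)                              # shape (8, 2, 2)
out = pointwise_conv(y, np.ones((3, 8)) / 8.0)     # shape (3, 2, 2)
print(y.shape, out.shape, np.array_equal(depth_to_space(y), x))
```

Because every input value survives in one of the 4C channels, the subsequent stride-1 convolution can still learn from all pixels, which is the property credited above with preserving small and edge targets.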

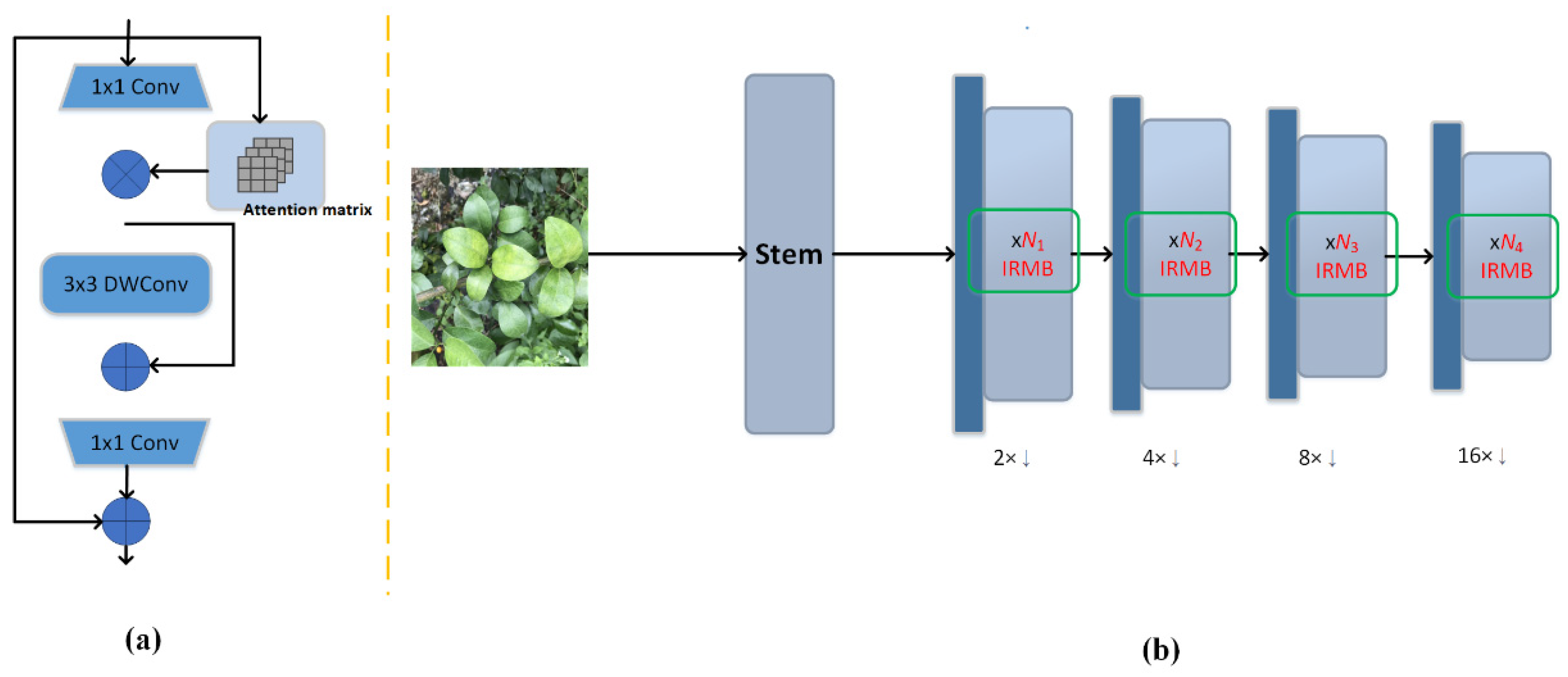

2.4.2. Inverted Residual Mobile Block (IRMB)

In densely packed canopies, mutual occlusion among leaves results in severely occluded or merged leaf appearances, which complicates the accurate regression of bounding boxes for individual instances.

The inverted residual mobile block (IRMB) combines the efficiency of CNN architectures for local feature modeling with the dynamic modeling capabilities of transformers for long-range interactions. This study constructs a ResNet-like model composed solely of IRMBs for downstream tasks, with IRMB stacking at different levels enabling the development of a more efficient and lightweight model.

For the dense prediction of diseased citrus leaves, this study designs a four-stage ResNet-like efficient model based on a series of IRMBs. To keep computation lightweight, multi-head self-attention is replaced with window multi-head self-attention, and standard convolutions are replaced with depthwise convolution (DWConv). Because the query (Q), key (K), and value (V) features introduce a large number of additional parameters, we assume that the input image satisfies $X \in \mathbb{R}^{C \times H \times W}$. The efficient module first applies a multi-layer perceptron (MLP) with expansion ratio λ to expand the channel dimension:

$$ X_e = \mathrm{MLP}_e(X) \in \mathbb{R}^{\lambda C \times H \times W} $$

where $X_e$ is the expanded feature map obtained by processing the original input $X$ through an MLP with expansion factor λ; $\mathbb{R}$ indicates that the value at each channel and each position of the input image $X$ is a real number; and $C$, $H$, and $W$ are the number of channels, height, and width of the image. The term $\mathbb{R}^{\lambda C \times H \times W}$ indicates that the output keeps the same spatial dimensions (H × W) but expands the channel dimension from C to λC. This can be viewed as an “enhanced version” of the original features: the spatial structure remains intact, but each position now carries λ times as many feature channels, enabling a richer representation of subtle disease patterns. Skip connections are incorporated in this operation to improve information flow and stability within the network without adding parameters. The window multi-head self-attention resulting from these modifications is referred to as extended window multi-head self-attention (EW-MHSA). The efficient operator $F(\cdot)$ is then defined to further enhance image features, and its implementation can be expressed as follows:

$$ F(\cdot) = (\text{DW-Conv}, \text{Skip})\big(\text{EW-MHSA}(\cdot)\big) $$

This equation defines the efficient feature transformation operator F(·), which integrates extended window multi-head self-attention (EW-MHSA), depthwise convolution (DW-Conv), and skip connections. The pipeline operates as follows: EW-MHSA captures long-range dependencies between image regions through its attention mechanism; DW-Conv then models local feature patterns; and skip connections preserve the original information during these transformations. This design balances global feature capture and local detail modeling. The inverted residual mobile block thus combines dynamic global context with static local features, enhancing the transmission of feature information and the expressive power of the network and improving performance in dense object detection. Therefore, the inverted residual mobile block is chosen to update the basic building blocks of the RT-DETR backbone.
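The data flow of one block (channel expansion, then F(·), then channel compression and the residual addition) can be sketched in NumPy as follows. This is a simplified, single-head illustration under stated assumptions: 1 × 1 convolutions are written as channel-mixing matrix products; Q and K are computed from the unexpanded input while V is the expanded feature map, which is our reading of the Figure 6 description; H and W are assumed divisible by the window size; and normalization and activation layers are omitted. All parameter names are hypothetical.

```python
import numpy as np


def conv1x1(x, w):
    """1x1 convolution as a channel-mixing matrix product; w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def window_attention(q, k, v, win):
    """Single-head self-attention restricted to non-overlapping win x win windows."""
    _, h, w = q.shape
    if h % win or w % win:
        raise ValueError("H and W must be divisible by the window size")
    out = np.empty_like(v)
    for y0 in range(0, h, win):
        for x0 in range(0, w, win):
            sl = (slice(None), slice(y0, y0 + win), slice(x0, x0 + win))
            qs = q[sl].reshape(q.shape[0], -1).T         # (N, Cq) tokens in this window
            ks = k[sl].reshape(k.shape[0], -1).T
            vs = v[sl].reshape(v.shape[0], -1).T         # (N, Cv)
            scores = qs @ ks.T / np.sqrt(qs.shape[1])
            scores -= scores.max(axis=1, keepdims=True)  # numerically stable softmax
            attn = np.exp(scores)
            attn /= attn.sum(axis=1, keepdims=True)
            out[sl] = (attn @ vs).T.reshape(v.shape[0], win, win)
    return out


def depthwise_conv3x3(x, kernels):
    """Per-channel 3x3 convolution, stride 1, zero padding 1; kernels: (C, 3, 3)."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x)
    for dy in range(3):
        for dx in range(3):
            out += kernels[:, dy, dx][:, None, None] * padded[:, dy:dy + h, dx:dx + w]
    return out


def irmb_block(x, p, win=4):
    x_e = conv1x1(x, p["w_expand"])                  # (lam*C, H, W): X_e = MLP_e(X)
    q, k = conv1x1(x, p["w_q"]), conv1x1(x, p["w_k"])
    a = window_attention(q, k, x_e, win)             # EW-MHSA (single head here)
    x_f = a + depthwise_conv3x3(a, p["dw"])          # (DW-Conv, Skip)
    x_s = conv1x1(x_f, p["w_shrink"])                # compress back to C channels
    return x + x_s                                   # residual connection


rng = np.random.default_rng(0)
C, lam, H, W = 4, 2, 8, 8
params = {
    "w_expand": rng.normal(size=(lam * C, C)),
    "w_q": rng.normal(size=(C, C)),
    "w_k": rng.normal(size=(C, C)),
    "dw": rng.normal(size=(lam * C, 3, 3)),
    "w_shrink": rng.normal(size=(C, lam * C)),
}
x = rng.normal(size=(C, H, W))
print(irmb_block(x, params).shape)  # (4, 8, 8)
```

The output keeps the input shape, so the block can be stacked like a residual unit; the expansion to λC channels exists only inside the block.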

As illustrated in Figure 6, the process begins by generating the query (Q) and key (K) vectors, which form the basis of the self-attention mechanism. An expansion convolution is then applied to generate the value (V) vector. Next, window self-attention is performed on Q, K, and V to enable long-range interactions. Subsequently, DWConv models local features; because it filters each channel separately, it decouples spatial mixing from channel mixing, reducing computational load while preserving sensitivity to local features.

Finally, a compressing convolution restores the number of channels, and the output is added to the input to form a residual connection, which facilitates gradient flow in deep networks and improves model performance. Notably, because both the expansion convolution and the self-attention mechanism reduce to matrix multiplications, self-attention can be computed before the expansion convolution is executed [40]. This reordering reduces the number of floating-point operations while producing identical results, thereby improving model efficiency.
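The reordering argument can be checked numerically. The sketch below assumes that the convolution producing V is a channel-expansion 1 × 1 convolution, i.e., a per-token multiplication by a weight matrix W_e, and considers one attention window of N tokens with C channels; the attention matrix A is arbitrary here. Because matrix multiplication is associative, A(XW_e) = (AX)W_e, and the multiply–accumulate counts show why mixing tokens on C channels before expanding to λC channels is cheaper.

```python
import numpy as np


def macs_expand_then_attend(n, c, lam):
    """Multiply-accumulates for A @ (X @ W_e): expand first, then mix N tokens."""
    return n * c * (lam * c) + n * n * (lam * c)


def macs_attend_then_expand(n, c, lam):
    """Multiply-accumulates for (A @ X) @ W_e: mix N tokens first, then expand."""
    return n * n * c + n * c * (lam * c)


rng = np.random.default_rng(0)
n, c, lam = 64, 32, 4                    # tokens per window, channels, expansion ratio
X = rng.normal(size=(n, c))              # token features before expansion
W_e = rng.normal(size=(c, lam * c))      # hypothetical 1x1 expansion weights
scores = rng.normal(size=(n, n))
A = np.exp(scores) / np.exp(scores).sum(axis=1, keepdims=True)  # row-softmax attention

same = np.allclose(A @ (X @ W_e), (A @ X) @ W_e)
print(same, macs_expand_then_attend(n, c, lam), macs_attend_then_expand(n, c, lam))
# True 786432 393216
```

The saving is N²·C·(λ − 1) multiply–accumulates per window, so it grows with both the window size and the expansion ratio.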

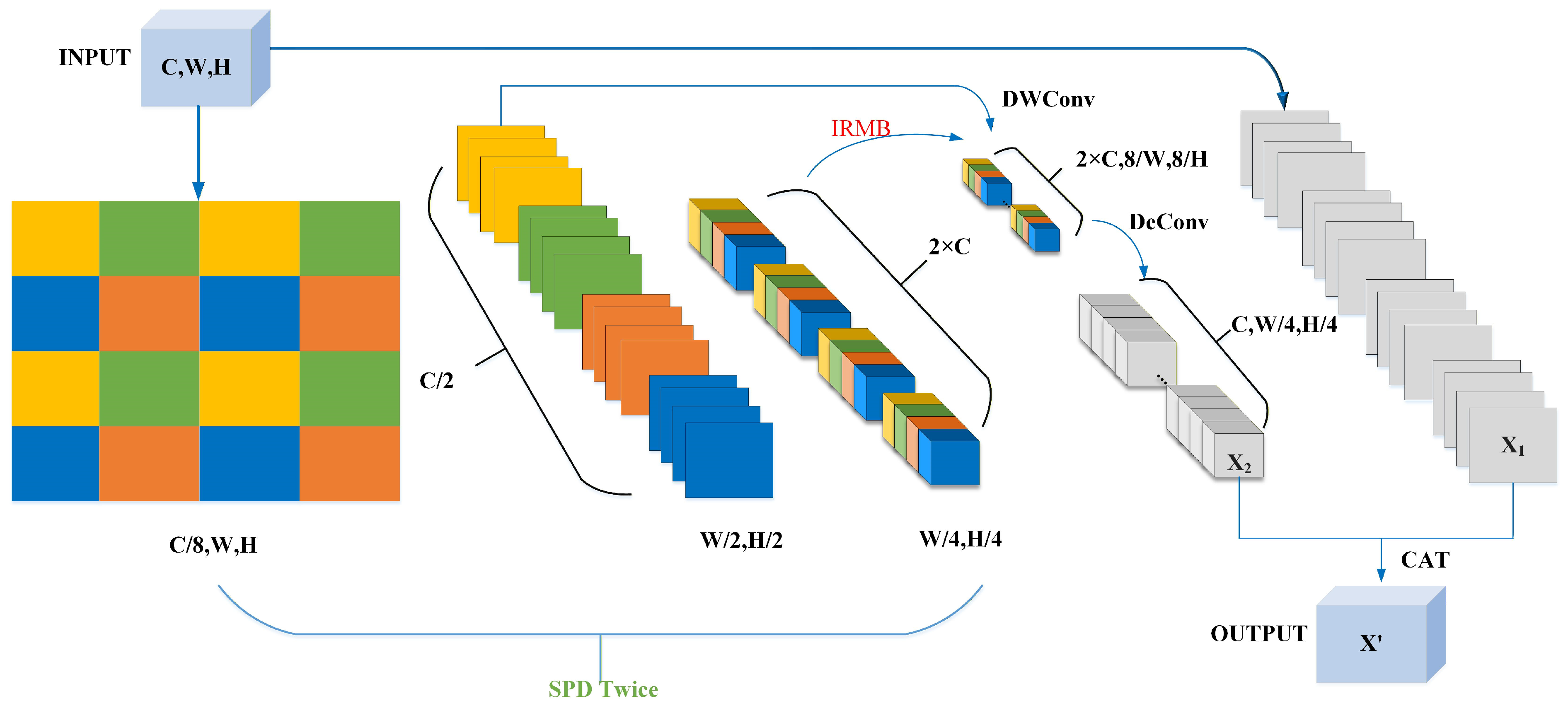

2.4.3. Smart Disease Recognition for Citrus Huanglongbing with Multi-Scale (SDRM)

To address the challenges of citrus disease detection in complex backgrounds, multi-scale targets, and clustered occlusions, this study introduces the Smart Disease Recognition Module (SDRM), which integrates the complementary strengths of the space-to-depth (SPD) and inverted residual mobile block (IRMB) components. The SPD component serves as a feature-preserving front end that converts spatial information into channel depth through lossless downsampling, reducing information loss and improving the detection of small leaf targets. The IRMB component then processes these enriched features with an efficient transformer-inspired architecture, using attention mechanisms to improve feature flow in densely occluded regions. The combined SDRM architecture enhances global feature extraction while preserving the local details and boundary features of citrus leaves, making it well suited to detecting HLB symptoms under challenging orchard conditions, where both fine-scale details and contextual understanding are essential for accurate diagnosis. The SDRM structure is illustrated in Figure 7.

The SDRM consists of two branches. One branch applies DWConv operations, which reduce both parameters and computational load compared with regular convolution. In the other branch, DWConv is followed by two SPD operations that reduce the feature map size; this branch then feeds the IRMB module, which identifies key features in dense regions and supplements global information to improve the model’s accuracy in recognizing clustered regions. After deconvolution (DeConv), feature maps X1 and X2 are concatenated along the channel dimension, and a final DWConv produces the output feature map. This process ensures that the feature map produced by the SDRM has both local and global representational capacity.

2.4.4. Context-Guided Block (CG Block)

To enhance the accuracy of citrus disease detection, this study proposes a Context-Guided (CG) Block inspired by the human visual system. The CG Block encodes multi-level contextual information through three complementary pathways: local features, surrounding context, and global semantic guidance. It incorporates an efficient attention-like mechanism that jointly models spatial dependencies while applying channel-wise weighting based on global context, similar to the SENet architecture. This design effectively balances representational power and computational efficiency, making it particularly well-suited for dense object recognition scenarios. By integrating local and contextual features, CG Block significantly improves the model’s ability to detect citrus diseases in complex orchard environments, especially under conditions of occlusion, cluttered backgrounds, and subtle symptom presentation. It enhances the recognition of fine-grained and edge-based disease characteristics, thereby optimizing both detection accuracy and model reliability.

As shown in Figure 8, human vision improves recognition by incorporating local details (purple) and global context (red), underscoring the importance of surrounding information. This combined approach strengthens feature extraction and detection capability in challenging scenes.

Figure 8d illustrates the structure of the CG Block. The local feature extractor $f_{\mathrm{loc}}(\cdot)$ uses a 3 × 3 convolution to learn from the eight neighboring feature vectors. The surrounding context extractor $f_{\mathrm{sur}}(\cdot)$ applies a 3 × 3 dilated convolution to expand the receptive field. The joint feature extractor $f_{\mathrm{joi}}(\cdot)$ concatenates the two outputs, followed by Batch Normalization (BN) and Parametric Rectified Linear Unit (PReLU) activation. The global feature extractor $f_{\mathrm{glo}}(\cdot)$ employs global pooling and fully connected layers to generate a weight vector, which reweights the joint features through element-wise multiplication. The effectiveness of the CG Block in enhancing contextual learning has been demonstrated in prior research [41].
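The four extractors of the CG Block can be sketched in NumPy to make the data flow concrete. This is an illustrative sketch under several assumptions: the 3 × 3 convolutions are implemented per channel (depthwise) for brevity, BN is approximated by per-channel standardization of a single image without learned affine terms, the global extractor uses a ReLU hidden layer and a sigmoid gate in the SENet style, and the 2C to C/2 reduction ratio is hypothetical. The published block may include further components, such as residual connections, that are not shown.

```python
import numpy as np


def depthwise_conv3x3(x, kernels, dilation=1):
    """Per-channel 3x3 convolution with the given dilation; output keeps H x W."""
    _, h, w = x.shape
    d = dilation
    padded = np.pad(x, ((0, 0), (d, d), (d, d)))
    out = np.zeros(x.shape)
    for dy in range(3):
        for dx in range(3):
            oy, ox = dy * d, dx * d
            out += kernels[:, dy, dx][:, None, None] * padded[:, oy:oy + h, ox:ox + w]
    return out


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def cg_block(x, p, dilation=2, eps=1e-5):
    f_loc = depthwise_conv3x3(x, p["k_loc"], dilation=1)         # local features
    f_sur = depthwise_conv3x3(x, p["k_sur"], dilation=dilation)  # surrounding context
    f_joi = np.concatenate([f_loc, f_sur], axis=0)               # joint features (2C)
    # BN sketch: per-channel standardization over H x W, no learned affine terms.
    mean = f_joi.mean(axis=(1, 2), keepdims=True)
    var = f_joi.var(axis=(1, 2), keepdims=True)
    f_joi = (f_joi - mean) / np.sqrt(var + eps)
    f_joi = np.where(f_joi > 0, f_joi, p["prelu_slope"] * f_joi)  # PReLU
    # Global extractor: global average pooling -> FC -> ReLU -> FC -> sigmoid.
    pooled = f_joi.mean(axis=(1, 2))
    weights = sigmoid(p["fc2"] @ np.maximum(p["fc1"] @ pooled, 0.0))
    return f_joi * weights[:, None, None]                         # channel reweighting


rng = np.random.default_rng(0)
C = 4
params = {
    "k_loc": rng.normal(size=(C, 3, 3)),
    "k_sur": rng.normal(size=(C, 3, 3)),
    "prelu_slope": 0.25,
    "fc1": rng.normal(size=(C // 2, 2 * C)),  # squeeze 2C -> C/2 (hypothetical ratio)
    "fc2": rng.normal(size=(2 * C, C // 2)),  # excite back to 2C
}
x = rng.normal(size=(C, 8, 8))
print(cg_block(x, params).shape)  # (8, 8, 8)
```

The dilated branch sees a 5 × 5 neighborhood with the same nine weights, which is how the surrounding context extractor enlarges the receptive field without adding parameters.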

2.5. Evaluation Metrics and Experimental Environments

This study evaluates the object detection model using key metrics, including parameters (Params), giga floating-point operations (GFLOPs), frames per second (FPS), and mean average precision (mAP). Params reflect the model’s storage requirements, while GFLOPs measure the computational cost of a forward pass. FPS indicates processing speed; together, these metrics assess the model’s deployment efficiency.

mAP quantifies detection performance based on the area under the precision–recall (PR) curve. Precision is the ratio of correctly identified samples to all detected samples, while recall is the ratio of correctly identified samples to all actual samples. As precision increases, recall often decreases, reflecting their trade-off. Precision and recall are calculated as follows:

$$ P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN} $$

where TP (True Positive) is the number of correctly predicted positive samples, FP (False Positive) is the number of incorrectly predicted positive samples, and FN (False Negative) is the number of positive samples missed by the model. The mean average precision (mAP) evaluates overall object detection performance by averaging AP across categories, making it suitable for multi-class tasks.
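The definitions above can be made concrete with a short pure-Python sketch. It assumes boxes in (x1, y1, x2, y2) pixel coordinates and computes AP with all-point interpolation (the area under the non-increasing precision envelope), which is one common convention; the text does not state which interpolation its evaluation tooling uses. Deciding whether a detection is a TP requires matching it to an unmatched ground-truth box whose IoU exceeds the threshold; that matching step is taken as given here through the `is_tp` flags.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp, fp, fn):
    """P = TP / (TP + FP), R = TP / (TP + FN); 0.0 when a denominator is zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class at one IoU threshold."""
    if num_gt == 0:
        return 0.0
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:                          # lower the confidence threshold step by step
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):   # non-increasing precision envelope
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall increases.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))                                # 1/7, about 0.143
print(precision_recall(tp=8, fp=2, fn=4))                             # (0.8, about 0.667)
print(average_precision([0.9, 0.8, 0.7], [True, False, True], num_gt=2))  # about 0.833
```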

represents the average precision at a 50% IoU threshold, while

averages precision from IoU 50% to 95% in 5% intervals. IoU measures the accuracy of predicted bounding boxes. FPS (frames per second) indicates the number of images processed per second. The main formulas are as follows.

where C represents the total number of categories, and Preprocess, Inference, and Postprocess are the per-image preprocessing, inference, and postprocessing times, respectively, in milliseconds. The experiments were run on Windows 11 (CPU: Intel(R) Core(TM) i5-12490F; GPU: NVIDIA GeForce RTX 4070 SUPER; RAM: 32 GB). Models were built with Python 3.10.14, the PyTorch 2.3.0 deep learning framework, and the CUDA 12.1 library for GPU acceleration. Hyperparameter settings are listed in Table 2.
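The IoU, mAP, mAP50:95, and FPS definitions in this section can be expressed as a few small helpers. This is a sketch under stated assumptions: boxes are given as (x1, y1, x2, y2) corners, per-class AP values are already computed, and all function names are hypothetical rather than taken from the study’s code.

```python
def box_iou(a: tuple[float, float, float, float], b: tuple[float, float, float, float]) -> float:
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds averaged by mAP50:95: 0.50, 0.55, ..., 0.95 (10 values).
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def mean_ap(ap_per_class: list[float]) -> float:
    """mAP = (1 / C) * sum of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


def map_50_95(ap_by_threshold: dict[float, list[float]]) -> float:
    """Average the mAP obtained at each IoU threshold in IOU_THRESHOLDS."""
    return sum(mean_ap(ap_by_threshold[t]) for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)


def fps(preprocess_ms: float, inference_ms: float, postprocess_ms: float) -> float:
    """Images per second from per-image stage latencies in milliseconds."""
    return 1000.0 / (preprocess_ms + inference_ms + postprocess_ms)


print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1/7, about 0.143
print(fps(1.0, 8.0, 1.0))                   # 100.0
```

A detection counts toward mAP50 if its IoU with an unmatched ground-truth box is at least 0.5; mAP50:95 repeats the AP computation at every threshold, which rewards tighter localization.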

3. Experiment Results and Analysis

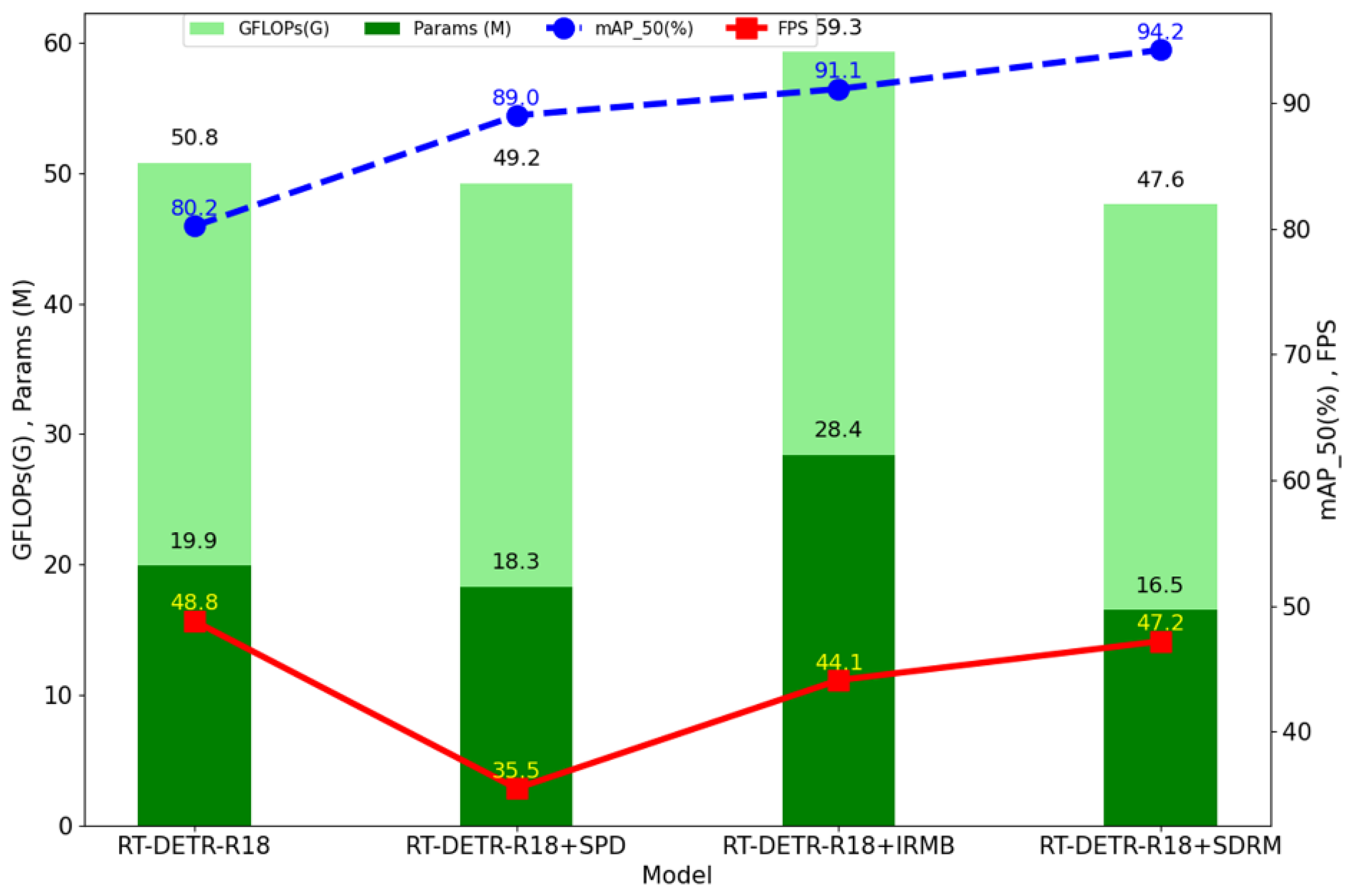

3.1. Backbone Network Design and Selection

The backbone network is crucial to model performance. RT-DETR was developed by Baidu; in this study, the baseline RT-DETR-L, which uses no backbone substitution, was compared with four backbone-substituted variants: RT-DETR-VGG, RT-DETR-R18, RT-DETR-R34, and RT-DETR-RegNet. The models were evaluated on parameters, computational complexity, and detection accuracy, with the results detailed in Table 3. ResNet18 is a lightweight ResNet with 18 weight layers and low complexity. RT-DETR-R18 has only 19.9M parameters, far fewer than the other variants, which reduces computational, storage, and deployment costs and lowers the risk of overfitting. It also has the lowest computational demand, at 50.8 GFLOPs, making it suitable for resource-constrained environments. It achieved the highest mAP50, at 80.2%, indicating better small-target detection and localization. RT-DETR-R18 was therefore selected as the base model for the subsequent improvements in this study.
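The “18 weight layers” of ResNet18 follow from its stage layout, as the short sketch below shows: one stem convolution, two 3 × 3 convolutions in each of the eight basic blocks, and one final fully connected layer. The helper name is hypothetical; 1 × 1 projection shortcuts are not counted, following the usual naming convention, and when ResNet serves as a detection backbone the classifier layer is typically dropped.

```python
def resnet_weight_layers(blocks_per_stage: tuple[int, ...], convs_per_block: int = 2) -> int:
    """Count the named weight layers of a classic ResNet: stem conv + residual convs + final FC."""
    stem_conv = 1
    residual_convs = sum(blocks_per_stage) * convs_per_block
    classifier_fc = 1
    return stem_conv + residual_convs + classifier_fc


print(resnet_weight_layers((2, 2, 2, 2)))                     # ResNet18 -> 18
print(resnet_weight_layers((3, 4, 6, 3)))                     # ResNet34 -> 34
print(resnet_weight_layers((3, 4, 6, 3), convs_per_block=3))  # ResNet50 (bottleneck blocks) -> 50
```

The same count explains why RT-DETR-R34 is heavier than RT-DETR-R18: it roughly doubles the number of residual blocks.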

3.2. Improving the Backbone Network Based on the SDRM

The SDRM, which integrates SPD and the IRMB, substantially improves detection accuracy and generalization. To validate its effectiveness, ablation experiments were conducted, with the results shown in Table 4. Adding SPD and the IRMB to the baseline increased

by 8.8% and 10.8%, respectively, while combining both in the SDRM yielded a 15.5% increase and a reduction in parameters. SPD reduces the spatial size of feature maps while increasing the number of channels; because the cost of self-attention grows with the number of spatial tokens, this reduces the number of inner products the transformer must compute. The IRMB enhances the interaction between local and global features. Overall, the SDRM effectively leverages both feature types, improving model generalization in complex scenes while increasing computational efficiency. Metric visualizations are shown in Figure 9.
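The space-to-depth rearrangement and its effect on attention cost can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the SDRM itself: it shows only the SPD step on a single (C, H, W) map with scale 2 (in SPD-Conv a non-strided convolution normally follows, which is omitted here), and `attention_pairs` is a hypothetical helper counting query–key inner products for one full self-attention layer. The 40 × 40 grid is an illustrative size.

```python
import numpy as np


def space_to_depth(x: np.ndarray, scale: int = 2) -> np.ndarray:
    """Rearrange (C, H, W) into (C * scale**2, H // scale, W // scale) without discarding pixels."""
    _, h, w = x.shape
    if h % scale or w % scale:
        raise ValueError("H and W must be divisible by scale")
    # Each (i, j) offset yields one sub-map keeping every scale-th pixel; stack them along channels.
    parts = [x[:, i::scale, j::scale] for i in range(scale) for j in range(scale)]
    return np.concatenate(parts, axis=0)


def attention_pairs(h: int, w: int) -> int:
    """Query-key inner products in one full self-attention layer over an h x w token grid."""
    tokens = h * w
    return tokens * tokens


x = np.arange(16).reshape(1, 4, 4)
y = space_to_depth(x)
print(y.shape)                                             # (4, 2, 2)
print(attention_pairs(40, 40) // attention_pairs(20, 20))  # 16
```

Unlike strided convolution or pooling, SPD keeps every input value (only their layout changes), which is why it helps preserve small-target detail; halving each spatial side cuts the token count by 4× and the pairwise attention cost by 16×.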

3.3. The Effectiveness of the CG Block Module

After the backbone of RT-DETR is improved with the SDRM, the model shows a substantial improvement in mAP on the test set. However, its predictions in complex occlusion scenarios still need improvement. The original RT-DETR employs a conventional self-attention mechanism in its transformer encoder, which may limit the expressiveness of its feature maps, particularly for densely occluded targets. To address this limitation and further enhance generalization while preserving prediction capability, the improved model incorporates CG Block, a lightweight module that fuses multi-scale context through dilated convolutions and a channel attention mechanism.

To validate the effectiveness of CG Block’s hierarchical context fusion, we compared it with representative attention mechanisms (SimAM [42] and CAA [43]) under identical settings, focusing on the trade-off between accuracy and efficiency. CG Block achieves 96.0% mAP50 with only 48.3 GFLOPs, surpassing SimAM and CAA in accuracy by 3.1% and 1.4% while running 2.8× and 1.8× faster, respectively. This demonstrates its superior balance of accuracy and efficiency. The impact of the different attention modules on recognition results is presented in Table 5.

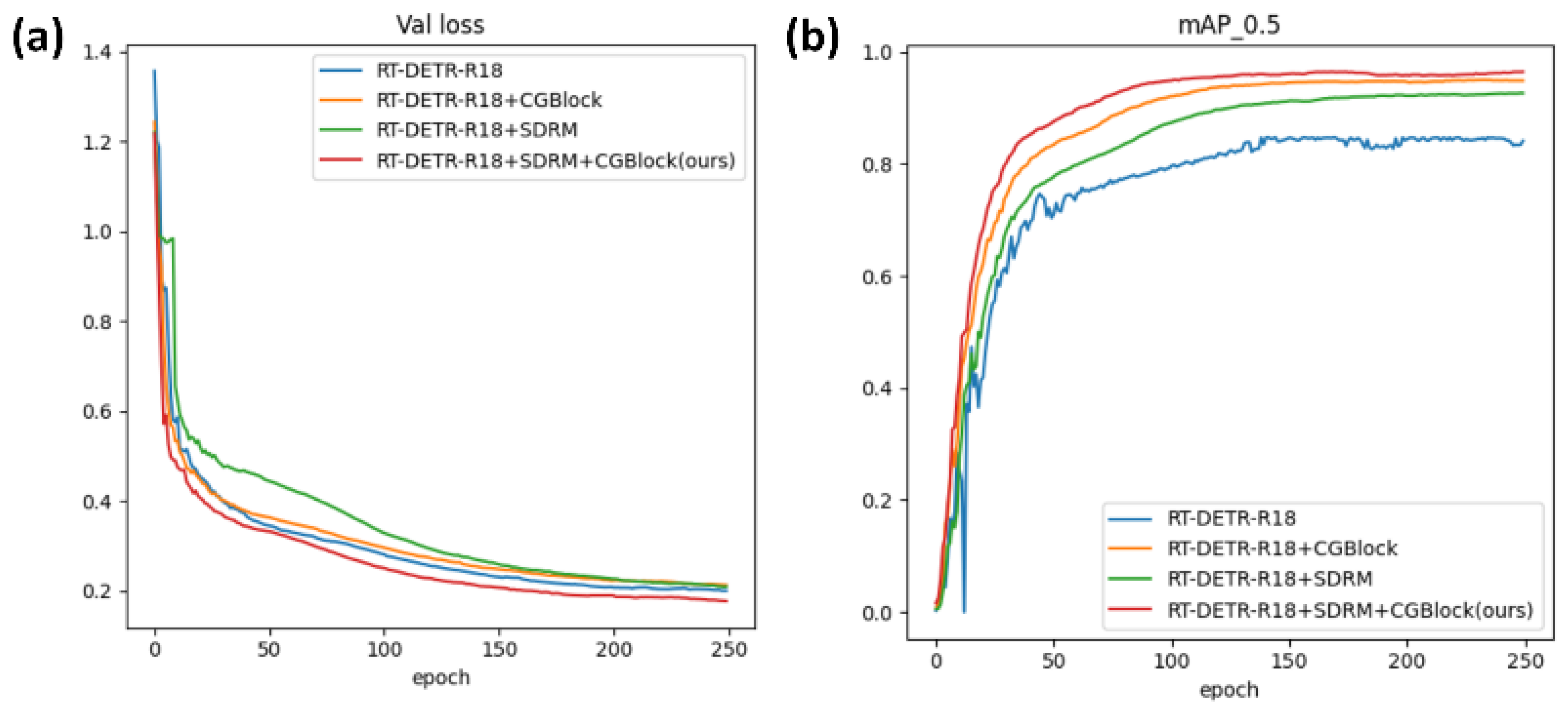

3.4. Ablation Experiments on the Proposed MSHLB-DETR

To assess the effectiveness of the SDRM and CG Block, ablation experiments on MSHLB-DETR were conducted, with the results shown in Table 6. Replacing DWConv with the SDRM in RT-DETR increased

by 14% while achieving the lowest parameter count. Integrating CG Block alone into the transformer encoder improved FPS and dense-target recognition. Compared with RT-DETR-R18, MSHLB-DETR achieved the highest mAP50, at 96.0%, with the other metrics slightly improved or comparable, demonstrating superior generalization. The training curves in Figure 10 show that RT-DETR-R18 oscillated considerably during the early training phase and converged more slowly, suggesting a potential risk of overfitting. In contrast, the other models showed rapid mAP improvement by the 75th epoch, followed by stable convergence. Overall, the models equipped with the SDRM and CG Block outperformed the baseline, converging faster, reaching the highest accuracy, and training more stably.

3.5. Comparison Experiments of Different Models

In this experimental phase, we evaluated MSHLB-DETR for citrus leaf disease detection and compared it with eight leading object detection algorithms to illustrate the performance differences between network architectures, with particular emphasis on MSHLB-DETR’s accuracy and detection speed. The comparison models included both CNN-based and transformer-based architectures. Specifically, YOLOv5, YOLOv8 [44], YOLOv12 [45], and Faster R-CNN [46] were chosen as CNN-based models, while DEIM-D-FINE-L [47], RT-DETRv3-R18 [48], DINO, and RT-DETR-R18 represented transformer-based models. For a fair comparison, all models were evaluated with the same metrics: mAP50, mAP50:95, parameters, GFLOPs, and FPS. The results are presented in Table 7 and Figure 11.

Among the CNN-based models, the YOLO series shows lower accuracy and generalization across scenarios than MSHLB-DETR. Specifically, YOLOv5 achieves an mAP50 of 72.5% on the test set, far below that of MSHLB-DETR (96.0%). YOLOv8 benefits from a more sophisticated architecture and yields relatively good mAP50 and FPS, outperforming YOLOv5 (72.5%, 41.0 FPS) and YOLOv12 (78.7%, 40.8 FPS). However, its constrained receptive fields and NMS-induced errors prevent it from matching transformer-based models, which explains why its mAP50 remains well below MSHLB-DETR’s 96.0%. Additionally, Faster R-CNN’s reliance on a single, lower-resolution feature map results in suboptimal accuracy. During training, CNN-based models also tend to focus on recognizing and locating larger objects, which may reduce detection accuracy for smaller targets, especially when objects of multiple sizes appear in the same image [49]. In contrast, the proposed MSHLB-DETR achieves higher detection accuracy, particularly for small targets, outperforming the CNN-based models.

Among the transformer-based models, DETR has the lowest mAP and converges more slowly than the other models trained on the same number of images. Notably, DINO performs strongly on the test set owing to its rapid convergence during training, with an mAP only 6.6% lower than that of MSHLB-DETR. However, its GFLOPs are nearly double those of MSHLB-DETR, and its precision is also lower. Transformer-based models capture global context about all objects in an image through self-attention, which can benefit small object detection, although the original DETR still struggles with small objects and later DETR variants were designed to address this weakness. In conclusion, the proposed MSHLB-DETR effectively addresses challenges such as small object detection and the loss of contextual information, making it superior in these respects.

In natural environments, citrus leaf detection must account for complex conditions including heterogeneous lighting, partial occlusion by other plant organs, and cluttered backgrounds. To address these challenges, we evaluated the performance of various models in different scenarios, comparing MSHLB-DETR, YOLOv8, Faster R-CNN, and RT-DETR-R18.

Figure 12 provides visual evidence of MSHLB-DETR’s detection capabilities under challenging orchard conditions. In occlusion-heavy scenarios (Figure 12B), MSHLB-DETR accurately identifies diseased leaves that the other methods miss. Whereas Faster R-CNN, YOLOv8, and RT-DETR-R18 exhibit substantial missed detections and positional inaccuracies for occluded targets, our model maintains precise localization through its context-guided design. This advantage is particularly evident in edge-occlusion cases, where conventional detectors struggle with partial visibility. The model’s proficiency with densely packed small targets (Figure 12A,C) further highlights the benefit of its architecture: where competing methods fail to distinguish individual leaves in clustered regions, MSHLB-DETR’s feature fusion strategy separates and accurately detects small-scale targets. This matters in practice because early disease symptoms often appear on distant or barely visible leaves. MSHLB-DETR also handles multi-scale detection well (Figure 12C), performing consistently across target sizes, whereas the missed detections of YOLOv8 and RT-DETR-R18 under these conditions reveal the limitations of their feature representations. In complex background environments (Figure 12D), MSHLB-DETR detects diseased leaves under mild occlusion and at image edges. Its precision in capturing subtle boundary features allows it to identify minute pathological changes even when leaves partially blend into the background, a capability we attribute to its context-aware architecture. Overall, these visual comparisons show that MSHLB-DETR’s feature fusion and context guidance translate into tangible improvements across all of the challenging conditions examined. Its consistent accuracy under occlusion, dense clustering, multi-scale variation, and complex backgrounds indicates robustness and suitability for real-world agricultural applications.

As illustrated in Figure 13, comparing the heatmaps generated by different models with the corresponding original images reveals significant differences in attention distribution for citrus disease detection. Although MSHLB-DETR, YOLOv8, Faster R-CNN, and RT-DETR-R18 each have their strengths, the heatmaps produced by MSHLB-DETR stand out: they accurately highlight the regions where citrus disease is concentrated, and their attention distribution aligns closely with the actual disease locations in the original images. Compared with YOLOv8 and Faster R-CNN, MSHLB-DETR produces fewer false-positive hotspots, with attention focused more distinctly and centrally on the critical targets, thereby reducing the risk of both false alarms and missed detections.

Although RT-DETR-R18 covers a broader detection area, it is less precise in identifying disease features in complex scenarios. As shown in Figure 12A,C, RT-DETR-R18 struggles to focus on the subtle features of citrus disease within large, complex detection regions. This limitation is likely due to its coarse-grained attention across expansive areas, which may compromise its ability to capture fine-grained disease characteristics and increase the likelihood of erroneous predictions. In contrast, the heatmaps generated by MSHLB-DETR reflect both its high sensitivity to small targets against complex backgrounds and its strong specificity: the model accurately distinguishes the critical features of citrus disease and maintains robust performance even under dense coverage and visual occlusion.
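The section does not state how the heatmaps were produced. Assuming a Grad-CAM-style method (an assumption; other attribution methods exist), a heatmap is the ReLU of a gradient-weighted sum of one layer’s activation maps, as sketched below. Random arrays stand in for a real model’s activations and gradients, and `grad_cam` is a hypothetical helper name.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM map from one layer's activations and gradients, both of shape (C, H, W)."""
    weights = gradients.mean(axis=(1, 2))            # per-channel importance (global-average gradient)
    cam = np.tensordot(weights, activations, axes=1)  # weighted sum over channels -> (H, W)
    cam = np.maximum(cam, 0.0)                        # keep only positive evidence for the target
    peak = cam.max()
    return cam / peak if peak > 0 else cam            # scale to [0, 1] for display


rng = np.random.default_rng(0)
acts = rng.random((16, 20, 20))
grads = rng.standard_normal((16, 20, 20))
heatmap = grad_cam(acts, grads)
print(heatmap.shape, float(heatmap.min()), float(heatmap.max()))
```

In practice, the map would be upsampled to the input resolution and overlaid on the image; a well-localized detector concentrates high values on the diseased regions rather than on background clutter.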

Figure 14 presents the confusion matrices of MSHLB-DETR and the main comparison models on the test dataset across three categories: HLB, Healthy, and Other. MSHLB-DETR achieves the highest classification reliability, with most samples concentrated along the diagonal, particularly for HLB. In contrast, YOLOv8 and RT-DETR-R18 show higher misclassification rates between Healthy and Other leaves, indicating difficulty in handling subtle visual differences. These findings confirm that MSHLB-DETR not only improves detection accuracy, as reported in Table 7, but also distinguishes disease from non-disease classes more reliably, further highlighting its potential for deployment in real-world citrus orchards.
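How a three-class confusion matrix such as those in Figure 14 is tallied, and how per-class recall is read from it, can be sketched as follows. This is a simplified, classification-style illustration over already-matched detections; many detection toolkits also add a background row and column for missed and spurious boxes, which this sketch omits. The labels are the paper’s three categories, but the sample predictions are hypothetical toy data.

```python
def confusion_matrix(y_true: list[str], y_pred: list[str], labels: list[str]) -> list[list[int]]:
    """Rows are true classes, columns are predicted classes, both ordered as in `labels`."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def per_class_recall(matrix: list[list[int]]) -> list[float]:
    """Diagonal count divided by row total: the share of each true class predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


labels = ["HLB", "Healthy", "Other"]
y_true = ["HLB", "HLB", "Healthy", "Other", "Other"]   # hypothetical toy labels
y_pred = ["HLB", "Healthy", "Healthy", "Other", "Healthy"]
cm = confusion_matrix(y_true, y_pred, labels)
print(cm)                    # [[1, 1, 0], [0, 1, 0], [0, 1, 1]]
print(per_class_recall(cm))  # [0.5, 1.0, 0.5]
```

Off-diagonal counts in the Healthy and Other rows correspond to the Healthy/Other confusions discussed above.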

3.6. Discussion

The experimental results presented in this study demonstrate that the proposed MSHLB-DETR model achieves state-of-the-art performance in detecting citrus Huanglongbing under complex orchard conditions. This section provides a comprehensive analysis of these findings and their implications.

The significant performance improvement of MSHLB-DETR over the baseline models can be attributed to its architectural design. The 15.8% increase in mAP50 over RT-DETR-R18 (from 80.2% to 96.0%), achieved while maintaining comparable inference speed, highlights the effectiveness of our approach. This enhancement is particularly notable given the challenging nature of our dataset, in which over 52% of targets are small and occluded leaves are common. The SDRM proves crucial for handling small targets through its space-to-depth transformation and inverted residual mobile block, which mitigate information loss during downsampling while preserving computational efficiency. Meanwhile, CG Block handles occluded leaves effectively by leveraging contextual information from surrounding regions. The combination of these modules enables robust performance in dense orchard environments where traditional detectors often fail.

The development of MSHLB-DETR addresses critical limitations in current agricultural disease detection systems. By maintaining high accuracy under varying lighting conditions, complex backgrounds, and different leaf arrangements, our model shows strong potential for real-world deployment. This capability represents a significant advancement over conventional methods that typically require controlled imaging conditions.

Despite its strong performance, we acknowledge certain limitations in this study. First, the model’s sensitivity to early-stage infections requires further enhancement. The current dataset contains a larger proportion of severely infected leaves with obvious visual features. While this distribution reflects the reality of field scouting priorities and contributes to the model’s high overall accuracy, it may limit the model’s sensitivity to early-stage, mildly infected leaves whose symptoms are subtle and harder to recognize. Second, the model’s generalization capability across different citrus varieties and geographical regions requires further validation. Finally, the current computational requirements, although efficient for a research model, may present challenges for deployment on resource-constrained edge devices. These limitations define clear directions for our future research. Our immediate plan is to collect more samples with mild and moderate infection levels and to annotate symptom severity explicitly. This will enable a stratified evaluation of model performance and direct improvement in early detection capabilities. Concurrently, we will focus on expanding the training dataset to include more diverse growing conditions and citrus cultivars. To address deployment challenges, we will develop optimized versions of MSHLB-DETR through model compression and quantization techniques. Furthermore, the adaptable architecture of our model shows promising potential for extension to other critical agricultural vision tasks, such as pest detection and nutrient deficiency identification.

In summary, MSHLB-DETR represents a significant step forward in agricultural computer vision, demonstrating that specialized architectural designs can effectively address the unique challenges of plant disease detection in complex environments.