Abstract

Accurate real-time detection of blueberry maturity is vital for automated harvesting. However, existing methods often fail under occlusion, variable lighting, and dense fruit distribution, leading to reduced accuracy and efficiency. To address these challenges, we propose BMDNet-YOLO, a lightweight model based on an enhanced YOLOv8n that integrates improved feature extraction, attention-based fusion, and progressive transfer learning to enhance robustness and adaptability. The backbone incorporates a FasterPW module with parallel convolution and point-wise weighting to improve feature extraction efficiency and robustness. A coordinate attention (CA) mechanism in the neck enhances spatial-channel feature selection, while adaptive weighted concatenation ensures efficient multi-scale fusion. The detection head employs a heterogeneous lightweight structure combining group and depthwise separable convolutions to minimize parameter redundancy and boost inference speed. Additionally, a three-stage transfer learning framework (source-domain pretraining, cross-domain adaptation, and target-domain fine-tuning) improves generalization. Experiments on 8250 field-collected and augmented images show that BMDNet-YOLO achieves 95.6% mAP@0.5, 98.27% precision, and 94.36% recall, surpassing existing baselines. This work offers a robust solution for deploying automated blueberry harvesting systems.

1. Introduction

In the modernization of agriculture, intelligent production of berry crops has emerged as a key strategy for enhancing industry competitiveness. Among these, blueberries are a high-value crop whose harvesting efficiency and quality directly determine economic returns [1,2]. Globally, the blueberry industry generates more than USD 9 billion annually and is projected to exceed USD 13 billion by 2031 [3,4]. Harvesting nevertheless remains predominantly manual, which conflicts with the crop’s biological characteristics: blueberries have thin, delicate skins, low resistance to mechanical damage, and a highly concentrated ripening period. Typical workers pick only 25–30 kg of fruit per hour, with expert pickers reaching up to 50 kg/h [5]. Moreover, harvesting delays or fruit drop during peak ripening can cause yield losses of 15–30%, and mechanical harvesting under suboptimal conditions may result in losses exceeding 30% of gross yield [6,7]. These factors substantially reduce profitability. Against this backdrop, automated harvesting systems with environmental perception, intelligent decision-making, and precise manipulation capabilities have become a necessary route to overcoming this industrial bottleneck [8,9,10,11].

The core performance of automated harvesting systems depends on their adaptability to complex field environments and the accuracy of target recognition. Real-time, precise maturity assessment is a critical step for intelligent harvesting decisions, such as determining harvest timing and selecting fruits at optimal ripeness [12,13,14]. However, blueberry recognition in natural settings faces multiple challenges: (i) orchard environments are highly unstructured, where interlaced branches and clustered fruits cause severe occlusion and target overlap; (ii) lighting conditions are highly dynamic, with color temperature variations of up to 3000 K, significantly affecting fruit surface reflectance [15,16,17]; and (iii) fruits at different maturity stages exhibit minimal spectral differences, with RGB space overlap as high as 68% and HSV space separability of only 23% [18,19,20,21]. These factors reduce the detection accuracy of traditional image recognition methods to below 72%, which falls far short of industrial application requirements.

The rapid progress of machine learning and deep learning, which has revolutionized multiple fields [22,23,24,25,26,27,28,29,30,31,32,33], has also driven significant breakthroughs in fruit detection and maturity recognition. Convolutional neural network (CNN)-based object detection methods can automatically learn deep features such as fruit texture and gloss, overcoming the reliance of traditional algorithms on color features [34,35,36]. Current research primarily follows two technical paths: (i) multi-task learning frameworks that share features across tasks such as fruit detection and grading; however, these methods exhibit high model complexity and significant computational overhead, making them unsuitable for resource-constrained, real-time applications [37,38]; and (ii) lightweight optimization strategies based on the YOLO series, which not only inherit the feature learning ability of CNNs but also provide end-to-end detection with real-time speed, superior adaptability to small and dense targets, and better deployment efficiency on embedded devices. These models balance accuracy and efficiency through network compression, attention enhancement, and loss function refinement, offering better suitability for detecting small, clustered fruits [39,40,41,42,43,44]. Studies have demonstrated the adaptability of YOLO architectures for blueberry detection. For example, Ye et al. [45] improved YOLOv9 into CR-YOLOv9, achieving an 11.3% increase in mAP@0.5 and 52 FPS on edge platforms, while Liu et al. [46] integrated the CBAM attention module into YOLOv5, improving mAP by 9%, though performance bottlenecks persist under occlusion and complex lighting.

Taken together, the key challenges in blueberry maturity detection include: (1) occlusion and scale variation caused by clustered fruits; (2) feature confusion resulting from complex backgrounds and dynamic lighting conditions; and (3) color similarity between immature fruits and leaves, which complicates recognition. Existing high-accuracy models typically exhibit large parameter sizes and high computational complexity, making them unsuitable for embedded deployment and real-time applications. Therefore, developing a detection model that balances high precision with lightweight design has become a core technical requirement for automated blueberry harvesting.

The main contributions of this study are summarized as follows:

- A lightweight blueberry maturity detection model, BMDNet-YOLO, is proposed based on an improved YOLOv8n architecture, achieving a balance between high accuracy and low computational cost through deep feature enhancement and structural optimization;

- A FasterPW module is introduced into the backbone to improve feature extraction efficiency, while the neck integrates a coordinate attention (CA) mechanism and an adaptive weighted concatenation module for efficient multi-scale feature fusion;

- The detection head employs a lightweight shared convolution structure based on heterogeneous convolution (HetConv), effectively reducing parameter redundancy and significantly improving inference speed;

- A three-stage transfer learning strategy, comprising source-domain pretraining, domain adaptation, and target-domain fine-tuning, is developed to accelerate convergence and enhance generalization in complex scenarios.

2. Materials and Methods

2.1. Dataset

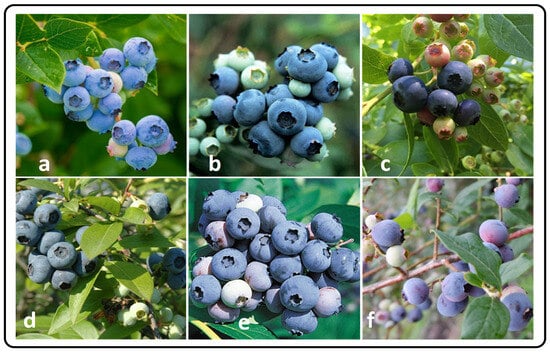

To develop an ecologically representative dataset for blueberry maturity detection, multi-modal image acquisition was conducted at the Duxiushan Blueberry Plantation in Anhui Province, China (116°44′55″ E, 30°34′3″ N). To comprehensively capture morphological variations across the growth cycle, a multi-source heterogeneous data collection strategy was employed. First, full-cycle sampling was carried out during the ripening season, covering three typical lighting periods (morning, noon, and evening) under varying weather conditions, including sunny, cloudy, and backlit scenarios. Second, a six-dimensional scene matrix was constructed using a controlled variable approach, encompassing key factors such as lighting conditions, occlusion levels, spatial distribution, background complexity, camera angles, and focal lengths to ensure data diversity and representativeness. For imaging, an industrial-grade system (resolution: 4000 × 3000 pixels; color depth: 12 bits) was utilized with a polarizing filter set, capturing RAW images under a D65 standard light source, followed by color correction and conversion to the sRGB color space. The final benchmark dataset comprises 1980 annotated samples, with representative examples shown in Figure 1.

Figure 1. Examples of blueberries under different complex scenarios: (a) sunny, (b) cloudy, (c) backlight, (d) leaf occlusion, (e) densely clustered fruits, and (f) sparsely distributed fruits.

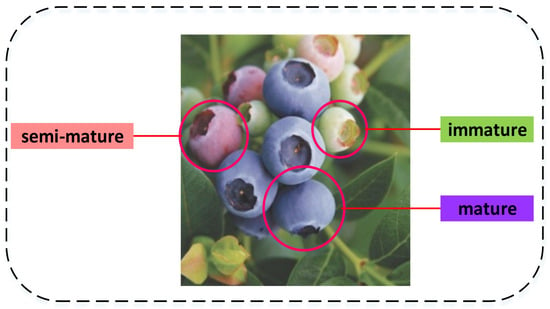

Based on morphological differences during blueberry fruit development, a three-level maturity classification system was established. Fruits at the mature stage are large in size and exhibit deep purple to blue-black coloration; those at the ripening stage are slightly smaller, displaying transitional hues from pink to light purple; and immature fruits are small, bright green, and firm in texture. This grading system was visualized using pseudo-color mapping and validated through cross-analysis with a spectroradiometer (ASD FieldSpec 4, Analytical Spectral Devices, Inc., Boulder, CO, USA) and a texture analyzer (TA.XT Plus, Stable Micro Systems Ltd., Surrey GU7 1YL, UK), achieving a classification accuracy of 92.7%. These results provide a reliable phenotypic foundation for developing subsequent intelligent sorting algorithms. Representative illustrations of different maturity stages are shown in Figure 2.

Figure 2. Diagram of blueberry maturity levels.

Based on the collected raw images, a blueberry ripeness visual analysis dataset (BRVAD) was constructed. Images were first standardized to 96 × 96 pixels to reduce storage requirements, and annotated using the LabelImg tool into three categories: “mature” (fully ripe), “semi-mature” (partially ripe), and “immature” (unripe). Multi-dimensional data augmentation, combining random geometric transformations with image enhancement and applying affine transformations to adjust annotation coordinates, expanded the dataset to 8250 valid samples. The dataset was then split into training, validation, and testing sets in a 7:2:1 ratio to ensure sufficient training and independent evaluation. The sample distribution is summarized in Table 1.
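The coordinate adjustment that accompanies geometric augmentation can be illustrated with a minimal sketch. It assumes boxes are stored as pixel corner coordinates and that the transform is given as a 2 × 3 affine matrix; the function names and the example box are hypothetical, and the actual augmentation pipeline used for BRVAD is not specified in the text.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels
Affine = Tuple[Tuple[float, float, float], Tuple[float, float, float]]  # 2x3 matrix


def transform_box(box: Box, m: Affine) -> Box:
    """Map the four box corners through the affine matrix, then re-fit an axis-aligned box."""
    x0, y0, x1, y1 = box
    corners = [(x0, y0), (x1, y0), (x1, y1), (x0, y1)]
    xs, ys = [], []
    for x, y in corners:
        xs.append(m[0][0] * x + m[0][1] * y + m[0][2])
        ys.append(m[1][0] * x + m[1][1] * y + m[1][2])
    return (min(xs), min(ys), max(xs), max(ys))


def horizontal_flip(width: float) -> Affine:
    """Affine matrix for a left-right flip of an image that is `width` pixels wide."""
    return ((-1.0, 0.0, width), (0.0, 1.0, 0.0))


# Hypothetical annotation on a 96 x 96 image.
box = (10.0, 20.0, 30.0, 50.0)
flipped = transform_box(box, horizontal_flip(96.0))
print(flipped)  # (66.0, 20.0, 86.0, 50.0)
```

Transforming all four corners and re-fitting the box keeps the annotation valid under rotation and shear as well, at the cost of a slightly looser box for non-axis-aligned transforms.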

Table 1. Distribution of Blueberry Dataset.

2.2. Baseline Model

This study adopts the YOLO series as the baseline framework for blueberry maturity detection. YOLO models employ a single-stage detection paradigm, performing object classification and bounding box regression within a single forward pass and thereby achieving high inference speed without compromising accuracy, an essential requirement for real-time operation on agricultural edge devices [47,48,49,50,51]. Additionally, the series leverages multi-scale feature fusion to capture subtle morphological and color variations of blueberries, which measure only 0.8–1.5 cm in diameter, and has been widely validated across agricultural detection tasks for its robustness and adaptability. YOLOv8 [52,53,54], an advanced single-stage detection algorithm, builds upon YOLOv5 and YOLOv7 with key architectural upgrades, delivering higher accuracy and faster inference for real-time applications. It adopts an end-to-end approach, processing the entire image in one pass using a CSPDarknet-style backbone for feature extraction, in which the Cross-Stage Partial (CSP) structure reduces redundant computation [48] and a Spatial Pyramid Pooling-Fast (SPPF) module aggregates multi-scale context. The detection head introduces a decoupled design, independently predicting class probabilities and bounding box coordinates to optimize feature learning, and integrates an anchor-free mechanism to directly estimate center offsets and width-height values, simplifying training and improving small-object detection. For feature fusion, YOLOv8 employs a PANet structure with bidirectional path aggregation to enhance multi-resolution feature complementarity. Bounding box regression is optimized using CIoU loss, which jointly considers overlap, center distance, and aspect ratio, thereby improving localization accuracy. Through these innovations, YOLOv8 achieves industrial-grade real-time performance, delivering up to 150 FPS at 96 × 96 resolution, while significantly enhancing robustness for dense, small, and occluded targets.
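To make the three ingredients of the CIoU criterion concrete (overlap, center distance, and aspect ratio), the following is a minimal sketch of the commonly published CIoU formulation. The function name `ciou` and the corner-format boxes are assumptions for illustration; this is not claimed to be the exact routine used inside YOLOv8.

```python
import math


def ciou(box_a, box_b, eps=1e-9):
    """Complete IoU between two boxes given as (x_min, y_min, x_max, y_max)."""
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    wa, ha = ax1 - ax0, ay1 - ay0
    wb, hb = bx1 - bx0, by1 - by0

    # Overlap term: plain intersection over union.
    iw = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    ih = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = iw * ih
    union = wa * ha + wb * hb - inter
    iou = inter / (union + eps)

    # Center-distance term: squared center distance over squared diagonal
    # of the smallest box enclosing both inputs.
    rho2 = ((ax0 + ax1) / 2 - (bx0 + bx1) / 2) ** 2 + ((ay0 + ay1) / 2 - (by0 + by1) / 2) ** 2
    cw = max(ax1, bx1) - min(ax0, bx0)
    ch = max(ay1, by1) - min(ay0, by0)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio term and its trade-off weight.
    v = (4 / math.pi ** 2) * (math.atan(wb / (hb + eps)) - math.atan(wa / (ha + eps))) ** 2
    alpha = v / (1 - iou + v + eps)

    return iou - rho2 / c2 - alpha * v


def ciou_loss(box_a, box_b):
    """Loss is one minus CIoU, so perfectly matching boxes give a loss near zero."""
    return 1.0 - ciou(box_a, box_b)
```

Unlike plain IoU, the value stays informative for non-overlapping boxes: two disjoint unit squares yield a negative CIoU driven by the center-distance term, which still provides a gradient toward the target.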

To meet the stringent requirements for model lightweighting and real-time performance in blueberry maturity detection under natural conditions, this study conducted a systematic evaluation of the YOLOv8 series, which includes five variants: YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, and YOLOv8x [55,56,57,58,59], with parameters and computational complexity increasing sequentially. Among them, YOLOv8n, as the lightweight version, achieves an optimal balance between accuracy and speed through depthwise separable convolutions and channel reordering, making it particularly suitable for deployment on edge devices. Therefore, YOLOv8n was selected as the baseline model in this study, upon which structural improvements were introduced in feature extraction and feature fusion. Based on these enhancements, we propose BMDNet-YOLO (Blueberry Maturity Detection Network based on YOLO), aiming to improve detection accuracy in complex backgrounds without increasing model parameters or computational load.

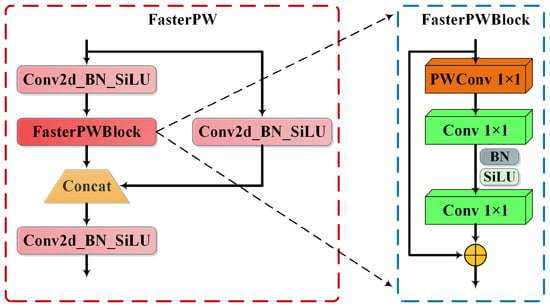

2.3. Backbone Optimization: FasterPW

To accelerate neural network inference, most existing studies focus on reducing floating-point operations (FLOPs). However, lower FLOPs do not always translate into reduced latency, because the achieved floating-point throughput (FLOPS) is often low [60]. To address this issue, the FasterNeXt network introduced Partial Convolution (PConv), which improves computational efficiency while reducing FLOPs, thereby shortening inference delay without compromising accuracy. Nonetheless, PConv still suffers from limitations, including restricted stride, insufficient receptive field, difficulty in determining convolution ratios, and additional computational overhead [61].

To overcome these drawbacks, this study replaces PConv in FasterNeXt with Pointwise Convolution (PWConv) [62], constructing a FasterPW architecture. PWConv is a special multi-channel convolution with a fixed kernel size of 1 × 1. Let the input feature map be $X$ of size $H \times W \times C_{in}$, the output feature map be $Y$ of size $H \times W \times C_{out}$, and the kernel be $\mathbf{W}$ of size $1 \times 1 \times C_{in} \times C_{out}$. The PWConv operation is expressed as:

$$Y_{i,j,k} = \sum_{c=1}^{C_{in}} X_{i,j,c}\,\mathbf{W}_{c,k} + b_k$$

where $X_{i,j,c}$ is the input pixel at position $(i, j)$ in channel $c$, $\mathbf{W}_{c,k}$ is the weight connecting input channel $c$ to output channel $k$, and $b_k$ is the bias term. Since PWConv involves no spatial downsampling, it preserves spatial resolution while performing channel-wise weighted summation for feature mapping. Its computational complexity depends only on the number of input/output channels and the feature map size, offering significantly higher efficiency compared to traditional convolutions (complexity proportional to kernel size squared).
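The channel-wise weighted summation performed by a 1 × 1 convolution can be sketched in plain Python. The nested-list layout (height, width, channels), the function names, and the toy values below are illustrative assumptions; a real implementation would use a tensor library.

```python
def pointwise_conv(x, weight, bias):
    """Apply a 1x1 convolution.

    x:      H x W x C_in nested lists
    weight: C_in x C_out nested lists
    bias:   list of length C_out
    Each output pixel is a weighted sum over the input channels at the same position.
    """
    c_out = len(bias)
    return [
        [
            [
                sum(pixel[c] * weight[c][k] for c in range(len(pixel))) + bias[k]
                for k in range(c_out)
            ]
            for pixel in row
        ]
        for row in x
    ]


def conv_macs(h, w, c_in, c_out, k):
    """Multiply-accumulate count of a k x k convolution that preserves spatial size."""
    return h * w * c_in * c_out * k * k


x = [[[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]]       # H=1, W=2, C_in=3
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # C_in=3, C_out=2
bias = [0.5, -0.5]
y = pointwise_conv(x, weight, bias)
print(y)  # [[[4.5, 4.5], [0.5, 0.5]]]
```

The spatial shape of `y` matches that of `x`, and `conv_macs` makes the kernel-size-squared scaling explicit: a 3 × 3 convolution with the same channel counts costs nine times as many multiply-accumulates as the pointwise one.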

Beyond reduced complexity, PWConv acts as a local channel context aggregator, enabling cross-channel interactions at each spatial location and strengthening inter-channel correlation. For blueberry images, PWConv facilitates deep fusion between color and texture channels, thereby improving feature discriminability.

Based on this, the proposed FasterPW architecture (Figure 3) adopts a modular design comprising three standard convolutional layers and multiple FasterPWBlock modules, forming a lightweight feature extraction network. The core design principles include: (1) applying PWConv within FasterPWBlock for efficient channel interaction; (2) combining multiple FasterPWBlocks with standard convolutional modules (ConvModules) to build a hierarchical structure for robust spatial feature capture.

Figure 3. The construction process of the FasterPW structure.

Finally, the YOLOv8 backbone was modified by replacing its C2f structure with the lightweight FasterPW modules, effectively reducing parameter count, FLOPs, and memory access cost while maintaining efficient feature extraction and improving network lightweighting.

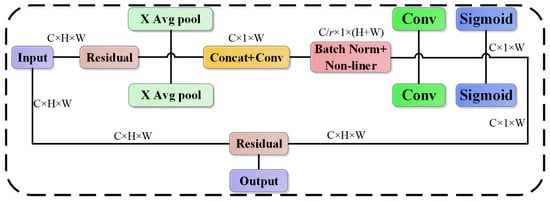

2.4. Coordinate Attention Mechanism

To address insufficient spatial feature representation caused by concentrated target-scale distribution, adjacency or overlap between fruits, and leaf occlusion in complex scenes within the blueberry dataset, this study integrates the Coordinate Attention (CA) mechanism [63] into the neck of the feature extraction network to enhance feature focusing. As shown in Figure 4, CA embeds precise positional information during channel attention weight generation, achieving joint channel–spatial encoding and enabling spatially-aware response modulation. This spatially sensitive attention mechanism strengthens the model’s ability to delineate boundary features in overlapping regions and applies a dynamic weighting strategy to enhance local features. It is particularly effective in densely clustered fruit scenarios, significantly improving edge information capture and mitigating false negatives and false positives caused by spatial competition.

Figure 4. CA attention mechanism. Note: C is the number of channels; H is the image height; W is the image width; r is the reduction factor.

The CA mechanism operates through two steps: coordinate information embedding and attention generation. First, the input feature map $x$ undergoes average pooling along each spatial direction to capture directional global information along the horizontal and vertical axes, expressed as:

$$z_c^h(h) = \frac{1}{W}\sum_{0 \le i < W} x_c(h, i), \qquad z_c^w(w) = \frac{1}{H}\sum_{0 \le j < H} x_c(j, w)$$

These pooled descriptors are concatenated to form a joint spatial encoding feature, which is then processed by a convolution module for deep feature refinement. Specifically, a $1 \times 1$ convolution $F_1$ compresses channels by a reduction ratio $r$ (to $1/r$ of the original), followed by batch normalization (BN) and a ReLU activation $\delta$, producing an enhanced spatial-aware feature map $f$, formalized as:

$$f = \delta\left(\mathrm{BN}\left(F_1\left([z^h, z^w]\right)\right)\right)$$

Next, $f$ is split into two tensors $f^h$ and $f^w$ along the spatial dimension. Each tensor is processed using a $1 \times 1$ convolution to generate feature maps $F_h(f^h)$ and $F_w(f^w)$, which are restored to the original channel dimension. After applying the sigmoid function $\sigma$, attention weights along the two directions are computed as:

$$g^h = \sigma\left(F_h(f^h)\right), \qquad g^w = \sigma\left(F_w(f^w)\right)$$

Finally, these attention weights are fused with the original feature map through element-wise multiplication, yielding the output feature map $Y$ with embedded coordinate attention:

$$y_c(i, j) = x_c(i, j) \times g_c^h(i) \times g_c^w(j)$$
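The two ends of the coordinate attention computation, directional pooling and the final re-weighting, can be sketched for a single channel. As a loud simplification, the learned convolution, normalization, and channel-reduction steps in the middle are replaced here by an identity mapping, so the gates are simply the sigmoid of the pooled values; all names are hypothetical.

```python
import math


def directional_pool(x):
    """Average one H x W channel along each axis.

    Returns (z_h, z_w): z_h has one value per row (averaged over width),
    z_w has one value per column (averaged over height).
    """
    h, w = len(x), len(x[0])
    z_h = [sum(row) / w for row in x]
    z_w = [sum(x[i][j] for i in range(h)) / h for j in range(w)]
    return z_h, z_w


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def apply_coordinate_gates(x, g_h, g_w):
    """Scale each position (i, j) by the row gate g_h[i] and the column gate g_w[j]."""
    return [[x[i][j] * g_h[i] * g_w[j] for j in range(len(x[0]))] for i in range(len(x))]


x = [[1.0, 3.0], [5.0, 7.0]]
z_h, z_w = directional_pool(x)   # z_h = [2.0, 6.0], z_w = [3.0, 5.0]

# Simplification: identity in place of the learned transforms between pooling and gating.
g_h = [sigmoid(v) for v in z_h]
g_w = [sigmoid(v) for v in z_w]
y = apply_coordinate_gates(x, g_h, g_w)
```

Because each gate depends on a whole row or a whole column, the re-weighting carries positional information along both axes, which is what distinguishes this scheme from attention built on a single globally pooled value per channel.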

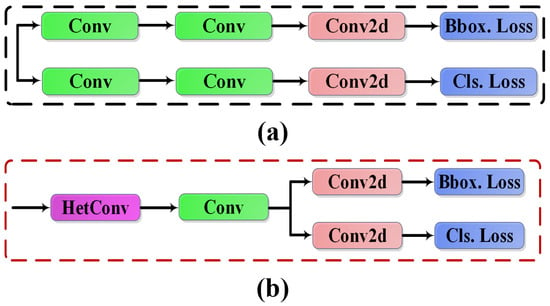

2.5. Lightweight Shared Convolution Head Based on HetConv

The detection head in YOLOv8 adopts the decoupled architecture of YOLOX, which introduces significant parameter redundancy and computational overhead. To address the excessive parameterization of the detection head, we propose a lightweight shared convolution head based on Heterogeneous Kernel Convolution (HetConv) [64]. HetConv integrates $3 \times 3$ and $1 \times 1$ kernels to form a hybrid computation mechanism. Its core design introduces a tunable parameter $P$ to divide convolution operations into multiple parallel branches. This heterogeneous configuration adjusts receptive field combinations, substantially reducing FLOPs and parameter count while preserving critical feature extraction capabilities. By combining the spatial modeling strength of $3 \times 3$ kernels with the channel interaction efficiency of $1 \times 1$ kernels, HetConv achieves a favorable trade-off between performance and efficiency, making it an effective solution for lightweight network design.

In the improved structure, feature maps first pass through a shared convolution layer and then through independent Conv2d modules for task-specific processing, followed by separate computation of classification loss (Cls Loss) and bounding box regression loss (Bbox Loss). Figure 5 illustrates the comparison between the original YOLOv8 head and the improved design. Through parameter sharing between branches, the modified head significantly reduces model complexity and parameter count while maintaining detection performance, thereby improving inference speed and overall detection accuracy for blueberry targets.

Figure 5. (a) The head of YOLOv8; (b) the head of BMDNet-YOLO.

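In standard convolution, all kernels share the same size. Assuming a feature map of spatial size $D \times D$ (preserved by the convolution), input channels $M$, kernel size $K \times K$, and output channels $N$, the computational cost for standard convolution is:

$$FL_S = D \times D \times M \times N \times K \times K$$

To quantify HetConv efficiency, let the parameter $P$ determine how the kernels in each filter are divided between the two kernel types. In HetConv, $1/P$ of the kernels adopt size $K \times K$, and the remaining $(1 - 1/P)$ use size $1 \times 1$. For HetConv with part $P$, the computational cost for kernels of size $K \times K$ is:

$$FL_K = \frac{D \times D \times M \times N \times K \times K}{P}$$

The cost for the remaining $1 \times 1$ kernels is:

$$FL_1 = D \times D \times N \times \left(M - \frac{M}{P}\right)$$

Thus, the total cost for HetConv is:

$$FL_{HC} = FL_K + FL_1$$

The ratio of HetConv to standard convolution is:

$$R_{HetConv} = \frac{FL_{HC}}{FL_S} = \frac{1}{P} + \frac{1 - 1/P}{K^2}$$

When $P = 1$, HetConv degenerates to standard convolution. In this study, $P = 4$ and $K = 3$. Under these settings, $R_{HetConv} = 1/4 + (3/4)/9 = 1/3$, so the computational cost of HetConv is approximately 33% of that of standard convolution, demonstrating a significant reduction in complexity and providing theoretical support for constructing efficient lightweight convolutional neural networks.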
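The cost ratio can be checked numerically. The sketch below assumes the standard HetConv cost model, in which a fraction 1/P of the kernels are K × K and the rest are 1 × 1, and it assumes P = 4 and K = 3, values chosen here because they reproduce the stated figure of roughly 33%. Function names and the layer dimensions are hypothetical.

```python
def standard_conv_cost(d, m, n, k):
    """Multiply-accumulate count of a standard k x k convolution.

    d: spatial size of the (square) output map, m: input channels, n: output channels.
    """
    return d * d * m * n * k * k


def hetconv_cost(d, m, n, k, p):
    """Cost when 1/p of the kernels are k x k and the remaining ones are 1 x 1."""
    kxk_part = d * d * m * n * k * k / p
    one_by_one_part = d * d * n * (m - m / p)
    return kxk_part + one_by_one_part


def hetconv_ratio(k, p):
    """Closed-form ratio of HetConv cost to standard convolution cost."""
    return 1 / p + (1 - 1 / p) / (k * k)


# Assumed setting: P = 4, K = 3 (layer sizes below are arbitrary).
ratio_closed_form = hetconv_ratio(k=3, p=4)
ratio_from_counts = hetconv_cost(40, 64, 128, 3, 4) / standard_conv_cost(40, 64, 128, 3)
print(round(ratio_closed_form, 4), round(ratio_from_counts, 4))  # 0.3333 0.3333
```

The ratio is independent of the feature map size and channel counts, which is why a single pair (P, K) is enough to characterize the saving; with P = 1 the function returns exactly 1.0, matching the degenerate case.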

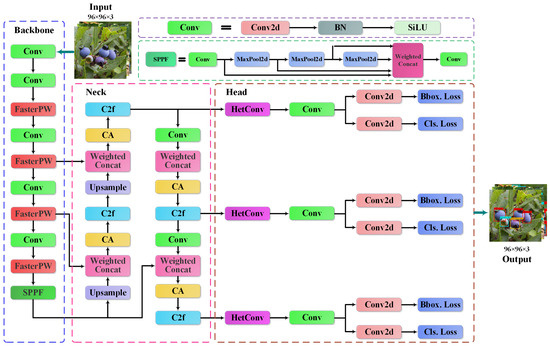

2.6. Proposed Model

The overall architecture of the improved blueberry maturity detection model, BMDNet-YOLO, is illustrated in Figure 6. The model consists of four functional modules: input, backbone, neck, and detection head, forming an end-to-end feature extraction and detection framework. In the backbone, the original C2f module is replaced with the FasterPW module, which leverages pointwise convolution and channel reorganization to maintain multi-scale feature extraction capability while significantly reducing computational complexity and improving inference efficiency. To address illumination variation, leaf occlusion, and background interference in natural scenes, the CA mechanism is introduced into the neck, jointly modeling spatial and channel information to achieve precise attention on target regions and enhance feature representation of color gradients and texture details related to maturity. For improved multi-scale feature fusion, the traditional Concat operation is replaced by a Weighted Concat module, which applies dynamic channel-weighting to adaptively integrate shallow and deep features, assigning weights based on feature importance to strengthen morphological representations across different maturity stages. The detection head adopts a shared convolution structure based on HetConv. This design combines multi-scale kernels within a parallel parameter-sharing framework, reducing parameter redundancy while preserving multi-task inference performance, thereby improving both detection accuracy and model lightweighting.

Figure 6. Structural diagram of BMDNet-YOLO for blueberry maturity detection.

In the processing pipeline, the input module first applies adaptive histogram equalization and normalization; during training, data augmentation additionally generates multi-scale samples. The backbone then extracts hierarchical features via the FasterPW module, compressing redundant information and reorganizing channel dimensions for efficient encoding. In the neck, CA enhances spatial-channel attention, guiding the model to focus on fruit core regions, while the Weighted Concat module adaptively fuses multi-scale features by dynamically adjusting feature importance. Finally, the detection head performs joint modeling through HetConv-based parameter sharing and heterogeneous convolution, further reducing computational cost without compromising accuracy. This architecture significantly accelerates inference while maintaining high detection precision, fulfilling real-time maturity grading requirements for agricultural automation.

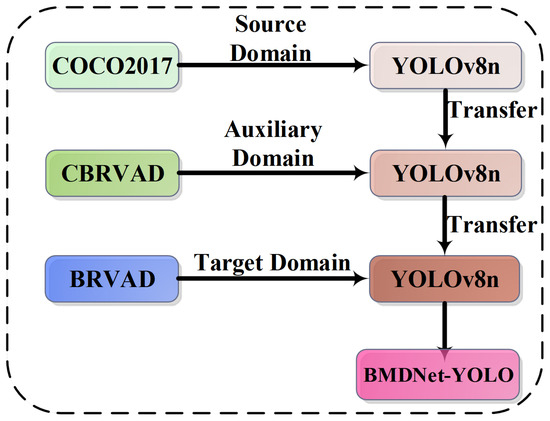

2.7. Transfer Learning

To enhance accuracy and generalization in cross-domain image recognition while reducing training time, this study introduces a three-stage transfer learning framework for systematic optimization of the detection model. This framework employs a weight knowledge transfer strategy to migrate the feature encoding capability of pretrained models to the target network, thereby accelerating convergence, improving stability, and mitigating overfitting through parameter regularization. The technical pipeline is illustrated in Figure 7 and consists of three progressive stages.

Stage 1: Source-Domain Pretraining. A large-scale generic object detection dataset, COCO2017 (http://cocodataset.org/#download, accessed on 2 June 2025), is used to extensively pretrain the lightweight YOLOv8n model, enabling multi-scale visual feature learning and establishing a universal feature representation paradigm. The pretrained weights obtained at this stage provide generalized feature encoding capacity for cross-domain transfer.

Stage 2: Domain Adaptation Enhancement. To bridge the domain gap, a coffee ripeness visual analysis dataset (CBRVAD) was constructed using the same acquisition strategy and imaging devices as the BRVAD dataset. Data were collected at the Puer Aini Manor in Simao District, Puer City, Yunnan Province (101°24′15.404″ E, 22°38′54.110″ N), resulting in 2200 multimodal images of coffee fruits at different ripeness stages. All images were resized to a uniform resolution; representative samples are shown in Figure 8. This resizing strategy was adopted to ensure computational efficiency and consistency; it preserves the essential color, texture, and shape features required for ripeness classification while reducing redundancy and mitigating overfitting. Comparative experiments confirmed that the resizing process did not cause significant accuracy degradation, but instead improved training stability and efficiency. Continued training on CBRVAD strengthens the model’s ability to capture hierarchical phenotypic features in plant structures.

Stage 3: Target-Domain Fine-Tuning. The final adaptation stage involves fine-tuning the model on the blueberry ripeness detection dataset using a differential learning rate strategy. This step optimizes feature decoupling and task-specific adaptation, enabling the detector to accurately capture the spectral and morphological characteristics of blueberry fruits, ultimately yielding a specialized model for blueberry maturity detection.

The proposed three-stage transfer learning paradigm follows a progressive optimization path of “generic feature learning → domain-specific enhancement → target-task adaptation.” This approach effectively alleviates data scarcity in the target domain while preserving network lightweighting, achieving a balance between detection accuracy and deployment efficiency.
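The progressive hand-off of weights between stages, and one common form of a differential learning rate, can be sketched as follows. The stage and dataset names follow the text; the parameter group names, the learning rate, and the decay factor are hypothetical placeholders, since the text does not report these values.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Stage:
    name: str
    dataset: str
    init_from: Optional[str]  # name of the stage whose weights are loaded, if any


# Stage and dataset names follow the text; everything else is illustrative.
PIPELINE: List[Stage] = [
    Stage("source_pretraining", "COCO2017", None),
    Stage("domain_adaptation", "CBRVAD", "source_pretraining"),
    Stage("target_fine_tuning", "BRVAD", "domain_adaptation"),
]


def is_progressive(pipeline: List[Stage]) -> bool:
    """Check that each stage after the first starts from the stage directly before it."""
    if not pipeline or pipeline[0].init_from is not None:
        return False
    return all(cur.init_from == prev.name for prev, cur in zip(pipeline, pipeline[1:]))


def differential_lrs(groups: List[str], head_lr: float, decay: float) -> Dict[str, float]:
    """Assign smaller learning rates to earlier (more generic) parameter groups.

    `groups` is ordered from the input side to the output side; the last group
    receives `head_lr`, and each earlier group is scaled down by `decay`.
    """
    last = len(groups) - 1
    return {name: head_lr * decay ** (last - i) for i, name in enumerate(groups)}


# Hypothetical values: the text does not state the learning rates used.
lrs = differential_lrs(["backbone", "neck", "head"], head_lr=1e-3, decay=0.1)
print(is_progressive(PIPELINE), lrs)
```

Giving the earliest layers the smallest step keeps the generic features learned in the first two stages largely intact while the task-specific layers adapt more freely to the blueberry data.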

Figure 7. Transfer learning pipeline.

Figure 8. Sample images from the coffee dataset.

3. Experiments and Results

3.1. Model Training Environment and Parameters Setup

The experimental platform was built on the PyTorch 2.0 deep learning framework, running on Windows 11 (64-bit) with an Intel® Core™ i7-14700 processor (Intel Corporation, Santa Clara, CA, USA) and an NVIDIA GeForce RTX 4090 (NVIDIA Corporation, Santa Clara, CA, USA). To enhance training efficiency and convergence performance, the Adam optimizer was employed with a momentum parameter of 0.9 and a fixed weight decay of 0. This optimizer dynamically adjusts the learning rate to alleviate potential gradient vanishing during early training, ensuring greater stability in parameter updates. A two-phase training strategy was adopted: during the first 50 epochs, frozen training (batch size = 16) was applied by freezing backbone parameters to stabilize feature extraction; during the subsequent 50 epochs, the network entered an unfreezing stage (batch size = 8), enabling all parameters to update for global optimization. This progressive training mechanism balances stability and adaptability, accelerates overall convergence, and significantly improves final model performance.
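The two-phase schedule can be written down as a small lookup. The epoch counts and batch sizes come from the text; the training-set size of 5775 images is an assumption obtained by applying the 7:2:1 split exactly to 8250 samples, and the function names are hypothetical.

```python
import math

FROZEN_EPOCHS = 50     # backbone frozen, batch size 16
UNFROZEN_EPOCHS = 50   # all parameters trainable, batch size 8
TOTAL_EPOCHS = FROZEN_EPOCHS + UNFROZEN_EPOCHS


def phase_for_epoch(epoch: int) -> dict:
    """Return the training configuration for a 0-indexed epoch."""
    if not 0 <= epoch < TOTAL_EPOCHS:
        raise ValueError(f"epoch must be in [0, {TOTAL_EPOCHS}), got {epoch}")
    if epoch < FROZEN_EPOCHS:
        return {"backbone_frozen": True, "batch_size": 16}
    return {"backbone_frozen": False, "batch_size": 8}


def total_steps(num_train_images: int) -> int:
    """Number of optimizer steps over the whole schedule (last partial batch kept)."""
    return sum(
        math.ceil(num_train_images / phase_for_epoch(epoch)["batch_size"])
        for epoch in range(TOTAL_EPOCHS)
    )


# Assumption: 70% of 8250 images, i.e. 5775 training samples.
print(total_steps(5775))  # 54150
```

Halving the batch size in the unfrozen phase doubles the number of updates per epoch, so roughly two thirds of all optimizer steps occur after the backbone is released.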

3.2. Evaluation Metrics

To quantitatively evaluate model performance, Precision, Recall, and mean Average Precision at IoU 0.5 (mAP@0.5) were adopted as evaluation metrics [65,66]. Precision measures the proportion of correctly identified positive samples among all predicted positives, reflecting the model’s accuracy in minimizing false positives. Recall denotes the proportion of actual positive samples correctly detected, indicating the model’s ability to reduce false negatives. mAP@0.5, a key metric in object detection, represents the mean of Average Precision (AP) across all classes when the Intersection over Union (IoU) threshold is set to 0.5.

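$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

$$AP = \int_0^1 P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $TP$ denotes true positives; $FN$ denotes false negatives; $FP$ denotes false positives; $P(R)$ is precision as a function of recall; and $N$ denotes the number of classes.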
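These metrics can be computed with a few lines of Python. The sketch below uses the widely used all-point interpolation for the area under the precision-recall curve, which is an assumption about the evaluation protocol; the counts and curve points are made-up toy values, not results from this study.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of actual positives that are detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def average_precision(recalls, precisions):
    """Area under the precision-recall curve with all-point interpolation.

    `recalls` must be sorted in increasing order, with `precisions` aligned to it.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """Mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# Toy numbers for illustration only.
print(precision(tp=90, fp=10))                          # 0.9
print(recall(tp=90, fn=30))                             # 0.75
print(average_precision([0.5, 1.0], [1.0, 0.5]))        # 0.75
```

For mAP@0.5, a prediction counts as a true positive only if its IoU with a ground-truth box of the same class is at least 0.5; the per-class AP values are then averaged over the three maturity classes.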

3.3. Comparative Performance Analysis of Different Models

To verify the superiority of BMDNet-YOLO in blueberry maturity detection, comparative experiments were conducted against four representative object detection models: Faster R-CNN [66], SSD [67], YOLOv5s [68], and YOLOv8n [69]. The results are summarized in Table 2.

Table 2. Performance comparison of different models.

From a core detection perspective, BMDNet-YOLO achieved the highest performance across all metrics. Specifically, mAP@0.5 reached 95.6%, representing improvements of 8.8%, 11.3%, 7.5%, and 3.6% over Faster R-CNN, YOLOv5s, SSD, and YOLOv8n, respectively. Precision and recall rose to 98.27% and 94.36%, respectively, securing the top rank among all models. In complex scenarios, the proposed model significantly reduced missed detections of small targets and false detections of overlapping fruits by optimizing multi-scale feature fusion, thereby achieving both accuracy and robustness improvements over the YOLOv8n baseline.

In terms of lightweight design, BMDNet-YOLO introduced lightweight attention modules and dynamic channel-weighting strategies, reducing the parameter count to 1.845 M and the computational cost to 5.124 GFLOPs, a reduction of 41.5% relative to YOLOv8n, while maintaining detection accuracy. Memory usage and computational cost were notably lower than those of all comparison models, ensuring clear advantages for deployment on mobile and edge devices. For real-time performance, inference time was reduced to 19.7 ms, an 11.7% improvement over YOLOv8n, demonstrating substantial speed gains without compromising accuracy. These results indicate that BMDNet-YOLO eases the trade-off between lightweight design and high accuracy, offering a practical solution for mobile-based blueberry quality detection with real-time and precise grading capabilities.
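The latency figure can be related to throughput with simple arithmetic. The sketch below assumes that 19.7 ms is the per-image latency with images processed one at a time, and that the 11.7% improvement means the latency is 11.7% lower than the baseline; under those assumptions it derives an approximate frame rate and the baseline latency the percentage implies. These are derived illustrations, not reported measurements.

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Frames per second when images are processed one at a time."""
    return 1000.0 / latency_ms


def implied_baseline(value: float, relative_reduction: float) -> float:
    """Baseline value that, reduced by `relative_reduction`, gives `value`."""
    return value / (1.0 - relative_reduction)


fps = fps_from_latency_ms(19.7)                    # about 50.8 frames per second
baseline_latency = implied_baseline(19.7, 0.117)   # about 22.3 ms

print(round(fps, 1), round(baseline_latency, 1))   # 50.8 22.3
```

Batched inference or pipelined pre- and post-processing would change the relationship between latency and throughput, so the frame rate above should be read as a single-stream estimate.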

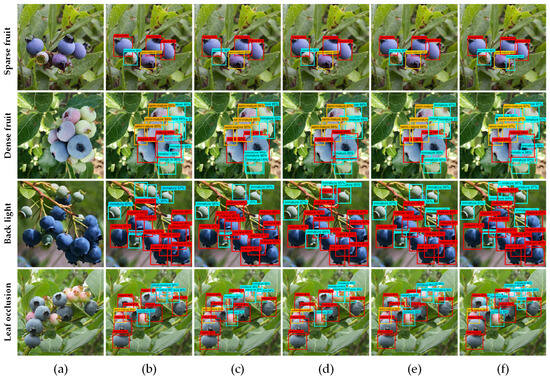

To further assess model robustness, visualization experiments were conducted on the blueberry test set under typical conditions, including sparse and dense fruit distribution, backlighting, and leaf occlusion (Figure 9). Results show that BMDNet-YOLO achieved complete detection with no missed, false, or duplicate detections across all scenarios, whereas other models exhibited varying degrees of error. Specifically, Faster R-CNN and SSD showed duplicate bounding boxes under backlighting and dense fruit conditions, while Faster R-CNN, YOLOv5s, SSD, and YOLOv8n all missed detections when fruits were partially occluded by leaves. Two major factors contributed to these failures in traditional models: (i) color similarity between immature fruits and background foliage, leading to class confusion, and (ii) insufficient localization accuracy for occluded targets. In contrast, BMDNet-YOLO effectively mitigated these issues through enhanced multi-scale feature fusion and attention mechanisms, improving target localization and feature discrimination in complex scenarios.

Figure 9. Detection performance comparison under different scenarios: (a) Original images; (b) Faster R-CNN; (c) SSD; (d) YOLOv5s; (e) YOLOv8n; (f) BMDNet-YOLO.

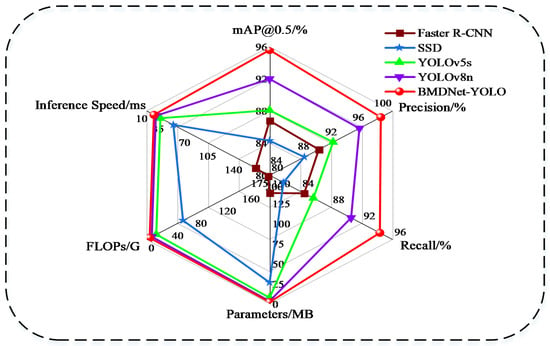

Additionally, a radar chart (Figure 10) provides a multi-dimensional visualization comparing BMDNet-YOLO with Faster R-CNN, SSD, YOLOv5s, and YOLOv8n. The results indicate that BMDNet-YOLO consistently leads in accuracy, inference speed, and parameter efficiency. Notably, in challenging conditions (such as dynamic lighting, overlapping targets, and small-object detection), the proposed model achieved simultaneous improvements in detection confidence and inference speed, supporting its engineering applicability as a lightweight real-time detection framework. Because it maintains high accuracy while reducing parameters and computational complexity, BMDNet-YOLO shows strong potential for deployment on resource-constrained embedded devices, offering a robust, low-latency detection solution for agricultural harvesting robots and other real-world applications.

Figure 10.

Multi-dimensional performance comparison of different models.

3.4. Comparative Experiments of Different Attention Mechanisms

To systematically evaluate the impact of the Coordinate Attention (CA) mechanism on YOLOv8n performance in blueberry maturity detection, comparative experiments were conducted on the BRVAD dataset. A YOLOv8n-CA detection framework was constructed by embedding the CA module into the neck of the network. Additionally, three mainstream attention mechanisms (SE [70], CBAM [71], and ECA [72]) were integrated into YOLOv8n to build corresponding variants for quantitative comparison. The results are summarized in Table 3.

Table 3.

Performance comparison of different attention mechanisms.

The results indicate that YOLOv8n-CA improves detection performance with negligible change in model size and computational cost. Compared with the baseline YOLOv8n, mAP@0.5 increased by 1.74%, precision by 2.23%, and recall by 3.34%. The precision gain corresponds to fewer false positives, and the recall gain to fewer missed detections. Among the four attention mechanisms, CA outperformed SE, CBAM, and ECA in overall performance and exhibited stronger robustness across varying data distributions. The lightweight design of CA adds no significant computational overhead when integrated into the neck. Overall, CA enhances spatial position awareness, strengthens feature focusing and target localization, and achieves a favorable balance between detection accuracy and inference speed, providing a viable strategy for real-time detection optimization.
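The link between these metrics and the two error types can be made concrete with a short Python sketch. The detection counts are hypothetical and serve only to illustrate the definitions; the final lines compute the F1 score implied by the precision and recall reported for the full BMDNet-YOLO model (98.27% and 94.36%), a derived value that the paper itself does not report.

```python
def precision(tp, fp):
    """Share of predicted boxes that are correct; rises as false positives fall."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Share of ground-truth fruits that are found; rises as missed detections fall."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical counts: 90 correct detections, 10 false positives, 10 misses.
print(precision(90, 10), recall(90, 10))

# F1 implied by the precision and recall reported for BMDNet-YOLO.
f1_reported = f1(0.9827, 0.9436)
print(f"implied F1: {f1_reported:.4f}")
```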

3.5. Ablation Study

To analyze the synergistic effects of the proposed modules and verify the scientific validity of the improvements, a series of controlled ablation experiments were conducted. All hyperparameters, training strategies, and runtime environments were kept identical, with the original YOLOv8n serving as the baseline. Four key modules were progressively integrated: the FasterPW feature extraction structure, the CA attention mechanism, the Weighted Concat module, and the HetConv lightweight detection head. Core evaluation metrics included mAP@0.5, Precision, Recall, parameter size (Params), and FLOPs. The results are presented in Table 4.

Table 4.

Ablation study of different module combinations.

The results show that both individual modules and their combinations influenced model performance. Introducing FasterPW (group b) reduced parameters by 16.12% and FLOPs by 15.95%, while improving mAP@0.5, Precision, and Recall by 1.01%, 1.31%, and 3.03%, confirming its dual advantage in lightweighting and feature extraction efficiency. Comparisons between groups h and i further demonstrate its positive contribution. Adding the CA module (group f) enhanced detection accuracy, with improvements of 3.22%, 2.34%, and 3.36% in mAP@0.5, Precision, and Recall over the baseline, validating CA’s role in strengthening feature representation and target localization. Incorporating the Weighted Concat module (group i) further optimized performance, yielding gains of 3.37%, 2.6%, and 3.52% compared to YOLOv8n, and showing superior multi-scale fusion compared to group f. By contrast, introducing HetConv (group e) reduced parameters and FLOPs by 25.4% and 27.4%, respectively, but slightly decreased accuracy due to parameter sharing between branches, which weakened feature expression. Overall, the integrated BMDNet-YOLO (group j) achieved the best balance, with 95.6% mAP@0.5, 98.27% Precision, and 94.36% Recall, while reducing parameters and FLOPs by 41.56% and 42.15%, respectively, fully validating the effectiveness of the proposed improvements.
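The reported reductions can be cross-checked against the absolute figures with a few lines of Python. The sketch below back-computes the YOLOv8n parameter count and FLOPs implied by the reported percentages, assuming each reduction is defined as (baseline - new) / baseline; the resulting baseline values are derived here and are not quoted from Table 4.

```python
# Reported for BMDNet-YOLO (group j).
params_m, flops_g = 1.845, 5.124
# Reported reductions relative to YOLOv8n.
param_cut, flop_cut = 0.4156, 0.4215


def relative_reduction(baseline, new):
    """Fractional reduction of `new` relative to `baseline`."""
    return (baseline - new) / baseline


# Baseline values implied by the reported reductions (derived, not from the paper).
baseline_params_m = params_m / (1.0 - param_cut)
baseline_flops_g = flops_g / (1.0 - flop_cut)

print(f"implied baseline: {baseline_params_m:.3f} M params, {baseline_flops_g:.3f} GFLOPs")
print(f"round trip: {relative_reduction(baseline_params_m, params_m):.4f}")
```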

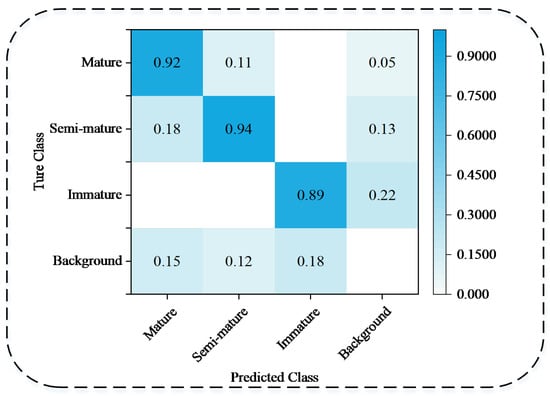

To further assess class discrimination, a confusion matrix of BMDNet-YOLO on the blueberry dataset was generated (Figure 11). Results show some misclassifications between mature and semi-mature categories, primarily due to: (i) overlapping color ranges between mature and semi-mature fruit skins, and (ii) enhanced diffuse reflection from waxy fruit surfaces, which reduces discriminability in the saturation channel of the HSV space. These factors increase difficulty in constructing discriminative boundaries during feature learning. Nonetheless, overall detection performance remained high with very low false detection rates, indicating that BMDNet-YOLO demonstrates strong robustness and practical applicability.

Figure 11.

Confusion matrix of BMDNet-YOLO on the blueberry dataset.
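Per-class precision and recall can be read directly off a confusion matrix of this kind. The Python sketch below uses hypothetical counts and assumes three classes (immature, semi-mature, mature) with rows as true labels and columns as predictions; the actual values and orientation of Figure 11 may differ.

```python
classes = ["immature", "semi-mature", "mature"]

# Hypothetical counts: rows = true class, columns = predicted class.
cm = [
    [95, 4, 1],
    [3, 88, 9],
    [0, 7, 93],
]


def per_class_metrics(matrix):
    """Return a list of (precision, recall) tuples, one per class."""
    n = len(matrix)
    metrics = []
    for k in range(n):
        tp = matrix[k][k]
        true_total = sum(matrix[k])                        # row sum: all true class-k samples
        pred_total = sum(matrix[i][k] for i in range(n))   # column sum: all class-k predictions
        prec = tp / pred_total if pred_total else 0.0
        rec = tp / true_total if true_total else 0.0
        metrics.append((prec, rec))
    return metrics


metrics = per_class_metrics(cm)
for name, (prec, rec) in zip(classes, metrics):
    print(f"{name}: precision={prec:.3f}, recall={rec:.3f}")
```

With these illustrative counts, most off-diagonal mass sits between the semi-mature and mature classes, which mirrors the confusion pattern described above.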

3.6. Comparative Experiments on Transfer Learning

To comprehensively evaluate the effect of transfer learning strategies on optimizing detection model performance, comparative experiments were designed to analyze differences under two training paradigms: (1) Non-transfer learning group: models were trained end-to-end on the blueberry ripeness visual analysis dataset (BRVAD) using randomly initialized parameters for the feature extraction layers, with COCO2017 pretrained weights applied directly. (2) Transfer learning group: models were first pretrained on COCO2017, then adapted to the coffee ripeness visual analysis dataset (CBRVAD) for domain-specific training. The weights obtained at this stage were subsequently used to initialize the model, which was then fine-tuned on BRVAD, achieving cross-crop knowledge transfer through a “pretraining–fine-tuning” paradigm. This design aimed to quantitatively compare detection accuracy and generalization performance across the two groups and to verify the performance gains achieved by progressive transfer learning in cross-domain tasks.
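The weight hand-off in the transfer learning group can be sketched as a simple chain in which each stage starts from the weights produced by the previous one. In the Python sketch below, `run_progressive_transfer` and `fake_train` are hypothetical names, and `fake_train` is a stand-in that only records the lineage; in practice it would be replaced by a real training call, whose settings the text does not specify.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, str]  # (stage name, dataset name)


def run_progressive_transfer(
    init_weights: str,
    stages: List[Stage],
    train_fn: Callable[[str, str], str],
) -> List[str]:
    """Train each stage from the previous stage's weights and return the weight lineage."""
    lineage = [init_weights]
    for name, dataset in stages:
        new_weights = train_fn(lineage[-1], dataset)
        print(f"{name}: {lineage[-1]} -> {new_weights}")
        lineage.append(new_weights)
    return lineage


def fake_train(weights: str, dataset: str) -> str:
    # Placeholder for a real training run; returns a label for the resulting weights.
    return f"{weights}->{dataset}"


stages = [
    ("cross-domain adaptation", "CBRVAD"),
    ("target-domain fine-tuning", "BRVAD"),
]
lineage = run_progressive_transfer("coco2017_pretrained", stages, fake_train)
```

Injecting the training function keeps the stage ordering separate from any particular framework, so the same chain could be reused with a different detector or dataset sequence.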

As shown in Table 5, transfer learning yielded significant improvements. For the YOLOv8n baseline, transfer learning increased mAP@0.5, Precision, and Recall by 0.7%, 1.34%, and 1%, respectively. The effect was more pronounced for the optimized BMDNet-YOLO, where gains reached 3.2%, 3.57%, and 3.56%, pushing detection accuracy to its highest level. These results demonstrate that transfer learning, by leveraging parameter reuse and feature sharing, not only accelerates convergence but also overcomes performance bottlenecks, particularly under complex backgrounds and cross-species detection tasks. Moreover, the findings indicate that architectural optimization amplifies the benefits of transfer learning, offering a practical direction for cross-domain detection tasks.

Table 5.

Performance comparison of models with and without transfer learning.

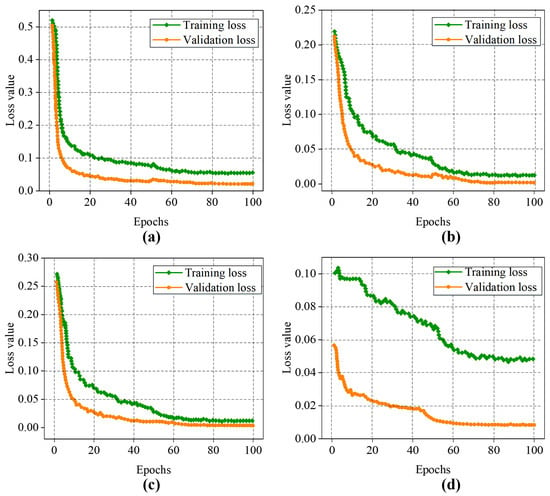

Figure 12 illustrates the dynamic evolution of loss values before and after transfer learning. Without transfer learning, models exhibited higher initial losses; with transfer learning, the loss curves became smoother, convergence accelerated, and final steady-state losses were significantly reduced. This validates the effectiveness of transfer learning in accelerating optimization and improving generalization through knowledge transfer.

Figure 12.

Loss variation curves before and after transfer learning: (a) YOLOv8n before transfer learning; (b) YOLOv8n after transfer learning; (c) BMDNet-YOLO before transfer learning; (d) BMDNet-YOLO after transfer learning.

For YOLOv8n, models without transfer learning displayed pronounced fluctuations in validation loss during early training, whereas transfer learning delayed and attenuated these fluctuations, yielding a smoother convergence curve. For BMDNet-YOLO, the effect was even stronger: training loss decreased more rapidly, validation loss converged to lower levels, and overall training efficiency improved substantially.

The comparative results show that BMDNet-YOLO consistently outperformed YOLOv8n, regardless of whether transfer learning was applied; however, transfer learning further amplified its advantages. From both training dynamics and accuracy performance, these findings confirm that transfer learning not only stabilizes feature extraction and decision boundary construction but also plays a critical role in achieving a balance between lightweight design and high accuracy in object detection tasks.

4. Discussion

In the transformation toward intelligent agriculture, blueberry harvesting faces significant technical bottlenecks. Existing detection models (e.g., YOLOv5x-CBAM) achieve less than 80% accuracy under complex orchard conditions, while manual harvesting costs account for over 40% of total input, and maturity misjudgment leads to annual losses of 15–20% [46]. To address these issues, the proposed BMDNet-YOLO model demonstrates breakthrough improvements in accuracy, efficiency, and application adaptability. Its core value, limitations, and future optimization directions are summarized as follows.

4.1. Advantages

BMDNet-YOLO exhibits substantial advantages across multiple dimensions. Performance: By integrating the FasterPW module in the backbone, the CA attention mechanism in the neck, Weighted Concat for multi-scale fusion, and a HetConv-based lightweight detection head, the model achieves a balance between lightweight design and accuracy, reaching a recall of 94.36% and outperforming mainstream models such as Faster R-CNN and YOLOv8n [8,46]. Scenario adaptability: With the incorporation of a three-stage transfer learning framework, the model demonstrates stronger generalization under challenging conditions such as severe occlusion and dynamic illumination, surpassing methods like YOLOv5x-CBAM and CR-YOLOv9 [46,73]. Industrial value: As a replicable paradigm for small-fruit crop detection, the model has the potential to drive the commercialization of “one-click harvesting” systems, alleviating labor shortages and reducing resource waste by approximately 30% [74,75].

4.2. Challenges

Despite its strengths, three limitations remain in practical applications. First, the model depends on the BRVAD dataset constructed under specific conditions, which lacks samples from extreme environments, potentially causing performance fluctuations in cross-regional deployment. Moreover, the performance of BMDNet-YOLO in other natural conditions such as heavy rain, fog, or extremely low illumination has not yet been systematically validated, and potential degradation in these scenarios remains an open challenge for future work. In addition, the present study has not yet assessed the model’s adaptability to different blueberry cultivars, whose variations in size, color tone, and ripening characteristics may influence recognition accuracy, leaving cross-cultivar generalization as an important topic for future investigation. Second, this study focuses solely on blueberry maturity detection, without integrating tasks such as fruit localization or pest and disease recognition, limiting its utility for fully automated harvesting workflows. Third, cross-domain adaptation protocols for clustered fruit detection remain underdeveloped, and insufficient hardware adaptation research for low-power edge devices restricts deployment flexibility and scalability.

4.3. Future Perspectives

To address these challenges, future work may advance along three lines: (i) expanding the dataset to cover diverse regions and extreme environments, combined with adversarial training to enhance model robustness; (ii) developing a multi-task framework integrating maturity detection, pest and disease recognition, and fruit localization to enhance the intelligence of harvesting robots; and (iii) formulating cross-domain adaptation protocols for clustered fruit, while optimizing model quantization and pruning strategies for edge deployment, aiming to achieve low-power consumption (over 20% energy reduction) and high efficiency (exceeding 30 FPS), thereby enabling large-scale deployment of agricultural harvesting systems. In addition, future studies may leverage synthetic data generation and digital twin technologies to simulate complex field conditions [76], accelerate model training, and improve the scalability and reliability of intelligent harvesting solutions.

5. Conclusions

This study addresses the challenges of illumination variation, leaf occlusion, and densely clustered small targets in blueberry maturity detection under complex natural environments by proposing BMDNet-YOLO, a high-precision and lightweight detection model that balances accuracy and efficiency. The model incorporates the FasterPW module to enhance feature extraction efficiency, integrates the CA attention mechanism to strengthen spatial feature representation, and combines the Weighted Concat module with a HetConv-based lightweight detection head to optimize multi-scale feature fusion and detection performance. Furthermore, a three-stage transfer learning framework was introduced to improve feature transferability and enhance generalization in cross-domain detection tasks. Experimental results demonstrate that BMDNet-YOLO achieved an mAP@0.5 of 95.6%, with Precision and Recall of 98.27% and 94.36%, respectively, while maintaining a parameter count of only 1.845 M and a computational cost of 5.124 GFLOPs. This confirms that the model delivers significant lightweighting without compromising accuracy. Compared with mainstream detection methods, BMDNet-YOLO achieves superior overall performance, particularly in balancing accuracy, inference speed, and resource consumption, highlighting its potential for deployment in intelligent agricultural harvesting scenarios. Future research will focus on extending model functionality beyond maturity detection to include fruit localization, grading, and pest/disease identification within a multi-task framework, thereby enabling multi-dimensional intelligent detection. In addition, efforts will explore efficient deployment strategies on edge devices, aiming to support large-scale implementation of automated harvesting systems in agriculture.

Author Contributions

Conceptualization, H.S. and R.-F.W.; methodology, R.-F.W.; software, H.S.; validation, H.S.; formal analysis, H.S.; investigation, H.S.; resources, R.-F.W.; data curation, H.S.; writing—original draft preparation, H.S.; writing—review and editing, R.-F.W.; visualization, H.S.; supervision, R.-F.W.; project administration, R.-F.W.; funding acquisition, H.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Open Research Fund of Anhui Province Key Laboratory of Machine Vision Inspection (KLMVI-2024-HIT-12), the China Postdoctoral Science Foundation (Certificate Number: 2025M771716), the Anhui Province Science and Technology Innovation Breakthrough Plan (No. 202423i08050056), and the Anhui Province Higher Education Institutions Mid-aged and Young Teachers Training Action Program (YQYB2024059).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author(s).

Acknowledgments

The authors would like to acknowledge Anhui Duxiu Mountain Blueberry Technology Development Co., Ltd., Yunnan Aini Agriculture and Husbandry (Group) Co., Ltd., and North China Institute of Science and Technology for their continuous support of our research, and to thank the editors and reviewers for their careful review and valuable suggestions on this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Duan, Y.; Tarafdar, A.; Chaurasia, D.; Singh, A.; Bhargava, P.C.; Yang, J.; Li, Z.; Ni, X.; Tian, Y.; Li, H.; et al. Blueberry fruit valorization and valuable constituents: A review. Int. J. Food Microbiol. 2022, 381, 109890. [Google Scholar] [CrossRef] [PubMed]

- Avendano, E.E.; Raman, G. Blueberry consumption and exercise: Gap analysis using evidence mapping. J. Altern. Complement. Med. 2021, 27, 3–11. [Google Scholar] [CrossRef]

- Norabuena-Figueroa, R.; García-Fernández, J. Logistical Process and Improvement of the Packing Area in the Export of Peruvian Blueberries: Advancing Sustainable Development Goal 8. In Fostering Sustainable Development Goals: New Dimensions and Dynamics; Springer: Berlin/Heidelberg, Germany, 2024; p. 80. [Google Scholar]

- Hammami, A.M.; Guan, Z.; Cui, X. Foreign Competition Reshaping the Landscape of the US Blueberry Market. Choices 2024, 39, 1–9. [Google Scholar]

- Kim, E.; Freivalds, A.; Takeda, F.; Li, C. Ergonomic evaluation of current advancements in blueberry harvesting. Agronomy 2018, 8, 266. [Google Scholar] [CrossRef]

- Brondino, L.; Borra, D.; Giuggioli, N.R.; Massaglia, S. Mechanized blueberry harvesting: Preliminary results in the Italian context. Agriculture 2021, 11, 1197. [Google Scholar] [CrossRef]

- Gallardo, R.K.; Zilberman, D. The economic feasibility of adopting mechanical harvesters by the highbush blueberry industry. HortTechnology 2016, 26, 299–308. [Google Scholar] [CrossRef]

- Wang, R.F.; Qu, H.R.; Su, W.H. From Sensors to Insights: Technological Trends in Image-Based High-Throughput Plant Phenotyping. Smart Agric. Technol. 2025, 12, 101257. [Google Scholar] [CrossRef]

- Zhang, S.; Zhao, K.; Huo, Y.; Yao, M.; Xue, L.; Wang, H. Mushroom image classification and recognition based on improved ConvNeXt V2. J. Food Sci. 2025, 90, e70133. [Google Scholar] [CrossRef]

- Zhou, G.; Wang, R.F. The Heterogeneous Network Community Detection Model Based on Self-Attention. Symmetry 2025, 17, 432. [Google Scholar] [CrossRef]

- Cui, K.; Tang, W.; Zhu, R.; Wang, M.; Larsen, G.D.; Pauca, V.P.; Alqahtani, S.; Yang, F.; Segurado, D.; Fine, P.; et al. Efficient Localization and Spatial Distribution Modeling of Canopy Palms Using UAV Imagery. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4413815. [Google Scholar] [CrossRef]

- Tobar-Bolaños, G.; Casas-Forero, N.; Orellana-Palma, P.; Petzold, G. Blueberry juice: Bioactive compounds, health impact, and concentration technologies—A review. J. Food Sci. 2021, 86, 5062–5077. [Google Scholar] [CrossRef]

- Lyu, X.; Wang, H.; Xu, G.; Chu, C. Research on fruit picking test of blueberry harvesting machinery under transmission clearance. Heliyon 2024, 10, e34740. [Google Scholar] [CrossRef]

- Thota, J.; Kim, E.; Freivalds, A.; Kim, K. Development and evaluation of attachable anti-vibration handle. Appl. Ergon. 2022, 98, 103571. [Google Scholar] [CrossRef]

- Tan, C.; Li, C.; Perkins-Veazie, P.; Oh, H.; Xu, R.; Iorizzo, M. High throughput assessment of blueberry fruit internal bruising using deep learning models. Front. Plant Sci. 2025, 16, 1575038. [Google Scholar] [CrossRef] [PubMed]

- Haydar, Z.; Esau, T.J.; Farooque, A.A.; Zaman, Q.U.; Hennessy, P.J.; Singh, K.; Abbas, F. Deep learning supported machine vision system to precisely automate the wild blueberry harvester header. Sci. Rep. 2023, 13, 10198. [Google Scholar] [CrossRef] [PubMed]

- Jing, X.; Wang, Y.; Li, D.; Pan, W. Melon ripeness detection by an improved object detection algorithm for resource constrained environments. Plant Methods 2024, 20, 127. [Google Scholar] [CrossRef] [PubMed]

- Liu, G.; Zhang, Y.; Liu, J.; Liu, D.; Chen, C.; Li, Y.; Zhang, X.; Touko Mbouembe, P.L. An improved YOLOv7 model based on Swin Transformer and Trident Pyramid Networks for accurate tomato detection. Front. Plant Sci. 2024, 15, 1452821. [Google Scholar] [CrossRef] [PubMed]

- Júnior, M.R.B.; Dos Santos, R.G.; de Azevedo Sales, L.; Vargas, R.B.S.; Deltsidis, A.; de Oliveira, L.P. Image-based and ML-driven analysis for assessing blueberry fruit quality. Heliyon 2025, 11, e42288. [Google Scholar] [CrossRef]

- Mu, C.; Yuan, Z.; Ouyang, X.; Sun, P.; Wang, B. Non-destructive detection of blueberry skin pigments and intrinsic fruit qualities based on deep learning. J. Sci. Food Agric. 2021, 101, 3165–3175. [Google Scholar] [CrossRef]

- Wei, Z.; Yang, H.; Shi, J.; Duan, Y.; Wu, W.; Lyu, L.; Li, W. Effects of different light wavelengths on fruit quality and gene expression of anthocyanin biosynthesis in blueberry (Vaccinium corymbosm). Cells 2023, 12, 1225. [Google Scholar] [CrossRef]

- Sun, L.; Shi, W.; Tian, X.; Li, J.; Zhao, B.; Wang, S.; Tan, J. A plane stress measurement method for CFRP material based on array LCR waves. NDT E Int. 2025, 151, 103318. [Google Scholar] [CrossRef]

- Zhang, Z.; Janvekar, N.A.S.; Feng, P.; BHASKAR, N. Graph-Based Detection of Abusive Computational Nodes. US Patent 12,223,056, 11 February 2025. [Google Scholar]

- Zhou, Y.; Xia, H.; Yu, D.; Cheng, J.; Li, J. Outlier detection method based on high-density iteration. Inf. Sci. 2024, 662, 120286. [Google Scholar] [CrossRef]

- Lu, W.; Wang, J.; Wang, T.; Zhang, K.; Jiang, X.; Zhao, H. Visual style prompt learning using diffusion models for blind face restoration. Pattern Recognit. 2025, 161, 111312. [Google Scholar] [CrossRef]

- Hao, H.; Yao, E.; Pan, L.; Chen, R.; Wang, Y.; Xiao, H. Exploring heterogeneous drivers and barriers in MaaS bundle subscriptions based on the willingness to shift to MaaS in one-trip scenarios. Transp. Res. Part A Policy Pract. 2025, 199, 104525. [Google Scholar] [CrossRef]

- Jiang, T.; Wang, Y.; Ye, H.; Shao, Z.; Sun, J.; Zhang, J.; Chen, Z.; Zhang, J.; Chen, Y.; Li, H. SADA: Stability-guided Adaptive Diffusion Acceleration. In Proceedings of the Forty-second International Conference on Machine Learning, Vancouver, BC, Canada, 13–19 July 2025. [Google Scholar]

- Zhou, Y.; Liu, C.; Urgaonkar, B.; Wang, Z.; Mueller, M.; Zhang, C.; Zhang, S.; Pfeil, P.; Horn, D.; Liu, Z.; et al. PBench: Workload Synthesizer with Real Statistics for Cloud Analytics Benchmarking. arXiv 2025, arXiv:2506.16379. [Google Scholar] [CrossRef]

- Zhao, K.; Huo, Y.; Xue, L.; Yao, M.; Tian, Q.; Wang, H. Mushroom Image Classification and Recognition Based on Improved Swin Transformer. In Proceedings of the 2023 IEEE 6th International Conference on Information Systems and Computer Aided Education (ICISCAE), Virtual, 23–25 September 2023; IEEE: Piscataway Township, NJ, USA, 2023; pp. 225–231. [Google Scholar]

- Pan, C.H.; Qu, Y.; Yao, Y.; Wang, M.J.S. HybridGNN: A Self-Supervised graph neural network for efficient maximum matching in bipartite graphs. Symmetry 2024, 16, 1631. [Google Scholar] [CrossRef]

- Li, L.; Li, J.; Wang, H.; Georgieva, T.; Ferentinos, K.; Arvanitis, K.; Sygrimis, N. Sustainable energy management of solar greenhouses using open weather data on MACQU platform. Int. J. Agric. Biol. Eng. 2018, 11, 74–82. [Google Scholar] [CrossRef]

- Shao, Z.; Wang, Y.; Wang, Q.; Jiang, T.; Du, Z.; Ye, H.; Zhuo, D.; Chen, Y.; Li, H. FlashSVD: Memory-Efficient Inference with Streaming for Low-Rank Models. arXiv 2025, arXiv:2508.01506. [Google Scholar]

- Wei, Z.L.; An, H.Y.; Yao, Y.; Su, W.C.; Li, G.; Saifullah; Sun, B.F.; Wang, M.J.S. FSTGAT: Financial Spatio-Temporal Graph Attention Network for Non-Stationary Financial Systems and Its Application in Stock Price Prediction. Symmetry 2025, 17, 1344. [Google Scholar] [CrossRef]

- Garillos-Manliguez, C.A.; Chiang, J.Y. Multimodal deep learning and visible-light and hyperspectral imaging for fruit maturity estimation. Sensors 2021, 21, 1288. [Google Scholar] [CrossRef]

- Dong, Y.; Qiao, J.; Liu, N.; He, Y.; Li, S.; Hu, X.; Yu, C.; Zhang, C. GPC-YOLO: An Improved Lightweight YOLOv8n Network for the Detection of Tomato Maturity in Unstructured Natural Environments. Sensors 2025, 25, 1502. [Google Scholar] [CrossRef]

- Zhu, R.; Cui, K.; Tang, W.; Wang, R.F.; Alqahtani, S.; Lutz, D.; Yang, F.; Fine, P.; Karubian, J.; Plemmons, R.; et al. From Orthomosaics to Raw UAV Imagery: Enhancing Palm Detection and Crown-Center Localization. arXiv 2025, arXiv:2509.12400. [Google Scholar] [CrossRef]

- Zhang, Y.; Yang, X.; Cheng, Y.; Wu, X.; Sun, X.; Hou, R.; Wang, H. Fruit freshness detection based on multi-task convolutional neural network. Curr. Res. Food Sci. 2024, 8, 100733. [Google Scholar] [CrossRef]

- Qu, H.; Zheng, C.; Ji, H.; Huang, R.; Wei, D.; Annis, S.; Drummond, F. A deep multi-task learning approach to identifying mummy berry infection sites, the disease stage, and severity. Front. Plant Sci. 2024, 15, 1340884. [Google Scholar] [CrossRef]

- Zhao, M.; Cui, B.; Yu, Y.; Zhang, X.; Xu, J.; Shi, F.; Zhao, L. Intelligent Detection of Tomato Ripening in Natural Environments Using YOLO-DGS. Sensors 2025, 25, 2664. [Google Scholar] [CrossRef]

- Wang, X.; Liu, J. Tomato anomalies detection in greenhouse scenarios based on YOLO-Dense. Front. Plant Sci. 2021, 12, 634103. [Google Scholar] [CrossRef]

- Lyu, S.; Zhou, X.; Li, Z.; Liu, X.; Chen, Y.; Zeng, W. YOLO-SCL: A lightweight detection model for citrus psyllid based on spatial channel interaction. Front. Plant Sci. 2023, 14, 1276833. [Google Scholar] [CrossRef] [PubMed]

- Cui, K.; Zhu, R.; Wang, M.; Tang, W.; Larsen, G.D.; Pauca, V.P.; Alqahtani, S.; Yang, F.; Segurado, D.; Lutz, D.A.; et al. Detection and Geographic Localization of Natural Objects in the Wild: A Case Study on Palms. In Proceedings of the Thirty-Fourth International Joint Conference on Artificial Intelligence, IJCAI-25, Montreal, QC, Canada, 16–22 August 2025; pp. 9601–9609. [Google Scholar] [CrossRef]

- Wang, C.; Wang, Y. SLGA-YOLO: A lightweight castings surface defect detection method based on fusion-enhanced attention mechanism and self-architecture. Sensors 2024, 24, 4088. [Google Scholar] [CrossRef] [PubMed]

- Sun, H.; Chu, H.Q.; Qin, Y.M.; Hu, P.; Wang, R.F. Empowering Smart Soybean Farming with Deep Learning: Progress, Challenges, and Future Perspectives. Agronomy 2025, 15, 1831. [Google Scholar] [CrossRef]

- Ye, R.; Shao, G.; Gao, Q.; Zhang, H.; Li, T. CR-YOLOv9: Improved YOLOv9 multi-stage strawberry fruit maturity detection application integrated with CRNET. Foods 2024, 13, 2571. [Google Scholar] [CrossRef] [PubMed]

- Liu, Y.; Zheng, H.; Zhang, Y.; Zhang, Q.; Chen, H.; Xu, X.; Wang, G. “Is this blueberry ripe?”: A blueberry ripeness detection algorithm for use on picking robots. Front. Plant Sci. 2023, 14, 1198650. [Google Scholar] [CrossRef] [PubMed]

- Wang, J.; Liu, N.; Zhuang, X.; Wang, Y.; Liu, G.; Liu, Y.; Wang, C.; Gong, Z.; Liu, K.; Yu, G.; et al. LSC-YOLO: Small Target Defects Detection Model for Wind Turbine Blade Based on YOLOv9. In Proceedings of the International Conference on Neural Information Processing, Vancouver, BC, Canada, 10–15 December 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 256–270. [Google Scholar]

- Di, X.; Cui, K.; Wang, R.F. Toward Efficient UAV-Based Small Object Detection: A Lightweight Network with Enhanced Feature Fusion. Remote Sens. 2025, 17, 2235. [Google Scholar] [CrossRef]

- Wang, J.; Liu, N.; Liu, G.; Liu, Y.; Zhuang, X.; Zhang, L.; Wang, Y.; Yu, G. RSD-YOLO: A Defect Detection Model for Wind Turbine Blade Images. In Proceedings of the International Conference on Computer Engineering and Networks, Kashi, China, 18–21 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 423–435. [Google Scholar]

- Wang, R.F.; Tu, Y.H.; Li, X.C.; Chen, Z.Q.; Zhao, C.T.; Yang, C.; Su, W.H. An Intelligent Robot Based on Optimized YOLOv11l for Weed Control in Lettuce. In Proceedings of the 2025 ASABE Annual International Meeting. American Society of Agricultural and Biological Engineers, Toronto, ON, Canada, 13–16 July 2025; p. 1. [Google Scholar]

- Huo, Y.; Wang, R.F.; Zhao, C.T.; Hu, P.; Wang, H. Research on Obtaining Pepper Phenotypic Parameters Based on Improved YOLOX Algorithm. AgriEngineering 2025, 7, 209. [Google Scholar] [CrossRef]

- Yi, F.; Mohamed, A.S.A.; Noor, M.H.M.; Ani, F.C.; Zolkefli, Z.E. YOLOv8-DEE: A high-precision model for printed circuit board defect detection. PeerJ Comput. Sci. 2024, 10, e2548. [Google Scholar] [CrossRef]

- Deng, B.; Lu, Y.; Li, Z. Detection, counting, and maturity assessment of blueberries in canopy images using YOLOv8 and YOLOv9. Smart Agric. Technol. 2024, 9, 100620. [Google Scholar] [CrossRef]

- Xu, Y.; Li, H.; Zhou, Y.; Zhai, Y.; Yang, Y.; Fu, D. GLL-YOLO: A Lightweight Network for Detecting the Maturity of Blueberry Fruits. Agriculture 2025, 15, 1877. [Google Scholar] [CrossRef]

- Ding, J.; Hu, J.; Lin, J.; Zhang, X. Lightweight enhanced YOLOv8n underwater object detection network for low light environments. Sci. Rep. 2024, 14, 27922. [Google Scholar] [CrossRef]

- Wang, J.; Meng, R.; Huang, Y.; Zhou, L.; Huo, L.; Qiao, Z.; Niu, C. Road defect detection based on improved YOLOv8s model. Sci. Rep. 2024, 14, 16758. [Google Scholar] [CrossRef]

- Guarnido-Lopez, P.; Ramirez-Agudelo, J.F.; Denimal, E.; Benaouda, M. Programming and setting up the object detection algorithm YOLO to determine feeding activities of beef cattle: A comparison between YOLOv8m and YOLOv10m. Animals 2024, 14, 2821. [Google Scholar] [CrossRef]

- Cinar, I. Comparative analysis of machine learning and deep learning algorithms for knee arthritis detection using YOLOv8 models. J. X-Ray Sci. Technol. 2025, 33, 565–577. [Google Scholar] [CrossRef]

- Liu, J.; Wang, L.; Xu, H.; Pi, J.; Wang, D. Research on Beef Marbling Grading Algorithm Based on Improved YOLOv8x. Foods 2025, 14, 1664. [Google Scholar] [CrossRef] [PubMed]

- Fuengfusin, N.; Tamukoh, H. Mixed-precision weights network for field-programmable gate array. PLoS ONE 2021, 16, e0251329. [Google Scholar] [CrossRef] [PubMed]

- Ye, R.; Gao, Q.; Li, T. BRA-YOLOv7: Improvements on large leaf disease object detection using FasterNet and dual-level routing attention in YOLOv7. Front. Plant Sci. 2024, 15, 1373104. [Google Scholar] [CrossRef]

- Hua, B.; Tran, M.K.; Yeung, S.K. Pointwise convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 984–993. [Google Scholar]

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 13713–13722. [Google Scholar]

- Singh, P.; Verma, V.K.; Rai, P.; Namboodiri, V.P. HetConv: Heterogeneous kernel-based convolutions for deep CNNs. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4835–4844. [Google Scholar]

- Wang, R.F.; Qin, Y.M.; Zhao, Y.Y.; Xu, M.; Schardong, I.B.; Cui, K. RA-CottNet: A Real-Time High-Precision Deep Learning Model for Cotton Boll and Flower Recognition. AI 2025, 6, 235. [Google Scholar] [CrossRef]

- An, J.; Zhang, D.; Xu, K.; Wang, D. An OpenCL-based FPGA accelerator for Faster R-CNN. Entropy 2022, 24, 1346. [Google Scholar] [CrossRef]

- Tian, L.; Zhang, H.; Liu, B.; Zhang, J.; Duan, N.; Yuan, A.; Huo, Y. VMF-SSD: A novel V-space based multi-scale feature fusion SSD for apple leaf disease detection. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 20, 2016–2028. [Google Scholar] [CrossRef]

- Guo, K.; He, C.; Yang, M.; Wang, S. A pavement distresses identification method optimized for YOLOv5s. Sci. Rep. 2022, 12, 3542. [Google Scholar] [CrossRef]

- Wang, Z.; Hua, Z.; Wen, Y.; Zhang, S.; Xu, X.; Song, H. E-YOLO: Recognition of estrus cow based on improved YOLOv8n model. Expert Syst. Appl. 2024, 238, 122212. [Google Scholar] [CrossRef]

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

- Ye, B.; Xue, R.; Xu, H. ASD-YOLO: A lightweight network for coffee fruit ripening detection in complex scenarios. Front. Plant Sci. 2025, 16, 1484784.

- Varzakas, T.; Smaoui, S. Global food security and sustainability issues: The road to 2030 from nutrition and sustainable healthy diets to food systems change. Foods 2024, 13, 306.

- Wang, Z.; Zhang, H.W.; Dai, Y.Q.; Cui, K.; Wang, H.; Chee, P.W.; Wang, R.F. Resource-Efficient Cotton Network: A Lightweight Deep Learning Framework for Cotton Disease and Pest Classification. Plants 2025, 14, 2082.

- Guan, A.; Zhou, S.; Gu, W.; Wu, Z.; Gao, M.; Liu, H.; Zhang, X.P. Dynamic Simulation and Parameter Calibration-Based Experimental Digital Twin Platform for Heat-Electric Coupled System. IEEE Trans. Sustain. Energy 2025. Early Access.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).