Image-Based Detection of Chinese Bayberry (Myrica rubra) Maturity Using Cascaded Instance Segmentation and Multi-Feature Regression

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Environment

2.2. Allocation of the Dataset

2.3. Color Evaluation Indicators

2.3.1. Instance Segmentation Model (SOLOv2-Light) Training

2.3.2. Maturity Regression Model (MFENet) Training

2.3.3. Dataset Partitioning

2.4. Performance Indicators

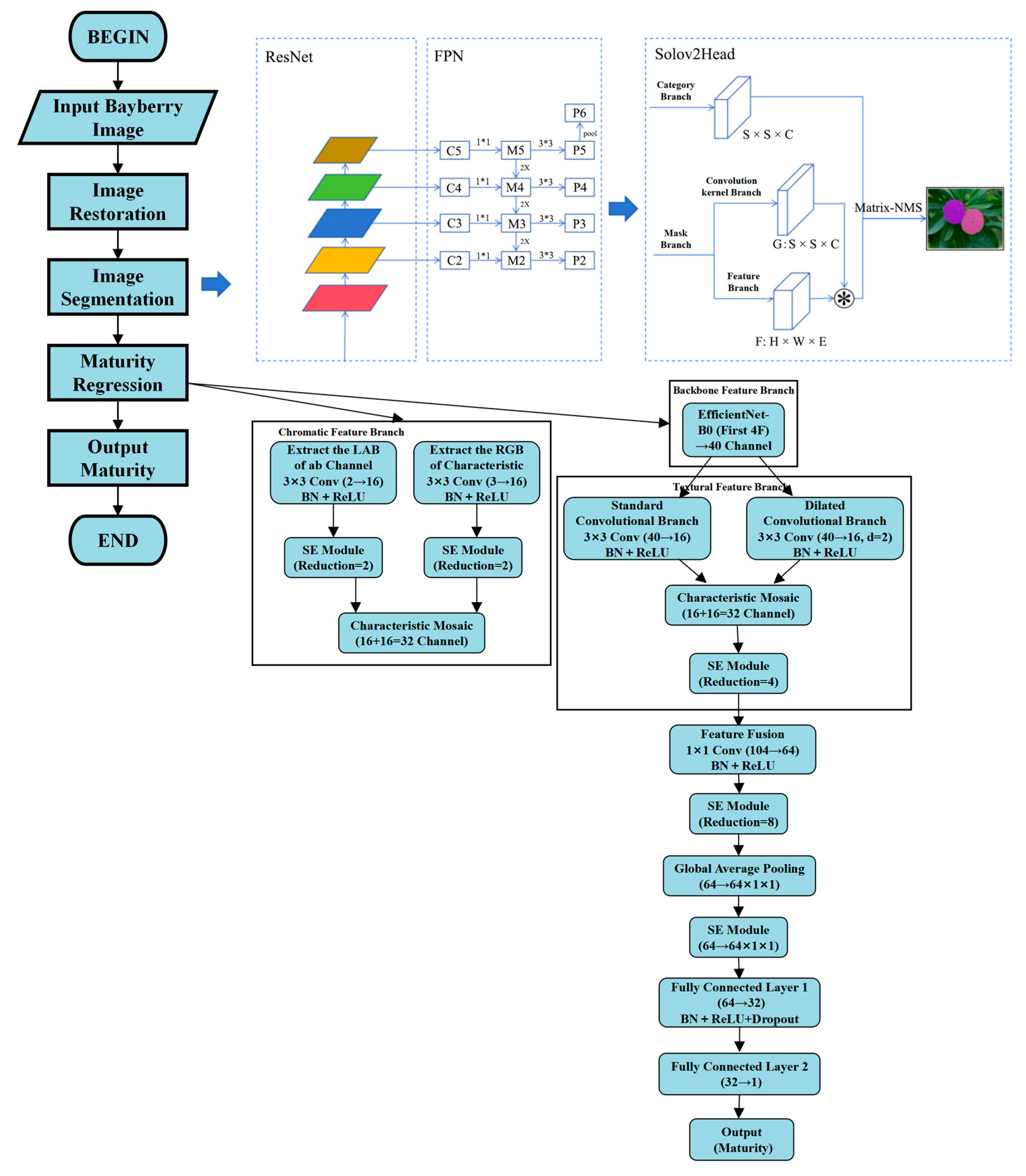

2.5. Overall Design of the Cascading Framework

2.6. Lightweight Instance Segmentation Model

2.6.1. SOLOv2 Model Network Architecture

2.6.2. Dynamic Instance Segmentation Mechanism

Category Branch

Mask Branch

2.6.3. Lightweight Improvement

Convolutional Layer Simplification

Feature Channel Compression

Detection Scale Optimization

2.7. Multi-Feature Fusion Maturity Regression Model

2.7.1. MFENet Network Structure

2.7.2. Multimodal Feature Learning

Backbone Feature Branch

Chromatic Feature Branch

Textural Feature Branch

3. Results

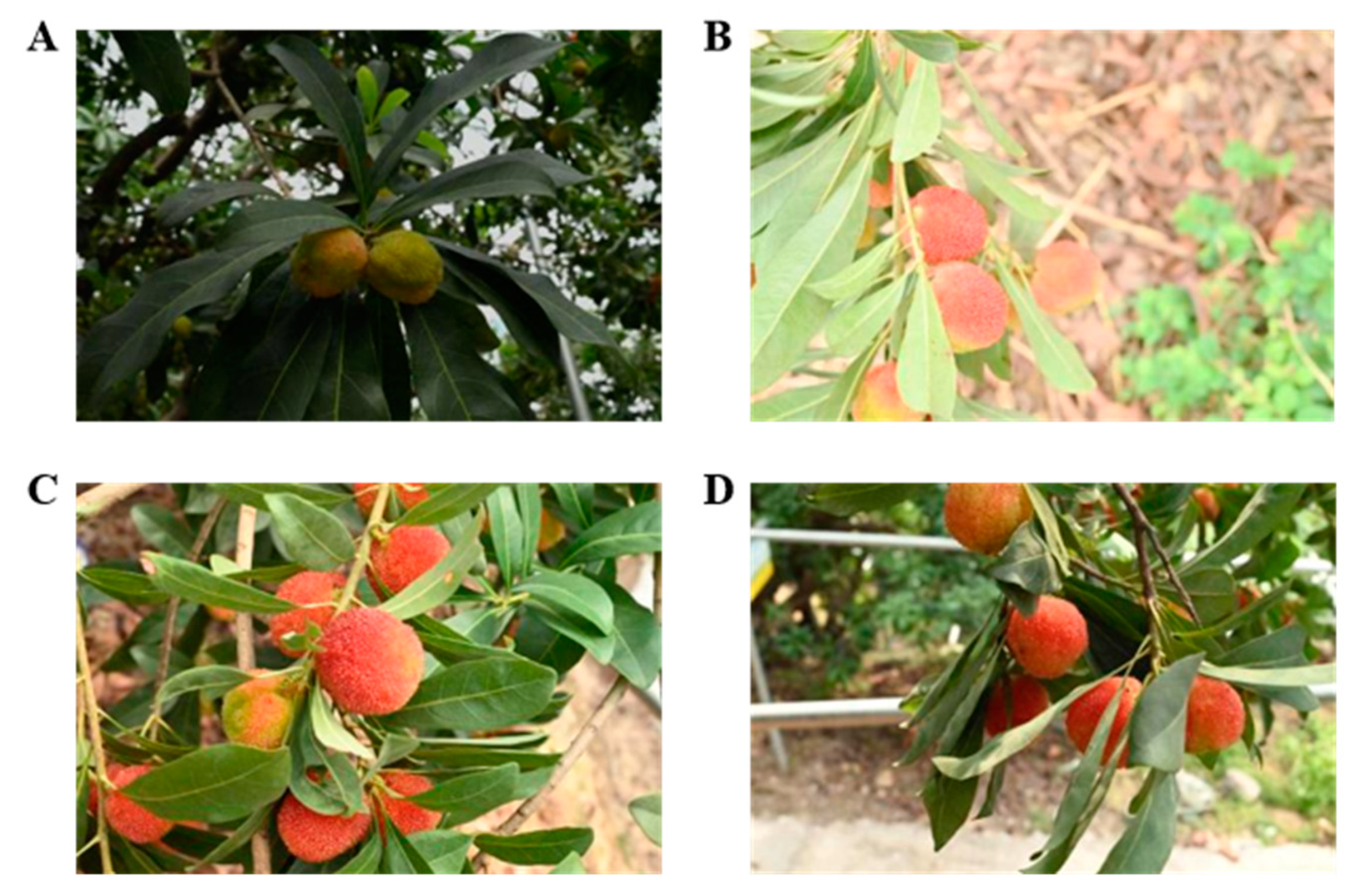

3.1. Dataset Construction and Preprocessing

3.1.1. Data Collection and Annotation

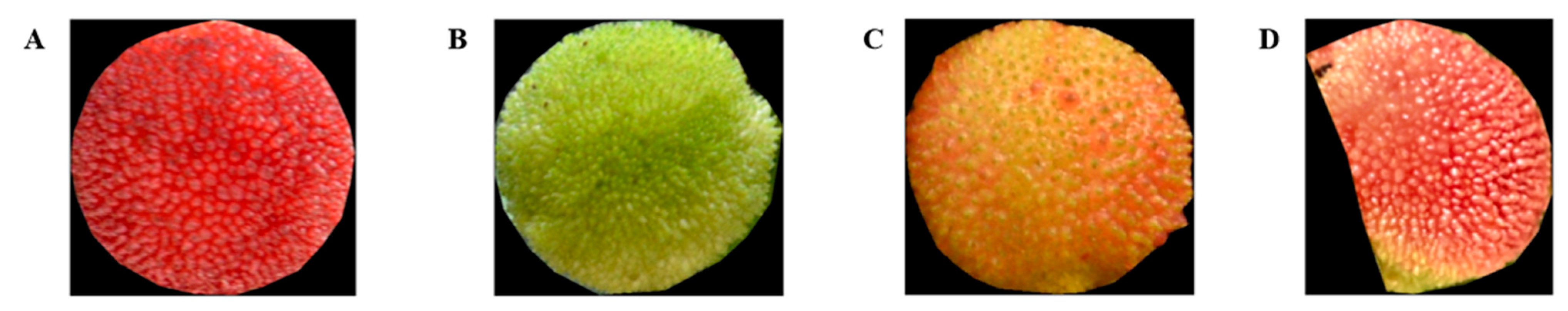

3.1.2. Illumination Invariance Enhancement Based on LAB Color Space

3.1.3. Construction of the Chinese Bayberry Instance Segmentation Dataset

3.1.4. Construction of the Chinese Bayberry Maturity Regression Dataset

3.2. Feature Fusion and Regression Prediction

3.2.1. Feature Fusion Strategy

3.2.2. Attention Mechanism

3.2.3. Regression Head Design

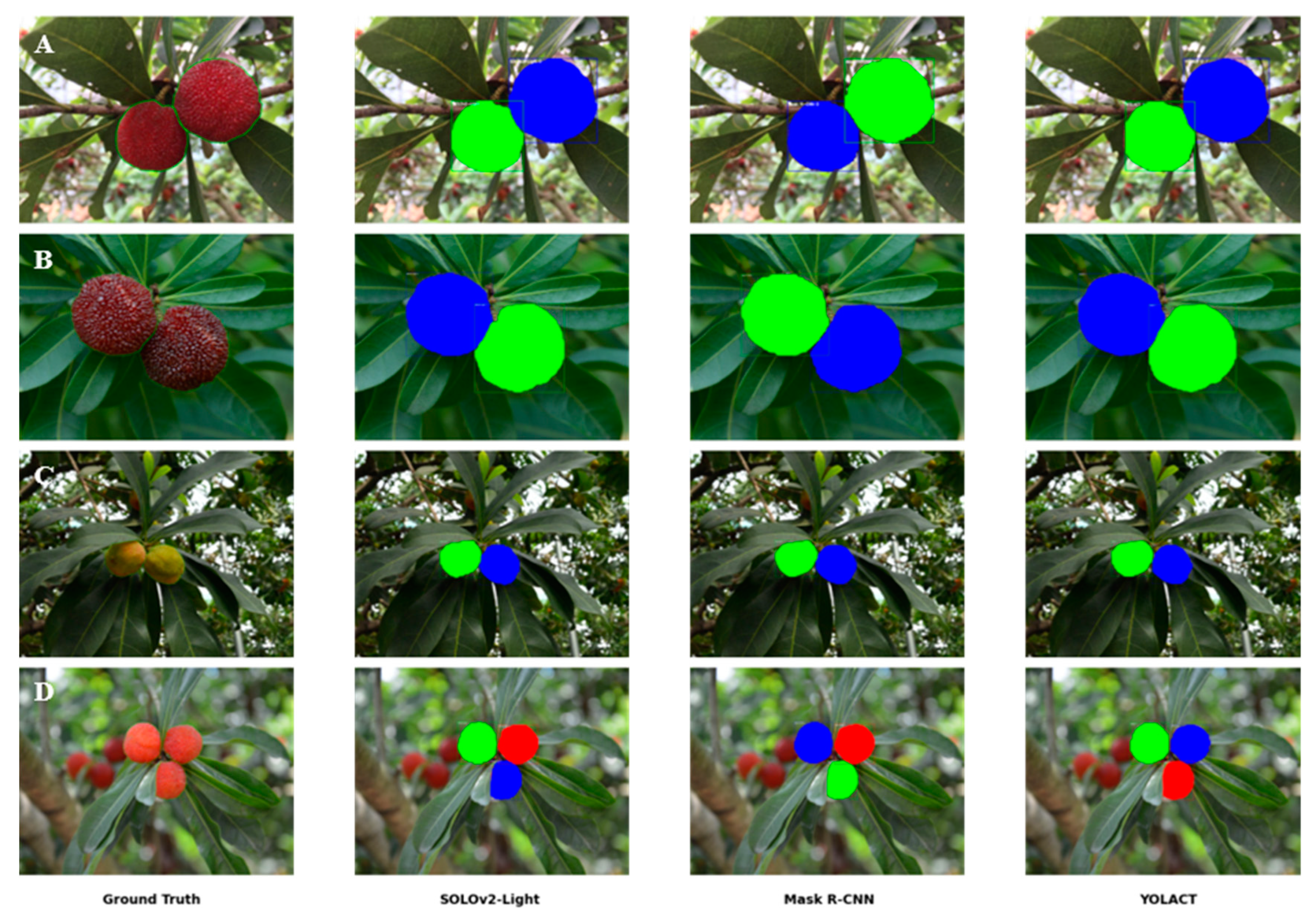

3.3. Instance Segmentation Performance Evaluation

3.3.1. Lightweight Efficiency Validation of SOLOv2

3.3.2. Instance Segmentation Quantitative Evaluation

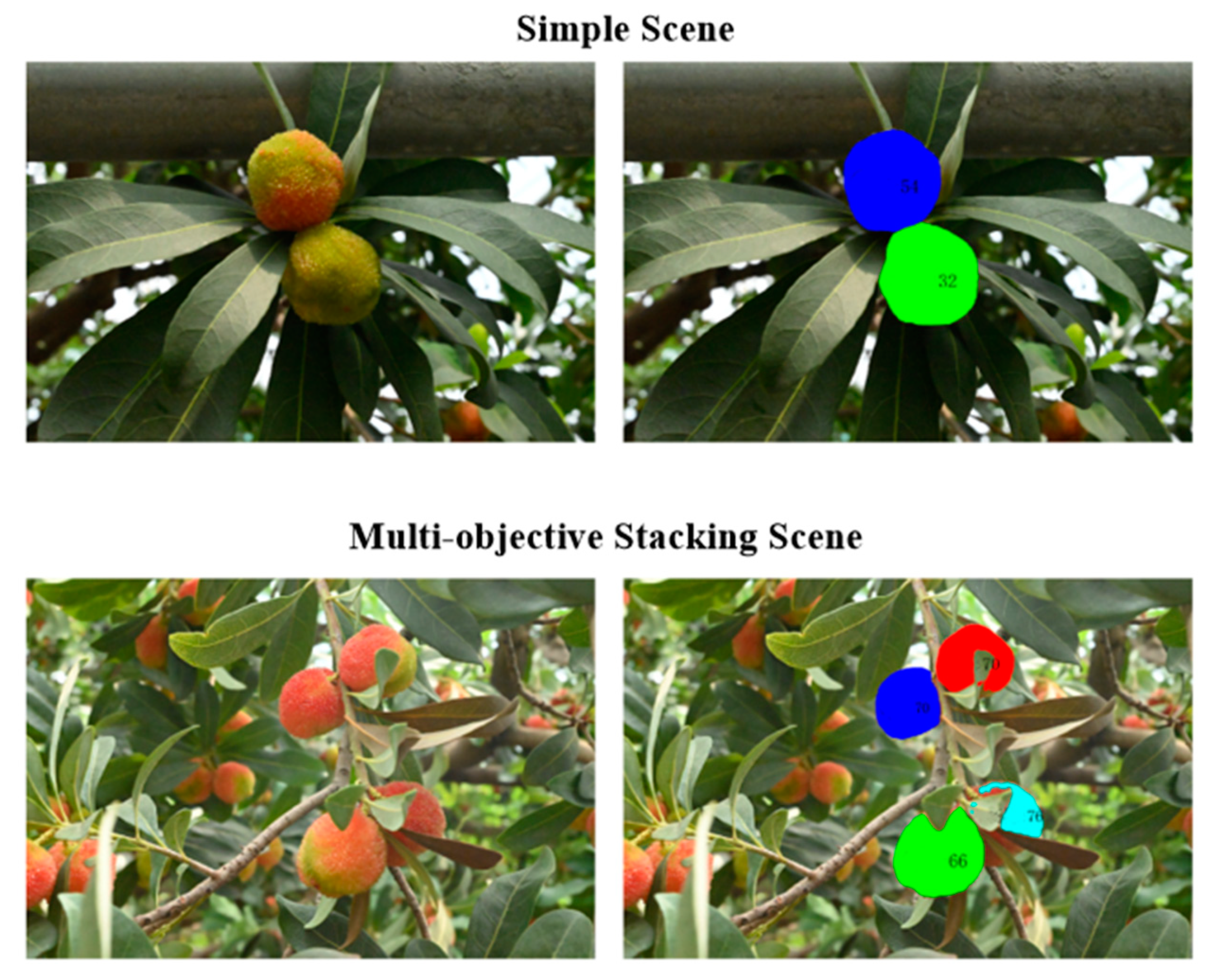

3.3.3. Instance Segmentation Qualitative Analysis

3.4. Maturity Regression Performance Assessment

3.4.1. MFENet Module Ablation Experiments

3.4.2. Recognition Performance Evaluation

3.4.3. Comparison with Existing Methods

4. Discussion

4.1. Advantages of Cascaded Architecture

4.2. Practical Implications of Lightweight Design

4.3. Comparison with State-of-the-Art Trends

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Model | Backbone Network | mAP | AP50 | AP75 | Size (MB) | FLOPs |
|---|---|---|---|---|---|---|
| SOLOv2 | ResNet50 | 0.802 a | 0.792 a | 0.792 a | 46.233 a | 223G a |
| SOLOv2-Light | ResNet50 | 0.788 b | 0.782 a | 0.782 a | 31.161 b | 58G b |
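The trade-off between SOLOv2 and SOLOv2-Light in the table above can be expressed as relative changes. The following is a minimal sketch that only redoes the arithmetic on the tabulated values; it assumes that "223G" and "58G" denote GFLOPs, and the names `baseline`, `light`, and `relative_change` are illustrative rather than taken from the paper.

```python
# Values copied from the table above (SOLOv2 vs. SOLOv2-Light, ResNet50 backbone).
# Assumption: the "G" suffix in the FLOPs column means GFLOPs.
baseline = {"mAP": 0.802, "size_mb": 46.233, "gflops": 223.0}
light = {"mAP": 0.788, "size_mb": 31.161, "gflops": 58.0}


def relative_change(before: float, after: float) -> float:
    """Return the signed relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# One entry per metric; negative values mean the lightweight model is lower.
changes = {key: relative_change(baseline[key], light[key]) for key in baseline}

for key, pct in changes.items():
    print(f"{key}: {pct:+.1f}%")
```

Under these assumptions, the lightweight model is roughly 32.6% smaller and needs about 74.0% fewer FLOPs, while mAP drops by about 1.7% in relative terms.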

| Model | Backbone Network | mAP | AP50 | AP75 | Size (MB) | FLOPs |
|---|---|---|---|---|---|---|
| SOLOv2-Light | ResNet50 | 0.788 a | 0.792 a | 0.792 a | 31.161 c | 58G c |
| Mask R-CNN | ResNet50 | 0.756 b | 0.782 a | 0.782 ab | 43.977 a | 235G a |
| YOLACT | ResNet50 | 0.767 b | 0.793 a | 0.761 b | 34.734 b | 62G b |

| Model | Color Feature Extraction | Textural Feature Extraction | MAE | RMSE | Size (MB) |
|---|---|---|---|---|---|
| MFENet | × | × | 4.4184 a | 5.6980 a | 118.82 b |
| MFENet | × | √ | 4.1745 ab | 5.7797 a | 119.84 b |
| MFENet | √ | × | 4.2696 a | 5.8487 a | 180.09 a |
| MFENet | √ | √ | 3.9466 b | 5.0061 b | 181.10 a |
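The ablation table above reports MAE and RMSE for the regression model. As a reminder of how these two error measures are conventionally defined, here is a minimal sketch using their standard formulas. The sample values in `y_true` and `y_pred` are hypothetical maturity scores chosen for illustration only; they are not data from the paper, and the paper's own evaluation code and score scale are not shown in this section.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: the average of |target - prediction|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error: the square root of the mean squared difference."""
    squared = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    return math.sqrt(squared / len(y_true))


# Hypothetical maturity scores, for illustration only (not from the paper).
y_true = [20.0, 50.0, 80.0, 95.0]
y_pred = [24.0, 47.0, 85.0, 95.0]

print(f"MAE:  {mae(y_true, y_pred):.4f}")
print(f"RMSE: {rmse(y_true, y_pred):.4f}")
```

Because RMSE squares each error before averaging, it penalizes large individual errors more strongly than MAE, which is why the two columns in the table do not always rank the variants identically.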

| Models | Accuracy, total (%) | Accuracy, immature (%) | Accuracy, semi-mature (%) | Accuracy, mature (%) | Time (s) |
|---|---|---|---|---|---|
| Model | 95.51 a | 97.22 a | 98.84 a | 80.77 b | 0.98 b |
| YOLOX-s | 80.6 b | 83.4 b | 74.6 c | 83.8 a | 1.81 a |
| Faster R-CNN | 83.6 b | 85.9 b | 89.9 b | 75 c | 1.89 a |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zheng, H.; Sun, L.; Wang, Y.; Yang, H.; Zhang, S. Image-Based Detection of Chinese Bayberry (Myrica rubra) Maturity Using Cascaded Instance Segmentation and Multi-Feature Regression. Horticulturae 2025, 11, 1166. https://doi.org/10.3390/horticulturae11101166