Evaluation of Convolutional Neural Network-Based Posture Identification Model of Older Adults: From Silhouette of Sagittal Photographs

Abstract

1. Introduction

2. Methods

2.1. Raw Images and Correct Labels in Supervised Data

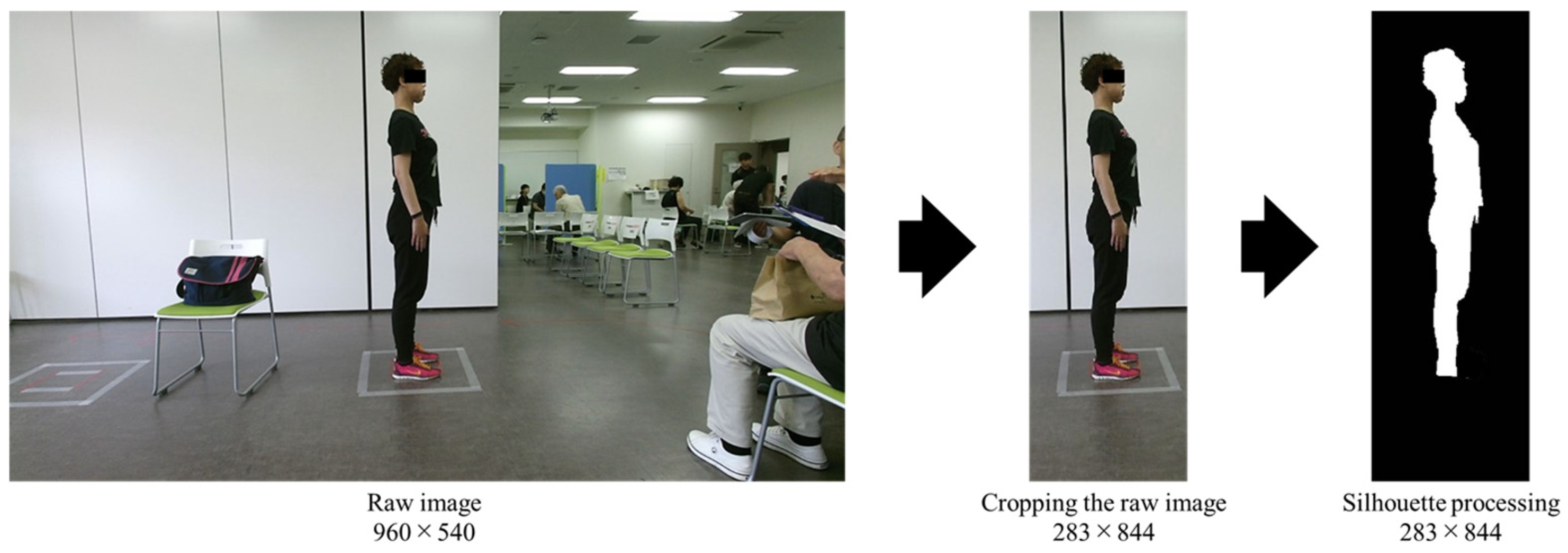

2.2. Pre-Processing of Raw Images

2.3. Model Construction

2.4. Evaluation of Models

3. Results

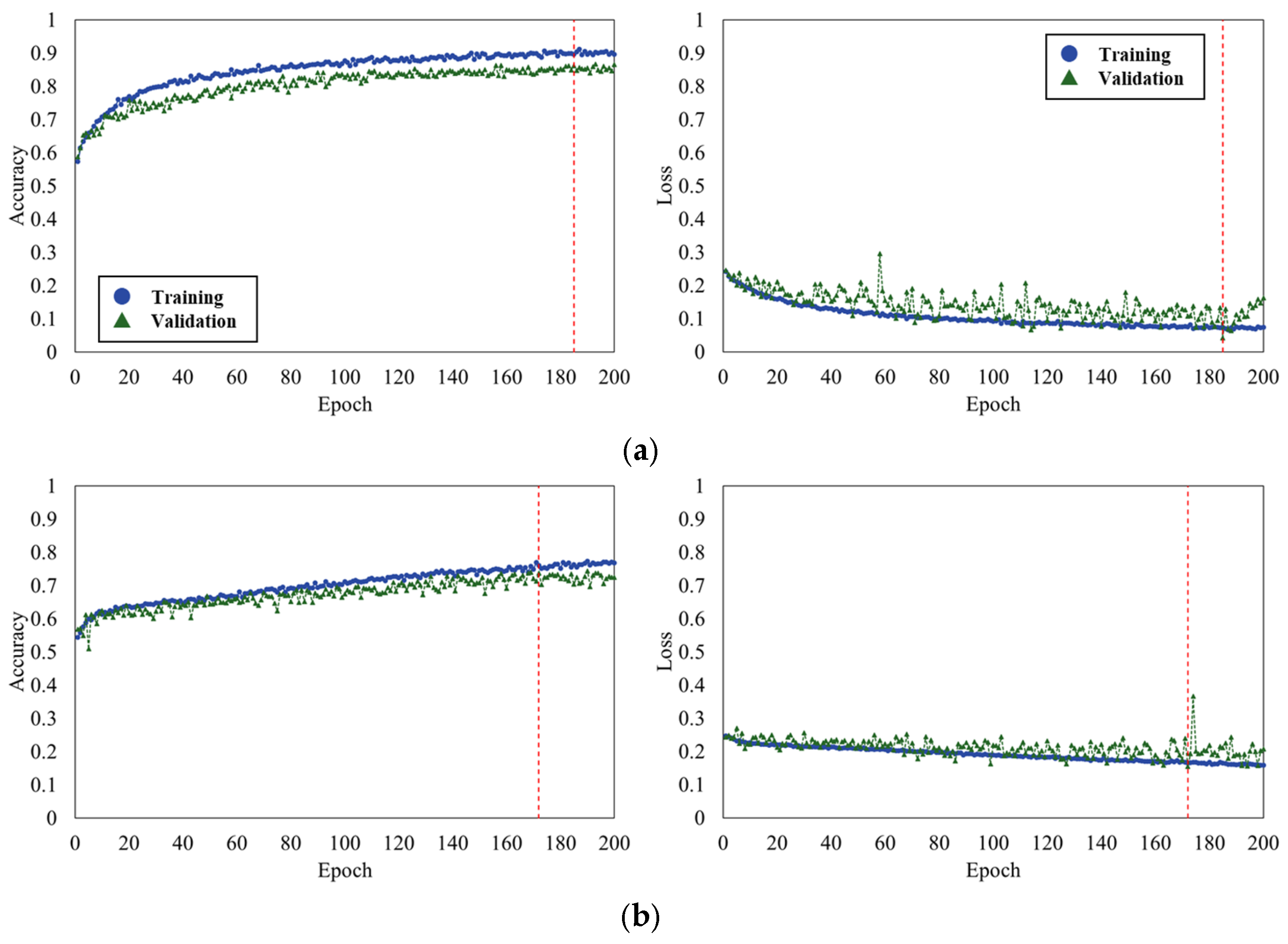

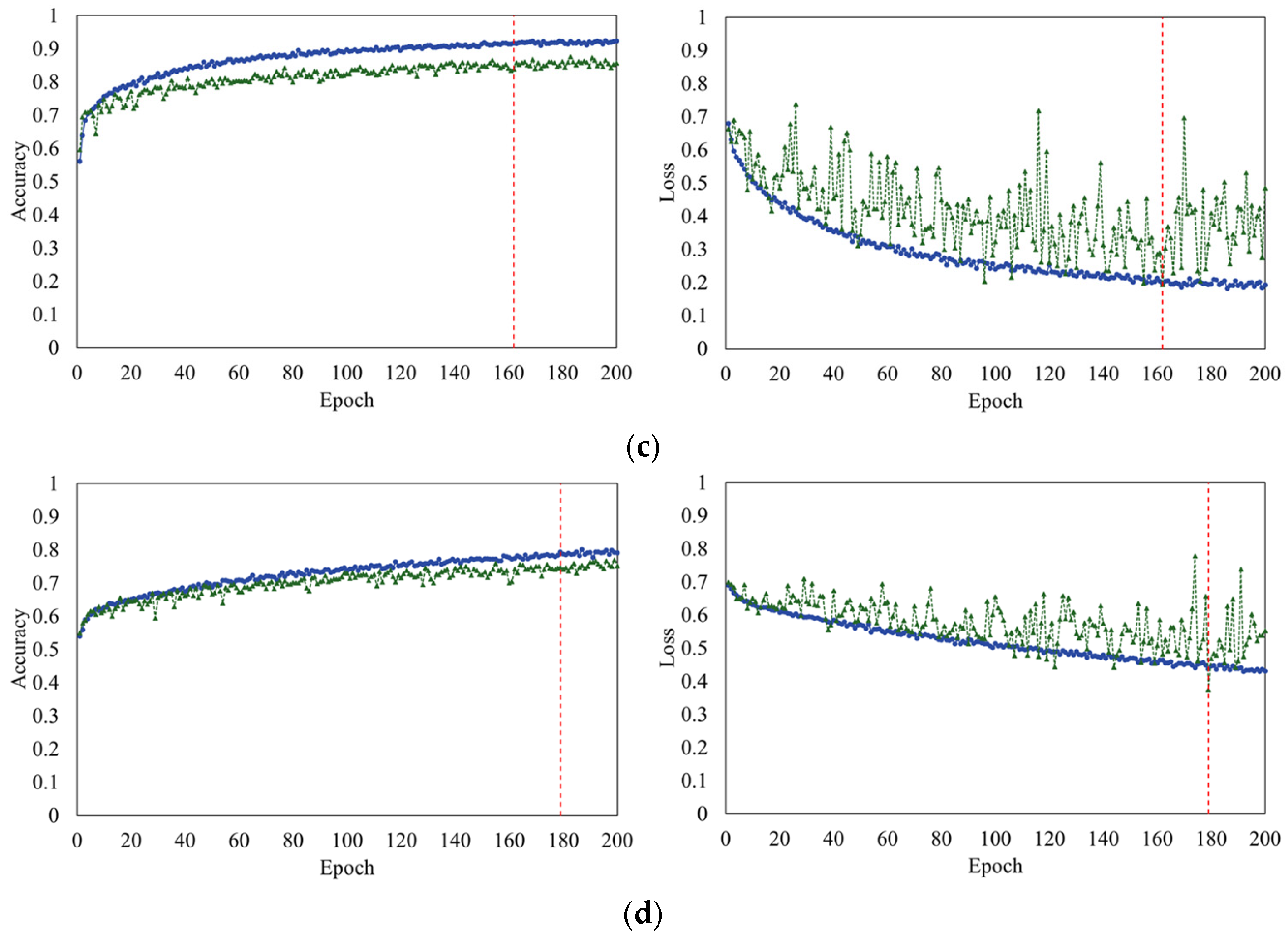

3.1. Accuracy and Loss During Model Construction

3.2. Agreement of Output and Correct Label Using the Test Set

4. Discussion

4.1. Performance in the Four Models

4.2. Performance of the Two MSE Models Using the Test Set

4.3. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Name | Version |
|---|---|
| Python | 3.6.10 |
| CUDA Toolkit | 10.1 |
| cuDNN | 7.6.4 |
| Keras | 2.3.1 |
| TensorFlow-gpu | 2.1.0 |

(a)

| Output label | MSE & Adam: correct Ideal | MSE & Adam: correct Non-ideal | MSE & SGD: correct Ideal | MSE & SGD: correct Non-ideal |
|---|---|---|---|---|
| Ideal | 2427 | 87 | 2177 | 717 |
| Non-ideal | 57 | 2913 | 307 | 2283 |

(b)

| Output label | MSE & Adam: correct Ideal | MSE & Adam: correct Non-ideal | MSE & SGD: correct Ideal | MSE & SGD: correct Non-ideal |
|---|---|---|---|---|
| Ideal | 735 | 156 | 665 | 339 |
| Non-ideal | 93 | 844 | 163 | 661 |

(c)

| Output label | MSE & Adam: correct Ideal | MSE & Adam: correct Non-ideal | MSE & SGD: correct Ideal | MSE & SGD: correct Non-ideal |
|---|---|---|---|---|
| Ideal | 696 | 149 | 636 | 331 |
| Non-ideal | 132 | 851 | 192 | 669 |
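Section 3.2 assesses agreement between the model output and the correct label, but the statistic used is not shown in this extract. As a hedged sketch, assuming a chance-corrected measure such as Cohen's kappa is of interest, it can be computed directly from the counts in panels (a) to (c). The values printed by this code are computed here and are not figures reported by the authors; the helper name `cohens_kappa` and the `TABLES` layout are illustrative.

```python
"""Cohen's kappa for the 2x2 output-vs-correct-label tables (illustrative sketch)."""

# Rows = output label (Ideal, Non-ideal); columns = correct label (Ideal, Non-ideal).
# Counts transcribed from panels (a)-(c) above.
TABLES = {
    ("a", "MSE & Adam"): [[2427, 87], [57, 2913]],
    ("a", "MSE & SGD"): [[2177, 717], [307, 2283]],
    ("b", "MSE & Adam"): [[735, 156], [93, 844]],
    ("b", "MSE & SGD"): [[665, 339], [163, 661]],
    ("c", "MSE & Adam"): [[696, 149], [132, 851]],
    ("c", "MSE & SGD"): [[636, 331], [192, 669]],
}


def cohens_kappa(matrix):
    """Return Cohen's kappa for a square confusion matrix given as a list of lists."""
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    # Observed agreement: share of cases on the diagonal.
    p_observed = sum(matrix[i][i] for i in range(k)) / n
    # Chance agreement expected from the row (output) and column (correct) totals.
    row_totals = [sum(matrix[i]) for i in range(k)]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    p_expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2
    return (p_observed - p_expected) / (1 - p_expected)


for (panel, model), table in TABLES.items():
    print(f"({panel}) {model}: kappa = {cohens_kappa(table):.3f}")
```

Unlike raw accuracy, kappa discounts the agreement expected by chance from the marginal totals, which matters when the two classes are not equally frequent.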

(a)

| Metric | MSE & Adam (%) | MSE & SGD (%) |
|---|---|---|
| Accuracy | 97 | 81 |
| Sensitivity | 97 | 76 |
| Specificity | 98 | 88 |

(b)

| Metric | MSE & Adam (%) | MSE & SGD (%) |
|---|---|---|
| Accuracy | 86 | 73 |
| Sensitivity | 84 | 66 |
| Specificity | 89 | 80 |

(c)

| Metric | MSE & Adam (%) | MSE & SGD (%) |
|---|---|---|
| Accuracy | 85 | 71 |
| Sensitivity | 85 | 67 |
| Specificity | 84 | 77 |
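The percentages in the metrics tables can be recomputed from the confusion matrices. The minimal Python sketch below assumes that sensitivity and specificity are defined with Non-ideal as the positive class. This convention is inferred here, not stated in the extract: it is the one under which every printed percentage is reproduced after rounding, whereas treating Ideal as positive would give 98% sensitivity and 97% specificity for panel (a), MSE & Adam. The names `COUNTS`, `REPORTED`, and `rates` are illustrative.

```python
"""Recompute accuracy, sensitivity, and specificity from the confusion matrices."""

# Tuple order: (output Ideal & correct Ideal, output Ideal & correct Non-ideal,
#               output Non-ideal & correct Ideal, output Non-ideal & correct Non-ideal)
COUNTS = {
    ("a", "MSE & Adam"): (2427, 87, 57, 2913),
    ("a", "MSE & SGD"): (2177, 717, 307, 2283),
    ("b", "MSE & Adam"): (735, 156, 93, 844),
    ("b", "MSE & SGD"): (665, 339, 163, 661),
    ("c", "MSE & Adam"): (696, 149, 132, 851),
    ("c", "MSE & SGD"): (636, 331, 192, 669),
}

# Percentages as printed in the metrics tables: (accuracy, sensitivity, specificity).
REPORTED = {
    ("a", "MSE & Adam"): (97, 97, 98),
    ("a", "MSE & SGD"): (81, 76, 88),
    ("b", "MSE & Adam"): (86, 84, 89),
    ("b", "MSE & SGD"): (73, 66, 80),
    ("c", "MSE & Adam"): (85, 85, 84),
    ("c", "MSE & SGD"): (71, 67, 77),
}


def rates(ii, i_n, ni, nn, positive="Non-ideal"):
    """Return (accuracy, sensitivity, specificity) in percent for the chosen positive class."""
    if positive == "Non-ideal":
        tp, fn, tn, fp = nn, i_n, ii, ni  # a missed Non-ideal is output as Ideal
    else:  # treat Ideal as the positive class
        tp, fn, tn, fp = ii, ni, nn, i_n
    total = tp + fn + tn + fp
    accuracy = 100 * (tp + tn) / total
    sensitivity = 100 * tp / (tp + fn)  # true positive rate
    specificity = 100 * tn / (tn + fp)  # true negative rate
    return accuracy, sensitivity, specificity


for key, counts in COUNTS.items():
    computed = tuple(round(v) for v in rates(*counts))
    status = "matches" if computed == REPORTED[key] else "differs from"
    print(f"({key[0]}) {key[1]}: {computed} {status} reported {REPORTED[key]}")
```

Calling `rates(*COUNTS[("a", "MSE & Adam")], positive="Ideal")` swaps the two rates, which shows how much the class-coding convention affects the reported sensitivity and specificity.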

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sugiyama, N.; Kai, Y.; Koda, H.; Morihara, T.; Kida, N. Evaluation of Convolutional Neural Network-Based Posture Identification Model of Older Adults: From Silhouette of Sagittal Photographs. Geriatrics 2025, 10, 49. https://doi.org/10.3390/geriatrics10020049
