Abstract

While cycling offers environmental benefits and promotes a healthy lifestyle, the risks associated with overtaking maneuvers by motorized vehicles represent a significant barrier for many potential cyclists. A large-scale analysis of overtaking maneuvers could help traffic researchers and city planners understand these maneuvers better and thereby reduce the associated risks. Drawing from the fields of sensor-based cycling research and LiDAR-based traffic data sets, this paper takes a step towards addressing these safety concerns by introducing the Salzburg Bicycle 3d (SaBi3d) data set, which consists of LiDAR point clouds capturing car-to-bicycle overtaking maneuvers. The data set, collected using a LiDAR-equipped bicycle, enables automatic labeling by a neural network and thus facilitates the detailed analysis of a large quantity of overtaking maneuvers without the need for further manual annotation. Additionally, a benchmark result for 3D object detection using a competitive neural network is provided as a baseline for future research. The SaBi3d data set is structured identically to the nuScenes data set and therefore offers compatibility with numerous existing object detection systems. This work provides valuable resources for future researchers to better understand cycling infrastructure and mitigate risks, thus promoting cycling as a viable mode of transportation.

Dataset

https://osf.io/k7cg9 (accessed on 18 July 2024).

Dataset License

CC-By Attribution 4.0 International.

1. Summary

Cycling has multiple advantages over other modes of transport, such as reducing emissions in city centers [1] and promoting a healthy lifestyle [2]. Therefore, the European Union and some of its member states, such as Austria, have declared the goal of increasing the bicycle modal share [3,4]. However, cycling is one of the most dangerous modes of transport [5]. Despite its positive effects, it involves a higher risk of fatality than other transport modes, especially when coupled with inadequate cycling infrastructure [6].

Longitudinal overtaking maneuvers by motorized vehicles contribute most to a feeling of subjective risk, thus presenting a barrier to cycling participation [7]. In addition, these maneuvers can lead to severe injury in the event of an accident [8]. Therefore, many studies in recent years have tried to capture the characteristics of overtaking maneuvers. Moreover, sensor-equipped bicycles have become a widespread tool in cycling research [9]. On commercially available bikes, only a few sensors and assistance systems are available. Kapousizis et al. [10] provide an excellent categorization of these and possible future systems into different levels of “smartness”, depending on their capabilities.

Most works on overtaking maneuvers capture limited data such as the minimum lateral clearance and overtaking velocity at a single point [11,12,13], providing only a snapshot of an overtaking maneuver without capturing data representing the whole maneuver, which is a complex process. On the other hand, works relying on more detailed data, such as LiDAR point clouds, often require manual annotation, which is time-consuming and expensive [14,15]. A third approach is to use test tracks and equipment with extensive sensor coverage [16,17]. However, naturalistic studies are often preferable to obtain results representative of real-world interactions. Therefore, it would be desirable to have a system that records point cloud data from an instrumented bicycle, automatically detecting vehicles in the data and extracting rich metrics. This would allow for a very detailed description of overtaking maneuvers in naturalistic environments without the need for further manual annotation, thereby removing the drawbacks mentioned above.

To this end, we present a new data set, the Salzburg Bicycle 3d (SaBi3d) data set, which is a first step towards bridging this gap. It consists of 59 overtaking maneuvers manually annotated in a total of 4811 point clouds collected by a LiDAR-equipped bicycle. Following the categorization by [10], this can also be seen as a preliminary step in enabling Smart Bicycles to reach level 2 on the smartness scale, i.e., being capable of detecting surrounding vehicles and avoiding collisions. This data set can be seamlessly substituted for the nuScenes [18] data set for object detection tasks without any additional adaptations.

2. Materials and Methods

To the best of the authors’ knowledge, no LiDAR data sets collected by a smart bicycle exist. This section begins with a short review of the related research areas, which provides the necessary context for understanding and using this data set. Subsequently, the data collection method using a LiDAR-equipped bicycle and the data preparation are discussed, followed by a description of the metadata. Finally, the creation of the object detection benchmark results is outlined.

2.1. Related Work

There are two research areas related to this work. The first is cycling research that uses LiDAR and, more generally, laser-based distance measurements. LiDAR scanners have only recently become small, light, and cheap enough to be mounted on bicycles, so there are only a limited number of works in this area. The second area is LiDAR-based traffic data sets collected by LiDARs mounted on a moving platform, which was a motorized vehicle in all the referenced works. This is a wide area of study; therefore, only the most relevant works are listed. Finally, this section provides a short introduction to the 3D object detection system used for the benchmark results and its surrounding research.

2.1.1. Lasers and LiDARs on Bicycles

There are multiple recent works on using single-beam laser range finders on bicycles. Most often, they are used for measuring lateral clearance during overtaking maneuvers [7,11,12,13,17]. Another idea is to actively control the orientation of a single laser-based distance sensor to search for and track individual cars. This approach was developed and extensively investigated in a series of works by Jeon and Rajamani [19,20,21,22,23]. This led to a LiDAR-based collision warning system on a bicycle [24,25], an approach also pursued by other authors [26]. One work first collected maps of the infrastructure and then detected moving objects by comparing newly collected data to these maps [27]. This approach, however, is not sufficiently scalable for widespread use in collision warning systems.

LiDARs have also been used for analyzing cycling infrastructure. One work uses LiDARs on a bicycle to differentiate the cycling path from the surroundings [28]. It utilizes the same bike and sensor setup as this work. The collected data, however, are unique to each paper, with [28] focusing on bicycle paths with limited to no traffic, and this work purposefully focusing on roads with a significant number of overtaking maneuvers. Due to the different aims of these studies, i.e., semantic segmentation in [28] and 3D object detection in this work, the provided labels also differ in both structure and the contained information. Another work uses LiDARs found in current smartphones and tablets for detecting infrastructure damage [29]. So far, however, there has been no detailed analysis of traffic situations using LiDARs on a bicycle, although such works have been planned [30].

2.1.2. Autonomous Driving Data Sets

Within the area of autonomous driving for motorized road vehicles, the task of locating vehicles in LiDAR data has been worked on extensively in recent years. Therefore, research and especially data sets from this area can be used as a basis for similar research regarding LiDARs on bicycles. Commonly used data sets in this area are KITTI [31], nuScenes [18], and Waymo [32]. KITTI is the pioneering but by now outdated data set in this area, containing only a fraction of the scenes compared to newer ones and only containing a limited amount of variation in weather, road, and lighting conditions. The Waymo data set is of similar structure and format to nuScenes but is not as frequently used for object detection algorithms. While some recent well-performing approaches are implemented for both nuScenes and KITTI [33,34], most are only implemented for nuScenes [35,36,37,38,39], which is why this data set was chosen as a kind of blueprint for the SaBi3d data set. However, the major shortcomings of this data set for the task at hand are as follows: (1) The data were recorded from a car; (2) The sequences are short (approximately 20 s), which makes it unlikely to capture complete overtaking maneuvers.

nuScenes uses its own nuScenes Detection Score (NDS) as an evaluation metric, which is composed of different Mean Average Precision (mAP) scores and True Positive scores for the different classes [18]. While multiple classes are annotated in the nuScenes data set, this is not necessary for the SaBi3d data set since only car-to-bicycle overtaking maneuvers are of interest. Therefore, the mAP of the class ‘car’ was taken as the evaluation metric, which was also common practice in earlier data sets [31,40].
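To make the metric concrete, the following is a minimal sketch of how average precision for a single class such as ‘car’ can be computed from scored detections. It is a simplification and not the nuScenes devkit implementation: it assumes that detections are matched to ground truth by ground-plane center distance at a single threshold (`dist_thresh`, a hypothetical value), that all boxes belong to one frame, and that the plain, uninterpolated precision average is used. The function name and the input layout are illustrative assumptions.

```python
import math


def average_precision(detections, ground_truths, dist_thresh=2.0):
    """Simplified single-class AP (illustrative, not the devkit code).

    detections:    list of (score, x, y) predicted box centers
    ground_truths: list of (x, y) annotated box centers
    dist_thresh:   maximum center distance in meters for a match (assumed value)
    """
    if not ground_truths:
        return 0.0

    matched = set()   # indices of ground-truth boxes already claimed
    hits = []         # True for a true positive, False for a false positive

    # Process detections from most to least confident.
    for _score, x, y in sorted(detections, key=lambda d: -d[0]):
        best_idx, best_dist = None, dist_thresh
        for idx, (gx, gy) in enumerate(ground_truths):
            if idx in matched:
                continue
            dist = math.hypot(x - gx, y - gy)
            if dist < best_dist:
                best_idx, best_dist = idx, dist
        if best_idx is None:
            hits.append(False)
        else:
            matched.add(best_idx)
            hits.append(True)

    # Sum the precision at every rank where a new ground truth is recalled.
    true_positives = 0
    precision_sum = 0.0
    for rank, is_hit in enumerate(hits, start=1):
        if is_hit:
            true_positives += 1
            precision_sum += true_positives / rank
    return precision_sum / len(ground_truths)


if __name__ == "__main__":
    dets = [(0.9, 0.0, 0.0), (0.8, 10.0, 10.0), (0.7, 5.0, 5.0)]
    gts = [(0.0, 0.5), (5.0, 5.5)]
    print(round(average_precision(dets, gts), 3))  # 0.833
```

Multiplying the returned value by 100 gives a score on the 0 to 100 scale used for the mAP values reported in this paper.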

2.1.3. 3D Object Detection

3D object detection algorithms are almost exclusively based on neural networks [41,42]. Object detection was originally a computer vision task performed on image data [43], but has recently been adapted for point cloud data [44].

A 3D object detection algorithm consists of the following three components: (1) point cloud representation; (2) feature extraction; (3) core object detection [42,44,45].

As a benchmark algorithm, VISTA [35] was chosen since it scored highest on the nuScenes leaderboard at the time. In the pre-processing step, point clouds are voxelized along the x, y, and z axes within a predefined range for each axis. Then, 3D feature maps are extracted according to a method proposed by [46]. They, in turn, use submanifold sparse convolution [47,48,49], following the work of [39]. These 3D feature maps are then collapsed twice into 2D feature maps: once into a bird’s-eye view and once into a range view, i.e., front view [39]. Then, further UNet-like [50] convolution operations are performed on the two 2D feature maps [35]. The VISTA module then takes both of the resulting feature maps as inputs [51,52]. The input features of the two views are projected into queries, keys, and values. The authors of VISTA also propose a decoupling of the classification and regression tasks, further projecting the queries and keys into separate task-specific representations via 1D convolution [35]. Attention weights are calculated as in [53] for both of the feature maps.

The outputs are then fed into individual Feed-Forward Networks for the final results [35].

The core object detector can be varied, but the Object as Hotspots detector [51] yielded the best results [35]. Therefore, the same setup was used for the SaBi3d benchmark algorithm.

Before training, the data were augmented according to [39] in order to counteract the class imbalance present in the nuScenes data set.
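The voxelization step in the pre-processing described above can be sketched as follows. This is a minimal illustration and not the VISTA implementation: the function name, the axis ranges, and the voxel size are hypothetical values chosen only for the example, and real pipelines typically also cap the number of points per voxel.

```python
from collections import defaultdict


def voxelize(points, range_min, range_max, voxel_size):
    """Group 3D points into voxels inside an axis-aligned range.

    points:     iterable of (x, y, z) tuples in meters
    range_min:  (x_min, y_min, z_min), inclusive lower bounds
    range_max:  (x_max, y_max, z_max), exclusive upper bounds
    voxel_size: (dx, dy, dz) edge lengths of one voxel in meters
    Returns a dict mapping integer voxel indices (ix, iy, iz) to point lists.
    """
    voxels = defaultdict(list)
    for point in points:
        inside = all(lo <= c < hi
                     for c, lo, hi in zip(point, range_min, range_max))
        if not inside:
            continue  # points outside the predefined range are dropped
        index = tuple(int((c - lo) // size)
                      for c, lo, size in zip(point, range_min, voxel_size))
        voxels[index].append(point)
    return dict(voxels)


if __name__ == "__main__":
    # Hypothetical range and voxel size, for illustration only.
    cloud = [(0.1, 0.1, 0.1), (0.15, 0.12, 0.05), (5.0, 5.0, 5.0), (100.0, 0.0, 0.0)]
    grid = voxelize(cloud, (-10.0, -10.0, -2.0), (10.0, 10.0, 6.0), (0.5, 0.5, 0.5))
    for index, members in sorted(grid.items()):
        print(index, len(members))
```

Because every point is reduced to the index of the cell that contains it, the representation depends on where points fall rather than on the order in which a sensor sampled them.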

2.2. Data Collection

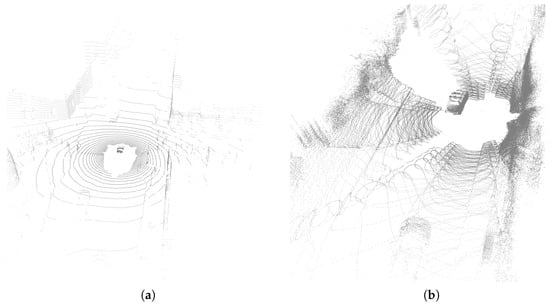

The data were collected using a Holoscene Edge bike by Boréal Bikes (see Note 1), as displayed in Figure 1. While this bicycle was equipped with multiple cameras, LiDAR sensors, IMUs, GNSS, and further sensors to measure brake activation, humidity, and temperature, only the LiDAR sensors were used for this work. This was done to gain a more nuanced picture of the overtaking maneuver while preserving accurate depth information. The LiDAR sensors were Horizon LiDAR sensors by Livox [54]. Their scanning pattern is different from the scanning pattern used in previous data sets, such as KITTI and nuScenes, which use rotating sensors. The Livox Horizon uses a scanning pattern in the form of a recumbent eight. The difference can be seen in Figure 2. Point clouds were sampled with a frequency of 5 Hz. The bicycle was equipped with five LiDAR sensors of this type. Since each of them had a horizontal field of view (FOV) of 81.7°, a full 360° FOV could be achieved by positioning them appropriately.
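The coverage argument can be checked with a short calculation. The sketch below uses only the figures stated above (five sensors, 81.7° horizontal FOV, 5 Hz); the even spacing of the sensors around the bicycle is an assumption made for the overlap estimate, since the exact mounting angles are not given here.

```python
import math

SENSOR_COUNT = 5             # stated in the text
HORIZONTAL_FOV_DEG = 81.7    # stated in the text
SAMPLING_RATE_HZ = 5         # stated in the text

# Fewest sensors of this type that can close a full circle.
min_sensors = math.ceil(360.0 / HORIZONTAL_FOV_DEG)

# Combined coverage and the surplus that appears as overlap.
combined_fov_deg = SENSOR_COUNT * HORIZONTAL_FOV_DEG
surplus_deg = combined_fov_deg - 360.0

# Assumption: sensors evenly spaced, so the surplus is shared equally
# between the five pairs of neighboring sensors.
overlap_per_pair_deg = surplus_deg / SENSOR_COUNT

# Time between two consecutive point clouds.
frame_interval_ms = 1000.0 / SAMPLING_RATE_HZ

if __name__ == "__main__":
    print(min_sensors)                      # 5
    print(round(combined_fov_deg, 1))       # 408.5
    print(round(overlap_per_pair_deg, 1))   # 9.7
    print(frame_interval_ms)                # 200.0
```

Four sensors would cover only 326.8°, so five is the minimum for a closed 360° view with this sensor type.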

Figure 1.

The instrumented Holoscene Edge bicycle by Boréal Bikes. ©wildbild/Salzburg Research.

Figure 2.

The different scanning patterns of the sensors used in nuScenes and SaBi3d. The nuScenes point clouds display a clearly visible circular scanning pattern, while the SaBi3d point clouds show the characteristics of the scanning pattern of the Livox Horizon sensors, resulting in a “recumbent eight” pattern of five individual sensors that were then merged into a single point cloud. (a) Scanning pattern used in the nuScenes data set; (b) scanning pattern of the Livox Horizon.

All overtaking maneuvers were collected by a cyclist riding alone in urban areas. Our main focus was on cycling as a mode of transport rather than as a sport. Therefore, we did not include data collected from within a group of cyclists. Furthermore, other riders in a group might have occluded the LiDAR sensors.

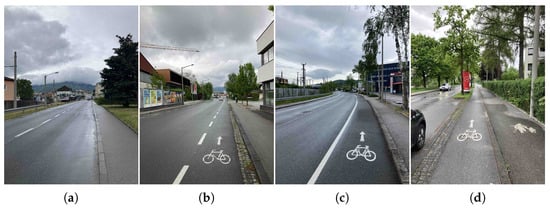

The data were collected on weekday afternoons in October 2022 in Salzburg, Austria. The data were taken from four different locations, which were selected in such a way that a variety of speed limits (30, 40, and 50 km/h) and types of bicycle infrastructure (share-the-road, suggested cycle lane, cycle lane, cycle track) would be captured, as shown in Figure 3 and Table 1. All scenes were recorded on urban two-lane roads. Another criterion for the selection of suitable locations was a road width of 6 to 8.5 m. This narrow width was expected to lead to close overtaking maneuvers. We focused on the more problematic types of infrastructure, e.g., narrow urban streets with relevant amounts of traffic. However, we expect a system trained on our data to be suited to wider rural roads as well.

Figure 3.

Different kinds of cycling infrastructure present in the data set. (a) Share-the-road; (b) suggested cycle lane; (c) cycle lane; (d) cycle track.

Table 1.

Information about the speed limits and predominant cycling infrastructure of captured scenes. The first column specifies the character sequence on which the scene token ends. The second column denotes the speed limit of the respective road section. The last column refers to the predominant cycling infrastructure. Other types are included for short sections since cycling infrastructure frequently changes within urban environments.

2.3. Data Preparation

After the data were recorded, the individual point clouds of the sensors were merged into one point cloud for each point in time [28]. The timestamps of the individual point clouds varied slightly, so each was mapped to the closest multiple of 200 ms. The point clouds were further transformed into the format of the nuScenes point clouds.
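The timestamp alignment and merging can be sketched as below. This is an illustrative reconstruction under stated assumptions, not the authors' code: timestamps are taken to be integer milliseconds, the points of every sensor are assumed to be already expressed in a common bicycle coordinate frame, and the function names are hypothetical.

```python
from collections import defaultdict

FRAME_INTERVAL_MS = 200  # 5 Hz sampling corresponds to one frame every 200 ms


def snap_to_frame(timestamp_ms):
    """Map an integer millisecond timestamp to the closest multiple of 200 ms.

    Integer arithmetic is used so that exact ties (e.g. 100 ms) round up
    deterministically.
    """
    half = FRAME_INTERVAL_MS // 2
    return (timestamp_ms + half) // FRAME_INTERVAL_MS * FRAME_INTERVAL_MS


def merge_sensor_clouds(sensor_clouds):
    """Merge per-sensor clouds that fall onto the same 200 ms frame.

    sensor_clouds: iterable of (timestamp_ms, points) pairs, where points is
                   a list of (x, y, z) tuples in a shared coordinate frame
                   (assumption: extrinsic calibration was applied beforehand).
    Returns a dict {frame_timestamp_ms: merged_points}, ordered by time.
    """
    merged = defaultdict(list)
    for timestamp_ms, points in sensor_clouds:
        merged[snap_to_frame(timestamp_ms)].extend(points)
    return dict(sorted(merged.items()))


if __name__ == "__main__":
    recordings = [
        (1003, [(1.0, 0.0, 0.0)]),   # sensor A
        (997, [(0.0, 1.0, 0.0)]),    # sensor B, slightly earlier
        (1195, [(2.0, 0.0, 0.0)]),   # sensor A, next frame
        (1101, [(0.0, 2.0, 0.0)]),   # sensor B, closer to the next frame
    ]
    for frame, points in merge_sensor_clouds(recordings).items():
        print(frame, len(points))
```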

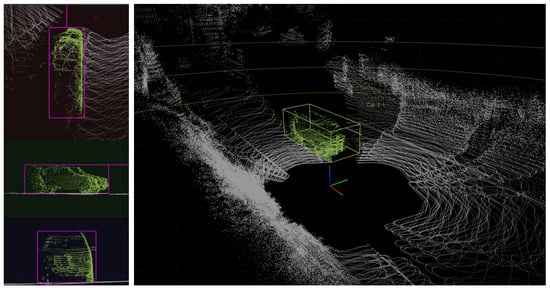

Using the open-source annotation tool SUSTechPOINTS [55] (see Note 2), cars were annotated in four sequences, resulting in approximately 10,000 bounding boxes in 4811 frames, which corresponds to approximately 17 min of recordings. The functionality of this tool is displayed and described in Figure 4.

Figure 4.

The interface of the SUSTechPOINTS annotation tool. On the left, three different projections are displayed and the bounding boxes can be adjusted from there. On the right, the whole 3D point cloud can be viewed.

To allow for the evaluation of training results with this data set, the SaBi3d data set was split into a training split and validation split. The validation split was supposed to contain approximately 15–20% of the total data. One scene was chosen according to its length, resulting in an 82/18% training/validation split.

2.4. Metadata Generation

Furthermore, metadata in the format of nuScenes were generated. This allows for the seamless substitution of the SaBi3d data set for the nuScenes data set when training 3D object detection algorithms. The nuScenes metadata form a database consisting of 13 tables, each of which has a primary key token; some tables are linked by foreign keys (see Note 3). An overview of the tables in the original nuScenes data set and the changes made for the SaBi3d data set is given in Table 2. The tables are split into three sections. The first set of tables was not changed at all. The second set was adjusted manually since only minor adjustments were needed. The third set of tables was generated from the recorded point cloud data themselves since this required iterating over the scenes, frames, or annotation files.

Table 2.

Description of the metadata of the nuScenes data set and the adjustments made in the SaBi3d data set.

Some seemingly unnecessary adjustments were made in order to guarantee that the nuScenes and SaBi3d data sets are seamlessly interchangeable. These involved the inclusion of a solid black PNG as map data and the definition of an ego pose at zero for every frame. Without them, further processing for object detection through the nuScenes devkit (see Note 4) would not be possible. The devkit is a popular tool provided by the creators of the data set that helps with pre-processing and evaluation and is used by many object detection algorithms [35,37,39,56].
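As an illustration of the ego pose adjustment, the sketch below generates one zero-pose record per frame. The field names (`token`, `timestamp`, `rotation`, `translation`) follow the public nuScenes `ego_pose` table schema; everything else is an assumption made for the example, including the function name, the use of random UUIDs as tokens, and the interpretation of "ego pose at zero" as a zero translation combined with an identity rotation.

```python
import json
import uuid


def make_zero_ego_poses(timestamps_us):
    """Create one nuScenes-style ego_pose record per frame timestamp.

    timestamps_us: frame timestamps in microseconds (nuScenes convention).
    Each record places the ego vehicle at the origin with no rotation.
    """
    records = []
    for timestamp in timestamps_us:
        records.append({
            "token": uuid.uuid4().hex,           # 32-character hex token (assumed scheme)
            "timestamp": timestamp,
            "rotation": [1.0, 0.0, 0.0, 0.0],    # identity quaternion (w, x, y, z)
            "translation": [0.0, 0.0, 0.0],      # ego position fixed at the origin
        })
    return records


if __name__ == "__main__":
    # Two hypothetical frames that are 200 ms apart.
    poses = make_zero_ego_poses([1_000_000, 1_200_000])
    print(json.dumps(poses, indent=2))
```

With a constant pose, all annotations effectively stay in the coordinate frame of the bicycle, which matches the goal of describing overtaking vehicles relative to the rider.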

In addition to the nuScenes-style annotation of cars in the individual point clouds, annotations for full vehicle trajectories were added as well. In particular, whether a vehicle performed an overtaking maneuver and whether it constituted oncoming traffic was annotated. This annotation was not needed for 3D object detection but will be useful for potential subsequent work on this data set focusing on the automatic detection of overtaking maneuvers.

2.5. 3D Object Detection Benchmark

Along with the data set itself, we provide 3D object detection benchmark results for comparison with future works using the data set. By doing this, we follow the example of the KITTI [31], Waymo [32], and nuScenes [18] papers, which provide baselines for each of their benchmark tasks by applying recent well-performing algorithms to their data set. As a baseline, the VISTA [35] algorithm was chosen (see Note 5). It was hypothesized that the voxelized point cloud representation would minimize the effect of the different scanning pattern of the Livox Horizon LiDAR sensors and that, therefore, a model trained on the nuScenes data set would be easily transferable to the SaBi3d data. However, the object detection algorithm was not the focus of our work; VISTA was merely chosen for a proof of concept. The implementation details and code for this algorithm can be found in [35]. Any other system aimed at nuScenes should also be able to employ the presented data set.

3. Results and Discussion

In this section, the training results of the VISTA [35] algorithm on the SaBi3d data set are presented and discussed. Furthermore, the general features and limitations of the data set are discussed.

3.1. Results of 3D Object Detection

While reproducing the results of VISTA [35] on nuScenes [18], it was discovered that the detection performance of VISTA relies heavily on the class-balanced grouping and sampling (CBGS) pre-processing step [39]. Without the resampling step, poor results were achieved (Table 3). While the original algorithm yielded results between 85.0 and 85.5 mAP out of a maximum of 100, the result without resampling was much lower at 53.6 mAP. It was in a similar range when training and evaluating only on the class ‘car’, i.e., 58.1 mAP. This posed a problem since the SaBi3d data set only includes a single class; therefore, resampling based on classes was not possible.

Table 3.

Evaluation results on nuScenes data set.

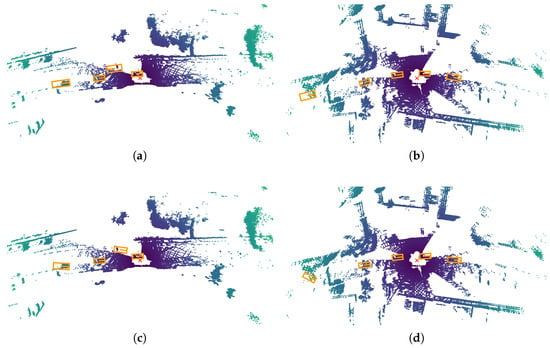

However, when evaluating the results on the SaBi3d evaluation split (Table 4), this proved not to be a problem. Evaluating the model trained on nuScenes data with resampling yielded very poor results (0.3 mAP). When fine-tuning this model on only the class ‘car’ from the nuScenes data set, a slight improvement to 12.6 mAP was observed. However, when fine-tuning it on the SaBi3d training data, an mAP of 80.2 on the SaBi3d evaluation data was achieved. Notably, training the model from uninitialized weights yielded 79.1 mAP, almost as high as the fine-tuned model. This shows that the expensive pre-training on the nuScenes data set is not necessary to achieve good results on the SaBi3d data set with current 3D object detection algorithms. Two frames were selected before training based on their ground truth labels in such a way that they exhibited interesting and difficult properties (the presence of occluded vehicles, oncoming traffic, and multiple motorized vehicles). The predictions for these frames are visualized in Figure 5.

Table 4.

Evaluation results on SaBi3d data set.

Figure 5.

Ground truth (a,b) and predictions by the model trained on the SaBi3d data set (c,d) on selected frames of the validation split of the SaBi3d data set. For the visualization, 3D bounding boxes are projected onto a 2D plane in a bird’s-eye view. The red ‘x’ marks the ego position of the recording vehicle, yellow boxes refer to the bounding boxes of cars, the dash in each bounding box denotes the front of the box, and the points on a color spectrum from purple to green mark points of increasing distance from the ego vehicle. The results are generally promising; only minor errors were made. For example, the orientation of the leftmost box in (d) is incorrect. The correct prediction of the location of the second box from the right in (c), which is a heavily occluded vehicle, is notable.
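The bird’s-eye-view projection used for such a visualization can be sketched as follows. This is a minimal illustration, not the plotting code behind Figure 5: it assumes that each box is described by its center, its length along the heading direction, its width, and a yaw angle measured counterclockwise from the x axis, and the function names are hypothetical.

```python
import math


def bev_box_corners(cx, cy, length, width, yaw):
    """Return the four ground-plane corners of a 3D box seen from above.

    The height and the z coordinate are simply dropped for the projection.
    Corner order: front-left, front-right, rear-right, rear-left.
    """
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    local = [
        (length / 2, width / 2),
        (length / 2, -width / 2),
        (-length / 2, -width / 2),
        (-length / 2, width / 2),
    ]
    # Rotate each local offset by yaw, then shift it to the box center.
    return [(cx + dx * cos_y - dy * sin_y, cy + dx * sin_y + dy * cos_y)
            for dx, dy in local]


def front_marker(cx, cy, length, yaw):
    """Midpoint of the front edge, usable for a dash marking the box front."""
    return (cx + length / 2 * math.cos(yaw), cy + length / 2 * math.sin(yaw))


if __name__ == "__main__":
    # Hypothetical car: 4 m long, 2 m wide, 10 m ahead, heading along +x.
    for corner in bev_box_corners(10.0, 2.0, 4.0, 2.0, 0.0):
        print(corner)
    print(front_marker(10.0, 2.0, 4.0, 0.0))
```

An orientation error like the one noted for panel (d) corresponds to a wrong `yaw` value: the box footprint may still cover the vehicle while the front marker points the wrong way.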

3.2. Features and Limitations of Data Set

The presented data set is novel in many ways, though limited in some. First, while this work only focuses on single-rider urban car-to-bicycle overtaking maneuvers, this is a common situation in urban traffic. This data set is the first publicly available one of this kind that addresses these situations from the perspective of cycling as a means of transport, in contrast to cycling as exercise. Second, the sensor setup of this data set was somewhat uncommon, leading to unusual point cloud patterns (cf. Figure 2). However, this was mitigated by the merging of the five individual point clouds into one. Furthermore, the data set was adapted to the nuScenes data format so that a completely seamless interchange of these data sets is possible, providing ease of use for other researchers. Third, while the amount of data was limited and not comparable with data sets in autonomous motorized vehicle research, this is the first publicly available LiDAR data set from the perspective of a cyclist.

4. Conclusions

To increase the modal share of cycling, which is desirable because of its environmental and health benefits, the objective and perceived characteristics of dangerous overtaking maneuvers need to be understood. A deeper understanding will enable traffic planners to implement measures that will enhance safety. This topic has been studied using a variety of sensors and methods. LiDAR is an obvious choice for measuring the required accurate distance and speed of overtaking cars; however, until now, the costly manual annotation step has presented a major obstacle to its usage. The presented SaBi3d data set is the first public annotated data set of LiDAR point clouds of car-to-bicycle overtaking maneuvers collected by the bicycle itself, allowing for training and evaluation of a corresponding object detection system. To facilitate the use of our data set, we chose to structure the data almost identically to the popular nuScenes data set, allowing it to be used in various existing object detection systems. We also trained the best system (at the time of our evaluation) according to the nuScenes 3D object detection benchmark on our data to provide strong baseline results. Any works using this data set can and should strive to achieve at least this level of object detection performance before pursuing any follow-up applications.

Future work might include creating similar data sets using different LiDAR setups for ease of use by other research groups. Another logical next step could be designing a tracking component on top of the benchmark system or another detection algorithm, together with a system for calculating safety metrics, to allow end-to-end assessments of overtaking maneuvers. Finally, using the same approach and structure, similar data sets could be created to cover other interesting maneuvers, e.g., at intersections.

Author Contributions

Conceptualization, C.O. and M.B.; methodology, C.O. and M.B.; software, C.O.; validation, C.O. and M.B.; formal analysis, C.O. and M.B.; investigation, C.O. and M.B.; resources, C.O. and M.B.; data curation, C.O. and M.B.; writing—original draft preparation, C.O.; writing—review and editing, C.O. and M.B.; visualization, C.O.; supervision, M.B.; project administration, M.B.; funding acquisition, M.B. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Austrian Federal Ministry for Climate Action, Environment, Energy, Mobility, Innovation and Technology (BMK) under Grant GZ 2021-0.641.557.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data set is available at https://osf.io/k7cg9 (accessed on 18 July 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| LiDAR | Light Detection and Ranging |
| NDS | nuScenes Detection Score |
| mAP | Mean Average Precision |
| VISTA | Dual Cross-VIew SpaTial Attention |
| FOV | Field of View |
| CBGS | Class-balanced Grouping and Sampling |

Notes

| Note | Text |
|---|---|
| 1 | https://www.borealbikes.com (accessed on 18 July 2024). |
| 2 | https://github.com/naurril/SUSTechPOINTS (accessed on 18 July 2024). |
| 3 | For a detailed description, see: https://www.nuscenes.org/nuscenes#data-format (accessed on 18 July 2024). |
| 4 | https://github.com/nutonomy/nuscenes-devkit (accessed on 18 July 2024). |
| 5 | Code available at https://github.com/Gorilla-Lab-SCUT/VISTA (accessed on 18 July 2024). |

References

- Lindsay, G.; Macmillan, A.; Woodward, A. Moving urban trips from cars to bicycles: Impact on health and emissions. Aust. N. Z. J. Public Health 2011, 35, 54–60.

- Buehler, R.; Pucher, J.R. (Eds.) Cycling for Sustainable Cities; Urban and industrial environments, The MIT Press: Cambridge, MA, USA; London, UK, 2021.

- European Union. European Declaration on Cycling; European Union: Brussels, Belgium, 2023; C/2024/2377.

- Illek, G.; Braun, L.; Jellinek, R.; Reidlinger, B.; Leindl, A.; Chiu, K.; Homola, T. Radverkehrsförderung in Österreich; Bundesministerium für Klimaschutz, Umwelt, Energie, Mobilität, Innovation und Technologie: Wien, Austria, 2022.

- Wegman, F.; Zhang, F.; Dijkstra, A. How to make more cycling good for road safety? Accid. Anal. Prev. 2012, 44, 19–29.

- European Transport Safety Council. How Safe is Walking and Cycling in Europe; PIN Flash Report 38; Technical report; European Transport Safety Council: Brussels, Belgium, 2020.

- Beck, B.; Perkins, M.; Olivier, J.; Chong, D.; Johnson, M. Subjective experiences of bicyclists being passed by motor vehicles: The relationship to motor vehicle passing distance. Accid. Anal. Prev. 2021, 155, 106102.

- Merk, J.; Eckart, J.; Zeile, P. Subjektiven Verkehrsstress objektiv messen–ein EmoCycling-Mixed-Methods-Ansatz. In Proceedings of the CITIES 20.50–Creating Habitats for the 3rd Millennium: Smart–Sustainable–Climate Neutral. Proceedings of the REAL CORP 2021, 26th International Conference on Urban Development, Regional Planning and Information Society, Vienna, Austria, 7–10 September 2021; pp. 767–778.

- Gadsby, A.; Watkins, K. Instrumented bikes and their use in studies on transportation behaviour, safety, and maintenance. Transp. Rev. 2020, 40, 774–795.

- Kapousizis, G.; Ulak, M.B.; Geurs, K.; Havinga, P.J. A review of state-of-the-art bicycle technologies affecting cycling safety: Level of smartness and technology readiness. Transp. Rev. 2023, 43, 430–452.

- Beck, B.; Chong, D.; Olivier, J.; Perkins, M.; Tsay, A.; Rushford, A.; Li, L.; Cameron, P.; Fry, R.; Johnson, M. How Much Space Do Drivers Provide When Passing Cyclists? Understanding the Impact of Motor Vehicle and Infrastructure Characteristics on Passing Distance. Accid. Anal. Prev. 2019, 128, 253–260.

- López, G.; Pérez-Zuriaga, A.M.; Moll, S.; García, A. Analysis of Overtaking Maneuvers to Cycling Groups on Two-Lane Rural Roads using Objective and Subjective Risk. Transp. Res. Rec. J. Transp. Res. Board 2020, 2674, 148–160.

- Moll, S.; López, G.; Rasch, A.; Dozza, M.; García, A. Modelling Duration of Car-Bicycles Overtaking Manoeuvres on Two-Lane Rural Roads Using Naturalistic Data. Accid. Anal. Prev. 2021, 160, 106317.

- Dozza, M.; Schindler, R.; Bianchi-Piccinini, G.; Karlsson, J. How Do Drivers Overtake Cyclists? Accid. Anal. Prev. 2016, 88, 29–36.

- Rasch, A. Modelling Driver Behaviour in Longitudinal Vehicle-Pedestrian Scenarios. Master’s Thesis, Chalmers University of Technology, Göteborg, Sweden, 2018.

- Rasch, A.; Boda, C.N.; Thalya, P.; Aderum, T.; Knauss, A.; Dozza, M. How Do Oncoming Traffic and Cyclist Lane Position Influence Cyclist Overtaking by Drivers? Accid. Anal. Prev. 2020, 142, 105569.

- Rasch, A.; Dozza, M. Modeling Drivers’ Strategy When Overtaking Cyclists in the Presence of Oncoming Traffic. IEEE Trans. Intell. Transp. Syst. 2022, 23, 2180–2189. [Google Scholar] [CrossRef]

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. arXiv 2020, arXiv:1903.11027. [Google Scholar]

- Jeon, W.; Rajamani, R. A novel collision avoidance system for bicycles. In Proceedings of the 2016 American Control Conference (ACC), Boston, MA, USA, 6–8 July 2016; pp. 3474–3479. [Google Scholar] [CrossRef]

- Jeon, W.; Rajamani, R. Active Sensing on a Bicycle for Accurate Tracking of Rear Vehicle Maneuvers. In Proceedings of the ASME 2016 Dynamic Systems and Control Conference, Minneapolis, MN, USA, 12–14 October 2016; Volume 2: Mechatronics; Mechatronics and Controls in Advanced Manufacturing; Modeling and Control of Automotive Systems and Combustion Engines; Modeling and Validation; Motion and Vibration Control Applications; Multi-Agent and Networked Systems; Path Planning and Motion Control; Robot Manipulators; Sensors and Actuators; Tracking Control Systems; Uncertain Systems and Robustness; Unmanned, Ground and Surface Robotics; Vehicle Dynamic Controls; Vehicle Dynamics and Traffic Control. p. V002T31A004. [Google Scholar] [CrossRef]

- Jeon, W.; Rajamani, R. Rear Vehicle Tracking on a Bicycle Using Active Sensor Orientation Control. IEEE Trans. Intell. Transp. Syst. 2018, 19, 2638–2649. [Google Scholar] [CrossRef]

- Jeon, W.; Rajamani, R. Active Sensing on a Bicycle for Simultaneous Search and Tracking of Multiple Rear Vehicles. IEEE Trans. Veh. Technol. 2019, 68, 5295–5308. [Google Scholar] [CrossRef]

- Jeon, W.; Xie, Z.; Craig, C.; Achtemeier, J.; Alexander, L.; Morris, N.; Donath, M.; Rajamani, R. A Smart Bicycle That Protects Itself: Active Sensing and Estimation for Car-Bicycle Collision Prevention. IEEE Control. Syst. Mag. 2021, 41, 28–57. [Google Scholar] [CrossRef]

- Xie, Z.; Rajamani, R. On-Bicycle Vehicle Tracking at Traffic Intersections Using Inexpensive Low-Density Lidar. In Proceedings of the 2019 American Control Conference (ACC), Philadelphia, PA, USA, 10–12 July 2019; pp. 593–598. [Google Scholar] [CrossRef]

- Xie, Z.; Jeon, W.; Rajamani, R. Low-Density Lidar Based Estimation System for Bicycle Protection. IEEE Trans. Intell. Veh. 2021, 6, 67–77. [Google Scholar] [CrossRef]

- Van Brummelen, J.; Emran, B.; Yesilcimen, K.; Najjaran, H. Reliable and Low-Cost Cyclist Collision Warning System for Safer Commute on Urban Roads. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 003731–003735.

- Muro, S.; Matsui, Y.; Hashimoto, M.; Takahashi, K. Moving-Object Tracking with Lidar Mounted on Two-wheeled Vehicle. In Proceedings of the 16th International Conference on Informatics in Control, Automation and Robotics, Prague, Czech Republic, 29–31 July 2019; SCITEPRESS-Science and Technology Publications: Setúbal, Portugal, 2019; pp. 453–459.

- Niedermüller, A.; Beeking, M. Transformer Based 3D Semantic Segmentation of Urban Bicycle Infrastructure. J. Locat. Based Serv. 2024, 1–23.

- Vogt, J.; Ilic, M.; Bogenberger, K. A Mobile Mapping Solution for VRU Infrastructure Monitoring via Low-Cost LiDAR-sensors. J. Locat. Based Serv. 2023, 17, 389–411.

- Yoshida, N.; Yamanaka, H.; Matsumoto, S.; Hiraoka, T.; Kawai, Y.; Kojima, A.; Inagaki, T. Development of Safety Measures of Bicycle Traffic by Observation with Deep-Learning, Drive Recorder Data, Probe Bicycle with LiDAR, and Connected Simulators. In Proceedings of the Contributions to the 10th International Cycling Safety Conference 2022 (ICSC2022), Technische Universität, Dresden, Germany, 8–10 November 2022; pp. 183–185.

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in Perception for Autonomous Driving: Waymo Open Dataset. arXiv 2020, arXiv:1912.04838.

- Erçelik, E.; Yurtsever, E.; Liu, M.; Yang, Z.; Zhang, H.; Topçam, P.; Listl, M.; Çaylı, Y.K.; Knoll, A. 3D Object Detection with a Self-supervised Lidar Scene Flow Backbone. In Proceedings of the Computer Vision–ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; Avidan, S., Brostow, G., Cissé, M., Farinella, G.M., Hassner, T., Eds.; Springer: Cham, Switzerland, 2022; pp. 247–265.

- Lu, Y.; Hao, X.; Li, Y.; Chai, W.; Sun, S.; Velipasalar, S. Range-Aware Attention Network for LiDAR-based 3D Object Detection with Auxiliary Point Density Level Estimation. arXiv 2022, arXiv:2111.09515.

- Deng, S.; Liang, Z.; Sun, L.; Jia, K. VISTA: Boosting 3D Object Detection via Dual Cross-VIew SpaTial Attention. arXiv 2022, arXiv:2203.09704.

- Li, Y.; Chen, Y.; Qi, X.; Li, Z.; Sun, J.; Jia, J. Unifying Voxel-based Representation with Transformer for 3D Object Detection. arXiv 2022, arXiv:2206.00630.

- Lee, J.; Koh, J.; Lee, Y.; Choi, J.W. D-Align: Dual Query Co-attention Network for 3D Object Detection Based on Multi-frame Point Cloud Sequence. arXiv 2022, arXiv:2210.00087.

- Zheng, W.; Hong, M.; Jiang, L.; Fu, C.W. Boosting 3D Object Detection by Simulating Multimodality on Point Clouds. arXiv 2022, arXiv:2206.14971.

- Zhu, B.; Jiang, Z.; Zhou, X.; Li, Z.; Yu, G. Class-balanced Grouping and Sampling for Point Cloud 3D Object Detection. arXiv 2019, arXiv:1908.09492.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Mao, J.; Shi, S.; Wang, X.; Li, H. 3D Object Detection for Autonomous Driving: A Review and New Outlooks. arXiv 2022, arXiv:2206.09474.

- Wu, Y.; Wang, Y.; Zhang, S.; Ogai, H. Deep 3D Object Detection Networks Using LiDAR Data: A Review. IEEE Sens. J. 2021, 21, 1152–1171.

- Liang, W.; Xu, P.; Guo, L.; Bai, H.; Zhou, Y.; Chen, F. A survey of 3D object detection. Multimed. Tools Appl. 2021, 80, 29617–29641.

- Zamanakos, G.; Tsochatzidis, L.; Amanatiadis, A.; Pratikakis, I. A comprehensive survey of LIDAR-based 3D object detection methods with deep learning for autonomous driving. Comput. Graph. 2021, 99, 153–181.

- Fernandes, D.; Silva, A.; Névoa, R.; Simões, C.; Gonzalez, D.; Guevara, M.; Novais, P.; Monteiro, J.; Melo-Pinto, P. Point-cloud based 3D object detection and classification methods for self-driving applications: A survey and taxonomy. Inf. Fusion 2021, 68, 161–191.

- Chen, Q.; Sun, L.; Cheung, E.; Yuille, A.L. Every View Counts: Cross-View Consistency in 3D Object Detection with Hybrid-Cylindrical-Spherical Voxelization. In Proceedings of the Advances in Neural Information Processing Systems, Online, 6–12 December 2020; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M.F., Lin, H., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 21224–21235.

- Graham, B. Spatially-sparse convolutional neural networks. arXiv 2014, arXiv:1409.6070.

- Graham, B. Sparse 3D convolutional neural networks. arXiv 2015, arXiv:1505.02890.

- Graham, B.; van der Maaten, L. Submanifold Sparse Convolutional Networks. arXiv 2017, arXiv:1706.01307.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv 2015, arXiv:1505.04597.

- Chen, Q.; Sun, L.; Wang, Z.; Jia, K.; Yuille, A. Object as Hotspots: An Anchor-Free 3D Object Detection Approach via Firing of Hotspots. arXiv 2020, arXiv:1912.12791.

- Yin, T.; Zhou, X.; Krähenbühl, P. Center-based 3D Object Detection and Tracking. arXiv 2021, arXiv:2006.11275.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

- Livox Tech. Livox Horizon: User Manual v1.0; Technical report; Livox Tech: Wanchai, Hong Kong, 2019.

- Li, E.; Wang, S.; Li, C.; Li, D.; Wu, X.; Hao, Q. SUSTech POINTS: A Portable 3D Point Cloud Interactive Annotation Platform System. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 1108–1115.

- Yan, Y.; Mao, Y.; Li, B. SECOND: Sparsely Embedded Convolutional Detection. Sensors 2018, 18, 3337.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).