Regression-Based Approach to Test Missing Data Mechanisms

Abstract

:1. Introduction

2. Existing Approaches for Testing Missing Data Mechanisms

2.1. Dixon Test

2.2. Little Test

2.3. Jamshidian and Jalal Test

2.4. Comparison of the Three Tests

3. Regression-Based Approach

3.1. Principle

3.2. Continuous Case

3.3. Binary Case

3.4. Categorical Case

3.5. Discussion

4. Simulation Study

4.1. General Setting

- Experiment set 1: independent data:

- Continuous data with a distribution;

- Continuous data with a distribution;

- Binary data with a Bernoulli distribution ;

- Polytomous data with a distribution.

- Experiment set 2: correlated data:

- Continuous data with different uniform and normal distributions.

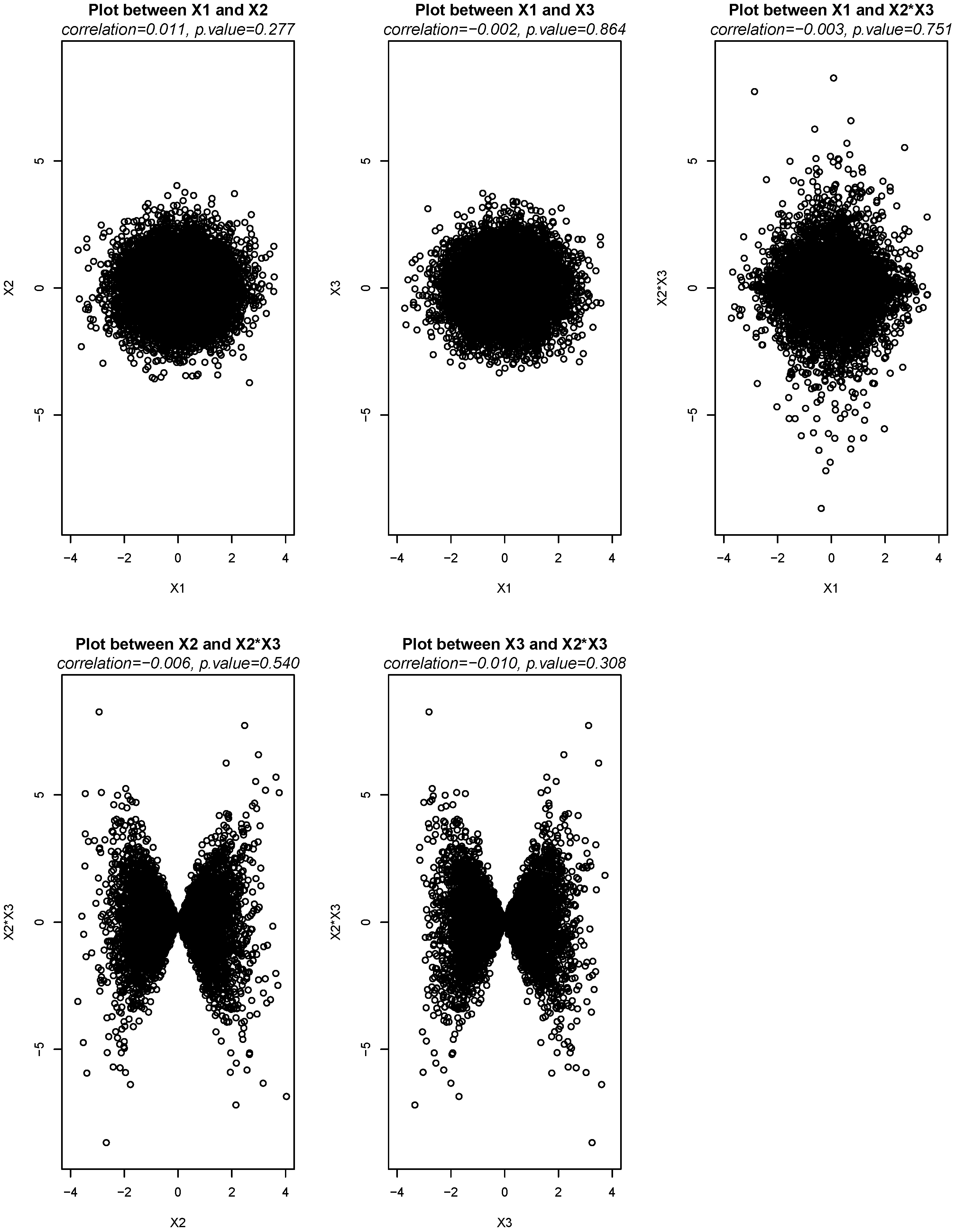

- ;

- ;

- ;

4.2. Simulated Missing Data Mechanisms

- MCAR: A random vector of size n containing uniformly distributed data between 0 and 1 is generated. Then, all data above the th percentile in are selected and the corresponding observations in are replaced with MD.Example. Let . In this case, is missing when the random vector is larger than its 80th percentile.

- MAR1: In the first MAR mechanism, the MD for are caused by only one other variable. One of the variables between and , say, , is randomly chosen to become the cause of the MD for . Then, all data above the th percentile in are selected, and the corresponding observations in are replaced with MD.Example. Let . In this case, is missing when is larger than its 20th percentile.

- MAR2: In the second MAR mechanism, the MD for are caused by two independent variables. Two variables between and , say, and , are randomly chosen as the cause of the MD for . Then, first, select all data above the th percentile in and replace the corresponding observations in with MD. Second, do the same with . Since some missing data generated from could have already been generated from , continue to generate MD from by going under the th percentile until exactly h percent of data are replaced by MD for .Example. Let . In this case, is missing when and are larger than their 90th percentile. Since some MD generated from could have already been generated from , then the largest values from are additionally used until exactly 20% of the data for are missing.

- MAR3: The third MAR-generating mechanism is quite similar to MAR2, but it uses three different variables to generate MD. The difference with MAR2 is that it uses the second and third variables to build an interaction term (simple multiplication) and generates the second part of the MD from this interaction term rather than from the original variables. The interaction term allows to make the generation of MD more complex and to have an indirect explanation of MD.Example. Let . In this case, is missing when and the interaction between and (simple multiplication) are larger than their 90th percentile. Since some MD generated from the interaction term could have already been generated from , then the largest values from are additionally used until exactly 20% of the data for is missing.

- MAR4: The last MAR mechanism is similar to MAR1, except that the MD are caused by an interaction term built from two variables randomly selected from instead of from only one randomly selected variable.Example. Let . In this case, is missing when the interaction between and is larger than its 80th percentile.

4.3. Simulation Procedure

4.4. Experiment Set 1: Independent Data

4.4.1. Uniform Distribution

- with a sample size of 1000;

- with a sample size of 1000.

- The Jamshidian and Jalal procedure uses a combination of two tests: the modified Hawkins test and a non-parametric distribution test. However, there is no adjustment for the total error, which is problematic for simultaneous tests [27];

- Its procedure is such that when data follow a multivariate normal distribution, the test rejects the null hypothesis more often. Thus, it is impossible to compare the application of the test on different types of data;

- The construction of the Hawkins test is such that whatever the distribution, when the sample size is relatively small the test fails to reject the null hypothesis (lack of statistical power). This means that when there is a relatively small quantity of MD, it is too easy for the modified Hawkins test to accept the null hypothesis;

- It is known that non-parametric tests are generally less powerful than parametric ones [41], and the Jamshidian and Jalal procedure uses a non-parametric test to check for missingness.

4.4.2. Normal Distribution

4.4.3. Comparison of the Continuous Results

4.4.4. Binary Distribution

- (a) Represents the results of the RB approach for MAR1 data. It shows how this approach behaves as a function of the quantity of MD and the sample size;

- (b) Represents the difference between the RB approach and the Little test when the sample size is equal to 100;

- (c)–(e) are the same as (b), except that the sample size increases respectively to 250, 500, and 1000.

4.4.5. Multinomial Distribution

4.5. Experiment Set 2: Correlated Data

4.6. Discussion

5. Application on Real Data

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. R Code for Generated Missing Data Mechanisms

Appendix B. Simulation Results of Experiment Set 1

| % of MD | n = 100 | n = 250 | n = 500 | n = 1000 | n = 2000 | n = 10,000 | ||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| MCAR | MAR1 | MCAR | MAR1 | MCAR | MAR1 | MCAR | MAR1 | MCAR | MAR1 | MCAR | MAR1 | |||||||||||||

| L | RB | L | RB | L | RB | L | RB | L | RB | L | RB | L | RB | L | RB | L | RB | L | RB | L | RB | L | RB | |

| 50% | 96.2 | 93.9 | 0 | 29.8 | 94.7 | 94.5 | 0 | 20.0 | 93.9 | 95.3 | 0 | 11.9 | 95.6 | 95.9 | 0 | 9.1 | 95.6 | 94.7 | 0 | 6.5 | 95.2 | 95.7 | 0 | 3.7 |

| 45% | 96.3 | 93.4 | 0 | 32.7 | 95.4 | 94.4 | 0 | 21.3 | 94.0 | 94.6 | 0 | 14.2 | 96.0 | 96.3 | 0 | 9.8 | 95.4 | 95.2 | 0 | 8.3 | 96.1 | 94.2 | 0 | 2.5 |

| 40% | 95.9 | 94.6 | 0 | 35.2 | 93.8 | 93.7 | 0 | 23.9 | 94.6 | 95.3 | 0 | 18.5 | 96.1 | 95.2 | 0 | 12.6 | 95.6 | 94.8 | 0 | 9.3 | 95.8 | 95.4 | 0 | 3.6 |

| 35% | 96.0 | 93.5 | 0 | 37.6 | 95.1 | 95.4 | 0 | 24.7 | 93.7 | 95.8 | 0 | 21.4 | 95.6 | 95.4 | 0 | 13.5 | 95.5 | 94.5 | 0 | 10.3 | 96.1 | 94.8 | 0 | 4.0 |

| 30% | 96.3 | 95.8 | 0 | 44.5 | 95.0 | 96.4 | 0 | 27.4 | 94.6 | 96.6 | 0 | 20.6 | 96.1 | 95.6 | 0 | 14.4 | 94.5 | 94.6 | 0 | 11.6 | 95.3 | 95.1 | 0 | 3.5 |

| 25% | 95.5 | 96.4 | 0 | 48.2 | 95.6 | 96.1 | 0 | 32.3 | 94.5 | 95.3 | 0 | 23.0 | 96.9 | 96.5 | 0 | 16.6 | 94.4 | 94.3 | 0 | 11.6 | 96.5 | 94.7 | 0 | 4.2 |

| 20% | 95.0 | 95.7 | 0 | 51.1 | 95.4 | 95.4 | 0 | 35.7 | 93.4 | 94.8 | 0 | 25.2 | 95.2 | 94.8 | 0 | 19.6 | 94.1 | 95.6 | 0 | 12.6 | 95.4 | 94.7 | 0 | 5.3 |

| 15% | 95.1 | 95.3 | 0 | 57.4 | 95.2 | 94.6 | 0 | 43.4 | 94.8 | 96.1 | 0 | 32.7 | 95.9 | 96.3 | 0 | 20.5 | 95.6 | 94.1 | 0 | 16.0 | 95.6 | 95.1 | 0 | 7.2 |

| 10% | 96.2 | 94.6 | 0 | 65.8 | 95.7 | 95.4 | 0 | 52.8 | 94.9 | 95.6 | 0 | 40.3 | 96.2 | 95.8 | 0 | 28.8 | 96.3 | 95.3 | 0 | 22.1 | 95.4 | 95.3 | 0 | 7.3 |

| 5% | 96.5 | 94.5 | 9.6 | 79.5 | 95.5 | 95.0 | 0 | 65.8 | 94.4 | 95.5 | 0 | 53.4 | 96.2 | 96.3 | 0 | 39.0 | 95.5 | 95.6 | 0 | 29.6 | 95.1 | 95.6 | 0 | 12.5 |

| 4% | 96.5 | 94.7 | 32.9 | 81.8 | 95.5 | 95.4 | 0 | 70.2 | 95.3 | 94.4 | 0 | 55.1 | 95.3 | 97.1 | 0 | 45.0 | 95.4 | 95.1 | 0 | 34.8 | 95.6 | 95.2 | 0 | 14.4 |

| 3% | 97.3 | 94.2 | 61.3 | 85.0 | 95.6 | 95.3 | 0 | 72.7 | 95.2 | 95.6 | 0 | 63.7 | 96.1 | 96.3 | 0 | 49.1 | 95.0 | 94.7 | 0 | 38.6 | 96.3 | 93.4 | 0 | 17.4 |

| 2% | 97.4 | 93.3 | 85.3 | 87.6 | 95.4 | 95.0 | 5.1 | 79.7 | 96.1 | 94.6 | 0 | 70.2 | 96.3 | 96.0 | 0 | 59.2 | 95.3 | 94.5 | 0 | 45.8 | 95.7 | 95.6 | 0 | 20.6 |

| 1% | 99.2 | 93.9 | 98.5 | 93.4 | 97.7 | 95.0 | 57.8 | 87.0 | 96.6 | 95.3 | 3.2 | 80.5 | 97.2 | 95.6 | 0 | 70.7 | 94.0 | 96.8 | 0 | 58.9 | 95.1 | 95.8 | 0 | 26.6 |

| n = 10,000 | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| % of MD | MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | ||||||

| L | RB | L | RB | L | RB | L | RB | L | RB | L | RB | |

| 50% | 95.2 | 94.5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.1 | 0 | 0 |

| 45% | 96.1 | 95.0 | 0 | 0 | 0 | 0 | 0 | 0.1 | 0 | 0.2 | 0 | 0 |

| 40% | 95.8 | 94.1 | 0 | 0.3 | 0 | 0.1 | 0 | 0 | 0 | 0.2 | 0 | 0.1 |

| 35% | 96.1 | 95.7 | 0 | 0.5 | 0 | 0 | 0 | 0.3 | 0 | 0.2 | 0 | 0 |

| 30% | 95.3 | 96.2 | 0 | 0.3 | 0 | 0.4 | 0 | 0.1 | 0 | 0.1 | 0 | 0 |

| 25% | 96.5 | 95.3 | 0 | 0.2 | 0 | 0.2 | 0 | 0.1 | 0 | 0.3 | 0 | 0 |

| 20% | 95.4 | 96.1 | 0 | 0.4 | 0 | 0.4 | 0 | 0.3 | 0 | 0.1 | 0 | 0 |

| 15% | 95.6 | 95.3 | 0 | 0.2 | 0 | 0.5 | 0 | 0.6 | 0 | 0.5 | 0 | 0 |

| 10% | 95.4 | 95.9 | 0 | 0.8 | 0 | 1.3 | 0 | 1.0 | 0 | 0.3 | 0 | 0 |

| 5% | 95.1 | 96.1 | 0 | 2.3 | 0 | 2.8 | 0 | 3.0 | 0 | 0.5 | 0 | 0.2 |

| 4% | 95.6 | 96.2 | 0 | 1.8 | 0 | 3.4 | 0 | 5.0 | 0 | 0.9 | 0 | 0.6 |

| 3% | 96.3 | 96.0 | 0 | 2.8 | 0 | 4.3 | 0 | 4.7 | 0 | 2.0 | 0 | 0.2 |

| 2% | 95.7 | 96.5 | 0 | 4.6 | 0 | 7.0 | 0 | 8.8 | 0 | 2.5 | 0 | 0.9 |

| 1% | 95.1 | 95.8 | 0 | 9.2 | 0 | 15.1 | 0 | 17.5 | 0 | 3.9 | 0 | 2.1 |

Appendix C. Simulation Results of Experiment Set 2

| % of MD | ||||||||||||

| MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | |

| 50% | 94.1 | 0 | 0 | 0 | 0 | 0 | 97.4 | 0 | 0 | 0 | 0 | 0 |

| 45% | 100.0 | 0 | 0 | 0 | 0 | 0 | 100.0 | 0 | 0 | 0 | 0 | 0 |

| 40% | 92.9 | 0 | 0 | 0 | 0 | 0 | 97.1 | 0 | 0 | 0 | 0 | 0 |

| 35% | 76.9 | 0 | 0 | 0 | 0 | 0 | 87.9 | 0 | 0 | 0 | 0 | 0 |

| 30% | 86.4 | 0 | 0 | 0 | 0 | 0 | 87.2 | 0 | 0 | 0 | 0 | 0 |

| 25% | 85.7 | 0 | 0 | 0 | 0 | 0 | 91.2 | 0 | 0 | 0 | 0 | 0 |

| 20% | 96.2 | 0 | 0 | 0 | 0 | 0 | 96.4 | 0 | 0 | 0 | 0 | 0 |

| 15% | 94.4 | 0 | 0 | 0 | 0 | 0 | 94.9 | 0 | 0 | 0 | 0 | 0 |

| 10% | 87.5 | 0 | 0 | 0 | 0 | 0 | 92.1 | 0 | 0 | 0 | 0 | 0 |

| 5% | 100.0 | 0 | 0 | 0 | 0 | 0 | 100.0 | 0 | 0 | 0 | 0 | 0 |

| 4% | 100.0 | 0 | 0 | 0 | 0 | 0 | 97.3 | 0 | 0 | 0 | 0 | 0 |

| 3% | 100.0 | 0 | 0 | 0 | 0 | 0 | 100.0 | 0 | 0 | 0 | 0 | 0 |

| 2% | 100.0 | 0 | 0 | 0 | 0 | 0 | 100.0 | 0 | 0 | 0 | 0 | 0 |

| 1% | 85.7 | 0 | 0 | 0 | 0 | 0 | 90.0 | 0 | 0 | 0 | 0 | 0 |

| % of MD | ||||||||||||

| MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | |

| 50% | 97.5 | 0 | 0 | 0 | 0 | 0 | 94.9 | 0 | 0 | 0 | 0 | 0 |

| 45% | 96.7 | 0 | 0 | 0 | 0 | 0 | 93.8 | 0 | 0 | 0 | 0 | 0 |

| 40% | 94.2 | 0 | 0 | 0 | 0 | 0 | 93.1 | 0 | 0 | 0 | 0 | 0 |

| 35% | 92.6 | 0 | 0 | 0 | 0 | 0 | 94.6 | 0 | 0 | 0 | 0 | 0 |

| 30% | 92.9 | 0 | 0 | 0 | 0 | 0 | 96.0 | 0 | 0 | 0 | 0 | 0 |

| 25% | 92.1 | 0 | 0 | 0 | 0 | 0 | 95.5 | 0 | 0 | 0 | 0 | 0 |

| 20% | 95.1 | 0 | 0 | 0 | 0 | 0 | 95.1 | 0 | 0 | 0 | 0 | 0 |

| 15% | 96.2 | 0 | 0 | 0 | 0 | 0 | 91.1 | 0 | 0 | 0 | 0 | 0 |

| 10% | 93.9 | 0 | 0 | 0 | 0 | 0 | 94.3 | 0 | 0 | 0 | 0 | 0 |

| 5% | 96.6 | 0 | 0 | 0 | 0 | 0 | 95.7 | 0 | 0 | 0 | 0 | 0 |

| 4% | 93.9 | 0 | 0 | 0 | 0 | 0 | 93.9 | 0 | 0 | 0 | 0 | 0 |

| 3% | 96.0 | 0 | 0 | 0 | 0 | 0 | 94.9 | 0 | 0 | 0 | 0 | 0 |

| 2% | 97.0 | 0 | 0 | 0 | 0 | 0 | 93.8 | 0 | 0 | 0 | 0 | 0 |

| 1% | 92.3 | 0 | 0 | 0 | 0 | 0 | 92.5 | 0 | 0 | 0 | 0 | 0 |

| % of MD | ||||||||||||

| MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | |

| 50% | 97.5 | 0 | 0 | 0 | 0 | 0 | 94.5 | 0 | 0 | 0 | 0 | 0 |

| 45% | 97.0 | 0 | 0 | 0 | 0 | 0 | 95.8 | 0 | 0 | 0 | 0 | 0 |

| 40% | 93.6 | 0 | 0 | 0 | 0 | 0 | 95.1 | 0 | 0 | 0 | 0 | 0 |

| 35% | 96.7 | 0 | 0 | 0 | 0 | 0 | 94.8 | 0 | 0 | 0 | 0 | 0 |

| 30% | 94.6 | 0 | 0 | 0 | 0 | 0 | 98.6 | 0 | 0 | 0 | 0 | 0 |

| 25% | 95.6 | 0 | 0 | 0 | 0 | 0 | 95.9 | 0 | 0 | 0 | 0 | 0 |

| 20% | 97.3 | 0 | 0 | 0 | 0 | 0 | 95.8 | 0 | 0 | 0 | 0 | 0 |

| 15% | 95.2 | 0 | 0 | 0 | 0 | 0 | 96.3 | 0 | 0 | 0 | 0 | 0 |

| 10% | 94.5 | 0 | 0 | 0 | 0 | 0 | 95.9 | 0 | 0 | 0 | 0 | 0 |

| 5% | 93.7 | 0 | 0 | 0 | 0 | 0 | 95.6 | 0 | 0 | 0 | 0 | 0 |

| 4% | 91.9 | 0 | 0 | 0 | 0 | 0 | 91.9 | 0 | 0 | 0 | 0 | 0 |

| 3% | 94.4 | 0 | 0 | 0 | 0 | 0 | 94.0 | 0 | 0 | 0 | 0 | 0 |

| 2% | 97.9 | 0 | 0 | 0 | 0 | 0 | 93.5 | 0 | 0 | 0 | 0 | 0 |

| 1% | 94.7 | 0 | 0 | 0 | 0 | 0 | 94.5 | 0 | 0 | 0 | 0 | 0 |

| % of MD | ||||||||||||

| MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | |

| 50% | 88.2 | 0 | 0 | 0 | 0 | 0 | 94.7 | 0 | 0 | 0 | 0 | 0 |

| 45% | 95.2 | 0 | 0 | 0 | 0 | 0 | 97.7 | 0 | 0 | 0 | 0 | 0 |

| 40% | 92.9 | 0 | 0 | 0 | 0 | 0 | 97.1 | 0 | 0 | 0 | 0 | 0 |

| 35% | 92.3 | 0 | 0 | 0 | 0 | 0 | 93.9 | 0 | 0 | 0 | 0 | 0 |

| 30% | 90.9 | 0 | 0 | 0 | 0 | 0 | 89.7 | 0 | 0 | 0 | 0 | 0 |

| 25% | 85.7 | 0 | 0 | 0 | 0 | 0 | 91.2 | 0 | 0 | 0 | 0 | 0 |

| 20% | 96.2 | 0 | 0 | 0 | 0 | 0 | 96.4 | 0 | 0 | 0 | 0 | 0 |

| 15% | 88.9 | 0 | 0 | 0 | 0 | 0 | 92.3 | 0 | 0 | 0 | 0 | 0 |

| 10% | 93.8 | 0 | 0 | 0 | 0 | 0 | 89.5 | 0 | 0 | 0 | 0 | 0 |

| 5% | 200.0 | 0 | 0 | 0 | 0 | 0 | 100.0 | 0 | 0 | 0 | 0 | 0 |

| 4% | 92.3 | 0 | 0 | 0 | 0 | 0 | 89.2 | 0 | 0 | 0 | 0 | 0 |

| 3% | 94.1 | 0 | 0 | 0 | 0 | 0 | 91.2 | 0 | 0 | 0 | 0 | 0 |

| 2% | 91.7 | 0 | 0 | 0 | 0 | 0 | 96.2 | 0 | 0 | 0 | 0 | 0 |

| 1% | 92.9 | 0 | 0 | 0 | 0 | 0 | 90.0 | 0 | 0 | 0 | 0 | 0 |

| % of MD | ||||||||||||

| MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | |

| 50% | 96.2 | 0 | 0 | 0 | 0 | 0 | 93.9 | 0 | 0 | 0 | 0 | 0 |

| 45% | 93.9 | 0 | 0 | 0 | 0 | 0 | 94.2 | 0 | 0 | 0 | 0 | 0 |

| 40% | 97.9 | 0 | 0 | 0 | 0 | 0 | 90.3 | 0 | 0 | 0 | 0 | 0 |

| 35% | 93.2 | 0 | 0 | 0 | 0 | 0 | 94.6 | 0 | 0 | 0 | 0 | 0 |

| 30% | 93.5 | 0 | 0 | 0 | 0 | 0 | 95.7 | 0 | 0 | 0 | 0 | 0 |

| 25% | 95.8 | 0 | 0 | 0 | 0 | 0 | 96.1 | 0 | 0 | 0 | 0 | 0 |

| 20% | 97.5 | 0 | 0 | 0 | 0 | 0 | 94.7 | 0 | 0 | 0 | 0 | 0 |

| 15% | 94.5 | 0 | 0 | 0 | 0 | 0 | 92.3 | 0 | 0 | 0 | 0 | 0 |

| 10% | 92.4 | 0 | 0 | 0 | 0 | 0 | 94.7 | 0 | 0 | 0 | 0 | 0 |

| 5% | 96.6 | 0 | 0 | 0 | 0 | 0 | 95.1 | 0 | 0 | 0 | 0 | 0 |

| 4% | 93.9 | 0 | 0 | 0 | 0 | 0 | 96.5 | 0 | 0 | 0 | 0 | 0 |

| 3% | 96.6 | 0 | 0 | 0 | 0 | 0 | 94.9 | 0 | 0 | 0 | 0 | 0 |

| 2% | 97.6 | 0 | 0 | 0 | 0 | 0 | 93.8 | 0 | 0 | 0 | 0 | 0 |

| 1% | 94.7 | 0 | 0 | 0 | 0 | 0 | 92.5 | 0 | 0 | 0 | 0 | 0 |

| % of MD | ||||||||||||

| MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | |

| 50% | 95.1 | 0 | 0 | 0 | 0 | 0 | 94.8 | 0 | 0 | 0 | 0 | 0 |

| 45% | 97.6 | 0 | 0 | 0 | 0 | 0 | 95.5 | 0 | 0 | 0 | 0 | 0 |

| 40% | 93.1 | 0 | 0 | 0 | 0 | 0 | 94.0 | 0 | 0 | 0 | 0 | 0 |

| 35% | 96.1 | 0 | 0 | 0 | 0 | 0 | 96.5 | 0 | 0 | 0 | 0 | 0 |

| 30% | 92.6 | 0 | 0 | 0 | 0 | 0 | 96.3 | 0 | 0 | 0 | 0 | 0 |

| 25% | 95.6 | 0 | 0 | 0 | 0 | 0 | 95.3 | 0 | 0 | 0 | 0 | 0 |

| 20% | 97.8 | 0 | 0 | 0 | 0 | 0 | 93.3 | 0 | 0 | 0 | 0 | 0 |

| 15% | 94.0 | 0 | 0 | 0 | 0 | 0 | 96.6 | 0 | 0 | 0 | 0 | 0 |

| 10% | 95.8 | 0 | 0 | 0 | 0 | 0 | 95.9 | 0 | 0 | 0 | 0 | 0 |

| 5% | 96.2 | 0 | 0 | 0 | 0 | 0 | 94.7 | 0 | 0 | 0 | 0 | 0 |

| 4% | 97.1 | 0 | 0 | 0 | 0 | 0 | 91.3 | 0 | 0 | 0 | 0 | 0 |

| 3% | 97.0 | 0 | 0 | 0 | 0 | 0 | 94.3 | 0 | 0 | 0 | 0 | 0 |

| 2% | 94.2 | 0 | 0 | 0 | 0 | 0 | 93.8 | 0 | 0 | 0 | 0 | 0 |

| 1% | 98.8 | 0 | 0 | 0 | 0 | 0 | 95.4 | 0 | 0 | 0 | 0 | 0 |

| % of MD | ||||||||||||

| MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | |

| 50% | 94.1 | 5.6 | 4.2 | 11.5 | 10.7 | 13.0 | 89.5 | 11.4 | 4.8 | 15.6 | 10.5 | 10.9 |

| 45% | 90.5 | 2.3 | 20.8 | 4.5 | 4.3 | 23.8 | 93.2 | 6.0 | 20.0 | 16.1 | 2.4 | 14.6 |

| 40% | 92.9 | 6.1 | 6.7 | 11.1 | 3.4 | 11.1 | 94.1 | 10.7 | 11.5 | 12.5 | 5.7 | 12.5 |

| 35% | 92.3 | 7.7 | 10.5 | 7.1 | 10.0 | 5.0 | 97.0 | 13.5 | 26.5 | 21.1 | 10.0 | 17.0 |

| 30% | 86.4 | 5.9 | 10.0 | 10.5 | 0.0 | 7.7 | 92.3 | 9.1 | 18.4 | 11.8 | 7.7 | 16.7 |

| 25% | 100.0 | 0.0 | 15.0 | 10.0 | 13.0 | 20.0 | 94.1 | 12.3 | 21.6 | 15.9 | 18.3 | 28.6 |

| 20% | 100.0 | 17.4 | 20.0 | 12.5 | 3.8 | 23.5 | 96.4 | 24.6 | 25.0 | 10.5 | 8.2 | 25.0 |

| 15% | 100.0 | 19.0 | 16.7 | 37.5 | 12.9 | 14.3 | 97.4 | 38.0 | 32.5 | 33.3 | 20.4 | 24.4 |

| 10% | 81.2 | 48.0 | 29.4 | 20.0 | 23.4 | 20.0 | 89.5 | 48.1 | 28.6 | 30.0 | 21.7 | 28.0 |

| 5% | 86.7 | 55.0 | 46.7 | 33.3 | 31.2 | 40.0 | 90.9 | 39.0 | 37.8 | 34.1 | 40.9 | 51.3 |

| 4% | 100.0 | 50.0 | 20.0 | 71.4 | 26.9 | 23.8 | 97.3 | 60.0 | 36.4 | 61.8 | 29.5 | 28.9 |

| 3% | 100.0 | 50.0 | 41.2 | 50.0 | 39.1 | 25.0 | 100.0 | 52.2 | 38.9 | 31.9 | 30.2 | 35.4 |

| 2% | 75.0 | 54.5 | 22.2 | 22.2 | 44.0 | 56.5 | 88.5 | 60.5 | 18.4 | 33.3 | 43.2 | 56.0 |

| 1% | 100.0 | 33.3 | 21.4 | 35.7 | 9.1 | 43.5 | 100.0 | 40.5 | 21.6 | 46.2 | 29.7 | 52.3 |

| % of MD | ||||||||||||

| MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | |

| 50% | 96.2 | 18.1 | 17.0 | 14.9 | 15.7 | 10.0 | 95.5 | 32.0 | 25.2 | 15.7 | 25.5 | 20.4 |

| 45% | 94.5 | 18.3 | 22.1 | 17.0 | 12.8 | 7.5 | 95.5 | 32.9 | 32.2 | 18.9 | 27.7 | 17.7 |

| 40% | 93.7 | 22.4 | 17.7 | 16.6 | 14.3 | 14.4 | 95.8 | 36.1 | 26.8 | 22.0 | 28.2 | 26.1 |

| 35% | 93.8 | 27.3 | 22.0 | 20.8 | 14.6 | 14.3 | 94.6 | 43.4 | 27.8 | 19.4 | 37.8 | 28.7 |

| 30% | 92.9 | 23.0 | 27.6 | 19.9 | 15.9 | 19.1 | 94.6 | 44.8 | 33.0 | 25.2 | 30.8 | 28.2 |

| 25% | 96.4 | 25.3 | 33.1 | 21.5 | 21.2 | 23.6 | 95.2 | 44.8 | 31.6 | 23.2 | 30.7 | 30.2 |

| 20% | 95.6 | 36.6 | 29.3 | 25.8 | 19.6 | 30.2 | 95.4 | 56.9 | 40.0 | 26.5 | 33.0 | 36.1 |

| 15% | 94.0 | 38.9 | 33.7 | 25.1 | 22.8 | 34.3 | 91.4 | 58.5 | 35.4 | 28.3 | 34.2 | 37.5 |

| 10% | 93.9 | 56.0 | 32.3 | 34.1 | 24.0 | 34.5 | 92.5 | 58.2 | 38.2 | 26.6 | 35.4 | 38.1 |

| 5% | 96.0 | 48.8 | 40.3 | 33.9 | 30.9 | 42.7 | 93.6 | 63.8 | 44.3 | 35.3 | 34.2 | 44.4 |

| 4% | 93.3 | 55.6 | 37.2 | 37.9 | 32.6 | 35.5 | 97.1 | 58.2 | 35.6 | 33.1 | 38.3 | 46.1 |

| 3% | 97.7 | 58.4 | 34.8 | 35.4 | 29.1 | 45.2 | 92.8 | 56.4 | 41.7 | 35.8 | 37.5 | 46.7 |

| 2% | 94.6 | 55.2 | 35.5 | 39.8 | 34.9 | 50.5 | 95.2 | 66.9 | 43.4 | 36.6 | 41.0 | 47.5 |

| 1% | 94.1 | 56.9 | 36.7 | 43.6 | 38.3 | 47.6 | 95.2 | 64.1 | 36.9 | 47.7 | 43.6 | 52.4 |

| % of MD | ||||||||||||

| MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | MCAR | MAR1 | MAR2 | MAR3 | MAR4 | MAR4i | |

| 50% | 91.7 | 29.0 | 22.5 | 11.9 | 20.3 | 22.1 | 95.4 | 58.0 | 36.2 | 17.0 | 30.3 | 28.4 |

| 45% | 97.6 | 40.9 | 22.4 | 14.6 | 28.3 | 20.0 | 93.6 | 57.8 | 30.3 | 15.5 | 31.3 | 25.7 |

| 40% | 95.4 | 43.4 | 24.3 | 10.3 | 25.1 | 24.4 | 95.1 | 58.5 | 30.8 | 15.5 | 30.3 | 32.5 |

| 35% | 94.4 | 42.6 | 24.7 | 12.6 | 28.4 | 25.3 | 93.9 | 62.1 | 36.1 | 13.9 | 30.8 | 28.9 |

| 30% | 95.0 | 45.9 | 24.4 | 13.7 | 22.3 | 28.0 | 94.0 | 63.1 | 30.2 | 16.7 | 33.4 | 28.6 |

| 25% | 95.6 | 49.2 | 28.9 | 11.5 | 31.8 | 25.0 | 93.7 | 58.3 | 30.0 | 22.3 | 32.4 | 28.5 |

| 20% | 98.4 | 48.9 | 28.1 | 14.4 | 27.2 | 29.3 | 94.2 | 55.6 | 27.8 | 14.8 | 26.9 | 29.7 |

| 15% | 94.0 | 58.1 | 29.1 | 20.5 | 22.8 | 32.4 | 96.9 | 59.9 | 30.8 | 18.4 | 29.8 | 30.7 |

| 10% | 92.7 | 54.5 | 25.8 | 18.4 | 24.3 | 32.4 | 96.9 | 55.7 | 30.2 | 21.9 | 31.1 | 34.3 |

| 5% | 96.9 | 54.9 | 30.2 | 20.8 | 22.7 | 34.6 | 92.3 | 55.2 | 37.9 | 20.4 | 36.4 | 36.1 |

| 4% | 96.0 | 55.6 | 25.0 | 21.9 | 33.0 | 40.2 | 96.4 | 57.2 | 26.7 | 24.3 | 36.4 | 38.3 |

| 3% | 95.4 | 57.2 | 25.9 | 17.9 | 31.1 | 42.1 | 93.7 | 58.6 | 27.6 | 22.3 | 33.9 | 42.6 |

| 2% | 94.8 | 57.0 | 31.8 | 26.5 | 30.0 | 37.4 | 96.6 | 57.3 | 31.0 | 25.5 | 31.6 | 34.3 |

| 1% | 98.2 | 61.4 | 32.1 | 23.1 | 25.0 | 43.4 | 95.4 | 56.8 | 27.9 | 28.7 | 30.6 | 44.6 |

| % of MD | ||||||

|---|---|---|---|---|---|---|

| 50% | 17 | 38 | 157 | 311 | 204 | 328 |

| 45% | 21 | 44 | 181 | 292 | 168 | 359 |

| 40% | 14 | 34 | 190 | 289 | 173 | 348 |

| 35% | 13 | 33 | 176 | 299 | 180 | 345 |

| 30% | 22 | 39 | 170 | 277 | 202 | 351 |

| 25% | 21 | 34 | 165 | 310 | 206 | 319 |

| 20% | 26 | 55 | 203 | 285 | 182 | 330 |

| 15% | 18 | 39 | 183 | 326 | 168 | 323 |

| 10% | 16 | 38 | 197 | 318 | 165 | 320 |

| 5% | 15 | 33 | 176 | 327 | 159 | 338 |

| 4% | 13 | 37 | 180 | 312 | 173 | 335 |

| 3% | 17 | 34 | 176 | 292 | 197 | 335 |

| 2% | 12 | 26 | 166 | 291 | 191 | 352 |

| 1% | 14 | 30 | 169 | 333 | 169 | 329 |

References

- Allison, P.D. Missing Data; Sage Publications: Thousand Oaks, CA, USA, 2001. [Google Scholar]

- Schafer, J.L.; Graham, J.W. Missing data: Our view of the state of the art. Psychol. Methods 2002, 7, 147–177. [Google Scholar] [CrossRef] [PubMed]

- Rubin, D.B. Multiple Imputation for Nonresponse in Surveys; John Wiley & Sons: Hoboken, NJ, USA, 2004; Volume 81. [Google Scholar]

- Little, R.J.; Rubin, D.B. Statistical Analysis with Missing Data; John Wiley & Sons: Hoboken, NJ, USA, 2014. [Google Scholar]

- Enders, C.K. Applied Missing Data Analysis; Guilford Press: New York, NY, USA, 2010. [Google Scholar]

- Heitjan, D.F.; Basu, S. Distinguishing “missing at random” and “missing completely at random”. Am. Stat. 1996, 50, 207–213. [Google Scholar]

- Van Buuren, S. Multiple imputation of discrete and continuous data by fully conditional specification. Stat. Methods Med. Res. 2007, 16, 219–242. [Google Scholar] [CrossRef] [PubMed]

- Seaman, S.R.; White, I.R. Review of inverse probability weighting for dealing with missing data. Stat. Methods Med. Res. 2013, 22, 278–295. [Google Scholar] [CrossRef] [PubMed]

- Huang, L.; Chen, M.H.; Ibrahim, J.G. Bayesian analysis for generalized linear models with nonignorably missing covariates. Biometrics 2005, 61, 767–780. [Google Scholar] [CrossRef] [PubMed]

- Fitzmaurice, G. Missing data: Implications for analysis. Nutrition 2008, 24, 200–202. [Google Scholar] [CrossRef] [PubMed]

- Dong, Y.; Peng, C.Y.J. Principled missing data methods for researchers. SpringerPlus 2013, 2, 222. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Diggle, P.; Kenward, M.G. Informative drop-out in longitudinal data analysis. Appl. Stat. 1994, 43, 49–93. [Google Scholar] [CrossRef]

- Dixon, W.J. BMDP Statistical Software Manual: To Accompany the 1990 Software Release; University of California Press: Oakland, CA, USA, 1990; Volume 1. [Google Scholar]

- Little, R.J. A test of missing completely at random for multivariate data with missing values. J. Am. Stat. Assoc. 1988, 83, 1198–1202. [Google Scholar] [CrossRef]

- Jamshidian, M.; Jalal, S. Tests of homoscedasticity, normality, and missing completely at random for incomplete multivariate data. Psychometrika 2010, 75, 649–674. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Jamshidian, M.; Yuan, K.H. Examining missing data mechanisms via homogeneity of parameters, homogeneity of distributions, and multivariate normality. Wiley Interdiscip. Rev. Comput. Stat. 2014, 6, 56–73. [Google Scholar] [CrossRef]

- Jamshidian, M.; Jalal, S.J.; Jansen, C. MissMech: An R package for testing homoscedasticity, multivariate normality, and missing completely at random (MCAR). J. Stat. Softw. 2014, 56, 1–31. [Google Scholar] [CrossRef] [Green Version]

- Powers, D.A.; Xie, Y. Statistical Methods for Categorical Data Analysis; Academic Press: Cambridge, MA, USA, 2000. [Google Scholar]

- Park, T.; Davis, C.S. A test of the missing data mechanism for repeated categorical data. Biometrics 1993, 49, 631–638. [Google Scholar] [CrossRef]

- Park, T.; Lee, S.Y. A test of missing completely at random for longitudinal data with missing observations. Stat. Med. 1997, 16, 1859–1871. [Google Scholar] [CrossRef]

- Chen, H.Y.; Little, R.J. A test of missing completely at random for generalised estimating equations with missing data. Biometrika 1999, 86, 1–13. [Google Scholar] [CrossRef]

- Hawkins, D.M. A new test for multivariate normality and homoscedasticity. Technometrics 1981, 23, 105–110. [Google Scholar] [CrossRef]

- Scholz, F.W.; Stephens, M.A. K-sample Anderson-Darling tests. J. Am. Stat. Assoc. 1987, 82, 918–924. [Google Scholar]

- Anderson, T.W.; Darling, D.A. A test of goodness of fit. J. Am. Stat. Assoc. 1954, 49, 765–769. [Google Scholar] [CrossRef]

- Gabriel, K.R. Simultaneous test procedures–some theory of multiple comparisons. Ann. Math. Stat. 1969, 40, 224–250. [Google Scholar] [CrossRef]

- Westfall, P.H.; Young, S.S. Resampling-Based Multiple Testing: Examples and Methods for p-Value Adjustment; John Wiley & Sons: Thousand Oaks, CA, USA, 1993. [Google Scholar]

- Benjamini, Y.; Hochberg, Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. J. R. Stat. Soc. Ser. B (Methodol.) 1995, 57, 289–300. [Google Scholar] [CrossRef]

- Noé, M. The calculation of distributions of two-sided Kolmogorov-Smirnov type statistics. Ann. Math. Stat. 1972, 43, 58–64. [Google Scholar] [CrossRef]

- Baumgartner, W.; Weiß, P.; Schindler, H. A nonparametric test for the general two-sample problem. Biometrics 1998, 54, 1129–1135. [Google Scholar] [CrossRef]

- Marozzi, M. Some notes on the location–scale Cucconi test. J. Nonparametr. Stat. 2009, 21, 629–647. [Google Scholar] [CrossRef]

- Marozzi, M. The multisample Cucconi test. Stat. Methods Appl. 2014, 23, 209–227. [Google Scholar] [CrossRef]

- Wilcoxon, F. Individual comparisons by ranking methods. Biom. Bull. 1945, 1, 80–83. [Google Scholar] [CrossRef]

- Kruskal, W.H.; Wallis, W.A. Use of ranks in one-criterion variance analysis. J. Am. Stat. Assoc. 1952, 47, 583–621. [Google Scholar] [CrossRef]

- Bartholomew, D.J.; Steele, F.; Galbraith, J.; Moustaki, I. Analysis of Multivariate Social Science Data; Chapman and Hall/CRC: Boca Raton, FL, USA, 2008. [Google Scholar]

- Tshering, S.; Okazaki, T.; Endo, S. A Method to Identify Missing Data Mechanism in Incomplete Dataset. IJCSNS 2013, 13, 14. [Google Scholar]

- Diggle, P.J. Testing for random dropouts in repeated measurement data. Biometrics 1989, 45, 1255–1258. [Google Scholar] [CrossRef]

- Van Buuren, S.; Oudshoorn, C. Multivariate Imputation by Chained Equations: MICE V1.0 User’s Manual; Technical Report; TNO: Den Haag, The Netherlands, 2000. [Google Scholar]

- Styan, G.P. Hadamard products and multivariate statistical analysis. Linear Algebra Its Appl. 1973, 6, 217–240. [Google Scholar] [CrossRef] [Green Version]

- Rouzinov, S.; Berchtold, A. RBtest: Regression-Based Approach for Testing the Type of Missing Data. 2019. Available online: http://CRAN.R-project.org/package=RBtest (accessed on 24 January 2020).

- R Core Team. R: A Language and Environment for Statistical Computing. 2015. Available online: https://www.R-project.org/ (accessed on 1 April 2019).

- Tanizaki, H. Power comparison of non-parametric tests: Small-sample properties from Monte Carlo experiments. J. Appl. Stat. 1997, 24, 603–632. [Google Scholar] [CrossRef]

- Maggiori, C.; Rossier, J.; Krings, F.; Johnston, C.S.; Massoudi, K. Career Pathways and Professional Transitions: Preliminary Results from the First Wave of a 7-Year Longitudinal Study. In Surveying Human Vulnerabilities across the Life Course; Oris, M., Roberts, C., Joye, D., Stähli, M.E., Eds.; Springer: Oxford, UK, 2016; pp. 131–157. [Google Scholar]

- Cohen, J.; Cohen, P.; West, S.G.; Aiken, L.S. Applied Multiple Regression/Correlation Analysis for the Behavioral Sciences; Routledge: Abingdon-on-Thames, UK, 2013. [Google Scholar]

- Van Buuren, S. Flexible Imputation of Missing Data; Chapman and Hall/CRC: Boca Raton, FL, USA, 2018. [Google Scholar]

| IQ1 | IQ2 | IQ3 | IQ4 | IQ5 | IQ6 | IQ7 | IQ8 | IQ9 | IQ10 | IQ11 | IQ12 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Complete data | 95 | 119 | 94 | 134 | 130 | 92 | 128 | 100 | 99 | 110 | 105 | 132 |

| Incomplete data | 95 | 119 | 94 | - | - | 92 | - | 100 | 99 | 110 | 105 | - |

| % of MD | MCAR | MAR1 | MAR2 | |||||||||

| JJ | D | L | RB | JJ | D | L | RB | JJ | D | L | RB | |

| 50% | 95.4 | 95.0 | 95.6 | 95.3 | 90.6 | 0 | 0 | 8.5 | 0 | 0 | 0 | 11.8 |

| 45% | 94.7 | 94.5 | 93.2 | 94.6 | 43.8 | 0 | 0 | 9.7 | 1.3 | 0 | 0 | 12.3 |

| 40% | 95.4 | 95.6 | 96.0 | 94.7 | 4.2 | 0 | 0 | 10.8 | 4.8 | 0 | 0 | 16.6 |

| 35% | 94.9 | 94.9 | 94.5 | 96.0 | 0.1 | 0 | 0 | 9.8 | 12.0 | 0 | 0 | 18.5 |

| 30% | 95.5 | 95.2 | 94.8 | 96.3 | 0 | 0 | 0 | 15.5 | 20.3 | 0 | 0 | 21.0 |

| 25% | 95.0 | 95.9 | 95.3 | 96.4 | 0 | 0 | 0 | 17.9 | 32.0 | 0 | 0 | 20.8 |

| 20% | 94.4 | 94.5 | 94.5 | 94.4 | 0 | 0 | 0 | 19.5 | 44.3 | 0 | 0 | 26.2 |

| 15% | 92.8 | 95.1 | 94.4 | 96.5 | 0.6 | 0 | 0 | 22.6 | 44.3 | 0 | 0 | 26.2 |

| 10% | 95.1 | 95.1 | 95.3 | 94.6 | 4.0 | 0 | 0 | 25.7 | 63.2 | 0 | 0 | 34.4 |

| 5% | 93.1 | 95.3 | 95.4 | 95.6 | 28.8 | 0 | 0 | 41.3 | 75.8 | 0 | 0 | 50.8 |

| 4% | 94.5 | 94.9 | 94.8 | 95.4 | 38.2 | 0 | 0 | 40.9 | 78.5 | 0 | 0 | 58.0 |

| 3% | 95.3 | 94.4 | 95.6 | 96.1 | 51.6 | 0 | 0 | 46.7 | 81.5 | 0.6 | 0 | 63.3 |

| 2% | 93.1 | 94.8 | 95.6 | 95.2 | 61.8 | 0 | 0 | 57.9 | 82.7 | 11.8 | 0.3 | 72.5 |

| 1% | 92.2 | 90.9 | 95.6 | 95.7 | 75.1 | 0 | 0 | 70.1 | 81.1 | 62.5 | 19.0 | 82.8 |

| % of MD | MAR3 | MAR4 | MAR4i | |||||||||

| JJ | D | L | RB | JJ | D | L | RB | JJ | D | L | RB | |

| 50% | 0 | 0 | 0 | 14.2 | 9.6 | 0 | 0 | 12.2 | 95.1 | 0 | 0 | 15.0 |

| 45% | 0 | 0 | 0 | 14.2 | 9.0 | 0 | 0 | 12.0 | 79.5 | 0 | 0 | 11.9 |

| 40% | 0.1 | 0 | 0 | 14.2 | 0 | 0 | 0 | 11.9 | 36.5 | 0 | 0 | 12.7 |

| 35% | 0.5 | 0 | 0 | 20.4 | 0 | 0 | 0 | 13.1 | 8.6 | 0 | 0 | 12.9 |

| 30% | 2.7 | 0 | 0 | 20.9 | 0 | 0 | 0 | 14.2 | 1.7 | 0 | 0 | 11.1 |

| 25% | 7.0 | 0 | 0 | 20.8 | 0 | 0 | 0 | 15.2 | 0.1 | 0 | 0 | 10.4 |

| 20% | 16.1 | 0 | 0 | 27.0 | 0 | 0 | 0 | 16.9 | 0 | 0 | 0 | 12.3 |

| 15% | 31.0 | 0 | 0 | 29.5 | 0 | 0 | 0 | 19.3 | 0 | 0 | 0 | 13.6 |

| 10% | 46.6 | 0 | 0 | 36.0 | 0 | 0 | 0 | 23.9 | 0 | 0 | 0 | 14.2 |

| 5% | 64.5 | 0 | 0 | 54.5 | 0.2 | 0 | 0 | 32.2 | 0 | 0 | 0 | 20.5 |

| 4% | 67.6 | 0.2 | 0 | 55.8 | 0.4 | 0 | 0 | 32.7 | 0 | 0 | 0 | 19.0 |

| 3% | 72.0 | 0.8 | 0 | 61.9 | 3.6 | 0 | 0 | 41.7 | 0.4 | 0 | 0 | 27.6 |

| 2% | 80.3 | 14.3 | 0.5 | 72.3 | 12.0 | 0 | 0 | 45.6 | 1.7 | 0 | 0 | 32.5 |

| 1% | 78.3 | 61.8 | 13.5 | 79.5 | 41.9 | 0 | 0 | 56.1 | 10.6 | 0 | 0 | 37.4 |

| % of MD | MCAR | MAR1 | MAR2 | |||||||||

| JJ | D | L | RB | JJ | D | L | RB | JJ | D | L | RB | |

| 50% | 95.6 | 94.4 | 94.9 | 94.0 | 94.7 | 0 | 0 | 12.6 | 4.7 | 0 | 0 | 11.8 |

| 45% | 96.1 | 95.0 | 95.0 | 95.3 | 90.3 | 0 | 0 | 12.6 | 6.8 | 0 | 0 | 14.0 |

| 40% | 95.6 | 94.9 | 95.4 | 96.2 | 81.5 | 0 | 0 | 12.3 | 8.0 | 0 | 0 | 12.5 |

| 35% | 94.0 | 94.4 | 96.1 | 94.6 | 71.8 | 0 | 0 | 13.2 | 7.8 | 0 | 0 | 13.6 |

| 30% | 95.0 | 95.0 | 94.7 | 95.1 | 60.9 | 0 | 0 | 14.6 | 9.0 | 0 | 0 | 15.8 |

| 25% | 96.3 | 93.9 | 93.9 | 95.8 | 48.5 | 0 | 0 | 16.7 | 10.3 | 0 | 0 | 15.3 |

| 20% | 94.5 | 95.0 | 95.1 | 96.2 | 45.9 | 0 | 0 | 17.9 | 9.4 | 0 | 0 | 19.2 |

| 15% | 95.2 | 94.6 | 95.5 | 94.4 | 48.4 | 0 | 0 | 18.9 | 11.3 | 0 | 0 | 20.7 |

| 10% | 95.1 | 95.8 | 95.2 | 95.5 | 54.6 | 0 | 0 | 22.5 | 11.0 | 0 | 0 | 25.4 |

| 5% | 94.4 | 95.3 | 95.2 | 94.9 | 70.2 | 0 | 0 | 29.7 | 15.8 | 0 | 0 | 34.5 |

| 4% | 92.8 | 96.2 | 95.8 | 96.5 | 77.1 | 0 | 0 | 32.1 | 16.8 | 0 | 0 | 39.0 |

| 3% | 94.7 | 95.7 | 96.1 | 96.4 | 79.9 | 0 | 0 | 36.8 | 19.6 | 0 | 0 | 43.6 |

| 2% | 94.3 | 95.3 | 95.3 | 97.2 | 85.4 | 0 | 0 | 43.6 | 27.1 | 2.1 | 0 | 52.0 |

| 1% | 94.3 | 94.2 | 95.7 | 95.4 | 87.5 | 0 | 0 | 51.2 | 37.3 | 64.1 | 4.6 | 62.9 |

| % of MD | MAR3 | MAR4 | MAR4i | |||||||||

| JJ | D | L | RB | JJ | D | L | RB | JJ | D | L | RB | |

| 50% | 0.2 | 0 | 0 | 16.6 | 95.2 | 93.8 | 94.7 | 64.8 | 95.1 | 0 | 0 | 15.0 |

| 45% | 0 | 0 | 0 | 18.1 | 82.4 | 95.3 | 96.3 | 61.7 | 80.6 | 0 | 0 | 14.5 |

| 40% | 0 | 0 | 0 | 19.7 | 42.7 | 94.5 | 94.7 | 61.4 | 36.7 | 0 | 0 | 13.6 |

| 35% | 0 | 0 | 0 | 19.0 | 12.5 | 95.4 | 94.4 | 59.2 | 9.7 | 0 | 0 | 12.6 |

| 30% | 0 | 0 | 0 | 18.8 | 1.8 | 95.5 | 93.5 | 62.2 | 0.8 | 0 | 0 | 11.9 |

| 25% | 0 | 0 | 0 | 18.6 | 0.3 | 95.6 | 91.1 | 61.6 | 0 | 0 | 0 | 10.6 |

| 20% | 0 | 0 | 0 | 24.6 | 0.1 | 94.4 | 88.7 | 61.1 | 0 | 0 | 0 | 11.4 |

| 15% | 0 | 0 | 0 | 27.9 | 0 | 95.3 | 85.4 | 62.2 | 0 | 0 | 0 | 13.6 |

| 10% | 0 | 0 | 0 | 33.9 | 0 | 95.0 | 85.4 | 69.2 | 0 | 0 | 0 | 15.1 |

| 5% | 0 | 0 | 0 | 43.8 | 0 | 95.2 | 79.5 | 71.3 | 0 | 0 | 0 | 19.9 |

| 4% | 0 | 0 | 0 | 46.0 | 0 | 94.8 | 78.6 | 74.8 | 0 | 0 | 0 | 19.6 |

| 3% | 0.3 | 0.8 | 0 | 53.6 | 0.1 | 94.3 | 75.2 | 78.6 | 0.1 | 0 | 0 | 24.2 |

| 2% | 1.4 | 14.3 | 0 | 63.0 | 0.7 | 95.3 | 71.6 | 77.1 | 1.8 | 0 | 0 | 28.3 |

| 1% | 9.5 | 79.3 | 2.4 | 70.9 | 7.8 | 95.3 | 66.0 | 83.8 | 15.4 | 0 | 0 | 39.5 |

| Variables | Number of MD | RB | D | JJ | L |

|---|---|---|---|---|---|

| Children | 103 | 0 | 6; 6 | ||

| Income | 62 | 100 | 8; 4 | ||

| General health | 20 | 82 | 8; 4 | ||

| Education | 13 | 98 | 8; 4 | ||

| Household | 9 | 58 | 0; 12 | ||

| Self-rated health | 9 | 99 | 6; 6 | ||

| Marital status | 7 | 90 | 5; 7 | ||

| Agreeableness | 4 | 99 | 0; 12 | ||

| Conscientiousness | 4 | 100 | 0; 12 | ||

| Extraversion | 4 | 100 | 0; 12 | ||

| Neuroticism | 4 | 100 | 0; 12 | ||

| Number of jobs | 4 | 100 | 8; 4 | ||

| Openness | 4 | 100 | 0; 12 | ||

| Age | 0 | - | - | ||

| Benefits | 0 | - | - | ||

| Gender | 0 | - | - | ||

| Nationality | 0 | - | - | ||

| Work rate | 0 | - | - | ||

| Overall | 0.086 | 0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rouzinov, S.; Berchtold, A. Regression-Based Approach to Test Missing Data Mechanisms. Data 2022, 7, 16. https://doi.org/10.3390/data7020016

Rouzinov S, Berchtold A. Regression-Based Approach to Test Missing Data Mechanisms. Data. 2022; 7(2):16. https://doi.org/10.3390/data7020016

Chicago/Turabian StyleRouzinov, Serguei, and André Berchtold. 2022. "Regression-Based Approach to Test Missing Data Mechanisms" Data 7, no. 2: 16. https://doi.org/10.3390/data7020016

APA StyleRouzinov, S., & Berchtold, A. (2022). Regression-Based Approach to Test Missing Data Mechanisms. Data, 7(2), 16. https://doi.org/10.3390/data7020016