A Systematic Survey of ML Datasets for Prime CV Research Areas—Media and Metadata

Abstract

1. Introduction

1.1. Rationale

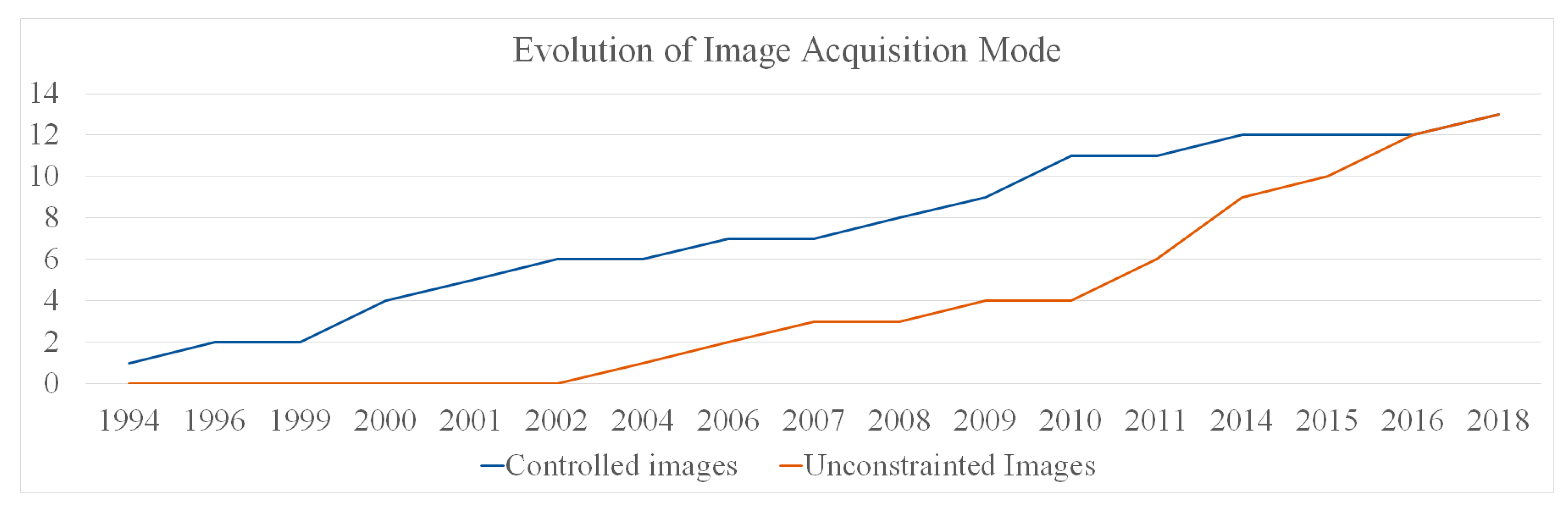

1.2. Objectives

2. Methods

2.1. Research Questions

2.2. Search Strategy and Study Selection

- Inclusion criteria:

  - Papers on the use of ML techniques for computer vision (in the specific application areas covered by this survey) that describe the datasets employed;

  - Papers or websites describing specific datasets for MLCV;

  - Papers comprising dataset surveys for specific MLCV application areas;

  - Papers describing metadata formats for the expression of MLCV ground-truth information.

- Exclusion criteria:

  - Papers/datasets with a small number of citations/mentions in the literature (typically below 100). Exceptions were made for papers published more recently (in the last 2 years), for which the cutoff was fewer than 60 citations;

  - Similar documentary sources, i.e., studies with similar content by the same authors. However, if the two studies reported different results, both were retained.

- The dataset should have scientific relevance, as shown by its uptake in the relevant MLCV research community, which translates into papers and citations;

- The information pertaining to the dataset, obtained from the various sources that document it, should not be overly incomplete or incoherent.
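The citation-based exclusion criterion listed above can be read as a simple threshold test. The following is a minimal sketch under stated assumptions, not the procedure actually used in the survey: the `Source` record, the `SURVEY_YEAR` value, and the reading of "60 citations" as a lower cutoff for recent papers are all illustrative assumptions.

```python
from dataclasses import dataclass

# All constants below are assumptions made for illustration.
SURVEY_YEAR = 2021         # hypothetical reference year for "recent"
CITATION_THRESHOLD = 100   # "typically below 100" leads to exclusion
RECENT_THRESHOLD = 60      # assumed lower cutoff for recent papers
RECENT_WINDOW_YEARS = 2    # "last 2 years"


@dataclass
class Source:
    """Hypothetical record for a candidate paper or dataset."""
    title: str
    year: int
    citations: int


def is_excluded(source: Source) -> bool:
    """Return True if the source falls below the applicable citation cutoff."""
    recent = SURVEY_YEAR - source.year <= RECENT_WINDOW_YEARS
    threshold = RECENT_THRESHOLD if recent else CITATION_THRESHOLD
    return source.citations < threshold


candidates = [
    Source("older, rarely cited", 2010, 50),
    Source("older, well cited", 2010, 150),
    Source("recent, moderately cited", 2020, 70),
]
retained = [s.title for s in candidates if not is_excluded(s)]
```

The word "typically" in the criterion suggests the cutoff was applied with judgment, so a hard threshold like this one is only an approximation.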

2.3. Data Extraction, Synthesis, and Analysis

- The synthesis/addition of similar aspects (columns of the same table) across all datasets, on a year-by-year basis, to formulate responses to the research questions. These responses are presented in Section 4 using graphs (which chart the above-mentioned calculations) and text. In the next paragraphs, we explain how we arrived at this synthesis for the different aspects surveyed across the different dataset types.
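The year-by-year synthesis described above amounts to grouping the per-dataset table rows by year and summing each column. A minimal sketch follows; the row layout, the column names (`images`, `classes`), and the values are hypothetical and do not come from the survey's tables.

```python
from collections import defaultdict

# Hypothetical per-dataset rows; the values are illustrative only.
rows = [
    {"dataset": "A", "year": 2009, "images": 1000, "classes": 10},
    {"dataset": "B", "year": 2009, "images": 500, "classes": 5},
    {"dataset": "C", "year": 2014, "images": 2000, "classes": 80},
]


def synthesize_by_year(rows, columns):
    """Sum the given columns over all datasets released in the same year."""
    totals = defaultdict(lambda: dict.fromkeys(columns, 0))
    for row in rows:
        for col in columns:
            totals[row["year"]][col] += row[col]
    # Sort by year so the result can be charted directly.
    return dict(sorted(totals.items()))


yearly = synthesize_by_year(rows, ["images", "classes"])
```

The resulting mapping from year to column totals is the kind of series that could be plotted to answer a research question about how dataset size evolved over time.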

3. MLCV Dataset Assessment Results

3.1. Introduction

3.2. Facial Recognition Datasets

3.2.1. Olivetti Face Database

3.2.2. FERET Database

3.2.3. XM2VTSDB

3.2.4. 3D_RMA Database

3.2.5. University of Oulu Physics-Based Face Database

3.2.6. Yale Face Database(s)

3.2.7. Face Recognition Grand Challenge Database(s)

- The still training set is designed for training still face recognition algorithms. It comprises 12,776 images from 222 subjects (6388 controlled still images and 6388 uncontrolled ones), with between 9 and 16 sessions per subject;

- The 3D training set contains 3D scans, together with controlled and uncontrolled still images, from 943 subject sessions.

3.2.8. FG-NET

3.2.9. Surveillance Cameras Face Database

3.2.10. BU-3DFE Database

3.2.11. Labeled Faces in the Wild (LFW)

3.2.12. CAS-PEAL Face Database

3.2.13. CMU Multi-PIE Face Database

3.2.14. PubFig

3.2.15. Radboud Faces Database

3.2.16. Texas 3D Face Recognition Database

3.2.17. YouTube Faces DB

3.2.18. ChokePoint Dataset

3.2.19. FaceScrub

3.2.20. CASIA-WebFace

3.2.21. Face Image Project

3.2.22. EURECOM Kinect Face Dataset

3.2.23. CelebFaces

3.2.24. MegaFace

3.2.25. UMD Faces

3.2.26. IMDB-WIKI

3.2.27. VGGFace2

3.2.28. Tufts Face Database

3.3. Image Segmentation, Object and Scenario Recognition Datasets

3.3.1. Columbia University Image Library

3.3.2. Microsoft Research Cambridge Dataset

- Pixel-wise labeled images from the v1 database (240 images, nine object classes);

- Pixel-wise labeled images from the v2 database (591 images, 23 object classes).

3.3.3. Berkeley Segmentation Dataset

3.3.4. RGB-D Object Recognition Dataset

3.3.5. The NYU Object Recognition Benchmark

3.3.6. Pictures of 3D objects on Turntable

3.3.7. Caltech-256

3.3.8. LabelMe

3.3.9. ImageNet

3.3.10. Cambridge-Driving Labeled Video Database

3.3.11. CIFAR Datasets

3.3.12. NUS-WIDE

3.3.13. MIT Indoor Scenes

3.3.14. SBU Captioned Photo Dataset

3.3.15. STL-10 Image Recognition

3.3.16. PRID 2011

3.3.17. CUB-200-2011

3.3.18. Semantic Boundaries Dataset

3.3.19. Stanford Dogs Dataset

3.3.20. Pascal Visual Object Classes Project

3.3.21. NYU Depth Dataset (v2)

3.3.22. Leafsnap Dataset

3.3.23. Oxford-IIIT Pet Dataset

3.3.24. LISA Traffic Sign Dataset

3.3.25. Daimler Urban Segmentation Dataset

3.3.26. Stanford Cars Dataset

3.3.27. FGVC-Aircraft Benchmark Dataset

3.3.28. Microsoft Research Dense Visual Annotation Corpus

3.3.29. Microsoft Common Objects in Context

3.3.30. The Middlebury Datasets

3.3.31. Flickr30k

3.3.32. iLIDS-VID

3.3.33. Belgium Traffic Sign Dataset

3.3.34. Pascal Context

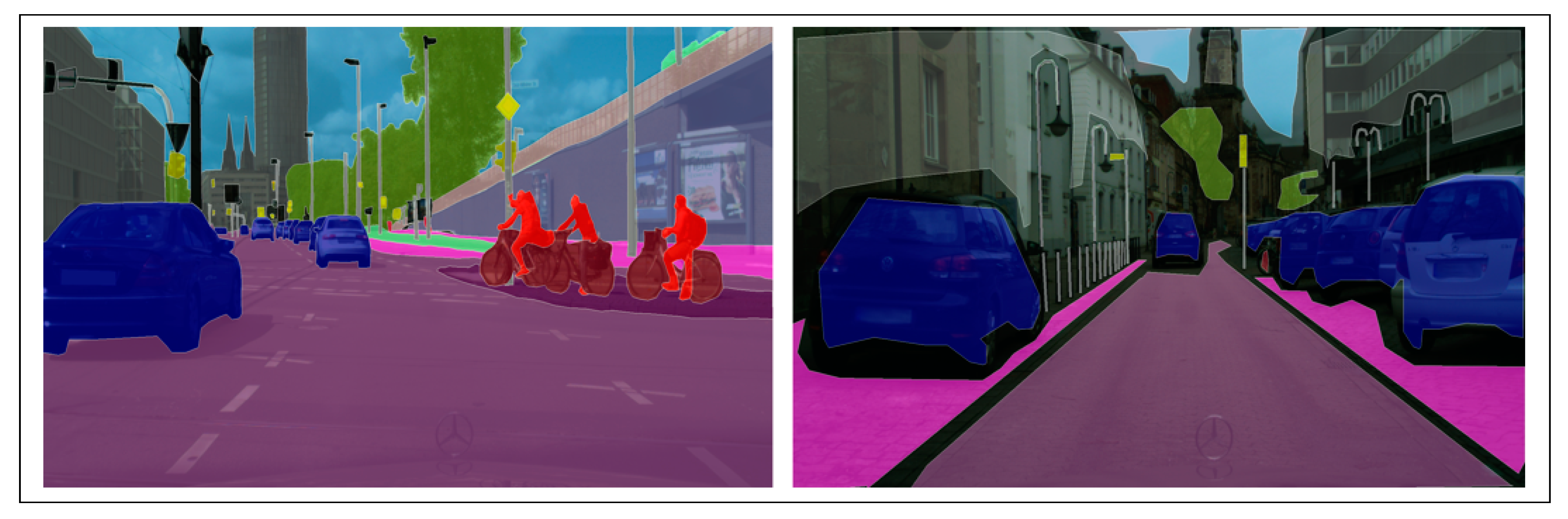

3.3.35. Cityscapes Dataset

3.3.36. Comprehensive Cars Dataset

3.3.37. YouTube-8M Dataset(s)

3.3.38. Densely Annotated Video Segmentation

3.3.39. iNaturalist

3.3.40. Visual Genome

3.3.41. Open Images Dataset (v5)

3.3.42. YouTube-Bounding Boxes

3.4. Object Tracking Datasets

3.4.1. HumanEva

3.4.2. ETH

3.4.3. Daimler Pedestrian Detection and Tracking Dataset

3.4.4. TUD

3.4.5. Caltech Pedestrian Database

3.4.6. KITTI Benchmark Suite Dataset

3.4.7. ALOV++

3.4.8. Visual Tracker Benchmark Dataset

3.4.9. TColor-128

3.4.10. NUS-PRO

3.4.11. UAV123

3.4.12. VOT Challenge Dataset

3.5. Activity and Behavior Detection Datasets

3.5.1. CAVIAR Project Benchmark Datasets

3.5.2. KTH Dataset

3.5.3. WEIZMANN Dataset

3.5.4. ETISEO Dataset

3.5.5. CASIA Action

3.5.6. HOHA

3.5.7. MSR Action

3.5.8. HOLLYWOOD2

3.5.9. i3DPost Multi-View (2009)

3.5.10. BEHAVE Dataset

3.5.11. TV Human Interaction Dataset

3.5.12. MuHAVi Dataset

3.5.13. UT-Interaction (2010)

3.5.14. Human Motion Database (HMDB51)

3.5.15. VIRAT

3.5.16. VideoWeb

3.5.17. MPII Cooking Activities Dataset

3.5.18. UCF101 Dataset

3.5.19. ADL Activity Recognition Dataset

3.5.20. Sports-1M Dataset

3.5.21. THUMOS Dataset

3.5.22. ActivityNet

3.5.23. FCVID

3.5.24. AVA Actions

3.6. Multipurpose Datasets

3.6.1. YFCC-100M

3.6.2. SUN Database

3.6.3. MIT Flickr Material Database

3.6.4. VidTIMIT

3.6.5. Berkeley DeepDrive

3.6.6. Oxford Robotcar Dataset

- Cameras: 1 Point Grey Bumblebee XB3 trinocular stereo camera, acquiring images with 1280 × 960 resolution at 16 Hz; 3 Point Grey Grasshopper2 monocular cameras, acquiring images with 1024 × 1024 resolution;

- LIDAR: 2 SICK LMS-151 2D LIDARs, operating at 50 Hz with a 50 m range; 1 SICK LD-MRS 3D LIDAR, operating at 12.5 Hz with a 50 m range;

- GPS/INS: 1 NovAtel SPAN-CPT ALIGN inertial and GPS/GLONASS navigation system.

3.7. Overview of Metadata Formats Employed in CV Datasets

3.7.1. Pascal VoC

3.7.2. COCO JSON

- Info: high-level information about the dataset;

- Licenses: list of image licenses that apply to images in the dataset;

- Categories: list of categories. Categories can belong to a supercategory;

- Images: all the image information in the dataset, without bounding-box or segmentation information;

- Annotations: list of every individual object annotation from every image in the dataset.
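The five sections listed above can be illustrated with a toy annotation file built in Python. This is a sketch only: the field names follow the commonly documented COCO object-detection layout, while every value (file name, ids, coordinates, license) is invented for illustration, and `annotations_by_image` is a hypothetical helper, not part of any COCO tooling.

```python
import json

# Toy COCO-style structure; all values are invented for illustration.
coco = {
    "info": {"description": "Toy dataset", "version": "1.0", "year": 2021},
    "licenses": [{"id": 1, "name": "Example license", "url": ""}],
    "categories": [{"id": 1, "name": "dog", "supercategory": "animal"}],
    "images": [
        {"id": 10, "file_name": "000010.jpg", "width": 640, "height": 480, "license": 1}
    ],
    "annotations": [
        {
            "id": 100,
            "image_id": 10,      # links the annotation to an entry in "images"
            "category_id": 1,    # links the annotation to an entry in "categories"
            "bbox": [100.0, 120.0, 200.0, 150.0],  # [x, y, width, height]
            "area": 30000.0,
            "iscrowd": 0,
            # Polygon as a flat list of x, y pairs (here, the box corners).
            "segmentation": [[100.0, 120.0, 300.0, 120.0, 300.0, 270.0, 100.0, 270.0]],
        }
    ],
}


def annotations_by_image(dataset):
    """Group annotations by the id of the image they belong to."""
    index = {}
    for ann in dataset["annotations"]:
        index.setdefault(ann["image_id"], []).append(ann)
    return index


# Round-trip through JSON text, as the data would be stored on disk.
serialized = json.dumps(coco)
index = annotations_by_image(json.loads(serialized))
```

Keeping image records and annotations in separate lists, joined by `image_id`, is what allows the Images section to stay free of bounding-box and segmentation data.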

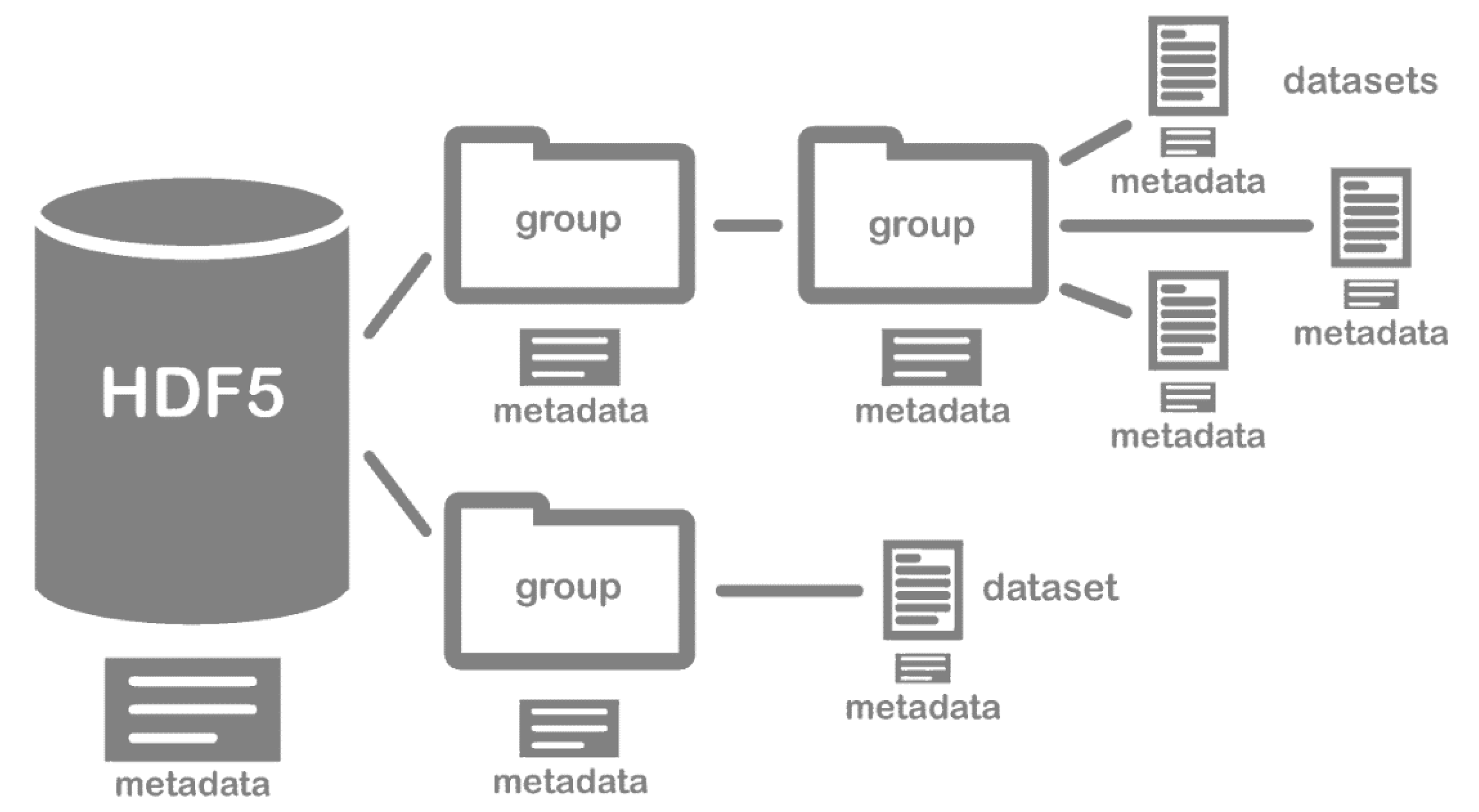

3.7.3. HDF-5

- Group: a folder-like element within an HDF5 file that may contain other groups or datasets;

- Dataset: the actual data contained within the HDF5 file. Datasets are often (but do not have to be) stored within groups in the file.

3.7.4. CAVIAR’s CVML

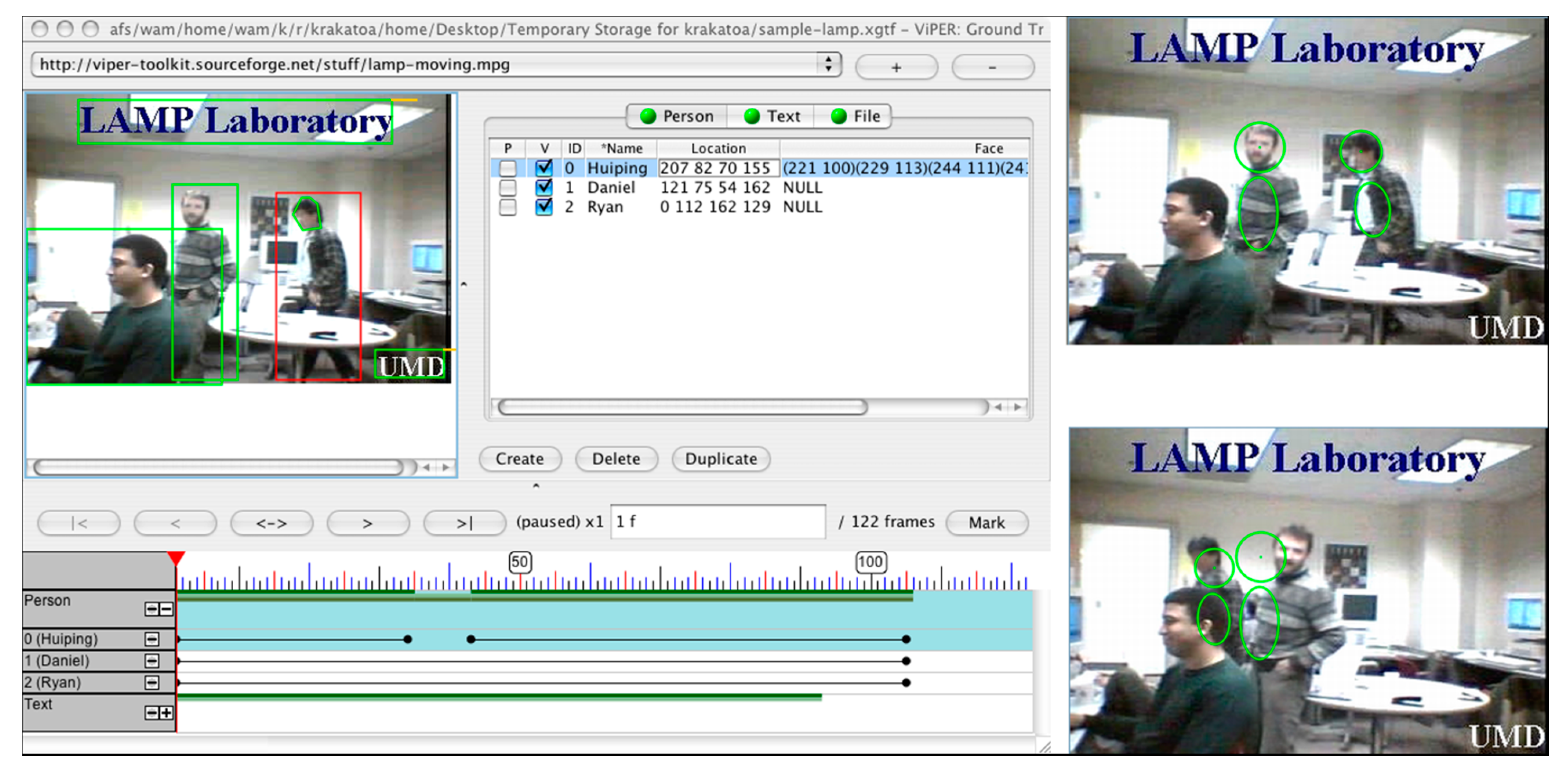

3.7.5. ViPER

4. Results Summary, Analysis, and Discussion

4.1. Analysis of Datasets for Facial Recognition

4.2. Analysis of Datasets for Object/Scenario Detection and Recognition

4.3. Analysis of Datasets for Object Tracking

4.4. Analysis of Datasets for Activity and Behavior Detection

4.5. Analysis of Multipurpose Datasets

4.6. Analysis of Employed Metadata Formats

- Base format: the base textual format employed by the specific metadata format/language;

- Metadata type: the dimension and focus of the annotations that the format enables. The possible types are low-level media feature metadata; image segmentation metadata; metadata describing spatial, temporal, and spatio-temporal dimensions; content-descriptive metadata that address the semantics of the media; and administrative metadata that describe aspects such as creation date and creator;

- Vocabulary expressiveness: the structuredness and comprehensiveness of the annotation vocabulary. Employing an ontology adds to the vocabulary’s structuredness. Ontologies, in turn, may support only object concepts or, more comprehensively, relationship concepts as well;

- Granularity: whether annotations may apply only to a content asset as a whole or may also target specific sub-parts of it. In static images, annotations may be scene-level or global (for the entire image), or region-based, local, and segment-based (for image segments). For video content, annotations may refer to the entire video, temporal segments of it (shots), individual frames, regions within frames, or moving regions, i.e., regions followed across a sequence of frames;

- Employment: the datasets in which each format is employed.
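The comparison dimensions above can be captured in a small record type, which makes it easier to tabulate formats side by side. The sketch below is illustrative only: the class names, field names, and the `ExampleFormat` entry are hypothetical and do not describe any real format assessed in this survey.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Granularity(Enum):
    """Annotation scopes named in the granularity criterion."""
    GLOBAL = "entire image or video"
    TEMPORAL_SEGMENT = "temporal segment (shot)"
    FRAME = "individual frame"
    REGION = "region within an image or frame"
    MOVING_REGION = "region followed across frames"


@dataclass
class MetadataFormatProfile:
    """Hypothetical record holding one format's assessment."""
    name: str
    base_format: str                   # e.g., "XML" or "JSON"
    metadata_types: set[str]           # e.g., {"spatial", "administrative"}
    uses_ontology: bool                # part of vocabulary expressiveness
    granularities: set[Granularity]
    employed_in: list[str] = field(default_factory=list)

    def supports_local_annotation(self) -> bool:
        """True if anything finer than whole-asset annotation is possible."""
        return bool(self.granularities - {Granularity.GLOBAL})


# Placeholder entry; not a real format.
example = MetadataFormatProfile(
    name="ExampleFormat",
    base_format="XML",
    metadata_types={"spatial", "content descriptive"},
    uses_ontology=False,
    granularities={Granularity.GLOBAL, Granularity.REGION},
    employed_in=["ExampleDataset"],
)
```

Modeling granularity as a set rather than a single value reflects that one format may allow annotations at several levels at once.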

4.7. Overall Analysis and Discussion

5. Limitations

- Cross-checking information obtained from different documentary sources about each MLCV dataset, so as to acquire information that is complete and coherent;

- Covering a very broad group of datasets (for each of the surveyed application domains) to average out any partial information insufficiencies and increase the representativeness of our sample;

- Excluding statistically aberrant data from the synthesis and analysis, while explicitly noting and justifying each exclusion (Section 4).

6. Conclusions

6.1. The Way Forward

6.2. Final Remarks

Author Contributions

Funding

Conflicts of Interest

References

- Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 743–761. [Google Scholar] [CrossRef]

- Chaquet, J.M.; Carmona, E.J.; Fernández-Caballero, A. A survey of video datasets for human action and activity recognition. Comput. Vision Image Underst. 2013, 117, 633–659. [Google Scholar] [CrossRef]

- Jaimes, A.; Sebe, N. Multimodal human-computer interaction: A survey. Comput. Vision Image Underst. 2007, 108, 116–134. [Google Scholar] [CrossRef]

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237. [Google Scholar] [CrossRef]

- Mariano, V.Y. Performance Evaluation of Object Detection Algorithms. In Proceedings of the 16th International Conference on Pattern Recognition, Quebec City, QC, Canada, 11–15 August 2002; pp. 965–969. [Google Scholar]

- Everingham, M.; Eslami, S.A.; van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes challenge: A retrospective. Int. J. Comput. Vision 2015, 111, 98–136. [Google Scholar] [CrossRef]

- Zhang, J.; Li, W.; Ogunbona, P.O.; Wang, P.; Tang, C. RGB-D-based action recognition datasets: A survey. Pattern Recognit. 2016, 60, 86–105. [Google Scholar] [CrossRef]

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vision 2020, 128, 261–318. [Google Scholar] [CrossRef]

- Borji, A.; Cheng, M.M.; Hou, Q.; Jiang, H.; Li, J. Salient object detection: A survey. Comput. Visual Media 2019, 5, 117–150. [Google Scholar] [CrossRef]

- Bernardi, R.; Cakici, R.; Elliott, D.; Erdem, A.; Erdem, E.; Ikizler-Cinbis, N.; Keller, F.; Muscat, A.; Plank, B. Automatic description generation from images: A survey of models, datasets, and evaluation measures. J. Artif. Intell. Res. 2016, 55, 409–442. [Google Scholar] [CrossRef]

- Samaria, F.S.; Harter, A.C. Parameterisation of a stochastic model for human face identification. In Proceedings of the 1994 IEEE Workshop on Applications of Computer Vision, IEEE, Sarasota, FL, USA, 5–7 December 1994; pp. 138–142. [Google Scholar]

- Olivetti Face Database Website. Available online: http://www.cam-orl.co.uk/facedatabase.html (accessed on 19 January 2021).

- The FERET Database WebPage. Available online: https://www.nist.gov/programs-projects/face-recognition-technology-feret (accessed on 19 January 2021).

- National Science and Technology Council, Preparing for the Future of Artificial Intelligence. 2016. Available online: https://obamawhitehouse.archives.gov/sites/default/files/whitehouse_files/microsites/ostp/NSTC/preparing_for_the_future_of_ai.pdf (accessed on 19 January 2021).

- Messer, K.; Matas, J.; Kittler, J.; Luettin, J.; Maitre, G. XM2VTSDB: The extended M2VTS database. In Proceedings of the Second International Conference on Audio and Video-Based Biometric Person Authentication, Washington, DC, USA, 22–24 March 1999; Volume 964, pp. 965–966. [Google Scholar]

- XM2VTSDB Website. Available online: http://www.ee.surrey.ac.uk/CVSSP/xm2vtsdb/ (accessed on 19 January 2021).

- Beumier, C.; Acheroy, M. Automatic 3D face authentication. Image Vision Comput. 2000, 18, 315–321. [Google Scholar] [CrossRef]

- 3D_RMA Database Website. Available online: http://www.sic.rma.ac.be/~beumier/DB/3d_rma.html (accessed on 19 January 2021).

- Marszalec, E.A.; Martinkauppi, J.B.; Soriano, M.N.; Pietikaeinen, M. Physics-based face database for color research. J. Electron. Imaging 2000, 9, 32–39. [Google Scholar]

- Georghiades, A.S.; Belhumeur, P.N.; Kriegman, D.J. From few to many: Illumination cone models for face recognition under variable lighting and pose. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 643–660. [Google Scholar] [CrossRef]

- Yale Face Databases Website. Available online: http://cvc.cs.yale.edu/cvc/projects/yalefaces/yalefaces.html (accessed on 19 January 2021).

- Phillips, P.J.; Flynn, P.J.; Scruggs, T.; Bowyer, K.W.; Chang, J.; Hoffman, K.; Marques, J.; Min, J.; Worek, W. Overview of the face recognition grand challenge. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), IEEE, San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 947–954. [Google Scholar]

- Panis, G.; Lanitis, A. An overview of research activities in facial age estimation using the FG-NET aging database. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 737–750. [Google Scholar]

- Grgic, M.; Delac, K.; Grgic, S. SCface–surveillance cameras face database. Multimed. Tools Appl. 2011, 51, 863–879. [Google Scholar] [CrossRef]

- Yin, L.; Wei, X.; Sun, Y.; Wang, J.; Rosato, M.J. A 3D facial expression database for facial behavior research. In Proceedings of the 7th International Conference on Automatic Face and Gesture Recognition (FGR06), IEEE, Southampton, UK, 10–12 April 2006; pp. 211–216. [Google Scholar]

- Huang, G.B.; Ramesh, M.; Berg, T.; Learned-Miller, E. Labeled Faces in the Wild: A Database for Studying Face Recognition in Unconstrained Environments; Technical Report 07–49; University of Massachusetts: Amherst, MA, USA, 2007. [Google Scholar]

- Gao, W.; Cao, B.; Shan, S.; Chen, X.; Zhou, D.; Zhang, X.; Zhao, D. The CAS-PEAL large-scale Chinese face database and baseline evaluations. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2007, 38, 149–161. [Google Scholar]

- Gross, R.; Matthews, I.; Cohn, J.; Kanade, T.; Baker, S. Multi-pie. Image Vision Comput. 2010, 28, 807–813. [Google Scholar] [CrossRef] [PubMed]

- Kumar, N.; Berg, A.C.; Belhumeur, P.N.; Nayar, S.K. Attribute and simile classifiers for face verification. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, IEEE, Kyoto, Japan, 27 September–4 October 2009; pp. 365–372. [Google Scholar]

- Langner, O.; Dotsch, R.; Bijlstra, G.; Wigboldus, D.H.; Hawk, S.T.; van Knippenberg, A.D. Presentation and validation of the Radboud Faces Database. Cogn. Emot. 2010, 24, 1377–1388. [Google Scholar] [CrossRef]

- Gupta, S.; Castleman, K.R.; Markey, M.K.; Bovik, A.C. Texas 3D face recognition database. In Proceedings of the 2010 IEEE Southwest Symposium on Image Analysis & Interpretation (SSIAI), IEEE, Austin, TX, USA, 23–25 May 2010; pp. 97–100. [Google Scholar]

- Wolf, L.; Hassner, T.; Maoz, I. Face recognition in unconstrained videos with matched background similarity. In Proceedings of the 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2011), IEEE, Colorado Springs, CO, USA, 21–23 June 2011; pp. 529–534. [Google Scholar]

- Wong, Y.; Chen, S.; Mau, S.; Sanderson, C.; Lovell, B.C. Patch-based probabilistic image quality assessment for face selection and improved video-based face recognition. In 2011 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR 2011 WORKSHOPS); IEEE: Colorado Springs, CO, USA, 2011; pp. 74–81. [Google Scholar]

- Ng, H.W.; Winkler, S. A data-driven approach to cleaning large face datasets. In 2014 IEEE International Conference on Image Processing (ICIP); IEEE: Paris, France, 2014; pp. 343–347. [Google Scholar]

- Yi, D.; Lei, Z.; Liao, S.; Li, S.Z. Learning face representation from scratch. arXiv 2014, arXiv:1411.7923. [Google Scholar]

- Eidinger, E.; Enbar, R.; Hassner, T. Age and gender estimation of unfiltered faces. IEEE Trans. Inf. For. Secur. 2014, 9, 2170–2179. [Google Scholar] [CrossRef]

- Min, R.; Kose, N.; Dugelay, J.L. Kinectfacedb: A kinect database for face recognition. IEEE Trans. Syst. Man Cybern. Syst. 2014, 44, 1534–1548. [Google Scholar] [CrossRef]

- Sun, Y.; Wang, X.; Tang, X. Hybrid deep learning for face verification. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 1489–1496. [Google Scholar]

- Kemelmacher-Shlizerman, I.; Seitz, S.M.; Miller, D.; Brossard, E. The megaface benchmark: 1 million faces for recognition at scale. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4873–4882. [Google Scholar]

- Bansal, A.; Nanduri, A.; Castillo, C.D.; Ranjan, R.; Chellappa, R. Umdfaces: An annotated face dataset for training deep networks. In 2017 IEEE International Joint Conference on Biometrics (IJCB); IEEE: Denver, CO, USA, 2017; pp. 464–473. [Google Scholar]

- Rothe, R.; Timofte, R.; van Gool, L. Dex: Deep expectation of apparent age from a single image. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 11–18 December 2015; pp. 10–15. [Google Scholar]

- Cao, Q.; Shen, L.; Xie, W.; Parkhi, O.M.; Zisserman, A. Vggface2: A dataset for recognising faces across pose and age. In 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018); IEEE: Xi’an, China, 2018; pp. 67–74. [Google Scholar]

- Tufts Face Database Webpage at Kaggle. Available online: https://www.kaggle.com/kpvisionlab/tufts-face-database (accessed on 19 January 2021).

- Nene, S.A.; Nayar, S.K.; Murase, H. Columbia Object Image Library (coil-100)-Technical Report No. CUCS-006-96. 1996. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.360.6420&rep=rep1&type=pdf (accessed on 19 January 2021).

- Microsoft Research Cambridge Dataset Website. Available online: https://www.microsoft.com/en-us/research/project/image-understanding (accessed on 19 January 2021).

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the 8th IEEE International Conference on Computer Vision. ICCV 2001; IEEE: Vancouver, BC, Canada, 2001; Volume 2, pp. 416–423. [Google Scholar]

- Lai, K.; Bo, L.; Ren, X.; Fox, D. A large-scale hierarchical multi-view rgb-d object dataset. In 2011 IEEE International Conference on Robotics and Automation; IEEE: Shanghai, China, 2011; pp. 1817–1824. [Google Scholar]

- LeCun, Y.; Huang, F.J.; Bottou, L. Learning methods for generic object recognition with invariance to pose and lighting. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2004; CVPR 2004; IEEE: Washington, DC, USA, 2004; Volume 2, p. II-104. [Google Scholar]

- Moreels, P.; Perona, P. Evaluation of features detectors and descriptors based on 3D objects. In Tenth IEEE International Conference on Computer Vision (ICCV’05); IEEE: Beijing, China, 17–21 October 2005; Volume 1, pp. 800–807. [Google Scholar]

- Griffin, G.; Holub, A.; Perona, P. Caltech-256 object category dataset (Self-published). 2007. Available online: https://authors.library.caltech.edu/7694/1/CNS-TR-2007-001.pdf (accessed on 19 January 2021).

- Russell, B.C.; Torralba, A.; Murphy, K.P.; Freeman, W.T. LabelMe: A database and web-based tool for image annotation. Int. J. Comput. Vision 2008, 77, 157–173. [Google Scholar] [CrossRef]

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Miami, FL, USA, 2009; pp. 248–255. [Google Scholar]

- Brostow, G.J.; Fauqueur, J.; Cipolla, R. Semantic object classes in video: A high-definition ground truth database. Pattern Recognit. Lett. 2009, 30, 88–97. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. 2009. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.222.9220&rep=rep1&type=pdf (accessed on 19 January 2021).

- Chua, T.S.; Tang, J.; Hong, R.; Li, H.; Luo, Z.; Zheng, Y. NUS-WIDE: A real-world web image database from National University of Singapore. In Proceedings of the ACM International Conference on Image and Video Retrieval, Santorini, Greece, 8–10 July 2009; pp. 1–9. [Google Scholar]

- Quattoni, A.; Torralba, A. Recognizing indoor scenes. In 2009 IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Miami, FL, USA, 2009; pp. 413–420. [Google Scholar]

- SBU Captioned Photo Dataset Webpage. Available online: http://vision.cs.stonybrook.edu/~vicente/sbucaptions (accessed on 19 January 2021).

- Ordonez, V.; Kulkarni, G.; Berg, T.L. Im2text: Describing images using 1 million captioned photographs. In Proceedings of the 24th International Conference on Neural Information Processing Systems, Granada, Spain, 12–17 December 2011; pp. 1143–1151. [Google Scholar]

- Coates, A.; Ng, A.; Lee, H. An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Ft., Lauderdale, FL, USA, 11–13 April 2011; pp. 215–223. [Google Scholar]

- Hirzer, M.; Beleznai, C.; Roth, P.M.; Bischof, H. Person re-identification by descriptive and discriminative classification. In Scandinavian Conference on Image Analysis; Springer: Berlin/Heidelberg, Germany, 2011; pp. 91–102. [Google Scholar]

- Caltech-UCSD Birds-200-2011 Dataset Website. Available online: http://www.vision.caltech.edu/visipedia/CUB-200-2011.html (accessed on 19 January 2021).

- Hariharan, B.; Arbeláez, P.; Bourdev, L.; Maji, S.; Malik, J. Semantic contours from inverse detectors. In 2011 International Conference on Computer Vision; IEEE: Barcelona, Spain, 2011; pp. 991–998. [Google Scholar]

- Khosla, A.; Jayadevaprakash, N.; Yao, B.; Li, F.F. Novel dataset for fine-grained image categorization: Stanford dogs. In Proceedings of the CVPR Workshop on Fine-Grained Visual Categorization (FGVC), Colorado Springs, CO, USA, 25 June 2011; Volume 2. No. 1. [Google Scholar]

- Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor segmentation and support inference from rgbd images. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 746–760. [Google Scholar]

- Kumar, N.; Belhumeur, P.N.; Biswas, A.; Jacobs, D.W.; Kress, W.J.; Lopez, I.C.; Soares, J.V. Leafsnap: A computer vision system for automatic plant species identification. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2012; pp. 502–516. [Google Scholar]

- Parkhi, O.M.; Vedaldi, A.; Zisserman, A.; Jawahar, C.V. Cats and dogs. In 2012 IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Providence, RI, USA, 2012; pp. 3498–3505. [Google Scholar]

- Mogelmose, A.; Trivedi, M.M.; Moeslund, T.B. Vision-based traffic sign detection and analysis for intelligent driver assistance systems: Perspectives and survey. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1484–1497. [Google Scholar] [CrossRef]

- Scharwächter, T.; Enzweiler, M.; Franke, U.; Roth, S. Efficient multi-cue scene segmentation. In German Conference on Pattern Recognition; Springer: Berlin/Heidelberg, Germany, 2013; pp. 435–445. [Google Scholar]

- Krause, J.; Stark, M.; Deng, J.; Fei-Fei, L. 3d object representations for fine-grained categorization. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 1–8 December 2013; pp. 554–561. [Google Scholar]

- Maji, S.; Rahtu, E.; Kannala, J.; Blaschko, M.; Vedaldi, A. Fine-grained visual classification of aircraft. arXiv 2013, arXiv:1306.5151. [Google Scholar]

- Microsoft Research Dense Visual Annotation Corpus Download Page. Available online: https://www.microsoft.com/en-us/download/details.aspx?id=52523 (accessed on 19 January 2021).

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 740–755. [Google Scholar]

- Scharstein, D.; Hirschmüller, H.; Kitajima, Y.; Krathwohl, G.; Nešić, N.; Wang, X.; Westling, P. High-resolution stereo datasets with subpixel-accurate ground truth. In German Conference on Pattern Recognition; Springer: Cham, Switzerland, 2014; pp. 31–42. [Google Scholar]

- Young, P.; Lai, A.; Hodosh, M.; Hockenmaier, J. From image descriptions to visual denotations: New similarity metrics for semantic inference over event descriptions. Trans. Assoc. Comput. Linguist. 2014, 2, 67–78. [Google Scholar] [CrossRef]

- Wang, T.; Gong, S.; Zhu, X.; Wang, S. Person Re-Identification by Video Ranking. In Proceedings of the 13th European Conference on Computer Vision (ECCV); Springer: Zurich, Switzerland, 2014. [Google Scholar]

- Timofte, R.; Zimmermann, K.; van Gool, L. Multi-view traffic sign detection, recognition, and 3D localisation. Mach. Vision Appl. 2014, 25, 633–647. [Google Scholar] [CrossRef]

- Mottaghi, R.; Chen, X.; Liu, X.; Cho, N.G.; Lee, S.W.; Fidler, S.; Urtasun, R.; Yuille, A. The role of context for object detection and semantic segmentation in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 891–898. [Google Scholar]

- Cordts, M.; Omran, M.; Ramos, S.; Scharwächter, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset. In Proceeding of the 28th IEEE Conference on Computer Vision and Pattern Recognition, Workshop on the Future of Datasets in Vision; IEEE: Boston, MA, USA, 11 June 2015; Volume 2. [Google Scholar]

- Yang, L.; Luo, P.; Loy, C.C.; Tang, X. A large-scale car dataset for fine-grained categorization and verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3973–3981. [Google Scholar]

- YouTube8M Dataset Webpage at Google Research Website. Available online: https://research.google.com/youtube8m (accessed on 19 January 2021).

- Perazzi, F.; Pont-Tuset, J.; McWilliams, B.; van Gool, L.; Gross, M.; Sorkine-Hornung, A. A benchmark dataset and evaluation methodology for video object segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 724–732. [Google Scholar]

- van Horn, G.; Aodha, O.M.; Song, Y.; Cui, Y.; Sun, C.; Shepard, A.; Adam, H.; Perona, P.; Belongie, S. The inaturalist species classification and detection dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8769–8778. [Google Scholar]

- Enzweiler, M.; Gavrila, D.M. Monocular pedestrian detection: Survey and experiments. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 2179–2195. [Google Scholar] [CrossRef] [PubMed]

- Krishna, R.; Zhu, Y.; Groth, O.; Johnson, J.; Hata, K.; Kravitz, J.; Chen, S.; Kalantidis, Y.; Li, L.J.; Shamma, D.A.; et al. Visual genome: Connecting language and vision using crowdsourced dense image annotations. Int. J. Comput. Vision 2017, 123, 32–73. [Google Scholar] [CrossRef]

- Open Images Dataset Website. Available online: https://ai.googleblog.com/2016/09/introducing-open-images-dataset.html (accessed on 19 January 2021).

- Kuznetsova, A.; Rom, H.; Alldrin, N.; Uijlings, J.; Krasin, I.; Pont-Tuset, J.; Kamali, S.; Popov, S.; Malloci, M.; Duerig, T.; et al. The open images dataset v4: Unified image classification, object detection, and visual relationship detection at scale. arXiv 2018, arXiv:1811.00982. [Google Scholar] [CrossRef]

- Sigal, L.; Black, M.J. Humaneva: Synchronized Video and Motion Capture Dataset for Evaluation of Articulated Human Motion; Brown Univertsity TR: Providence, RI, USA, 2006; p. 120. [Google Scholar]

- Ess, A.; Leibe, B.; van Gool, L. Depth and appearance for mobile scene analysis. In 2007 IEEE 11th International Conference on Computer Vision; IEEE: Rio de Janeiro, Brazil, 2007; pp. 1–8. [Google Scholar]

- Wojek, C.; Walk, S.; Schiele, B. Multi-cue onboard pedestrian detection. In 2009 IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Miami, FL, USA, 2009; pp. 794–801. [Google Scholar]

- Dollár, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: A benchmark. In 2009 IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Miami, FL, USA, 2009; pp. 304–311. [Google Scholar]

- KITTI Benchmark Suite Dataset Website. Available online: http://www.cvlibs.net/datasets/kitti (accessed on 19 January 2021).

- Smeulders, A.W.; Chu, D.M.; Cucchiara, R.; Calderara, S.; Dehghan, A.; Shah, M. Visual tracking: An experimental survey. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 1442–1468. [Google Scholar]

- Visual Tracker Benchmark Dataset Webpage. Available online: http://cvlab.hanyang.ac.kr/tracker_benchmark/datasets.html (accessed on 19 January 2021).

- Liang, P.; Blasch, E.; Ling, H. Encoding color information for visual tracking: Algorithms and benchmark. IEEE Trans. Image Process. 2015, 24, 5630–5644. [Google Scholar] [CrossRef] [PubMed]

- Li, A.; Lin, M.; Wu, Y.; Yang, M.H.; Yan, S. Nus-pro: A new visual tracking challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 335–349. [Google Scholar] [CrossRef]

- Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for uav tracking. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 445–461. [Google Scholar]

- Kristan, M.; Matas, J.; Leonardis, A.; Felsberg, M.; Pflugfelder, R.; Kamarainen, J.K.; Zajc, L.C.; Drbohlav, O.; Lukezic, A.; Berg, A.; et al. The seventh visual object tracking vot2019 challenge results. In Proceedings of the 12th IEEE International Conference on Computer Vision Workshops, Kyoto, Japan, 27 September–4 October 2019. [Google Scholar]

- CAVIAR Project Website. Available online: http://homepages.inf.ed.ac.uk/rbf/CAVIAR/caviar.htm (accessed on 19 January 2021).

- KTH Dataset for Recognition of human actions HomePage. Available online: http://www.nada.kth.se/cvap/actions (accessed on 19 January 2021).

- Schuldt, C.; Laptev, I.; Caputo, B. Recognizing human actions: A local SVM approach. In Proceedings of the 17th International Conference on Pattern Recognition, 2004; ICPR 2004; IEEE: Cambridge, UK, 2004; Volume 3, pp. 32–36. [Google Scholar]

- WEIZMANN Dataset HomePage. Available online: http://www.wisdom.weizmann.ac.il/%7Evision/SpaceTimeActions.html (accessed on 19 January 2021).

- Blank, M.; Gorelick, L.; Shechtman, E.; Irani, M.; Basri, R. Actions as space-time shapes. In Tenth IEEE International Conference on Computer Vision (ICCV’05); IEEE: Beijing, China, 17–21 October 2005; Volume 2, pp. 1395–1402. [Google Scholar]

- ETISEO Dataset HomePage. Available online: http://www-sop.inria.fr/orion/ETISEO (accessed on 19 January 2021).

- Nghiem, A.T.; Bremond, F.; Thonnat, M.; Valentin, V. ETISEO, performance evaluation for video surveillance systems. In 2007 IEEE Conference on Advanced Video and Signal Based Surveillance; IEEE: London, UK, 2007; pp. 476–481. [Google Scholar]

- CASIA Action Dataset Website. Available online: http://www.cbsr.ia.ac.cn/english/Action%20Databases%20EN.asp (accessed on 19 January 2021).

- Laptev, I.; Marszalek, M.; Schmid, C.; Rozenfeld, B. Learning realistic human actions from movies. In 2008 IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Anchorage, AK, USA, 2008; pp. 1–8. [Google Scholar]

- Yuan, J.; Liu, Z.; Wu, Y. Discriminative subvolume search for efficient action detection. In 2009 IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Miami, FL, USA, 2009; pp. 2442–2449. [Google Scholar]

- Marszalek, M.; Laptev, I.; Schmid, C. Actions in context. In 2009 IEEE Conference on Computer Vision and Pattern Recognition; IEEE: Miami, FL, USA, 2009; pp. 2929–2936. [Google Scholar]

- Gkalelis, N.; Kim, H.; Hilton, A.; Nikolaidis, N.; Pitas, I. The i3dpost multi-view and 3d human action/interaction database. In 2009 Conference for Visual Media Production; IEEE: London, UK, 2009; pp. 159–168. [Google Scholar]

- BEHAVE Dataset HomePage. Available online: http://groups.inf.ed.ac.uk/vision/BEHAVEDATA (accessed on 19 January 2021).

- Blunsden, S.J.; Fisher, R.B. The BEHAVE video dataset: Ground truthed video for multi-person behavior classification. Ann. BMVA 2010, 4, 1–12. [Google Scholar]

- TV Human Interaction Dataset HomePage. Available online: http://www.robots.ox.ac.uk/~alonso/tv_human_interactions.html (accessed on 19 January 2021).

- Patron-Perez, A.; Marszalek, M.; Zisserman, A.; Reid, I. High Five: Recognising human interactions in TV shows. In Proceedings of the British Machine Vision Conference (BMVC), Aberystwyth, UK, 31 August–3 September 2010. [Google Scholar]

- MuHAVi Dataset HomePage. Available online: http://velastin.dynu.com/MuHAVi-MAS (accessed on 19 January 2021).

- Singh, S.; Velastin, S.A.; Ragheb, H. Muhavi: A multicamera human action video dataset for the evaluation of action recognition methods. In 2010 7th IEEE International Conference on Advanced Video and Signal Based Surveillance; IEEE: Boston, MA, USA, 2010; pp. 48–55. [Google Scholar]

- Ryoo, M.S.; Aggarwal, J.K. Spatio-temporal relationship match: Video structure comparison for recognition of complex human activities. In 2009 IEEE 12th International Conference on Computer Vision; IEEE: Kyoto, Japan, 2009; pp. 1593–1600. [Google Scholar]

- Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A Large Video Database for Human Motion Recognition. In Proceedings of the 13th International Conference on Computer Vision (ICCV 2011), Barcelona, Spain, 6–13 November 2011. [Google Scholar]

- Oh, S.; Hoogs, A.; Perera, A.; Cuntoor, N.; Chen, C.C.; Lee, J.T.; Mukherjee, S.; Aggarwal, J.K.; Lee, H.; Davis, L.; et al. A large-scale benchmark dataset for event recognition in surveillance video. In CVPR 2011; IEEE: Colorado Springs, CO, USA, 2011; pp. 3153–3160. [Google Scholar]

- Denina, G.; Bhanu, B.; Nguyen, H.T.; Ding, C.; Kamal, A.; Ravishankar, C.; Roy-Chowdhury, A.; Ivers, A.; Varda, B. Videoweb dataset for multi-camera activities and non-verbal communication. In Distributed Video Sensor Networks; Springer: London, UK, 2011; pp. 335–347. [Google Scholar]

- Rohrbach, M.; Amin, S.; Andriluka, M.; Schiele, B. A Database for Fine Grained Activity Detection of Cooking Activities. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2012), IEEE, Providence, RI, USA, 16–21 June 2012. [Google Scholar]

- Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A Dataset of 101 Human Actions Classes from Videos in the Wild (Technical Report CRCV-TR-12–01), Centre for Research in Computer Vision from the University of Central Florida. 2012. Available online: https://arxiv.org/pdf/1212.0402.pdf (accessed on 19 January 2021).

- Pirsiavash, H.; Ramanan, D. Detecting activities of daily living in first-person camera views. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, Providence, RI, USA, 16–21 June 2012; pp. 2847–2854. [Google Scholar]

- Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Fei-Fei, L. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1725–1732. [Google Scholar]

- Idrees, H.; Zamir, A.R.; Jiang, Y.G.; Gorban, A.; Laptev, I.; Sukthankar, R.; Shah, M. The THUMOS challenge on action recognition for videos in the wild. Comput. Vision Image Underst. 2017, 155, 1–23. [Google Scholar] [CrossRef]

- Heilbron, F.C.; Escorcia, V.; Ghanem, B.; Niebles, J.C. Activitynet: A large-scale video benchmark for human activity understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 961–970. [Google Scholar]

- Jiang, Y.G.; Wu, Z.; Wang, J.; Xue, X.; Chang, S.F. Exploiting feature and class relationships in video categorization with regularized deep neural networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 352–364. [Google Scholar] [CrossRef] [PubMed]

- Jiang, Y.G.; Wu, Z.; Wang, J.; Xue, X.; Chang, S.F. FCVID: Fudan-Columbia Video Dataset. Available online: http://www.yugangjiang.info/publication/TPAMI17-supplementary.pdf (accessed on 19 January 2021).

- Gu, C.; Sun, C.; Ross, D.A.; Vondrick, C.; Pantofaru, C.; Li, Y.; Vijayanarasimhan, S.; Toderici, G.; Ricco, S.; Sukthankar, R.; et al. Ava: A video dataset of spatio-temporally localized atomic visual actions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6047–6056. [Google Scholar]

- Thomee, B.; Shamma, D.A.; Friedland, G.; Elizalde, B.; Ni, K.; Poland, D.; Borth, D.; Li, L.J. The new data and new challenges in multimedia research. arXiv 2015, arXiv:1503.01817. [Google Scholar]

- Xiao, J.; Hays, J.; Ehinger, K.A.; Oliva, A.; Torralba, A. Sun database: Large-scale scene recognition from abbey to zoo. In 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition; IEEE: San Francisco, CA, USA, 2010; pp. 3485–3492. [Google Scholar]

- Sharan, L.; Rosenholtz, R.; Adelson, E.H. Accuracy and speed of material categorization in real-world images. J. Vision 2014, 14, 12. [Google Scholar] [CrossRef]

- Sanderson, C. Automatic Person Verification Using Speech and Face Information. Ph.D. Thesis, School of Microelectronic Engineering of the Faculty of Engineering and Information Technology Griffith University, Brisbane, Australia, 2003. [Google Scholar]

- Yu, F.; Chen, H.; Wang, X.; Xian, W.; Chen, Y.; Liu, F.; Madhavan, V.; Darrell, T. BDD100K: A diverse driving dataset for heterogeneous multitask learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 15–16 June 2020; pp. 2636–2645. [Google Scholar]

- Maddern, W.; Pascoe, G.; Linegar, C.; Newman, P. 1 year, 1000 km: The Oxford RobotCar dataset. Int. J. Robot. Res. 2017, 36, 3–15. [Google Scholar] [CrossRef]

- FERET Colour Database Website. Available online: https://www.nist.gov/itl/products-and-services/color-feret-database (accessed on 19 January 2021).

- Bulat, A.; Tzimiropoulos, G. How far are we from solving the 2D & 3D face alignment problem? (and a dataset of 230,000 3D facial landmarks). In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1021–1030. [Google Scholar]

- Torralba, A.; Fergus, R.; Freeman, W.T. 80 million tiny images: A large data set for nonparametric object and scene recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1958–1970. [Google Scholar] [CrossRef]

- Catster Website. Available online: http://www.catster.com/ (accessed on 19 January 2021).

- Dogster Website. Available online: http://www.dogster.com/ (accessed on 19 January 2021).

- COCO Image Segmentation Challenge Website. Available online: https://cocodataset.org/#home (accessed on 19 January 2021).

- Hodosh, M.; Young, P.; Hockenmaier, J. Framing image description as a ranking task: Data, models and evaluation metrics. J. Artif. Intell. Res. 2013, 47, 853–899. [Google Scholar] [CrossRef]

- Open Images Extended–Crowdsourced Dataset Website. Available online: https://research.google/tools/datasets/open-images-extended-crowdsourced/ (accessed on 19 January 2021).

- Real, E.; Shlens, J.; Mazzocchi, S.; Pan, X.; Vanhoucke, V. Youtube-boundingboxes: A large high-precision human-annotated data set for object detection in video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5296–5305. [Google Scholar]

- Website for Team AnnieWAY. Available online: http://www.kit.edu/kit/english/pi_2011_6778.php (accessed on 19 January 2021).

- Wu, Y.; Lim, J.; Yang, M.H. Online object tracking: A benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2411–2418. [Google Scholar]

- Huang, L.; Zhao, X.; Huang, K. Got-10k: A large high-diversity benchmark for generic object tracking in the wild. In IEEE Transactions on Pattern Analysis and Machine Intelligence; IEEE: New York, NY, USA, 2019. [Google Scholar]

- Lukežič, A.; Zajc, L.Č.; Vojíř, T.; Matas, J.; Kristan, M. Now you see me: Evaluating performance in long-term visual tracking. arXiv 2018, arXiv:1804.07056. [Google Scholar]

- Li, C.; Liang, X.; Lu, Y.; Zhao, N.; Tang, J. RGB-T object tracking: Benchmark and baseline. Pattern Recognit. 2019, 96, 106977. [Google Scholar] [CrossRef]

- Lukezic, A.; Kart, U.; Kapyla, J.; Durmush, A.; Kamarainen, J.K.; Matas, J.; Kristan, M. CDTB: A color and depth visual object tracking dataset and benchmark. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 10013–10022. [Google Scholar]

- List, T.; Fisher, R.B. CVML–An XML-based Computer Vision Markup Language. In Proceedings of the 17th International Conference on Pattern Recognition, Cambridge, UK, 26 August 2004. ICPR 2004. [Google Scholar]

- Project ViPER Website. Available online: http://viper-toolkit.sourceforge.net (accessed on 19 January 2021).

- Jankowski, C.; Kalyanswamy, A.; Basson, S.; Spitz, J. NTIMIT: A phonetically balanced, continuous speech telephone bandwidth speech database. In International Conference on Acoustics, Speech, and Signal Processing; IEEE: Albuquerque, NM, USA, 1990; Volume 1, pp. 109–112. [Google Scholar]

- HDF5 Support Page. Available online: http://portal.hdfgroup.org/display/HDF5/HDF5 (accessed on 19 January 2021).

- NeonScience Webpage on HDF5. Available online: https://www.neonscience.org/about-hdf5 (accessed on 19 January 2021).

- Doermann, D.; Mihalcik, D. Tools and techniques for video performance evaluation. In Proceedings of the 15th International Conference on Pattern Recognition, Barcelona, Spain, 3–7 September 2000; pp. 167–170. [Google Scholar]

- Visual Object Tracking Challenge Website. Available online: https://www.votchallenge.net/ (accessed on 19 January 2021).

- Castro, H.; Alves, A.P. Cognitive Object Format. In International Conference on Knowledge Engineering and Ontology Development; Funchal: Madeira, Portugal, 2009. [Google Scholar]

- Castro, H.; Monteiro, J.; Pereira, A.; Silva, D.; Coelho, G.; Carvalho, P. Cognition Inspired Format for the Expression of Computer Vision Metadata. Multimed. Tools Appl. 2016, 75, 17035–17057. [Google Scholar] [CrossRef]

- Castro, H.; Andrade, M.T. ML Datasets as Synthetic Cognitive Experience Records. Int. J. Comput. Inf. Syst. Ind. Manag. Appl. 2018, 10, 289–313. [Google Scholar]

- Hall, W.; Pesenti, J. Growing the artificial intelligence industry in the UK. In Department for Digital, Culture, Media & Sport and Department for Business, Energy & Industrial Strategy; OGL: London, UK, 2017. [Google Scholar]

- Gal, M.S.; Rubinfeld, D.L. Data standardization. NYUL Rev. 2019, 94, 737. [Google Scholar] [CrossRef]

- Open Data Institute Website. Available online: https://theodi.org/ (accessed on 19 January 2021).

| RQ# | Research Questions |

|---|---|

| Pertaining to Facial Recognition (FR) Datasets | |

| RQ1 | Amount of media content in FR datasets—what is the current situation and how has it evolved throughout time? |

| RQ2 | Number of identified individuals in FR datasets—what is the current situation and how has it evolved throughout time? |

| RQ3 | Metadata in FR datasets—what are the main aspects registered in the metadata, the employed formats, and how have they evolved? |

| RQ4 | Image acquisition modes (constrained vs. free) in FR datasets—what is the current situation and how has it evolved throughout time? |

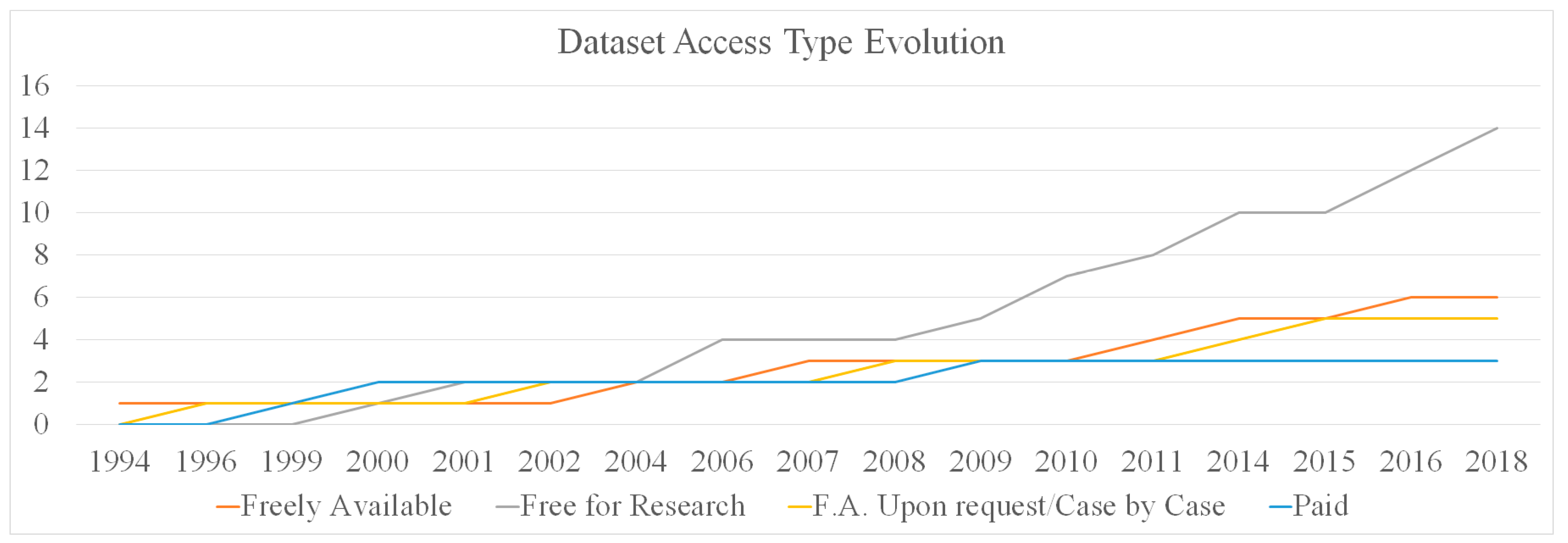

| RQ5 | Modes of access licensing to FR datasets—how have dataset access licensing modes evolved? |

| Pertaining to Object and/or Scenario Detection and Recognition (OSDR) Datasets | |

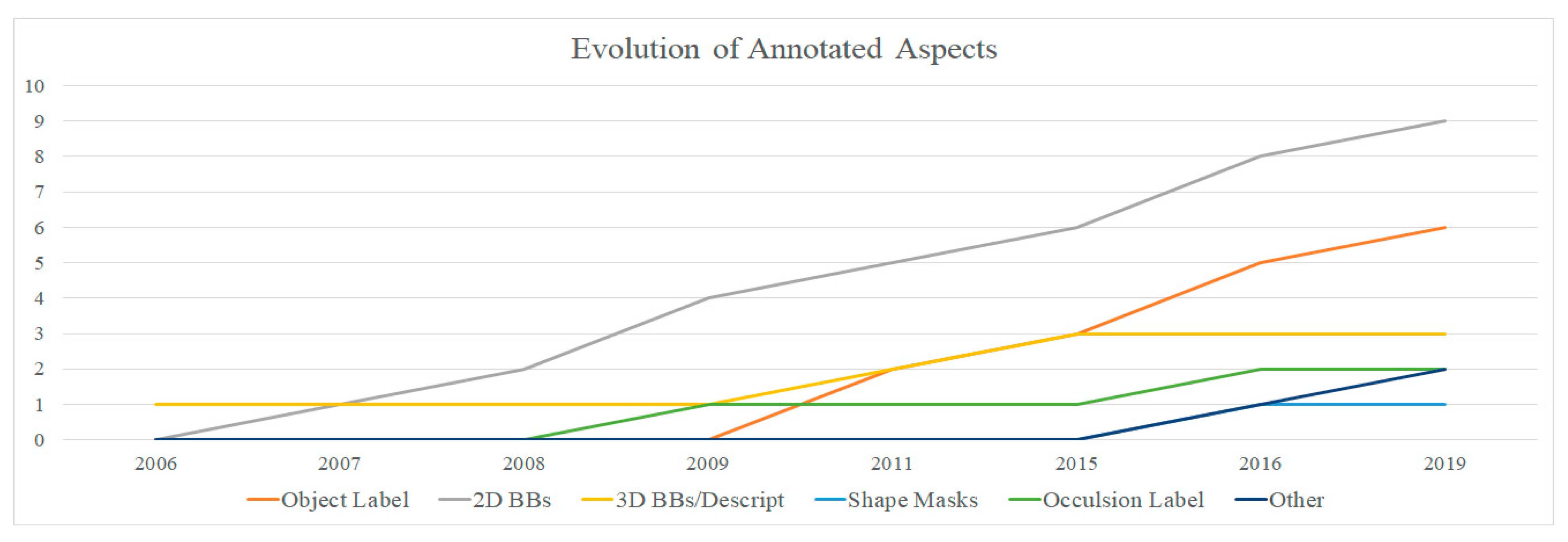

| RQ6 | Amount of media content in OSDR datasets—what is the current situation and how has it evolved throughout time? |

| RQ7 | Number of identified objects/scenarios in OSDR datasets—what is the current situation and how has it evolved throughout time? |

| RQ8 | Metadata in OSDR datasets—what are the main aspects registered in the metadata, employed formats, and how have they evolved? |

| RQ9 | Modes of access licensing to OSDR datasets—how have dataset access licensing modes evolved? |

| Object Tracking (OT) Datasets | |

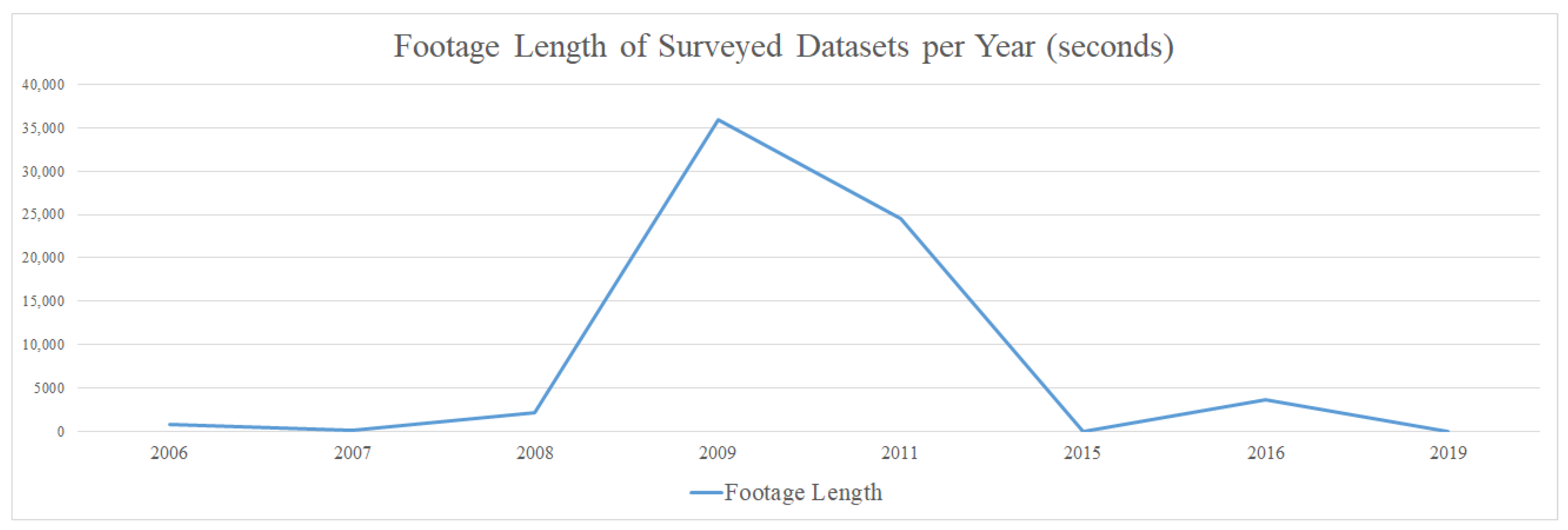

| RQ10 | Amount of footage in OT datasets—what is the current situation and how has it evolved throughout time? |

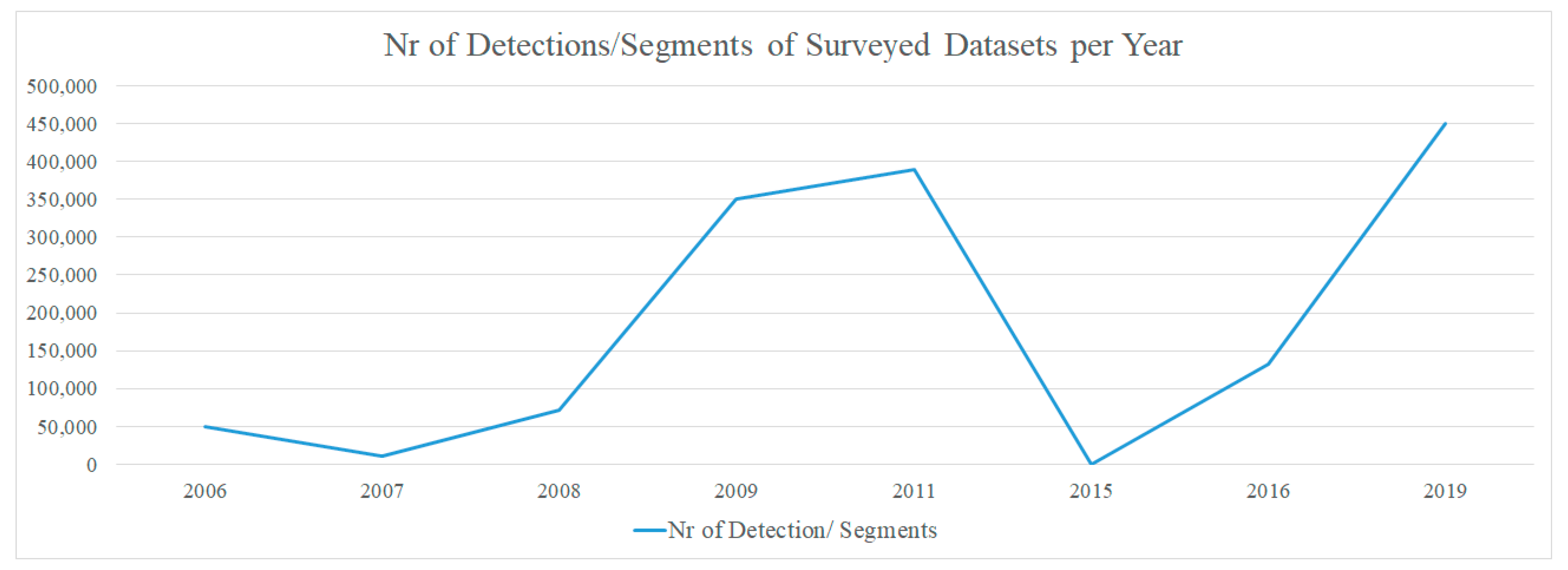

| RQ11 | Number of tracked objects classes in OT datasets—what is the current situation and how has it evolved throughout time? |

| RQ12 | Number of individual objects detections in OT datasets—what is the current situation and how has it evolved throughout time? |

| RQ13 | Number of individual objects tracked in OT datasets—what is the current situation and how has it evolved throughout time? |

| RQ14 | Metadata in OT datasets—what are the main aspects registered in the metadata, employed formats, and how have they evolved? |

| RQ15 | Modes of access licensing to OT datasets—how have dataset access licensing modes evolved? |

| Activity and Behavior Detection (ABD) Datasets | |

| RQ16 | Amount of footage in ABD datasets—what is the current situation and how has it evolved throughout time? |

| RQ17 | Amount of images in ABD datasets—what is the current situation and how has it evolved throughout time? |

| RQ18 | Number of activity detections in ABD datasets—what is the current situation and how has it evolved throughout time? |

| RQ19 | Number of activity classes targeted in ABD datasets—what is the current situation and how has it evolved throughout time? |

| RQ20 | Metadata in ABD datasets—what are the main aspects registered in the metadata, employed formats, and how have they evolved? |

| RQ21 | Modes of access licensing to ABD datasets—how have dataset access licensing modes evolved? |

| Metadata Formats | |

| RQ22 | What are the most widely used, if any, formats for the expression of MLCV datasets metadata? |

| Crowdsourcing | |

| RQ23 | What are the means employed for crowdsourcing (metadata production)? |

| Name | Creation | Refs. | Nr of Images | Identities | GT Metadata | Licensing | Notes |

|---|---|---|---|---|---|---|---|

| Olivetti Face Database | 1994 | [11,12] | 400 | 40 | EntityID, facial expression, lighting, eye glasses | Freely available | Controlled images Download in bulk |

| The FERET DB | 1996 | [13,14] | >14 K | 1199 | EntityID, pose | Free for research Case-by-case | Controlled images Download in bulk |

| XM2VTSDB | 1999 | [16,17] | - | 295 | - | Available for a fee | Video content Physical distribution (CD ROM) |

| 3D RMA | 2000 | [18,19] | 720 | 120 | Person name | Free for research | 3D captures from structured light |

| UOPB | 2000 | [20] | >2000 | 125 | Entity ID, camera calibration, illumination | Available for a fee covering delivery costs | Controlled images Physical distribution (CD ROM) |

| Extended Yale Faces DB | 2001 | [21,22] | 16,128 | 28 | Entity ID, pose, illumination in file/dir name | Free for research | Controlled images Download in bulk |

| FRGCD | 2002 | [23] | 50,000 | 222 | Person Name | Case-by-case | Controlled images |

| FG-NET | 2004 | [24] | 1002 | 82 | EntityID, age, gender, facial landmark points, etc. | Freely available | Unconstrained images Download in bulk |

| SCFace | 2006 | [25] | 4160 | 130 | Entity ID, birth date, gender, facial occlusions; camera number, distance, and angle; coordinates of eyes, nose and mouth | Free for research | Unconstrained images |

| BU-3DFE | 2006 | [26] | 2500 | 100 | Subject ID, gender, race, facial expression, feature point set and pose model. | Free for research. Negotiable for commercial use | Controlled images 3D facial captures |

| LFW | 2007 | [27] | 13,233 | 5749 | Person Name | Publicly available | Unconstrained images Download in bulk |

| CAS-PEAL Face DB | 2008 | [28] | >30 K | 1040 | Entity ID, gender and age, lighting, pose, expression, accessories, distance, time, resolution, eye locations. | Free for research on case-by-case basis | Constrained images |

| CMU Multi-PIE | 2009 | [29] | >750 K | 337 | Subject, expression, illumination, camera view | Available under paid license | Constrained images Physical distribution (disk drive) |

| PubFig | 2009 | [30] | 58,797 | 200 | Entity ID, age, gender, facial landmarks, accessories, pose, facial expression, lighting | Free for non-commercial use | Download of the metadata. Individual retrieval of images from Internet |

| Radboud Faces Database | 2010 | [31] | 8040 | 67 | Entity ID, emotion, and gaze in file name | Free for research and non-commercial | Controlled images Download in bulk |

| Texas 3DFRD | 2010 | [32] | 2298 | 118 | Entity ID, gender, ethnicity, expression, 25 fiducial points | Free for research | Constrained images, multi-modal images |

| YouTube Faces DB | 2011 | [33] | >600 K | 1595 | Identity, bounding box, head pose in .mat files | Publicly available | Unconstrained images Download in bulk |

| ChokePoint | 2011 | [35] | 64,204 | 54 | Subject ID and eye position | Free for research | Unconstrained images |

| FaceScrub | 2014 | [36] | >100 K | 530 | Person name | CC License for metadata | Unconstrained images Download of file URLs |

| CASIA-WebFaces | 2014 | [37] | >494 K | 10,575 | Person name | Free for research and non-commercial | Unconstrained images Download in bulk |

| Face Image Project | 2014 | [38] | >26 K | 2284 | Subject ID, age, gender, facial BB, pose and tilt | Publicly available | Unconstrained images Download in bulk |

| EURECOM KFC | 2014 | [39] | >2.8 K | 52 | Subject ID, face status, gender, age, occlusions, facial landmarks | Available on case-by-case basis | Constrained images |

| CelebFaces | 2015 | [40] | >202 K | 10,177 | Celebrity identity, face bounding box, landmark locations, binary attributes (ad hoc format) | Free for research Case-by-case | Piecemeal download from Google Drive |

| MegaFace | 2016 | [41] | 4.7 M | 672 K | Entity ID, facial BB and landmarks | Free for research | Unconstrained images Download in bulk/chunks |

| UMDFaces | 2016 | [42] | 4 M | 8277 | Entity ID, facial BB, gender, pose, 21 keypoints (A. Mechanical Turk) | Freely Available | Unconstrained images |

| IMDB-WIKI | 2016 | [43] | >520 K | 20,284 | Entity ID, birth, name, gender, year image was acquired, facial location and location scores | Free for academic purposes | Unconstrained images Download in chunks |

| VGGFace2 | 2018 | [44] | >3.3 M | >9000 | Entity ID, facial BBs and keypoints, pose, age in .txt and .csv files. | CC ASA Licence | Unconstrained images Download in bulk |

| Tufts Face Database | 2018 | [45] | 100 K | 112 | Entity ID, gender, age | Free for non-commercial research and education | Seven image modalities Download in chunks (per image modality) |

| Name | Creation | Refs. | Number of Images | Number of Detection/Segments | Number of Objects/Scenarios | Number of Annotators | GT Metadata | Licensing/Notes |

|---|---|---|---|---|---|---|---|---|

| COIL-100 | 1996 | [46] | 7.2 K | - | 100 | - | Object label and pose | Freely available |

| MSRCD | 2000 | [47] | >800 | >800 | 34 | - | Object ID and shape masks | Freely available for non-commercial use Download in bulk |

| BSD | 2001 | [48] | 800 | 3000 | - | 25 | Segmentation maps in .mat files | Freely available |

| RGB-D ORD | 2001 | [49] | 250 K | 250 K | 300 | - | Segmentation mask image file BB for video frames in .mat file | Freely available for non-commercial use Piecemeal download |

| NORB | 2004 | [50] | >194 K | >194 K | 5 | - | Object labels and BBs | Freely available for research Download in chunks |

| P3DTT | 2005 | [51] | 2 × 144 × 3 × 100 | - | 100 | - | Object labels and perspectives | Freely available for research |

| Caltech-256 | 2007 | [52] | 30,607 | - | 256 | - | Object labels | Freely available for research |

| LabelMe | 2008 | [53] | 30,369 | 111,490 | 2888 | - | Object labels, BBs, polygons, segmentation masks (Pascal VOC) | Freely available for research |

| ImageNet | 2009 | [54] | >14 M | >1 M | 21,841 | Crowdsourced | Object classification, BBs, features (Pascal VOC) | Freely available for non-commercial use Piecemeal download |

| CamVid | 2009 | [55] | >39 K | ≈700 × 32 | 32 | - | Pixel-level object segmentations | Freely available |

| CIFAR-10/100 | 2009 | [56] | 60 K | - | 10/100 | - | Object label | Freely available |

| NUS-WIDE | 2009 | [58] | 269,648 | 425,059 | 5018 (Flickr) 81 (manual) | - | Object labels | Freely available |

| MIT Indoor Scenes | 2009 | [59] | 15,620 | - | 67 | - | Scene label (Pascal VOC) | Freely available |

| SBU CPD | 2011 | [60,61] | 1 M | 1 M | - | Crowdsourced/automated | Image captions | Freely available |

| STL-10 | 2011 | [62] | 100 K | 500 | 10 | - | Object label | Obtained from ImageNet Download in bulk |

| PRID 2011 | 2011 | [63] | ≈ (475 + 856) × 125 | ≈ (475 + 856) × 125 | 245 | - | Bounding boxes | Freely available |

| CUB-200-2011 | 2011 | [64] | 11,788 | >>11,788 | 200 | - | Label, BBs, parts, and attributes | Freely available for research |

| SBD | 2011 | [65] | 11,355 | >20 K | 20 | Crowdsourced (Amazon Mechanical Turk) | Object boundaries and labels | Freely available for research |

| Stanford Dogs | 2011 | [66] | 20,580 | >20 K | 120 | - | Object label and BBs | Freely available for non-commercial use |

| Pascal VOC | 2012 | [6] | >11 K | >33 K | 20 | Crowdsourced | Object label, BBs, pixel-wise masks, reference points and actions (Pascal VOC) | Freely available, Flickr terms of use Download in bulk |

| NYU Depth D. | 2012 | [67] | (500 K) 1.5 K | >35 K | 894 | Crowdsourced (Amazon Mechanical Turk) | Pixel-wise object labels and masks | Freely available Download in bulk |

| Leafsnap | 2012 | [68] | >30 K | >30 K | 185 | - | Tree species, segmented images | Freely available Download in bulk |

| Oxford-IIIT Pet | 2012 | [69] | >7 K | >7 K | 23 | - | Animal breed label, head BB, body segmentation | Freely available |

| LISA TSDB | 2012 | [72] | 6610 | 7855 | 49 | - | BBs and label | Freely available for research |

| DUSD | 2013 | [73] | 10 K | 25 K | 5 | - | Pixel-level semantic class annotations | Freely available for research |

| Stanford Cars | 2013 | [75] | >16 K | >16 K | 196 | Crowdsourced (Amazon Mechanical Turk) | Car make, model, year, BB (.mat files) | Freely available Download in bulk |

| FGVC-Aircraft | 2013 | [76] | >10 K | >10 K | 102 | Crowdsourced (Amazon Mechanical Turk) | Aircraft model and BB (.txt files) | Freely available for research Download in bulk |

| MS DVAC | 2014 | [77] | 500 | 100 K | 4K | Crowdsourced (Amazon Mechanical Turk) | Object labels and BBs | Freely available for research Download in chunks |

| MS COCO | 2014 | [78] | >328 K | 2.5 M | 91 | Crowdsourced (Amazon Mechanical Turk) | Object labels and segmentations (JSON) | Freely available for research Download in chunks |

| MDs | 2014 | [80] | >2 K | - | - | - | Disparity maps | Freely available Piecemeal downloads |

| Flickr30k | 2014 | [81] | >30 K | >158 K (captions) | - | Crowdsourced (Amazon Mechanical Turk) | Image textual description | - |

| iLIDS-VID | 2014 | [83] | ≈600 × 73 | ≈600 × 73 (presumably) | 300 | - | BBs and various other info in (XML) ViPER compliant format | Freely available for research |

| BelgiumTS | 2014 | [84] | 145 K | 13,444 | 4565 | - | BBs, camera ID, and pose | Freely available for research |

| Pascal Context | 2014 | [85] | 10,103 | 10,103 × 12 | 540 | 6 | Pixel-wise segmentation masks, labels (Pascal VOC) | Freely available for research |

| Cityscapes | 2015 | [74] | >200 K total 25 K annot. | ≈(5000 × 30) + (25,000 × 20) | 30 | - | Finer and coarser pixel-wise object annotations/segmentations | Freely available for research |

| CompCars | 2015 | [86] | >214 K | >50 K | 1716 + 163 | - | Car make, model, part, attribute, view | Freely available Piecemeal downloads |

| YouTube-8M | 2016 | [87] | Millions | 237 K | 3862 | Crowdsourced/automated | Audio-visual features, video level labels | Freely available CC BY 4.0 |

| DAVIS | 2016 | [88] | 3600 | 3455 | >4 | - | Binary masks | BSD License |

| iNaturalist | 2017 | [89] | >850 K | >560 K | >5K | Crowdsourced (iNaturalist effort) | Species label and BB (same format as COCO) | Freely available for research Download in chunks |

| YouTube-BB | 2017 | [98] | 10.5 M | >5.6 M | 23 | Crowdsourced (Amazon Mechanical Turk) | Object label and bounding box | Freely available for research Download in bulk |

| Visual Genome | 2017 | [90] | >108 K | >4.5 M | ≈13,041 + 13,894 | Crowdsourced (Amazon Mechanical Turk) | Region descriptions, objects, attributes, relationships, region graphs, scene graphs, and question–answer pairs | Freely available CC BY 4.0 Download in chunks |

| Open Images Dataset (v5) | 2018 | [92,93] | 9 M | 36.5 M (img-l) 15.4 M (BBs) 375 K (rels) | 19.9 K (img-l) 600 (BBs) 57 (rels) | - | Image-level labels, bounding boxes | Hosted on GitHub Freely available CC BY 4.0 |

| YouTube-8M Segments | 2019 | [87] | Millions | 237 K | 1000 | Crowdsourced/automated | Audio-visual features, video and frame level labels | Freely available CC BY 4.0 |

| Name | Creation | Refs. | Footage Length | Number of Tracked Classes | Number of Tracked Objects | Number of Detection/Segments | GT Metadata | Licensing/Notes |

|---|---|---|---|---|---|---|---|---|

| Human Eva | 2006 | [96] | 833 s | 6 | 4 | 50k | 3D body pose descriptions (motion capture) | Freely available for research |

| ETH | 2007 | [97] | 153 s | 1 | Hundreds | 10,958 | Bounding Boxes | Freely available |

| Daimler | 2008 | [98] | 428 s + 27 m | 1 | 259 | 72,152 | Bounding Boxes | Freely available for research |

| TUD | 2009 | [99] | - | 1 | 311 | 1326 + 1776 | Bounding Boxes | Freely available |

| Caltech D | 2009 | [100] | 10 H | 1 | >2k | 350k | Bounding Boxes, occlusion labels | Freely available for research |

| KITTI | 2011 | [4,101] | 6 H | 8 | >2160 | >300k | Object labels and 3D BB across time | Available under CC ShareAlike 3.0 |

| ALOV++ | 2011 | [103] | ≈315 × 9.2 s | 64 | 315 | >89K | Object type and BB | Freely available |

| VTB | 2015 | [104] | - | - | >100 | Tens of Thousands | Object label and 3D BB | Freely available |

| TColor-128 | 2015 | [106] | - | tens | 128 | Thousands | Bounding Boxes | Freely available for research |

| NUS-PRO | 2016 | [107] | - | 17 | 160 | ≈73 × 300 + 292 | Object type, BBs, pixel-wise mask, occlusion type, fiducial points | Freely available |

| UAV123 | 2016 | [108] | ≈ 123 × 30 s | 6+ | ≈123 | >110K | Object type and BB | Available upon request |

| VOT Challenge | 2019 | [109] | - | - | >250 | >450K | Object labels, BBs. Per-frame visual attributes (.txt file) | Freely available under various licenses Provides toolkit |

| Name | Creation | Refs. | Footage Length | Nr of Images | Nr of Detection/Segments | Nr of Activities | GT Metadata | Licensing/Notes |

|---|---|---|---|---|---|---|---|---|

| CAVIAR | 2004 | [114] | ≈3853 s | ≈96,325 | >>96,325 | 8 | Bounding boxes, observed behavior, head position, gaze direction, or hand, feet, and shoulder positions | Freely available |

| KTH | 2004 | [116,117] | ≈2391 × 4s | ≈2391 × 4 × 25 | - | 6 | Action labels and frame spans | Freely available |

| WEIZMANN | 2005 | [118,119] | - | Thousands | - | 10 | Activity label (AL) per video. Background and foreground masks per image. | Freely available |

| ETISEO | 2007 | [120,121] | - | - | - | 15 | AL, BBs | Freely available for research (on a case-by-case basis) |

| CASIA Action | 2007 | [123] | ≈1446 × 18 s | ≈1446 × 18 × 25 | >1446 | 15 | AL, subjects, per sequence | Free for research (for commercial upon request) |

| HOHA | 2008 | [124] | - | - | ≈231 + 143 + 217 | 8 | AL per sequence | Freely available |

| MSR Action | 2009 | [125] | ≈325 s | ≈325 × 25 | 63 | 3 | Spatio-temporal BB per action | Freely available for research |

| HOLLYWOOD2 | 2009 | [126] | 18 H | ≈1.59 M | - | 22 | AL per sequence | Freely available |

| I3DPost | 2009 | [127] | - | - | 104 | 13 | Person ID, AL per seq., binary masks, 3D mesh per frame | Freely available for research |

| BEHAVE | 2010 | [128,129] | ≈3600 s | >90 K | >90K | 10 | AL per frame range, BB per frame | Freely available for research |

| TVH ID | 2010 | [130,131] | - | ≈85,500 | >>85,500 | 4 | BB, head orientation, interaction label | Freely available for research |

| MuHAVi | 2010 | [132,133] | - | - | - | 17 | People silhouettes per frame, AL frame ranges | Freely available |

| UT-Interaction | 2010 | [134] | 20 × 1 | 20 × 60 × 30 | 60 + 180 | 6 | AL, frame range, BBs, per activity per sequence | Freely available |

| HMDB51 | 2011 | [135] | - | - | - | 51 | AL, visible body parts, number of people, per sequence | Freely available |

| VIRAT | 2011 | [136] | 29 H | ≈29 × 3600 × 30 | >>29 × 3600 × 30 | 23 | BB per frame, AL frame range (A. Mechanical Turk) | Freely available |

| VideoWeb | 2011 | [137] | 2.5 H | ≈2.5 × 3600 × 30 | >51 | 9 | AL, frame range per sequence (XLS format) | Available upon request |

| MPII | 2012 | [138] | >8 H | >881 K | 5609/1071 | 65 | AL per frame range, body part position per frame | Freely available for research |

| UCF101 | 2012 | [139] | 27 H | ≈27 × 3600 × 25 | ≈13,320 | 101 | AL per sequence | Freely available |

| ADL | 2012 | [140] | >10 H | >1 M | ≈1 M/30 | 18 | Activity and object labels, BBs, object tracks, interaction events | Freely available for research |

| Sports-1M | 2014 | [141] | - | - | - | 487 | ALs per sequence | Freely available |

| THUMOS | 2015 | [142] | 430 H | >45 M | - | 101/20 | AL and range | Freely available for research |

| ActivityNet | 2015 | [143] | 849 H | ≈849 × 3600 × 30 | 23,064 | 203 | AL per sequence (A. Mechanical Turk) | Freely available for research |

| FCVID | 2015 | [144,145] | 4232 H | - | >91,223 | 239 | AL per sequence | Freely available for research upon request |

| AVA Actions | 2018 | [146] | 437 × 15 min | 437 × 900 | 1.62 M | 80 | BB and AL per keyframe, tracklets | YouTube video, metadata freely available |

| Name | Creation | Refs. | Footage Length | Number of Images | Number of Detections/Segments | Number of Classes | GT Metadata | Licensing/Notes |
|---|---|---|---|---|---|---|---|---|
| YFCC-100M | 2015 | [91] | - | >68 M | >>68 M | - | User tags, machine tags, etc. | Creative Commons |
| SUN Database | 2010 | [147] | - | >146 K | - | thousands | Labels and polygons (Pascal VOC) | Freely available for research |
| FMD | 2014 | [148] | - | 1000 | 1000 | 10 | Material labels | Creative Commons |
| VidTIMIT | 2003 | [149] | ≈1935 s | ≈45,580 | - | - | Spoken content, head pose | Freely available for research |
| BDD100k | 2020 | [151] | >111 H | - | >>3.3 M | - | Object label, BBs, weather, lane markings, drivable areas, pixel-level annotations | Freely available for research upon request |
| Oxford Robotcar | 2017 | [152] | - | >20 M | - | - | LIDAR, GPS, and INS data | CC BY-NC-SA 4.0 |

| Name | Base Format | Metadata Type | Vocabulary Expressiveness | Granularity | Employment |
|---|---|---|---|---|---|
| PASCAL VOC | XML | low-level media features, segmentation, content semantics | free text | scenario and region | LabelMe, ImageNet, Pascal Visual Object Classes Project, Pascal Context, MIT Indoor Scenes, SUN Database, Hollywood2 |
| COCO JSON | JSON | segmentation, content semantics, administrative metadata | free text | scenario and region | iNaturalist, MegaFace and UrbanSound (JSON but not COCO JSON) |
| HDF-5 | Binary | - | custom | custom | |
| CVML | XML | low-level media features, segmentation, content semantics | free text | frames, regions in frames, moving regions, shots | CAVIAR Project |
| ViPER | XML | segmentation, content semantics | free text | scenario and region | ViPER Project, iLIDS-VID, ETISEO Dataset, BEHAVE Dataset, THUMOS |
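To make the difference between the XML-based PASCAL VOC layout and COCO JSON more concrete, the following is a minimal Python sketch that converts one VOC-style annotation into a COCO-style dictionary. It assumes a simplified annotation holding only `filename`, `size`, and `object`/`bndbox` elements. The sample XML, the function name `voc_to_coco`, and the category numbering are invented for illustration and are not taken from any surveyed dataset. VOC stores boxes as corner coordinates (`xmin`, `ymin`, `xmax`, `ymax`), whereas COCO stores them as `[x, y, width, height]`.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical, simplified VOC-style annotation (illustrative values only).
VOC_XML = """<annotation>
  <filename>example.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>dog</name>
    <bndbox><xmin>48</xmin><ymin>240</ymin><xmax>195</xmax><ymax>371</ymax></bndbox>
  </object>
</annotation>"""


def voc_to_coco(xml_text: str, image_id: int = 1) -> dict:
    """Convert one VOC-style XML annotation into a COCO-style dict."""
    root = ET.fromstring(xml_text)
    size = root.find("size")
    image = {
        "id": image_id,
        "file_name": root.findtext("filename"),
        "width": int(size.findtext("width")),
        "height": int(size.findtext("height")),
    }
    categories = {}  # class name -> category id, assigned in order of appearance
    annotations = []
    for ann_id, obj in enumerate(root.iter("object"), start=1):
        name = obj.findtext("name")
        cat_id = categories.setdefault(name, len(categories) + 1)
        box = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (
            int(box.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        width, height = xmax - xmin, ymax - ymin
        annotations.append({
            "id": ann_id,
            "image_id": image_id,
            "category_id": cat_id,
            "bbox": [xmin, ymin, width, height],  # corners -> x, y, w, h
            "area": width * height,
            "iscrowd": 0,
        })
    return {
        "images": [image],
        "annotations": annotations,
        "categories": [{"id": i, "name": n} for n, i in categories.items()],
    }


coco = voc_to_coco(VOC_XML)
print(json.dumps(coco, indent=2))
```

The sketch ignores segmentation polygons and VOC fields such as `truncated` and `difficult`, and it does not adjust for VOC's 1-based pixel coordinates, so a real converter would need to handle those explicitly.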

| Name | Application Domain | Crowdsourcing Means | Notes |
|---|---|---|---|
| UMDFaces | Facial Recognition | Amazon Mechanical Turk | |
| CUB-200-2011 | Segmentation, Object and Scenario Recognition | Amazon Mechanical Turk | |
| SBD | Segmentation, Object and Scenario Recognition | Amazon Mechanical Turk | |
| Stanford Cars Dataset | Segmentation, Object and Scenario Recognition | Amazon Mechanical Turk | |
| FGVC-Aircraft | Segmentation, Object and Scenario Recognition | Amazon Mechanical Turk | |
| MS DVAC | Segmentation, Object and Scenario Recognition | Amazon Mechanical Turk | |
| MS COCO | Segmentation, Object and Scenario Recognition | Amazon Mechanical Turk | |
| Flickr30k | Segmentation, Object and Scenario Recognition | Amazon Mechanical Turk | |
| YouTube-BB | Segmentation, Object and Scenario Recognition | Amazon Mechanical Turk | |
| Visual Genome | Segmentation, Object and Scenario Recognition | Amazon Mechanical Turk | |
| NYU Depth D. | Segmentation, Object and Scenario Recognition | Amazon Mechanical Turk | |
| iNaturalist | Segmentation, Object and Scenario Recognition | Amazon Mechanical Turk | |
| ImageNet | Segmentation, Object and Scenario Recognition | Other crowdsourcing means | |
| Open Images Dataset | Segmentation, Object and Scenario Recognition | Google Crowdsource Android app | Partial crowdsourcing |
| VIRAT | Activity and Behavior Detection | Amazon Mechanical Turk | |
| ActivityNet | Activity and Behavior Detection | Amazon Mechanical Turk | |
| AVA Actions | Activity and Behavior Detection | Other crowdsourcing means | |
| Mozilla Common Voice | Speech Recognition | Other crowdsourcing means | |
| Fluent Speech Commands | Speech Recognition | Other crowdsourcing means | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Castro, H.F.; Cardoso, J.S.; Andrade, M.T. A Systematic Survey of ML Datasets for Prime CV Research Areas—Media and Metadata. Data 2021, 6, 12. https://doi.org/10.3390/data6020012
