A System for Acquisition, Processing and Visualization of Image Time Series from Multiple Camera Networks

Abstract

1. Introduction

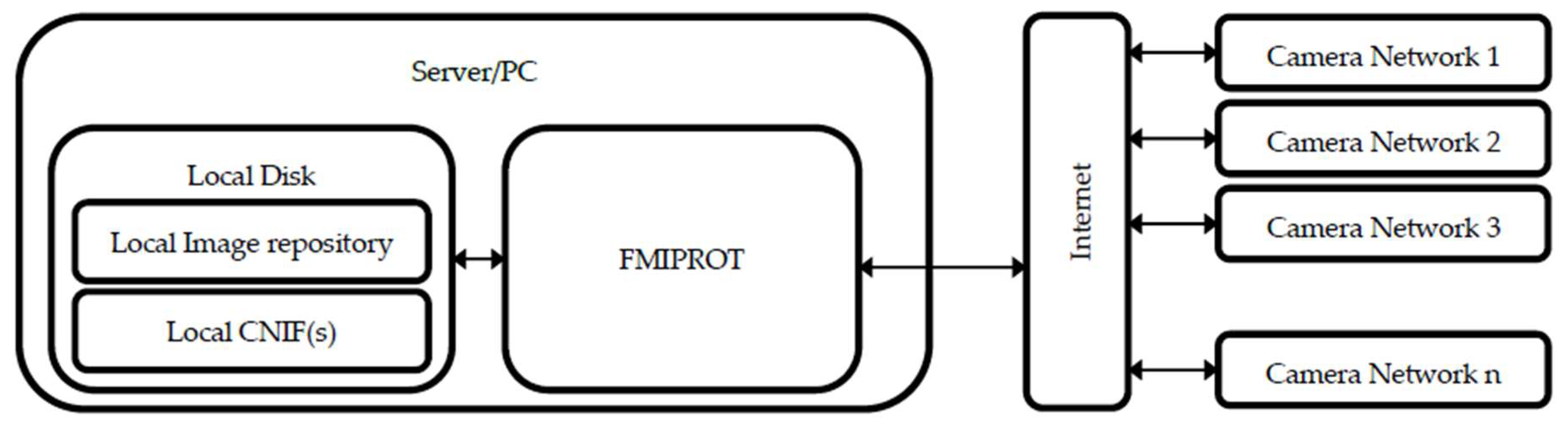

2. System Architecture

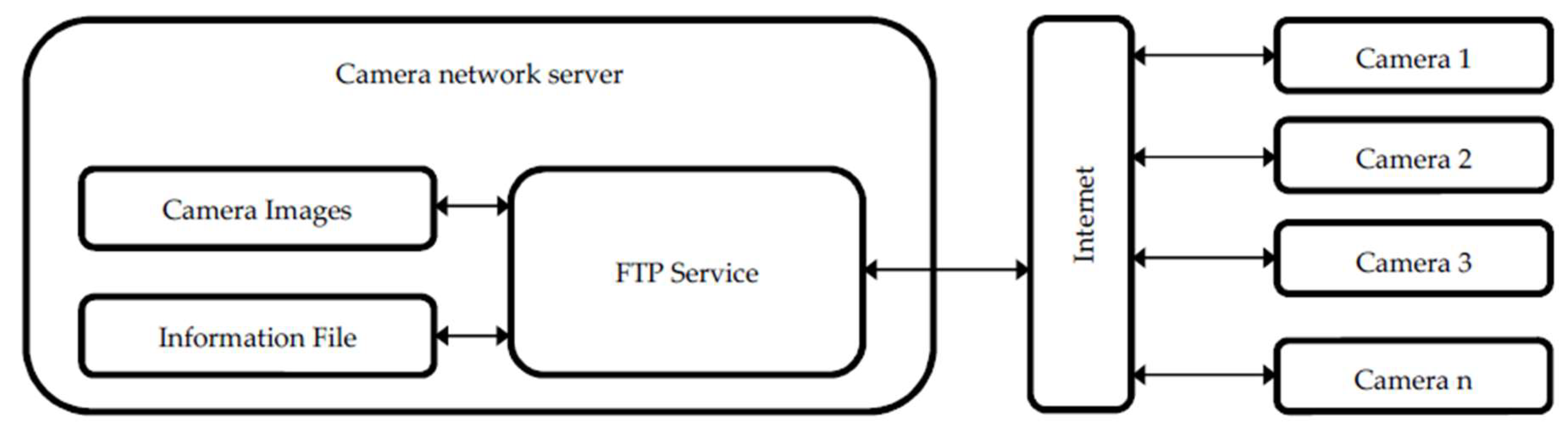

2.1. Camera Networks

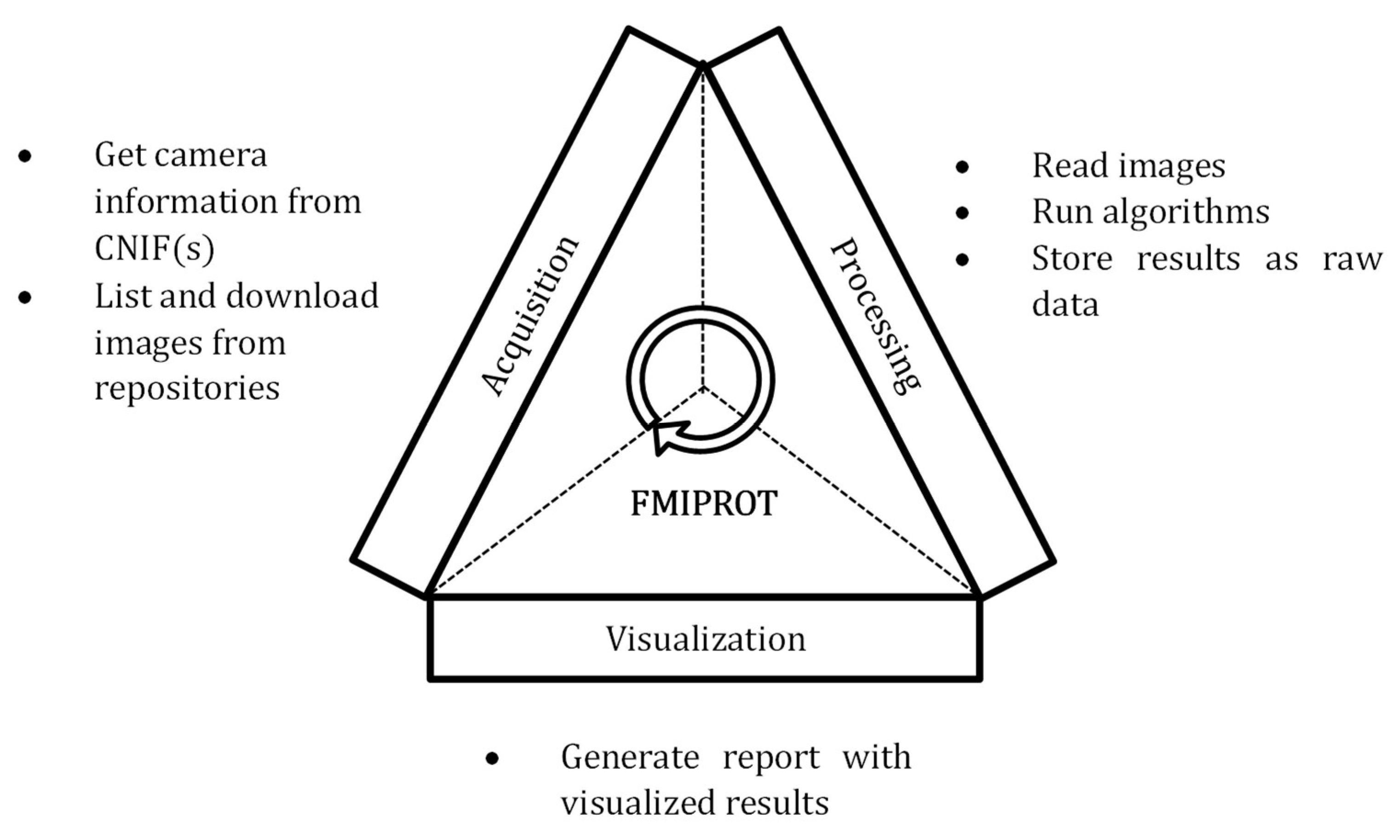

2.2. FMIPROT

2.2.1. Features

2.2.2. Software

2.2.3. Workflow

2.3. Image Processing Algorithms

2.3.1. Color Fraction Indices (Color Chromatic Coordinates)
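As a minimal sketch, color chromatic coordinates are commonly defined as each channel's share of the total brightness, for example GCC = G / (R + G + B). The function name `chromatic_coordinates`, the optional boolean region-of-interest `mask`, and the choice to average each channel over the region before taking the ratio are assumptions made for illustration; FMIPROT's own implementation may differ.

```python
import numpy as np


def chromatic_coordinates(image, mask=None):
    """Return (rcc, gcc, bcc) for an RGB array of shape (H, W, 3).

    `mask` is a hypothetical boolean array of shape (H, W) selecting the
    region of interest; when omitted, the whole image is used.
    """
    pixels = np.asarray(image, dtype=np.float64)
    # Boolean indexing with an (H, W) mask yields an (N, 3) array of pixels.
    pixels = pixels[mask] if mask is not None else pixels.reshape(-1, 3)
    r, g, b = pixels.mean(axis=0)  # mean digital number per channel
    total = r + g + b
    if total == 0:
        # A completely black region has no defined color fraction.
        return float("nan"), float("nan"), float("nan")
    return r / total, g / total, b / total
```

By construction the three fractions sum to 1, so a change in one index is always mirrored in the other two.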

2.3.2. Green-Red Vegetation Index (GRVI)
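As a minimal sketch, GRVI is commonly defined as the normalized difference of the green and red channels, GRVI = (G − R) / (G + R). The function name `grvi`, the optional boolean `mask`, and the use of region-averaged channel values are assumptions for illustration, not a description of FMIPROT's code.

```python
import numpy as np


def grvi(image, mask=None):
    """Return the Green-Red Vegetation Index for an RGB array (H, W, 3).

    `mask` is a hypothetical boolean array of shape (H, W) selecting the
    region of interest; when omitted, the whole image is used.
    """
    pixels = np.asarray(image, dtype=np.float64)
    pixels = pixels[mask] if mask is not None else pixels.reshape(-1, 3)
    r, g, _ = pixels.mean(axis=0)  # the blue channel is not used
    denom = g + r
    if denom == 0:
        # Undefined when both green and red are zero.
        return float("nan")
    return (g - r) / denom
```

The index ranges from −1 to 1 and is positive when green exceeds red.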

2.3.3. Green Excess Index (2G-rbi)
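As a minimal sketch, the green excess index is commonly written as 2G − (R + B), which is what the "2G-rbi" label abbreviates. The function name `green_excess`, the optional boolean `mask`, and the choice to apply the formula to region-averaged raw digital numbers (rather than to chromatic coordinates) are assumptions for illustration.

```python
import numpy as np


def green_excess(image, mask=None):
    """Return 2G - (R + B) for an RGB array of shape (H, W, 3).

    `mask` is a hypothetical boolean array of shape (H, W) selecting the
    region of interest; when omitted, the whole image is used.
    """
    pixels = np.asarray(image, dtype=np.float64)
    pixels = pixels[mask] if mask is not None else pixels.reshape(-1, 3)
    r, g, b = pixels.mean(axis=0)  # mean digital number per channel
    return 2.0 * g - (r + b)
```

Unlike the ratio-based indices, this value is not normalized, so it scales with overall image brightness.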

2.3.4. Custom Color Index

3. Examples of Applications

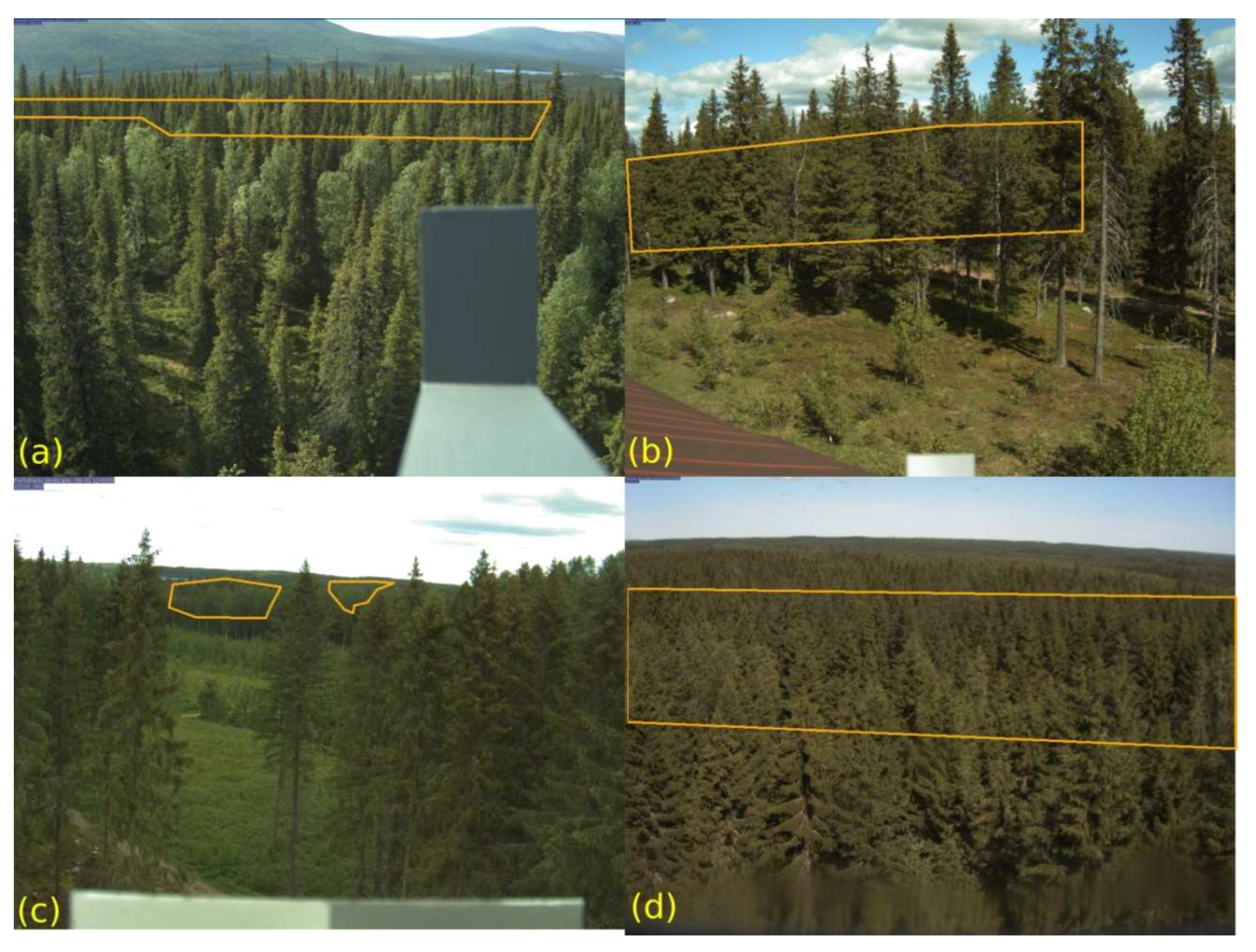

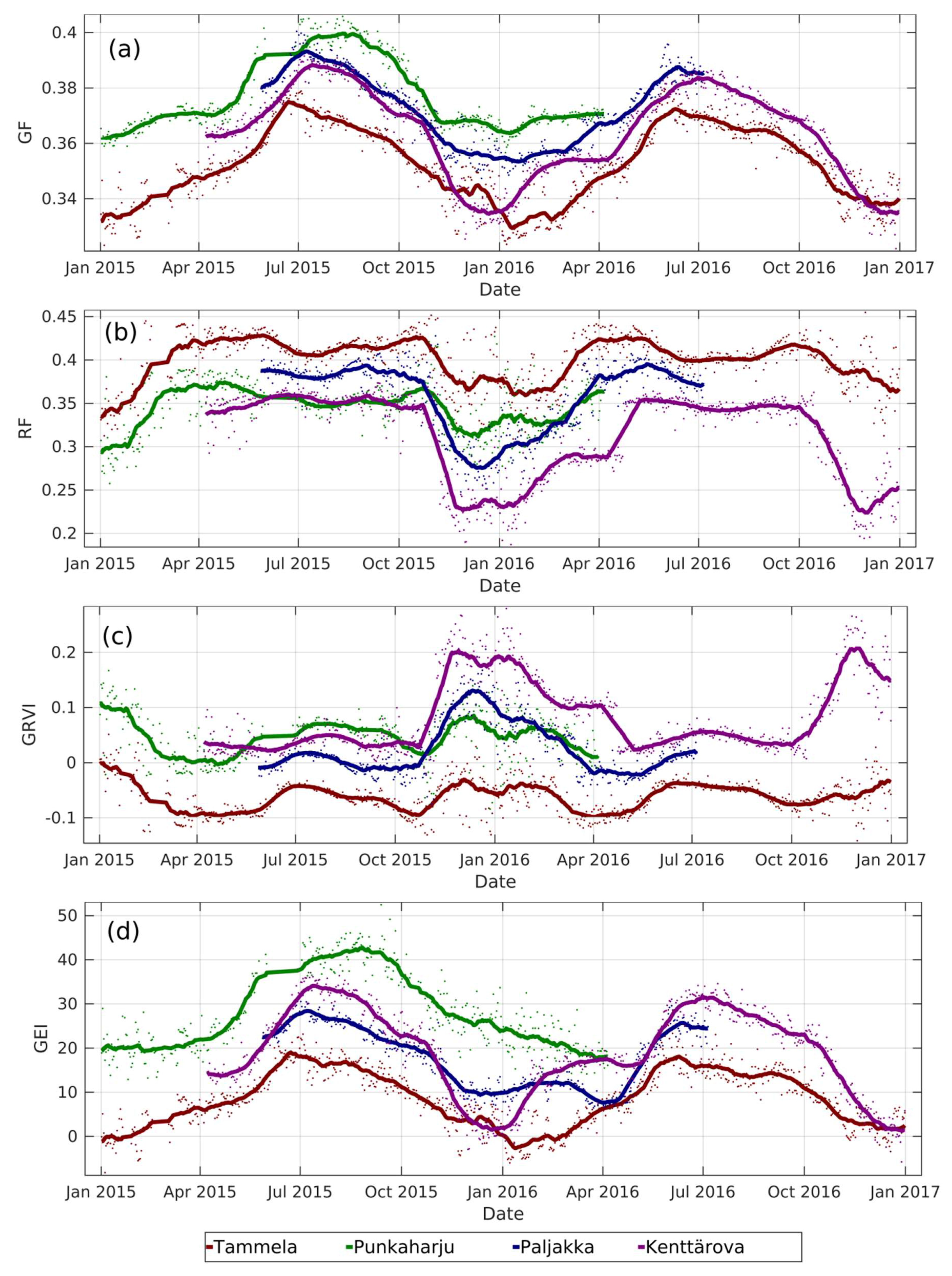

3.1. Extraction of Vegetation Indices for Same Species in Different Locations

3.2. Extraction of Vegetation Indices for Two Species in a Single Camera Field of View

3.3. GCC Changes by Vegetation Patch in Sodankylä Wetland

4. Demonstration for Proposed Operational Monitoring

5. Discussion and Further Developments

- Monitoring land cover change for environmental monitoring

- Agricultural applications, such as crop monitoring and management to help food security

- Detailed vegetation and forest monitoring

- Observation of coastal zones (marine environmental monitoring, coastal zone mapping)

- Inland water monitoring

- Snow cover monitoring

- Flood mapping and management (risk analysis, loss assessment, and disaster management during floods)

- Logistic services for municipalities and public authorities

- Traffic security (road conditions, visibility of traffic signs).

Author Contributions

Funding

Conflicts of Interest

| ROI | Preview Image | GF Plot |
|---|---|---|
| 1 |  |  |
| 2 |  |  |
| 3 |  |  |
| 4 |  |  |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Tanis, C.M.; Peltoniemi, M.; Linkosalmi, M.; Aurela, M.; Böttcher, K.; Manninen, T.; Arslan, A.N. A System for Acquisition, Processing and Visualization of Image Time Series from Multiple Camera Networks. Data 2018, 3, 23. https://doi.org/10.3390/data3030023
