A Data Set of Human Body Movements for Physical Rehabilitation Exercises

Abstract

Dataset

Dataset License

1. Summary

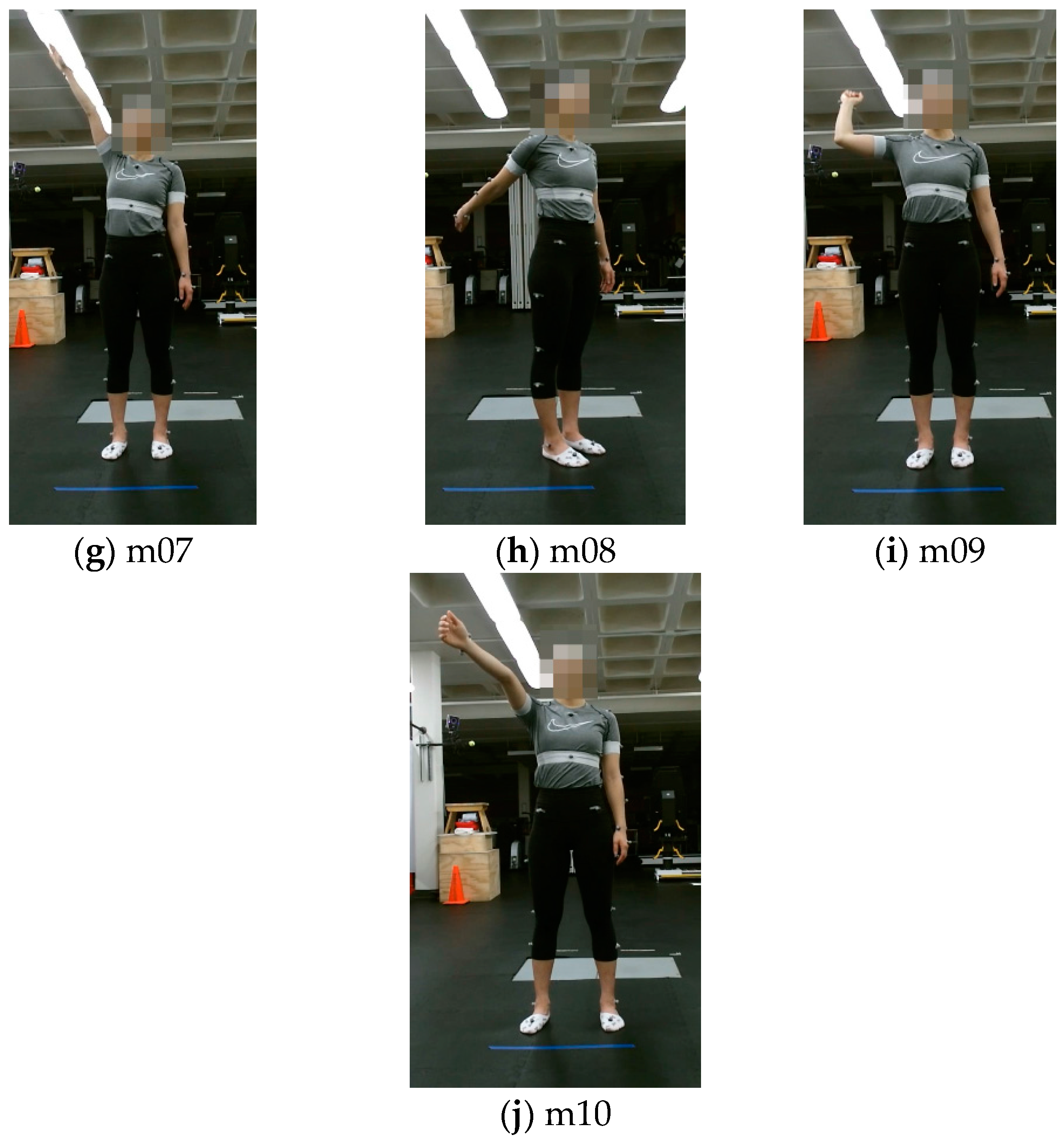

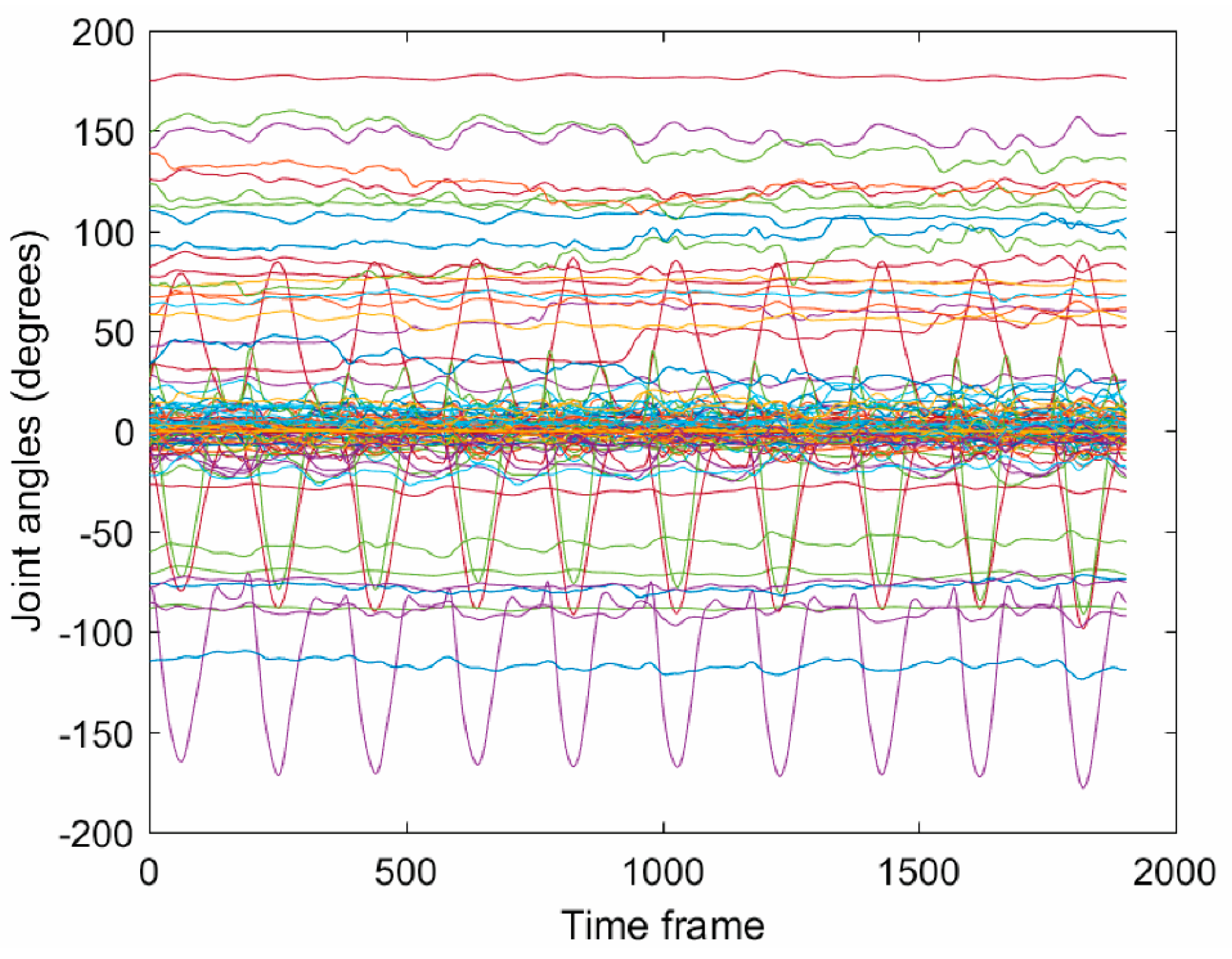

2. Data Description

3. Methods

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

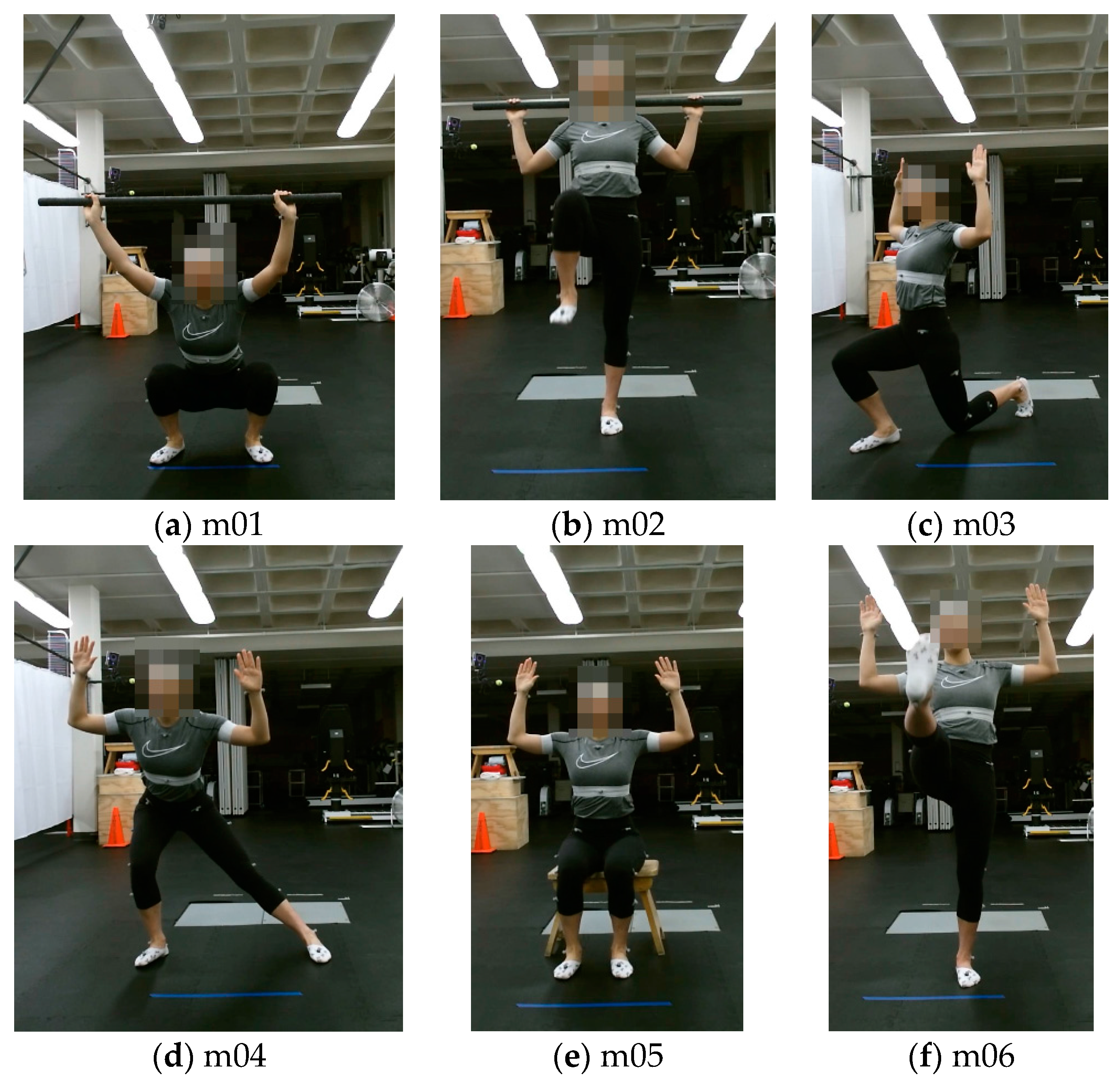

| Order | Movement | Description | Non-Optimal Movement |
|---|---|---|---|
| m01 | Deep squat | Subject bends the knees to descend the body toward the floor with the heels on the floor, the knees aligned over the feet, the upper body remains aligned in the vertical plane | Subject does not maintain upright trunk posture, unable to squat past parallel, demonstrates knee valgus collapse or trunk flexion greater than 30° |
| m02 | Hurdle step | Subject steps over the hurdle, while the hips, knees, and ankles of the standing leg remain vertical | Subject does not maintain upright trunk posture, has less than 89° hip flexion, does not maintain femur in neutral position |
| m03 | Inline lunge | Subject takes a step forward and lowers the body toward the floor to make contact with the knee behind the front foot | Subject unable to maintain upright trunk posture, has the rear knee reach the floor, or has a lateral deviation in the forward step |
| m04 | Side lunge | Subject takes a step to the side and lowers the body toward the floor | Subject displays moderate to significant knee valgus collapse, pelvis drops or rises more than 5°, trunk angle of less than 30°, thigh angle of more than 45°, center of knee is anterior to the toes |
| m05 | Sit to stand | Subject lifts the body from a chair to a standing position | Subject unable to maintain upright trunk posture, pelvis rises 5° or more, uses arms or compensatory motion to stand, unable to maintain balance or shifts weight to one leg, displays moderate to significant knee valgus collapse |
| m06 | Standing active straight leg raise | Subject raises one leg in front of the body while keeping the leg straight and the body vertical | Subject unable to maintain upright trunk posture, pelvis deviates 5° or more, more than 6° of knee flexion and less than 59° of hip flexion |
| m07 | Standing shoulder abduction | Subject raises one arm to the side by a lateral rotation, keeping the elbow and wrist straight | Subject unable to maintain upright trunk posture or head in neutral position, lifted arm does not remain in the plane of motion, less than 160° of abduction |
| m08 | Standing shoulder extension | Subject extends one arm rearward, keeping the elbow and wrist straight | Subject unable to maintain upright trunk posture or head in neutral position, lifted arm does not remain in the sagittal plane, less than 45° of extension |
| m09 | Standing shoulder internal–external rotation | Subject bends one elbow to a 90° angle, and rotates the forearm forward and backward | Subject unable to maintain upright trunk posture or head in neutral position, arm positioning less than 60° of motion in both directions |
| m10 | Standing shoulder scaption | Subject raises one arm in front of the chest until it reaches shoulder height, keeping the elbow and wrist straight | Subject unable to maintain upright trunk posture or head in neutral position, arm lift is not maintained in the correct plane, less than 90° of motion |
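Several non-optimal criteria in the table above are angular thresholds, such as trunk flexion greater than 30° for the deep squat (m01). As a minimal sketch of how such a threshold could be tested on joint positions, the code below measures the angle between a trunk vector and the vertical. Using the pelvis-to-neck vector as the trunk and +y as the vertical axis are assumptions made for illustration, not the scoring procedure of the data set; `angle_deg` and `trunk_flexion_exceeds` are hypothetical helper names.

```python
import math
from typing import Sequence

def angle_deg(u: Sequence[float], v: Sequence[float]) -> float:
    """Angle between two 3-D vectors, in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp the cosine to [-1, 1] so round-off cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))

def trunk_flexion_exceeds(pelvis, neck, limit_deg=30.0, vertical=(0.0, 1.0, 0.0)) -> bool:
    """Hypothetical test of the m01 fault 'trunk flexion greater than 30 degrees'.

    Assumes the trunk is the pelvis-to-neck vector and the vertical axis is +y;
    both are illustrative choices, not part of the data set description.
    """
    trunk = [n - p for n, p in zip(neck, pelvis)]
    return angle_deg(trunk, vertical) > limit_deg
```

Note that this measures tilt from vertical in any direction, so it does not separate forward flexion from lateral lean; a stricter check would first project the trunk vector onto the sagittal plane.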

| Joint Order | Vicon Positions | Vicon Angles | Kinect Positions and Angles |
|---|---|---|---|
| 1 | LFHD—Left head front | Head (absolute) | Waist (absolute) |
| 2 | RFHD—Right head front | Left head | Spine |
| 3 | LBHD—Left back head | Right head | Chest |
| 4 | RBHD—Right back head | Left neck | Neck |
| 5 | C7—7th cervical vertebra | Right neck | Head |
| 6 | T10—10th thoracic vertebra | Left clavicle | Head tip |
| 7 | CLAV—Clavicle | Right clavicle | Left collar |
| 8 | STRN—Sternum | Thorax (absolute) | Left upper arm |
| 9 | RBAK—Right back | Left thorax | Left forearm |
| 10 | LSHO—Left shoulder | Right thorax | Left hand |
| 11 | LUPA—Left upper arm | Pelvis (absolute) | Right collar |
| 12 | LELB—Left elbow | Left pelvis | Right upper arm |
| 13 | LFRM—Left forearm | Right pelvis | Right forearm |
| 14 | LWRA—Left wrist A | Left hip | Right hand |
| 15 | LWRB—Left wrist B | Right hip | Left upper leg |
| 16 | LFIN—Left finger | Left femur | Left lower leg |
| 17 | RSHO—Right shoulder | Right femur | Left foot |
| 18 | RUPA—Right upper arm | Left knee | Left leg toes |
| 19 | RELB—Right elbow | Right knee | Right upper leg |
| 20 | RFRM—Right forearm | Left tibia | Right lower leg |
| 21 | RWRA—Right wrist A | Right tibia | Right foot |
| 22 | RWRB—Right wrist B | Left ankle | Right leg toes |
| 23 | RFIN—Right finger | Right ankle | |
| 24 | LASI—Left ASIS | Left foot | |
| 25 | RASI—Right ASIS | Right foot | |
| 26 | LPSI—Left PSIS | Left toe | |
| 27 | RPSI—Right PSIS | Right toe | |
| 28 | LTHI—Left thigh | Left shoulder | |
| 29 | LKNE—Left knee | Right shoulder | |
| 30 | LTIB—Left tibia | Left elbow | |
| 31 | LANK—Left ankle | Right elbow | |
| 32 | LHEE—Left heel | Left radius | |
| 33 | LTOE—Left toe | Right radius | |
| 34 | RTHI—Right thigh | Left wrist | |
| 35 | RKNE—Right knee | Right wrist | |
| 36 | RTIB—Right tibia | Left upperhand | |
| 37 | RANK—Right ankle | Right upperhand | |
| 38 | RHEE—Right heel | Left hand | |
| 39 | RTOE—Right toe | Right hand | |
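The table lists 39 Vicon markers, 39 Vicon joint angles, and 22 Kinect joints. The sketch below assumes, hypothetically, that each recorded frame is a flat row of numbers holding three values per joint (x, y, z coordinates, or three angle components) in the table's joint order, which would give 117 values per Vicon frame and 66 per Kinect frame. That layout should be checked against the actual files before relying on it; `split_frame` and `kinect_joint` are hypothetical helper names.

```python
from typing import List, Sequence, Tuple

# Joint counts taken from the table above.
N_VICON = 39    # Vicon markers (positions) and joint angles
N_KINECT = 22   # Kinect joints (positions and angles)

# Kinect joint names in table order.
KINECT_JOINTS = [
    "Waist", "Spine", "Chest", "Neck", "Head", "Head tip",
    "Left collar", "Left upper arm", "Left forearm", "Left hand",
    "Right collar", "Right upper arm", "Right forearm", "Right hand",
    "Left upper leg", "Left lower leg", "Left foot", "Left leg toes",
    "Right upper leg", "Right lower leg", "Right foot", "Right leg toes",
]

def split_frame(row: Sequence[float], n_joints: int) -> List[Tuple[float, float, float]]:
    """Split one flattened frame into per-joint triples.

    Assumes three consecutive values per joint, in table order:
    [j1_a, j1_b, j1_c, j2_a, ...]. This layout is an assumption.
    """
    if len(row) != 3 * n_joints:
        raise ValueError(f"expected {3 * n_joints} values, got {len(row)}")
    return [(row[3 * j], row[3 * j + 1], row[3 * j + 2]) for j in range(n_joints)]

def kinect_joint(row: Sequence[float], name: str) -> Tuple[float, float, float]:
    """Look up one Kinect joint by its table name (hypothetical helper)."""
    return split_frame(row, N_KINECT)[KINECT_JOINTS.index(name)]
```

If the rows were comma-separated (again an assumption), a frame could be built with `[float(v) for v in line.split(",")]` and passed to `kinect_joint(frame, "Left hand")`.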

| Subject ID | Gender | Height (cm) | Weight (kg) | BMI | Dominant Side | Profession |
|---|---|---|---|---|---|---|
| s01 | Female | 169.0 | 69.4 | 24.3 | Right | Grad student |
| s02 | Male | 180.0 | 83.0 | 25.6 | Right | Grad student |
| s03 | Male | 169.5 | 64.8 | 22.6 | Right | Faculty |
| s04 | Female | 178.5 | 79.4 | 24.9 | Right | Faculty |
| s05 | Male | 185.5 | 148.6 | 43.2 | Right | Grad student |
| s06 | Female | 164.6 | 53.6 | 19.8 | Right | Grad student |
| s07 | Female | 166.1 | 53.1 | 19.2 | Left | Grad student |
| s08 | Male | 170.5 | 77.3 | 26.6 | Right | Grad student |
| s09 | Female | 164.0 | 56.0 | 20.8 | Right | Grad student |
| s10 | Male | 174.2 | 94.7 | 31.2 | Left | Grad student |
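The BMI column can be checked against the height and weight columns, since BMI is weight in kilograms divided by the square of height in metres. The sketch below recomputes it for every subject and returns the IDs whose listed value differs from the recomputed one by more than the 0.05 allowed by one-decimal rounding; for the values transcribed here it returns an empty list. `bmi` and `bmi_mismatches` are illustrative names.

```python
# (height in cm, weight in kg, BMI as listed) for each subject in the table above.
SUBJECTS = {
    "s01": (169.0, 69.4, 24.3),
    "s02": (180.0, 83.0, 25.6),
    "s03": (169.5, 64.8, 22.6),
    "s04": (178.5, 79.4, 24.9),
    "s05": (185.5, 148.6, 43.2),
    "s06": (164.6, 53.6, 19.8),
    "s07": (166.1, 53.1, 19.2),
    "s08": (170.5, 77.3, 26.6),
    "s09": (164.0, 56.0, 20.8),
    "s10": (174.2, 94.7, 31.2),
}

def bmi(height_cm: float, weight_kg: float) -> float:
    """Body mass index: weight (kg) divided by height (m) squared."""
    height_m = height_cm / 100.0
    return weight_kg / height_m ** 2

def bmi_mismatches(subjects=SUBJECTS, tol=0.05):
    """Return IDs whose listed BMI differs from the recomputed one by more than tol."""
    return [sid for sid, (h, w, listed) in subjects.items()
            if abs(bmi(h, w) - listed) > tol]
```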

| Subject ID | m02 Hurdle step | m03 Inline lunge | m04 Side lunge | m06 Standing active straight leg raise | m07 Standing shoulder abduction | m08 Standing shoulder extension | m09 Standing shoulder internal–external rotation | m10 Standing shoulder scaption |
|---|---|---|---|---|---|---|---|---|
| s01 | R | L | R | R | R | R | R | R |
| s02 | L | R | R | R | R | R | R | R |
| s03 | R | L | R | R | R | R | R | R |
| s04 | R | L | R | R | R | R | R | R |
| s05 | R | L | R | R | R | R | R | R |
| s06 | R | L | R | R | R | R | R | R |
| s07 | L | R | L | L | L | L | L | L |
| s08 | R | L | R | R | R | R | R | R |
| s09 | R | L | R | R | R | R | R | R |
| s10 | L | R | L | L | L | L | L | L |
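For scripted analysis, the side table can be transcribed into a dictionary keyed by subject ID, with one letter per movement code (m01 and m05 do not appear in the table). The example query below, using hypothetical helper names, checks whether the four standing shoulder exercises (m07 to m10) were performed on each subject's dominant side as listed in the subject table; for the rows as transcribed here, they were. This is a consistency check on the tables, not a statement of the recording protocol.

```python
# Dominant side per subject, from the subject table.
DOMINANT = {
    "s01": "R", "s02": "R", "s03": "R", "s04": "R", "s05": "R",
    "s06": "R", "s07": "L", "s08": "R", "s09": "R", "s10": "L",
}

# Column order of the side table, as movement codes.
MOVEMENTS = ["m02", "m03", "m04", "m06", "m07", "m08", "m09", "m10"]

# Side (L/R) per subject, one letter per entry in MOVEMENTS.
SIDES = {
    "s01": "R L R R R R R R",
    "s02": "L R R R R R R R",
    "s03": "R L R R R R R R",
    "s04": "R L R R R R R R",
    "s05": "R L R R R R R R",
    "s06": "R L R R R R R R",
    "s07": "L R L L L L L L",
    "s08": "R L R R R R R R",
    "s09": "R L R R R R R R",
    "s10": "L R L L L L L L",
}

def side(subject: str, movement: str) -> str:
    """Return 'L' or 'R' for a subject and movement code (hypothetical helper)."""
    return SIDES[subject].split()[MOVEMENTS.index(movement)]

def shoulder_on_dominant_side() -> bool:
    """True if m07 to m10 were performed on the dominant side by every subject."""
    shoulder = ["m07", "m08", "m09", "m10"]
    return all(side(s, m) == DOMINANT[s] for s in SIDES for m in shoulder)
```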

| Subject ID | s01 | s02 | s03 | s04 | s05 | s06 | s07 | s08 | s09 | s10 |

|---|---|---|---|---|---|---|---|---|---|---|

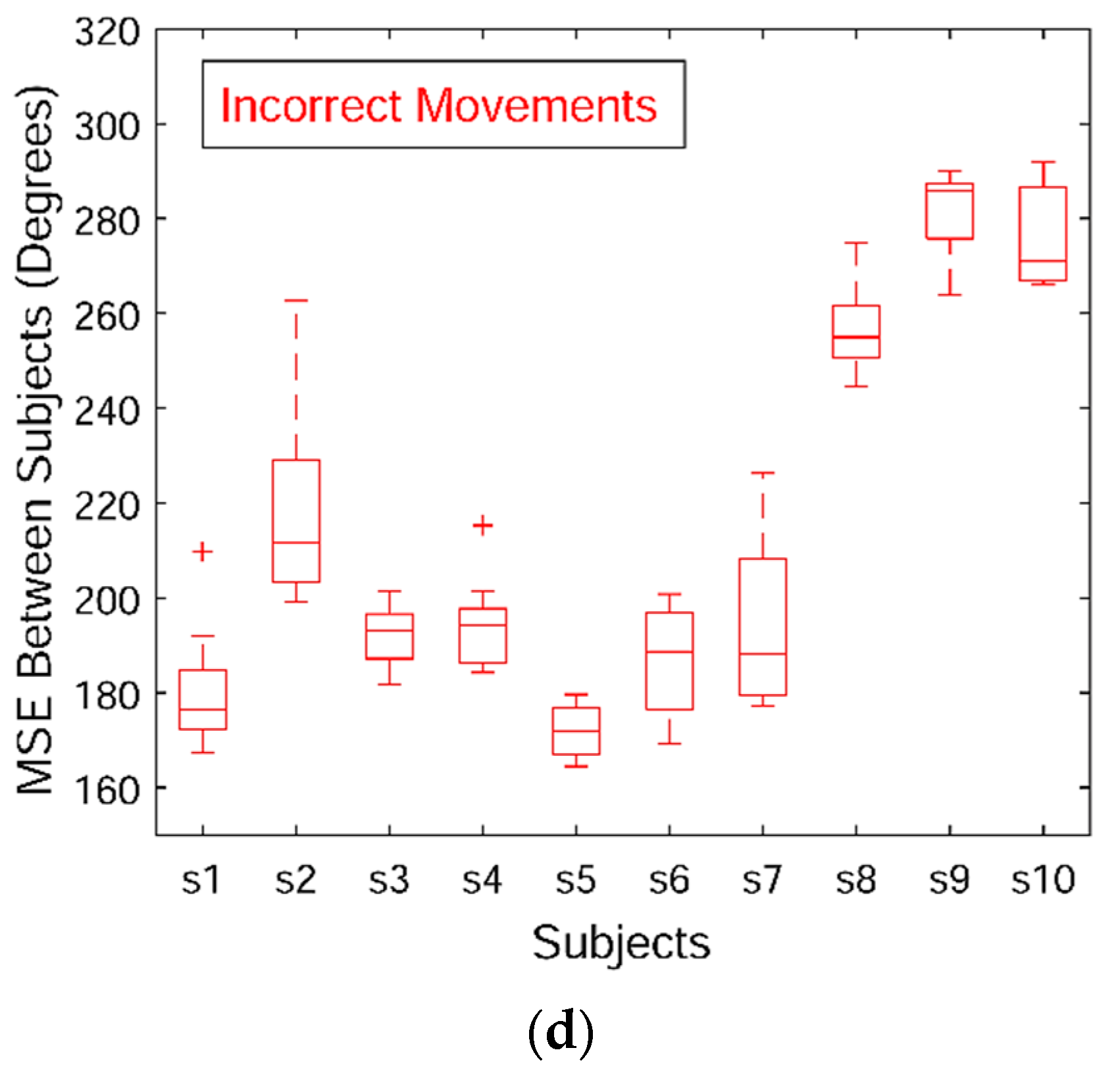

| m01-c | 164 (2) | 186 (5) | 162 (2) | 187 (5) | 163 (2) | 171 (5) | 180 (2) | 174 (4) | 277 (19) | 303 (2) |

| m01-i | 180 (13) | 219 (21) | 192 (6) | 194 (9) | 171 (6) | 187 (12) | 194 (16) | 257 (9) | 281 (10) | 276 (11) |

| m02-c | 99 (1) | NA | 143 (17) | 120 (25) | 136 (4) | 112 (3) | NA | 109 (2) | 213 (2) | NA |

| m02-i | 111 (3) | NA | 150 (17) | 131 (26) | 126 (10) | 121 (6) | NA | 121 (9) | 208 (32) | NA |

| m03-c | 161 (1) | NA | NA | 166 (3) | 175 (7) | 196 (26) | NA | 218 (22) | 163 (1) | NA |

| m03-i | 180 (4) | NA | NA | 178 (5) | 192 (16) | 211 (17) | NA | 228 (23) | 175 (7) | NA |

| m04-c | 170 (2) | 146 (14) | 154 (12) | 151 (18) | 135 (14) | 138 (3) | NA | 128 (6) | 152 (20) | NA |

| m04-i | 267 (3) | 199 (31) | 163 (26) | 147 (4) | 168 (16) | 144 (2) | NA | 138 (9) | 162 (19) | NA |

| m05-c | 309 (2) | 178 (2) | 163 (3) | 164 (2) | 169 (14) | 186 (3) | 168 (9) | 199 (29) | 215 (3) | 205 (17) |

| m05-i | 245 (5) | 189 (26) | 188 (18) | 182 (10) | 211 (19) | 205 (7) | 187 (15) | 218 (24) | 216 (27) | 210 (9) |

| m06-c | 231 (1) | 179 (21) | 206 (46) | 192 (5) | 185 (5) | 174 (2) | NA | 196 (8) | 226 (23) | NA |

| m06-i | 208 (3) | 184 (10) | 185 (4) | 187 (7) | 195 (9) | 180 (3) | NA | 205 (10) | 231 (14) | NA |

| m07-c | 85 (2) | 77 (4) | 76 (3) | 75 (3) | 82 (8) | 96 (9) | NA | 103 (16) | 78 (5) | NA |

| m07-i | 99 (7) | 104 (9) | 108 (10) | 115 (9) | 104 (10) | 155 (62) | NA | 207 (48) | 123 (15) | NA |

| m08-c | 127 (1) | 115 (5) | 105 (4) | 100 (2) | 105 (7) | 117 (1) | NA | 169 (17) | 129 (38) | NA |

| m08-i | 183 (33) | 120 (8) | 113 (6) | 108 (5) | 118 (10) | 128 (4) | NA | 123 (2) | 122 (6) | NA |

| m09-c | 223 (2) | 126 (40) | 111 (2) | 111 (3) | 115 (3) | 123 (5) | NA | 188 (25) | 145 (41) | NA |

| m09-i | 138 (8) | 120 (7) | 121 (8) | 127 (7) | 159 (39) | 142 (13) | NA | 129 (6) | 124 (12) | NA |

| m10-c | 133 (1) | 127 (9) | 129 (5) | 128 (3) | 141 (47) | 293 (16) | NA | 197 (36) | 195 (8) | NA |

| m10-i | 204 (2) | 141 (24) | 137 (3) | 140 (6) | 157 (43) | 269 (10) | NA | 199 (24) | 161 (25) | NA |
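Each populated cell in the table above holds a number followed by a second number in parentheses, and missing subject/movement combinations are marked NA. The header does not state what the two numbers are (a mean and standard deviation is one plausible reading), so the sketch below keeps them as a neutral (value, parenthesized value) pair and maps NA to NaN. It reads rows in the pipe-table layout shown here; `parse_cell` and `parse_row` are hypothetical names.

```python
import math
import re

# A number, optional whitespace, then a second number in parentheses, e.g. "164 (2)".
CELL = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*\((\d+(?:\.\d+)?)\)\s*$")

def parse_cell(text: str):
    """Parse '164 (2)' into (164.0, 2.0); 'NA' becomes (nan, nan)."""
    if text.strip() == "NA":
        return (math.nan, math.nan)
    match = CELL.match(text)
    if match is None:
        raise ValueError(f"unrecognised cell: {text!r}")
    return (float(match.group(1)), float(match.group(2)))

def parse_row(line: str):
    """Split one pipe-table row into its label (e.g. 'm02-c') and parsed cells."""
    parts = [p.strip() for p in line.strip().strip("|").split("|")]
    return parts[0], [parse_cell(p) for p in parts[1:]]
```

Keeping NA as NaN rather than dropping it preserves the column position, so the i-th parsed cell still corresponds to subject s01 + i.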

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Vakanski, A.; Jun, H.-p.; Paul, D.; Baker, R. A Data Set of Human Body Movements for Physical Rehabilitation Exercises. Data 2018, 3, 2. https://doi.org/10.3390/data3010002
