Abstract

The growing implementation of digital platforms and mobile devices in educational environments has generated the need to explore new approaches for evaluating the learning experience beyond traditional self-reports or instructor presence. In this context, the NPFC-Test dataset was created from an experimental protocol conducted at the Experiential Classroom of the Institute for the Future of Education. The dataset was built by collecting multimodal indicators such as neuronal, physiological, and facial data using a portable EEG headband, a medical-grade biometric bracelet, a high-resolution depth camera, and self-report questionnaires. The participants were exposed to a digital test lasting 20 min, composed of audiovisual stimuli and cognitive challenges, during which synchronized data from all devices were gathered. The dataset includes timestamped records related to emotional valence, arousal, and concentration, offering a valuable resource for multimodal learning analytics (MMLA). The recorded data were processed through calibration procedures, temporal alignment techniques, and emotion recognition models. It is expected that the NPFC-Test dataset will support future studies in human–computer interaction and educational data science by providing structured evidence to analyze cognitive and emotional states in learning processes. In addition, it offers a replicable framework for capturing synchronized biometric and behavioral data in controlled academic settings.

1. Introduction

Nowadays, the use of portable devices such as laptop computers, tablets, and smartphones is standard for engaging in learning activities through digital platforms and environments. Students interact with different tasks that involve the use of multimedia, previously designed by pedagogical architects to ensure an engaging and motivating learning experience [1]. However, evaluating the student experience may be challenging in the absence of an instructor during the learning session [2], since students are then unable to provide actionable feedback or receive timely interventions. Therefore, additional assessment instruments are required to better understand students’ interactions and experiences with educational technologies [3].

A field of study that seeks to address this challenge is multimodal learning analytics (MMLA), which combines the use of technological devices to collect, process, analyze, and report data on the interactions that take place within learning environments [1]. Different applications of MMLA can be found in the literature, where multiple attributes such as body postures, hand and facial gestures, spoken interactions, eye movement, neuronal activity, spatial positioning, and physical learning environment factors are captured and analyzed in different learning contexts. These attributes are recorded with devices and sensors such as depth cameras, lavalier microphones, eye-trackers, portable electroencephalograms (EEG), location trackers, and smartwatches, among others [4,5].

These sensors are capable of capturing physiological signals that serve as indirect indicators of affective and cognitive states. Recent advances in wearable technologies and sensor fusion have demonstrated the value of combining physiological and behavioral data to infer emotional states with high accuracy in real-time learning scenarios. For instance, the use of electrodermal activity (EDA), photoplethysmography (PPG), and facial expression analysis has proven effective in monitoring cognitive and affective responses during task execution, especially when paired with artificial intelligence algorithms for emotion recognition [6,7].

This paper presents a dataset that derives from an experiment conducted in the Living Lab at the Institute for the Future of Education (IFE) at Tecnologico de Monterrey. The experiment, namely, the “Neuronal, Physiological, and Facial Coding Test Experiment” (NPFC-Test), seeks to collect multimodal data through a high-resolution camera, a portable EEG headband, a medical-grade smartwatch, and self-reports in a human–computer interaction (HCI) scenario for assessing the valence, arousal, and concentration attributes present in equivalent learning contexts. The NPFC-Test experiment is part of a larger project at the IFE called the “Experiential Classroom” (IFE EC), which consists of equipping a modular learning space to conduct MMLA research on the behaviors and interactions taking place between faculty members and students in different teaching and learning contexts [8]. Several studies have emphasized the importance of evaluating user engagement and cognitive–affective responses through multimodal data in human–computer interaction research. In this context, biometric and behavioral indicators have been effectively used to analyze learners’ cognitive load and emotional states during task execution [9,10].

Understanding the relationship between multimodal attributes—such as neural, physiological, and behavioral signals—and learner behaviors including emotional responses, cognitive load, and engagement remains a key challenge in human–computer interaction research for education. In particular, prior studies have emphasized the relevance of academic emotions and their strong connection to student engagement in learning environments [11], as well as the potential of wearable technologies for detecting engagement levels in real-time [12]. Recent developments in digital education have further underscored the need for objective tools capable of evaluating these dimensions, especially in contexts where direct teacher presence is not available. In this regard, biometric sensors—such as EEG headbands, physiological wristbands, and facial recognition cameras—have been employed to capture affective and attentional states during remote or hybrid learning activities [13].

Through multimodal data fusion techniques, these signals have been processed to construct detailed profiles of student experience and inform pedagogical decisions based on real-time feedback [4]. The expansion of wearable technologies has further contributed to this field by offering low-cost and highly sensitive solutions for continuous monitoring [14]. Artificial intelligence has also played a pivotal role, allowing for real-time emotion recognition that enhances the personalization of digital learning environments [15]. EEG-based systems, in particular, have been used to detect engagement levels and adapt instructional content accordingly [16]. In this context, the dataset presented herein contributes to ongoing efforts by offering a structured and replicable framework for studying cognitive and emotional dynamics through multimodal learning analytics. The purpose of documenting and sharing the NPFC-Test experiment dataset with the scientific community is for educational and data science researchers to contribute to further developing the field of MMLA, which aims at closing the gap between technology (tools) and pedagogy (theory) by analyzing educational data traces and linking them to pedagogical constructs, behavioral indicators, and actionable feedback [17].

The contents of this paper are structured as follows: Section 2 presents a description of the NPFC-Test experimental design and the procedures involved in obtaining the dataset. Section 3 describes the records included in the NPFC-Test dataset, along with details regarding storage and access. Section 4 provides information to support the technical validation of the dataset. Finally, Section 5 provides a detailed overview of the dataset structure, including the organization, formats, and key features of the records included in the NPFC-Test dataset.

2. Methods

As previously mentioned in Section 1, the IFE EC project seeks to enable experiments that study the interactions and behaviors present in different learning scenarios where technological devices are used by faculty members and students. Therefore, the NPFC-Test was designed to establish a baseline for future experiments that involve a similar technological setting to study different learning activities and approaches. The following subsections describe the NPFC-Test experimental design and methods for obtaining the resulting dataset.

2.1. Objective

The objective of the NPFC-Test experiment is to use multimodal devices to collect, extract, and process, in an integrated manner, the neuronal, physiological, and facial coding activity of the learner, along with self-reports on valence, arousal, and concentration, throughout the execution of a time-based digital test that includes multiple audiovisual stimuli and concentration tasks.

2.2. Research Questions

The following research questions were formulated in order to illustrate potential lines of inquiry that may be supported by the dataset. It should be noted that these questions are not intended to be answered within the scope of this data descriptor. Instead, they are provided as a reference to guide future research focused on affective computing, human–computer interaction, and multimodal learning analytics.

- Is facial coding activity from the learner associated with self-reported valence levels in the NPFC-Test?

- Is physiological activity from the learner associated with self-reported arousal levels in the NPFC-Test?

- Is neuronal activity from the learner associated with self-reported concentration levels in the NPFC-Test?

- Are self-reported valence and arousal levels from the NPFC-Test associated with the emotional states inferred by various facial coding algorithms?

2.3. NPFC-Test Structure

The NPFC-Test consists of a 20-min digital test divided into 22 sections, where a learner is presented with multiple audiovisual stimuli and mathematics, concentration, and writing tasks. The test is automated, and each stimulus or task is followed by a self-report on valence, arousal, and concentration to capture the learner’s perceptions.

The first section of the test is designed to collect information related to the participant profile, while the final section is intended to capture the participant experience throughout the experiment. Table 1 briefly describes the contents of each of the NPFC-Test sections. A full description of each section is provided in Section 2.5, “NPFC-Test Details”.

Table 1.

List of NPFC-Test sections.

2.4. Multimodal Devices

The NPFC-Test experiment makes use of three multimodal devices for neuronal, physiological, and facial coding data collection. Each device, along with its functionality and data collection capabilities, is briefly described next. The devices were selected primarily for their portability, integration with mobile devices, data collection capabilities, ease of use and fitting, and low intrusiveness.

2.4.1. Muse 2 Headband

The Muse 2 headband (Figure 1) from InteraXon is a portable EEG measurement device with four electrodes designed to improve the meditation skills of its users by giving them feedback on brain activity through guided meditations in a dedicated mobile app. This device was originally intended for recreational use; therefore, additional software tools had to be procured for it to be used as an EEG sensor in the experiment [18].

Figure 1.

(a) Muse 2. (b) Empatica EmbracePlus. (c) Azure Kinect.

2.4.2. Empatica EmbracePlus Smartwatch

EmbracePlus (Figure 1) is a smartwatch designed for continuous health monitoring. It is a medical-grade wearable equipped with an optical photoplethysmogram (PPG) sensor, a ventral electrodermal activity (EDA) sensor, a digital temperature sensor, an accelerometer, and a gyroscope, allowing its users to collect biomarker data continuously for long-term clinical studies. This device was developed by Empatica, an MIT Media Lab spin-off company born in Cambridge, Massachusetts [19].

2.4.3. Azure Kinect

The Azure Kinect (Figure 1) is a continuous-wave (CW) time-of-flight (ToF) camera developed by Microsoft, which supports depth sensing modes and an RGB camera with a resolution of up to 3840 × 2160 [20]. The Azure Kinect is a successor of the Xbox Kinect; it is no longer aimed at the video game market but is instead offered as a developer kit for building AI and computer vision tools [21].

2.5. NPFC-Test Details

The NPFC-Test consists of 22 sections that involve audiovisual stimuli, multidisciplinary tasks, and self-reports. The specific details on the contents and activities developed by the participants in each test section are described next. Next to the title of each task, the maximum duration in minutes and seconds that the user was allowed to spend on each task is shown.

- Participant Profile (01:30): A brief questionnaire where the user is asked for demographic data such as age, gender, dominant hand, and highest level of education, as well as their general physical condition, with questions on average hours of sleep, consumption of medication or psychoactive substances, and history of neurological and cardiac conditions. A summary of the participant demographics is presented in Table 2 to provide an overview of the dataset composition and support its potential for generalization.

Table 2.

Demographic Summary of NPFC-Test Participants.

| Characteristic | Distribution |
|---|---|
| Number of participants | 41 |
| Average age | 23.2 years |
| Gender | Female (44%), Male (56%) |
| Dominant hand | Right-handed (100%) |
| Average sleep per night | 6–7 h (72%), more than 7 h (20%), 5–6 h (8%) |
| Reported stress (at time of test) | A little stressed (48%), None (52%) |
| Use of psychoactive substances (last 8 h) | None reported (100%) |
| Neurological conditions (history) | None reported (100%) |
| Cardiac conditions (history) | None reported (100%) |
| Eyeglass use during session | Yes (56%), No (44%) |
| Highest completed education | Bachelor degree (52%), High school (36%), Not reported (12%) |

- Initial meditation (02:25): An initial 2:10 min meditation, which serves to relax the user and establish a baseline of neutrality to achieve calibration of the user’s physiological states.

- Initial meditation self-report (00:25): A self-report for the user to indicate how they felt about their concentration, valence, and motivation regarding the task they just completed.

- Mathematics task (01:00): A mathematical skill exercise where the user is asked to calculate the numbers of a series in descending order in a maximum time of one minute, i.e., 128, 121, 114, 107, 100, ...

- Mathematics task self-report (00:20): A self-report for the user to indicate how they felt about their concentration, valence, and motivation regarding the task they just completed.

- Auditory stimulation (02:37): The anthem of the institution is played for 2 min and 37 s in order to measure the stimuli generated by the anthem in the members of the university community.

- Auditory stimulation self-report (00:20): A self-report for the user to indicate how they felt about their concentration, valence, and motivation regarding the task they just completed.

- Concentration video 1 (00:40): A 22-s video where the user is asked to focus on 4 balls bouncing at different times while they change shape and the background changes color.

- Concentration video 1 task (00:40): A questionnaire where the user is asked how many times the balls bounced and if they noticed changes in the shape of the balls and in the color of the background. It also asks if any additional figures appeared in the video.

- Concentration video 2 (00:38): A 20-s video in which the user is asked to concentrate on the balls bouncing towards the center of the screen while the central target changes shape and the background changes color.

- Concentration video 2 task (00:50): A questionnaire asking the user how many times the balls bounced and if they noticed changes in the shape of the center target and in the color of the background. It also asks if any additional figures appeared in the video.

- Concentration videos self-report (00:20): A self-report for the user to indicate how they felt about their concentration, valence, and motivation regarding the task they just completed.

- Emotional video (03:00): It is the emotional story of two children where they show all the effort they make to be able to buy a mobile phone while always accompanying each other all the way to face all the problems together.

- Emotional video self-report (00:20): A self-report for the user to indicate how they felt about their concentration, valence, and motivation regarding the task they just completed.

- Writing tasks instructions (00:10): An information box where the user is told that each of the next 3 pages contains an open-ended question about emotions and that they will have 45 s to answer each one.

- Writing task 1 (00:45): The user is asked to mention at least two things they love.

- Writing task 2 (00:45): The user is asked to mention at least two things they hate.

- Writing task 3 (00:45): The user is asked to mention at least two things that make them feel angry.

- Writing tasks self-report (00:20): A self-report for the user to indicate how they felt about their concentration, valence, and motivation regarding the task they just completed.

- Final meditation (02:00): A final 1:47 min meditation, which serves to relax the user and return to a baseline of neutrality of the user’s physiological states.

- Final meditation self-report (00:20): A self-report for the user to indicate how they felt about their concentration, valence, and motivation regarding the task they just completed.

- User experience survey (01:00): A short questionnaire where participants are asked about the user experience, e.g., the comfort of using each of the 4 devices and the format of the test.

As shown in Table 3, participants answered three self-report questions after each task. These questions were rated using a five-point Likert scale to evaluate alertness, enjoyment, and perceived cognitive demand.

Table 3.

Self-report questions about the task.

3. Experimental Protocol

The NPFC-Test experiment was executed within a controlled environment in a 10 m² closed meeting room equipped with a desk, two chairs, a high-performance desktop PC with wireless keyboard and mouse, a 32-inch curved display, and gaming headphones. The space featured a constant temperature of 25 °C and optimal lighting conditions. Participants engaged in the experiment individually, and the Living Lab (LL) team was present only at the beginning and end of each session to give them instructions and guidance. The invitation to participate in the NPFC-Test experiment was shared through multiple institutional channels, ensuring accessibility to interested individuals from different academic backgrounds and departments and resulting in a convenience sample. Each experimental session lasted 30 min: a total of 21 min were dedicated to the interaction of participants with the NPFC-Test, and the remaining time was used for informational and logistical purposes. The following experimental protocol was followed for each participant:

3.1. Informed Consent Letter

Upon arrival at the experimental site, an informed consent letter was provided to the participant by the LL team. The document included each step of the procedure and technical specifications of the multimodal devices involved, with particular emphasis on safeguarding the well-being of each participant. It explicitly stated that no health risks or hazards were associated with the experiment. Furthermore, the participant was informed that all collected data would be depersonalized to guarantee confidentiality and privacy throughout the research process. The informed consent letter was duly signed by all individuals participating in the experiment.

3.2. Device Equipment

Before the experiment began, each multimodal device was paired to a mobile tablet, or connected to a high performance desktop PC, ready to begin with data collection. The Azure Kinect camera had been previously positioned above the computer screen and in front of the seating area assigned to the participant. Once a participant had signed the informed consent letter, the LL team proceeded to install the multimodal devices in the following order and manner.

- EmbracePlus: The smartwatch was placed on the non-dominant hand of the participant and behind the wrist ulna, according to the recommendations by Empatica.

- Muse 2: Before the headband was installed on the participant, the forehead and the back of the ears were cleaned by the LL team using a saline solution to ensure proper connectivity with the electrodes. Once the headband had been correctly positioned, a connectivity check was performed using the data collection software, and the data recording process was initiated.

- Gaming Headphones: The headphones were carefully placed on the participant’s head, ensuring that the Muse 2 headband remained in the correct position and kept good connectivity in the data collection software.

3.3. NPFC-Test

Once the LL team had made sure that the participant was ready to begin the experiment, they explained the operation and controls of the NPFC-Test and left the meeting room for the total duration of the test. To know when the participant had successfully completed the experiment, the LL team programmed an automatic notification through the test’s web page.

3.4. Closing Activities

Once the participant completed the experiment, the LL team returned to the meeting room, helped the participant remove all devices, stopped data collection, and answered any questions that the participant might have. It is important to note that if a participant required prescription glasses, the LL team asked the participant to remove the glasses before fitting the Muse 2 headband and the gaming headphones, and then assisted in putting the glasses back on before the experiment began.

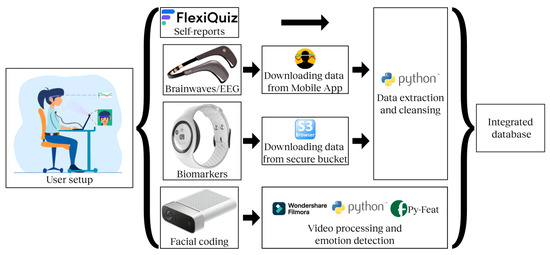

4. Dataset Processes

This section provides an overview of the NPFC-Test experiment data processes, including data collection, extraction, processing, and fusion. Figure 2 depicts a summary of the data processing pipeline.

Figure 2.

Data processing pipeline.

4.1. Data Collection

The following describes the configuration for each of the devices and software applications used for data collection in the NPFC-Test experiment. Before starting the digital test, each participant was assigned a unique ID, with which they were identified in each of the files for each device, later integrated into a single dataset.

- FlexiQuiz: The digital test, hosted on the web platform FlexiQuiz.com, was started by entering the unique ID assigned to the participant. Once this was carried out, the Muse 2 and Empatica EmbracePlus devices were placed on the user, ensuring proper synchronization and proper camera configuration for the Azure Kinect. Once ready, the participant started with the test. FlexiQuiz stored information on the time spent in each of the sections, as well as the answers selected in the self-reports.

- Muse 2: The Muse 2 headband was connected via Bluetooth to a mobile application, Mind Monitor [22]. This app allowed the recording of brainwave activity raw data and absolute band powers in a csv file, in addition to providing a real-time visualization of the measurements being taken. The app was configured to make a continuous measurement of data. This means that the device made approximately 260 measurements per second.

- EmbracePlus: The Empatica EmbracePlus bracelet was synchronized with a mobile app developed by Empatica, called Care Lab. This app, when properly synchronized with the device, sends all data to the cloud, where they can be accessed through an Amazon Secure Bucket. The data are stored in avro files, each containing a maximum of 15 min of information. Depending on where the session started within a 15-min cycle, a single participant generated up to 3 avro files.

- Azure Kinect: For the video recording with the Azure Kinect camera, the parameters were set to a duration of 1380 s (23 min) and a quality of 720 pixels, and the depth setting and the IMU mode of the camera were deactivated. The frame rate was left at its default value of 30 frames per second. These settings were chosen to reduce the size of the video and the subsequent computational processing. The videos were recorded with the Developer Kit (DK) Recorder, a command-line utility that records data from the Sensor SDK to a file.
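The "up to 3 avro files" figure for the EmbracePlus follows from simple arithmetic, sketched below. The sketch assumes that files are cut on fixed 15-min cycle boundaries and, purely for illustration, that the wearable recording lasts as long as the 23-min video; the function name `count_avro_files` and both parameters are hypothetical.

```python
def count_avro_files(start_offset_s: int, duration_s: int, cycle_s: int = 900) -> int:
    """Count the fixed-length cycles (one file each) touched by a recording.

    start_offset_s: seconds already elapsed in the current cycle at recording start.
    duration_s:     recording length in whole seconds.
    cycle_s:        cycle length; 900 s = 15 min (assumed file boundary).
    """
    if duration_s <= 0:
        return 0
    first_cycle = start_offset_s // cycle_s
    # The last recorded second is start + duration - 1.
    last_cycle = (start_offset_s + duration_s - 1) // cycle_s
    return last_cycle - first_cycle + 1


# A 1380 s recording that starts exactly on a cycle boundary spans 2 files.
print(count_avro_files(0, 1380))    # 2
# The same recording starting 10 min into a cycle spans 3 files.
print(count_avro_files(600, 1380))  # 3
```

Under these assumptions, a session never needs more than 3 files, which is consistent with the upper bound reported above.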

4.2. Data Extraction

The following describes the procedures conducted for data extraction, recognizing that each device used in the NPFC-Test experiment collects, manages, and saves information in different structures and formats.

- FlexiQuiz: The platform generated xlsx files containing the start and end times of the NPFC-Test for each participant, as well as the duration of each task. These files were later used to process the video recordings.

- Muse 2: The Mind Monitor app, where the Muse 2 headband was paired and where the recording was carried out, generated csv files containing the participants’ brainwave activity and timestamps for the duration of the NPFC-Test. Once the recording was finished, the csv files were sent to a Dropbox folder for extraction and further processing.

- EmbracePlus: The avro files generated by the EmbracePlus bracelet, which included the physiological activity and corresponding timestamps of the participants, were downloaded in a zip file via the Amazon Secure Bucket connected to the Care Lab monitoring platform.

- Azure Kinect: The video recordings of the participants, captured using the Azure Kinect camera, were stored on the computer utilized for the NPFC-Test. Subsequently, the recordings were transferred to a designated folder containing a Python script, where they were edited, processed, and analyzed for the purpose of emotion recognition. To enhance the efficiency of processing and emotion detection, as well as to ensure the manageability of the data, a sampling rate of one frame per second (i.e., one out of every 30 frames) was applied.
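The frame sampling described for the Azure Kinect recordings can be sketched as follows. This is a minimal illustration of keeping one frame out of every 30, not the authors' script; the function name `sampled_frame_indices` and its parameters are hypothetical.

```python
def sampled_frame_indices(total_frames: int, fps: int = 30,
                          samples_per_second: int = 1) -> list[int]:
    """Return the indices of the frames kept when downsampling a video.

    With fps=30 and samples_per_second=1, every 30th frame is kept,
    starting from frame 0.
    """
    step = fps // samples_per_second
    return list(range(0, total_frames, step))


# Three seconds of video at 30 fps keeps frames 0, 30, and 60.
print(sampled_frame_indices(total_frames=90))  # [0, 30, 60]
```

For a full 1380 s recording at 30 frames per second, this reduces 41,400 frames to 1380 frames for emotion detection.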

4.3. Data Processing

The following describes the procedures conducted for video and tabular data processing. All tabular data were processed using Python 3.11.

4.3.1. FlexiQuiz

The data included in the xlsx files generated by FlexiQuiz did not require additional processing; therefore, the files were simply merged into a single FlexiQuiz database.

4.3.2. EmbracePlus

The avro files generated by the EmbracePlus bracelet were accessed by means of the avro Python library, which includes various modules for reading and processing data using dictionaries. Additional Python libraries, such as pandas, numpy, and biosppy, were also employed for processing the physiological data corresponding to the participants.

Each avro file contained a dictionary of dictionaries with information on the different biomarkers that were recorded. Each biomarker had a different sampling frequency and different data granularity. Thus, a description on the procedures followed for each biomarker considered in this study is provided next.

- Temperature: The sampling frequency of this biomarker is 0.999755859375 Hz, that is, approximately one sample per second. Because the study uses a one-second granularity, the only transformation required was to check for null values and, once cleaned, add the data to the dataframe.

- timestampStart → Timestamp: start of the temperature in the file.

- samplingFrequency → (float) Sampling frequency (Hz) of the sensor.

- values → Array of temperature values.

- Electrodermal Activity: This sensor measures the changes in skin conductivity produced by increases in sweat gland activity, which result from changes in sympathetic nervous system activity. The sampling frequency for this biomarker is 3.9990363121032715 Hz, that is, approximately 4 samples per second. Validation showed that the signal contained no null values. To match the one-second granularity of the study, the raw data were aggregated per second using the arithmetic mean, yielding 1 sample per second. The result was then added to the dataframe containing the temperature data, ensuring the same number of records per biometric. Each record has the keys dict_keys([‘timestampStart’, ‘samplingFrequency’, ‘values’]).

- timestampStart → Timestamp: start of the EDA in the file.

- samplingFrequency → (float) Sampling frequency (Hz) of the sensor.

- values → Array of electrodermal activity (µS) values.

- Blood Volume Pulse (BVP): The BVP reflects the volume of blood that passes through the tissue and is measured with a photoplethysmography (PPG) sensor. This component measures changes in blood volume in the arteries and capillaries that correspond to changes in heart rate and blood flow (Jones, 2018). Blood volume pulse is a popular method for monitoring the relative changes in peripheral blood flow, heart rate, and heart rate variability (Peper, Shaffer, Lin, ). It detects heart beats by measuring the volume of blood passing the sensor in either red or infrared light, and both heart rate and heart rate variability (HRV) can be calculated from it. Each record has the keys dict_keys([‘timestampStart’, ‘samplingFrequency’, ‘values’]).

- timestampStart → Timestamp: start of the BVP in the file.

- samplingFrequency → (float) Sampling frequency (Hz) of the BVP.

- values → Array of light absorption (nW) values.
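The per-second averaging described for the electrodermal activity signal can be sketched as follows. This is a dependency-free illustration rather than the authors' pandas code: it assumes that `timestampStart` is expressed in microseconds since the Unix epoch, and the function name `per_second_mean` and the sample values are hypothetical.

```python
from collections import defaultdict
from statistics import fmean


def per_second_mean(record: dict) -> dict[int, float]:
    """Average a uniformly sampled signal into one value per whole second."""
    start_s = record["timestampStart"] / 1_000_000  # assumed unit: microseconds
    fs = record["samplingFrequency"]
    buckets: dict[int, list[float]] = defaultdict(list)
    for i, value in enumerate(record["values"]):
        # Reconstruct each sample's time from its index, then floor to the second.
        second = int(start_s + i / fs)
        buckets[second].append(value)
    return {second: fmean(vals) for second, vals in sorted(buckets.items())}


# Hypothetical EDA record with the three keys listed above.
eda = {
    "timestampStart": 1_700_000_000_000_000,
    "samplingFrequency": 3.9990363121032715,
    "values": [0.10, 0.12, 0.14, 0.16, 0.20, 0.22, 0.24, 0.26],
}
eda_per_second = per_second_mean(eda)
print(len(eda_per_second))  # 2 (eight samples at ~4 Hz cover two seconds)
```

Because the sampling frequency is slightly below 4 Hz, an occasional second may contain three samples instead of four; averaging per bucket handles both cases.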

Heart rate is one of the measurements considered in the study. Because the device does not report it directly, it was calculated from the BVP signal with a Python library that takes the sampling frequency of the measurements as input, which yielded the heart rate per second. The typical output of the sensor is a signal in which each cardiac cycle is expressed as a pulse wave, and the heart rate (HR) can be extracted from it.

The Python package biosppy includes a subpackage, biosppy.signals, with methods to process common physiological signals (biosignals). Within it, the module biosppy.signals.bvp provides methods to process blood volume pulse (BVP) signals: it processes a raw BVP signal and extracts the relevant signal features using default parameters.

**Parameters:**

- signal (array) – Raw BVP signal.

- sampling_rate (int, float, optional) – Sampling frequency (Hz).

- show (bool, optional) – If True, show a summary plot.

**Returns:**

- ts (array) – Signal time axis reference (seconds).

- filtered (array) – Filtered BVP signal.

- onsets (array) – Indices of BVP pulse onsets.

- heart_rate_ts (array) – Heart rate time axis reference (seconds).

- heart_rate (array) – Instantaneous heart rate (bpm).

All the dataframes were joined using a common timestamp as the key to ensure uniform sampling rates across all datasets. This approach guarantees that each observation in the resulting dataframe corresponds to the same point in time, facilitating coherent analysis and interpretation of the data.
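To make the relationship between pulse onsets and heart rate concrete, the following sketch derives an instantaneous heart rate from onset indices. It is a simplified illustration of the idea, not the library's implementation, which also filters the signal and may smooth the result; the function name, the onset indices, and the 64 Hz sampling rate are hypothetical.

```python
def instantaneous_heart_rate(onsets: list[int], sampling_rate: float) -> list[float]:
    """Heart rate in beats per minute from consecutive pulse-onset sample indices."""
    rates = []
    for previous, current in zip(onsets, onsets[1:]):
        # Time between two consecutive pulses, in seconds.
        interval_s = (current - previous) / sampling_rate
        rates.append(60.0 / interval_s)
    return rates


# Hypothetical onsets: two pulses 1 s apart, then one 0.75 s later.
onsets = [0, 64, 128, 176]
print(instantaneous_heart_rate(onsets, sampling_rate=64.0))  # [60.0, 60.0, 80.0]
```

Each value corresponds to one inter-pulse interval, so a series with n onsets yields n − 1 heart rate estimates that can then be resampled to one value per second.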

4.3.3. Muse 2

Data preparation began by deleting all null values and then dropping the columns that were not relevant to the study: ‘Accelerometer_X’, ‘Accelerometer_Y’, ‘Accelerometer_Z’, ‘Gyro_X’, ‘Gyro_Y’, ‘Gyro_Z’, ‘HSI_TP9’, ‘HSI_AF7’, ‘HSI_AF8’, ‘HSI_TP10’, ‘Battery’, ‘Elements’.

The data, originally recorded at microsecond resolution, were then aggregated into second-level intervals using a group-by operation.

The resulting dataframe was then ready to be joined with the biometric information, using the timestamp as the key.
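The preparation steps described above can be sketched as follows with the Python standard library. This is an illustration under stated assumptions, not the authors' code: the `TimeStamp` and `Alpha_TP9` column names, the timestamp format, and the use of the mean as the aggregation function are assumptions. The sketch also drops the unused columns before filtering nulls, so that an empty value in a discarded column does not remove an otherwise valid row.

```python
from collections import defaultdict
from datetime import datetime

# Columns listed in the text as not relevant for the study.
DROP_COLUMNS = {
    "Accelerometer_X", "Accelerometer_Y", "Accelerometer_Z",
    "Gyro_X", "Gyro_Y", "Gyro_Z",
    "HSI_TP9", "HSI_AF7", "HSI_AF8", "HSI_TP10",
    "Battery", "Elements",
}


def clean_rows(rows):
    """Drop irrelevant columns, then discard rows with missing values."""
    cleaned = []
    for row in rows:
        kept = {k: v for k, v in row.items() if k not in DROP_COLUMNS}
        if any(v is None or v == "" for v in kept.values()):
            continue
        cleaned.append(kept)
    return cleaned


def aggregate_per_second(rows, time_key="TimeStamp"):
    """Group sub-second rows by whole second and average each numeric column."""
    sums = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(int)
    for row in rows:
        stamp = datetime.strptime(row[time_key], "%Y-%m-%d %H:%M:%S.%f")
        second = stamp.replace(microsecond=0)
        counts[second] += 1
        for key, value in row.items():
            if key != time_key:
                sums[second][key] += float(value)
    return [
        {time_key: sec, **{k: total / counts[sec] for k, total in sums[sec].items()}}
        for sec in sorted(counts)
    ]


# Hypothetical rows; the third one has a missing band-power value.
rows = [
    {"TimeStamp": "2023-11-06 10:15:30.250", "Alpha_TP9": "0.50", "Battery": "90", "Elements": ""},
    {"TimeStamp": "2023-11-06 10:15:30.750", "Alpha_TP9": "0.75", "Battery": "90", "Elements": ""},
    {"TimeStamp": "2023-11-06 10:15:31.100", "Alpha_TP9": "", "Battery": "90", "Elements": ""},
    {"TimeStamp": "2023-11-06 10:15:31.600", "Alpha_TP9": "0.25", "Battery": "89", "Elements": ""},
]
per_second = aggregate_per_second(clean_rows(rows))
```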

The CSV files generated using the Mind Monitor app contain the brain activity recorded from participants, along with additional information related to the operation of the Muse 2 device. These data include the following:

- Raw data from the four headband sensors, expressed in microvolts.

- Absolute band powers (Delta, Theta, Alpha, Beta, and Gamma) for each of the four sensors, expressed in decibels.

- Battery level, reported as a percentage.

- Connectivity status, encoded in binary format (connected/disconnected).

- Movement data, captured by the accelerometer and reported in units of gravity.

- Positioning data, obtained via the gyroscope, expressed in degrees per second.

4.3.4. Azure Kinect

The files generated by the Azure Kinect were first edited with Wondershare Filmora, where the videos were trimmed to remove excess footage at the beginning and end, leaving only the time during which the participants were answering the NPFC-Test. Once the videos were trimmed to the total duration of the test, they were further divided into 9 segments, each corresponding to the time the participant spent on one of the tasks. The duration of each of these segments was obtained from the FlexiQuiz database.
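As an illustration of how per-task durations translate into cut points, the sketch below accumulates nine task durations into start and end offsets relative to the trimmed video. The durations shown are hypothetical placeholders, not values from the FlexiQuiz database, and the function names are assumptions introduced for this example.

```python
def segment_bounds(durations_s):
    """Turn consecutive task durations (seconds) into (start, end) offsets."""
    bounds, start = [], 0
    for duration in durations_s:
        bounds.append((start, start + duration))
        start += duration
    return bounds


def to_hms(seconds):
    """Format an offset in seconds as HH:MM:SS."""
    return f"{seconds // 3600:02d}:{seconds % 3600 // 60:02d}:{seconds % 60:02d}"


# Hypothetical durations for the 9 tasks of one participant.
durations = [60, 120, 150, 180, 120, 150, 180, 150, 150]
bounds = segment_bounds(durations)
for task, (start, end) in enumerate(bounds, start=1):
    print(f"task {task}: {to_hms(start)} to {to_hms(end)}")
```

Because each segment starts where the previous one ends, the last end offset equals the total trimmed duration, which gives a quick consistency check against the video length.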

Next, the edited video files were processed in Python using the Py-Feat library, which allowed for the analysis of participants’ faces in the video. The analysis reported the x and y positions of the facial points (landmarks) used to calculate the facial action units (AUs), a measure of how the AUs distort with facial expression, the number of the frame analyzed, and, depending on the algorithm used, indicators for each of the seven emotions detected.

The seven emotions that could be detected were anger, disgust, fear, happiness, sadness, surprise, and neutrality. For this experiment, two different algorithms available within Py-Feat were used for emotion detection: “resmasknet”, which uses a residual masking network and returns a probability for each of the emotions, and “svm”, a support vector machine (SVM) that indicates whether each emotion is detected (1) or not detected (0).

Once the results from Py-Feat processing were obtained, a database cleaning process was performed, where the coordinates in x, y, and z, as well as the AU values, were discarded, only preserving the emotion calculation values. Similarly, in this processing step, the video frame and timestamps within each task were kept, as well as the global frame and timestamps from the entire test. This cleaning process was carried out for each result from the Py-Feat algorithms “resmasknet” and “svm”. Finally, a join was performed with the FlexiQuiz database to include a unique timestamp for each row of the database, enabling later cross-referencing across all devices.
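The cleaning step described above can be sketched for a single output row as follows. This is a minimal illustration, not the authors' pipeline: the column names (`frame`, `x_0`, `AU01`, and the emotion labels), the 30 fps frame rate, and the task start time are assumptions chosen for the example.

```python
from datetime import datetime, timedelta

# Assumed names of the emotion columns kept after cleaning.
EMOTION_COLUMNS = (
    "anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral",
)


def clean_frame(row, fps, task_start):
    """Keep frame index and emotion values; derive a second-level timestamp."""
    frame = int(row["frame"])
    seconds_into_task = frame // fps  # whole seconds elapsed within the task
    cleaned = {
        "frame": frame,
        "task_seconds": seconds_into_task,
        "timestamp": task_start + timedelta(seconds=seconds_into_task),
    }
    # Landmark coordinates and AU values are simply not copied over.
    cleaned.update({name: row[name] for name in EMOTION_COLUMNS})
    return cleaned


# Hypothetical detector output for one frame.
raw_row = {
    "frame": 95, "x_0": 412.3, "y_0": 233.9, "AU01": 0.12,
    "anger": 0.01, "disgust": 0.02, "fear": 0.03, "happiness": 0.10,
    "sadness": 0.05, "surprise": 0.03, "neutral": 0.76,
}
cleaned_row = clean_frame(raw_row, fps=30, task_start=datetime(2023, 11, 6, 10, 0, 0))
```

Deriving the timestamp from the task start time is what makes each row joinable with the second-level records of the other devices.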

4.4. Data Fusion

In this section, the integration of the multiple synchronized datasets is presented. Because FlexiQuiz stores the time spent by each participant in each section of the NPFC-Test (i.e., timestamps), this platform served as the reference point for synchronizing the data collected by the remaining devices. Each participant was identified with a unique ID, which linked every stage of the experiment to the different data being collected, so the same amount of information was available for each participant at each stage. After each day’s collection, the data from every device were processed onto the same datetime axis: all data were expressed in seconds and contained the same number of records within the 21-min duration of the experiment. With each set capturing data at consistent one-second intervals, the sets were carefully merged into a unified dataset. Once an individual dataset had been prepared for each participant, all participant datasets were combined into a single comprehensive dataset. This merging was performed chronologically, aligning data by common columns and ordering the entries by participant ID and datetime.
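The fusion logic described in this section can be sketched with plain Python dictionaries. The example assumes that every source already holds one record per participant per second, that the key columns are named `SubjectID` and `Timestamp`, and that only seconds present in all sources are kept (an inner join). These choices, the sample values, and the function name are assumptions for illustration.

```python
def fuse(*sources):
    """Inner-join lists of per-second records on (SubjectID, Timestamp)."""
    def key(record):
        return (record["SubjectID"], record["Timestamp"])

    indexed = [{key(record): record for record in source} for source in sources]
    # Keep only the keys that every source contains.
    common = set(indexed[0]).intersection(*indexed[1:])
    fused = []
    for k in sorted(common):  # orders by participant ID, then datetime
        merged = {}
        for index in indexed:
            merged.update(index[k])
        fused.append(merged)
    return fused


# Hypothetical per-second records from two devices.
eeg = [
    {"SubjectID": "IFE-EC-NPFC-T003-02", "Timestamp": "2023/11/06 10:00:01", "Alpha_TP9": 0.40},
    {"SubjectID": "IFE-EC-NPFC-T003-01", "Timestamp": "2023/11/06 10:00:00", "Alpha_TP9": 0.50},
    {"SubjectID": "IFE-EC-NPFC-T003-01", "Timestamp": "2023/11/06 10:00:01", "Alpha_TP9": 0.55},
]
physio = [
    {"SubjectID": "IFE-EC-NPFC-T003-01", "Timestamp": "2023/11/06 10:00:01", "HR": 80.0},
    {"SubjectID": "IFE-EC-NPFC-T003-02", "Timestamp": "2023/11/06 10:00:01", "HR": 72.0},
    {"SubjectID": "IFE-EC-NPFC-T003-01", "Timestamp": "2023/11/06 10:00:02", "HR": 81.0},
]
fused = fuse(eeg, physio)
```

Timestamps in the yyyy/mm/dd hh:mm:ss format sort correctly as strings, so sorting the composite key yields the participant-then-datetime order described in the text.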

5. Data Records

The dataset comprises structured log data collected during cognitive task evaluations conducted in the Experiential Classroom of the Institute for the Future of Education, Tecnológico de Monterrey. Each record corresponds to a specific participant interaction during a given test session, and the data are organized into a set of core variables described below. In addition, the NPFC-Test dataset includes a diverse range of multimodal indicators. These are structured and synchronized through a common timestamp and are described as follows:

- Timestamp (Datetime): A unique date and time identifier in the format yyyy/mm/dd hh:mm:ss. This allows for the temporal sequencing of participant interactions.

- SubjectID (String): A unique participant code formatted as IFE-EC-NPFC-T003-NN, enabling anonymous yet traceable user identification.

- TestTime (Time): Indicates the elapsed time for the overall test session, captured in the format HH:MM:SS.

- TaskNum (Number): Encodes the task type using numeric identifiers. For instance, one identifier represents demographic data collection, another corresponds to initial task calibration, and subsequent numbers denote cognitive or sensor-based tasks.

- TaskTime (Time): Captures the amount of time spent on each individual task, also recorded in HH:MM:SS format.

- Neuronal Activity (EEG): Derived from the Muse 2 headband, includes absolute band power values (Delta, Theta, Alpha, Beta, Gamma) per sensor. Data are represented in decibels and structured by second-level timestamps.

- Physiological Signals: Captured through the EmbracePlus device, these include the following:

  - Electrodermal Activity (EDA): Measured in microsiemens (µS), at 1 Hz.

  - Blood Volume Pulse (BVP): Processed to derive heart rate (beats per minute).

  - Temperature: Recorded in degrees Celsius, one sample per second.

- Facial Coding Data: Extracted using the Azure Kinect and processed with the Py-Feat library, includes action units (AUs), encoded facial movement metrics.

- Emotion Classification: Probability scores (via ResMaskNet) and binary presence indicators (via SVM) for emotions: anger, disgust, fear, happiness, sadness, surprise, and neutrality.

- Self-Report Metrics: Likert-scale responses related to valence, arousal, and concentration, obtained after each task segment.

Each modality is integrated into the final dataset by aligning its records through the common timestamp variable.
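As a usage illustration of the core variables listed above, the sketch below parses the temporal fields of one record with the Python standard library. The sample values are hypothetical, and the assumption that the NN suffix of the SubjectID consists of two digits is ours. TaskNum is left out because its specific codes are not reproduced here.

```python
import re
from datetime import datetime, timedelta

# Assumes the "NN" suffix in the SubjectID format is a two-digit number.
SUBJECT_ID = re.compile(r"IFE-EC-NPFC-T003-\d{2}")


def parse_hms(text):
    """Convert an HH:MM:SS string into a timedelta."""
    hours, minutes, seconds = (int(part) for part in text.split(":"))
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def parse_record(raw):
    """Validate the participant code and parse the temporal fields."""
    if not SUBJECT_ID.fullmatch(raw["SubjectID"]):
        raise ValueError(f"unexpected SubjectID: {raw['SubjectID']!r}")
    return {
        "Timestamp": datetime.strptime(raw["Timestamp"], "%Y/%m/%d %H:%M:%S"),
        "SubjectID": raw["SubjectID"],
        "TestTime": parse_hms(raw["TestTime"]),
        "TaskTime": parse_hms(raw["TaskTime"]),
    }


# Hypothetical record.
record = parse_record({
    "Timestamp": "2023/11/06 10:05:30",
    "SubjectID": "IFE-EC-NPFC-T003-07",
    "TestTime": "00:05:30",
    "TaskTime": "00:01:15",
})
```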

Author Contributions

Conceptualization, L.F.M.-M. and J.A.R.-R.; methodology, L.F.M.-M. and L.E.G.-F.; software, M.F.-A. and S.N.T.-R.; validation, L.F.M.-M., L.E.G.-F. and M.F.-A.; formal analysis, M.F.-A.; investigation, S.N.T.-R.; resources, J.A.R.-R.; data curation, M.F.-A.; writing—original draft preparation, L.F.M.-M.; writing—review and editing, J.A.R.-R.; visualization, M.F.-A.; supervision, J.A.R.-R.; project administration, J.A.R.-R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was funded by the Institute for the Future of Education at Tecnológico de Monterrey.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of Tecnológico de Monterrey (protocol code 2023-CEI-IFE-016, approved on 3 November 2023).

Informed Consent Statement

Written informed consent has been obtained from the participants to publish this paper, ensuring the confidentiality and responsible use of any data that could lead to indirect identification.

Data Availability Statement

The complete dataset supporting the findings of this study has been made publicly available through the Institute for the Future of Education (IFE) Data Hub and can be accessed at the following link: https://doi.org/10.57687/FK2/ZXGVV0 [23].

Acknowledgments

The authors would like to express their gratitude to the Living Lab team at the Institute for the Future of Education in Tecnológico de Monterrey. Access to technological devices through the IFE’s Experiential Classroom–Learning Lab, coupled with the team’s guidance and support, was fundamental to the completion of this work.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Correction Statement

This article has been republished with a minor correction to an author’s ORCID. This change does not affect the scientific content of the article.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| NPFC | Neuronal, physiological, and facial coding |
| EC | Experiential classroom |
| IFE | Institute for the Future of Education |
| EEG | Electroencephalogram |
| EDA | Electrodermal activity |
| BVP | Blood volume pulse |
| HRV | Heart rate variability |
| HR | Heart rate |
| PPG | Photoplethysmography |
| CSV | Comma-separated values (data format) |
| SDK | Software Development Kit |
| ToF | Time-of-flight (used in depth cameras like Azure Kinect) |
| HCI | Human–computer interaction |
| AU | Action units (facial coding measurement units) |
| SVM | Support vector machine (machine learning algorithm) |
| Py-Feat | Python Facial Expression Analysis Toolbox |
| API | Application programming interface |
| LL | Living Lab |
| ID | Identifier |
| MDPI | Multidisciplinary Digital Publishing Institute |
| DOAJ | Directory of Open Access Journals |
| MMLA | Multimodal learning analytics |

References

1. Ochoa, X. Multimodal Learning Analytics—Rationale, Process, Examples, and Direction. In Handbook of Learning Analytics, 2nd ed.; Lang, C., Wise, A.F., Siemens, G., Gašević, D., Merceron, A., Eds.; Society for Learning Analytics Research (SoLAR): Beaumont, AB, Canada, 2022; Chapter 6; pp. 54–65.

2. Rapanta, C.; Botturi, L.; Goodyear, P.; Guàrdia, L.; Koole, M. Online University Teaching During and After the Covid-19 Crisis: Refocusing Teacher Presence and Learning Activity. Postdigital Sci. Educ. 2020, 2, 923–945.

3. Morán-Mirabal, L.F.; Avarado-Uribe, J.; Ceballos, H.G. Using AI for Educational Research in Multimodal Learning Analytics. In What the AI Can Do: Knowledge Strengths, Biases and Resistances to Assume the Algorithmic Culture, 1st ed.; Cebral-Loureda, M., Rincón-Flores, E., Sanchez-Ante, G., Eds.; CRC Press: Boca Raton, FL, USA; Taylor & Francis Group: Boca Raton, FL, USA, 2023; Chapter 9; pp. 154–174.

4. Mu, S.; Cui, M.; Huang, X. Multimodal Data Fusion in Learning Analytics: A Systematic Review. Sensors 2020, 20, 6856.

5. Ouhaichi, H.; Spikol, D.; Vogel, B. Research trends in multimodal learning analytics: A systematic mapping study. Comput. Educ. Artif. Intell. 2023, 4, 100136.

6. Liu, Y.; Peng, S.; Song, T.; Zhang, Y.; Tang, Y.; Li, Z. Multi-Modal Emotion Recognition Based on Local Correlation Feature Fusion. Front. Neurosci. 2022, 16, 744737.

7. Horvers, A.; Tombeng, N.; Bosse, T.; Lazonder, A.W.; Molenaar, I. Emotion Recognition Using Wearable Sensors: A Review. Sensors 2021, 21, 7869.

8. Morán-Mirabal, L.F.; Ruiz-Ramírez, J.A.; González-Grez, A.A.; Torres-Rodríguez, S.N.; Ceballos, H. Applying the Living Lab Methodology for Evidence-Based Educational Technologies. In Proceedings of the 2025 IEEE Global Engineering Education Conference, EDUCON 2025, London, UK, 22–25 April 2025.

9. Rojas Vistorte, A.O.; Deroncele-Acosta, A.; Martín Ayala, J.L.; Barrasa, A.; López-Granero, C.; Martí-González, M. Integrating artificial intelligence to assess emotions in learning environments: A systematic literature review. Front. Psychol. 2024, 15, 1387089.

10. Lian, H.; Lu, C.; Li, S.; Zhao, Y.; Tang, C.; Zong, Y. A Survey of Deep Learning-Based Multimodal Emotion Recognition: Speech, Text, and Face. Entropy 2023, 25, 1440.

11. Pekrun, R.; Linnenbrink-Garcia, L. Academic Emotions and Student Engagement. In Handbook of Research on Student Engagement; Christenson, S.L., Reschly, A.L., Wylie, C., Eds.; Springer: Boston, MA, USA, 2012; pp. 259–282.

12. Bustos-López, M.; Cruz-Ramírez, N.; Guerra-Hernández, A.; Sánchez-Morales, L.N.; Cruz-Ramos, N.A.; Alor-Hernández, G. Wearables for Engagement Detection in Learning Environments: A Review. Biosensors 2022, 12, 509.

13. Hernández-de Menéndez, M.; Morales-Menéndez, R.; Escobar, C.A.; Arinez, J. Biometric applications in education. Int. J. Interact. Des. Manuf. 2021, 15, 365–380.

14. Hernández-Mustieles, M.A.; Lima-Carmona, Y.E.; Pacheco-Ramírez, M.A.; Mendoza-Armenta, A.A.; Romero-Gómez, J.E.; Cruz-Gómez, C.F.; Rodríguez-Alvarado, D.C.; Arceo, A. Wearable Biosensor Technology in Education: A Systematic Review. Sensors 2024, 24, 2437.

15. Yu, S.; Androsov, A.; Yan, H.; Chen, Y. Bridging Computer and Education Sciences: A Systematic Review of Automated Emotion Recognition in Online Learning Environments. Comput. Educ. 2024, 220, 105111.

16. Apicella, A.; Arpaia, P.; Frosolone, M.; Improta, G.; Moccaldi, N.; Pollastro, A. EEG-based measurement system for monitoring student engagement in learning 4.0. Sci. Rep. 2022, 12, 5857.

17. Boothe, M.; Yu, C.; Lewis, A.; Ochoa, X. Towards a Pragmatic and Theory-Driven Framework for Multimodal Collaboration Feedback. In Proceedings of the LAK22: 12th International Learning Analytics and Knowledge Conference, New York, NY, USA, 21–25 March 2022; pp. 507–513.

18. Mirabal, L.F.M.; Álvarez, L.M.M.; Ramirez, J.A.R. Muse 2 Headband Specifications (Neuronal Tracking); Report; ITESM: Monterrey, Mexico, 2024; Available online: https://hdl.handle.net/11285/685108 (accessed on 18 June 2025).

19. Morán Mirabal, L.F.; Favaroni Avila, M.; Ruiz Ramirez, J.A. Empatica Embrace Plus Specifications (Physiological Tracking); Report; ITESM: Monterrey, Mexico, 2024; Available online: https://hdl.handle.net/11285/685107 (accessed on 18 June 2025).

20. Bamji, C.S.; Mehta, S.; Thompson, B.; Elkhatib, T.; Prather, L.A.; Snow, D.; O’Connor, P.; Payne, A.D.; Fenton, J.; Akbar, M. A 0.13 μm CMOS System-on-Chip for a 512 × 424 Time-of-Flight Image Sensor with Multi-Frequency Photo-Demodulation up to 130 MHz and 2 GS/s ADC. IEEE J. Solid-State Circuits 2015, 50, 303–319.

21. Microsoft Corporation. Azure Kinect Developer Kit Documentation; Microsoft Docs, 2023; Available online: https://learn.microsoft.com/en-us/azure/kinect-dk/ (accessed on 18 June 2025).

22. Mind Monitor. Available online: https://mind-monitor.com/ (accessed on 18 June 2025).

23. Morán Mirabal, L.F.; Güemes Frese, L.E.; Favarony Avila, M.; Torres Rodríguez, S.N.; Ruiz Ramirez, J.A. NPFC-Test 23A: A Dataset for Assessing Neuronal, Physiological, and Facial Coding Attributes in a Human-Computer Interaction Learning Scenario; Tecnológico de Monterrey: Monterrey, Mexico, 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).