Midpalatal Suture CBCT Image Quantitive Characteristics Analysis Based on Machine Learning Algorithm Construction and Optimization

Abstract

1. Introduction

2. Materials and Methods

2.1. Normalized Midpalatal Suture CBCT Database of a Growing Population

2.1.1. Samples

2.1.2. CBCT Examination

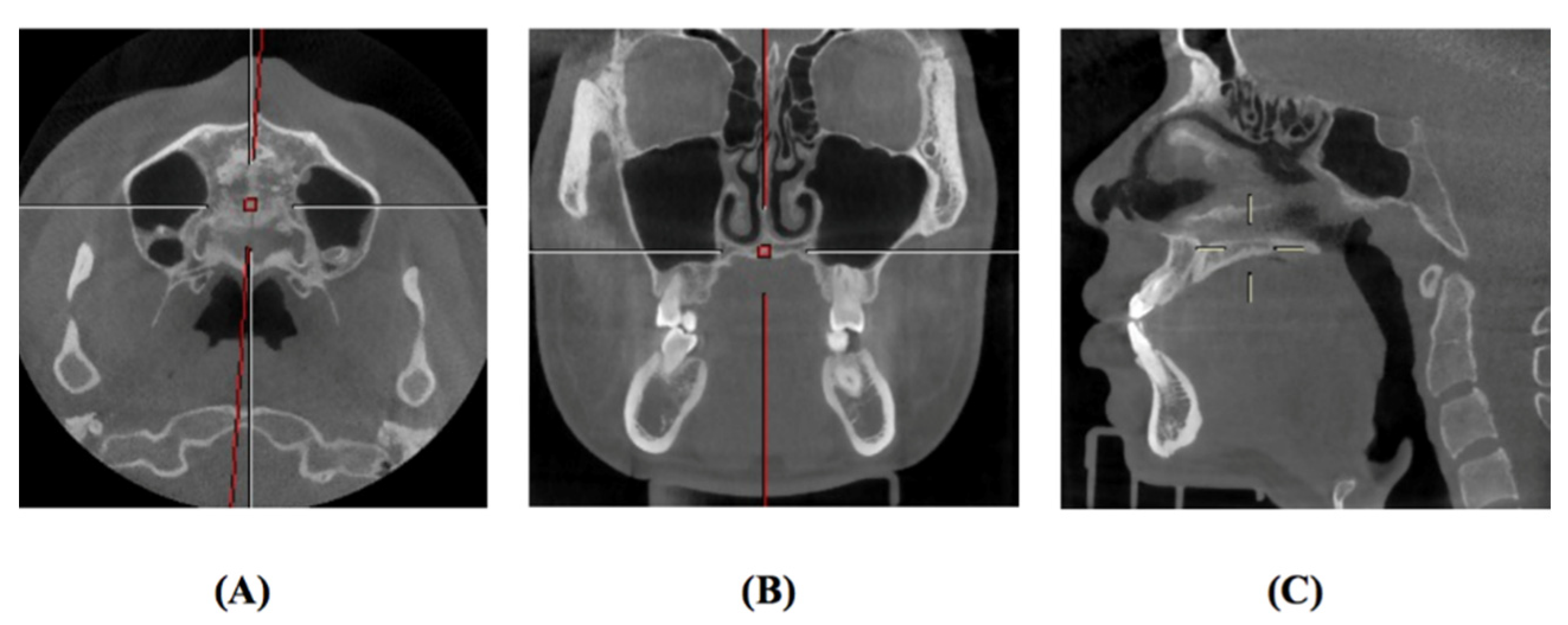

2.2. Region of Interest Labeling in Midpalatal Suture CBCT Images

2.3. Image Analysis Algorithm

2.3.1. Image Processing

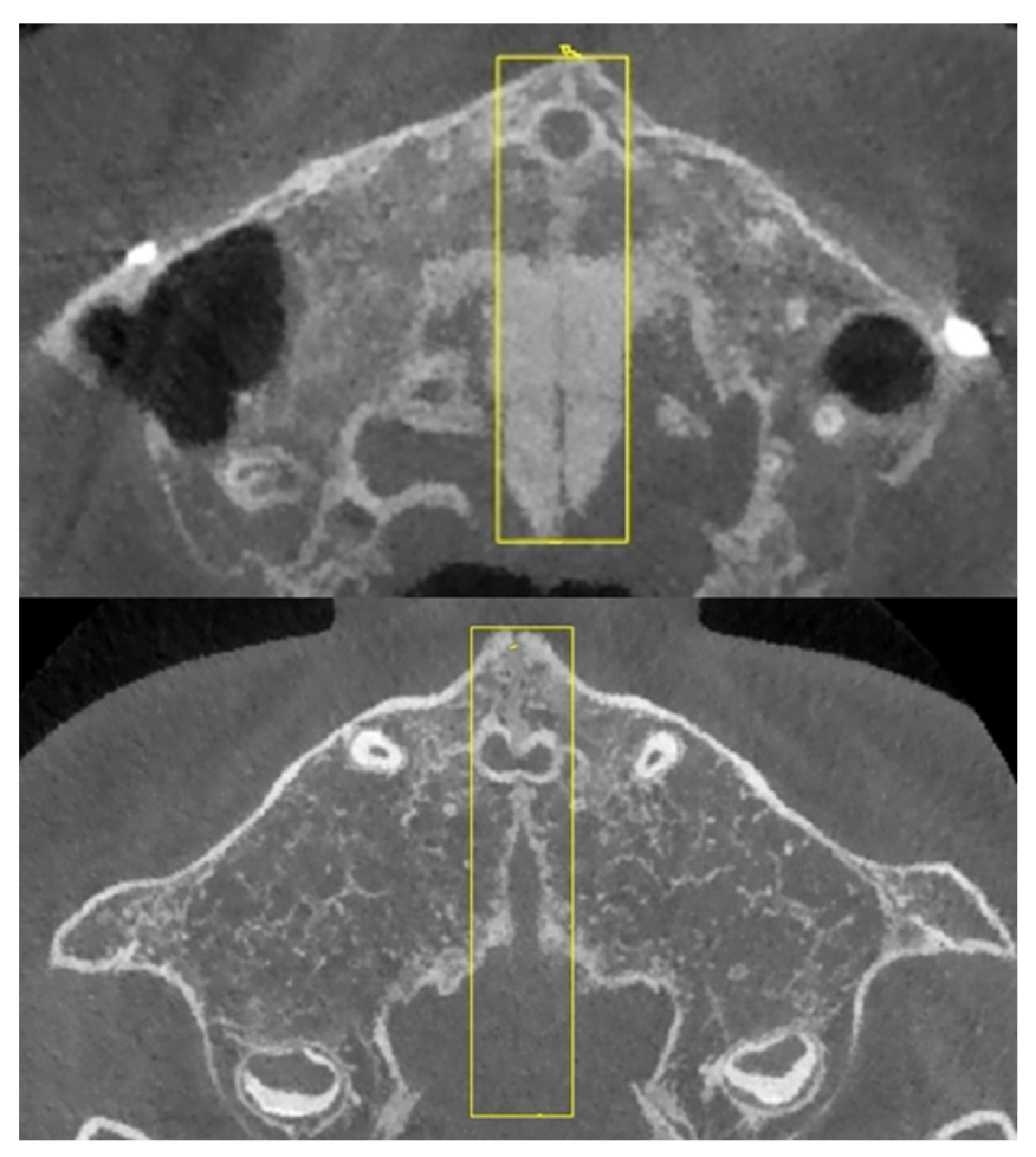

2.3.2. Image Fusion

2.3.3. Fused Image Optimization

2.4. Image Texture Feature Analysis Algorithm

2.5. Age Range Prediction from Midpalatal Suture CBCT Image Features

2.5.1. Datasets and Labels

- (1) Validation set: ten typical samples were selected from each of the five age ranges, and these 50 images were used as the validation set.

- (2) Test set: twenty typical samples were selected from each age range, and these 100 images were used as the test set.

- (3) Training set: the remaining 856 samples, excluding those in the validation and test sets, were used as the training set.
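The split above fixes a quota per age range (10 validation and 20 test cases) and sends everything else to training. The Python sketch below reproduces only that bookkeeping. Two assumptions are made: the authors' manual choice of "typical" samples cannot be reproduced in code and is replaced by seeded random sampling, and the per-label counts are obtained by summing the age and sex distribution table into the five label ranges of the evaluation table (4–10, 11–12, 13–14, 15–16 and 17–23 years). The function name `stratified_split` and the synthetic case IDs are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(samples, n_val=10, n_test=20, seed=0):
    """Split (sample_id, label) pairs into train/val/test with fixed per-label quotas.

    Assumption: seeded random sampling stands in for the authors' manual
    selection of "typical" samples.
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for sample_id, label in samples:
        by_label[label].append(sample_id)

    train, val, test = [], [], []
    for label in sorted(by_label):
        ids = list(by_label[label])
        if len(ids) < n_val + n_test:
            raise ValueError(f"label {label} has too few samples")
        rng.shuffle(ids)
        val.extend(ids[:n_val])
        test.extend(ids[n_val:n_val + n_test])
        train.extend(ids[n_val + n_test:])  # everything left over goes to training
    return train, val, test


# Per-label counts derived by summing the age/sex table into the five label ranges.
LABEL_COUNTS = {0: 195, 1: 221, 2: 207, 3: 166, 4: 217}
samples = [(f"case_{label}_{k}", label)
           for label, n in LABEL_COUNTS.items() for k in range(n)]
train, val, test = stratified_split(samples)
print(len(train), len(val), len(test))  # 856 50 100
```

Because every label contributes the same number of validation and test cases, the validation and test sets are class-balanced even though the training set is not.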

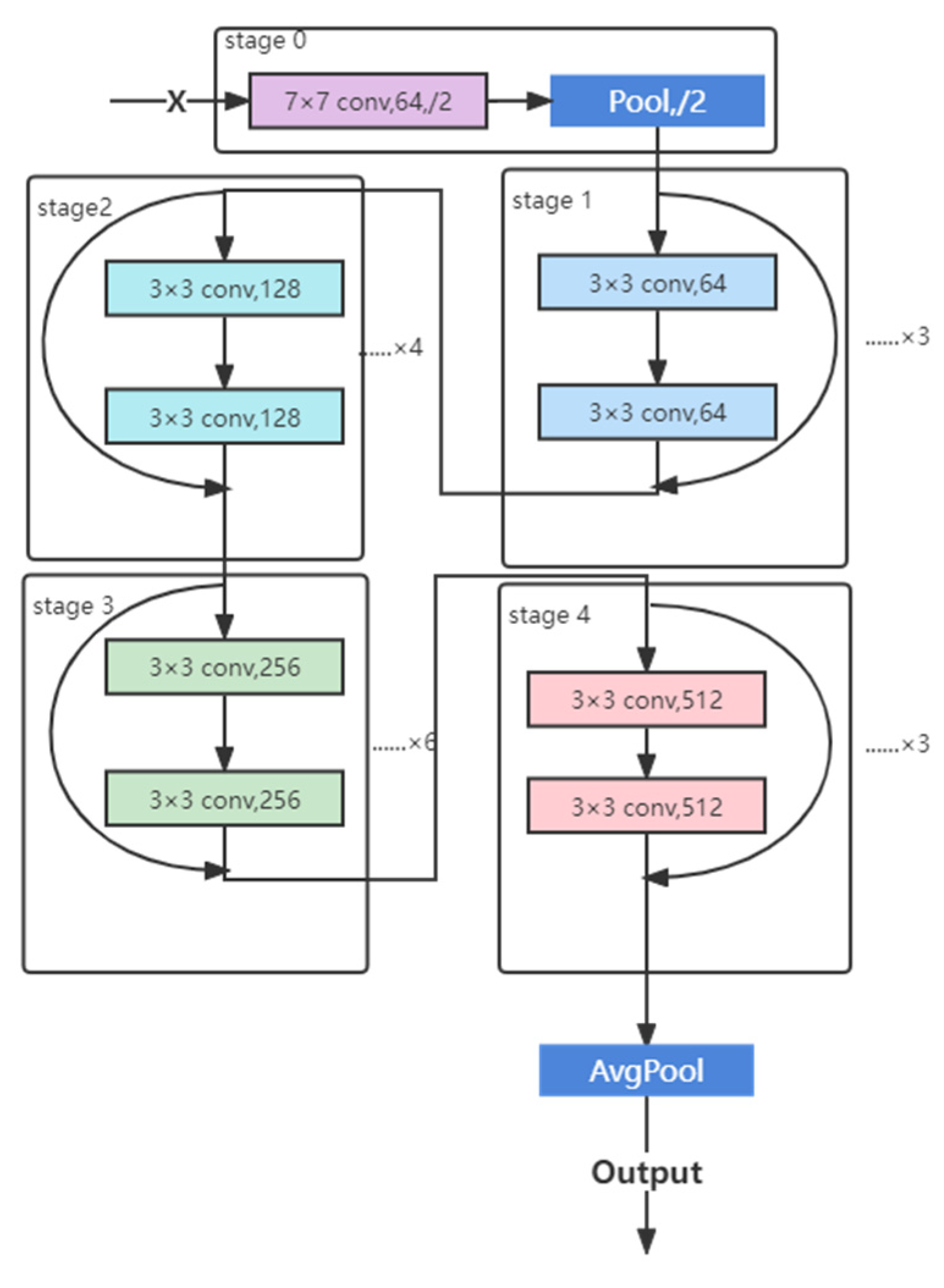

2.5.2. CNN

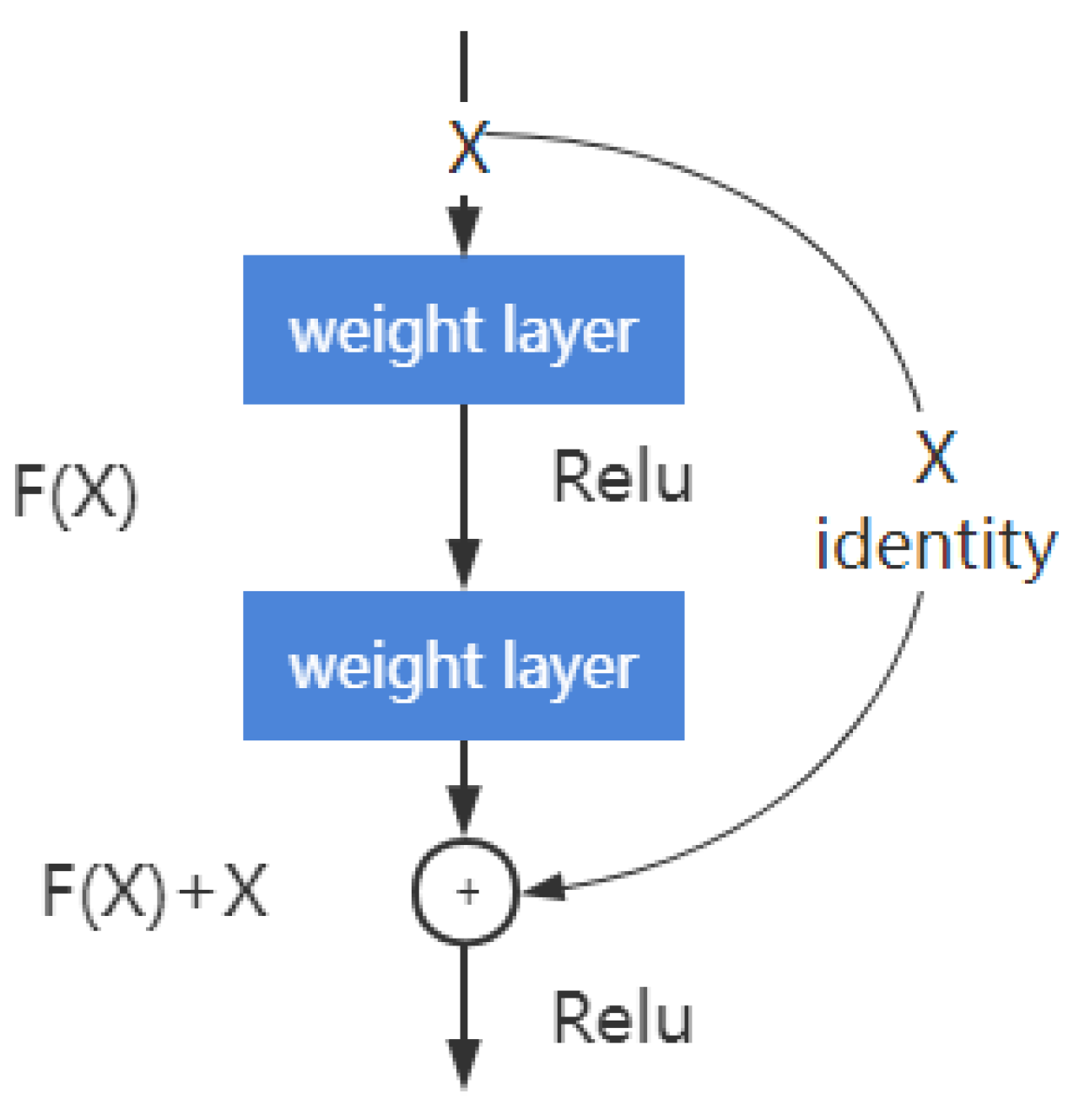

2.5.3. Deep Residual Learning

2.5.4. ResNet Structure

2.5.5. Hyperparameter Selection

2.5.6. Feature-Based Visualization

3. Results

3.1. Demographic Characteristics

3.2. Midpalatal Suture ROI Extraction and Image Fusion Algorithm

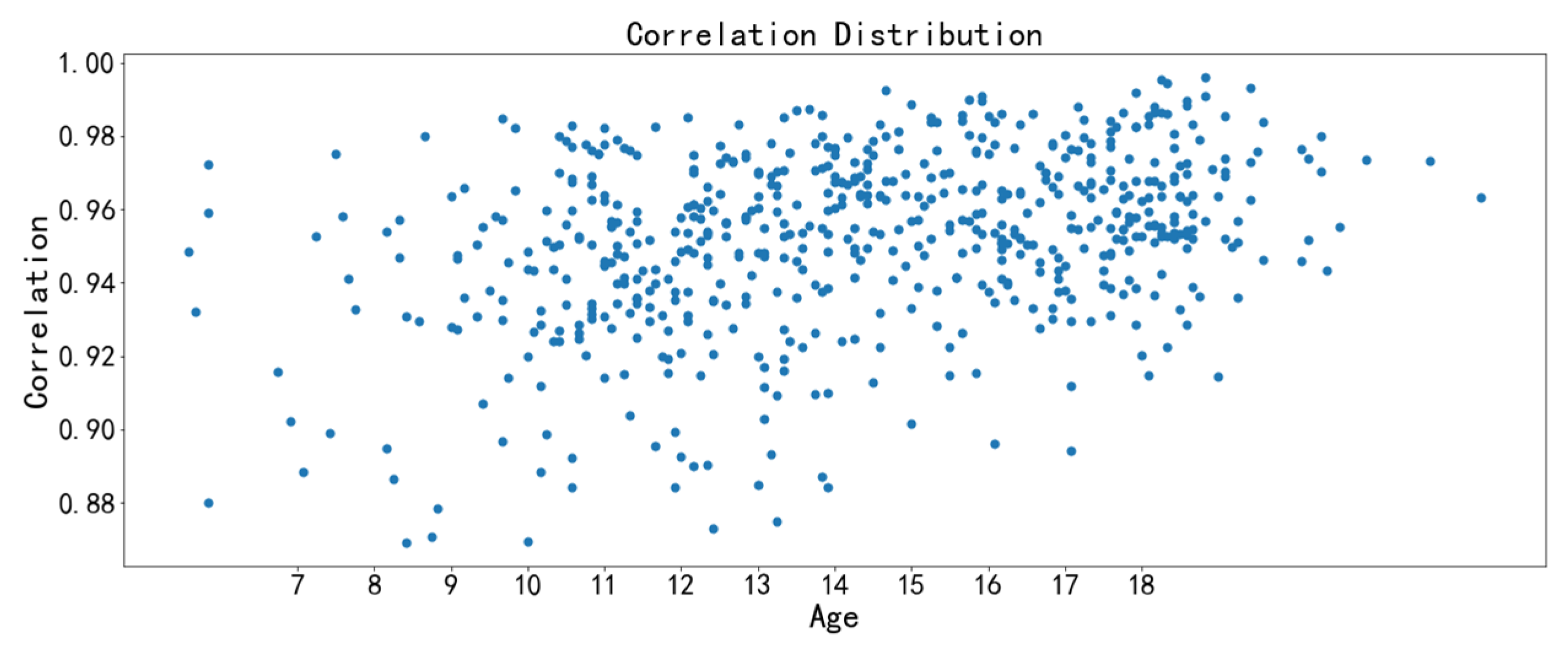

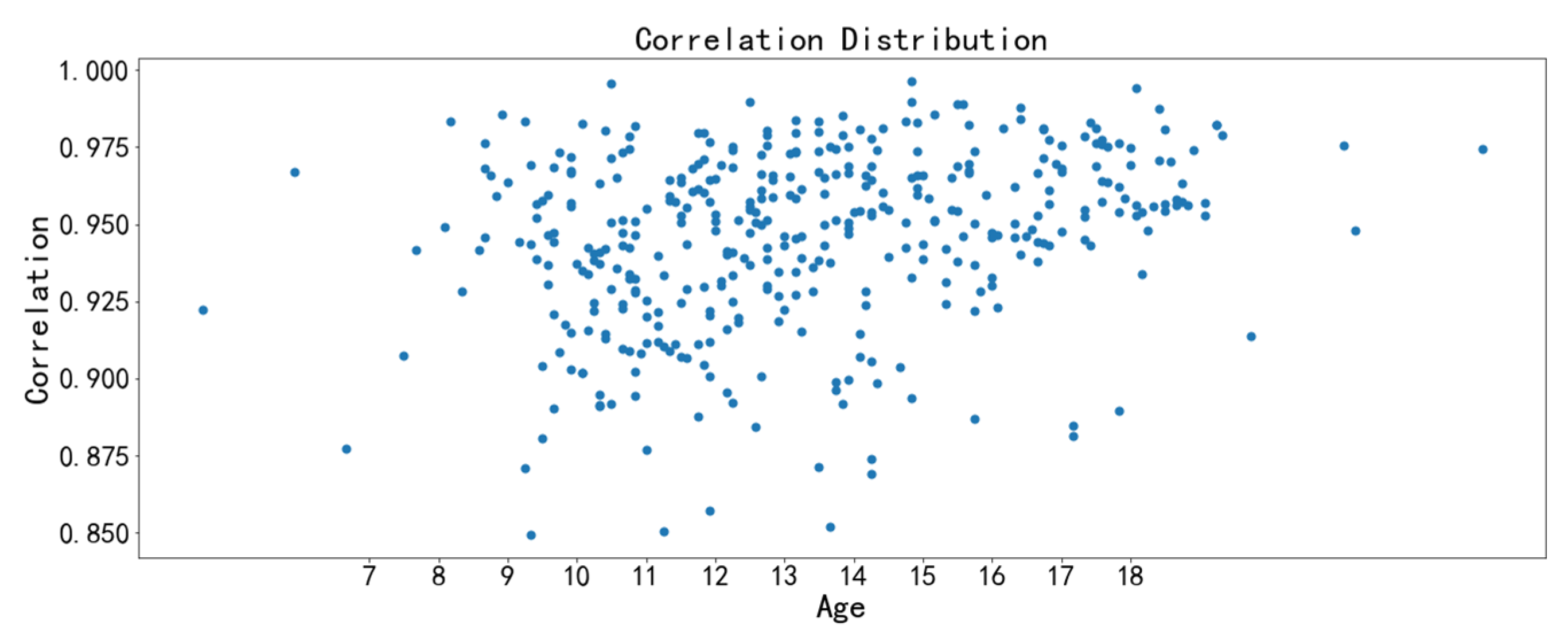

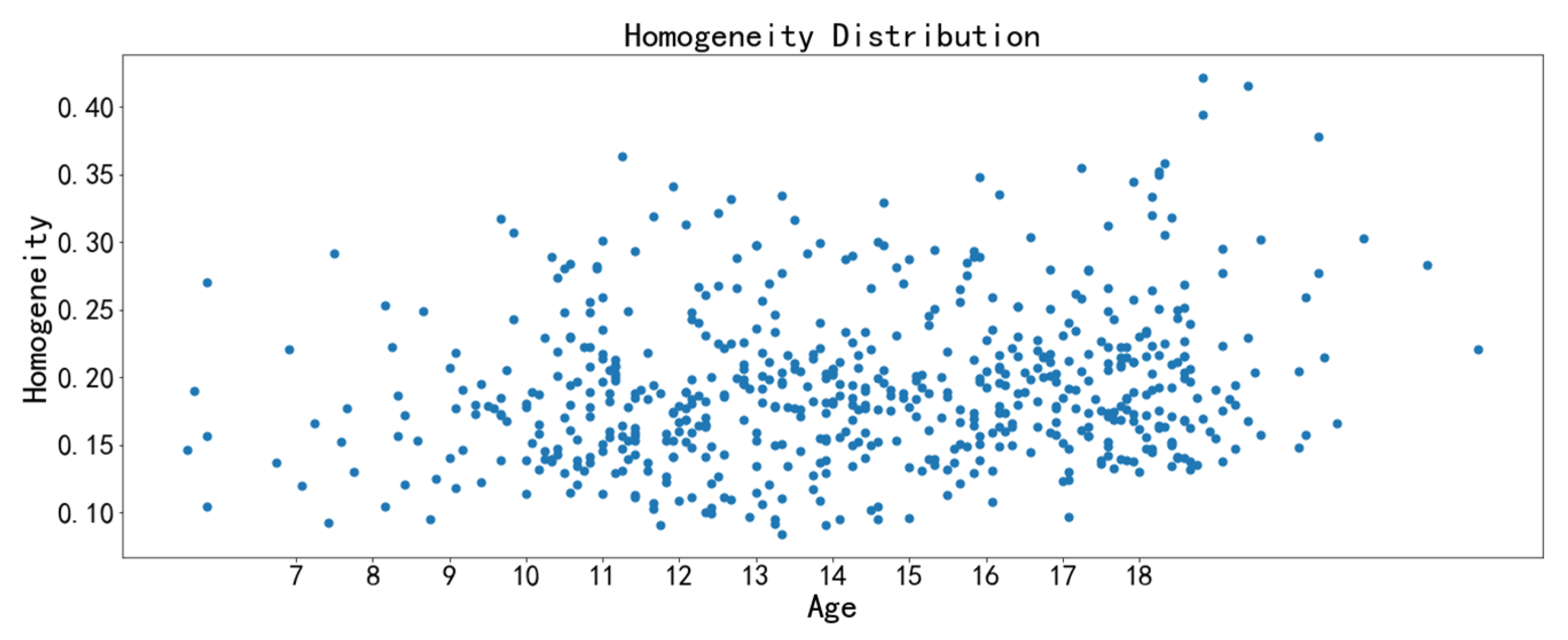

3.3. Image Feature Analysis

3.4. Age Range Prediction Model Based on Midpalatal Suture CBCT Image Features

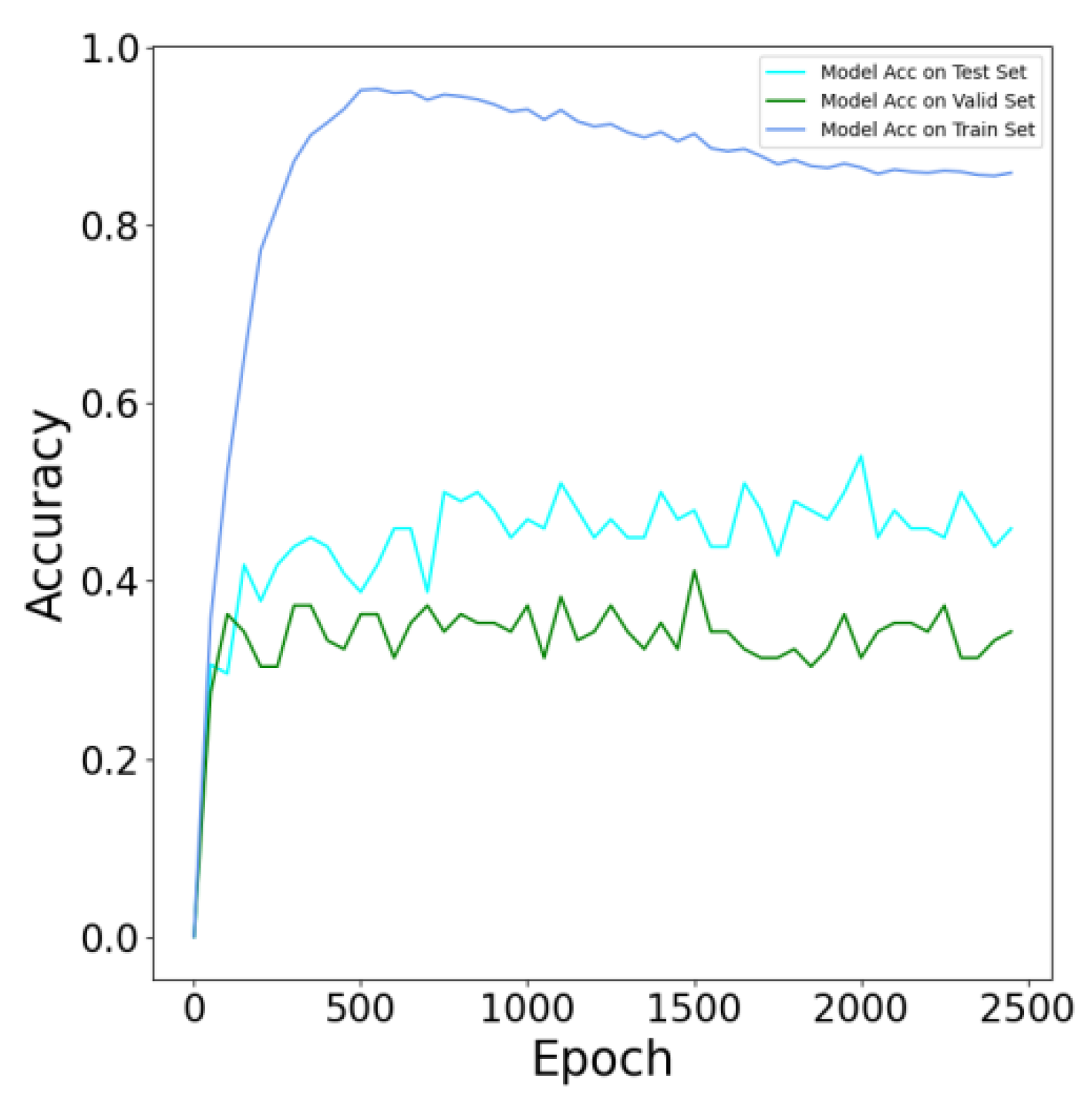

3.4.1. Model Evaluation

3.4.2. Evaluation of Model Performance

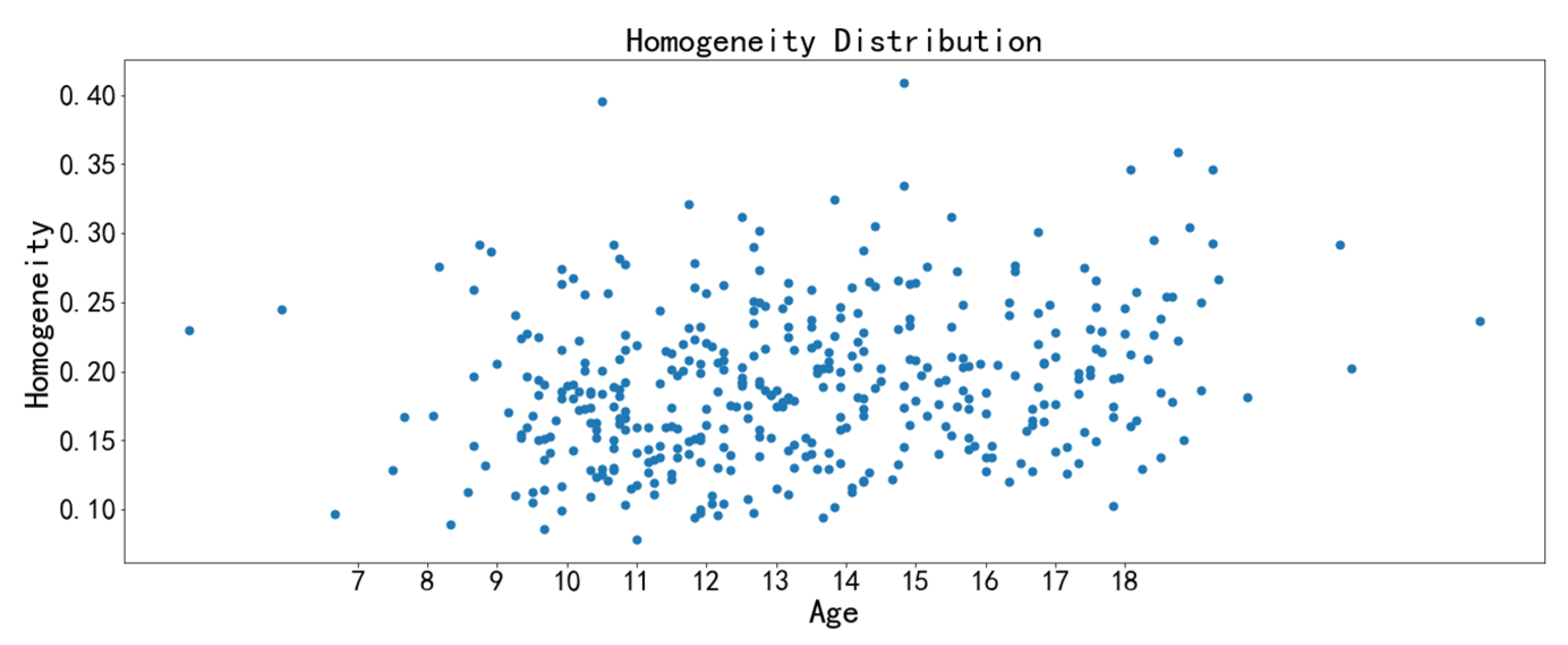

3.4.3. Feature-Based Visualization

4. Discussion

4.1. Innovative Midpalatal Suture Image Fusion Algorithm

4.2. Clinical Implications of Midpalatal Suture Image Texture Features

4.3. Clinical Significance of the Age Range Prediction Model Based on Midpalatal Suture Image Features

5. Conclusions

- (1) We designed a midpalatal suture CBCT image fusion algorithm that combines valuable image information from multiple slices to improve the accuracy of appraising midpalatal suture maturation and ossification status. The algorithm avoids the influence of CBCT scan orientation and of the convex palatal vault, so the overall view of the midpalatal suture can be shown in a single fused image.

- (2) Correlation and homogeneity are the two texture features most strongly associated with chronological age, and midpalatal suture maturation and ossification status change markedly during the period of rapid growth and development. The overall performance of the CNN model that predicts age range from midpalatal suture image features is satisfactory, especially for the youngest (4 to 10 years) and oldest (17 to 23 years) age ranges. For adolescents aged 13 to 14 years, however, prediction performance is weaker, indicating that in this age range the clinical effectiveness of rapid maxillary expansion (RME) should be appraised directly from midpalatal suture image features rather than from chronological age.

- (3) This study has some limitations. Sample representativeness and sample size should be improved by adding multicenter samples. In addition, the relationship between midpalatal suture fused image features and maxillary transverse developmental status needs to be clarified further to provide evidence for determining suitable RME treatment timing.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest



| Texture Feature | Description |
|---|---|
| Correlation | Correlation reflects the consistency of image texture. It measures the similarity of gray level co-occurrence matrix (GLCM) elements along the row or column direction. |
| Homogeneity | Homogeneity measures how much the local texture changes. A large value indicates little change between different regions of the texture, i.e., the texture is more uniform. |
| Energy | Energy is the sum of the squared elements of the GLCM. It measures the stability of gray level change in the texture and reflects the uniformity of the gray level distribution and the coarseness of the texture. A larger energy value indicates a stable texture with regular changes. |
| Contrast | Contrast reflects image clarity and the depth of the texture grooves. The deeper the grooves, the greater the contrast and the clearer the visual effect; conversely, a small contrast indicates shallow grooves and a blurred appearance. |
| Dissimilarity | Dissimilarity reflects the total amount of local gray level variation in the image. Unlike contrast, whose weights grow quadratically with the distance of a matrix element from the diagonal, dissimilarity weights grow linearly with that distance. |
| ASM (Angular Second Moment) | ASM describes the uniformity of the gray level distribution and the coarseness of the texture. If all GLCM values are close to one another, ASM is small; if the matrix elements differ greatly, ASM is large. |
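To make the six descriptors concrete, the pure-Python sketch below builds a symmetric, normalized GLCM for one pixel offset and computes each feature from it. It assumes the image has already been quantized to a small number of integer gray levels, and it follows the definitions used by scikit-image's `graycoprops`, in which energy is the square root of ASM and homogeneity weights each element by 1 / (1 + (i - j)^2). The table's description of energy as a plain sum of squares corresponds to what this convention calls ASM; whether the authors used exactly these conventions is an assumption. The helper names `glcm` and `glcm_features` are hypothetical; in practice `skimage.feature.graycomatrix` and `graycoprops` provide the same quantities.

```python
from math import sqrt


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized gray level co-occurrence matrix for one offset.

    image: 2-D list of ints in [0, levels); (dx, dy) is the neighbour offset.
    """
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count the pair in both directions (symmetric GLCM)
    total = sum(sum(row) for row in counts)
    return [[v / total for v in row] for row in counts]


def glcm_features(p):
    """The six texture features in the table, computed from a normalized GLCM p."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    asm = sum(v * v for _, _, v in cells)
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    sd_i = sqrt(sum(v * (i - mu_i) ** 2 for i, _, v in cells))
    sd_j = sqrt(sum(v * (j - mu_j) ** 2 for _, j, v in cells))
    cov = sum(v * (i - mu_i) * (j - mu_j) for i, j, v in cells)
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),     # quadratic weight
        "dissimilarity": sum(v * abs(i - j) for i, j, v in cells),  # linear weight
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "ASM": asm,
        "energy": sqrt(asm),  # scikit-image convention: energy = sqrt(ASM)
        # Correlation is undefined for a constant image; report 1.0 by convention.
        "correlation": cov / (sd_i * sd_j) if sd_i > 0 and sd_j > 0 else 1.0,
    }


# Vertical stripes: every horizontal neighbour pair differs by one gray level.
stripes = [[0, 1, 0, 1],
           [0, 1, 0, 1]]
feats = glcm_features(glcm(stripes, levels=2))
print({k: round(v, 4) for k, v in feats.items()})
# {'contrast': 1.0, 'dissimilarity': 1.0, 'homogeneity': 0.5, 'ASM': 0.5, 'energy': 0.7071, 'correlation': -1.0}
```

The striped example shows the intended behaviour: perfectly alternating gray levels give maximal dissimilarity for this level range, low homogeneity and a correlation of -1, whereas a constant patch would give ASM and homogeneity of 1 and zero contrast.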

| Hyperparameter | Value | Hyperparameter | Value |
|---|---|---|---|
| Learning Rate | 0.0001 | Decay Rate | 0.9000 |
| Decay Steps | 4000 | Weight Decay | 0.0001 |
| End Learning Rate | 0.0000 | Batch Size | 50 |
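The hyperparameter table lists an initial learning rate, a decay rate, decay steps and an end learning rate, but not the decay formula itself. The sketch below shows one plausible reading, which is an assumption rather than the authors' confirmed schedule: exponential decay, lr = lr0 * decay_rate^(step / decay_steps), floored at the end learning rate. Weight decay and batch size are not modeled here, and the function name `learning_rate` is hypothetical.

```python
def learning_rate(step, lr0=1e-4, decay_rate=0.9, decay_steps=4000,
                  end_lr=0.0, staircase=False):
    """Exponentially decayed learning rate, floored at end_lr.

    Assumed schedule: lr0 * decay_rate ** (step / decay_steps). With
    staircase=True the exponent is an integer, so the rate drops in steps.
    """
    exponent = step // decay_steps if staircase else step / decay_steps
    return max(lr0 * decay_rate ** exponent, end_lr)


for step in (0, 4000, 8000, 40000):
    print(step, f"{learning_rate(step):.3e}")
```

Under this reading the rate falls to 90% of its previous value every 4000 steps, and an end learning rate of 0.0 never actually binds, because exponential decay only approaches zero asymptotically.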

| Age Range (years) | Female | Male | Age Range (years) | Female | Male |
|---|---|---|---|---|---|
| [4, 5) | 0 | 1 | [14, 15) | 56 | 38 |
| [5, 6) | 5 | 1 | [15, 16) | 52 | 29 |
| [6, 7) | 2 | 1 | [16, 17) | 57 | 28 |
| [7, 8) | 7 | 2 | [17, 18) | 68 | 26 |
| [8, 9) | 11 | 10 | [18, 19) | 65 | 21 |
| [9, 10) | 22 | 32 | [19, 20) | 17 | 6 |
| [10, 11) | 50 | 51 | [20, 21) | 9 | 0 |
| [11, 12) | 61 | 48 | [21, 22) | 1 | 2 |
| [12, 13) | 61 | 51 | [22, 23) | 1 | 0 |
| [13, 14) | 65 | 48 | [23, 24) | 0 | 1 |
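The table reports one-year age bins, while the classifier uses five coarser age-range labels. The sketch below aggregates the bins into those labels and checks the totals. It assumes that label 0 ("4–10 years old") covers the bins [4, 5) through [10, 11) and that the other labels map to their bins in the same way; the helper names are hypothetical.

```python
# (age_lower_bound, female_count, male_count) for each one-year bin [age, age + 1)
BINS = [
    (4, 0, 1), (5, 5, 1), (6, 2, 1), (7, 7, 2), (8, 11, 10), (9, 22, 32),
    (10, 50, 51), (11, 61, 48), (12, 61, 51), (13, 65, 48), (14, 56, 38),
    (15, 52, 29), (16, 57, 28), (17, 68, 26), (18, 65, 21), (19, 17, 6),
    (20, 9, 0), (21, 1, 2), (22, 1, 0), (23, 0, 1),
]

# Assumed mapping of one-year bins (by lower bound) to the five class labels.
LABEL_RANGES = {0: (4, 10), 1: (11, 12), 2: (13, 14), 3: (15, 16), 4: (17, 23)}


def label_for(age):
    """Return the class label whose inclusive age range contains this bin."""
    for label, (lo, hi) in LABEL_RANGES.items():
        if lo <= age <= hi:
            return label
    raise ValueError(f"age {age} outside all label ranges")


def summarize(bins):
    """Total females, total males, and total cases per class label."""
    female = sum(f for _, f, _ in bins)
    male = sum(m for _, _, m in bins)
    per_label = {label: 0 for label in LABEL_RANGES}
    for age, f, m in bins:
        per_label[label_for(age)] += f + m
    return female, male, per_label


female, male, per_label = summarize(BINS)
print(female, male, female + male)  # 610 396 1006
print(per_label)                    # {0: 195, 1: 221, 2: 207, 3: 166, 4: 217}
```

The total of 1006 equals the 856 training, 50 validation and 100 test samples described in Section 2.5.1, and every label range contains well over the 30 cases needed for its 10 validation and 20 test images.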

| Label (Age Range) | AUC | Precision | Recall | F1-Score | Test Samples |
|---|---|---|---|---|---|
| 0 (4–10 years old) | 0.9106 | 0.5926 | 0.8000 | 0.6809 | 20 |
| 1 (11–12 years old) | 0.6825 | 0.4348 | 0.5000 | 0.4651 | 20 |
| 2 (13–14 years old) | 0.6581 | 0.6923 | 0.4500 | 0.5455 | 20 |
| 3 (15–16 years old) | 0.7262 | 0.6000 | 0.6000 | 0.6000 | 20 |
| 4 (17–23 years old) | 0.7887 | 0.5882 | 0.5000 | 0.5405 | 20 |
| Total test samples | | | | | 100 |
| Average AUC | 0.7532 | | | | |
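The F1-score in this table is the harmonic mean of precision and recall, and the average AUC matches the unweighted mean of the five per-class AUCs. The short Python check below recomputes both from the tabulated values. Assuming the rounded precision and recall values come from integer counts over 20 test images per class, it also back-calculates the implied true-positive and predicted-positive counts for each label. The helper names are hypothetical.

```python
# (label, AUC, precision, recall, support) copied from the evaluation table
ROWS = [
    ("0 (4-10 years)", 0.9106, 0.5926, 0.8000, 20),
    ("1 (11-12 years)", 0.6825, 0.4348, 0.5000, 20),
    ("2 (13-14 years)", 0.6581, 0.6923, 0.4500, 20),
    ("3 (15-16 years)", 0.7262, 0.6000, 0.6000, 20),
    ("4 (17-23 years)", 0.7887, 0.5882, 0.5000, 20),
]


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def implied_counts(precision, recall, support):
    """True positives and predicted-positive count implied by rounded P/R values."""
    tp = round(recall * support)
    predicted = round(tp / precision) if precision else 0
    return tp, predicted


mean_auc = sum(auc for _, auc, _, _, _ in ROWS) / len(ROWS)
for name, auc, p, r, n in ROWS:
    tp, predicted = implied_counts(p, r, n)
    print(f"{name}: F1={f1_score(p, r):.4f} TP={tp} predicted={predicted}")
print(f"mean AUC = {mean_auc:.4f}")
```

The recomputed F1-scores and mean AUC agree with the table to four decimal places, and the implied predicted-class counts sum to the 100 test images, which is a useful internal consistency check. The implied overall accuracy (57 of 100) is derived arithmetic, not a figure reported in the table.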

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, L.; Chen, Z.; Zang, L.; Sun, Z.; Wang, Q.; Yu, G. Midpalatal Suture CBCT Image Quantitive Characteristics Analysis Based on Machine Learning Algorithm Construction and Optimization. Bioengineering 2022, 9, 316. https://doi.org/10.3390/bioengineering9070316
