A Review of Brain Activity and EEG-Based Brain–Computer Interfaces for Rehabilitation Application

Abstract

1. Introduction

2. Overview of EEG and BCI

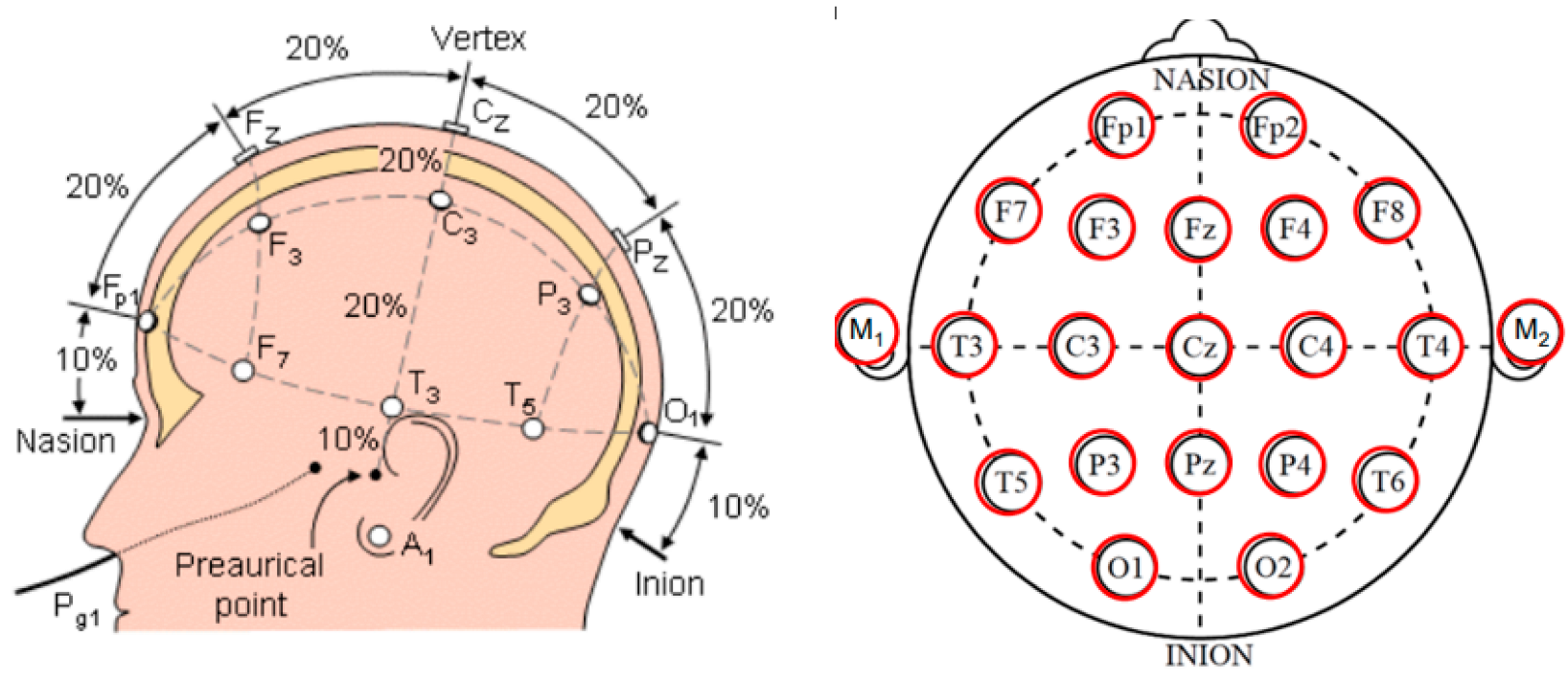

2.1. Electroencephalography (EEG)

2.2. BCI Has Different Paradigms Based on Exogenous and Endogenous EEG Signals

2.2.1. Endogenous EEG Signal

2.2.2. Exogenous Evoked Potentials, EEG Signal

3. EEG Control Strategies

3.1. EEG Signal Preparation Overview

3.2. Feature Extraction

3.3. Classification

4. Application of EEG in BCI Systems

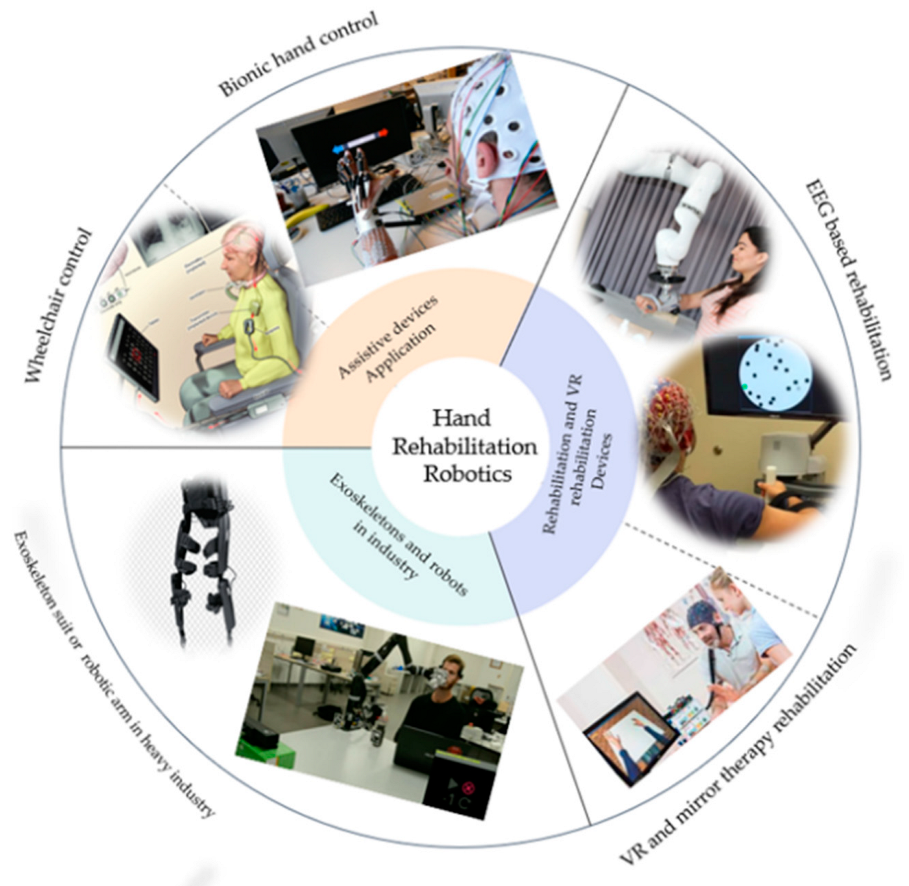

4.1. BCI-Assistive Robot Rehabilitation Application

4.2. BCI-Virtual Reality Rehabilitation Application

5. Conclusions

- The P300-BCI system is well suited to rehabilitation because of its cost-effectiveness, reliable performance, and variety of applications. Furthermore, many research groups have integrated P300 with VR technology to provide immersive rehabilitation for people with neurological diseases. MI offers a solid basis for BCI research and implementation, and combining MI-based BCI with VR systems increases the effectiveness of rehabilitation training for people with neurological diseases, particularly motor impairment. VR feedback nevertheless faces obstacles in development and implementation. For example, users may struggle to focus on task goals because the immersive virtual world can be distracting. In addition, VR equipment is not used consistently over the duration of experiments. Both factors diminish the efficacy of rehabilitation training. Researchers have tested several BCI feedback methods and VR platforms in search of a reliable approach.

- The most promising paradigm is a novel multiplatform MI-VR prototype that improves attention by providing multimodal feedback in VR settings through state-of-the-art head-mounted displays. By integrating an immersive VR environment, sensory stimulation, and MI, the NeuRow system is a promising VR-BCI system that can offer a holistic approach to MI-driven BCI.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lozada-Martínez, I.; Maiguel-Lapeira, J.; Torres-Llinás, D.; Moscote-Salazar, L.; Rahman, M.M.; Pacheco-Hernández, A. Letter: Need and Impact of the Development of Robotic Neurosurgery in Latin America. Neurosurgery 2021, 88, E580–E581. [Google Scholar] [CrossRef] [PubMed]

- He, B.; Yuan, H.; Meng, J.; Gao, S. Brain–computer interfaces. In Neural Engineering; Springer: Berlin/Heidelberg, Germany, 2020; pp. 131–183. [Google Scholar]

- Mrachacz-Kersting, N.; Jiang, N.; Stevenson, A.J.; Niazi, I.K.; Kostic, V.; Pavlovic, A.; Radovanovic, S.; Djuric-Jovicic, M.; Agosta, F.; Dremstrup, K.; et al. Efficient neuroplasticity induction in chronic stroke patients by an associative brain-computer interface. J. Neurophysiol. 2016, 115, 1410–1421. [Google Scholar] [CrossRef]

- Floriana, P.; Mattia, D. Brain-computer interfaces in neurologic rehabilitation practice. Handb. Clin. Neurol. 2020, 168, 101–116. [Google Scholar] [CrossRef]

- Mrachacz-Kersting, N.; Ibáñez, J.; Farina, D. Towards a mechanistic approach for the development of non-invasive brain-computer interfaces for motor rehabilitation. J. Physiol. 2021, 599, 2361–2374. [Google Scholar] [CrossRef]

- Soekadar, S.R.; Birbaumer, N.; Slutzky, M.W.; Cohen, L.G. Brain-machine interfaces in neurorehabilitation of stroke. Neurobiol. Dis. 2015, 83, 172–179. [Google Scholar] [CrossRef] [PubMed]

- Yang, S.; Li, R.; Li, H.; Xu, K.; Shi, Y.; Wang, Q.; Yang, T.; Sun, X. Exploring the Use of Brain-Computer Interfaces in Stroke Neurorehabilitation. Biomed Res. Int. 2021, 2021, 9967348. [Google Scholar] [CrossRef] [PubMed]

- Cervera, M.A.; Soekadar, S.R.; Ushiba, J.; Millán, J.D.R.; Liu, M.; Birbaumer, N.; Garipelli, G. Brain-computer interfaces for post-stroke motor rehabilitation: A meta-analysis. Ann. Clin. Transl. Neurol. 2018, 25, 651–663. [Google Scholar] [CrossRef]

- López-Larraz, E.; Sarasola-Sanz, A.; Irastorza-Landa, N.; Birbaumer, N.; Ramos-Murguialday, A. Brain-machine interfaces for rehabilitation in stroke: A review. NeuroRehabilitation 2018, 43, 77–97. [Google Scholar] [CrossRef] [PubMed]

- Cameirão, M.S.; Badia, S.B.; Oller, E.D.; Verschure, P.F. Neurorehabilitation using the virtual reality based Rehabilitation Gaming System: Methodology, design, psychometrics, usability and validation. J. Neuroeng. Rehabil. 2010, 7, 48. [Google Scholar] [CrossRef]

- Zhou, Z.; Yin, E.; Liu, Y.; Jiang, J.; Hu, D. A novel task-oriented optimal design for P300-based brain-computer interfaces. J. Neural Eng. 2014, 11, 056003. [Google Scholar] [CrossRef] [PubMed]

- Lebedev, M.A.; Nicolelis, M.A. Brain-machine interfaces: From basic science to neuroprostheses and neurorehabilitation. Physiol. Rev. 2017, 97, 767–837. [Google Scholar] [CrossRef] [PubMed]

- Khan, M.A.; Das, R.; Iversen, H.K.; Puthusserypady, S. Review on motor imagery based BCI systems for upper limb post-stroke neurorehabilitation: From designing to application. Comput. Biol. Med. 2020, 123, 103843. [Google Scholar] [CrossRef] [PubMed]

- Nicolas-Alonso, L.F.; Gomez-Gil, J. Brain computer interfaces, a review. Sensors 2012, 12, 1211–1279. [Google Scholar] [CrossRef]

- Ramadan, R.A.; Vasilakos, A.V. Brain computer interface: Control signals review. Neurocomputing 2017, 223, 26–44. [Google Scholar] [CrossRef]

- Waldert, S.; Pistohl, T.; Braun, C.; Ball, T.; Aertsen, A.; Mehring, C. A review on directional information in neural signals for brain-machine interfaces. J. Physiol.-Paris 2009, 103, 244–254. [Google Scholar] [CrossRef]

- Slutzky, M.W.; Flint, R.D. Physiological properties of brain-machine interface input signals. J. Neurophysiol. 2017, 118, 1329–1343. [Google Scholar] [CrossRef]

- Hauschild, M.; Mulliken, G.H.; Fineman, I.; Loeb, G.E.; Andersen, R.A. Cognitive signals for brain–machine interfaces in posterior parietal cortex including continuous 3D trajectory commands. Proc. Natl. Acad. Sci. USA 2012, 109, 17075–17080. [Google Scholar] [CrossRef]

- Abiri, R.; Borhani, S.; Sellers, E.W.; Jiang, Y.; Zhao, X. A comprehensive review of EEG-based brain-computer interface paradigms. J. Neural Eng. 2019, 16, 011001. [Google Scholar] [CrossRef]

- Borkowski, K.; Krzyżak, A.T. Assessment of the systematic errors caused by diffusion gradient inhomogeneity in DTI-computer simulations. NMR Biomed 2019, 32, e4130. [Google Scholar] [CrossRef]

- Birbaumer, N. Breaking the silence: Brain-computer interfaces (BCI) for communication and motor control. Psychophysiology 2006, 43, 517–532. (In English) [Google Scholar] [CrossRef]

- Shu, X.; Chen, S.; Yao, L.; Sheng, X.; Zhang, D.; Jiang, N.; Jia, J.; Zhu, X. Fast recognition of BCI-inefficient users using physiological features from EEG signals: A screening study of stroke patients. Front. Neurosci. 2018, 12, 93. [Google Scholar] [CrossRef]

- Ang, K.K.; Guan, C. Brain–computer interface for neurorehabilitation of the upper limb after stroke. Proc. IEEE 2015, 103, 944–953. [Google Scholar] [CrossRef]

- Cassidy, J.M.; Mark, J.I.; Cramer, S.C. Functional connectivity drives stroke recovery: Shifting the paradigm from correlation to causation. Brain 2021, 145, 1211–1228. [Google Scholar] [CrossRef]

- Raghavan, P. The nature of hand motor impairment after stroke and its treatment. Curr. Treat. Options Cardiovasc. Med. 2007, 9, 221–228. [Google Scholar] [CrossRef]

- Hakon, J.; Quattromani, M.J.; Sjölund, C.; Tomasevic, G.; Carey, L.; Lee, J.-M.; Ruscher, K.; Wieloch, T.; Bauer, A.Q. Multisensory stimulation improves functional recovery and resting-state functional connectivity in the mouse brain after stroke. NeuroImage Clin. 2018, 17, 717–730. [Google Scholar] [CrossRef]

- Nijenhuis, S.M.; Prange, G.B.; Amirabdollahian, F.; Sale, P.; Infarinato, F.; Nasr, N.; Mountain, G.; Hermens, H.J.; Stienen, A.H.; Buurke, J.H. Feasibility study of self-administered training at home using an arm and hand device with motivational gaming environment in chronic stroke. J. Neuroeng. Rehabil. 2015, 12, 89. [Google Scholar] [CrossRef]

- Jamil, N.; Belkacem, A.N.; Ouhbi, S.; Lakas, A. Noninvasive Electroencephalography Equipment for Assistive, Adaptive, and Rehabilitative Brain–Computer Interfaces: A Systematic Literature Review. Sensors 2021, 21, 4754. [Google Scholar] [CrossRef]

- Camargo-Vargas, D.; Callejas-Cuervo, M.; Mazzoleni, S. Brain-Computer Interface Systems for Upper and Lower Limb Rehabilitation: A Systematic Review. Sensors 2021, 21, 4312. [Google Scholar] [CrossRef]

- Mane, R.; Chouhan, T.; Guan, C. BCI for stroke rehabilitation: Motor and beyond. J. Neural Eng. 2020, 17, 041001. [Google Scholar] [CrossRef] [PubMed]

- Baniqued, P.D.E.; Stanyer, E.C.; Awais, M.; Alazmani, A.; Jackson, A.E.; Mon-Williams, M.A.; Mushtaq, F.; Holt, R.J. Brain–computer interface robotics for hand rehabilitation after stroke: A systematic review. J. Neuroeng. Rehabil. 2021, 18, 15. [Google Scholar] [CrossRef]

- He, Y.; Luu, T.P.; Nathan, K.; Nakagome, S.; Contreras-Vidal, J.L. Data Descriptor: A mobile brain-body imaging dataset recorded during treadmill walking with a brain-computer interface. Sci. Data 2018, 5, 180074. [Google Scholar] [CrossRef]

- Morley, A.; Hill, L.; Kaditis, A.G. 10–20 System EEG Placement. 2016, 34. Available online: https://www.ers-education.org/lrmedia/2016/pdf/298830.pdf (accessed on 25 October 2022).

- Liu, D.; Chen, W.; Pei, Z.; Wang, J. A brain-controlled lower-limb exoskeleton for human gait training. Rev. Sci. Instrum. 2017, 88, 104302. [Google Scholar] [CrossRef]

- Gordleeva, S.Y.; Lukoyanov, M.V.; Mineev, S.A.; Khoruzhko, M.A.; Mironov, V.I.; Kaplan, A.Y.; Kazantsev, V.B. Exoskeleton control system based on motor-imaginary brain-computer interface. Sovrem. Tehnol. Med. 2017, 9, 31–36. [Google Scholar] [CrossRef]

- Comani, S.; Velluto, L.; Schinaia, L.; Cerroni, G.; Serio, A.; Buzzelli, S.; Sorbi, S.; Guarnieri, B. Monitoring Neuro-Motor Recovery from Stroke with High-Resolution EEG, Robotics and Virtual Reality: A Proof of Concept. IEEE Trans. Neural Syst. Rehabil. Eng. 2015, 23, 1106–1116. [Google Scholar] [CrossRef]

- Lechat, B.; Hansen, K.L.; Melaku, Y.A.; Vakulin, A.; Micic, G.; Adams, R.J.; Appleton, S.; Eckert, D.J.; Catcheside, P.; Zajamsek, B. A Novel Electroencephalogram-derived Measure of Disrupted Delta Wave Activity during Sleep Predicts All-Cause Mortality Risk. Ann. Am. Thorac. Soc. 2022, 19, 649–658. [Google Scholar] [CrossRef] [PubMed]

- Hussain, I.; Hossain, M.A.; Jany, R.; Bari, M.A.; Uddin, M.; Kamal, A.R.M.; Ku, Y.; Kim, J.S. Quantitative Evaluation of EEG-Biomarkers for Prediction of Sleep Stages. Sensors 2022, 22, 3079. [Google Scholar] [CrossRef]

- Tarokh, L.; Carskadon, M.A. Developmental changes in the human sleep EEG during early adolescence. Sleep 2010, 33, 801–809. [Google Scholar] [CrossRef]

- Grigg-Damberger, M.; Gozal, D.; Marcus, C.L.; Quan, S.F.; Rosen, C.L.; Chervin, R.D.; Wise, M.; Picchietti, D.L.; Sheldon, S.H.; Iber, C. The visual scoring of sleep and arousal in infants and children. J. Clin. Sleep Med. 2007, 3, 201–240. [Google Scholar] [CrossRef] [PubMed]

- Sekimoto, M.; Kajimura, N.; Kato, M.; Watanabe, T.; Nakabayashi, T.; Takahashi, K.; Okuma, T. Laterality of delta waves during all-night sleep. Psychiatry Clin. Neurosci. 1999, 53, 149–150. [Google Scholar] [CrossRef]

- Schechtman, L.; Harper, R.K.; Harper, R.M. Distribution of slow-wave EEG activity across the night in developing infants. Sleep 1994, 17, 316–322. [Google Scholar] [CrossRef] [Green Version]

- Anderson, A.J.; Perone, S.; Gartstein, M.A. Context matters: Cortical rhythms in infants across baseline and play. Infant Behav. Dev. 2022, 66, 101665. [Google Scholar] [CrossRef]

- Orekhova, E.; Stroganova, T.; Posikera, I.; Elam, M. EEG theta rhythm in infants and preschool children. Clin. Neurophysiol. 2006, 117, 1047–1062. [Google Scholar] [CrossRef]

- Mateos, D.M.; Krumm, G.; Arán Filippetti, V.; Gutierrez, M. Power Spectrum and Connectivity Analysis in EEG Recording during Attention and Creativity Performance in Children. Neuroscience 2022, 3, 25. [Google Scholar] [CrossRef]

- Goldman, R.I.; Stern, J.M.; Engel, J., Jr.; Cohen, M.S. Simultaneous EEG and fMRI of the alpha rhythm. Neuroreport 2002, 13, 2487–2492. [Google Scholar] [CrossRef]

- Tuladhar, M.; Nt, H.; Schoffelen, J.M.; Maris, E.; Oostenveld, R.; Jensen, O. Parieto-occipital sources account for the increase in alpha activity with working memory load. Hum. Brain Mapp. 2007, 28, 785–792. [Google Scholar] [CrossRef]

- Rakhshan, V.; Hassani-Abharian, P.; Joghataei, M.; Nasehi, M.; Khosrowabadi, R. Effects of the Alpha, Beta, and Gamma Binaural Beat Brain Stimulation and Short-Term Training on Simultaneously Assessed Visuospatial and Verbal Working Memories, Signal Detection Measures, Response Times, and Intrasubject Response Time Variabilities: A Within-Subject Randomized Placebo-Controlled Clinical Trial. Biomed Res. Int. 2022, 2022, 8588272. [Google Scholar] [CrossRef]

- Kilavik, B.E.; Zaepffel, M.; Brovelli, A.; Mackay, W.A.; Riehle, A. The ups and downs of beta oscillations in the sensorimotor cortex. Exp. Neurol. 2013, 245, 15–26. [Google Scholar] [CrossRef]

- Darch, H.T.; Cerminara, N.L.; Gilchrist, I.D.; Apps, R. Pre-movement changes in sensorimotor beta oscillations predict motor adaptation drive. Sci. Rep. 2020, 10, 17946. [Google Scholar] [CrossRef]

- Al-Quraishi, M.S.; Elamvazuthi, I.; Daud, S.A.; Parasuraman, S.; Borboni, A. EEG-Based Control for Upper and Lower Limb Exoskeletons and Prostheses: A Systematic Review. Sensors 2018, 18, E3342. [Google Scholar] [CrossRef]

- Benasich, A.A.; Gou, Z.; Choudhury, N.; Harris, K.D. Early cognitive and language skills are linked to resting frontal gamma power across the first 3 years. Behav. Brain Res. 2008, 195, 215–222. [Google Scholar] [CrossRef]

- Ulloa, J.L. The Control of Movements via Motor Gamma Oscillations. Front. Hum. Neurosci. 2022, 15, 787157. [Google Scholar] [CrossRef]

- Fitzgibbon, S.P.; Pope, K.J.; Mackenzie, L.; Clark, C.R.; Willoughby, J.O. Cognitive tasks augment gamma EEG power. Clin. Neurophysiol. 2004, 115, 1802–1809. [Google Scholar] [CrossRef]

- Cannon, J.; McCarthy, M.M.; Lee, S.; Lee, J.; Börgers, C.; Whittington, M.A.; Kopell, N. Neurosystems: Brain rhythms and cognitive processing. Eur. J. Neurosci. 2014, 39, 705–719. [Google Scholar] [CrossRef]

- Millán, J.D.R.; Ferrez, P.W.; Galán, F.; Lew, E.; Chavarriaga, R. Non-invasive brain-machine interaction. Int. J. Pattern Recognit. Artif. Intell. 2008, 22, 959–972. [Google Scholar]

- Pfurtscheller, G.; Neuper, C. Motor imagery and direct brain-computer communication. Proc. IEEE 2001, 89, 1123–1134. [Google Scholar] [CrossRef]

- Pfurtscheller, G.; Neuper, C.; Flotzinger, D.; Pregenzer, M. EEG-based discrimination between imagination of right and left hand movement. Electroencephalogr. Clin. Neurophysiol. 1997, 103, 642–651. [Google Scholar] [CrossRef]

- Tang, Z.; Sun, S.; Zhang, S.; Chen, Y.; Li, C.; Chen, S. A brain-machine interface based on ERD/ERS for an upper-limb exoskeleton control. Sensors 2016, 16, 2050. [Google Scholar] [CrossRef]

- Grimm, F.; Walter, A.; Spüler, M.; Naros, G.; Rosenstiel, W.; Gharabaghi, A. Hybrid neuroprosthesis for the upper limb: Combining brain-controlled neuromuscular stimulation with a multi-joint arm exoskeleton. Front. Neurosci. 2016, 10, 367. [Google Scholar] [CrossRef]

- Formaggio, E.; Masiero, S.; Bosco, A.; Izzi, F.; Piccione, F.; Del Felice, A. Quantitative EEG Evaluation during Robot-Assisted Foot Movement. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1633–1640. [Google Scholar] [CrossRef]

- Murphy, D.P.; Bai, O.; Gorgey, A.S.; Fox, J.; Lovegreen, W.T.; Burkhardt, B.W.; Atri, R.; Marquez, J.S.; Li, Q.; Fei, D.Y. Electroencephalogram-based brain-computer interface and lower-limb prosthesis control: A case study. Front. Neurol. 2017, 8, 696. [Google Scholar] [CrossRef]

- Meng, J.; Zhang, S.; Bekyo, A.; Olsoe, J.; Baxter, B.; He, B. Noninvasive Electroencephalogram-Based Control of a Robotic Arm for Reach and Grasp Tasks. Sci. Rep. 2016, 6, 38565. [Google Scholar] [CrossRef]

- Hortal, E.; Planelles, D.; Resquin, F.; Climent, J.M.; Azorín, J.M.; Pons, J.L. Using a brain-machine interface to control a hybrid upper limb exoskeleton during rehabilitation of patients with neurological conditions. J. Neuroeng. Rehabil. 2015, 12, 92. [Google Scholar] [CrossRef] [PubMed]

- Kirchner, E.A.; Tabie, M.; Seeland, A. Multimodal movement prediction—Towards an individual assistance of patients. PLoS ONE 2014, 9, e85060. [Google Scholar] [CrossRef] [PubMed]

- Xu, R.; Jiang, N.; Mrachacz-Kersting, N.; Lin, C.; Prieto, G.A.; Moreno, J.C.; Pons, J.L.; Dremstrup, K.; Farina, D. A Closed-Loop Brain-Computer Interface Triggering an Active Ankle-Foot Orthosis for Inducing Cortical Neural Plasticity. IEEE Trans. Biomed. Eng. 2014, 61, 2092–2101. [Google Scholar] [PubMed]

- López-Larraz, E.; Trincado-Alonso, F.; Rajasekaran, V.; Pérez-Nombela, S.; del-Ama, A.J.; Aranda, J.; Minguez, J.; Gil-Agudo, A.; Montesano, L. Control of an ambulatory exoskeleton with a brain-machine interface for spinal cord injury gait rehabilitation. Front. Neurosci. 2016, 10, 359. [Google Scholar] [CrossRef]

- Kapgate, D.; Kalbande, D. A Review on Visual Brain Computer Interface. In Advancements of Medical Electronics; Springer: Berlin/Heidelberg, Germany, 2015; pp. 193–206. [Google Scholar]

- Gao, S.; Wang, Y.; Gao, X.; Hong, B. Visual and auditory brain–computer interfaces. IEEE Trans. Biomed. Eng. 2014, 61, 1436–1447. [Google Scholar]

- Nijboer, F.; Furdea, A.; Gunst, I.; Mellinger, J.; McFarland, D.J.; Birbaumer, N.; Kübler, A. An auditory brain–computer interface (BCI). J. Neurosci. Methods 2008, 167, 43–50. [Google Scholar] [CrossRef]

- Yao, L.; Sheng, X.; Zhang, D.; Jiang, N.; Farina, D.; Zhu, X. A BCI System Based on Somatosensory Attentional Orientation. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 81–90. [Google Scholar] [CrossRef]

- Wolpaw, J.R.; McFarland, D.J.; Vaughan, T.M. Brain-computer interface research at the Wadsworth Center. IEEE Trans. Rehabil. Eng. 2000, 8, 222–226. [Google Scholar] [CrossRef]

- Lee, K.; Liu, D.; Perroud, L.; Chavarriaga, R.; Millán, J.D.R. A brain-controlled exoskeleton with cascaded event-related desynchronization classifiers. Robot. Autom. Syst. 2017, 90, 15–23. [Google Scholar] [CrossRef]

- Polich, J. Updating P300: An integrative theory of P3a and P3b. Clin. Neurophysiol. 2007, 118, 2128–2148. [Google Scholar] [CrossRef]

- Kwak, N.-S.; Müller, K.-R.; Lee, S.-W. A lower limb exoskeleton control system based on steady state visual evoked potentials. J. Neural Eng. 2015, 12, 056009. [Google Scholar] [CrossRef]

- Chen, X.; Zhao, B.; Wang, Y.; Gao, X. Combination of high-frequency SSVEP-based BCI and computer vision for controlling a robotic arm. J. Neural Eng. 2019, 16, 026012. [Google Scholar] [CrossRef]

- Tariq, M.; Trivailo, P.M.; Simic, M. EEG-based BCI control schemes for lower-limb assistive-robots. Front. Hum. Neurosci. 2018, 12, 312. [Google Scholar] [CrossRef] [PubMed]

- Song, Y.; Cai, S.; Yang, L.; Li, G.; Wu, W.; Xie, L. A practical EEG-based human-machine interface to online control an upper-limb assist robot. Front. Neurorobot. 2020, 14, 32. [Google Scholar] [CrossRef]

- Aggarwal, S.; Chugh, N. Review of Machine Learning Techniques for EEG Based Brain Computer Interface. Arch. Comput. Methods Eng. 2022, 29, 3001–3020. [Google Scholar] [CrossRef]

- Qiu, S.; Li, Z.; He, W.; Zhang, L.; Yang, C.; Su, C.Y. Brain–machine interface and visual compressive sensing-based teleoperation control of an exoskeleton robot. IEEE Trans. Fuzzy Syst. 2016, 25, 58–69. [Google Scholar] [CrossRef]

- Horki, P.; Solis-Escalante, T.; Neuper, C.; Müller-Putz, G. Combined motor imagery and SSVEP based BCI control of a 2 DoF artificial upper limb. Med. Biol. Eng. Comput. 2011, 49, 567–577. [Google Scholar] [CrossRef]

- Bhattacharyya, S.; Konar, A.; Tibarewala, D. Motor imagery, P300 and error-related EEG-based robot arm movement control for rehabilitation purpose. Med. Biol. Eng. Comput. 2014, 52, 1007–1017. [Google Scholar] [CrossRef]

- Cecotti, H.; Graser, A. Convolutional neural networks for P300 detection with application to brain-computer interfaces. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 433–445. [Google Scholar] [CrossRef]

- Hong, K.-S.; Khan, M.J. Hybrid brain–computer interface techniques for improved classification accuracy and increased number of commands: A review. Front. Neurorobot. 2017, 35. [Google Scholar] [CrossRef] [PubMed]

- Ren, W.; Han, M.; Wang, J.; Wang, D.; Li, T. Efficient feature extraction framework for EEG signals classification. In Proceedings of the 2016 Seventh International Conference on Intelligent Control and Information Processing (ICICIP), Siem Reap, Cambodia, 1–4 December 2016; pp. 167–172. [Google Scholar] [CrossRef]

- Kumar, S.; Kumar, V.; Gupta, B. Feature extraction from EEG signal through one electrode device for medical application. In Proceedings of the 2015 1st International Conference on Next Generation Computing Technologies (NGCT), Dehradun, India, 4–5 September 2015; pp. 555–559. [Google Scholar] [CrossRef]

- Mehmood, R.M.; Du, R.; Lee, H.J. Optimal Feature Selection and Deep Learning Ensembles Method for Emotion Recognition From Human Brain EEG Sensors. IEEE Access 2017, 5, 14797–14806. [Google Scholar] [CrossRef]

- Roshdy, A.; Alkork, S.; Karar, A.S.; Mhalla, H.; Beyrouthy, T.; al Barakeh, Z.; Nait-ali, A. Statistical Analysis of Multi-channel EEG Signals for Digitizing Human Emotions. In Proceedings of the 2021 4th International Conference on Bio-Engineering for Smart Technologies (BioSMART), Virtual, 8–10 December 2021; pp. 1–4. [Google Scholar] [CrossRef]

- Xu, S.; Hu, H.; Ji, L.; Wang, P. An Adaptive Graph Spectral Analysis Method for Feature Extraction of an EEG Signal. IEEE Sens. J. 2019, 19, 1884–1896. [Google Scholar] [CrossRef]

- Kang, W.-S.; Kwon, H.-O.; Moon, C.; Kim, J.K.; Yun, S.; Kim, S. EEG-fMRI features analysis of odorants stimuli with citralva and 2-mercaptoethanol. In Proceedings of the Sensors, 2013 IEEE, Baltimore, MD, USA, 3–6 November 2013; pp. 1–4. [Google Scholar] [CrossRef]

- Zhang, K.; Xu, G.; Zheng, X.; Li, H.; Zhang, S.; Yu, Y.; Liang, R. Application of transfer learning in EEG decoding based on brain-computer interfaces: A review. Sensors 2020, 20, 6321. [Google Scholar] [CrossRef] [PubMed]

- Thomas, J.; Maszczyk, T.; Sinha, N.; Kluge, N.; Dauwels, J. Deep learning-based classification for brain-computer interfaces. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; IEEE: Piscataway, NJ, USA, 2017. [Google Scholar]

- Ai, Q.; Liu, Z.; Meng, W.; Liu, Q.; Xie, S.Q. Machine Learning in Robot Assisted Upper Limb Rehabilitation: A Focused Review. IEEE Transactions on Cognitive and Developmental Systems; IEEE: Piscataway, NJ, USA, 2021. [Google Scholar]

- Lu, W.; Wei, Y.; Yuan, J.; Deng, Y.; Song, A. Tractor assistant driving control method based on EEG combined with RNN-TL deep learning algorithm. IEEE Access 2020, 8, 163269–163279. [Google Scholar] [CrossRef]

- Roy, S.; Chowdhury, A.; Mccreadie, K.; Prasad, G. Deep learning based inter-subject continuous decoding of motor imagery for practical brain-computer interfaces. Front. Neurosci. 2020, 14, 918. [Google Scholar] [CrossRef]

- Choi, J.; Kim, K.; Lee, J.; Lee, S.J.; Kim, H. Robust semi-synchronous BCI controller for brain-actuated exoskeleton system. In Proceedings of the 2020 8th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 26–28 February 2020; IEEE: Piscataway, NJ, USA, 2020. [Google Scholar]

- Bi, L.; Fan, X.-A.; Liu, Y. EEG-based brain-controlled mobile robots: A survey. IEEE Trans. Hum.-Mach. Syst. 2013, 43, 161–176. [Google Scholar]

- Birbaumer, N.; Ghanayim, N.; Hinterberger, T.; Iversen, I.; Kotchoubey, B.; Kübler, A.; Perelmouter, J.; Taub, E.; Flor, H. A spelling device for the paralysed. Nature 1999, 398, 297–298. [Google Scholar] [CrossRef] [PubMed]

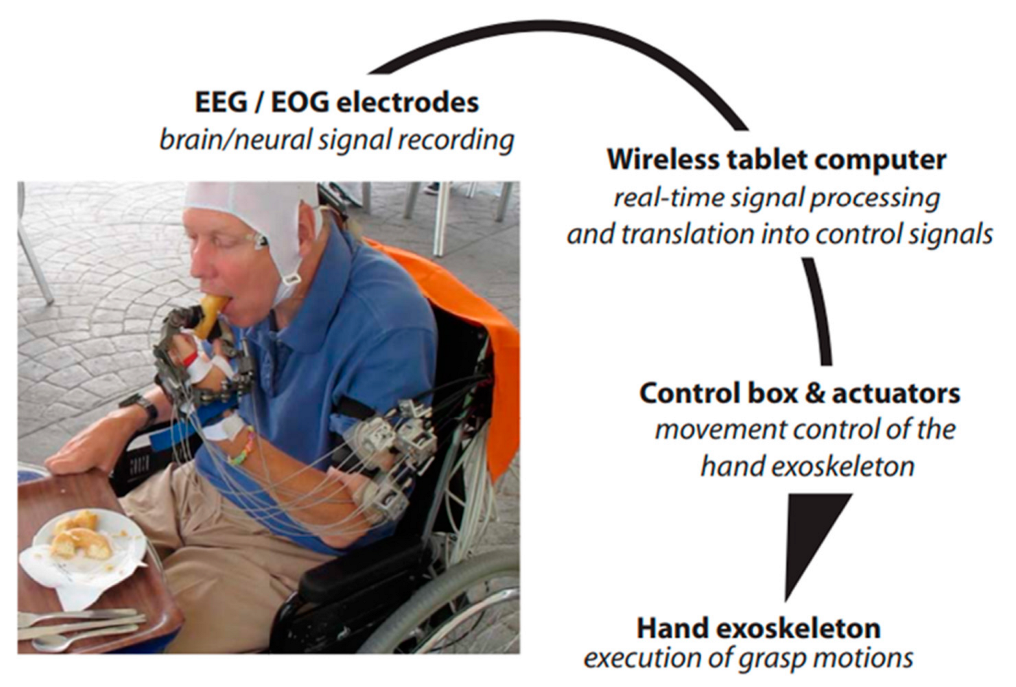

- Soekadar, S.R.; Witkowski, M.; Gómez, C.; Opisso, E.; Medina, J.; Cortese, M.; Cempini, M.; Carrozza, M.C.; Cohen, L.G.; Birbaumer, N.; et al. Hybrid EEG/EOG-based brain/neural hand exoskeleton restores fully independent daily living activities after quadriplegia. Sci. Robot. 2016, 1, eaag3296. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Wang, B.; Zhang, C.; Xiao, Y.; Wang, M.Y. An EEG/EMG/EOG-Based Multimodal Human-Machine Interface for Real-Time Control of a Soft Robot Hand. Front. Neurorobot. 2019, 13, 7. [Google Scholar] [CrossRef]

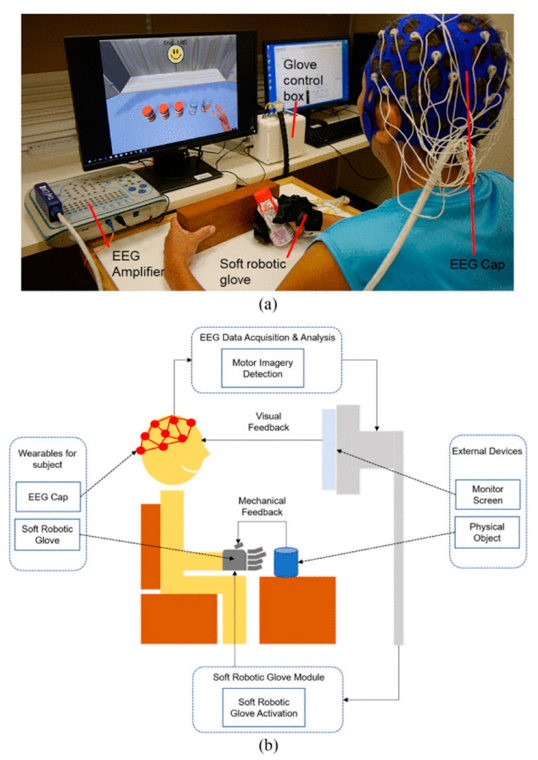

- Cheng, N.; Phua, K.S.; Lai, H.S.; Tam, P.K.; Tang, K.Y.; Cheng, K.K.; Yeow, R.C.; Ang, K.K.; Guan, C.; Lim, J.H. Brain-Computer Interface-Based Soft Robotic Glove Rehabilitation for Stroke. IEEE Trans. Biomed. Eng. 2020, 67, 3339–3351. [Google Scholar] [CrossRef] [PubMed]

- Jochumsen, M.; Janjua, T.A.M.; Arceo, J.C.; Lauber, J.; Buessinger, E.S.; Kæseler, R.L. Induction of Neural Plasticity Using a Low-Cost Open Source Brain-Computer Interface and a 3D-Printed Wrist Exoskeleton. Sensors 2021, 21, 572. [Google Scholar] [CrossRef]

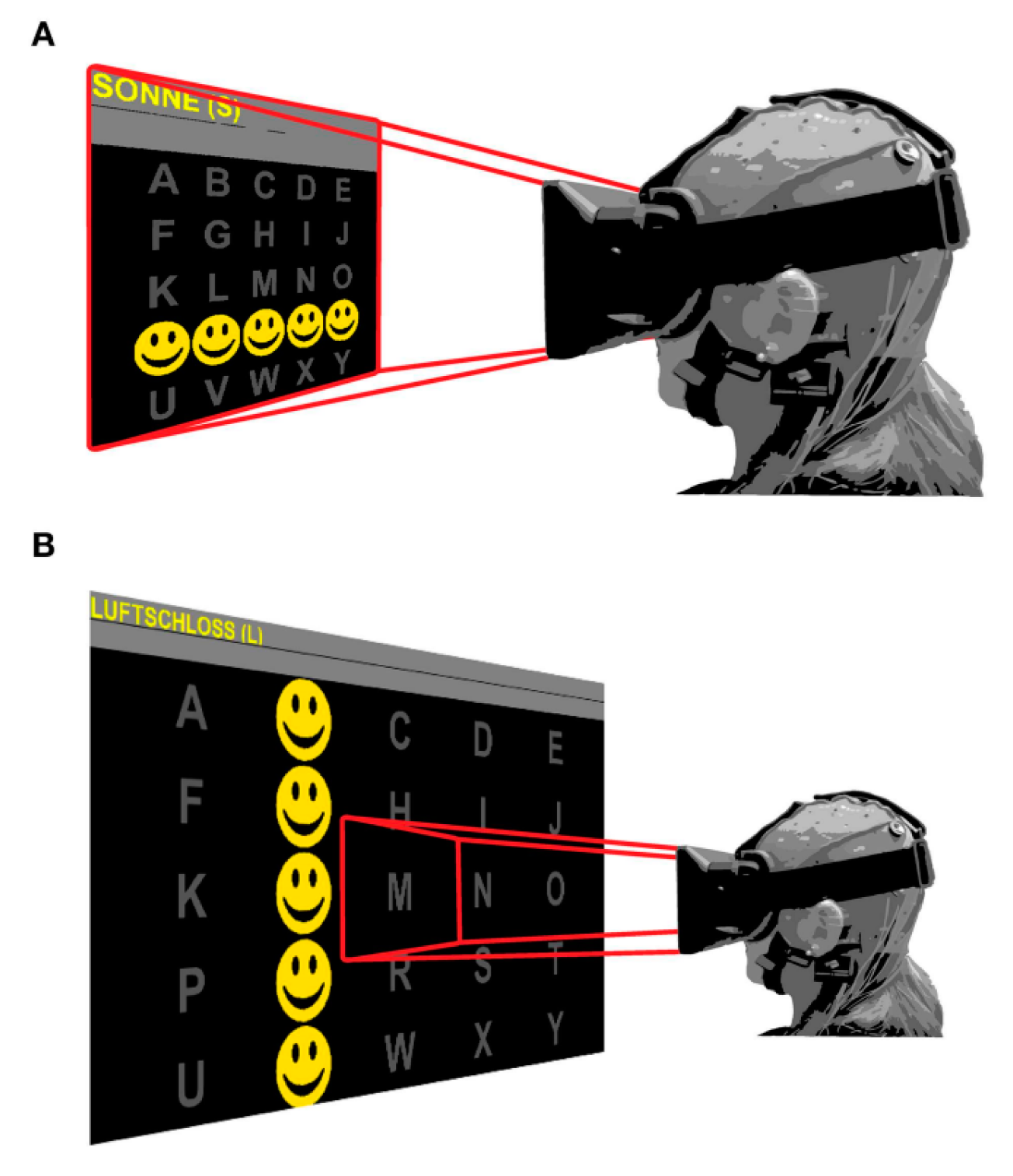

- Käthner, I.; Kübler, A.; Halder, S. Rapid P300 brain-computer interface communication with a head-mounted display. Front. Neurosci. 2015, 9, 207. [Google Scholar] [CrossRef] [PubMed]

- Ortner, R.; Irimia, D.; Scharinger, J.; Guger, C. A motor imagery-based brain-computer interface for stroke rehabilitation. Stud. Health Technol. Inf. 2012, 181, 319–323. [Google Scholar] [CrossRef]

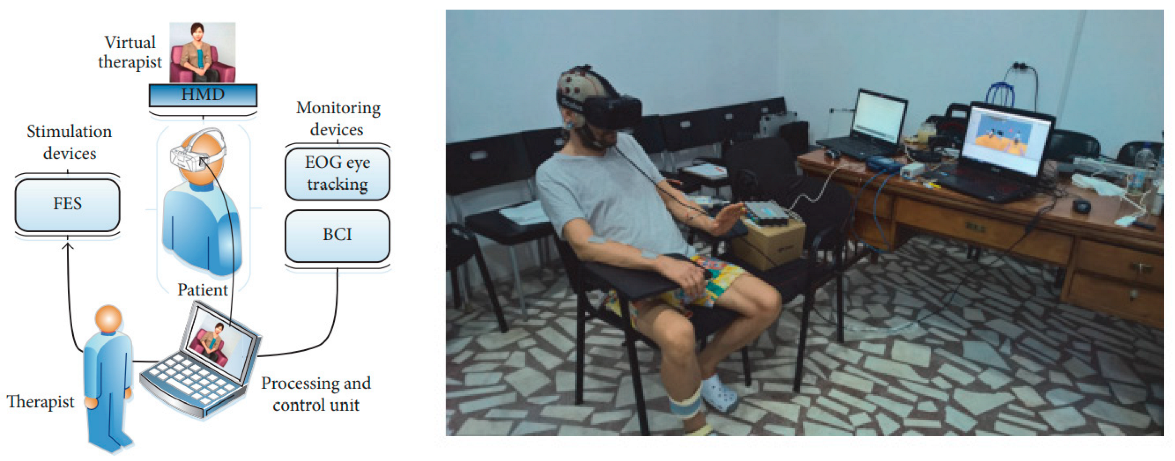

- Lupu, R.G.; Irimia, D.C.; Ungureanu, F.; Poboroniuc, M.; Moldoveanu, A. BCI and FES based therapy for stroke rehabilitation using VR facilities. Wirel Commun. Mob. Com. 2018, 2018, 4798359. [Google Scholar] [CrossRef]

| Rhythm | Frequency Band (Hz) | Related Functions |
|---|---|---|
| Delta (δ) | 0.5–4 | Appears in infants and during deep sleep [37,38,39,40,41,42]. |
| Theta (θ) | 4–8 | Occurs in the parietal and temporal areas in children [43,44,45]. |
| Alpha (α) | 8–13 | Found in awake adults. It appears in the occipital area but can also be detected in the frontal and parietal scalp regions [46,47,48]. |
| Beta (β) | 13–30 | A decrease in the beta rhythm reflects movement, movement planning, movement imagination, or movement preparation. This decrease is most dominant in the contralateral motor cortex. These waves occur during movements and can be detected over the central and frontal scalp lobes [49,50,51]. |
| Gamma (γ) | >30 | The highest-frequency rhythm, above 30 Hz. It is related to the formation of ideas, language processing, and various types of learning [52,53,54,55]. |
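The band limits in the table above can be turned into a small band-power calculation. The following is a minimal sketch, not a method taken from the reviewed works: the names `BANDS`, `band_of`, `band_powers`, and `erd_percent` are hypothetical, shared band edges are assigned to the higher band, and the 100 Hz upper limit for gamma is an assumption made only to give that band a finite width. The last helper uses the commonly used relative-power definition of event-related desynchronization to express the beta decrease described in the table as a percentage.

```python
import cmath
import math

# Band edges in Hz, taken from the rhythm table. The gamma upper edge
# (100 Hz) is an assumption so that the band has a finite width here.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 100.0),
}


def band_of(freq_hz):
    """Return the band containing freq_hz, or None if it is outside all bands.

    Intervals are half-open [low, high), so a shared edge such as 4 Hz is
    assigned to the higher band (an assumption; the table lists it in both).
    """
    for name, (low, high) in BANDS.items():
        if low <= freq_hz < high:
            return name
    return None


def band_powers(samples, fs):
    """Sum squared DFT magnitudes per band using a plain O(n^2) DFT."""
    n = len(samples)
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2):
        name = band_of(k * fs / n)  # centre frequency of DFT bin k
        if name is None:
            continue
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(samples)
        )
        powers[name] += abs(coeff) ** 2 / n
    return powers


def erd_percent(reference_power, task_power):
    """Relative band-power change versus a reference interval, in percent.

    Negative values indicate a power decrease (desynchronization).
    """
    return 100.0 * (task_power - reference_power) / reference_power


# One second of a synthetic 10 Hz sine sampled at 128 Hz (illustrative only).
fs = 128
signal = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]
powers = band_powers(signal, fs)
dominant = max(powers, key=powers.get)
```

For real recordings, a windowed estimator such as Welch's method from a signal-processing library would normally replace the plain DFT used here, which is kept only to make the sketch dependency-free.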

| Type of Application | Representative Work | BCI Paradigm | Description | No. of Subjects | Signal Type | Electrodes | Accuracy |
|---|---|---|---|---|---|---|---|
| BCI-assistive robot for rehabilitation | Soekadar et al. [99] | MI-EEG; HOV-EOG | Help quadriplegic patients control a hand exoskeleton for activities of daily living | 6 | EEG-EOG | C3 | 84.96 ± 7.19% |
| | Zhang et al. [100] | MI-EEG; left/right looking EOG | | 6 | EEG-EOG-EMG | 40 Ag/AgCl channels placed according to the 10–20 system | 93.83% |
| | Cheng et al. [101] | MI | Studied the applicability of a BCI-based soft robotic glove for stroke rehabilitation in activities of daily living | 11 | EEG | 24 Ag/AgCl channels placed according to the 10–20 system | - |
| | Jochumsen et al. [102] | MI | Induction of neural plasticity using a low-cost open-source BCI and a 3D-printed wrist exoskeleton | 11 | EEG | F1, F2, C3, Cz, C4, P1, and P2 | 86 ± 12% |
| | Käthner et al. [103] | P300 | Checked whether VR devices can achieve the same precision and data transmission speed as regular display methods | 18 + 1 person with ALS (80 years old) | EEG-VR | Fz, Cz, P3, P4, PO7, POz, PO8, Oz | 96% |
| BCI-virtual reality for rehabilitation | Ortner et al. [104] | MI | Training stroke patients to imagine left- and right-hand movements in VR scenes | 3 | EEG-VR | 63 positions | mean 90.4% |
| | Lupu et al. [105] | MI | Patients follow the instructions of a virtual therapist and control virtual characters in VR scenes using MI. Motor function was improved. | 7 | EEG-FES, EOG | 16 channels over sensorimotor areas | mean 85.44% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Orban, M.; Elsamanty, M.; Guo, K.; Zhang, S.; Yang, H. A Review of Brain Activity and EEG-Based Brain–Computer Interfaces for Rehabilitation Application. Bioengineering 2022, 9, 768. https://doi.org/10.3390/bioengineering9120768