Improving the Efficacy of Deep-Learning Models for Heart Beat Detection on Heterogeneous Datasets

Abstract

1. Introduction

Aim of This Study

2. Materials and Methods

2.1. Datasets

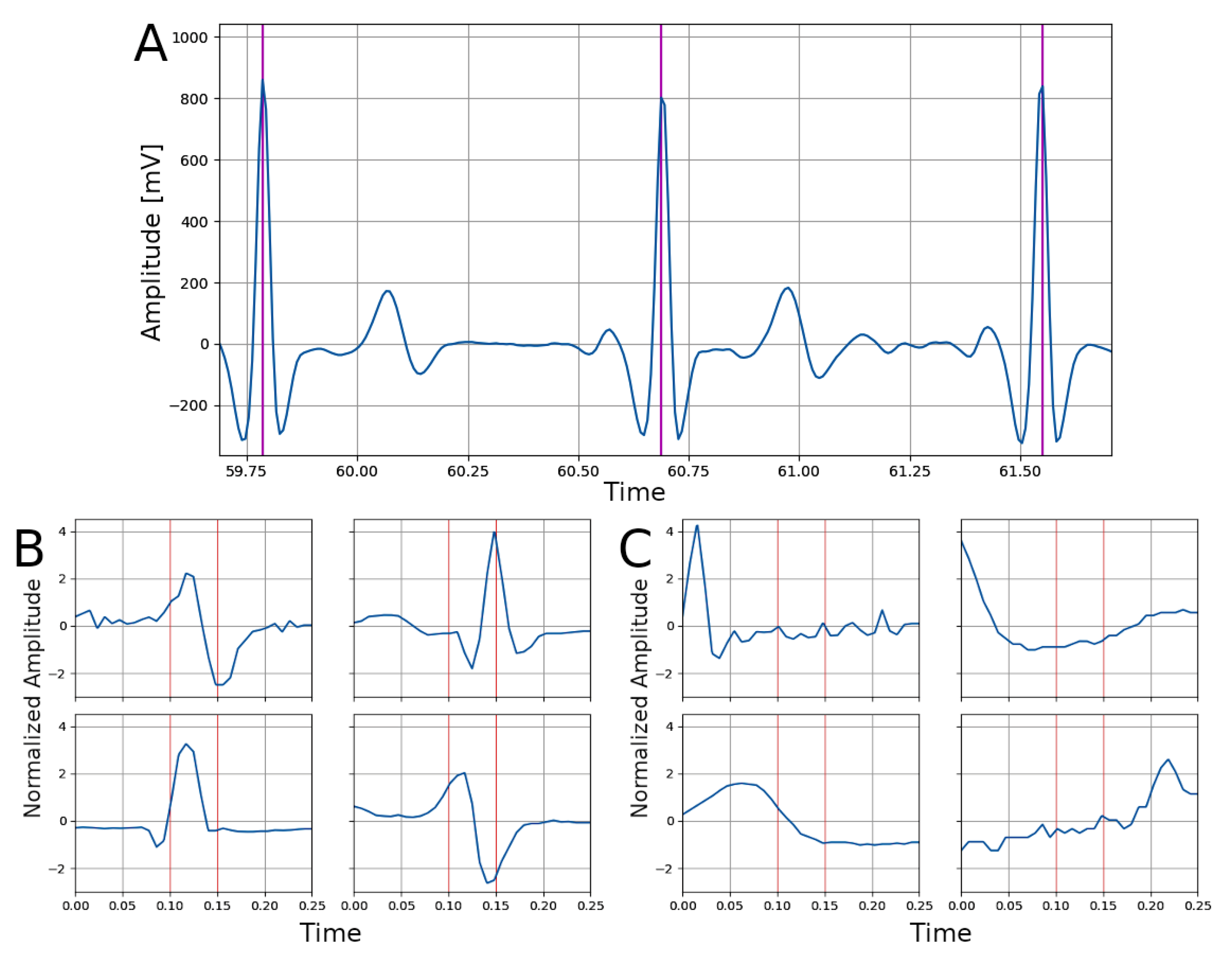

2.2. Signal Processing

2.3. Network Architecture

2.4. Network Training and Transfer Learning

2.5. Experiments

- (1)

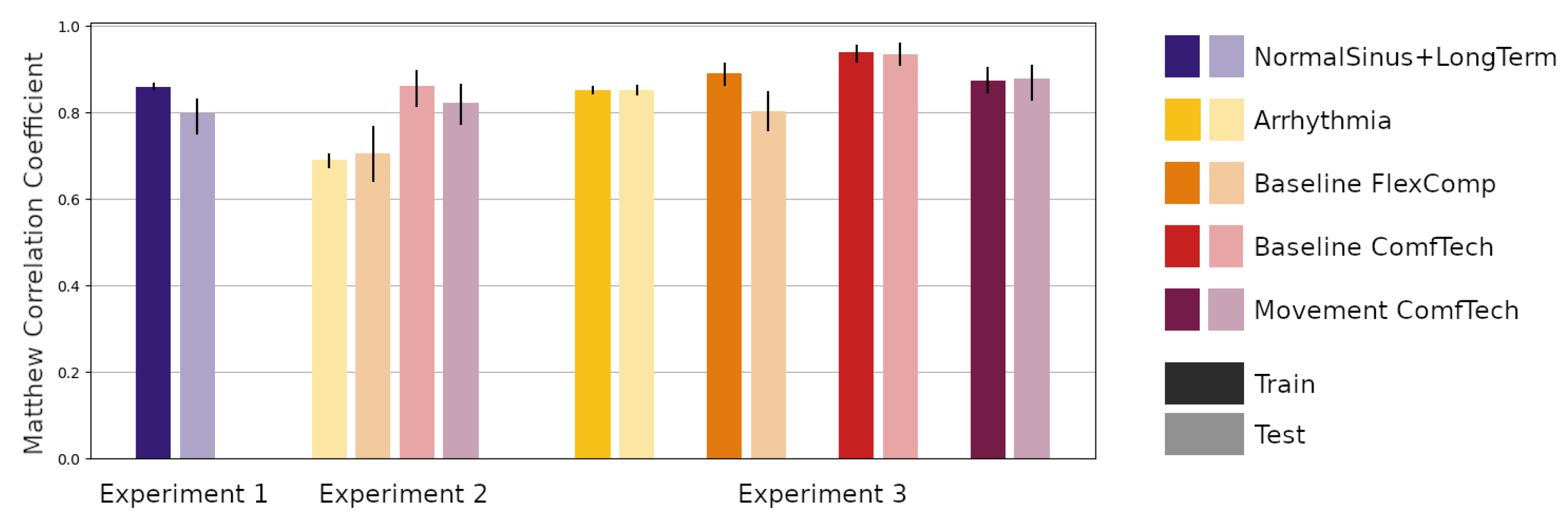

- Experiment 1—The first experiment aimed at reproducing the results of Silva and colleagues [25]. In this experiment, we trained the network using samples in the Training partition of the NormalSinus+LongTerm subsets and evaluated the performance on both the Training and Testing partition of the same subset. The predictive performance was also assessed in terms of the percentage of positive predicted samples (+p, also known as Precision), sensitivity (Se, also known as Recall) and F-score (F1), to be able to compare the results with the Silva et al. study;

- (2)

- Experiment 2—The second experiment aimed at evaluating the performance of the trained network on the Testing partition of the other subsets: (a) the Arrhythmia subset, representing a clinical population; (b) the Baseline FlexComp and (c) the Baseline ComfTech subsets, representing a normal population at rest with signals collected using another medical grade device and a wearable device respectively; (d) the Movement ComfTech subset, representing the same normal population during movement, using a wearable device;

- (3)

- Experiment 3—The third experiment aimed at assessing the feasibility and impact of transfer learning the trained network on the same subsets. The trained network was retrained on the Training partitions and evaluated on the Training and Testing partitions.
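The metrics named in Experiment 1 can all be derived from the four counts of a binary confusion matrix (BEAT versus non-BEAT segments). The following is a minimal sketch in Python, not the authors' evaluation code: the function name and the example counts are hypothetical, and MCC is included only because it appears in the results tables.

```python
from math import sqrt


def beat_detection_metrics(tp, fp, fn, tn):
    """Return +p, Se, F1 and MCC from binary confusion-matrix counts.

    tp/fp/fn/tn are counts of segments; names are illustrative only.
    """
    # Positive predictivity (+p, precision): share of predicted beats that are real.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Sensitivity (Se, recall): share of real beats that were detected.
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    # F-score (F1): harmonic mean of +p and Se.
    pr_sum = precision + sensitivity
    f1 = 2 * precision * sensitivity / pr_sum if pr_sum else 0.0
    # Matthews correlation coefficient: uses all four cells of the matrix.
    denom = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"+p": precision, "Se": sensitivity, "F1": f1, "MCC": mcc}


# Hypothetical counts for an imbalanced problem (10% BEAT segments).
metrics = beat_detection_metrics(tp=80, fp=20, fn=20, tn=880)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Unlike +p, Se and F1, the MCC also takes the true negatives into account, which matters when BEAT segments are a small minority of all segments.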

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wagner, J.; Kim, J.; André, E. From physiological signals to emotions: Implementing and comparing selected methods for feature extraction and classification. In Proceedings of the 2005 IEEE International Conference on Multimedia and Expo, Amsterdam, The Netherlands, 6 July 2005; pp. 940–943.

- Gabrieli, G.; Azhari, A.; Esposito, G. PySiology: A python package for physiological feature extraction. In Neural Approaches to Dynamics of Signal Exchanges; Springer: Berlin/Heidelberg, Germany, 2020; pp. 395–402.

- Gabrieli, G.; Balagtas, J.P.M.; Esposito, G.; Setoh, P. A Machine Learning approach for the automatic estimation of fixation-time data signals’ quality. Sensors 2020, 20, 6775.

- Jothiramalingam, R.; Jude, A.; Patan, R.; Ramachandran, M.; Duraisamy, J.H.; Gandomi, A.H. Machine learning-based left ventricular hypertrophy detection using multi-lead ECG signal. Neural Comput. Appl. 2021, 33, 4445–4455.

- Bulbul, H.I.; Usta, N.; Yildiz, M. Classification of ECG arrhythmia with machine learning techniques. In Proceedings of the 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Cancun, Mexico, 18–21 December 2017; pp. 546–549.

- Karthick, P.; Ghosh, D.M.; Ramakrishnan, S. Surface electromyography based muscle fatigue detection using high-resolution time-frequency methods and machine learning algorithms. Comput. Methods Programs Biomed. 2018, 154, 45–56.

- Zontone, P.; Affanni, A.; Bernardini, R.; Piras, A.; Rinaldo, R. Stress detection through electrodermal activity (EDA) and electrocardiogram (ECG) analysis in car drivers. In Proceedings of the 2019 27th European Signal Processing Conference (EUSIPCO), A Coruna, Spain, 2–6 September 2019; pp. 1–5.

- Manzalini, A. Towards a Quantum Field Theory for Optical Artificial Intelligence. Ann. Emerg. Technol. Comput. (AETiC) 2019, 3, 1–8.

- Sánchez-Sánchez, C.; Izzo, D.; Hennes, D. Learning the optimal state-feedback using deep networks. In Proceedings of the 2016 IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016; pp. 1–8.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

- Mukhopadhyay, A.K.; Samui, S. An experimental study on upper limb position invariant EMG signal classification based on deep neural network. Biomed. Signal Process. Control 2020, 55, 101669.

- Bizzego, A.; Bussola, N.; Salvalai, D.; Chierici, M.; Maggio, V.; Jurman, G.; Furlanello, C. Integrating deep and radiomics features in cancer bioimaging. In Proceedings of the 2019 IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology (CIBCB), Siena, Italy, 9–11 July 2019; pp. 1–8.

- Tseng, H.H.; Wei, L.; Cui, S.; Luo, Y.; Ten Haken, R.K.; El Naqa, I. Machine learning and imaging informatics in Oncology. Oncology 2018, 98, 344–362.

- Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Mobadersany, P.; Yousefi, S.; Amgad, M.; Gutman, D.A.; Barnholtz-Sloan, J.S.; Velázquez Vega, J.E.; Brat, D.J.; Cooper, L.A.D. Predicting cancer outcomes from histology and genomics using convolutional networks. Proc. Natl. Acad. Sci. USA 2018, 115, E2970–E2979.

- Wieclaw, L.; Khoma, Y.; Fałat, P.; Sabodashko, D.; Herasymenko, V. Biometrie identification from raw ECG signal using deep learning techniques. In Proceedings of the 2017 9th IEEE International Conference on Intelligent Data Acquisition and Advanced Computing Systems: Technology and Applications (IDAACS), Bucharest, Romania, 21–23 September 2017; Volume 1, pp. 129–133.

- Mathews, S.M.; Kambhamettu, C.; Barner, K.E. A novel application of deep learning for single-lead ECG classification. Comput. Biol. Med. 2018, 99, 53–62.

- Xu, S.S.; Mak, M.W.; Cheung, C.C. Towards end-to-end ECG classification with raw signal extraction and deep neural networks. IEEE J. Biomed. Health Inform. 2018, 23, 1574–1584.

- Yu, D.; Sun, S. A systematic exploration of deep neural networks for EDA-based emotion recognition. Information 2020, 11, 212.

- Li, Q.; Mark, R.G.; Clifford, G.D. Robust heart rate estimation from multiple asynchronous noisy sources using signal quality indices and a Kalman filter. Physiol. Meas. 2007, 29, 15.

- Tarassenko, L.; Townsend, N.; Clifford, G.; Mason, L.; Burton, J.; Price, J. Medical Signal Processing Using the Software Monitor. In DERA/IEE Workshop on Intelligent Sensor Processing (Ref. No. 2001/050); IET: London, UK, 2001; pp. 3/1–3/4.

- Kohler, B.U.; Hennig, C.; Orglmeister, R. The principles of software QRS detection. IEEE Eng. Med. Biol. Mag. 2002, 21, 42–57.

- Ebrahim, M.H.; Feldman, J.M.; Bar-Kana, I. A robust sensor fusion method for heart rate estimation. J. Clin. Monit. 1997, 13, 385–393.

- Silva, P.; Luz, E.; Wanner, E.; Menotti, D.; Moreira, G. QRS detection in ECG signal with convolutional network. In Iberoamerican Congress on Pattern Recognition; Springer: Berlin/Heidelberg, Germany, 2018; pp. 802–809.

- Silva, P.; Luz, E.; Silva, G.; Moreira, G.; Wanner, E.; Vidal, F.; Menotti, D. Towards better heartbeat segmentation with deep learning classification. Sci. Rep. 2020, 10, 1–13.

- Chambrin, M.C. Alarms in the intensive care unit: How can the number of false alarms be reduced? Crit. Care 2001, 5, 1–5.

- Eerikäinen, L.M.; Vanschoren, J.; Rooijakkers, M.J.; Vullings, R.; Aarts, R.M. Reduction of false arrhythmia alarms using signal selection and machine learning. Physiol. Meas. 2016, 37, 1204.

- Gal, H.; Liel, C.; O’Connor Michael, F.; Idit, M.; Lerner, B.; Yuval, B. Machine learning applied to multi-sensor information to reduce false alarm rate in the ICU. J. Clin. Monit. Comput. 2020, 34, 339–352.

- Sendelbach, S.; Funk, M. Alarm fatigue: A patient safety concern. AACN Adv. Crit. Care 2013, 24, 378–386.

- Drew, B.J.; Harris, P.; Zègre-Hemsey, J.K.; Mammone, T.; Schindler, D.; Salas-Boni, R.; Bai, Y.; Tinoco, A.; Ding, Q.; Hu, X. Insights into the problem of alarm fatigue with physiologic monitor devices: A comprehensive observational study of consecutive intensive care unit patients. PLoS ONE 2014, 9, e110274.

- Xie, H.; Kang, J.; Mills, G.H. Clinical review: The impact of noise on patients’ sleep and the effectiveness of noise reduction strategies in intensive care units. Crit. Care 2009, 13, 1–8.

- Sorkin, R.D. Why are people turning off our alarms? J. Acoust. Soc. Am. 1988, 84, 1107–1108.

- Sujadevi, V.; Soman, K.; Vinayakumar, R. Real-time detection of atrial fibrillation from short time single lead ECG traces using recurrent neural networks. In The International Symposium on Intelligent Systems Technologies and Applications; Springer: Berlin/Heidelberg, Germany, 2017; pp. 212–221.

- Al Rahhal, M.M.; Bazi, Y.; Al Zuair, M.; Othman, E.; BenJdira, B. Convolutional neural networks for electrocardiogram classification. J. Med. Biol. Eng. 2018, 38, 1014–1025.

- Ebrahimi, Z.; Loni, M.; Daneshtalab, M.; Gharehbaghi, A. A review on deep learning methods for ECG arrhythmia classification. Expert Syst. Appl. X 2020, 7, 100033.

- Siontis, K.C.; Noseworthy, P.A.; Attia, Z.I.; Friedman, P.A. Artificial intelligence-enhanced electrocardiography in cardiovascular disease management. Nat. Rev. Cardiol. 2021, 18, 465–478.

- Ko, W.Y.; Siontis, K.C.; Attia, Z.I.; Carter, R.E.; Kapa, S.; Ommen, S.R.; Demuth, S.J.; Ackerman, M.J.; Gersh, B.J.; Arruda-Olson, A.M.; et al. Detection of hypertrophic cardiomyopathy using a convolutional neural network-enabled electrocardiogram. J. Am. Coll. Cardiol. 2020, 75, 722–733.

- Attia, Z.I.; Noseworthy, P.A.; Lopez-Jimenez, F.; Asirvatham, S.J.; Deshmukh, A.J.; Gersh, B.J.; Carter, R.E.; Yao, X.; Rabinstein, A.A.; Erickson, B.J.; et al. An artificial intelligence-enabled ECG algorithm for the identification of patients with atrial fibrillation during sinus rhythm: A retrospective analysis of outcome prediction. Lancet 2019, 394, 861–867.

- Attia, Z.I.; Kapa, S.; Lopez-Jimenez, F.; McKie, P.M.; Ladewig, D.J.; Satam, G.; Pellikka, P.A.; Enriquez-Sarano, M.; Noseworthy, P.A.; Munger, T.M.; et al. Screening for cardiac contractile dysfunction using an artificial intelligence–enabled electrocardiogram. Nat. Med. 2019, 25, 70–74.

- Weiss, K.; Khoshgoftaar, T.M.; Wang, D. A survey of transfer learning. J. Big Data 2016, 3, 1–40.

- Bizzego, A.; Bussola, N.; Chierici, M.; Maggio, V.; Francescatto, M.; Cima, L.; Cristoforetti, M.; Jurman, G.; Furlanello, C. Evaluating reproducibility of AI algorithms in digital pathology with DAPPER. PLoS Comput. Biol. 2019, 15, e1006269.

- Farhadi, A.; Forsyth, D.; White, R. Transfer learning in sign language. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Rosenstein, M.T.; Marx, Z.; Kaelbling, L.P.; Dietterich, T.G. To transfer or not to transfer. In Proceedings of the NIPS: 2005 Workshop on Transfer Learning, Vancouver, BC, Canada, 5–8 December 2005; Volume 898, pp. 1–4.

- Salem, M.; Taheri, S.; Yuan, J.S. ECG arrhythmia classification using transfer learning from 2-dimensional deep CNN features. In Proceedings of the 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), Cleveland, OH, USA, 17–19 October 2018; pp. 1–4.

- Van Steenkiste, G.; van Loon, G.; Crevecoeur, G. Transfer learning in ECG classification from human to horse using a novel parallel neural network architecture. Sci. Rep. 2020, 10, 1–12.

- Weimann, K.; Conrad, T.O. Transfer learning for ECG classification. Sci. Rep. 2021, 11, 1–12.

- Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, e215–e220.

- Bizzego, A.; Gabrieli, G.; Furlanello, C.; Esposito, G. Comparison of wearable and clinical devices for acquisition of peripheral nervous system signals. Sensors 2020, 20, 6778.

- Moody, G.B.; Mark, R.G. The impact of the MIT-BIH arrhythmia database. IEEE Eng. Med. Biol. Mag. 2001, 20, 45–50.

- Bizzego, A.; Battisti, A.; Gabrieli, G.; Esposito, G.; Furlanello, C. pyphysio: A physiological signal processing library for data science approaches in physiology. SoftwareX 2019, 10, 100287.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Shang, W.; Sohn, K.; Almeida, D.; Lee, H. Understanding and improving convolutional neural networks via concatenated rectified linear units. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; pp. 2217–2225.

- Zhang, Z.; Sabuncu, M. Generalized cross entropy loss for training deep neural networks with noisy labels. Adv. Neural Inf. Process. Syst. 2018, 31, 8778–8788.

- Zeiler, M.D. Adadelta: An adaptive learning rate method. arXiv 2012, arXiv:1212.5701.

- Jurman, G.; Riccadonna, S.; Furlanello, C. A comparison of MCC and CEN error measures in multi-class prediction. PLoS ONE 2012, 7, e41882.

- Sun, W.; Zheng, B.; Qian, W. Automatic feature learning using multichannel ROI based on deep structured algorithms for computerized lung cancer diagnosis. Comput. Biol. Med. 2017, 89, 530–539.

- Arimura, H.; Soufi, M.; Kamezawa, H.; Ninomiya, K.; Yamada, M. Radiomics with artificial intelligence for precision medicine in radiation therapy. J. Radiat. Res. 2019, 60, 150–157.

- Li, Z.; Wang, Y.; Yu, J.; Guo, Y.; Cao, W. Deep learning based radiomics (DLR) and its usage in noninvasive IDH1 prediction for low grade glioma. Sci. Rep. 2017, 7, 1–11.

- Kontos, D.; Summers, R.M.; Giger, M. Special section guest editorial: Radiomics and deep learning. J. Med. Imaging 2017, 4, 041301.

- Haibe-Kains, B.; Adam, G.A.; Hosny, A.; Khodakarami, F.; Waldron, L.; Wang, B.; McIntosh, C.; Goldenberg, A.; Kundaje, A.; Greene, C.S.; et al. Transparency and reproducibility in artificial intelligence. Nature 2020, 586, E14–E16.

- Gabrieli, G.; Bizzego, A.; Neoh, M.J.Y.; Esposito, G. fNIRS-QC: Crowd-Sourced Creation of a Dataset and Machine Learning Model for fNIRS Quality Control. Appl. Sci. 2021, 11, 9531.

- Bizzego, A.; Gabrieli, G.; Esposito, G. Deep Neural Networks and transfer learning on a Multivariate Physiological Signal Dataset. Bioengineering 2021, 8, 35.

- Bizzego, A.; Gabrieli, G.; Azhari, A.; Setoh, P.; Esposito, G. Computational methods for the assessment of empathic synchrony. In Progresses in Artificial Intelligence and Neural Systems; Springer: Berlin/Heidelberg, Germany, 2021; pp. 555–564.

- Gabrieli, G.; Bornstein, M.H.; Manian, N.; Esposito, G. Assessing Mothers’ Postpartum Depression From Their Infants’ Cry Vocalizations. Behav. Sci. 2020, 10, 55.

| Dataset Name | Training N | Training Segments | Training % BEAT | Testing N | Testing Segments | Testing % BEAT |
|---|---|---|---|---|---|---|
| NormalSinus+LongTerm | 17 | 240,000 | 7.37 | 8 | 80,000 | 8.47 |
| Arrhythmia | 32 | 230,000 | 6.19 | 16 | 110,000 | 7.07 |
| Baseline FlexComp | 12 | 14,748 | 6.48 | 6 | 7,384 | 6.43 |
| Baseline ComfTech | 12 | 14,741 | 6.62 | 6 | 7,385 | 6.19 |
| Movement ComfTech | 12 | 14,886 | 7.33 | 6 | 7,443 | 6.68 |

| Metric | Training Partition | Testing Partition |
|---|---|---|
| MCC | 0.860 [0.855, 0.866] | 0.797 [0.751, 0.830] |
| +p | 86.7% [85.9, 87.6] | 85.3% [81.3, 90.5] |
| Sensitivity | 87.3% [86.6, 88.2] | 78.3% [71.9, 82.3] |
| F-score | 0.870 [0.864, 0.876] | 0.815 [0.772, 0.853] |
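The bracketed ranges in the table read as interval estimates around each metric. The text available here does not state how they were obtained, so the following is only a sketch of one common option, a percentile bootstrap over segments, and should not be taken as the procedure used in the study. All names, the number of resamples and the example labels are hypothetical.

```python
import random
from math import sqrt


def mcc(y_true, y_pred):
    """Matthews correlation coefficient for binary labels (1 = BEAT)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def bootstrap_ci(y_true, y_pred, metric, n_boot=500, alpha=0.05, seed=0):
    """Percentile bootstrap interval: resample segments with replacement."""
    rng = random.Random(seed)
    n = len(y_true)
    scores = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        scores.append(metric([y_true[i] for i in idx],
                             [y_pred[i] for i in idx]))
    scores.sort()
    lower = scores[int((alpha / 2) * n_boot)]
    upper = scores[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lower, upper


# Hypothetical labels: 1000 segments, 7% BEAT, a few misses and false alarms.
y_true = [1] * 70 + [0] * 930
y_pred = [1] * 60 + [0] * 10 + [1] * 8 + [0] * 922

point = mcc(y_true, y_pred)
lower, upper = bootstrap_ci(y_true, y_pred, mcc)
print(f"MCC = {point:.3f} [{lower:.3f}, {upper:.3f}]")
```

Resampling individual segments treats them as independent; resampling whole recordings or subjects would be a more conservative choice when segments from the same person are correlated.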

| Dataset Name | Experiment 2: Testing Partition | Experiment 3: Training Partition | Experiment 3: Testing Partition |
|---|---|---|---|
| Arrhythmia | 0.690 [0.675, 0.703] | 0.852 [0.844, 0.859] | 0.852 [0.843, 0.861] |
| Baseline FlexComp | 0.706 [0.642, 0.767] | 0.852 [0.864, 0.913] | 0.803 [0.760, 0.847] |
| Baseline ComfTech | 0.861 [0.815, 0.895] | 0.939 [0.917, 0.954] | 0.935 [0.911, 0.960] |
| Movement ComfTech | 0.822 [0.774, 0.865] | 0.874 [0.846, 0.902] | 0.879 [0.830, 0.907] |
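The change in Testing-partition score between Experiment 2 (no retraining) and Experiment 3 (after transfer learning) can be read directly off the table. The short Python sketch below only does that arithmetic on the point estimates copied from the table; the variable names are illustrative, and the intervals are ignored, so it says nothing about statistical significance.

```python
# Testing-partition point estimates copied from the table:
# (Experiment 2, Experiment 3) for each subset.
testing_scores = {
    "Arrhythmia": (0.690, 0.852),
    "Baseline FlexComp": (0.706, 0.803),
    "Baseline ComfTech": (0.861, 0.935),
    "Movement ComfTech": (0.822, 0.879),
}

# Absolute gain after transfer learning, rounded to the table's precision.
gains = {
    name: round(after - before, 3)
    for name, (before, after) in testing_scores.items()
}

for name, gain in sorted(gains.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: +{gain:.3f}")
```

On these point estimates, every subset improves after retraining, with the largest gain on the Arrhythmia subset and the smallest on Movement ComfTech.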

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bizzego, A.; Gabrieli, G.; Neoh, M.J.Y.; Esposito, G. Improving the Efficacy of Deep-Learning Models for Heart Beat Detection on Heterogeneous Datasets. Bioengineering 2021, 8, 193. https://doi.org/10.3390/bioengineering8120193
