1. Introduction

Advances in neural network technology have made many tasks that once required human experts partially automatable. Assisting physicians with making diagnoses is one such task. In countries with aging populations such as Japan, the use of deep learning models is considered a promising way to mitigate healthcare labor shortages and constrained budgets. In fact, some deep learning models have already been used to solve various medical problems, including analyzing CT images [1, 2] and mitigating the limitations of CT imaging under adverse conditions [3].

Convolutional neural networks (CNNs) were the dominant paradigm in the computer vision field throughout the 2010s. Starting with AlexNet [4] in 2012, successive architectures, such as VGG [5], GoogLeNet [6], and ResNet [7], continually updated the state of the art in image classification and recognition. Early attempts at semantic segmentation involved adapting these CNNs to a sliding window paradigm, in which each local patch is classified in turn. Although this approach demonstrated that deep networks can learn pixel-level features, the heavy overlap between neighboring windows caused massive computational redundancy and very low throughput.

To eliminate such inefficiency, a new generation of end-to-end segmentation networks emerged. Modern research typically traces this lineage back to Fully Convolutional Networks (FCNs) [8], followed by the encoder–decoder UNet [9] and its derived models, most notably Attention UNet [10]; TransUNet [11], which integrates Vision Transformer (ViT) [12] layers employing self-attention [13]; and Swin-UNet [14], a pure Transformer UNet developed on the basis of the Swin Transformer [15]. These architectures have been widely adopted for the segmentation of computed tomography (CT) images.

In our experiments, these models were found to perform well when CT scans are optimally contrast-enhanced; however, their performance degrades markedly when the contrast is insufficient or absent. As contrast agents cannot always be administered (e.g., due to patient contraindications or in resource-limited settings), it is necessary to develop models that can reliably localize and segment the aorta under low-contrast conditions.

Although redesigning the architecture’s backbone or adding extra inputs/parameters may alleviate this problem, such changes often increase GPU memory consumption and hinder deployment. A more practical alternative is to enhance existing models with lightweight components, rather than making radical architectural changes.

Fully 3D segmentation networks capture rich volumetric context, but the cubic growth of the feature map’s size incurs prohibitive GPU memory and computational costs. Patch-based 3D inputs reduce memory usage but compromise global spatial coherence. In contrast, purely 2D models are efficient but fail to capture inter-slice context. A pragmatic compromise is the use of 2.5D inputs [16], namely, short stacks of adjacent slices that retain some through-plane information while preserving a near-2D memory footprint.

Among the architectures that leverage 2.5D data, RNN-like networks [17] are particularly attractive. Their sequential design naturally encodes the dependencies between slices while their memory consumption remains comparable to that of standard 2D models, thereby providing volumetric context without sacrificing deployability.

In this study, we propose an ASC module, designed for integration into RNN-like models, that leverages prior anatomical information about the aorta to automatically enhance aortic contrast. The overall network adopts an RNN-like structure to process 2.5D inputs, thereby incorporating spatial context. As each sub-model still operates on 2D inputs and only a single 2D segmenter is used, the GPU memory burden is not increased appreciably relative to the baselines. Meanwhile, the ASC module’s streamlined design enables seamless integration with common medical image segmentation backbones and significantly improves their segmentation accuracy for CT images with insufficient or no contrast.

2. Materials and Methods

Our proposed method is based on the assumption that, as neural networks are inspired by the structure of biological neurons, they should exhibit similar behaviors in terms of recognizing clearly enhanced structures, such as the contrast-enhanced aorta in CT images. Furthermore, incorporating certain prior knowledge can increase the prediction accuracy of neural network models. In our proposed method, such prior knowledge is represented by spatially guided automatic contrast enhancement.

2.1. Network Architecture

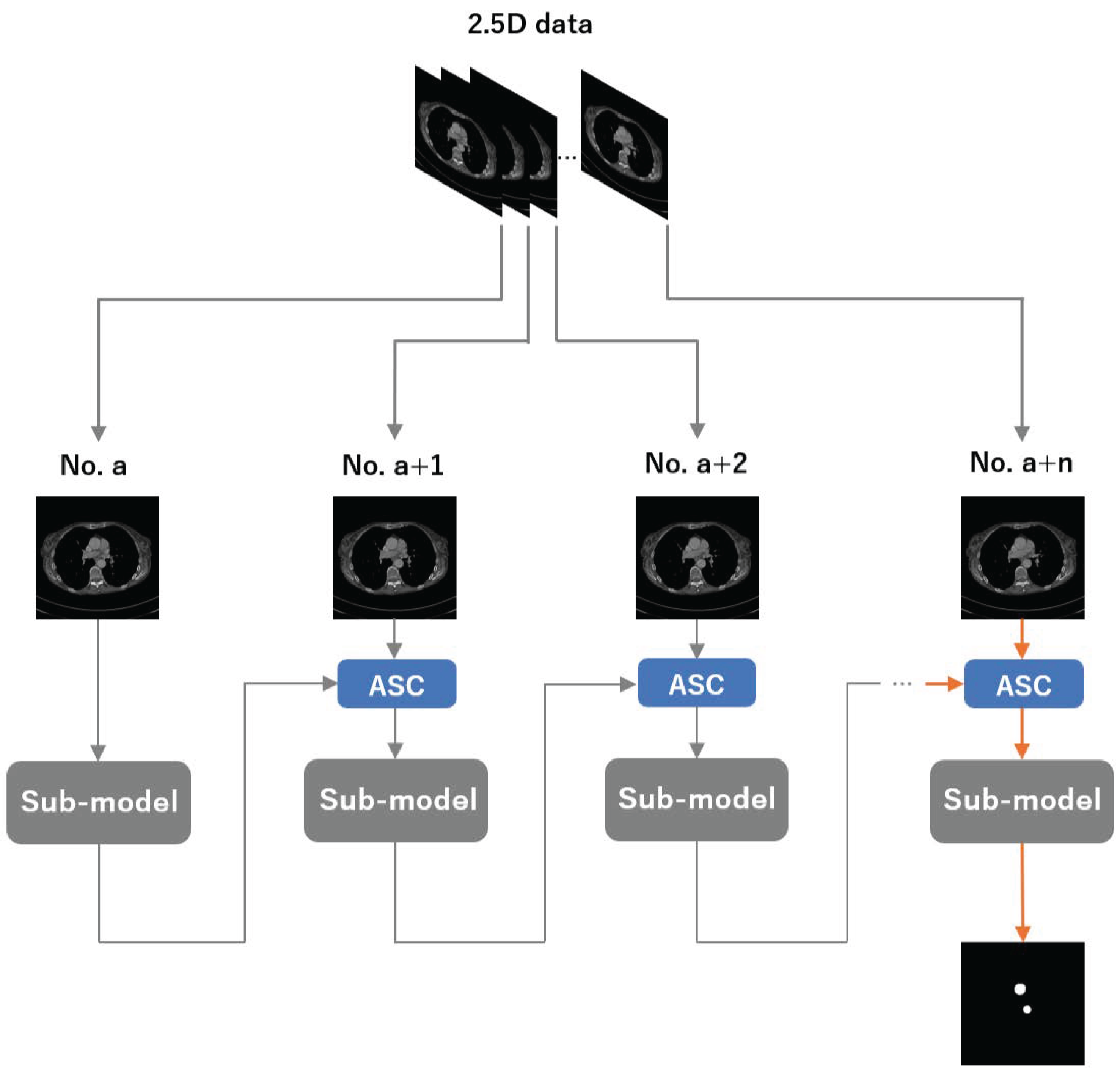

An overview of our method is shown in Figure 1. We group $n$ adjacent CT slices into a 2.5D input, where the per-slice sub-model is a 2D segmenter (instantiated as UNet, Attention UNet, TransUNet, or Swin-UNet). Let $a$ denote the slice index. We first feed the $a$-th slice into the sub-model and obtain its segmentation. We then pass this prediction to the ASC module to construct an enhanced map, combine it with the next, i.e., $(a+1)$-th, slice, and feed the enhanced input back to the same sub-model. Repeating this process over the remaining slices in the block yields the final segmentation for the target slice.
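The recurrent procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `segment_block`, `toy_segmenter`, and `toy_map` are hypothetical names, the "segmenter" is a plain threshold standing in for a trained 2D network, and the enhanced map is applied pixel-wise with a factor of 1.4.

```python
import numpy as np


def segment_block(slices, segmenter, make_enhanced_map):
    """Run one shared 2D segmenter over a block of adjacent slices, in order.

    slices: array of shape (n, H, W).
    segmenter: callable mapping an (H, W) image to an (H, W) prediction.
    make_enhanced_map: callable mapping a prediction to an (H, W) multiplier.
    """
    pred = segmenter(slices[0])  # the first slice is segmented without enhancement
    for current in slices[1:]:
        enhanced_map = make_enhanced_map(pred)     # built from the previous prediction
        pred = segmenter(current * enhanced_map)   # element-wise contrast enhancement
    return pred  # prediction for the last (target) slice


# Toy stand-ins (assumptions): a threshold "network" and a pixel-wise map.
toy_segmenter = lambda img: (img > 0.5).astype(np.float32)
toy_map = lambda pred: np.where(pred > 0.5, 1.4, 1.0).astype(np.float32)

block = np.full((4, 8, 8), 0.2, dtype=np.float32)
block[0, 2:5, 2:5] = 0.9   # well-contrasted aorta in the first slice
block[1:, 2:5, 2:5] = 0.4  # weakly contrasted aorta in the following slices
final = segment_block(block, toy_segmenter, toy_map)
```

In this toy setting the last slice alone falls below the threshold, whereas the recurrent pass recovers the 3 × 3 region because each prediction boosts the next slice before it is segmented. Only one segmenter is held in memory, which is the property the text relies on for its near-2D memory footprint.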

2.2. ASC Module

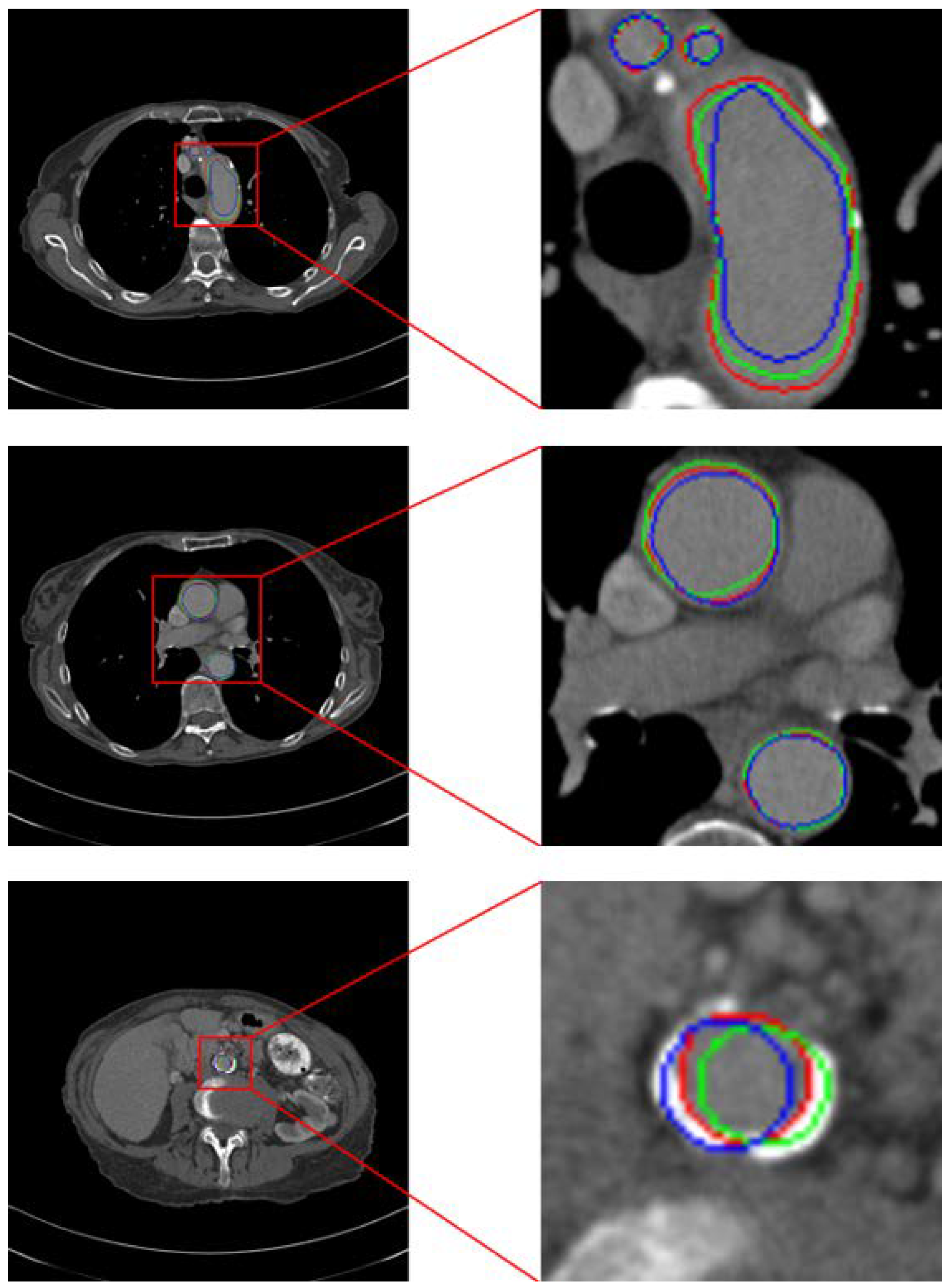

In the ASC module (see Figure 2), the prediction for the previous CT slice is used to construct an enhanced map, which amplifies the aortic intensity in the current slice before it is fed to the sub-model, thus realizing automatic contrast enhancement. Concretely, we apply element-wise multiplication between the current CT image $X_a$ and the enhanced map $E_a$:

$$\tilde{X}_a = X_a \odot E_a$$

where $\tilde{X}_a$ denotes the enhanced input that is passed to the sub-model and $\odot$ indicates pixel-wise multiplication.

Some related works have applied element-wise masking (i.e., multiplying the image by a binary mask) to force the network to focus on regions of interest [17]. However, such hard masking can suppress contextual cues and inter-organ relationships. Moreover, unlike organs with well-defined boundaries such as the lungs, the aorta is difficult to delineate from surrounding tissues under insufficient contrast enhancement. Some pipelines further rely on two separate networks [18, 19] (e.g., one for localization and one for segmentation) or a two-pass scheme at different resolutions, which increases computational cost and model complexity. Alternatively, soft attention methods integrate features from adjacent slices to improve target slice segmentation; however, they typically reduce interpretability and incur higher computational overhead.

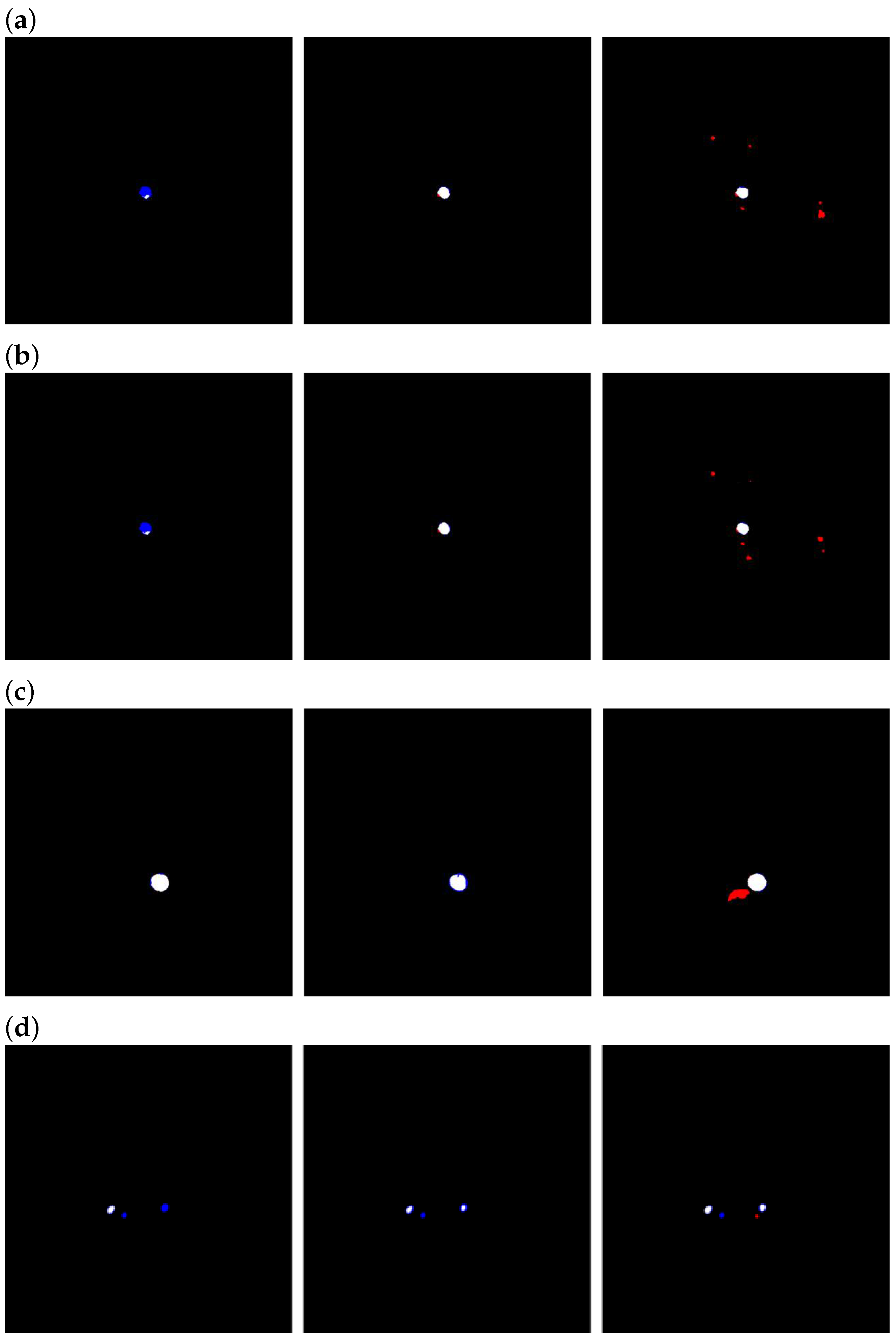

Therefore, we adopt a hard attention-type gating scheme to improve model performance. As shown in Figure 3, the aorta typically exhibits only minor positional shifts and generally similar morphology in adjacent CT slices. Leveraging this property, we generate an enhancement map using the previous slice’s prediction to guide the current slice. Nevertheless, small inter-slice differences remain, and the aorta’s positional offset varies across cases as a function of slice thickness. To increase the likelihood that the enhancement region covers the entire aorta despite these shifts, we expand the region using the strategy illustrated in Figure 4.

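We split the previous prediction $\hat{Y}_{a-1}$ into kernels of size $k \times k$ and, when the average pixel value in one kernel exceeds a minimum value $\tau$, the kernel value is set to the enhancement factor $\mathrm{EF}$ to enhance the pixel values. Otherwise, the kernel value is set to 1.0:

$$E_a(u, v) = \begin{cases} \mathrm{EF}, & \text{if } \dfrac{1}{k^2} \sum_{(i,j) \in K_{u,v}} \hat{Y}_{a-1}(i, j) > \tau \\ 1.0, & \text{otherwise} \end{cases}$$

where $E_a$ is the enhanced map for the current slice and $K_{u,v}$ denotes the kernel that contains pixel $(u, v)$.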

After the element-wise multiplication, the pixel values in the high-confidence region that was predicted to be the aorta are enhanced.
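The kernel-wise construction of the enhanced map can be sketched in NumPy as below. This is an illustrative sketch rather than the authors' code: the function names, the use of non-overlapping tiles, and the threshold value passed in the usage lines are assumptions; only the 16 × 16 kernel size and the enhancement factors 1.1 and 1.4 come from the text.

```python
import numpy as np


def enhanced_map(prev_pred, kernel=16, min_value=0.25, factor=1.4):
    """Return a map equal to `factor` in confident kernels and 1.0 elsewhere.

    `min_value` is a hypothetical threshold on the mean prediction per kernel.
    """
    h, w = prev_pred.shape
    assert h % kernel == 0 and w % kernel == 0, "sketch assumes divisible sizes"
    # Mean of the previous prediction inside each non-overlapping tile.
    tiles = prev_pred.reshape(h // kernel, kernel, w // kernel, kernel)
    tile_mean = tiles.mean(axis=(1, 3))
    tile_value = np.where(tile_mean > min_value, factor, 1.0)
    # Expand each tile value back to full image resolution.
    return np.kron(tile_value, np.ones((kernel, kernel)))


def apply_asc(image, prev_pred, **kwargs):
    """Element-wise product of the current slice and the enhanced map."""
    return image * enhanced_map(prev_pred, **kwargs)


prev_pred = np.zeros((32, 32))
prev_pred[0:16, 0:8] = 1.0          # aorta predicted in half of the top-left tile
out = apply_asc(np.ones((32, 32)), prev_pred, kernel=16, min_value=0.25, factor=1.4)
```

Because the whole tile is enhanced once its mean exceeds the threshold, pixels next to the predicted region (here columns 8 to 15 of the top-left tile) are also amplified. That is one way to obtain the region expansion that tolerates small inter-slice shifts.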

2.3. Dataset

The Aortic Vessel Tree (AVT) CTA dataset [20], which includes ground-truth segmentations, was used in this study. To simulate the two cases in which CT images are optimally contrast-enhanced and poorly or not contrast-enhanced, we split the dataset into two levels based on the CT values at the aorta locations, as detailed in Table 1. The aortic CT values in Level 1 are above 250 HU, while those in Level 2 lie in the range of 100–250 HU.
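A minimal sketch of this level assignment is given below. It assumes that the statistic is the mean HU inside the ground-truth aorta mask, which the text does not specify, and `contrast_level` is a hypothetical helper name.

```python
import numpy as np


def contrast_level(ct_hu, aorta_mask):
    """Assign a contrast level from the mean HU inside the aorta mask.

    Returns 1 for > 250 HU, 2 for 100-250 HU, and None outside both ranges.
    Using the mean (rather than, e.g., the median) is an assumption.
    """
    mean_hu = float(ct_hu[aorta_mask.astype(bool)].mean())
    if mean_hu > 250:
        return 1
    if 100 <= mean_hu <= 250:
        return 2
    return None


mask = np.zeros((4, 4), dtype=np.uint8)
mask[1:3, 1:3] = 1
level_a = contrast_level(np.full((4, 4), 300.0), mask)  # well contrasted
level_b = contrast_level(np.full((4, 4), 180.0), mask)  # poorly contrasted
```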

2.4. Data Form

Shuffling must remain enabled in the DataLoader to randomize the training data, yet the model must still receive adjacent slices in order so that it can learn the relationships between the slices used to generate the enhanced map and the target slice to be segmented. For training, we therefore grouped adjacent slices into a block and fed each block to the model as a single input.

As it is difficult to guarantee the same number of CT slices across all cases, we also ensured that valid data were always supplied within each batch. To allow the model to fully adapt to using the previous slice’s prediction to create an enhanced map for the next slice, and to learn potential inter-slice relationships, the number of slices in each block should not be too small. Suppose that a block contains only two slices; then, only one slice is enhanced by the enhanced map, and this is also the slice used to produce the overall prediction. In this case, the slice used to build the enhanced map is itself a non-enhanced, low-contrast CT image, so the enhancement is likely to be inaccurate. As the baseline 2D models attain higher DSC and IoU values on well-contrasted CT images, the predictions on the enhanced slices within a block should become increasingly accurate. At the same time, the number of opportunities for the model to learn the relationships among the slices in a block of $n$ slices is $n-1$; that is, to make the model more adaptable to predicting a case sequentially during inference, $n$ should be as large as possible. On the other hand, as the training and inference times of our method are roughly proportional to the number of slices in each block, this number should not be set too large.

In light of the above considerations, we argue that selecting an appropriate number of slices represents a necessary trade-off between segmentation accuracy and the computational costs of training and inference.

In our experiments, each block fed into our RNN-like architecture consisted of 4 CT slices, giving the models sufficient opportunity to learn the relationships between slices. In particular, if one block is composed of slices from No. to No. , the next block should be made up of slices from No. to No. . So that the model processes enough slices to enhance the contrast at the aorta’s position accurately, we compared the prediction for the last slice in each block with the corresponding ground-truth mask. The resolution of each CT slice was 512 × 512 pixels.
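Block construction can be sketched as follows. The offset between consecutive blocks is treated here as a free parameter, `stride` (a hypothetical name and an assumption of this sketch), and incomplete trailing blocks are simply dropped so that every block contains valid slices.

```python
import random


def make_blocks(num_slices, block_size=4, stride=1):
    """Return lists of consecutive slice indices; only full blocks are kept."""
    return [
        list(range(start, start + block_size))
        for start in range(0, num_slices - block_size + 1, stride)
    ]


blocks = make_blocks(6, block_size=4, stride=1)
shuffled = blocks[:]
random.shuffle(shuffled)  # blocks are shuffled; slice order inside each block is kept
```

Shuffling whole blocks keeps the within-block slice order intact, so each sample still presents the slices in the sequence needed to build the enhanced map.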

2.5. Settings

To ensure a fair comparison, all models were trained with the same settings in the main experiments: batch size = 4, learning rate = $1 \times 10^{-6}$, and 200 training epochs. We used the Adam optimizer. All slices within each block had a resolution of 512 × 512 pixels.

For TransUNet, the number of Transformer layers, hidden size, MLP ratio, and number of heads were set to 12, 768, 4, and 12, respectively.

For Swin-UNet, as the input resolution was 512 × 512 rather than 224 × 224, we increased the window size from 7 to 16. As only the aorta and background were considered in this task, the number of output classes in the final segmentation head was set to 2.

For the ASC module, the enhancement kernel size was set to 16 × 16. The enhancement factor was 1.1 for Level 1 and 1.4 for Level 2.

2.6. Evaluation

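In the experiments, we used the Dice Similarity Coefficient (DSC) as the primary evaluation metric, while the Intersection-over-Union (IoU) is reported as a complementary metric. Let $P$ denote the binary prediction mask and $G$ the corresponding ground-truth mask. Define $TP = |P \cap G|$, $FP = |P \setminus G|$, and $FN = |G \setminus P|$. Then, the above-mentioned metrics are computed using

$$\mathrm{DSC} = \frac{2\,TP}{2\,TP + FP + FN}, \qquad \mathrm{IoU} = \frac{TP}{TP + FP + FN}.$$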
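Both metrics follow directly from the true positive, false positive, and false negative pixel counts. The sketch below uses the standard definitions; the function name `dice_iou` and the small `eps` guard against empty masks are additions of this sketch, not part of the paper.

```python
import numpy as np


def dice_iou(pred, gt, eps=1e-8):
    """Compute (DSC, IoU) for two binary masks of the same shape."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    tp = np.logical_and(pred, gt).sum()    # predicted aorta, truly aorta
    fp = np.logical_and(pred, ~gt).sum()   # predicted aorta, truly background
    fn = np.logical_and(~pred, gt).sum()   # missed aorta pixels
    dice = 2 * tp / (2 * tp + fp + fn + eps)
    iou = tp / (tp + fp + fn + eps)
    return float(dice), float(iou)


pred = np.zeros((4, 4), dtype=np.uint8)
gt = np.zeros((4, 4), dtype=np.uint8)
pred[0, 0:4] = 1        # 4 predicted pixels
gt[0, 2:4] = 1          # 2 of them overlap the ground truth
gt[1, 0:2] = 1          # 2 ground-truth pixels are missed
dice, iou = dice_iou(pred, gt)
```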

4. Discussion

We propose a method that effectively improves segmentation accuracy on low-contrast or non-contrast-enhanced CT scans. As shown in Table 2a, we also observed, somewhat unexpectedly, positive effects on well-contrasted scans, suggesting the existence of an optimal intensity range for training deep aorta-segmentation models. In this study, we used a fixed enhancement factor (EF), set to 1.1 for all Level 1 data and 1.4 for all Level 2 data, selected via validation. Using a fixed scalar avoids introducing additional networks, thereby preventing extra GPU memory overhead (see Table 4), and preserves the overall time complexity (see Table 5). Nevertheless, even within Level 1 data, aortic intensities vary across patients and along different segments of the aorta. Because a multiplicative EF amplifies such variability, the post-enhancement aortic intensity will not always fall within the presumed “optimal” range. As a future direction, we plan to adapt the ASC module and the segmentation network so that the enhancement factor becomes adaptive or learnable, enabling finer-grained enhancement of CT data while controlling GPU memory growth.

Table 7 summarizes the Dice Similarity Coefficient (DSC) and Intersection over Union (IoU) values for the UNet baseline and the UNet augmented with the ASC module, evaluated on the held-out test sets R9 and R17. Across both test sets, the ASC module yields consistent, and often substantial, improvements in segmentation performance on low/non-contrast CT scans. We acknowledge the limitation of the relatively small test sets; this study primarily introduces a methodological framework. As part of subsequent clinical validation, we will expand to multi-center datasets and conduct formal statistical significance testing to further substantiate these findings.

As a trade-off for higher accuracy, our method substantially reduced throughput relative to the baseline (see Table 2a,b). Nevertheless, even when processing a 1000-slice CT volume with the slowest configuration, that is, when using TransUNet as the sub-model, the inference time was only about 2 minutes. We consider this latency acceptable for frontline practice, particularly in clinical workflows where segmentation accuracy typically takes precedence over marginal speed.

Regarding the effect of the number of slices in the ablation study, we observed that using four slices per block yielded worse metrics than using three slices per block. From the standpoint of limiting the training and inference times, this finding is encouraging: it suggests that the proposed ASC module and training strategy can deliver noticeable gains at a relatively small time cost, rather than requiring a larger block to chase marginal long-tailed benefits. We attribute this phenomenon to error accumulation. As the segmentations of the CT slices used to generate enhanced maps are not perfectly accurate, the anticipated improvement, namely, that the enhanced map would become increasingly accurate as the number of slices per block grows, did not materialize [23].

Regarding the enhancement kernel size (

), we interpreted the observations as reflecting a balance between cross-slice alignment and background amplification. When the voxel depth was small,

yielded the highest IoU and the second-best Dice score; however, when the voxel depth was medium or large,

led to a substantial drop in both Dice and IoU values. As shown in Figure 6, our dataset has an uneven distribution of voxel depths, with noticeably fewer medium/low-density scans than high-density scans. This may indicate that

is more vulnerable to slight misalignment between the enhancement map and the target slice.

With , the performance improved gradually as voxel depth decreased. The peak accuracy was lower than that achieved with or ; we suspect that a slice spacing of 0.625 mm is still too large for to realize its peak performance. In addition, as a smaller enhancement kernel tends to confine mistakes within the aortic lumen rather than spuriously enhancing surrounding tissues, false positive regions remain limited and false negatives do not expand markedly as voxel depth increases. As a result, does not suffer sharp degradation when the voxel depth grows.

In contrast, while

attained the highest Dice scores across all conditions, its IoU values on medium- and high-density data were inferior to those of

. As illustrated in Figure 5, a larger kernel offers more complete coverage of the aortic region yet simultaneously amplifies nearby tissues, increasing the false positive area. As per Equation (6), the IoU penalizes false positives more stringently than Dice, which explains the divergence between these metrics. From the perspective of readability (smaller false positive area), while still seeking high overall performance, we therefore adopted the 16 × 16 kernel as the main experimental setting.
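A short worked example illustrates why false positives lower the IoU more than the Dice score. The pixel counts are hypothetical and chosen only for illustration. With $TP = 90$, $FN = 10$, and no false positives,

$$\mathrm{DSC} = \frac{2 \cdot 90}{2 \cdot 90 + 0 + 10} \approx 0.947, \qquad \mathrm{IoU} = \frac{90}{90 + 0 + 10} = 0.900.$$

Adding $FP = 30$ spuriously enhanced pixels while keeping $TP$ and $FN$ fixed gives

$$\mathrm{DSC} = \frac{180}{180 + 30 + 10} \approx 0.818, \qquad \mathrm{IoU} = \frac{90}{90 + 30 + 10} \approx 0.692.$$

The Dice score falls by about 0.13, whereas the IoU falls by about 0.21. This is consistent with the identity $\mathrm{IoU} = \mathrm{DSC} / (2 - \mathrm{DSC})$, which follows from the standard definitions and makes the IoU the more sensitive of the two at any score below 1.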

To clarify the relationship between enhancement kernel size and voxel depth more definitively, it is necessary to strive for a more balanced distribution of voxel depths in the training data without sacrificing overall sample size, while acknowledging the constraints and scarcity of medical imaging data.

Nevertheless, as shown in Table 7, our method performs strongly not only on the high-density dataset R9 (voxel depth = 0.625 mm), where more training data are available, but also yields substantial gains on the medium-density dataset R17 (voxel depth = 2.5 mm), where the amount of available training data is smaller. We posit that although performance correlates with the enhancement kernel size and the volume of training data at a given voxel depth, the proposed ASC module can still confer benefits even when training data at similar densities are scarce. This underscores the potential of the ASC module and its associated training strategy.