Artificial Intelligence Architectures and Machine Learning Applications in Contemporary Spine Care

Abstract

1. Introduction

2. Methodology

2.1. Literature Search Strategy

2.2. Inclusion and Exclusion Criteria

2.3. Data Extraction and Synthesis

2.4. Risk of Bias and Validation Considerations

3. Current Applications of AI in Imaging and Radiological Analysis

3.1. Automated Detection and Classification of Spinal Pathologies

3.2. Advanced Morphometric Analysis and Quantitative Assessment

3.3. AI’s Ability to Enhance Workflow

4. Surgical Planning and Robotic-Assisted Interventions

4.1. Advanced Preoperative Planning and Simulation

4.2. Robotic-Assisted Surgical Execution

4.3. Integration with Advanced Navigation and Guidance Systems

4.4. Functional Outcome Prediction and Treatment Optimization

4.5. Cost-Effectiveness Analysis

5. Genomic Applications and Precision Medicine

5.1. Genome-Wide Association Studies in Spine Surgery Risk Assessment

5.2. Pharmacogenomics and Personalized Pain Management

5.3. Multi-Omics Analysis

6. Clinical Decision Support and Documentation Systems

6.1. Ambient Clinical Intelligence and Documentation Automation

6.2. Clinical Decision Support Systems

7. Current Challenges, Limitations, and Implementation Barriers

7.1. Technical and Algorithmic Limitations

7.2. Regulatory and Validation Challenges

7.3. Clinical Integration and Workflow Challenges

7.4. Data Quality, Generalization, and Statistical Stability

7.5. Bias, Fairness, and Subgroup Performance

7.6. External Validation, Robustness, and Failure-Mode Testing

7.7. The Gap Between Promising Research and Clinical Reality

8. Discussion

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Conflicts of Interest

Appendix A. Technical Background on CNNs

Appendix A.1. Rationale and Overview

Appendix A.2. Architecture, Layers, and Feature Hierarchy

Appendix A.3. Training, Tasks, and Outputs

References

- Voter, A.F.; Larson, M.E.; Garrett, J.W.; Yu, J.J. Diagnostic Accuracy and Failure Mode Analysis of a Deep-Learning Algorithm for the Detection of Cervical Spine Fractures. AJNR. Am. J. Neuroradiol. 2021, 42, 1550–1556.

- Page, J.H.; Moser, F.G.; Maya, M.M.; Prasad, R.; Pressman, B.D. Opportunistic CT Screening-Machine Learning Algorithm Identifies Majority of Vertebral Compression Fractures: A Cohort Study. JBMR Plus 2023, 7, e10778.

- Nigru, A.S.; Benini, S.; Bonetti, M.; Bragaglio, G.; Frigerio, M.; Maffezzoni, F.; Leonardi, R. External validation of SpineNetV2 on a comprehensive set of radiological features for grading lumbosacral disc pathologies. N. Am. Spine Soc. J. 2024, 20, 100564.

- Elhaddad, M.; Hamam, S. AI-Driven Clinical Decision Support Systems: An Ongoing Pursuit of Potential. Cureus 2024, 16, e57728.

- O’Connor, T.E.; O’Hehir, M.M.; Khan, A.; Mao, J.Z.; Levy, L.C.; Mullin, J.P.; Pollina, J. Mazor X Stealth Robotic Technology: A Technical Note. World Neurosurg. 2021, 145, 435–442.

- Sigala, R.E.; Lagou, V.; Shmeliov, A.; Atito, S.; Kouchaki, S.; Awais, M.; Prokopenko, I.; Mahdi, A.; Demirkan, A. Machine Learning to Advance Human Genome-Wide Association Studies. Genes 2023, 15, 34.

- U.S. Food and Drug Administration. 510(k) Summary: HealthVCF (K192901); U.S. Food and Drug Administration: Silver Spring, MD, USA, 2020. Available online: https://www.accessdata.fda.gov/cdrh_docs/pdf19/K192901.pdf (accessed on 20 August 2025).

- Monchka, B.A.; Schousboe, J.T.; Davidson, M.J.; Kimelman, D.; Hans, D.; Raina, P.; Leslie, W.D. Development of a manufacturer-independent convolutional neural network for the automated identification of vertebral compression fractures in vertebral fracture assessment images using active learning. Bone 2022, 161, 116427.

- Gstoettner, M.; Sekyra, K.; Walochnik, N.; Winter, P.; Wachter, R.; Bach, C.M. Inter- and intraobserver reliability assessment of the Cobb angle: Manual versus digital measurement tools. Eur. Spine J. 2007, 16, 1587–1592.

- Jamaludin, A.; Kadir, T.; Zisserman, A. SpineNet: Automated classification and evidence visualization in spinal MRIs. Med. Image Anal. 2017, 41, 63–73.

- Grob, A.; Loibl, M.; Jamaludin, A.; Winklhofer, S.; Fairbank, J.C.T.; Fekete, T.; Porchet, F.; Mannion, A.F. External validation of the deep learning system “SpineNet” for grading radiological features of degeneration on MRIs of the lumbar spine. Eur. Spine J. 2022, 31, 2137–2148.

- McSweeney, T.P.; Tiulpin, A.; Saarakkala, S.; Niinimäki, J.; Windsor, R.; Jamaludin, A.; Kadir, T.; Karppinen, J.; Määttä, J. External Validation of SpineNet, an Open-Source Deep Learning Model for Grading Lumbar Disk Degeneration MRI Features, Using the Northern Finland Birth Cohort 1966. Spine 2023, 48, 484–491.

- Small, J.E.; Osler, P.; Paul, A.B.; Kunst, M. CT Cervical Spine Fracture Detection Using a Convolutional Neural Network. AJNR. Am. J. Neuroradiol. 2021, 42, 1341–1347.

- van den Wittenboer, G.J.; van der Kolk, B.Y.M.; Nijholt, I.M.; Langius-Wiffen, E.; van Dijk, R.A.; van Hasselt, B.A.A.M.; Podlogar, M.; van den Brink, W.A.; Bouma, G.J.; Schep, N.W.L.; et al. Diagnostic accuracy of an artificial intelligence algorithm versus radiologists for fracture detection on cervical spine CT. Eur. Radiol. 2024, 34, 5041–5048.

- Kumar, R.; Gosain, A.; Saintyl, J.J.; Zheng, A.; Chima, K.; Cassagnol, R. Bridging gaps in orthopedic residency admissions: Embracing diversity beyond research metrics. Can. Med. Educ. J. 2025, 16, 68–70.

- Smith, A.; Picheca, L.; Mahood, Q. Robotic Surgical Systems for Orthopedics: Emerging Health Technologies; Canadian Agency for Drugs and Technologies in Health: Ottawa, ON, Canada, 2022. Available online: https://www.ncbi.nlm.nih.gov/books/NBK602663/ (accessed on 15 August 2025).

- Bhimreddy, M.; Hersh, A.M.; Jiang, K.; Weber-Levine, C.; Davidar, A.D.; Menta, A.K.; Judy, B.F.; Lubelski, D.; Bydon, A.; Weingart, J.; et al. Accuracy of Pedicle Screw Placement Using the ExcelsiusGPS Robotic Navigation Platform: An Analysis of 728 Screws. Int. J. Spine Surg. 2024, 18, 712–720.

- Yeh, Y.C.; Weng, C.H.; Huang, Y.J.; Fu, C.J.; Tsai, T.T.; Yeh, C.Y. Deep learning approach for automatic landmark detection and alignment analysis in whole-spine lateral radiographs. Sci. Rep. 2021, 11, 7618.

- Ammarullah, M.I. Integrating finite element analysis in total hip arthroplasty for childhood hip disorders: Enhancing precision and outcomes. World J. Orthop. 2025, 16, 98871.

- Clark, P.; Kim, J.; Aphinyanaphongs, Y. Marketing and US Food and Drug Administration Clearance of Artificial Intelligence and Machine Learning Enabled Software in and as Medical Devices: A Systematic Review. JAMA Netw. Open 2023, 6, e2321792.

- Salo, V.; Määttä, J.; Sliz, E.; FinnGen; Reimann, E.; Mägi, R.; Estonian Biobank Research Team; Reis, K.; Elhanas, A.G.; Reigo, A.; et al. Genome-wide meta-analysis conducted in three large biobanks expands the genetic landscape of lumbar disc herniations. Nat. Commun. 2024, 15, 9424.

- Haberle, T.; Cleveland, C.; Snow, G.L.; Barber, C.; Stookey, N.; Thornock, C.; Younger, L.; Mullahkhel, B.; Ize-Ludlow, D. The impact of nuance DAX ambient listening AI documentation: A cohort study. J. Am. Med. Inform. Assoc. 2024, 31, 975–979.

- Shah, S.J.; Devon-Sand, A.; Ma, S.P.; Jeong, Y.; Crowell, T.; Smith, M.; Liang, A.S.; Delahaie, C.; Hsia, C.; Shanafelt, T.; et al. Ambient artificial intelligence scribes: Physician burnout and perspectives on usability and documentation burden. J. Am. Med. Inform. Assoc. 2025, 32, 375–380.

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Into Imaging 2018, 9, 611–629.

- Lin, W.-C.; Tu, Y.-C.; Lin, H.-Y.; Tseng, M.-H. A Comparison of Deep Learning Techniques for Pose Recognition in Up-and-Go Pole Walking Exercises Using Skeleton Images and Feature Data. Electronics 2025, 14, 1075.

- Su, Z.; Adam, A.; Nasrudin, M.F.; Prabuwono, A.S. Proposal-Free Fully Convolutional Network: Object Detection Based on a Box Map. Sensors 2024, 24, 3529.

- Li, S.; Du, J.; Huang, Y.; Hao, D.; Zhao, Z.; Chang, Z.; Zhang, X.; Gao, S.; He, B. Comparison of the S8 navigation system and the TINAVI orthopaedic robot in the treatment of upper cervical instability. Sci. Rep. 2024, 14, 6487.

- Kirchner, G.J.; Kim, A.H.; Kwart, A.H.; Weddle, J.B.; Bible, J.E. Reported Events Associated With Spine Robots: An Analysis of the Food and Drug Administration’s Manufacturer and User Facility Device Experience Database. Glob. Spine J. 2023, 13, 855–860.

- Murata, K.; Endo, K.; Aihara, T.; Suzuki, H.; Sawaji, Y.; Matsuoka, Y.; Nishimura, H.; Takamatsu, T.; Konishi, T.; Maekawa, A.; et al. Artificial intelligence for the detection of vertebral fractures on plain spinal radiography. Sci. Rep. 2020, 10, 20031.

- Derkatch, S.; Kirby, C.; Kimelman, D.; Jozani, M.J.; Davidson, J.M.; Leslie, W.D. Identification of Vertebral Fractures by Convolutional Neural Networks to Predict Nonvertebral and Hip Fractures: A Registry-based Cohort Study of Dual X-ray Absorptiometry. Radiology 2019, 293, 405–411.

- Singh, M.; Tripathi, U.; Patel, K.K.; Mohit, K.; Pathak, S. An efficient deep learning based approach for automated identification of cervical vertebrae fracture as a clinical support aid. Sci. Rep. 2025, 15, 25651.

- Golla, A.K.; Lorenz, C.; Buerger, C.; Lossau, T.; Klinder, T.; Mutze, S.; Arndt, H.; Spohn, F.; Mittmann, M.; Goelz, L. Cervical spine fracture detection in computed tomography using convolutional neural networks. Phys. Med. Biol. 2023, 68, 115010.

- Li, K.Y.; Ye, H.B.; Zhang, Y.L.; Huang, J.W.; Li, H.L.; Tian, N.F. Enhancing Diagnostic Accuracy of Fresh Vertebral Compression Fractures With Deep Learning Models. Spine 2025, 50, E330–E335.

- Kuo, R.Y.L.; Harrison, C.; Curran, T.A.; Jones, B.; Freethy, A.; Cussons, D.; Stewart, M.; Collins, G.S.; Furniss, D. Artificial Intelligence in Fracture Detection: A Systematic Review and Meta-Analysis. Radiology 2022, 304, 50–62.

- Chen, H.Y.; Hsu, B.W.; Yin, Y.K.; Lin, F.H.; Yang, T.H.; Yang, R.S.; Lee, C.K.; Tseng, V.S. Application of deep learning algorithm to detect and visualize vertebral fractures on plain frontal radiographs. PLoS ONE 2021, 16, e0245992.

- Namireddy, S.R.; Gill, S.S.; Peerbhai, A.; Kamath, A.G.; Ramsay, D.S.C.; Ponniah, H.S.; Salih, A.; Jankovic, D.; Kalasauskas, D.; Neuhoff, J.; et al. Artificial intelligence in risk prediction and diagnosis of vertebral fractures. Sci. Rep. 2024, 14, 30560.

- Hallinan, J.T.P.D.; Zhu, L.; Yang, K.; Makmur, A.; Algazwi, D.A.R.; Thian, Y.L.; Lau, S.; Choo, Y.S.; Eide, S.E.; Yap, Q.V.; et al. Deep Learning Model for Automated Detection and Classification of Central Canal, Lateral Recess, and Neural Foraminal Stenosis at Lumbar Spine MRI. Radiology 2021, 300, 130–138.

- Van Timmeren, J.E.; Cester, D.; Tanadini-Lang, S.; Alkadhi, H.; Baessler, B. Radiomics in medical imaging—“how-to” guide and critical reflection. Insights Into Imaging 2020, 11, 91.

- Cao, J.; Li, Q.; Zhang, H.; Wu, Y.; Wang, X.; Ding, S.; Chen, S.; Xu, S.; Duan, G.; Qiu, D.; et al. Radiomics model based on MRI to differentiate spinal multiple myeloma from metastases: A two-center study. J. Bone Oncol. 2024, 45, 100599.

- Yang, J.; Zhang, S.B.; Yang, S.; Ge, X.Y.; Ren, C.X.; Wang, S.J. CT-based radiomics predicts adjacent vertebral fracture after percutaneous vertebral augmentation. Eur. Spine J. 2025, 34, 528–536.

- Chen, Y.; Qin, S.; Zhao, W.; Wang, Q.; Liu, K.; Xin, P.; Yuan, H.; Zhuang, H.; Lang, N. MRI feature-based radiomics models to predict treatment outcome after stereotactic body radiotherapy for spinal metastases. Insights Into Imaging 2023, 14, 169.

- Bendtsen, M.G.; Hitz, M.F. Opportunistic Identification of Vertebral Compression Fractures on CT Scans of the Chest and Abdomen, Using an AI Algorithm, in a Real-Life Setting. Calcif. Tissue Int. 2024, 114, 468–479.

- Windsor, R.; Jamaludin, A.; Kadir, T.; Zisserman, A. Automated detection, labelling and radiological grading of clinical spinal MRIs. Sci. Rep. 2024, 14, 14993.

- Galbusera, F.; Bassani, T.; Panico, M.; Sconfienza, L.M.; Cina, A. A fresh look at spinal alignment and deformities: Automated analysis of a large database of 9832 biplanar radiographs. Front. Bioeng. Biotechnol. 2022, 10, 863054.

- Li, H.; Qian, C.; Yan, W.; Fu, D.; Zheng, Y.; Zhang, Z.; Meng, J.; Wang, D. Use of Artificial Intelligence in Cobb Angle Measurement for Scoliosis: Retrospective Reliability and Accuracy Study of a Mobile App. J. Med. Internet Res. 2024, 26, e50631.

- Chui, C.E.; He, Z.; Lam, T.P.; Mak, K.K.; Ng, H.R.; Fung, C.E.; Chan, M.S.; Law, S.W.; Lee, Y.W.; Hung, L.A.; et al. Deep Learning-Based Prediction Model for the Cobb Angle in Adolescent Idiopathic Scoliosis Patients. Diagnostics 2024, 14, 1263.

- Deng, Y.; Wang, C.; Hui, Y.; Li, Q.; Li, J.; Luo, S.; Sun, M.; Quan, Q.; Yang, S.; Hao, Y. CTSpine1K: A large-scale dataset for spinal vertebrae segmentation in computed tomography. arXiv 2021, arXiv:2105.14711.

- Lee, W.S.; Ahn, S.M.; Chung, J.W.; Kim, K.O.; Kwon, K.A.; Kim, Y.; Sym, S.; Shin, D.; Park, I.; Lee, U.; et al. Assessing Concordance With Watson for Oncology, a Cognitive Computing Decision Support System for Colon Cancer Treatment in Korea. JCO Clin. Cancer Inform. 2018, 2, 1–8.

- Cina, A.; Galbusera, F. Advancing spine care through AI and machine learning: Overview and applications. EFORT Open Rev. 2024, 9, 422–433.

- Leis, A.; Fradera, M.; Peña-Gómez, C.; Aguilera, P.; Hernandez, G.; Parralejo, A.; Ramírez-Anguita, J.M.; Mayer, M.A. Real World Data and Real World Evidence Using TriNetX: The TauliMar Clinical Research Network. Stud. Health Technol. Inform. 2025, 327, 759–760.

- Chouffani El Fassi, S.; Abdullah, A.; Fang, Y.; Natarajan, S.; Masroor, A.B.; Kayali, N.; Prakash, S.; Henderson, G.E. Not all AI health tools with regulatory authorization are clinically validated. Nat. Med. 2024, 30, 2718–2720.

- Mienye, I.D.; Swart, T.G.; Obaido, G.; Jordan, M.; Ilono, P. Deep Convolutional Neural Networks in Medical Image Analysis: A Review. Information 2025, 16, 195.

- Baur, D.; Kroboth, K.; Heyde, C.E.; Voelker, A. Convolutional Neural Networks in Spinal Magnetic Resonance Imaging: A Systematic Review. World Neurosurg. 2022, 166, 60–70.

- Neha, F.; Bhati, D.; Shukla, D.K.; Dalvi, S.M.; Mantzou, N.; Shubbar, S. U-net in medical image segmentation: A review of its applications across modalities. arXiv 2024, arXiv:2412.02242.

- Watanabe, A.; Ketabi, S.; Namdar, K.; Khalvati, F. Improving disease classification performance and explainability of deep learning models in radiology with heatmap generators. Front. Radiol. 2022, 2, 991683.

- Zhang, J.; Liu, F.; Xu, J.; Zhao, Q.; Huang, C.; Yu, Y.; Yuan, H. Automated detection and classification of acute vertebral body fractures using a convolutional neural network on computed tomography. Front. Endocrinol. 2023, 14, 1132725.

- Liawrungrueang, W.; Han, I.; Cholamjiak, W.; Sarasombath, P.; Riew, K.D. Artificial Intelligence Detection of Cervical Spine Fractures Using Convolutional Neural Network Models. Neurospine 2024, 21, 833–841.

- Monchka, B.A.; Kimelman, D.; Lix, L.M.; Leslie, W.D. Feasibility of a generalized convolutional neural network for automated identification of vertebral compression fractures: The Manitoba Bone Mineral Density Registry. Bone 2021, 150, 116017.

- Inoue, T.; Maki, S.; Furuya, T.; Mikami, Y.; Mizutani, M.; Takada, I.; Okimatsu, S.; Yunde, A.; Miura, M.; Shiratani, Y.; et al. Automated fracture screening using an object detection algorithm on whole-body trauma computed tomography. Sci. Rep. 2022, 12, 16549.

- Husarek, J.; Hess, S.; Razaeian, S.; Ruder, T.D.; Sehmisch, S.; Müller, M.; Liodakis, E. Artificial intelligence in commercial fracture detection products: A systematic review and meta-analysis of diagnostic test accuracy. Sci. Rep. 2024, 14, 23053.

- Paik, S.; Park, J.; Hong, J.Y.; Han, S.W. Deep learning application of vertebral compression fracture detection using mask R-CNN. Sci. Rep. 2024, 14, 16308.

- Pereira, R.F.B.; Helito, P.V.P.; Leão, R.V.; Rodrigues, M.B.; Correa, M.F.P.; Rodrigues, F.V. Accuracy of an artificial intelligence algorithm for detecting moderate-to-severe vertebral compression fractures on abdominal and thoracic computed tomography scans. Radiol. Bras. 2024, 57, e20230102.

- Ruitenbeek, H.C.; Oei, E.H.; Schmahl, B.L.; Bos, E.M.; Verdonschot, R.J.; Visser, J.J. Towards clinical implementation of an AI-algorithm for detection of cervical spine fractures on computed tomography. Eur. J. Radiol. 2024, 173, 111375.

- Zhang, W.; Chen, Z.; Su, Z.; Wang, Z.; Hai, J.; Huang, C.; Wang, Y.; Yan, B.; Lu, H. Deep learning-based detection and classification of lumbar disc herniation on magnetic resonance images. JOR Spine 2023, 6, e1276.

- Wang, A.; Wang, T.; Liu, X.; Fan, N.; Yuan, S.; Du, P.; Zang, L. Automated diagnosis and grading of lumbar intervertebral disc degeneration based on a modified YOLO framework. Front. Bioeng. Biotechnol. 2025, 13, 1526478.

- Tumko, V.; Kim, J.; Uspenskaia, N.; Honig, S.; Abel, F.; Lebl, D.R.; Hotalen, I.; Kolisnyk, S.; Kochnev, M.; Rusakov, A.; et al. A neural network model for detection and classification of lumbar spinal stenosis on MRI. Eur. Spine J. 2024, 33, 941–948.

- Shahid, A.; Kim, J.; Byon, S.S.; Hong, S.; Lee, I.; Lee, B.D. An end-to-end pipeline for automated scoliosis diagnosis with standardized clinical reporting using SNOMED CT. Sci. Rep. 2025, 15, 17274.

- Xie, J.; Yang, Y.; Jiang, Z.; Zhang, K.; Zhang, X.; Lin, Y.; Shen, Y.; Jia, X.; Liu, H.; Yang, S.; et al. MRI radiomics-based decision support tool for a personalized classification of cervical disc degeneration: A two-center study. Front. Physiol. 2024, 14, 1281506.

- Qin, C.; Dai, L.P.; Zhang, Y.L.; Wu, R.C.; Du, K.L.; Zhang, C.Q.; Liu, W.G. The value of MRI radiomics in distinguishing different types of spinal infections. Comput. Methods Programs Biomed. 2025, 264, 108719.

- Jamaludin, A.; Kadir, T.; Zisserman, A.; McCall, I.; Williams, F.M.K.; Lang, H.; Buchanan, E.; Urban, J.P.G.; Fairbank, J.C.T. ISSLS PRIZE in Clinical Science 2023: Comparison of degenerative MRI features of the intervertebral disc between those with and without chronic low back pain. An exploratory study of two large female populations using automated annotation. Eur. Spine J. 2023, 32, 1504–1516.

- Han, Z.; Wei, B.; Mercado, A.; Leung, S.; Li, S. Spine-GAN: Semantic segmentation of multiple spinal structures. Med. Image Anal. 2018, 50, 23–35.

- Jiang, W.; Mei, F.; Xie, Q. Novel automated spinal ultrasound segmentation approach for scoliosis visualization. Front. Physiol. 2022, 13, 1051808.

- Galbusera, F.; Niemeyer, F.; Wilke, H.J.; Bassani, T.; Casaroli, G.; Anania, C.; Costa, F.; Brayda-Bruno, M.; Sconfienza, L.M. Fully automated radiological analysis of spinal disorders and deformities: A deep learning approach. Eur. Spine J. 2019, 28, 951–960.

- Taphoorn, M.J.; Claassens, L.; Aaronson, N.K.; Coens, C.; Mauer, M.; Osoba, D.; Stupp, R.; Mirimanoff, R.O.; van den Bent, M.J.; Bottomley, A.; et al. An international validation study of the EORTC brain cancer module (EORTC QLQ-BN20) for assessing health-related quality of life and symptoms in brain cancer patients. Eur. J. Cancer 2010, 46, 1033–1040.

- Hawkins, J.R.; Olson, M.P.; Harouni, A.; Qin, M.M.; Hess, C.P.; Majumdar, S.; Crane, J.C. Implementation and prospective real-time evaluation of a generalized system for in-clinic deployment and validation of machine learning models in radiology. PLOS Digit. Health 2023, 2, e0000227.

- Tang, H.; Hong, M.; Yu, L.; Song, Y.; Cao, M.; Xiang, L.; Zhou, Y.; Suo, S. Deep learning reconstruction for lumbar spine MRI acceleration: A prospective study. Eur. Radiol. Exp. 2024, 8, 67.

- Estler, A.; Hauser, T.K.; Brunnée, M.; Zerweck, L.; Richter, V.; Knoppik, J.; Örgel, A.; Bürkle, E.; Adib, S.D.; Hengel, H.; et al. Deep learning-accelerated image reconstruction in back pain-MRI imaging: Reduction of acquisition time and improvement of image quality. La Radiol. Medica 2024, 129, 478–487.

- Li, J.; Xu, M.; Jiang, B.; Dong, Q.; Xia, Y.; Zhou, T.; Lin, X.; Ma, Y.; Jiang, S.; Zhang, Z.; et al. Diagnostic interchangeability of deep-learning based Synth-STIR images generated from T1 and T2 weighted spine images. Eur. Radiol. 2025, 1–11.

- Kaniewska, M.; Zecca, F.; Obermüller, C.; Ensle, F.; Deininger-Czermak, E.; Lohezic, M.; Guggenberger, R. Deep learning reconstruction of zero-echo time sequences to improve visualization of osseous structures and associated pathologies in MRI of cervical spine. Insights Into Imaging 2025, 16, 29.

- Park, S.; Kang, J.H.; Moon, S.G. Diagnostic performance of lumbar spine CT using deep learning denoising to evaluate disc herniation and spinal stenosis. Eur. Radiol. 2025, 1–10.

- Greffier, J.; Hamard, A.; Pereira, F.; Barrau, C.; Pasquier, H.; Beregi, J.P.; Frandon, J. Image quality and dose reduction opportunity of deep learning image reconstruction algorithm for CT: A phantom study. Eur. Radiol. 2020, 30, 3951–3959.

- Fischer, G.; Schlosser, T.P.C.; Dietrich, T.J.; Kim, O.C.-H.; Zdravkovic, V.; Martens, B.; Fehlings, M.G.; Jans, L.; Vereecke, E.; Stienen, M.N.; et al. Radiological evaluation and clinical implications of deep learning- and MRI-based synthetic CT for the assessment of cervical spine injuries. Eur. Radiol. 2025, 1–13.

- Bousson, V.; Benoist, N.; Guetat, P.; Attané, G.; Salvat, C.; Perronne, L. Application of artificial intelligence to imaging interpretations in the musculoskeletal area: Where are we? Where are we going? Jt. Bone Spine 2023, 90, 105493.

- Bharadwaj, U.U.; Chin, C.T.; Majumdar, S. Practical applications of artificial intelligence in spine imaging: A review. Radiol. Clin. N. Am. 2024, 62, 355–370.

- American College of Radiology. Cord compression—AI in Your Practice: AI Use Cases; Creative Commons 4.0. Available online: https://www.acr.org/Data-Science-and-Informatics/AI-in-Your-Practice/AI-Use-Cases/Use-Cases/Cord-Compression (accessed on 20 August 2025).

- Ramadanov, N.; John, P.; Hable, R.; Schreyer, A.G.; Shabo, S.; Prill, R.; Salzmann, M. Artificial intelligence-guided distal radius fracture detection on plain radiographs in comparison with human raters. J. Orthop. Surg. Res. 2025, 20, 468.

- Harper, J.P.; Lee, G.R.; Pan, I.; Nguyen, X.V.; Quails, N.; Prevedello, L.M. External Validation of a Winning AI-Algorithm from the RSNA 2022 Cervical Spine Fracture Detection Challenge. AJNR. Am. J. Neuroradiol. 2025, 46, 1852–1858.

- U.S. Food and Drug Administration. K241211: CoLumbo—510(k) Premarket Notification Decision Summary. Available online: https://www.accessdata.fda.gov/cdrh_docs/pdf24/K241211.pdf (accessed on 15 August 2024).

- U.S. Food and Drug Administration. K241108: RemedyLogic AI MRI Lumbar Spine Reader—510(k) Decision Summary. Available online: https://www.accessdata.fda.gov/cdrh_docs/pdf24/K241108.pdf (accessed on 30 October 2024).

- Gupta, A.; Hussain, M.; Nikhileshwar, K.; Rastogi, A.; Rangarajan, K. Integrating Large language models into radiology workflow: Impact of generating personalized report templates from summary. Eur. J. Radiol. 2025, 189, 112198.

- Gertz, R.J.; Beste, N.C.; Dratsch, T.; Lennartz, S.; Bremm, J.; Iuga, A.I.; Bunck, A.C.; Laukamp, K.R.; Schönfeld, M.; Kottlors, J. From dictation to diagnosis: Enhancing radiology reporting with integrated speech recognition in multimodal large language models. Eur. Radiol. 2025, 1–9.

- Busch, F.; Hoffmann, L.; Dos Santos, D.P.; Makowski, M.R.; Saba, L.; Prucker, P.; Hadamitzky, M.; Navab, N.; Kather, J.N.; Truhn, D.; et al. Large language models for structured reporting in radiology: Past, present, and future. Eur. Radiol. 2025, 35, 2589–2602.

- Huang, J.; Wittbrodt, M.T.; Teague, C.N.; Karl, E.; Galal, G.; Thompson, M.; Chapa, A.; Chiu, M.L.; Herynk, B.; Linchangco, R.; et al. Efficiency and Quality of Generative AI–Assisted Radiograph Reporting. JAMA Netw. Open 2025, 8, e2513921.

- Voinea, Ș.V.; Mămuleanu, M.; Teică, R.V.; Florescu, L.M.; Selișteanu, D.; Gheonea, I.A. GPT-driven radiology report generation with fine-tuned llama 3. Bioengineering 2024, 11, 1043.

- Jorg, T.; Halfmann, M.C.; Stoehr, F.; Arnhold, G.; Theobald, A.; Mildenberger, P.; Müller, L. A novel reporting workflow for automated integration of artificial intelligence results into structured radiology reports. Insights Into Imaging 2024, 15, 80.

- Wang, J.; Miao, J.; Zhan, Y.; Duan, Y.; Wang, Y.; Hao, D.; Wang, B. Spine Surgical Robotics: Current Status and Recent Clinical Applications. Neurospine 2023, 20, 1256–1271.

- Anderson, P.A.; Kadri, A.; Hare, K.J.; Binkley, N. Preoperative bone health assessment and optimization in spine surgery. Neurosurg. Focus 2020, 49, E2.

- Jia, S.H.; Weng, Y.Z.; Wang, K.; Qi, H.; Yang, Y.; Ma, C.; Lu, W.W.; Wu, H. Performance evaluation of an AI-based preoperative planning software application for automatic selection of pedicle screws based on CT images. Front. Surg. 2023, 10, 1247527.

- Scherer, M.; Kausch, L.; Bajwa, A.; Neumann, J.-O.; Ishak, B.; Naser, P.; Vollmuth, P.; Kiening, K.; Maier-Hein, K.; Unterberg, A. Automatic Planning Tools for Lumbar Pedicle Screws: Comparison of DL vs atlas-based planning. J. Clin. Med. 2023, 12, 2646.

- Chazen, L.; Lim, E.; Li, Q.; Sneag, D.B.; Tan, E.T. Deep-learning reconstructed lumbar spine 3D MRI for surgical planning equivalency to CT. Eur. Spine J. 2023, 33, 4144–4154.

- Ao, Y.; Esfandiari, H.; Carrillo, F.; Laux, C.J.; As, Y.; Li, R.; Van Assche, K.; Davoodi, A.; Cavalcanti, N.A.; Farshad, M.; et al. SafeRPlan: Safe Deep Reinforcement Learning for Intraoperative Planning of Pedicle Screw Placement. Comput. Biol. Med. 2024, 99, 103345.

- Johnson & Johnson MedTech. DePuy Synthes Launches Its First Active Spine Robotics and Navigation Platform; Johnson & Johnson Newsroom: Weehawken, NJ, USA, 2024.

- Quiceno, E.; Soliman, M.A.R.; Khan, A.; Mullin, J.P.; Pollina, J. How Do Robotics and Navigation Facilitate Minimally Invasive Spine Surgery? A Case Series and Narrative Review. Neurosurgery 2025, 96, S84–S93.

- Khalsa, S.S.S.; Mummaneni, P.V.; Chou, D.; Park, P. Present and Future Spinal Robotic and Enabling Technologies. Oper. Neurosurg. 2021, 21 (Suppl. S1), S48–S56.

- Haida, D.M.; Mohr, P.; Won, S.Y.; Möhlig, T.; Holl, M.; Enk, T.; Hanschen, M.; Huber-Wagner, S. Hybrid-3D robotic suite in spine and trauma surgery—Experiences in 210 patients. J. Orthop. Surg. Res. 2024, 19, 565.

- Judy, B.F.; Pennington, Z.; Botros, D.; Tsehay, Y.; Kopparapu, S.; Liu, A.; Theodore, N.; Zakaria, H.M. Spine Image Guidance and Robotics: Exposure, Education, Training, and the Learning Curve. Int. J. Spine Surg. 2021, 15, S28–S37.

- Ellis, C.A.; Gu, P.; Sendi, M.S.E.; Huddleston, D.; Mahmoudi, B. A Cloud-based Framework for Implementing Portable Machine Learning Pipelines for Neural Data Analysis. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2019, 2019, 4466–4469.

- Wood, J.S.; Purzycki, A.; Thompson, J.; David, L.R.; Argenta, L.C. The Use of Brainlab Navigation in Le Fort III Osteotomy. J. Craniofacial Surg. 2015, 26, 616–619.

- Asada, T.; Subramanian, T.; Simon, C.Z.; Singh, N.; Hirase, T.; Araghi, K.; Lu, A.Z.; Mai, E.; Kim, Y.E.; Tuma, O.; et al. Level-specific comparison of 3D navigated and robotic arm-guided screw placement: An accuracy assessment of 1210 pedicle screws in lumbar surgery. Spine J. 2024, 24, 1872–1880.

- Lefranc, M.; Peltier, J. Evaluation of the ROSA™ Spine robot for minimally invasive surgical procedures. Expert Rev. Med. Devices 2016, 13, 899–906.

- Pojskić, M.; Bopp, M.; Nimsky, C.; Carl, B.; Saß, B. Initial Intraoperative Experience with Robotic-Assisted Pedicle Screw Placement with Cirq® Robotic Alignment: An Evaluation of the First 70 Screws. J. Clin. Med. 2021, 10, 5725.

- Kumar, R.; Waisberg, E.; Ong, J.; Lee, A.G. The potential power of Neuralink—How brain-machine interfaces can revolutionize medicine. Expert Rev. Med. Devices 2025, 22, 521–524. [Google Scholar] [CrossRef] [PubMed]

- Liebmann, F.; von Atzigen, M.; Stütz, D.; Wolf, J.; Zingg, L.; Suter, D.; Cavalcanti, N.A.; Leoty, L.; Esfandiari, H.; Snedeker, J.G.; et al. Automatic registration with continuous pose updates for marker-less surgical navigation in spine surgery. Npj Digit. Med. 2023, 91, 103027. [Google Scholar] [CrossRef]

- Ansari, T.S.; Maik, V.; Naheem, M.; Ram, K.; Lakshmanan, M.; Sivaprakasam, M. A Hybrid-Layered System for Image-Guided Navigation and Robot-Assisted Spine Surgery. arXiv 2024, arXiv:2406.04644. [Google Scholar]

- Paladugu, P.S.; Ong, J.; Nelson, N.; Kamran, S.A.; Waisberg, E.; Zaman, N.; Kumar, R.; Dias, R.D.; Lee, A.G. Generative Adversarial Networks in Medicine: Important Considerations for this Emerging Innovation in Artificial Intelligence. Ann. Biomed. Eng. 2023, 51, 2130–2142. [Google Scholar] [CrossRef]

- Lee, R.; Ong, J.; Waisberg, E.; Ben-David, G.; Jaiswal, S.; Arogundade, E.; Lee, A.G. Applications of Artificial Intelligence in Neuro-Ophthalmology: Neuro-Ophthalmic Imaging Patterns and Implementation Challenges. Neuro-Ophthalmol. 2025, 49, 273–284.

- Alzubaidi, L.; Al-Dulaimi, K.; Salhi, A.; Alammar, Z.; Fadhel, M.A.; Albahri, A.S.; Alamoodi, A.H.; Albahri, O.S.; Hasan, A.F.; Bai, J.; et al. Comprehensive review of deep learning in orthopaedics: Applications, challenges, trustworthiness, and fusion. Artif. Intell. Med. 2024, 155, 102935.

- Baydili, İ.; Tasci, B.; Tasci, G. Artificial Intelligence in Psychiatry: A Review of Biological and Behavioral Data Analyses. Diagnostics 2025, 15, 434.

- Minerva, F.; Giubilini, A. Is AI the Future of Mental Healthcare? Topoi 2023, 42, 809–817.

- Bouguettaya, A.; Stuart, E.M.; Aboujaoude, E. Racial bias in AI-mediated psychiatric diagnosis and treatment: A qualitative comparison of four large language models. npj Digit. Med. 2025, 8, 332.

- Miller, D.J.; Lastella, M.; Scanlan, A.T.; Bellenger, C.; Halson, S.L.; Roach, G.D.; Sargent, C. A validation study of the WHOOP strap against polysomnography to assess sleep. J. Sports Sci. 2020, 38, 2631–2636.

- Wikimedia Commons. What-Is-nlp; Wikimedia Commons: San Francisco, CA, USA, 2024; Available online: https://commons.wikimedia.org/w/index.php?title=File:What-is-nlp.png&oldid=839312883 (accessed on 15 August 2025).

- Grosicki, G.J.; Fielding, F.; Kim, J.; Chapman, C.J.; Olaru, M.; Hippel, V.; Holmes, K.E. Wearing WHOOP More Frequently Is Associated with Better Biometrics and Healthier Sleep and Activity Patterns. Sensors 2025, 25, 2437.

- Kumar, R.; Waisberg, E.; Ong, J.; Paladugu, P.; Amiri, D.; Nahouraii, R.; Jagadeesan, R.; Tavakkoli, A. Integration of Multi-Modal Imaging and Machine Learning Visualization Techniques to Optimize Structural Neuroimaging. Preprints 2024, 2024, 2024111128.

- National Academies of Sciences, Engineering, and Medicine; Division of Behavioral and Social Sciences and Education; Board on Behavioral, Cognitive, and Sensory Sciences; Committee on Future Directions for Applying Behavioral Economics to Policy. Beatty, A., Moffitt, R., Buttenheim, A., Eds.; Behavioral Economics: Policy Impact and Future Directions; National Academies Press (US): Washington, DC, USA, 2023. Available online: https://www.ncbi.nlm.nih.gov/books/NBK593518/ (accessed on 6 September 2025).

- Fayers, P.; Bottomley, A.; EORTC Quality of Life Group. Quality of life research within the EORTC-the EORTC QLQ-C30. Eur. J. Cancer 2002, 38 (Suppl. S4), S125–S133.

- Bushnell, D.M.; Atkinson, T.M.; McCarrier, K.P.; Liepa, A.M.; DeBusk, K.P.; Coons, S.J. Patient-Reported Outcome Consortium’s NSCLC Working Group Non-Small Cell Lung Cancer Symptom Assessment Questionnaire: Psychometric Performance and Regulatory Qualification of a Novel Patient-Reported Symptom Measure. Curr. Ther. Res. 2021, 95, 100642.

- Kumar, R.; Sporn, K.; Khanna, A.; Paladugu, P.; Gowda, C.; Ngo, A.; Jagadeesan, R.; Zaman, N.; Tavakkoli, A. Integrating Radiogenomics and Machine Learning in Musculoskeletal Oncology Care. Diagnostics 2025, 15, 1377.

- Lee, Y.S.; Cho, D.C.; Kim, K.T. Navigation-Guided/Robot-Assisted Spinal Surgery: A Review Article with cost-effectiveness emphasis. Neurospine 2024, 21, 8.

- Tkachenko, A.A.; Changalidis, A.I.; Maksiutenko, E.M.; Nasykhova, Y.A.; Barbitoff, Y.A.; Glotov, A.S. Replication of Known and Identification of Novel Associations in Biobank-Scale Datasets: A Survey Using UK Biobank and FinnGen. Genes 2024, 15, 931.

- Kurki, M.I.; Karjalainen, J.; Palta, P.; Sipilä, T.P.; Kristiansson, K.; Donner, K.M.; Reeve, M.P.; Laivuori, H.; Aavikko, M.; Kaunisto, M.A.; et al. FinnGen provides genetic insights from a well-phenotyped isolated population. Nature 2023, 613, 508–518.

- Theodore, N.; Ahmed, A.K.; Fulton, T.; Mousses, S.; Yoo, C.; Goodwin, C.R.; Danielson, J.; Sciubba, D.M.; Giers, M.B.; Kalani, M.Y.S. Genetic Predisposition to Symptomatic Lumbar Disk Herniation in Pediatric and Young Adult Patients. Spine 2019, 44, E640–E649.

- Yang, J.; Xu, W.; Chen, D.; Liu, Y.; Hu, X. Evidence from Mendelian randomization analysis combined with meta-analysis for the causal validation of the relationship between 91 inflammatory factors and lumbar disc herniation. Medicine 2024, 103, e40323.

- Scott, H.; Panin, V.M. The role of protein N-glycosylation in neural transmission. Glycobiology 2014, 24, 407–417.

- He, M.; Zhou, X.; Wang, X. Glycosylation: Mechanisms, biological functions and clinical implications. Signal Transduct. Target. Ther. 2024, 9, 194.

- Ayoub, A.; McHugh, J.; Hayward, J.; Rafi, I.; Qureshi, N. Polygenic risk scores: Improving the prediction of future disease or added complexity? Br. J. Gen. Pract. 2022, 72, 396–398.

- Singh, O.; Verma, M.; Dahiya, N.; Senapati, S.; Kakkar, R.; Kalra, S. Integrating Polygenic Risk Scores (PRS) for Personalized Diabetes Care: Advancing Clinical Practice with Tailored Pharmacological Approaches. Diabetes Ther. 2025, 16, 149–168.

- Sporn, K.; Kumar, R.; Paladugu, P.; Ong, J.; Sekhar, T.; Vaja, S.; Hage, T.; Waisberg, E.; Gowda, C.; Jagadeesan, R.; et al. Artificial Intelligence in Orthopedic Medical Education: A Comprehensive Review of Emerging Technologies and Their Applications. Int. Med. Educ. 2025, 4, 14.

- Krupkova, O.; Cambria, E.; Besse, L.; Besse, A.; Bowles, R.; Wuertz-Kozak, K. The potential of CRISPR/Cas9 genome editing for the study and treatment of intervertebral disc pathologies. JOR Spine 2018, 1, e1003.

- Dean, L.; Kane, M. Codeine Therapy and CYP2D6 Genotype. In Medical Genetics Summaries; Pratt, V.M., Scott, S.A., Pirmohamed, M., Eds.; National Center for Biotechnology Information (US): Bethesda, MD, USA, 2025. Available online: https://www.ncbi.nlm.nih.gov/books/NBK100662/ (accessed on 15 August 2025).

- Li, B.; Sangkuhl, K.; Keat, K.; Whaley, R.M.; Woon, M.; Verma, S.; Dudek, S.; Tuteja, S.; Verma, A.; Whirl-Carrillo, M.; et al. How to Run the Pharmacogenomics Clinical Annotation Tool (PharmCAT). Clin. Pharmacol. Ther. 2023, 113, 1036–1047.

- Tippenhauer, K.; Philips, M.; Largiadèr, C.; Sariyar, M. Using the PharmCAT tool for Pharmacogenetic clinical decision support. Brief. Bioinform. 2024, 25, bbad452.

- Sadee, W.; Wang, D.; Hartmann, K.; Toland, A.E. Pharmacogenomics: Driving Personalized Medicine. Pharmacol. Rev. 2023, 75, 789–814.

- Guarneri, M.; Scola, L.; Giarratana, R.M.; Bova, M.; Carollo, C.; Vaccarino, L.; Calandra, L.; Lio, D.; Balistreri, C.R.; Cottone, S. MIF rs755622 and IL6 rs1800795 Are Implied in Genetic Susceptibility to End-Stage Renal Disease (ESRD). Genes 2022, 13, 226.

- Górczyńska-Kosiorz, S.; Tabor, E.; Niemiec, P.; Pluskiewicz, W.; Gumprecht, J. Associations between the VDR Gene rs731236 (TaqI) Polymorphism and Bone Mineral Density in Postmenopausal Women from the RAC-OST-POL. Biomedicines 2024, 12, 917.

- De La Vega, R.E.; van Griensven, M.; Zhang, W.; Coenen, M.J.; Nagelli, C.V.; Panos, J.A.; Peniche Silva, C.J.; Geiger, J.; Plank, C.; Evans, C.H.; et al. Efficient healing of large osseous segmental defects using optimized chemically modified messenger RNA encoding BMP-2. Sci. Adv. 2022, 8, eabl6242.

- Robinson, C.; Dalal, S.; Chitneni, A.; Patil, A.; Berger, A.A.; Mahmood, S.; Orhurhu, V.; Kaye, A.D.; Hasoon, J. A Look at Commonly Utilized Serotonin Noradrenaline Reuptake Inhibitors (SNRIs) in Chronic Pain. Health Psychol. Res. 2022, 10, 32309.

- Wiffen, P.J.; Derry, S.; Bell, R.F.; Rice, A.S.; Tölle, T.R.; Phillips, T.; Moore, R.A. Gabapentin for chronic neuropathic pain in adults. Cochrane Database Syst. Rev. 2017, 6, CD007938.

- Mukherjee, A.; Abraham, S.; Singh, A.; Balaji, S.; Mukunthan, K.S. From Data to Cure: A Comprehensive Exploration of Multi-omics Data Analysis for Targeted Therapies. Mol. Biotechnol. 2025, 67, 1269–1289.

- Assi, I.Z.; Landzberg, M.J.; Becker, K.C.; Renaud, D.; Reyes, F.B.; Leone, D.M.; Benson, M.; Michel, M.; Gerszten, R.E.; Opotowsky, A.R. Correlation between Olink and SomaScan proteomics platforms in adults with a Fontan circulation. Int. J. Cardiol. Congenit. Heart Dis. 2025, 20, 100584.

- Yang, L.; Liu, B.; Dong, X.; Wu, J.; Sun, C.; Xi, L.; Cheng, R.; Wu, B.; Wang, H.; Tong, S.; et al. Clinical severity prediction in children with osteogenesis imperfecta caused by COL1A1/2 defects. Osteoporos. Int. 2022, 33, 1373–1384.

- Kohn, M.S.; Sun, J.; Knoop, S.; Shabo, A.; Carmeli, B.; Sow, D.; Syed-Mahmood, T.; Rapp, W. IBM’s Health Analytics and Clinical Decision Support. Yearb. Med. Inform. 2014, 9, 154–162.

- Morsbach, F.; Zhang, Y.H.; Martin, L.; Lindqvist, C.; Brismar, T. Body composition evaluation with computed tomography: Contrast media and slice thickness cause methodological errors. Nutrition 2019, 59, 50–55.

- Riem, L.; DuCharme, O.; Cousins, M.; Feng, X.; Kenney, A.; Morris, J.; Tapscott, S.J.; Tawil, R.; Statland, J.; Shaw, D.; et al. AI driven analysis of MRI to measure health and disease progression in FSHD. Sci. Rep. 2024, 14, 15462.

- Moraes da Silva, W.; Cazella, S.C.; Rech, R.S. Deep learning algorithms to assist in imaging diagnosis in individuals with disc herniation or spondylolisthesis: A scoping review. Int. J. Med. Inform. 2025, 201, 105933.

- Murto, N.; Lund, T.; Kautiainen, H.; Luoma, K.; Kerttula, L. Comparison of lumbar disc degeneration grading between deep learning model SpineNet and radiologist: A longitudinal study with a 14-year follow-up. Eur. Spine J. 2025, 1–7.

- van Wulfften Palthe, O.D.R.; Tromp, I.; Ferreira, A.; Fiore, A.; Bramer, J.A.M.; van Dijk, N.C.; DeLaney, T.F.; Schwab, J.H.; Hornicek, F.J. Sacral chordoma: A clinical review of 101 cases with 30-year experience in a single institution. Spine J. 2019, 19, 869–879.

- O’Hanlon, C.E.; Kranz, A.M.; DeYoreo, M.; Mahmud, A.; Damberg, C.L.; Timbie, J. Access, Quality, And Financial Performance Of Rural Hospitals Following Health System Affiliation. Health Aff. 2019, 38, 2095–2104.

- Vaughan, L.; Edwards, N. The problems of smaller, rural and remote hospitals: Separating facts from fiction. Future Healthc. J. 2020, 7, 38–45.

- Ramedani, S.; George, D.R.; Leslie, D.L.; Kraschnewski, J. The bystander effect: Impact of rural hospital closures on the operations and financial well-being of surrounding healthcare institutions. J. Hosp. Med. 2022, 17, 901–906.

- Kumar, R.; Waisberg, E.; Ong, J.; Paladugu, P.; Sporn, K.; Chima, K.; Amiri, D.; Zaman, N.; Tavakkoli, A. Precision health monitoring in spaceflight with integration of lower body negative pressure and advanced large language model artificial intelligence. Life Sci. Space Res. 2025, 47, 57–60.

- Agency for Healthcare Research and Quality. 2022 National Healthcare Quality and Disparities Report; Agency for Healthcare Research and Quality (US): Rockville, MD, USA, 2022. Available online: https://www.ncbi.nlm.nih.gov/books/NBK587178/ (accessed on 15 August 2025).

- Gillespie, E.F.; Santos, P.M.G.; Curry, M.; Salz, T.; Chakraborty, N.; Caron, M.; Fuchs, H.E.; Vicioso, N.L.; Mathis, N.; Kumar, R.; et al. Implementation Strategies to Promote Short-Course Radiation for Bone Metastases. JAMA Netw. Open 2024, 7, e2411717.

- Allen, J.; Shepherd, C.; Heaton, T.; Behrens, N.; Dorius, A.; Grimes, J. Differences in Medicare payment and practice characteristics for orthopedic surgery subspecialties. Proc. Bayl. Univ. Med. Cent. 2024, 38, 175–178.

- Lee, C.; Britto, S.; Diwan, K. Evaluating the Impact of Artificial Intelligence (AI) on Clinical Documentation Efficiency and Accuracy Across Clinical Settings: A Scoping Review. Cureus 2024, 16, e73994.

- Seh, A.H.; Zarour, M.; Alenezi, M.; Sarkar, A.K.; Agrawal, A.; Kumar, R.; Khan, R.A. Healthcare Data Breaches: Insights and Implications. Healthcare 2020, 8, 133.

- Iglesias, L.L.; Bellón, P.S.; Del Barrio, A.P.; Fernández-Miranda, P.M.; González, D.R.; Vega, J.A.; González Mandly, A.A.; Blanco, J.A.P. A primer on deep learning and convolutional neural networks for clinicians. Insights Imaging 2021, 12, 117.

- Fernández-Miranda, P.M.; Fraguela, E.M.; de Linera-Alperi, M.Á.; Cobo, M.; Del Barrio, A.P.; González, D.R.; Vega, J.A.; Iglesias, L.L. A retrospective study of deep learning generalization across two centers and multiple models of X-ray devices using COVID-19 chest-X rays. Sci. Rep. 2024, 14, 14657.

- Roccetti, M.; Delnevo, G.; Casini, L.; Cappiello, G. Is bigger always better? A controversial journey to the center of machine learning design, with uses and misuses of big data for predicting water meter failures. J. Big Data 2019, 6, 70.

- Kim, T.H.; Srinivasulu, A.; Chinthaginjala, R.; Zhao, X.; Obaidur Rab, S.; Tera, S.P. Improving CNN predictive accuracy in COVID-19 health analytics. Sci. Rep. 2025, 15, 29864.

- Varoquaux, G.; Cheplygina, V. Machine learning for medical imaging: Methodological failures and recommendations for the future. npj Digit. Med. 2022, 5, 48.

- Yang, Y.; Zhang, H.; Gichoya, J.W.; Katabi, D.; Ghassemi, M. The limits of fair medical imaging AI in real-world generalization. Nat. Med. 2024, 30, 2838–2848.

- Collins, G.S.; Moons, K.G.M.; Dhiman, P.; Riley, R.D.; Beam, A.L.; Van Calster, B.; Ghassemi, M.; Liu, X.; Reitsma, J.B.; van Smeden, M.; et al. TRIPOD+AI statement: Updated guidance for reporting clinical prediction models that use regression or machine learning methods. BMJ Clin. Res. Ed. 2024, 385, e078378.

- Yu, A.C.; Mohajer, B.; Eng, J. External Validation of Deep Learning Algorithms for Radiologic Diagnosis: A Systematic Review. Radiol. Artif. Intell. 2022, 4, e210064.

| AI/ML Application Area | Key Tools/Systems | Validation Status | Clinical Benefit | Limitations |

|---|---|---|---|---|

| Fracture Detection and Classification | Zebra HealthJOINT [24,25], Aidoc Cervical Spine AI [1,26,27,28] | FDA Approved; Real-world validation | Reduced under-detection; Improved triage accuracy | Limited chronic fracture detection; Sensitivity varies |

| Spinal Segmentation and Grading | SpineNetV2 [3,29,30,31], Multimodal Segmentation Platforms | External validation across modalities | Automated grading of stenosis, disk degeneration | Performance may vary across demographics |

| Morphometric Analysis | CobbAngle Pro Version 1 [32,33,34], Yeh et al. Ensemble Model [35] | Validated vs. clinical experts | Reduced measurement error; Field-applicable | Dependence on image quality |

| Ultrasound-based Imaging | UGBNet [36], Attention-Unet [7] | Peer-reviewed feasibility studies | Segmentation of low-contrast images | Noise sensitivity in complex anatomy |

| Muscle Quality Quantification | CTSpine1K [37,38], TrinetX [38] | Open-source annotated datasets | Cross-sectional muscle area and fat infiltration | Need for standardized protocols |

| Preoperative Planning | Mazor X [3,5,39,40], ExcelsiusGPS [5,39,41] | Clinical integration with robotic systems | Optimized screw trajectory, virtual planning | Variable accuracy in deformed anatomy |

| Robotic Execution | VELYS [10,20], ROSA Spine [5,42], Mazor Robotics [10] | FDA-cleared, commercial use | Real-time trajectory correction; Error reduction | Cost and infrastructure requirements |

| Navigation and Guidance | Brainlab Curve [43], Medtronic StealthStation [10] | Integrated AI + imaging validation | Adaptive navigation; Improved pedicle accuracy | Setup complexity; Intraoperative variability |

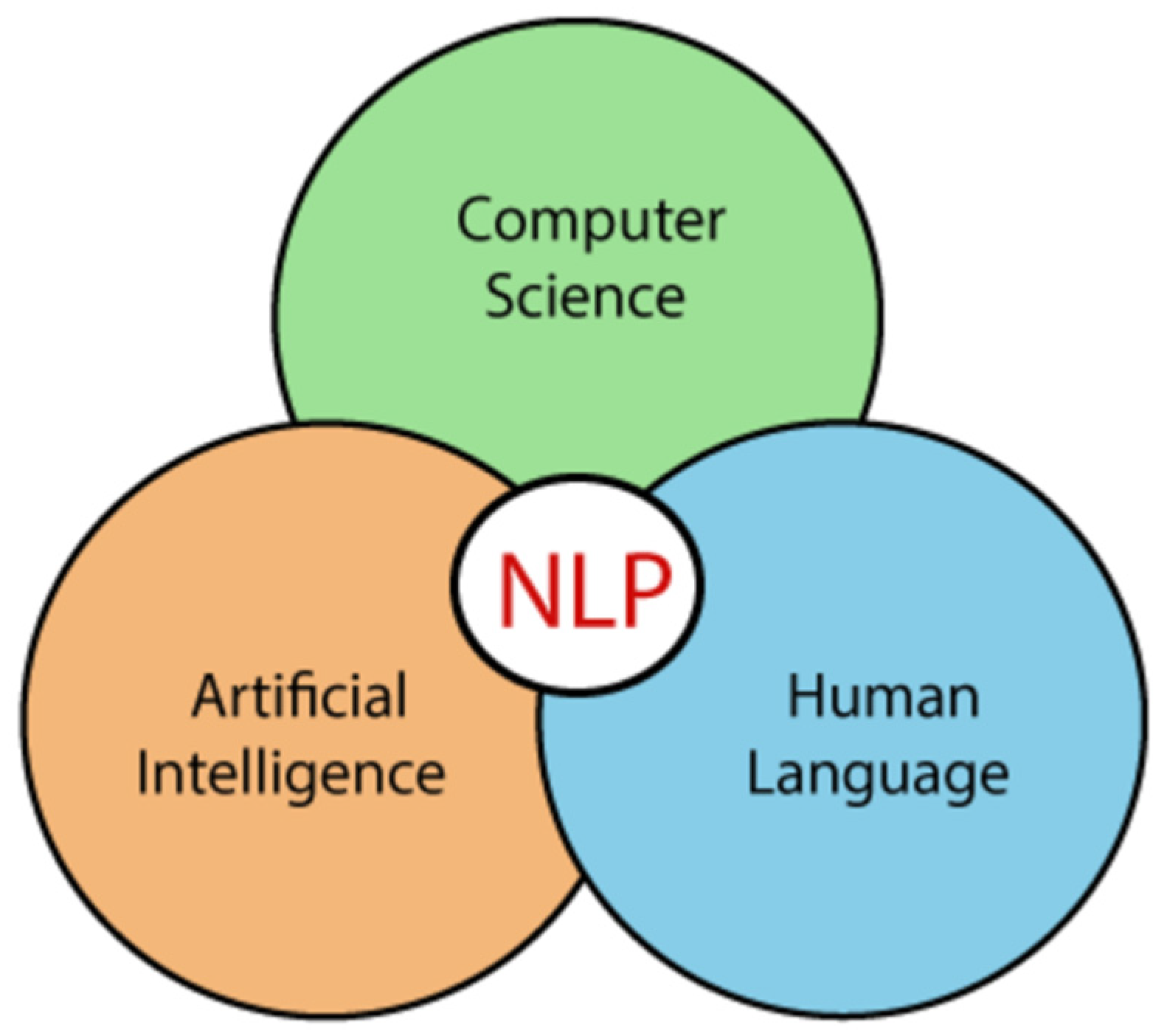

| Outcome Prediction | GNNs, Transformers, Sentiment NLP [44,45,46,47] | Ongoing studies; Cross-disciplinary use | Predict functional recovery, mental health monitoring | Integration of heterogeneous data types |

| Cost-Effectiveness and QOL Modeling | Dynamic Simulations, Complexity Economics | Emerging models; Not yet widespread | Forecasting long-term impact; Behavioral insights | Lack of spine-specific QOL instruments |

| Category | Barrier | Technical Detail | Clinical/Operational Consequence |
|---|---|---|---|
| Imaging and Model Generalizability | Cross-Vendor Imaging Variability | Heterogeneity in scanner vendor output (e.g., GE vs. Siemens vs. Philips) causes domain shift in AI models; non-uniform slice thickness and field of view (FOV) distort the features extracted by CNN layers. | Decreased classification precision for compression fractures; high false-negative rates in poorly standardized imaging environments. |
| Hardware-Induced Artifacts | Metallic Implant Interference | Titanium-induced susceptibility artifacts on T1- and T2-weighted MRI sequences degrade the segmentation accuracy of deep neural networks such as SpineNet and V-Net variants. | Unreliable predictions in post-fusion patients; potential underestimation of central canal and foraminal compromise. |
| Pathological Heterogeneity | Low Representation of Rare Tumors | Model sensitivity drops for rare presentations (e.g., sacral chordomas, extradural myxopapillary ependymomas) because of weak class priors and scarce edge-case training data. | False negatives in tumor surveillance; unreliable outputs for oncological follow-up assessments. |
| Training Data Bias | Geographic and Socioeconomic Overfitting | Training sets skewed toward tertiary care centers cause latent-space misalignment for rural and underserved populations, which manifests as calibration drift in diagnostic AI systems. | Inaccurate prioritization in triage algorithms; potential exacerbation of healthcare disparities. |
| Model Explainability | Opacity in Neural Attribution Maps | Limited interpretability of saliency maps and a lack of explainable AI (XAI) frameworks in real-time decision support; attention-based models still fall short for spine-specific pathologies. | Limited clinician trust in AI output; inability to validate or refute system recommendations during multidisciplinary rounds. |
| Infrastructure and Cost | High-Cost HPC Requirements | Optimizing inference latency with GPU clusters (e.g., NVIDIA A100) requires capital investment exceeding $500k; throughput is suboptimal without federated inference pipelines. | Barriers to adoption in rural and small private clinics; delayed implementation in mid-tier health systems. |
| Regulatory and Legal Complexity | Validation of Continuous Learning Systems | Regulatory frameworks are not equipped for post-deployment model drift; validating self-updating AI modules under the FDA’s Good Machine Learning Practice (GMLP) guiding principles is challenging. | Ambiguous post-market liability; disincentivizes procurement by risk-averse hospital administrators. |
| Workflow and Physician Engagement | Non-Interoperability with Legacy EHRs | Lack of native HL7/FHIR compliance in AI tools (e.g., DeepScribe); interface incompatibility fragments data workflows and creates redundant documentation. | Cognitive overload and duplicated work; rejection by high-volume providers. |
| Patient-Centric Barriers | Privacy Anxiety from Data Breaches | Exposure of biometric and imaging datasets in a 2024 cyberattack undermines patient confidence in AI-driven diagnostics; hesitancy persists even with federated learning protocols. | Consent withdrawal and decreased use of AI-assisted care; limits scalability of patient-facing applications. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kumar, R.; Dougherty, C.; Sporn, K.; Khanna, A.; Ravi, P.; Prabhakar, P.; Zaman, N. Intelligence Architectures and Machine Learning Applications in Contemporary Spine Care. Bioengineering 2025, 12, 967. https://doi.org/10.3390/bioengineering12090967

Kumar R, Dougherty C, Sporn K, Khanna A, Ravi P, Prabhakar P, Zaman N. Intelligence Architectures and Machine Learning Applications in Contemporary Spine Care. Bioengineering. 2025; 12(9):967. https://doi.org/10.3390/bioengineering12090967

Chicago/Turabian Style: Kumar, Rahul, Conor Dougherty, Kyle Sporn, Akshay Khanna, Puja Ravi, Pranay Prabhakar, and Nasif Zaman. 2025. "Intelligence Architectures and Machine Learning Applications in Contemporary Spine Care" Bioengineering 12, no. 9: 967. https://doi.org/10.3390/bioengineering12090967

APA Style: Kumar, R., Dougherty, C., Sporn, K., Khanna, A., Ravi, P., Prabhakar, P., & Zaman, N. (2025). Intelligence Architectures and Machine Learning Applications in Contemporary Spine Care. Bioengineering, 12(9), 967. https://doi.org/10.3390/bioengineering12090967