Eye Tracking-Enhanced Deep Learning for Medical Image Analysis: A Systematic Review on Data Efficiency, Interpretability, and Multimodal Integration

Abstract

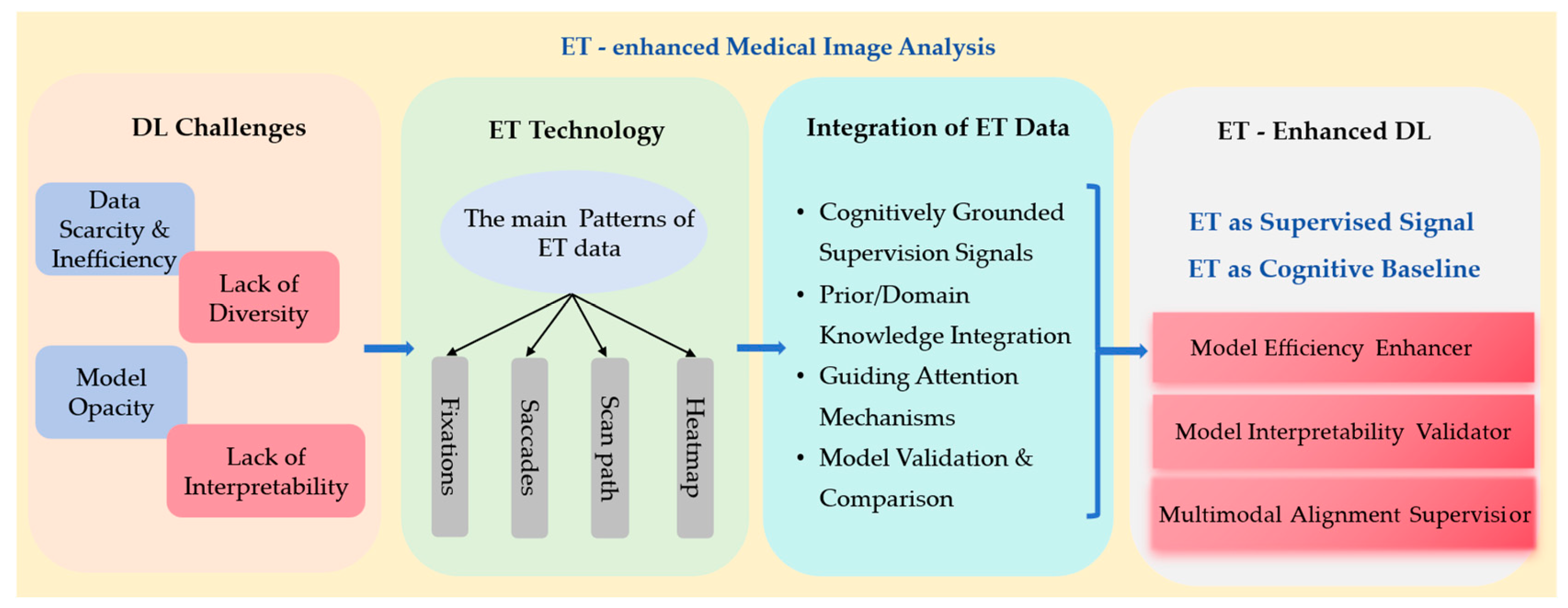

1. Introduction

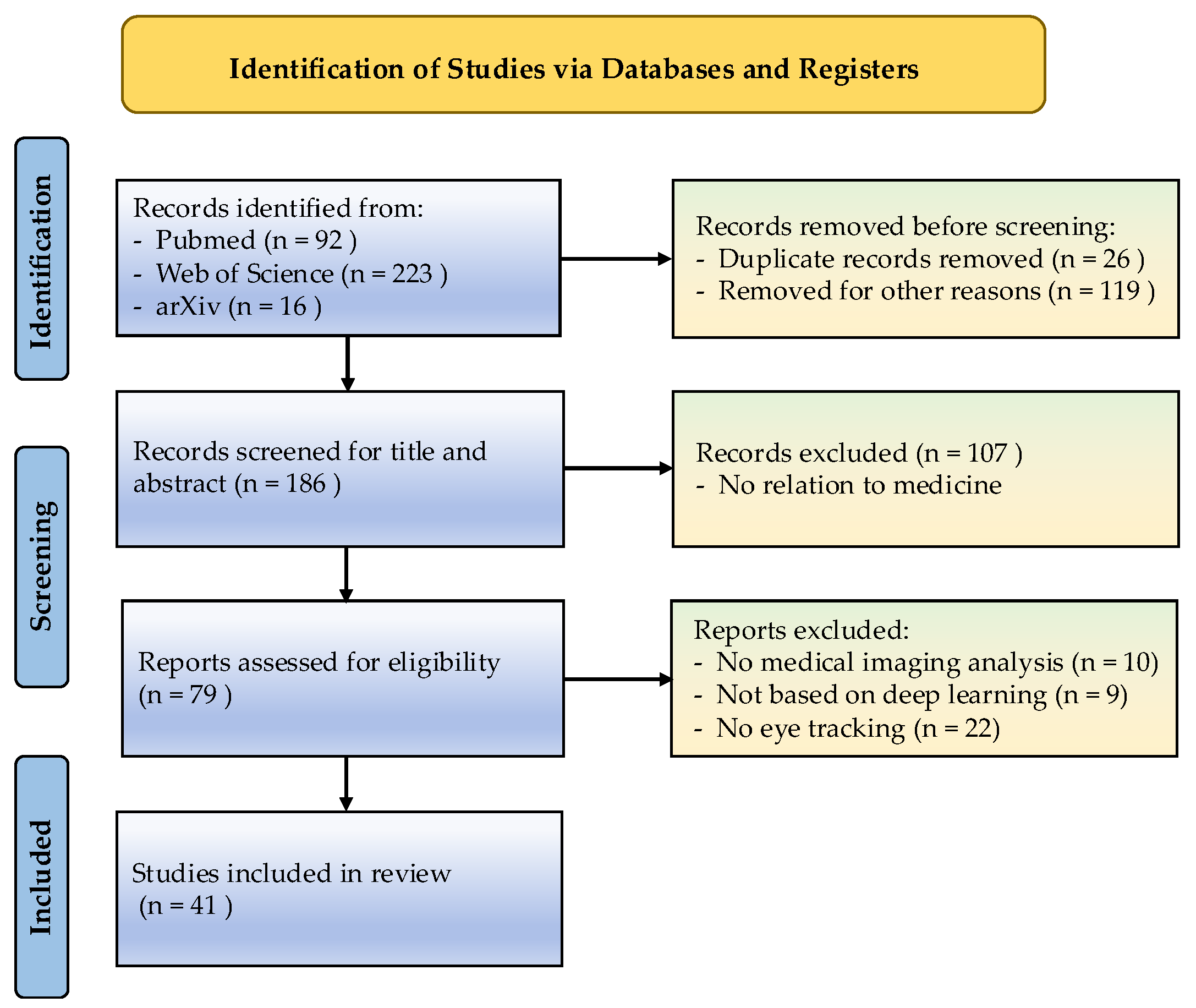

2. Methods

2.1. Eligibility Criteria

2.2. Search Strategy and Literature Selection

2.3. Data Extraction and Quantitative Synthesis

2.4. ET Data Quality and Consistency

3. Results

3.1. ET Data and Patterns Used

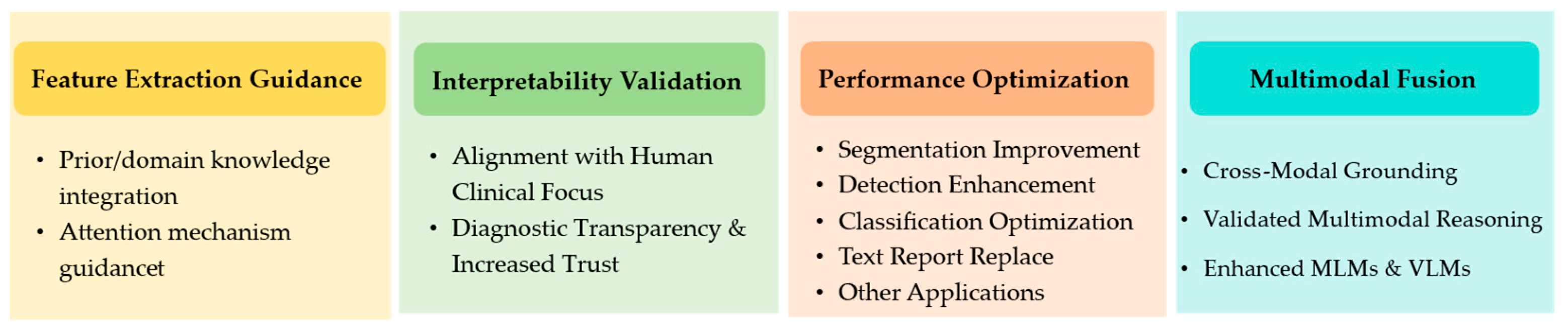

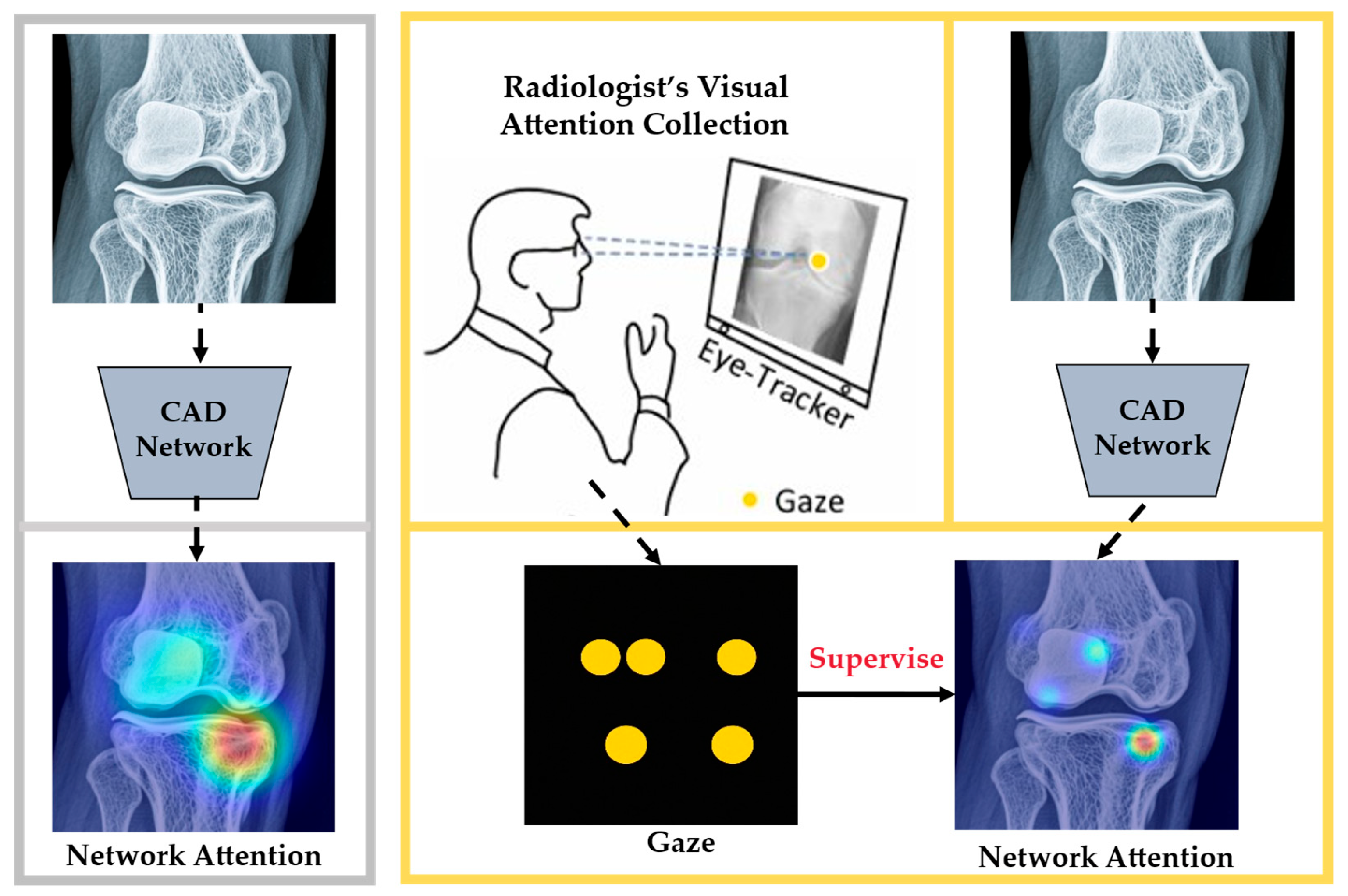

3.2. Commonly Used ET for Feature Extraction

3.2.1. Effective Feature Extraction Is Fundamental to DL Models

3.2.2. ET Used to Guide the Feature Extraction of DL

| Ref. | ET Patterns | Feature Extraction Method | Imaging Modality (Diseases) | DL Models | Observations and Highlights |
|---|---|---|---|---|---|
| [40] | Fixation distribution | Weighted fixation maps served as an auxiliary imaging modality (concatenated with fundus images) and as supervised masks to guide feature extraction. | Fundus images (diabetic retinopathy) | ResNet-18 | Using weighted fixation maps as auxiliary masks yielded the best performance, with an accuracy of 73.50% and an F1-score of 77.63%, confirming that gaze-guided feature extraction benefits diabetic retinopathy recognition. |
| [44] | Heatmaps | A saliency prediction model mimics radiologist-level visual attention, and the predicted gaze heatmap conditions positive pair generation via GCA, preserving critical information like abnormal areas in contrastive views. | Knee X-ray (knee osteoarthritis) | ResNet-50 | Predicted gaze heatmaps used in gaze-conditioned augmentation raised knee-OA classification accuracy from 55.31% to 58.81%, indicating that expert-attention-conditioned views outperform handcrafted augmentations. |
| [49] | Heatmaps and scan paths | Expert fixation heatmaps and scan path vectors are encoded as sparse attention weights, concatenated with the original image features along the channel dimension, and then fed into the model for joint feature extraction. | Colposcopy images (vulvovaginal candidiasis) | Gaze-DETR | Encoding expert heatmaps and scan paths as sparse attention improved detection: average precision increased across thresholds, recall reached 0.988, and false positives declined. |
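
The gaze-encoding step summarized in the table above (fixations turned into a weighted map, then concatenated with the image along the channel dimension) can be illustrated with a minimal NumPy sketch. It is not the implementation of any reviewed study: the function names `fixation_heatmap` and `concat_gaze_channel`, the Gaussian spread `sigma`, and the use of fixation duration as the weight are all assumptions made for illustration.

```python
import numpy as np


def fixation_heatmap(fixations, height, width, sigma=25.0):
    """Build a duration-weighted fixation heatmap scaled to [0, 1].

    fixations: iterable of (x, y, duration) with x, y in pixel coordinates.
    sigma: assumed Gaussian spread in pixels (hypothetical default).
    """
    ys, xs = np.mgrid[0:height, 0:width]
    heat = np.zeros((height, width), dtype=np.float32)
    for x, y, duration in fixations:
        # Each fixation adds an isotropic Gaussian weighted by its duration.
        heat += duration * np.exp(-((xs - x) ** 2 + (ys - y) ** 2) / (2.0 * sigma ** 2))
    peak = heat.max()
    if peak > 0:
        heat /= peak  # normalize so the strongest location equals 1
    return heat


def concat_gaze_channel(image, heat):
    """Append the heatmap to an (H, W, C) image as one extra channel."""
    return np.concatenate([image, heat[..., None]], axis=-1)


# Usage with synthetic data: two fixations on a blank 64 x 64 RGB image.
image = np.zeros((64, 64, 3), dtype=np.float32)
heat = fixation_heatmap([(10, 20, 0.5), (40, 30, 1.0)], 64, 64, sigma=5.0)
fused = concat_gaze_channel(image, heat)
print(fused.shape)
```

The fused array can then be fed to a network whose first layer accepts one additional input channel. Using the same map as a supervised mask instead, as described for [40], would leave the input unchanged and add a loss term on the model's attention.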

3.3. ET Used to Validate the Interpretability of DL

3.3.1. Interpretability of DL in MIA

3.3.2. Interpretability Methods in DL

3.3.3. Application of ET in Validating Interpretability

3.4. ET Used to Improve the Performance of DL

3.4.1. ET Used as Prior Knowledge to Improve the Performance of DL

| Task/Domain | Reference | Gaze Processing | Year | Datasets | Type of Disease | DL Model | Highlights |
|---|---|---|---|---|---|---|---|
| Segmentation | Stember et al. [72] | ET mask | 2019 | Images from PubMed and Google Images | Meningioma | U-Net [13] | ET-derived masks improved meningioma segmentation performance. |
| Segmentation | Stember et al. [73] | Gaze position/fixation | 2021 | BraTS [83] | Brain tumor | CNN models [84] | Gaze supervision enabled accurate brain tumor labeling and boosted downstream accuracy. |
| Segmentation | Xie et al. [27] | Fixation heatmaps | 2024 | INbreast [70] | Breast cancer | U-Net [13] | Gaze-supervised segmentation increased Dice and mIoU under limited data. |
| Detection | Wang et al. [44] | Fixation heatmaps | 2023 | Knee X-ray images [85] | Osteoarthritis | U-Net [13] | Gaze-conditioned contrastive views increased knee X-ray detection accuracy. |
| Detection | Kong et al. [49] | Fixation heatmaps | 2024 | Private * | Vulvovaginal candidiasis | Transformer [27] | Gaze-guided DETR raised AP/AR and reduced false positives in VVC screening. |
| Detection | Wang et al. [75] | Fixation points | 2023 | GrabCut dataset [86] and Berkeley dataset [87] | Abdomen disease | SAM [88] | Eye gaze with SAM improved interactive segmentation mIoU. |
| Detection | Colonnese et al. [76] | Fixation points | 2024 | “Saliency4ASD” dataset [89] | ASD | RM3ASD [90], STAR-FC [91], AttBasedNet [92], and Gaze-Based Autism Classifier (GBAC) [76] | Gaze features improved ASD classification across accuracy, recall, and F1-score. |
| Detection | Tian et al. [78] | Fixation points | 2024 | Private * | Glaucoma | U-Net [13] | Expert gaze guided OCT localization with higher precision, recall, and F1-score. |
| Classification | Huang et al. [79] | Fixation heatmaps | 2021 | BraTS [83] and MIMIC-CXR-gaze [77] | Brain tumor and chest disease | U-Net [13], nnU-Net [93], and DMFNet [94] | Gaze-aware attention strengthened segmentation/classification metrics with scarce data. |
| Classification | Ma et al. [9] | Fixation heatmaps | 2022 | INbreast [70] and SIIM-ACR [71] | Chest disease | ResNet [95], Swin Transformer [96], and EfficientNet [97] | Gaze-predicted saliency curtailed shortcut learning and improved ACC, F1-score, and AUC. |
| Classification | Zhu et al. [80] | Fixation heatmaps | 2022 | The multi-modal CXR dataset [77] | Heart disease and chest disease | ResNet [95] and EfficientNet [97] | Gaze-guided attention raised precision and recall in chest X-ray classification. |
| Text report replacement | Zhao et al. [81] | Fixation heatmaps | 2024 | INbreast [70] and Tufts dental dataset [82] | Breast and dental diseases | ResNet [95] | Gaze-driven contrastive pretraining improved accuracy and AUC without text reports. |
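
Several highlights in the table above are reported as Dice and mIoU. As a reminder of what those numbers measure, the following sketch computes Dice and IoU for a single pair of binary masks. It is an illustrative definition, not the evaluation code of any reviewed study; the function name `dice_and_iou` and the smoothing constant `eps` are assumptions, and mIoU would be obtained by averaging the IoU over classes or images.

```python
import numpy as np


def dice_and_iou(pred, target, eps=1e-7):
    """Return (Dice, IoU) for two binary masks of identical shape.

    eps is a small assumed smoothing constant so that two empty masks
    score 1.0 instead of dividing by zero.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    inter = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    dice = (2.0 * inter + eps) / (pred.sum() + target.sum() + eps)
    iou = (inter + eps) / (union + eps)
    return float(dice), float(iou)


# Usage: the prediction covers two pixels, one of which is correct.
pred = [[1, 1], [0, 0]]
target = [[1, 0], [0, 0]]
dice, iou = dice_and_iou(pred, target)
print(round(dice, 3), round(iou, 3))
```

Dice weights the overlap twice relative to the mask sizes, so it is never lower than IoU for the same pair of masks; values from the two metrics should therefore not be compared directly across studies.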

3.4.2. Other Applications of ET in Enhancing MIA

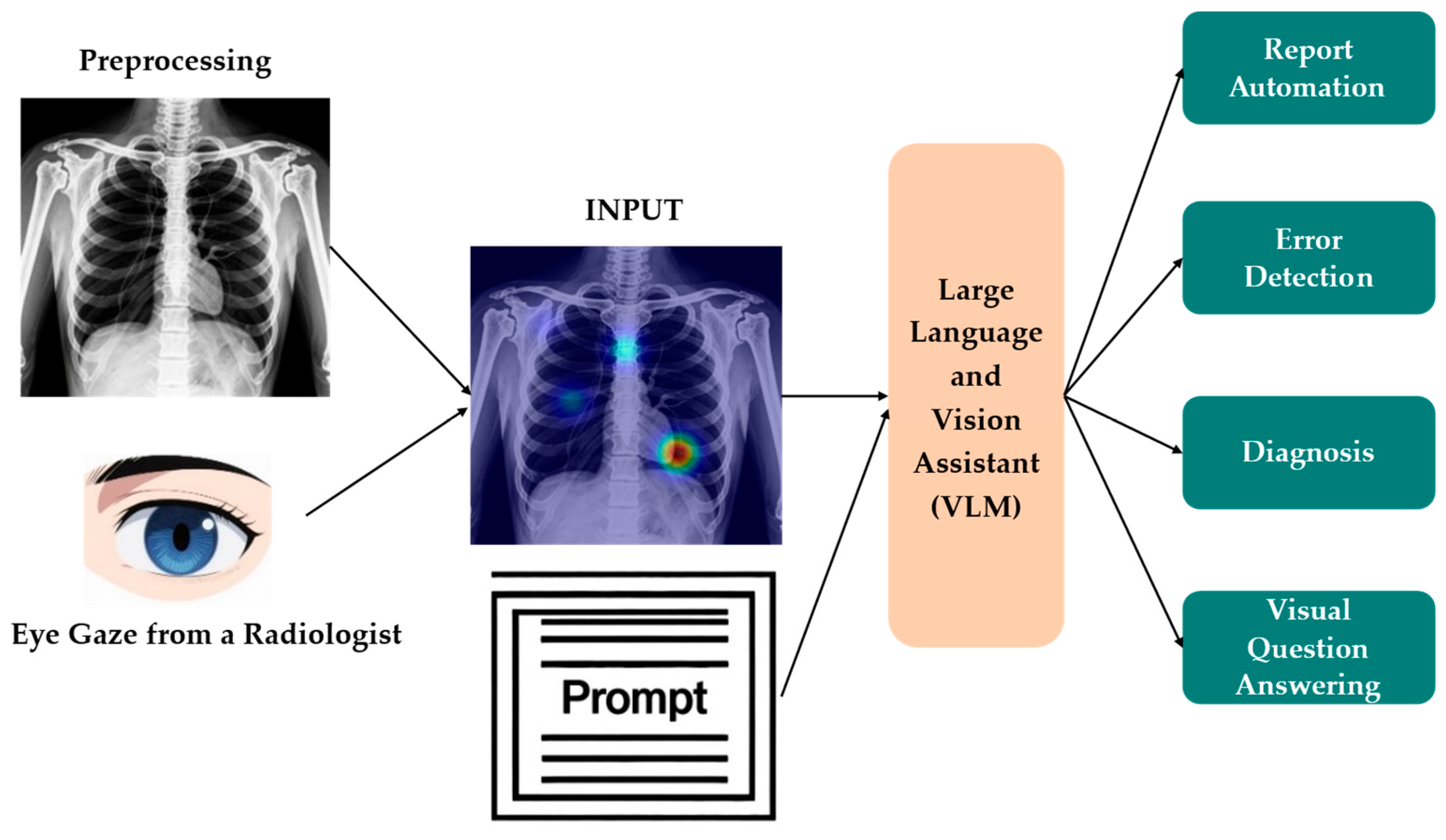

3.5. ET in MLMs and VLMs

3.5.1. Cross-Modal Alignment

3.5.2. ET-Supervised Training and Representation Learning

3.5.3. Interpretability and Validation of Multimodal Reasoning

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| DL | Deep learning |
| ET | Eye tracking |
| MIA | Medical image analysis |
| CNNs | Convolutional neural networks |
| DNNs | Deep neural networks |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| MLMs | Multimodal large models |
| VLMs | Vision–language models |
| CAD | Computer-aided diagnosis |
| C-CAD | Collaborative CAD |
| SM | Saliency map |
| McGIP | Medical Contrastive Gaze Image Pre-training |
| EGMA | Eye movement guided multimodal alignment |
| SOTA | State-of-the-art |
| ViT | Vision transformer |
| EG-ViT | Eye gaze guided vision transformer |
| SAM | Segment anything model |
| GCA | Gaze-conditioned augmentation |
| AUROC | Area under the receiver operating characteristic curve |

References

1. Suganyadevi, S.; Seethalakshmi, V.; Balasamy, K. A review on deep learning in medical image analysis. Int. J. Multimed. Inf. Retr. 2022, 11, 19–38.

2. Khera, R.; Simon, M.A.; Ross, J.S. Automation bias and assistive AI: Risk of harm from AI-driven clinical decision support. JAMA 2023, 330, 2255–2257.

3. Mir, A.N.; Rizvi, D.R. Advancements in deep learning and explainable artificial intelligence for enhanced medical image analysis: A comprehensive survey and future directions. Eng. Appl. Artif. Intell. 2025, 158, 111413.

4. Li, M.; Jiang, Y.; Zhang, Y.; Zhu, H. Medical image analysis using deep learning algorithms. Front. Public Health 2023, 11, 1273253.

5. Mazurowski, M.A.; Dong, H.; Gu, H.; Yang, J.; Konz, N.; Zhang, Y. Segment anything model for medical image analysis: An experimental study. Med. Image Anal. 2023, 89, 102918.

6. Sistaninejhad, B.; Rasi, H.; Nayeri, P. A review paper about deep learning for medical image analysis. Comput. Math. Methods Med. 2023, 2023, 7091301.

7. Rana, M.; Bhushan, M. Machine learning and deep learning approach for medical image analysis: Diagnosis to detection. Multimed. Tools Appl. 2023, 82, 26731–26769.

8. Chen, X.; Wang, X.; Zhang, K.; Fung, K.; Thai, T.C.; Moore, K.; Mannel, R.S.; Liu, H.; Zheng, B.; Qiu, Y. Recent advances and clinical applications of deep learning in medical image analysis. Med. Image Anal. 2022, 79, 102444.

9. Ma, C.; Zhao, L.; Chen, Y.; Guo, L.; Zhang, T.; Hu, X.; Shen, D.; Jiang, X.; Liu, T. Rectify ViT shortcut learning by visual saliency. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 18013–18025.

10. Yu, K.; Chen, J.; Ding, X.; Zhang, D. Exploring cognitive load through neuropsychological features: An analysis using fNIRS-eye tracking. Med. Biol. Eng. Comput. 2025, 63, 45–57.

11. Meng, F.; Li, F.; Wu, S.; Yang, T.; Xiao, Z.; Zhang, Y.; Liu, Z.; Lu, J.; Luo, X. Machine learning-based early diagnosis of autism according to eye movements of real and artificial faces scanning. Front. Neurosci. 2023, 17, 1170951.

12. Castner, N.; Arsiwala-Scheppach, L.; Mertens, S.; Krois, J.; Thaqi, E.; Kasneci, E.; Wahl, S.; Schwendicke, F. Expert gaze as a usability indicator of medical AI decision support systems: A preliminary study. NPJ Digit. Med. 2024, 7, 199.

13. Geirhos, R.; Jacobsen, J.; Michaelis, C.; Zemel, R.; Brendel, W.; Bethge, M.; Wichmann, F.A. Shortcut learning in deep neural networks. Nat. Mach. Intell. 2020, 2, 665–673.

14. Mall, S.; Krupinski, E.; Mello-Thoms, C. Missed cancer and visual search of mammograms: What feature based machine-learning can tell us that deep-convolution learning cannot. In Proceedings of the Medical Imaging 2019: Image Perception, Observer Performance, and Technology Assessment, San Diego, CA, USA, 20–21 February 2019.

15. Mall, S.; Brennan, P.C.; Mello-Thoms, C. Modeling visual search behavior of breast radiologists using a deep convolution neural network. J. Med. Imaging 2018, 5, 1.

16. Shneiderman, B. Human-Centered AI, 1st ed.; Oxford University Press: Oxford, UK, 2022; pp. 62–135.

17. Neves, J.; Hsieh, C.; Nobre, I.B.; Sousa, S.C.; Ouyang, C.; Maciel, A.; Duchowski, A.; Jorge, J.; Moreira, C. Shedding light on AI in radiology: A systematic review and taxonomy of eye gaze-driven interpretability in deep learning. Eur. J. Radiol. 2024, 172, 111341.

18. Moradizeyveh, S.; Tabassum, M.; Liu, S.; Newport, R.A.; Beheshti, A.; Di Ieva, A. When eye-tracking meets machine learning: A systematic review on applications in medical image analysis. arXiv 2024, arXiv:2403.07834.

19. Awasthi, A.; Ahmad, S.; Le, B.; Van Nguyen, H. Decoding radiologists’ intentions: A novel system for accurate region identification in chest X-ray image analysis. arXiv 2025, arXiv:2404.18981.

20. Kim, Y.; Wu, J.; Abdulle, Y.; Gao, Y.; Wu, H. Enhancing human-computer interaction in chest X-ray analysis using vision and language model with eye gaze patterns. arXiv 2024, arXiv:2404.02370.

21. Ibragimov, B.; Mello-Thoms, C. The use of machine learning in eye tracking studies in medical imaging: A review. IEEE J. Biomed. Health Inform. 2024, 28, 3597–3612.

22. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

23. Booth, A.; Clarke, M.; Dooley, G.; Ghersi, D.; Moher, D.; Petticrew, M.; Stewart, L. The nuts and bolts of PROSPERO: An international prospective register of systematic reviews. Syst. Rev. 2012, 1, 2.

24. Carter, B.T.; Luke, S.G. Best practices in eye tracking research. Int. J. Psychophysiol. 2020, 155, 49–62.

25. Dowiasch, S.; Wolf, P.; Bremmer, F. Quantitative comparison of a mobile and a stationary video-based eye-tracker. Behav. Res. Methods 2020, 52, 667–680.

26. Kaduk, T.; Goeke, C.; Finger, H.; König, P. Webcam eye tracking close to laboratory standards: Comparing a new webcam-based system and the EyeLink 1000. Behav. Res. Methods 2024, 56, 5002–5022.

27. Xie, J.; Zhang, Q.; Cui, Z.; Ma, C.; Zhou, Y.; Wang, W.; Shen, D. Integrating eye tracking with grouped fusion networks for semantic segmentation on mammogram images. IEEE Trans. Med. Imaging 2025, 44, 868–879.

28. Ma, C.; Jiang, H.; Chen, W.; Li, Y.; Wu, Z.; Yu, X.; Liu, Z.; Guo, L.; Zhu, D.; Zhang, T.; et al. Eye-gaze guided multi-modal alignment for medical representation learning. In Proceedings of the 38th Conference on Neural Information Processing Systems (NeurIPS 2024), Vancouver, BC, Canada, 9–15 December 2024.

29. Pham, T.T.; Nguyen, T.; Ikebe, Y.; Awasthi, A.; Deng, Z.; Wu, C.C.; Nguyen, H.; Le, N. GazeSearch: Radiology findings search benchmark. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV 2025), Tucson, AZ, USA, 28 February–4 March 2025.

30. Ma, C.; Zhao, L.; Chen, Y.; Wang, S.; Guo, L.; Zhang, T.; Shen, D.; Jiang, X.; Liu, T. Eye-gaze-guided vision transformer for rectifying shortcut learning. IEEE Trans. Med. Imaging 2023, 42, 3384–3394.

31. Attallah, O. Skin-CAD: Explainable deep learning classification of skin cancer from dermoscopic images by feature selection of dual high-level CNNs features and transfer learning. Comput. Biol. Med. 2024, 178, 108798.

32. Theng, D.; Bhoyar, K.K. Feature selection techniques for machine learning: A survey of more than two decades of research. Knowl. Inf. Syst. 2024, 66, 1575–1637.

33. Yan, P.; Sun, W.; Li, X.; Li, M.; Jiang, Y.; Luo, H. PKDN: Prior knowledge distillation network for bronchoscopy diagnosis. Comput. Biol. Med. 2023, 166, 107486.

34. Sarkar, S.; Wu, T.; Harwood, M.; Silva, A.C. A transfer learning-based framework for classifying lymph node metastasis in prostate cancer patients. Biomedicines 2024, 12, 2345.

35. Muksimova, S.; Umirzakova, S.; Iskhakova, N.; Khaitov, A.; Cho, Y.I. Advanced convolutional neural network with attention mechanism for Alzheimer’s disease classification using MRI. Comput. Biol. Med. 2025, 190, 110095.

36. Allogmani, A.S.; Mohamed, R.M.; Al-Shibly, N.M.; Ragab, M. Enhanced cervical precancerous lesions detection and classification using archimedes optimization algorithm with transfer learning. Sci. Rep. 2024, 14, 12076.

37. Kok, E.M.; de Bruin, A.B.H.; Robben, S.G.F.; van Merriënboer, J.J.G. Looking in the same manner but seeing it differently: Bottom-up and expertise effects in radiology. Appl. Cogn. Psychol. 2012, 26, 854–862.

38. Kok, E.M.; Jarodzka, H. Before your very eyes: The value and limitations of eye tracking in medical education. Med. Educ. 2017, 51, 114–122.

39. Wang, S.; Ouyang, X.; Liu, T.; Wang, Q.; Shen, D. Follow my eye: Using gaze to supervise computer-aided diagnosis. IEEE Trans. Med. Imaging 2022, 41, 1688–1698.

40. Jiang, H.; Hou, Y.; Miao, H.; Ye, H.; Gao, M.; Li, X.; Jin, R.; Liu, J. Eye tracking based deep learning analysis for the early detection of diabetic retinopathy: A pilot study. Biomed. Signal Process. Control 2023, 84, 104830.

41. Dmitriev, K.; Marino, J.; Baker, K.; Kaufman, A.E. Visual analytics of a computer-aided diagnosis system for pancreatic lesions. IEEE Trans. Vis. Comput. Graph. 2021, 27, 2174–2185.

42. Franceschiello, B.; Noto, T.D.; Bourgeois, A.; Murray, M.M.; Minier, A.; Pouget, P.; Richiardi, J.; Bartolomeo, P.; Anselmi, F. Machine learning algorithms on eye tracking trajectories to classify patients with spatial neglect. Comput. Methods Programs Biomed. 2022, 221, 106929.

43. Bhattacharya, M.; Jain, S.; Prasanna, P. GazeRadar: A gaze and radiomics-guided disease localization framework. In Proceedings of the Medical Image Computing and Computer Assisted Intervention (MICCAI 2022), Singapore, 16 September 2022.

44. Wang, S.; Zhao, Z.; Zhang, L.; Shen, D.; Wang, Q. Crafting good views of medical images for contrastive learning via expert-level visual attention. In Proceedings of the 37th Conference on Neural Information Processing Systems (NeurIPS 2023), New Orleans, LA, USA, 10–16 December 2023.

45. Li, X.; Ding, H.; Yuan, H.; Zhang, W.; Pang, J.; Cheng, G.; Chen, K.; Liu, Z.; Loy, C.C. Transformer-based visual segmentation: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 10138–10163.

46. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16×16 words: Transformers for image recognition at scale. In Proceedings of the 9th International Conference on Learning Representations (ICLR 2021), Vienna, Austria (Converted to fully virtual due to COVID-19), 3–7 May 2021.

47. He, K.; Gan, C.; Li, Z.; Rekik, I.; Yin, Z.; Ji, W.; Gao, Y.; Wang, Q.; Zhang, J.; Shen, D. Transformers in medical image analysis. Intell. Med. 2023, 3, 59–78.

48. Azad, R.; Kazerouni, A.; Heidari, M.; Aghdam, E.K.; Molaei, A.; Jia, Y.; Jose, A.; Roy, R.; Merhof, D. Advances in medical image analysis with vision transformers: A comprehensive review. Med. Image Anal. 2024, 91, 103000.

49. Kong, Y.; Wang, S.; Cai, J.; Zhao, Z.; Shen, Z.; Li, Y.; Fei, M.; Wang, Q. Gaze-DETR: Using expert gaze to reduce false positives in vulvovaginal candidiasis screening. arXiv 2024, arXiv:2405.09463.

50. Bhattacharya, M.; Jain, S.; Prasanna, P. RadioTransformer: A cascaded global-focal transformer for visual attention–guided disease classification. In Proceedings of the 17th European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022.

51. Castelvecchi, D. Can we open the black box of AI? Nature 2016, 538, 20–23.

52. Lipton, Z.C. The mythos of model interpretability. Commun. ACM 2018, 61, 36–43.

53. Hulsen, T. Explainable artificial intelligence (XAI): Concepts and challenges in healthcare. AI 2023, 4, 652–666.

54. Hildt, E. What is the role of explainability in medical artificial intelligence? A case-based approach. Bioengineering 2025, 12, 375.

55. Barredo Arrieta, A.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable artificial intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

56. Awasthi, A.; Le, N.; Deng, Z.; Agrawal, R.; Wu, C.C.; Van Nguyen, H. Bridging human and machine intelligence: Reverse-engineering radiologist intentions for clinical trust and adoption. Comp. Struct. Biotechnol. J. 2024, 24, 711–723.

57. Bhati, D.; Neha, F.; Amiruzzaman, M. A survey on explainable artificial intelligence (XAI) techniques for visualizing deep learning models in medical imaging. J. Imaging 2024, 10, 239.

58. Tjoa, E.; Guan, C. A survey on explainable artificial intelligence (XAI): Toward medical XAI. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4793–4813.

59. Sadeghi, Z.; Alizadehsani, R.; Cifci, M.A.; Kausar, S.; Rehman, R.; Mahanta, P.; Bora, P.K.; Almasri, A.; Alkhawaldeh, R.S.; Hussain, S.; et al. A review of explainable artificial intelligence in healthcare. Comput. Electr. Eng. 2024, 118, 109370.

60. Simonyan, K.; Vedaldi, A.; Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. In Proceedings of the 2nd International Conference on Learning Representations (ICLR 2014), Banff, AB, Canada, 14–16 April 2014.

61. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

62. Smilkov, D.; Thorat, N.; Kim, B.; Viégas, F.; Wattenberg, M. SmoothGrad: Removing noise by adding noise. In Proceedings of the 34th International Conference on Machine Learning (ICML 2017), Sydney, NSW, Australia, 6–11 August 2017.

63. Sundararajan, M.; Taly, A.; Yan, Q. Axiomatic attribution for deep networks. In Proceedings of the 34th International Conference on Machine Learning (ICML 2017), Sydney, NSW, Australia, 6–11 August 2017.

64. Kapishnikov, A.; Venugopalan, S.; Avci, B.; Wedin, B.; Terry, M.; Bolukbasi, T. Guided integrated gradients: An adaptive path method for removing noise. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021.

65. Brunyé, T.T.; Mercan, E.; Weaver, D.L.; Elmore, J.G. Accuracy is in the eyes of the pathologist: The visual interpretive process and diagnostic accuracy with digital whole slide images. J. Biomed. Inform. 2017, 66, 171–179.

66. Liu, S.; Chen, W.; Liu, J.; Luo, X.; Shen, L. GEM: Context-aware gaze EstiMation with visual search behavior matching for chest radiograph. arXiv 2024, arXiv:2408.05502.

67. Kim, J.; Zhou, H.; Lipton, Z. Do you see what I see? A comparison of radiologist eye gaze to computer vision saliency maps for chest X-ray classification. In Proceedings of the International Conference on Machine Learning (ICML), Vienna, Austria, 23–24 July 2021.

68. Khosravan, N.; Celik, H.; Turkbey, B.; Jones, E.C.; Wood, B.; Bagci, U. A collaborative computer aided diagnosis (c-CAD) system with eye-tracking, sparse attentional model, and deep learning. Med. Image Anal. 2019, 51, 101–115.

69. Aresta, G.; Ferreira, C.; Pedrosa, J.; Araujo, T.; Rebelo, J.; Negrao, E.; Morgado, M.; Alves, F.; Cunha, A.; Ramos, I.; et al. Automatic lung nodule detection combined with gaze information improves radiologists’ screening performance. IEEE J. Biomed. Health Inform. 2020, 24, 2894–2901.

70. Moreira, I.C.; Amaral, I.; Domingues, I.; Cardoso, A.; Cardoso, M.J.; Cardoso, J.S. INbreast: Toward a full-field digital mammographic database. Acad. Radiol. 2012, 19, 236–248.

71. SIIM-ACR Pneumothorax Segmentation. Available online: https://www.kaggle.com/c/siim-acr-pneumothorax-segmentation (accessed on 15 May 2025).

72. Stember, J.N.; Celik, H.; Krupinski, E.; Chang, P.D.; Mutasa, S.; Wood, B.J.; Lignelli, A.; Moonis, G.; Schwartz, L.H.; Jambawalikar, S.; et al. Eye tracking for deep learning segmentation using convolutional neural networks. J. Digit. Imaging 2019, 32, 597–604.

73. Stember, J.N.; Celik, H.; Gutman, D.; Swinburne, N.; Young, R.; Eskreis-Winkler, S.; Holodny, A.; Jambawalikar, S.; Wood, B.J.; Chang, P.D.; et al. Integrating eye tracking and speech recognition accurately annotates MR brain images for deep learning: Proof of principle. Radiol. Artif. Intell. 2021, 3, e200047.

74. Khosravan, N.; Celik, H.; Turkbey, B.; Cheng, R.; McCreedy, E.; McAuliffe, M.; Bednarova, S.; Jones, E.; Chen, X.; Choyke, P. Gaze2segment: A pilot study for integrating eye-tracking technology into medical image segmentation. In Proceedings of the 19th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2016), Athens, Greece, 17–21 October 2016.

75. Wang, B.; Aboah, A.; Zhang, Z.; Pan, H.; Bagci, U. GazeSAM: Interactive image segmentation with eye gaze and segment anything model. In Proceedings of the Gaze Meets Machine Learning Workshop, New Orleans, LA, USA, 30 November 2024.

76. Colonnese, F.; Di Luzio, F.; Rosato, A.; Panella, M. Enhancing autism detection through gaze analysis using eye tracking sensors and data attribution with distillation in deep neural networks. Sensors 2024, 24, 7792.

77. Karargyris, A.; Kashyap, S.; Lourentzou, I.; Wu, J.T.; Sharma, A.; Tong, M.; Abedin, S.; Beymer, D.; Mukherjee, V.; Krupinski, E.A.; et al. Creation and validation of a chest X-ray dataset with eye-tracking and report dictation for AI development. Sci. Data 2021, 8, 92.

78. Tian, Y.; Sharma, A.; Mehta, S.; Kaushal, S.; Liebmann, J.M.; Cioffi, G.A.; Thakoor, K.A. Automated identification of clinically relevant regions in glaucoma OCT reports using expert eye tracking data and deep learning. Transl. Vis. Sci. Technol. 2024, 13, 24.

79. Huang, Y.; Li, X.; Yang, L.; Gu, L.; Zhu, Y.; Hirofumi, S.; Meng, Q.; Harada, T.; Sato, Y. Leveraging human selective attention for medical image analysis with limited training data. In Proceedings of the 32nd British Machine Vision Conference (BMVC 2021), Virtually/Online, 22–25 November 2021.

80. Zhu, H.; Salcudean, S.; Rohling, R. Gaze-guided class activation mapping: Leveraging human attention for network attention in chest X-rays classification. In Proceedings of the 15th International Symposium on Visual Information Communication and Interaction (VINCI 2022), Chur, Switzerland (hybrid in-person + virtual), 16–18 August 2022.

81. Zhao, Z.; Wang, S.; Wang, Q.; Shen, D. Mining gaze for contrastive learning toward computer-assisted diagnosis. In Proceedings of the 38th AAAI Conference on Artificial Intelligence (AAAI 2024), Vancouver, BC, Canada, 20–27 February 2024.

82. Panetta, K.; Rajendran, R.; Ramesh, A.; Rao, S.; Agaian, S. Tufts dental database: A multimodal panoramic X-ray dataset for benchmarking diagnostic systems. IEEE J. Biomed. Health Inform. 2022, 26, 1650–1659.

83. Menze, B.H.; Jakab, A.; Bauer, S.; Kalpathy-Cramer, J.; Farahani, K.; Kirby, J.; Burren, Y.; Porz, N.; Slotboom, J.; Wiest, R.; et al. The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans. Med. Imaging 2015, 34, 1993–2024.

84. Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

85. Chen, P.; Gao, L.; Shi, X.; Allen, K.; Yang, L. Fully automatic knee osteoarthritis severity grading using deep neural networks with a novel ordinal loss. Comput. Med. Imaging Graph. 2019, 75, 84–92.

86. Rother, C.; Kolmogorov, V.; Blake, A. GrabCut: Interactive foreground extraction using iterated graph cuts. ACM Trans. Graph. 2004, 23, 309–314.

87. Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A Database of Human Segmented Natural Images and Its Application to Evaluating Segmentation Algorithms and Measuring Ecological Statistics; University of California at Berkeley: Berkeley, CA, USA, 2001.

88. Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.; et al. Segment anything. In Proceedings of the 20th IEEE/CVF International Conference on Computer Vision (ICCV 2023), Paris, France, 2–6 October 2023.

89. Gutiérrez, J.; Che, Z.; Zhai, G.; Le Callet, P. Saliency4ASD: Challenge, dataset and tools for visual attention modeling for autism spectrum disorder. Signal Process. Image Commun. 2021, 92, 116092.

90. Wei, Q.; Dong, W.; Yu, D.; Wang, K.; Yang, T.; Xiao, Y.; Long, D.; Xiong, H.; Chen, J.; Xu, X.; et al. Early identification of autism spectrum disorder based on machine learning with eye-tracking data. J. Affect. Disord. 2024, 358, 326–334.

91. Liaqat, S.; Wu, C.; Duggirala, P.R.; Cheung, S.S.; Chuah, C.; Ozonoff, S.; Young, G. Predicting ASD diagnosis in children with synthetic and image-based eye gaze data. Signal Process. Image Commun. 2021, 94, 116198.

92. Chen, S.; Zhao, Q. Attention-based autism spectrum disorder screening with privileged modality. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

93. Isensee, F.; Jaeger, P.F.; Kohl, S.A.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 2021, 18, 203–211.

94. Chen, C.; Liu, X.; Ding, M.; Zheng, J.; Li, J. 3D dilated multi-fiber network for real-time brain tumor segmentation in MRI. arXiv 2019, arXiv:1904.03355.

95. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2016), Las Vegas, NV, USA, 26–30 June 2016.

96. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV 2021), Virtual, 11–17 October 2021.

97. Tan, M.; Le, Q.V. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning (ICML 2019), Long Beach, CA, USA, 9–15 June 2019.

98. Leveque, L.; Bosmans, H.; Cockmartin, L.; Liu, H. State of the art: Eye-tracking studies in medical imaging. IEEE Access 2018, 6, 37023–37034.

99. Zuo, F.; Jing, P.; Sun, J.; Duan, J.; Ji, Y.; Liu, Y. Deep learning-based eye-tracking analysis for diagnosis of Alzheimer’s disease using 3D comprehensive visual stimuli. IEEE J. Biomed. Health Inform. 2024, 28, 2781–2793.

100. Kumar, S.; Rani, S.; Sharma, S.; Min, H. Multimodality fusion aspects of medical diagnosis: A comprehensive review. Bioengineering 2024, 11, 1233.

101. Baltrusaitis, T.; Ahuja, C.; Morency, L. Multimodal machine learning: A survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 423–443.

102. Warner, E.; Lee, J.; Hsu, W.; Syeda-Mahmood, T.; Kahn, C.E.; Gevaert, O.; Rao, A. Multimodal machine learning in image-based and clinical biomedicine: Survey and prospects. Int. J. Comput. Vis. 2024, 132, 3753–3769.

103. Kabir, R.; Haque, N.; Islam, M.S.; Marium-E-Jannat. A comprehensive survey on visual question answering datasets and algorithms. arXiv 2024, arXiv:2411.11150.

104. Masse, B.; Ba, S.; Horaud, R. Tracking gaze and visual focus of attention of people involved in social interaction. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 2711–2724.

105. Peng, P.; Fan, W.; Shen, Y.; Liu, W.; Yang, X.; Zhang, Q.; Wei, X.; Zhou, D. Eye gaze guided cross-modal alignment network for radiology report generation. IEEE J. Biomed. Health Inform. 2024, 28, 7406–7419.

106. Lanfredi, R.B.; Zhang, M.; Auffermann, W.F.; Chan, J.; Duong, P.T.; Srikumar, V.; Drew, T.; Schroeder, J.D.; Tasdizen, T. REFLACX, a dataset of reports and eye-tracking data for localization of abnormalities in chest X-rays. Sci. Data 2022, 9, 350.

107. MIMIC-Eye: Integrating MIMIC Datasets with REFLACX and Eye Gaze for Multimodal Deep Learning Applications. Available online: https://physionet.org/content/mimic-eye-multimodal-datasets/1.0.0/ (accessed on 23 April 2025).

108. Tutek, M.; Šnajder, J. Toward practical usage of the attention mechanism as a tool for interpretability. IEEE Access 2022, 10, 47011–47030.

109. Wang, S.; Zhao, Z.; Shen, Z.; Wang, B.; Wang, Q.; Shen, D. Improving self-supervised medical image pre-training by early alignment with human eye gaze information. IEEE Trans. Med. Imaging 2025, in press.

110. Wang, S.; Zhao, Z.; Zhuang, Z.; Ouyang, X.; Zhang, L.; Li, Z.; Ma, C.; Liu, T.; Shen, D.; Wang, Q. Learning better contrastive view from radiologist’s gaze. Pattern Recognit. 2025, 162, 111350.

111. Zeger, E.; Pilanci, M. Black boxes and looking glasses: Multilevel symmetries, reflection planes, and convex optimization in deep networks. arXiv 2024, arXiv:2410.04279.

112. Zhang, M.; Cui, Q.; Lü, Y.; Yu, W.; Li, W. A multimodal learning machine framework for Alzheimer’s disease diagnosis based on neuropsychological and neuroimaging data. Comput. Ind. Eng. 2024, 197, 110625.

113. Kumar, P.; Khandelwal, E.; Tapaswi, M.; Sreekumar, V. Seeing eye to AI: Comparing human gaze and model attention in video memorability. arXiv 2025, arXiv:2311.16484.

114. Khosravi, S.; Khan, A.R.; Zoha, A.; Ghannam, R. Self-directed learning using eye-tracking: A comparison between wearable head-worn and webcam-based technologies. In Proceedings of the IEEE Global Engineering Education Conference (EDUCON), Tunis, Tunisia, 28–31 March 2022.

115. Kora, P.; Ooi, C.P.; Faust, O.; Raghavendra, U.; Gudigar, A.; Chan, W.Y.; Meenakshi, K.; Swaraja, K.; Plawiak, P.; Rajendra Acharya, U. Transfer learning techniques for medical image analysis: A review. Biocybern. Biomed. Eng. 2022, 42, 79–107.

- Saxena, S.; Fink, L.K.; Lange, E.B. Deep learning models for webcam eye tracking in online experiments. Behav. Res. Methods 2024, 56, 3487–3503. [Google Scholar] [CrossRef] [PubMed]

- Valtakari, N.V.; Hooge, I.T.C.; Viktorsson, C.; Nyström, P.; Falck-Ytter, T.; Hessels, R.S. Eye tracking in human interaction: Possibilities and limitations. Behav. Res. Methods 2021, 53, 1592–1608. [Google Scholar] [CrossRef]

- Sharafi, Z.; Sharif, B.; Guéhéneuc, Y.; Begel, A.; Bednarik, R.; Crosby, M. A practical guide on conducting eye tracking studies in software engineering. Empir. Softw. Eng. 2020, 25, 3128–3174. [Google Scholar] [CrossRef]

- Guideline for Reporting Standards of Eye-Tracking Research in Decision Sciences. Available online: https://osf.io/preprints/psyarxiv/f6qcy_v1 (accessed on 25 March 2025).

- Seyedi, S.; Jiang, Z.; Levey, A.; Clifford, G.D. An investigation of privacy preservation in deep learning-based eye-tracking. Biomed. Eng. Online 2022, 21, 67. [Google Scholar] [CrossRef] [PubMed]

List of Inclusion and Exclusion Criteria

| No. | Inclusion Criteria (IC) | No. | Exclusion Criteria (EC) |
|---|---|---|---|
| IC1 | Should contain at least one of the keywords. | EC1 | Manuscripts that contain duplicated passages, lack originality, or fail to contribute meaningful insights. |
| IC2 | Must be sourced from reputable academic databases, such as PubMed and Web of Science. | EC2 | The full-text publication could not be retrieved or accessed through available channels. |
| IC3 | Published in 2019 or later. | EC3 | Study was rejected or contains a warning. |
| IC4 | Publications may be peer-reviewed journal papers, conference or workshop papers, non-peer-reviewed papers, or preprints. | EC4 | Non-English documents or translations that exhibit structural disorganization, ambiguous phrasing, or critical information gaps. |
| IC5 | Selected studies must clearly address DL and ET in medical image analysis, as reflected in their titles, abstracts, and content. | EC5 | Papers unrelated to the application or development of DL, ET, or medical image analysis. |

| Application Type | ET Patterns | Mechanism | Typical Case |
|---|---|---|---|
| As weakly supervised labels | Fixations | “Image + ET” dual-channel input uses ET data as a weakly supervised label, replaces manual box selection with the observation of fixations, and trains the model to perform lesion segmentation. | A novel radiologist gaze-guided weakly supervised segmentation framework [27] demonstrates superior performance in handling class scale variations. |
| Multimodal alignment | Heatmaps | Align the heatmaps with the words and sentences in the radiological reports to enhance the consistency of cross-modal retrieval and diagnosis of images and text. | The ET-guided multimodal alignment (EGMA) framework [28] precisely aligns sentence-level text with image regions using gaze heatmaps. |
| Visual search modeling | Saccades and scan paths | Train the model using the scan paths to predict the complete search trajectory of doctors when locating lesions. | The ChestSearch model [29] reproduces the chest X-ray diagnosis pathway on the GazeSearch dataset. |
| Interpretability validation | Heatmaps | Compare the model’s activation map with the expert’s gaze heatmaps to verify whether the model is focusing on clinically critical areas. | By incorporating the radiologists’ ET heatmaps, we can determine whether the attention mechanism of the model is reasonable [30]. |
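
The interpretability-validation row above compares a model's activation map with an expert's gaze heatmap. The sketch below illustrates one way such a comparison could be scored, using Pearson correlation and the overlap of the most salient pixels. It is not taken from any of the reviewed studies: the function names, the synthetic Gaussian maps, and the 5% saliency cutoff are all illustrative assumptions, and plain Python lists stand in for the array library that would normally hold real heatmaps.

```python
import math

def gaussian_map(height, width, center, sigma):
    """Synthetic stand-in for a heatmap: one Gaussian blob at center=(row, col)."""
    cy, cx = center
    return [[math.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2 * sigma ** 2))
             for x in range(width)] for y in range(height)]

def flatten(heatmap):
    return [value for row in heatmap for value in row]

def pearson_cc(map_a, map_b):
    """Pearson correlation between two equally sized maps."""
    a, b = flatten(map_a), flatten(map_b)
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    denom = math.sqrt(var_a * var_b)
    return cov / denom if denom > 0 else 0.0

def top_fraction_mask(heatmap, frac):
    """Mark the pixels whose value is within the top `frac` of the map."""
    values = flatten(heatmap)
    k = max(1, int(round(frac * len(values))))
    threshold = sorted(values, reverse=True)[k - 1]
    return [value >= threshold for value in values]

def top_fraction_iou(map_a, map_b, frac=0.05):
    """Intersection over union of the most salient regions of two maps."""
    mask_a = top_fraction_mask(map_a, frac)
    mask_b = top_fraction_mask(map_b, frac)
    inter = sum(a and b for a, b in zip(mask_a, mask_b))
    union = sum(a or b for a, b in zip(mask_a, mask_b))
    return inter / union if union else 0.0

# Hypothetical example: an expert gaze heatmap and two model activation maps.
gaze = gaussian_map(64, 64, (20, 20), 5.0)
cam_on_target = gaussian_map(64, 64, (22, 21), 6.0)   # attends near the gaze
cam_off_target = gaussian_map(64, 64, (50, 50), 5.0)  # attends elsewhere

for name, cam in [("on target", cam_on_target), ("off target", cam_off_target)]:
    print(name, round(pearson_cc(gaze, cam), 3), round(top_fraction_iou(gaze, cam), 3))
```

A higher correlation and a larger overlap suggest that the model attends to the regions the expert looked at. What counts as acceptable agreement is a study-specific choice that this sketch does not attempt to settle.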

| Task | Study (ET Strategy) | Modality and Disease | Dataset | Reported Metric(s) with ET | Highlights |
|---|---|---|---|---|---|
| Detection | Gaze-DETR [49] | Colposcopy (vulvovaginal candidiasis) | Colposcopy images | Average precision increased at different thresholds, and average recall increased to 0.988. | ET is encoded as sparse attention weights concatenated with image features. |
| Classification | RadioTransformer [50] | Chest X-ray (pneumonia) | MIMIC-CXR | F1-score ↑ and AUC ↑ | By integrating visual attention into the network, the model focuses on diagnostically relevant regions of interest, leading to higher confidence in decision-making. |
| Annotation (auxiliary to segmentation) | ET + speech for annotation [72] | Brain MRI (brain tumor lesion marking) | BraTS | Accuracy = 92% (training), 85% (independent test) | Supports scalable, high-quality supervision for DL. |
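
Several entries above feed gaze information to the network alongside the image, for example by concatenating an ET-derived map with the image features along the channel dimension. The following is a minimal, generic sketch of that idea, assuming fixations arrive as (row, column, duration) triples. It is not the Gaze-DETR or RadioTransformer implementation; the function names, the Gaussian spread `sigma`, and the toy 16 × 16 image are hypothetical.

```python
import math

def fixation_heatmap(fixations, height, width, sigma=2.0):
    """Build a duration-weighted heatmap from (row, col, duration) fixations.

    Each fixation contributes a Gaussian blob scaled by its duration, and the
    result is rescaled so that its peak equals 1.
    """
    heat = [[0.0] * width for _ in range(height)]
    for fy, fx, duration in fixations:
        for y in range(height):
            for x in range(width):
                dist_sq = (y - fy) ** 2 + (x - fx) ** 2
                heat[y][x] += duration * math.exp(-dist_sq / (2 * sigma ** 2))
    peak = max(max(row) for row in heat)
    if peak > 0:
        heat = [[value / peak for value in row] for row in heat]
    return heat

def append_gaze_channel(image_channels, heat):
    """Stack the heatmap as one extra channel on a [C][H][W] nested list."""
    height, width = len(heat), len(heat[0])
    for channel in image_channels:
        if len(channel) != height or any(len(row) != width for row in channel):
            raise ValueError("image and heatmap must share the same height and width")
    return list(image_channels) + [heat]

# Hypothetical input: a one-channel 16x16 image and two fixations (durations in seconds).
image = [[[0.5] * 16 for _ in range(16)]]
fixations = [(4, 4, 0.30), (10, 12, 0.90)]

heat = fixation_heatmap(fixations, 16, 16)
stacked = append_gaze_channel(image, heat)
print(len(stacked), "channels of size", len(stacked[0]), "x", len(stacked[0][0]))
```

Stacking keeps the image untouched and lets the first layer learn how much weight to give the gaze channel. Using the same map as a supervision mask, as some reviewed studies do, would instead require a loss term that this sketch does not cover.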

| Task/Domain | Multimodal Model Methods | ET Strategy | Year | Performance (Metrics) | Highlights |
|---|---|---|---|---|---|
| Radiology image classification and retrieval | EGMA [28] | Utilize radiologists’ fixation points to precisely align visual and textual elements within a dual-encoder framework. | 2024 | SOTA on multiple medical datasets (improved classification AUC and retrieval recall). | Gaze-guided alignment improved AUROC and retrieval through stronger image–text grounding. |
| Chest X-ray report generation | EGGCA-Net [105] | Integrate radiologists’ eye gaze regions (prior knowledge) to guide image–text feature alignment for report generation. | 2024 | Outperformed previous models on MIMIC-CXR. | ET-guided alignment produced more accurate, comprehensible radiology reports. |
| Chest X-ray analysis | VLMs incorporating ET data [20] | Leverage ET heatmaps overlaid on chest X-rays to highlight radiologists’ key focus areas during evaluation. | 2024 | Different evaluation metrics for different tasks; all the baseline models performed better with ET. | Adding gaze improved chest X-ray diagnostic accuracy across tasks. |
| Self-supervised medical image pre-training | GzPT [109] | Integrate ET with existing contrastive learning methods to focus on images with similar gaze patterns. | 2025 | SOTA on three medical datasets. | Gaze-similarity positives delivered SOTA pretraining and more interpretable features. |
| Knee X-ray classification | FocusContrast [110] | Use gaze to supervise the training for visual attention prediction. | 2025 | Consistently improved SOTA contrastive learning methods in classification accuracy. | Gaze-predicted attention consistently lifted knee X-ray classification. |
| Video memorability prediction | CNN + Transformer (CLIP-based spatio-temporal model) [113] | Predict memorability scores using an attention-based model aligned with human gaze fixations (collected via ET). | 2025 | Matched SOTA memorability prediction. | Model attention aligned with human gaze on memorable content, matching SOTA performance. |
| Chest radiograph abnormality diagnosis | TGID [19] | Predict radiology report intentions with temporal grounding, using fixation heatmap videos and embedded time steps as inputs. | 2025 | Superior to SOTA methods. | Temporal grounding from gaze improved intention detection beyond prior methods. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Duan, J.; Zhang, M.; Song, M.; Xu, X.; Lu, H. Eye Tracking-Enhanced Deep Learning for Medical Image Analysis: A Systematic Review on Data Efficiency, Interpretability, and Multimodal Integration. Bioengineering 2025, 12, 954. https://doi.org/10.3390/bioengineering12090954
