Analyzing Retinal Vessel Morphology in MS Using Interpretable AI on Deep Learning-Segmented IR-SLO Images

Abstract

1. Introduction

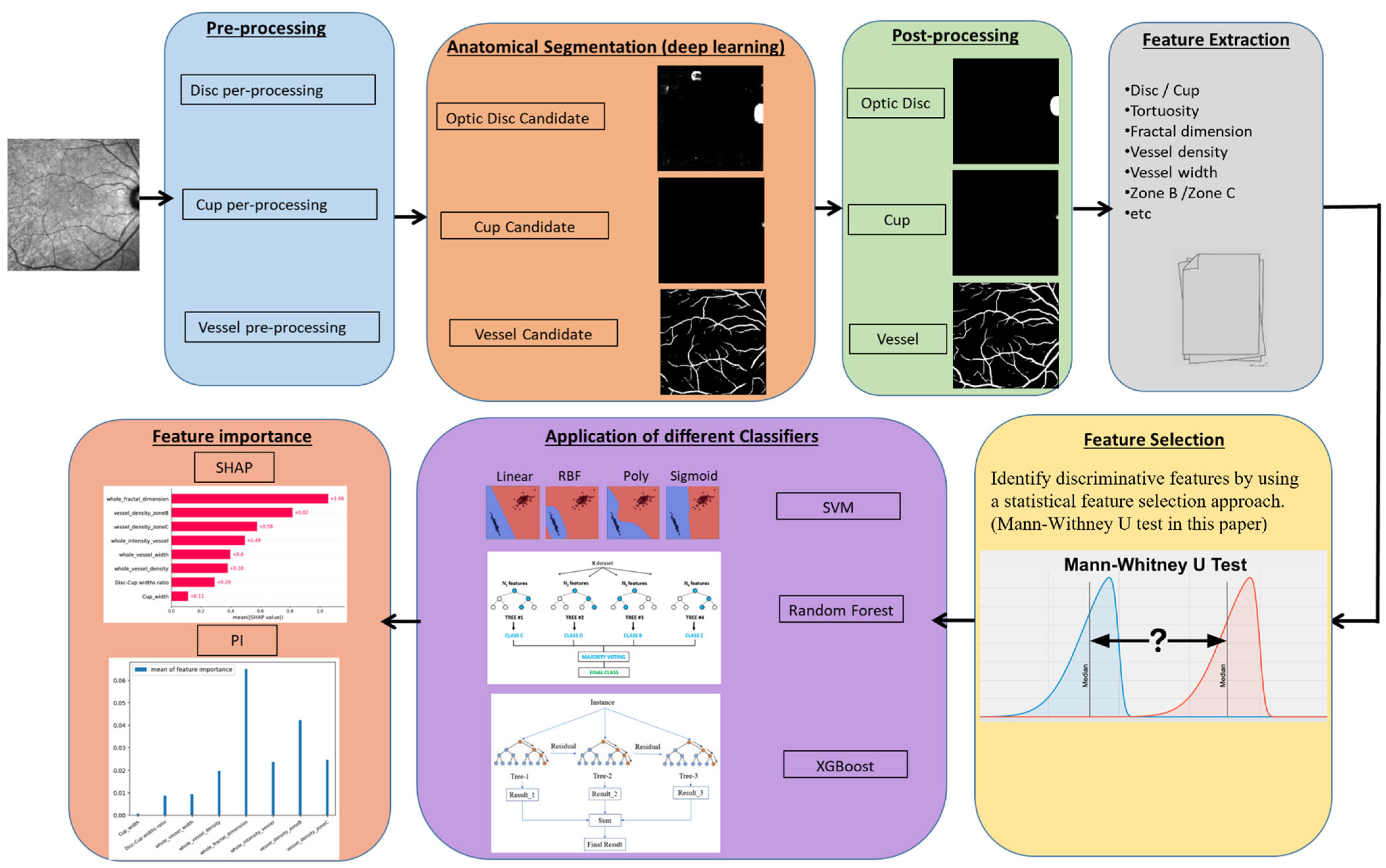

- Adaptation and validation of a deep learning model trained on fundus images for accurate segmentation of optic disc, cup, and retinal vessels in IR-SLO, demonstrating effective cross-domain transfer when IR-SLO-specific data are limited.

- Comprehensive feature importance analysis using SHAP across multiple machine learning models to identify key markers differentiating MS from healthy controls.

- The first detailed morphological assessment of IR-SLO images in MS, highlighting potential non-invasive retinal biomarkers for diagnosis.

2. Materials and Methods

2.1. Dataset

2.1.1. Internal Dataset

2.1.2. External Dataset

2.1.3. Test, Validation, and Train Data Splitting

2.1.4. Data Augmentation

2.2. Feature Extraction

2.2.1. Anatomical Segmentation

Pre-Processing

Optic Disc Candidates

Post Processing

- To eliminate noise pixels between the candidates, particularly in low-quality IR-SLO images that still exhibit intensity variations after histogram matching, morphological closing and opening operations with an elliptical structuring element were applied to the binary images containing the candidates.

- Because the optic disc appears as a bright area in the inverted images generated during pre-processing, dark regions cannot be the optic disc. Candidates with a mean intensity below a threshold were therefore excluded as unlikely to be the optic disc. This threshold was determined from the mean intensity values of all candidates in each image.

- Candidate regions with an area below a threshold were excluded: 2300 pixels for candidates located on either side of the image and 3000 pixels for those located near the center of the image.

- The shape and area of the remaining candidates were determined using connected component analysis, and those with a line shape or a low width-to-length ratio in their bounding box were eliminated. Candidate regions close to the center of the image were further filtered by removing those with a low length-to-width ratio in their bounding box. The candidate with the greatest area was then taken as the final optic disc candidate.

- A blob detection algorithm was applied to the final optic disc candidate to delineate the boundary of the optic disc. An ellipse transform was employed because in some images only one arc of the optic disc is visible. The algorithm used to calculate the boundary and width of an optic disc candidate positioned at the side of an IR-SLO image is summarized in Supplementary Figures S3 and S4.
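The candidate-filtering rules in the list above can be illustrated with a minimal sketch. It is not the authors' implementation: it starts from candidate descriptors that are assumed to have already been extracted by connected component analysis, and it omits the morphological cleaning and the ellipse fitting. Only the two area thresholds come from the text. The `Candidate` class, the `MIN_ASPECT` and `CENTER_FRACTION` values, the reading of "width-to-length" as bounding-box width over height, and the use of the mean of the candidate means as the intensity cut-off are all assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import mean

# Area thresholds in pixels, as quoted in the text.
MIN_AREA_SIDE = 2300
MIN_AREA_CENTER = 3000

# Hypothetical values: the text does not state them.
MIN_ASPECT = 0.4        # assumed cut-off for a "low" bounding-box ratio
CENTER_FRACTION = 0.25  # assumed half-width of the "near center" band


@dataclass
class Candidate:
    area: int              # pixel count of the connected component
    mean_intensity: float  # mean intensity in the inverted image
    x: int                 # bounding-box left edge
    y: int                 # bounding-box top edge
    width: int             # bounding-box width
    height: int            # bounding-box height


def is_near_center(c, image_width):
    """True when the bounding-box center lies in the assumed central band."""
    center_x = c.x + c.width / 2
    return abs(center_x - image_width / 2) <= CENTER_FRACTION * image_width


def select_optic_disc(candidates, image_width):
    """Apply the intensity, area, and shape filters, then keep the largest."""
    if not candidates:
        return None
    # Assumed rule: the cut-off is the mean of the candidates' mean intensities.
    intensity_cutoff = mean(c.mean_intensity for c in candidates)
    kept = []
    for c in candidates:
        if c.mean_intensity < intensity_cutoff:
            continue  # too dark to be the optic disc
        central = is_near_center(c, image_width)
        if c.area < (MIN_AREA_CENTER if central else MIN_AREA_SIDE):
            continue  # too small for its location
        if c.width / c.height < MIN_ASPECT:
            continue  # line-shaped or narrow bounding box
        if central and c.height / c.width < MIN_ASPECT:
            continue  # extra shape check for central candidates
        kept.append(c)
    # The surviving candidate with the greatest area is the final choice.
    return max(kept, key=lambda c: c.area, default=None)
```

Working on descriptors rather than pixels keeps the sketch independent of any particular image-processing library. In practice the descriptors would come from whichever connected component routine is used.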

Cup Segmentation

- The bounding box of the candidate must fall entirely within the optic disc boundary.

- The width-to-length or length-to-width ratio of the candidate must be less than 2, as the cup does not have a narrow oval shape.

- The area of the candidate must exceed a specific threshold, set at 700 in this work.
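The three cup-candidate criteria above can be expressed as a single check. This is a minimal sketch under stated assumptions rather than the authors' code: the optic disc boundary is modeled as an axis-aligned ellipse given as `(cx, cy, a, b)`, the bounding box is `(x, y, w, h)`, and the area threshold of 700 is assumed to be in pixels. The function names are hypothetical.

```python
MAX_ASPECT = 2.0    # ratio limit quoted in the text
MIN_CUP_AREA = 700  # area threshold quoted in the text (assumed to be pixels)


def bbox_inside_ellipse(bbox, ellipse):
    """Check that all four bounding-box corners lie inside the ellipse.

    Because an ellipse is convex, four corners inside means the whole
    box is inside.
    """
    x, y, w, h = bbox
    cx, cy, a, b = ellipse  # center and semi-axes along x and y
    corners = [(x, y), (x + w, y), (x, y + h), (x + w, y + h)]
    return all(
        ((px - cx) / a) ** 2 + ((py - cy) / b) ** 2 <= 1.0
        for px, py in corners
    )


def is_valid_cup(bbox, area, disc_ellipse):
    """Apply the containment, shape, and area criteria to one candidate."""
    _, _, w, h = bbox
    # Taking the larger of the two ratios covers both the width-to-length
    # and the length-to-width conditions.
    aspect = max(w / h, h / w)
    return (
        bbox_inside_ellipse(bbox, disc_ellipse)
        and aspect < MAX_ASPECT
        and area > MIN_CUP_AREA
    )
```

For example, `is_valid_cup((80, 85, 40, 30), 900, (100, 100, 60, 50))` accepts a compact candidate well inside the disc, while the same box with an area of 600 is rejected by the area criterion.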

Vessel Segmentation

Binary Vessel Segmentation Map

Post Processing

2.2.2. Feature Measurement

2.3. Feature Selection

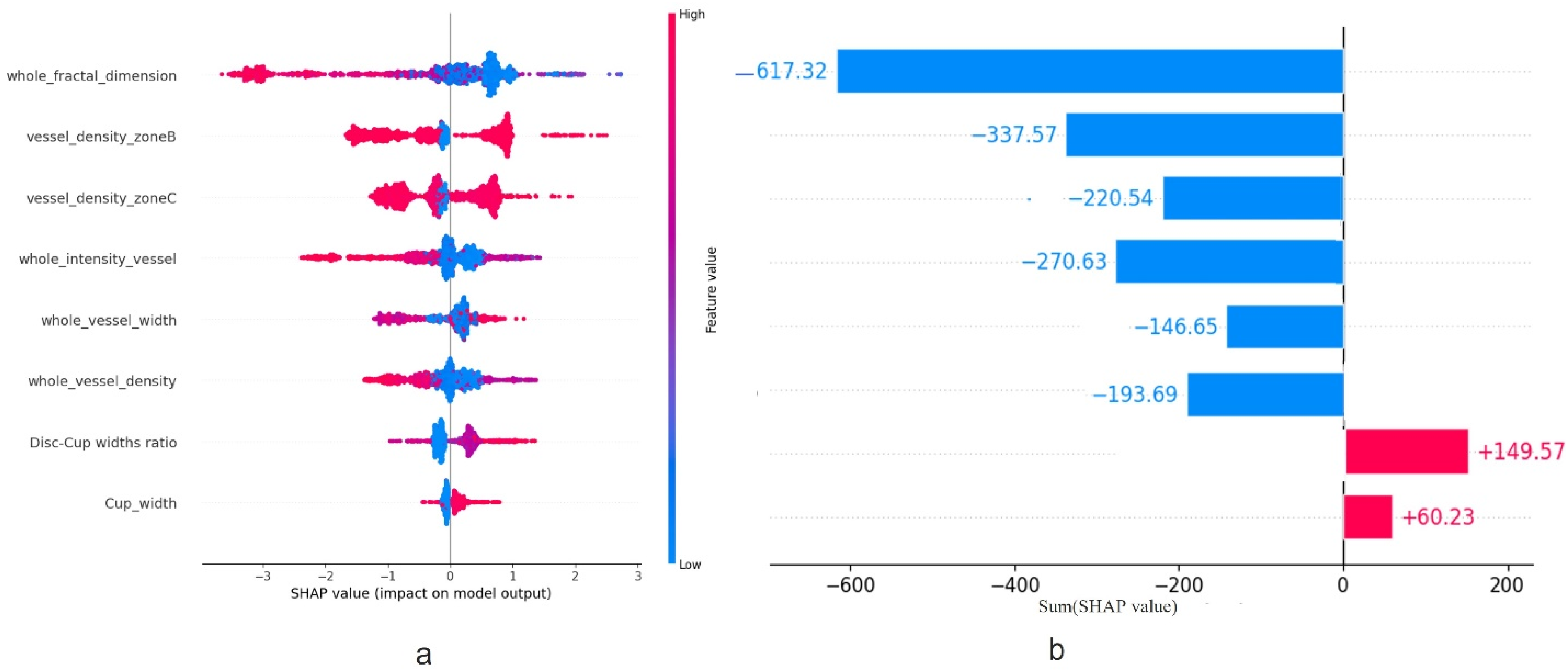

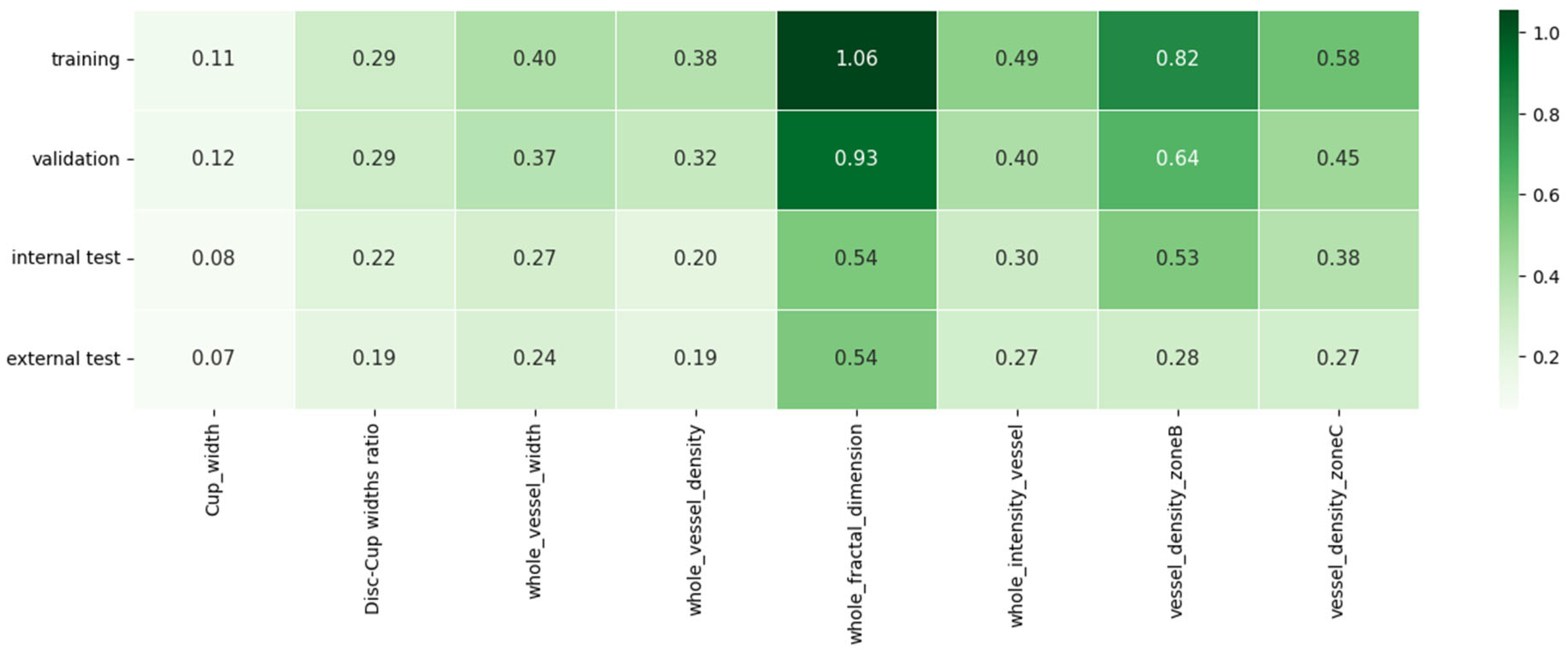

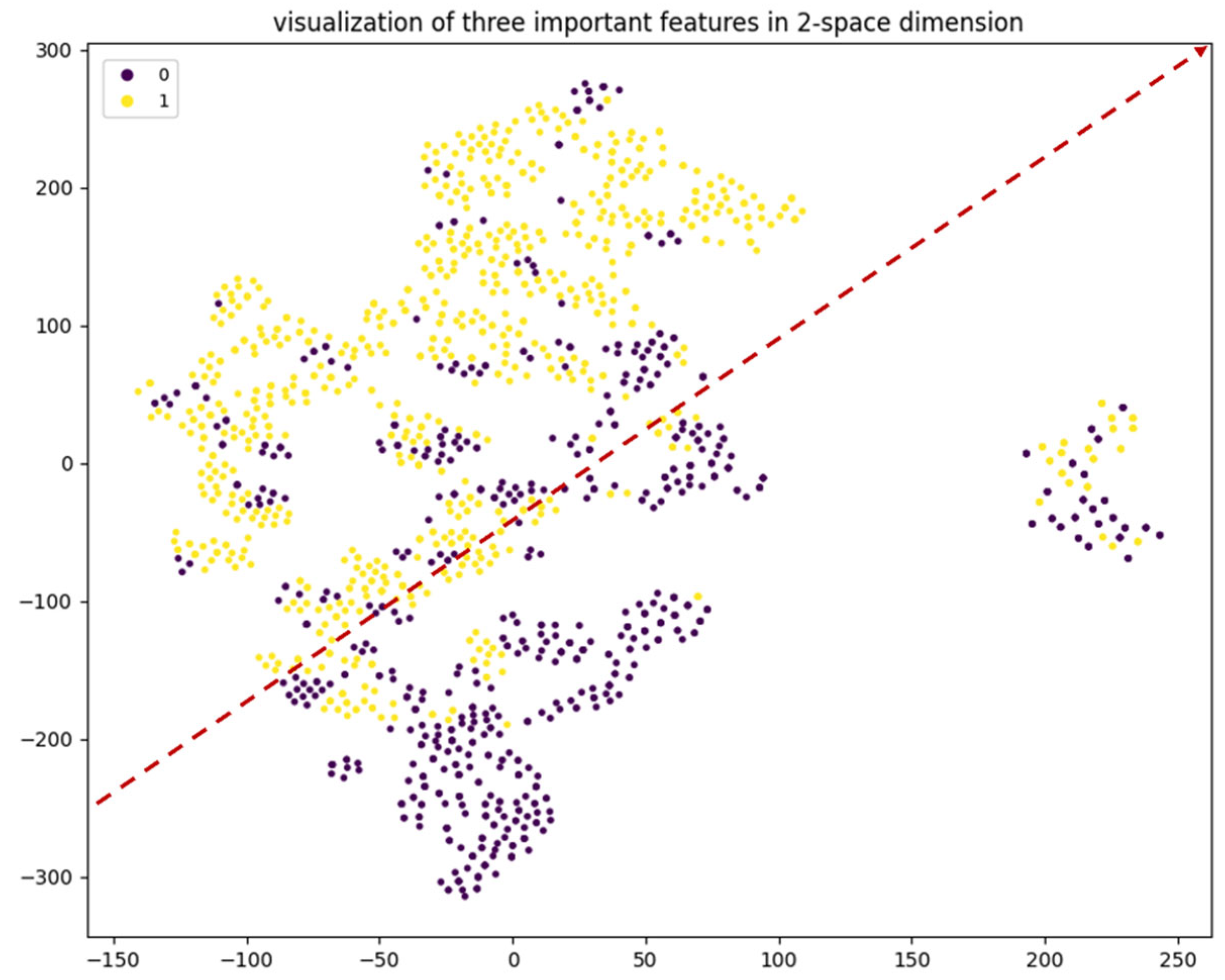

2.4. Feature Importance

Evaluation of the Classifiers

2.5. Comparison of Demographic Characteristics

3. Results

3.1. Segmentation

3.2. Feature Selection

3.3. Feature Importance

3.3.1. Classification

3.3.2. SHAP Calculations

3.4. Generalization

3.5. Robustness Analysis

4. Discussion

4.1. From Imaging to Insight: Clinical Relevance of Selected Retinal Features

4.2. Feature Importance Methods in Retinal Imaging

4.3. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MS | Multiple Sclerosis |
| HC | Healthy Control |
| CNS | Central Nervous System |
| OCT | Optical Coherence Tomography |
| RNFL | Retinal Nerve Fiber Layer |
| GCIPL | Ganglion Cell-Inner Plexiform Layer |
| AD | Alzheimer’s Disease |
| PD | Parkinson’s Disease |
| IR-SLO | Infrared Scanning Laser Ophthalmoscopy |
| AI | Artificial Intelligence |
| CNN | Convolutional Neural Network |
| FI | Feature Importance |
| SHAP | SHapley Additive exPlanations |
| VD | Vessel Density |
| FD | Fractal Dimension |
| VI | Vessel Intensity |
| VW | Vessel Width |
| FS | Feature Selection |
| ML | Machine Learning |
| RF | Random Forest |
| SVM | Support Vector Machine |
| XGBoost | Extreme Gradient Boosting |
| ACC | Accuracy |
| AUROC | Area Under the Receiver Operating Characteristic Curve |
| AUPRC | Area Under the Precision-Recall Curve |
| PR | Precision Recall |
| SE | Sensitivity |
| SP | Specificity |
| ROC | Receiver Operating Characteristic |
| RFE | Recursive Feature Elimination |
| EBM | Explainable Boosting Machine |
| t-SNE | t-distributed Stochastic Neighbor Embedding |


| | | Isfahan (n = 106) | Johns Hopkins (n = 35) | p-Value |
|---|---|---|---|---|
| Mean age (±SD) | MS | 34.29 (±8.24) | 41.97 (±8.77) | 0.001 * |
| | HC | 31.59 (±7.80) | 35.77 (±13.03) | 0.631 |
| | All | 32.48 (±8.01) | 39.49 (±10.94) | 0.001 * |
| Gender (female/male) | MS | 34/1 | 17/4 | 0.040 * |
| | HC | 55/16 | 12/2 | 0.490 |
| | All | 89/17 | 29/6 | 0.878 |

| Structure | Dice Coefficient (%) | F1 Score (%) | IoU (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|---|
| Retinal Vessels | 97.0 | 96.8 | 94.2 | 95.5 | 98.7 |
| Optic Disc | 91.0 | 90.6 | 84.5 | 89.2 | 97.3 |
| Optic Cup | 94.5 | 94.1 | 89.8 | 93.0 | 98.0 |
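For readers unfamiliar with the overlap metrics in the table above, the following sketch shows how the Dice coefficient and IoU are commonly computed for a single pair of binary masks. It uses the standard definitions and NumPy; the function name `dice_and_iou` and the convention of returning 1.0 when both masks are empty are assumptions, and the source does not state how the per-image values were aggregated into the reported percentages.

```python
import numpy as np


def dice_and_iou(pred, truth):
    """Return (Dice, IoU) for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    intersection = np.logical_and(pred, truth).sum()
    union = np.logical_or(pred, truth).sum()
    total = pred.sum() + truth.sum()
    # Assumed convention: two empty masks count as perfect agreement.
    if total == 0:
        return 1.0, 1.0
    dice = 2.0 * intersection / total  # 2|A ∩ B| / (|A| + |B|)
    iou = intersection / union         # |A ∩ B| / |A ∪ B|
    return float(dice), float(iou)
```

For a single mask pair the two metrics are linked by IoU = Dice / (2 - Dice), which can serve as a quick sanity check on per-image values.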

| Model | ACC | AUROC | AUPRC | F1 | SP | SE | PR |
|---|---|---|---|---|---|---|---|
| SVM (kernel: RBF) | 79.88 ± 5.73% | 85.25 ± 6.55% | 84.83 ± 8.64% | 79.67 ± 5.97% | 78.23 ± 9.98% | 79.40 ± 12.88% | 78.95 ± 6.94% |
| RF | 78.99 ± 6.55% | 85.09 ± 8.93% | 85.16 ± 8.59% | 78.80 ± 6.64% | 76.52 ± 8.89% | 79.91 ± 14.47% | 77.58 ± 6.15% |
| XGBoost | 82.37 ± 4.91% | 86.28 ± 8.12% | 85.37 ± 8.32% | 82.14 ± 5.06% | 78.63 ± 9.56% | 83.79 ± 11.12% | 80.39 ± 6.55% |
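The threshold-based columns of the table above (ACC, SE, SP, PR, and F1) all derive from the counts of a binary confusion matrix. The sketch below uses the standard positive-class definitions and treats PR as precision. That reading, and the function name `classification_metrics`, are assumptions. The source does not say whether F1 was averaged across classes or how the values were aggregated across evaluation runs. AUROC and AUPRC need ranked scores rather than counts and are not covered here.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute threshold-based metrics from binary confusion counts.

    Assumes every denominator is non-zero, i.e. both classes are present
    and at least one sample is predicted positive.
    """
    se = tp / (tp + fn)                    # sensitivity (recall)
    sp = tn / (tn + fp)                    # specificity
    pr = tp / (tp + fp)                    # precision
    acc = (tp + tn) / (tp + fp + tn + fn)  # accuracy
    f1 = 2 * pr * se / (pr + se)           # harmonic mean of PR and SE
    return {"ACC": acc, "SE": se, "SP": sp, "PR": pr, "F1": f1}
```

As a usage example, `classification_metrics(tp=8, fp=2, tn=7, fn=3)` gives an accuracy of 0.75 and a precision of 0.8.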

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Soltanipour, A.; Arian, R.; Aghababaei, A.; Ashtari, F.; Zhou, Y.; Keane, P.A.; Kafieh, R. Analyzing Retinal Vessel Morphology in MS Using Interpretable AI on Deep Learning-Segmented IR-SLO Images. Bioengineering 2025, 12, 847. https://doi.org/10.3390/bioengineering12080847
