Accelerometry and the Capacity–Performance Gap: Case Series Report in Upper-Extremity Motor Impairment Assessment Post-Stroke

Abstract

1. Introduction

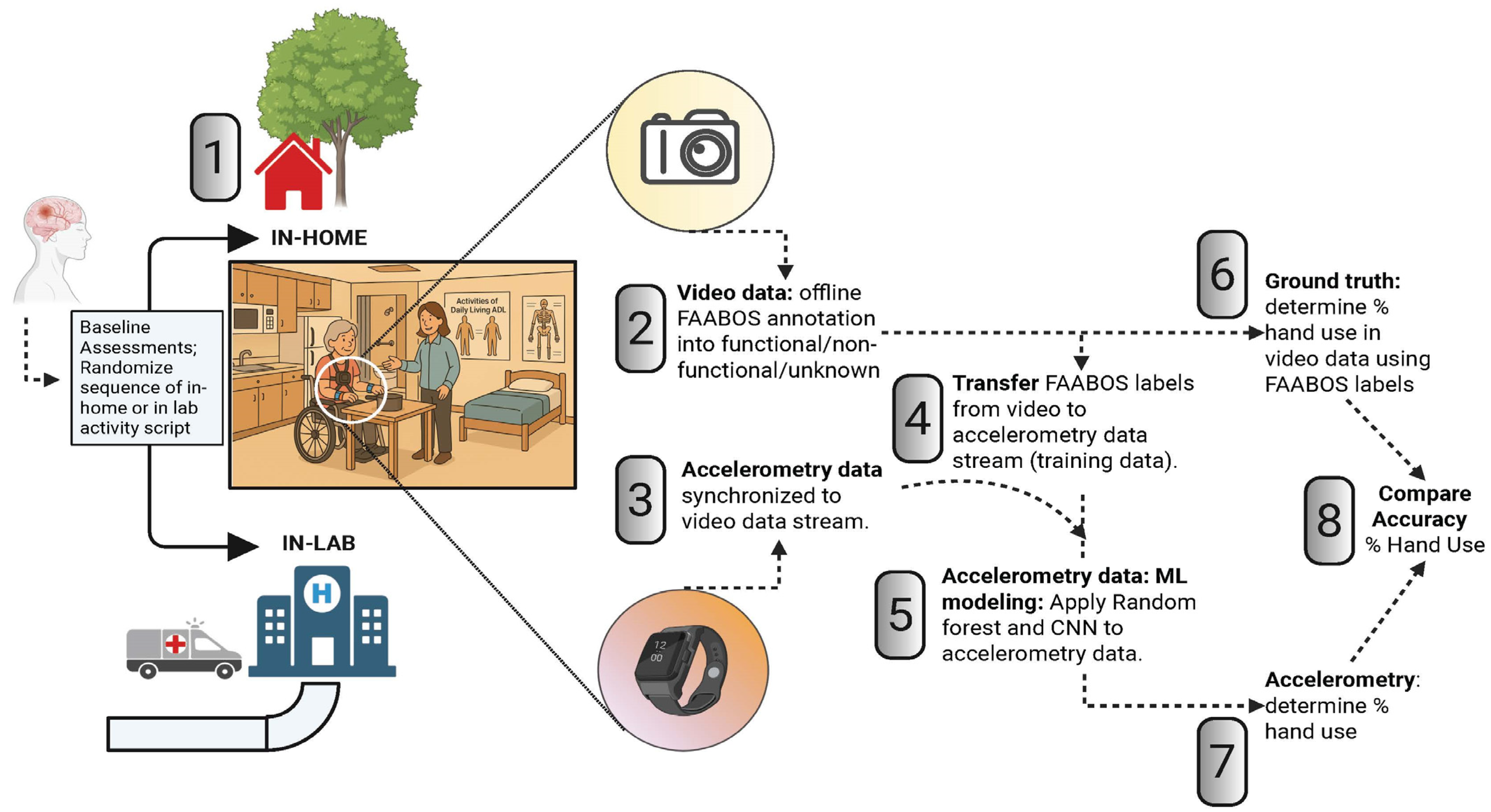

2. Materials and Methods

2.1. Participants

2.2. Apparatus and Measures

2.2.1. Clinical Testing

2.2.2. Activity Script

2.2.3. Accelerometry and Video Data Recording

2.3. Data Processing

2.3.1. Video Annotation

2.3.2. Application of Machine Learning Algorithms to Accelerometer Data

2.3.3. Statistical Analysis

3. Results

3.1. Video (Ground Truth) Data Shows a Capacity–Performance Gap In-Lab and At-Home

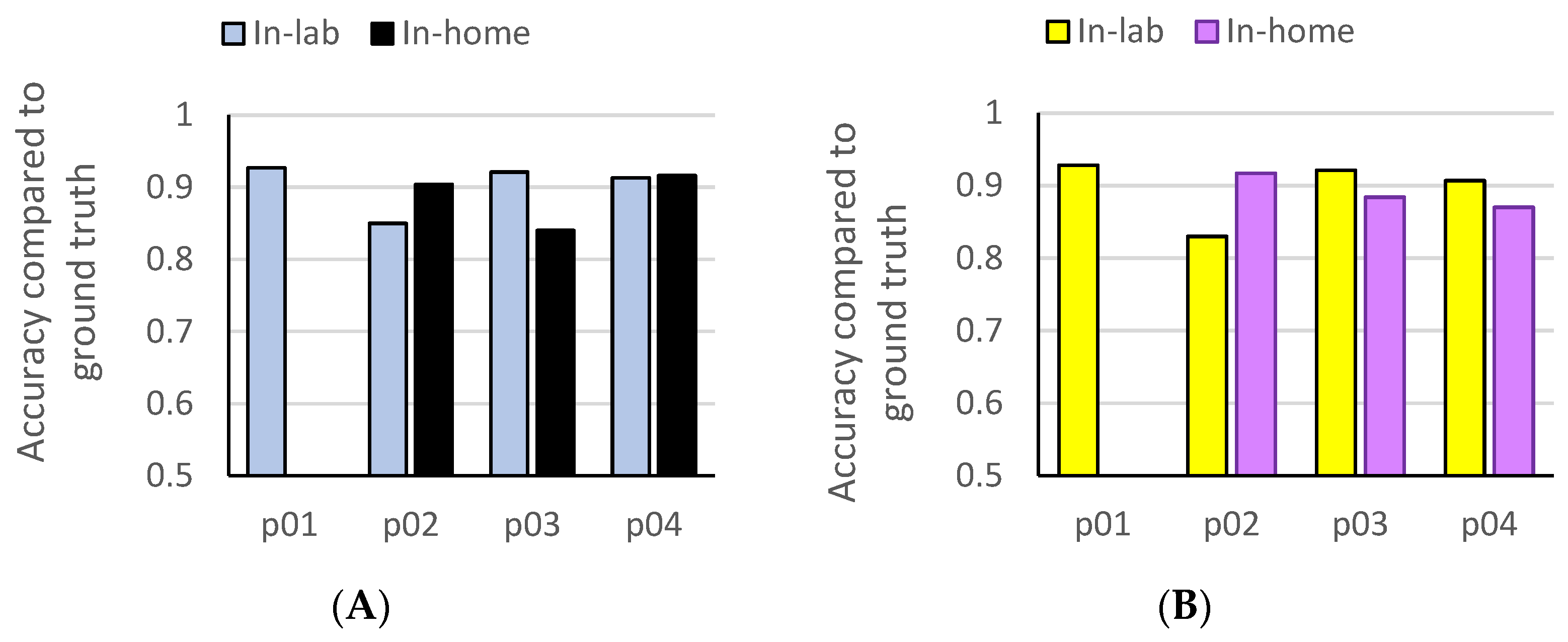

3.2. Accelerometry-Based Amount of Functional Hand Use In-Lab and At-Home

3.3. Prediction of At-Home Functional Hand Use from Machine Learning Models Trained on In-Lab Data

4. Discussion

Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADL/IADLs | Activities and Instrumental Activities of Daily Living |
| ARAT | Action Research Arm Test |
| CNN | Convolutional Neural Network |
| FAABOS | Functional Arm Activity Behavioral Observation System |
| RF | Random Forest |
| UE | Upper extremity |
| UEFM | Upper-Extremity Fugl–Meyer |


| PID | p01 | p02 | p03 | p04 |
|---|---|---|---|---|
| Age (years) | 76 | 68 | 46 | 71 |
| Sex | Female | Male | Male | Male |
| Race | AA | White | White | White |
| Ethnicity | Hispanic | Hispanic | Hispanic | Hispanic |
| Stroke type | Ischemic | Ischemic | Ischemic | Ischemic |
| Months post-stroke | 126.9 | 7.5 | 26.2 | 37.5 |
| Affected arm | Dominant | Dominant | Dominant | Dominant |
| Concordance | Concordant | Concordant | Concordant | Concordant |
| NIHSS motor arm (Impaired) | 3 | 2 | 1 | 1 |
| NIHSS motor arm (Unimpaired) | 0 | 0 | 0 | 0 |
| ARAT (Impaired) | 9 | 40 | 56 | 50 |
| ARAT (Unimpaired) | 56 | 57 | 57 | 57 |
| UEFM (Impaired) | 22 | 48 | 60 | 58 |
| UEFM (Unimpaired) | 66 | 66 | 66 | 66 |

Functional Use from Video (Ground Truth)

| PID | In-Lab Impaired | In-Lab Unimpaired | In-Lab Use Ratio | At-Home Impaired | At-Home Unimpaired | At-Home Use Ratio |
|---|---|---|---|---|---|---|
| p01 | 0.173 | 0.943 | 0.183 | 0 | 0.864 | 0 |
| p02 | 0.611 | 0.967 | 0.631 | 0.149 | 0.838 | 0.177 |
| p03 | 0.765 | 0.830 | 0.922 | 0.820 | 0.936 | 0.876 |
| p04 | 0.885 | 0.863 | 1.025 | 0.922 | 0.903 | 1.021 |
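The Use Ratio columns are consistent with dividing the impaired-arm functional use by the unimpaired-arm functional use in the same setting (for p01 in-lab, 0.173 / 0.943 ≈ 0.183). The short Python sketch below recomputes both ratios and the in-lab to at-home change for each participant from the tabulated proportions. It assumes this simple quotient definition; the names `VIDEO_USE`, `use_ratio`, and `ratio_change` are hypothetical, and differences of about 0.001 (e.g., p02) presumably reflect rounding of the underlying values.

```python
# Video (ground-truth) functional use copied from the table above:
# (impaired, unimpaired) proportions for each setting.
VIDEO_USE = {
    "p01": {"lab": (0.173, 0.943), "home": (0.000, 0.864)},
    "p02": {"lab": (0.611, 0.967), "home": (0.149, 0.838)},
    "p03": {"lab": (0.765, 0.830), "home": (0.820, 0.936)},
    "p04": {"lab": (0.885, 0.863), "home": (0.922, 0.903)},
}


def use_ratio(impaired: float, unimpaired: float) -> float:
    """Impaired-arm use divided by unimpaired-arm use (assumed definition)."""
    if unimpaired == 0:
        raise ValueError("unimpaired-arm use must be non-zero")
    return impaired / unimpaired


def ratio_change(pid: str) -> tuple[float, float, float]:
    """Return (in-lab ratio, at-home ratio, at-home minus in-lab)."""
    lab = use_ratio(*VIDEO_USE[pid]["lab"])
    home = use_ratio(*VIDEO_USE[pid]["home"])
    return lab, home, home - lab


for pid in VIDEO_USE:
    lab, home, delta = ratio_change(pid)
    print(f"{pid}: lab={lab:.3f} home={home:.3f} change={delta:+.3f}")
```

A large negative change (as for p01 and p02) is one simple way to express the capacity–performance gap: relative use of the impaired arm drops once the participant leaves the lab.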

| PID | In-Lab # of Samples | In-Lab Use, RF (fraction) | In-Lab Use, CNN (fraction) | In-Lab Accuracy, RF | In-Lab Accuracy, CNN | At-Home # of Samples | At-Home Use, RF (fraction) | At-Home Use, CNN (fraction) | At-Home Accuracy, RF | At-Home Accuracy, CNN |
|---|---|---|---|---|---|---|---|---|---|---|
| p01 | 573 | 0.148 | 0.126 | 0.927 | 0.928 | 540 | NA * | NA * | NA * | NA * |
| p02 | 1029 | 0.596 | 0.579 | 0.850 | 0.830 | 864 | 0.093 | 0.097 | 0.904 | 0.917 |
| p03 | 521 | 0.833 | 0.839 | 0.921 | 0.921 | 362 | 0.903 | 0.92 | 0.840 | 0.884 |
| p04 | 356 | 0.938 | 0.935 | 0.913 | 0.907 | 641 | 0.969 | 0.997 | 0.916 | 0.870 |
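The Use and Accuracy columns summarize per-sample classifier output and are reported as proportions between 0 and 1. Assuming each accelerometry sample receives a binary label, functional (1) or non-functional (0), from both the classifier (RF or CNN) and the video annotation, use is the share of samples labelled functional and accuracy is the share of samples on which classifier and video agree. The Python sketch below shows that arithmetic on made-up toy labels (not study data); the function and variable names are hypothetical.

```python
from typing import Sequence


def use_fraction(labels: Sequence[int]) -> float:
    """Share of samples labelled functional (1) rather than non-functional (0)."""
    if not labels:
        raise ValueError("need at least one sample")
    return sum(labels) / len(labels)


def accuracy(predicted: Sequence[int], video: Sequence[int]) -> float:
    """Share of samples where the classifier agrees with the video label."""
    if len(predicted) != len(video) or not video:
        raise ValueError("label sequences must be non-empty and equal length")
    return sum(p == v for p, v in zip(predicted, video)) / len(video)


# Toy example with 10 hypothetical samples (not study data).
video_labels = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
model_labels = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]

print(use_fraction(video_labels))            # ground-truth use: 0.2
print(use_fraction(model_labels))            # predicted use: 0.2
print(accuracy(model_labels, video_labels))  # per-sample agreement: 0.8

# A trivial "never functional" classifier scores 1 - true use.
always_zero = [0] * len(video_labels)
print(accuracy(always_zero, video_labels))   # 0.8
```

Two caveats follow from the toy case. A correct overall use fraction does not guarantee per-sample agreement, and when functional use is rare (as for p01), high accuracy can partly reflect the majority non-functional class, so use and accuracy are best read together.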

| PID | Accuracy, RF | Accuracy, CNN | Predicted Functional Use, RF | Predicted Functional Use, CNN | Functional Use Absolute Error, RF | Functional Use Absolute Error, CNN |
|---|---|---|---|---|---|---|
| p01 | 0.980 | 0.928 | 0.020 | 0.311 | 0.020 | 0.311 |
| p02 | 0.620 | 0.620 | 0.420 | 0.420 | 0.271 | 0.271 |
| p03 | 0.800 | 0.826 | 0.950 | 0.925 | 0.130 | 0.105 |
| p04 | 0.874 | 0.921 | 0.924 | 0.969 | 0.002 | 0.047 |
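The Absolute Error columns are consistent with the magnitude of the difference between each model's predicted at-home functional use and the at-home impaired-arm use from video in the Functional Use from Video (Ground Truth) table (for p02, |0.420 − 0.149| = 0.271). The minimal Python check below, assuming that definition, reproduces each tabulated error; `VIDEO_HOME_IMPAIRED`, `PREDICTED`, and `absolute_error` are hypothetical names.

```python
# At-home impaired-arm functional use from video (ground truth).
VIDEO_HOME_IMPAIRED = {"p01": 0.000, "p02": 0.149, "p03": 0.820, "p04": 0.922}

# Predicted at-home functional use from models trained on in-lab data.
PREDICTED = {
    "p01": {"RF": 0.020, "CNN": 0.311},
    "p02": {"RF": 0.420, "CNN": 0.420},
    "p03": {"RF": 0.950, "CNN": 0.925},
    "p04": {"RF": 0.924, "CNN": 0.969},
}


def absolute_error(predicted: float, ground_truth: float) -> float:
    """|predicted - ground truth|, rounded to the table's three decimals."""
    return round(abs(predicted - ground_truth), 3)


for pid, truth in VIDEO_HOME_IMPAIRED.items():
    rf = absolute_error(PREDICTED[pid]["RF"], truth)
    cnn = absolute_error(PREDICTED[pid]["CNN"], truth)
    print(f"{pid}: RF={rf:.3f} CNN={cnn:.3f}")
```

Because the error is taken on the aggregate use proportion rather than per sample, it is independent of the Accuracy columns: p02, for example, pairs a moderate accuracy with the largest RF error.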

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nieto, E.M.; Lujan, E.; Mendoza, C.A.; Arriaga, Y.; Fierro, C.; Tran, T.; Chang, L.-C.; Gurovich, A.N.; Lum, P.S.; Geed, S. Accelerometry and the Capacity–Performance Gap: Case Series Report in Upper-Extremity Motor Impairment Assessment Post-Stroke. Bioengineering 2025, 12, 615. https://doi.org/10.3390/bioengineering12060615
